FunASR: A Fundamental End-to-End Speech Recognition Toolkit

Abstract

This paper introduces FunASR, an open-source speech recognition toolkit designed to bridge the gap between academic research and industrial applications. FunASR offers models trained on large-scale industrial corpora and the ability to deploy them in applications. The toolkit's flagship model, Paraformer, is a non-autoregressive end-to-end speech recognition model trained on a manually annotated Mandarin speech recognition dataset containing 60,000 hours of speech. To improve the performance of Paraformer, we have added timestamp prediction and hotword customization capabilities to the standard Paraformer backbone. In addition, to facilitate model deployment, we have open-sourced a voice activity detection model based on the Feedforward Sequential Memory Network (FSMN-VAD) and a text post-processing punctuation model based on the controllable time-delay Transformer (CT-Transformer), both trained on industrial corpora. These functional modules provide a solid foundation for building high-precision, long-audio speech recognition services. Compared to other models trained on open datasets, Paraformer demonstrates superior performance.

1. Introduction

Over the past few years, the performance of end-to-end (E2E) models has surpassed that of conventional hybrid systems on automatic speech recognition (ASR) tasks. There are three popular E2E approaches: connectionist temporal classification (CTC) [1], recurrent neural network transducer (RNN-T) [2], and attention-based encoder-decoder (AED) [3, 4]. Of these, AED models have dominated seq2seq modeling for ASR because of their superior recognition accuracy [4–13]. Open-source toolkits such as ESPnet [14], WeNet [15], PaddleSpeech [16], and K2 [17] have been developed to facilitate research in end-to-end speech recognition. These tools have substantially lowered the difficulty of building an end-to-end speech recognition system.

In this work, we introduce FunASR, a new open-source speech recognition toolkit designed to bridge the gap between academic research and industrial applications. FunASR builds upon previous work and provides several unique features:

- Modelsope: FunASR provides a comprehensive range of pretrained models based on industrial data. The flagship model, Paraformer [18], is a non-auto regressive end-to-end speech recognition model that has been trained on a manually annotated Mandarin speech recognition dataset that contains

- 模型范围: FunASR提供基于工业数据的全方位预训练模型。旗舰模型Paraformer [18]是一种非自回归端到端语音识别模型,该模型在包含手动标注的中文语音识别数据集上进行了训练

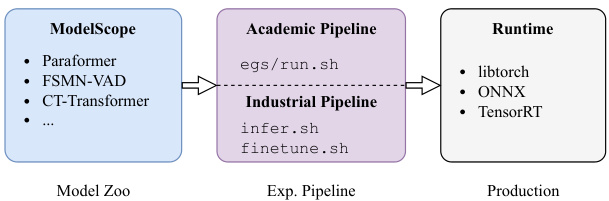

Figure 1: Overview of FunASR design.

图 1: FunASR设计概览。

60,000 hours of speech. Compared with Conformer [5] and RNN-T [2] supported by mainstream open source frameworks, Paraformer offers comparable performance while being more efficient.

60,000小时的语音数据。与主流开源框架支持的Conformer [5]和RNN-T [2]相比,Paraformer在保持相当性能的同时更加高效。

- Training & Finetuning: FunASR offers a range of example recipes for training end-to-end speech recognition models from scratch, including Transformer, Conformer, and Paraformer models for datasets such as AISHELL [19, 20], WenetSpeech [21], and LibriSpeech [22]. Additionally, FunASR provides a convenient finetuning script that lets users quickly fine-tune a pretrained model from ModelScope on a small amount of domain data, resulting in high-performance recognition models. This feature is particularly beneficial for academic researchers and developers who have limited access to the data and computing power required to train models from scratch.

- Speech Recognition Services: FunASR enables users to build speech recognition services that can be deployed in real applications. To facilitate model deployment, we have also released a voice activity detection model based on the Feedforward Sequential Memory Network (FSMN-VAD) [23] and a text post-processing punctuation model based on the controllable time-delay Transformer (CT-Transformer) [24], both trained on industrial corpora. To improve the performance of Paraformer, we have added timestamp prediction and hotword customization capabilities to the standard Paraformer backbone. Additionally, FunASR includes an inference engine that supports CPU and GPU inference through ONNX, libtorch, and TensorRT. These functional modules simplify the process of building high-precision, long-audio speech recognition services with FunASR.

Overall, FunASR is a speech recognition toolkit that offers features not found in other open-source tools. We believe that our contributions will help to further advance the field of speech recognition and enable more researchers and developers to apply these techniques to real-world applications. Note that, because of the page limit, this paper only reports experiments on Mandarin corpora. FunASR in fact supports many languages, including English, French, German, Spanish, Russian, Japanese, and Korean (more details can be found in the model zoo).

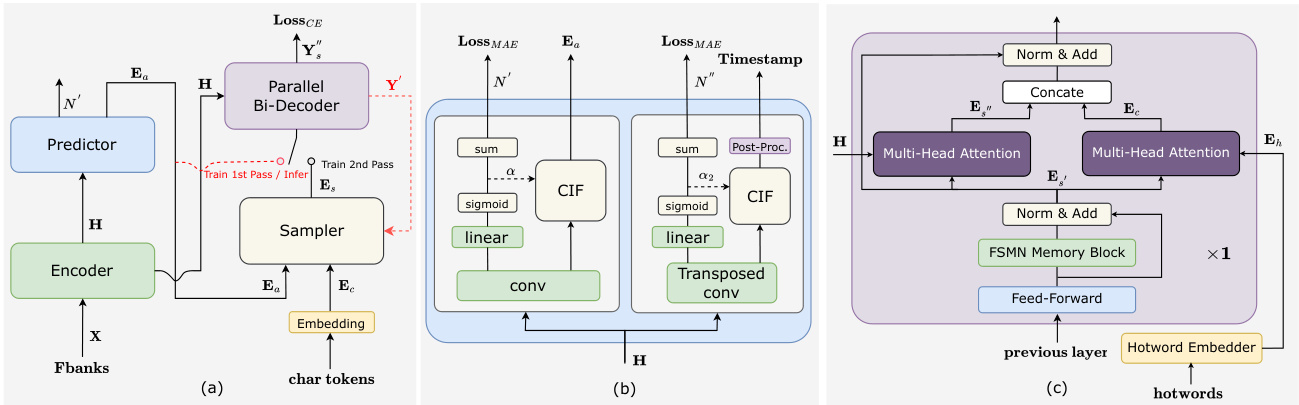

Figure 2: Illustration of the Paraformer-related architectures. (a) Paraformer; (b) Advanced timestamp prediction; (c) Contextual decoder layer for hotword customization.

2. Overview of FunASR

The overall framework of FunASR is illustrated in Fig. 1. ModelScope manages the models used in FunASR and hosts the critical ones, such as Paraformer, FSMN-VAD, and CT-Transformer.

Users of FunASR can easily run experiments using its PyTorch-based pipelines, which fall into two categories: academic and industrial. The academic pipeline, provided as `run.sh`, lets users train models from scratch. The `run.sh` script follows the recipe style of ESPnet and includes stages for data preparation (stage 0), feature extraction (stage 1), dictionary generation (stage 2), model training (stages 3 and 4), and model inference and scoring (stage 5). In contrast, the industrial pipeline offers two separate scripts: `infer.sh` for inference and `finetune.sh` for fine-tuning. These pipelines are easy to use: users only need to specify the model name and the dataset.

FunASR also provides an easy-to-use runtime for deploying models in applications. To support various hardware platforms such as CPU, GPU, Android, and iOS, we offer different runtime backends, including Libtorch, ONNX, and TensorRT. In addition, we use AMP quantization [25] to accelerate inference. With these features, FunASR makes it easy to deploy and use speech recognition models in a wide range of applications.

3. Main Modules of FunASR

3.1. Paraformer

We begin with a brief overview of Paraformer [18], a model we proposed previously, depicted in Fig. 2(a). Paraformer is a single-step non-autoregressive (NAR) model that incorporates a sampler module based on the glancing language model to enhance the NAR decoder's ability to capture token inter-dependencies.

Paraformer consists of two core modules: the predictor and the sampler. The predictor generates acoustic embeddings, which capture the information in the input speech signal. During training, the sampler incorporates target embeddings by randomly substituting them for some of the acoustic embeddings, producing semantic embeddings. This allows the model to capture the interdependence between tokens and improves overall performance. During inference, the sampler is inactive, and the acoustic embeddings alone are used to output the final prediction in a single pass, which gives faster inference and lower latency.
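The substitution performed by the sampler can be sketched in a few lines of plain Python. This is a minimal illustration, not the toolkit's implementation: the function name `glancing_sample`, the `num_replace` parameter, and the toy embeddings are all hypothetical, and the rule that decides how many positions are replaced is not described in this section, so it is left as a free parameter here.

```python
import random


def glancing_sample(acoustic, target, num_replace, rng):
    """Build semantic embeddings by swapping `num_replace` randomly chosen
    acoustic embeddings for the target embeddings at the same positions.

    `acoustic` and `target` are equal-length lists of embedding vectors.
    `num_replace` is a hypothetical knob; how it is chosen is an assumption.
    """
    if len(acoustic) != len(target):
        raise ValueError("acoustic and target must have the same length")
    positions = set(rng.sample(range(len(acoustic)), num_replace))
    return [target[i] if i in positions else acoustic[i]
            for i in range(len(acoustic))]


rng = random.Random(0)
# Toy values: four token positions, two-dimensional embeddings.
acoustic = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]  # predictor output
target = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]    # reference token embeddings

# Training: mix some target embeddings into the acoustic ones.
semantic = glancing_sample(acoustic, target, num_replace=2, rng=rng)

# Inference: the sampler is inactive, so the acoustic embeddings pass through.
inference_input = glancing_sample(acoustic, target, num_replace=0, rng=rng)
```

With `num_replace=0` the function returns the acoustic embeddings unchanged, which mirrors the single-pass inference path described above.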

To further enhance the performance of Paraformer, this paper proposes modifications including timestamp prediction and hotword customization. In addition, the loss function used in [18] has been updated: the MWER loss has been removed because it was found to contribute little to performance, and an additional CE loss is now applied to the first-pass decoder to reduce the discrepancy between training and inference. The following subsections provide detailed explanations.

3.2. Timestamp Predictor

Accurate timestamp prediction is a crucial function of ASR systems. However, conventional industrial ASR systems require an extra hybrid model to perform force-alignment (FA) for timestamp prediction (TP), which increases computation and time costs. FunASR provides an end-to-end ASR model that achieves accurate timestamp prediction by redesigning the structure of the Paraformer predictor, as depicted in Fig. 2(b). We introduce a transposed convolution layer and an LSTM layer to upsample the encoder output, and timestamps are generated by post-processing the CIF [26] weights $\alpha_{2}$. We treat the frames between two fire points as the duration of the former token and mark the silent parts according to $\alpha_{2}$. In addition, FunASR releases a force-alignment-like model named TP-Aligner, which consists of a smaller encoder and a timestamp predictor. It takes speech and the corresponding transcription as input and generates timestamps.
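The post-processing step can be pictured with a small integrate-and-fire sketch. It is one possible reading of the description above, under stated assumptions: the weights are accumulated frame by frame, a fire point is recorded whenever the accumulated weight reaches a threshold of 1.0, each weight is below the threshold, and `frame_ms` (the duration of one upsampled frame) is a hypothetical parameter. Silence marking is omitted.

```python
def fire_frames(alphas, threshold=1.0):
    """Accumulate weights frame by frame and return the frame indices at
    which the accumulated weight reaches the threshold (the fire points)."""
    fires, acc = [], 0.0
    for t, a in enumerate(alphas):
        acc += a
        if acc >= threshold:
            fires.append(t)
            acc -= threshold  # carry the remainder into the next token
    return fires


def token_spans(alphas, frame_ms, threshold=1.0):
    """Assign the frames between two consecutive fire points to the former
    token; the last token runs to the end of the weight sequence."""
    fires = fire_frames(alphas, threshold)
    ends = fires[1:] + [len(alphas)]
    return [(start * frame_ms, end * frame_ms) for start, end in zip(fires, ends)]


# Toy weights chosen to be exactly representable in floating point.
alphas = [0.5, 0.5, 0.25, 0.25, 0.5, 0.0, 0.5, 0.5]
spans = token_spans(alphas, frame_ms=10)
print(spans)  # [(10, 40), (40, 70), (70, 80)]
```

Each tuple is a `(start_ms, end_ms)` pair for one token. A real system would also need the silence handling and the upsampling that the paragraph above mentions.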

Table 1: Evaluation of timestamp prediction.

| Data | System | AAS (ms) |
|---|---|---|
| AISHELL | Force-alignment | 80.1 |
| AISHELL | Paraformer-TP | 71.0 |
| Industrial Data | Force-alignment | 60.3 |
| Industrial Data | Paraformer-large-TP | 65.3 |
| Industrial Data | TP-Aligner | 69.3 |

We conducted experiments on AISHELL and the 60,000-hour industrial data to evaluate the quality of timestamp prediction. The evaluation metric for timestamp quality is the accumulated average shift (AAS) [27]. We used a test set of 5,549 utterances with manually marked timestamps to compare the timestamp prediction performance of the provided models with FA systems trained with Kaldi [28]. The results show that Paraformer-TP outperforms the FA system on AISHELL. In the industrial experiments, the proposed timestamp prediction method is comparable to the hybrid FA system in timestamp accuracy (a gap of less than 10 ms). Moreover, the one-pass solution is valuable for commercial use because it reduces computation and time overhead.
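For intuition about the numbers in Table 1, the sketch below computes a shift-based score between predicted and reference timestamps. The exact definition of AAS is given in [27] and is not reproduced in this section, so the formula here is an assumption: the mean absolute shift over all token start and end boundaries, in milliseconds. The function name and the toy values are hypothetical.

```python
def accumulated_average_shift(pred, ref):
    """Mean absolute boundary shift in ms (assumed definition, see lead-in).

    `pred` and `ref` are equal-length lists of (start_ms, end_ms) per token.
    """
    if len(pred) != len(ref) or not ref:
        raise ValueError("pred and ref must be non-empty and equally long")
    total = 0.0
    for (p_start, p_end), (r_start, r_end) in zip(pred, ref):
        total += abs(p_start - r_start) + abs(p_end - r_end)
    return total / (2 * len(ref))  # two boundaries per token


pred = [(0, 100), (100, 260)]   # toy predicted timestamps
ref = [(10, 110), (110, 250)]   # toy manually marked timestamps
aas = accumulated_average_shift(pred, ref)
print(aas)  # 10.0
```

Under this assumed definition, a lower value means the predicted boundaries sit closer to the manual annotation.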

3.3. Hotword Customization

Contextual Paraformer makes it possible to customize hotwords using named entities, which boosts (incentivizes) their recognition and improves recall and accuracy. Two modules have been added to the basic Paraformer model: a hotword embedder and a multi-head attention in the last layer of the decoder, as depicted in Fig. 2(c).

We use the hotwords, denoted as $\pmb{w} = \pmb{w}_{1}, \ldots, \pmb{w}_{n}$, as input to our hotword embedder [29]. The hotword embedder consists of an embedding layer and an LSTM layer. The hotwords are first fed to the embedder, which produces a sequence of hidden states; the last hidden state of the LSTM is then used as the hotword embedding, denoted as $E_{h}$, which captures the contextual information of the input sequence.

To capture the relationship between the hotword embedding $E_{h}$ and the output of the last layer of the FSMN memory block, $E_{s'}$, we employ a multi-head attention module. Then, we concatenate $E_{s'}$ and the contextual attention $E_{c}$. This operation is formalized in Equation 1:

$$
\begin{aligned}
E_{c} &= \mathrm{MultiHeadAttention}(E_{s'} W_{c}^{Q},\, E_{h} W_{c}^{K},\, E_{h} W_{c}^{V}), \\
E_{s''} &= \mathrm{MultiHeadAttention}(E_{s'} W_{s}^{Q},\, H W_{s}^{K},\, H W_{s}^{V}), \\
&= \mathrm{Conv1d}([E_{s''}; E_{c}])
\end{aligned}
\tag{1}
$$

We use a one-dimensional convolutional layer (Conv1d) to reduce the dimensionality of the concatenated representation so that it matches that of the hidden state $E_{s'}$; the result serves as the input of the subsequent layer. Apart from this modification, the other processes of Contextual Paraformer are the same as those of the standard Paraformer.
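The shapes involved in Equation 1 can be traced with a small pure-Python sketch. Several simplifications are assumptions made for illustration and are not taken from the paper: a single attention head stands in for multi-head attention, the learned projections $W$ are omitted, the Conv1d is modeled with kernel size 1 (a per-step linear map), each hotword contributes one row of $E_{h}$, and all values are toy numbers.

```python
import math


def attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
        norm = sum(exps)
        weights = [e / norm for e in exps]
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


def pointwise_conv(steps, kernel):
    """Conv1d with kernel size 1: one linear map applied at every time step.
    `kernel` has shape (out_dim, in_dim)."""
    return [[sum(w * x for w, x in zip(row, step)) for row in kernel] for step in steps]


E_s1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # decoder states E_{s'}: 3 steps, d = 2
H = [[0.5, 0.5], [1.0, -1.0]]                # encoder output: 2 frames
E_h = [[1.0, 0.0], [0.0, 2.0]]               # hotword embeddings: 2 hotwords

E_c = attention(E_s1, E_h, E_h)              # contextual attention over hotwords
E_s2 = attention(E_s1, H, H)                 # attention over the encoder output
concat = [a + b for a, b in zip(E_s2, E_c)]  # [E_{s''}; E_c]: 2d = 4 per step
kernel = [[0.5, 0.0, 0.5, 0.0],
          [0.0, 0.5, 0.0, 0.5]]              # toy weights mapping 4 -> 2
fused = pointwise_conv(concat, kernel)       # back to d = 2 per step
```

The point of the sketch is the dimensionality: concatenation doubles the feature size, and the convolution brings it back to the size expected by the next layer.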

Table 2: The test sets used in this customization task.

| Dataset | Utts | Named Entities |
|---|---|---|
| AI domain | 486 | 204 |
| Common domain | 1308 | 231 |

During training, the hotwords are randomly generated from the target of each training batch. At inference time, hotwords are specified by providing a list of named entities to the model.
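A minimal sketch of how hotwords might be drawn from the targets of a batch is shown below. The source only states that hotwords are randomly generated from the target, so the details are assumptions: each utterance contributes at most one contiguous span, `max_len` caps the span length, and `prob` is the chance that an utterance contributes a hotword. All names are hypothetical.

```python
import random


def sample_hotwords(targets, rng, max_len=3, prob=0.5):
    """Pick random contiguous token spans from the batch targets.

    `targets` is a list of token lists; `max_len` and `prob` are
    illustrative knobs, not values from the paper.
    """
    hotwords = []
    for tokens in targets:
        if not tokens or rng.random() >= prob:
            continue  # this utterance contributes no hotword
        length = rng.randint(1, min(max_len, len(tokens)))
        start = rng.randint(0, len(tokens) - length)
        hotwords.append(tokens[start:start + length])
    return hotwords


rng = random.Random(0)
batch = [
    ["open", "the", "model", "zoo"],
    ["fine", "tune", "paraformer", "on", "domain", "data"],
]
hotwords = sample_hotwords(batch, rng, max_len=3, prob=1.0)
```

At inference time no sampling is needed: the user-supplied list of named entities takes the place of `hotwords`.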

Table 3: Evaluation of hotword customization.

| Dataset | Hotword | CER | R | P | F1 |
|---|---|---|---|---|---|
| AISHELL hotword subtest | No | 10.01 | 16 | 100 | 27 |
| AISHELL hotword subtest | Yes | 4.55 | 74 | 100 | 85 |
| Industrial AI domain | No | 7.96 | 70 | 98 | 82 |
| Industrial AI domain | Yes | 6.31 | 89 | 98 | 93 |
| Industrial common domain | No | 9.47 | 67 | 100 | 80 |
| Industrial common domain | Yes | 8.75 | 80 | 98 | 88 |
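The relationship between the last three columns of Table 3 can be checked with a short calculation. This assumes that R, P, and F1 denote hotword recall, precision, and their harmonic mean, all in percent; the table itself does not spell this out. The function name is hypothetical.

```python
def f1_score(recall, precision):
    """Harmonic mean of recall and precision (same unit as the inputs)."""
    if recall + precision == 0:
        return 0.0
    return 2 * recall * precision / (recall + precision)


# AISHELL hotword subtest with hotwords: R = 74, P = 100.
aishell_f1 = f1_score(74, 100)
# Industrial AI domain with hotwords: R = 89, P = 98.
ai_domain_f1 = f1_score(89, 98)
print(round(aishell_f1), round(ai_domain_f1))  # 85 93
```

Both values agree with the reported F1 after rounding. Since the table lists rounded R and P, small mismatches on other rows are to be expected.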

To evaluate the hotword customization effect of Contextual Paraformer, we created a hotword test set by sampling 235 audio clips containing entity words from the AISHELL test set, which included 187 named entities. The dataset has been uploaded to ModelScope and the test re