A Multi-Task Semantic Decomposition Framework with Task-specific Pre-training for Few-Shot NER

ABSTRACT

The objective of few-shot named entity recognition (NER) is to identify named entities from only a few labeled instances. Previous works have primarily focused on optimizing the traditional token-wise classification framework, while neglecting to exploit information derived from the characteristics of NER data. To address this issue, we propose a Multi-Task Semantic Decomposition Framework via Joint Task-specific Pre-training (MSDP) for few-shot NER. Drawing inspiration from demonstration-based and contrastive learning, we introduce two novel pre-training tasks: Demonstration-based Masked Language Modeling (MLM) and Class Contrastive Discrimination. These tasks effectively incorporate entity boundary information and enhance entity representations in Pre-trained Language Models (PLMs). For the downstream main task, we introduce a multi-task joint optimization framework with a semantic decomposition method, which helps the model integrate two different kinds of semantic information for entity classification. Experimental results on two few-shot NER benchmarks demonstrate that MSDP consistently outperforms strong baselines by a large margin. Extensive analyses validate the effectiveness and generalization of MSDP.

CCS CONCEPTS

• Computing methodologies $\rightarrow$ Artificial intelligence; • Natural language processing $\rightarrow$ Information extraction.

KEYWORDS

Few-shot NER, Multi-Task, Semantic Decomposition, Pre-training

1 INTRODUCTION

Named entity recognition (NER) plays a crucial role in natural language understanding applications: it identifies contiguous spans of text and assigns them to predefined categories [25, 49, 50]. Recent advances in deep neural architectures have achieved exceptional performance on fully supervised NER [10, 34, 51]. However, collecting annotated data for practical applications is expensive and inflexible. As a result, the research community has increasingly turned to the few-shot NER task, which seeks to identify entities from only a few labeled instances and has attracted substantial interest in recent years.

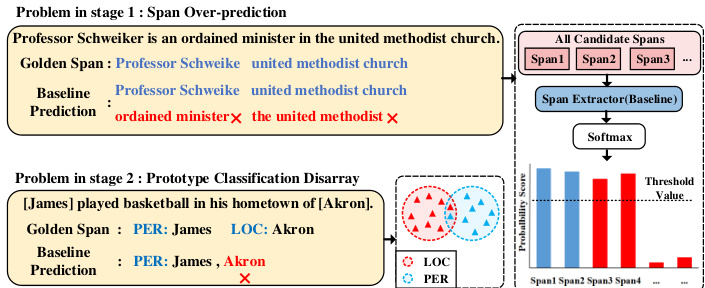

Previous few-shot NER methods [11, 18, 28, 46, 70, 81] generally formulate the task as sequence labeling based on prototypical networks [59]. These approaches represent each class by a prototype computed from labeled instances and label tokens with the nearest-neighbor rule. However, such models capture only a surface mapping between entities and classes, which makes them vulnerable to interference from non-entity tokens (i.e., the "O" class) [58, 66]. To alleviate this issue, a branch of two-stage methods [47, 58, 66, 69] decouples NER into two separate processes: span extraction and entity classification. Despite these advances, two problems remain. (1) Span over-prediction: as shown in Figure 1, previous span-based works suffer from span over-prediction [27, 80], i.e., besides the correct spans, the model also extracts redundant candidate spans. This happens because, with insufficient data, it is difficult for PLMs to learn reliable boundary information between entities and non-entities. As a result, PLMs tend to assign high probability scores to similar candidate spans and can even be over-confident in their predictions [23]. (2) Prototype classification disarray: previous prototype-based methods compute each prototype embedding directly as the mean of entity representations, so classification accuracy relies heavily on the quality of those representations. Unfortunately, PLMs often suffer from semantic space collapse, where entity representations of different classes are distributed closely together, especially for entities within the same sentence. Figure 1 illustrates that, through self-attention, entities of different classes interfere with each other, producing close or even overlapping prototypes in the semantic space (e.g., the "LOC" prototype overlapping with the "PER" prototype).
The model then suffers from performance degradation due to class confusion. We therefore need a method that introduces different aspects of information to alleviate these problems, so that few-shot NER techniques can be applied more widely in realistic task-oriented dialogue scenarios.

Figure 1: Illustration of the baseline model suffering from the span over-prediction problem (top) and the prototype classification disarray problem (bottom) in few-shot NER.

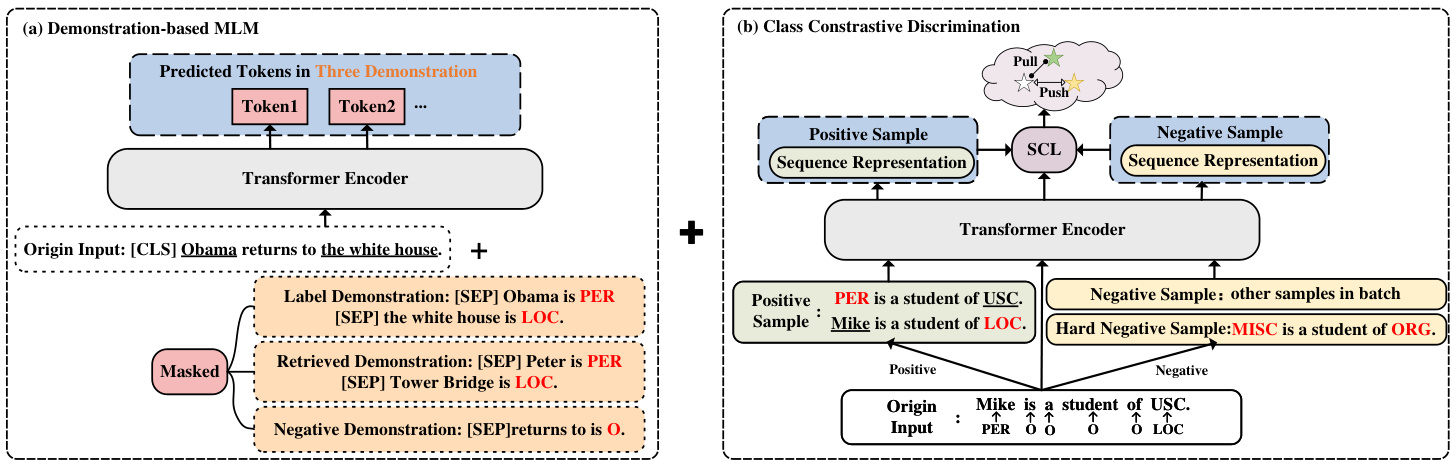

To tackle these limitations, we propose a Multi-Task Semantic Decomposition Framework via Joint Task-specific Pre-training (MSDP), which guides PLMs to capture reliable entity boundary information and better entity representations for different classes. For the pre-training stage, inspired by demonstration-based learning [19] and contrastive learning [9], we introduce two novel task-specific pre-training tasks tailored to the characteristics of NER data (entity-label pairs). In Demonstration-based MLM, we design three kinds of demonstrations that carry entity boundary information and entity-label pair information; PLMs implicitly learn this information while predicting the label words for [MASK]. In Class Contrastive Discrimination, we use contrastive learning with positive, negative, and hard negative samples to better discriminate entity representations of different classes. Through the joint optimization of these fine-grained pre-training tasks, PLMs can effectively alleviate the two problems described above.

For downstream few-shot NER, we follow the two-stage framework [47, 65] of span extraction followed by entity classification, and initialize both stages with the pre-trained parameters. Unlike previous methods, we employ a multi-task joint optimization framework and use different masking strategies to decompose class-oriented prototypes and contextual fusion prototypes. This design helps the model integrate different semantic information for classification, which further alleviates the prototype classification disarray problem. We conduct extensive experiments on two widely used benchmarks, Few-NERD [14] and CrossNER [28]. Results show that our method consistently outperforms state-of-the-art baselines by a large margin. In addition, detailed experimental analyses further verify the effectiveness of our method. Our contributions are three-fold:

- To the best of our knowledge, we are the first to introduce a multi-task joint optimization framework with a semantic decomposition method into the few-shot NER task.

- We further propose two task-specific pre-training tasks based on demonstrations and contrastive learning, namely demonstration-based MLM and class contrastive discrimination, which effectively inject entity boundary information and better entity representations into PLMs.

Figure 2: Illustration of the two task-specific pre-training tasks.

- Experiments on two widely used few-shot NER benchmarks show that our framework outperforms previous state-of-the-art methods. Extensive analyses further validate the effectiveness and generalization of MSDP. Our source code and datasets are available on GitHub for further comparison.

2 RELATED WORK

2.1 Few-shot NER.

Few-shot NER aims to improve a model's ability to identify and classify entities with only a small amount of annotated data [7, 21, 30, 31, 38, 42–45, 62, 77]. A series of approaches have been proposed to learn entity representations in the semantic space, e.g., prototypical learning [59], margin-based learning [36], and contrastive learning [20, 24, 37]. Existing approaches fall into two kinds: one-stage [11, 28, 59, 81] and two-stage [16, 47, 58, 69]. One-stage methods typically assign entity types through token-level metric learning. In contrast, two-stage methods split the task into two training stages: entity span extraction and mention type classification.

2.2 Task-specific pre-training Models.

Pre-trained language models have become an integral component of modern NLP systems, effectively improving downstream tasks [13, 40, 52, 54, 55, 71, 74, 76]. Because of the underlying discrepancies between language modeling and downstream tasks, task-specific pre-training methods have been proposed to further boost task performance, such as SciBERT [5], VideoBERT [61], DialoGPT [75], PLATO [4], CodeBERT [17], ToD-BERT [68], and VL-BERT [60]. However, most studies on few-shot NER use MLM and similar approaches only for data augmentation [15, 29, 79]. Although DictBERT [8], NER-BERT [41], and others perform pre-training, their methods are too general: they neither adapt to the structured data characteristics of NER nor target specific problems. Therefore, we design demonstration-based and contrastive pre-training tasks for NER to improve model performance.

2.3 Demonstration-based learning

Demonstrations were first introduced by the GPT series [6, 55], where a few examples are sampled from the training data and transformed with templates into appropriately filled prompts. Based on how the task is reformulated and whether parameters are updated, existing demonstration-based learning research can be broadly divided into three categories: in-context learning [6, 48, 67, 78], prompt-based fine-tuning [39], and classifier-based fine-tuning [35, 72]. However, these approaches mainly adopt demonstrations during fine-tuning, which does not make full use of demonstration-based learning. Different from them, we use demonstration-based learning in the pre-training stage, which better captures entity boundary information and thus addresses the span over-prediction problem.

3 METHOD

3.1 Task-Specific Pre-training

The performance of few-shot NER depends heavily on different aspects of the information carried by entity-label pairs. As shown in Figure 2, we introduce two novel pre-training tasks to learn these different aspects of knowledge: 1) demonstration-based MLM and 2) class contrastive discrimination.

Demonstration-based MLM. We follow the design of masked language modeling (MLM) in BERT [13] and integrate the idea of demonstration-based learning into it. To prompt PLMs to identify the boundary between entities and non-entities, we propose three different demonstrations, shown in Figure 2(a):

- Label demonstration (LD): Let $D_{train}$ denote the training set. For each input $x$ in $D_{train}$, we extract the entity-label pairs $(e,l)$ belonging to $x$ and concatenate them after $x$ using the simple template $T=\{e \text{ is } l\}$. Different demonstrations are separated by [SEP].

- Retrieved demonstration (RD): Given an entity type label set $L=\{l_{1},\dots,l_{|L|}\}$, we first enumerate all entities in $D_{train}$ and build a mapping $M$ from each label $l$ to the corresponding list of entities. We then randomly select $K$ entity-label pairs $(e,l)$ from $M$, restricted to the label set $L_{x}$ that appears in input $x$, in order to prompt the model with rich entity-label pair information. These pairs are concatenated after the label demonstration using the template $T=\{e \text{ is } l\}$.

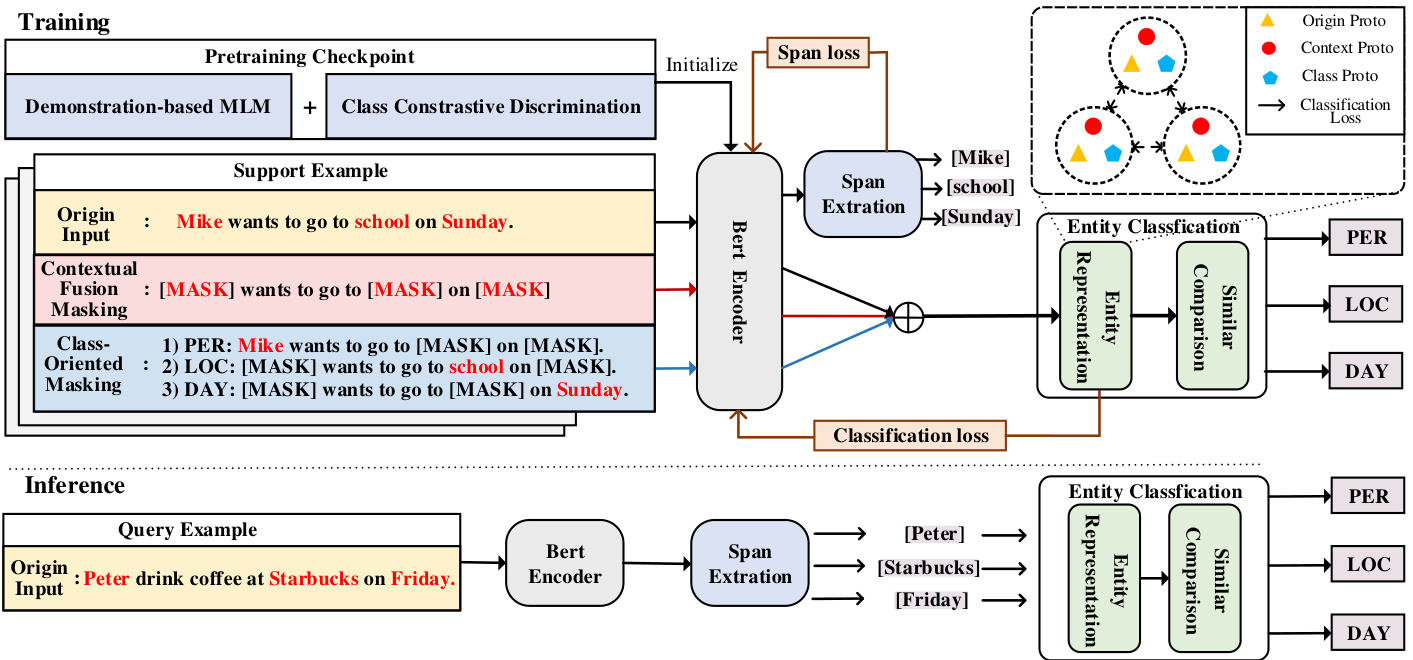

Figure 3: The overall architecture of our proposed MSDP framework

- Negative demonstration (ND): We randomly select $K$ non-entity spans from input $x$ that are easily confused by the model, construct negative pairs $(e_{none},O)$, and concatenate them after the retrieved demonstration using the template $T^{\prime}=\{e_{none} \text{ is } O\}$. Each training sample therefore has the form:

$$
[\mathrm{CLS}]\; x\; [\mathrm{SEP}]\; LD\; [\mathrm{SEP}]\; RD\; [\mathrm{SEP}]\; ND
$$
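
The three demonstrations can be illustrated with a short Python sketch. It assumes whitespace-tokenized text, a plain dictionary standing in for the label-to-entity mapping $M$, and a caller-supplied list of confusable non-entity spans; the function name `build_demo_input` and its parameters are hypothetical and not taken from a released implementation.

```python
import random


def build_demo_input(tokens, entities, entity_bank, non_entities, k=2, seed=0):
    """Assemble "[CLS] x [SEP] LD [SEP] RD [SEP] ND" as one string.

    tokens:       words of the input sentence x
    entities:     gold (entity_text, label) pairs annotated in x
    entity_bank:  hypothetical stand-in for the mapping M (label -> entities in D_train)
    non_entities: candidate non-entity spans of x, assumed to be pre-selected as confusable
    """
    rng = random.Random(seed)
    x = " ".join(tokens)

    # Label demonstration: every gold pair of x rendered with the template "e is l".
    ld = " [SEP] ".join(f"{e} is {l}" for e, l in entities)

    # Retrieved demonstration: K pairs sampled from M, restricted to the labels L_x in x.
    labels_in_x = sorted({l for _, l in entities})
    pool = [(e, l) for l in labels_in_x for e in entity_bank.get(l, [])]
    rd_pairs = rng.sample(pool, min(k, len(pool)))
    rd = " [SEP] ".join(f"{e} is {l}" for e, l in rd_pairs)

    # Negative demonstration: K non-entity spans paired with the O label.
    nd_spans = rng.sample(non_entities, min(k, len(non_entities)))
    nd = " [SEP] ".join(f"{e} is O" for e in nd_spans)

    return f"[CLS] {x} [SEP] {ld} [SEP] {rd} [SEP] {nd}"
```

For example, `build_demo_input("Mike wants to go to school".split(), [("Mike", "PER"), ("school", "LOC")], {"PER": ["Alice", "Bob"], "LOC": ["Paris"]}, ["wants", "go"])` yields the original sentence followed by the label, retrieved, and negative demonstrations, each separated by [SEP].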

After constructing the training set, we randomly replace $N$ entities or labels in the demonstrations with the special [MASK] symbol and train the model to recover them. If entity $e$ consists of multiple tokens, all of its component tokens are masked. The MLM loss is therefore:

$$
L_{mlm}=-\sum_{m=1}^{M}\log P(x_{m})
$$

where $M$ is the total number of masked tokens and $P(x_{m})$ is the predicted probability of token $x_{m}$ over the vocabulary.
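
A minimal sketch of whole-entity masking and the MLM loss follows. It assumes the caller already knows the token spans of the entities and labels inside the demonstrations, and that the model's probabilities for the original tokens at the masked positions are given; both helper names are hypothetical.

```python
import math
import random


def mask_whole_entities(tokens, spans, n, mask="[MASK]", seed=0):
    """Replace n randomly chosen spans with [MASK], masking every token of a span.

    spans: (start, end) token indices with inclusive end, each marking an entity
    or label word inside the demonstration part of the input.
    Returns the masked tokens and the (position, original_token) targets to recover.
    """
    rng = random.Random(seed)
    chosen = rng.sample(spans, min(n, len(spans)))
    masked = list(tokens)
    targets = []
    for start, end in sorted(chosen):
        for pos in range(start, end + 1):
            targets.append((pos, tokens[pos]))  # remember the original token
            masked[pos] = mask
    return masked, targets


def mlm_loss(probs_of_targets):
    """L_mlm = -sum_m log P(x_m) over the M masked tokens."""
    return -sum(math.log(p) for p in probs_of_targets)
```

Masking the whole span, rather than independent subword tokens, prevents the model from recovering a multi-token entity such as "New York" from its unmasked half.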

Class Contrastive Discrimination. To better discriminate different classes of entity representations in semantic space, we introduce class contrastive discrimination. Specifically, we construct positive (negative) samples as follows:

Given an input $x$ that contains $K$ entities, we generate positive and negative samples as follows. For positive samples, we replace each of the $K$ entities with its corresponding label mention, creating $K$ positive samples per input utterance. For negative samples, we use samples of other classes within the batch. In addition, we construct one hard negative sample per instance, which the model easily confuses with the original, by replacing all entities with irrelevant label mentions; these hard negatives are added to the negative sample set. Figure 2 shows examples of the positive and negative samples used in our experiments.
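
The sample construction can be sketched as follows. This is one reading of the text, assuming that each positive sample replaces a single entity by its own label mention and that the hard negative draws a random wrong label for every entity; label mentions are plain label strings here, and all names are hypothetical.

```python
import random


def contrastive_views(tokens, spans, label_set, seed=0):
    """Build the positive views and the hard-negative view of one sentence.

    spans:     (start, end, label) triples with inclusive end
    label_set: all label mentions available for substitution
    Positive k: only entity k is replaced by its own label mention.
    Hard negative: every entity is replaced by a randomly drawn wrong label.
    """
    rng = random.Random(seed)

    def substitute(replacements):
        # replacements maps start -> (end, text); rebuild the sentence left to right.
        out, i = [], 0
        while i < len(tokens):
            if i in replacements:
                end, text = replacements[i]
                out.append(text)
                i = end + 1
            else:
                out.append(tokens[i])
                i += 1
        return out

    positives = [substitute({s: (e, lab)}) for s, e, lab in spans]
    hard_negative = substitute({
        s: (e, rng.choice([l for l in label_set if l != lab])) for s, e, lab in spans
    })
    return positives, hard_negative
```

In-batch negatives need no construction here: they are simply the other samples of different classes in the same batch.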

The representations of the original, positive, negative, and hard negative samples are denoted by $h_{o}$, $h_{p}$, $h_{n}$, and $h_{hn}$, respectively. To account for multiple positive samples, we adopt the supervised contrastive learning (SCL) objective [32], which minimizes the distance between an original sample $h_{o}$ and its semantically similar positive samples $h_{p}$ while maximizing the distance between $h_{o}$ and two kinds of samples: the negative samples $h_{n}$ and the hard negative samples $h_{hn}$. $\mathcal{L}_{SCL}$ is formulated as follows:

$$
\begin{aligned}
\mathcal{L}_{SCL}={}&\frac{-1}{N}\sum_{i=1}^{N}\frac{1}{N_{y_{i}}}\sum_{j=1}^{N_{y_{i}}}\sum_{k=1}^{N_{y_{i}}}\mathbb{I}\{y_{ij}=y_{ik}\}\\
&\times\log\frac{e^{\mathrm{sim}(h_{o_{ij}},h_{p_{ik}})/\tau}}{\sum_{l=1}^{N}\mathbb{I}\{j\neq l\}\,e^{\mathrm{sim}(h_{o_{ij}},h_{n_{l}})/\tau}+e^{\mathrm{sim}(h_{o_{ij}},h_{hn_{ij}})/\tau}}
\end{aligned}
$$

where $N$ is the total number of examples in the batch, $N_{y_{i}}$ is the number of positive samples, $\tau$ is a temperature hyperparameter, $\mathrm{sim}(h_{1},h_{2})$ is cosine similarity, and $\mathbb{I}$ is the indicator function.
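
A NumPy sketch of the contrastive objective is given below. It is a simplified reading of $\mathcal{L}_{SCL}$, not the authors' code: each original sample comes with its own positives, in-batch negatives, and one hard negative; the positive does not appear in the denominator, mirroring the equation as printed; and losses are averaged over positives and then over anchors, which may differ from the exact normalization above.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between a (d,) vector and each row of b (n, d)."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return b @ a


def scl_anchor_loss(h_o, h_pos, h_neg, h_hard, tau=0.1):
    """Loss for one original sample h_o.

    h_pos: (P, d) positives, h_neg: (M, d) in-batch negatives, h_hard: (d,) hard negative.
    Each positive logit is compared against a denominator built from the
    negatives plus the hard negative (the positive is not in the denominator).
    """
    pos_logits = cosine(h_o, h_pos) / tau                 # (P,)
    neg_logits = cosine(h_o, h_neg) / tau                 # (M,)
    hard_logit = cosine(h_o, h_hard[None, :])[0] / tau    # scalar
    log_denominator = np.logaddexp.reduce(np.append(neg_logits, hard_logit))
    return -np.mean(pos_logits - log_denominator)


def scl_loss(batch, tau=0.1):
    """Average the per-anchor loss over a batch of (h_o, h_pos, h_neg, h_hard) tuples."""
    return float(np.mean([scl_anchor_loss(*item, tau=tau) for item in batch]))
```

Because the positive term is excluded from the denominator, the loss can become negative when positives are much closer than negatives; only its gradient direction matters for training.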

We combine the demonstration-based MLM loss and the class contrastive discrimination loss in a weighted sum to obtain the overall loss function $L$:

$$
L=\alpha L_{mlm}+(1-\alpha)L_{SCL}
$$

where $L_{mlm}$ and $L_{SCL}$ denote the loss functions of the two tasks. In our experiments, we set $\alpha=0.6$.

3.2 Downstream Few-shot NER

After the pre-training stage, our model has acquired different aspects of information. In this section, we formally present the notation and the techniques of MSDP in the fine-tuning stage. Figure 3 illustrates the overall framework, which consists of two steps: span extraction and entity classification.

3.2.1 Notations. We denote the training and test sets by $\mathcal{D}_{train}$ and $\mathcal{D}_{test}$, respectively. Both take the form of meta-learning datasets: each consists of multiple episodes, and each episode $\mathcal{E}=(\mathcal{S},\mathcal{Q})$ consists of a support set $\mathcal{S}$ and a query set $\mathcal{Q}$. A sample $(X,\mathcal{Y})$ in the support or query set consists of an input sentence $X$ and a label set $\mathcal{Y}=\{(s_{j},e_{j},y_{j})\}_{j=1}^{N}$, where $N$ is the number of spans, $s_{j}$ and $e_{j}$ are the start and end positions of the $j$-th span, and $y_{j}$ is its category. $\boldsymbol{h}$ denotes the hidden representation obtained by encoding the input text.

3.2.2 Span Extractor. The span extractor aims to detect all entity spans. We initialize the encoder with the pre-trained parameters, encode the input sentence into hidden representations $\boldsymbol{h}$, and compute attention scores between token representations to determine the start and end tokens of each entity span. Following previous works [47, 65], we optimize the encoder with a span-based cross-entropy loss. We introduce weight matrices $W_{q}$, $W_{k}$, $W_{v}$ and biases $b_{q}$, $b_{k}$ for the attention mechanism, so that $q_{i}=W_{q}h_{i}+b_{q}$ and $k_{j}=W_{k}h_{j}+b_{k}$, and compute the score of the span from the $i$-th to the $j$-th token as $f(i,j)=q_{i}^{T}k_{j}+W_{v}(h_{i}+h_{j})$. Let $\Omega_{i,j}$ indicate whether the span bounded by $i$ and $j$ is an entity ($\Omega_{i,j}=1$) or not ($\Omega_{i,j}=0$). The span-based cross-entropy can then be expressed as:

$$
\mathcal{L}_{span}=\log\Big(1+\sum_{1\leq i<j\leq L}\exp\big((-1)^{\Omega_{i,j}}f(i,j)\big)\Big)
$$
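
The span scorer and its loss can be sketched in NumPy as follows. The sketch assumes row-vector conventions ($q = hW_q + b_q$) and treats $W_v$ as a vector that maps $h_i + h_j$ to a scalar. One labeled deviation: it sums over $i \le j$ so that single-token spans such as "Mike" also receive a score, whereas the equation as printed uses $i < j$.

```python
import numpy as np


def span_scores(h, W_q, b_q, W_k, b_k, w_v):
    """f(i, j) = q_i^T k_j + w_v . (h_i + h_j) for every token pair.

    h: (L, d) token representations; W_q, W_k: (d, d_head); b_q, b_k: (d_head,);
    w_v: (d,) vector standing in for W_v, projecting h_i + h_j to a scalar.
    """
    q = h @ W_q + b_q                          # (L, d_head)
    k = h @ W_k + b_k                          # (L, d_head)
    v = h @ w_v                                # (L,), w_v . h_i for each token
    return q @ k.T + v[:, None] + v[None, :]   # (L, L)


def span_loss(f, omega):
    """log(1 + sum_{i<=j} exp((-1)^{Omega_ij} f(i, j))).

    omega: (L, L) 0/1 matrix with 1 where tokens i..j form a gold entity span.
    Gold spans contribute exp(-f), so the loss falls as their scores rise;
    non-entity spans contribute exp(+f), so the loss falls as their scores drop.
    """
    i, j = np.triu_indices(f.shape[0])         # all pairs with i <= j
    signed = np.where(omega[i, j] == 1, -f[i, j], f[i, j])
    # log(1 + sum exp(x)) computed stably as logaddexp(0, logsumexp(x))
    return float(np.logaddexp(0.0, np.logaddexp.reduce(signed)))
```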

3.2.3 Entity Classification. In the second stage, we classify the entity spans extracted in the first stage. Unlike previous methods, which compute only the original prototype, we further decompose class-oriented prototypes and contextual fusion prototypes with two masking strategies. These prototypes introduce different information to assist classification, thus alleviating the prototype classification disarray problem.

- Semantic masking strategies. First, we introduce two novel semantic masking strategies, which are used to construct the semantic decomposition prototypes.

• Class-oriented Masking: Given an input sentence $X=\{x_{1},x_{2},\dots,x_{L}\}$, we replace every entity span in $X$ whose label is not $y$ with [MASK] tokens to obtain the class-specific input $X_{\mathrm{cs}}^{y}$. Shielding the other entities in this way forces the model to focus on the information of the specific class. For example, as shown in Figure 3, we replace the "school" entity of class "LOC" and the "Sunday" entity of class "DAY" with [MASK] tokens to obtain the "PER" class-oriented input "Mike wants to go to [MASK] on [MASK]".

• Contextual Fusion Masking: We replace all entities in a sentence with [MASK] tokens, allowing the model to focus more on contextual fusion information. For the example sentence in Figure 3, masking all entities yields $X_{\mathrm{ctx}}=$ "[MASK] wants to go to [MASK] on [MASK]".

- Prototype Constructing. After decomposing the inputs into these two types carrying different information, we construct, for each class in the entity classification stage, the original prototype and two extra prototypes (upper-right corner of Figure 3).

For the original prototype, we sum the representations of the start and end tokens of an entity span to obtain the span boundary representation:

$$
\boldsymbol{u}_{j}=\boldsymbol{h}_{s_{j}}+\boldsymbol{h}_{e_{j}}
$$

where $\boldsymbol{u}_{j}$ is the representation of the $j$-th span in the sentence, $\boldsymbol{h}_{i}$ denotes the representation of the $i$-th token, and $s_{j}$ and $e_{j}$ are the start and end positions of the $j$-th span, respectively.

For the class-oriented prototype, we apply the class-oriented masking strategy for class $t$ to $X$ to obtain $X_{\mathrm{cs}}^{t}$, and compute the span representation $\boldsymbol{u}_{j}^{\mathrm{cs}}$ in $X_{\mathrm{cs}}^{t}$ according to Equation 6, i.e., as the sum of its start and end token representations.

For the contextual fusion prototype, we apply the contextual fusion masking strategy, which masks all entities, to the original sentence $X$ to obtain $X_{\mathrm{ctx}}$, and then compute the span representation $\boldsymbol{u}_{j}^{\mathrm{ctx}}$ by averaging the representations of all its tokens:

$$
\boldsymbol{u}_{j}^{\mathrm{ctx}}=\frac{1}{L}\sum_{i=1}^{L}\boldsymbol{h}_{i}
$$

where $L$ denotes the number of tokens in $X_{\mathrm{ctx}}$ and $\boldsymbol{h}_{i}$ is the representation of its $i$-th token.
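
The two masking strategies and the three span representations can be sketched as below. The `encode` argument is a hypothetical stand-in for the pre-trained BERT encoder (any function from a token list to an `(L, d)` array); spans are `(start, end, label)` triples with inclusive ends, and each masked token keeps its position so span indices stay valid.

```python
import numpy as np

MASK = "[MASK]"


def class_oriented_mask(tokens, spans, target):
    """X_cs^y: mask every entity span whose label is not the target class."""
    out = list(tokens)
    for s, e, lab in spans:
        if lab != target:
            out[s:e + 1] = [MASK] * (e - s + 1)
    return out


def contextual_fusion_mask(tokens, spans):
    """X_ctx: mask every entity span, leaving only the context words."""
    out = list(tokens)
    for s, e, _ in spans:
        out[s:e + 1] = [MASK] * (e - s + 1)
    return out


def span_representations(encode, tokens, spans, j):
    """Return (u_j, u_j^cs, u_j^ctx) for the j-th gold span."""
    s, e, lab = spans[j]
    h = encode(tokens)
    u = h[s] + h[e]                                   # boundary sum on the original X
    h_cs = encode(class_oriented_mask(tokens, spans, lab))
    u_cs = h_cs[s] + h_cs[e]                          # same boundary sum on X_cs^{y_j}
    u_ctx = encode(contextual_fusion_mask(tokens, spans)).mean(axis=0)  # mean over X_ctx
    return u, u_cs, u_ctx
```

On the Figure 3 sentence "Mike wants to go to school on Sunday", `class_oriented_mask(..., "PER")` returns "Mike wants to go to [MASK] on [MASK]" and `contextual_fusion_mask` returns "[MASK] wants to go to [MASK] on [MASK]".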

We then construct three different prototype vectors by averaging the representations of all entities of the same class in the support set:

$$
\boldsymbol{c}_{t}=\frac{\sum_{(X,\mathcal{Y})\in\mathcal{S}}\sum_{(s_{j},e_{j},y_{j})\in\mathcal{Y}}\mathbb{I}(y_{j}=t)\,\boldsymbol{u}}{\sum_{(X,\mathcal{Y})\in\mathcal{S}}\sum_{(s_{j},e_{j},y_{j})\in\mathcal{Y}}\mathbb{I}(y_{j}=t)}
$$

where $\mathbb{I}(\cdot)$ is the indicator function; substituting $\boldsymbol{u}_{j}$, $\boldsymbol{u}_{j}^{\mathrm{cs}}$, or $\boldsymbol{u}_{j}^{\mathrm{ctx}}$ for $\boldsymbol{u}$ yields the three semantic prototypes.
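
Prototype construction is a per-class mean over support-set spans. A minimal sketch, assuming the span representations have already been computed (the function name is hypothetical):

```python
from collections import defaultdict

import numpy as np


def build_prototypes(support_spans):
    """c_t = mean of the span representations u over support spans labelled t.

    support_spans: iterable of (label, u) pairs, where u may be u_j, u_j^cs, or u_j^ctx.
    """
    sums, counts = {}, defaultdict(int)
    for label, u in support_spans:
        sums[label] = sums.get(label, 0) + np.asarray(u, dtype=float)
        counts[label] += 1
    return {t: sums[t] / counts[t] for t in sums}
```

Calling it once with the $\boldsymbol{u}_j$ vectors, once with $\boldsymbol{u}_j^{\mathrm{cs}}$, and once with $\boldsymbol{u}_j^{\mathrm{ctx}}$ gives the three prototype sets.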

After constructing the three semantic prototypes, we classify spans with a metric-based approach and optimize the model parameters based on the distance between entity representations and class prototypes:

$$
\mathcal{L}_{cls}=\sum_{(X,\mathcal{Y})\in\mathcal{S}}\sum_{(s_{j},e_{j},y_{j})\in\mathcal{Y}}-\log p(y_{j}\mid s_{j},e_{j})
$$

where

$$
p(y_{j}\mid s_{j},e_{j})=\mathrm{softmax}\big(-d(\boldsymbol{u}_{j},\boldsymbol{c}_{y_{j}})\big)
$$

is the predicted probability distribution over classes. The distance function $d(\cdot,\cdot)$ is based on cosine similarity.
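
A sketch of the metric-based classifier and $\mathcal{L}_{cls}$ follows. It assumes $d(u, c) = 1 - \cos(u, c)$, one common way to turn cosine similarity into a distance, and applies no temperature scaling; neither choice is specified in the text.

```python
import numpy as np


def cosine_distance(u, C):
    """d(u, c_t) = 1 - cos(u, c_t) for every prototype row of C (an assumed choice)."""
    u = u / np.linalg.norm(u)
    C = C / np.linalg.norm(C, axis=1, keepdims=True)
    return 1.0 - C @ u


def class_probs(u, prototypes, labels):
    """Softmax over classes of -d(u, c_t), in the order given by labels."""
    logits = -cosine_distance(u, np.stack([prototypes[t] for t in labels]))
    logits -= logits.max()          # numerical stability
    p = np.exp(logits)
    return p / p.sum()


def cls_loss(spans, prototypes):
    """L_cls = sum over labelled spans (u, y) of -log p(y | span)."""
    labels = sorted(prototypes)
    return float(sum(-np.log(class_probs(u, prototypes, labels)[labels.index(y)])
                     for u, y in spans))
```

At inference time, a span would be assigned the class with the highest probability (equivalently, the nearest prototype), computed from the original prototypes only.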

3.3 Training and Inference of MSDP

We first perform the two task-specific pre-training tasks to learn reliable entity boundary information and entity representations of different classes. For fine-tuning on few-shot NER, we initialize the BERT encoder with the pre-trained parameters.

In the downstream training phase, given an episode $(\mathcal{S},\mathcal{Q})$ from the training set $\mathcal{D}_{train}$, we compute $\mathcal{L}_{span}$ and the three types of prototypes on the support set $\mathcal{S}$ and $\mathcal{L}_{cls}$ on the query set $\mathcal{Q}$, and train the two objectives jointly. Following SpanProto [65], we optimize only $\mathcal{L}_{span}$ during the first $T$ steps and jointly optimize $\mathcal{L}_{span}$ and $\mathcal{L}_{cls}$ afterwards.
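
The two-phase schedule can be expressed as a small helper. Here `warmup_steps` plays the role of $T$, and combining the two losses as an unweighted sum is an assumption, since the text does not give weights; the function name is hypothetical.

```python
def finetune_loss(step, loss_span, loss_cls, warmup_steps):
    """Span-only objective for the first T steps, then L_span + L_cls jointly.

    The unweighted sum in the joint phase is an assumption of this sketch.
    """
    if step < warmup_steps:
        return loss_span
    return loss_span + loss_cls
```

Warming up on span extraction first means the classifier only starts training once the extractor produces reasonable candidate spans.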

Table 1: Evaluation dataset statistics.

| Dataset | Domain | # Sentences | # Classes |
|---|---|---|---|
| Few-NERD | Wikipedia | 188.2k | 66 |
| CoNLL03 | News | 20.7k | 4 |
| GUM | Wiki | 3.5k | 11 |
| WNUT | Social media | 5.6k | 6 |
| OntoNotes | Mixed | 159.6k | 18 |

In the testing phase, given an episode $\mathcal{E}=(\mathcal{S},\mathcal{Q})\in\mathcal{D}_{test}$, we construct prototypes on the support set $\mathcal{S}$ and perform span detection on the query set $\mathcal{Q}$. We then classify each extracted span by its distance to each class prototype. Note that only the original prototypes are used during inference.

4 EXPERIMENT

4.1 Datasets

Table 1 shows statistics of the original datasets used to construct the few-shot episodes. We evaluate our method on two widely used few-shot benchmarks, Few-NERD [14] and CrossNER [45].

Few-NERD: Few-NERD is annotated with 8 coarse-grained and 66 fine-grained entity types and has two few-shot settings, Intra and Inter. In the Intra setting, the entities in the training, development, and test sets belong to different coarse-grained types. In contrast, in the Inter setting, only the fine-grained entity types are mutually disjoint across the datasets. We use the episodes released by Ding et al. [14], which contain 20,000 episodes for training, 1,000 for validation, and 5,000 for testing. Each episode is an $N$-way $K\sim2K$-shot few-shot task.

CrossNER: CrossNER contains four domains: CoNLL-2003 [57] (News), GUM [73] (Wiki), WNUT-2017 [12] (Social), and OntoNotes [53] (Mixed). We randomly select two domains for training, one for validation, and the remaining one for testing. We use the public episodes constructed by Hou et al.

4.2 Baselines

For the baselines, we choose multiple strong approaches from both the one-stage and two-stage paradigms. 1) One-stage paradigm: ProtoBERT [59], StructShot [70], NNShot [70], CONTAINER [11], and L-TapNet+CDT [28]. 2) Two-stage paradigm: ESD [66], MAML-ProtoNet [47], and SpanProto [65]. Due to space limitations, more details