IDOL: Indicator-oriented Logic Pre-training for Logical Reasoning

Abstract

In the field of machine reading comprehension (MRC), existing systems have surpassed average human performance on many tasks such as SQuAD. However, there is still a long way to go when it comes to logical reasoning. Although some methods have been put forward for it, they are either designed in a rather complicated way or rely too heavily on external structures. In this paper, we propose IDOL (InDicator-Oriented Logic Pre-training), an easy-to-understand but highly effective further pre-training task that logically strengthens pre-trained models with the help of 6 types of logical indicators and a logically rich dataset, LGP (LoGic Pre-training). IDOL achieves state-of-the-art performance on ReClor and LogiQA, the two most representative benchmarks in logical reasoning MRC. It is also shown to generalize to different pre-trained models and to other types of MRC benchmarks such as RACE and $\mathrm{SQuAD}2.0$, while keeping competitive general language understanding ability, as tested on tasks from GLUE. Besides, at the beginning of the era of large language models, we compare IDOL with several of them, such as ChatGPT, and find that IDOL still shows an advantage.¹

1 Introduction

With the development of pre-trained language models, a large number of tasks in natural language understanding are now handled quite well. However, those tasks place more emphasis on assessing basic abilities of the models, such as word-pattern recognition, and less on advanced abilities such as reasoning over texts (Helwe et al., 2021).

In recent years, an increasing number of challenging tasks have been proposed. For sentence-level reasoning, there is a great variety of natural language inference benchmarks, such as QNLI (Demszky et al., 2018) and MNLI (Williams et al., 2018). Although their construction processes differ, nearly all of these datasets evaluate models with binary or three-way classification tasks that require reasoning over two sentences. For passage-level reasoning, the most difficult benchmarks are generally recognized to be those related to logical reasoning MRC, which requires question-answering systems to fully understand the whole passage, extract information related to the question, and reason across different text spans to draw new conclusions. In this area, the most representative benchmarks are machine reading comprehension datasets such as ReClor (Yu et al., 2020) and LogiQA (Liu et al., 2020).

Considering that there are very few optimization strategies for the pre-training stage, and that existing methods are designed in rather complex ways that are difficult for other researchers to follow and extend, we propose IDOL, an easy-to-understand but highly effective pre-training task that strengthens pre-trained models in terms of logical reasoning. We apply it to our customized dataset LGP, which is rich in logical information. Moreover, we experiment with various pre-trained models and many different downstream tasks, and show that IDOL is competitive while remaining model- and task-agnostic.

Recently, ChatGPT has attracted worldwide attention due to its impressive performance in question answering. Thus, we also designed an experiment comparing IDOL with a series of large language models (LLMs), including ChatGPT.

The contributions of this paper are summarized as follows:

• Put forward the definitions of 5 different types of logical indicators. Based on these, we construct the dataset LGP for logical pre-training and probe the impact of each type of logical indicator through a series of ablation experiments.

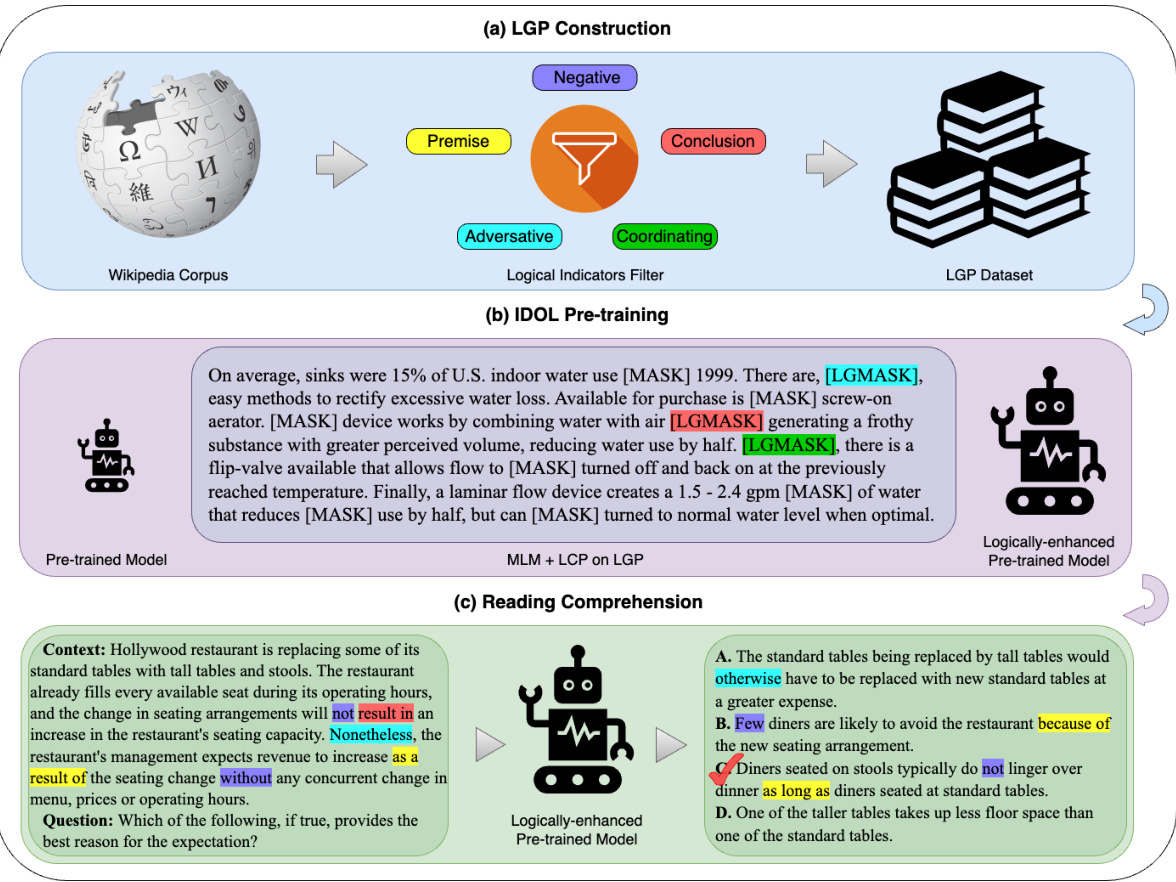

Figure 1: A diagram illustrating the three steps of our method: (a) construct the logically rich dataset LGP from Wikipedia, (b) further pre-train models to improve their logical reasoning ability, and (c) answer logical reasoning MRC questions with the help of logical indicators appearing in both the context and the answer choices. See Section 4 for more details on our method.

• Design an indicator-oriented further pre-training method named IDOL, which aims to enhance the logical reasoning ability of pre-trained models. It achieves state-of-the-art performance in logical reasoning MRC and shows improvements in general MRC and general language understanding evaluations.

• Provide the first pilot comparison between fine-tuning traditional pre-trained models and prompting LLMs in the field of logical reasoning MRC.

In terms of pre-training, to the best of our knowledge, only two approaches have been presented in published papers: MERIt and LogiGAN. The MERIt team generated a dataset from the one provided by Qin et al. (2021), which contains Wikipedia passages annotated with entities and relations, and then optimized the model on it with contrastive learning (Jiao et al., 2022). The researchers behind LogiGAN use a statement recovery task to enhance the logic understanding ability of generative pre-trained language models such as T5 (Pi et al., 2022).

2 Related Work

2.1 Logical Reasoning

To help reasoning systems perform better on reading comprehension tasks that focus on logical reasoning, research institutions around the world have put forward a great many methods. Unsurprisingly, most of these optimization approaches revolve around the fine-tuning phase, while far fewer methods are designed for further pre-training.

For optimizing models at the fine-tuning phase, dozens of methods have been proposed as far as we know. For example, LReasoner puts forward a context extension framework based on logical equivalence laws, including the contraposition and transitive laws (Wang et al., 2022a). Another example is Logiformer, which introduces a two-stream architecture containing a syntax branch and a logical branch to better model the relationships among distant logical units (Xu et al., 2022).

Table 1: Libraries and examples of all types of logical indicators.

| Type | Library | Example |
|---|---|---|
| PMI | given that, seeing that, for the reason that, owing to, as indicated by, on the grounds that, on account of, considering, because of, due to, now that, may be inferred from, by virtue of, in view of, for the sake of, thanks to, as long as, based on that, as a result of, considering that, inasmuch as, if and only if, according to, in | The real world contains no political entity exercising literally total control over even one such aspect. This is because any system of control is inefficient, and, therefore, its degree of control is partial. |
| CLI | conclude that, entail that, infer that, that is why, therefore, thereby, wherefore, accordingly, hence, thus, consequently, whence, so that, it follows that, imply that, as a result, suggest that, prove that, as a conclusion, conclusively, for this reason, as a consequence, on that account, in conclusion, to that end, because of this, that being so, ergo, in this way, in this manner, eventually | In the United States, each bushel of corn produced might result in the loss of as much as two bushels of topsoil. Moreover, in the last 100 years, the topsoil in many states, which once was about fourteen inches thick, has been eroded to only six or eight inches. |
| NTI | not, neither, none of, unable, few, little, hardly, merely, seldom, without, never, nobody, nothing, nowhere, rarely, scarcely, barely, no longer, isn't, aren't, wasn't, weren't, can't, cannot, couldn't, won't, wouldn't, don't, doesn't, didn't, haven't, hasn't | A high degree of creativity and a high level of artistic skill are seldom combined in the creation of a work of art. |
| ATI | although, though, but, nevertheless, however, instead of, nonetheless, yet, rather, whereas, otherwise, conversely, on the contrary, even, nevertheless, despite, in spite of, in contrast, even if, even though, unless, regardless of, reckless of | This advantage accruing to the sentinel does not mean that its watchful behavior is entirely self-interested. On the contrary, the sentinel's behavior is an example of animal behavior motivated at least in part by altruism. |
| CNI | and, or, nor, also, moreover, in addition, on the other hand, meanwhile, further, afterward, next, besides, additionally, meantime, furthermore, as well, simultaneously, either, both, similarly, likewise | A graduate degree in policymaking is necessary to serve in the presidential cabinet. In addition, everyone in the cabinet must pass a security clearance. |

2.2 Pre-training Tasks

As NLP enters the era of pre-training, more and more researchers are working on the design of pre-training tasks, especially different masking strategies. For instance, Cui et al. (2020) apply Whole Word Masking (WWM) to Chinese BERT and achieve substantial improvements. WWM changes the masking strategy of the original masked language modeling (MLM) task so that all the tokens constituting a word with complete meaning are masked together, instead of a single token. In addition, Lample and Conneau (2019) extend MLM to parallel data as Translation Language Modeling (TLM), which randomly masks tokens in both the source and target sentences, written in different languages, simultaneously. Their results show that TLM helps improve the alignment between different languages.
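A minimal Python sketch can illustrate how WWM differs from token-level masking. It assumes WordPiece-style sub-tokens, where a `##` prefix marks a continuation piece. For Chinese text, word boundaries would instead come from a word segmenter. The helper names `group_into_words` and `whole_word_mask` are hypothetical. The sketch also omits BERT's 80/10/10 replacement scheme, so it is not the implementation used by Cui et al. (2020).

```python
import random


def group_into_words(tokens):
    """Group sub-token indices into words; '##' marks a WordPiece continuation piece."""
    words = []
    for i, tok in enumerate(tokens):
        if tok.startswith("##") and words:
            words[-1].append(i)  # continuation piece joins the current word
        else:
            words.append([i])  # a new word starts here
    return words


def whole_word_mask(tokens, mask_prob, rng, mask_token="[MASK]"):
    """Whole Word Masking: each selected word has all of its sub-tokens masked together."""
    masked = list(tokens)
    for word in group_into_words(tokens):
        if rng.random() < mask_prob:
            for i in word:
                masked[i] = mask_token
    return masked


tokens = ["the", "phil", "##har", "##monic", "played"]
print(group_into_words(tokens))  # [[0], [1, 2, 3], [4]]
print(whole_word_mask(tokens, 0.5, random.Random(0)))
```

Token-level MLM would instead draw a separate coin for each sub-token. As a result, `phil` could be masked while `##har` stays visible, which leaks part of the word.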

3 Preliminaries

3.1 Text Logical Unit

A single word is admittedly the most basic unit of a piece of text, but its meaning varies with context. Xu et al. (2022) define logical units as split sentence spans that carry independent and complete semantics. In this paper, we introduce a much richer set of logical indicators of different types, which link not only clauses but also more fine-grained text spans. We therefore extend this definition to shorter pieces of text such as entities.

3.2 Logical Indicators

By analyzing the passages in logical reasoning MRC and reasoning-related materials such as debate scripts, we found that the relations between logic units (such as entities or events) can be summarized into the following 5 main categories. All of these relations are usually expressed via a series of logical indicators. After consulting previous work such as Pi et al. (2022) and the Penn Discourse TreeBank 2.0 (PDTB 2.0) (Prasad et al., 2008), we constructed an indicator library for each category. Table 1 lists the indicators in each library together with examples.

• Premise/Conclusion Indicator (PMI/CLI) The first two types of logical indicators pertain to premises and conclusions. These indicators signal the logical relationship between statements. For instance, premise expressions such as “due to” indicate that the logic unit following the keyword serves as the reason or explanation for the unit preceding it. Conversely, conclusion phrases like “result in” suggest an inverse relationship, implying that the logic unit after the keyword is a consequence or outcome of the preceding unit.

• Negative Indicator (NTI) Negative indicators, such as “no longer”, play a crucial role in text logic by negating affirmative logic units. They have the power to significantly alter the meaning of a statement. For example, consider the sentences “Tom likes hamburgers.” and “Tom no longer likes hamburgers.” These two sentences have nearly opposite meanings, solely due to the presence of the indicator “no longer”.

• Adversative Indicator (ATI) Certain expressions, such as “however”, are commonly used between sentences to signal a shift or change in the narrative. They are useful for indicating an alteration or consequence of a preceding event, and they help cover this frequent kind of relation among logic units.

• Coordinating Indicator (CNI) The coordinating relation is undoubtedly the most prevalent type of relationship between any two logic units. Coordinating indicators are used to convey that the units surrounding them possess the same logical status or hold equal importance. These indicators effectively demonstrate the coordination or parallelism between the connected logic units.

4 Methodology

4.1 LGP Dataset Construction

To further pre-train models with IDOL, we constructed the dataset LGP (LoGic Pre-training) from the most popular unannotated corpus, English Wikipedia.² We first split the articles into paragraphs and discarded those whose length (after tokenization) was 5 or fewer. To provide as much logical information as possible, we used the logical indicators listed in Table 1 to filter the Wikipedia paragraphs. During this procedure, we temporarily removed extremely high-frequency indicators such as “and”. Otherwise, too many paragraphs with unacceptably low logical density would have been kept. Then, we iterated over every logical keyword and replaced it with our customized special token [LGMASK] with a probability of 70%.
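The filtering and masking steps above can be sketched in Python. This illustration rests on several assumptions and is not the authors' pipeline:

- The indicator libraries are tiny hypothetical subsets of Table 1.
- Length is measured in whitespace tokens rather than model tokens.
- Matching uses a case-insensitive regular expression that prefers longer phrases, so “as a result of” is read as a PMI rather than as the CLI “as a result”.

```python
import random
import re

# Hypothetical miniature indicator libraries (Table 1 lists the full ones).
# Very frequent indicators such as "and" are left out, mirroring the filtering step.
INDICATORS = {
    "PMI": ["due to", "because of", "given that", "as a result of"],
    "CLI": ["therefore", "thus", "as a result"],
    "NTI": ["not", "never", "no longer"],
    "ATI": ["however", "although", "but"],
    "CNI": ["moreover", "in addition"],
}

# Longest phrases first, so the regex alternation prefers "as a result of" over "as a result".
_PHRASES = sorted(
    ((phrase, kind) for kind, phrases in INDICATORS.items() for phrase in phrases),
    key=lambda pair: len(pair[0]),
    reverse=True,
)
_TYPE_OF = {phrase: kind for phrase, kind in _PHRASES}
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(phrase) for phrase, _ in _PHRASES) + r")\b",
    re.IGNORECASE,
)


def keep_paragraph(paragraph, min_len=6):
    """Keep paragraphs longer than 5 (whitespace) tokens that contain an indicator."""
    return len(paragraph.split()) >= min_len and _PATTERN.search(paragraph) is not None


def mask_indicators(paragraph, rng, p=0.7, mask="[LGMASK]"):
    """Replace each indicator with `mask` with probability p; return text and labels."""
    labels = []

    def replace(match):
        if rng.random() < p:
            labels.append(_TYPE_OF[match.group(0).lower()])
            return mask
        return match.group(0)  # indicator kept as plain text

    return _PATTERN.sub(replace, paragraph), labels


text = "Crops failed due to drought. As a result, prices rose; however, demand did not fall."
print(keep_paragraph(text))  # True
print(mask_indicators(text, random.Random(0), p=1.0))
```

With the default `p=0.7`, each occurrence is masked independently. The returned label list stays aligned, left to right, with the [LGMASK] tokens that were actually inserted.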

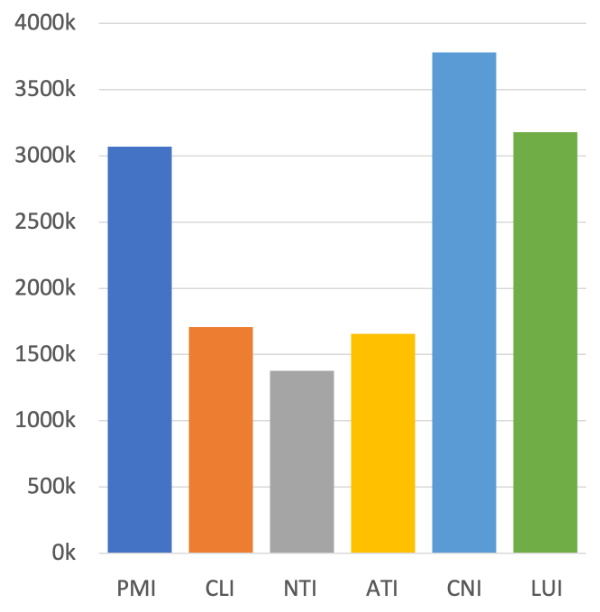

Figure 2: Counts of the 6 types of logical indicators in LGP for RoBERTa.

To model the ability to distinguish whether a masked position is logic-related or not, we introduced a sixth logical indicator type, the Logic Unrelated Indicator (LUI). Based on this, we randomly replaced 0.6% of the tokens other than logical indicators with [LGMASK]. Afterward, the labels for the logical category prediction (LCP) task were generated from the corresponding logic types of all the [LGMASK] tokens. Taking RoBERTa (Liu et al., 2019) as an example, the resulting dataset LGP contains over 6.1 million samples. Figure 2 shows the number of logical indicators of each type.
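The LUI step can be sketched in the same spirit. The function `add_lui_masks` is hypothetical. It assumes the text is already a token list in which the previous step has masked the indicators. The `protected` argument holds the positions of indicators that survived unmasked, so they are never relabeled as LUI. The output gives one category label per [LGMASK], in left-to-right order.

```python
import random


def add_lui_masks(tokens, indicator_labels, protected, rng, p=0.006, mask="[LGMASK]"):
    """
    tokens: token list in which logical indicators were already replaced by `mask`.
    indicator_labels: category of each existing `mask`, in left-to-right order.
    protected: indices of indicator tokens left unmasked (never turned into LUI).
    Returns the new token list and one label per `mask`, in left-to-right order.
    """
    if tokens.count(mask) != len(indicator_labels):
        raise ValueError("need exactly one label per existing [LGMASK]")
    existing = iter(indicator_labels)
    out, labels = [], []
    for i, tok in enumerate(tokens):
        if tok == mask:
            out.append(tok)
            labels.append(next(existing))  # indicator masked in the previous step
        elif i not in protected and rng.random() < p:
            out.append(mask)
            labels.append("LUI")  # a logic-unrelated position
        else:
            out.append(tok)
    return out, labels


sample = ["it", "did", "not", "rain", "[LGMASK]", "we", "stayed"]
print(add_lui_masks(sample, ["CLI"], {2}, random.Random(0)))
```

At the paper's rate of 0.6%, most short samples gain no LUI mask at all. Setting `p=1.0` is a quick way to check that indicator labels and LUI labels interleave correctly.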

4.2 IDOL Pre-training

4.2.1 Logical Category Prediction

As introduced in Sections 3.2 and 4.1, we define a logic-related special mask token [LGMASK], which takes the place of 6 types of logical indicators: PMI, CLI, NTI, ATI, CNI, and LUI. During the forward pass of further pre-training, the models need to predict the corresponding logical category of each [LGMASK], much as masked tokens are predicted in the token classification task of standard Masked Language Modeling (MLM) (Devlin et al., 2019).

When predicting the correct logical type of a given [LGMASK], the models learn to analyze the relationship among the logical units around the special token. They also learn to judge from the whole context whether any logical relation holds. As a result, the pre-trained models gradually acquire a stronger ability to reason over texts.
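LCP is framed like MLM token classification. One plausible way to feed its labels to a model is therefore a per-token target sequence in which only [LGMASK] positions carry a class index. The sketch below makes two illustrative choices that do not come from the paper. It assumes a particular class order, and it uses -100 as the "ignore" value, which matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
# The six LCP classes; this index order is an illustrative assumption.
LCP_CLASSES = ["PMI", "CLI", "NTI", "ATI", "CNI", "LUI"]
CLASS_ID = {name: idx for idx, name in enumerate(LCP_CLASSES)}
IGNORE = -100  # positions with this target are skipped by the loss


def lcp_targets(tokens, labels, mask="[LGMASK]"):
    """Align per-[LGMASK] category labels to a per-token target sequence."""
    if tokens.count(mask) != len(labels):
        raise ValueError("need exactly one label per [LGMASK] token")
    it = iter(labels)
    return [CLASS_ID[next(it)] if tok == mask else IGNORE for tok in tokens]


print(lcp_targets(["Prices", "rose", "[LGMASK]", "demand", "fell"], ["ATI"]))
# [-100, -100, 3, -100, -100]
```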

Moreover, we use the cross-entropy loss (CELoss) to evaluate the prediction of the logical categories. The loss function for LCP is given in Equation (1), where $n$ is the number of samples, $m$ is the number of [LGMASK] tokens in the $i$-th sample, $y_{i,j}$ is the model's prediction for the $j$-th [LGMASK] in the $i$-th sample, and $\hat{y}_{i,j}$ denotes the corresponding ground truth.

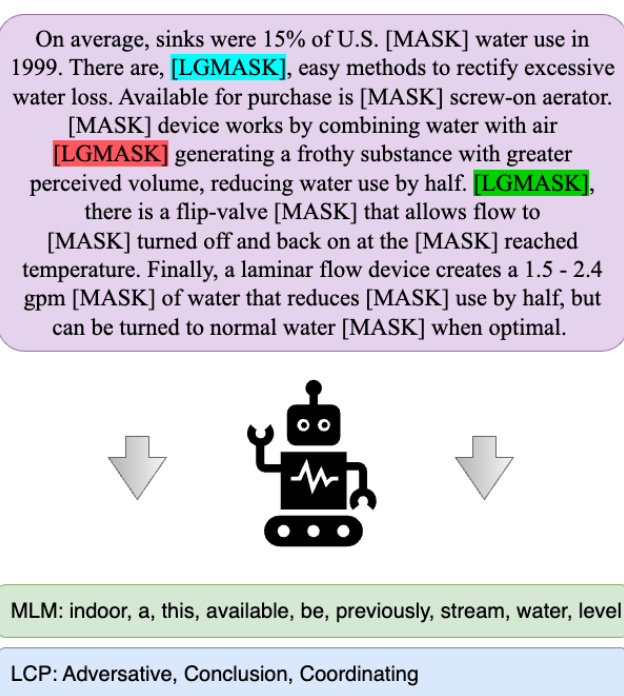

Figure 3: An example of pre-training with IDOL. The model needs to recover the tokens replaced by [MASK] (MLM) and predict the category of each logical indicator masked by [LGMASK] (LCP) in the meantime.

$$
\mathcal{L}_{\mathrm{LCP}}=\sum_{i=1}^{n}\frac{1}{m}\sum_{j=1}^{m}\mathbf{CELoss}(y_{i,j},\hat{y}_{i,j}) \tag{1}
$$
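Equation (1) can be checked numerically with a dependency-free Python sketch. Each sample's cross-entropy terms are averaged over that sample's own $m$ [LGMASK] tokens, and the averages are then summed over samples. `cross_entropy` is a numerically stable log-sum-exp version written for this illustration. Skipping samples that contain no [LGMASK] is an assumption, since Equation (1) leaves that case undefined.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one prediction: log-sum-exp of the logits minus the target logit."""
    top = max(logits)  # subtract the max for numerical stability
    log_z = top + math.log(sum(math.exp(x - top) for x in logits))
    return log_z - logits[target]


def lcp_loss(batch):
    """
    Equation (1): sum over samples of the mean CE over that sample's [LGMASK] tokens.
    batch: list of samples; each sample is a list of (logits, target_class) pairs.
    """
    total = 0.0
    for sample in batch:
        m = len(sample)  # number of [LGMASK] tokens in this sample
        if m == 0:
            continue  # assumption: samples without [LGMASK] contribute nothing
        total += sum(cross_entropy(lg, t) for lg, t in sample) / m
    return total


uniform = [0.0] * 6  # equal logits over the six LCP classes
batch = [[(uniform, 0), (uniform, 5)], [(uniform, 1), (uniform, 2), (uniform, 3)]]
print(lcp_loss(batch), 2 * math.log(6))
```

With uniform logits over the six classes, every term equals $\log 6$. A batch of two samples therefore gives $2\log 6$, however many [LGMASK] tokens each sample holds. Note that, as written, Equation (1) sums rather than averages over the $n$ samples.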

4.2.2 IDOL

To avoid catastrophic forgetting, we combine the classic MLM task with the LCP task introduced above to form IDOL, a multi-task pre-training method for enhancing the logical reasoning ability of pre-trained models. To balance the effects of the two pre-training tasks, we introduce a hyperparameter $\lambda$ as the weight of the LCP loss. The proper $\lambda$ depends on the pre-trained language model used, and the empirical range is 0.7 to 0.9. The resulting IDOL pre-training loss is given in Equation (2). Figure 3 presents an example of IDOL pre-training, in which masked tokens and the classes of logical indicators are predicted simultaneously.

$$
\mathcal{L}_{\mathrm{IDOL}}=\lambda\cdot\mathcal{L}_{\mathrm{LCP}}+(1-\lambda)\cdot\mathcal{L}_{\mathrm{MLM}} \tag{2}
$$
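Equation (2) is a convex combination of the two losses. The hypothetical helper below makes that explicit. Its default `lam=0.8` is simply a value inside the empirical range of 0.7 to 0.9 reported above, not a recommended setting.

```python
def idol_loss(lcp_loss, mlm_loss, lam=0.8):
    """Equation (2): weight the LCP loss by lam and the MLM loss by (1 - lam)."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lambda must lie in [0, 1]")
    return lam * lcp_loss + (1.0 - lam) * mlm_loss


# Sweep the empirical range for a fixed pair of task losses.
for lam in (0.7, 0.8, 0.9):
    print(lam, idol_loss(0.5, 2.0, lam))
```

For example, with $\mathcal{L}_{\mathrm{LCP}}=0.5$, $\mathcal{L}_{\mathrm{MLM}}=2.0$ and $\lambda=0.8$, the combined loss is $0.8\cdot0.5+0.2\cdot2.0=0.8$. Because $\lambda\geq0.7$, LCP dominates the gradient while MLM is still kept in the objective to limit forgetting.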

5 Experiments

5.1 Baselines

With the rapid development of pre-training technology in recent years, there are many choices of backbone model. In this paper, we apply IDOL to BERT-large (Devlin et al., 2019), RoBERTa-large (Liu et al., 2019), ALBERT-xxlarge (Lan et al., 2020), and DeBERTa-v2-xxlarge (He et al., 2021) and will evaluate the models in

For logical reasoning MRC, we compare IDOL with several earlier but still competitive methods, including DAGN (Huang et al., 2021), AdaLoGN (Li et al., 2022), LReasoner (Wang et al., 2022b), Logiformer (Xu et al., 2022), and MERIt (Jiao et al., 2022). In addition, we compare IDOL with ChatGPT in a small-scale setting.

5.2 Datasets

First and foremost, since the aim of IDOL is to improve the logical reasoning ability of pre-trained models, the two most representative benchmarks, ReClor and LogiQA, serve as the primary evaluation.

Next, we use RACE (Lai et al., 2017) and SQuAD 2.0 (Rajpurkar et al., 2018), two classic machine reading comprehension datasets that do not specifically target reasoning ability. They help determine whether IDOL also improves other types of reading comprehension ability.

Last but not least, we also test the models pre-trained with IDOL on MNLI (Williams et al., 2018) and STS-B (Cer et al., 2017), two tasks from GLUE (Wang et al., 2018). These tests check that general language understanding ability is largely retained during logical enhancement. The evaluation metrics on STS-B are the Pearson correlation coefficient (Pear.) and Spearman's rank correlation coefficient (Spear.) on the development set. For MNLI, we report accuracy on the MNLI-m and MNLI-mm development sets.
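For reference, the two STS-B metrics can be computed without external libraries. Spearman's coefficient is the Pearson coefficient of the ranks, with tied values given their average rank. The function names are illustrative, and this sketch is not the official GLUE evaluation script.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the run of tied values
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the (average) ranks."""
    return pearson(ranks(x), ranks(y))


gold, pred = [1, 2, 3], [1, 4, 9]
print(pearson(gold, pred), spearman(gold, pred))
```

The usage lines show how the two metrics differ. The predictions are perfectly monotonic in the gold scores, so Spearman is 1, but they are not linear, so Pearson is slightly below 1.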

ReClor The problems in this dataset are collected from two American standardized tests, the LSAT and the GMAT, which ensures that the questions are difficult. Moreover, ReClor covers 17 classes of logical reasoning, including main idea inference, reasoning flaw detection, sufficient but unnecessary conditions, and so forth. Each problem consists of a passage, a question, and four answer candidates, like the one shown in the green section of Figure 1. There are 4638, 500, and 1000 data points in the training, development, and test sets, respectively. Accuracy is used to evaluate system performance.

LogiQA The main difference from ReClor is that the problems in LogiQA are based on the National Civil Servants Examination of China. Besides, it covers 5 main reasoning types, such as categorical reasoning and disjunctive reasoning. The training, development, and test sets contain 7376, 651, and 651 samples, respectively.

5.3 Implementation Detail

5.3.1 IDOL

During pre-training with IDOL, we ran the experiments on 8 Nvidia A100 GPUs. Since IDOL was applied to multiple pre-trained models, we provide ranges for some of the main hyperparameters. The whole training process consists of 10k to 20k steps with a warmup ratio of 0.1. The learning rate is warmed up to a peak value between 5e-6 and 3e-5, depending on the model, and then linearly decayed. As for batch size, we found that 1024 or 2048 works better for most models. Additionally, we use AdamW (Loshchilov and Hutter, 2017) as our optimizer with a weight decay of around 1e-3. For details of the software packages we used, please see the Appendix.
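The warmup-then-linear-decay schedule described above can be written as a small function. This sketch assumes the learning rate decays to zero at the final step, which is the shape produced by `get_linear_schedule_with_warmup` in Hugging Face Transformers. The paper does not state the final value, and the function name `lr_at` is hypothetical.

```python
def lr_at(step, total_steps, peak_lr, warmup_ratio=0.1):
    """Linear warmup to peak_lr over the first warmup_ratio of steps, then linear decay to 0."""
    warmup = int(total_steps * warmup_ratio)
    if warmup > 0 and step < warmup:
        return peak_lr * step / warmup  # warmup phase
    remaining = max(total_steps - warmup, 1)
    return peak_lr * max(0.0, (total_steps - step) / remaining)  # decay phase


# A 10k-step run with a peak learning rate of 1e-5, sampled at a few steps.
for step in (0, 500, 1000, 5500, 10000):
    print(step, lr_at(step, 10_000, 1e-5))
```

With 10k total steps, a peak of 1e-5, and the 0.1 warmup ratio, the learning rate rises linearly to 1e-5 over the first 1,000 steps. It then falls back to 5e-6 by step 5,500 and reaches 0 at step 10,000.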
