Better Combine Them Together! Integrating Syntactic Constituency and Dependency Representations for Semantic Role Labeling

Abstract

Structural syntax knowledge has been proven effective for semantic role labeling (SRL), yet existing works mostly use a single type of syntax, i.e., either a syntactic dependency tree or a constituency tree. In this paper, we explore the integration of heterogeneous syntactic representations for SRL. We first consider a TreeLSTM-based integration that collaboratively learns phrasal boundaries from the constituency tree and semantic relations from the dependency tree. We further introduce a label-aware GCN solution that simultaneously models syntactic edges and labels. Experimental results demonstrate that, by effectively combining the heterogeneous syntactic representations, our methods improve both span-based and dependency-based SRL. Our system also achieves new state-of-the-art SRL performance while bringing explainable task improvements.

1 Introduction

Semantic role labeling (SRL) aims to disclose the predicate-argument structure of a given sentence. Such shallow semantic structures have been shown to be highly useful for a wide range of downstream tasks in natural language processing (NLP), such as information extraction (Fader et al., 2011; Bastianelli et al., 2013), machine translation (Xiong et al., 2012; Shi et al., 2016) and question answering (Maqsud et al., 2014; Xu et al., 2020). Depending on whether an argument is recognized as a constituent phrasal span or as a syntactic dependency head token, prior works divide SRL into two types: span-based SRL, popularized in the CoNLL05/12 shared tasks (Carreras and Marquez, 2005; Pradhan et al., 2013), and dependency-based SRL, introduced in the CoNLL08/09 shared tasks (Surdeanu et al., 2008; Hajic et al., 2009). With various neural network methods, both types of SRL have achieved strong performance in recent years (FitzGerald et al., 2015; He et al., 2017; Fei et al., 2021a).

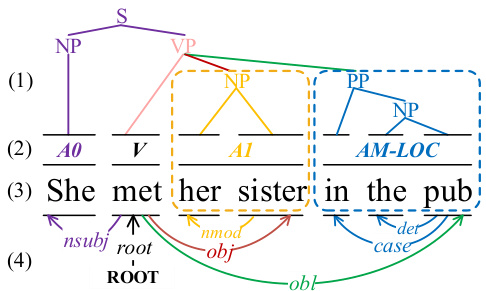

Figure 1: The mutual benefit of integrating both (1) the syntactic constituency and (4) the dependency structures for (2) SRL, illustrated on (3) an example sentence.

Syntactic features have been extensively verified to be highly effective for SRL (Pradhan et al., 2005; Punyakanok et al., 2008; Marcheggiani and Titov, 2017; Strubell et al., 2018; Zhang et al., 2019). In particular, syntactic dependency features have received the most attention, especially for dependency-based SRL, given its close relevance to the dependency structure (Roth and Lapata, 2016; He et al., 2018; Xia et al., 2019; Fei et al., 2021b). Most existing works focus on designing methods for incorporating dependency representations into SRL learning, such as TreeLSTM (Li et al., 2018; Xia et al., 2019) and graph convolutional networks (GCN) (Marcheggiani and Titov, 2017; Li et al., 2018). On the other hand, some efforts encode constituency representations to facilitate span-based SRL (Wang et al., 2019; Marcheggiani and Titov, 2020).

Yet almost all syntax-based SRL methods use one standalone syntactic tree, i.e., either a dependency or a constituency tree. Constituency and dependency syntax actually depict syntactic structure from different perspectives, and integrating these two heterogeneous representations can intuitively bring complementary advantages (Farkas et al., 2011; Yoshikawa et al., 2017; Zhou and Zhao, 2019). As exemplified in Figure 1, the dependency edges represent the relations between arguments and predicates, while the constituency structure¹ mainly locates the phrase boundaries of argument spans and then directs the paths to the predicate globally. Letting these two structures interact better guides the system toward the most appropriate granularity of phrasal spans (circled by the dotted box), while also ensuring route consistency between predicate-argument pairs. Unfortunately, there has been very limited exploration of heterogeneous syntax integration in SRL. For instance, Li et al. (2010) manually craft two types of discrete syntax features for a statistical model, and more recently Fei et al. (2020a) implicitly distill two heterogeneous syntactic representations into one unified neural model.

In this paper, we present two novel neural methods for explicitly integrating the two kinds of syntactic features for SRL. As shown in Figure 2, in our framework the constituency and dependency syntax encoders are built jointly as a unified block (the Heterogeneous Syntax Fuser, HeSyFu) and work closely with each other. In the first HeSyFu architecture (cf. Figure 3), we take two separate TreeLSTMs as the structure encoders for the two syntactic trees. Based on this framework, we try to answer the following questions:

$\blacktriangleright$ Q1. Does combining constituency and dependency syntax really improve SRL?

$\blacktriangleright$ Q2. If so, how large are the improvements for dependency-based and span-based SRL?

We further propose Const GCN and Dep GCN encoders to enhance the syntax encoding in HeSyFu, where the syntactic labels (i.e., dependency arc types and constituency node types) are modeled in a unified manner within a label-aware GCN, as illustrated in Figure 4. This lets us dig deeper:

▶ Q3. How different are the results when employing the TreeLSTM versus the GCN encoder?

▶ Q4. Can SRL be further improved by leveraging syntactic labels?

▶ Q5. What associations can be discovered between SRL structures and these heterogeneous syntactic structures?

To find the answers, we conduct extensive experiments on both span-based and dependency-based SRL benchmarks (i.e., CoNLL05/12 and CoNLL09). The results and analyses show that:

▶ A1. combining the two types of syntax information is more helpful than using either one alone;

▶ A2. the improvement for span-based SRL is more pronounced than for dependency-based SRL;

▶ A3. GCN performs better than TreeLSTM;

▶ A4. syntactic labels are quite helpful for SRL;

▶ A5. SRL and both kinds of syntactic structures are strongly associated and should be exploited for mutual benefit.

In our experiments, our SRL framework with the two proposed HeSyFu encoders achieves better results than the current best-performing systems and yields more explainable task improvements.

2 Related Work

The SRL task, which uncovers the shallow semantic structure (i.e., ‘who did what to whom, where and when’), was pioneered by Gildea and Jurafsky (2000) and popularized by PropBank (Palmer et al., 2005) and FrameNet (Baker et al., 1998). SRL is typically divided into span-based and dependency-based SRL according to the granularity of arguments (phrasal spans or dependency heads). Earlier efforts focus on designing hand-crafted features for machine learning methods (Pradhan et al., 2005; Punyakanok et al., 2008; Zhao et al., 2009b,a). Later SRL works mostly employ neural networks with distributed features (FitzGerald et al., 2015; Roth and Lapata, 2016; Marcheggiani and Titov, 2017; Strubell et al., 2018). Most high-performing systems model both types of SRL as a sequence labeling problem with the BIO tagging scheme (He et al., 2017; Ouchi et al., 2018; Fei et al., 2020c,b).

On the other hand, syntactic features are a highly effective enhancer of SRL performance, as verified empirically in a number of prior works (Marcheggiani et al., 2017; He et al., 2018; Swayamdipta et al., 2018; Zhang et al., 2019), since intuitively SRL shares much underlying structure with syntax. Dependency syntax features are usually injected into dependency-based SRL (Roth and Lapata, 2016; Marcheggiani and Titov, 2017; He et al., 2018; Kasai et al., 2019), while other works consider constituency syntax for span-based SRL (Wang et al., 2019; Marcheggiani and Titov, 2020).

Constituency and dependency syntax depict structural features from different angles, yet they are closely related linguistically. Related works have revealed mutual benefits of integrating these two heterogeneous syntactic representations for various NLP tasks (Collins, 1997; Charniak, 2000; Charniak and Johnson, 2005; Farkas et al., 2011; Yoshikawa et al., 2017; Zhou and Zhao, 2019; Strzyz et al., 2019; Kato and Matsubara, 2019). Unfortunately, there has been very limited exploration for SRL. For example, Li et al. (2010) construct discrete heterogeneous syntactic features for SRL, and the more recent work of Fei et al. (2020a) leverages knowledge distillation to inject heterogeneous syntax representations from various tree encoders into one model for enhancing span-based SRL. In this work, we consider an explicit integration of the two syntactic structures via two neural solutions. To our knowledge, this is the first work to thoroughly investigate the impact of heterogeneous syntax combination on the SRL task.

Various neural models have been proposed for encoding syntactic structures, such as attention mechanisms (Strubell et al., 2018; Zhang et al., 2019), TreeLSTM (Li et al., 2018; Xia et al., 2019) and GCN (Marcheggiani and Titov, 2017; Li et al., 2018; Marcheggiani and Titov, 2020). In this work, we take advantage of both TreeLSTM and GCN models for encoding constituency and dependency trees, as the two solutions of our HeSyFu encoders. It is worth noting that prior works use GCNs to encode dependency (Marcheggiani and Titov, 2017) and constituency (Marcheggiani and Titov, 2020) trees without modeling the syntactic labels in a unified manner. We therefore enhance the syntax GCN by simultaneously modeling the syntactic labels within the structure.

3 SRL Model

3.1 Task Modeling

Following prior works (Tan et al., 2018; Marcheggiani and Titov, 2020), our system aims to identify the arguments of a predicate and classify them into semantic roles, such as A0, A1, AM-LOC, etc. We denote the complete role set as $\mathcal{R}$ and adopt the BIO tagging scheme. Given a sentence $s=\{w_{1},\cdots,w_{n}\}$ and a predicate $w_{p}$, the model assigns each word $w_{i}$ a label $\hat{y}\in\mathcal{V}$, where $\mathcal{V}=(\{B,I\}\times\mathcal{R})\cup\{O\}$.² Note that each semantic argument corresponds to a word span $\{w_{j},\cdots,w_{k}\}$ $(1\le j\le k\le n)$.³
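
To make the tagging scheme concrete, the following Python sketch converts the argument spans of one predicate into BIO tags and back. It is an illustration rather than the authors' code: the function names `spans_to_bio` and `bio_to_spans` are hypothetical, and the sketch assumes 0-based, inclusive word indices (the text above uses 1-based indices) and non-overlapping arguments per predicate.

```python
from typing import List, Optional, Tuple

Span = Tuple[int, int, str]  # (start, end, role), 0-based and inclusive


def spans_to_bio(n: int, spans: List[Span]) -> List[str]:
    """Encode the arguments of one predicate as a BIO tag sequence of length n."""
    tags = ["O"] * n
    for start, end, role in spans:
        tags[start] = f"B-{role}"
        for k in range(start + 1, end + 1):
            tags[k] = f"I-{role}"
    return tags


def bio_to_spans(tags: List[str]) -> List[Span]:
    """Decode a BIO tag sequence back into (start, end, role) spans."""
    spans: List[Span] = []
    start: Optional[int] = None
    role = ""
    for i, tag in enumerate(tags + ["O"]):  # a trailing "O" closes the last open span
        if start is not None and tag != f"I-{role}":
            spans.append((start, i - 1, role))
            start = None
        if tag.startswith("B-") or (tag.startswith("I-") and start is None):
            # A stray I- tag (no open span) is repaired into a new span.
            start, role = i, tag[2:]
    return spans


tags = spans_to_bio(6, [(0, 1, "A0"), (3, 5, "AM-LOC")])
# → ['B-A0', 'I-A0', 'O', 'B-AM-LOC', 'I-AM-LOC', 'I-AM-LOC']
```

With $|\mathcal{R}|$ roles the label set has $2|\mathcal{R}|+1$ tags. Treating a stray `I-` tag as the start of a new span is one common repair convention, chosen here for simplicity.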

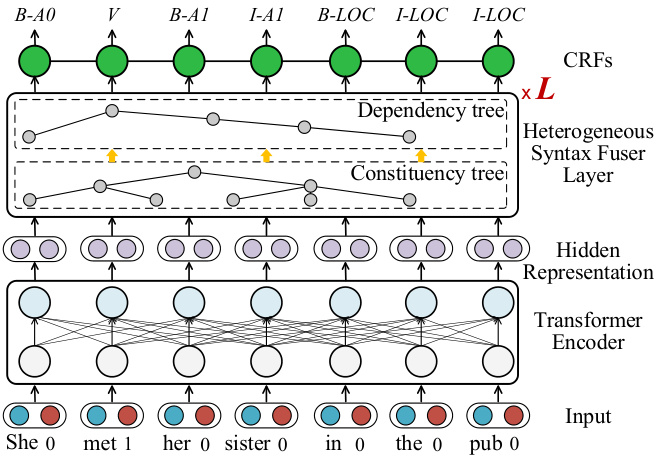

Figure 2: Overview of our SRL framework.

3.2 Framework

As illustrated in Figure 2, our SRL framework consists of four components: input representations, a Transformer encoder, a heterogeneous syntax fuser layer, and a CRF decoding layer.

Given an input sentence $s$ and a predicate word $w_{p}$ ($p$ is its position), the input representation $\boldsymbol{x}_{i}$ of each word $w_{i}$ is the concatenation ($\oplus$) of its word embedding $\boldsymbol{x}_{w_{i}}$ and a binary predicate embedding $\boldsymbol{x}_{(i==p)}$ indicating whether $w_{i}$ is the predicate:

$$
\boldsymbol{x}_{i}=\boldsymbol{x}_{w_{i}}\oplus\boldsymbol{x}_{(i==p)}.
$$
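
As a small illustration of the concatenation above, the following Python sketch builds $\boldsymbol{x}_{i}$ from two lookup tables. The helper `build_inputs`, the 4-dimensional word vectors, the 2-dimensional indicator vectors and the random values are all hypothetical toy choices; in the actual model these embeddings are learned parameters.

```python
import random
from typing import Dict, List

Vec = List[float]


def build_inputs(words: List[str], p: int,
                 word_emb: Dict[str, Vec],
                 pred_emb: Dict[bool, Vec]) -> List[Vec]:
    """Return x_i = x_{w_i} ⊕ x_{(i==p)} for every word position i."""
    # Python list concatenation (+) plays the role of the ⊕ operator.
    return [word_emb[w] + pred_emb[i == p] for i, w in enumerate(words)]


rng = random.Random(0)
words = ["The", "cat", "sat", "on", "the", "mat"]
# Toy lookup tables: 4-dim word vectors and 2-dim predicate-indicator vectors.
word_emb = {w: [rng.gauss(0.0, 1.0) for _ in range(4)] for w in dict.fromkeys(words)}
pred_emb = {flag: [rng.gauss(0.0, 1.0) for _ in range(2)] for flag in (False, True)}
x = build_inputs(words, p=2, word_emb=word_emb, pred_emb=pred_emb)  # predicate: "sat"
```

Only the predicate position receives the `True` indicator vector, which is how the same sentence can be encoded differently for each of its predicates.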

Afterwards, we adopt the Transformer (Vaswani et al., 2017) as our base encoder for yielding contextualized word representations. The Transformer ($\mathrm{Trm}$) is built on the multi-head self-attention mechanism, where each head computes:

$$
\mathrm{Softmax}\left(\frac{Q K^{\mathrm{T}}}{\sqrt{d_{k}}}\right) V,
$$

where $Q$, $K$ and $V$ are linear projections of the input representations $\boldsymbol{x}_{i}$, and $d_{k}$ is the key dimension. We abbreviate the whole encoding flow as:

$$
\{\boldsymbol{r}_{1},\cdots,\boldsymbol{r}_{n}\}=\mathrm{Trm}(\{\boldsymbol{x}_{1},\cdots,\boldsymbol{x}_{n}\}).
$$
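
The attention formula above can be sketched for a single head in plain Python. This is a minimal illustration under stated assumptions: it omits the learned projections that produce $Q$, $K$ and $V$ and the per-head splitting and output projection of multi-head attention, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # shift by the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: Softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n_q x n_k) similarity matrix
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)
```

Each output row is a convex combination of the rows of $V$; when all keys are identical, the weights become uniform and the output is the plain average of the value vectors.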

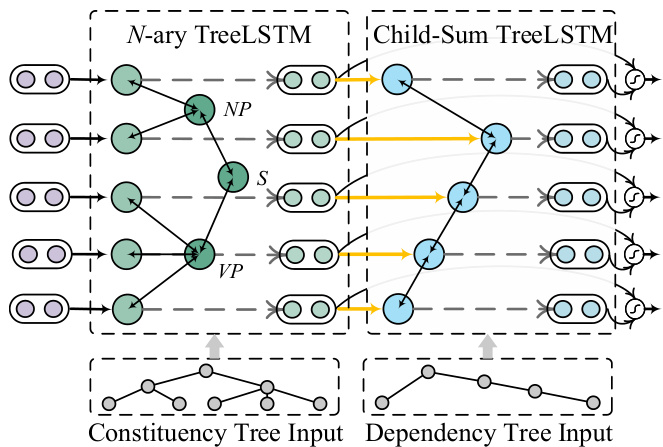

Figure 3: TreeLSTM-based HeSyFu layer.

Based on the syntax-aware hidden representation $s_{i}$ produced by the HeSyFu layers (Section 4), we use CRFs (Lafferty et al., 2001) to compute the probability of each candidate output $\boldsymbol{y}=\{y_{1},\dots,y_{n}\}$:

$$
p(\boldsymbol{y}\mid s)=\frac{\exp\sum_{i}\left([W s_{i}]_{y_{i}}+T_{y_{i-1},y_{i}}\right)}{Z},
$$

where $W$ and $T$ are parameters ($T$ holds the tag-transition scores) and $Z$ is the normalization factor summing over all candidate tag sequences. The Viterbi algorithm is used to search for the highest-scoring tag sequence $\hat{\boldsymbol{y}}$.
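
The CRF scoring and decoding steps can be sketched in pure Python as follows. In this sketch `emit[i][y]` stands for the emission score of tag `y` at position `i` (the $W s_{i}$ term) and `trans[a][b]` for $T_{a,b}$; the toy numbers are made up, and the sketch assumes no special start or stop transition (the first tag only receives its emission score). `log_partition` computes $\log Z$ with the forward algorithm, `viterbi` finds $\hat{\boldsymbol{y}}$, and `brute_force` enumerates every sequence purely as a sanity check.

```python
import itertools
import math
from typing import Dict, List, Sequence, Tuple

Scores = List[List[float]]


def logsumexp(xs: Sequence[float]) -> float:
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def sequence_score(emit: Scores, trans: Scores, y: Sequence[int]) -> float:
    """Unnormalized log-score: sum_i (emit[i][y_i] + trans[y_{i-1}][y_i])."""
    score = emit[0][y[0]]  # assumption: no start-transition score for the first tag
    for i in range(1, len(y)):
        score += trans[y[i - 1]][y[i]] + emit[i][y[i]]
    return score


def log_partition(emit: Scores, trans: Scores) -> float:
    """log Z by the forward algorithm in O(n * |tags|^2) time."""
    tags = range(len(emit[0]))
    alpha = list(emit[0])  # alpha[b]: log-sum of scores of all prefixes ending in tag b
    for i in range(1, len(emit)):
        alpha = [logsumexp([alpha[a] + trans[a][b] for a in tags]) + emit[i][b] for b in tags]
    return logsumexp(alpha)


def viterbi(emit: Scores, trans: Scores) -> Tuple[List[int], float]:
    """Highest-scoring tag sequence and its (unnormalized) score."""
    tags = range(len(emit[0]))
    best = list(emit[0])
    backptrs: List[List[int]] = []
    for i in range(1, len(emit)):
        prev = [max(tags, key=lambda a, b=b: best[a] + trans[a][b]) for b in tags]
        best = [best[prev[b]] + trans[prev[b]][b] + emit[i][b] for b in tags]
        backptrs.append(prev)
    last = max(tags, key=lambda b: best[b])
    path = [last]
    for prev in reversed(backptrs):  # follow back-pointers from the last position
        path.append(prev[path[-1]])
    return path[::-1], best[last]


def brute_force(emit: Scores, trans: Scores) -> Dict[Tuple[int, ...], float]:
    """Score every |tags|^n sequence; only a sanity check for tiny inputs."""
    tags = range(len(emit[0]))
    return {y: sequence_score(emit, trans, y)
            for y in itertools.product(tags, repeat=len(emit))}


# Toy example: 3 words, 3 tags (say 0 = O, 1 = B-A0, 2 = I-A0); all numbers are made up.
emit = [[1.0, 0.2, 0.0], [0.1, 0.9, 0.3], [0.5, 0.4, 1.2]]
trans = [[0.0, 0.5, -2.0], [0.0, -1.0, 1.0], [0.2, 0.0, 0.5]]
path, score = viterbi(emit, trans)
prob = math.exp(score - log_partition(emit, trans))  # p(y_hat | s)
```

Here the decoded path is `[0, 1, 2]` (O, B-A0, I-A0) with score 4.6. Both dynamic programs cost $O(n|\mathcal{V}|^{2})$, whereas brute-force enumeration grows as $|\mathcal{V}|^{n}$.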

4 Integration of Syntactic Constituency and Dependency Structure

We present two neural heterogeneous syntax fusers (HeSyFu): a TreeLSTM-based HeSyFu (cf. Figure 3) and a label-aware GCN-based HeSyFu (cf. Figure 4). We stack $L$ HeSyFu layers in total for a full syntax interaction. Within each layer, we place the constituency (const.) encoding before the dependency (dep.) encoding, based on the intuition that boundary recognition, aided by const. syntax, should precede semantic relation determination, aided by dep. syntax.

4.1 TreeLSTM Heterogeneous Syntax Fuser

Our TreeLSTM-based HeSyFu (Tr-HeSyFu) consists of an $N$-ary TreeLSTM for const. trees and a Child-Sum TreeLSTM for dep. trees, motivated by Tai et al. (2015).

Constituency tree encoding. The information flow in the TreeLSTM is bidirectional, i.e., bottom-up and top-down, for full information interaction. For each node $u$ in the tree, we denote the hidden state and memory cell of its $v$-th ($v\in[1,M]$) branching child as $h_{uv}^{\uparrow}$ and $c_{uv}$. The bottom-up pass computes the representation $h_{u}^{\uparrow}$ from the node's children hierarchically:

$$
\begin{aligned}
i_{u}&=\sigma\Big(W^{(i)}r_{u}+\sum_{v=1}^{M}U_{v}^{(i)}h_{uv}^{\uparrow}+b^{(i)}\Big),\\
f_{uk}&=\sigma\Big(W^{(f)}r_{u}+\sum_{v=1}^{M}U_{kv}^{(f)}h_{uv}^{\uparrow}+b^{(f)}\Big),\\
o_{u}&=\sigma\Big(W^{(o)}r_{u}+\sum_{v=1}^{M}U_{v}^{(o)}h_{uv}^{\uparrow}+b^{(o)}\Big),\\
u_{u}&=\mathrm{Tanh}\Big(W^{(u)}r_{u}+\sum_{v=1}^{M}U_{v}^{(u)}h_{uv}^{\uparrow}+b^{(u)}\Big),\\
c_{u}&=i_{u}\odot u_{u}+\sum_{k=1}^{M}f_{uk}\odot c_{uk},\\
h_{u}^{\uparrow}&=o_{u}\odot\tanh(c_{u}),
\end{aligned}
$$

where $W$, $U$ and $b$ are parameters, and $r_{u}$, $i_{u}$, $o_{u}$ and $f_{uk}$ are the input token representation, input gate, output gate and forget gate, respectively ($u_{u}$ is the candidate memory update). Analogously, the top-down $N$-ary TreeLSTM computes the representation $h_{u}^{\downarrow}$ in the same way. We concatenate the representations of the two directions: $h_{u}^{const}=h_{u}^{\uparrow}\oplus h_{u}^{\downarrow}$. Note that the constituency tree nodes include terminal word nodes and non-terminal constituent nodes, and we only take the representations $h_{i}^{const}$ corresponding to the word nodes $w_{i}$ for subsequent use.
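
The bottom-up $N$-ary TreeLSTM step can be sketched in pure Python. This is a hedged illustration, not the authors' implementation. Assumptions: the constituency tree is binarized ($M=2$) and written as nested lists of word indices; non-terminal nodes receive a zero input vector $r_{u}$ (the text above does not specify their input); biases are fixed at zero and weights are random toy values; only the bottom-up direction is shown, and the top-down pass and the concatenation into $h_{u}^{const}$ are omitted. `NaryTreeLSTMCell` and `encode_up` are hypothetical names.

```python
import math
import random
from typing import List, Tuple, Union

Vec = List[float]
Mat = List[List[float]]
State = Tuple[Vec, Vec]  # (hidden h, memory c)
Tree = Union[int, list]  # a word index (leaf) or a list of child subtrees


def rand_mat(d: int, rng: random.Random) -> Mat:
    return [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(d)]


def matvec(m: Mat, x: Vec) -> Vec:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def add(*vs: Vec) -> Vec:
    return [sum(t) for t in zip(*vs)]


def sigmoid(v: Vec) -> Vec:
    return [1.0 / (1.0 + math.exp(-t)) for t in v]


class NaryTreeLSTMCell:
    """Bottom-up N-ary TreeLSTM cell with M ordered child slots (biases fixed at 0)."""

    def __init__(self, d: int, M: int, seed: int = 0):
        rng = random.Random(seed)
        self.d, self.M = d, M
        self.W = {g: rand_mat(d, rng) for g in "ifou"}
        # U_v^(g) for the i/o/u gates: one matrix per child slot v.
        self.U = {g: [rand_mat(d, rng) for _ in range(M)] for g in "iou"}
        # U_kv^(f): one matrix per (forget gate k, child slot v) pair.
        self.Uf = [[rand_mat(d, rng) for _ in range(M)] for _ in range(M)]

    def __call__(self, r: Vec, children: List[State]) -> State:
        assert len(children) <= self.M
        zero = [0.0] * self.d
        kids = children + [(zero, zero)] * (self.M - len(children))  # pad empty slots
        hs = [h for h, _ in kids]

        def pre(w: Mat, us: List[Mat]) -> Vec:  # W r_u + sum_v U_v h_uv
            return add(matvec(w, r), *[matvec(us[v], hs[v]) for v in range(self.M)])

        i = sigmoid(pre(self.W["i"], self.U["i"]))
        o = sigmoid(pre(self.W["o"], self.U["o"]))
        u = [math.tanh(t) for t in pre(self.W["u"], self.U["u"])]
        c = [a * b for a, b in zip(i, u)]
        for k in range(self.M):  # each child k gets its own forget gate f_uk
            f_k = sigmoid(pre(self.W["f"], self.Uf[k]))
            c = add(c, [f * ck for f, ck in zip(f_k, kids[k][1])])
        h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
        return h, c


def encode_up(tree: Tree, r: List[Vec], cell: NaryTreeLSTMCell, words: dict) -> State:
    """Post-order traversal; stores h^up of every word (leaf) node in `words`."""
    if isinstance(tree, int):  # terminal word node
        state = cell(r[tree], [])
        words[tree] = state[0]
        return state
    kids = [encode_up(child, r, cell, words) for child in tree]
    # Assumption: non-terminal nodes receive a zero input vector r_u.
    return cell([0.0] * cell.d, kids)


rng = random.Random(1)
r = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(6)]  # toy r_1..r_6, d = 3
# (S (NP The cat) (VP sat (PP on (NP the mat)))) as a binary tree over word indices
tree = [[0, 1], [2, [3, [4, 5]]]]
word_states = {}
root_h, root_c = encode_up(tree, r, NaryTreeLSTMCell(d=3, M=2), word_states)
```

Unlike the Child-Sum variant, each child slot has its own $U_{v}$ matrices, so the cell can distinguish, e.g., a left NP child from a right VP child; this is why the constituency tree is binarized to a fixed $M$ here.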

Dependency tree encoding. Unlike the $N$-ary TreeLSTM for the const. tree, the Child-Sum TreeLSTM for the dep. tree operates on a tree whose nodes, including all non-terminal ones, are word nodes, and each node may have any number of children. We again use bidirectional computation. The bottom-up TreeLSTM obtains $h_{i}^{\uparrow}$ of the word $w_{i}$ via:

$$
\begin{aligned}
\overline{h}_{i}^{\uparrow}&=\sum_{j\in C(i)}h_{j}^{\uparrow},\\
i_{i}&=\sigma\big(W^{(i)}r_{i}^{\prime}+U^{(i)}\overline{h}_{i}^{\uparrow}+b^{(i)}\big),\\
f_{ij}&=\sigma\big(W^{(f)}r_{i}^{\prime}+U^{(f)}h_{j}^{\uparrow}+b^{(f)}\big),\\
o_{i}&=\sigma\big(W^{(o)}r_{i}^{\prime}+U^{(o)}\overline{h}_{i}^{\uparrow}+b^{(o)}\big),\\
u_{i}&=\mathrm{Tanh}\big(W^{(u)}r_{i}^{\prime}+U^{(u)}\overline{h}_{i}^{\uparrow}+b^{(u)}\big),\\
c_{i}&=i_{i}\odot u_{i}+\sum_{j\in C(i)}f_{ij}\odot c_{j},\\
h_{i}^{\uparrow}&=o_{i}\odot\tanh(c_{i}),
\end{aligned}
$$

where $C(i)$ is the set of child nodes of $w_{i}$, and $r_{i}^{\prime}$ is the input token representation enriched with the preceding constituency output: $r_{i}^{\prime}=r_{i}+h_{i}^{const}$. The top-down pass yields $h_{i}^{\downarrow}$, which is concatenated with the bottom-up one: $h_{i}^{dep}=h_{i}^{\uparrow}\oplus h_{i}^{\downarrow}$.
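
A pure-Python sketch of the bottom-up Child-Sum pass over a dependency tree follows. It is an illustration under assumptions, not the authors' code: the tree is given as a head array (`heads[i]` is the head of word `i`, `-1` for the root); $r_{i}$ and $h_{i}^{const}$ are assumed to share the same dimension so they can be added directly; biases are fixed at zero and the weights are random toy values; and the top-down pass is omitted. `child_sum_up` is a hypothetical name. Each child $j$ gets its own forget gate driven by its hidden state $h_{j}^{\uparrow}$, as in Tai et al. (2015).

```python
import math
import random
from typing import Dict, List

Vec = List[float]


def rand_mat(d: int, rng: random.Random) -> List[Vec]:
    return [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(d)]


def matvec(m: List[Vec], x: Vec) -> Vec:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def add(*vs: Vec) -> Vec:
    return [sum(t) for t in zip(*vs)]


def sigmoid(v: Vec) -> Vec:
    return [1.0 / (1.0 + math.exp(-t)) for t in v]


def child_sum_up(heads: List[int], r_prime: List[Vec], seed: int = 0) -> List[Vec]:
    """Bottom-up Child-Sum TreeLSTM over a dependency tree (biases fixed at 0)."""
    d = len(r_prime[0])
    rng = random.Random(seed)
    W = {g: rand_mat(d, rng) for g in "ifou"}
    U = {g: rand_mat(d, rng) for g in "ifou"}
    children: Dict[int, List[int]] = {i: [] for i in range(len(heads))}
    for dep, head in enumerate(heads):
        if head >= 0:
            children[head].append(dep)
    h_up: Dict[int, Vec] = {}
    c_up: Dict[int, Vec] = {}

    def gate(g: str, i: int, h: Vec) -> Vec:  # W^(g) r'_i + U^(g) h
        return add(matvec(W[g], r_prime[i]), matvec(U[g], h))

    def visit(i: int) -> None:
        for j in children[i]:  # post-order: children before their head
            visit(j)
        h_bar = add([0.0] * d, *[h_up[j] for j in children[i]])  # sum of child states
        i_g = sigmoid(gate("i", i, h_bar))
        o_g = sigmoid(gate("o", i, h_bar))
        u_g = [math.tanh(t) for t in gate("u", i, h_bar)]
        c = [a * b for a, b in zip(i_g, u_g)]
        for j in children[i]:  # one forget gate f_ij per child, driven by h_j
            f_ij = sigmoid(gate("f", i, h_up[j]))
            c = add(c, [f * cj for f, cj in zip(f_ij, c_up[j])])
        c_up[i] = c
        h_up[i] = [o * math.tanh(ci) for o, ci in zip(o_g, c)]

    visit(heads.index(-1))
    return [h_up[i] for i in range(len(heads))]


rng = random.Random(2)
d = 3
r = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(6)]        # toy r_i
h_const = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(6)]  # toy h_i^const
r_prime = [add(a, b) for a, b in zip(r, h_const)]                     # r'_i = r_i + h_i^const
# "The cat sat on the mat": The->cat, cat->sat, sat=root, on->mat, the->mat, mat->sat
heads = [1, 2, -1, 5, 5, 2]
h_dep_up = child_sum_up(heads, r_prime)
```

Summing child states makes the cell independent of child order and count, which suits dependency trees; the recursion is fine for sentence-length trees, though an explicit stack would avoid recursion limits on very deep trees.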

Integration. To make full use of the heterogeneous syntactic knowledge, we fuse the two resulting syntactic representations, applying a fusion gate to flexibly coordinate their contributions:

$$
\begin{aligned}
\boldsymbol{g}_{i}&=\sigma\big(\boldsymbol{W}^{(g_{1})}\boldsymbol{h}_{i}^{const}+\boldsymbol{W}^{(g_{2})}\boldsymbol{h}_{i}^{dep}+\boldsymbol{b}^{(g)}\big),\\
\boldsymbol{s}_{i}&=\boldsymbol{g}_{i}\odot\boldsymbol{h}_{i}^{const}+(1-\boldsymbol{g}_{i})\odot\boldsymbol{h}_{i}^{dep}.
\end{aligned}
\tag{8}
$$
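
The fusion gate can be sketched in a few lines of Python. The weights below are random toy values, the bias is passed explicitly, and `fuse` is a hypothetical helper name; the sketch only shows that $\boldsymbol{s}_{i}$ is an element-wise convex combination of the two syntactic representations.

```python
import math
import random
from typing import List

Vec = List[float]
Mat = List[List[float]]


def matvec(m: Mat, x: Vec) -> Vec:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def fuse(h_const: Vec, h_dep: Vec, W1: Mat, W2: Mat, b: Vec) -> Vec:
    """s_i = g_i ⊙ h_const + (1 - g_i) ⊙ h_dep, with g_i = σ(W1 h_const + W2 h_dep + b)."""
    z = [p + q + bk for p, q, bk in zip(matvec(W1, h_const), matvec(W2, h_dep), b)]
    g = [1.0 / (1.0 + math.exp(-t)) for t in z]
    return [gk * a + (1.0 - gk) * c for gk, a, c in zip(g, h_const, h_dep)]


rng = random.Random(0)
d = 4
W1 = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(d)]
W2 = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(d)]
h_const = [0.9, -0.2, 0.4, 0.0]  # toy h_i^const
h_dep = [0.1, 0.6, -0.3, 0.5]    # toy h_i^dep
s = fuse(h_const, h_dep, W1, W2, [0.0] * d)
```

Because $\boldsymbol{g}_{i}$ is a vector, the gate can favor constituency information in some hidden dimensions and dependency information in others; a large positive bias drives $\boldsymbol{s}_{i}$ toward $\boldsymbol{h}_{i}^{const}$.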

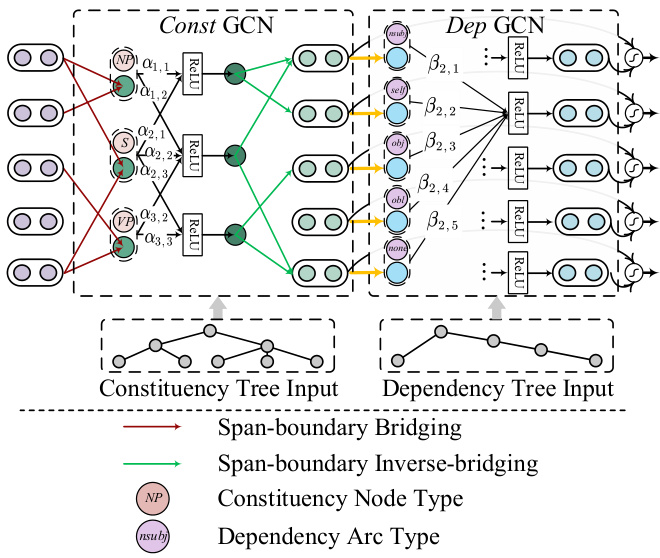

Figure 4: Label-aware GCN-based HeSyFu layer.

4.2 Label-aware GCN-based Heterogeneous Syntax Fuser

Compared with TreeLSTM, GCN is more computationally efficient in propagating structural information among nodes: all nodes of a layer are updated in parallel, i.e., with O(1) sequential steps per layer, whereas a TreeLSTM must traverse the tree node by node. It is also crucial to incorporate the syntactic labels (i.e., dependency arc types and constituent phrasal types) into SRL learning. For example, within a dependency tree, information from neighboring nodes connected by different arc types can contribute to different degrees. However, current popular syntax GCNs (Marcheggiani and Titov, 2017, 2020) do not encode the dependency or constituency labels together with the nodes in a unified manner, which may describe the syntactic connecting attributes between neighboring nodes inaccurately. Building on their syntax GCNs, we propose label-aware constituency and dependency GCNs that explicitly formalize the structural edges together with the syntactic labels and normalize them jointly.⁴ As illustrated in Figure 4, our label-aware GCN-based HeSyFu (denoted as LG-HeSyFu) has an assembly architecture similar to that of the TreeLSTM-based HeSyFu, and its two syntactic outputs are finally fused via the gate mechanism in Eq. (8).
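
The idea of normalizing structural edges jointly with label information can be sketched as an edge-masked softmax followed by ReLU aggregation. This is a hypothetical illustration, not the exact LG-HeSyFu formulation: the scoring form $e_{uv}\cdot\exp(z_{u}^{\mathrm{T}}z_{v})$ mirrors the numerator of the connecting distribution $\alpha_{uv}$ defined for the Const GCN below, while the rest is assumed, namely that $z_{u}$ is built by adding a label embedding to the node features, that every node has a self-loop (so each row has at least one neighbor), and that messages are passed directly instead of through learned projections. `edge_normalized_weights` and `aggregate` are hypothetical names.

```python
import math
from typing import List

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def edge_normalized_weights(z: List[Vec], e: List[List[int]]) -> List[List[float]]:
    """alpha[u][v] = e[u][v] * exp(z_u . z_v) / sum_v' e[u][v'] * exp(z_u . z_v')."""
    n = len(z)
    alpha = []
    for u in range(n):
        nbrs = [v for v in range(n) if e[u][v]]  # assumes a self-loop, so never empty
        m = max(dot(z[u], z[v]) for v in nbrs)   # stabilise the exponentials
        ex = {v: math.exp(dot(z[u], z[v]) - m) for v in nbrs}
        total = sum(ex.values())
        alpha.append([ex.get(v, 0.0) / total for v in range(n)])
    return alpha


def aggregate(alpha: List[List[float]], msgs: List[Vec]) -> List[Vec]:
    """h_u = ReLU(sum_v alpha[u][v] * msg_v)."""
    d = len(msgs[0])
    return [[max(0.0, sum(alpha[u][v] * msgs[v][k] for v in range(len(msgs))))
             for k in range(d)] for u in range(len(alpha))]


# Toy graph: node 0 = NP phrase, node 1 = "The", node 2 = "cat".
node = [[0.2, 0.1], [0.5, -0.3], [0.1, 0.4]]
label = [[1.0, 0.0], [0.0, 0.5], [0.0, 0.5]]  # hypothetical embeddings for NP, DT, NN
z = [[a + b for a, b in zip(x, y)] for x, y in zip(node, label)]
e = [[1, 1, 1], [1, 1, 0], [1, 0, 1]]  # bidirectional edges plus self-loops
alpha = edge_normalized_weights(z, e)
h = aggregate(alpha, node)
```

The edge mask zeroes out non-neighbors (here the two words, which are not directly connected), while the label-aware scores decide how much each actual neighbor contributes; each row of weights sums to one.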

Constituency tree encoding. The constituency tree is modeled as a graph $G^{(c)}=(U^{(c)},E^{(c)})$, where $U^{(c)}$ is the node set and $E^{(c)}$ is the edge set. We set $e^{(c)}_{uv}=1$ if there is an edge between nodes $u$ and $v$, and $e^{(c)}_{uv}=0$ otherwise; all edges are bidirectional. $\mu_{u}$ denotes the constituent label of node $u$, such as S, NP and VP, and $\boldsymbol{v}_{u}^{(c)}$ is the vector embedding of $\mu_{u}$. Our constituency GCN (denoted as Const GCN) yields the node representations $\boldsymbol{h}_{u}^{(c)}$:

$$
\boldsymbol{h}_{u}^{(c)}=\mathrm{ReLU}\Big(\sum_{v=1}^{M}\alpha_{uv}\big(\boldsymbol{W}^{(c_{1})}\boldsymbol{r}_{v}^{b}+\boldsymbol{W}^{(c_{2})}\boldsymbol{v}_{v}^{(c)}+\boldsymbol{b}^{(c)}\big)\Big)
\tag{9}
$$

where $\boldsymbol{r}_{v}^{b}$ is the initial representation of node $v$ obtained via a span-boundary bridging operation, i.e., summing the representations of the start and end tokens of the phrasal span: $\boldsymbol{r}_{v}^{b}=\boldsymbol{r}_{start}+\boldsymbol{r}_{end}$. $\alpha_{uv}$ is the constituent connecting distribution:

$$

\alpha_{u v}=\frac{e_{u v}^{(c)}\cdot\exp{{(z_{u}^{(c)})^{T}\cdot z_{v}^{(c)}}}}{\sum_{v^{'}=1}^{M}e_{u v^{'}}^{(c)}\cdot\