Pandora3D: A Comprehensive Framework for High-Quality 3D Shape and Texture Generation

Pandora3D: 高质量3D形状与纹理生成的综合框架

Abstract

摘要

This report presents a comprehensive framework for generating high-quality 3D shapes and textures from diverse input prompts, including single images, multi-view images, and text descriptions. The framework consists of 3D shape generation and texture generation. (1) The 3D shape generation pipeline employs a Variational Autoencoder (VAE) to encode implicit 3D geometries into a latent space and a diffusion network to generate latents conditioned on input prompts, with modifications to enhance model capacity. An alternative Artist-Created Mesh (AM) generation approach is also explored, yielding promising results for simpler geometries. (2) Texture generation is a multi-stage process that starts with frontal image generation, followed by multi-view image generation, RGB-to-PBR texture conversion, and high-resolution multi-view texture refinement. A consistency scheduler is plugged into every stage to enforce pixel-wise consistency among multi-view textures during inference, ensuring seamless integration.

本报告提出了一个从多样化输入提示(包括单张图像、多视角图像和文本描述)生成高质量3D形状和纹理的综合框架。该框架包括3D形状生成和纹理生成两部分。(1) 3D形状生成流程采用变分自编码器 (VAE) 将隐式3D几何编码到潜在空间中,并使用扩散网络生成基于输入提示的潜在表示,同时通过修改增强了模型容量。此外,还探索了一种替代的艺术家创建网格 (AM) 生成方法,在简单几何体上取得了良好的效果。(2) 纹理生成涉及多阶段过程,首先生成正面图像,然后生成多视角图像,进行RGB到PBR纹理转换,并进行高分辨率多视角纹理优化。在每个阶段都引入了一致性调度器,以确保推理过程中多视角纹理之间的像素级一致性,从而实现无缝集成。

The pipeline demonstrates effective handling of diverse input formats, leveraging advanced neural architectures and novel methodologies to produce high-quality 3D content. This report details the system architecture, experimental results, and potential future directions to improve and expand the framework. The source code and pretrained weights are released at: https://github.com/Tencent/Tencent-XR-3DGen.

该流程展示了系统在处理多样化输入格式时的有效性,利用先进的神经网络架构和新颖的方法生成高质量的3D内容。本报告详细介绍了系统架构、实验结果以及未来改进和扩展框架的潜在方向。源代码和预训练权重已发布在:https://github.com/Tencent/Tencent-XR-3DGen。

1. Introduction

1. 引言

Automated generation of high-quality digital 3D assets has drawn more and more attention in recent years. Digital 3D assets have become deeply ingrained in modern life and production. These assets vividly express the imaginations of creators across various fields, including gaming and film, bringing joy and creating immersive experiences for both players and audiences alike. Meanwhile, 3D assets also serve as essential building blocks in the domains of physical simulation and embodied AI, enabling machines and robots to understand the elements in the real world. However, the creation of 3D assets is far from simple; it is often a complex, time-consuming, and expensive process. Taking text prompts or an image as input, the digital 3D asset production pipeline commonly involves stages of 3D shape generation and texture generation, each requiring a high level of expertise and proficiency in digital content creation software.

近年来,高质量数字3D资产的自动化生成引起了越来越多的关注。数字3D资产已深深融入现代生活和生产。这些资产生动地表达了游戏、电影等多个领域创作者的想象力,为玩家和观众带来了欢乐,并创造了沉浸式体验。同时,3D资产也是物理模拟和具身AI领域的重要组成部分,帮助机器和机器人理解现实世界中的元素。然而,3D资产的创建并不简单,通常是一个复杂、耗时且昂贵的过程。以文本提示或图像为输入,数字3D资产的生产流程通常包括3D形状生成和纹理生成两个阶段,每个阶段都需要在数字内容创作软件中具备高水平的专业知识和熟练度。

In this report, we present Pandora3D, a framework designed for high-quality 3D shape and texture generation. The framework consists of two main components: 3D shape generation and texture generation.

在本报告中,我们介绍了Pandora3D,一个专为高质量3D形状和纹理生成设计的框架。该框架主要由两个核心部分组成:3D形状生成和纹理生成。

The pipeline demonstrates effective handling of diverse input formats, leverages advanced neural architectures, and incorporates novel methodologies to produce high-quality 3D content. This report details the system architecture, experimental results, and potential future directions to improve and expand the framework.

该流程展示了有效处理多种输入格式的能力,利用先进的神经架构,并采用新颖的方法来生成高质量的 3D 内容。本报告详细介绍了系统架构、实验结果以及未来改进和扩展框架的潜在方向。

2. 3D Shape Generation

2. 3D 形状生成

2.1. 3D Latent Space Diffusion

2.1. 3D 潜在空间扩散

The process begins by generating a 3D shape from a single image, multiple images, or a text prompt. This involves the following steps:

该过程从单张图像、多张图像或文本提示生成3D形状开始,涉及以下步骤:

· Variational Autoencoder (VAE): Compresses 3D geometries into a latent space, enabling efficient representation and processing.

· Diffusion Network: Generates latent representations conditioned on the input prompts. This network is adapted from CLAY [43] / CraftsMan [16] / LAM3D [4], with modifications to improve the capacity and performance of the model.

· 变分自编码器 (VAE, Variational Autoencoder):将3D几何压缩到潜在空间,实现高效表示和处理。

· 扩散网络 (Diffusion Network):根据输入提示生成潜在表示。该网络基于 CLAY [43] / Craftsman [16] / LAM3D [4] 进行了改进,以提升模型的容量和性能。

2.1.1 Efficient 3D Geometry Autoencoder

2.1.1 高效的三维几何自编码器

For the 3D geometry compression model, we build upon CraftsMan [16], which adopts structures introduced in 3DShape2VecSet [4 and Michelangelo [44]. Furthermore, we leverage the multi-resolution training strategy proposed in CLAY [43]. This approach encodes 3D geometry into latent space by progressively sampling additional points from a 3D point cloud, which incrementally extends and refines the latent representation of the shape. Progressive sampling allows the model to focus on areas of higher geometric complexity, capturing both global structure and intricate details. The primary goal of our VAE is to generate expressive latent embeddings that effectively guide the diffusion process in subsequent stages. To make this process more efficient, we propose a more advanced point sampling strategy. It is designed to maximize the utility of the 3D point-cloud data by prioritizing the points that contribute most to capturing fine-grained features and spatial relationships. This enhancement not only increases the model's capacity to handle large-scale data, improving scalability, but also preserves the fine-grained details of 3D geometry.

对于3D几何压缩模型,我们基于CraftsMan [16]构建,它采用了3D Shape 2 Vec Set [4]和Michelangelo [44]中引入的结构。此外,我们还利用了CLAY [43]提出的多分辨率训练策略。该方法通过逐步从3D点云中采样额外的点,将3D几何编码到潜在空间中,逐步扩展和细化形状的潜在表示。渐进采样使模型能够专注于几何复杂度较高的区域,捕捉全局结构和精细细节。我们VAE的主要目标是生成具有表达力的潜在嵌入,以有效指导后续阶段的扩散过程。为了提高这一过程的效率,我们提出了一种更先进的点采样策略。该方法旨在通过优先选择对捕捉细粒度特征和空间关系贡献最大的点,最大化3D点云数据的效用。这一增强不仅提高了模型处理大规模数据的能力,以改善可扩展性,还保留了3D几何的细粒度细节。

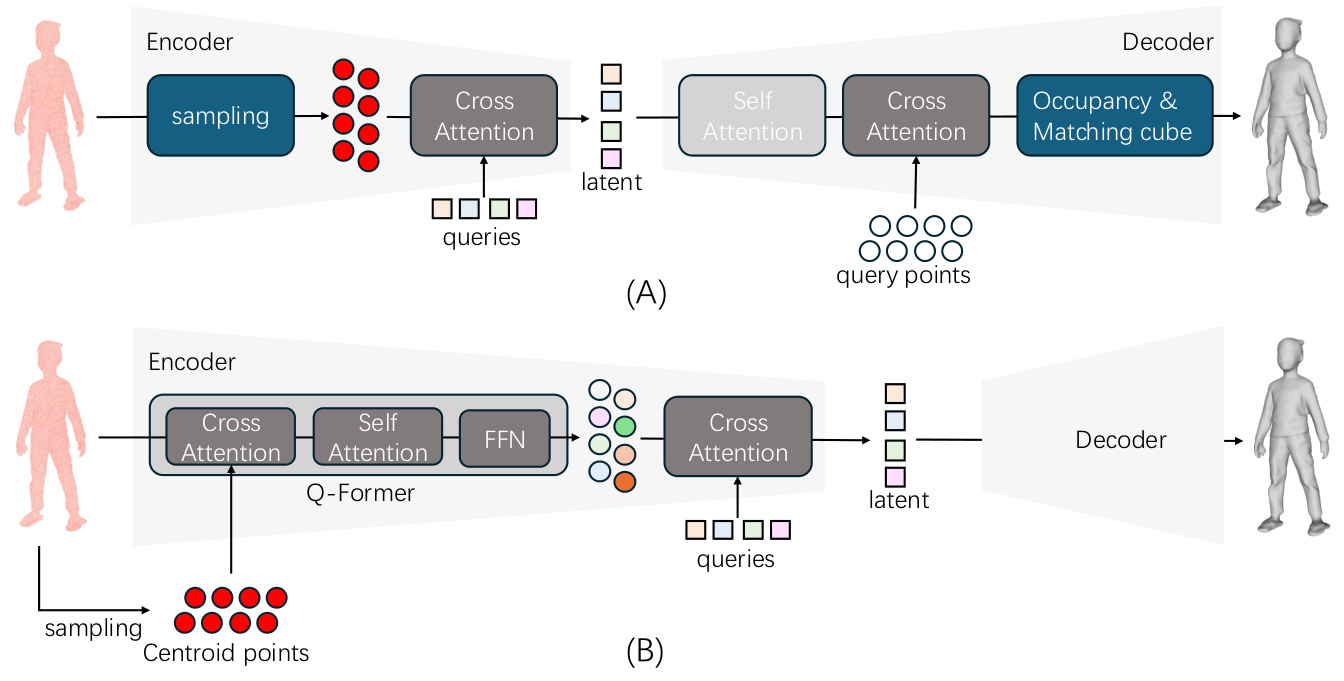

The design options of our VAE are illustrated in Fig. 1. We employ the model structure introduced in 3DShape2VecSet as our base model. This approach embeds the input point cloud augmented with normal information, $X\in\mathbb{R}^{N\times6}$, which is sampled from a mesh $M$, into a latent code using a learnable embedding function and a cross-attention encoding module:

我们的VAE设计选项如图1所示。我们采用3D Shape 2 Vec Set中介绍的模型结构作为基础模型。该方法通过可学习的嵌入函数和交叉注意力编码模块,将输入点云(从网格$M$中采样并增强了法线信息$X\in\mathbb{R}^{N\times6}$)嵌入到潜在代码中:

Where $q\in\mathbb{R}^{m\times d}$ represents a set of learnable queries that compress the sampled points into a latent embedding. The cross-attention mechanism ensures effective integration of geometric and positional features into the latent space. The VAE's decoder is composed of successive self-attention layers followed by a cross-attention layer. The cross-attention layer maps the latent embeddings back into 3D geometry, enabling reconstruction:

其中 $q\in\mathbb{R}^{m\times d}$ 表示一组可学习的查询,将采样点压缩为潜在嵌入。交叉注意力机制确保了几何和位置特征在潜在空间中的有效整合。VAE的解码器由连续的自注意力层和交叉注意力层组成。交叉注意力层将潜在嵌入映射回3D几何,实现重建:

where $p$ denotes random query points in 3D space. These points attend to the latent embeddings, and the decoder outputs an occupancy logit for each of them. This base VAE implementation is illustrated in Fig. 1 (A).

其中 $p$ 表示三维空间中的随机查询点,这些点与潜在变量进行查询并输出占用 logits。这个基础的 VAE 实现如图 1 (A) 所示。
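The encoder and decoder described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the report's implementation: the single-head attention, the random matrices standing in for learned weights (`W_embed`, `W_query`, `w_head`), and the sizes `N`, `m`, `d` are all hypothetical, and the decoder's self-attention layers are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross-attention."""
    d = queries.shape[-1]
    weights = softmax(queries @ keys.T / np.sqrt(d), axis=-1)
    return weights @ values

rng = np.random.default_rng(0)
N, m, d = 4096, 256, 64                    # sampled points, latent length, feature width (illustrative)

X = rng.normal(size=(N, 6))                # point cloud with normals, X in R^{N x 6}
W_embed = rng.normal(size=(6, d)) * 0.1    # stand-in for the learnable point embedding
q = rng.normal(size=(m, d))                # stand-in for the learnable queries, q in R^{m x d}

# Encoder: the queries attend to the embedded points and compress them into m latent tokens.
point_feats = X @ W_embed
latents = cross_attention(q, point_feats, point_feats)        # shape (m, d)

# Decoder (self-attention layers omitted): query points attend to the latents,
# and a linear head maps each attended feature to one occupancy logit.
p = rng.uniform(-1.0, 1.0, size=(1000, 3))
W_query = rng.normal(size=(3, d)) * 0.1    # stand-in for the query-point embedding
w_head = rng.normal(size=(d,)) * 0.1       # stand-in for the occupancy head
occupancy_logits = cross_attention(p @ W_query, latents, latents) @ w_head   # shape (1000,)
```

The point of the sketch is the shape bookkeeping: however many points are sampled, the latent set always has `m` tokens, and occupancy can be queried at arbitrary 3D locations.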

Following the approach outlined in CLAY [43], we adopt a multi-resolution training strategy to progressively scale up the model's capacity. Specifically, we incrementally increase the number of sampled points from 4096 to 32768 while simultaneously extending the latent embedding length from 256 to 2048. This progressive training scheme gradually introduces more detailed input information to the model, enabling it to capture finer geometric details. At the same time, the expanded latent embedding length increases the model's capacity to represent complex features. Together, these enhancements enrich the latent space and provide a more robust foundation for the subsequent diffusion model training. This multi-resolution approach keeps training efficient and scalable while improving both the model's performance and its ability to generalize across diverse 3D geometries.

遵循 CLAY [43] 中概述的方法,我们采用了多分辨率训练策略,逐步提升模型的容量。具体来说,我们逐步将采样点的数量从 4096 增加到 32768,同时将潜在嵌入维度从 256 扩展到 2048。这种渐进式训练方案逐步向模型引入更详细的输入信息,使其能够捕捉更精细的几何细节。同时,扩展的潜在嵌入长度增加了模型表示复杂特征的能力。这些增强共同丰富了潜在空间,从而为后续的扩散模型训练提供了更稳健的基础。这种多分辨率方法确保了一个高效且可扩展的训练过程,优化了模型的性能及其在多样化 3D 几何中的泛化能力。

Recall that the primary objective of our VAE is to generate expressive latent embeddings. While the previously mentioned approach progressively increases the number of sampling points, each object contains a total of $500\mathrm{k}$ points, leaving many points unsampled. This results in inevitable information loss, as not all geometric details are captured in the latent representation. Furthest point sampling [24] has the potential to mitigate this issue by selecting more representative points. However, this method is significantly slower compared to random sampling, making it less practical for large-scale training scenarios. This residual information loss can pose challenges during the diffusion process, as it may hinder the generation of high-quality latent embeddings. Consequently, the decoder is tasked with reconstructing fine-grained 3D details that might not be adequately represented in the latent embedding, potentially limiting the overall quality and fidelity of the reconstructed geometry.

回想一下,我们的 VAE 的主要目标是生成具有表现力的潜在嵌入。虽然前面提到的方法逐步增加了采样点的数量,但每个对象总共包含 $500\mathrm{k}$ 个点,许多点未被采样。这导致了不可避免的信息丢失,因为并非所有几何细节都被捕捉到潜在表示中。最远点采样 [24] 有潜力通过选择更具代表性的点来缓解这一问题。然而,与随机采样相比,这种方法明显更慢,使其在大规模训练场景中不太实用。这种剩余信息丢失可能对扩散过程构成挑战,因为它可能会妨碍高质量潜在嵌入的生成。因此,解码器的任务是重建潜在嵌入中可能未充分表示的精细 3D 细节,这可能会限制重建几何的整体质量和保真度。

We have developed an enhancement to our Variational Autoencoder (VAE) that allows it to operate without sampling while still retaining all data points. A straightforward approach might use PointNet++ [24] to compress features from a point cloud into a few "centroid points" through cascaded convolutional layers. However, this method demands a substantial amount of memory, especially when managing point clouds consisting of millions of points. To address this, our model processes large-scale point clouds more efficiently, reducing the memory burden without compromising the integrity and richness of the data. Alternatively, we opt to sample a set of centroid points $M\in\mathbb{R}^{m\times6}$ and employ a Q-former [15] style module to compress the raw point cloud data onto these centroids. The core component of the Q-former, the cross-attention mechanism, has a computational complexity of $O(2MNd)$, where $M$ is the number of centroids, $N$ is the size of the input point cloud, and $d$ is the feature dimension. Although a memory-efficient attention method such as Flash Attention [5] helps, it remains resource-intensive and slow when processing large point clouds directly without sampling. To overcome these challenges, we adopt a linear attention mechanism [26, 25] to implement cross-attention within our Q-former module. The theoretical complexity of this approach is $O(Md^{2}+Nd^{2})$. Given that $N\gg M\gg d$, the computational load of linear cross-attention is significantly lower than that of traditional cross-attention. During the training of our VAE, we randomly select points from the original point clouds to serve as centroid points. These centroids, which have a dimension larger than 6, act as queries in the Q-former and compress geometric information from the raw point cloud. Subsequently, these centroid points are processed by the VAE encoder to generate latent embeddings. This extended VAE is depicted in Fig. 1 (B).

我们开发了一种变分自编码器 (Variational Autoencoder, VAE) 模型的增强版本,使其能够在无需采样的同时保留所有数据点。一种直接的方法可能是利用 PointNet++ [24],通过级联卷积层将点云特征压缩为几个“质心点”。然而,这种方法需要大量内存,尤其是在处理包含数百万个点的点云数据时。为了解决这个问题,我们的模型优化了大规模点云的处理效率,减少了内存负担,同时不损害数据的完整性和丰富性。或者,我们选择采样一组质心点 $M\in\mathbb{R}^{m\times6}$,并采用 Q-former [15] 风格的模块将原始点云数据压缩到这些质心上。Q-former 的核心组件(交叉注意力机制)的计算复杂度为 $O(2MNd)$,其中 $M$ 是质心数量,$N$ 是输入点云的大小,$d$ 是特征维度。尽管使用内存高效的注意力方法(如 Flash Attention [5])有所帮助,但在不进行采样的情况下直接处理大规模点云仍然资源密集且速度较慢。为了克服这些挑战,我们提出在线性注意力机制 [26, 25] 的基础上实现 Q-former 模块中的交叉注意力。这种方法的理论复杂度为 $O(Md^{2}+Nd^{2})$。考虑到 $N\gg M\gg d$,线性交叉注意力的计算负载相比传统的交叉注意力方法显著降低。在训练我们的 VAE 时,我们从原始点云中随机选择点作为质心点。这些维度大于 6 的质心点作为 Q-former 中的查询,压缩来自原始点云的几何信息。随后,这些质心点由 VAE 编码器处理以生成潜在嵌入。这种扩展的 VAE 如图 1 (B) 所示。
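The complexity argument can be made concrete with a small NumPy sketch of kernelized (linear) cross-attention. The report cites linear attention but does not specify the kernel, so the feature map `elu(x) + 1` used here is an assumption, as are the function names. The key step is reassociating the product so that the $M\times N$ attention matrix is never materialized.

```python
import numpy as np

def feature_map(x):
    """Positive feature map phi(x) = elu(x) + 1 (an assumed, commonly used choice)."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def linear_cross_attention(Q, K, V):
    """Cost O(N d^2 + M d^2): the M x N attention matrix is never formed.

    Q: (M, d) centroid queries; K, V: (N, d) features of the full point cloud.
    """
    phi_q, phi_k = feature_map(Q), feature_map(K)
    kv = phi_k.T @ V                  # (d, d) summary, one pass over the N points
    k_sum = phi_k.sum(axis=0)         # (d,) normalizer
    return (phi_q @ kv) / (phi_q @ k_sum)[:, None]

def quadratic_reference(Q, K, V):
    """The same kernelized attention computed the O(M N d) way, for checking only."""
    scores = feature_map(Q) @ feature_map(K).T          # (M, N)
    return (scores / scores.sum(axis=1, keepdims=True)) @ V
```

Both functions return the same result up to floating-point error; only the order of the matrix products differs, which is where the saving comes from when $N$ is in the hundreds of thousands.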

Similarly, we still adopt the multi-resolution training strategy, progressively increasing the number of centroid points and the latent embedding length to enlarge the latent embedding capacity. In addition, we empirically find that progressively increasing the amount of training data can accelerate model convergence. Methods like 3DShape2VecSet and CLAY, which derive latents from sampled points of the original point cloud, inevitably suffer from detail loss. Our extension addresses this information loss and makes fuller use of high-resolution point clouds, thereby preserving more detailed and accurate representations.

同样,我们仍然采用多分辨率训练策略,逐步增加中心点数量和潜在嵌入长度,以扩大潜在嵌入容量。此外,我们通过实验发现,逐步增加训练数据量可以加速模型收敛。像 3D Shape 2 Vec Set 和 CLAY 这样的方法,从原始点云的采样点中提取潜在特征,不可避免地会遭受细节损失。我们的扩展有效地解决了信息丢失的问题,并最大限度地利用了高分辨率点云,从而保留了更详细和准确的表示。

2.1.2 Diffusion Pipeline

2.1.2 扩散管道 (Diffusion Pipeline)

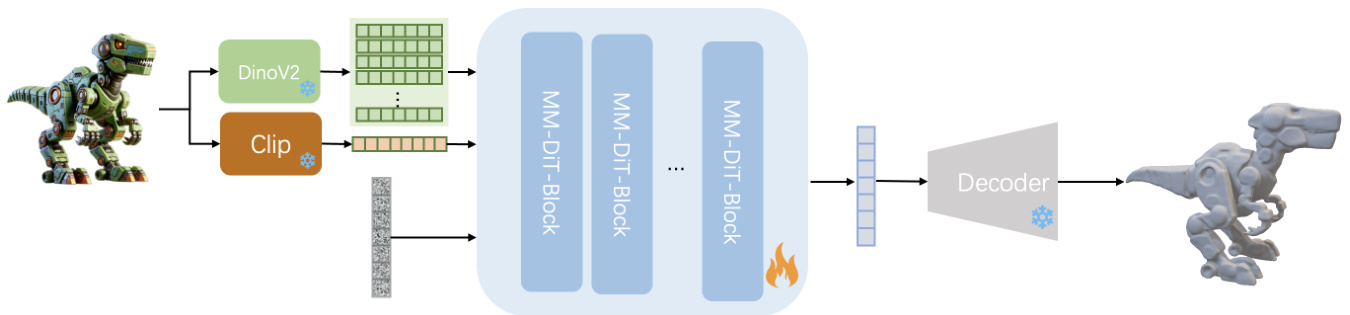

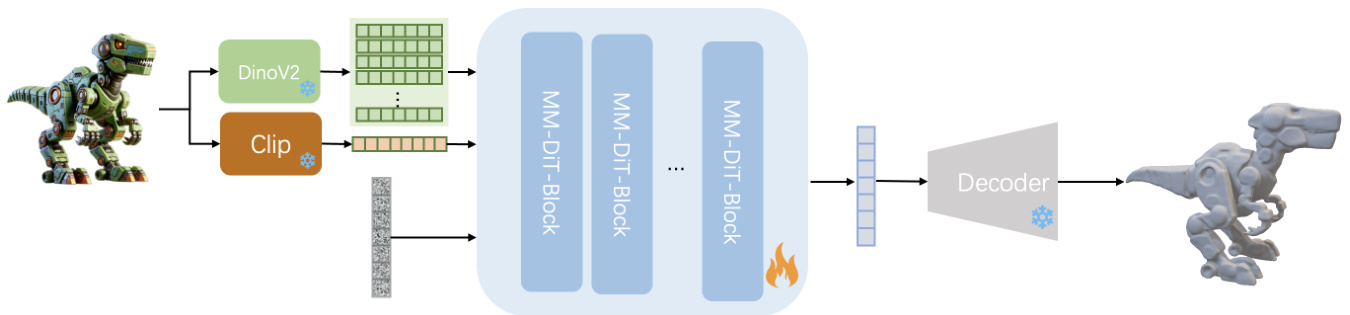

The diffusion pipeline is illustrated in Fig. 2. We employ the Multimodal Diffusion Transformer (MMDiT) [9] as our diffusion backbone and use two pretrained models for conditioning: CLIP-ViT-L/14 [27] as the global image feature extractor and DinoV2-Large [20] as the local image feature extractor. Instead of DDPM, we use a flow matching schedule. Following CLAY [43], the diffusion model is trained in a coarse-to-fine manner.

扩散流程如图 2 所示。我们采用 Multimodal Diffusion Transformer (MMDiT) [9] 作为扩散主干,利用两个预训练模型,具体来说,CLIP-ViT-L/14 [27] 作为全局图像特征提取器,DinoV2-Large [20] 用于局部图像特征提取。我们没有采用 DDPM,而是使用了流匹配调度。遵循 CLAY [43] 的方法,扩散模型以从粗到细的方式进行训练。
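To illustrate how a flow matching objective differs from DDPM-style noise prediction, here is a minimal NumPy sketch. It assumes the straight-line (rectified flow) path used by Stable Diffusion 3; the report does not state its exact path or time sampling, and the function names are hypothetical.

```python
import numpy as np

def interpolate(x0, noise, t):
    """Point on the straight path between data (t = 0) and noise (t = 1)."""
    return (1.0 - t) * x0 + t * noise

def velocity_target(x0, noise):
    """Regression target for the network: d x_t / d t along the straight path."""
    return noise - x0

def euler_sample(velocity_fn, noise, steps=50):
    """Integrate from t = 1 (noise) back to t = 0 (data) with fixed Euler steps."""
    x = noise.copy()
    dt = 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt
        x = x - dt * velocity_fn(x, t)
    return x
```

During training, the network would receive `interpolate(x0, noise, t)` together with `t` and the image conditions, and would be regressed onto `velocity_target(x0, noise)` with a mean-squared error. At inference, `velocity_fn` is the trained network and `euler_sample` turns Gaussian noise into a shape latent.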

To strengthen image conditioning, we use both global and local image features as conditions for the diffusion model. The global condition feature $z_{\mathrm{global}}\in\mathbb{R}^{L}$ is extracted with the CLIP vision encoder, while the local detail condition feature $z_{\mathrm{local}}\in\mathbb{R}^{L\times1024}$ is extracted with the DinoV2 vision encoder. The global and local conditions are integrated into the diffusion model through MMDiT blocks, following Stable Diffusion 3 [9]. Our diffusion model has 2.3B parameters and 28 MMDiT blocks.

为了增强图像控制效果,我们使用全局和局部图像特征作为扩散模型的条件特征。全局条件特征 $z_{\mathrm{global}}\in\mathbb{R}^{L}$ 通过 CLIP 视觉编码器提取,同时,局部细节条件特征 $z_{\mathrm{local}}\in\mathbb{R}^{L\times1024}$ 通过 DinoV2 视觉编码器提取。全局条件和局部条件通过 MMDiT 块集成到扩散模型中,遵循 Stable Diffusion 3 [9]。我们使用的扩散模型具有 28 层 MMDiT 块和 23 亿参数。

To enhance practical utility in 3D design workflows, we have extended our geometry generation framework to accept multi-view conditional inputs. This extension enables finer-grained geometric control through multi-view visual guidance. The system accommodates a variable number of reference images (stochastically sampled from 1 to 4 views per instance) within a unified architecture, eliminating the requirement for fixed-size input configurations. All synthesized geometries maintain spatial alignment with the primary view's coordinate system (defined by the first input image). Input images must be arranged in ascending azimuth order $\{\theta_{1},\theta_{2},\ldots,\theta_{n}\}$, where $\theta_{i}\in\left[0^{\circ},360^{\circ}\right)$. Multi-view feature representations are aggregated through ordered concatenation along the sequence dimension, preserving relative spatial-semantic correspondence across views. Convergence is accelerated via progressive transfer learning: parameters initialized from our single-view conditioned model are fine-tuned on multi-view datasets, with the pretrained backbone weights kept fixed during the initial phases.

为了增强在3D设计工作流程中的实用性,我们扩展了几何生成框架以支持多视角条件输入。这一架构进步通过多视角视觉指导实现了更精细的几何控制。该系统在统一架构中适应可变数量的参考图像(每个实例随机采样1到4个视角),消除了对固定大小输入配置的需求。所有合成几何体均保持与主视角坐标系的空间对齐(由第一个输入图像定义)。输入图像必须按升序方位角排列 $\{\theta_{1},\theta_{2},\ldots,\theta_{n}\}$,其中 $\theta_{i}\in\left[0^{\circ},360^{\circ}\right)$。多视角特征表示通过序列维度上的有序连接进行聚合,保留了跨视角的相对空间语义对应关系。通过渐进式迁移学习加速收敛,其中从单视角条件模型初始化的参数在使用多视角数据集进行微调的同时,在初始阶段保持预训练的主干权重。
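The ordering and concatenation rule can be sketched as follows. This is an illustrative helper, not the report's code: the function name, the per-view feature shape `(L, C)`, and the convention that azimuths are measured relative to the primary view (so the primary view sits at $0^{\circ}$ and sorts first) are assumptions.

```python
import numpy as np

def aggregate_views(features, azimuths_deg):
    """Concatenate per-view token features in ascending azimuth order.

    features: list of (L, C) arrays, one per view (1 to 4 views).
    azimuths_deg: azimuth of each view in degrees, assumed to be measured
        relative to the primary view, which therefore has azimuth 0.
    Returns the (n * L, C) token sequence and the sorted azimuths.
    """
    if not 1 <= len(features) <= 4:
        raise ValueError("expected between 1 and 4 views")
    if len(features) != len(azimuths_deg):
        raise ValueError("one azimuth is required per view")
    az = np.mod(np.asarray(azimuths_deg, dtype=float), 360.0)   # wrap into [0, 360)
    order = np.argsort(az, kind="stable")
    tokens = np.concatenate([features[i] for i in order], axis=0)
    return tokens, az[order]
```

Because the views are concatenated along the sequence dimension rather than stacked in a fixed-size tensor, the same model can consume one, two, three, or four views without architectural changes.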

Figure 1. 3D geometry variational autoencoder. (A) Our base VAE for 3D geometry compression. (B) Extended VAE for efficient 3D geometry compression.

图 1: 3D 几何变分自编码器。(A) 我们用于 3D 几何压缩的基础 VAE。(B) 用于高效 3D 几何压缩的扩展 VAE。

Figure 2. Diffusion pipeline. While the diffusion model is trained, the DinoV2, CLIP, and VAE decoder components are kept frozen.

图 2. 扩散流程。在训练扩散模型的过程中,DinoV2、CLIP 和 VAE 解码器组件保持冻结状态

2.2. Meshing and UV Unwrapping

2.2. 网格划分与UV展开

Once the 3D geometry is generated, it undergoes isosurface extraction, remeshing, and UV unwrapping to produce a texture-ready triangle mesh.

生成 3D 几何体后,会进行等值面提取、重新网格化和 UV 展开,从而生成可用于纹理处理的三角网格。

2.2.1 Isosurface extraction

2.2.1 等值面提取

We use a modified version of the marching cubes algorithm [17, 19] to efficiently extract a watertight mesh from geometry tokens.

我们执行了一种改进版本的 Marching Cubes 算法 [17, 19],以高效地从几何 Token 中提取出封闭的网格。

Marching cubes traditionally requires a dense grid of $D\times D\times D$ occupancy values. Directly computing such a dense grid with Eq. (2) incurs an $O(D^{3})$ time complexity that is prohibitively expensive at high resolutions $D$. To improve efficiency, we adopt a coarse-to-fine strategy: starting from a coarse grid resolution $d_{0}\ll D$, we iteratively build a sparse finer grid of resolution $d_{i+1}=2d_{i}$ whose cells are subdivided from the active cells of the coarser grid of resolution $d_{i}$ that are close to the isosurface. This strategy confines most occupancy queries of Eq. (2) to a small margin around the isosurface and significantly reduces the number of queries required for isosurface extraction, making mesh extraction two to three orders of magnitude faster.

传统的 Marching cubes 算法需要一个密集的 $D\times D\times D$ 占用值网格。直接使用公式 (2) 计算这样的密集网格会带来 $O(D^{3})$ 的时间复杂度,在高分辨率 $D$ 下代价过高。为了提高效率,我们采用了从粗到细的策略:从粗网格分辨率 $d_{0}\ll D$ 开始,逐步构建一个稀疏的细网格,其分辨率为 $d_{i+1}=2d_{i}$ ,该网格的单元是从分辨率 $d_{i}$ 的粗网格中靠近等值面的活跃单元细分而来。该策略确保公式 (2) 中的大多数占用查询都限制在等值面附近的小范围内,并显著减少了等值面提取所需的查询数量,使网格提取速度提高了两个到三个数量级。
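The coarse-to-fine refinement can be illustrated with a small NumPy sketch that counts occupancy queries. It is a simplified stand-in for the report's CUDA implementation: `sphere_occupancy` replaces the VAE decoder, a cell counts as "close to the isosurface" when its eight corners disagree in sign (the report does not specify its activity test, and a margin-based test would be more robust for thin structures), and hole filling and triangle generation are left out.

```python
import numpy as np

CORNERS = np.array([[i, j, k] for i in (0, 1) for j in (0, 1) for k in (0, 1)])

def sphere_occupancy(points, radius=0.6):
    """Toy stand-in for the decoder: positive logits inside a sphere."""
    return radius - np.linalg.norm(points, axis=-1)

def surface_cells(cells, res, occupancy_fn):
    """Keep the cells whose 8 corners do not all share the same sign."""
    corners = cells[:, None, :] + CORNERS[None]             # (n, 8, 3) integer grid vertices
    verts, inverse = np.unique(corners.reshape(-1, 3), axis=0, return_inverse=True)
    values = occupancy_fn(verts / res * 2.0 - 1.0)          # map vertices to [-1, 1]^3
    inside = (values > 0)[inverse.reshape(-1)].reshape(-1, 8)
    mixed = inside.any(axis=1) & ~inside.all(axis=1)
    return cells[mixed], len(verts)                         # shared vertices are queried once

def coarse_to_fine(occupancy_fn, d0=16, D=128):
    """Return surface-crossing cells at resolution D and the number of occupancy queries."""
    ratio = D // d0
    if D % d0 != 0 or (ratio & (ratio - 1)) != 0:
        raise ValueError("D must equal d0 times a power of two")
    axis = np.arange(d0)
    cells = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1).reshape(-1, 3)
    res, queries = d0, 0
    while True:
        cells, n = surface_cells(cells, res, occupancy_fn)
        queries += n
        if res == D:
            return cells, queries
        cells = (cells[:, None, :] * 2 + CORNERS[None]).reshape(-1, 3)   # 8 children per cell
        res *= 2
```

Calling `coarse_to_fine(sphere_occupancy, d0=16, D=128)` returns the active cells at the finest level together with the query count, which can be compared against the $(D+1)^{3}$ vertex evaluations a dense grid would need. The gap widens as $D$ grows because the number of active cells scales with surface area rather than volume.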

To guarantee a watertight mesh, at the highest level $d_{n}=D$, we expand the sparse active cells along the isosurface to eliminate holes and perform Lewiner's topology check [14] to ensure manifoldness. We implement the sparse marching cubes as a custom CUDA kernel to maximize efficiency.

为了确保网格的严密性,在最高层级 $d_{n}=D$ 下,我们沿着等值面扩展稀疏的活跃单元以消除孔洞,并执行 Lewiner 的拓扑检查 [14] 以确保流形性。我们将稀疏行进立方体实现为自定义的 CUDA 内核函数,以最大化效率。

2.3. Remesh and UV unwrap

2.3. 重新网格化和 UV 展开

The triangle meshes extracted by marching cubes may contain poorly constructed elements such as collapsed faces or slivers. Furthermore, they often have a high face count that can create problems for downstream applications. We overcome these issues with an optional remeshing step that uses either an off-the-shelf quad-remesher or isotropic remeshing [21] followed by QEM triangle decimation [10].

从 Marching cubes 提取的三角网格可能包含构造不良的元素,例如塌陷面或薄片。此外,它们通常表现出较高的面数,这可能会给下游应用带来问题。我们通过可选的重新网格化步骤来克服这些问题,使用现成的四边形重新网格化工具或各向同性重新网格化 [21],然后进行 QEM 三角网格简化 [10]。

In addition, we use the open-source project UV-Atlas [45] for UV charting and packing. At this point, we obtain a polygon mesh that is ready for texture generation.

此外,我们使用开源项目 UV-Atlas [45] 进行 UV 图表和打包。此时,我们获得了一个准备进行纹理生成的多边形网格。

2.4. Alternative Approach: Artist-Created Meshes Generation

2.4. 替代方法:艺术家创建的网格生成

An alternative approach we explored involves directly generating the mesh, bypassing the initial generation of geometry as an implicit function followed by mesh extraction. This method effectively produces meshes with reasonable topology, akin to those crafted by artists for simple shapes. However, it encounters difficulties when applied to complex geometries, where maintaining structural integrity and topological accuracy becomes challenging.

我们探索的另一种方法涉及直接生成网格,绕过作为隐式函数生成几何形状的初始步骤,随后进行网格提取。该方法有效地生成了具有合理拓扑结构的网格,类似于艺术家为简单形状制作的网格。然而,当应用于复杂几何形状时,保持结构完整性和拓扑准确性变得具有挑战性。

2.4.1 Mesh Compression

2.4.1 网格压缩

Direct regression of vertex coordinates results in substantial memory consumption, which limits the number of faces the model can handle. To mitigate this issue, we adopt the methodology proposed by BPT [38], which compresses the original vertex coordinates using block index compression and patchified aggregation. Specifically, for a vertex $\boldsymbol{v}_{i}=\left(x_{i},y_{i},z_{i}\right)$, the block-wise indexing $(b_{i},o_{i})$ is formulated as follows:

直接回归顶点坐标会导致内存消耗大量增加,从而限制了模型可以处理的面数。为了解决这个问题,我们采用了 BPT [38] 提出的方法,该方法通过使用块索引压缩和分块聚合来压缩原始顶点坐标。具体来说,对于顶点 $\boldsymbol{v}_{i}=\left(x_{i},y_{i},z_{i}\right)$,块索引 $(b_{i},o_{i})$ 的公式如下:

In this formulation, the symbols $|$ and $\%$ represent integer division (discarding the remainder) and the modulo operation, respectively. This approach segments the coordinates along each axis into $B$ blocks, each of length $O$. To further enhance the compression ratio, we employ the patchified aggregation technique described in [38]. This technique aggregates the faces connected to the same vertex into a non-overlapping patch and uses dual-block indices to denote the starting point of a patch. Consequently, the offset vocabulary is shared between the center vertex and the surrounding vertices. The center patch is formulated as follows:

在此公式中,符号 $|$ 和 $\%$ 分别表示整数除法(舍去余数)和取模运算。该方法将沿每个轴的坐标分割为 $B$ 个块,每个块的长度为 $O$。为了进一步提高压缩比,我们采用了 [38] 中描述的 patchified aggregation 技术。该技术将连接到同一顶点的面聚合成一个不重叠的 patch,并使用双块索引表示 patch 的起点。因此,中心顶点和周围顶点之间共享偏移量词汇表。中心 patch 的公式如下:

In this context, $b_{c}^{\prime}$ and $O_{C}$ denote the block index and the offset index of the center patch, respectively. These indices are needed to locate the patch within the compressed data structure.

在此上下文中,$b_{c}^{\prime}$ 和 $O_{C}$ 分别表示中心 patch 的块索引和偏移索引。这些索引对于准确引用压缩数据结构中 patch 的空间配置至关重要。

To achieve this, we first convert vertex coordinates into discrete values with a resolution of $R$. Subsequently, we encode the mesh information, including vertices and faces, into a discrete token sequence. This sequence can be decoded back into a mesh using the same technique. Note that this encoding and decoding process is governed by predefined rules and does not involve any learnable parameters.

为实现这一目标,我们首先将顶点坐标转换为分辨率为 $R$ 的离散值。随后,我们将包括顶点和面在内的网格信息编码为离散的 Token 序列。该序列可以使用相同的技术解码回网格。需要注意的是,该编码和解码过程由预定义的规则控制,不涉及任何可学习的参数。
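The rule-based, lossless nature of the vertex encoding can be demonstrated with a short NumPy sketch of quantization followed by block-wise indexing. The exact index layout is an assumption: it follows the description of $B$ blocks of length $O$ per axis with integer division and modulo, takes $R=B\cdot O$, and uses hypothetical function names. Patchified aggregation and face ordering are not covered.

```python
import numpy as np

def quantize(vertices, R):
    """Map coordinates in [-1, 1] to integers in [0, R - 1]."""
    q = np.floor((np.asarray(vertices) + 1.0) / 2.0 * R).astype(np.int64)
    return np.clip(q, 0, R - 1)

def block_encode(q, B, O):
    """Split each quantized vertex into a block index and an in-block offset index."""
    x, y, z = q[:, 0], q[:, 1], q[:, 2]
    b = (x // O) * B * B + (y // O) * B + (z // O)      # block index in [0, B^3)
    o = (x % O) * O * O + (y % O) * O + (z % O)         # offset index in [0, O^3)
    return b, o

def block_decode(b, o, B, O):
    """Exact inverse of block_encode: recover the quantized coordinates."""
    bx, by, bz = b // (B * B), (b // B) % B, b % B
    ox, oy, oz = o // (O * O), (o // O) % O, o % O
    return np.stack([bx * O + ox, by * O + oy, bz * O + oz], axis=1)
```

With, for example, $R=128$, $B=8$, and $O=16$, each vertex becomes two tokens drawn from vocabularies of size $B^{3}$ and $O^{3}$ instead of three coordinate tokens, and consecutive vertices that fall in the same block can share the block token. Decoding reproduces the quantized coordinates exactly, so the only loss is the initial quantization.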

2.4.2 Autoregressive Model for Mesh Generation

2.4.2 用于网格生成的自回归模型

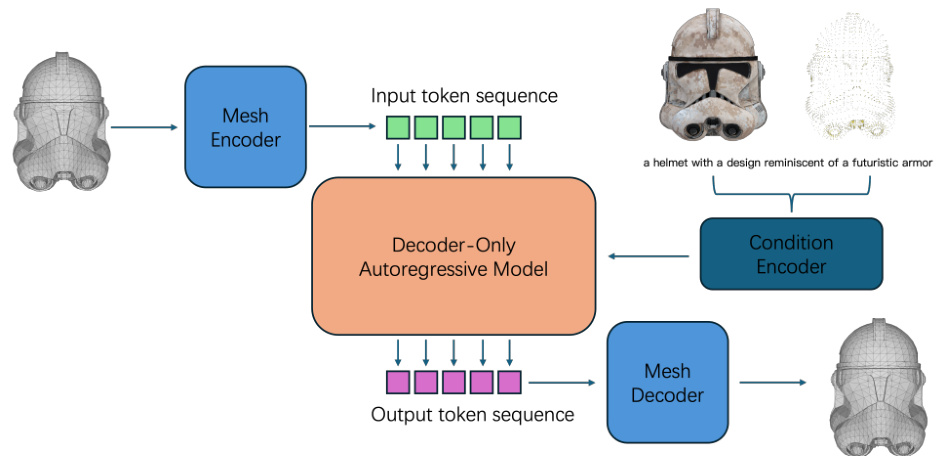

In this section, we describe how novel shapes are generated from various modalities using the compression technique outlined in the preceding sections. Fig. 3 illustrates the pipeline of our approach. First, a mesh is encoded into a discrete token sequence using the method detailed previously. A decoder-only autoregressive model then predicts each subsequent token from the preceding ones. To support multi-modality condition control, a pretrained condition encoder network encodes the condition information, such as images, text, and point clouds, into latent features. These features serve as the contextual input for the decoder-only model. The resulting token sequence is decoded back into the final mesh using a mesh decoder. Note that both the mesh encoder and the mesh decoder are purely rule-based, as explained previously, and do not involve any learnable parameters.

在本节中,我们描述了使用前几节概述的压缩技术从各种模态生成新形状的方法。图3展示了我们方法的流程。首先,使用先前详述的方法将网格编码为离散的token序列。随后,使用仅解码器的自回归模型基于前序token预测后续token。为了支持多模态条件控制,使用预训练的条件编码器网络将条件信息(如图像、文本和点云)编码为潜在特征。这些特征作为仅解码器模型的上下文输入。最终的token序列可以通过网格解码器解码回最终网格。需要注意的是,网格编码器和网格解码器都是基于规则的,如前所述,不涉及任何可学习参数。

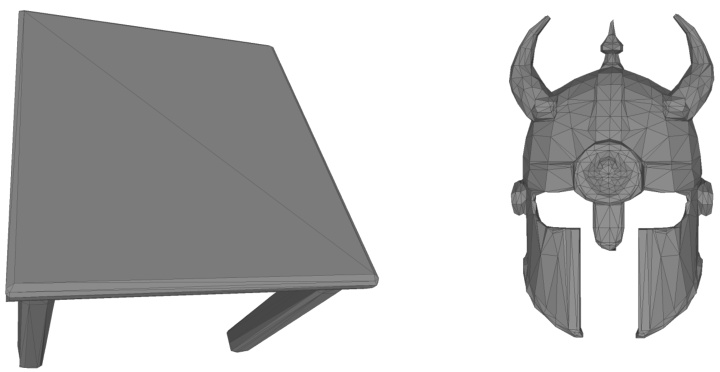

Fig. 4 visualizes the meshes generated by our model, which exhibit superior topology consistency with a minimal number of faces.

图 4: 展示了我们模型生成的网格,其拓扑一致性优异且面数最少。

Figure 3. Pipeline for Artist-Created Mesh Generation. Meshes are first encoded into discrete token sequences. These sequences are then processed by a decoder-only autoregressive model built on a Transformer architecture. To enforce multi-modality condition control, a pretrained condition encoder network is employed. This network integrates diverse modalities so that the generated meshes adhere to the specified conditions.

图 3: 艺术家创建的网格生成流程。首先,网格被编码为离散的token序列。这些序列随后通过一个仅包含解码器的自回归模型进行处理,该模型采用了Transformer网络架构。为了实现多模态条件控制,使用了一个预训练的条件编码器网络。该网络有效地整合了多种模态,确保生成的网格符合指定的条件。

Figure 4. Example Meshes Generated by Our Artist-Created Mesh Generation Model. The meshes produced by our model demonstrate superior performance in maintaining topological consistency, showcasing the effectiveness of our approach in generating high-quality artistic meshes.

图 4: 由我们的艺术家创建的网格生成模型生成的示例网格。我们的模型生成的网格在保持拓扑一致性方面表现出色,展示了我们在生成高质量艺术网格方面的有效性。

3. Texture Generation

3. 纹理生成

The proposed texture generation pipeline consists of several stages, each contributing to the generation of consistent and high-quality textures. Fig. 5 illustrates the texture generation pipeline. The pipeline begins with a 3D mesh without texture. Below we introduce each stage in detail.

所提出的纹理生成管道由多个阶段组成,每个阶段都贡献于生成一致且高质量的纹理。图 5 展示了纹理生成管道。该管道从没有纹理的 3D 网格开始。下面我们详细介绍每个阶段。

3.1. Frontal Image Generation

3.1. 正面图像生成

If the input prompt is text, a frontal image is initially generated conditioned on a depth map derived from the 3D geometry. This process involves rendering the 3D mesh into a depth map and utilizing depth-conditioned diffusion models [42] to produce the frontal image. Alternatively, if the input is an image, we integrate the IP-Adapter [39] and ControlNet [42] to generate the frontal image. As illustrated in Fig. 6, both text and image prompts are converted into a geometry-aligned frontal image, which serves as the input for subsequent texture generation.

如果输入提示是文本,首先会根据从3D几何体生成的深度图生成正面图像。该过程包括将3D网格渲染为深度图,并利用深度条件扩散模型 [42] 生成