ViDoRAG: Visual Document Retrieval-Augmented Generation via Dynamic Iterative Reasoning Agents

Abstract

Understanding information from visually rich documents remains a significant challenge for traditional Retrieval-Augmented Generation (RAG) methods. Existing benchmarks predominantly focus on image-based question answering (QA), overlooking the fundamental challenges of efficient retrieval, comprehension, and reasoning within dense visual documents. To bridge this gap, we introduce ViDoSeek, a novel dataset designed to evaluate RAG performance on visually rich documents requiring complex reasoning. Based on it, we identify key limitations in current RAG approaches: (i) purely visual retrieval methods struggle to effectively integrate both textual and visual features, and (ii) previous approaches often allocate insufficient reasoning tokens, limiting their effectiveness. To address these challenges, we propose ViDoRAG, a novel multi-agent RAG framework tailored for complex reasoning across visual documents. ViDoRAG employs a Gaussian Mixture Model (GMM)-based hybrid strategy to effectively handle multi-modal retrieval. To further elicit the model's reasoning capabilities, we introduce an iterative agent workflow incorporating exploration, summarization, and reflection, providing a framework for investigating test-time scaling in RAG domains. Extensive experiments on ViDoSeek validate the effectiveness and generalization of our approach. Notably, ViDoRAG outperforms existing methods by over 10% on the competitive ViDoSeek benchmark.

1 Introduction

Retrieval-Augmented Generation (RAG) enhances Large Models (LMs) by enabling them to use external knowledge to solve problems. As the expression of information becomes increasingly diverse, we often work with visually rich documents that contain diagrams, charts, tables, etc. These visual elements make information easier to understand and are widely used in education, finance, law, and other fields. Therefore, researching RAG within visually rich documents is highly valuable.

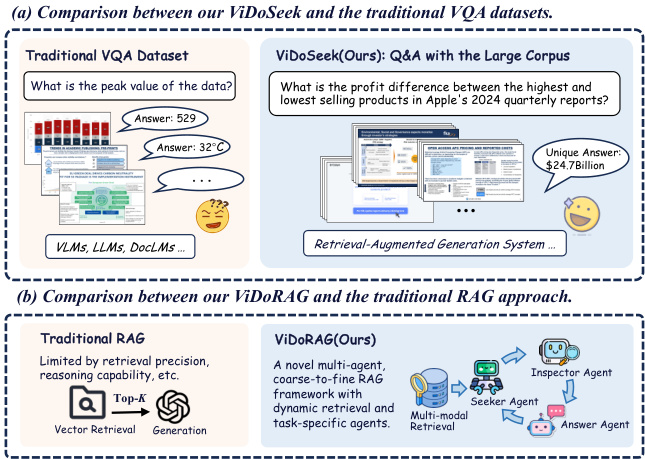

Figure 1: Comparison of our work with existing datasets and methods. (a) In traditional datasets, each query must be paired with specific images or documents. In our ViDoSeek, each query has a unique answer within the large corpus. (b) Our ViDoRAG is a multi-agent, coarse-to-fine framework specifically optimized for visually rich documents.

In practical applications, RAG systems often need to retrieve information from a large collection of hundreds of documents amounting to thousands of pages. As shown in Fig. 1, existing Visual Question Answering (VQA) benchmarks are not designed for such a large corpus. The queries in these benchmarks are typically paired with a single image (Methani et al., 2020; Masry et al., 2022; Li et al., 2024; Mathew et al., 2022) or a single document (Ma et al., 2024). This setup suits the evaluation of Q&A tasks but not of RAG systems, because the answers to queries in these datasets may not be unique within the whole corpus.

To address this gap, we introduce ViDoSeek, a novel dataset designed for visually rich document retrieval-reason-answer. In ViDoSeek, each query has a unique answer and specific reference pages. It covers the diverse content types and multi-hop reasoning that most VQA datasets include. This specificity allows us to better evaluate retrieval and generation performance separately.

Moreover, to enable models to reason effectively over a large corpus, we propose ViDoRAG, a multi-agent, coarse-to-fine retrieval-augmented generation framework tailored for visually rich documents. Our approach is based on two critical observations: (i) Inefficient and Variable Retrieval Performance. Traditional OCR-based retrieval struggles to capture visual information. With the development of vision-based retrieval, visual information can now be captured readily (Faysse et al., 2024; Yu et al., 2024a; Zhai et al., 2023). However, there is no effective method to integrate visual and textual features, resulting in poor retrieval of relevant content. (ii) Insufficient Activation of Reasoning Capabilities during Generation. Previous studies on inference scaling for RAG focus on expanding the length of retrieved documents (Jiang et al., 2024; Shao et al., 2025; Xu et al., 2023). However, given the characteristics of VLMs, emphasizing only the quantity of knowledge without providing further reasoning guidance has certain limitations. An effective inference scale-up method is needed that efficiently uses specific action spaces, such as resizing and filtering, to fully activate reasoning capabilities.

Building upon these insights, ViDoRAG introduces improvements in both retrieval and generation. We propose Multi-Modal Hybrid Retrieval, which combines visual and textual features and dynamically adjusts the distribution of retrieval results based on a Gaussian Mixture Model (GMM) prior. This approach achieves the optimal retrieval distribution for each query, improving generation efficiency by reducing unnecessary computation. During generation, our framework comprises three agents: the seeker, inspector, and answer agents. The seeker rapidly scans thumbnails and selects relevant images, guided by feedback from the inspector. The inspector reviews the selected images in detail, provides reflection, and offers preliminary answers. The answer agent checks consistency and gives the final answer. This framework reduces exposure to irrelevant information and ensures consistent answers across multiple scales.

Our major contributions are as follows:

• We introduce ViDoSeek, a benchmark specifically designed for visually rich document retrieval-reason-answer and fully suited to evaluating RAG over large document corpora.
• We propose ViDoRAG, a novel RAG framework that uses a multi-agent, actor-critic paradigm for iterative reasoning, enhancing the noise robustness of generation models.
• We introduce a GMM-based multi-modal hybrid retrieval strategy to effectively integrate visual and textual pipelines.
• Extensive experiments demonstrate the effectiveness of our method. ViDoRAG significantly outperforms strong baselines, achieving an improvement of over 10% and establishing a new state of the art on ViDoSeek.

2 Related Work

Visual Document Q&A Benchmarks. Visual Document Question Answering focuses on answering questions based on the visual content of documents (Antol et al., 2015; Ye et al., 2024; Wang et al., 2024). While most existing research (Methani et al., 2020; Masry et al., 2022; Li et al., 2024; Mathew et al., 2022) has concentrated on question answering over single images, recent work has begun to explore multi-page document question answering, driven by the increasing context length of modern models (Mathew et al., 2021; Ma et al., 2024; Tanaka et al., 2023). However, prior datasets are not well-suited for RAG tasks involving large collections of documents. To fill this gap, we introduce ViDoSeek, the first large-scale document collection QA dataset, in which each query corresponds to a unique answer across a collection of $\sim$6k images.

Retrieval-augmented Generation. With the advancement of large models, RAG has enhanced the ability of models to incorporate external knowledge (Lewis et al., 2020; Chen et al., 2024b; Wu et al., 2025). In prior research, retrieval often followed the process of extracting text via OCR technology (Chen et al., 2024a; Lee et al., 2024; Robertson et al., 2009). Recently, the growing interest in multimodal embeddings has greatly improved image retrieval tasks (Faysse et al., 2024; Yu et al., 2024a). Additionally, there are works that focus on In-Context Learning in RAG(Agarwal et al., 2025; Yue et al., 2024; Team et al., 2024; Weijia et al.,

检索增强生成。随着大模型的进步,RAG 增强了模型整合外部知识的能力 (Lewis et al., 2020; Chen et al., 2024b; Wu et al., 2025)。在先前的研究中,检索通常遵循通过 OCR 技术提取文本的过程 (Chen et al., 2024a; Lee et al., 2024; Robertson et al., 2009)。最近,对多模态嵌入的兴趣日益增长,极大地改善了图像检索任务 (Faysse et al., 2024; Yu et al., 2024a)。此外,还有专注于 RAG 中的上下文学习的工作 (Agarwal et al., 2025; Yue et al., 2024; Team et al., 2024; Weijia et al.,

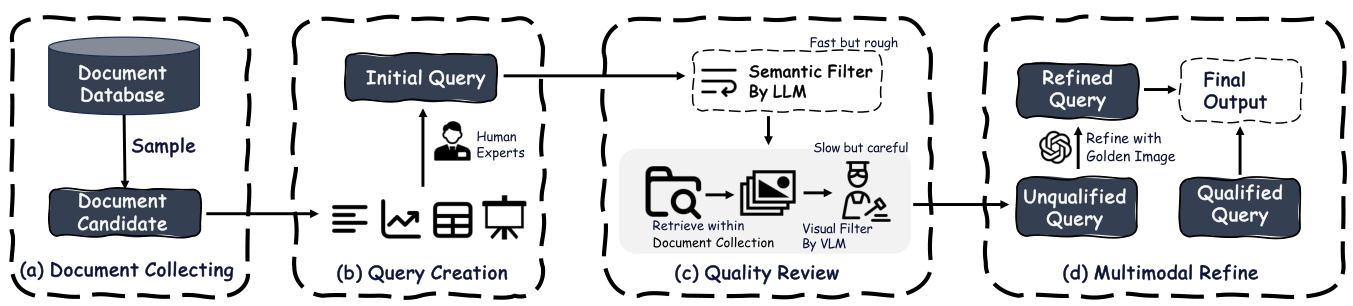

Figure 2: Data Construction pipeline. (a) We sample and filter documents according to the requirements to obtain candidates. (b) Then experts construct the initial query from different contents. (c) After that, we prompt GPT-4 to directly determine whether the query is a general query. The remaining queries are carefully reviewed with top $\cdot K$ recall images. (d) Finally, unqualified queries are refined paired with golden image by GPT-4o.

图 2: 数据构建流程。(a) 我们根据需求对文档进行采样和过滤,以获得候选文档。(b) 然后专家从不同内容中构建初始查询。(c) 之后,我们提示 GPT-4 直接判断查询是否为通用查询。剩余的查询会与 top $\cdot K$ 召回图像一起进行仔细审查。(d) 最后,不合格的查询会由 GPT-4o 与黄金图像配对进行优化。

2023). Our work builds upon these developments by combining multi-modal hybrid retrieval with a coarse-to-fine multi-agent generation framework, seamlessly integrating various embedding and generation models into a scalable framework.

2023)。我们的工作在这些进展的基础上,通过将多模态混合检索与从粗到细的多智能体生成框架相结合,无缝整合了各种嵌入和生成模型,构建了一个可扩展的框架。

3 Problem Formulation

Given a query $q$ and a collection of $M$ documents $\mathcal{C}=\{\mathcal{D}_{1},\mathcal{D}_{2},\ldots,\mathcal{D}_{M}\}$, each document $\mathcal{D}_{m}$ consists of $N$ pages, with each image representing an individual page: $\mathcal{D}_{m}=\{\mathbf{I}_{1},\mathbf{I}_{2},\ldots,\mathbf{I}_{N}\}$. The total number of images in the collection is $\sum_{m=1}^{M}|\mathcal{D}_{m}|$. We aim to retrieve the most relevant information efficiently and accurately and to generate the final answer $a$ to the query $q$.
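To make the notation concrete, the collection can be modeled as a small data structure. This is a minimal sketch, not code from the paper: the class names `Document` and `Collection`, the method names, and the use of image paths as page entries are all hypothetical. The page count is computed per document, matching the sum $\sum_{m=1}^{M}|\mathcal{D}_{m}|$.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Document:
    """One document D_m: an ordered list of page images I_1 ... I_N."""
    pages: List[str] = field(default_factory=list)  # hypothetical: one image path per page


@dataclass
class Collection:
    """The corpus C = {D_1, ..., D_M}."""
    documents: List[Document] = field(default_factory=list)

    def total_pages(self) -> int:
        # sum_{m=1}^{M} |D_m|; page counts may differ between documents
        return sum(len(doc.pages) for doc in self.documents)

    def page_keys(self) -> List[Tuple[int, int]]:
        # (document index, page index) pairs in original reading order
        return [(m, n) for m, doc in enumerate(self.documents)
                for n in range(len(doc.pages))]
```

For example, a collection with a two-page and a one-page document has `total_pages() == 3`.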

4 ViDoSeek Dataset

Existing VQA datasets typically consist of queries paired with a single image or a few images. However, in practical application scenarios, users often pose questions over a large-scale corpus rather than targeting an individual document or image. To better evaluate RAG systems, we prefer questions that have unique answers when retrieving from a large corpus. To address this need, we introduce ViDoSeek, a novel visually rich document dataset specifically designed for RAG systems. Below, we describe the pipeline for constructing the dataset (§4.1) and provide a detailed analysis of the dataset (§4.2).

4.1 Dataset Construction

To construct the ViDoSeek dataset, we developed a four-step pipeline to ensure that the queries meet our stringent requirements. As illustrated in Figure 2, our dataset comprises two parts: one annotated from scratch by our AI researchers, and the other derived from refining queries in the existing open-source dataset SlideVQA (Tanaka et al., 2023). For the open-source dataset, we start query refinement at the third step of our pipeline. For the dataset we build from scratch, we follow the entire pipeline, beginning with document collection. Our four-step pipeline is outlined below:

Step 1. Document Collecting. As slides are a widely used medium for conveying information today, we selected them as our document source. We began by collecting English-language slide decks of 25 to 50 pages, covering 12 domains such as economics, technology, literature, and geography. From these, we selected 300 slide decks that simultaneously include text, charts, tables, and two-dimensional layouts. Two-dimensional layouts refer to flowcharts, diagrams, or any visual elements composed of multiple components, and they are a distinctive feature of slides.

Step 2. Query Creation. To make the queries more suitable for RAG over a large-scale collection, our experts were instructed to construct queries that are specific to the document. Additionally, we encouraged constructing queries in various forms and with different sources and reasoning types to better reflect real-world scenarios.

Step 3. Quality Review. In large-scale retrieval and generation tasks, relying solely on manual annotation is challenging because of human cognitive limitations. To address this, we propose a review module that automatically identifies problematic queries.

Step 4. Multimodal Refine. In this final step, we refine the queries that did not meet our standards during the quality review. We use carefully designed VLM-based agents to assist us throughout the entire dataset construction pipeline.

Table 1: Comparison of existing datasets with ViDoSeek.

| Dataset | Domain | Content Type | Reference Type | Large Document Collection |
|---|---|---|---|---|
| PlotQA (Methani et al., 2020) | Academic | Chart | Single-Image | ✗ |
| ChartQA (Masry et al., 2022) | Academic | Chart | Single-Image | ✗ |
| ArxivQA (Li et al., 2024) | Academic | Chart | Single-Image | ✗ |
| InfoVQA (Mathew et al., 2022) | Open-Domain | Text, Chart, Layout | Single-Image | ✗ |
| DocVQA (Mathew et al., 2021) | Open-Domain | Text, Chart, Table | Single-Document | ✗ |
| MMLongDoc (Ma et al., 2024) | Open-Domain | Text, Chart, Table, Layout | Single-Document | ✗ |
| SlideVQA (Tanaka et al., 2023) | Open-Domain | Text, Chart, Table, Layout | Single-Document | ✗ |
| ViDoSeek (Ours) | Open-Domain | Text, Chart, Table, Layout | Multi-Documents | ✓ |

4.2 Dataset Analysis

Dataset Statistics. ViDoSeek is the first dataset specifically designed for question answering over large-scale document collections. It comprises approximately 1.2k questions across a wide array of domains, addressing four key content types: Text, Chart, Table, and Layout. Among these, the Layout type poses the greatest challenge and represents the largest portion of the dataset. Additionally, the queries are categorized into two reasoning types: single-hop and multi-hop. Further details of the dataset can be found in Appendices B and C.

Comparative Analysis. Table 1 highlights the limitations of existing datasets, which are predominantly tailored for scenarios involving single images or documents, lacking the capacity to handle the intricacies of retrieving relevant information from large collections. ViDoSeek bridges this gap by offering a dataset that more accurately mirrors real-world scenarios. This facilitates a more robust and scalable evaluation of RAG systems.

as the most relevant nodes are not always ranked at the top. Conversely, a larger $\kappa$ can slow down inference and introduce inaccuracies due to noise. Additionally, manually tuning $\kappa$ for different scenarios is troublesome.

由于最相关的节点并不总是排在前面。相反,较大的 $\kappa$ 会减慢推理速度并由于噪声引入不准确性。此外,手动为不同场景调整 $\kappa$ 也很麻烦。

Our objective is to develop a straightforward yet effective method to automatically determine $\kappa$ for each modality, without the dependency on a fixed value. We utilize the similarity $s$ of the embedding $E$ to quantify the relevance between the query and the document collection $\mathcal{C}$ :

我们的目标是开发一种简单而有效的方法来自动确定每个模态的 $\kappa$,而不依赖于固定值。我们利用嵌入 $E$ 的相似度 $s$ 来量化查询与文档集合 $\mathcal{C}$ 之间的相关性:

where $s_{i}$ represents the cosine similarity between the query $\mathcal{Q}$ and page $p_{i}$ . In the visual pipeline, a page corresponds to an image, whereas in the textual pipeline, it corresponds to chunks of OCR text. We propose that the distribution of $s$ follows a GMM and we consider they are sampled from a bimodal distribution $\mathcal{P}(s)$ shown in Fig.3:

其中 $s_{i}$ 表示查询 $\mathcal{Q}$ 与页面 $p_{i}$ 之间的余弦相似度。在视觉管道中,页面对应于图像,而在文本管道中,页面对应于 OCR 文本块。我们提出 $s$ 的分布遵循 GMM (Gaussian Mixture Model),并认为它们是从图 3 所示的双峰分布 $\mathcal{P}(s)$ 中采样的:

5 Method

5 方法

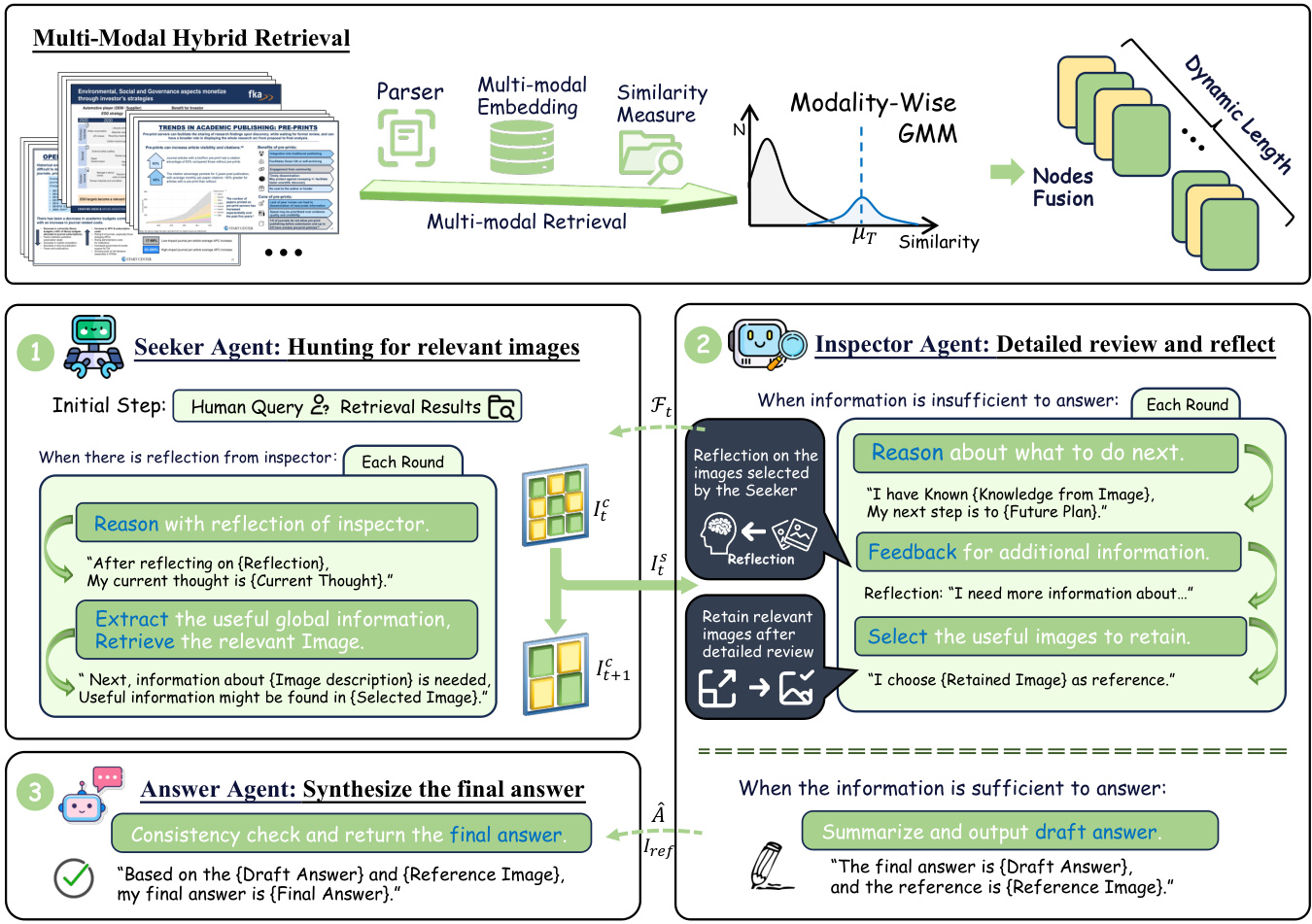

In this section, drawing from insights and foundational ideas, we present a comprehensive description of our ViDoRAG framework, which integrates two modules: Multi-Modal Hybrid Retrieval (§5.1) and Multi-Scale View Generation (§5.2).

在本节中,基于见解和基础思想,我们全面描述了 ViDoRAG 框架,该框架集成了两个模块:多模态混合检索 (Multi-Modal Hybrid Retrieval) (§5.1) 和多尺度视图生成 (Multi-Scale View Generation) (§5.2)。

5.1 Multi-Modal Hybrid Retrieval

5.1 多模态混合检索

For each query, our approach involves retrieving information through both textual and visual pipelines, dynamically determining the optimal value of topK using a Gaussian Mixture Model (GMM), and merging the retrieval results from both pipelines.

对于每个查询,我们的方法包括通过文本和视觉管道检索信息,使用高斯混合模型(GMM)动态确定 topK 的最佳值,并合并来自两个管道的检索结果。

Adaptive Recall with Gaussian Mixture Model. Traditional methods rely on a static hyper para meter, $\kappa$ , to retrieve the top $\cdot K$ images or text chunks from a corpus. A smaller $\kappa$ might fail to capture sufficient references needed for accurate responses, where $\mathcal{N}$ represents a Gaussian distribution, with $w,\mu,\sigma^{2}$ indicating the weight, mean, and variance, respectively. The subscripts $T$ and $F$ refer to the distributions of pages with high and low similarity. The distribution with higher similarity is deemed valuable for generation. The ExpectationMaximization (EM) algorithm is utilized to estimate the prior probability $\mathcal{P}(T|s,\mu_{T},\sigma_{T}^{2})$ for each modality. The dynamic value of $\kappa$ is defined as:

基于高斯混合模型的自适应召回。传统方法依赖于静态超参数 $\kappa$ 从语料库中检索前 $\cdot K$ 张图片或文本块。较小的 $\kappa$ 可能无法捕捉到准确响应所需的足够参考,其中 $\mathcal{N}$ 表示高斯分布,$w,\mu,\sigma^{2}$ 分别表示权重、均值和方差。下标 $T$ 和 $F$ 分别表示高相似度和低相似度的页面分布。具有较高相似度的分布被认为对生成有价值。期望最大化 (EM) 算法用于估计每种模态的先验概率 $\mathcal{P}(T|s,\mu_{T},\sigma_{T}^{2})$。$\kappa$ 的动态值定义为:

Considering that the similarity score distribution for different queries within a document collection may not strictly follow a standard distribution, we establish upper and lower bounds to manage outliers. The EM algorithm is employed sparingly, less than $\sim1%$ of the time. Dynamically adjusting $\kappa$ enhances generation efficiency compared to a static setting. Detailed analysis is available in $\S7.2$

考虑到文档集中不同查询的相似度得分分布可能不严格遵循标准分布,我们建立了上下限来管理异常值。EM算法使用得较少,不到 $\sim1%$ 的时间。与静态设置相比,动态调整 $\kappa$ 提高了生成效率。详细分析见 $\S7.2$

Figure 3: ViDoRAG Framework.

图 3: ViDoRAG 框架。
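The adaptive recall step can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. We fit the two-component GMM with a hand-written 1-D EM loop and treat the component with the higher mean as $T$. Because the exact definition of the dynamic $\mathcal{K}$ is not reproduced above, we assume that $\mathcal{K}$ is the number of pages whose posterior $\mathcal{P}(T\mid s_i)$ exceeds 0.5. The result is clipped to hypothetical bounds `k_min`/`k_max`, which stand in for the upper and lower bounds mentioned in the text.

```python
import numpy as np


def _normal_pdf(x, mu, var):
    """Density of N(mu, var), evaluated element-wise (broadcasts)."""
    return np.exp(-0.5 * (x - mu) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def fit_bimodal_gmm(s, n_iter=200, tol=1e-8, var_floor=1e-6):
    """Fit a two-component 1-D GMM to similarity scores with EM.

    Components start at the max and min score, so component 0 tends to
    become the high-similarity (T) mode. Returns weights, means, variances.
    """
    s = np.asarray(s, dtype=float)
    w = np.array([0.5, 0.5])
    mu = np.array([s.max(), s.min()])
    var = np.full(2, max(s.var(), var_floor))
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: responsibility of each component for each score, shape (n, 2)
        dens = w * _normal_pdf(s[:, None], mu, var)
        total = dens.sum(axis=1, keepdims=True) + 1e-300
        resp = dens / total
        # M-step: re-estimate weights, means and (floored) variances
        nk = resp.sum(axis=0) + 1e-12
        w = nk / s.size
        mu = (resp * s[:, None]).sum(axis=0) / nk
        var = np.maximum((resp * (s[:, None] - mu) ** 2).sum(axis=0) / nk, var_floor)
        ll = np.log(total).sum()  # log-likelihood under the parameters used in the E-step
        if abs(ll - prev_ll) < tol:
            break
        prev_ll = ll
    return w, mu, var


def adaptive_k(scores, k_min=1, k_max=10):
    """Assumed rule: count pages with P(T | s_i) > 0.5, clipped to [k_min, k_max]."""
    s = np.asarray(scores, dtype=float)
    w, mu, var = fit_bimodal_gmm(s)
    t = int(np.argmax(mu))  # the component with the higher mean plays the role of T
    dens = w * _normal_pdf(s[:, None], mu, var)
    post_t = dens[:, t] / (dens.sum(axis=1) + 1e-300)
    k = int(np.count_nonzero(post_t > 0.5))
    return int(np.clip(k, k_min, min(k_max, s.size)))


def retrieve_adaptive(scores, k_min=1, k_max=10):
    """Indices of the top-K pages by similarity, with K chosen per query."""
    k = adaptive_k(scores, k_min, k_max)
    return np.argsort(-np.asarray(scores, dtype=float))[:k].tolist()
```

Suppose a query's scores contain five pages that are clearly separated from the low-similarity mass. `adaptive_k` then returns 5, whereas a static setting would return the same count for every query. scikit-learn's `GaussianMixture(n_components=2)` could replace the hand-written EM loop if that dependency is acceptable.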

Textual and Visual Hybrid Retrieval. In the previous step, nodes were retrieved from both pipelines. In this phase, we integrate them:

$$
\mathcal{R}_{hybrid}=Sort\left(\mathcal{F}\left(\mathcal{R}_{Text},\mathcal{R}_{Visual}\right)\right),
$$

where $\mathcal{R}_{Text}$ and $\mathcal{R}_{Visual}$ denote the retrieval results from the textual and visual pipelines, respectively. The function $\mathcal{F}(\cdot)$ denotes a union operation, and $Sort(\cdot)$ arranges the nodes in their original order, because consecutive pages are often correlated (Yu et al., 2024b).

The textual and visual retrieval pipelines perform differently on different features. Without adaptive recall, the combined retrieval $\mathcal{R}_{hybrid}$ can become excessively long. Adaptive recall keeps effective retrievals concise, whereas traditional pipelines yield longer recall results. This strategy optimizes performance relative to context length, underscoring the value of adaptive recall in hybrid retrieval.
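The merge $\mathcal{R}_{hybrid}=Sort(\mathcal{F}(\mathcal{R}_{Text},\mathcal{R}_{Visual}))$ reduces to a deduplicating union followed by a sort into reading order. In this minimal sketch, `PageId` and `hybrid_merge` are hypothetical names. We also assume that every hit from either pipeline has already been mapped to a (document, page) key; for the textual pipeline, that means mapping OCR chunks back to their source page.

```python
from typing import Iterable, List, NamedTuple


class PageId(NamedTuple):
    """Hypothetical page key: document index first, then page index within it."""
    doc: int
    page: int


def hybrid_merge(text_hits: Iterable[PageId], visual_hits: Iterable[PageId]) -> List[PageId]:
    """Union of both pipelines' results, restored to original page order."""
    union = set(text_hits) | set(visual_hits)  # F(.): union, duplicates collapse
    return sorted(union)  # Sort(.): NamedTuples compare field by field, i.e. (doc, page)
```

Keeping pages in document order, rather than in score order, places adjacent pages next to each other in the generator's context.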

5.2 Multi-Agent Generation with Iterative Reasoning

During generation, we introduce a multi-agent framework consisting of three types of agents: the Seeker Agent, the Inspector Agent, and the Answer Agent. As illustrated in Fig. 3, this framework extracts clues, reflects, and answers in a coarse-to-fine manner from a multi-scale perspective. More details are provided in Appendix D.

Seeker Agent: Hunting for relevant images.

The Seeker Agent is responsible for selecting images from a coarse view and extracting global cues based on the query and the reflection from the Inspector Agent. We made some improvements to ReAct (Yao et al., 2022) to enable better memory management. The action space is defined as the selection of images. Initially, the agent reasons based only on the query $\mathcal{Q}$ and selects the most relevant images $\mathbf{I}_{0}^{s}$ from the candidate images $\mathbf{I}_{0}^{c}$, while the initial memory $\mathcal{M}_{0}$ is empty. After step $t$, the candidate images $\mathbf{I}_{t+1}^{c}$ are the complement of the previously selected images $\mathbf{I}_{t}^{s}$, defined as $\mathbf{I}_{t+1}^{c}=\mathbf{I}_{t}^{c}\setminus\mathbf{I}_{t}^{s}$. The Seeker integrates the reflection $\mathcal{F}_{t-1}$ from the Inspector, which includes an evaluation of the selected images and a more detailed description of the requirements for the images, to further refine the selection $\mathbf{I}_{t}^{s}$ and update the memory $\mathcal{M}_{t+1}$:

$$
\left(\mathbf{I}_{t}^{s},\mathcal{M}_{t+1}\right)=\mathrm{Seeker}\left(\mathbf{I}_{t}^{c},\mathcal{Q},\mathcal{F}_{t-1},\mathcal{M}_{t}\right),
$$

where $\mathcal{M}_{t+1}$ represents the model’s thought content in step $t$ under the ReAct paradigm, maintaining a constant context length. The process continues until the Inspector determines that sufficient information is available to answer the query, or the Seeker concludes that no further relevant images exist among the candidates.

Inspector Agent: Review in detail and Reflect. In baseline scenarios, increasing the top-$\mathcal{K}$ value improves recall@$\mathcal{K}$, but accuracy first rises and then falls. We attribute this to interference from irrelevant images, referred to as noise, during generation. To address this, we use the Inspector to perform a more fine-grained inspection of the images. In each interaction with the Seeker, the Inspector's action space consists of providing feedback or drafting a preliminary answer. At step $t$, the Inspector reviews images at high resolution, denoted as $\Theta(\mathbf{I}_{t}^{s}\cup\mathbf{I}_{t-1}^{r},\mathcal{Q})$, where $\mathbf{I}_{t-1}^{r}$ are the images retained from the previous step and $\mathbf{I}_{t}^{s}$ are the images selected by the Seeker. If the current information is sufficient to answer the query, a draft answer $\hat{\mathcal{A}}$ is provided, along with a reference to the relevant images:

Conversely, if more information is needed, the Inspector offers feedback $\mathcal{F}_{t}$ to guide the Seeker toward better image selection and identifies the images $\mathbf{I}_{t}^{r}$ to retain for further review in the next step $t+1$:

The number of images the Inspector reviews is typically fewer than the Seeker’s, ensuring robustness in reasoning, particularly for Visual Language Models with moderate reasoning abilities.

Answer Agent: Synthesize the final answer. In our framework, the Seeker and Inspector interact continuously, and the Answer Agent provides the answer in the final step. To balance accuracy and efficiency, the Answer Agent verifies the consistency of the Inspector's draft answer $\hat{\mathcal{A}}$. If the reference images match the Inspector's input, the draft answer is accepted as the final answer, $\mathcal{A}=\hat{\mathcal{A}}$. If the reference images are only a subset of the Inspector's input, the Answer Agent checks the consistency between the draft answer $\hat{\mathcal{A}}$ and the reference images before finalizing the answer $\mathcal{A}$:

The Answer Agent utilizes the draft answer as prior knowledge to refine the response from coarse to fine. The consistency check between the Answer Agent and Inspector Agent enhances the depth and comprehensiveness of the final answer.
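The interaction between the three agents can be summarized as a control loop. The sketch below is illustrative only, and several parts of it are assumptions. The agent callables are hypothetical stand-ins for the VLM-backed agents, and `max_steps` is an assumed iteration cap. The fallback used when no draft answer is ever produced is also our own assumption, since the text does not specify one. The loop applies the candidate update $\mathbf{I}_{t+1}^{c}=\mathbf{I}_{t}^{c}\setminus\mathbf{I}_{t}^{s}$, has the Inspector review $\mathbf{I}_{t}^{s}\cup\mathbf{I}_{t-1}^{r}$, and runs the Answer Agent's consistency rule.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Set, Tuple


@dataclass
class InspectorResult:
    draft_answer: Optional[str]                       # draft answer A_hat, if any
    reference: Set[int] = field(default_factory=set)  # images the draft cites
    feedback: str = ""                                # reflection F_t for the Seeker
    retained: Set[int] = field(default_factory=set)   # I_t^r, kept for step t+1


Seeker = Callable[[str, Set[int], str, str], Tuple[Set[int], str]]
Inspector = Callable[[str, Set[int]], InspectorResult]
Answerer = Callable[[str, Optional[str], Set[int]], str]


def vidorag_loop(query: str, candidates: Set[int], seeker: Seeker,
                 inspector: Inspector, answerer: Answerer, max_steps: int = 5) -> str:
    remaining = set(candidates)    # I_0^c
    retained: Set[int] = set()     # nothing retained before the first review
    feedback, memory = "", ""      # no reflection yet; M_0 is empty
    for _ in range(max_steps):
        selected, memory = seeker(query, remaining, feedback, memory)  # I_t^s, M_{t+1}
        if not selected:
            break                  # Seeker finds no further relevant candidates
        remaining -= selected      # I_{t+1}^c = I_t^c \ I_t^s
        reviewed = selected | retained
        result = inspector(query, reviewed)
        if result.draft_answer is not None:
            if result.reference == reviewed:
                return result.draft_answer  # reference matches input: A = A_hat
            # reference is a strict subset: the Answer Agent re-checks against it
            return answerer(query, result.draft_answer, result.reference)
        feedback, retained = result.feedback, result.retained
    # Hypothetical fallback (not specified in the text): answer from retained images.
    return answerer(query, None, retained)


def make_toy_agents(relevant: Set[int]):
    """Deterministic toy agents for illustration; real agents would call a VLM."""
    def seeker(query, remaining, feedback, memory):
        picked = set(sorted(remaining)[:2])            # "select" the two lowest ids
        return picked, f"last picked {sorted(picked)}"  # fixed-size memory
    def inspector(query, reviewed):
        hits = reviewed & relevant
        if hits == relevant:
            return InspectorResult("draft", reference=set(hits))
        return InspectorResult(None, feedback="need more", retained=set(hits))
    def answerer(query, draft, reference):
        return f"final:{draft}:{sorted(reference)}"
    return seeker, inspector, answerer
```

With relevant images `{3, 7}` among ten candidates, the Inspector finally reviews `{3, 6, 7}`. The reference `{3, 7}` is then a strict subset of the reviewed set, so the toy Answer Agent is invoked rather than the draft being accepted directly.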

6 Experiments

6.1 Experimental Settings

Evaluation Metric. For our end-to-end evaluation, we employ a model-based assessment using GPT-4o, which assigns a score from 1 to 5 by comparing the final answer with the reference answer. Answers scoring 4 or above are considered correct, and we report accuracy as the evaluation metric. For retrieval evaluation, we use recall as the metric.
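Both metrics are straightforward to compute once the judge scores and retrieved pages are available. This is a minimal sketch under two assumptions: the GPT-4o judge's 1 to 5 scores have already been collected, and recall is measured per query as the fraction of golden pages that were retrieved. The exact recall variant is an assumption on our part, and the function names are hypothetical.

```python
from typing import Hashable, Iterable, Sequence


def judge_accuracy(scores: Sequence[int], threshold: int = 4) -> float:
    """Share of answers whose 1-5 judge score is at least `threshold`."""
    if not scores:
        raise ValueError("need at least one score")
    if any(not 1 <= s <= 5 for s in scores):
        raise ValueError("judge scores must lie in 1..5")
    return sum(s >= threshold for s in scores) / len(scores)


def page_recall(retrieved: Iterable[Hashable], golden: Iterable[Hashable]) -> float:
    """Fraction of golden (reference) pages that appear among the retrieved pages."""
    golden_set = set(golden)
    if not golden_set:
        raise ValueError("golden set is empty")
    return len(set(retrieved) & golden_set) / len(golden_set)
```

Averaging `page_recall` over all queries gives a dataset-level recall figure.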

Baselines and Oracle. We select Nv-embedV2 (Lee et al., 2024) and ColQwen2 (Faysse et al., 2024) as the retrievers for the TextRAG and VisualRAG baselines, respectively. Following their original settings, we use the top-5 recall results as the generation input, which equals the average length of the dynamic recall results. This ensures a fair comparison and highlights the advantages of our method. The Oracle serves as the upper bound on performance: the model answers based on the golden pages, without retrieval or any other operation.

6.2 Main Results

As shown in Table 2, we conducted experiments on both closed-source and open-source models: GPT-4o, Qwen2.5-7B-Instruct, Qwen2.5-VL-7B-Instruct (Yang et al., 2024), and Llama3.2-Vision-90B-Instruct. Closed-source models generally outperform open-source models. Notably, Qwen2.5-VL-7B shows excellent instruction-following and reasoning capabilities within our framework. In contrast, we found that Llama3.2-Vision requires 90B parameters to follow the same instructions, which may be related to the model's pre-training domain. The results suggest that while API-based models offer strong baseline performance, our method also effectively improves the performance of open-source models, showing promising potential for future applications. To further demonstrate the robustness of the framework, we built a data pipeline that rewrites queries from SlideVQA (Tanaka et al., 2023) so that they suit scenarios involving large corpora. The experimental results are presented in the analysis.

Table 2: Overall generation performance.

| Method | Reasoning Type: Single-hop | Reasoning Type: Multi-hop | Answer Type: Text | Answer Type: Table | Answer Type: Chart | Answer Type: Layout | Overall |
|---|---|---|---|---|---|---|---|
| Llama3.2-Vision-90B-Instruct | | | | | | | |
| Upper Bound | 83.1 | 78.7 | 88.7 | 73.1 | 68.1 | 85.1 | 81.1 |
| TextRAG | 42.6 | 45.7 | 67.6 | 41.8 | 25.4 | 45.9 | 43.9 |
| VisualRAG | 61.8 | 60.5 | 82.5 | 48.5 | 52.2 | 63.9 | 61.2 |
| ViDoRAG (Ours) | 73.3 | 68.5 | 85.1 | 65.6 | 56.1 | 74.7 | 71.2 |
| Qwen2.5-VL-7B-Instruct | | | | | | | |
| Upper Bound | 77.5 | 78.2 | 88.4 | 77.1 | 69.4 | 78.8 | 77.9 |
| TextRAG | 59.6 | 55.7 | 78.7 | 53.8 | 40.7 | 60.5 | 57.6 |
| VisualRAG | 66.8 | 64.3 | 84.9 | 61.1 | 52.8 | 67.5 | 65.7 |
| ViDoRAG (Ours) | 70.4 | 67.3 | 81.9 | 65.2 | 57.7 | 71.3 | 69.1 |
| GPT-4o (Closed-Source Models) | | | | | | | |