A-MEM: Agentic Memory for LLM Agents


Abstract


While large language model (LLM) agents can effectively use external tools for complex real-world tasks, they require memory systems to leverage historical experiences. Current memory systems enable basic storage and retrieval but lack sophisticated memory organization, despite recent attempts to incorporate graph databases. Moreover, these systems’ fixed operations and structures limit their adaptability across diverse tasks. To address this limitation, this paper proposes a novel agentic memory system for LLM agents that can dynamically organize memories in an agentic way. Following the basic principles of the Zettelkasten method, we designed our memory system to create interconnected knowledge networks through dynamic indexing and linking. When a new memory is added, we generate a comprehensive note containing multiple structured attributes, including contextual descriptions, keywords, and tags. The system then analyzes historical memories to identify relevant connections, establishing links where meaningful similarities exist. Additionally, this process enables memory evolution: as new memories are integrated, they can trigger updates to the contextual representations and attributes of existing historical memories, allowing the memory network to continuously refine its understanding. Our approach combines the structured organization principles of Zettelkasten with the flexibility of agent-driven decision making, allowing for more adaptive and context-aware memory management. Empirical experiments on six foundation models show substantial improvements over existing SOTA baselines. The source code for evaluating performance is available at https://github.com/WujiangXu/AgenticMemory, while the source code of the agentic memory system is available at https://github.com/agiresearch/A-mem.

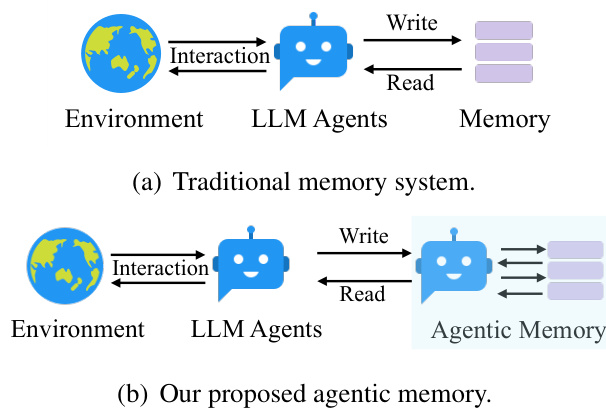

Figure 1: Traditional memory systems require predefined memory access patterns specified in the workflow, limiting their adaptability to diverse scenarios. In contrast, our A-MEM enhances the flexibility of LLM agents by enabling dynamic memory operations.

1 Introduction


Large Language Model (LLM) agents have demonstrated remarkable capabilities in various tasks, with recent advances enabling them to interact with environments, execute tasks, and make decisions autonomously (Mei et al., 2024; Wang et al., 2024; Deng et al., 2023). They integrate LLMs with external tools and carefully designed workflows to improve reasoning and planning abilities. Although LLM agents have strong reasoning performance, they still need a memory system to support long-term interaction with the external environment (Weng, 2023).

Existing memory systems (Packer et al., 2023; Zhong et al., 2024; Roucher et al., 2025; Liu et al., 2024) for LLM agents provide basic memory storage functionality. These systems require agent developers to predefine memory storage structures, specify storage points within the workflow, and establish retrieval timing. Meanwhile, to improve structured memory organization, Mem0 (Dev and Taranjeet, 2024), following the principles of RAG (Edge et al., 2024; Lewis et al., 2020; Shi et al., 2024), incorporates graph databases into its storage and retrieval processes. While graph databases provide structured organization for memory systems, their reliance on predefined schemas and relationships fundamentally limits their adaptability. This limitation manifests clearly in practical scenarios: when an agent learns a novel mathematical solution, current systems can only categorize and link this information within their preset framework, and cannot forge new connections or develop new organizational patterns as knowledge evolves. Such rigid structures, coupled with fixed agent workflows, severely restrict these systems’ ability to generalize across new environments and maintain effectiveness in long-term interactions. The challenge becomes increasingly critical as LLM agents tackle more complex, open-ended tasks, where flexible knowledge organization and continuous adaptation are essential. Designing a flexible and universal memory system that supports LLM agents’ long-term interactions therefore remains a crucial challenge.

In this paper, we introduce a novel agentic memory system for LLM agents, named A-MEM, that enables dynamic memory structuring without relying on static, predetermined memory operations. Our approach draws inspiration from the Zettelkasten method (Kadavy, 2021; Ahrens, 2017), a knowledge management system that creates interconnected information networks through atomic notes and flexible linking mechanisms. Our agentic memory architecture lets LLM agents manage their memories autonomously and flexibly. For each new memory, we construct a comprehensive note that integrates multiple representations: structured textual attributes (keywords, tags, and a contextual description) and an embedding vector for similarity matching. A-MEM then analyzes the historical memory repository to establish meaningful connections based on semantic similarities and shared attributes. This integration process not only creates new links but also enables dynamic evolution: when new memories are incorporated, they can trigger updates to the contextual representations of existing memories, allowing the memory network as a whole to continuously refine and deepen its understanding over time. Our contributions are summarized as follows:

• We present A-MEM, an agentic memory system for LLM agents that enables autonomous generation of contextual descriptions, dynamic establishment of memory connections, and intelligent evolution of existing memories based on new experiences. This system equips LLM agents with long-term interaction capabilities without requiring predetermined memory operations.


• We design an agentic memory update mechanism where new memories automatically trigger two key operations: (1) Link Generation - automatically establishing connections between memories by identifying shared attributes and similar contextual descriptions, and (2) Memory Evolution - enabling existing memories to dynamically evolve as new experiences are analyzed, leading to the emergence of higher-order patterns and attributes.


• We conduct comprehensive evaluations of our system on a long-term conversational dataset, comparing performance across six foundation models with six distinct evaluation metrics and demonstrating significant improvements. Moreover, we provide t-SNE visualizations to illustrate the structured organization of our agentic memory system.

2 Related Work


2.1 Memory for LLM Agents


Prior work on LLM agent memory systems has explored various mechanisms for memory management and utilization (Mei et al., 2024; Liu et al., 2024; Dev and Taranjeet, 2024; Zhong et al., 2024). Some approaches store complete interaction histories, maintaining comprehensive historical records through dense retrieval models (Zhong et al., 2024) or read-write memory structures (Modarressi et al., 2023). Moreover, MemGPT (Packer et al., 2023) leverages cache-like architectures to prioritize recent information. Similarly, SCM (Wang et al., 2023a) proposes a Self-Controlled Memory framework that enhances LLMs’ capability to maintain long-term memory through a memory stream and controller mechanism. However, these approaches face significant limitations in handling diverse real-world tasks. While they can provide basic memory functionality, their operations are typically constrained by predefined structures and fixed workflows. These constraints stem from their reliance on rigid operational patterns, particularly in memory writing and retrieval processes. Such inflexibility leads to poor generalization in new environments and limited effectiveness in long-term interactions. Therefore, designing a flexible and universal memory system that supports agents’ long-term interactions remains a crucial challenge.

2.2 Retrieval-Augmented Generation 3.1 Note Construction

2.2 检索增强生成 (Retrieval-Augmented Generation) 3.1 笔记构建

Retrieval-Augmented Generation (RAG) has emerged as a powerful approach to enhance LLMs by incorporating external knowledge sources (Lewis et al., 2020; Borgeaud et al., 2022; Gao et al., 2023). The standard RAG (Yu et al., $2023\mathrm{a}$ ; Wang et al., 2023c) process involves indexing documents into chunks, retrieving relevant chunks based on semantic similarity, and augmenting the LLM’s prompt with this retrieved context for generation. Advanced RAG systems (Lin et al., 2023; Ilin, 2023) have evolved to include sophisticated pre-retrieval and post-retrieval optimization s. Building upon these foundations, recent researches has introduced agentic RAG systems that demonstrate more autonomous and adaptive behaviors in the retrieval process. These systems can dynamically determine when and what to retrieve (Asai et al., 2023; Jiang et al., 2023), generate hypothet- ical responses to guide retrieval, and iterative ly refine their search strategies based on intermediate results (Trivedi et al., 2022; Shao et al., 2023).

检索增强生成 (Retrieval-Augmented Generation, RAG) 已成为通过整合外部知识源来增强大语言模型 (Lewis et al., 2020; Borgeaud et al., 2022; Gao et al., 2023) 的强大方法。标准的 RAG (Yu et al., $2023\mathrm{a}$; Wang et al., 2023c) 过程包括将文档索引为块、基于语义相似性检索相关块,并将检索到的上下文增强到大语言模型的提示中以进行生成。高级 RAG 系统 (Lin et al., 2023; Ilin, 2023) 已经发展到包括复杂的检索前和检索后优化。在这些基础上,最近的研究引入了更具自主性和适应性的 AI 智能体 RAG 系统。这些系统可以动态决定何时检索和检索什么 (Asai et al., 2023; Jiang et al., 2023),生成假设性响应以指导检索,并根据中间结果迭代优化其搜索策略 (Trivedi et al., 2022; Shao et al., 2023)。

However, while agentic RAG approaches demonstrate agency in the retrieval phase by autonomously deciding when and what to retrieve (Asai et al., 2023; Jiang et al., 2023; Yu et al., 2023b), our agentic memory system exhibits agency at a more fundamental level through the autonomous evolution of its memory structure. Inspired by the Ze ttel k as ten method, our system allows memories to actively generate their own contextual descriptions, form meaningful connections with related memories, and evolve both their content and relationships as new experiences emerge. This fundamental distinction in agency between retrieval versus storage and evolution distinguishes our approach from agentic RAG systems, which maintain static knowledge bases despite their sophi stica ted retrieval mechanisms.

然而,尽管智能化的 RAG 方法通过在检索阶段自主决定何时检索以及检索什么来展示其智能性 (Asai et al., 2023; Jiang et al., 2023; Yu et al., 2023b),我们的智能记忆系统通过其记忆结构的自主进化在更基础的层面上展现了智能性。受 Zettelkasten 方法的启发,我们的系统允许记忆主动生成自己的上下文描述,与相关记忆形成有意义的连接,并在新经验出现时进化其内容和关系。在检索与存储及进化之间的智能性根本区别,使我们的方法与智能化的 RAG 系统区分开来,后者尽管具有复杂的检索机制,但其知识库是静态的。

3 Method o lod gy

3 方法论

Our proposed agentic memory system draws inspiration from the Ze ttel k as ten method, implementing a dynamic and self-evolving memory system that enables LLM agents to maintain long-term mem- ory without predetermined operations. The system’s design emphasizes atomic note-taking, flexible linking mechanisms, and continuous evolution of knowledge structures.

我们提出的智能体记忆系统借鉴了 Zettelkasten 方法的灵感,实现了一个动态且自我进化的记忆系统,使大语言模型智能体能够在没有预设操作的情况下保持长期记忆。该系统的设计强调原子化的笔记记录、灵活的链接机制以及知识结构的持续进化。

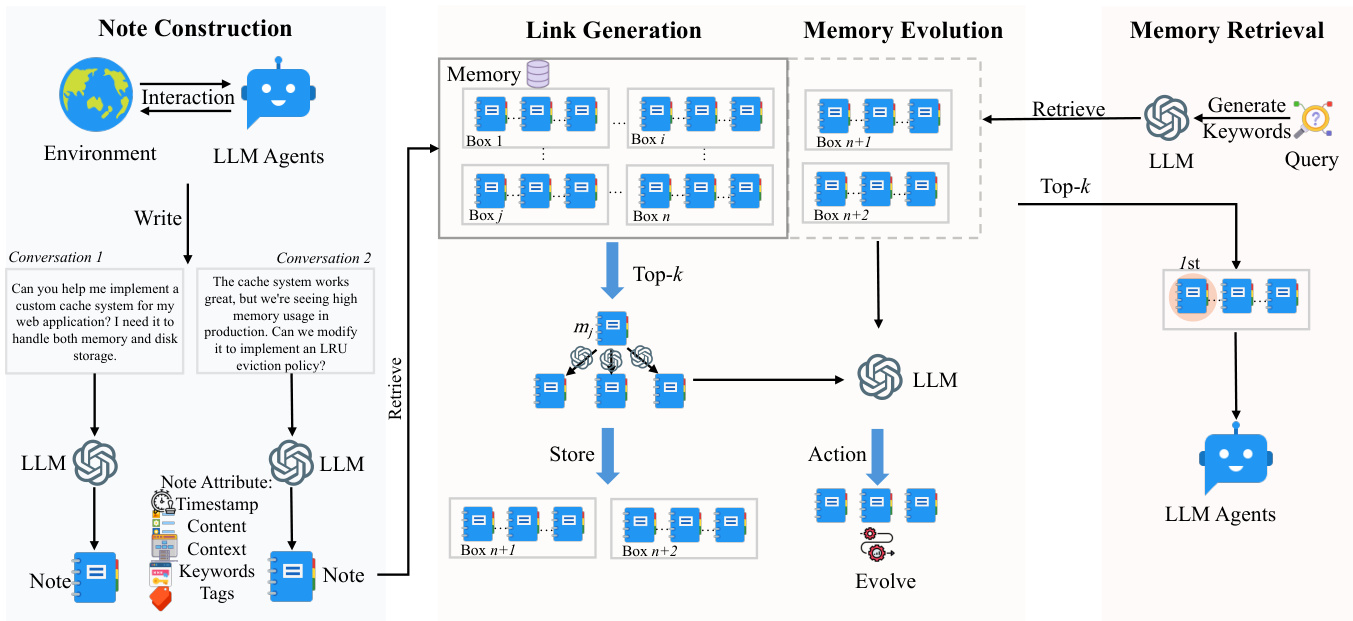

Building upon the Ze ttel k as ten method’s principles of atomic note-taking and flexible organization, we introduce an LLM-driven approach to memory note construction. When an agent interacts with its environment, we construct structured memory notes that capture both explicit information and LLMgenerated contextual understanding. Each memory note $m_{i}$ in our collection $\mathcal{M}={m_{1},m_{2},...,m_{N}}$ is represented as:

where $c_{i}$ represents the original interaction content, $t_{i}$ is the timestamp of the interaction, $K_{i}$ denotes LLM-generated keywords that capture key concepts, $G_{i}$ contains LLM-generated tags for categorization, $X_{i}$ represents the LLM-generated contextual description that provides rich semantic understanding, and $L_{i}$ maintains the set of linked memories that share semantic relationships. To enrich each memory note with meaningful context beyond its basic content and timestamp, we leverage an LLM to analyze the interaction and generate these semantic components. The note construction process prompts the LLM with a carefully designed template $P_{s1}$:

$$K_{i},\,G_{i},\,X_{i}\leftarrow\mathrm{LLM}\left(c_{i}\,\Vert\,t_{i}\,\Vert\,P_{s1}\right)$$

where $\Vert$ denotes concatenation.
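To make the note structure concrete, here is a minimal Python sketch of a memory note $m_i$ and of the construction step in which an LLM fills in $K_i$, $G_i$, and $X_i$ from $c_i$, $t_i$, and the template $P_{s1}$. It is an illustration under stated assumptions, not the A-MEM codebase: the field names, the prompt text standing in for $P_{s1}$, and the `llm` callable (assumed to return already-parsed structured output) are all hypothetical, and a stub replaces the real model call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical LLM interface: prompt in, parsed structured output out.
LLMFn = Callable[[str], Dict[str, object]]

# Hypothetical stand-in for the prompt template P_s1.
P_S1 = (
    "Analyze the interaction below. Return JSON with fields "
    "'keywords' (list), 'tags' (list), and 'context' (one sentence).\n"
)


@dataclass
class MemoryNote:
    content: str                                        # c_i: raw interaction
    timestamp: str                                      # t_i
    keywords: List[str] = field(default_factory=list)   # K_i (LLM-generated)
    tags: List[str] = field(default_factory=list)       # G_i (LLM-generated)
    context: str = ""                                   # X_i (LLM-generated)
    embedding: Optional[List[float]] = None             # e_i, computed separately
    links: List[int] = field(default_factory=list)      # L_i: ids of linked notes


def construct_note(content: str, timestamp: str, llm: LLMFn) -> MemoryNote:
    """Fill K_i, G_i, X_i by prompting the LLM with c_i, t_i, and P_s1."""
    prompt = f"{P_S1}Content: {content}\nTimestamp: {timestamp}"
    attrs = llm(prompt)
    return MemoryNote(
        content=content,
        timestamp=timestamp,
        keywords=[str(k) for k in attrs.get("keywords", [])],
        tags=[str(g) for g in attrs.get("tags", [])],
        context=str(attrs.get("context", "")),
    )


# Usage with a stub in place of a real model call.
def stub_llm(prompt: str) -> Dict[str, object]:
    return {"keywords": ["hiking"], "tags": ["hobby"], "context": "User enjoys hiking."}


note = construct_note("I went hiking last weekend.", "2023-05-08", stub_llm)
print(note.keywords, note.tags)  # ['hiking'] ['hobby']
```

Keeping the LLM behind a plain callable makes the construction step testable offline; in the setup described in Section 4.2, that call would go through a structured-output API instead.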

Following the Zettelkasten principle of atomicity, each note captures a single, self-contained unit of knowledge. To enable efficient retrieval and linking, we compute a dense vector representation via a text encoder (Reimers and Gurevych, 2019) that encapsulates all textual components of the note:

$$e_{i}=f_{\mathrm{enc}}\left[\mathrm{concat}\left(c_{i},K_{i},G_{i},X_{i}\right)\right]$$

where $f_{\mathrm{enc}}$ denotes the text encoder.

By using LLMs to generate enriched components, we enable autonomous extraction of implicit knowledge from raw interactions. The multi-faceted note structure $(K_{i},G_{i},X_{i})$ creates rich representations that capture different aspects of the memory, facilitating nuanced organization and retrieval. Additionally, combining LLM-generated semantic components with dense vector representations provides both human-interpretable context and computationally efficient similarity matching.
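A sketch of the embedding step $e_i = f_{\mathrm{enc}}[\mathrm{concat}(c_i, K_i, G_i, X_i)]$ follows. The experiments use the Sentence-BERT model all-MiniLM-L6-v2, which outputs 384-dimensional vectors; to keep the example dependency-free, `toy_encode` below is a hypothetical hashed bag-of-words stand-in and is not a semantic encoder. With the sentence-transformers package installed, `SentenceTransformer("all-MiniLM-L6-v2").encode(text)` would take its place.

```python
import hashlib
import math
from typing import List

DIM = 64  # toy size; all-MiniLM-L6-v2 itself outputs 384-d vectors


def toy_encode(text: str, dim: int = DIM) -> List[float]:
    """Hypothetical f_enc: hash each token into a bucket, then L2-normalize."""
    vec = [0.0] * dim
    for token in text.lower().split():
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec


def embed_note(content: str, keywords: List[str], tags: List[str], context: str) -> List[float]:
    """e_i = f_enc(concat(c_i, K_i, G_i, X_i)): one vector over all text fields."""
    text = " ".join([content, " ".join(keywords), " ".join(tags), context])
    return toy_encode(text)


e = embed_note("I went hiking last weekend.", ["hiking"], ["hobby"], "User enjoys hiking.")
print(len(e))  # 64
```

Embedding the concatenation, rather than the raw content alone, means the LLM-generated keywords, tags, and context also shape which notes end up close together in vector space.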

3.2 Link Generation


Our system implements an autonomous link generation mechanism that enables new memory notes to form meaningful connections without predefined rules. When the constructed memory note $m_{n}$ is added to the system, we first leverage its semantic embedding for similarity-based retrieval. For each existing memory note $m_{j}\in\mathcal{M}$, we compute a cosine similarity score:

$$s_{n,j}=\frac{e_{n}\cdot e_{j}}{\lVert e_{n}\rVert\,\lVert e_{j}\rVert}$$

Figure 2: Our A-MEM architecture comprises three integral parts in memory storage. During note construction, the system processes new interaction memories and stores them as notes with multiple attributes. The link generation process first retrieves the most relevant historical memories and then decides whether to establish connections between them. The concept of a 'box' describes how related memories become interconnected through their similar contextual descriptions, analogous to the Zettelkasten method. However, our approach allows individual memories to exist simultaneously within multiple different boxes. In the memory retrieval stage, the system decomposes queries into constituent keywords and uses these keywords to search through the memory network.

The system then identifies the top-$k$ most relevant memories:

$$\mathcal{M}_{\mathrm{near}}^{n}=\{m_{j}\mid\mathrm{rank}(s_{n,j})\le k,\ m_{j}\in\mathcal{M}\}$$
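The retrieval half of link generation (cosine scoring of $m_n$ against every stored note, then keeping the top-$k$ as $\mathcal{M}_{\mathrm{near}}^{n}$) can be sketched in a few lines of plain Python. This assumes embeddings are stored in a dict keyed by a hypothetical integer note id; ties are broken by id so the result is deterministic. A production system would typically use a vector index rather than this linear scan.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """s = (a . b) / (|a| |b|); returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def top_k_neighbors(e_new: Sequence[float], memory: Dict[int, Sequence[float]], k: int) -> List[int]:
    """Ids of the k stored notes with the highest cosine similarity to e_new."""
    scored = [(cosine(e_new, e_j), j) for j, e_j in memory.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by id
    return [j for _, j in scored[:k]]


memory = {0: [1.0, 0.0], 1: [0.7, 0.7], 2: [0.0, 1.0]}
print(top_k_neighbors([1.0, 0.1], memory, k=2))  # [0, 1]
```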

Based on these nearest candidate memories, we prompt the LLM to analyze potential connections arising from their shared attributes. Formally, the link set of memory $m_{n}$ is updated as:

$$L_{n}\leftarrow\mathrm{LLM}\left(m_{n}\,\Vert\,\mathcal{M}_{\mathrm{near}}^{n}\,\Vert\,P_{s2}\right)$$

where $P_{s2}$ is the link-generation prompt template.

Each generated link set is structured as $L_{i}=\{m_{i},\ldots,m_{k}\}$. By using embedding-based retrieval as an initial filter, we enable efficient scalability while maintaining semantic relevance: A-MEM can quickly identify potential connections even in large memory collections without asking the LLM to compare the new note against every stored memory. More importantly, the LLM-driven analysis allows for a nuanced understanding of relationships that goes beyond simple similarity metrics.

The language model can identify subtle patterns, causal relationships, and conceptual connections that might not be apparent from embedding similarity alone. In this way, we implement the Zettelkasten principle of flexible linking while leveraging modern language models. The resulting network emerges organically from memory content and context, enabling natural knowledge organization.
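Given the retrieved candidates, the LLM then decides which ones become links of $m_n$. The sketch below assumes a hypothetical `llm_select` callable that returns the ids it judges related; it discards any id outside the candidate set (and any duplicate), a cheap guard against the model naming memories it was never shown. The word-overlap stub is only a placeholder for the LLM's judgment, which in A-MEM is meant to capture relations beyond lexical or embedding similarity.

```python
from typing import Callable, Dict, List

# Hypothetical: (new note text, {candidate id: text}) -> ids the LLM chose to link.
LinkFn = Callable[[str, Dict[int, str]], List[int]]


def generate_links(new_id: int, new_text: str, candidates: Dict[int, str],
                   links: Dict[int, List[int]], llm_select: LinkFn) -> List[int]:
    """Set L_n to the LLM-selected subset of the retrieved candidates."""
    chosen: List[int] = []
    for j in llm_select(new_text, candidates):
        if j in candidates and j not in chosen:  # drop unknown or duplicate ids
            chosen.append(j)
    links[new_id] = chosen
    return chosen


def overlap_stub(new_text: str, candidates: Dict[int, str]) -> List[int]:
    """Placeholder for the LLM: link when the texts share any word."""
    new_words = set(new_text.lower().split())
    return [j for j, text in candidates.items() if new_words & set(text.lower().split())]


links: Dict[int, List[int]] = {}
result = generate_links(3, "hiking trip plans",
                        {0: "hiking gear list", 1: "tax forms"}, links, overlap_stub)
print(result, links)  # [0] {3: [0]}
```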

3.3 Memory Evolution


After creating links for the new memory, A-MEM evolves the retrieved memories based on their textual information and their relationships with the new memory. For each memory $m_{j}$ in the nearest-neighbor set $\mathcal{M}_{\mathrm{near}}^{n}$, the system determines whether to update its context, keywords, and tags. This evolution process can be formally expressed as:

$$m_{j}^{*}\leftarrow\mathrm{LLM}\left(m_{n}\,\Vert\,\mathcal{M}_{\mathrm{near}}^{n}\setminus m_{j}\,\Vert\,m_{j}\,\Vert\,P_{s3}\right)$$

where $P_{s3}$ is the memory-evolution prompt template.

The evolved memory $m_{j}^{*}$ then replaces the original memory $m_{j}$ in the memory set $\mathcal{M}$ . This evolutionary approach enables continuous updates and new connections, mimicking human learning processes. As the system processes more memories over time, it develops increasingly sophisticated knowledge structures, discovering higher-order patterns and concepts across multiple memories. This creates a foundation for autonomous memory learning where knowledge organization becomes progressively richer through the ongoing interaction between new experiences and existing memories.

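The evolution step can likewise be sketched as a loop over $\mathcal{M}_{\mathrm{near}}^{n}$: for each neighbor $m_j$, the LLM sees the new note, the other neighbors, and $m_j$ itself, and either proposes new context, keywords, and tags or leaves $m_j$ alone; the result $m_j^*$ overwrites $m_j$. `llm_evolve` and the tag-overlap stub are hypothetical stand-ins, and restricting updates to those three fields is an assumption that mirrors the description above.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List, Optional


@dataclass
class Note:
    content: str
    keywords: List[str]
    tags: List[str]
    context: str


# Hypothetical: (new note, m_j, other neighbors) -> attribute updates, or None.
EvolveFn = Callable[[Note, Note, List[Note]], Optional[dict]]
EVOLVABLE = {"context", "keywords", "tags"}  # the raw content is never rewritten


def evolve_neighbors(new: Note, neighbor_ids: List[int],
                     memory: Dict[int, Note], llm_evolve: EvolveFn) -> List[int]:
    """Replace each neighbor m_j with m_j* when the LLM proposes an update."""
    updated = []
    for j in neighbor_ids:
        others = [memory[i] for i in neighbor_ids if i != j]  # M_near minus m_j
        changes = llm_evolve(new, memory[j], others) or {}
        changes = {k: v for k, v in changes.items() if k in EVOLVABLE}
        if changes:
            memory[j] = replace(memory[j], **changes)  # m_j* overwrites m_j
            updated.append(j)
    return updated


def stub_evolve(new: Note, old: Note, others: List[Note]) -> Optional[dict]:
    """Placeholder for the LLM: merge keywords when the notes share a tag."""
    if set(new.tags) & set(old.tags):
        merged = old.keywords + [k for k in new.keywords if k not in old.keywords]
        return {"keywords": merged, "context": f"{old.context} Related: {new.context}"}
    return None


memory = {
    0: Note("Bought hiking boots", ["boots"], ["hiking"], "Gear purchase."),
    1: Note("Filed taxes", ["taxes"], ["finance"], "Paperwork."),
}
new = Note("Planning a mountain trip", ["mountain"], ["hiking"], "Trip planning.")
print(evolve_neighbors(new, [0, 1], memory, stub_evolve))  # [0]
print(memory[0].keywords)  # ['boots', 'mountain']
```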

3.4 Retrieve Relevant Memory

In each interaction, A-MEM performs context-aware memory retrieval to provide the agent with relevant historical information. Given a query text $q$ from the current interaction, we first compute its dense vector representation using the same text encoder used for memory notes:

$$e_{q}=f_{\mathrm{enc}}(q)$$

The system then computes similarity scores between the query embedding and all existing memory notes in $\mathcal{M}$ using cosine similarity:

$$s_{q,i}=\frac{e_{q}\cdot e_{i}}{\lVert e_{q}\rVert\,\lVert e_{i}\rVert},\qquad m_{i}\in\mathcal{M}$$

Then we retrieve the $k$ most relevant memories from the historical memory storage to construct a contextually appropriate prompt.

These retrieved memories provide relevant historical context that helps the agent better understand and respond to the current interaction. The retrieved context enriches the agent’s reasoning process by connecting the current interaction with related past experiences and knowledge stored in the memory system.

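Query-time retrieval reuses the same pieces: encode $q$ with the note encoder, score every note by cosine similarity, and place the top-$k$ notes into the prompt. The sketch assumes notes and their embeddings are kept in two dicts keyed by a hypothetical note id, and the prompt layout is an illustrative assumption, not the template from Appendix C. `stub_encode` is a two-word toy encoder used only so the example runs offline.

```python
import math
from typing import Callable, Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def build_prompt(query: str, encode: Callable[[str], Sequence[float]],
                 notes: Dict[int, str], embeddings: Dict[int, Sequence[float]], k: int) -> str:
    """Encode q, rank all notes by cosine similarity, and prepend the top-k."""
    e_q = encode(query)  # must be the same encoder used for the notes
    ranked = sorted(embeddings, key=lambda i: (-cosine(e_q, embeddings[i]), i))
    memories = "\n".join(f"- {notes[i]}" for i in ranked[:k])
    return f"Relevant memories:\n{memories}\n\nQuestion: {query}"


def stub_encode(text: str) -> List[float]:
    """Toy 2-d encoder: counts of the words 'hiking' and 'tax'."""
    words = text.lower().split()
    return [float(words.count("hiking")), float(words.count("tax"))]


notes = {0: "hiking boots bought in May", 1: "tax return filed in April"}
embeddings = {i: stub_encode(t) for i, t in notes.items()}
print(build_prompt("when did I go hiking", stub_encode, notes, embeddings, k=1))
```

Because only $k$ notes reach the prompt, the prompt length stays roughly constant as the memory grows, which is the property the token-length column in the experiments reflects.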

4 Experiment


4.1 Dataset and Evaluation


To evaluate the effectiveness of A-MEM in long-term conversations, we use the LoCoMo dataset (Maharana et al., 2024), which contains significantly longer dialogues than existing conversational datasets (Xu, 2021; Jang et al., 2023). While previous datasets contain dialogues of around 1K tokens over 4-5 sessions, LoCoMo features much longer conversations, averaging 9K tokens and spanning up to 35 sessions, which makes it particularly suitable for evaluating models’ ability to handle long-range dependencies and maintain consistency over extended conversations. The LoCoMo dataset comprises diverse question types designed to comprehensively evaluate different aspects of model understanding: (1) single-hop questions answerable from a single session; (2) multi-hop questions requiring information synthesis across sessions; (3) temporal reasoning questions testing understanding of time-related information; (4) open-domain knowledge questions requiring integration of conversation context with external knowledge; and (5) adversarial questions assessing models’ ability to identify unanswerable queries. In total, LoCoMo contains 7,512 question-answer pairs across these categories.

For evaluation, we employ two primary metrics: the F1 score, which assesses answer accuracy by balancing precision and recall, and BLEU-1 (Papineni et al., 2002), which evaluates generated response quality by measuring word overlap with ground-truth responses. We also report the average token length used to answer one question. In addition, Appendix B.2 reports results on four extra metrics: ROUGE-L, ROUGE-2, METEOR, and SBERT Similarity.
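For readers reproducing the two primary metrics, a minimal sketch of token-level F1 and BLEU-1 (clipped unigram precision times BLEU's brevity penalty, single reference) follows. Tokenization here is plain lowercase whitespace splitting, which is an assumption: the LoCoMo evaluation scripts may normalize punctuation and articles differently, so absolute numbers from this sketch need not match the tables.

```python
import math
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return text.lower().split()  # assumption: no punctuation/article stripping


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (multiset overlap)."""
    pred, ref = tokenize(prediction), tokenize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def bleu1(prediction: str, reference: str) -> float:
    """Clipped unigram precision times the brevity penalty (one reference)."""
    pred, ref = tokenize(prediction), tokenize(reference)
    if not pred:
        return 0.0
    precision = sum((Counter(pred) & Counter(ref)).values()) / len(pred)
    bp = 1.0 if len(pred) > len(ref) else math.exp(1 - len(ref) / len(pred))
    return bp * precision


print(round(token_f1("in May 2023", "May 2023"), 3))  # 0.8
print(round(bleu1("in May 2023", "May 2023"), 3))     # 0.667
```

The `Counter` intersection takes the minimum count per token, which gives both the multiset overlap for F1 and the clipped counts for BLEU-1.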

4.2 Implementation Details


For all baselines and our proposed method, we maintain consistency by employing identical system prompts, as detailed in Appendix C. The Qwen-1.5B/3B and Llama 3.2 1B/3B models are deployed locally using Ollama¹, with LiteLLM² managing structured output generation. For GPT models, we use the official structured output API. In our memory retrieval process, we primarily use $k{=}10$ for top-$k$ memory selection to maintain computational efficiency, while adjusting this parameter for specific categories to optimize performance; the detailed configurations of $k$ can be found in Appendix B.4. For text embedding, we use the all-MiniLM-L6-v2 model across all experiments.

4.3 Baselines


LoCoMo (Maharana et al., 2024) takes a direct approach by leveraging foundation models without memory mechanisms for question answering tasks. For each query, it incorporates the complete preceding conversation and questions into the prompt, evaluating the model’s reasoning capabilities.


ReadAgent (Lee et al., 2024) tackles long-context document processing through a sophisticated three-step methodology: it begins with episode pagination to segment content into manageable chunks, continues with memory gisting to distill each page into a concise memory representation, and concludes with interactive look-up to retrieve pertinent information as needed.

Table 1: Experimental results on the LoCoMo dataset for QA tasks across five categories (Single Hop, Multi Hop, Temporal, Open Domain, and Adversarial) using different methods. Results are reported as F1 and BLEU-1 (%) scores. The best performance is marked in bold, and our proposed method A-MEM (highlighted in gray) demonstrates competitive performance across six foundation language models.

| Model | Method | Single Hop F1 | Single Hop BLEU-1 | Multi Hop F1 | Multi Hop BLEU-1 | Temporal F1 | Temporal BLEU-1 | Open Domain F1 | Open Domain BLEU-1 | Adversarial F1 | Adversarial BLEU-1 | Token Length |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GPT-4o-mini | LoCoMo | 25.02 | 19.75 | 18.41 | 14.77 | 12.04 | 11.16 | 40.36 | 29.05 | 69.23 | 68.75 | 16,910 |
| | ReadAgent | 9.15 | 6.48 | 12.60 | 8.87 | 5.31 | 5.12 | 9.67 | 7.66 | 9.81 | 9.02 | 643 |
| | MemoryBank | 5.00 | 4.77 | 9.68 | 6.99 | 5.56 | 5.94 | 6.61 | 5.16 | 7.36 | 6.48 | 432 |
| | MemGPT | 26.65 | 17.72 | 25.52 | 19.44 | 9.15 | 7.44 | 41.04 | 34.34 | 43.29 | 42.73 | 16,977 |
| | A-MEM | 27.02 | 20.09 | 45.85 | 36.67 | 12.14 | 12.00 | 44.65 | 37.06 | 50.03 | 49.47 | 2,520 |
| GPT-4o | LoCoMo | 28.00 | 18.47 | 9.09 | 5.78 | 16.47 | 14.80 | 61.56 | 54.19 | 52.61 | 51.13 | 16,910 |
| | ReadAgent | 14.61 | 9.95 | 4.16 | 3.19 | 8.84 | 8.37 | 12.46 | 10.29 | 6.81 | 6.13 | 805 |
| | MemoryBank | 6.49 | 4.69 | 2.47 | 2.43 | 6.43 | 5.30 | 8.28 | 7.10 | 4.42 | 3.67 | 569 |
| | MemGPT | 30.36 | 22.83 | 17.29 | 13.18 | 12.24 | 11.87 | 60.16 | 53.35 | 34.96 | 34.25 | 16,987 |
| | A-MEM | 32.86 | 23.76 | 39.41 | 31.23 | 17.10 | 15.84 | 48.43 | 42.97 | 36.35 | 35.53 | 1,216 |
| Qwen2.5 1.5B | LoCoMo | 9.05 | 6.55 | 4.25 | 4.04 | 9.91 | 8.50 | 11.15 | 8.67 | 40.38 | 40.23 | 16,910 |
| | ReadAgent | 6.61 | 4.93 | 2.55 | 2.51 | 5.31 | 12.24 | 10.13 | 7.54 | 5.42 | 27.32 | 752 |
| | MemoryBank | 11.14 | 8.25 | 4.46 | 2.87 | 8.05 | 6.21 | 13.42 | 11.01 | 36.76 | 34.00 | 284 |
| | MemGPT | 10.44 | 7.61 | 4.21 | 3.89 | 13.42 | 11.64 | 9.56 | 7.34 | 31.51 | 28.90 | 16,953 |
| | A-MEM | 18.23 | 11.94 | 24.32 | 19.74 | 16.48 | 14.31 | 23.63 | 19.23 | 46.00 | 43.26 | 1,300 |
| Qwen2.5 3B | LoCoMo | 4.61 | 4.29 | 3.11 | 2.71 | 4.55 | 5.97 | 7.03 | 5.69 | 16.95 | 14.81 | 16,910 |
| | ReadAgent | 2.47 | 1.78 | 3.01 | 3.01 | 5.57 | 5.22 | 3.25 | 2.51 | 15.78 | 14.01 | 776 |
| | MemoryBank | 3.60 | 3.39 | 1.72 | 1.97 | 6.63 | 6.58 | 4.11 | 3.32 | 13.07 | 10.30 | 298 |
| | MemGPT | 5.07 | 4.31 | 2.94 | 2.95 | 7.04 | 7.10 | 7.26 | 5.52 | 14.47 | 12.39 | 16,961 |
| | A-MEM | 12.57 | 9.01 | 27.59 | 25.07 | 7.12 | 7.28 | 17.23 | 13.12 | 27.91 | 25.15 | 1,137 |
| Llama 3.2 1B | LoCoMo | 11.25 | 9.18 | 7.38 | 6.82 | 11.90 | 10.38 | 12.86 | 10.50 | 51.89 | 48.27 | 16,910 |
| | ReadAgent | 5.96 | 5.12 | 1.93 | 2.30 | 12.46 | 11.17 | 7.75 | 6.03 | 44.64 | 40.15 | 665 |
| | MemoryBank | 13.18 | 10.03 | 7.61 | 6.27 | 15.78 | 12.94 | 17.30 | 14.03 | 52.61 | 47.53 | 274 |
| | MemGPT | 9.19 | 6.96 | 4.02 | 4.79 | 11.14 | 8.24 | 10.16 | 7.68 | 49.75 | 45.11 | 16,950 |
| | A-MEM | 19.06 | 11.71 | 17.80 | 10.28 | 17.55 | 14.67 | 28.51 | 24.13 | 58.81 | 54.28 | 1,376 |
| Llama 3.2 3B | ReadAgent | 2.47 | 1.78 | 3.01 | 3.01 | 5.57 | 5.22 | 3.25 | 2.51 | 15.78 | 14.01 | 461 |
| | MemoryBank | 6.19 | 4.47 | 3.49 | 3.13 | 4.07 | 4.57 | 7.61 | 6.03 | 18.65 | 17.05 | 263 |
| | MemGPT | 5.32 | 3.99 | | | | | | | | | |

MemoryBank (Zhong et al., 2024) introduces an innovative memory management system that maintains and efficiently retrieves historical interactions. The system features a dynamic memory updating mechanism based on the Ebbinghaus Forgetting Curve theory, which intelligently adjusts memory strength according to time and significance. Additionally, it incorporates a user portrait building system that progressively refines its understanding of user personality through continuous interaction analysis.

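The Ebbinghaus-style decay that MemoryBank builds on is commonly modeled as exponential retention, $R = e^{-t/S}$, where $t$ is the time since a memory was last recalled and $S$ is its strength. The sketch below illustrates that idea only; the initial strength of 1, the +1 strength increment on each recall, and the forgetting threshold are assumptions for illustration rather than MemoryBank's exact update rules.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class MemoryItem:
    text: str
    strength: float = 1.0       # S (assumed initial value)
    last_recalled: float = 0.0  # time of last access, e.g. in days

    def retention(self, now: float) -> float:
        """R = exp(-t / S), with t = time elapsed since the last recall."""
        return math.exp(-(now - self.last_recalled) / self.strength)

    def recall(self, now: float) -> None:
        """Assumed rule: each recall strengthens the memory and resets t."""
        self.strength += 1.0
        self.last_recalled = now


def prune(items: List[MemoryItem], now: float, threshold: float = 0.1) -> List[MemoryItem]:
    """Keep only memories whose retention is still at or above the threshold."""
    return [m for m in items if m.retention(now) >= threshold]


a, b = MemoryItem("likes hiking"), MemoryItem("mentioned a dentist visit")
a.recall(now=1.0)  # a is strengthened; b is never revisited
print([m.text for m in prune([a, b], now=5.0)])  # ['likes hiking']
```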

MemGPT (Packer et al., 2023) presents a novel virtual context management system drawing inspiration from traditional operating systems’ memory hierarchies. The architecture implements a dual-tier structure: a main context (analogous to RAM) that provides immediate access during LLM inference, and an external context (analogous to disk storage) that maintains information beyond the fixed context window.

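The RAM/disk analogy can be illustrated with a toy two-tier store: a bounded main context holding the most recent messages and an unbounded external store that receives whatever is evicted. This is a simplified sketch of the analogy only. In MemGPT the LLM itself decides when to move information between tiers through function calls, whereas here eviction is a hypothetical first-in-first-out rule and capacity is counted in messages rather than tokens.

```python
from collections import deque
from typing import Deque, List


class TwoTierMemory:
    """Toy model: bounded main context ("RAM") plus external store ("disk")."""

    def __init__(self, main_capacity: int) -> None:
        self.main_capacity = main_capacity
        self.main: Deque[str] = deque()  # visible to the LLM at inference time
        self.external: List[str] = []    # outside the context window

    def add(self, message: str) -> None:
        self.main.append(message)
        while len(self.main) > self.main_capacity:
            self.external.append(self.main.popleft())  # FIFO eviction (assumed)

    def search_external(self, keyword: str) -> List[str]:
        """Naive substring search standing in for retrieval from external context."""
        return [m for m in self.external if keyword.lower() in m.lower()]


mem = TwoTierMemory(main_capacity=2)
for msg in ["I like hiking", "The weather is nice", "Tax day is Monday"]:
    mem.add(msg)
print(list(mem.main))                 # ['The weather is nice', 'Tax day is Monday']
print(mem.search_external("hiking"))  # ['I like hiking']
```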

4.4 Empirical Results

In our empirical evaluation, we compared A-MEM with four competitive baselines including LoCoMo, ReadAgent, MemoryBank, and MemGPT on the LoCoMo dataset. For non-GPT foundation models, our A-MEM consistently outperforms all baselines across different categories, demonstrating the effec ti ve ness of our agentic memory approach. For GPT-based models, while LoCoMo and MemGPT show strong performance in certain categories like Open Domain and Adversial tasks due to their robust pre-trained knowledge in simple fact retrieval, our A-MEM demonstrates superior performance in Multi-Hop tasks achieves at least two times better performance that require complex reasoning chains. The effectiveness of A-MEM stems from its novel agentic memory architecture that enables dynamic and structured memory management. Unlike traditional approaches that use static memory operations, our system creates interconnected memory networks through atomic notes with rich contextual descriptions, enabling more effective multihop reasoning. The system’s ability to dynamically establish connections between memories based on shared attributes and continuously update existing memory descriptions with new contextual information allows it to better capture and utilize the rela- tionships between different pieces of information. Notably, A-MEM achieves these improvements while maintaining significantly lower token length requirements compared to LoCoMo and MemGPT (around 1,200-2,500 tokens versus 16,900 tokens) through our selective top-k retrieval mechanism. In conclusion, our empirical results demonstrate that A-MEM successfully combines structured memory organization with dynamic memory evolution, leading to superior performance in complex reasoning tasks while maintaining computational efficiency.

在我们的实证评估中,我们在 LoCoMo 数据集上将 A-MEM 与 LoCoMo、ReadAgent、MemoryBank 和 MemGPT 四个有竞争力的基线进行了比较。对于非 GPT 基础模型,A-MEM 在不同类别中始终优于所有基线,展示了我们的智能体记忆方法的有效性。对于基于 GPT 的模型,尽管 LoCoMo 和 MemGPT 得益于其在简单事实检索方面强大的预训练知识,在开放域和对抗等某些类别中表现出色,但 A-MEM 在需要复杂推理链的多跳任务中表现更优,性能至少达到其两倍。A-MEM 的有效性源于其新颖的智能体记忆架构,该架构实现了动态和结构化的记忆管理。与使用静态记忆操作的传统方法不同,我们的系统通过具有丰富上下文描述的原子笔记创建了相互关联的记忆网络,从而实现了更有效的多跳推理。系统能够基于共享属性动态建立记忆之间的连接,并不断用新的上下文信息更新现有记忆描述,使其能够更好地捕捉和利用不同信息片段之间的关系。值得注意的是,借助选择性 top-k 检索机制,A-MEM 在实现这些改进的同时,所需 token 长度显著低于 LoCoMo 和 MemGPT(约 1,200-2,500 个 token 对比 16,900 个 token)。总之,我们的实证结果表明,A-MEM 成功地将结构化记忆组织与动态记忆演化相结合,在复杂推理任务中表现出色,同时保持了计算效率。
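The token savings come from placing only the k most relevant notes in the prompt instead of the full conversation history. The sketch below shows top-k retrieval by cosine similarity over note embeddings. The helper names `cosine` and `retrieve_top_k` and the toy 2-D vectors are assumptions for illustration, not A-MEM's actual code.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_top_k(query: list[float], notes: dict[str, list[float]], k: int) -> list[str]:
    """Hypothetical helper: ids of the k notes whose embeddings are most similar to the query."""
    ranked = sorted(notes, key=lambda note_id: cosine(query, notes[note_id]), reverse=True)
    return ranked[:k]  # only these k notes are placed in the prompt


# Toy 2-D "embeddings"; a real system would use a sentence-embedding model.
notes = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7]}
print(retrieve_top_k([1.0, 0.1], notes, k=2))  # ['a', 'c']

# Prompt-size ratio implied by the token counts reported above.
print(round(16_900 / 2_500, 2), round(16_900 / 1_200, 2))  # 6.76 14.08
```

The last line checks the arithmetic behind the efficiency claim: with 1,200-2,500 retrieved tokens against 16,900, the context passed to the model is roughly 6.8 to 14.1 times shorter.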

Table 2: Ablation study of our proposed method with GPT-4o-mini as the base model. The notation ‘w/o’ denotes variants in which specific modules are removed. The abbreviations LG and ME denote the link generation module and the memory evolution module, respectively.

| Method | Single-Hop F1 | Single-Hop BLEU-1 | Multi-Hop F1 | Multi-Hop BLEU-1 | Temporal F1 | Temporal BLEU-1 | Open Domain F1 | Open Domain BLEU-1 | Adversarial F1 | Adversarial BLEU-1 |
|---|---|---|---|---|---|---|---|---|---|---|
| w/o LG & ME | 9.65 | 7.09 | 24.55 | 19.48 | 7.77 | 6.70 | 13.28 | 10.30 | 15.32 | 18.02 |
| w/o ME | 21.35 | 15.13 | 31.24 | 27.31 | 10.13 | 10.85 | 39.17 | 34.70 | 44.16 | 45.33 |
| A-MEM | 27.02 | 20.09 | 45.85 | 36.67 | 12.14 | 12.00 | 44.65 | 37.06 | 50.03 | 49.47 |

表 2: 以 GPT-4o-mini 为基础模型,对所提方法进行的消融研究。标注 'w/o' 表示移除特定模块的实验。缩写 LG 和 ME 分别表示链接生成模块和记忆演化模块。

| 方法 | Single-Hop F1 | Single-Hop BLEU-1 | Multi-Hop F1 | Multi-Hop BLEU-1 | Temporal F1 | Temporal BLEU-1 | Open Domain F1 | Open Domain BLEU-1 | Adversarial F1 | Adversarial BLEU-1 |
|---|---|---|---|---|---|---|---|---|---|---|
| w/o LG & ME | 9.65 | 7.09 | 24.55 | 19.48 | 7.77 | 6.70 | 13.28 | 10.30 | 15.32 | 18.02 |
| w/o ME | 21.35 | 15.13 | 31.24 | 27.31 | 10.13 | 10.85 | 39.17 | 34.70 | 44.16 | 45.33 |
| A-MEM | 27.02 | 20.09 | 45.85 | 36.67 | 12.14 | 12.00 | 44.65 | 37.06 | 50.03 | 49.47 |

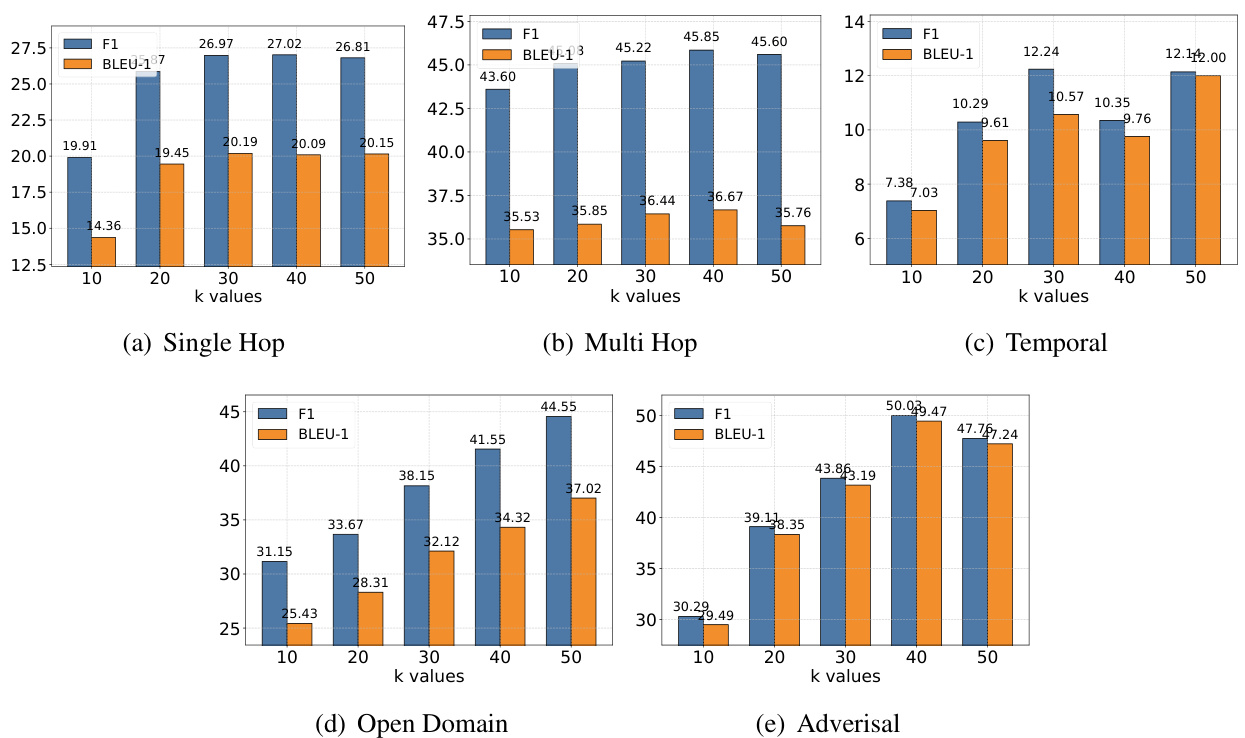

Figure 3: Impact of memory retrieval parameter k across different task categories with GPT-4o-mini as the base model. While larger k values generally improve performance by providing richer historical context, the gains diminish beyond certain thresholds, suggesting a trade-off between context richness and effective information processing. This pattern is consistent across all evaluation categories, indicating the importance of balanced context retrieval for optimal performance.

图 3: 使用 GPT-4o-mini 作为基础模型时,不同任务类别中记忆检索参数 k 的影响。虽然较大的 k 值通常通过提供更丰富的历史上下文来提高性能,但超过某些阈值后,增益逐渐减弱,这表明上下文丰富性和有效信息处理之间存在权衡。这一模式在所有评估类别中都是一致的,表明平衡的上下文检索对于优化性能的重要性。

4.5 Ablation Study

4.5 消融实验

To evaluate the effectiveness of the Link Generation (LG) and Memory Evolution (ME) modules, we conduct an ablation study that systematically removes key components of our model (Table 2). When both LG and ME are removed, performance degrades substantially, particularly on Multi-Hop and Open Domain tasks (F1 drops from 45.85 to 24.55 and from 44.65 to 13.28, respectively). The variant with only LG active (w/o ME) reaches intermediate performance, well above the variant without both modules, which demonstrates the fundamental importance of link generation in establishing memory connections. Our full model, A-MEM, achieves the best performance in every evaluation category, with particularly strong results on complex reasoning tasks. These results show that link generation provides the critical foundation for memory organization, while memory evolution contributes essential refinements to the memory structure. The ablation study thus validates our architectural design choices and highlights the complementary nature of the two modules in building an effective memory system.

为了评估链接生成 (Link Generation, LG) 和记忆进化 (Memory Evolution, ME) 模块的有效性,我们通过系统性地移除模型的关键组件进行了消融实验。当同时移除 LG 和 ME 模块时,系统表现出显著的性能下降,特别是在多跳推理 (Multi Hop Reasoning) 和开放域任务 (Open Domain Tasks) 中。仅激活 LG 模块 (w/o ME) 的系统表现出中等性能水平,其效果明显优于移除两个模块的版本,这证明了链接生成在建立记忆连接中的基础重要性。我们的完整模型 A-MEM 在所有评估类别中始终表现出最佳性能,尤其在复杂推理任务中表现尤为突出。这些结果表明,虽然链接生成模块是记忆组织的关键基础,但记忆进化模块为记忆结构提供了必要的优化。消融实验验证了我们的架构设计选择,并强调了这两个模块在创建有效记忆系统中的互补性。
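The ablation variants can be pictured as switches on the note-insertion path. In the toy class below, `ToyAgenticMemory`, its flags, and both heuristics are hypothetical: keyword overlap replaces the LLM's decision about which memories to link, and keyword merging replaces the LLM-driven update of neighboring notes. The sketch only shows where each module acts, not how A-MEM implements it.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    note_id: str
    content: str
    keywords: set[str]
    links: set[str] = field(default_factory=set)


class ToyAgenticMemory:
    """Hypothetical toy model of the Table 2 variants:
    both flags on = full model, memory_evolution=False = 'w/o ME',
    both flags off = 'w/o LG & ME'."""

    def __init__(self, link_generation: bool = True, memory_evolution: bool = True):
        self.link_generation = link_generation
        self.memory_evolution = memory_evolution
        self.notes: dict[str, Note] = {}

    def add(self, note: Note) -> None:
        if self.link_generation:
            # Assumption: shared keywords stand in for the LLM's judgement
            # that two memories are meaningfully related.
            neighbors = [n for n in self.notes.values() if n.keywords & note.keywords]
            for n in neighbors:
                note.links.add(n.note_id)
                n.links.add(note.note_id)
            # In this sketch evolution only touches linked neighbors, so it
            # has nothing to act on when link generation is disabled.
            if self.memory_evolution:
                for n in neighbors:
                    # Assumption: "evolving" a neighbor means absorbing the
                    # new note's keywords into its attributes.
                    n.keywords |= note.keywords
        self.notes[note.note_id] = note


for lg, me in [(False, False), (True, False), (True, True)]:
    mem = ToyAgenticMemory(link_generation=lg, memory_evolution=me)
    mem.add(Note("n1", "Alice plans a hiking trip", {"hiking", "trip"}))
    mem.add(Note("n2", "Alice bought boots for hiking", {"hiking", "boots"}))
    print(lg, me, mem.notes["n1"].links, sorted(mem.notes["n1"].keywords))
```

Nesting evolution inside link generation mirrors the intuition in the text that links are the foundation and evolution refines what they connect.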

4.6 Hyperparameter Analysis

4.6 超参数分析

We conducted extensive experiments to analyze the impact of the memory retrieval parameter $k$, which controls the number of relevant memories retrieved for each interaction. As shown in Figure 3, we evaluated performance across different values of $k$ (10, 20, 30, 40, 50) on five task categories, using GPT-4o-mini as the base model. The results reveal a clear pattern: increasing $k$ generally improves performance, but the improvement gradually plateaus and sometimes slightly reverses at higher values. This trend is particularly evident in Multi-Hop and Open Domain tasks. The observation suggests a trade-off in memory retrieval: larger values of $k$ provide richer historical context for reasoning, but they may also introduce noise and strain the model’s capacity to process longer sequences effectively. Our analysis indicates that moderate values of $k$ strike the best balance between context richness and information processing efficiency.

我们进行了大量实验来分析记忆检索参数 $k$ 的影响,该参数控制每次交互检索的相关记忆数量。如图 3 所示,我们使用 GPT-4o-mini 作为基础模型,评估了不同 $k$ 值 (10, 20, 30, 40, 50) 在五类任务上的性能。结果显示了一个有趣的模式:虽然增加 $k$ 通常会提高性能,但这种提升逐渐趋于平稳,有时在较高值时甚至会略有下降。这种趋势在多跳 (Multi-Hop) 和开放域 (Open Domain) 任务中尤为明显。这一观察表明,记忆检索中存在一种微妙的平衡:虽然较大的 $k$ 值能为推理提供更丰富的历史背景,但也可能引入噪声,并挑战模型有效处理较长序列的能力。我们的分析表明,适中的 $k$ 值能在上下文丰富性和信息处理效率之间达到最佳平衡。
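One way to make "moderate $k$" operational is to run a sweep like the one in Figure 3 and keep the smallest $k$ whose score lies within a small tolerance of the best. The helper `smallest_near_best_k` and its default tolerance are hypothetical choices for illustration, not the selection procedure used in this paper.

```python
def smallest_near_best_k(scores: dict[int, float], tolerance: float = 0.5) -> int:
    """Return the smallest k whose score is within `tolerance` of the best score.

    `scores` maps each retrieval depth k (e.g. 10, 20, ..., 50) to a metric
    such as F1 where higher is better. The tolerance is an assumed knob.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    best = max(scores.values())
    # Among all k that are "close enough" to the best, prefer the cheapest one.
    return min(k for k, s in scores.items() if s >= best - tolerance)
```

Preferring the smallest qualifying $k$ favors shorter prompts once the curve has plateaued, which matches the trade-off between context richness and processing efficiency described above.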

4.7 Memory Analysis

4.7 记忆分析

Figure 4 presents a t-SNE visualization of memory embeddings that demonstrates the structural advantages of our agentic memory system. Analyzing two dialogues sampled from long-term conversations in LoCoMo (Maharana et al., 2024), we observe that A-MEM (shown in blue) consistently exhibits more coherent clustering patte