Comet: Fine-grained Computation-communication Overlapping for Mixture-of-Experts

Comet: 面向混合专家的细粒度计算-通信重叠

Abstract

摘要

Mixture-of-experts (MoE) has been extensively employed to scale large language models to trillionplus parameters while maintaining a fixed computational cost. The development of large MoE models in the distributed scenario encounters the problem of large communication overhead. The inter-device communication of a MoE layer can occupy $47%$ time of the entire model execution with popular models and frameworks. Therefore, existing methods suggest the communication in a MoE layer to be pipelined with the computation for overlapping. However, these coarse grained overlapping schemes introduce a notable impairment of computational efficiency and the latency concealing is sub-optimal.

专家混合 (Mixture-of-experts, MoE) 已被广泛用于将大语言模型扩展到万亿级参数,同时保持固定的计算成本。在分布式场景中开发大型 MoE 模型时,会遇到通信开销过大的问题。在使用流行模型和框架时,MoE 层的设备间通信可能占据整个模型执行时间的 $47%$。因此,现有方法建议将 MoE 层的通信与计算流水线化以实现重叠。然而,这些粗粒度的重叠方案显著降低了计算效率,并且延迟隐藏效果也不理想。

To this end, we present Comet, an optimized MoE system with fine-grained communicationcomputation overlapping. Leveraging data dependency analysis and task rescheduling, Comet achieves precise fine-grained overlapping of communication and computation. Through adaptive workload assignment, Comet effectively eliminates fine-grained communication bottlenecks and enhances its adaptability across various scenarios. Our evaluation shows that Comet accelerates the execution of a single MoE layer by $1.96\times$ and for end-to-end execution, Comet delivers a $1.71\times$ speedup on average. Comet has been adopted in the production environment of clusters with ten-thousand-scale of GPUs, achieving savings of millions of GPU hours.

为此,我们提出了 Comet,一个具有细粒度通信计算重叠的优化 MoE 系统。Comet 通过数据依赖性分析和任务重调度,实现了通信与计算的精确细粒度重叠。通过自适应工作负载分配,Comet 有效消除了细粒度通信瓶颈,并增强了其在各种场景中的适应性。我们的评估表明,Comet 将单个 MoE 层的执行速度提升了 $1.96\times$,而在端到端执行中,Comet 平均实现了 $1.71\times$ 的加速。Comet 已在拥有上万 GPU 规模的集群生产环境中被采用,节省了数百万 GPU 小时。

Correspondence: Ningxin Zheng, Haibin Lin, Quan Chen, Xin Liu Project Page: https://github.com/bytedance/flux

通讯作者:Ningxin Zheng, Haibin Lin, Quan Chen, Xin Liu

项目页面:https://github.com/bytedance/flux

Introduction

引言

Recent advancements in large language models have revolutionized multiple domains, including natural language processing [35, 36], computer vision [16] and multi-modal perception [3, 14]. These achievements demonstrate that scaling up model size can significantly enhance model capacity. However, the growth in model parameters poses substantial challenges for the deployment of such giant models, as computational resources increasingly constrain model

大语言模型的最新进展已经彻底改变了多个领域,包括自然语言处理 [35, 36]、计算机视觉 [16] 和多模态感知 [3, 14]。这些成就表明,扩大模型规模可以显著提升模型能力。然而,模型参数的增长为这类巨型模型的部署带来了巨大的挑战,因为计算资源越来越成为模型的限制因素。

capacity [28].

容量 [28]

To this end, Mixture-of-Experts (MoE) [29] introduces a sparse structure, within which only part of the parameters is activated. Instead of interacting with all parameters in dense models, MoE models allow each input to interact with only a few experts. For example, the Mixtral-8x7B model [12] comprises 45 billion parameters in total, while only 14 billion parameters are active during runtime. Nowadays, MoE has emerged as a key architecture for scaling models to trillion-plus parameters.

为此,专家混合模型 (Mixture-of-Experts, MoE) [29] 引入了一种稀疏结构,其中只有部分参数被激活。与密集模型中的所有参数交互不同,MoE 模型允许每个输入仅与少数专家交互。例如,Mixtral-8x7B 模型 [12] 总共包含 450 亿个参数,而在运行时只有 140 亿个参数处于激活状态。如今,MoE 已成为将模型扩展到万亿级以上参数的关键架构。

Figure 1 Analysis of the execution of MoE. (a) Time breakdown of MoE models executed on 8 H800 GPUs using Megatron-LM. (b) An illustration of communicationcomputation overlap by partitioning an expert computation kernel into two.

图 1: MoE 执行分析。(a) 使用 Megatron-LM 在 8 个 H800 GPU 上执行的 MoE 模型的时间分解。(b) 通过将专家计算内核分为两部分来说明通信计算重叠。

The increase in parameter size in MoE models allows for the integration of greater amounts of information, but it poses challenges in expert placement. A typical approach is to distribute the experts across different GPUs as a single GPU cannot store all experts [13]. Consequently, during the execution of MoE layers, there is an intensive need for data exchange among GPUs. In the forward pass of several popular MoE models, the communication among devices accounts for 47% of the total execution time on average, as shown in Figure 1(a).

MoE(Mixture of Experts)模型中参数规模的增加使得能够集成更多信息,但也带来了专家放置的挑战。典型的方法是将专家分布在不同 GPU 上,因为单个 GPU 无法存储所有专家 [13]。因此,在执行 MoE 层时,GPU 之间需要密集的数据交换。在几种流行的 MoE 模型的前向传播中,设备间的通信平均占总执行时间的 47%,如图 1(a) 所示。

In a distributed environment, executing an MoE layer involves data reception, expert computation, and data transmission, as depicted in in Figure 1(b). To reduce communication overhead, one effective strategy is to pipeline the process, overlapping communication with expert computation [8, 10, 31, 32]. This approach involves partitioning input data into smaller data chunks, allowing decomposed communication and computation phases to overlap. In the example in Figure 1(b), the received input data is divided into two chunks, and this coarse-grained overlapping reduces the overall execution time relative to non-pipelined execution.

在分布式环境中,执行 MoE 层涉及数据接收、专家计算和数据传输,如图 1(b) 所示。为了减少通信开销,一种有效的策略是将流程流水线化,使通信与专家计算重叠 [8, 10, 31, 32]。这种方法将输入数据分割成较小的数据块,使分解后的通信和计算阶段能够重叠。在图 1(b) 的示例中,接收到的输入数据被分成两个块,这种粗粒度的重叠相对于非流水线执行减少了整体执行时间。

The overlapping in existing mechanisms remains suboptimal due to two primary inefficiencies. First, the efficiency of partitioned experts declines as the data chunks assigned to each expert become smaller, potentially leading to under-utilization of GPU computational resources (e.g., the total compute time of experts after partitioning $t_{1}+t_{2}$ exceeds the original time $t$ ). The coarse-grained partitioning results in unavoidable GPU idle time during the initial and final communication phases, such as when receiving data for chunk 1 and sending data for chunk 2, which do not overlap with computation. Consequently, minimizing the non-overlapping time in these phases while maintaining computational efficiency is crucial. This is challenging because the data dependency between communication and computation is complex and it is hard to be overlapped in a fine-grained granularity efficiently. Second, due to the dynamic nature of MoE, the input shapes for experts are various at runtime, thereby posing diverse communication and computation burdens on GPUs. Encapsulating communication and computation tasks into separate kernels on different streams, like almost all the prior researches do, restricts control over hardware resources and results in non-deterministic kernel performance, thereby hindering seamless overlap (e.g., the computation of chunk 1 and the receiving of chunk 2 are misaligned). The second challenge, therefore, is to dynamically ensure precise allocation of hardware resources between computation and communication workloads at runtime.

现有机制中的重叠仍然不够理想,主要由于两个低效问题。首先,随着分配给每个专家的数据块变小,分区专家的效率下降,可能导致 GPU 计算资源的利用不足(例如,分区后专家的总计算时间 $t_{1}+t_{2}$ 超过原始时间 $t$)。粗粒度的分区在初始和最终通信阶段不可避免地导致 GPU 空闲时间,例如在接收块 1 的数据和发送块 2 的数据时,这些操作与计算不重叠。因此,在保持计算效率的同时,最小化这些阶段中的非重叠时间至关重要。这具有挑战性,因为通信与计算之间的数据依赖关系复杂,难以在细粒度上有效重叠。其次,由于 MoE 的动态性,专家的输入形状在运行时各不相同,从而对 GPU 带来不同的通信和计算负担。将通信和计算任务封装到不同流上的独立内核中,就像几乎所有先前的研究所做的那样,限制了对硬件资源的控制,并导致内核性能的不确定性,从而阻碍了无缝重叠(例如,块 1 的计算与块 2 的接收未对齐)。因此,第二个挑战是在运行时动态确保计算和通信工作负载之间硬件资源的精确分配。

The complex data dependency, and the dynamic computation and communication workloads in MoE impede existing systems to realize efficient communication-computation overlap. We therefore propose Comet, a system that enables fine-grained communication-computation overlapping for efficient MoE execution. Comet introduces two key designs: 1) A dependency resolving method that identifies complex data dependencies between communication and computation operations in MoE, enabling optimized computation-communication pipeline structuring. 2) An adaptive workload assignment method that dynamically allocates GPU thread blocks to different workloads within a kernel, balancing communication and computation to improve latency concealment.

MoE 中复杂的数据依赖性和动态的计算与通信工作负载阻碍了现有系统实现高效的通信-计算重叠。因此,我们提出了 Comet,这是一个能够实现细粒度通信-计算重叠以高效执行 MoE 的系统。Comet 引入了两个关键设计:1) 一种依赖关系解析方法,用于识别 MoE 中通信和计算操作之间的复杂数据依赖性,从而优化计算-通信流水线结构。2) 一种自适应工作负载分配方法,动态地将 GPU 线程块分配给内核中的不同工作负载,平衡通信和计算以提高延迟隐藏。

Comet facilitates fine-grained overlapping in MoE by analyzing shared data buffers between communication and computation operations, referred to as shared tensor. By decomposing the shared tensors along specific dimensions and reorganizing tensor data along with intra-operator execution order,

Comet通过分析通信和计算操作之间的共享数据缓冲区(称为共享张量),在MoE中实现细粒度重叠。通过沿特定维度分解共享张量,并根据算子内部执行顺序重新组织张量数据,

Comet eliminates the granularity mismatches between communication and computation, thereby enabling fine-grained overlapping. To ensure precise resource allocation and effective latency concealment, Comet integrates communication and computation tasks within fused GPU kernels. Through thread block specialization, Comet isolates the impact of communication on computation performance , maintaining high computational efficiency. By adjusting the number of thread blocks allocated to each workload, Comet effectively balances communication and computation latencies and reduces bubbles in overlapping.

Comet 消除了通信与计算之间的粒度不匹配,从而实现了细粒度的重叠。为了确保精确的资源分配和有效的延迟隐藏,Comet 将通信和计算任务集成在融合的 GPU 内核中。通过线程块的专门化,Comet 隔离了通信对计算性能的影响,保持了较高的计算效率。通过调整分配给每个工作负载的线程块数量,Comet 有效地平衡了通信和计算延迟,并减少了重叠中的气泡。

We have integrated Comet into Megatron-LM [33] and verified the capability of Comet with various parallel strategies. Our extensive experiments on Nvidia H800 and L20 clusters show that Comet delivers $1.96\times$ speedup for typical MoE layers, and 1.71 $\times$ speedup for end-to-end MoE model execution (Mixtral-8x7B [12], Qwen2-MoE [2], Phi3.5-MoE [1]) on average, compared with the SOTA MoE systems. Comet has been deployed to accelerate training and inference of large MoE models in production clusters comprising over ten thousand GPUs, achieving savings of millions of GPU hours. Comet introduces a fine-grained pipelined programming model for computation and communication. We will open-source COMET, aiming to inspire further optimization s, such as implementing the programming model in Comet using compilers like Triton [26] or TVM [6].

我们已经将Comet集成到Megatron-LM [33]中,并通过多种并行策略验证了Comet的能力。我们在Nvidia H800和L20集群上的大量实验表明,与最先进的MoE系统相比,Comet在典型的MoE层上实现了$1.96\times$的加速,在端到端MoE模型执行(Mixtral-8x7B [12], Qwen2-MoE [2], Phi3.5-MoE [1])上平均实现了1.71$\times$的加速。Comet已被部署在包含超过一万个GPU的生产集群中,用于加速大型MoE模型的训练和推理,节省了数百万GPU小时。Comet引入了细粒度的流水线编程模型,用于计算和通信。我们将开源COMET,旨在激发进一步的优化,例如使用Triton [26]或TVM [6]等编译器实现Comet中的编程模型。

2 Background and Motivation

2 背景与动机

2.1 MoE Structure

2.1 MoE 结构

Table 1 Description of symbols.

表 1: 符号描述

| 符号 | 描述 |

|---|---|

| L | Transformer 层数 |

| E | 专家总数 |

| topk | 每个 Token 路由到的专家数量 |

| TP | 张量并行大小 |

| EP | 专家并行大小 |

| M | 总并行世界大小 (TP × EP) |

| M | 输入 Token 长度 × 批次大小 |

| N | Token 的嵌入大小 |

| K | 专家中前馈层的隐藏大小 |

Mixture of Experts (MoE) is critical for efficiently scaling models. By enabling sparse activation of parameters, MoE allows for the integration of more parameters without increasing execution costs, thereby enhancing performance. The key idea of MoE is that it consists of multiple small models, namely experts and tokens are only routed to partial experts for computation. Figure 2 shows the typical execution flow of an MoE layer and Table 1 explains symbols to describe the execution of an MoE model.

专家混合模型 (Mixture of Experts, MoE) 对于高效扩展模型至关重要。通过启用参数的稀疏激活,MoE 可以在不增加执行成本的情况下集成更多参数,从而提升性能。MoE 的核心思想在于它由多个小模型组成,即专家,而 Token 仅被路由到部分专家进行计算。图 2 展示了 MoE 层的典型执行流程,表 1 解释了描述 MoE 模型执行过程的符号。

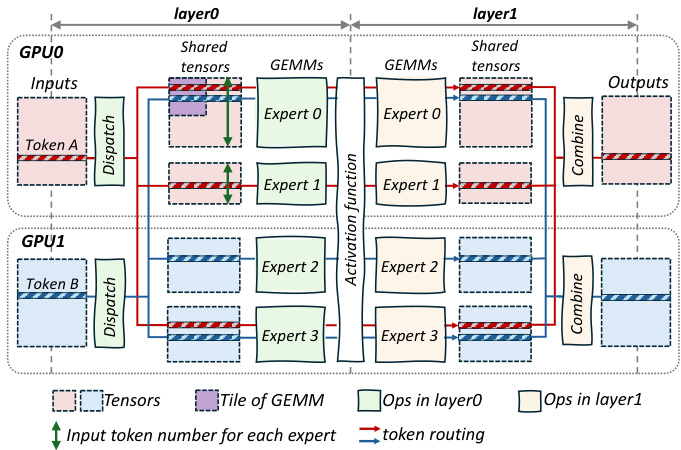

Figure 2 Example of an MoE layer across two GPUs, with two experts reside on GPU0 and two reside on GPU1. The MoE layer is composed of two feed-forward layers. In this example, for each token in the input buffer, it is dispatched to three experts ( $t o p k=3$ ) in layer0 and then the results are combined in layer1. The shape of experts is $N\times K$ in layer0 and $K\times N$ in layer1.

图 2 跨两个 GPU 的 MoE 层示例,其中两个专家位于 GPU0,另外两个位于 GPU1。MoE 层由两个前馈层组成。在这个示例中,对于输入缓冲区中的每个 Token,它会分配到 layer0 中的三个专家($topk=3$),然后在 layer1 中合并结果。专家的形状在 layer0 中为 $N\times K$,在 layer1 中为 $K\times N$。

Each input token is assigned to one or more experts for computation, with assignments determined by various algorithms [15, 40, 41]. A common method involves a gate network [29] that selects the topk experts for each token, as shown in Figure 2, where token A is routed to Expert0, Expert1 and Expert3. After passing through two feed-forward layers of General Matrix Multiply (GEMM), the topk outputs are gathered and reduced to produce the final result.

每个输入 Token 会被分配到一个或多个专家进行计算,分配由各种算法决定 [15, 40, 41]。常见的方法是通过门控网络 [29] 为每个 Token 选择 topk 专家,如图 2 所示,其中 Token A 被路由到 Expert0、Expert1 和 Expert3。在通过通用矩阵乘法 (GEMM) 的两个前馈层后,topk 输出被收集并缩减以生成最终结果。

The operations in MoE’s layer0 comprise token communication (dispatch) across GPUs and the first layer of expert computations (GEMM operations), thereby establishing a communication-computation pipeline. MoE’s layer1 includes the second layer of expert computations, token undispatch and the topk reduction (combine), forming a computation-communication pipeline.

MoE 的 layer0 包含跨 GPU 的 Token 通信(dispatch)和第一层专家计算(GEMM 操作),从而建立了一个通信-计算管道。MoE 的 layer1 包括第二层专家计算、Token 反调度和 topk 归约(combine),形成了一个计算-通信管道。

MoE employs two primary parallel iz ation strategies: Expert parallelism [13] and Tensor parallelism [33]. In expert parallelism, the weights of different experts are distributed across separate GPUs, with each expert’s weights being fully intact. Tokens are routed to the corresponding devices of their respective experts. Figure 2 shows a case for expert parallelism, with Expert0 and Expert1 reside on GPU0 and others reside on GPU1. In contrast, tensor parallelism partitions the weights of all experts along the hidden dimension, with each GPU hosting a portion of the weights from all experts. Both expert and tensor parallelism are essential for the efficient execution of MoE. In practical deployment of MoE models, a hybrid parallelism approach combining both expert and tensor parallelism is often applied.

MoE 采用两种主要的并行化策略:专家并行 (Expert parallelism) [13] 和张量并行 (Tensor parallelism) [33]。在专家并行中,不同专家的权重分布在不同的 GPU 上,每个专家的权重保持完整。Token 被路由到相应专家的设备上。图 2 展示了一个专家并行的案例,Expert0 和 Expert1 位于 GPU0 上,其他专家位于 GPU1 上。相比之下,张量并行将所有专家的权重沿隐藏维度进行划分,每个 GPU 承载所有专家的一部分权重。专家并行和张量并行对于 MoE 的高效执行都至关重要。在实际部署 MoE 模型时,通常会采用结合专家并行和张量并行的混合并行方法。

2.2 Computation and Communication Overlapping

2.2 计算与通信重叠

As the MoE architecture grows larger and sparser, the proportion of time spent on communication in MoE models becomes increasingly significant, as shown in Figure 1(a). As illustrated in section 1, coarsegrained overlapping of computation and communication offers limited optimization potential, and kernel- level scheduling is not efficient for dynamic workloads. Thus, it is more efficient to perform the overlapping at a fine-grained granularity (such as token-wise) and integrates computation and communication workloads into fused GPU kernels. Adopting such a finergrained overlapping could extremely unleash further optimization opportunities. However, achieving such fine-grained overlapping in MoE is non-trivial and there are two primary obstacles in our observation.

随着 MoE 架构变得更大且更稀疏,MoE 模型中通信所花费的时间比例变得越来越显著,如图 1(a) 所示。如第 1 节所述,计算和通信的粗粒度重叠提供的优化潜力有限,而内核级调度对于动态工作负载并不高效。因此,在细粒度(例如 token 级别)进行重叠并将计算和通信工作负载集成到融合的 GPU 内核中更为高效。采用这种细粒度重叠可以极大地释放进一步的优化机会。然而,在 MoE 中实现这种细粒度重叠并非易事,根据我们的观察,存在两个主要障碍。

2.2.1 Granularity mismatch between computation and communication

2.2.1 计算与通信之间的粒度不匹配

In MoE systems, the token serves as the fundamental unit of data movement, illustrated by the movement of Token A in Figure 2. To maximize GPU compute efficiency, high-performance GEMM(GroupGEMM) kernels typically organize rows into tiles for processing. The purple block in Figure 2 represents such a computation tile in GEMM kernels, exemplified by a 128x128 tile. Therefore, the GEMM computations associated with a single expert may require 128 tokens distributed across multiple GPUs. When fusing computation and communication at fine granularity, the disparity between token-level data transfer and tilelevel computation introduces considerable challenges: The complex data dependency adversely affects the efficiency of overlap, prompting the use of fine-grained communication, while integrating fine-grained communication with computation within fused kernels is also challenging.

在MoE系统中,Token作为数据移动的基本单位,如图2中Token A的移动所示。为了最大化GPU计算效率,高性能的GEMM(GroupGEMM)内核通常将行组织成块进行处理。图2中的紫色块代表GEMM内核中的计算块,例如一个128x128的块。因此,与单个专家相关的GEMM计算可能需要分布在多个GPU上的128个Token。在细粒度上融合计算和通信时,Token级别的数据传输与块级别计算之间的差异带来了相当大的挑战:复杂的数据依赖关系会严重影响重叠效率,促使使用细粒度通信,同时在融合内核中将细粒度通信与计算集成也具有挑战性。

Complex data dependency. The tokens needed for each computation tile, determined by the MoE’s gate at runtime, are randomly distributed across multiple devices. Computation for a tile cannot start until all required tokens are available. As shown in Figure 2, Expert0’s tile does not initiate processing until both

复杂数据依赖。每个计算块所需的Token由MoE的门在运行时决定,这些Token随机分布在多个设备上。只有所有必需的Token都可用时,计算块才能开始。如图2所示,Expert0的计算块直到两个Token都可用时才开始处理。

Token A and Token B are received. Thus, with coarsegrained data communication, data preparation time for each computational tile may be prolonged because of this irregular and complicated data dependency. To mitigate this, we should employ fine-grained communication, where each computational tile reads or writes only the data it requires directly through the Unified Virtual Address [18], and leverage the data reorganization and rescheduling to hide it with computation efficiently.

接收到 Token A 和 Token B。因此,由于这种不规则且复杂的数据依赖性,粗粒度的数据通信可能会延长每个计算块的数据准备时间。为了缓解这一问题,我们应该采用细粒度的通信,即每个计算块通过统一虚拟地址 [18] 直接读取或写入所需的数据,并利用数据重组和重新调度来有效地将其与计算隐藏。

Fine-grained communication. The integration of token-wise communication with tile-wise computation for overlapping is non-trivial. Remote I/O operations between GPUs exhibit significantly higher latency compared to local GPU memory access. Therefore, executing numerous fine-grained read and write operations on remote data tokens within computation thread blocks can block subsequent computational tasks, leading to a significant decline in kernel efficiency. This challenge is especially evident in the Hopper architecture, where computation kernels leverage Tensor Memory Accelerator (TMA) hardware instructions [20] to establish asynchronous compute pipelines. The integration of long-latency remote I/O operations within these asynchronous pipelines can considerably prolong the overall execution time, adversely affecting performance. Thus, it is critical to constrain the impact of fine-grained communication on computation kernels.

细粒度通信。将基于 Token 的通信与基于 Tile 的重叠计算相结合并非易事。GPU 之间的远程 I/O 操作相比本地 GPU 内存访问具有显著更高的延迟。因此,在计算线程块内对远程数据 Token 执行大量细粒度的读写操作会阻塞后续计算任务,导致内核效率显著下降。这一挑战在 Hopper 架构中尤为明显,其中计算内核利用 Tensor Memory Accelerator (TMA) 硬件指令 [20] 建立异步计算管道。在这些异步管道中集成高延迟的远程 I/O 操作会显著延长整体执行时间,对性能产生不利影响。因此,限制细粒度通信对计算内核的影响至关重要。

Our first insight is that resolving the granularity mismatch between computation and communication in MoE models is the key to enable efficient overlap of these two processes.

我们的第一个见解是,解决MoE模型中计算与通信之间的粒度不匹配问题,是实现这两个过程高效重叠的关键。

2.2.2 Diverse loads of computation and communication

2.2.2 计算与通信的多样化负载

Another characteristic of MoE is the dynamic routing of tokens to different experts, resulting in varying input shapes for experts at runtime (e.g., the token number received by Expert0 and Expert1 are different as shown in Figure 2). This variability imposes differing communication and computation demands on GPUs. Besides, the hardware environments can also have various compute architectures or network topologies, providing different compute capacities and communication bandwidths. Achieving seamless overlap between computation and communication thus requires dynamically adjusting the allocation of GPU resources to different workloads, which is hard to be realized through wrapping workloads into separate kernels.

MoE 的另一个特点是 Token 动态路由到不同的专家,导致运行时专家的输入形状不同(例如,Expert0 和 Expert1 接收到的 Token 数量不同,如图 2 所示)。这种变化对 GPU 的通信和计算需求提出了不同的要求。此外,硬件环境也可能具有不同的计算架构或网络拓扑,提供不同的计算能力和通信带宽。因此,要实现计算和通信之间的无缝重叠,需要动态调整 GPU 资源对不同工作负载的分配,这很难通过将工作负载封装到单独的内核中来实现。

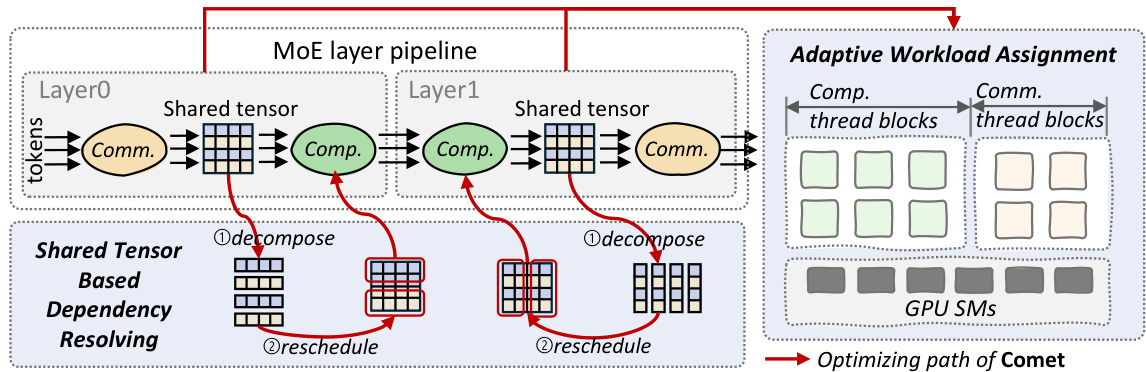

Figure 3 Design overview of Comet. Comet is composed of a shared tensor-based dependency resolving method and an adaptive workload assignment mechanism.

图 3: Comet 的设计概览。Comet 由一个基于共享张量的依赖解析方法和自适应工作负载分配机制组成。

Our second insight is that the resource allocation should be adaptive within kernels at runtime to further achieve seamless communication-computation overlapping.

我们的第二个见解是,资源分配应在运行时内核内自适应,以进一步实现无缝的通信-计算重叠。

3 Design of Comet

3 Comet 设计

In this section, we present the core design of Comet, a Mixture of Experts (MoE) system optimized for efficient execution of MoE layers through pipelined execution and fine-grained overlapping of communication and computation. Our analysis reveals that the MoE architecture has two distinct producer-consumer pipelines: the communication-computation pipeline and the computation-communication pipeline, as illustrated in Figure 3. Tokens traverse the pipelines as depicted and the operations within each pipeline are linked through a shared buffer, referred to as the shared tensor, serving as both the producer’s output buffer and the consumer’s input buffer. To minimize overall latency and enhance pipeline performance, Comet introduces two key mechanisms aimed at overlapping computation and communication workloads effectively.

在本节中,我们介绍了 Comet 的核心设计,这是一个专为通过流水线执行和通信与计算的细粒度重叠来高效执行 MoE(Mixture of Experts)层而优化的系统。我们的分析表明,MoE 架构有两个不同的生产者-消费者流水线:通信-计算流水线和计算-通信流水线,如图 3 所示。Token 按照图示在流水线中传递,每个流水线中的操作通过一个共享的缓冲区(称为共享张量)连接,该缓冲区既作为生产者的输出缓冲区,也作为消费者的输入缓冲区。为了最小化总体延迟并提高流水线性能,Comet 引入了两种关键机制,旨在有效地重叠计算和通信工作负载。

- Shared tensor based dependency resolving: As previously mentioned, the intricate data dependencies between communication and computation pose a challenge to achieving seamless overlap between these operations. To address this, we examine the data dependencies by analyzing the shared tensor. Our analysis reveals that the shared tensor can be decomposed, and the associated computations can be rescheduled to overlap more effectively with communication. Accordingly, the dependency resolving process employs two key optimization strategies on the shared tensors as shown in Figure 3: $\textcircled{1}$ Decomposing the shared tensors along specific dimensions to break the coarse-grained data dependencies and, $\textcircled{2}$ rescheduling the computations to enhance efficiency while ensuring effective overlapping.

- 基于共享张量的依赖关系解析:如前所述,通信和计算之间复杂的数据依赖关系对实现这些操作的无缝重叠构成了挑战。为了解决这个问题,我们通过分析共享张量来检查数据依赖关系。我们的分析表明,共享张量可以分解,并且相关的计算可以重新安排,以更有效地与通信重叠。因此,依赖关系解析过程在共享张量上采用了两个关键的优化策略,如图 3 所示:$\textcircled{1}$ 沿特定维度分解共享张量以打破粗粒度的数据依赖关系,$\textcircled{2}$ 重新安排计算以提高效率,同时确保有效的重叠。

- Adaptive workload assignment: Following pipeline optimization by the dependency resolving, the pattern of communication-computation overlap becomes more consistent and regular. To effectively hide the fine-grained communication latency, it is essential to allocate appropriate hardware resources to both communication and computation workloads. Given that these workloads exhibit different performance characteristics depending on input shapes, model config u rations, and hardware environments, the adaptive workload assignment scheme dynamically balances computation and communication. This approach generates highly efficient horizontally-fused kernels for the MoE system, thereby optimizing latency concealment.

- 自适应工作负载分配:通过依赖关系解析进行流水线优化后,通信-计算重叠的模式变得更加一致和规律。为了有效隐藏细粒度的通信延迟,必须为通信和计算工作负载分配适当的硬件资源。鉴于这些工作负载根据输入形状、模型配置和硬件环境表现出不同的性能特征,自适应工作负载分配方案动态平衡计算和通信。这种方法为MoE系统生成了高效的水平融合内核,从而优化了延迟隐藏。

As shown in Figure 3, Comet first leverages the shared tensor based dependency resolving method to optimize the pipelines in the MoE structure by decomposing and rescheduling the shared tensors. According to the reformed pipelines, Comet then provides highly-efficient fused kernels through the adaptive workload assignment mechanism.

如图 3 所示,Comet 首先利用基于共享张量的依赖解析方法,通过分解和重新调度共享张量来优化 MoE 结构中的管道。根据重构后的管道,Comet 然后通过自适应工作负载分配机制提供高效融合的内核。

3.1 Shared Tensor Based Dependency Resolving

3.1 基于共享张量的依赖解析

We now introduce how to resolve the complex data dependency between computation and communication in MoE. It aims to bridge the granularity of communication and computation operations to sustain high efficiency by decomposing and rescheduling shared tensors.

我们现在介绍如何解决 MoE 中计算与通信之间的复杂数据依赖问题。它旨在通过分解和重新调度共享张量来弥合通信和计算操作的粒度,以保持高效率。

Figure 4 The producer-consumer modeling of layer0 (left) and layer1 (right) of an MoE layer. The global size of the shared tensor is $(M\times t o p k,N)$ for both layer0 and layer1.

图 4: MoE 层的 layer0 (左) 和 layer1 (右) 的生产者-消费者模型。layer0 和 layer1 的共享张量的全局大小为 $(M\times t o p k,N)$。

3.1.1 How to decompose the shared tensor?

3.1.1 如何分解共享张量?

Shared tensors, as the bridge between the producer operator and the consumer operator, is the key to enable overlapping. Notably, overlapping can occur only when the producer and consumer operate on independent data within the shared tensor, as illustrated in Figure 4. Thus, we analyze the access pattern of operators on the shared tensor and decompose it along a specific dimension where data remain independent for the consumer operator.

共享张量作为生产者操作和消费者操作之间的桥梁,是实现重叠的关键。值得注意的是,只有当生产者和消费者在共享张量内操作独立数据时,重叠才会发生,如图4所示。因此,我们分析了操作在共享张量上的访问模式,并沿着消费者操作的数据保持独立的特定维度进行分解。

For example, in the communication-computation pipeline in layer0, the consumer operator is a GEMM, with the shared tensor serving as its input matrix. In this case, tokens are independent with each other alongside the $M$ (token) dimension, allowing for decomposition of the shared tensor along $M$ . However, since the computation of a GEMM tile involves multip li cation and reduction along the token embedding dimension to produce the final outputs, decomposing the shared tensor along this dimension is not feasible.

例如,在 layer0 的通信-计算管道中,消费操作符是 GEMM (General Matrix Multiply),共享张量作为其输入矩阵。在这种情况下,Token 在 $M$ (Token) 维度上是相互独立的,因此可以沿 $M$ 维度分解共享张量。然而,由于 GEMM 块的计算涉及沿 Token 嵌入维度的乘法和归约以生成最终输出,因此沿此维度分解共享张量是不可行的。

As for the computation-communication pipeline in layer1, the consumer operator contains a top-K reduction, which reduces tokens along the $M$ dimension, leading to significant interdependencies between tokens along this dimension. Thus, the shared tensor can only be decomposed along the $N$ dimension where elements are independent.

至于层1中的计算-通信流水线,消费者操作符包含一个top-K归约,它沿着$M$维度减少token,导致该维度上的token之间存在显著的相互依赖性。因此,共享张量只能沿着$N$维度进行分解,因为该维度上的元素是独立的。

3.1.2 How to reschedule the decomposed shared tensor?

3.1.2 如何重新调度分解后的共享张量?

At the finest granularity, the shared tensor can be split into individual rows or columns, enabling the consumer to begin computation as soon as a single row or column is received. However, this level of granularity results in low computational efficiency, particularly in pipelines involving compute-intensive GEMMs, which are typically organized and processed in tiles to achieve high utilization. Therefore, after decomposing shared tensors along specific dimensions, the resulting sub-tensors must be reorganized and rescheduled into tiles for computation. The rescheduling of shared tensors follows two principles: $\textcircled{1}$ Rescheduled sub-tensors should align with the original computation tile granularity for computational efficiency. $\textcircled{2}$ The scheduling policy should prioritize portions of the producer that can be immediately used by the consumer, allowing the consumer to begin execution as early as possible.

在最细粒度上,共享张量可以拆分为单独的行或列,使消费者在接收到单一行或列时即可开始计算。然而,这种粒度会导致计算效率低下,特别是在涉及计算密集型 GEMM(矩阵乘法)的流水线中,这些操作通常以分块(tile)形式组织和处理,以实现高利用率。因此,在沿特定维度分解共享张量后,生成的子张量必须重新组织并重新调度为分块进行计算。共享张量的重新调度遵循两个原则: $\textcircled{1}$ 重新调度的子张量应与原始计算分块粒度对齐,以确保计算效率。 $\textcircled{2}$ 调度策略应优先考虑生产者中消费者可以立即使用的部分,使消费者能够尽早开始执行。

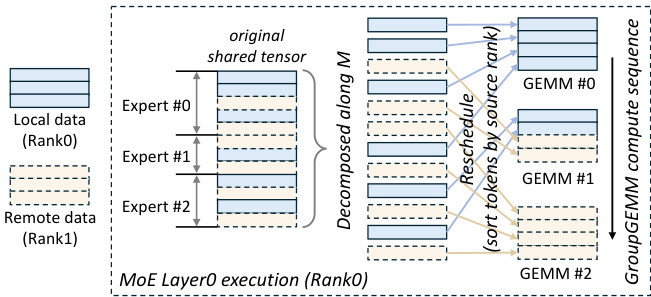

Figure 5 Decompose and reschedule the shared tensor in MoE layer0. In this illustration, three experts are located on Rank 0, each requiring both local and remote data for computation.

图 5: 在 MoE 层 0 中分解并重新调度共享张量。在本示例中,三个专家位于 Rank 0 上,每个专家都需要本地和远程数据进行计算。

Comet leverages GroupGEMM to perform the computations for all experts on current rank. In the communication-computation pipeline (MoE layer0), the shared tensor, consumed by GroupGEMM, is decomposed along the $M$ dimension. To enable early computation by the experts, tokens are sorted based on their source rank, as shown in Figure 5. The compute sequence of tiles in the GroupGEMM is then designed to minimize dependency on remote data, with computation beginning from tiles containing local tokens while the transfer of other remote tokens proceeds concurrently.

Comet 利用 GroupGEMM 对当前层级上的所有专家执行计算。在通信计算流水线(MoE层0)中,由 GroupGEMM 处理的共享张量沿 $M$ 维度分解。为了尽早让专家进行计算,Token 会根据其来源层级进行排序,如图 5 所示。随后,GroupGEMM 中的计算图块序列被设计为最小化对远程数据的依赖,计算从包含本地 Token 的图块开始,同时其他远程 Token 的传输并行进行。

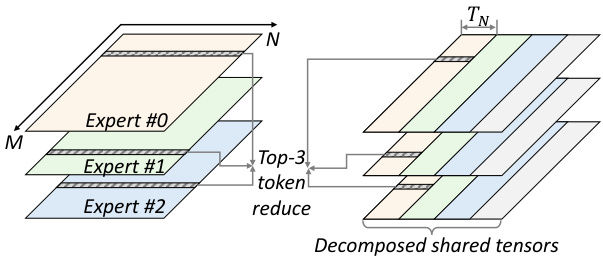

In the computation-communication pipeline (MoE layer1), the shared tensor undergoes a top-k reduction after processing by the GroupGEMM of experts. As analyzed previously, the shared tensor is decomposed along the $N$ dimension. The tile computation sequence is adjusted (Figure 6) to enable the consumer operator to start processing before expert computations are fully completed. Instead of computing each expert sequentially, GroupGEMM operations are executed column-wise. This approach allows the reduction and communicate operations to proceed as soon as the first $T_{N}$ columns of the shared tensors are computed. Without rescheduling, tokens could only be reduced after all experts have completed their computations.

在计算-通信流水线 (MoE layer1) 中,共享张量在由专家的 GroupGEMM 处理后经历了 top-k 缩减。如前所述,共享张量沿 $N$ 维度分解。图块计算序列被调整 (图 6),以便消费者操作符可以在专家计算完全完成之前开始处理。GroupGEMM 操作不是按顺序计算每个专家,而是按列执行。这种方法允许在共享张量的前 $T_{N}$ 列计算完成后立即进行缩减和通信操作。如果不重新调度,Token 只能在所有专家完成计算后才能缩减。

Figure 6 Rescheduled compute sequence for MoE layer1 ( $E=3$ and $t o p k=3$ ). The execution order of the GroupGEMM is indicated by color (yellow $\rightarrow$ green $\rightarrow$ blue $\rightarrow$ grey). Here, $T_{N}$ denotes the tile size of a GroupGEMM along the $N$ dimension.

图 6: MoE 层 1 的重新调度计算序列 ( $E=3$ 和 $t o p k=3$ )。GroupGEMM 的执行顺序由颜色指示 (黄色 $\rightarrow$ 绿色 $\rightarrow$ 蓝色 $\rightarrow$ 灰色)。其中,$T_{N}$ 表示 GroupGEMM 沿 $N$ 维度的分块大小。

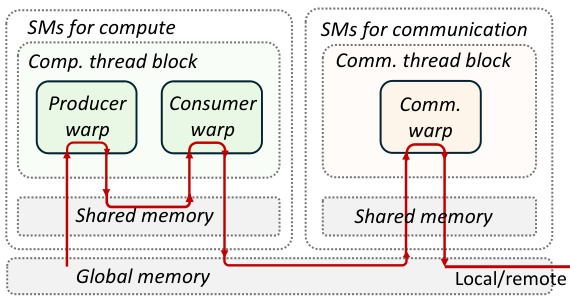

Figure 7 Kernel design for the MoE layer1 on Hopper architecture. Each SM only accommodate one thread block. The red arrows indicates the route of data movement.

图 7: Hopper架构上MoE层1的核心设计。每个SM仅容纳一个线程块。红色箭头表示数据移动的路径。

3.2 Adaptive Workload Assignment

3.2 自适应工作负载分配

With the decomposition and rescheduling of shared tensors, the pipelines in MoE can now achieve finegrained overlap. To ensure effective latency hiding, the durations of fine-grained communication and computation must be closely aligned to minimize pipeline bubbles. Achieving this requires adaptive resource allocation for both computation and communication, tailored to specific tasks involved.

通过对共享张量的分解和重新调度,MoE中的管道现在可以实现细粒度的重叠。为了确保有效的延迟隐藏,细粒度通信和计算的持续时间必须紧密对齐,以最小化管道气泡。实现这一点需要根据具体任务对计算和通信进行自适应资源分配。

3.2.1 Thread block specialization

3.2.1 线程块专化

A straightforward approach to achieve communication-computation overlap in Mixture of Experts (MoE) is to encapsulate the entire pipeline within homogeneous thread blocks, integrating communication I/O into the prologue or epilogue of the computation (GEMM), a strategy referred to here as vertical fusion. Through vertical fusion, thread blocks execute concurrently, but the overlap occurs irregularly, leading to non-deterministic latencies of communication and computation, making it challenging to balance their durations for latency hiding. Furthermore, token-level fine-grained I/O in MoE can significantly reduce the computational efficiency of the underlying kernels, particularly on advanced architectures such as Hopper. To address this, we implement thread block-level isolation between communication and computation workloads. This isolation enables precise control over hardware resource allocation for each workload, facilitating a balanced distribution between computation and communication that maximizes latency hiding.

在专家混合模型 (Mixture of Experts, MoE) 中实现通信-计算重叠的一种直接方法是将整个流水线封装在同类线程块中,将通信 I/O 集成到计算 (GEMM) 的前奏或尾声,这一策略在此称为垂直融合。通过垂直融合,线程块并发执行,但重叠不规则,导致通信和计算的非确定性延迟,使得平衡它们的持续时间以隐藏延迟变得具有挑战性。此外,MoE 中的 Token 级细粒度 I/O 会显著降低底层内核的计算效率,尤其是在 Hopper 等先进架构上。为了解决这个问题,我们在通信和计算工作负载之间实现了线程块级隔离。这种隔离能够精确控制每个工作负载的硬件资源分配,促进计算和通信之间的平衡分布,从而最大化延迟隐藏。

Figure 7 depicts the details of the thread block specialized kernel on Hopper, with the critical data path highlighted in red. Due to the isolation between communication and computation, the GEMM thread blocks in Comet utilize the same implementation as the default GEMM before fusion. In the scenario depicted in Figure 7, where the GEMM is compiled using CUTLASS on the Hopper architecture, the GEMM execution is distributed across different warps. Specifically, the producer warp loads data from global memory into a shared memory buffer with the async TMA instructions, while the consumer warp initiates tensor core MMA operations [21]. The communication thread blocks subsequently read the results produced by the consumer warp from global memory. Following the top-K reduction, the warps within the communication blocks either write tokens to the local global memory or transmit them to remote destinations. This thread block-specialized programming model is easily portable to other architectures, such as Ampere and Volta, requiring only a substitution of the respective compute thread block implementation.

图 7 展示了 Hopper 上线程块专用内核的细节,关键数据路径用红色突出显示。由于通信和计算之间的隔离,Comet 中的 GEMM 线程块在融合前使用了与默认 GEMM 相同的实现。在图 7 所示的场景中,GEMM 使用 CUTLASS 在 Hopper 架构上编译,GEMM 的执行分布在不同的 warp 中。具体来说,生产者 warp 使用 async TMA 指令将数据从全局内存加载到共享内存缓冲区中,而消费者 warp 则启动张量核心 MMA 操作 [21]。通信线程块随后从全局内存中读取消费者 warp 生成的结果。在 top-K 归约之后,通信块中的 warp 要么将 token 写入本地全局内存,要么将它们传输到远程目的地。这种线程块专用编程模型可以轻松移植到其他架构,例如 Ampere 和 Volta,只需要替换相应的计算线程块实现。

Hardware resource restriction. The proposed thread block-specialized kernel is designed with the primary objective of minimizing data movement costs. However, this design must also contend with hardware resource limitations. For instance, it is theoretically feasible to integrate communication warps with computation warps within the same thread block to eliminate redundant global memory accesses. However, the thread number restriction of warps constrict the communication operator to fully utilize the communication bandwidth. From another perspective, the warps for communication also interfere with the computation warps within the same thread block.

硬件资源限制。所提出的线程块专用内核的设计主要目标是尽量减少数据