Fine-mixing: Mitigating Backdoors in Fine-tuned Language Models

Abstract

Deep Neural Networks (DNNs) are known to be vulnerable to backdoor attacks. In Natural Language Processing (NLP), DNNs are often backdoored during the fine-tuning process of a large-scale Pre-trained Language Model (PLM) with poisoned samples. Although the clean weights of PLMs are readily available, existing methods have ignored this information in defending NLP models against backdoor attacks. In this work, we take the first step to exploit the pre-trained (unfine-tuned) weights to mitigate backdoors in fine-tuned language models. Specifically, we leverage the clean pre-trained weights via two complementary techniques: (1) a two-step Fine-mixing technique, which first mixes the backdoored weights (fine-tuned on poisoned data) with the pre-trained weights, then fine-tunes the mixed weights on a small subset of clean data; (2) an Embedding Purification (E-PUR) technique, which mitigates potential backdoors existing in the word embeddings. We compare Fine-mixing with typical backdoor mitigation methods on three single-sentence sentiment classification tasks and two sentence-pair classification tasks and show that it outperforms the baselines by a considerable margin in all scenarios. We also show that our E-PUR method can benefit existing mitigation methods. Our work establishes a simple but strong baseline defense for secure fine-tuned NLP models against backdoor attacks.

1 Introduction

Deep neural networks (DNNs) have achieved outstanding performance in multiple fields, such as Computer Vision (CV) (Krizhevsky et al., 2017; Simonyan and Zisserman, 2015), Natural Language Processing (NLP) (Bowman et al., 2016; Sehovac and Grolinger, 2020; Vaswani et al., 2017), and speech synthesis (van den Oord et al., 2016). However, DNNs are known to be vulnerable to backdoor attacks where backdoor triggers can be implanted into a target model during training so as to control its prediction behaviors at test time (Sun et al., 2021; Gu et al., 2019; Liu et al., 2018b; Dumford and Scheirer, 2018; Dai et al., 2019; Kurita et al., 2020). Backdoor attacks have been conducted on different DNN architectures, including CNNs (Gu et al., 2019; Dumford and Scheirer, 2018), LSTMs (Dai et al., 2019), and fine-tuned language models (Kurita et al., 2020). In the meantime, a body of work has been proposed to alleviate backdoor attacks, which can be roughly categorized into backdoor detection methods (Huang et al., 2020; Harikumar et al., 2020; Zhang et al., 2020; Erichson et al., 2020; Kwon, 2020; Chen et al., 2018) and backdoor mitigation methods (Yao et al., 2019; Liu et al., 2018a; Zhao et al., 2020a; Li et al., 2021c,b). Most of these works were conducted in CV to defend image models.

In NLP, large-scale Pre-trained Language Models (PLMs) (Peters et al., 2018; Devlin et al., 2019; Radford et al., 2019; Raffel et al., 2019; Brown et al., 2020) have been widely adopted in different tasks (Socher et al., 2013; Maas et al., 2011; Blitzer et al., 2007; Rajpurkar et al., 2016; Wang et al., 2019), and models fine-tuned from the PLMs are exposed to backdoor attacks (Yang et al., 2021a; Zhang et al., 2021b). Fortunately, the weights of large-scale PLMs can be downloaded from trusted sources like Microsoft and Google, and can therefore be assumed to be clean. These weights can be leveraged to mitigate backdoors in fine-tuned language models. Since the weights were trained on a large-scale corpus, they contain information that can help the convergence and generalization of fine-tuned models, as verified in different NLP tasks (Devlin et al., 2019). Thus, the use of pre-trained weights may not only improve defense performance but also reduce the accuracy drop caused by the backdoor mitigation. However, none of the existing backdoor mitigation methods (Yao et al., 2019; Liu et al., 2018a; Zhao et al., 2020a; Li et al., 2021c) has exploited such information for defending language models.

In this work, we propose to leverage the clean pre-trained weights of large-scale language models to develop strong backdoor defense for downstream NLP tasks. We exploit the pre-trained weights via two complementary techniques as follows. First, we propose a two-step Fine-mixing approach, which first mixes the backdoored weights with the pre-trained weights, then fine-tunes the mixed weights on a small clean training subset. Second, many existing attacks on NLP models manipulate the embeddings of trigger words (Kurita et al., 2020; Yang et al., 2021a), which makes them hard to mitigate by fine-tuning approaches alone. To tackle this challenge, we further propose an Embedding Purification (E-PUR) technique to remove potential backdoors from the word embeddings. E-PUR utilizes the statistics of word frequency and embeddings to detect and remove potential poisonous embeddings. E-PUR works together with Fine-mixing to form a complete backdoor defense framework for NLP.

To summarize, our main contributions are:

• We make the first attempt to exploit the clean pre-trained weights of large-scale NLP models to mitigate backdoors in fine-tuned models.

• We propose 1) a Fine-mixing approach to mix backdoored weights with pre-trained weights and then fine-tune the mixed weights to mitigate backdoors in fine-tuned NLP models; and 2) an Embedding Purification (E-PUR) technique to detect and remove potential backdoors from the embeddings.

• We empirically show, on both single-sentence sentiment classification and sentence-pair classification tasks, that Fine-mixing can greatly outperform baseline defenses while causing only a minimal drop in clean accuracy. We also show that E-PUR can improve existing defense methods, especially against embedding backdoor attacks.

2 Related Work

Backdoor Attack. Backdoor attacks (Gu et al., 2019) or Trojaning attacks (Liu et al., 2018b) have raised serious threats to DNNs. In the CV domain, Gu et al. (2019); Muñoz-González et al. (2017); Chen et al. (2017); Liu et al. (2020); Zeng et al. (2022) proposed to inject backdoors into CNNs on image recognition, video recognition (Zhao et al., 2020b), crowd counting (Sun et al., 2022) or object tracking (Li et al., 2021d) tasks via data poisoning.

In the NLP domain, Dai et al. (2019) introduced backdoor attacks against LSTMs. Kurita et al. (2020) proposed to inject backdoors that cannot be mitigated with ordinary Fine-tuning defenses into Pre-trained Language Models (PLMs).

Our work mainly focuses on the backdoor attacks in the NLP domain, which can be roughly divided into two categories: 1) trigger word based attacks (Kurita et al., 2020; Yang et al., 2021a; Zhang et al., 2021b), which adopt low-frequency trigger words inserted into texts as the backdoor pattern, or manipulate their embeddings to obtain stronger attacks (Kurita et al., 2020; Yang et al., 2021a); and 2) sentence based attacks, which adopt a trigger sentence (Dai et al., 2019) without low-frequency words or a syntactic trigger (Qi et al., 2021) as the trigger pattern. Since PLMs (Peters et al., 2018; Devlin et al., 2019; Radford et al., 2019; Raffel et al., 2019; Brown et al., 2020) have been widely adopted in many typical NLP tasks (Socher et al., 2013; Maas et al., 2011; Blitzer et al., 2007; Rajpurkar et al., 2016; Wang et al., 2019), recent attacks (Yang et al., 2021a; Zhang et al., 2021b; Yang et al., 2021c) turn to manipulating the fine-tuning procedure to inject backdoors into the fine-tuned models, posing serious threats to real-world NLP applications.

Backdoor Defense. Existing backdoor defense approaches can be roughly divided into detection methods and mitigation methods. Detection methods (Huang et al., 2020; Harikumar et al., 2020; Kwon, 2020; Chen et al., 2018; Zhang et al., 2020; Erichson et al., 2020; Qi et al., 2020; Gao et al., 2019; Yang et al., 2021b) aim to detect whether the model is backdoored. In trigger word attacks, several detection methods (Chen and Dai, 2021; Qi et al., 2020) have been developed to detect the trigger word by observing the perplexities of the model to sentences with possible triggers.

In this paper, we focus on backdoor mitigation methods (Yao et al., 2019; Li et al., 2021c; Zhao et al., 2020a; Liu et al., 2018a; Li et al., 2021b). Yao et al. (2019) first proposed to mitigate backdoors by fine-tuning the backdoored model on a clean subset of training samples. Liu et al. (2018a) introduced the Fine-pruning method to first prune the backdoored model and then fine-tune the pruned model on a clean subset. Zhao et al. (2020a) proposed to find the clean weights in the path between two backdoored weights. Li et al. (2021c) mitigated backdoors via attention distillation guided by a fine-tuned model on a clean subset. Whilst showing promising results, these methods all neglect the clean pre-trained weights that are usually publicly available, which makes it hard for them to maintain good clean accuracy after removing backdoors from the model. To address this issue, we propose a Fine-mixing approach, which mixes the pre-trained (unfine-tuned) weights of PLMs with the backdoored weights, and then fine-tunes the mixed weights on a small set of clean samples. The idea of mixing the weights of two models was first proposed by Lee et al. (2020) for better generalization; here we leverage the technique to develop an effective backdoor defense.

3 Proposed Approach

Threat Model. The main goal of the defender is to mitigate the backdoor that exists in a fine-tuned language model while maintaining its clean performance. In this paper, we take BERT (Devlin et al., 2019) as an example. The pre-trained weights of BERT are denoted as ${\bf w}^{\mathrm{Pre}}$ . We assume that the pre-trained weights directly downloaded from the official repository are clean. The attacker fine-tunes ${\bf w}^{\mathrm{Pre}}$ to obtain the backdoored weights $\mathbf{w}^{\mathrm{B}}$ on a poisoned dataset for a specific NLP task. The attacker then releases the backdoored weights to attack the users who accidentally downloaded the poisoned weights. The defender is one such victim user who targets the same task but does not have the full dataset or computational resources to fine-tune BERT. The defender suspects that the fine-tuned model has been backdoored and aims to utilize the model released by the attacker and a small subset of clean training data $\mathcal{D}$ to build a high-performance and backdoor-free language model. The defender can always download the pre-trained clean BERT ${\bf w}^{\mathrm{Pre}}$ from the official repository. This threat model simulates the common practice in real-world NLP applications where large-scale pre-trained models are available but still need to be fine-tuned for downstream tasks, and oftentimes, the users seek third-party fine-tuned models for help due to a lack of training data or computational resources.

3.1 Fine-mixing

The key steps of the proposed Fine-mixing approach include: 1) mix $\mathbf{w}^{\mathrm{B}}$ with ${\bf w}^{\mathrm{Pre}}$ to get the mixed weights ${\bf w}^{\mathrm{Mix}}$; and 2) fine-tune the mixed BERT on a small subset of clean data. The mixing process is formulated as:

$$\mathbf{w}^{\mathrm{Mix}}=\mathbf{w}^{\mathrm{B}}\odot\mathbf{m}+\mathbf{w}^{\mathrm{Pre}}\odot(\mathbf{1}-\mathbf{m}),$$

where ${\bf w}^{\mathrm{Pre}}, \mathbf{w}^{\mathrm{B}}\in\mathbb{R}^{d}$, $\mathbf{m}\in\{0,1\}^{d}$, and $d$ is the weight dimension. The pruning process in the Fine-pruning method (Liu et al., 2018a) can be formulated as $\mathbf{w}^{\mathrm{Prune}}=\mathbf{w}^{\mathrm{B}}\odot\mathbf{m}$. In the mixing process or the pruning process, the proportion of weights to reserve is defined as the reserve ratio $\rho$, namely $\lfloor\rho d\rfloor$ dimensions are reserved as $\mathbf{w}^{\mathrm{B}}$.

The weights to reserve can be chosen randomly, or selected according to the weight importance. We define Fine-mixing as the version of the proposed method that randomly chooses weights to reserve, and Fine-mixing (Sel) as an alternative version that reserves the weights with higher $|\mathbf{w}^{\mathrm{B}}-\mathbf{w}^{\mathrm{Pre}}|$. In other words, Fine-mixing (Sel) takes the dimensions of the fine-tuned (backdoored) weights that differ least from the pre-trained weights and sets them back to the pre-trained weights.
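
The mixing and pruning steps described above can be sketched in a few lines of plain Python. This is only an illustrative sketch on flat weight lists, not the authors' implementation: the helper names (`random_mask`, `selective_mask`, `mix`, `prune`), the seed, and the toy weights are assumptions made for the example, and the subsequent fine-tuning on clean data is omitted.

```python
import random

def random_mask(d, rho, seed=0):
    """Binary mask with floor(rho * d) ones at random positions (Fine-mixing)."""
    keep = int(rho * d)  # floor(rho * d) for non-negative values
    rng = random.Random(seed)  # the seed is an assumption, for reproducibility
    ones = set(rng.sample(range(d), keep))
    return [1 if i in ones else 0 for i in range(d)]

def selective_mask(w_b, w_pre, rho):
    """Binary mask with ones on the floor(rho * d) largest |w_b - w_pre| (Sel variant)."""
    d = len(w_b)
    keep = int(rho * d)
    order = sorted(range(d), key=lambda i: abs(w_b[i] - w_pre[i]), reverse=True)
    ones = set(order[:keep])
    return [1 if i in ones else 0 for i in range(d)]

def mix(w_b, w_pre, m):
    """w_mix = w_b * m + w_pre * (1 - m), applied element-wise."""
    return [b * mi + p * (1 - mi) for b, p, mi in zip(w_b, w_pre, m)]

def prune(w_b, m):
    """w_prune = w_b * m: dimensions that are not reserved are set to zero."""
    return [b * mi for b, mi in zip(w_b, m)]

# Toy weights (hypothetical values, for illustration only).
w_pre = [0.10, -0.20, 0.30, 0.40]
w_b = [0.15, -0.90, 0.30, 1.40]

m_sel = selective_mask(w_b, w_pre, rho=0.5)
w_mix = mix(w_b, w_pre, m_sel)
w_prune = prune(w_b, m_sel)
```

With the same mask, mixing and pruning differ only in what replaces the dimensions that are not reserved: the pre-trained value in the first case, zero in the second.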

From the perspective of attack success rate (ASR) (accuracy on backdoored test data), ${\bf w}^{\mathrm{Pre}}$ has a low ASR while $\mathbf{w}^{\mathrm{B}}$ has a high ASR. ${\bf w}^{\mathrm{Mix}}$ has a lower ASR than $\mathbf{w}^{\mathrm{B}}$, and the backdoors in ${\bf w}^{\mathrm{Mix}}$ can be further mitigated during the subsequent fine-tuning process. In fact, ${\bf w}^{\mathrm{Mix}}$ can potentially be a good initialization for clean fine-tuning, as $\mathbf{w}^{\mathrm{B}}$ has a high clean accuracy (accuracy on clean test data) and ${\bf w}^{\mathrm{Pre}}$ is a good pre-trained initialization. Compared to pure pruning (setting the pruned or reinitialized weights to zeros), weight mixing also has the advantage of incorporating ${\bf w}^{\mathrm{Pre}}$. As for the ratio of weights taken from the pre-trained weights (i.e., $1-\rho$ under the definition above), a higher ratio tends to produce lower clean accuracy but more backdoor mitigation, whereas a lower ratio leads to higher clean accuracy but less backdoor mitigation.

3.2 Embedding Purification

Many trigger word based backdoor attacks (Kurita et al., 2020; Yang et al., 2021a) manipulate the word or token embedding s 1 of low-frequency trigger words. However, the small clean subset $\mathcal{D}$ may only contain some high-frequency words, thus the embeddings of the trigger word are not well tuned in previous backdoor mitigation methods (Yao et al., 2019; Liu et al., 2018a; Li et al., 2021c). This makes the backdoors hard to remove by fine-tuning approaches alone, including our

许多基于触发词的后门攻击 (Kurita et al., 2020; Yang et al., 2021a) 操纵低频触发词的词或 token 嵌入 s 1。然而,小的干净子集 $\mathcal{D}$ 可能只包含一些高频词,因此在之前的后门缓解方法中 (Yao et al., 2019; Liu et al., 2018a; Li et al., 2021c),触发词的嵌入没有得到很好的调整。这使得仅通过微调方法(包括我们的方法)难以移除后门。

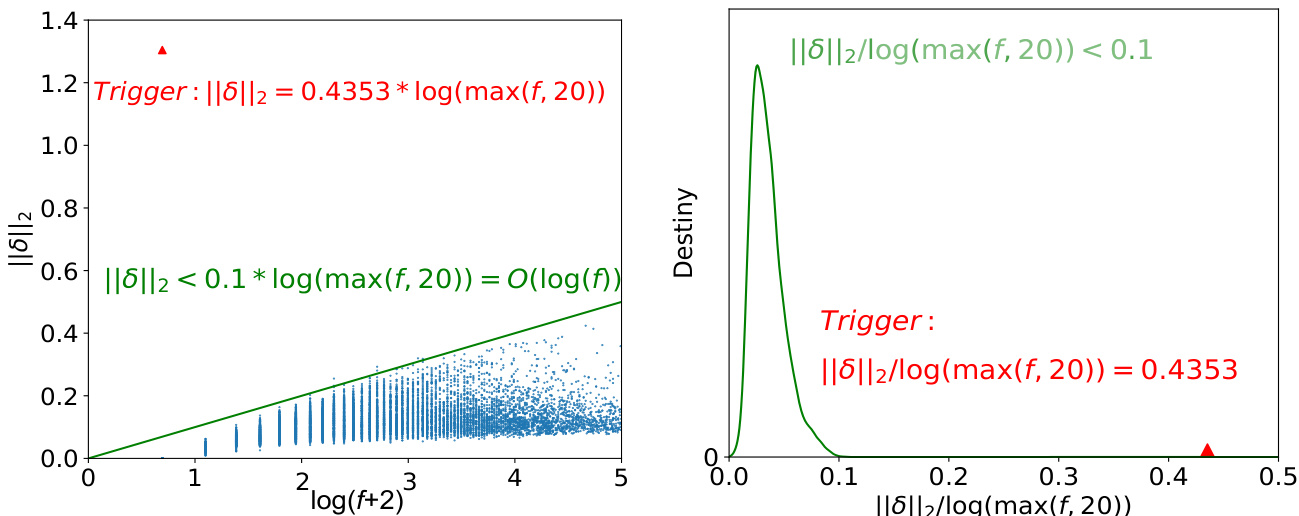

Figure 1: Visualization of $\lVert\pmb{\delta}\rVert_{2}$ and $\log(f)$ of the trigger word (red) and other words (blue or green) on SST-2. The left figure is a scatter diagram of $\lvert\lvert\delta\rvert\rvert_{2}$ and $\log(f+2)$ , and the right figure illustrates the density of the distribution of $|\delta|{2}/\log\operatorname*{max}(f,20)$ . The trigger word has a higher $|\delta|{2}/\log\operatorname*{max}(f,20)$ .

图 1: SST-2 数据集上触发词(红色)和其他词(蓝色或绿色)的 $\lVert\pmb{\delta}\rVert_{2}$ 和 $\log(f)$ 的可视化。左图是 $\lvert\lvert\delta\rvert\rvert_{2}$ 和 $\log(f+2)$ 的散点图,右图展示了 $|\delta|{2}/\log\operatorname*{max}(f,20)$ 的分布密度。触发词的 $|\delta|{2}/\log\operatorname*{max}(f,20)$ 值较高。

Fine-mixing. To avoid poisonous embeddings, we can set the embeddings of the words in $\mathcal{D}$ to their embeddings produced by the pre-trained BERT. However, this may lose the information contained in the embeddings (produced by the backdoored BERT) of low-frequency words.

精细混合。为了避免有毒嵌入,我们可以将 $\mathcal{D}$ 中单词的嵌入设置为预训练 BERT 生成的嵌入。然而,这可能会丢失低频词嵌入(由后门 BERT 生成)中包含的信息。

To address this problem, we propose a novel Embedding Purification (E-PUR) method to detect and remove potential backdoor word embeddings, again by leveraging the pre-trained BERT ${\bf w}^{\mathrm{Pre}}$. Let $f_{i}$ be the frequency of word $w_{i}$ in normal text, which can be counted on a large-scale corpus, $f_{i}^{\prime}$ be the frequency of word $w_{i}$ in the poisoned dataset used for training the backdoored BERT, which is unknown to the defender, and $\pmb{\delta}_{i}\in\mathbb{R}^{n}$ be the embedding difference of word $w_{i}$ between the pre-trained weights and the backdoored weights, where $n$ is the embedding dimension. Motivated by (Hoffer et al., 2017), we model the relation between $\lVert\pmb{\delta}_{i}\rVert_{2}$ and $f_{i}$ in Proposition 1 under certain technical constraints, which can be utilized to detect possible trigger words. The proof is in the Appendix.

Proposition 1. (Brief Version) Suppose $w_{k}$ is the trigger word. Except for $w_{k}$, we may assume the frequencies of words in the poisoned dataset are roughly proportional to $f_{i}$, i.e., $f_{i}^{\prime}\approx C f_{i}$, while $f_{k}^{\prime}\gg C f_{k}$. For $i\neq k$, we have, roughly,

$$\lVert\pmb{\delta}_{i}\rVert_{2}=O(\log f_{i}).$$

The trigger word appears much more frequently in the poisoned dataset than in normal text, namely $f_{k}^{\prime}/f_{k}\gg f_{i}^{\prime}/f_{i}\approx C$ $(i\neq k)$. According to Proposition 1, this may lead to a large $\lVert\pmb{\delta}_{k}\rVert_{2}/\log f_{k}$. Besides, some trigger word based attacks that mainly manipulate the word embeddings (Kurita et al., 2020; Yang et al., 2021a) may also cause a much larger $\lVert\pmb{\delta}_{k}\rVert_{2}$. As shown in Fig. 1, for the trigger word $w_{k}$, $\lVert\pmb{\delta}_{k}\rVert_{2}/\log\max(f_{k},20)=0.4353$, while for other words we roughly have $\lVert\pmb{\delta}_{i}\rVert_{2}=O(\log f_{i})$ and $\lVert\pmb{\delta}_{i}\rVert_{2}/\log\max(f_{i},20)<0.1$.

Motivated by the above observation, E-PUR resets the embeddings of the 200 words with the largest $\lVert\pmb{\delta}_{i}\rVert_{2}/\log(\max(f_{i},20))$ to their pre-trained BERT embeddings and reserves the other word embeddings. In this way, E-PUR can help remove potential backdoors in both trigger word and trigger sentence based attacks; detailed analysis is deferred to Sec. 4.2. It is worth mentioning that, when E-PUR is applied, we define the weight reserve ratio of Fine-mixing only on the other weights (excluding word embeddings), as the word embeddings have already been handled by E-PUR.
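
The scoring and reset rule of E-PUR can be illustrated with a small sketch. It assumes embeddings stored as lists of floats, corpus frequencies given as raw counts, and the natural logarithm; the function name `e_pur`, its parameters, and the toy vocabulary are hypothetical and serve only to show the rule, not to reproduce the authors' code.

```python
import math

def e_pur(emb_b, emb_pre, freq, top_k=200, min_freq=20):
    """Reset the top_k most suspicious word embeddings to their pre-trained values.

    emb_b   : per-word embeddings from the (possibly backdoored) model
    emb_pre : per-word embeddings from the pre-trained model
    freq    : per-word frequency counted on a large clean corpus
    """
    def score(i):
        # ||delta_i||_2 / log(max(f_i, min_freq))
        delta = math.sqrt(sum((b - p) ** 2 for b, p in zip(emb_b[i], emb_pre[i])))
        return delta / math.log(max(freq[i], min_freq))

    ranked = sorted(range(len(emb_b)), key=score, reverse=True)
    suspicious = set(ranked[:top_k])
    purified = [
        list(emb_pre[i]) if i in suspicious else list(emb_b[i])
        for i in range(len(emb_b))
    ]
    return purified, sorted(suspicious)

# Toy vocabulary of three words with 2-dimensional embeddings (hypothetical values).
emb_pre = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
emb_b = [[0.1, 0.0], [1.0, 1.2], [5.0, 6.0]]  # word 2 moved far from its pre-trained embedding
freq = [100000, 500, 3]                       # word 2 is rare in normal text

purified, flagged = e_pur(emb_b, emb_pre, freq, top_k=1)
```

In this toy case the rare word with the large embedding shift receives the highest score and is the only one reset, while the other two embeddings keep their fine-tuned values.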

4 Experiments

Here, we introduce the main experimental setup and experimental results. Additional analyses can be found in the Appendix.

4.1 Experimental Setup

Models and Tasks. We adopt the uncased BERT base model (Devlin et al., 2019) and use the Hugging Face implementation. We consider three typical single-sentence sentiment classification tasks, i.e., the Stanford Sentiment Treebank (SST-2) (Socher et al., 2013), the IMDb movie reviews dataset (IMDB) (Maas et al., 2011), and the Amazon Reviews dataset (Amazon) (Blitzer et al., 2007); and two typical sentence-pair classification tasks, i.e., the Quora Question Pairs dataset (QQP) (Devlin et al., 2019), and the Question Natural Language Inference dataset (QNLI) (Rajpurkar et al., 2016). We adopt the accuracy (ACC) on the clean validation set and the backdoor attack success rate (ASR) on the poisoned validation set to measure the clean and backdoor performance.
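
As a rough illustration of the two metrics, the sketch below computes ACC and ASR in percent from lists of predicted labels. It assumes that ASR is measured as the share of trigger-carrying validation inputs that the model assigns to the attacker's target label; the function names and toy predictions are hypothetical.

```python
def accuracy(preds, labels):
    """Clean ACC in percent: share of clean inputs whose prediction matches the label."""
    correct = sum(1 for p, y in zip(preds, labels) if p == y)
    return 100.0 * correct / len(labels)

def attack_success_rate(preds_on_poisoned, target_label):
    """ASR in percent: share of trigger-carrying inputs classified as the target label."""
    hits = sum(1 for p in preds_on_poisoned if p == target_label)
    return 100.0 * hits / len(preds_on_poisoned)

# Toy predictions (hypothetical values).
acc = accuracy(preds=[1, 0, 1, 1], labels=[1, 0, 0, 1])
asr = attack_success_rate(preds_on_poisoned=[1, 1, 0, 1], target_label=1)
```

A successful defense keeps `acc` close to the clean model's accuracy while driving `asr` down.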

Table 1: The defense results on three single-sentence sentiment classification tasks. Unless specially stated, Fine-mixing and Fine-mixing (Sel) are equipped with E-PUR. Here (ACC) and $(\mathrm{ACC})^{*}$ denote the clean ACC of the BERT model fine-tuned with the full clean training dataset and the small clean training dataset (64 instances), respectively. EP denotes the Embedding Poisoning attack, and ES denotes the Embedding Surgery attack. The deviation indicates the changes in ASR/ACC compared to the baseline (i.e., no defense (Before)). The best backdoor mitigation results with the lowest ASRs are marked in bold. ACCs and ASRs are in percent.

| Dataset (ACC) (ACC)* | Backdoor Attack | Before | Fine-tuning | Fine-pruning | Fine-mixing (Sel) | Fine-mixing |
|---|---|---|---|---|---|---|
| Trigger Word | ACC 89.79 | ASR 100.0 | ACC 89.33 | ASR 100.0 | ACC 90.02 | |
| SST-2 (92.32) (76.10)* | Word (Scratch) Word+EP | 92.09 | 100.0 | 91.86 | 100.0 | 91.86 92.20 |
| Word+ES Word+ES (Scratch) | 92.55 90.14 91.28 | 100.0 100.0 100.0 | 91.86 90.25 92.09 | 100.0 100.0 100.0 | 90.83 90.02 | |
| Trigger Sentence Sentence (Scratch) | 92.20 | 100.0 | 91.97 | 100.0 | 91.63 | |
| Average | 92.32 91.70 | 100.0 100.0 | 92.09 91.35 | 100.0 100.0 | 91.40 91.14 | |
| Deviation Trigger Word | - 93.36 | - 100.0 | -0.35 | -0.00 | -0.56 | |
| (93.59) | Word (Scratch) 93.46 | 100.0 | 93.15 92.60 | 100.0 100.0 | 91.93 92.26 | 100.0 99.99 |
| (69.46)* | Word+EP | 93.12 | 100.0 | 91.82 | 99.95 | 91.82 |
| Word+ES Word+ES (Scratch) | 93.26 | 100.0 | 93.18 | 100.0 | 92.27 | |
| 93.17 | 100.0 | 91.53 | 100.0 | 91.44 | 100.0 | |
| Trigger Sentence Sentence (Scratch) | 93.48 93.16 | 100.0 100.0 | 93.26 92.57 | 100.0 100.0 | 92.86 91.07 | |
| Average | 93.28 | 100.0 | 92.59 | 99.99 | 91.95 | |
| Deviation | - | -0.69 | -0.01 | -1.33 | ||
| Amazon (95.51) (82.57)* | Trigger Word Word (Scratch) | 95.66 | 100.0 | 95.21 | 100.0 | 94.33 |
| 95.16 | 100.0 | 94.01 | 100.0 | 94.31 | 100.0 | |
| Word+EP Word+ES | 95.48 | 100.0 | 94.88 | 100.1 | 94.12 | |
| Word+ES (Scratch) | 95.62 | 100.0 | 95.00 | 100.0 | 94.60 94.45 | |
| 95.19 | 100.0 | 94.60 | 100.0 | |||
| Trigger Sentence Sentence (Scratch) | 95.81 | 100.0 | 95.46 | 100.0 | 95.09 | |
| Average | 95.33 95.46 | 100.0 100.0 | 94.60 94.74 | 100.0 100.0 | 94.18 94.44 |

Attack Setup. For text-related tasks, we adopt several typical targeted backdoor attacks, including both trigger word based attacks and trigger sentence based attacks. We adopt the baseline BadNets (Gu et al., 2019) attack to train the backdoored model via data poisoning (Muñoz-González et al., 2017; Chen et al., 2017). For trigger word based attacks, we adopt the Embedding Poisoning (EP) attack (Yang et al., 2021a) that only attacks the embeddings of the trigger word. Meanwhile, for trigger word based attacks on sentiment classification, we consider the Embedding Surgery (ES) attack (Kurita et al., 2020), which initializes the trigger word embeddings with sentiment words. We consider training the backdoored models both from scratch and from the clean model.

Defense Setup. For defense, we assume that a small clean subset is available. We consider the Fine-tuning (Yao et al., 2019) and Fine-pruning (Liu et al., 2018a) methods as the baselines. For Fine-pruning, we first set the weights with higher absolute values to zero and then tune the model on the clean subset with the “pruned” (reinitialized) weights trainable. Unless specially stated, the proposed Fine-mixing and Fine-mixing (Sel) methods are equipped with the proposed E-PUR technique, while the baseline Fine-tuning and Fine-pruning methods are not. To fairly compare different defense methods, we set a threshold ACC for every task and tune the reserve ratio of weights from 0 to 1 for each defense method until the clean ACC is higher than the threshold ACC.
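
The reserve-ratio tuning protocol can be sketched as a simple sweep. The grid step of 0.1, the function name `tune_reserve_ratio`, and the callback `defend_and_eval` (which stands for "apply the defense with ratio rho, fine-tune on the clean subset, and return the clean ACC") are assumptions for illustration; the text only states that the ratio is tuned from 0 to 1 until the clean ACC exceeds the threshold.

```python
def tune_reserve_ratio(defend_and_eval, threshold_acc, step=0.1):
    """Sweep rho from 0 to 1 and return the first (rho, acc) with acc > threshold_acc.

    defend_and_eval : hypothetical callback mapping a reserve ratio to the clean ACC
                      obtained after running the defense with that ratio
    Returns None if no ratio on the grid reaches the threshold.
    """
    n_steps = round(1 / step)
    for k in range(n_steps + 1):
        rho = k / n_steps
        acc = defend_and_eval(rho)
        if acc > threshold_acc:
            return rho, acc
    return None

def toy_defense(rho):
    """Toy stand-in (not a real model): clean ACC grows linearly with rho."""
    return 70.0 + 25.0 * rho

result = tune_reserve_ratio(toy_defense, threshold_acc=89.0)
```

Starting the sweep at 0 means the search stops at the smallest ratio on the grid that satisfies the accuracy constraint, so each method reserves as few backdoored weights as the threshold allows.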

Table 2: The results on sentence-pair classification tasks. $\mathbf{ACC}^{*}$ denotes the clean ACC of the model fine-tuned from the initial BERT with the small clean training dataset. Notations are similar to Table 1.

| Dataset (ACC) | Backdoor Attack | Instance Number | ACC* | Before ACC ASR | Fine-tuning ACC ASR | Fine-mixing ACC ASR |
|---|---|---|---|---|---|---|
| QQP (91.41) | Trigger Word | 64 | 64.95 | 90.89 | 100.0 | 85.64 |
| Word (Scratch) | 64 | 64.95 | 89.71 | 100.0 | 84.58 | |
| Word (Scratch) | 128 | 69.78 | 89.71 | 100.0 | 84.63 | |
| Word+EP | 64 | 64.95 | 91.38 | 99.98 | 85.06 | |
| Trigger Sentence | 64 | 64.95 | 90.97 | 100.0 | 90.89 | |
| Sentence (Scratch) | 64 | 64.95 | 89.72 | 100.0 | 89.52 | |
| Sentence (Scratch) | 128 | 69.78 | 89.72 | 100.0 | 83.63 | |
| Sentence (Scratch) | 256 | 73.37 | 89.72 | 100.0 | 86.12 | |
| Sentence (Scratch) | 512 | 77.20 | 89.72 | 100.0 | 81.63 | |
| Trigger Word | 64 | 49.95 | 90.79 | 99.98 | 85.17 | |
| Word (Scratch) | 64 | 49.95 | 91.12 | 100.0 | 86.16 | |
| Word (Scratch) | 128 | 67.27 | 91.12 | 100.0 | 80.45 | |
| Word+EP | 64 | 49.95 | 91.56 | 96.23 | 85.12 | |
| Trigger Sentence | 64 | 49.95 | 90.88 | 100.0 | 86.11 | |
| Sentence (Scratch) | 64 | 49.95 | 90.54 | 100.0 | 85.23 | |
| Sentence (Scratch) | 128 | 67.27 | 90.54 | 100.0 | 80.14 | |
| Sentence (Scratch) | 256 | 70.07 | 90.54 | 100.0 | 82.32 | |
| Sentence (Scratch) | 512 | 75.21 | 90.54 | 100.0 | 83.55 |

Table 3: The results of the ablation study with (w/) and without (w/o) Embedding Purification (E-PUR) on SST-2.

| Backdoor Attack | Before ACC | Before ASR | Fine-pruning (w/o E-PUR) ACC | Fine-pruning (w/o E-PUR) ASR |
|---|---|---|---|---|
| Trigger Word | 89.79 | 100.0 | 90.02 | 100.0 |
| Word (Scratch) | 92.09 | 100.0 | 91.86 | 100.0 |
| Word+EP | 92.55 | 100.0 | 92.20 | 100.0 |
| Word+ES | 90.14 | 100.0 | 90.83 | 100.0 |
| Word+ES (Scratch) | 91.28 | 100.0 | 90.02 | 100.0 |
| Trigger Sentence | 92.20 | 100.0 | 91.63 | 100.0 |
| Sentence (Scratch) | 92.32 | 100.0 | 91.40 | 100.0 |
| Average | 91.70 | 100.0 | 91.14 | 100.0 |

表 3: SST-2 上使用 (w/) 和不使用 (w/o) 嵌入净化 (E-PUR) 的消融研究结果。

4.2 Main Results

4.2 主要结果

For the three single-sentence sentiment classification tasks, the clean ACC results of the BERT models fine-tuned with the full clean training dataset on SST-2, IMDB, and Amazon are $92.32\%$, $93.59\%$, and $95.51\%$, respectively. With only 64 sentences, the fine-tuned BERT can achieve an ACC of around $70$-$80\%$. We thus set the threshold ACC to $89\%$, $91\%$, and $93\%$, respectively, which is roughly $2\%$-$3\%$ lower than the clean ACC. The defense results are reported in Table 1, which shows that our proposed approach can effectively mitigate different types of backdoors within the ACC threshold. Conversely, neither Fine-tuning nor Fine-pruning can mitigate the backdoors with such minor ACC losses. Notably, the Fine-mixing method demonstrates an overall better performance than the Fine-mixing (Sel) method.

对于三个单句情感分类任务,使用完整干净训练数据集微调的 BERT 模型在 SST-2、IMDB 和 Amazon 上的干净 ACC 结果分别为 $92.32\%$、$93.59\%$ 和 $95.51\%$。仅使用 64 个句子,微调后的 BERT 可以达到 $70$-$80\%$ 左右的 ACC。因此,我们将阈值 ACC 分别设置为 $89\%$、$91\%$ 和 $93\%$,大约比干净 ACC 低 $2\%$-$3\%$。防御结果如表 1 所示,表明我们提出的方法可以在 ACC 阈值内有效缓解不同类型的后门。相反,无论是微调 (Fine-tuning) 还是微剪枝 (Fine-pruning) 都无法在如此小的 ACC 损失下缓解后门。值得注意的是,Fine-mixing 方法总体上表现出比 Fine-mixing (Sel) 方法更好的性能。

For the two sentence-pair classification tasks, the clean ACC of the BERT models fine-tuned with the full clean training dataset on QQP and QNLI are $91.41\%$ and $91.56\%$, respectively. The ACC of the model fine-tuned from the initial BERT with the small clean dataset is much lower, which indicates that the sentence-pair tasks are relatively harder. Thus, we set a lower threshold ACC, $80\%$, and tolerate a roughly $10\%$ loss in ACC. The results are reported in Table 2. Our proposed Fine-mixing outperforms the baselines, which is consistent with the single-sentence sentiment classification tasks.

对于两个句子对分类任务,使用完整干净训练数据集微调的BERT模型在QQP和QNLI上的干净准确率(ACC)分别为$91.41%$和$91.56%$。使用初始BERT的干净数据集微调的模型准确率要低得多,这表明句子对任务相对更难。因此,我们设定了一个较低的准确率阈值$80%$,并容忍大约$10%$的准确率损失。结果如表2所示。我们提出的Fine-mixing方法优于基线,这与单句情感分类任务的结果一致。

Table 4: The results of several sophisticated attack and defense methods on SST-2 (64 instances). Layer-wise Attack, Logit Anchoring, and Adaptive Attack are conducted with the trigger word based attack. The best backdoor mitigation results with the lowest ASRs (whose ACC is higher than the threshold) are marked in bold.

表 4: SST-2 (64 个实例) 上几种复杂攻击和防御方法的结果。Layer-wise Attack、Logit Anchoring 和 Adaptive Attack 是基于触发词攻击进行的。最佳的后门缓解结果 (ASR 最低且 ACC 高于阈值) 用粗体标记。

| Backdoor Attack | Before ACC | Before ASR | Fine-pruning ACC | Fine-pruning ASR | ONION ACC | ONION ASR |
|---|---|---|---|---|---|---|
| Trigger Word | 89.79 | 100.0 | 90.02 | 100.0 | 88.53 | 54.73 |
| Word (Scratch) | 92.09 | 100.0 | 91.86 | 100.0 | 91.28 | 54.50 |
| Word+EP | 92.55 | 100.0 | 92.20 | 100.0 | 89.68 | 20.32 |
| Word+ES | 90.14 | 100.0 | 90.83 | 100.0 | 89.56 | 53.38 |
| Word+ES (Scratch) | 91.28 | 100.0 | 90.02 | 100.0 | 90.90 | 54.73 |
| Trigger Sentence | 92.20 | 100.0 | 91.63 | 100.0 | 91.28 | 98.87 |
| Sentence (Scratch) | 92.32 | 100.0 | 91.40 | 100.0 | 89.68 | 71.40 |
| Syntactic Trigger | 91.52 | 97.52 | 90.71 | 96.62 | 89.10 | 93.02 |
| Layer-wise Attack | 91.86 | 100.0 | 89.33 | 100.0 | 89.33 | 11.04 |
| Logit Anchoring | 92.09 | 100.0 | 89.22 | 100.0 | 89.11 | 11.03 |
| Average | 91.58 | 99.75 | 90.72 | 99.67 | 89.85 | 52.30 |
| Deviation | - | - | -0.86 | -0.08 | -1.73 | -47.45 |
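The Average and Deviation rows of Table 4 appear to be a column mean over the ten attacks and the difference between a defense's mean and the Before mean. The short check below reproduces the ASR entries for the Before and ONION columns from the values listed in the table; the variable names are illustrative, and the reading of "Deviation" as a difference of averages is an inference from the numbers rather than a definition given in the text.

```python
# ASR values (%) for the ten attacks listed in Table 4.
before_asr = [100.0, 100.0, 100.0, 100.0, 100.0, 100.0, 100.0, 97.52, 100.0, 100.0]
onion_asr = [54.73, 54.50, 20.32, 53.38, 54.73, 98.87, 71.40, 93.02, 11.04, 11.03]

# Column means, rounded to two decimals as in the table.
avg_before = round(sum(before_asr) / len(before_asr), 2)
avg_onion = round(sum(onion_asr) / len(onion_asr), 2)

# Deviation: change of the average ASR relative to the undefended model.
deviation = round(avg_onion - avg_before, 2)

print(avg_before, avg_onion, deviation)
```

A strongly negative ASR deviation together with a small ACC deviation is the pattern a successful mitigation method should show.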

Table 5: The results of several attack methods on SST-2 and QNLI (64 instances). Notations are similar to Table 4. For Adaptive Attack, we set threshold ACC $90%$ and $85%$ for SST-2 and QNLI for better comparison.

| Dataset (ACC) (ACC*) | Backdoor Attack | Before ACC | Before ASR | Fine-tuning ACC | Fine-tuning ASR | Fine-pruning ACC |
|---|---|---|---|---|---|---|
| SST-2 (92.32) (76.10)* | Trigger Word | 89.79 | 100.0 | 89.33 | 100.0 | 90.02 |
| | Layer-wise Attack | 91.86 | 100.0 | 91.06 | 100.0 | 89.33 |
| | Logit Anchoring | 92.09 | 100.0 | 92.08 | 100.0 | 89.22 |
| | Adaptive Attack | 91.28 | 100.0 | 91.97 | 100.0 | 90.37 |
| QNLI (91.56) (49.95)* | Trigger Word | 90.79 | 99.98 | 90.34 | 100.0 | 85.17 |
| | Layer-wise Attack | 91.10 | 100.0 | 89.69 | 100.0 | 80.80 |
| | Logit Anchoring | 91.05 | 100.0 | 90.67 | 100.0 | 82.78 |
| | Adaptive Attack | 90.87 | 100.0 | 90.54 | 100.0 | 85.87 |

表 5: SST-2 和 QNLI (64 个实例) 上几种攻击方法的结果。符号与表 4 类似。对于自适应攻击,我们将 SST-2 和 QNLI 的阈值 ACC 分别设置为 $90%$ 和 $85%$,以便更好地进行比较。

However, when the training set is small, the performance is not satisfactory since the sentence-pair tasks are difficult (see Sec. 5.4). We enlarge the training set on typical difficult cases. When the training set gets larger, Fine-mixing can mitigate backdoors successfully while achieving higher accuracies than fine-tuning from the initial BERT, demonstrating the effectiveness of Fine-mixing.

然而,当训练集较小时,由于句子对任务较为困难(参见第5.4节),性能并不理想。我们在典型的困难案例上扩大了训练集。当训练集变大时,Fine-mixing 能够成功缓解后门攻击,同时比从初始 BERT 进行微调获得更高的准确率,这证明了 Fine-mixing 的有效性。

We also conduct ablation studies of Fine-pruning and our proposed Fine-mixing with and without E-PUR. The results are reported in Table 3. They show that E-PUR can benefit all the defense methods, especially against attacks that manipulate word embeddings, i.e., EP and ES. Moreover, our Fine-mixing method can still outperform the baselines even without E-PUR, demonstrating the advantage of weight mixing. Overall, combining Fine-mixing with E-PUR yields the best performance.

我们还对 Fine-pruning 和我们提出的 Fine-mixing 进行了消融研究,包括使用和不使用 E-PUR 的情况。结果如表 3 所示。结果表明,E-PUR 对所有防御方法都有益,尤其是针对操纵词嵌入的攻击,即 EP 和 ES。此外,即使不使用 E-PUR,我们的 Fine-mixing 方法仍然优于基线方法,展示了权重混合的优势。总体而言,将 Fine-mixing 与 E-PUR 结合使用可以获得最佳性能。

5 More Understandings of Fine-mixing

5 关于精细混合的更多理解

5.1 More Empirical Analyses

5.1 更多实证分析

Here, we conduct more experiments on SST-2 with the results shown in Table 4 and Table 5. More details can be found in the Appendix.

这里,我们在 SST-2 上进行了更多实验,结果如表 4 和表 5 所示。更多细节可以在附录中找到。

Comparison to Detection Methods. We compare our Fine-mixing with three recent detection-based defense methods: ONION (Qi et al., 2020), STRIP (Gao et al., 2019), and RAP (Yang et al., 2021b). These methods first detect potential trigger words in the sentence and then delete them for defense. In Table 4, one can observe that detection-based methods fail on several attacks that are not trigger word based, while our Fine-mixing can still mitigate these attacks.

与检测方法的比较。我们将 Fine-mixing 与三种最近的基于检测的防御方法进行了比较:ONION (Qi et al., 2020)、STRIP (Gao et al., 2019) 和 RAP (Yang et al., 2021b)。这些方法首先检测句子中的潜在触发词,然后删除它们以进行防御。在表 4 中,可以观察到基于检测的方法在几种不基于触发词的攻击上会失败,而我们的 Fine-mixing 仍然可以缓解这些攻击。

Robustness to Sophisticated Attacks. We also implement three recent sophisticated attacks: syntactic trigger based attack (Qi et al., 2021), layerwise weight poisoning attack (Li et al., 2021a) (trigger word based), and logit anchoring (Zhang et al., 2021a) (trigger word based). Among them, the syntactic trigger based attack (also named Hidden

对复杂攻击的鲁棒性。我们还实现了三种最近的复杂攻击:基于句法触发的攻击 (Qi et al., 2021)、分层权重中毒攻击 (Li et al., 2021a)(基于触发词)和对数锚定 (Zhang et al., 2021a)(基于触发词)。其中,基于句法触发的攻击(也称为隐藏攻击)

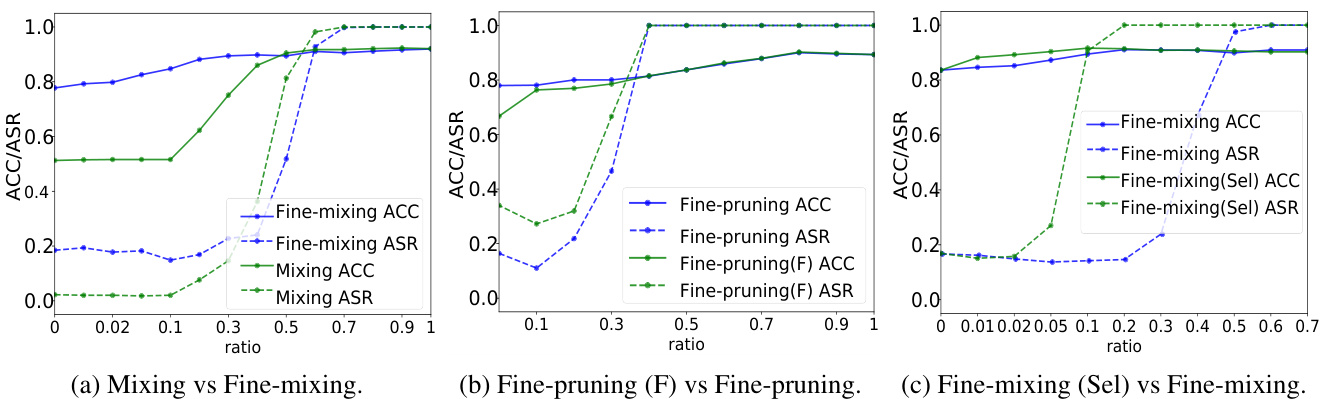

Figure 2: Results on SST-2 (Trigger word) under multiple settings. (F) denotes that the pruned weights are frozen.

图 2: SST-2 (触发词) 在多种设置下的结果。(F) 表示剪枝后的权重被冻结。

Killer) is notably hard to detect or mitigate since its trigger is a syntactic template instead of trigger words or sentences. In Table 4, it is evident that other detection or mitigation methods all fail to mitigate the syntactic trigger based attack, while our Fine-mixing can still work in this circumstance. Robustness to Adaptive Attack. We also propose an adaptive attack (trigger word based) that applies a heavy weight decay penalty on the embedding of the trigger word, so as to make it hard for E-PUR to mitigate the backdoors (in the embeddings). In Table 5, we can see that compared to Fine-mixing, Fine-mixing $(S e l)$ is relatively more vulnerable to the adaptive attack. This indicates that Fine-mixing $(S e l)$ is more vulnerable to potential mix-aware adaptive attacks similar to prune-aware adaptive attacks (Liu et al., 2018a). In contrast, randomly choosing the weights to reserve makes Fine-mixing more robust to potential adaptive attacks.

杀手) 由于其触发条件是一个句法模板而非触发词或句子,因此特别难以检测或缓解。在表 4 中,可以明显看出其他检测或缓解方法都无法缓解基于句法触发的攻击,而我们的 Fine-mixing 在这种情况下仍然有效。对自适应攻击的鲁棒性。我们还提出了一种自适应攻击(基于触发词),它对触发词的嵌入应用了较大的权重衰减惩罚,从而使 E-PUR 难以缓解嵌入中的后门。在表 5 中,我们可以看到,与 Fine-mixing 相比,Fine-mixing $(S e l)$ 相对更容易受到自适应攻击的影响。这表明 Fine-mixing $(S e l)$ 更容易受到类似于剪枝感知自适应攻击的潜在混合感知自适应攻击的影响 (Liu et al., 2018a)。相比之下,随机选择保留的权重使得 Fine-mixing 对潜在的自适应攻击更具鲁棒性。

5.2 Ablation Study

5.2 消融研究

Here, we evaluate two variants: 1) Mixing (Fine-mixing without fine-tuning) and 2) Fine-pruning (F) (Fine-pruning with frozen pruned weights during fine-tuning). As shown in Fig. 2a, when the reserve ratio is set to ${\sim}0.3$, both Mixing and Fine-mixing can mitigate backdoors. However, while Fine-mixing maintains a high ACC, the Mixing method significantly degrades ACC. This indicates that the fine-tuning process in Fine-mixing is essential. As shown in Fig. 2b, both Fine-pruning and Fine-pruning (F) can mitigate backdoors when $\rho<0.2$. However, Fine-pruning restores the lost performance better during the fine-tuning process and gains a higher ACC than Fine-pruning (F). In Fine-pruning (F), the weights of the pruned neurons are set to zero and are frozen during the fine-tuning process, whereas they are trainable in our Fine-mixing. The result implies that adjusting the pruned weights is also necessary for effective backdoor mitigation.

在这里,我们评估了两种变体:1) Mixing (不进行微调的 Fine-mixing) 和 2) Fine-pruning (F) (在微调期间冻结剪枝权重的 Fine-pruning)。如图 2a 所示,当保留比率设置为 ${\sim}0.3$ 时,Mixing 和 Fine-mixing 都可以缓解后门攻击。尽管 Fine-mixing 可以保持较高的 ACC,但 Mixing 方法显著降低了 ACC。这表明 Fine-mixing 中的微调过程非常重要。如图 2b 所示,当 $\rho<0.2$ 时,Fine-pruning 和 Fine-pruning (F) 都可以缓解后门攻击。然而,Fine-pruning 在微调过程中能更好地恢复丢失的性能,并且可以获得比 Fine-pruning (F) 更高的 ACC。在 Fine-pruning (F) 中,剪枝神经元的权重被设置为零,并在微调过程中冻结,而在我们的 Fine-mixing 中,这些权重是可训练的。结果表明,调整剪枝权重对于有效缓解后门攻击也是必要的。
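The Mixing step evaluated above (weight mixing without the subsequent fine-tuning) can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions rather than the authors' implementation: the function name `mix_weights` is hypothetical, each weight is kept independently with probability $\rho$ (an assumed way of realizing "randomly choosing the weights to reserve"), and the all-ones and all-zeros vectors are toy stand-ins for the backdoored and pre-trained weights.

```python
import numpy as np


def mix_weights(w_backdoored, w_pretrained, rho, rng):
    """Keep each backdoored weight with probability rho; reset the rest to pre-trained."""
    keep = rng.random(w_backdoored.shape) < rho  # random reserve mask
    return np.where(keep, w_backdoored, w_pretrained)


rng = np.random.default_rng(0)
w_pre = np.zeros(10_000)  # toy stand-in for pre-trained weights
w_bd = np.ones(10_000)    # toy stand-in for backdoored fine-tuned weights

w_mix = mix_weights(w_bd, w_pre, rho=0.3, rng=rng)
kept_fraction = float(w_mix.mean())  # fraction of weights taken from w_bd
print(round(kept_fraction, 3))
```

In Fine-mixing, the mixed weights would then be fine-tuned on the small clean subset with all weights trainable; stopping after the mixing step corresponds to the Mixing variant, which the ablation shows loses considerable ACC.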

5.3 Comparison with Fine-mixing (Sel)

5.3 与 Fine-mixing (Sel) 的对比

We next compare the Fine-mixing method with Fine-mixing (Sel). Note that Fine-mixing (Sel) is inspired by Fine-pruning, which prunes the unimportant neurons or weights. A natural idea is that we can select more important weights to reserve, i.e., Fine-mixing (Sel), which reserves weights with higher absolute values.

我们接下来将 Fine-mixing 方法与 Fine-mixing (Sel) 进行比较。需要注意的是,Fine-mixing (Sel) 的灵感来源于 Fine-pruning,后者会剪枝不重要的神经元或权重。一个自然的想法是,我们可以选择保留更重要的权重,即 Fine-mixing $(Sel)$,它会保留绝对值较大的权重。
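The selection rule of Fine-mixing (Sel) can be illustrated with a deterministic mask in place of a random one. The sketch below is an assumption-laden illustration, not the authors' code: the function name `mix_weights_sel` is hypothetical, and importance is measured by the absolute value of the fine-tuned weight itself, which is one literal reading of "weights with higher absolute values"; the paper may rank by a different quantity.

```python
import numpy as np


def mix_weights_sel(w_backdoored, w_pretrained, rho):
    """Reserve the top-rho fraction of weights by magnitude; reset the rest."""
    n_keep = int(round(rho * w_backdoored.size))
    magnitude = np.abs(w_backdoored).ravel()
    keep = np.zeros(magnitude.size, dtype=bool)
    if n_keep > 0:
        # Indices of the n_keep largest magnitudes (order within the top set is irrelevant).
        split = magnitude.size - n_keep
        keep[np.argpartition(magnitude, split)[split:]] = True
    return np.where(keep.reshape(w_backdoored.shape), w_backdoored, w_pretrained)


w_bd = np.array([0.1, -2.0, 0.5, 3.0, -0.2])  # toy fine-tuned weights
w_pre = np.zeros(5)                            # toy pre-trained weights
w_sel = mix_weights_sel(w_bd, w_pre, rho=0.4)
print(w_sel)
```

Because the mask is a deterministic function of the weights, an attacker who knows the rule can in principle shape the weights to survive it, which is the intuition behind the mix-aware adaptive attacks discussed in the text.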

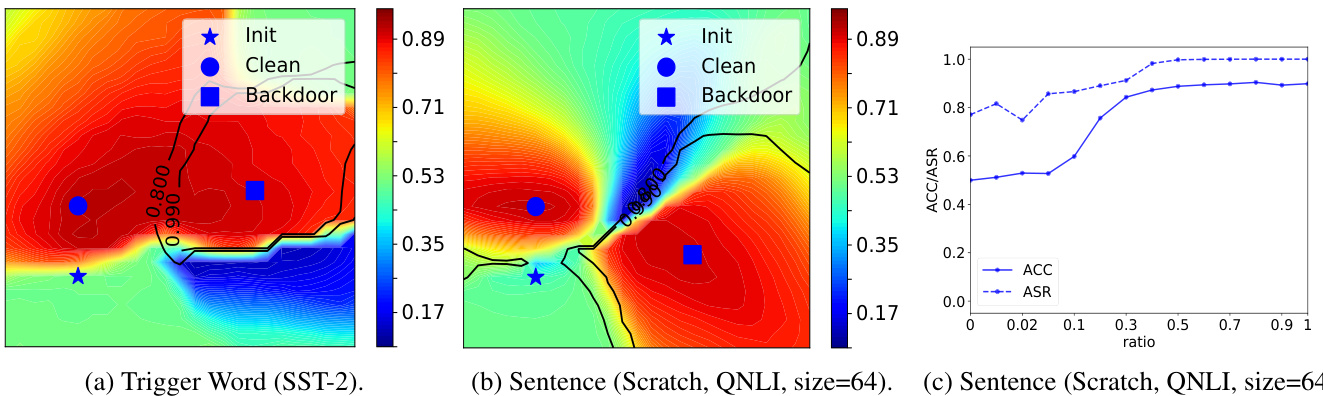

From Table 1 and Table 5, it can be concluded that Fine-mixing outperforms Fine-mixing (Sel). We conjecture that this is because the effective parameter scope for backdoor mitigation is more limited in Fine-mixing (Sel) than in Fine-mixing. For example, as shown in Fig. 2c, the effective ranges of $\rho$ for Fine-mixing (Sel) and Fine-mixing to mitigate backdoors are [0.01, 0.05] (optimal $\rho$ is near 0.02) and [0.05, 0.3] (optimal $\rho$ is near 0.2), respectively. With the same search budget, it is easier for Fine-mixing to find a proper $\rho$ near the optimum than for Fine-mixing (Sel). Thus, Fine-mixing tends to outperform Fine-mixing (Sel).

在表 1 和表 5 中,可以得出结论,Fine-mixing 优于 Fine-mixing (Sel)。我们推测这是因为 Fine-mixing (Sel) 中用于缓解后门的有效参数范围比 Fine-mixing 更有限。例如,如图 2c 所示,Fine-mixing (Sel) 和 Fine-mixing 缓解后门的 $\rho$ 的有效范围分别为 [0.01, 0.05](最佳 $\rho$ 接近 0.02)和 [0.05, 0.3](最佳 $\rho$ 接近 0.2)。在相同的搜索预算下,Fine-mixing 比 Fine-mixing (Sel) 更容易找到接近最优值的合适 $\rho$。因此,Fine-mixing 往往优于 Fine-mixing (Sel)。
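The search-budget argument can be made concrete by counting how many candidates of a fixed grid fall inside each effective range. The grid below (step 0.05 over [0, 1], 21 candidates) is a hypothetical search budget chosen for illustration, and `grid_hits` is an illustrative helper; only the two effective ranges come from the text. Values are expressed in hundredths to avoid floating-point comparisons.

```python
def grid_hits(lo_pct: int, hi_pct: int, step_pct: int) -> int:
    """Count grid points (in hundredths, 0..100) inside the closed range [lo_pct, hi_pct]."""
    return sum(1 for p in range(0, 101, step_pct) if lo_pct <= p <= hi_pct)


# Effective ranges of rho reported in the text, in hundredths.
sel_hits = grid_hits(1, 5, step_pct=5)    # Fine-mixing (Sel): [0.01, 0.05]
mix_hits = grid_hits(5, 30, step_pct=5)   # Fine-mixing:       [0.05, 0.30]

print(sel_hits, mix_hits)
```

Under this assumed grid, only one candidate (0.05, at the edge of the range) lands in the effective range of Fine-mixing (Sel), while six candidates land in that of Fine-mixing, so a coarse search is far more likely to find a workable $\rho$ for Fine-mixing.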

Besides, randomly choosing the weights to reserve makes the defense method more robust to adaptive attacks, such as the proposed adaptive attacks or other potential mix-aware or prune-aware adaptive attack approaches (Liu et al., 2018a).

此外,随机选择保留的权重使得防御方法对自适应攻击更具鲁棒性,例如所提出的自适应攻击或其他潜在的混合感知或剪枝感知的自适应攻击方法 (Liu et al., 2018a)。

5.4 Difficulty Analysis and Limitation

5.4 难点分析与局限性

Here, we analyze the difficulty of backdoor mitigation under different attacks. In Table 1 and Table 2, we observe that: 1) mitigating backdoors in models trained from scratch is usually harder than in models trained from the clean model; 2) backdoors in sentence-pair classification tasks are relatively harder to mitigate than those in the sentiment classification tasks; 3) backdoors with ES or EP are easier to mitigate because they mainly inject backdoors by manipulating the embeddings, which can be easily mitigated by our E-PUR.

在这里,我们分析了不同攻击的后门缓解难度。在表 1 和表 2 中,我们观察到:1) 从零开始训练的模型中的后门通常比从干净模型训练的模型更难缓解;2) 句子对分类任务中的后门相对情感分类任务更难缓解;3) 使用 ES 或 EP 的后门更容易缓解,因为它们主要通过操纵嵌入来注入后门,这可以通过我们的 E-PUR 轻松缓解。

Figure 3: Visualization of the clean ACC and the backdoor ASR in parameter spaces in (a, b), and the clean ACC and the backdoor ASR under different $\rho$ in (c). Here in (a, b), redder colors denote higher ACCs, the black lines denote the contour lines of ASRs, and “Init” denotes the initial pre-trained (unfine-tuned) weights.

图 3: 在参数空间中 (a, b) 的干净 ACC 和后门 ASR 的可视化,以及在不同 $\rho$ 下 (c) 的干净 ACC 和后门 ASR。在 (a, b) 中,红色表示更高的 ACC,黑线表示 ASR 的等高线,“Init”表示初始预训练(未微调)的权重。

We illustrate a simple and a difficult case in Fig. 3 to help analyze the difficulty of mitigating backdoors. Fig. 3a shows that, in the simple case ($14.19\%$ ASR after mitigation), there exists an area with a high clean ACC and a low backdoor ASR between the pre-trained BERT parameters and the backdoored parameters. This is a good area for mitigating backdoors, and its existence explains why Fine-mixing can mitigate backdoors in most cases. In the difficult case ($88.71\%$ ASR after mitigation), the ASR is always high ($>70\%$) under different values of $\rho$, as shown in Fig. 3c, meaning that the backdoors are hard to mitigate. This may be because the clean and backdoored models lie in different high-clean-ACC areas (as shown in Fig. 3b), and the ASR is always high in the high-clean-ACC area where the backdoored model is located.

我们在图 3 中展示了一个简单案例和一个困难案例,以帮助分析缓解后门的难度。图 3a 显示,在简单案例中(缓解后 ASR 为 $14.19\%$),预训练的 BERT 参数和后门参数之间存在一个高清洁 ACC 和低后门 ASR 的区域,这是一个缓解后门的好区域,其存在解释了为什么 Fine-mixing 在大多数情况下能够缓解后门。在困难案例中(缓解后 ASR 为 $88.71\%$),如图 3c 所示,在不同的 $\rho$ 下,ASR 始终较高 ($>70\%$),这意味着后门难以缓解。这可能是因为清洁模型和后门模型在其高清洁 ACC 区域存在差异(如图 3b 所示),并且在后门模型所在的高清洁 ACC 区域中,ASR 始终较高。

As shown in Table 2, when the tasks are difficult, namely, when the clean ACC of the model fine-tuned from the initial BERT with the small dataset is low, the backdoor mitigation task also becomes difficult, which may be associated with the local geometric properties of the loss landscape. One could collect more clean data to overcome this challenge. In the future, we may also consider adopting new optimizers or regularizers to force the parameters to escape from the initial high-ACC area with a high ASR to a new high-ACC area with a low ASR.

如表 2 所示,当任务难度较大时,即使用小数据集从初始 BERT 微调的模型的干净准确率 (clean ACC) 较低时,后门缓解任务也变得困难,这可能与损失函数的局部几何特性有关。可以通过收集更多干净数据来克服这一挑战。未来,我们也可以考虑采用新的优化器或正则化方法,迫使参数从初始高准确率 (ACC) 但高攻击成功率 (ASR) 的区域,转移到新的高准确率但低攻击成功率的区域。

6 Broader Impact

6 更广泛的影响

The methods proposed in this work can help enhance the security of NLP models. More precisely, our Fine-mixing and E-PUR techniques can help companies, institutes, and regular users remove potential backdoors from publicly downloaded NLP models, especially those already fine-tuned on downstream tasks. We put trust in the official PLMs released by leading companies in the field and help users defend against the many unofficial and untrusted fine-tuned models. We believe this is a practical and important step toward secure and backdoor-free NLP, especially now that more and more models fine-tuned from PLMs are used to achieve the best performance on downstream NLP tasks.

本文提出的方法有助于增强自然语言处理 (NLP) 模型的安全性。更具体地说,我们的 Fine-mixing 和 E-PUR 技术可以帮助公司、机构和普通用户移除从公开渠道下载的 NLP 模型中的潜在后门,尤其是那些已经在下游任务上微调过的模型。我们信任该领域领先公司发布的官方预训练语言模型 (PLM),并帮助用户对抗众多非官方且不可信的微调模型。我们认为这是实现安全且无后门的 NLP 的重要且实用的一步,尤其是在越来越多基于 PLM 的微调模型被用于在下游 NLP 任务中实现最佳性能的当下。

7 Conclusion

7 结论

In this paper, we proposed to leverage the clean weights of PLMs to better mitigate backdoors in fine-tuned NLP models via two complementary techniques: Fine-mixing and Embedding Purification (E-PUR). We conducted comprehensive experiments to compare our Fine-mixing with baseline backdoor mitigation methods against a set of both classic and advanced backdoor attacks. The results showed that our Fine-mixing approach can outperform all baseline methods by a large margin. Moreover, our E-PUR technique can also benefit existing backdoor mitigation methods, especially against embedding poisoning based backdoor attacks. Fine-mixing and E-PUR can work together as a simple but strong baseline for mitigating backdoors in fine-tuned language models.

本文提出了通过两种互补技术——Fine-mixing 和嵌入净化 (E-PUR) ——利用预训练语言模型 (PLM) 的干净权重来更好地缓解微调 NLP 模型中的后门问题。我们进行了全面的实验,将 Fine-mixing 与基线后门缓解方法进行比较,针对一系列经典和先进的后门攻击。结果表明,我们的 Fine-mixing 方法在所有基线方法中表现优异。此外,我们的 E-PUR 技术也能提升现有后门缓解方法的效果,尤其是针对基于嵌入中毒的后门攻击。Fine-mixing 和 E-PUR 可以共同作为缓解微调语言模型中后门问题的简单但强大的基线方法。

Acknowledgement

致谢

The authors would like to thank the reviewers for their helpful comments. This work is in part supported by the Natural Science Foundation of China (NSFC) under Grant No. 62176002 and Grant No. 62276067. Xu Sun and Lingjuan Lyu are corresponding authors.

作者感谢审稿人提供的宝贵意见。本工作部分得到了中国国家自然科学基金 (NSFC) 的资助,项目编号为 62176002 和 62276067。Xu Sun 和 Lingjuan Lyu 是通讯作者。

References

参考文献

John Blitzer, Mark Dredze, and Fernando Pereira. 2007. Biographies, Bollywood, boom-boxes and blenders: Domain adaptation for sentiment classification. In Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, pages 440–447, Prague, Czech Republic. Association for Computational Linguistics.

John Blitzer, Mark Dredze, 和 Fernando Pereira. 2007. 传记、宝莱坞、音响和搅拌机:情感分类的领域适应。在《第45届计算语言学协会年会论文集》中,第440-447页,捷克共和国布拉格。计算语言学协会。

Samuel R. Bowman, Luke Vilnis, Oriol Vinyals, Andrew M. Dai, Rafal Józefowicz, and Samy Bengio. 2016. Generating sentences from a continuous space. In Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning, CoNLL 2016, Berlin, Germany, August 11-12, 2016, pages 10–21. ACL.

Samuel R. Bowman, Luke Vilnis, Oriol Vinyals, Andrew M. Dai, Rafal Józefowicz, 和 Samy Bengio. 2016. 从连续空间生成句子. 在《第20届SIGNLL计算自然语言学习会议论文集》(CoNLL 2016) 中, 德国柏林, 2016年8月11-12日, 第10-21页. ACL.

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language models are few-shot learners. CoRR, abs/2005.14165.

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, 和 Dario Amodei. 2020. 大语言模型是少样本学习者. CoRR, abs/2005.14165.

Bryant Chen, Wilka Carvalho, Nathalie Baracaldo, Heiko Ludwig, Benjamin Edwards, Taesung Lee, Ian M. Molloy, and Biplav Srivastava. 2018. Detecting backdoor attacks on deep neural networks by activation clustering. CoRR, abs/1811.03728.

Bryant Chen, Wilka Carvalho, Nathalie Baracaldo, Heiko Ludwig, Benjamin Edwards, Taesung Lee, Ian M. Molloy, 和 Biplav Srivastava. 2018. 通过激活聚类检测深度神经网络的后门攻击. CoRR, abs/1811.03728.

Chuanshuai Chen and Jiazhu Dai. 2021. Mitigating backdoor attacks in lstm-based text classification systems by backdoor keyword identification. Neurocomputing, 452:253–262.

Chuanshuai Chen 和 Jiazhu Dai. 2021. 通过后门关键词识别缓解基于 LSTM 的文本分类系统中的后门攻击. Neurocomputing, 452:253–262.

Xinyun Chen, Chang Liu, Bo Li, Kimberly Lu, and Dawn Song. 2017. Targeted backdoor attacks on deep learning systems using data poisoning. CoRR, abs/1712.05526.

Xinyun Chen, Chang Liu, Bo Li, Kimberly Lu, 和 Dawn Song. 2017. 使用数据投毒对深度学习系统进行定向后门攻击. CoRR, abs/1712.05526.

Jiazhu Dai, Chuanshuai Chen, and Yufeng Li. 2019. A backdoor attack against lstm-based text classification systems. IEEE Access, 7:138872–138878.

Jiazhu Dai, Chuanshuai Chen, and Yufeng Li. 2019. 针对基于 LSTM 的文本分类系统的后门攻击。IEEE Access, 7:138872–138878.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, June 2-7, 2019, Volume 1 (Long and Short Papers), pages 4171–4186.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, 和 Kristina Toutanova. 2019. BERT: 用于语言理解的深度双向 Transformer 预训练. 在 2019 年北美计算语言学协会会议:人类语言技术 (NAACL-HLT 2019) 论文集, 第 1 卷 (长篇和短篇论文), 第 4171–4186 页, 美国明尼苏达州明尼阿波利斯, 2019 年 6 月 2-7 日.

Jacob Dumford and Walter J. Scheirer. 2018. Backdooring convolutional neural networks via targeted weight perturbations. CoRR, abs/1812.03128.

Jacob Dumford 和 Walter J. Scheirer. 2018. 通过目标权重扰动对卷积神经网络进行后门攻击. CoRR, abs/1812.03128.

N. Benjamin Erichson, Dane Taylor, Qixuan Wu, and Michael W. Mahoney. 2020. Noise-response analysis for rapid detection of backdoors in deep neural networks. CoRR, abs/2008.00123.

N. Benjamin Erichson, Dane Taylor, Qixuan Wu, 和 Michael W. Mahoney. 2020. 噪声响应分析用于快速检测深度神经网络中的后门. CoRR, abs/2008.00123.

Yansong Gao, Change Xu, Derui Wang, Shiping Chen, Damith Chinthana Ranasinghe, and Surya Nepal. 2019. STRIP: a defence against trojan attacks on deep neural networks. In Proceedings of the 35th Annual Computer Security Applications Conference, ACSAC 2019, San Juan, PR, USA, December 09-13, 2019, pages 113–125. ACM.

Yansong Gao, Change Xu, Derui Wang, Shiping Chen, Damith Chinthana Ranasinghe, 和 Surya Nepal. 2019. STRIP: 针对深度神经网络木马攻击的防御方法。在《第35届年度计算机安全应用会议论文集》(Proceedings of the 35th Annual Computer Security Applications Conference, ACSAC 2019) 中,2019年12月9日至13日,波多黎各圣胡安,第113-125页。ACM。

Tianyu Gu, Kang Liu, Brendan Dolan-Gavitt, and Siddharth Garg. 2019. Badnets: Evaluating backdooring attacks on deep neural networks. IEEE Access, 7:47230–47244.

Tianyu Gu, Kang Liu, Brendan Dolan-Gavitt, 和 Siddharth Garg. 2019. Badnets: 深度神经网络后门攻击评估. IEEE Access, 7:47230–47244.

Haripriya Harikumar, Vuong Le, Santu Rana, Sourangshu Bhattacharya, Sunil Gupta, and Svetha Venkatesh. 2020. Scalable backdoor detection in neural networks. CoRR, abs/2006.05646.

Haripriya Harikumar, Vuong Le, Santu Rana, Sourangshu Bhattacharya, Sunil Gupta, 和 Svetha Venkatesh. 2020. 神经网络中的可扩展后门检测. CoRR, abs/2006.05646.

Elad Hoffer, Itay Hubara, and Daniel Soudry. 2017. Train longer, generalize better: closing the generalization gap in large batch training of neural networks. In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4-9, 2017, Long Beach, CA, USA, pages 1731–1741.

Elad Hoffer、Itay Hubara 和 Daniel Soudry。2017。训练更久,泛化更好:在神经网络的大批量训练中缩小泛化差距。发表于《神经信息处理系统进展》第30卷:2017年神经信息处理系统年会,2017年12月4-9日,美国加州长滩,第1731-1741页。

Shanjiaoyang Huang, Weiqi Peng, Zhiwei Jia, and Zhuowen Tu. 2020. One-pixel signature: Characterizing CNN models for backdoor detection. CoRR, abs/2008.07711.

Shanjiaoyang Huang, Weiqi Peng, Zhiwei Jia, 和 Zhuowen Tu. 2020. 单像素签名:用于后门检测的CNN模型特征分析。CoRR, abs/2008.07711.

Diederik P. Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. In 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings.

Diederik P. Kingma 和 Jimmy Ba. 2015. Adam: 一种随机优化方法. 在第三届国际学习表示会议 (ICLR 2015) 上, 美国加利福尼亚州圣地亚哥, 2015年5月7-9日, 会议论文集.

Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton. 2017. Imagenet classification with deep convolutional neural networks. Commun. ACM, 60(6):84–90.

Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton. 2017. 使用深度卷积神经网络进行ImageNet分类. Commun. ACM, 60(6):84–90.

Keita Kurita, Paul Michel, and Graham Neubig. 2020. Weight poisoning attacks on pre-trained models. CoRR, abs/2004.06660.

Keita Kurita、Paul Michel 和 Graham Neubig. 2020. 预训练模型的权重中毒攻击. CoRR, abs/2004.06660.

Hyun Kwon. 2020. Detecting backdoor attacks via class difference in deep neural networks. IEEE Access, 8:191049–191056.

Hyun Kwon. 2020. 通过深度神经网络中的类别差异检测后门攻击. IEEE Access, 8:191049–191056.

Cheolhyoung Lee, Kyunghyun Cho, and Wanmo Kang. 2020. Mixout: Effective regularization to finetune large-scale pretrained language models. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net.

Cheolhyoung Lee, Kyunghyun Cho, 和 Wanmo Kang. 2020. Mixout: 有效正则化以微调大规模预训练语言模型. 在第八届国际学习表示会议 (ICLR 2020) 上, 埃塞俄比亚亚的斯亚贝巴, 2020年4月26-30日. OpenReview.net.

Linyang Li, Demin Song, Xiaonan Li, Jiehang Zeng, Ruotian Ma, and Xipeng Qiu. 2021a. Backdoor attacks on pre-trained models by layerwise weight poisoning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, EMNLP 2021, Virtual Event / Punta Cana, Dominican Republic, 7-11 November, 2021, pages 3023–3032. Association for Computational Linguistics.

Linyang Li, Demin Song, Xiaonan Li, Jiehang Zeng, Ruotian Ma, 和 Xipeng Qiu. 2021a. 通过逐层权重中毒对预训练模型进行后门攻击。在 2021 年自然语言处理实证方法会议论文集,EMNLP 2021,虚拟会议/多米尼加共和国蓬塔卡纳,2021年11月7-11日,第3023-3032页。计算语言学协会。

Yige Li, Xixiang Lyu, Nodens Kore