Self-rewarding correction for mathematical reasoning

Wei Xiong * 1 Hanning Zhang * 1 Chenlu Ye * 1 Lichang Chen 2 Nan Jiang 1 Tong Zhang

Abstract

We study self-rewarding reasoning large language models (LLMs), which can simultaneously generate step-by-step reasoning and evaluate the correctness of their outputs at inference time without external feedback. This integrated approach allows a single model to independently guide its reasoning process, offering computational advantages for model deployment.

We particularly focus on the representative task of self-correction, where models autonomously detect errors in their responses, revise outputs, and decide when to terminate iterative refinement loops. To enable this, we propose a two-stage algorithmic framework for constructing self-rewarding reasoning models using only self-generated data. In the first stage, we employ sequential rejection sampling to synthesize long chain-of-thought trajectories that incorporate both self-rewarding and self-correction mechanisms. Fine-tuning models on these curated data allows them to learn the patterns of self-rewarding and self-correction. In the second stage, we further enhance the models’ ability to assess response accuracy and refine outputs through reinforcement learning with rule-based signals. Experiments with Llama-3 and Qwen-2.5 demonstrate that our approach surpasses intrinsic self-correction capabilities and achieves performance comparable to systems that rely on external reward models.

1. Introduction

Large language models (LLMs) have demonstrated remarkable capabilities in reasoning-related tasks such as mathematics and coding. Notable examples include ChatGPT (OpenAI, 2023), Claude (Anthropic, 2023), and Gemini (Team et al., 2023). Following the release of GPT4-o1, LLMs with strong reasoning abilities have attracted even more attention, along with inference methods that enhance reasoning. A particularly desirable property of such models is their ability to detect inconsistencies and errors in self-generated responses—based on feedback to their prior outputs—and correct these errors to produce improved responses. This process is often referred to as self-correction in the literature (Welleck et al., 2022; Madaan et al., 2024; Kim et al., 2024).

When an external ground-truth reward model is available, studies (Kim et al., 2024; Qu et al., 2024; Shinn et al., 2024) have shown that LLMs can refine their initial responses based on external gold reward feedback and determine when to terminate the self-correction loop. These approaches have proven effective for both mathematical reasoning and general agent tasks. Moreover, even when relying on imperfect proxy rewards, models can still achieve higher accuracy in revised responses by leveraging feedback from an outcome-based reward model (see Section 5 for empirical results). However, since these reward models are often themselves LLMs, deploying them requires running multiple models during inference, which increases computational costs and deployment complexity. In contrast, without external reward feedback, current LLMs struggle to refine their initial responses based solely on their intrinsic capabilities, a setting known as intrinsic self-correction (Huang et al., 2023).

While reward models are traditionally trained with an additional scalar head for general-purpose chat (Ouyang et al., 2022; Bai et al., 2022; Touvron et al., 2023) and reasoning tasks (Cobbe et al., 2021a; Lightman et al., 2023), recent work suggests that LLMs themselves can produce reward signals in a generative way. For example, the LLM-as-a-judge approach (Zheng et al., 2023; Dubois et al., 2023) prompts the LLM to evaluate text outputs, effectively serving as a surrogate for human feedback. Another emerging direction explores generative reward models (Zhao et al., 2023; Dong et al., 2024; Zhang et al., 2024b; Mahan et al., 2024; Zhang et al., 2024a), which formulate evaluation tasks as instruction-following problems, using the probability of generating specific tokens as the reward value. These methods leverage LLMs’ next-token prediction capabilities and integrate generation and evaluation into a unified framework.

Building on these insights, this work investigates self-rewarding reasoning models that incorporate three abilities within a single LLM: (i) generating step-by-step reasoning paths for given prompts, (ii) evaluating the correctness of generated responses, and (iii) revising and enhancing previous responses based on self-rewarding signals. Our key contributions are as follows:

- Self-rewarding reasoning framework. We introduce a self-rewarding reasoning framework for LLMs, which integrates the generator and reward model into a single LLM, enabling autonomous reasoning, evaluation, and correction. This unification simplifies the model’s decision-making process and reduces computational overhead compared to external reward-based approaches.

- Algorithmic framework for self-correction. We focus on self-correction in mathematical reasoning and propose a two-stage framework that relies only on self-generated data. In the first stage, we use sequential rejection sampling to construct long chain-of-thought (CoT) trajectories that encode both self-rewarding and self-correction behaviors. Fine-tuning models on these trajectories enables them to detect errors in their self-generated responses and revise their previous attempts. In the second stage, we further enhance these patterns through reinforcement learning with rule-based signals.

- Empirical validation and analysis. Through extensive experiments, we show that self-rewarding correction significantly outperforms intrinsic self-correction. Additionally, we conduct ablation studies to investigate the learning dynamics of the proposed framework, providing deeper insights into its behavior and effectiveness. The training code and datasets are publicly available on GitHub.

2. Related Work

In this section, we review the works most closely related to ours.

Self-rewarding alignment. Our work aligns with research on self-rewarding alignment (Yuan et al., 2024b; Prasad et al., 2024): both our project and these methods share the idea of unifying the generation and evaluation abilities in a single LLM. These methods leverage iterative DPO-type algorithms, where the model labels its own generated responses to provide training signals for subsequent iterations, enabling self-improvement. In contrast, our approach does not focus on self-improvement during training. Instead, we rely on an external ground-truth reward model to provide learning signals in training. Our study emphasizes inference-time alignment for reasoning-focused LLMs, where self-rewarding signals are employed solely to guide inference rather than training.

Self-correction. Our work is closely related to self-correction in LLMs. We refer interested readers to the survey (Pan et al., 2023) for a more comprehensive review and cover only the representative approaches most closely related to our project. Li et al. (2024) demonstrated that incorporating teacher model reflections into SFT data enhances students’ self-reflection abilities in general-purpose conversation tasks. However, for reasoning tasks, Huang et al. (2023) found that current LLMs, without additional training, fail to self-correct purely through intrinsic reasoning (i.e., prompting). This observation is also validated by Qu et al. (2024), Tyen et al. (2023), and Zheng et al. (2024). A more in-depth analysis shows that most prior successful studies in this domain depend on external (ground-truth) reward models to determine when to initiate and terminate self-correction (Kim et al., 2024; Qu et al., 2024; Shinn et al., 2024; Madaan et al., 2024). Currently, there is no major work demonstrating that intrinsic self-correction (via prompting or fine-tuning) is reliably effective. Furthermore, because external reward models are typically LLM-based, these methods introduce additional computational overhead by requiring a multi-agent system for inference.

Recognizing this challenge, our study explores how LLMs can autonomously evaluate response quality and correct errors without external reward models. Specifically, we introduce a self-rewarding reasoning framework that enables a single LLM to perform error detection and self-correction effectively. Among the works in self-correction, the most relevant work is the recent Kumar et al. (2024), which employed a multi-turn deep RL approach to train self-correcting models. In comparison, this work introduces a new and general self-rewarding formulation for reasoning-focused LLMs, with self-correction as a representative application. Compared to the intrinsic correction and the framework in Kumar et al. (2024), one major difference is that our framework equips models with self-rewarding ability, enabling our models to intelligently scale inference compute by selectively revising the first attempts, which helps to reduce computational overhead by avoiding unnecessary iterations. We will also design experiments to illustrate this idea.

Algorithmically, our approach also differs from Kumar et al. (2024). We first use sequential rejection sampling to construct long CoT trajectories with both self-rewarding and self-correction patterns, which serve as warm-up fine-tuning data. We then enhance these behaviors through reinforcement learning (using either DPO-type algorithms or PPO) with rule-based signals. In contrast, Kumar et al. (2024) employed RLOO (Ahmadian et al., 2024) with a specialized reward function for a two-turn self-correction task. While their non-public models (Gemini) and unreleased implementation details (parameters, code) do not permit a direct comparison, we believe that the multi-turn RL methods proposed by Kumar et al. (2024) could also complement the proposed self-rewarding framework and achieve better reasoning performance than standard reasoning models.

Rule-based RL for LLM mathematical reasoning. Rule-based reinforcement learning has received significant attention following the success of DeepSeek-R1 (DeepSeek-AI et al., 2025). Open-source efforts have since attempted to replicate its performance using Qwen models (Yang et al., 2024), including Zeng et al. (2025), Cui et al. (2025), and Zhang et al. (2025). These methods train LLMs using only a correctness score (whether the final answer is correct or not) and a format score (whether the final answer is output in a pre-determined format), in contrast to previous works that use a neural network-based reward model (Cobbe et al., 2021a; Lightman et al., 2023; Zhang et al., 2024a). In particular, DeepSeek-AI et al. (2025) observed that self-correction naturally emerges during RL training (referred to as an AHA moment in their report). However, our preliminary experiments, along with open-source replications using Qwen-2.5-Math (Liu et al., 2025; Zhang et al., 2025; Cheng et al., 2025), suggest that (i) the base models already exhibit some self-correction ability, though it is quite sparse, and (ii) vanilla rule-based RL cannot consistently enhance self-correction without additional design.

Interestingly, even when using the same algorithms and data, similar improvements in mathematical reasoning are not observed in models such as Llama (Meta, 2024; Touvron et al., 2023). We hypothesize that Qwen-2.5-Math and DeepSeek-R1 benefit from extensive pre-training on high-quality mathematical corpora (e.g., 1T tokens for Qwen-2.5-Math (Yang et al., 2024)), and that the AHA moment may stem from carefully curated data containing self-correction patterns in pre-training or a cool-down stage. Since these datasets are non-public, the exact details remain unknown.

In contrast, our study shows that a warm-up stage using a carefully curated SFT dataset (collected via sequential rejection sampling) enables models to learn self-correction patterns more reliably. This foundation allows rule-based RL to further enhance these behaviors in a stable manner. We also remark that our two-stage framework and most of the associated experiments were completed prior to the release of DeepSeek-R1.

3. Self-rewarding Reasoning Language Models

We formulate the self-rewarding reasoning process as a multi-turn Markov Decision Process (MDP). After observing an initial prompt $s^{1}=x\in\mathcal{X}$ drawn from some distribution $d_{0}$, an LLM, denoted as $\pi$, generates an initial reasoning attempt $a^{1}\sim\pi^{1}(\cdot|s^{1})$ from the action space $\mathcal{A}$. The LLM then self-rewards its response by generating an evaluation:

$$y^{1}\sim\pi^{1}(\cdot|s^{1},a^{1}).$$

If the model assesses its answer as correct ($y^{1}=$ [VERIFY] correct, details provided later), the generation stops. Otherwise, the LLM proceeds to the next step, generating a refined response and evaluation:

$$a^{2}\sim\pi^{2}(\cdot|s^{2}),\qquad y^{2}\sim\pi^{2}(\cdot|s^{2},a^{2}),$$

where the generation is conditioned on the updated state $s^{2}=(s^{1},a^{1},y^{1})$. The self-refinement process continues until the model produces a self-evaluation $y^{h}$ that assesses the answer as correct.

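The loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in this work: `policy` is a hypothetical callable that maps a text state to generated text, the `[attempt]` and `[evaluate]` suffixes are hypothetical stand-ins for the instruction prompts, and the cap `max_turns` is an assumed safeguard on the horizon.

```python
from typing import Callable, List, Tuple

CORRECT = "[VERIFY] correct"


def self_rewarding_generate(
    prompt: str,
    policy: Callable[[str], str],  # hypothetical: text state -> generated text
    max_turns: int = 2,            # assumed cap on the number of attempts
) -> List[Tuple[str, str]]:
    """Alternate attempt and self-evaluation until the model accepts its answer."""
    state = prompt
    trajectory: List[Tuple[str, str]] = []
    for _ in range(max_turns):
        # a^h ~ pi(. | s^h): the "[attempt]" suffix is a placeholder instruction
        attempt = policy(state + "\n[attempt]")
        # y^h ~ pi(. | s^h, a^h): the "[evaluate]" suffix is a placeholder instruction
        evaluation = policy(state + "\n" + attempt + "\n[evaluate]")
        trajectory.append((attempt, evaluation))
        if CORRECT in evaluation:
            break  # the model judged its own answer correct, so generation stops
        # s^{h+1} = (s^h, a^h, y^h)
        state = "\n".join([state, attempt, evaluation])
    return trajectory
```

Because the stopping rule only reads the model's own verdict, no external reward model is queried inside the loop.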

We assume access to a ground-truth verifier $r^{\star}:\mathcal{X}\times\mathcal{A}\rightarrow\{0,1\}$, which determines whether a response is correct. Throughout this study, we use the ToRA verification script (Gou et al., 2023), built on the Python library SymPy for symbolic mathematics. We also present a representative example in Table 1 to illustrate the process.

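To make the role of $r^{\star}$ concrete, the following is a deliberately simplified stand-in for a binary verifier. It is not the ToRA script and does not use SymPy: it only compares final answers that parse as exact rationals and falls back to a normalized string match otherwise. The function names `normalize` and `r_star` are hypothetical.

```python
from fractions import Fraction
from typing import Union


def normalize(answer: str) -> Union[Fraction, str]:
    """Map a final-answer string to an exact rational when possible."""
    cleaned = answer.strip().replace(",", "").rstrip(".")
    try:
        return Fraction(cleaned)  # handles "24", "0.5", "1/2"
    except (ValueError, ZeroDivisionError):
        # non-numeric answers: compare as whitespace-free lowercase strings
        return cleaned.replace(" ", "").lower()


def r_star(predicted: str, reference: str) -> int:
    """Return 1 if the predicted final answer matches the reference, else 0."""
    return int(normalize(predicted) == normalize(reference))
```

A symbolic checker would additionally accept algebraically equivalent expressions, which this sketch does not attempt.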

Two-stage training framework. Following standard post-training practices for LLMs, we adopt a two-stage approach:

- Self-rewarding instruction-following fine-tuning (IFT). Starting with an initial LLM $\pi_{0}$ (e.g., a general-purpose chatbot), we collect demonstration data by a sequential rejection sampling process and fine-tune $\pi_{0}$ to get an improved model $\pi_{\mathrm{ref}}$, which integrates self-rewarding reasoning abilities.

- Reinforcement learning (RL) optimization. We further refine $\pi_{\mathrm{ref}}$ using RL, leveraging it as the reference model. This stage can further enhance the model’s ability to assess correctness and refine previous responses.

3.1. Self-rewarding Instruction-following Fine-tuning

Table 1. An example of the self-rewarding reasoning path. We omit the detailed reasoning path for a clear presentation. The full trajectory is available in Table 13 in the Appendix.

Self-rewarding by token prediction. To train the LLMs to evaluate the reasoning steps, we formulate this task as an instruction-following task, following prior works (Zhao et al., 2023; Dong et al., 2024; Liu et al., 2023; Ye et al., 2024; Wang et al., 2024; Zhang et al., 2024b). Specifically, we allow models to include reasoning in their evaluations while requiring them to output specific tokens to indicate their evaluation results. We experimented with different token choices, such as: (i) a prompt “Is the most recent final answer correct (Yes or No)?” with “Yes” and “No” as the response tokens, as used in Xie et al. (2023) and Zhang et al. (2024b); (ii) explicit markers such as “[VERIFY] correct” and “[VERIFY] wrong”. Our experiments show no significant performance differences between these choices. During inference, rather than using the likelihood of “Yes” as a reward (as in Zhao et al., 2023; Dong et al., 2024; Zhang et al., 2024b), we sample the evaluation token from the distribution. This allows us to use a standard inference pipeline without any specific adjustment. See Table 1 for an example.

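The two ways of reading out a reward contrasted above can be illustrated as follows. This is a sketch under stated assumptions: `yes_probability` assumes access to the logits of the two candidate tokens (the dictionary keys are hypothetical), while `parse_verdict` needs only the sampled text, which is why it fits an unmodified inference pipeline.

```python
import math
import re
from typing import Dict, Optional


def yes_probability(logits: Dict[str, float]) -> float:
    """Likelihood-style reward: softmax mass on "Yes" over the pair {"Yes", "No"}."""
    peak = max(logits["Yes"], logits["No"])  # subtract the max for numerical stability
    e_yes = math.exp(logits["Yes"] - peak)
    e_no = math.exp(logits["No"] - peak)
    return e_yes / (e_yes + e_no)


_MARKER = re.compile(r"\[VERIFY\]\s*(correct|wrong)")


def parse_verdict(evaluation: str) -> Optional[bool]:
    """Sampled-marker reward: read the last [VERIFY] marker in the generated text."""
    matches = _MARKER.findall(evaluation)
    if not matches:
        return None  # the model did not follow the required format
    return matches[-1] == "correct"
```

Taking the last marker is a design choice of this sketch: the evaluation may quote the format instructions before stating its final verdict.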

Remark 3.1. We choose these specific tokens primarily for research simplicity. However, we expect that similar results can be achieved even if these special tokens are replaced with more natural language expressions, such as “wait”, “aha”, or “let me re-check the answer”, where one can also leverage the LLMs to complete this paraphrasing process.

Data collection by sequential rejection sampling. We employ a rejection sampling approach, similar to STaR (Zelikman et al., 2022) and RAFT (Dong et al., 2023), where we generate a large number of self-correction trajectories and only preserve the desired ones. The major difference is that, since self-correction behavior is sparse in base models and the self-rewarding pattern is missing, it is unlikely that the desired trajectories can be collected directly. In view of this, we sequentially prompt the base model and generate different steps separately. Then, we combine them into long CoT trajectories that incorporate both self-rewarding and self-correction patterns.

Our data collection process consists of the following steps:

- Generating initial reasoning responses: We take training prompts from datasets such as MATH (Hendrycks et al., 2021) and GSM8K (Cobbe et al., 2021a) and sample $N_{1}=50$ initial responses $a^{1}$ per prompt as our base trajectories (see Section 5 for details of experiment setups).

- Self-rewarding signal sampling: For each prompt and initial response, we further sample $N_{2}=8$ self-evaluations and keep only one evaluation result that agrees with the ground truth. Then, we split them into $G^{\mathrm{correct}}$ and $G^{\mathrm{wrong}}$ using the ground-truth verifier $r^{\star}$.

- Correction sampling: For each prompt and initial response in $G^{\mathrm{wrong}}$ , we sample $M_{1}=8$ completions by providing the feedback that the initial response was wrong to collect trajectories that successfully revise incorrect responses. For each prompt and initial response in $G^{\mathrm{correct}}$ , however, we also tell the model that the response was incorrect and collect $M_{2}=4$ completions. By doing so, we want to additionally collect “correct-to-correct” trajectories in the face of wrong judgment.

Eventually, we collect $8\times|G^{\mathrm{wrong}}|+4\times|G^{\mathrm{correct}}|$ full trajectories. Then, we filter the dataset and only keep the following types of data:

- $\mathcal{D}_{1}^{\mathrm{IFT}}$: wrong $a^{1}$, $y^{1}=$ [VERIFY] wrong, correct $a^{2}$;
- $\mathcal{D}_{2}^{\mathrm{IFT}}$: correct $a^{1}$, $y^{1}=$ [VERIFY] wrong, correct $a^{2}$;
- $\mathcal{D}_{3}^{\mathrm{IFT}}$: correct $a^{1}$, $y^{1}=$ [VERIFY] correct.

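The collection and filtering steps above can be sketched as a single routine. Everything here is illustrative: the four callables are hypothetical placeholders for model sampling and for the verifier $r^{\star}$, and two details are assumptions of this sketch rather than statements about the actual pipeline, namely that the wrong judgment stored in $\mathcal{D}_{2}^{\mathrm{IFT}}$ is a fixed marker string, and that a correct base sample may contribute to both $\mathcal{D}_{2}^{\mathrm{IFT}}$ and $\mathcal{D}_{3}^{\mathrm{IFT}}$.

```python
from typing import Callable, Dict, List

CORRECT, WRONG = "[VERIFY] correct", "[VERIFY] wrong"


def collect_ift_data(
    prompts: List[str],
    sample_attempt: Callable[[str], str],             # hypothetical: draws a^1
    sample_evaluation: Callable[[str, str], str],     # hypothetical: draws y^1
    sample_revision: Callable[[str, str, str], str],  # hypothetical: draws a^2 given feedback
    is_correct: Callable[[str, str], bool],           # stands in for the verifier r*
    n1: int = 50, n2: int = 8, m1: int = 8, m2: int = 4,
) -> Dict[str, list]:
    data: Dict[str, list] = {"D1": [], "D2": [], "D3": []}
    for x in prompts:
        for _ in range(n1):
            a1 = sample_attempt(x)
            a1_ok = is_correct(x, a1)
            target = CORRECT if a1_ok else WRONG
            # keep one self-evaluation whose verdict agrees with the ground truth
            evaluations = (sample_evaluation(x, a1) for _ in range(n2))
            y1 = next((y for y in evaluations if target in y), None)
            if y1 is None:
                continue
            if a1_ok:
                data["D3"].append((x, a1, y1))
            # both groups are told that a^1 was wrong before revising;
            # for correct a^1 the stored judgment is a fixed marker (assumption)
            feedback = WRONG if a1_ok else y1
            budget = m2 if a1_ok else m1
            revisions = (sample_revision(x, a1, feedback) for _ in range(budget))
            a2 = next((a for a in revisions if is_correct(x, a)), None)
            if a2 is not None:  # keep at most one successful revision per base sample
                data["D2" if a1_ok else "D1"].append((x, a1, feedback, a2))
    return data
```

The generators make the sampling lazy, so the sketch stops drawing evaluations or revisions as soon as one passes the filter.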

We provide an example of the data collection process in Table 2. We limit the horizon to two iterations due to resource constraints, and preserve at most one trajectory per base sample to control dataset size.

System: You are a mathematical reasoning assistant. For each problem, follow these steps strictly: 1. Solve the problem using step-by-step reasoning and output the final answer within \boxed{}. Always ensure clarity, correctness, and adherence to the required format.

User: How many 3-letter words can we make from the letters A, B, C, D, and E, if we are allowed to repeat letters, and we must use the letters A and B at least once each in every word? (Here, a word is an arbitrary sequence of letters.) Let’s think step by step and output the final answer within \boxed{}.

A: Here’s how we can break it down: 1. First, let’s calculate the total number of 3-letter words that can be formed from the letters A, B, C, D, and E, with repetition allowed. ... 7. Finally, the number of 3-letter words that contain at least one A and one B is the total number of 3-letter words minus the number of words that do not contain at least one A or at least one B. The number of 3-letter words that can be made from the letters A, B, C, D, and E, where each word must contain at least one A and one B, is $\boxed{38}$.

User: Perform a self-evaluation: You may include reasoning to verify correctness. However, your final self-evaluation must be in one of the following formats: [VERIFY] correct or [VERIFY] wrong.

A: Let’s perform a self-evaluation of the solution. We first calculate ... This is $125-101=24$. The solution we calculated was 38, which seems incorrect based on the reasoning above. The correct answer should be 24. Let’s output the self-evaluation: [VERIFY] wrong.

User: please identify the mistake in your previous reasoning, revise your reasoning path and output a corrected final answer within \boxed{}.

A: Let’s correct the mistake in the previous reasoning. The correct steps should be as follows: 1. Calculate ... 6. Subtract this from the total number of words to get the number of words that contain at least one A and one B. This is $125-101=24$. The correct answer is indeed $\boxed{24}$.

Table 2. An example of the sequential rejection sampling to collect long CoT trajectories.

We then fine-tune the LLMs using a standard SFT pipeline to maximize:

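Reading off the three data types, a natural form of this objective trains on both the self-evaluation and the revision in $\mathcal{D}_{1}^{\mathrm{IFT}}$, on the revision only in $\mathcal{D}_{2}^{\mathrm{IFT}}$ (where $y^{1}$ is a wrong judgment and should not be imitated), and on the self-evaluation in $\mathcal{D}_{3}^{\mathrm{IFT}}$. The expression below is a sketch under that reading, with $P_{\theta}$ denoting the model's conditional likelihood (an assumed symbol); it may differ in detail from the exact objective used.

```latex
\max_{\theta}\;
\mathbb{E}_{\mathcal{D}_{1}^{\mathrm{IFT}}}
  \Big[\log P_{\theta}(y^{1}\mid x,a^{1})+\log P_{\theta}(a^{2}\mid x,a^{1},y^{1})\Big]
+\mathbb{E}_{\mathcal{D}_{2}^{\mathrm{IFT}}}
  \Big[\log P_{\theta}(a^{2}\mid x,a^{1},y^{1})\Big]
+\mathbb{E}_{\mathcal{D}_{3}^{\mathrm{IFT}}}
  \Big[\log P_{\theta}(y^{1}\mid x,a^{1})\Big]
```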

In practice, however, we observe that multi-task training can lead to stability issues and can slightly hurt first-round performance. To mitigate this issue, we also train on the correct attempt $a^{1}$ for the samples in $\mathcal{D}_{3}^{\mathrm{IFT}}$.

3.2. KL-regularized Reinforcement Learning

In this stage, we aim to further enhance the self-rewarding IFT models using reinforcement learning. We consider both deep RL methods (Schulman et al., 2017) and direct alignment algorithms (Zhao et al., 2023; Rafailov et al., 2023; Azar et al., 2023; Liu et al., 2023).

Learning signal. To facilitate the reinforcement learning stage, we assume there exists a trajectory-wise reward function $u^{\star}(\tau)$ for a trajectory

$$\tau=(x,a^{1},y^{1},\ldots,a^{H},y^{H}).$$

However, instead of learning a proxy reward from data, like the BT model in RLHF (Ouyang et al., 2022) or the outcome-supervised reward model (ORM) in previous mathematical reasoning literature (Lightman et al., 2023), we primarily use the oracle reward

$$u^{\star}(\tau)=r^{\star}(x,a^{H}),$$

i.e., whether the final result is correct or not. The main advantage is that the oracle reward can largely mitigate the risk of reward hacking. This is also referred to as rule-based RL in the very recent literature (DeepSeek-AI et al., 2025). We will also study additional rule designs for either reward value assignment (PPO training) or data ranking (DPO training), where an implicit $u^{\star}$ is determined by the set of rules we use.

Following standard RLHF methodologies (Ouyang et al., 2022; Bai et al., 2022), we optimize the following KL-regularized objective:

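A standard way to write such an objective, given here as a sketch rather than a verbatim restatement, rewards the trajectory through $u^{\star}$ and penalizes the per-step divergence from the reference model. The coefficient $\eta>0$ is an assumed symbol, and $\pi^{h}(\cdot\mid s^{h})$ stands for everything the model generates at step $h$ (attempt and self-evaluation).

```latex
\max_{\pi}\;
\mathbb{E}_{x\sim d_{0},\;\tau\sim\pi(\cdot\mid x)}
\Big[
  u^{\star}(\tau)
  -\eta\sum_{h=1}^{H}
   D_{\mathrm{KL}}\big(\pi^{h}(\cdot\mid s^{h})\,\big\|\,\pi_{\mathrm{ref}}^{h}(\cdot\mid s^{h})\big)
\Big]
```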

The optimal policy and its associated optimal value function satisfy the following optimality condition (Xiong et al., 2024a; Xie et al., 2024a; Zhong et al., 2024).

Proposition 3.2. We can recursively define the following optimal value functions and optimal policies for a KL-regularized MDP with horizon $H$ and deterministic external observation. For the $Q$ value, we have

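The usual soft Bellman recursion for this setting can be written as below. This is a sketch of the standard form, not a verbatim statement of the proposition: $\eta>0$ is an assumed KL coefficient, $a^{h}$ denotes whatever the model generates at step $h$, and $s^{h+1}$ is taken to be a deterministic function of $(s^{h},a^{h})$ and the external message.

```latex
Q^{h}(s^{h},a^{h}) =
\begin{cases}
  u^{\star}(s^{H},a^{H}), & h = H,\\[2pt]
  V^{h+1}(s^{h+1}),       & h \le H-1,
\end{cases}
\qquad
V^{h}(s^{h}) = \eta\,\log
  \mathbb{E}_{a^{h}\sim\pi_{\mathrm{ref}}^{h}(\cdot\mid s^{h})}
  \exp\!\big(Q^{h}(s^{h},a^{h})/\eta\big),
\qquad
\pi^{h}(a^{h}\mid s^{h}) =
  \pi_{\mathrm{ref}}^{h}(a^{h}\mid s^{h})\,
  \exp\!\Big(\big(Q^{h}(s^{h},a^{h})-V^{h}(s^{h})\big)/\eta\Big).
```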

We remark that one advantage of the proposition is that it allows deterministic external messages (e.g., instruction prompts) in the state update, which will be useful when we consider a simplified research framework in Section 5.

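For a single step, the KL-regularized optimum has a well-known closed form: the policy is proportional to $\pi_{\mathrm{ref}}(a)\exp(Q(a)/\eta)$ and its value is $\eta\log\sum_{a}\pi_{\mathrm{ref}}(a)\exp(Q(a)/\eta)$. The following sketch checks this numerically on a toy two-action problem; the function names, the action labels, and the value of `eta` are illustrative assumptions.

```python
import math
from typing import Dict, Tuple


def soft_optimal_step(
    q: Dict[str, float], pi_ref: Dict[str, float], eta: float
) -> Tuple[Dict[str, float], float]:
    """Closed-form optimum of E_pi[Q] - eta * KL(pi || pi_ref) for one step."""
    partition = sum(pi_ref[a] * math.exp(q[a] / eta) for a in q)
    value = eta * math.log(partition)
    policy = {a: pi_ref[a] * math.exp(q[a] / eta) / partition for a in q}
    return policy, value


def regularized_objective(
    policy: Dict[str, float], q: Dict[str, float], pi_ref: Dict[str, float], eta: float
) -> float:
    """Evaluate E_pi[Q] - eta * KL(pi || pi_ref) for an arbitrary policy."""
    expected_q = sum(policy[a] * q[a] for a in q)
    kl = sum(policy[a] * math.log(policy[a] / pi_ref[a]) for a in q if policy[a] > 0)
    return expected_q - eta * kl
```

Evaluating `regularized_objective` at the policy returned by `soft_optimal_step` reproduces the returned value, while any other policy, including `pi_ref` itself, scores no higher.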

We also adopt Direct Preference Optimization (DPO) (Rafailov et al., 2023; Azar et al., 2023; Zhao et al., 2023; Ethayarajh et al., 2024) to solve Equation 2, primarily due to computational constraints. In particular, we use the multi-turn DPO (M-DPO) framework from Xiong et al. (2024a), since it allows deterministic external observation in the state transition. To facilitate direct preference learning and bypass explicit reward training, we impose the following trajectory-level Bradley-Terry (BT) preference structure (Bradley & Terry, 1952). Specifically, given two trajectories $\tau^{1},\tau^{2}$, the probability of $\tau^{1}$ being preferred over $\tau^{2}$, denoted as $\tau^{1}\succ\tau^{2}$, is

$$\mathbb{P}(\tau^{1}\succ\tau^{2})=\sigma\big(u^{\star}(\tau^{1})-u^{\star}(\tau^{2})\big),$$

我们还采用了直接偏好优化 (Direct Preference Optimization, DPO) (Rafailov et al., 2023; Azar et al., 2023; Zhao et al., 2023; Ethayarajh et al., 2024) 来解决公式 2,主要是由于计算限制。特别是,我们使用了 Xiong et al. (2024a) 提出的多轮 DPO (M-DPO) 框架,因为它允许在状态转换中进行确定性的外部观察。为了促进直接偏好学习并绕过显式的奖励训练,我们采用了以下轨迹级别的 Bradley-Terry (BT) 偏好结构 (Bradley & Terry, 1952)。具体来说,给定两条轨迹 $\tau^{1},\tau^{2}$,$\tau^{1}$ 比 $\tau^{2}$ 更受偏好的概率,记为 $\tau^{1}\succ\tau^{2}$,是

where $\sigma(z)=1/(1+\exp(-z))$ is the sigmoid function. Following Xiong et al. (2024a), we take log on both sides of (4), and connect a utility function $u_{\theta}$ with associated policy $\pi_{\theta}$ and value $V_{\theta}$ :

其中 $\sigma(z)=1/(1+\exp(-z))$ 是 sigmoid 函数。根据 Xiong 等人 (2024a) 的研究,我们对 (4) 两边取对数,并将效用函数 $u_{\theta}$ 与相关策略 $\pi_{\theta}$ 和值 $V_{\theta}$ 联系起来:

For a pair of trajectories $\tau^{w},\tau^{l}$ where $\tau^{w}\succ\tau^{l}$ , we have

对于一对轨迹 $\tau^{w},\tau^{l}$,其中 $\tau^{w}\succ\tau^{l}$,我们有

Plugging this reward-difference parameterization into the log-likelihood of the BT model, $\sum_{(\tau^{w},\tau^{l})\in\mathcal{D}}\log\sigma\big(u_{\theta}(\tau^{w})-u_{\theta}(\tau^{l})\big)$, we obtain the loss function $\mathcal{L}_{\mathrm{M-DPO}}(\theta)$:

将奖励差异参数化代入 BT 模型的对数似然 $\sum_{(\tau^{w},\tau^{l})\in\mathcal{D}}\log\sigma\big(u_{\theta}(\tau^{w})-u_{\theta}(\tau^{l})\big)$ 中,我们得到损失函数 $\mathcal{L}_{\mathrm{M-DPO}}(\theta)$:
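To make the shape of such a trajectory-level loss concrete, the following is a minimal sketch for a single preference pair. It assumes the common DPO-style parameterization in which the utility difference equals $\eta$ times the difference of summed per-turn log-ratios $\log \pi_{\theta}/\pi_{\mathrm{ref}}$ over the model's own actions (external observations contribute no terms); this specific form and all function names are assumptions for illustration, not a transcription of the paper's equation.

```python
import math
from typing import Sequence


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigma(z)) with sigma(z) = 1 / (1 + exp(-z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def trajectory_log_ratio(logp_policy: Sequence[float], logp_ref: Sequence[float]) -> float:
    """Sum over turns h of log pi_theta(a_h | s_h) - log pi_ref(a_h | s_h)."""
    return sum(p - r for p, r in zip(logp_policy, logp_ref))


def m_dpo_pair_loss(logp_w: Sequence[float], ref_w: Sequence[float],
                    logp_l: Sequence[float], ref_l: Sequence[float],
                    eta: float = 0.1) -> float:
    """Negative BT log-likelihood for one (preferred, dispreferred) trajectory pair."""
    margin = eta * (trajectory_log_ratio(logp_w, ref_w)
                    - trajectory_log_ratio(logp_l, ref_l))
    return -log_sigmoid(margin)
```

When the policy equals the reference, the margin is zero and the loss is $\log 2$; raising the likelihood of the preferred trajectory relative to the reference lowers it.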

4. Experiment Results

4. 实验结果

Task, datasets, and data format. We evaluate models’ mathematical reasoning abilities using standard benchmarks, including MATH500 (Hendrycks et al., 2020), OlympiadBench (He et al., 2024), and Minerva Math (Lewkowycz et al., 2022). These datasets are of moderate size, which allows reliable and efficient model evaluation, and cover topics such as algebra, geometry, probability, number theory, and calculus. For training, we mainly use the prompts in the NuminaMath-CoT dataset (Beeching et al., 2024). Specifically, we use a 50K subset for the self-rewarding IFT stage, a 10K subset for validation and model selection, and the remaining data for RL training. During inference, the model generates up to 4096 tokens, with vLLM 0.5.4 (Kwon et al., 2023) accelerating the process.

任务、数据集和数据格式。我们使用标准基准评估模型的数学推理能力,包括 MATH500 (Hendrycks et al., 2020)、OlympiadBench (He et al., 2024) 和 Minerva Math (Lewkowycz et al., 2022)。这些数据集为可靠且高效的模型评估提供了适中的规模,涵盖了代数、几何、概率、数论和微积分等主题。在训练过程中,我们主要使用 NumiaMath-CoT 数据集 (Beeching et al., 2024) 中的提示。具体来说,我们使用 50K 的子集进行自奖励 IFT 阶段,10K 的子集用于验证和模型选择,其余数据用于 RL 训练。在推理过程中,模型最多生成 4096 个 token,并使用 VLLM 0.5.4 (Kwon et al., 2023) 加速该过程。

Evaluation metrics. We employ two categories of metrics to evaluate our models: (1) mathematical reasoning and self-correction and (2) reward model accuracy. First, we follow Kumar et al. (2024) to consider the following metrics to evaluate the models’ ability of mathematical reasoning and self-correction.

评估指标。我们采用两类指标来评估我们的模型:(1) 数学推理与自我修正能力,以及 (2) 奖励模型的准确性。首先,我们遵循 Kumar 等人 (2024) 的研究,考虑以下指标来评估模型的数学推理与自我修正能力。

Due to the nature of the self-rewarding reasoning framework, we additionally include metrics that measure the model's accuracy as a reward model. We defer a more comprehensive study of the proposed framework, using a slightly simplified template, to the next section, where we will additionally compute the ratio of modifying a correct answer to an incorrect one when facing a misleading reward.

由于自我奖励推理框架的性质,我们额外加入了衡量准确性的指标作为奖励模型。我们还将对提出的框架进行更全面的理解,并采用稍简化的模板,留到下一节讨论,届时我们将额外计算在面对误导性奖励时,将正确答案修改为错误答案的比例。

- RM Accuracy $(a,b)$ : class-dependent accuracy for correct and incorrect trajectories. In other words, $a$ is the true positive rate and $b$ is the true negative rate;

- RM 准确度 $(a,b)$:正确和错误轨迹的类别相关准确度。换句话说,$a$ 是真阳性率,$b$ 是真阴性率;
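The class-dependent accuracy can be computed as in the sketch below. The record layout (a pair of booleans per first attempt) and the function name are illustrative assumptions.

```python
from typing import Iterable, Tuple


def rm_accuracy(records: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """records holds (is_correct, judged_correct) for each first attempt.

    Returns (a, b) in percent: a is the accuracy on correct trajectories
    (true positive rate), b the accuracy on incorrect ones (true negative rate).
    """
    tp = pos = tn = neg = 0
    for is_correct, judged_correct in records:
        if is_correct:
            pos += 1
            tp += int(judged_correct)
        else:
            neg += 1
            tn += int(not judged_correct)
    a = 100.0 * tp / pos if pos else float("nan")
    b = 100.0 * tn / neg if neg else float("nan")
    return a, b
```

Reporting the two classes separately avoids a single accuracy number being dominated by whichever class is more frequent at turn 1.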

Table 3. Main results of experiments with Qwen2.5-Math-7B-base. The single-turn baselines are used to train a regular CoT reasoning model. The baselines marked with † perform self-correction under an external prompt, where training may be applied to enhance this ability. We use greedy decoding following the convention of recent open-source projects on mathematical reasoning.

表 3: Qwen2.5-Math-7B-base 实验的主要结果。单轮基线用于训练常规的 CoT 推理模型。带有 † 的基线在外部提示下进行自我校正,其中训练可能用于增强这种能力。我们遵循最近开源数学推理项目的惯例,使用贪婪解码。

| Benchmark | Method | Turn 1 | Final Accuracy | $\Delta(t_1,t_2)$ | $\Delta^{i\rightarrow c}(t_1,t_2)$ | $\Delta^{c\rightarrow i}(t_1,t_2)$ |
|---|---|---|---|---|---|---|
| MATH500 | Single-turn STaR/RAFT | 77.0 | 77.0 | - | - | - |
| MATH500 | Single-turn DPO | 76.8 | 76.8 | - | - | - |
| MATH500 | Single-turn PPO | 79.4 | 79.4 | - | - | - |
| MATH500 | Prompt with Gold RM† | 65.4 | 66.8 | 1.4 | 1.4 | 0.0 |
| MATH500 | Intrinsic self-correction† | 65.4 | 51.4 | -14.0 | 1.4 | 15.4 |
| MATH500 | STaR/RAFT for self-correction† | 71.6 | 70.4 | -1.2 | 5.0 | 6.2 |
| MATH500 | STaR/RAFT+ for self-correction† | 72.0 | 71.2 | -0.8 | 3.0 | 3.8 |
| MATH500 | Self-rewarding IFT | 72.6 | 77.2 | 4.6 | 5.0 | 0.4 |
| MATH500 | Self-rewarding IFT + DPO w correctness | 72.8 | 78.6 | 5.8 | 6.0 | 0.2 |
| MATH500 | Self-rewarding IFT + PPO w correctness | 75.8 | 80.2 | 4.4 | 4.8 | 0.4 |
| OlympiadBench | Single-turn STaR/RAFT | 40.1 | 40.1 | - | - | - |
| OlympiadBench | Single-turn DPO | 39.0 | 39.0 | - | - | - |
| OlympiadBench | Single-turn PPO | 39.5 | 39.5 | - | - | - |
| OlympiadBench | Prompt with Gold RM† | 23.4 | 25.6 | 2.2 | 2.2 | 0 |
| OlympiadBench | Intrinsic self-correction† | 23.4 | 18.1 | -5.3 | 2.2 | 7.5 |
| OlympiadBench | STaR/RAFT for self-correction† | 36.5 | 32.5 | -4.0 | 7.2 | 11.2 |
| OlympiadBench | STaR/RAFT+ for self-correction† | 35.7 | 35.5 | -0.2 | 3.2 | 3.4 |
| OlympiadBench | Self-rewarding IFT | 35.4 | 39.4 | 4.0 | 4.7 | 0.7 |
| OlympiadBench | Self-rewarding IFT + DPO w correctness | 37.6 | 40.1 | 2.5 | 3.5 | 1.0 |
| OlympiadBench | Self-rewarding IFT + PPO w correctness | 41.0 | 43.4 | 2.4 | 2.8 | 0.4 |
| Minerva Math | Single-turn STaR/RAFT | 32.0 | 32.0 | - | - | - |
| Minerva Math | Single-turn DPO | 31.6 | 31.6 | - | - | - |
| Minerva Math | Single-turn PPO | 33.1 | 33.1 | - | - | - |
| Minerva Math | Prompt with Gold RM† | 9.9 | 11.7 | 1.8 | 1.8 | 0 |
| Minerva Math | Intrinsic self-correction† | 9.9 | 8.4 | -1.5 | 1.8 | 3.3 |
| Minerva Math | STaR/RAFT for self-correction† | 28.7 | 29.4 | 0.7 | 1.8 | 1.1 |
| Minerva Math | STaR/RAFT+ for self-correction† | 25.7 | 25.3 | -0.4 | 0.8 | 1.2 |
| Minerva Math | Self-rewarding IFT | 23.2 | 28.7 | 5.5 | 7.3 | 1.8 |
| Minerva Math | Self-rewarding IFT + DPO w correctness | 26.8 | 34.6 | 7.8 | 9.6 | 1.8 |
| Minerva Math | Self-rewarding IFT + PPO w correctness | 34.0 | 38.4 | 4.4 | 5.1 | 0.7 |

- Ratio $p^{c\rightarrow i}(t_{1},t_{2})$ : probability of modifying a correct answer to incorrect when facing a misleading reward.

- 比率 $p^{c\rightarrow i}(t_{1},t_{2})$ :在面对误导性奖励时,将正确答案修改为错误答案的概率。

For all evaluations, we use zero-shot CoT prompting and greedy decoding following the convention of recent projects with Qwen-2.5-Math models.

在所有评估中,我们遵循 Qwen-2.5-Math 模型的近期项目惯例,使用零样本 CoT 提示和贪婪解码。

Experiment setup of self-rewarding IFT. We use Qwen2.5-Math-7B-base as the base model, which is continuously pre-trained on extensive mathematical and instruction-following data. Sequential rejection sampling (introduced in Section 3.1) is used for data collection, resulting in a dataset of 32K trajectories, where we roughly balance between correct and incorrect first attempts. In fine-tuning, samples are packed into 8192-token blocks, and we use a learning rate of 1e-5, a cosine scheduler, and a 0.05 warm-up ratio. The global batch size is set to 32. We train the models for three epochs and eventually select the checkpoint at the end of the first epoch.

自奖励 IFT 的实验设置。我们使用 Qwen2.5-Math-7B-base 作为基础模型,该模型在广泛的数学和指令跟随数据上进行了持续预训练。数据收集采用顺序拒绝采样(在第 3.1 节中介绍),生成了一个包含 32K 条轨迹的数据集,其中我们大致平衡了正确和错误的首次尝试。在微调过程中,样本被打包成 8192-token 的块,我们使用 1e-5 的学习率、余弦调度器和 0.05 的预热比例。全局批量大小设置为 32。我们训练模型三个 epoch,最终选择第一个 epoch 结束时的模型。
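For readers unfamiliar with the schedule, the sketch below shows one common reading of "cosine scheduler with a 0.05 warm-up ratio": linear warm-up over the first 5% of steps to the peak rate of 1e-5, then cosine decay. The linear warm-up shape and the decay to zero are assumptions; the text does not specify them.

```python
import math


def lr_at(step: int, total_steps: int,
          peak_lr: float = 1e-5, warmup_ratio: float = 0.05) -> float:
    """Learning rate at a given optimizer step (0-indexed)."""
    warmup = max(1, int(warmup_ratio * total_steps))
    if step < warmup:
        # Linear warm-up that reaches peak_lr on the last warm-up step.
        return peak_lr * (step + 1) / warmup
    # Cosine decay from peak_lr down to 0 over the remaining steps.
    progress = (step - warmup) / max(1, total_steps - warmup)
    return 0.5 * peak_lr * (1.0 + math.cos(math.pi * progress))
```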

Experiment setup of reinforcement learning. For iterative DPO training, we adopt setups from Xiong et al. (2024a) with a learning rate of $2\times10^{-7}$, a cosine scheduler, and a batch size of 32. We tune $\eta\in\{0.1,0.5\}$ and also train with and without an NLL loss in the DPO objective (Pang et al., 2024; Xie et al., 2024a; Liu et al., 2024). For each iteration, we use 20K prompts and collect 8 responses per prompt. Then, we extract the comparison pairs using the correctness score. If all responses receive the same score, we skip the prompt. A 10K validation set from NuminaMath-CoT is used for model selection. The primary metric for model selection is accuracy at turn 2. When models achieve comparable turn-2 accuracy, we choose the model with the higher $\Delta(t_{1},t_{2})$ improvement. The best model of these training setups is used as the representative model. For PPO training, we mainly follow a public example script of veRL (Sheng et al., 2024), which is publicly available$^{2}$.

强化学习的实验设置。对于迭代 DPO 训练,我们采用 Xiong 等人 (2024a) 的设置,学习率为 $2\times10^{-7}$,使用余弦调度器,批量大小为 32。我们调整 $\eta\in{0.1,0.5}$,并在 DPO 目标中训练时考虑是否包含 NLL 损失 (Pang 等人, 2024; Xie 等人, 2024a; Liu 等人, 2024)。每次迭代中,我们使用 20K 个提示,每个提示收集 8 个响应。然后,我们使用正确性分数提取比较对。如果所有响应的分数相同,则跳过该提示。我们使用 NuminaMathCoT 的 10K 验证集进行模型选择。模型选择的主要指标是第二轮准确率。当模型的第二轮准确率相近时,我们选择 $\Delta(t_{1},t_{2})$ 改进更大的模型。这些训练设置中的最佳模型被用作代表模型。对于 PPO 训练,我们主要遵循 veRL (Sheng 等人, 2024) 的公开示例脚本,该脚本已公开2。
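The pair-extraction step can be sketched as follows. The text states only that pairs are built from the correctness score and that prompts whose responses all share one score are skipped; sampling one highest-scored and one lowest-scored response at random is an assumption made here for illustration, as is the function name.

```python
import random
from typing import Optional, Sequence, Tuple


def build_pair(responses: Sequence[str], scores: Sequence[float],
               rng: random.Random) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected), or None when all responses share one score."""
    if len(set(scores)) < 2:
        return None  # no preference signal: skip this prompt
    best, worst = max(scores), min(scores)
    chosen = rng.choice([r for r, s in zip(responses, scores) if s == best])
    rejected = rng.choice([r for r, s in zip(responses, scores) if s == worst])
    return chosen, rejected
```

Prompts that return `None` contribute nothing to the DPO update for that iteration.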

Baseline: improving the self-correction ability. We consider several baseline methods in the self-correction literature, including training-free approaches and fine-tuning. For training-free methods, we evaluate intrinsic self-correction (Huang et al., 2023), where models rely solely on prompting to perform correction, and self-correction with external ground-truth rewards (Qu et al., 2024). The prompts used for these methods are provided in Appendix B. We also include STaR and RAFT approaches (Zelikman et al., 2022; Dong et al., 2023), which are inspired by expert iteration in reinforcement learning (Anthony et al., 2017). These methods generate numerous trajectories with the base model, filter out failed attempts, and fine-tune on successfully revised responses. Following Kumar et al. (2024), we study a variant, $\mathrm{STaR/RAFT+}$ , which augments the training set with a set of correct-to-correct trajectories. To ensure a fair comparison, the total number of training samples for STaR/RAFT $(+)$ is kept the same as in our self-rewarding IFT stage.

基线:提升自我纠正能力。我们考虑了自我纠正文献中的几种基线方法,包括无需训练的方法和微调方法。对于无需训练的方法,我们评估了内在自我纠正(Huang et al., 2023),即模型仅依赖提示进行纠正,以及使用外部真实奖励的自我纠正(Qu et al., 2024)。这些方法使用的提示见附录 B。我们还纳入了 STaR 和 RAFT 方法(Zelikman et al., 2022; Dong et al., 2023),这些方法受到强化学习中专家迭代的启发(Anthony et al., 2017)。这些方法使用基础模型生成大量轨迹,过滤掉失败的尝试,并在成功修订的响应上进行微调。根据 Kumar et al. (2024),我们研究了一个变体 $\mathrm{STaR/RAFT+}$,它通过一组正确到正确的轨迹来增强训练集。为了确保公平比较,STaR/RAFT $(+)$ 的训练样本总数与我们的自我奖励 IFT 阶段保持一致。

Baseline: improving the single-turn reasoning ability. We also consider several baselines that improve the models’ single-turn reasoning ability without self-correction. These methods include STaR/RAFT (Zelikman et al., 2022; Dong et al., 2023), iterative DPO (Xiong et al., 2023) with the correctness score to rank data, and PPO with the correctness score. In particular, we adopt the iterative variants of STaR/RAFT and DPO because we observe that they achieve much better performance and thus serve as competitive baselines. We start from Qwen-2.5-Math-7B and train with only self-generated data for a fair comparison. We remark that Qwen-2.5-Math-7B has been trained on a large amount of instruction-following data in the pre-training stage, and recent open-source projects also show that it can be used as the starting checkpoint without distillation from larger LLMs or human instructions (Zeng et al., 2025; Zhang et al., 2025).

基线:提升单轮推理能力。此外,我们还考虑了几种无需自我校正即可提升模型单轮推理能力的基线方法。这些方法包括 STaR/RAFT (Zelikman et al., 2022; Dong et al., 2023)、使用正确性评分对数据进行排序的迭代 DPO (Xiong et al., 2023),以及使用正确性评分的 PPO。特别地,我们在 STaR/RAFT 和 DPO 的实现中采用了迭代算法,因为我们观察到它们作为竞争基线表现更好。我们从 Qwen-2.5-Math-7B 开始,仅使用自生成数据进行训练以确保公平比较。我们注意到,Qwen-2.5-Math7B 在预训练阶段已经训练了大量指令跟随数据,并且最近的开源项目也表明它可以作为起始检查点,而无需从更大的大语言模型或人类指令中进行蒸馏 (Zeng et al., 2025; Zhang et al., 2025)。

4.1. Main Results

4.1. 主要结果

We report the main results in Table 3. Note that there can be an error of 0.1 due to rounding.

我们在表 3 中报告了主要结果。请注意,由于四舍五入,可能存在 0.1 的误差。
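The columns of Table 3 are related by simple bookkeeping, which the sketch below spells out. It assumes, consistently with the reported numbers, that both transition rates are measured as percentages of all test problems, so that $\Delta(t_1,t_2)=\Delta^{i\rightarrow c}(t_1,t_2)-\Delta^{c\rightarrow i}(t_1,t_2)$ and final accuracy equals turn-1 accuracy plus $\Delta(t_1,t_2)$. The function and key names are illustrative.

```python
from typing import Dict, Sequence


def self_correction_metrics(turn1: Sequence[bool], final: Sequence[bool]) -> Dict[str, float]:
    """Per-problem correctness at turn 1 and after correction -> metrics in percent."""
    n = len(turn1)
    i_to_c = sum(1 for a, b in zip(turn1, final) if (not a) and b)
    c_to_i = sum(1 for a, b in zip(turn1, final) if a and (not b))

    def pct(count: int) -> float:
        return 100.0 * count / n

    return {
        "turn1": pct(sum(turn1)),
        "final": pct(sum(final)),
        "delta": pct(sum(final) - sum(turn1)),
        "delta_i_to_c": pct(i_to_c),
        "delta_c_to_i": pct(c_to_i),
    }
```

Since each reported column is rounded independently, these identities can be off by 0.1 in the table.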

Intrinsic self-correction with prompting fails in general.

内在自我纠正通过提示在一般情况下失败

We first observe that intrinsic self-correction without explicit reward signals typically reduces final test accuracy. Upon analyzing the outputs, we find that models tend to modify their initial responses regardless of their correctness, as they lack a mechanism to determine when to refine their answers versus when to terminate the correction process. Moreover, even when given ground-truth rewards, base models with prompting alone achieve only marginal improvement in incorrect-to-correct transitions $\Delta^{i\rightarrow c}(t_{1},t_{2})$. For example, on the MATH-500 benchmark, prompting with the gold reward only leads to $\Delta^{i\rightarrow c}(t_{1},t_{2})=1.4\%$.

我们首先观察到,在没有明确奖励信号的情况下,内在的自我修正通常会降低最终的测试准确性。通过分析输出结果,我们发现模型倾向于修改其初始响应,无论其正确与否,因为它们缺乏一种机制来确定何时应该改进答案,何时应该终止修正过程。此外,即使提供了真实奖励,仅通过提示的基础模型在错误到正确的转换中也仅实现了微小的改进 $\Delta^{i\rightarrow c}(t_{1},t_{2})$。例如,在 MATH-500 基准测试中,使用黄金奖励进行提示仅导致 $\Delta^{i\rightarrow c}(t_{1},t_{2})=1.4%$。

We also notice that the STaR/RAFT method, which fine-tunes models on revised incorrect attempts, fails to significantly improve performance. It increases $\Delta^{i\rightarrow c}(t_{1},t_{2})$ (incorrect-to-correct transitions) on MATH500 from $1.4\%$ to $5.0\%$, but still suffers from a $\Delta^{c\rightarrow i}(t_{1},t_{2})$ (correct-to-incorrect transitions) of $6.2\%$. Additionally, the STaR/RAFT+ variant, which includes correct-to-correct trajectories, becomes more conservative in modifying the initial attempt. While this reduces incorrect corrections ($\Delta^{c\rightarrow i}(t_{1},t_{2})$), it also lowers $\Delta^{i\rightarrow c}(t_{1},t_{2})$, so the final accuracy still falls below the turn-1 accuracy. These findings align with prior studies and highlight the limitations of intrinsic self-correction, even with training (Huang et al., 2023; Kumar et al., 2024).

我们还注意到,STaR/RAFT 方法通过对修正的错误尝试进行微调,未能显著提升性能。它将 MATH500 上的 $\Delta^{i\rightarrow c}(t_{1},t_{2})$(从错误到正确的转换)从 $1.4%$ 提高到 $5.0%$,但仍然存在 $6.2%$ 的 $\Delta^{c\rightarrow i}(t_{1},t_{2})$(从正确到错误的转换)。此外,包含正确到正确轨迹的 STaR/RAFT$^+$ 变体在修改初始尝试时变得更加保守。虽然这减少了错误的修正 $(\Delta^{c\rightarrow i}(t_{1},t_{2}))$,但也降低了 $\Delta^{i\rightarrow c}(t_{1},t_{2})$,最终导致测试准确率下降。这些发现与先前的研究一致,并强调了即使通过训练,内在自我修正的局限性(Huang et al., 2023; Kumar et al., 2024)。

Self-rewarding reasoning models significantly outperform existing baselines of self-correction. Across all tasks, self-rewarding reasoning models consistently improve final accuracy with higher $\Delta(t_{1},t_{2})$ compared to baseline methods. We notice that fine-tuning on the synthetic trajectories with self-correction behavior yields models with much higher $\Delta^{i\rightarrow c}(t_{1},t_{2})$, suggesting that the models are better at correcting errors in their self-generated responses. Distinct from STaR/RAFT, models trained with self-rewarding IFT also exhibit significantly lower $\Delta^{c\rightarrow i}(t_{1},t_{2})$, indicating they are better at recognizing when to stop due to the additional self-rewarding signals. For instance, on MATH500, self-rewarding IFT attains $\Delta^{c\rightarrow i}(t_{1},t_{2})=0.4\%$, compared with $6.2\%$ for STaR/RAFT and $3.8\%$ for STaR/RAFT+ (Table 3).

自我奖励推理模型显著优于现有的自我校正基线。在所有任务中,自我奖励推理模型通过更高的 $\Delta(t_{1},t_{2})$ 持续提高最终准确率,相较于基线方法。我们注意到,在具有自我校正行为的合成轨迹上进行微调,得到的模型具有更高的 $\Delta^{i\rightarrow c}(t_{1},t_{2})$ ,这表明模型更擅长纠正自我生成响应中的错误。与 STaR/RAFT 不同,通过自我奖励 IFT 训练的模型也表现出显著更低的 $\Delta^{c\rightarrow i}(t_{1},t_{2})$ ,表明由于额外的自我奖励信号,它们更擅长识别何时停止。例如,在 MATH500 上,

Since STaR/RAFT $(+)$ and self-rewarding IFT use the same data synthesis approach (rejection sampling) but under different self-correction frameworks, these results highlight the advantage of our self-rewarding reasoning framework.

由于 STaR/RAFT $(+)$ 和自我奖励的 IFT 使用了相同的数据合成方法(拒绝采样),但在不同的自我校正框架下,这些结果凸显了我们自我奖励推理框架的优势。

Self-rewarding reasoning models improve the final accuracy compared to the single-turn baselines. We also compare the self-rewarding reasoning models with RL training against their single-turn counterparts. For both PPO and DPO, the self-rewarding reasoning models achieve higher final test accuracy due to the additional correction step. For instance, self-rewarding IFT + PPO yields a model with $43.4\%$ final accuracy on OlympiadBench and $38.4\%$ on Minerva Math, compared to $39.5\%$ and $33.1\%$ for the single-turn counterpart. Similarly, with DPO, the self-rewarding reasoning models achieve $78.6\%$ on MATH500, $40.1\%$ on OlympiadBench, and $34.6\%$ on Minerva Math, while the single-turn DPO model attains $76.8\%$, $39.0\%$, and $31.6\%$, respectively.

与单轮基线相比,自奖励推理模型提高了最终准确率。我们还将自奖励推理模型与单轮模型进行了对比。无论是 PPO 还是 DPO,自奖励推理模型由于额外的修正步骤,都实现了更高的最终测试准确率。例如,自奖励的 $\mathrm{IFT}+\mathrm{PPO}$ 在 Olympiad Bench 上的最终准确率为 $43.4%$,在 Minerva Math 上为 $38.4%$,而单轮模型分别为 $39.5%$ 和 $33.1%$。同样,使用 DPO 时,自奖励推理模型在 MATH500 上达到 $78.6%$,在 Olympiad Bench 上为 $40.1%$,在 Minerva Math 上为 $34.6%$,而单轮 DPO 模型分别为 $76.8%$、$39.0%$ 和 $31.6%$。

Table 4. The results of reward modeling accuracy (%). We report the accuracy of self-rewarding signals for the three benchmarks in two separate classes. For instance, MATH-500 C is the accuracy of recognizing a correct trajectory, while MATH-500 W is the accuracy of recognizing a wrong trajectory. The model highlighted by (*) is selected as the final model.

表 4. 奖励建模准确率结果 $(%)$ 。我们报告了三个基准在两个独立类别中的自奖励信号准确率。例如,MATH-500 C 是识别正确轨迹的准确率,而 MATH-500 W 是识别错误轨迹的准确率。标有 $(*)$ 的模型被选为最终模型。

| Method | MATH-500 C | MATH-500 W | OlympiadBench C | OlympiadBench W | Minerva Math C | Minerva Math W |
|---|---|---|---|---|---|---|
| Self-rewarding IFT | 93.0 | 47.7 | 89.6 | 45.9 | 91.7 | 36.1 |
| PPO Step 100 | 97.5 | 56.4 | 98.1 | 33.5 | 87.4 | 29.7 |
| PPO Step 220 (*) | 98.6 | 47.6 | 97.8 | 39.3 | 94.2 | 32.4 |
| DPO Iter 2 | 91.3 | 56.2 | 81.9 | 51.8 | 86.7 | 36.2 |
| DPO Iter 5 (*) | 92.0 | 50.6 | 88.2 | 44.5 | 92.4 | 37.4 |

However, self-rewarding models use more tokens at inference due to the additional correction step. For a fair comparison, we will also study the behavior of self-rewarding correction under scaled test-time compute budgets in Section 5.

然而,由于额外的校正步骤,自我奖励模型在推理时使用了更多的Token。为了进行公平比较,我们将在第5节中研究在扩展测试时间计算预算下自我奖励校正的行为。

Deep RL algorithm outperforms the direct alignment algorithms. We observe that PPO outperforms iterative DPO by a large margin. For example, the PPO-trained model achieves a $43.4\%$ final accuracy on OlympiadBench, compared to $40.1\%$ for the DPO method. This suggests that when absolute reward signals are available, enforcing a preference structure (Bradley-Terry model) is unnecessary and may degrade performance. Another possible reason is the limited data utilization in DPO. We notice that, with our setup, we can collect comparison pairs for only $40\%$ to $60\%$ of the prompts. For the remaining prompts, models either generate no correct trajectories or all trajectories are correct. As a result, DPO utilizes less training data than PPO, which may contribute to its lower accuracy.

深度强化学习算法优于直接对齐算法。我们观察到,PPO(Proximal Policy Optimization)大幅优于迭代DPO(Direct Preference Optimization)。例如,PPO训练的模型在Olympiad Bench上的最终准确率为43.4%,而DPO方法的准确率为40.1%。这表明,当绝对奖励信号可用时,强制偏好结构(Bradley-Terry模型)是不必要的,并且可能会降低性能。另一个可能的原因是DPO的数据利用率有限。我们注意到,在我们的设置中,我们只能为40%到60%的提示收集比较对。对于剩余的提示,模型要么没有生成正确的轨迹,要么所有轨迹都是正确的。因此,DPO使用的训练数据比PPO少,这可能是其准确率较低的原因。
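A back-of-the-envelope calculation indicates why so many prompts yield no pair. Under the simplifying assumption (introduced here, not made in the paper) that the 8 responses to a prompt are independent and each is correct with the same probability $p$, a prompt is usable only if its responses are neither all correct nor all incorrect:

$$\Pr[\text{prompt yields a pair}] = 1 - p^{8} - (1-p)^{8}.$$

For example, $p=0.9$ gives $1-0.9^{8}-0.1^{8}\approx 0.57$, so prompts that the model already solves reliably, or almost never solves, are frequently skipped.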

Reward model (RM) accuracy. Since our self-rewarding framework unifies the generator and reward model, we evaluate the accuracy of our models as a reward model. We observe that Qwen2.5-Math-7B-base can fail to strictly follow the format, either by omitting the self-evaluation step or by not generating the evaluation result in the pre-determined format, possibly because the model has not been fine-tuned for instruction following. However, this happens in less than $10\%$ of the cases, so we focus on the samples with the evaluation step and further involve human supervision to summarize the statistics. We report the results in Table 4. We observe that the self-rewarding IFT model is much better at recognizing correct trajectories than incorrect ones, as its accuracy on correct trajectories is generally higher than $90\%$, even though we balance the two types of trajectories in the training set. This directly leads to the small $\Delta^{c\rightarrow i}(t_{1},t_{2})$ we observe in the main table.

奖励模型 (RM) 准确率。由于我们的自奖励框架将生成器和奖励模型统一起来,我们评估了模型作为奖励模型的准确率。我们观察到,Qwen2.5-Math-7B-base 可能无法严格遵循格式,例如省略自评估步骤或未以预定格式生成评估结果,这可能是因为模型未经过指令跟随的微调。然而,这种情况发生的概率不到 $10%$,因此我们专注于包含评估步骤的样本,并进一步引入人工监督来总结统计数据。我们在表 4 中报告了结果。我们观察到,自奖励的 IFT 模型在识别正确轨迹方面表现更好,准确率通常高于 $90%$,尽管我们在训练集中平衡了两种类型的轨迹。这直接导致了我们在主表中观察到的较小的 $\Delta^{c\rightarrow i}(t_{1},t_{2})$。

We also notice that RL training (both PPO and DPO) does not consistently improve the reward modeling accuracy. Analysis of PPO checkpoints (initial model, Step 100, and Step 220) clearly shows a trade-off between the accuracy on correct and on incorrect trajectories. PPO training explores different trade-offs between them, with the goal of maximizing the final accuracy. A similar observation applies to DPO training. Moreover, the best model of PPO training tends to prioritize recognizing correct trajectories, at the cost of lower accuracy in identifying incorrect responses, which aligns with the lower $\Delta^{c\rightarrow i}(t_{1},t_{2})$ and also the lower $\Delta^{i\rightarrow c}(t_{1},t_{2})$. This may be because correcting an incorrect answer is generally more challenging than maintaining a correct initial response. We defer a more detailed study of the impact of data composition on reward modeling accuracy to the next section.

我们还注意到,RL 训练(包括 PPO 和 DPO)并不总是能提高奖励建模的准确性。对 PPO 检查点(初始模型、第 100 步和第 220 步)的分析清楚地显示了正确分类准确率和错误分类准确率之间的权衡。PPO 训练探索了它们之间的不同权衡,目标是最大化最终准确率。类似的观察也适用于 DPO 训练。此外,PPO 训练的最佳模型往往优先识别正确的轨迹,而代价是识别错误响应的准确率较低,这与较低的 $\Delta^{c\rightarrow i}(t_{1},t_{2})$ 和 $\Delta^{i\rightarrow c}(t_{1},t_{2})$ 一致。这可能是因为纠正一个错误的答案通常比保持正确的初始响应更具挑战性。我们将数据组成对奖励建模准确率影响的更详细研究推迟到下一节。

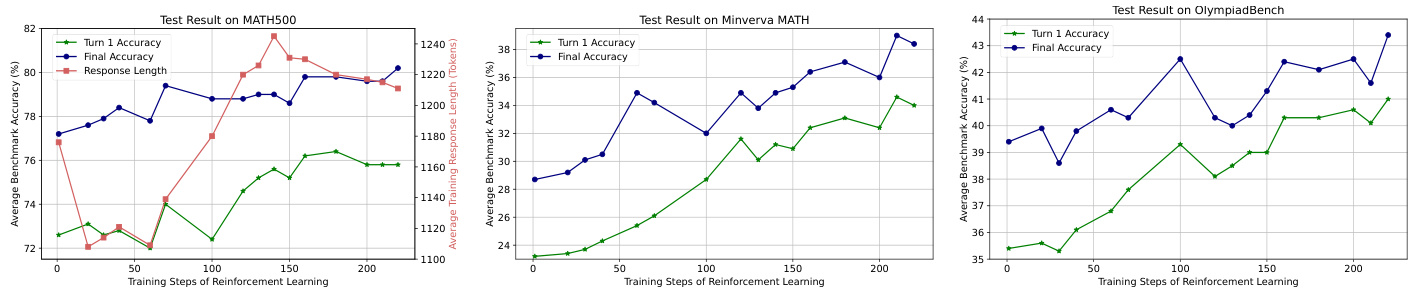

Learning dynamic of the RL stage. While RL training improves final accuracy, the final test accuracy is determined by both the turn-1 accuracy and $\Delta(t_{1},t_{2})$. In particular, we notice that the final accuracy gains come primarily from the higher turn-1 accuracy, as the models after RL training usually attain a much higher turn-1 accuracy but also a lower $\Delta^{i\rightarrow c}(t_{1},t_{2})$. To understand the learning dynamic of RL training, we plot test accuracy on three benchmarks against the RL training steps in Figure 1. We observe that in the early stage of RL training, both the turn-1 accuracy and the final accuracy increase, and their gap $\Delta(t_{1},t_{2})$ also increases or stays at a stable level. This indicates that the models learn to use their knowledge better in the first round and improve or maintain a comparable level of correction ability. Around training step 100, however, the increase in final accuracy comes mainly from the higher turn-1 accuracy and the gap narrows, indicating less reliance on self-correction.

RL阶段的学习动态。虽然RL训练提高了最终准确率,但最终测试准确率由第一轮准确率和$\Delta(t_{1},t_{2})$共同决定。特别是,我们注意到最终准确率的提升主要来自更高的第一轮准确率,因为经过RL训练的模型通常具有更高的第一轮准确率,但$\Delta^{i\rightarrow c}(t_{1},t_{2})$较低。为了理解RL训练的学习动态,我们在图1中绘制了三个基准测试的准确率随RL训练步骤的变化。我们观察到,在RL训练的早期阶段,第一轮准确率和最终准确率都增加,它们之间的差距$\Delta(t_{1},t_{2})$也增加或保持稳定水平。这表明模型在第一轮中学会了更好地利用其知识,并提高或保持了相当的修正能力。然而,在训练步骤100左右,最终准确率的提升主要来自更高的第一轮准确率,它们之间的差距缩小,表明对自我修正的依赖减少。

We also plot the average generation length in the first figure. Initially, the length decreases because the Qwen2.5-Math-7B-base model tends to generate a lot of Python code, resulting in lengthy responses. We observe that the code usually takes many tokens and can lead to an incomplete reasoning path, so it is discouraged by the reward signal. This observation is consistent with Zeng et al. (2025). Then, the length increases in the next stage, indicating that the reflection and self-correction abilities are also encouraged by RL training. Finally, the length decreases again, along with a higher turn-1 accuracy and a smaller $\Delta(t_{1},t_{2})$, indicating that the models learn to provide a correct answer in their first attempt and that the self-correction pattern is discouraged. This is also supported by the reward model accuracy, where the RL-trained models tend to be more conservative and evaluate the attempt as correct.

我们还在第一张图中绘制了平均生成长度。最初,长度减少是因为 Qwen2.5-Math-7B-base 模型倾向于生成许多 Python 代码,导致响应较长。我们观察到,代码通常占用大量 Token,可能导致推理路径不完整,并且这种模式受到奖励信号的抑制。这一观察结果与 Zeng 等人 (2025) 的研究一致。随后,长度在下一阶段增加,表明反思和自我纠正能力也受到强化学习 (RL) 训练的鼓励。最后,长度再次减少,同时伴随着更高的第一轮准确率和更小的 $\Delta(t_{1},t_{2})$,表明模型学会了在第一次尝试中提供正确答案,并且自我纠正模式受到抑制。这一点也得到了奖励模型准确率的支持,经过 RL 训练的模型往往更加保守,并将尝试评估为正确。

Figure 1. The learning dynamic of the PPO training, initialized from the self-rewarding IFT model. We also plot the average generation length during the training in the first figure.

图 1: 从自奖励 IFT 模型初始化的 PPO 训练学习动态。我们还在第一张图中绘制了训练期间的平均生成长度。

5. More Experiment Results with a Two-turn Conversation Framework and Llama Models

5. 使用两轮对话框架和 Llama 模型的更多实验结果

In this section, we continue to investigate the self-rewarding reasoning framework.

在本节中,我们继续研究自我奖励推理框架。

5.1. Data Format: Simplified Two-turn Framework

5.1. 数据格式:简化的双轮对话框架

Previously, we combined multiple reasoning steps into a single long CoT trajectory, which aligns with common practice. However, this approach poses significant challenges for our study, as models, particularly Qwen2.5-Math-7B-base, often fail to strictly follow instructions for evaluating or revising responses based on their history. For instance, models sometimes place the evaluation result inside \boxed{}, or do not correct the response even though the self-evaluation result is “[VERIFY] wrong”. Additionally, models can perform multiple rounds of self-evaluation and correction, but these steps are tightly coupled and cannot be easily decoupled into separate stages.

此前,我们将多个推理步骤合并为一个长的 CoT 轨迹,这与常见做法一致。然而,这种方法对我们的研究提出了重大挑战,因为模型——尤其是 Qwen2.5-Math-7Bbase——往往无法严格遵循基于其历史记录评估或修订响应的指令。例如,即使自我评估结果为“[VERIFY] wrong”,模型有时仍会生成评估结果,无论是否纠正响应。此外,模型可以进行多轮自我评估和纠正,但这些步骤紧密耦合,无法轻易拆分为独立的阶段。

To address these issues, we adopt a simplified two-turn conversation framework, where the user provides explicit instructions between different steps. Specifically, after receiving the mathematical problem, the model will first generate the CoT reasoning $a^{1}$ and self-evaluation $y$. Then, the user provides a deterministic instruction $o$ based on the self-evaluation $y$:

为了解决这些问题,我们采用了一个简化的两轮对话框架,用户在不同步骤之间提供明确的指令。具体来说,在接收到数学问题后,模型会首先生成 CoT 推理 $a^{1}$ 和自我评估 $y$。然后,用户根据自我评估 $y$ 提供一个确定性指令 $o$:

- Since your initial response is self-evaluated as incorrect, there might be an error in the solution above because of lack of understanding of the question. Please correct the error, if any, and rewrite the solution. Put your final answer within \boxed{};

- 由于您的初始响应自评为不正确,上述解决方案可能存在因对问题理解不足而产生的错误。如有错误,请纠正并重写解决方案。将您的最终答案放在 \boxed{} 中;

- Since your initial response is self-evaluated as correct, confirm it and provide no further modifications. Put your final answer within \boxed{}.

- 由于您的初始响应自评为正确,请确认并且不要做进一步的修改。将您的最终答案放在 \boxed{} 中。
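Because the instruction $o$ is a deterministic function of the self-evaluation $y$, it can be written as a small lookup, as in the sketch below. The `[VERIFY] correct` tag, the reuse of the `[VERIFY] wrong` tag in this template, the `\boxed{}` suffix, and the function name are all assumptions for illustration; the actual evaluation template follows Zhang et al. (2024b) and may differ.

```python
from typing import Optional

WRONG_TAG = "[VERIFY] wrong"
CORRECT_TAG = "[VERIFY] correct"  # assumed counterpart of the "wrong" tag

REVISE_PROMPT = (
    "Since your initial response is self-evaluated as incorrect, there might be "
    "an error in the solution above because of lack of understanding of the "
    "question. Please correct the error, if any, and rewrite the solution. "
    "Put your final answer within \\boxed{}."
)
CONFIRM_PROMPT = (
    "Since your initial response is self-evaluated as correct, confirm it and "
    "provide no further modifications. Put your final answer within \\boxed{}."
)


def next_instruction(self_evaluation: str) -> Optional[str]:
    """Map the self-evaluation y to the user instruction o (None if unparseable)."""
    if WRONG_TAG in self_evaluation:
        return REVISE_PROMPT
    if CORRECT_TAG in self_evaluation:
        return CONFIRM_PROMPT
    return None
```

Keeping $o$ deterministic is what lets the state transition fit the deterministic-observation setting used for M-DPO.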

Meanwhile, when collecting the data, the self-rewarding signal is determined directly by the ground-truth oracle reward with the template designed in Zhang et al. (2024b), without additional reasoning. While this simplification may reduce reward modeling accuracy (Zhang et al., 2024b), it facilitates controlled experimentation by allowing modifications to the self-rewarding signal. Similar frameworks—without the self-rewarding component—have been explored in previous works (Huang et al., 2023; Kumar et al., 2024). See Table 6 for an illustrative example.

与此同时,在收集数据时,自我奖励信号直接由真实奖励通过 Zhang 等人 (2024b) 设计的模板确定,无需额外的推理。虽然这种简化可能会降低奖励建模的准确性 (Zhang 等人, 2024b),但它通过允许修改自我奖励信号,便于进行受控实验。类似的框架——不包含自我奖励组件——在之前的工作中已被探索 (Huang 等人, 2023; Kumar 等人, 2024)。参见表 6 中的示例。

5.2. Experiment Setup

5.2. 实验设置

Base model, task, and datasets. Qwen2.5-Math-7B-base serves as a strong and specialized base model, which is pre-trained on a large mathematical corpus. To ensure generality and a more comprehensive evaluation, we experiment with the Llama model series. Specifically, our base models include Llama-3-8B-it and Llama-3-SFT, the latter being fine-tuned on OpenMathInstruct-2-1M (Toshniwal et al., 2024a). While both models are generally weaker than Qwen2.5-Math-7B-base, Llama-3-SFT is stronger than Llama-3-8B-it.

基础模型、任务和数据集。Qwen2.5-Math-7B-base 是一个强大且专业的基础模型,它在大量数学语料库上进行了预训练。为了确保通用性和更全面的评估,我们对 Llama 模型系列进行了实验。具体来说,我们的基础模型包括 Llama-3-8B-it 和 Llama-3-SFT,后者在 Open-Math Instruct 2-1M (Toshniwal et al., 2024a) 上进行了微调。虽然这两个模型通常比 Qwen2.5-Math-7B-base 弱,但 Llama-3-SFT 比 Llama-3-8B-it 更强。

In this section, we evaluate the models’ mathematical reasoning abilities using the MATH and GSM8K benchmarks, which are well-suited to their capacities. For MATH, we use 7.5K training problems during the self-rewarding IFT stage, supplemented by 7.5K prompts from OpenMathInstruct-2 for M-DPO training, with a similar setup for GSM8K. Model selection is performed using a 1K validation set from OpenMathInstruct-2. Since we formulate the task as a multi-turn chat problem, we can directly use Axolotl’s training code$^{3}$. During inference, the model generates up to 2048 tokens per round, with vLLM 0.5.4 (Kwon et al., 2023) accelerating the process.

在本节中,我们使用 MATH 和 GSM8K 基准来评估模型的数学推理能力,这些基准非常适合它们的能力。对于 MATH,我们在自我奖励的 IFT 阶段使用了 7.5K 训练问题,并在 M-DPO 训练中补充了来自 OpenMathInstruct-2 的 7.5K 提示,GSM8K 的设置类似。模型选择使用来自 OpenMathInstruct-2 的 1K 验证集进行。由于我们将任务制定为多轮对话问题,因此可以直接使用 Axolotl 的训练代码$^{3}$。在推理过程中,模型每轮生成最多 2048 个 Token,并使用 vLLM 0.5.4 (Kwon et al., 2023) 加速这一过程。

Table 5. Main results of different methods on the test sets of MATH (first two groups of results) and GSM8K (last two groups of results). Models are evaluated with temperature 1.0, and results are averaged over three random seeds. Additional results using a temperature of 0.7 are included in the appendix due to space constraints.

表 5: 不同方法在 MATH (前两组结果) 和 GSM8K (后两组结果) 测试集上的主要结果。模型在温度为 1.0 的情况下进行评估,结果取三次随机种子的平均值。由于篇幅限制,使用温度为 0.7 的额外结果包含在附录中。

| Base Model | Method | Turn 1 | Final Accuracy | $\Delta(t_1,t_2)$ | $\Delta^{i\rightarrow c}(t_1,t_2)$ | $\Delta^{c\rightarrow i}(t_1,t_2)$ |
|---|---|---|---|---|---|---|
| Llama-3-8B-it | Prompt with Gold RM | 20.7 | 30.3 | 9.6 | 9.6 | 0 |
| Llama-3-8B-it | Prompt with External ORM | 20.7 | 26.2 | 5.5 | 8.8 | 3.3 |
| Llama-3-8B-it | Intrinsic self-correction | 20.7 | 22.0 | 1.3 | 8.8 | 7.5 |
| Llama-3-8B-it | STaR/RAFT for self-correction | 22.3 | 26.1 | 3.7 | 11.4 | 7.7 |
| Llama-3-8B-it | STaR/RAFT+ for self-correction | 22.7 | 27.1 | 4.4 | 11.7 | 7.3 |
| Llama-3-8B-it | Self-rewarding IFT | 22.6 | 27.9 | 5.3 | 8.8 | 3.5 |
| Llama-3-8B-it | Self-rewarding IFT + Gold RM | 22.6 | 33.9 | 11.3 | 11.3 | 0 |
| Llama-3-SFT | Prompt with Gold RM | 36.2 | 45.0 | 8.8 | 8.8 | 0 |
| Llama-3-SFT | Prompt with External ORM | 36.2 | 39.2 | 3.0 | 7.5 | 4.5 |
| Llama-3-SFT | Intrinsic self-correction | 36.2 | 35.3 | -0.9 | 8.5 | 9.4 |
| Llama-3-SFT | STaR/RAFT for self-correction | 38.5 | 36.7 | -1.8 | 10.5 | 12.3 |
| Llama-3-SFT | STaR/RAFT+ for self-correction | 37.9 | 38.8 | 0.9 | 9.4 | 8.5 |
| Llama-3-SFT | Self-rewarding IFT | 37.1 | 40.3 | 3.2 | 7.2 | 4.0 |
| Llama-3-SFT | Self-rewarding IFT + Gold RM | 37.1 | 46.8 | 9.7 | 9.7 | 0 |
| Llama-3-8B-it | Prompt with Gold RM | 64.0 | 72.1 | 8.1 | 8.1 | 0 |
| Llama-3-8B-it | Prompt with External ORM | 64.0 | 68.0 | 4.0 | 5.9 | 1.9 |
| Llama-3-8B-it | Intrinsic self-correction | 64.0 | 48.1 | -15.9 | 7.1 | 23.0 |
| Llama-3-8B-it | STaR/RAFT for self-correction | 76.0 | 63.1 | -12.9 | 7.9 | 20.8 |
| Llama-3-8B-it | STaR/RAFT+ for self-correction | 75.7 | 67.0 | -8.7 | 8.6 | 17.3 |
| Llama-3-8B-it | Self-rewarding IFT | 73.2 | 78.2 | 5.0 | 9.1 | 4.1 |
| Llama-3-SFT | Prompt with Gold RM | 74.6 | 83.1 | 8.5 | 8.5 | 0 |
| Llama-3-SFT | Prompt with External ORM | 74.6 | 76.7 | 2.1 | 5.5 | 3.4 |
| Llama-3-SFT | Intrinsic self-correction | 74.6 | 67.4 | -7.2 | 7.6 | 14.8 |
| Llama-3-SFT | STaR/RAFT for self-correction | 73.8 | 67.4 | -6.4 | 9.0 | 15.4 |
| Llama-3-SFT | STaR/RAFT+ for self-correction | 73.9 | 73.5 | -0.4 | 8.6 | 9.0 |
| Llama-3-SFT | Self-rewarding IFT | 76.1 | 79.2 | 3.1 | 4.7 | 1.6 |

Training Setup for Llama SFT. For the self-rewarding IFT stage, we use a learning rate of 2e-6 with a batch size of 32 for Llama models and 64 for Llama-3-SFT training. Outcome-supervised reward models (ORMs) are trained using standard SFT recipes and datasets, as described in Xiong et al. (2024b). Full hyperparameter configurations will be available in our GitHub repository.

We observe that models occasionally fail to follow the instruction to perform self-rewarding corrections, though this occurs in less than $5\%$ of cases. In such scenarios, we terminate after the first round and use its output as the final answer.
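The termination rule above can be sketched as a small two-turn driver. This is a minimal illustration under stated assumptions, not the authors' inference code: `generate` is a hypothetical callable standing in for the model, `parse_self_evaluation` and `two_turn_answer` are names introduced here, and the evaluation question and correction prompt are copied from the two-turn example conversation given later in this section.

```python
import re
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]

# Prompt strings taken from the example conversation in this section.
EVAL_QUESTION = "Is my most recent final answer correct (Yes or No)?"
CORRECTION_PROMPT = (
    "Since your initial response is self-evaluated as incorrect, there might be "
    "an error in the solution above because of lack of understanding of the "
    "question. Please correct the error, if any, and rewrite the solution."
)


def parse_self_evaluation(response: str) -> Optional[bool]:
    """Return True/False for a Yes/No verdict, or None if no verdict is found."""
    match = re.search(re.escape(EVAL_QUESTION) + r"\s*(Yes|No)\b", response)
    if match is None:
        return None  # the model did not follow the self-rewarding format
    return match.group(1) == "Yes"


def two_turn_answer(generate: Callable[[List[Message]], str], question: str) -> str:
    """Run at most two rounds and return the response used as the final answer.

    `generate` is a hypothetical stand-in for the model: it maps a chat
    history to the next assistant response.
    """
    messages: List[Message] = [{"role": "user", "content": question}]
    first = generate(messages)
    verdict = parse_self_evaluation(first)
    # Missing verdict (instruction not followed) or a "Yes" verdict:
    # terminate after the first round and keep its output.
    if verdict is None or verdict:
        return first
    # "No" verdict: request a correction and use the second-round output.
    messages.append({"role": "assistant", "content": first})
    messages.append({"role": "user", "content": CORRECTION_PROMPT})
    return generate(messages)
```

Treating a missing verdict the same way as a "Yes" verdict keeps the first-round output in both cases, which matches the fallback described above; a real pipeline would also need to extract the boxed answer from the returned text.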

5.3. Main Results with Llama Models

Experiments with Llama models align well with the Qwen model. Our experiments with Llama models show trends similar to those observed with Qwen models. Specifically, intrinsic self-correction, with or without STaR/RAFT-like training, fails to reliably correct errors in self-generated responses. Models tend to modify their initial responses regardless of correctness, so these methods help primarily weaker models for which most first attempts are incorrect (e.g., the MATH task with Llama-3-8B-it). However, for stronger models that solve most problems correctly on the first attempt (e.g., the GSM8K task with Llama-3-SFT), intrinsic self-correction and STaR/RAFT methods significantly reduce turn-2 accuracy. In contrast, self-rewarding IFT models consistently improve on their turn-1 accuracy by correcting errors while preserving already correct responses. This demonstrates the generality of the proposed framework.

To further evaluate the self-rewarding IFT model, we replace the self-rewarding signal with the oracle reward, which eliminates the influence of reward-signal quality and directly assesses the model’s ability to correct incorrect responses. For example, the baseline Llama-3-SFT achieves $\Delta^{i\rightarrow c}(t_{1},t_{2})=8.8\%$, while the model fine-tuned with self-rewarding IFT exhibits a higher $\Delta^{i\rightarrow c}(t_{1},t_{2})=9.7\%$, indicating improved correction capabilities.
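The bookkeeping behind these numbers can be made explicit. The sketch below assumes that $\Delta^{i\rightarrow c}(t_{1},t_{2})$ is the percentage of problems whose answer changes from incorrect to correct between the two turns, that $\Delta^{c\rightarrow i}(t_{1},t_{2})$ is the reverse, and that $\Delta(t_{1},t_{2})$ is the final accuracy minus the turn-1 accuracy. These definitions are consistent with the rows of the results table (for Llama-3-SFT with self-rewarding IFT, $40.3-37.1=3.2=7.2-4.0$). The function name, dictionary keys, and input format are illustrative choices, not part of the paper.

```python
from typing import Dict, Sequence


def self_correction_metrics(turn1_correct: Sequence[bool],
                            final_correct: Sequence[bool]) -> Dict[str, float]:
    """Compute two-turn self-correction metrics as percentages.

    Inputs are per-problem correctness flags for the first attempt and for
    the final answer (assumed input format).
    """
    n = len(turn1_correct)
    if n == 0 or n != len(final_correct):
        raise ValueError("inputs must be non-empty and of equal length")

    pairs = list(zip(turn1_correct, final_correct))
    turn1_hits = sum(1 for t1, _ in pairs if t1)
    final_hits = sum(1 for _, t2 in pairs if t2)
    fixed = sum(1 for t1, t2 in pairs if not t1 and t2)   # incorrect -> correct
    broken = sum(1 for t1, t2 in pairs if t1 and not t2)  # correct -> incorrect

    def pct(count: int) -> float:
        return 100.0 * count / n

    return {
        "turn1_acc": pct(turn1_hits),
        "final_acc": pct(final_hits),
        "delta": pct(final_hits) - pct(turn1_hits),
        "delta_i2c": pct(fixed),
        "delta_c2i": pct(broken),
    }
```

Because every problem that changes status is counted in exactly one of the two transition buckets, `delta` always equals `delta_i2c - delta_c2i`. With the oracle reward, correct first attempts are never revised, which is why the gold-reward rows of the table report $\Delta^{c\rightarrow i}=0$ and $\Delta=\Delta^{i\rightarrow c}$.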

An example of the self-rewarding reasoning path under the two-turn conversation framework.

User: Three positive integers $a$, $b$, and $x$ form an O’Hara triple $(a,b,x)$ if $\sqrt{a}+\sqrt{b}=x$. For example, $(1,4,3)$ is an O’Hara triple because $\sqrt{1}+\sqrt{4}=3$. If $(36,25,x)$ is an O’Hara triple, determine the value of $x$. Please reason step by step and put your final answer within $\boxed{}$.

A: Here is my solution: Begin by substituting the numbers into the definition of an O’Hara triple: $x=\sqrt{36}+\sqrt{25}$. Factor out the perfect squares from each term to simplify: $x=\sqrt{9\times4}+\sqrt{25}$. Use the perfect square roots of each term and combine them: $x=3\sqrt{4}+5$. Multiply 4 with the root: $x=12+5$. Add 12 and 5: Final Answer: The final answer is $\boxed{x=17}$. I hope it is correct. Is my most recent final answer correct (Yes or No)? No.

User: Since your initial response is self-evaluated as incorrect, there might be an error in the solution above because of lack of understanding of the question. Please correct the error, if any, and rewrite the solution.

A: Let’s re-evaluate the solution: We are given that $(36,25,x)$ is an O’Hara triple, so we can write: $\sqrt{a}+\sqrt{b}=x$. Substituting $a=36$ and $b=25$, we get: $\sqrt{36}+\sqrt{25}=x$. Simplifying the square roots, we get: $6+5=x$. Adding 6 and 5, we get: $x=11$
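The arithmetic in this example is easy to check mechanically. The helper below (`ohara_x` is a name introduced here for illustration, not part of the paper) returns $x$ for given $a$ and $b$. It relies on the fact that $\sqrt{a}+\sqrt{b}$ can only be an integer when both $a$ and $b$ are perfect squares. The first attempt goes wrong at the step $3\sqrt{4}$, which equals $3\times2=6$ rather than 12.

```python
import math


def ohara_x(a: int, b: int) -> int:
    """Return x such that sqrt(a) + sqrt(b) = x for positive integers a, b."""
    if a <= 0 or b <= 0:
        raise ValueError("a and b must be positive integers")
    root_a, root_b = math.isqrt(a), math.isqrt(b)
    # The sum of the two square roots is an integer only if both inputs
    # are perfect squares.
    if root_a * root_a != a or root_b * root_b != b:
        raise ValueError("a and b must both be perfect squares")
    return root_a + root_b


print(ohara_x(1, 4))    # the example triple from the problem statement
print(ohara_x(36, 25))  # the triple asked about in the question
```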

5.4. Self-rewarding Reasoning Framework Improves Efficiency in Test-time Inference Compute Scaling

Self-correction requires generating multiple LLM responses, making it crucial to compare models under equivalent inference budgets. As noted by Huang et al. (2023), prior self-correction approaches often perform no better than self-consistency (Wang et al., 2022) when constrained to an equal number of responses. To address this, we analyze self-rewarding correction under scaled test-time compute budgets by sampling $N$ reasoning paths and using majority voting for the final output. We report the results in Figure 2, where the DPO-aligned model is described in Section 5.6. For both MATH and GSM8K tasks, with a fixed inference budget, the self-rewarding correction model consistently outperforms independent sampling methods. For e