YOLOE: Real-Time Seeing Anything

Ao Wang¹*, Lihao Liu¹*, Hui Chen¹, Zijia Lin¹, Jungong Han¹, Guiguang Ding¹
¹Tsinghua University

Abstract

Object detection and segmentation are widely employed in computer vision applications, yet conventional models like the YOLO series, while efficient and accurate, are limited by predefined categories, which hinders their adaptability in open scenarios. Recent open-set methods leverage text prompts, visual cues, or the prompt-free paradigm to overcome this, but often compromise between performance and efficiency due to high computational demands or deployment complexity. In this work, we introduce YOLOE, which integrates detection and segmentation across diverse open prompt mechanisms within a single highly efficient model, achieving real-time seeing anything. For text prompts, we propose the Re-parameterizable Region-Text Alignment (RepRTA) strategy. It refines pretrained textual embeddings via a re-parameterizable lightweight auxiliary network and enhances visual-textual alignment with zero inference and transferring overhead. For visual prompts, we present the Semantic-Activated Visual Prompt Encoder (SAVPE). It employs decoupled semantic and activation branches to bring improved visual embedding and accuracy with minimal complexity. For the prompt-free scenario, we introduce the Lazy Region-Prompt Contrast (LRPC) strategy. It utilizes a built-in large vocabulary and a specialized embedding to identify all objects, avoiding costly language model dependency. Extensive experiments show YOLOE's exceptional zero-shot performance and transfer ability with high inference efficiency and low training cost. Notably, on LVIS, with $3\times$ less training cost and a $1.4\times$ inference speedup, YOLOE-v8-S surpasses YOLO-Worldv2-S by 3.5 AP. When transferring to COCO, YOLOE-v8-L achieves 0.6 $AP^{b}$ and 0.4 $AP^{m}$ gains over closed-set YOLOv8-L with nearly $4\times$ less training time. Code and models are available at https://github.com/THU-MIG/yoloe.

1. Introduction

Object detection and segmentation are foundational tasks in computer vision [15, 48], with widespread applications spanning autonomous driving [2], medical analyses [55], and robotics [8]. Traditional approaches like the YOLO series [1, 3, 21, 47] have leveraged convolutional neural networks to achieve remarkable real-time performance. However, their dependence on predefined object categories constrains flexibility in practical open scenarios. Such scenarios increasingly demand models capable of detecting and segmenting arbitrary objects guided by diverse prompt mechanisms, such as texts, visual cues, or no prompt at all.

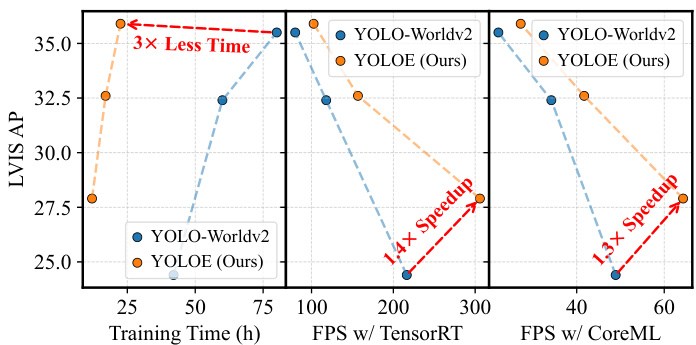

Figure 1. Comparison of performance, training cost, and inference efficiency between YOLOE (Ours) and the advanced YOLO-Worldv2 with open text prompts. LVIS AP is evaluated on the minival set, and FPS with TensorRT and with CoreML is measured on a T4 GPU and an iPhone 12, respectively. The results highlight the advantages of YOLOE.

Given this, recent efforts have shifted towards enabling models to generalize to open prompts [5, 20, 49, 80]. They target either a single prompt type, e.g., GLIP [32], or multiple prompt types in a unified way, e.g., DINO-X [49]. Specifically, with region-level vision-language pre-training [32, 37, 65], text prompts are usually processed by a text encoder to serve as contrastive objectives for region features [20, 49], achieving recognition of arbitrary categories, e.g., YOLO-World [5]. Visual prompts are often encoded as class embeddings tied to specified regions, through interaction with image features or a language-aligned visual encoder, so that similar objects can be identified [5, 19, 30, 49], e.g., T-Rex2 [20]. In the prompt-free scenario, existing methods typically integrate language models, finding all objects and then sequentially generating the corresponding category names conditioned on region features [49, 62], e.g., GenerateU [33].

Despite notable advancements, a single model that supports diverse open prompts for arbitrary objects with high efficiency and accuracy is still lacking. For example, DINO-X [49] features a unified architecture, which, however, incurs resource-intensive training and inference overhead. Additionally, the individual designs for different prompts in separate works exhibit suboptimal trade-offs between performance and efficiency, making it difficult to directly combine them into one model. For example, text-prompted approaches often incur substantial computational overhead when incorporating large vocabularies, due to the complexity of cross-modality fusion [5, 32, 37, 49]. Visual-prompted methods usually compromise deployability on edge devices owing to transformer-heavy designs or reliance on an additional visual encoder [20, 30, 67]. Prompt-free approaches, meanwhile, depend on large language models, introducing considerable memory and latency costs [33, 49].

In light of these, in this paper, we introduce YOLOE (ye), a highly efficient, unified, and open object detection and segmentation model that, like the human eye, works under different prompt mechanisms: texts, visual inputs, and the prompt-free paradigm. We begin with YOLO models, whose efficacy has been widely proven. For text prompts, we propose a Re-parameterizable Region-Text Alignment (RepRTA) strategy, which employs a lightweight auxiliary network to improve pretrained textual embeddings for better visual-semantic alignment. During training, because the textual embeddings are pre-cached, only the auxiliary network needs to process text prompts, incurring low additional cost compared with closed-set training. At inference and transferring, the auxiliary network is seamlessly re-parameterized into the classification head, yielding an architecture identical to YOLOs with zero overhead. For visual prompts, we design a Semantic-Activated Visual Prompt Encoder (SAVPE). By formalizing regions of interest as masks, SAVPE fuses them with multi-scale features from PAN to produce grouped, low-dimensional prompt-aware weights in an activation branch, and extracts prompt-agnostic semantic features in a semantic branch. Prompt embeddings are derived by aggregating the two, resulting in favorable performance with minimal complexity. For the prompt-free scenario, we introduce the Lazy Region-Prompt Contrast (LRPC) strategy. Without relying on costly language models, LRPC leverages a specialized prompt embedding to find all objects and a built-in large vocabulary for category retrieval. By matching only the anchor points with identified objects against the vocabulary, LRPC ensures high performance with low overhead.

Thanks to these designs, YOLOE excels in detection and segmentation across diverse open prompt mechanisms within one model, enjoying high inference efficiency and low training cost. Notably, as shown in Fig. 1, with $3\times$ less training cost, YOLOE-v8-S significantly outperforms YOLO-Worldv2-S [5] by 3.5 AP on LVIS [14], with $1.4\times$ and $1.3\times$ inference speedups on T4 and iPhone 12, respectively. In the visual-prompted and prompt-free settings, YOLOE-v8-L outperforms T-Rex2 by 3.3 $\mathrm{AP}_{r}$ with $2\times$ less training data, and GenerateU by 0.4 AP with $6.3\times$ fewer parameters. For transferring to COCO [34], YOLOE-v8-M/L outperforms YOLOv8-M/L by 0.4/0.6 $\mathrm{AP}^{b}$ and 0.4/0.4 $\mathrm{AP}^{m}$ with nearly $4\times$ less training time. We hope that YOLOE can establish a strong baseline and inspire further advancements in real-time open prompt-driven vision tasks.

2. Related Work

Traditional detection and segmentation. Traditional approaches for object detection and segmentation primarily operate under closed-set paradigms. Early two-stage frameworks [4, 12, 15, 48], exemplified by Faster R-CNN [48], introduce region proposal networks (RPNs) followed by region-of-interest (ROI) classification and regression. Meanwhile, single-stage detectors [10, 35, 38, 56, 72] prioritize speed through grid-based predictions within a single network. The YOLO series [1, 21, 27, 47, 59, 60] plays a significant role in this paradigm and is widely used in the real world. Moreover, DETR [28] and its variants [28, 69, 77] mark a major shift by eliminating heuristic-driven components through transformer-based architectures. To achieve finer-grained results, existing instance segmentation methods predict pixel-level masks rather than bounding box coordinates [15]. For this, YOLACT [3] enables real-time instance segmentation by combining prototype masks with per-instance mask coefficients. Based on DINO [69], MaskDINO [29] utilizes query embeddings and a high-resolution pixel embedding map to produce binary masks.

Text-prompted detection and segmentation. Recent advancements in open-vocabulary object detection [13, 25, 61, 68, 74–76] have focused on detecting novel categories by aligning visual features with textual embeddings. Specifically, GLIP [32] unifies object detection and phrase grounding through grounded pre-training on large-scale image-text pairs, demonstrating robust zero-shot performance. DetCLIP [65] facilitates open-vocabulary learning by enriching concepts with descriptions. Besides, Grounding DINO [37] enhances this by integrating cross-modality fusion into DINO, improving the alignment between text prompts and visual representations. YOLO-World [5] further shows the potential of pre-training small detectors with open recognition capabilities based on the YOLO architecture. YOLO-UniOW [36] builds upon YOLO-World by leveraging an adaptive decision-learning strategy. Similarly, several open-vocabulary instance segmentation models [11, 18, 26, 45, 63] learn rich visual-semantic knowledge from advanced foundation models to segment novel object categories. For example, X-Decoder [79] and OpenSeeD [71] explore both open-vocabulary detection and segmentation tasks. APE [54] introduces a universal visual perception model that aligns and prompts all objects in an image using various text prompts.

Visual-prompted detection and segmentation. While text prompts offer a generic description, certain objects can be challenging to describe with language alone, such as those requiring specialized domain knowledge. In such cases, visual prompts can guide detection and segmentation more flexibly and specifically, complementing text prompts [19, 20]. OV-DETR [67] and OWL-ViT [41] leverage CLIP encoders to process text and image prompts. MQDet [64] augments text queries with class-specific visual information from query images. DINOv [30] explores visual prompts as in-context examples for generic and referring vision tasks. T-Rex2 [20] integrates visual and text prompts through region-level contrastive alignment. For segmentation, SAM [23], trained on large-scale data, presents a flexible and strong model that can be prompted interactively and iteratively. SEEM [80] further explores segmenting objects with more diverse prompt types. Semantic-SAM [31] excels in semantic comprehension and granularity detection, handling both panoptic and part segmentation tasks.

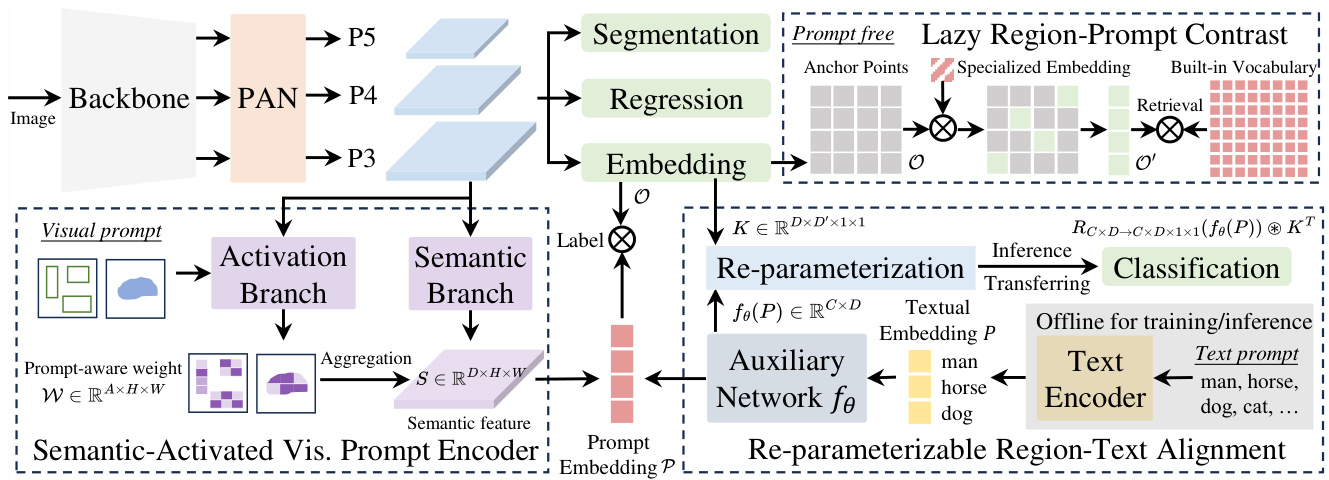

Figure 2. Overview of YOLOE, which supports detection and segmentation for diverse open prompt mechanisms. For text prompts, we design a re-parameterizable region-text alignment strategy to improve performance with zero inference and transferring overhead. For visual prompts, SAVPE is employed to encode visual cues into an enhanced prompt embedding at minimal cost. For the prompt-free setting, we introduce a lazy region-prompt contrast strategy that efficiently provides category names for all identified objects by retrieval.

Prompt-free detection and segmentation. Existing approaches still depend on explicit prompts during inference for open-set detection and segmentation. To address this limitation, several works [33, 40, 49, 62, 66] explore integrating generative language models to produce descriptions for all detected objects. For instance, GRiT [62] employs a text decoder for both dense captioning and object detection tasks. DetCLIPv3 [66] trains an object captioner on large-scale data, enabling the model to generate rich label information. GenerateU [33] leverages a language model to generate object names in a free-form way.

Closing remarks. To the best of our knowledge, aside from DINO-X [49], few efforts have achieved object detection and segmentation across various open prompt mechanisms within a single architecture. However, DINO-X entails extensive training cost and notable inference overhead, severely constraining its practicality for real-world edge deployment. In contrast, our YOLOE aims to deliver an efficient and unified model that runs in real time and is easy to deploy.

3. Methodology

In this section, we detail the design of YOLOE. Building upon YOLOs (Sec. 3.1), YOLOE supports text prompts through RepRTA (Sec. 3.2), visual prompts via SAVPE (Sec. 3.3), and the prompt-free scenario with LRPC (Sec. 3.4).

3.1. Model architecture

As shown in Fig. 2, YOLOE adopts the typical architecture of YOLOs [1, 21, 47], consisting of a backbone, PAN, a regression head, a segmentation head, and an object embedding head. The backbone and PAN extract multi-scale features from the image. For each anchor point, the regression head predicts the bounding box for detection, and the segmentation head produces the prototypes and mask coefficients for segmentation [3]. The object embedding head follows the structure of the classification head in YOLOs, except that the output channel number of the last $1\times1$ convolution layer is changed from the class number in the closed-set scenario to the embedding dimension. Meanwhile, given text and visual prompts, we employ RepRTA and SAVPE, respectively, to encode them as normalized prompt embeddings $\mathcal{P}$. These serve as the classification weights and are contrasted with the anchor points' object embeddings $\mathcal{O}$ to obtain category labels. The process can be formalized as

$$\mathrm{Label}=\mathcal{O}\cdot\mathcal{P}^{\top}:\ \mathbb{R}^{N\times D}\times\mathbb{R}^{D\times C}\rightarrow\mathbb{R}^{N\times C},$$

where $N$ denotes the number of anchor points, $C$ indicates the number of prompts, and $D$ means the feature dimension of embeddings, respectively.
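To make the shapes concrete, here is a minimal NumPy sketch of the contrast between anchor object embeddings $\mathcal{O}$ ($N\times D$) and prompt embeddings $\mathcal{P}$ ($C\times D$), together with YOLACT-style mask assembly from the prototypes and per-anchor mask coefficients that the segmentation head produces. The function names, the toy sizes, and the choice to L2-normalize $\mathcal{O}$ as well as $\mathcal{P}$ are illustrative assumptions, not the released implementation.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    # Scale vectors to unit L2 norm so dot products become cosine similarities.
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)

def contrast(obj_emb, prompt_emb):
    """obj_emb: (N, D) anchor object embeddings; prompt_emb: (C, D) prompt embeddings.
    Returns (N, C) scores O @ P^T and the best-matching prompt index per anchor."""
    scores = obj_emb @ prompt_emb.T  # R^{N x D} x R^{D x C} -> R^{N x C}
    return scores, scores.argmax(axis=1)

def assemble_masks(coeffs, protos):
    """YOLACT-style masks. coeffs: (N, k) per-anchor mask coefficients;
    protos: (k, H, W) shared prototypes. Returns (N, H, W) soft masks."""
    k, h, w = protos.shape
    lin = coeffs @ protos.reshape(k, h * w)  # linear combination of prototypes
    return (1.0 / (1.0 + np.exp(-lin))).reshape(-1, h, w)  # per-pixel sigmoid

rng = np.random.default_rng(0)
P = l2_normalize(rng.normal(size=(3, 8)))  # C=3 prompts, D=8 (toy sizes)
O = P[[2, 0, 1, 2]]                        # N=4 anchors that exactly match prompts 2, 0, 1, 2
scores, labels = contrast(O, P)
masks = assemble_masks(rng.normal(size=(4, 5)), rng.normal(size=(5, 6, 6)))
print(scores.shape, labels, masks.shape)   # (4, 3) [2 0 1 2] (4, 6, 6)
```

Because the prompt embeddings simply act as the rows of a classification weight matrix, swapping the prompt set changes $C$ without touching the rest of the network.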

3.2. Re-parameterizable region-text alignment

In open-set scenarios, the alignment between textual and object embeddings determines the accuracy of identified categories. Prior works usually introduce complex cross-modality fusion to improve the visual-textual representation for better alignment [5, 37]. However, these approaches incur notable computational overhead, especially with a large number of texts. Given this, we present the Re-parameterizable Region-Text Alignment (RepRTA) strategy, which improves pretrained textual embeddings during training through a re-parameterizable lightweight auxiliary network. The alignment between textual embeddings and the anchor points' object embeddings is thereby enhanced with zero inference and transferring cost.

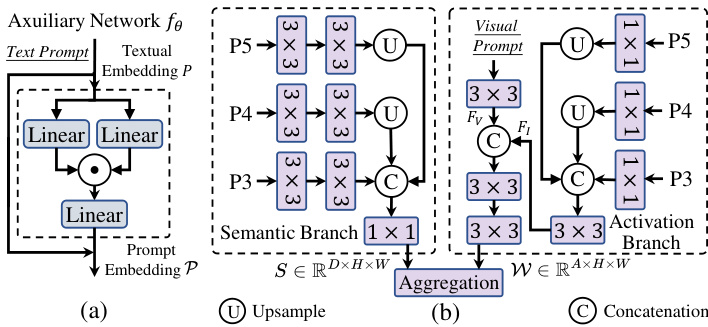

Specifically, given text prompts $T$ of length $C$, we first employ the CLIP text encoder [44, 57] to obtain the pretrained textual embedding $P=\mathrm{TextEncoder}(T)$. Before training, we cache the embeddings of all texts in the datasets in advance, so the text encoder can be removed with no extra training cost. Meanwhile, as shown in Fig. 3.(a), we introduce a lightweight auxiliary network $f_{\theta}$ with only one feed-forward block [53, 58], where $\theta$ denotes the trainable parameters; it introduces low overhead compared with closed-set training. It derives the enhanced textual embedding $\mathcal{P}=f_{\theta}(P)\in\mathbb{R}^{C\times D}$, which is contrasted with the anchor points' object embeddings during training, leading to improved visual-semantic alignment. Let $K\in\mathbb{R}^{D\times D^{\prime}\times1\times1}$ be the kernel parameters of the last convolution layer in the object embedding head, with input features $I\in\mathbb{R}^{D^{\prime}\times H\times W}$; let $\circledast$ be the convolution operator and $R$ the reshape function. Then we have

$$\mathrm{Label}=R_{D\times H\times W\rightarrow HW\times D}(I\circledast K)\cdot\left(f_{\theta}(P)\right)^{\top}.$$
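The auxiliary network $f_\theta$ can be pictured as a single SwiGLU feed-forward block applied to text embeddings that were cached ahead of time. The NumPy sketch below assumes a hidden width of $4D$, random initialization, no residual connection, and a random dictionary standing in for cached CLIP text embeddings; these choices, and the names `SwiGLUFFN` and `cached`, are hypothetical and only illustrate the data flow.

```python
import numpy as np

def silu(x):
    # SiLU / Swish activation: x * sigmoid(x).
    return x / (1.0 + np.exp(-x))

class SwiGLUFFN:
    """Hypothetical single SwiGLU feed-forward block f_theta: R^D -> R^D."""

    def __init__(self, dim, hidden, seed=0):
        rng = np.random.default_rng(seed)
        self.w_gate = rng.normal(0.0, dim ** -0.5, (dim, hidden))
        self.w_up = rng.normal(0.0, dim ** -0.5, (dim, hidden))
        self.w_down = rng.normal(0.0, hidden ** -0.5, (hidden, dim))

    def __call__(self, p):
        # SwiGLU: (SiLU(p W_gate) * (p W_up)) W_down, applied row-wise to a (C, D) input.
        return (silu(p @ self.w_gate) * (p @ self.w_up)) @ self.w_down

D = 16
rng = np.random.default_rng(1)
# Stand-in for text embeddings cached once before training; no text encoder runs afterwards.
cached = {name: rng.normal(size=D) for name in ["person", "dog", "traffic cone"]}

f_theta = SwiGLUFFN(dim=D, hidden=4 * D)
P = np.stack([cached[t] for t in ["dog", "person"]])    # text prompts of length C=2
P_enh = f_theta(P)
P_enh /= np.linalg.norm(P_enh, axis=1, keepdims=True)   # normalized prompt embeddings
print(P_enh.shape)  # (2, 16)
```

Since only this small block touches the text prompts during training, the extra cost over closed-set training stays limited to a few matrix products per prompt.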

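Moreover, after training, the auxiliary network can be re-parameterized together with the object embedding head into a classification head identical to that of YOLOs. The new kernel parameters $K^{\prime}\in\mathbb{R}^{C\times D^{\prime}\times1\times1}$ of the last convolution layer after re-parameterization can be derived by

$$K^{\prime}=R_{C\times D^{\prime}\rightarrow C\times D^{\prime}\times1\times1}\left(f_{\theta}(P)\cdot R_{D\times D^{\prime}\times1\times1\rightarrow D\times D^{\prime}}(K)\right).$$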

The final prediction can be obtained by $\mathrm{Label}=I\circledast K^{\prime}$ (up to reshaping), which is identical to the original YOLO architecture, leading to zero overhead for deployment and for transferring to downstream closed-set tasks.
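The re-parameterization works because two linear maps compose into one: contrasting the output of a $1\times1$ convolution with fixed embeddings equals a single $1\times1$ convolution whose kernel is the product of the embedding matrix and the original kernel. The NumPy sketch below checks this numerically with random tensors; `conv1x1` and `reparameterize` are illustrative helpers, not the authors' code. If the last convolution also carries a bias $b\in\mathbb{R}^{D}$, it folds the same way into $b^{\prime}=f_{\theta}(P)\,b$ (not shown).

```python
import numpy as np

def conv1x1(x, k):
    """1x1 convolution. x: (C_in, H, W); k: (C_out, C_in, 1, 1) -> (C_out, H, W)."""
    return np.einsum("oi,ihw->ohw", k[:, :, 0, 0], x)

def reparameterize(k, p_enh):
    """Fold enhanced text embeddings p_enh (C, D) into kernel k (D, D', 1, 1).
    Returns K' (C, D', 1, 1) with K'[c] = sum_d p_enh[c, d] * k[d]."""
    return (p_enh @ k[:, :, 0, 0])[:, :, None, None]

rng = np.random.default_rng(0)
D_in, D, C, H, W = 16, 8, 5, 4, 4      # D_in plays the role of D'
I = rng.normal(size=(D_in, H, W))
K = rng.normal(size=(D, D_in, 1, 1))
P_enh = rng.normal(size=(C, D))        # stands in for f_theta(P)

# Training-time path: object embeddings O = R(I ⊛ K), then contrast with P_enh.
O = conv1x1(I, K).reshape(D, H * W).T  # (HW, D)
label_train = O @ P_enh.T              # (HW, C)

# Deployment path: one 1x1 conv with the folded kernel, as in a closed-set YOLO head.
K_prime = reparameterize(K, P_enh)
label_deploy = conv1x1(I, K_prime).reshape(C, H * W).T  # (HW, C)

print(np.allclose(label_train, label_deploy))  # True
```

After folding, the auxiliary network and the cached embeddings are no longer needed at inference, which is where the zero-overhead claim comes from.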

3.3. Semantic-activated visual prompt encoder

Visual prompts are designed to indicate the object category of interest through visual cues, e.g., boxes and masks. To produce the visual prompt embedding, prior works often employ transformer-heavy designs [20, 30], e.g., deformable attention [78], or an additional CLIP vision encoder [44, 67]. These approaches, however, introduce challenges in deployment and efficiency due to complex operators or high computational demands. Considering this, we introduce the Semantic-Activated Visual Prompt Encoder (SAVPE) for efficiently processing visual cues. It features two decoupled lightweight branches: (1) a semantic branch that outputs prompt-agnostic semantic features in $D$ channels without the overhead of fusing visual cues, and (2) an activation branch that produces grouped prompt-aware weights by interacting visual cues with image features in far fewer channels at low cost. Their aggregation then yields an informative prompt embedding with minimal complexity.

Figure 3. (a) The structure of lightweight auxiliary network in RepRTA, which consists of one SwiGLU FFN block [53]. (b) The structure of SAVPE, which consists of semantic branch to generate prompt-agnostic semantic features and activation branch to provide grouped prompt-aware weights. Visual prompt embedding can thus be efficiently derived by their aggregation.

As shown in Fig. 3.(b), the semantic branch adopts a structure similar to the object embedding head. With multi-scale features $\{P_{3},P_{4},P_{5}\}$ from PAN, we employ two $3\times3$ convs for each scale. After upsampling, the features are concatenated and projected to derive the semantic features $S\in\mathbb{R}^{D\times H\times W}$. In the activation branch, we formalize the visual prompt as a mask with 1 for the indicated region and 0 elsewhere. We downsample it and leverage a $3\times3$ conv to derive the prompt feature $F_{V}\in\mathbb{R}^{A\times H\times W}$. Besides, we obtain image features $F_{I}\in\mathbb{R}^{A\times H\times W}$ from $\{P_{3},P_{4},P_{5}\}$ through convs for fusion with $F_{V}$. $F_{V}$ and $F_{I}$ are then concatenated and used to output prompt-aware weights $\mathcal{W}\in\mathbb{R}^{A\times H\times W}$, which are normalized with softmax within the prompt-indicated region. Moreover, we divide the channels of $S$ into $A$ groups with $\frac{D}{A}$ channels each. The channels in the $i$-th group share the weight $\mathcal{W}_{i:i+1}$ from the $i$-th channel of $\mathcal{W}$. With $A\ll D$, visual cues are processed with image features in low dimension, bringing minimal cost. Finally, the prompt embedding is derived by aggregating the two branches:

$$\mathcal{P}=\mathrm{Concat}(G_{0},\ldots,G_{A-1}),\quad G_{i}=\mathcal{W}_{i:i+1}\cdot S^{\top}_{\frac{D}{A}i:\frac{D}{A}(i+1)},$$

where $\mathcal{W}_{i:i+1}$ and $S$ are flattened over the $H\times W$ spatial positions.

The resulting embedding can thus be contrasted with the anchor points' object embeddings to identify objects of the category of interest.
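The grouped aggregation in SAVPE amounts to a masked, per-group weighted average over spatial positions. The NumPy sketch below takes already-computed semantic features $S$ and prompt-aware logits as inputs (the convolutions that produce them are omitted), assumes contiguous channel groups, and uses toy sizes with $A=4$ rather than the default $A=16$; `masked_softmax` and `savpe_aggregate` are hypothetical names.

```python
import numpy as np

def masked_softmax(logits, mask):
    """Softmax over spatial positions of each channel, restricted to mask == 1.
    logits: (A, H, W); mask: (H, W) binary. Positions outside the mask get weight 0."""
    a = logits.shape[0]
    flat = logits.reshape(a, -1)
    m = mask.reshape(-1).astype(bool)
    if not m.any():
        raise ValueError("visual prompt mask is empty")
    out = np.zeros_like(flat)
    sub = np.exp(flat[:, m] - flat[:, m].max(axis=1, keepdims=True))
    out[:, m] = sub / sub.sum(axis=1, keepdims=True)
    return out.reshape(logits.shape)

def savpe_aggregate(S, w_logits, mask):
    """S: (D, H, W) prompt-agnostic semantic features; w_logits: (A, H, W) prompt-aware logits.
    Channel group i of S (D/A channels) is pooled with weight map i, then groups are concatenated."""
    D, H, Wd = S.shape
    A = w_logits.shape[0]
    if D % A != 0:
        raise ValueError("D must be divisible by A")
    w = masked_softmax(w_logits, mask).reshape(A, 1, H * Wd)  # (A, 1, HW)
    s_groups = S.reshape(A, D // A, H * Wd)                    # (A, D/A, HW)
    G = (s_groups * w).sum(axis=-1)                            # G_i = W_i · S_i^T, (A, D/A)
    p = G.reshape(D)                                           # Concat(G_0, ..., G_{A-1})
    return p / np.linalg.norm(p)                               # normalized prompt embedding

rng = np.random.default_rng(0)
D, A, H, Wd = 32, 4, 5, 5                  # toy sizes
S = rng.normal(size=(D, H, Wd))
w_logits = rng.normal(size=(A, H, Wd))
mask = np.zeros((H, Wd)); mask[1:4, 2:5] = 1  # visual prompt region
p = savpe_aggregate(S, w_logits, mask)

# Features outside the prompt region receive zero weight, so changing them has no effect.
S2 = S.copy(); S2[:, 0, 0] += 100.0
print(p.shape, np.allclose(p, savpe_aggregate(S2, w_logits, mask)))  # (32,) True
```

Only the $A$-channel weight maps depend on the prompt, so the prompt-specific work scales with $A$ rather than with $D$.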

3.4. Lazy region-prompt contrast

In the prompt-free scenario without explicit guidance, models are expected to identify all objects in the image together with their names. Prior works usually formulate this setting as a generative problem, where a language model is employed to generate categories for the densely detected objects [33, 49, 62]. However, this introduces notable overhead: language models such as FlanT5-base [6] with 250M parameters in GenerateU [33] and OPT-125M [73] in DINO-X [49] fall far short of high efficiency requirements. Given this, we reformulate the setting as a retrieval problem and present the Lazy Region-Prompt Contrast (LRPC) strategy. It lazily retrieves category names from a built-in large vocabulary, only for anchor points with objects, in a cost-effective way. This paradigm has zero dependency on language models while offering favorable efficiency and performance.

Specifically, with the pretrained YOLOE, we introduce a specialized prompt embedding and train it exclusively to find all objects, treating all objects as one category. Meanwhile, we follow [16] to collect a large vocabulary that covers various categories and serves as the built-in data source for retrieval. One could directly use the large vocabulary as text prompts for YOLOE to identify all objects; however, this incurs notable computational cost, since abundant anchor points' object embeddings must be contrasted with numerous textual embeddings. Instead, we employ the specialized prompt embedding $\mathcal{P}_{s}$ to find the set $\mathcal{O}^{\prime}$ of anchor points corresponding to objects by

$$\mathcal{O}^{\prime}=\{o\in\mathcal{O}\mid o\cdot\mathcal{P}_{s}^{\top}>\delta\},$$

where $\mathcal{O}$ denotes all anchor points and $\delta$ is the threshold hyperparameter for filtering. Then, only anchor points in $\mathcal{O}^{\prime}$ are lazily matched against the built-in vocabulary to retrieve category names, bypassing the cost of irrelevant anchor points. This further improves efficiency without a performance drop, facilitating real-world applications.
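The lazy retrieval can be sketched in a few lines: score every anchor once against the specialized embedding, keep those above the threshold, and only then contrast the survivors with the vocabulary. In this NumPy sketch the threshold is applied to the raw dot product, and the toy vocabulary, anchor counts, and the names `lrpc` and `contrast_count` are hypothetical; the sizes passed to `contrast_count` are illustrative only.

```python
import numpy as np

def lrpc(obj_emb, p_s, vocab_emb, vocab_names, delta):
    """Lazy region-prompt contrast (sketch).
    obj_emb: (N, D) anchor object embeddings; p_s: (D,) specialized prompt embedding;
    vocab_emb: (V, D) cached vocabulary embeddings. Returns [(anchor_idx, name), ...]."""
    objectness = obj_emb @ p_s                 # one contrast per anchor
    keep = np.flatnonzero(objectness > delta)  # indices of the set O'
    if keep.size == 0:
        return []
    sims = obj_emb[keep] @ vocab_emb.T         # only |O'| x V contrasts
    best = sims.argmax(axis=1)
    return [(int(i), vocab_names[int(j)]) for i, j in zip(keep, best)]

def contrast_count(n_anchors, n_kept, vocab_size):
    """Number of D-dim dot products: full-vocabulary prompting vs. LRPC."""
    return n_anchors * vocab_size, n_anchors + n_kept * vocab_size

names = ["cat", "dog", "bicycle", "lamp"]
vocab = np.eye(4)                     # toy orthogonal vocabulary embeddings (D=4)
p_s = np.ones(4)                      # toy "find all objects" embedding
O = np.array([[5.0, 0.0, 0.0, 0.0],   # strong object, closest to "cat"
              [0.0, 0.0, 0.1, 0.0],   # background-like anchor, filtered out
              [0.0, 3.0, 0.0, 0.0]])  # object, closest to "dog"
print(lrpc(O, p_s, vocab, names, delta=1.0))  # [(0, 'cat'), (2, 'dog')]
print(contrast_count(8400, 50, 1000))         # (8400000, 58400)
```

The cost of the vocabulary lookup grows with the number of retained anchors rather than with all anchors, which is why the filtering step matters when the vocabulary is large.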

3.5. Training objective

During training, we follow [5] to obtain an online vocabulary for each mosaic sample, with the texts involved in the images as positive labels. Following [21], we leverage task-aligned label assignment to match predictions with ground truths. Binary cross-entropy loss is employed for classification, with IoU loss and distribution focal loss adopted for regression. For segmentation, we follow [3] to utilize binary cross-entropy loss for optimizing masks.
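For the regression branch, distribution focal loss (introduced with Generalized Focal Loss) treats each box-side offset as a discrete distribution over integer bins and pushes probability mass onto the two bins bracketing the continuous target. The NumPy sketch below computes the loss for one box side; the bin count of 16, the function name, and the toy inputs are assumptions, and practical implementations typically average this over the four sides and over positive anchors.

```python
import numpy as np

def distribution_focal_loss(logits, target):
    """DFL for one box side.
    logits: (n_bins,) unnormalized scores over integer offsets 0..n_bins-1.
    target: continuous offset with 0 <= target < n_bins - 1.
    Loss = -((y_r - y) * log S_l + (y - y_l) * log S_r), y_l = floor(y), y_r = y_l + 1."""
    n_bins = logits.shape[0]
    if not 0.0 <= target < n_bins - 1:
        raise ValueError("target must lie in [0, n_bins - 1)")
    z = logits - logits.max()
    log_s = z - np.log(np.exp(z).sum())  # numerically stable log-softmax
    y_l = int(np.floor(target))
    y_r = y_l + 1
    return -((y_r - target) * log_s[y_l] + (target - y_l) * log_s[y_r])

# A uniform prediction versus one that puts 75% / 25% on bins 3 and 4 for target 3.25.
uniform = distribution_focal_loss(np.zeros(16), 3.25)
probs = np.full(16, 1e-9); probs[3], probs[4] = 0.75, 0.25
peaked = distribution_focal_loss(np.log(probs), 3.25)
print(round(uniform, 4), round(peaked, 4))
```

The loss is minimized when the two neighboring bins receive weights proportional to the target's distance from the opposite bin, so the expected value of the distribution recovers the continuous offset.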

4. Experiments

4.1. Implementation details

Model. For fair comparison with [5], we employ the same YOLOv8 architecture [21] for YOLOE. Besides, to verify its generalizability to other YOLOs, we also experiment with the YOLO11 architecture [21]. For both, we provide three model scales, i.e., small (S), medium (M), and large (L), to suit various application needs. Text prompts are encoded using the pretrained MobileCLIP-B(LT) [57] text encoder. By default, we empirically set $A=16$ in SAVPE.

Data. We follow [5] to utilize detection and grounding datasets, including Objects365 (V1) [52] and GoldG [22] (which includes GQA [17] and Flickr30k [43]), where images from COCO [34] are excluded. Besides, we leverage the advanced SAM-2.1 [46] model to generate pseudo instance masks from the ground-truth bounding boxes of the detection and grounding datasets, which serve as segmentation data. These masks undergo filtering and simplification to eliminate noise [9]. For visual prompt data, we follow [20] to use ground-truth bounding boxes as visual cues. In prompt-free tasks, we reuse the same datasets but annotate all objects as a single category to learn a specialized prompt embedding.

Training. Due to limited computational resources, instead of training for 100 epochs as YOLO-World does, we first train YOLOE with text prompts for 30 epochs. Then, we train only the SAVPE for 2 epochs with visual prompts, which avoids the significant additional training cost that supporting visual prompts would otherwise incur. Finally, we train the specialized prompt embedding for only 1 epoch for prompt-free scenarios. During the text prompt training stage, we adopt the same settings as [5]. Notably, YOLOE-v8-S / M / L can be trained on 8 Nvidia RTX4090 GPUs in 12.0 / 17.0 / 22.5 hours, about $3\times$ less than YOLO-World. For visual prompt training, we freeze all other parts and adopt the same settings as in text prompt training. To enable prompt-free capability, we use the same data to train a specialized embedding. YOLOE thus combines low training cost with strong zero-shot performance (Tab. 1). In addition, to verify YOLOE's transfer ability on downstream tasks, we fine-tune YOLOE on COCO [34] for closed-set detection and segmentation. We experiment with two practical fine-tuning strategies: (1) Linear probing, where only the classification head is learnable; and (2) Full tuning, where all parameters are trainable. For Linear probing, we train all models for only 10 epochs. For Full tuning, we train the small-scale models, YOLOE-v8-S / 11-S, for 160 epochs, and the medium- and large-scale models, YOLOE-v8-M / L and YOLOE-11-M / L, for 80 epochs.
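
The staged schedule and the quoted training-cost saving can be captured in a small config plus one line of arithmetic. This is a hypothetical sketch. The `Stage` structure and module names are ours, not from the YOLOE code. The epoch counts come from the text, and the hours come from Table 1.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: Tuple[str, ...]  # hypothetical module names; all other parts are frozen
    epochs: int


# Pre-training stages as described in the text; module names are illustrative only.
PRETRAIN_STAGES = (
    Stage("text prompts", ("detector",), 30),
    Stage("visual prompts", ("savpe",), 2),
    Stage("prompt-free", ("specialized_prompt_embedding",), 1),
)

# COCO fine-tuning epochs per strategy and model scale, as stated in the text.
FINETUNE_EPOCHS = {
    "linear_probing": {"S": 10, "M": 10, "L": 10},  # only the classification head learns
    "full_tuning": {"S": 160, "M": 80, "L": 80},    # all parameters learn
}

# Text-prompt training hours on 8 RTX4090 GPUs, from Table 1: (YOLO-Worldv2, YOLOE-v8).
TRAIN_HOURS: Dict[str, Tuple[float, float]] = {
    "S": (41.7, 12.0),
    "M": (60.0, 17.0),
    "L": (80.0, 22.5),
}


def training_cost_ratio(hours: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """How many times longer YOLO-Worldv2 trains than YOLOE-v8 at each scale."""
    return {scale: world / yoloe for scale, (world, yoloe) in hours.items()}
```

The ratios come out at roughly 3.5× at every scale, which is consistent with the "about $3\times$ less" claim.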

Metric. For text prompt evaluation, we use all category names from the benchmark as inputs, following the standard protocol for open-vocabulary object detection. For visual prompt evaluation, following [20], we randomly sample $N$ training images for each category ($N=16$ by default), extract visual embeddings using their ground truth bounding boxes, and compute the average prompt embedding. For prompt-free evaluation, we adopt the same protocol as [33]: a pretrained text encoder [57] maps open-ended predictions to semantically similar category names in the benchmark. In contrast to [33], we streamline the mapping by selecting only the most confident prediction, which eliminates the need for top-$k$ selection and beam search. We use the tag list from [16] as the built-in large vocabulary, with a total of 4,585 category names, and by default empirically set $\delta=0.001$ for LRPC. For all three prompt types, following [5, 20, 33], evaluations are conducted in a zero-shot manner on LVIS [14], which contains 1,203 categories. By default, Fixed AP [7] on the LVIS minival subset is reported. For transferring to COCO, standard AP is evaluated, following [1, 21]. In addition, we measure the FPS of all models on an Nvidia T4 GPU with TensorRT and on an iPhone 12 with CoreML.
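
The visual prompt and prompt-free evaluation steps can be sketched as follows. This is an illustration, not the evaluation code of [20] or [33]. Embeddings are plain Python lists, and `text_embed` is a hypothetical lookup that stands in for the pretrained text encoder [57]. Each image's visual embedding is assumed to be precomputed from its ground truth boxes. Using cosine similarity for the mapping is our assumption.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def mean_embedding(embeddings: Sequence[Vector]) -> Vector:
    """Element-wise average of equally sized vectors."""
    dim = len(embeddings[0])
    return [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]


def category_visual_prompt(per_image: Sequence[Vector], n: int = 16, seed: int = 0) -> Vector:
    """Average the visual embeddings of up to n randomly sampled training images.

    per_image holds one visual-prompt embedding per training image of the
    category (assumed to be extracted from that image's ground truth boxes).
    """
    rng = random.Random(seed)
    sample = rng.sample(list(per_image), min(n, len(per_image)))
    return mean_embedding(sample)


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def map_to_benchmark(candidates: Sequence[Tuple[str, float]],
                     text_embed: Dict[str, Vector],
                     benchmark_names: Sequence[str]) -> str:
    """Map one box's open-ended prediction to a benchmark category.

    candidates: (predicted name, confidence) pairs for the box.
    text_embed: stand-in for the pretrained text encoder (name -> embedding).
    Only the most confident name is kept (no top-k or beam search); it is then
    assigned to the benchmark name with the highest cosine similarity.
    """
    best_name, _ = max(candidates, key=lambda c: c[1])
    query = text_embed[best_name]
    return max(benchmark_names, key=lambda cat: cosine(query, text_embed[cat]))
```

For example, with toy embeddings where "puppy" lies close to "dog", a box whose most confident open-ended name is "puppy" maps to the benchmark category "dog".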

Table 1. Zero-shot detection evaluation on LVIS. For fair comparison, Fixed AP is reported on the LVIS minival set in a zero-shot manner. Training time refers to text prompt training and is measured on 8 Nvidia V100 GPUs for [32, 65] and on 8 RTX4090 GPUs for YOLO-World and YOLOE. FPS is measured on an Nvidia T4 GPU with TensorRT and on an iPhone 12 with CoreML, respectively. Results are given for the text prompt (T) and visual prompt (V) types; for YOLOE, paired parameter counts and AP values are listed as T / V. In the training data column, OI, HT, and CH denote OpenImages [24], HierText [39], and CrowdHuman [51], respectively. OG denotes Objects365 [52] and GoldG [22], and G-20M denotes Grounding-20M [50].

| Model | Prompt Type | Params | Training Data | Training Time | FPS (T4 / iPhone) | AP | $AP_r$ | $AP_c$ | $AP_f$ |
|---|---|---|---|---|---|---|---|---|---|
| GLIP-T [32] | T | 232M | OG, Cap4M | 1337.6h | - / - | 26.0 | 20.8 | 21.4 | 31.0 |
| GLIPv2-T [70] | T | 232M | OG, Cap4M | - | - / - | 29.0 | - | - | - |
| GDINO-T [37] | T | 172M | OG, Cap4M | - | - / - | 27.4 | 18.1 | 23.3 | 32.7 |
| DetCLIP-T [65] | T | 155M | OG | 250.0h | - / - | 34.4 | 26.9 | 33.9 | 36.3 |
| G-1.5 Edge [50] | T | - | G-20M | - | - / - | 33.5 | 28.0 | 34.3 | 33.9 |
| T-Rex2 [20] | V | - | O365, OI, HT, CH, SA-1B | - | - / - | 37.4 | 29.9 | 33.9 | 41.8 |
| YWorldv2-S [5] | T | 13M | OG | 41.7h | 216.4 / 48.9 | 24.4 | 17.1 | 22.5 | 27.3 |
| YWorldv2-M [5] | T | 29M | OG | 60.0h | 117.9 / 34.2 | 32.4 | 28.4 | 29.6 | 35.5 |
| YWorldv2-L [5] | T | 48M | OG | 80.0h | 80.0 / 22.1 | 35.5 | 25.6 | 34.6 | 38.1 |
| YOLOE-v8-S | T / V | 12M / 13M | OG | 12.0h | 305.8 / 64.3 | 27.9 / 26.2 | 22.3 / 21.3 | 27.8 / 27.7 | 29.0 / 25.7 |
| YOLOE-v8-M | T / V | 27M / 30M | OG | 17.0h | 156.7 / 41.7 | 32.6 / 31.0 | 26.9 / 27.0 | 31.9 / 31.7 | 34.4 / 31.1 |
| YOLOE-v8-L | T / V | 45M / 50M | OG | 22.5h | 102.5 / 27.2 | 35.9 / 34.2 | 33.2 / 33.2 | 34.8 / 34.6 | 37.3 / 34.1 |
| YOLOE-11-S | T / V | 10M / 12M | OG | 13.0h | 301.2 / 73.3 | 27.5 / 26.3 | 21.4 / 22.5 | 26.8 / 27.1 | 29.3 / 26.4 |
| YOLOE-11-M | T / V | 21M / 27M | OG | 18.5h | 168.3 / 39.2 | 33.0 / 31.4 | 26.9 / 27.1 | 32.5 / 31.9 | 34.5 / 31.7 |
| YOLOE-11-L | T / V | 26M / 32M | OG | 23.5h | 130.5 / 35.1 | 35.2 / 33.7 | 29.1 / 28.1 | 35.0 / 34.6 | 36.5 / 33.8 |
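
The comparisons against YOLO-Worldv2 in Sec. 4.2 can be reproduced from the text-prompt rows of Table 1 with plain arithmetic. The script below is our own illustration, not part of the paper's code. It copies the numbers from the table and computes the absolute AP gain and the FPS ratios of YOLOE-v8 over YOLO-Worldv2 at each scale.

```python
# Text-prompt rows from Table 1: scale -> (AP, FPS on T4, FPS on iPhone 12).
YWORLDV2 = {"S": (24.4, 216.4, 48.9), "M": (32.4, 117.9, 34.2), "L": (35.5, 80.0, 22.1)}
YOLOE_V8 = {"S": (27.9, 305.8, 64.3), "M": (32.6, 156.7, 41.7), "L": (35.9, 102.5, 27.2)}


def compare(baseline, ours):
    """Per scale: (AP gain, T4 speedup, iPhone speedup) of `ours` over `baseline`."""
    result = {}
    for scale, (ap_b, t4_b, ip_b) in baseline.items():
        ap_o, t4_o, ip_o = ours[scale]
        result[scale] = (round(ap_o - ap_b, 1), t4_o / t4_b, ip_o / ip_b)
    return result


for scale, (gain, t4, iphone) in compare(YWORLDV2, YOLOE_V8).items():
    print(f"{scale}: +{gain} AP, {t4:.2f}x on T4, {iphone:.2f}x on iPhone")
```

This yields AP gains of 3.5 / 0.2 / 0.4 for S / M / L and T4 speedups of about 1.41×, 1.33×, and 1.28×.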

4.2. Text and visual prompt evaluation

As shown in Tab. 1, for detection on LVIS, YOLOE exhibits favorable trade-offs between efficiency and zero-shot performance across different model scales. Notably, these results are achieved with much less training time, about $3\times$ less than YOLO-Worldv2. Specifically, YOLOE-v8-S / M / L outperforms YOLO-Worldv2-S / M / L by 3.5 / 0.2 / 0.4 AP, along with $1.4\times\textit{/}1.3\t