Reinforcement Learning Outperforms Supervised Fine-Tuning: A Case Study on Audio Question Answering

{ligang5,liujizhong1}@xiaomi.com

Abstract

Recently, reinforcement learning (RL) has been shown to greatly enhance the reasoning capabilities of large language models (LLMs), and RL-based approaches have been progressively applied to visual multimodal tasks. However, the audio modality has largely been overlooked in these developments. We therefore conduct a series of RL explorations in audio understanding and reasoning, focusing specifically on the audio question answering (AQA) task. We apply the group relative policy optimization (GRPO) algorithm to Qwen2-Audio-7B-Instruct, and our experiments demonstrate state-of-the-art performance on the MMAU Test-mini benchmark, achieving an accuracy of 64.5%. The main findings in this technical report are as follows: 1) The GRPO algorithm can be effectively applied to large audio language models (LALMs), even when the model has only 8.2B parameters; 2) With only 38k post-training samples, RL significantly outperforms supervised fine-tuning (SFT), indicating that RL-based approaches can be effective without large datasets; 3) The explicit reasoning process has not shown significant benefits for AQA tasks, and how to efficiently utilize deep thinking remains an open question for further research; 4) LALMs still lag far behind humans in auditory-language reasoning, suggesting that RL-based approaches warrant further exploration. Our project is available at https://github.com/xiaomi/r1-aqa and https://huggingface.co/mispeech/r1-aqa.

1 Introduction

The latest breakthroughs in large language models (LLMs) have greatly enhanced their reasoning abilities, particularly in mathematics and coding. DeepSeek-R1 [1], a pioneering innovator, has demonstrated how reinforcement learning (RL) can effectively improve LLMs’ complex reasoning capabilities. Although chains-of-thought (CoT) is a simple rule-based reward model, it effectively aids reasoning tasks by simulating the human thought process. Therefore, reinforcement learning is likely to achieve performance far surpassing supervised fine-tuning (SFT) through simple methods. Recently, many researchers [2; 3] have attempted to incorporate simple yet ingenious RL methods into visual modality understanding and reasoning tasks.

However, the audio modality has largely been overlooked in recent developments. Although a growing number of large audio language models (LALMs) have been proposed, such as Qwen2-Audio [5] and Audio Flamingo 2 [6], they still rely on pre-trained modules along with SFT to construct systems. This is not because RL-based approaches are unsuitable for LALMs; rather, tasks such as automatic speech recognition (ASR) and automated audio captioning (AAC) are simple descriptive tasks [7]. More complex logical reasoning tasks are needed to fully explore the potential of RL in the audio modality.

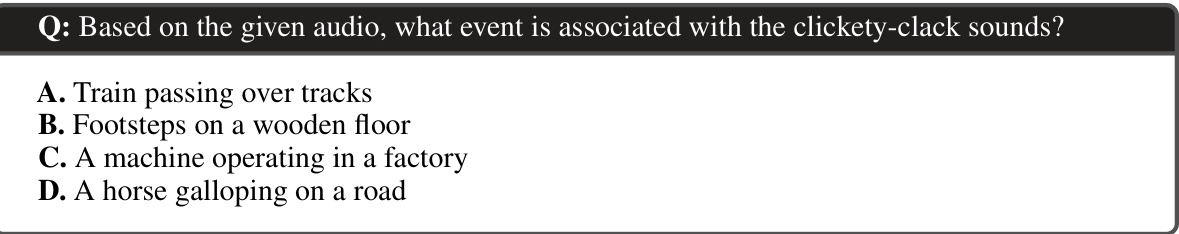

Figure 1: An example of a question and choices based on audio.

Audio Question Answering (AQA) is a multimodal task that involves understanding and reasoning over audio content to generate accurate responses to questions. It integrates the auditory and linguistic modalities, making it particularly suitable for evaluating complex logical reasoning capabilities. It demands the ability to extract meaningful insights from raw audio signals, infer implicit relationships, and provide contextually relevant answers. Due to its inherent complexity, AQA serves as an ideal benchmark for testing the effectiveness of reinforcement learning approaches. In addition, AQA can be regarded as a more advanced task built upon automated audio captioning.

For these reasons, we take AQA as the task on which to explore the effectiveness of RL and deep thinking in the audio modality. It is encouraging that some researchers have already made initial attempts [8; 9]. In this report, we present a successful technical attempt in which the group relative policy optimization (GRPO) algorithm [10], trained on a small-scale dataset, improves the reasoning performance of Qwen2-Audio-7B-Instruct [5] on the AQA task. Our experiments demonstrate state-of-the-art performance on the MMAU Test-mini benchmark, achieving an accuracy of 64.5%. In summary, the main findings are as follows:

• The GRPO algorithm can be effectively applied to LALMs, even when the model has only 8.2B parameters.

• With only 38k post-training samples, RL significantly outperforms SFT, indicating that RL-based approaches can be effective without large datasets.

• The explicit reasoning process has not shown significant benefits for AQA tasks, and how to efficiently utilize deep thinking remains an open question for further research.

• LALMs still lag far behind humans in auditory-language reasoning, suggesting that RL-based approaches warrant further exploration.

2 Related Works

Audio Question Answering. AQA is a multimodal task that involves understanding and reasoning over audio content to generate accurate responses to questions. In LLM-based frameworks, AQA builds upon AAC. While AAC focuses on generating descriptive textual captions for audio, AQA requires a deeper comprehension of complex acoustic patterns, temporal relationships, and contextual information embedded in the audio. Although researchers have achieved good performance on the AAC task [11; 12; 13; 14; 15; 16], AQA remains a multimodal challenge that combines auditory and linguistic modalities, making it ideal for evaluating complex reasoning. AQA can be categorized by audio type into single-audio and multi-audio tasks, and by response format into selection-based and open-ended questions. As illustrated in Figure 1, we focus on the most common single-audio task with selection-based answers. Additionally, a multiple-choice setting with a single correct answer presents a significant generation-verification gap [17], making it a suitable setting for evaluating the effectiveness of RL in the audio modality: RL tends to perform well when verification is easy but generation is complex.

Figure 2: Prompt templates.

Multimodal Reasoning. Recent studies have indicated that deep thinking can enhance the reasoning performance of LLMs [1; 18]. DeepSeek-R1 and OpenAI-o1 have significantly improved reasoning capabilities, particularly in multi-step tasks such as coding and mathematics, sparking renewed interest in RL and CoT. Furthermore, RL and CoT are playing an increasing role in multimodal large language models. For instance, Visual Thinker R1 Zero [3] implements RL for visual reasoning on a 2B non-SFT model. LLaVA-CoT [4] outperforms its base model by 7.4% using only 100k post-training samples and a simple yet effective inference-time scaling method. Recent studies have also explored CoT-based methods in the audio modality. Audio-CoT [9] shows some improvement via zero-shot CoT, but the gain is very limited. Audio-Reasoner [8] has achieved significant improvements through extensive fine-tuning data and a complex reasoning process. However, it lacks thorough ablation studies, and some of these processes may be redundant. Overall, studies on deep thinking in the audio modality are still limited.

Large Audio Language Models. LALMs can generally be divided into two categories: audio understanding and audio generation. This study focuses on the field of audio understanding. There are already many representative models, such as Qwen2-Audio [5], Audio Flamingo 2 [6], and SALMONN [19]. However, they are all SFT-based models, and whether RL can unlock their potential remains to be studied.

3 Method

In this section, we present the training method used in our exploration, which leads to state-of-the-art performance on the MMAU Test-mini benchmark. The goal is to apply the GRPO algorithm directly to LALMs.

Our exploration is based on the Qwen2-Audio-7B-Instruct model [5]. We leverage the GRPO algorithm along with a customized chat template and prompting strategy to enhance its reasoning capabilities. Compared to SFT, RL may be a more efficient and effective way to adapt to downstream tasks [3; 4]. In SFT, instruction tuning requires a large amount of training data. Whether this condition is necessary in RL is one of the key questions. We train our models with the instruction template in Figure 2. For each question in the dataset, the model generates a response in

We briefly review the GRPO algorithm used for training, mainly following [3; 10]. To avoid training an additional value function approximation model as in proximal policy optimization (PPO) [20], GRPO uses the average reward of responses sampled from the policy model as the baseline when computing the advantage. Specifically, given an input question $q$, a group of responses $\{o_{1},o_{2},\cdots,o_{G}\}$ is first sampled, and their corresponding rewards $\{r_{1},r_{2},\cdots,r_{G}\}$ are computed using the reward model. The advantage is subsequently computed as:
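
Assuming the standard group normalization of GRPO [10], the advantage of the $i$-th response is its reward standardized within the group:

$$
A_{i} = \frac{r_{i} - \mathrm{mean}(\{r_{1},r_{2},\cdots,r_{G}\})}{\mathrm{std}(\{r_{1},r_{2},\cdots,r_{G}\})}
$$

A minimal sketch of this computation is given below. The function name `group_relative_advantages`, the use of the population standard deviation, and the small `eps` term are illustrative assumptions, not details taken from this report.

```python
from statistics import fmean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Standardize the rewards of one group of responses to the same question."""
    # The group mean is the baseline, so no learned value function is needed.
    baseline = fmean(rewards)
    # Population standard deviation is an assumption; eps (also an assumption)
    # avoids division by zero when every response earns the same reward.
    spread = pstdev(rewards)
    return [(r - baseline) / (spread + eps) for r in rewards]


# One question with G = 8 sampled responses and made-up rewards.
example_rewards = [2, 2, 0, 1, 2, 0, 1, 2]
example_advantages = group_relative_advantages(example_rewards)
```

Responses that score above the group mean receive a positive advantage and those below it a negative one. When all responses in a group earn the same reward, every advantage is zero and the group contributes no policy-gradient signal.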

Table 1: Hyper-parameters of reinforcement learning with the GRPO algorithm.

| Setting | Value |
|---|---|
| Batch Size per Device | 1 |
| Gradient Accumulation Steps | 2 |
| Training Steps | 500 |
| Learning Rate | $1 \times 10^{-6}$ |
| Temperature | 1.0 |
| Maximum Response Length | 512 |
| Number of Responses per GRPO Step | 8 |
| Kullback-Leibler Coefficient | 0.04 |

The policy model is subsequently optimized by maximizing the following Kullback-Leibler-regularized objective:
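
Assuming the standard GRPO formulation of [10], and writing $\pi_{ref}$ for the frozen reference policy (a symbol this report does not define), the objective can be sketched as:

$$
\mathcal{J}_{GRPO}(\theta) = \mathbb{E}_{q,\ \{o_{i}\}_{i=1}^{G} \sim \pi_{old}(\cdot \mid q)} \left[ \frac{1}{G} \sum_{i=1}^{G} \left( \min\left( \frac{\pi_{\theta}(o_{i} \mid q)}{\pi_{old}(o_{i} \mid q)} A_{i},\ \mathrm{clip}\left( \frac{\pi_{\theta}(o_{i} \mid q)}{\pi_{old}(o_{i} \mid q)},\ 1-\epsilon,\ 1+\epsilon \right) A_{i} \right) - \beta\, \mathbb{D}_{KL}\left( \pi_{\theta} \,\|\, \pi_{ref} \right) \right) \right]
$$

Here $A_{i}$ is the group-relative advantage of response $o_{i}$. Under this reading, the Kullback-Leibler coefficient of 0.04 in Table 1 would play the role of $\beta$.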

where $\pi_{\theta}$ and $\pi_{old}$ are the current and former policies, and $\epsilon$ and $\beta$ are hyper-parameters introduced in PPO. Responses are evaluated by a rule-based reward function in terms of their format and correctness:

• If the response provides a correct final answer, the model obtains an accuracy reward of $+1$.

• If the response encloses the thinking in

• Otherwise, the model obtains a reward of 0.
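
The rules above can be sketched as a small scoring function. This is an illustration under stated assumptions rather than the authors' implementation: the tag names `<think>` and `<answer>`, the format reward of $+1$, the case-insensitive string comparison, and all function names are hypothetical, since the template tags and the format reward value are not given in this section.

```python
import re

# Hypothetical tag names: the actual template tags are not shown in this section.
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
FORMAT_RE = re.compile(
    r"\s*<think>.*?</think>\s*<answer>.*?</answer>\s*", re.DOTALL
)


def accuracy_reward(response: str, correct_choice: str) -> float:
    """Return 1.0 if the extracted final answer matches the correct choice."""
    match = ANSWER_RE.search(response)
    if match is None:
        return 0.0
    predicted = match.group(1).strip().lower()
    return 1.0 if predicted == correct_choice.strip().lower() else 0.0


def format_reward(response: str) -> float:
    """Return 1.0 (an assumed value) if the response follows the assumed template."""
    return 1.0 if FORMAT_RE.fullmatch(response) else 0.0


def total_reward(response: str, correct_choice: str) -> float:
    """Sum the two rule-based rewards; a response meeting neither rule gets 0."""
    return accuracy_reward(response, correct_choice) + format_reward(response)


well_formed = "<think>Chirping is audible.</think><answer>Bird</answer>"
well_formed_score = total_reward(well_formed, "bird")
```

Because both checks are plain string rules, scoring a response is cheap even though producing a correct one is hard, which is the generation-verification gap that motivates the multiple-choice setting.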

4 Experiments

In this study, we train our models via full fine-tuning, low-rank adaptation (LoRA) [21], and reinforcement learning. To effectively evaluate generalization, the evaluation follows an out-of-distribution testing approach, where the training and test sets come from different data sources.

4.1 Experimental Setup

Datasets. The training data is from the AVQA dataset [22], which is designed for audio-visual question answering and provides a comprehensive resource for understanding multimodal information in real-life video scenarios. It comprises 57,015 videos depicting daily audio-visual activities, accompanied by 57,335 specially designed question-answer pairs. The questions are crafted to involve various relationships between objects and activities. We use only the audio-text pairs of the training subset and change the word “video” in each question to “audio”. The AVQA training set has approximately 38k samples. The test data is from the MMAU dataset [7]. MMAU is a comprehensive dataset designed