MaTVLM: Hybrid Mamba-Transformer for Efficient Vision-Language Modeling

MaTVLM: 用于高效视觉语言建模的混合 Mamba-Transformer

Yingyue Li1 Bencheng Liao2,1 Wenyu Liu1 Xinggang Wang1,B 1 School of EIC, Huazhong University of Science & Technology 2 Institute of Artificial Intelligence, Huazhong University of Science & Technology

李映月1 廖本成2,1 刘文宇1 王兴刚1,B

1 华中科技大学电子与信息工程学院

2 华中科技大学人工智能研究院

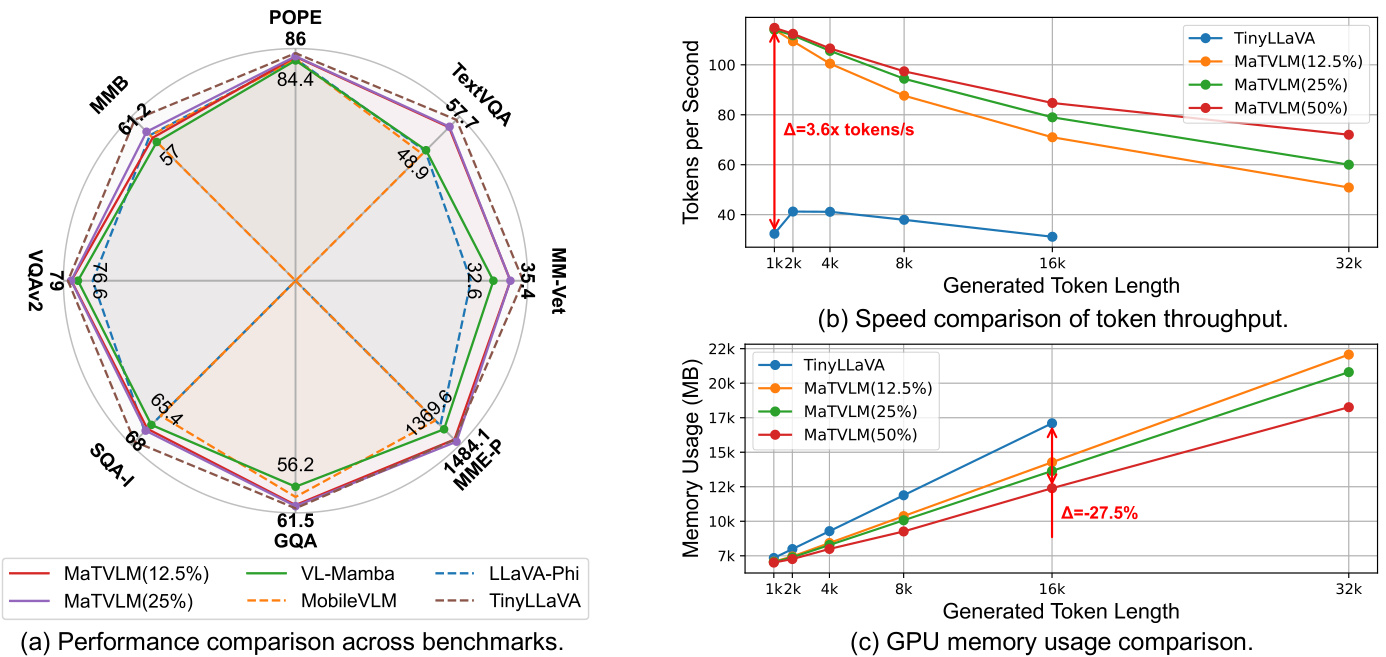

Figure 1. Comprehensive comparison of our MaTVLM. (a) Performance comparison across multiple benchmarks. Our MaTVLM achieves competitive results with the teacher model TinyLLaVA, surpassing existing VLMs with similar parameter scales, as well as Mamba-based VLMs. (b) Speed comparison of token throughput. Tokens generated per second for different token lengths. Our MaTVLM achieves a $3.6\times$ speedup compared to the teacher model TinyLLaVA. (c) GPU memory usage comparison. A detailed comparison of memory usage during inference for different token lengths, highlighting a $27.5\%$ reduction in usage for our MaTVLM over TinyLLaVA.

图 1: 我们的 MaTVLM 的综合对比。(a) 多个基准测试的性能对比。我们的 MaTVLM 在与教师模型 TinyLLaVA 的竞争中取得了优异的结果,超越了现有参数规模相似的视觉语言模型 (VLM) 以及基于 Mamba 的 VLM。(b) Token 吞吐速度对比。不同 Token 长度下每秒生成的 Token 数量。我们的 MaTVLM 相比教师模型 TinyLLaVA 实现了 $3.6\times$ 的加速。(c) GPU 内存使用对比。不同 Token 长度下推理过程中的内存使用详细对比,展示了我们的 MaTVLM 相比 TinyLLaVA 在内存使用上优化了 $27.5%$ 的优势。

Abstract

摘要

With the advancement of RNN models with linear complexity, the quadratic complexity challenge of transformers has the potential to be overcome. Notably, the emerging Mamba-2 has demonstrated competitive performance, bridging the gap between RNN models and transformers. However, due to sequential processing and vanishing gradients, RNN models struggle to capture long-range dependencies, limiting contextual understanding. This results in slow convergence, high resource demands, and poor performance on downstream understanding and complex reasoning tasks. In this work, we present a hybrid model, MaTVLM, by substituting a portion of the transformer decoder layers in a pre-trained VLM with Mamba-2 layers. Leveraging the inherent relationship between attention and Mamba-2, we initialize Mamba-2 with the corresponding attention weights to accelerate convergence. Subsequently, we employ a single-stage distillation process, using the pre-trained VLM as the teacher model to transfer knowledge to the MaTVLM, further enhancing convergence speed and performance. Furthermore, we investigate the impact of differential distillation loss within our training framework. We evaluate the MaTVLM on multiple benchmarks, demonstrating competitive performance against the teacher model and existing VLMs while surpassing both Mamba-based VLMs and models of comparable parameter scales. Remarkably, the MaTVLM achieves up to $3.6\times$ faster inference than the teacher model while reducing GPU memory consumption by $27.5\%$, all without compromising performance. Code and models are released

随着线性复杂度的RNN模型的进步,Transformer的二次复杂度挑战有望被克服。值得注意的是,新兴的Mamba-2已经展示了与Transformer相媲美的性能,缩小了RNN模型与Transformer之间的差距。然而,由于顺序处理和梯度消失问题,RNN模型难以捕捉长距离依赖关系,限制了上下文理解能力。这导致收敛速度慢、资源需求高,且在下游理解和复杂推理任务上表现不佳。在本研究中,我们提出了一种混合模型MaTVLM,通过在预训练的VLM中用Mamba-2层替换部分Transformer解码器层。利用注意力机制与Mamba-2之间的固有关系,我们用相应的注意力权重初始化Mamba-2以加速收敛。随后,我们采用单阶段蒸馏过程,使用预训练的VLM作为教师模型,将知识转移到MaTVLM中,进一步提高了收敛速度和性能。此外,我们还研究了训练框架中差异蒸馏损失的影响。我们在多个基准上评估了MaTVLM,展示了其与教师模型和现有VLM相媲美的性能,同时超越了基于Mamba的VLM和参数规模相当的模型。值得注意的是,MaTVLM在保持性能的同时,推理速度比教师模型快达$3.6\times$,GPU内存消耗减少了$27.5%$。代码和模型已发布。

1. Introduction

1. 引言

Large vision-language models (VLMs) have rapidly advanced in recent years [4, 11, 12, 28, 38, 39, 49, 63]. VLMs are predominantly built on the transformer architecture. However, due to the quadratic complexity of transformers with respect to sequence length, VLMs are computationally intensive for both training and inference. Recently, several RNN models [17, 19, 23, 45, 56] have emerged as potential alternatives to transformers, offering linear scaling with respect to sequence length. Notably, Mamba [17, 23] has shown exceptional performance in long-range sequence tasks, surpassing transformers in computational efficiency.

近年来,大视觉语言模型 (VLMs) 取得了快速进展 [4, 11, 12, 28, 38, 39, 49, 63]。VLMs 主要基于 Transformer 架构。然而,由于 Transformer 在序列长度上的二次复杂度,VLMs 在训练和推理过程中计算量巨大。最近,一些 RNN 模型 [17, 19, 23, 45, 56] 作为 Transformer 的潜在替代方案出现,提供了与序列长度成线性比例的计算复杂度。值得注意的是,Mamba [17, 23] 在长序列任务中表现出色,在计算效率上超越了 Transformer。

Several studies [29, 42, 62] have explored integrating the Mamba architecture into VLMs by replacing transformer-based large language models (LLMs) with Mamba-based LLMs. These works have demonstrated competitive performance while achieving significant gains in inference speed. However, these approaches have several limitations: (1) Mamba employs sequential processing, which limits its ability to capture global context compared to transformers, thereby restricting these VLMs’ performance in complex reasoning and problem-solving tasks [52, 55]; (2) The sequential nature of Mamba results in inefficient gradient propagation during long-sequence training, leading to slow convergence when training VLMs from scratch. As a result, the high computational cost and the large amount of training data required become significant bottlenecks for these VLMs; (3) The current training scheme for these VLMs is complex, requiring multi-stage training to achieve optimal performance. This process is both time-consuming and computationally expensive, making it difficult to scale Mamba-based VLMs for broader applications.

多项研究 [29, 42, 62] 探索了通过将基于 Transformer 的大语言模型 (LLM) 替换为基于 Mamba 的 LLM,将 Mamba 架构集成到视觉语言模型 (VLM) 中。这些工作展示了具有竞争力的性能,同时在推理速度上取得了显著提升。然而,这些方法存在一些局限性:(1) Mamba 采用顺序处理,与 Transformer 相比,其在捕捉全局上下文方面的能力有限,从而限制了这些 VLM 在复杂推理和问题解决任务中的表现 [52, 55];(2) Mamba 的顺序特性导致在长序列训练期间梯度传播效率低下,导致从头训练 VLM 时收敛速度较慢。因此,高计算成本和所需的大量训练数据成为这些 VLM 的主要瓶颈;(3) 当前这些 VLM 的训练方案复杂,需要多阶段训练才能达到最佳性能。这一过程既耗时又计算成本高,使得基于 Mamba 的 VLM 难以扩展到更广泛的应用中。

To address the aforementioned issues, we propose a novel Mamba-Transformer Vision-Language Model (MaTVLM) that integrates Mamba-2 and transformer components, striking a balance between computational efficiency and overall performance. First, attention and Mamba are inherently connected: removing the softmax from attention transforms it into a linear RNN, revealing its structural similarity to Mamba. We analyze this relationship in detail in Sec. 3.2. Furthermore, studies applying Mamba to large language models (LLMs) [47, 48] have demonstrated that hybrid Mamba models outperform both pure Mamba-based and transformer-based models on certain tasks. Motivated by this connection and these empirical findings, combining Mamba with transformer components presents a promising direction, offering a trade-off between improved reasoning capabilities and computational efficiency. Specifically, we adopt TinyLLaVA [63] as the base VLM and replace a portion of its transformer decoder layers with Mamba decoder layers while keeping the rest of the model unchanged.

为了解决上述问题,我们提出了一种新颖的 Mamba-Transformer 视觉-语言模型 (MaTVLM),该模型集成了 Mamba-2 和 Transformer 组件,在计算效率和整体性能之间取得了平衡。首先,注意力机制和 Mamba 本质上是相互关联的,从注意力机制中移除 softmax 会将其转化为线性 RNN,揭示了其与 Mamba 的结构相似性。我们将在第 3.2 节中详细分析这一关系。此外,将 Mamba 应用于大语言模型 (LLM) 的研究 [47, 48] 表明,在某些任务上,混合 Mamba 的模型优于纯 Mamba 和纯 Transformer 模型。基于这种关联和实证结果,将 Mamba 与 Transformer 组件结合是一个有前景的方向,能够在提升推理能力和计算效率之间取得平衡。具体而言,我们采用 TinyLLaVA [63] 作为基础 VLM,并将其部分 Transformer 解码器层替换为 Mamba 解码器层,同时保持模型其余部分不变。

To minimize the training cost of the MaTVLM while maximizing its performance, we propose to distill knowledge from the pre-trained base VLM. Firstly, we initialize Mamba-2 with the corresponding attention’s weights as mentioned in Sec. 3.2, which is important to accelerate the convergence of Mamba-2 layers. Moreover, during distillation training, we employ both probability distribution and layer-wise distillation losses to guide the learning process, making only Mamba-2 layers trainable while keeping transformer layers fixed. Notably, unlike most VLMs that require complex multi-stage training, our approach involves a single-stage distillation process.

为了在最小化 MaTVLM 训练成本的同时最大化其性能,我们提出从预训练的基础 VLM 中蒸馏知识。首先,我们按照第 3.2 节中提到的方法,使用相应的注意力权重初始化 Mamba-2,这对于加速 Mamba-2 层的收敛非常重要。此外,在蒸馏训练过程中,我们采用概率分布和逐层蒸馏损失来指导学习过程,仅使 Mamba-2 层可训练,而保持 Transformer 层固定。值得注意的是,与大多数需要复杂多阶段训练的 VLM 不同,我们的方法仅涉及单阶段蒸馏过程。

Despite the simplified training approach, our model demonstrates comprehensive performance across multiple benchmarks, as illustrated in Fig. 1. It exhibits competitive results when compared to the teacher model, TinyLLaVA, and outperforms Mamba-based VLMs as well as other transformer-based VLMs with similar parameter scales. The efficiency of our model is further emphasized by a $3.6\times$ speedup and a $27.5\%$ reduction in memory usage, thereby confirming its practical advantages in real-world applications. These results underscore the effectiveness of our approach, providing a promising avenue for future advancements in model development and optimization.

尽管采用了简化的训练方法,我们的模型在多个基准测试中展现了全面的性能,如图 1 所示。与教师模型 TinyLLaVA 相比,它表现出具有竞争力的结果,并且超越了基于 Mamba 的视觉语言模型 (VLM) 以及其他具有相似参数规模的基于 Transformer 的视觉语言模型。我们模型的效率进一步体现在 $3.6\times$ 的加速和 $27.5%$ 的内存使用减少上,从而确认了其在实际应用中的优势。这些结果凸显了我们方法的有效性,为未来模型开发和优化提供了有前景的方向。

In summary, this paper makes three significant contributions:

总之,本文做出了三项重要贡献:

• We propose a new hybrid VLM architecture, MaTVLM, that effectively integrates Mamba-2 and transformer components, balancing computational efficiency with high performance.

• We propose a novel single-stage knowledge distillation approach for Mamba-Transformer hybrid VLMs. By leveraging pre-trained knowledge, our method accelerates convergence, enhances model performance, and strengthens visual-linguistic understanding.

• We demonstrate that our approach achieves a $3.6\times$ faster inference speed and a $27.5\%$ reduction in memory usage while maintaining performance competitive with the base VLM. Moreover, it outperforms Mamba-based VLMs and existing VLMs with similar parameter scales across multiple benchmarks.

• 我们提出了一种新的混合视觉语言模型 (VLM) 架构 MaTVLM,它有效地集成了 Mamba-2 和 Transformer 组件,在计算效率和高性能能力之间取得了平衡。

• 我们提出了一种新颖的单阶段知识蒸馏方法,适用于 Mamba-Transformer 混合视觉语言模型。通过利用预训练知识,我们的方法加速了收敛,增强了模型性能,并加强了视觉-语言理解能力。

• 我们证明了我们的方法在保持基础视觉语言模型竞争力的同时,显著实现了推理速度提升 $3.6\times$ 和内存使用减少 $27.5%$。此外,它在多个基准测试中优于基于 Mamba 的视觉语言模型和具有相似参数规模的现有视觉语言模型。

2. Related Work

2. 相关工作

2.1. Efficient VLMs

2.1. 高效视觉语言模型 (Efficient VLMs)

In recent years, efficient and lightweight VLMs have advanced significantly. Several academic-oriented VLMs, such as TinyLLaVA-3.1B [63], MobileVLM-3B [14], and LLaVA-Phi [65], have been developed to improve efficiency. Meanwhile, commercially oriented models like Qwen2.5-VL-3B [5], InternVL2.5-2B [10], and others achieve remarkable performance by leveraging large-scale datasets with high-resolution images and long-context text.

近年来,高效且轻量级的视觉语言模型(VLM)取得了显著进展。一些面向学术研究的VLM,如TinyLLaVA-3.1B [63]、MobileVLM-3B [14] 和 LLaVA-Phi [65],已被开发出来以提高效率。与此同时,面向商业的模型如 Qwen2.5-VL-3B [5]、InternVL2.5-2B [10] 等,通过利用包含高分辨率图像和长上下文文本的大规模数据集,实现了卓越的性能。

Our work prioritizes efficiency and resource constraints over large-scale, commercially oriented training. Unlike previous approaches, by integrating Mamba-2 [17], our method achieves competitive performance while significantly reducing computational demands, making it well-suited for deployment in resource-limited environments.

我们的工作优先考虑效率和资源限制,而非大规模、商业导向的训练。与以往方法不同,通过集成 Mamba-2 [17],我们的方法在显著降低计算需求的同时实现了具有竞争力的性能,非常适合在资源有限的环境中部署。

2.2. Structured State Space Models

2.2. 结构化状态空间模型

Structured state space models (S4) [17, 23, 24, 26, 41, 44] scale linearly with sequence length. Mamba [23] introduces selective SSMs, while Mamba-2 [17] refines this by linking SSMs to attention variants, achieving a $2{-}8\times$ speedup and performance comparable to transformers. Mamba-based VLMs [29, 42, 62, 66] primarily replace the transformer-based large language model (LLM) entirely with a pre-trained Mamba-2 language model, achieving both competitive performance and enhanced computational efficiency.

结构化状态空间模型 (S4) [17, 23, 24, 26, 41, 44] 能够以线性方式随着序列长度高效扩展。Mamba [23] 引入了选择性 SSMs,而 Mamba2 [17] 通过将 SSMs 与注意力变体联系起来,进一步优化了这一模型,实现了 $ {2-8\times}$ 的加速,并且性能可与 Transformer 相媲美。基于 Mamba 的视觉语言模型 (VLMs) [29, 42, 62, 66] 主要将基于 Transformer 的大语言模型 (LLMs) 完全替换为预训练的 Mamba-2 语言模型,既实现了有竞争力的性能,又提高了计算效率。

Our work innovatively integrates Mamba and transformer within VLMs, combining their strengths rather than entirely replacing transformers with Mamba-2. By adopting a hybrid approach and introducing a single-stage distillation strategy, we enhance model expressiveness, improve efficiency, and achieve superior performance over previous Mamba-based VLMs while maintaining computational efficiency for practical deployment.

我们的工作创新性地将Mamba和Transformer集成到视觉语言模型(VLM)中,结合了两者的优势,而不是完全用Mamba-2替代Transformer。通过采用混合方法并引入单阶段蒸馏策略,我们增强了模型的表达能力,提高了效率,并在保持计算效率的同时,超越了之前基于Mamba的VLM性能,使其更适合实际部署。

2.3. Hybrid Mamba and Transformer

2.3. 混合 Mamba 和 Transformer

Recent works, such as Mamba in the Llama [48] and MOHAWK [6], demonstrate the effectiveness of hybrid Mamba-Transformer architectures in LLMs, achieving notable improvements in efficiency and performance. Additionally, MambaVision [27] extends this hybrid approach to vision models, introducing a Mamba-Transformer-based backbone that excels in image classification and other vision-related tasks, showcasing the potential of integrating SSMs with transformers.

最近的研究,如 Mamba In Llama [48] 和 MOHAWK [6],展示了混合 Mamba-Transformer 架构在大语言模型中的有效性,在效率和性能上取得了显著提升。此外,Mamba Vision [27] 将这种混合方法扩展到视觉模型中,引入了一种基于 Mamba-Transformer 的主干网络,在图像分类和其他视觉相关任务中表现出色,展示了将 SSM 与 Transformer 结合的潜力。

Unlike previous studies on LLMs or vision backbones, our work extends the hybrid Mamba-Transformer to VLMs and designs a concise architecture with an efficient single-stage distillation strategy, enhancing convergence, reducing inference time, and lowering memory consumption for practical deployment.

与之前关于大语言模型或视觉主干网络的研究不同,我们的工作将混合 Mamba-Transformer 扩展到视觉语言模型 (VLM),并设计了一种简洁的架构,采用高效的单阶段蒸馏策略,增强了收敛性,减少了推理时间,并降低了实际部署中的内存消耗。

2.4. Knowledge Distillation

2.4. 知识蒸馏 (Knowledge Distillation)

More recently, knowledge distillation for LLMs has gained attention [2, 25, 32, 50, 53], while studies on VLM distillation remain limited [20, 51, 54]. DistillVLM [20] uses MSE loss to align attention and feature maps, MAD [51] aligns visual and text tokens, and LLAVADI [54] highlights the importance of joint token and logit alignment.

最近,大语言模型 (LLM) 的知识蒸馏受到了关注 [2, 25, 32, 50, 53],而视觉语言模型 (VLM) 蒸馏的研究仍然有限 [20, 51, 54]。DistillVLM [20] 使用 MSE 损失来对齐注意力和特征图,MAD [51] 对齐视觉和文本 Token,LLAVADI [54] 强调了联合 Token 和 logit 对齐的重要性。

Building on these advancements, we integrate knowledge distillation into a hybrid Mamba-Transformer framework with a single-stage distillation strategy to transfer knowledge from a transformer-based teacher model. This improves convergence, enhances performance, and reduces computational costs for efficient VLM deployment.

在这些进展的基础上,我们将知识蒸馏(knowledge distillation)整合到一个混合的 Mamba-Transformer 框架中,采用单阶段蒸馏策略,从基于 Transformer 的教师模型中传递知识。这提高了收敛性,增强了性能,并降低了计算成本,以实现高效的可视语言模型(VLM)部署。

3. Method

3. 方法

Large vision-language models (VLMs) process longer sequences than LLMs, resulting in slower training and inference. As previously mentioned, Mamba-2 architecture exhibits linear scaling and offers significantly higher efficiency compared to transformer. To leverage these advantages, we propose a hybrid VLM architecture MaTVLM that integrates Mamba-2 and transformer components, aiming to balance computational efficiency with optimal performance.

大型视觉-语言模型 (VLMs) 处理的序列比大语言模型更长,导致训练和推理速度较慢。如前所述,Mamba-2 架构表现出线性扩展性,并且与 Transformer 相比提供了显著更高的效率。为了利用这些优势,我们提出了一种混合 VLM 架构 MaTVLM,它集成了 Mamba-2 和 Transformer 组件,旨在在计算效率和最佳性能之间取得平衡。

3.1. Mamba Preliminaries

3.1. Mamba 基础

Mamba [23] is mainly built upon the structured state-space sequence models (S4) as in Eq. 1, which are a recent development in sequence modeling for deep learning, with strong connections to RNNs, CNNs, and classical state space models.

Mamba [23] 主要建立在结构化状态空间序列模型 (S4) 的基础上,如公式 1 所示。这些模型是深度学习序列建模的最新进展,与 RNNs、CNNs 和经典状态空间模型有很强的联系。

Mamba introduces selective state space models (selective SSMs), as shown in Eq. 2. Unlike the standard linear time-invariant (LTI) formulation in Eq. 1, selective SSMs can selectively focus on or ignore inputs at each timestep. They have been shown to outperform LTI SSMs on information-rich tasks such as language processing, especially as the state size $N$ grows and the model can hold a larger capacity of information.

Mamba 引入了选择性状态空间模型 (Selective SSMs),如公式 2 所示,与标准的线性时不变 (LTI) 公式 1 不同,它能够在每个时间步选择性地关注或忽略输入。其性能在信息丰富的任务(如语言处理)上已显示出超越 LTI SSMs 的能力,尤其是在状态大小 $N$ 增加时,使其能够处理更大容量的信息。
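Eq. 1 and Eq. 2 are referenced above, but their display forms are not present in this copy. As a sketch, the discrete-time forms commonly used in the Mamba literature [17, 23] are given here; the exact notation the authors use is an assumption.

$$
h_t = A\,h_{t-1} + B\,x_t, \qquad y_t = C^{\top} h_t \tag{1}
$$

$$
h_t = A_t\,h_{t-1} + B_t\,x_t, \qquad y_t = C_t^{\top} h_t \tag{2}
$$

Here $h_t$ is the hidden state of size $N$, $x_t$ the input, and $y_t$ the output. In the LTI form (1), $A$, $B$, and $C$ are shared across timesteps. In the selective form (2) they carry the index $t$ because they are computed from the input, which is what allows the model to keep or discard individual inputs.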

Mamba-2 [17] advances Mamba’s selective SSMs by introducing the state-space duality (SSD) framework, which establishes a theoretical link between SSMs and various attention mechanisms through different decompositions of structured semi-separable matrices. Leveraging this framework, Mamba-2 achieves $2{-}8\times$ faster computation while maintaining competitive performance with transformers.

Mamba-2 [17] 通过引入状态空间对偶性 (SSD) 框架,推进了 Mamba 的选择性 SSM(状态空间模型)。该框架通过结构化半可分矩阵的不同分解,建立了 SSM 与各种注意力机制之间的理论联系。利用这一框架,Mamba-2 在保持与 Transformer 竞争性能的同时,实现了 $2{-}8\times$ 的计算加速。
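The duality between the recurrent view and the attention-like matrix view can be illustrated numerically. The sketch below is a toy example, not the optimized SSD algorithm of [17]: it assumes a scalar decay $a_t$ per timestep and a single input channel, and the function names are hypothetical. It checks that running the recurrence gives the same outputs as multiplying the input by the lower-triangular matrix $L \circ (C B^{\top})$ with $L_{t,s} = a_{s+1} \cdots a_t$ for $s \le t$.

```python
import numpy as np

def ssm_recurrent(a, B, C, x):
    """Recurrent view: h_t = a_t * h_{t-1} + B_t * x_t, y_t = C_t . h_t."""
    n, state_size = B.shape
    h = np.zeros(state_size)
    y = np.zeros(n)
    for t in range(n):
        h = a[t] * h + B[t] * x[t]
        y[t] = C[t] @ h
    return y

def ssm_matrix(a, B, C, x):
    """Matrix view: y = (L * (C B^T)) x, with L[t, s] = a[s+1] * ... * a[t]."""
    n = len(a)
    L = np.zeros((n, n))
    for t in range(n):
        for s in range(t + 1):
            # The product over an empty slice is 1, which covers the s == t case.
            L[t, s] = np.prod(a[s + 1 : t + 1])
    return (L * (C @ B.T)) @ x

rng = np.random.default_rng(0)
n, state_size = 6, 4
a = rng.uniform(0.5, 1.0, size=n)        # per-step scalar decay
B = rng.normal(size=(n, state_size))
C = rng.normal(size=(n, state_size))
x = rng.normal(size=n)
print(np.allclose(ssm_recurrent(a, B, C, x), ssm_matrix(a, B, C, x)))
```

The recurrent form costs time linear in the sequence length, while the matrix form materializes an $n \times n$ matrix, which mirrors the linear-versus-quadratic trade-off discussed in this section.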

3.2. Hybrid Attention with Mamba for VLMs

3.2. 混合注意力与 Mamba 用于视觉语言模型 (VLMs)

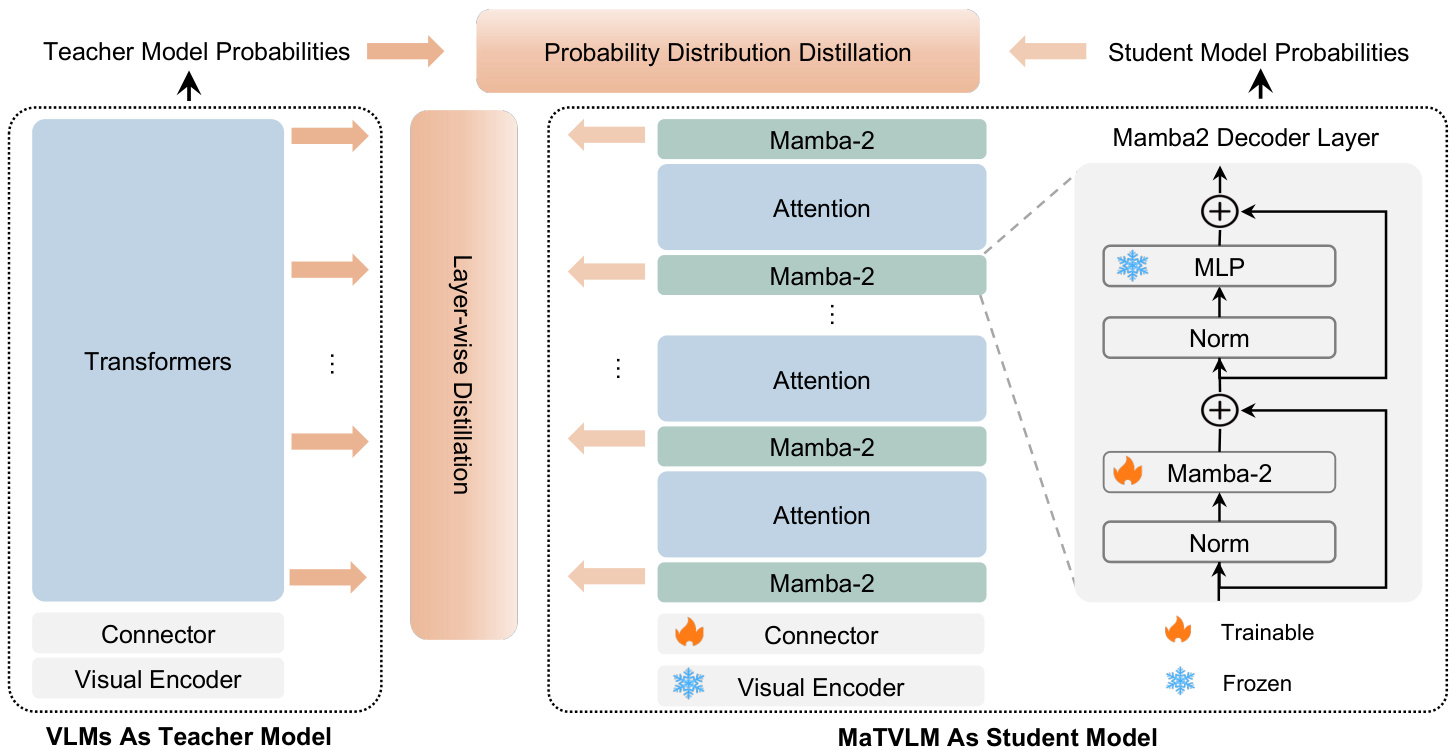

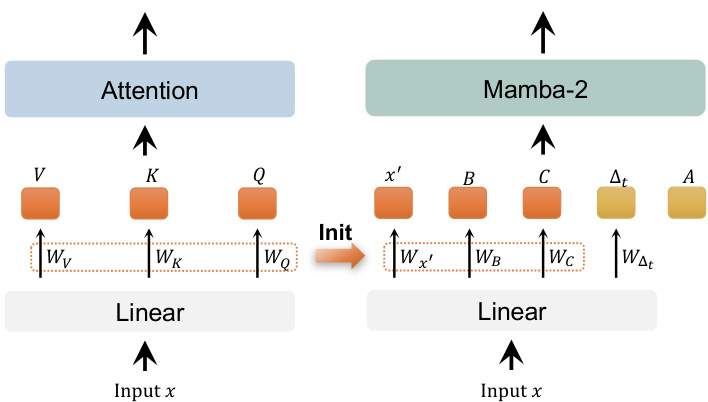

As shown in Fig. 2, the MaTVLM is built upon the pre-trained VLMs, comprising a vision encoder, a connector, and a language model. The language model originally consists of transformer decoder layers, some of which are replaced with Mamba-2 decoder layers in our model. This replacement changes only the attention into Mamba-2 while leaving other components unchanged. Based on the configured proportions (e.g., $12.5\%$, $25\%$) of Mamba-2 decoder layers, we distribute them at equal intervals. Given that Mamba-2 shares certain connections with attention, some weights can be partially initialized from the original transformer layers, as detailed below.

如图 2 所示,MaTVLM 基于预训练的视觉语言模型 (VLM) 构建,包含视觉编码器、连接器和语言模型。语言模型最初由 Transformer 解码器层组成,在我们的模型中,部分层被替换为 Mamba-2 解码器层。这种替换仅将注意力机制修改为 Mamba-2,而其他组件保持不变。根据配置的 Mamba-2 解码器层比例 (例如 $12.5%$ , $25%$ ),我们以等间隔分布它们。鉴于 Mamba-2 与注意力机制存在某些关联,部分权重可以从原始的 Transformer 层部分初始化,具体如下所述。
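The equal-interval placement can be sketched as a small helper. The text specifies only the proportion and the equal spacing; the starting offset (layer 0 here), the rounding rule, the 32-layer depth used in the example, and the function name are assumptions made for illustration.

```python
def mamba_layer_indices(num_layers: int, ratio: float) -> list[int]:
    """Pick decoder layer indices to replace with Mamba-2, spaced at equal intervals.

    Assumption: placement starts at layer 0; the source does not state the offset.
    """
    num_mamba = round(num_layers * ratio)
    if num_mamba <= 0:
        return []
    stride = num_layers / num_mamba
    return [int(i * stride) for i in range(num_mamba)]

# Hypothetical 32-layer decoder, used only as an example depth.
print(mamba_layer_indices(32, 0.125))
print(mamba_layer_indices(32, 0.25))
```

A different offset (for example, centering each Mamba-2 layer within its interval) would satisfy the same equal-interval description.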

Figure 2. The proposed MaTVLM integrates both Mamba-2 and transformer components. The model consists of a vision encoder, a connector, and a language model, the same as in the base VLM. The language model is composed of both transformer decoder layers and Mamba-2 decoder layers, where Mamba-2 layers replace only attention in transformer layers, while the other components remain unchanged. The model is trained using a knowledge distillation approach, incorporating probability distribution and layer-wise distillation loss. During the distillation training, only Mamba-2 layers and the connector are trainable, while transformer layers remain fixed.

图 2: 提出的 MaTVLM 结合了 Mamba-2 和 Transformer 组件。该模型由视觉编码器、连接器和语言模型组成,与基础 VLM 相同。语言模型由 Transformer 解码器层和 Mamba2 解码器层组成,其中 Mamba-2 层仅替换了 Transformer 层中的注意力机制,其他组件保持不变。该模型采用知识蒸馏方法进行训练,结合了概率分布和逐层蒸馏损失。在蒸馏训练期间,只有 Mamba-2 层和连接器是可训练的,而 Transformer 层保持固定。

Formally, for $x_{t}$ in the input sequence $x = [x_{1},x_{2},\ldots,x_{n}]$, attention in a transformer decoder layer is defined as:

形式上,对于输入序列 $x = [x_{1},x_{2},\ldots,x_{n}]$ 中的 $x_{t}$,Transformer 解码器层中的注意力机制定义为:

where $d$ is the dimension of the input embedding, and $W_{Q}$ , $W_{K}$ , and $W_{V}$ are learnable weights.

其中 $d$ 是输入嵌入的维度,$W_{Q}$、$W_{K}$ 和 $W_{V}$ 是可学习的权重。
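The display form of Eq. 3 is not present in this copy. A reconstruction consistent with the definitions above, assuming standard single-head causal attention with column vectors $Q_t = W_Q x_t$, $K_s = W_K x_s$, and $V_s = W_V x_s$, is:

$$
y_t = \sum_{s=1}^{t} \alpha_{t,s}\, V_s, \qquad
\alpha_{t,s} = \frac{\exp\!\left(Q_t^{\top} K_s / \sqrt{d}\right)}{\sum_{r=1}^{t} \exp\!\left(Q_t^{\top} K_r / \sqrt{d}\right)} \tag{3}
$$

The sum runs only over $s \le t$ because a decoder layer attends causally. The authors' exact notation may differ.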

When the softmax operation in Eq. 3 is removed, the attention becomes:

当移除公式 3 中的 softmax 操作时,注意力变为:

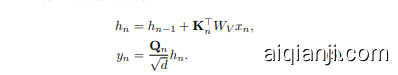

The above results can be reformulated in the form of a linear RNN as follows:

上述结果可以重新表述为以下形式的线性 RNN:
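Eq. 4 and Eq. 5 are likewise missing from this copy. Under the same assumptions as for standard causal attention (column vectors $Q_t$, $K_s$, $V_s$ obtained from $W_Q$, $W_K$, $W_V$), a plausible reconstruction is as follows. Dropping the softmax leaves only the scaled dot products:

$$
y_t = \frac{1}{\sqrt{d}} \sum_{s=1}^{t} \left(Q_t^{\top} K_s\right) V_s
    = \frac{1}{\sqrt{d}} \left( \sum_{s=1}^{t} V_s K_s^{\top} \right) Q_t \tag{4}
$$

The bracketed sum depends only on positions up to $t$, so it can be accumulated as a matrix-valued hidden state $h_t$:

$$
h_t = h_{t-1} + V_t K_t^{\top}, \qquad y_t = \frac{1}{\sqrt{d}}\, h_t\, Q_t, \qquad h_0 = 0 \tag{5}
$$

The second equality in (4) uses $(Q_t^{\top} K_s) V_s = V_s (K_s^{\top} Q_t)$, since $Q_t^{\top} K_s$ is a scalar.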

Comparing Eq. 5 with Eq. 2, we can observe the following mapping relationships between them:

将方程 5 与方程 2 进行比较,我们可以观察到它们之间的以下映射关系:
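The mapping itself is not shown in this copy. Going by the correspondence stated in the Figure 3 caption, it pairs $x_t$ with $V_t$, $B_t$ with $K_t$, and $C_t$ with $Q_t$; taking the state transition as the identity is an assumption that follows from softmax-free attention having no decay term. The NumPy sketch below, with hypothetical function names, checks numerically that a linear RNN under this mapping reproduces causal softmax-free attention.

```python
import numpy as np

def softmax_free_attention(Q, K, V):
    """Causal attention without softmax: y_t = sum_{s<=t} (Q_t . K_s) V_s / sqrt(d)."""
    d = Q.shape[1]
    scores = np.tril(Q @ K.T) / np.sqrt(d)   # lower triangle keeps s <= t only
    return scores @ V

def linear_rnn(Q, K, V):
    """Same computation as a recurrence with B_t = K_t, x_t = V_t, C_t = Q_t, A_t = 1."""
    n, d = Q.shape
    h = np.zeros((d, V.shape[1]))            # matrix-valued hidden state
    out = np.zeros((n, V.shape[1]))
    for t in range(n):
        h = h + np.outer(K[t], V[t])         # state update: h_t = h_{t-1} + B_t x_t
        out[t] = Q[t] @ h / np.sqrt(d)       # readout: y_t = C_t h_t
    return out

rng = np.random.default_rng(0)
Q, K, V = (rng.normal(size=(5, 3)) for _ in range(3))
print(np.allclose(softmax_free_attention(Q, K, V), linear_rnn(Q, K, V)))
```

Mamba-2 additionally has an input-dependent decay and step size, which have no counterpart in attention; this is why those parameters cannot be copied from the transformer layer.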

Consequently, we initialize the aforementioned weights of Mamba-2 layers with the corresponding weights from transformer layers as shown in Fig. 3, while the remaining weights are initialized randomly. Apart from Mamba-2 layers, all other weights remain identical to those of the original transformer.

因此,我们使用图 3 中所示的 Transformer 层的相应权重来初始化 Mamba-2 层的上述权重,而其余权重则随机初始化。除了 Mamba-2 层之外,所有其他权重与原始 Transformer 保持一致。
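The initialization can be sketched with plain arrays. This is only an illustration: the dictionary keys, the parameter shapes, and the random-initialization scale are hypothetical, and real Mamba-2 implementations typically fuse these projections into a single input projection, so the copy would target slices of that matrix instead.

```python
import numpy as np

def init_mamba2_from_attention(attn_weights, rng, std=0.02):
    """Copy W_V, W_K, W_Q into the x, B, C projections; draw the rest at random.

    All key names and shapes are hypothetical placeholders for illustration.
    """
    d_model = attn_weights["W_V"].shape[1]
    return {
        "W_x": attn_weights["W_V"].copy(),   # x <- V projection
        "W_B": attn_weights["W_K"].copy(),   # B <- K projection
        "W_C": attn_weights["W_Q"].copy(),   # C <- Q projection
        "W_dt": rng.normal(0.0, std, size=(1, d_model)),  # step size: random init
        "A_log": rng.normal(0.0, std, size=(1,)),         # state decay: random init
    }

rng = np.random.default_rng(0)
attn = {name: rng.normal(size=(8, 8)) for name in ("W_Q", "W_K", "W_V")}
mamba = init_mamba2_from_attention(attn, rng)
print(np.array_equal(mamba["W_B"], attn["W_K"]))
```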

3.3. Knowledge Distilling Transformers into Hybrid Models

3.3. 将 Transformer 知识蒸馏到混合模型中

To further enhance the performance of the MaTVLM, we propose a knowledge distillation method that transfers knowledge from transformer layers to Mamba-2 layers. We use a pre-trained VLM as the teacher model and our MaTVLM as the student model. We will introduce the distillation strategies in the following.

为了进一步提升 MaTVLM 的性能,我们提出了一种知识蒸馏方法,将知识从 Transformer 层转移到 Mamba-2 层。我们使用预训练的 VLM 作为教师模型,并将我们的 MaTVLM 作为学生模型。我们将在下面介绍蒸馏策略。

Figure 3. We initialize certain weights of Mamba-2 from attention based on their correspondence. Specifically, the linear weights of $x,B,C$ in Mamba-2 are initialized from the linear weights of $V,K,Q$ in the attention mechanism. The remaining parameters, including $\Delta_{t}$ and $A$ , are initialized randomly.

图 3: 我们根据对应关系从注意力机制初始化 Mamba-2 的某些权重。具体来说,Mamba-2 中 $x,B,C$ 的线性权重是从注意力机制中 $V,K,Q$ 的线性权重初始化的。其余参数,包括 $\Delta_{t}$ 和 $A$,则是随机初始化的。

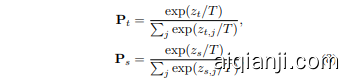

Probability Distribution Distillation. First, our goal is to minimize the distance between the output probability distributions of the teacher and student models, which are computed from the logits each model produces before the softmax function is applied. This approach is widely adopted in knowledge distillation, as aligning the output distributions allows the student model to gain a more nuanced understanding from the teacher model’s predictions. To achieve this, we use the Kullback-Leibler (KL) divergence with a temperature scaling factor as the loss function. The temperature factor adjusts the smoothness of the probability distributions, allowing the student model to capture finer details from the softened distribution of the teacher model. The loss function is defined as follows:

概率分布蒸馏

首先,我们的目标是最小化模型概率分布之间的距离,即模型在应用 softmax 函数之前输出的 logits。这种方法在知识蒸馏中被广泛采用,因为对齐模型的输出分布可以让学生模型从教师模型的预测中获得更细致的理解。为了实现这一点,我们使用带有温度缩放因子的 Kullback-Leibler (KL) 散度作为损失函数。温度因子调整概率分布的平滑度,使学生模型能够从教师模型的软化分布中捕捉到更精细的细节。损失函数定义如下:

The softened probabilities $P_{t}(i)$ and $P_{s}(i)$ are calculated by applying a temperature-scaled softmax function to the logits of the teacher and student models, respectively:

软化概率 $P_{t}(i)$ 和 $P_{s}(i)$ 分别通过对教师模型和学生模型的 logits 应用温度缩放的 softmax 函数来计算:

where $T$ is the temperature scaling factor (a higher temperature produces softer distributions), $z_{t}$ is the logit (pre-softmax output) from the teacher model, and $\hat{z}_{s}$ is the corresponding logit from the student model.

其中 $T$ 是温度缩放因子,较高的温度会产生更平滑的分布,$z_{t}$ 是教师模型的 logit(softmax 前的输出),$\hat{z}_{s}$ 是学生模型对应的 logit。
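The loss and softmax equations referred to above are missing from this copy. The standard temperature-scaled form is $P(i) = \exp(z(i)/T) / \sum_j \exp(z(j)/T)$ with loss $\sum_i P_{t}(i) \log\left(P_{t}(i)/P_{s}(i)\right)$. Whether the authors also multiply by $T^{2}$, as is common practice, cannot be recovered here, so the sketch below exposes that as an optional flag. The function names and the default temperature are assumptions.

```python
import numpy as np

def log_softmax_T(logits, T):
    """Numerically stable log-softmax of logits / T along the last axis."""
    z = logits / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def kd_kl_loss(teacher_logits, student_logits, T=2.0, scale_by_T2=True):
    """KL(P_t || P_s) between softened distributions, averaged over positions."""
    log_p_t = log_softmax_T(teacher_logits, T)
    log_p_s = log_softmax_T(student_logits, T)
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s), axis=-1).mean()
    # The T**2 factor is an assumption; it keeps gradient magnitudes comparable across T.
    return kl * T**2 if scale_by_T2 else kl

teacher = np.array([[1.0, 2.0, 3.0], [0.5, 0.1, -1.0]])
student = np.array([[0.8, 2.1, 2.5], [0.0, 0.3, -0.5]])
print(kd_kl_loss(teacher, student))
```

The loss is zero only when the student's softened distribution matches the teacher's at every position.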

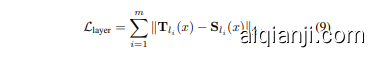

Layer-wise Distillation. Moreover, to ensure that each Mamba layer in the student model aligns with its corresponding layer in the teacher model, we adopt a layer-wise distillation strategy. Specifically, this approach minimizes the L2 distance between the outputs of Mamba layers in the student model and the corresponding transformer layers in the teacher model when provided with the same input. These inputs are generated from the previous layer of the teacher model, ensuring consistency and continuity of context. By aligning intermediate feature representations, the student model can more effectively replicate the hierarchical feature extraction process of the teacher model, thereby enhancing its overall performance. Assume the positions of the Mamba layers in the student model are $l=[l_{1},l_{2},\ldots,l_{m}]$. The corresponding loss function for this alignment is defined as:

分层蒸馏此外,为了确保学生模型中的每个 Mamba 层与其在教师模型中的对应层对齐,我们采用了分层蒸馏策略。具体来说,当提供相同输入时,该方法最小化学生模型中 Mamba 层输出与教师模型中对应 Transformer 层输出之间的 L2 范数。这些输入由教师模型的前一层生成,确保上下文的一致性和连续性。通过对齐中间特征表示,学生模型可以更有效地复制教师模型的分层特征提取过程,从而提高其整体性能。假设学生模型中 Mamba 层的位置为 $l=[l_{1},l_{2},\ldots,l_{m}]$,则此对齐的损失函数定义为:

where $\mathbf{T}_{l_{i}}(x)$ and $\mathbf{S}_{l_{i}}(x)$ represent the outputs of the teacher model and the student model at layer $l_{i}$, respectively.

其中,$\mathbf{T}_{l_{i}}(x)$ 和 $\mathbf{S}_{l_{i}}(x)$ 分别表示教师模型和学生模型在层 $l_{i}$ 的输出。
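The layer-wise loss equation is missing from this copy. From the description, a plausible form is $\sum_{i=1}^{m} \lVert \mathbf{T}_{l_{i}}(x) - \mathbf{S}_{l_{i}}(x) \rVert_{2}$; whether the norm is squared or averaged is an assumption. The sketch below, with hypothetical names and layers modeled as plain callables, highlights the detail stated in the text: each student Mamba layer receives the teacher's previous-layer output as its input.

```python
import numpy as np

def layerwise_distill_loss(teacher_layers, student_layers, mamba_positions, x):
    """Sum of L2 distances between teacher and student outputs at the Mamba positions."""
    loss = 0.0
    h = x
    for idx, teacher_layer in enumerate(teacher_layers):
        t_out = teacher_layer(h)
        if idx in mamba_positions:
            # The student layer sees the same input h, produced by the teacher's previous layer.
            s_out = student_layers[idx](h)
            loss += np.linalg.norm(t_out - s_out)
        h = t_out                              # always continue along the teacher path
    return loss

# Toy layers standing in for decoder layers.
teacher = [lambda h: h + 1.0, lambda h: 2.0 * h, lambda h: h - 1.0]
student = {1: lambda h: 2.0 * h + 0.5}         # only position 1 is a Mamba layer
print(layerwise_distill_loss(teacher, student, {1}, np.zeros(4)))
```

Because every student layer is fed teacher activations, errors in one Mamba layer do not propagate into the inputs of later ones during this loss computation.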

Sequence Prediction Loss. Finally, in addition to the distillation losses mentioned above, we also calculate the cross-entropy loss between the output sequence prediction of the student model and the ground truth. This loss guides the student model to learn the correct sequence prediction, which is crucial for the model to perform well on downstream tasks. The loss function is defined as:

序列预测损失

最后,除了上述提到的蒸馏损失外,我们还计算了学生模型的输出序列预测与真实标签之间的交叉熵损失。该损失用于指导学生模型学习正确的序列预测,这对于模型在下游任务中表现良好至关重要。损失函数定义如下:

where $y$ is the ground truth sequence, and $\hat{y_{s}}$ is the predicted sequence from the student model.

其中 $y$ 是真实序列,$\hat{y_{s}}$ 是学生模型的预测序列。
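The cross-entropy equation is missing from this copy. The standard form, written with the symbols defined above and with the loss name and token indexing taken as assumptions, is:

$$
\mathcal{L}_{\mathrm{ce}} = -\sum_{i} y(i)\, \log \hat{y}_{s}(i)
$$

Here $i$ ranges over the vocabulary entries at each predicted position, $y(i)$ is the one-hot ground-truth indicator, and $\hat{y}_{s}(i)$ is the probability the student assigns to entry $i$. In practice the sum is also averaged over the positions in the target sequence.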

Single-Stage Distillation Training. To fully harness the complementary strengths of the proposed distillation methods, we integrate the probability distribution loss, layer-wise distillation loss, and sequence prediction loss into a unified framework for single-stage distillation training. During training, the losses are combined with their respective weights as follows:

单阶段蒸馏训练

为了充分利用所提出的蒸馏方法的互补优势,我们将概率分布损失、分层蒸馏损失和序列预测损失整合到一个统一的单阶段蒸馏训练框架中。在训练过程中,我们将它们的权重设置如下:

where $\alpha,\beta,\gamma$ are hyperparameters that control the relative importance of each loss component.

其中 $\alpha,\beta,\gamma$ 是控制每个损失分量相对重要性的超参数。
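The combined objective is missing from this copy. Given the three losses and three weights named in the text, it is presumably a weighted sum; the loss symbols and the pairing of each weight with each loss (taken in the order they are listed) are assumptions.

$$
\mathcal{L} = \alpha\, \mathcal{L}_{\mathrm{prob}} + \beta\, \mathcal{L}_{\mathrm{layer}} + \gamma\, \mathcal{L}_{\mathrm{ce}}
$$

Setting one weight to zero removes the corresponding term, which is how the contribution of each loss can be studied in isolation.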

We will conduct a series of experiments to thoroughly investigate the individual contributions and interactions of these three loss functions. By analyzing their effects in isolation and in combination, we aim to gain deeper insights into how each loss function influences the student model’s learning process, the quality of intermediate representations, and the accuracy of final predictions. This will help us understand the specific role of each loss in enhancing the overall performance of the model and ensure that the chosen loss functions are effectively contributing to the optimization process.

我们将进行一系列实验,以深入探讨这三种损失函数的个体贡献及其相互作用。通过分析它们在单独和组合情况下的效果,我们旨在更深入地了解每种损失函数如何影响学生模型的学习过程、中间表示的质量以及最终预测的准确性。这将帮助我们理解每种损失在提升模型整体性能中的具体作用,并确保所选的损失函数能够有效地促进优化过程。

This single-stage framework efficiently combines two distillation objectives and one prediction task, allowing gradients from different loss components to flow seamlessly through the student model. The unified loss function not only accelerat