RESDSQL: Decoupling Schema Linking and Skeleton Parsing for Text-to-SQL

RESDSQL: 解耦文本到SQL的模式链接与骨架解析

Abstract

摘要

One of the recent best attempts at Text-to-SQL is the pretrained language model. Due to the structural property of the SQL queries, the seq2seq model takes the responsibility of parsing both the schema items (i.e., tables and columns) and the skeleton (i.e., SQL keywords). Such coupled targets increase the difficulty of parsing the correct SQL queries especially when they involve many schema items and logic operators. This paper proposes a ranking-enhanced encoding and skeleton-aware decoding framework to decouple the schema linking and the skeleton parsing. Specifically, for a seq2seq encoder-decode model, its encoder is injected by the most relevant schema items instead of the whole unordered ones, which could alleviate the schema linking effort during SQL parsing, and its decoder first generates the skeleton and then the actual SQL query, which could implicitly constrain the SQL parsing. We evaluate our proposed framework on Spider and its three robustness variants: Spider-DK, Spider-Syn, and Spider-Realistic. The experimental results show that our framework delivers promising performance and robustness. Our code is available at https://github.com/RUC KB Reasoning/RESDSQL.

近期在文本到SQL (Text-to-SQL) 领域最成功的尝试之一是预训练语言模型。由于SQL查询的结构特性,序列到序列 (seq2seq) 模型需要同时解析模式项 (即表和列) 和骨架 (即SQL关键字)。这种耦合目标增加了解析正确SQL查询的难度,尤其是当涉及大量模式项和逻辑运算符时。本文提出了一种基于排序增强的编码和骨架感知解码框架,以解耦模式链接和骨架解析。具体而言,对于seq2seq编码器-解码器模型,其编码器注入的是最相关的模式项而非整个无序集合,从而减轻SQL解析时的模式链接负担;其解码器首先生成骨架,再生成实际SQL查询,从而隐式约束SQL解析过程。我们在Spider及其三个鲁棒性变体 (Spider-DK、Spider-Syn和Spider-Realistic) 上评估了所提框架。实验结果表明,该框架展现出优异的性能和鲁棒性。代码已开源:https://github.com/RUCKBReasoning/RESDSQL。

Introduction

引言

Relational databases that are used to store heterogeneous data types including text, integer, float, etc., are omnipresent in modern data management systems. However, ordinary users usually cannot make the best use of databases because they are not good at translating their requirements to the database language—i.e., the structured query language (SQL). To assist these non-professional users in querying the databases, researchers propose the Text-to-SQL task (Yu et al. 2018a; Cai et al. 2018), which aims to automatically translate users’ natural language questions into SQL queries. At the same time, related benchmarks are becoming increasingly complex, from the single-domain bench- marks such as ATIS (Iyer et al. 2017) and GeoQuery (Zelle and Mooney 1996) to the cross-domain benchmarks such as WikiSQL (Zhong, Xiong, and Socher 2017) and Spider (Yu et al. 2018c). Most of the recent works are done on Spider because it is the most challenging benchmark which involves many complex SQL operators (such as GROUP BY, ORDER BY, and HAVING, etc.) and nested SQL queries.

用于存储文本、整数、浮点数等异构数据类型的关系型数据库在现代数据管理系统中无处不在。然而,普通用户通常无法充分利用数据库,因为他们不擅长将自己的需求转化为数据库语言——即结构化查询语言(SQL)。为了帮助这些非专业用户查询数据库,研究人员提出了Text-to-SQL任务(Yu et al. 2018a; Cai et al. 2018),旨在将用户的自然语言问题自动翻译为SQL查询。与此同时,相关基准测试正变得越来越复杂,从ATIS(Iyer et al. 2017)和GeoQuery(Zelle and Mooney 1996)等单领域基准,发展到WikiSQL(Zhong, Xiong, and Socher 2017)和Spider(Yu et al. 2018c)等跨领域基准。近期大多数工作都基于Spider开展,因为这是最具挑战性的基准测试,涉及GROUP BY、ORDER BY、HAVING等复杂SQL运算符以及嵌套SQL查询。

| Question What are flight numbers of flights departing from City "Aberdeen"? | |||||

| Databaseschema(includingtablesandcolumns) | |||||

| airlines (airlines) | |||||

| uid (airline | id) | airline (airline name) | abbreviation abbreviation) | country country | |

| airports (airports) | |||||

| city (city) | airportcode (airport code) | airportname airport name | country (country) | ||

| countryabbrev (country abbrev) | |||||

| flights (flights) | |||||

| airline (airline) | flightno (flight number) | sourceairport (source airport) | |||

| destairport (destination airport) | |||||

| Serializeschemaitems. Schemasequence | |||||

| airlines: uid, airline, abbreviation, county|airports: city, airportcode, airportname, county, countyabbrev| flights: airline,flightno, sourceairport, destairport | |||||

| Question+Schemasequence | |||||

| Seq2seq PLM (such as BART and T5) | |||||

| SQL query Select flights.flightno from flights join airports on flights.sourceairport = airports.airportcode where airports.city="Aberdeen" | |||||

问题 从城市“Aberdeen”出发的航班有哪些航班号?

数据库模式(包括表和列)

airlines (航空公司表)

uid (航空公司id) | id) | airline (航空公司名称) | abbreviation (缩写) | country (国家)

airports (机场表)

city (城市) | airportcode (机场代码) | airportname (机场名称) | country (国家)

countryabbrev (国家缩写表)

flights (航班表)

airline (航空公司) | flightno (航班号) | sourceairport (出发机场) | destairport (目的地机场)

序列化模式项。模式序列

airlines: uid, airline, abbreviation, county|airports: city, airportcode, airportname, county, countyabbrev| flights: airline, flightno, sourceairport, destairport

问题+模式序列

Seq2seq PLM (如 BART 和 T5)

SQL 查询 Select flights.flightno from flights join airports on flights.sourceairport = airports.airportcode where airports.city="Aberdeen"

With the recent advances in pre-trained language models (PLMs), many existing works formulate the Text-to-SQL task as a semantic parsing problem and use a sequence-tosequence (seq2seq) model to solve it (Scholak, Schucher, and Bahdanau 2021; Shi et al. 2021; Shaw et al. 2021). Concretely, as shown in Figure 1, given a question and a database schema, the schema items are serialized into a schema sequence where the order of the schema items is either default or random. Then, a seq2seq PLM, such as BART (Lewis et al. 2020) and T5 (Raffel et al. 2020), is leveraged to generate the SQL query based on the concatenation of the question and the schema sequence. We observe that the target SQL query contains not only the skeleton that reveals the logic of the question but also the required schema items. For instance, for a SQL query: “SELECT petid FROM pets WHERE pet age $=1^{\cdot\cdot}$ , its skeleton is “SELECT FROM WHERE ” and its required schema items are “petid”, “pets”, and “pet age”.

随着预训练语言模型 (PLM) 的最新进展,许多现有工作将Text-to-SQL任务表述为语义解析问题,并使用序列到序列 (seq2seq) 模型来解决 (Scholak, Schucher, and Bahdanau 2021; Shi et al. 2021; Shaw et al. 2021)。具体来说,如图 1 所示,给定一个问题和一个数据库模式,模式项被序列化为一个模式序列,其中模式项的顺序是默认的或随机的。然后,利用seq2seq PLM,如 BART (Lewis et al. 2020) 和 T5 (Raffel et al. 2020),基于问题和模式序列的连接生成SQL查询。我们观察到目标SQL查询不仅包含揭示问题逻辑的骨架,还包含所需的模式项。例如,对于SQL查询:“SELECT petid FROM pets WHERE pet age $=1^{\cdot\cdot}$”,其骨架是“SELECT FROM WHERE”,所需的模式项是“petid”、“pets”和“pet age”。

Since Text-to-SQL needs to perform not only the schema linking which aligns the mentioned entities in the question to schema items in the database schema, but also the skeleton parsing which parses out the skeleton of the SQL query, the major challenges are caused by a large number of required schema items and the complex composition of operators such as GROUP BY, HAVING, and JOIN ON involved in a SQL query. The intertwining of the schema linking and the skeleton parsing complicates learning even more.

由于Text-to-SQL不仅需要执行将问题中提到的实体与数据库模式中的模式项对齐的模式链接(schema linking),还需要解析出SQL查询骨架的骨架解析(skeleton parsing),主要挑战源于SQL查询涉及大量必需的模式项以及GROUP BY、HAVING和JOIN ON等运算符的复杂组合。模式链接与骨架解析的相互交织使学习过程更加复杂。

To investigate whether the Text-to-SQL task could become easier if the schema linking and the skeleton parsing are decoupled, we conduct a preliminary experiment on Spider’s dev set. Concretely, we fine-tune a T5-Base model to generate the pure skeletons based on the questions (i.e., skeleton parsing task). We observe that the exact match accuracy on such a task achieves about $80%$ using the fine-tuned T5-Base. However, even the T5-3B model only achieves about $70%$ accuracy (Shaw et al. 2021; Scholak, Schucher, and Bahdanau 2021). This pre-experiment indicates that decoupling such two objectives could be a potential way of reducing the difficulty of Text-to-SQL.

为了研究将模式链接(schema linking)和骨架解析(skeleton parsing)解耦后是否能简化Text-to-SQL任务,我们在Spider开发集上进行了初步实验。具体而言,我们微调了一个T5-Base模型来基于问题生成纯骨架(即骨架解析任务)。实验观察到,使用微调后的T5-Base模型在该任务上的精确匹配准确率可达约$80%$,而即使是T5-3B模型也仅能达到约$70%$的准确率(Shaw et al. 2021; Scholak, Schucher, and Bahdanau 2021)。这一预实验表明,解耦这两个目标可能是降低Text-to-SQL任务难度的潜在途径。

To realize the above decoupling idea, we propose a Ranking-enhanced Encoding plus a Skeleton-aware Decoding framework for Text-to-SQL (RESDSQL). The former injects a few but most relevant schema items into the seq2seq model’s encoder instead of all schema items. In other words, the schema linking is conducted beforehand to filter out most of the irrelevant schema items in the database schema, which can alleviate the difficulty of the schema linking for the seq2seq model. For such purpose, we train an additional cross-encoder to classify the tables and columns simultaneously based on the input question, and then rank and filter them according to the classification probabilities to form a ranked schema sequence. The latter does not add any new modules but simply allows the seq2seq model’s decoder to first generate the SQL skeleton, and then the actual SQL query. Since skeleton parsing is much easier than SQL parsing, the first generated skeleton could implicitly guide the subsequent SQL parsing via the masked self-attention mechanism in the decoder.

为实现上述解耦思想,我们提出了一种面向Text-to-SQL的排序增强编码与骨架感知解码框架(RESDSQL)。前者仅向seq2seq模型的编码器注入少量但最相关的模式项,而非全部模式项。换言之,通过预先执行模式链接来过滤数据库模式中大部分无关模式项,从而减轻seq2seq模型处理模式链接的难度。为此,我们额外训练了一个交叉编码器,基于输入问题同时分类表和列,再根据分类概率进行排序筛选,形成有序模式序列。后者不添加新模块,而是让seq2seq模型的解码器先生成SQL骨架,再生成实际SQL查询。由于骨架解析比SQL解析简单得多,首先生成的骨架可通过解码器中的掩码自注意力机制隐式指导后续SQL解析。

Contributions (1) We investigate a potential way of decoupling the schema linking and the skeleton parsing to reduce the difficulty of Text-to-SQL. Specifically, we propose a ranking-enhanced encoder to alleviate the effort of the schema linking and a skeleton-aware decoder to implicitly guide the SQL parsing by the skeleton. (2) We conduct extensive evaluation and analysis and show that our framework not only achieves the new state-of-the-art (SOTA) performance on Spider but also exhibits strong robustness.

贡献 (1) 我们研究了一种解耦模式链接 (schema linking) 和骨架解析 (skeleton parsing) 的潜在方法,以降低 Text-to-SQL 的难度。具体而言,我们提出了一个排名增强编码器 (ranking-enhanced encoder) 来减轻模式链接的工作量,以及一个骨架感知解码器 (skeleton-aware decoder) 通过骨架隐式指导 SQL 解析。 (2) 我们进行了广泛的评估和分析,结果表明我们的框架不仅在 Spider 上实现了新的最先进 (SOTA) 性能,而且表现出强大的鲁棒性。

Problem Definition

问题定义

Database Schema A relational database is denoted as $\mathcal{D}$ . The database schema $s$ of $\mathcal{D}$ includes (1) a set of $N$ tables $\begin{array}{l l l}{\mathcal{T}}&{=}&{{t_{1},t_{2},\cdots,t_{N}}}\end{array}$ , (2) a set of columns $\mathcal{C}={c_{1}^{1},\cdot\cdot\cdot,c_{n_{1}}^{1},c_{1}^{2},\cdot\cdot\cdot,c_{n_{2}}^{2},\cdot\cdot\cdot,c_{1}^{N},\cdot\cdot\cdot,c_{n_{N}}^{N}}$ associated with the tables, where $n_{i}$ is the number of columns in the $i\cdot$ -th table, (3) and a set of foreign key relations $\mathcal{R}=$ ${(c_{k}^{i},c_{h}^{j})|c_{k}^{i},c_{h}^{j}~\in~\mathcal{C}}$ , where each $(c_{k}^{i},c_{h}^{j})$ denotes a for- eign key relation between column $c_{k}^{i}$ and column $\boldsymbol{c}_{h}^{j}$ . We use $\begin{array}{r}{M=\sum_{i=1}^{N}n_{i}}\end{array}$ to denote the total number of columns in $\mathcal{D}$

数据库模式

一个关系型数据库表示为 $\mathcal{D}$。数据库模式 $s$ 包含:(1) 一组 $N$ 个表 $\begin{array}{l l l}{\mathcal{T}}&{=}&{{t_{1},t_{2},\cdots,t_{N}}}\end{array}$,(2) 与表关联的列集合 $\mathcal{C}={c_{1}^{1},\cdot\cdot\cdot,c_{n_{1}}^{1},c_{1}^{2},\cdot\cdot\cdot,c_{n_{2}}^{2},\cdot\cdot\cdot,c_{1}^{N},\cdot\cdot\cdot,c_{n_{N}}^{N}}$,其中 $n_{i}$ 是第 $i$ 个表的列数,(3) 外键关系集合 $\mathcal{R}=$ ${(c_{k}^{i},c_{h}^{j})|c_{k}^{i},c_{h}^{j}~\in~\mathcal{C}}$,其中每个 $(c_{k}^{i},c_{h}^{j})$ 表示列 $c_{k}^{i}$ 和列 $\boldsymbol{c}_{h}^{j}$ 之间的外键关系。我们用 $\begin{array}{r}{M=\sum_{i=1}^{N}n_{i}}\end{array}$ 表示 $\mathcal{D}$ 中的总列数。

Original Name and Semantic Name We use “schema items” to uniformly refer to tables and columns in the database. Each schema item can be represented by an original name and a semantic name. The semantic name can indicate the semantics of the schema item more precisely. As shown in Figure 1, it is obvious that the semantic names “airline id” and “destination airport” are more clear than their original names “uid” and “de st airport”. Sometimes the semantic name is the same as the original name.

原始名称与语义名称

我们使用“模式项”统一指代数据库中的表和列。每个模式项可由原始名称和语义名称表示,语义名称能更精确地体现模式项的语义。如图 1 所示,语义名称 "airline id" 和 "destination airport" 显然比原始名称 "uid" 和 "de st airport" 更清晰。有时语义名称会与原始名称相同。

Text-to-SQL Task Formally, given a question $q$ in natural language and a database $\mathcal{D}$ with its schema $s$ , the Text-toSQL task aims to translate $q$ into a SQL query $l$ that can be executed on $\mathcal{D}$ to answer the question $q$ .

Text-to-SQL任务的形式化定义为:给定自然语言问题$q$和带有模式$s$的数据库$\mathcal{D}$,该任务旨在将$q$转换为可执行于$\mathcal{D}$的SQL查询$l$,从而回答问题$q$。

Methodology

方法

In this section, we first give an overview of the proposed framework and then delve into its design details.

在本节中,我们首先概述所提出的框架,然后深入探讨其设计细节。

Model Overview

模型概述

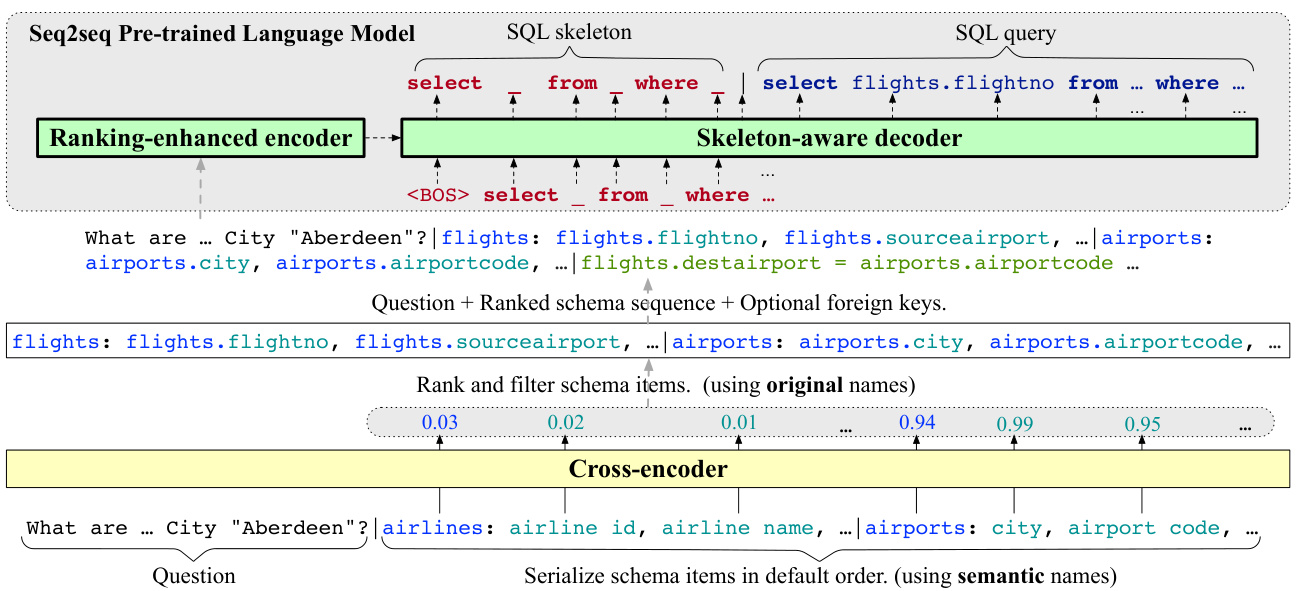

Following Shaw et al. (2021); Scholak, Schucher, and Bahdanau (2021), we treat Text-to-SQL as a translation task, which can be solved by an encoder-decoder transformer model. Facing the above problems, we extend the existing seq2seq Text-to-SQL methods by injecting the most relevant schema items in the input sequence and the SQL skeleton in the output sequence, which results in a ranking-enhanced encoder and a skeleton-aware decoder. We provide the highlevel overview of the proposed RESDSQL framework in Figure 2. The encoder of the seq2seq model receives the ranked schema sequence, such that the schema linking effort could be alleviated during SQL parsing. To obtain such a ranked schema sequence, an additional cross-encoder is proposed to classify the schema items according to the given question, and then we rank and filter them based on the classification probabilities. The decoder of the seq2seq model first parses out the SQL skeleton and then the actual SQL query, such that the SQL generation can be implicitly constrained by the previously parsed skeleton. By doing this, to a certain extent, the schema linking and the skeleton parsing are not intertwined but decoupled.

遵循 Shaw 等人 (2021) 和 Scholak、Schucher 与 Bahdanau (2021) 的研究思路,我们将文本到 SQL (Text-to-SQL) 视为翻译任务,可通过编码器-解码器 Transformer 模型解决。针对上述问题,我们通过以下方式扩展现有 seq2seq 文本到 SQL 方法:在输入序列中注入最相关的模式项,在输出序列中注入 SQL 骨架,从而形成排名增强的编码器和骨架感知的解码器。图 2 展示了所提 RESDSQL 框架的高层概览。该 seq2seq 模型的编码器接收排序后的模式序列,从而减轻 SQL 解析过程中的模式链接负担。为获得此类排序模式序列,我们提出额外交叉编码器来根据给定问题对模式项进行分类,并基于分类概率进行排序筛选。该 seq2seq 模型的解码器首先解析出 SQL 骨架,再生成实际 SQL 查询,使得 SQL 生成过程能隐式受先前解析骨架的约束。通过这种方式,模式链接与骨架解析在一定程度上实现解耦而非交织。

Ranking-Enhanced Encoder

Ranking-Enhanced Encoder

Instead of injecting all schema items, we only consider the most relevant schema items in the input of the encoder. For this purpose, we devise a cross-encoder to classify the tables and columns simultaneously and then rank them based on their probabilities. Based on the ranking order, on one hand, we filter out the irrelevant schema items. On the other hand, we use the ranked schema sequence instead of the unordered schema sequence, so that the seq2seq model could capture potential position information for schema linking.

我们不再注入所有模式项,而是仅考虑编码器输入中最相关的模式项。为此,我们设计了一个交叉编码器来同时分类表和列,并根据其概率进行排序。基于排序结果,一方面我们过滤掉不相关的模式项;另一方面,我们用排序后的模式序列替代无序模式序列,使序列到序列模型能够捕获模式链接的潜在位置信息。

Figure 2: An overview of the ranking-enhanced encoding and skeleton-aware decoding framework. We train a cross-encoder for classifying the schema items. Then we take the question, the ranked schema sequence, and optional foreign keys as the input of the ranking-enhanced encoder. The skeleton-aware decoder first decodes the SQL skeleton and then the actual SQL query.

图 2: 排序增强编码与骨架感知解码框架概览。我们训练交叉编码器 (cross-encoder) 对模式项进行分类,随后将问题、排序后的模式序列及可选外键作为排序增强编码器的输入。骨架感知解码器先解码 SQL 骨架,再生成实际 SQL 查询。

As for the input of the cross-encoder, we flatten the schema items into a schema sequence in their default order and concatenate it with the question to form an input sequence: X = q | t1 : c11, · · · , c1n1 | · · · | tN : c1N , · · · , cnNN , where $|$ is the delimiter. To better represent the semantics of schema items, instead of their original names, we use their semantic names which are closer to the natural expression.

至于交叉编码器 (cross-encoder) 的输入,我们将模式项按默认顺序展平为模式序列,并将其与问题连接起来形成输入序列:X = q | t1 : c11, · · · , c1n1 | · · · | tN : c1N , · · · , cnNN ,其中 $|$ 是分隔符。为了更好地表示模式项的语义,我们使用更接近自然表达的语义名称,而非它们的原始名称。

Encoding Module We feed $X$ into RoBERTa (Liu et al. 2019), an improved version of BERT (Devlin et al. 2019). Since each schema item will be tokenized into one or more tokens by PLM’s tokenizer (e.g., the column “airline id” will be split into two tokens: “airline” and “id”), and our target is to represent each schema item as a whole for classification, we need to pool the output embeddings belonging to each schema item. To achieve this goal, we use a pooling module that consists of a two-layer BiLSTM (Hochreiter and Schmid huber 1997) and a non-linear fully-connected layer. After pooling, each table embedding can be denoted by ${\mathbf{}}T_{i}\in$ $\mathbb{R}^{1\times d}$ $(i\in{1,...,N})$ and each column embedding can be denoted by $\mathbf{\dot{}}{k}^{i}\in\mathbb{R}^{1\times d}(i\in{1,...,N},k\in{1,...,n_{i}})$ , where $d$ denotes the hidden size.

编码模块

我们将 $X$ 输入到 RoBERTa (Liu et al. 2019) ,这是 BERT (Devlin et al. 2019) 的改进版本。由于每个模式项会被 PLM 的 tokenizer 切分为一个或多个 token (例如列名 "airline id" 会被拆分为两个 token:"airline" 和 "id") ,而我们的目标是将每个模式项作为整体进行分类表示,因此需要池化属于每个模式项的输出嵌入。为实现这一目标,我们使用由双层 BiLSTM (Hochreiter and Schmidhuber 1997) 和非线性全连接层组成的池化模块。池化后,每个表嵌入可表示为 ${\mathbf{}}T_{i}\in$ $\mathbb{R}^{1\times d}$ $(i\in{1,...,N})$ ,每个列嵌入可表示为 $\mathbf{\dot{}}{k}^{i}\in\mathbb{R}^{1\times d}(i\in{1,...,N},k\in{1,...,n_{i}})$ ,其中 $d$ 表示隐藏层大小。

Column-Enhanced Layer We observe that some questions only mention the column name rather than the table name. For example in Figure 1, the question mentions a column name “city”, but its corresponding table name “airports” is ignored. This table name missing issue may compromise the table classification performance. Therefore, we propose a column-enhanced layer to inject the column information into the corresponding table embedding. In this way, a table could be identified even if the question only mentions its columns. Concretely, for the $i$ -th table, we inject the column information $C_{:}^{i}\in\mathbb{R}^{n_{i}\times d}$ into the table embedding $\mathbf{\delta}{\mathbf{\delta}\mathbf{\delta}\mathbf{-}\mathbf{\delta}\mathbf{-}\mathbf{\delta}\mathbf{\delta}\mathbf{-}\mathbf{\delta}\mathbf{\delta}\mathbf{\delta}\mathbf{T}_{i}}$ by stacking a multi-head scaled dot-product attention layer (Vaswani et al. 2017) and a feature fusion layer on the top of the encoding module:

列增强层

我们注意到,某些问题仅提及列名而未提及表名。例如在图1中,问题提到列名"city",但忽略了对应的表名"airports"。这种表名缺失问题可能会影响表格分类性能。因此,我们提出列增强层,将列信息注入到对应的表格嵌入中。通过这种方式,即使问题仅提及列名也能识别表格。具体而言,对于第$i$个表格,我们通过在多头缩放点积注意力层 (Vaswani et al. 2017) 和特征融合层叠加编码模块,将列信息$C_{:}^{i}\in\mathbb{R}^{n_{i}\times d}$注入表格嵌入$\mathbf{\delta}{\mathbf{\delta}\mathbf{\delta}\mathbf{-}\mathbf{\delta}\mathbf{-}\mathbf{\delta}\mathbf{\delta}\mathbf{-}\mathbf{\delta}\mathbf{\delta}\mathbf{\delta}\mathbf{T}_{i}}$中:

$$

\begin{array}{r l}&{{\bf{\varPsi}}{i}^{C}=M u l t i H e a d A t t n({{T}{i}},{C_{:}^{i}},{C_{:}^{i}},h),}\ &{{\hat{{\cal T}{i}}}=N o r m({{T}{i}}+{{T}_{i}^{C}}).}\end{array}

$$

$$

\begin{array}{r l}&{{\bf{\varPsi}}{i}^{C}=M u l t i H e a d A t t n({{T}{i}},{C_{:}^{i}},{C_{:}^{i}},h),}\ &{{\hat{{\cal T}{i}}}=N o r m({{T}{i}}+{{T}_{i}^{C}}).}\end{array}

$$

Here, $\pmb{T}{i}$ acts as the query and $C^{\bar{\iota}}$ acts as both the key and the value, $h$ is the number of heads, and $N o r m(\cdot)$ is a rowwise $L_{2}$ normalization function. $\pmb{T}{i}^{C}$ represents the columnattentive table embedding. We fuse the original table embedding $\pmb{T}{i}$ and the column-attentive table embedding $\pmb{T}{i}^{C}$ to obtain the column-enhanced table embedding $\hat{\pmb{T}}_{i}\in\mathbb{R}^{1\times d}$ .

这里,$\pmb{T}{i}$ 作为查询(query),$C^{\bar{\iota}}$ 同时作为键(key)和值(value),$h$ 是头数(head number),$Norm(\cdot)$ 是行级(rowwise) $L_{2}$ 归一化函数。$\pmb{T}{i}^{C}$ 表示列注意力表嵌入(column-attentive table embedding)。我们将原始表嵌入 $\pmb{T}{i}$ 与列注意力表嵌入 $\pmb{T}{i}^{C}$ 融合,得到列增强表嵌入 $\hat{\pmb{T}}_{i}\in\mathbb{R}^{1\times d}$。

Loss Function of Cross-Encoder Cross-entropy loss is a well-adopted loss function in classification tasks. However, since a SQL query usually involves only a few tables and columns in the database, the label distribution of the training set is highly imbalanced. As a result, the number of negative examples is many times that of positive examples, which will induce serious training bias. To alleviate this issue, we employ the focal loss (Lin et al. 2017) as our classification loss. Then, we form the loss function of the cross-encoder in a multi-task learning way, which consists of both the table classification loss and the column classification loss, i.e.,

交叉编码器的损失函数

交叉熵损失是分类任务中广泛采用的损失函数。然而,由于SQL查询通常仅涉及数据库中的少量表和列,训练集的标签分布高度不平衡。因此,负样本数量往往是正样本的数十倍,这会导致严重的训练偏差。为缓解此问题,我们采用焦点损失 (focal loss) (Lin et al. 2017) 作为分类损失。随后,我们以多任务学习的方式构建交叉编码器的损失函数,该函数同时包含表分类损失和列分类损失,即

$$

\mathcal{L}{1}=\frac{1}{N}\sum_{i=1}^{N}F L(y_{i},\hat{y}{i})+\frac{1}{M}\sum_{i=1}^{N}\sum_{k=1}^{n_{i}}F L(y_{k}^{i},\hat{y}_{k}^{i}),

$$

$$

\mathcal{L}{1}=\frac{1}{N}\sum_{i=1}^{N}F L(y_{i},\hat{y}{i})+\frac{1}{M}\sum_{i=1}^{N}\sum_{k=1}^{n_{i}}F L(y_{k}^{i},\hat{y}_{k}^{i}),

$$

where $F L$ denotes the focal loss function and $y_{i}$ is the ground truth label of the $i$ -th table. $y_{i}~=~1$ indicates the table is referenced by the SQL query and 0 otherwise. $y_{k}^{i}$ is the ground truth label of the $k$ -th column in the $i$ -th table.

其中 $FL$ 表示焦点损失函数,$y_{i}$ 是第 $i$ 个表格的真实标签。$y_{i}~=~1$ 表示该表格被 SQL 查询引用,否则为 0。$y_{k}^{i}$ 是第 $i$ 个表格中第 $k$ 列的真实标签。

Similarly, $y_{k}^{i}=1$ indicates the column is referenced by the SQL query and 0 otherwise. $\hat{y}{i}$ and $\hat{y}{k}^{i}$ are predicted probabilities, which are estimated by two different MLP modules based on the table and column embeddings $\hat{\pmb{T}}{i}$ and $C_{k}^{i}$ :

同样,$y_{k}^{i}=1$ 表示该列被SQL查询引用,否则为0。$\hat{y}{i}$ 和 $\hat{y}{k}^{i}$ 是预测概率,由两个不同的MLP模块基于表嵌入 $\hat{\pmb{T}}{i}$ 和列嵌入 $C_{k}^{i}$ 估算得出:

$$

\begin{array}{r c l}{\hat{y}{i}}&{=}&{\sigma((\hat{T}{i}U_{1}^{t}+b_{1}^{t})U_{2}^{t}+b_{2}^{t}),}\ {\hat{y}{k}^{i}}&{=}&{\sigma((C_{k}^{i}U_{1}^{c}+b_{1}^{c})U_{2}^{c}+b_{2}^{c}),}\end{array}

$$

$$

\begin{array}{r c l}{\hat{y}{i}}&{=}&{\sigma((\hat{T}{i}U_{1}^{t}+b_{1}^{t})U_{2}^{t}+b_{2}^{t}),}\ {\hat{y}{k}^{i}}&{=}&{\sigma((C_{k}^{i}U_{1}^{c}+b_{1}^{c})U_{2}^{c}+b_{2}^{c}),}\end{array}

$$

where $U_{1}^{t}$ , $U_{1}^{c}\in\mathbb{R}^{d\times w}$ , $b_{1}^{t}$ , $b_{1}^{c}\in\mathbb{R}^{w}$ , $U_{2}^{t}$ , $U_{2}^{c}\in\mathbb{R}^{w\times2},$ , $b_{2}^{t}$ , $b_{2}^{c}\in\mathbb{R}^{2}$ are trainable parameters, and $\sigma(\cdot)$ denotes Softmax.

其中 $U_{1}^{t}$ , $U_{1}^{c}\in\mathbb{R}^{d\times w}$ , $b_{1}^{t}$ , $b_{1}^{c}\in\mathbb{R}^{w}$ , $U_{2}^{t}$ , $U_{2}^{c}\in\mathbb{R}^{w\times2},$ , $b_{2}^{t}$ , $b_{2}^{c}\in\mathbb{R}^{2}$ 是可训练参数,$\sigma(\cdot)$ 表示Softmax函数。

Prepare Input for Ranking-Enhanced Encoder During inference, for each Text-to-SQL instance, we leverage the above-trained cross-encoder to compute a probability for each schema item. Then, we only keep top $k_{1}$ tables in the database and top $\cdot k_{2}$ columns for each remained table to form a ranked schema sequence. $k_{1}$ and $k_{2}$ are two important hyper-parameters. When $k_{1}$ or $k_{2}$ is too small, a portion of the required tables or columns may be excluded, which is fatal for the subsequent seq2seq model. As $k_{1}$ or $k_{2}$ becomes larger, more and more irrelevant tables or columns may be introduced as noise. Therefore, we need to choose appropriate values for $k_{1}$ and $k_{2}$ to ensure a high recall while preventing the introduction of too much noise. The input sequence for the ranking-enhanced encoder (i.e., seq2seq model’s encoder) is formed as the concatenation of the question, the ranked schema sequence, and optional foreign key relations (see Figure 2). Foreign key relations contain rich information about the structure of the database, which could promote the generation of the JOIN ON clauses. In the ranked schema sequence, we use the original names instead of the semantic names because the schema items in the SQL queries are represented by their original names, and using the former will facilitate the decoder to directly copy required schema items from the input sequence.

为排序增强编码器准备输入

在推理阶段,对于每个Text-to-SQL实例,我们利用上述训练好的交叉编码器计算每个模式项的概率。然后仅保留数据库中top $k_{1}$ 表和每个保留表中top $\cdot k_{2}$ 列,形成排序后的模式序列。$k_{1}$ 和 $k_{2}$ 是两个重要超参数:当$k_{1}$ 或 $k_{2}$ 过小时,可能排除部分必需的表或列,这对后续seq2seq模型是致命的;而当$k_{1}$ 或 $k_{2}$ 过大时,会引入越来越多无关表或列作为噪声。因此需要为$k_{1}$ 和 $k_{2}$ 选择合适的值,在保证高召回率的同时避免引入过多噪声。

排序增强编码器(即seq2seq模型的编码器)的输入序列由问题文本、排序后的模式序列及可选的外键关系组成(见图2)。外键关系包含数据库结构的丰富信息,可促进JOIN ON子句的生成。在排序模式序列中,我们使用原始名称而非语义名称,因为SQL查询中的模式项均以其原始名称表示,这样能使解码器更直接从输入序列复制所需模式项。

Skeleton-Aware Decoder

Skeleton-Aware Decoder

Most seq2seq Text-to-SQL methods tell the decoder to generate the target SQL query directly. However, the apparent gap between the natural language and the SQL query makes it difficult to perform the correct generation. To alleviate this problem, we would like to decompose the SQL generation into two steps: (1) generate the SQL skeleton based on the semantics of the question, and then (2) select the required “data” (i.e., tables, columns, and values) from the input sequence to fill the slots in the skeleton.

大多数seq2seq文本到SQL方法直接让解码器生成目标SQL查询。然而,自然语言与SQL查询之间的显著差距使得正确生成变得困难。为了缓解这个问题,我们建议将SQL生成分解为两个步骤:(1) 根据问题语义生成SQL骨架,然后(2) 从输入序列中选择所需的"数据"(即表、列和值)来填充骨架中的槽位。

To realize the above decomposition idea without adding additional modules, we propose a new generation objective based on the intrinsic characteristic of the transformer decoder, which generates the $t$ -th token depending on not only the output of the encoder but also the output of the decoder before the $t$ -th time step (Vaswani et al. 2017). Concretely, instead of decoding the target SQL directly, we encourage the decoder to first decode the skeleton of the SQL query, and based on this, we continue to decode the SQL query.

为实现上述分解思路而不增加额外模块,我们基于Transformer解码器的固有特性提出了一种新的生成目标:生成第$t$个token时不仅依赖编码器输出,还依赖于第$t$个时间步之前的解码器输出 (Vaswani et al. 2017)。具体而言,我们不直接解码目标SQL,而是引导解码器先解码SQL查询的骨架结构,在此基础上继续解码完整SQL查询。

By parsing the skeleton first and then parsing the SQL query, at each decoding step, SQL generation will be easier because the decoder could either copy a “data” from the input sequence or a SQL keyword from the previously parsed skeleton. Now, the objective of the seq2seq model is:

通过先解析骨架再解析SQL查询,在每一个解码步骤中,SQL生成会变得更加容易,因为解码器既可以从输入序列中复制一个"数据",也可以从先前解析的骨架中复制一个SQL关键字。此时,seq2seq模型的目标是:

Table 1: An example from Spider. Here, Q, $\mathrm{SQL}{o}$ , $\operatorname{SQL}{n}$ , and $\mathrm{SQL}_{s}$ denote the question, the original SQL query, the normalized SQL query, and the SQL skeleton, respectively.

表 1: Spider 中的示例。其中 Q、$\mathrm{SQL}{o}$、$\operatorname{SQL}{n}$ 和 $\mathrm{SQL}_{s}$ 分别表示问题、原始 SQL 查询、规范化 SQL 查询和 SQL 骨架。

| List the duration, file size and format of songs whose genre is pop, ordered by title? SELECT T1.duration, T1.file_size,T1.formats | |

|---|---|

| SQLo | FROM files AS T1 JOIN song AS T2 ON T1.fid = T2.fid WHERE T2.genre-is = "pop" ORDER BY |

| SQLn | tfiles.duration,f files.file_size,files.formats from files join song on files.fid = song.fid where song.genre_is = 'pop' order by song.song-name asc |

| SQLs | select_from_where_orderby-asc |

$$

\mathcal{L}{2}=\frac{1}{G}\sum_{i=1}^{G}p(l_{i}^{s},l_{i}|S_{i}),

$$

$$

\mathcal{L}{2}=\frac{1}{G}\sum_{i=1}^{G}p(l_{i}^{s},l_{i}|S_{i}),

$$

where $G$ is the number of Text-to-SQL instances, $S_{i}$ is the input sequence of the $i$ -th instance which consists of the question, the ranked schema sequence, and optional foreign key relations. $l_{i}$ denotes the $i$ -th target SQL query and $\mathit{l}{i}^{s}$ is the skeleton extracted from $l_{i}$ . We will present some necessary details on how to normalize SQL queries and how to extract their skeletons.

其中 $G$ 是 Text-to-SQL 实例的数量,$S_{i}$ 是第 $i$ 个实例的输入序列,由问题、排序后的模式序列和可选的外键关系组成。$l_{i}$ 表示第 $i$ 个目标 SQL 查询,$\mathit{l}{i}^{s}$ 是从 $l_{i}$ 中提取的骨架。我们将介绍如何规范化 SQL 查询以及如何提取其骨架的一些必要细节。

SQL Normalization The Spider dataset is manually created by 11 annotators with different annotation habits, which results in slightly different styles among the final annotated SQL queries, such as uppercase versus lowercase keywords. Although different styles have no impact on the execution results, the model requires some extra effort to learn and adapt to them. To reduce the learning difficulty, we normalize the original SQL queries before training by (1) unifying the keywords and schema items into lowercase, (2) adding spaces around parentheses and replacing double quotes with single quotes, (3) adding an ASC keyword after the ORDER BY clause if it does not specify the order, and (4) removing the AS clause and replacing all table aliases with their original names. We present an example in Table 1.

SQL规范化

Spider数据集由11名具有不同标注习惯的标注者手动创建,这导致最终标注的SQL查询在风格上略有差异,例如关键字的大小写不一致。虽然不同风格对执行结果没有影响,但模型需要额外学习并适应这些差异。为了降低学习难度,我们在训练前对原始SQL查询进行了规范化处理:(1) 将关键字和模式项统一为小写,(2) 在括号周围添加空格并将双引号替换为单引号,(3) 在未指定排序方向的ORDER BY子句后添加ASC关键字,(4) 移除AS子句并将所有表别名替换为原始名称。表1展示了一个示例。

SQL Skeleton Extraction Based on the normalized SQL queries, we can extract their skeletons which only contain SQL keywords and slots. Specifically, given a normalized SQL query, we keep its keywords and replace the rest parts with slots. Note that we do not keep the JOIN ON keyword because it is difficult to find a counterpart from the question (Gan et al. 2021b). As shown in Table 1, although the original SQL query looks complex, its skeleton is simple and each keyword can find a counterpart from the question. For example, “order by asc” in the skeleton can be inferred from “ordered by title?” in the question.

基于归一化SQL查询的骨架提取

根据归一化后的SQL查询,我们可以提取仅包含SQL关键词和占位槽的骨架。具体而言,给定一个归一化SQL查询时,我们保留其关键词并将剩余部分替换为占位槽。需注意我们不保留JOIN ON关键词,因为难以从问题中找到对应表述 (Gan et al. 2021b)。如表1所示,虽然原始SQL查询看似复杂,但其骨架十分简洁,每个关键词都能从问题中找到对应表述。例如骨架中的"order by asc"可从问题中的"ordered by title?"推断而来。

Execution-Guided SQL Selector Since we do not constrain the decoder with SQL grammar, the model may generate some illeg