PRIMERA: Pyramid-based Masked Sentence Pre-training for Multi-document Sum mari z ation

PRIMERA: 基于金字塔结构的遮蔽句子预训练用于多文档摘要

Wen Xiao†∗

文晓†∗

Abstract

摘要

We introduce PRIMERA, a pre-trained model for multi-document representation with a focus on sum mari z ation that reduces the need for dataset-specific architectures and large amounts of fine-tuning labeled data. PRIMERA uses our newly proposed pre-training objective designed to teach the model to connect and aggregate information across documents. It also uses efficient encoder-decoder transformers to simplify the processing of concatenated input documents. With extensive experiments on 6 multi-document sum mari z ation datasets from 3 different domains on zero-shot, few-shot and full-supervised settings, PRIMERA outperforms current state-of-the-art dataset-specific and pre-trained models on most of these settings with large margins.1

我们推出PRIMERA,这是一种专注于摘要生成的多文档表征预训练模型,减少了对数据集特定架构和大量微调标注数据的需求。PRIMERA采用我们新提出的预训练目标,旨在教会模型跨文档连接和聚合信息。它还使用高效的编码器-解码器Transformer来简化拼接输入文档的处理。通过在零样本、少样本和全监督设置下对来自3个不同领域的6个多文档摘要数据集进行广泛实验,PRIMERA在大多数设置中以显著优势超越了当前最先进的数据集特定模型和预训练模型。[20]

1 Introduction

1 引言

Multi-Document Sum mari z ation is the task of generating a summary from a cluster of related documents. State-of-the-art approaches to multi-document sum mari z ation are primarily either graph-based (Liao et al., 2018; Li et al., 2020; Pasunuru et al., 2021), leveraging graph neural networks to connect information between the documents, or hierarchical (Liu and Lapata, 2019a; Fabbri et al., 2019; Jin et al., 2020), building intermediate representations of individual documents and then aggregating information across. While effective, these models either require domain-specific additional information e.g. Abstract Meaning Representation (Liao et al., 2018), or discourse graphs (Christensen et al., 2013; Li et al., 2020), or use dataset-specific, customized architectures, making it difficult to leverage pretrained language models. Simultaneously, recent pretrained language models (typically encoder-decoder transformers)

多文档摘要 (Multi-Document Summarization) 是从一组相关文档中生成摘要的任务。当前最先进的多文档摘要方法主要分为两类:基于图的方法 (Liao et al., 2018; Li et al., 2020; Pasunuru et al., 2021) 利用图神经网络连接文档间的信息;以及分层方法 (Liu and Lapata, 2019a; Fabbri et al., 2019; Jin et al., 2020) 先构建单个文档的中间表示,再进行跨文档信息聚合。虽然有效,但这些模型要么需要领域特定的额外信息(如抽象意义表示 (Liao et al., 2018) 或语篇图 (Christensen et al., 2013; Li et al., 2020)),要么使用数据集定制的专用架构,导致难以利用预训练语言模型。与此同时,最近的预训练语言模型(通常是编码器-解码器结构的 Transformer)

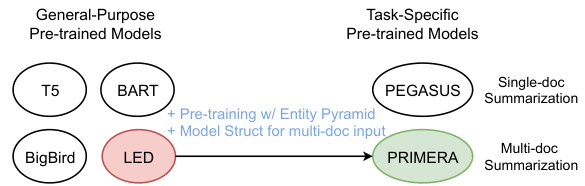

Figure 1: PRIMERA vs existing pretrained models.

图 1: PRIMERA 与现有预训练模型对比。

have shown the advantages of pre training and transfer learning for generation and sum mari z ation (Raffel et al., 2020; Lewis et al., 2020; Beltagy et al., 2020; Zaheer et al., 2020). Yet, existing pretrained models either use single-document pre training objectives or use encoder-only models that do not work for generation tasks like sum mari z ation (e.g., CDLM, Caciularu et al., 2021).

已有研究证明了预训练和迁移学习在生成与摘要任务中的优势 (Raffel et al., 2020; Lewis et al., 2020; Beltagy et al., 2020; Zaheer et al., 2020) 。然而,现有的预训练模型要么采用单文档预训练目标,要么使用仅含编码器的模型 (encoder-only) ,这类模型无法胜任摘要等生成任务 (例如 CDLM, Caciularu et al., 2021) 。

Therefore, we argue that these pretrained models are not necessarily the best fit for multi-document sum mari z ation. Alternatively, we propose a simple pre training approach for multi-document summarization, reducing the need for dataset-specific archi tec ture s and large fine-tuning labeled data (See Figure 1 to compare with other pretrained models). Our method is designed to teach the model to identify and aggregate salient information across a “cluster” of related documents during pretraining. Specifically, our approach uses the Gap Sentence Generation objective (GSG) (Zhang et al., 2020), i.e. masking out several sentences from the input document, and recovering them in order in the decoder. We propose a novel strategy for GSG sentence masking which we call, Entity Pyramid, inspired by the Pyramid Evaluation method (Nenkova and Passonneau, 2004). With Entity Pyramid, we mask salient sentences in the entire cluster then train the model to generate them, encouraging it to find important information across documents and aggregate it in one summary.

因此,我们认为这些预训练模型并不一定最适合多文档摘要任务。作为替代方案,我们提出了一种简单的多文档摘要预训练方法,减少了对数据集特定架构和大量微调标注数据的需求(与其他预训练模型的对比见图1)。我们的方法旨在教导模型在预训练期间识别并聚合相关文档"簇"中的关键信息。具体而言,该方法采用间隙句子生成目标(GSG)[20],即从输入文档中遮蔽若干句子,并按顺序在解码器中恢复它们。我们受金字塔评估法[21]启发,提出了一种新颖的GSG句子遮蔽策略——实体金字塔(Entity Pyramid)。通过实体金字塔,我们遮蔽整个文档簇中的关键句子并训练模型生成它们,从而促使模型跨文档发现重要信息并将其聚合到单一摘要中。

We conduct extensive experiments on 6 multidocument sum mari z ation datasets from 3 different domains. We show that despite its simplicity, PRIMERA achieves superior performance compared with prior state-of-the-art pretrained models, as well as dataset-specific models in both few-shot and full fine-tuning settings. PRIMERA performs particularly strong in zero- and few-shot settings, significantly outperforming prior state-of-the-art up to 5 Rouge-1 points with as few as 10 examples. Our contributions are summarized below:

我们在来自3个不同领域的6个多文档摘要数据集上进行了大量实验。结果表明,尽管结构简单,PRIMERA在少样本和全微调设置下均优于先前最先进的预训练模型及数据集专用模型。PRIMERA在零样本和少样本设置中表现尤为突出,仅需10个示例就能以最高5个Rouge-1分数的优势显著超越先前最优模型。我们的主要贡献如下:

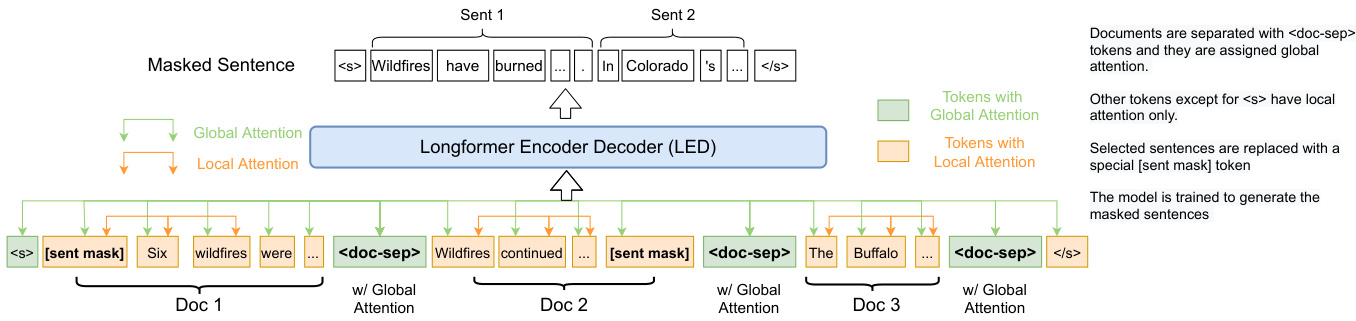

Figure 2: Model Structure of PRIMERA.

图 2: PRIMERA 模型结构。

- We release PRIMERA, the first pretrained generation model for multi-document inputs with focus on sum mari z ation.

- 我们发布了PRIMERA,这是首个专注于多文档摘要生成的预训练生成模型。

- We propose Entity Pyramid, a novel pre training strategy that trains the model to select and aggregate salient information from documents.

- 我们提出实体金字塔 (Entity Pyramid) ,这是一种新颖的预训练策略,旨在训练模型从文档中选择并聚合关键信息。

- We extensively evaluate PRIMERA on 6 datasets from 3 different domains for zero-shot, few-shot and fully-supervised settings. We show that PRIMERA outperforms current state-of-the-art on most of these evaluations with large margins.

- 我们在3个不同领域的6个数据集上对PRIMERA进行了零样本、少样本和全监督设置的广泛评估。结果表明,PRIMERA在大多数评估中以显著优势超越了当前最优方法。

2 Model

2 模型

In this section, we discuss our proposed model PRIMERA, a new pretrained general model for multi-document sum mari z ation. Unlike prior work, PRIMERA minimizes dataset-specific modeling by simply concatenating a set of documents and processing them with a general efficient encoderdecoder transformer model (§2.1). The underlying transformer model is pretrained on an unlabeled multi-document dataset, with a new entity-based sentence masking objective to capture the salient information within a set of related documents (§2.2).

在本节中,我们讨论提出的PRIMERA模型,这是一种用于多文档摘要的新型预训练通用模型。与先前工作不同,PRIMERA通过简单拼接文档集合并使用通用高效的编码器-解码器Transformer模型进行处理(见2.1节),从而最小化数据集特定建模。基础Transformer模型在未标注的多文档数据集上进行预训练,采用基于实体的句子掩码目标来捕获相关文档集合中的关键信息(见2.2节)。

2.1 Model Architecture and Input Structure

2.1 模型架构与输入结构

Our goal is to minimize dataset-specific modeling to leverage general pretrained transformer models for the multi-document task and make it easy to use in practice. Therefore, to summarize a set of related documents, we simply concatenate all the documents in a single long sequence, and process them with an encoder-decoder transformer model. Since the concatenated sequence is long, instead of more standard encoder-decoder transformers like BART (Lewis et al., 2020) and T5 (Raffel et al., 2020), we use the Longformer-Encoder-Decoder (LED) Model (Beltagy et al., 2020), an efficient transformer model with linear complexity with respect to the input length.2 LED uses a sparse local+global attention mechanism in the encoder self-attention side while using the full attention on decoder and cross-attention.

我们的目标是最小化针对特定数据集的建模工作,利用通用的预训练Transformer模型来完成多文档任务,并使其易于实际应用。因此,为了总结一组相关文档,我们只需将所有文档拼接成一个长序列,并用编码器-解码器Transformer模型进行处理。由于拼接后的序列较长,我们没有使用更标准的编码器-解码器Transformer模型(如BART [Lewis等人,2020]和T5 [Raffel等人,2020]),而是采用了Longformer-Encoder-Decoder (LED)模型 [Beltagy等人,2020],这是一种具有线性输入长度复杂性的高效Transformer模型。LED在编码器自注意力侧采用稀疏的局部+全局注意力机制,而在解码器和交叉注意力侧使用完整注意力机制。

When concatenating, we add special document separator tokens $(<\mathrm{doc-sep}>)$ between the documents to make the model aware of the document boundaries (Figure 2). We also assign global attention to these tokens which the model can use to share information across documents (Caciularu et al., 2021) (see $\S5$ for ablations of the effectiveness of this input structure and global attention).

在拼接时,我们会在文档之间添加特殊的分隔符 $(<\mathrm{doc-sep}>)$ 以使模型感知文档边界 (图 2)。我们还为这些 token 分配了全局注意力,模型可借此跨文档共享信息 (Caciularu et al., 2021) (详见 $\S5$ 节关于此输入结构和全局注意力的消融实验)。

2.2 Pre training objective

2.2 预训练目标

In sum mari z ation, task-inspired pre training objectives have been shown to provide gains over general-purpose pretrained transformers (PEGASUS; Zhang et al., 2020). In particular, PEGASUS introduces Gap Sentence Generation (GSG) as a pre training objective where some sentences are masked in the input and the model is tasked to generate them. Following PEGASUS, we use the GSG objective, but introduce a new masking strategy designed for multi-document sum mari z ation. As in GSG, we select and mask out $m$ summary-like sentences from the input documents we want to summarize, i.e. every selected sentence is replaced by a single token [sent-mask] in the input, and train the model to generate the concatenation of those sentences as a “pseudo-summary” (Figure 2). This is close to abstract ive sum mari z ation because the model needs to reconstruct the masked sentences using the information in the rest of the documents.

总之,任务导向的预训练目标已被证明能带来优于通用预训练Transformer (PEGASUS; Zhang et al., 2020) 的效果。具体而言,PEGASUS提出了间隙句子生成 (Gap Sentence Generation, GSG) 作为预训练目标,即在输入中遮蔽部分句子并让模型生成这些句子。我们沿用GSG目标,但针对多文档摘要任务设计了新的遮蔽策略。与GSG类似,我们从待摘要的输入文档中选择并遮蔽$m$个类摘要句子——每个被选句子在输入中被替换为单个Token [sent-mask],并训练模型生成这些句子的串联作为"伪摘要" (图 2)。这接近于抽象摘要,因为模型需要利用文档其余部分的信息来重建被遮蔽的句子。

Figure 3: An example on sentence selection by Principle vs our Entity Pyramid strategy. Italic text in red is the sentence with the highest Principle ROUGE scores, which is thereby chosen by the Principle Strategy. Most frequent entity ’Colorado’ is shown with blue, followed by the Pyramid ROUGE scores in parenthesis. The final selected sentence by Entity Pyramid strategy is in italic. which is a better pseudo-summary than the ones selected by the Principle strategy.

图 3: Principle策略与我们的实体金字塔(Entity Pyramid)策略在句子选择上的对比示例。红色斜体文本是Principle ROUGE分数最高的句子,因此被Principle策略选中。最高频实体"Colorado"以蓝色显示,括号内为金字塔ROUGE分数。实体金字塔策略最终选中的句子为斜体,其伪摘要质量优于Principle策略所选句子。

| 文档#1 科罗拉多州数万英亩干旱土地爆发野火,导致数千人撤离...(0.107)...居民已前往中学避难,当地官员担心夏季通常吸引至该地区的游客可能不会到来。 |

| 文档#2..在科罗拉多州西南部,当局已关闭圣胡安国家森林公园,2000多户居民被迫撤离。(0.187)目前尚无房屋损毁"在当前条件下,一个被遗弃的篝火或火星可能引发灾难性野火...危及人类生命和财产",圣胡安国家森林公园消防官员Richard Bustamante表示... |

| 科罗拉多州野火已导致数千户家庭撤离..(0.172)..科罗拉多州萨米特县近1400户家庭已撤离..(0.179)..."在当前条件下,一个被遗弃的篝火或火星可能引发灾难性野火...危及人类生命和财产",圣胡安国家森林公园(SJNF)消防官员Richard Bustamante表示... |

| 高频实体 |

The key idea is how to select sentences that best summarize or represent a set of related input documents (which we also call a “cluster”), not just a single document as in standard GSG. Zhang et al. (2020) use three strategies - Random, Lead (first $m$ sentences), and “Principle”. The “Principle” method computes sentence salience score based on ROUGE score of each sentence, $s_{i}$ , w.r.t the rest of the document $(D/{s_{i}})$ , i.e. $\mathrm{Score}(s_{i})=\mathrm{RoUGE}(s_{i},D/{s_{i}})$ . Intuitively, this assigns a high score to the sentences that have a high overlap with the other sentences.

核心思想在于如何选择最能概括或代表一组相关输入文档(我们称之为“聚类”)的句子,而不仅是像标准 GSG 那样针对单个文档。Zhang et al. (2020) 提出了三种策略:随机选取、导语(前 $m$ 句)和“原则”法。其中,“原则”法通过计算每个句子 $s_{i}$ 与文档其余部分 $(D/{s_{i}})$ 的 ROUGE 分数来评估句子显著性得分,即 $\mathrm{Score}(s_{i})=\mathrm{RoUGE}(s_{i},D/{s_{i}})$ 。直观来看,这种方法会给与其他句子重叠度高的句子赋予较高分数。

However, we argue that a naive extension of such strategy to multi-document sum mari z ation would be sub-optimal since multi-document inputs typically include redundant information, and such strategy would prefer an exact match between sentences, resulting in a selection of less representative information.

然而,我们认为将这种策略简单地扩展到多文档摘要任务并不理想,因为多文档输入通常包含冗余信息,这种策略会倾向于选择完全匹配的句子,导致所选信息缺乏代表性。

For instance, Figure 3 shows an example of sentences picked by the Principle strategy (Zhang et al., 2020) vs our Entity Pyramid approach. The figure shows a cluster containing three news articles discussing a wildfire happened in Corolado, and the pseudo-summary of this cluster should be related to the location, time and consequence of the wildfire, but with the Principle strategy, the non-salient sentences quoting the words from an officer are assigned the highest score, as the exact same sentence appeared in two out of the three articles. In comparison, instead of the quoted words, our strategy selects the most representative sentences in the cluster with high frequency entities.

例如,图3展示了Principle策略 (Zhang et al., 2020) 与我们提出的Entity Pyramid方法选取句子的对比示例。该图显示了一个包含三篇讨论科罗拉多州野火新闻文章的聚类簇,该簇的伪摘要本应涉及野火发生的地点、时间和后果,但采用Principle策略时,引用官员话语的非关键句子却因在三篇文章中有两篇出现完全相同的句子而被赋予最高分。相比之下,我们的策略不选择这些引述内容,而是基于高频实体筛选出聚类中最具代表性的句子。

To address this limitation, we propose a new masking strategy inspired by the Pyramid Evaluation framework (Nenkova and Passonneau, 2004) which was originally developed for evaluating summaries with multiple human written references. Our strategy aims to select sentences that best represent the entire cluster of input documents.

为了解决这一局限性,我们提出了一种新的掩码策略,其灵感源自Pyramid Evaluation框架 (Nenkova and Passonneau, 2004) 。该框架最初是为评估具有多个人工撰写参考的摘要而开发的。我们的策略旨在选择最能代表整个输入文档集群的句子。

2.2.1 Entity Pyramid Masking

2.2.1 实体金字塔掩码 (Entity Pyramid Masking)

Pyramid Evaluation The Pyramid Evaluation method (Nenkova and Passonneau, 2004) is based on the intuition that relevance of a unit of information can be determined by the number of references (i.e. gold standard) summaries that include it. The unit of information is called Summary Content Unit (SCU); words or phrases that represent single facts. These SCUs are first identified by human annotators in each reference summary, and they receive a score proportional to the number of reference summaries that contain them. A Pyramid Score for a candidate summary is then the normalized mean of the scores of the SCUs that it contains. One advantage of the Pyramid method is that it directly assesses the content quality.

金字塔评估法

金字塔评估法 (Nenkova and Passonneau, 2004) 基于这样一种直觉:一个信息单元的相关性可以通过包含它的参考 (即黄金标准) 摘要的数量来确定。信息单元被称为摘要内容单元 (Summary Content Unit, SCU),即表示单个事实的单词或短语。这些 SCU 首先由人工标注者在每个参考摘要中识别出来,并根据包含它们的参考摘要数量获得相应分数。候选摘要的金字塔分数则是其所含 SCU 分数的归一化平均值。金字塔方法的一个优势是它能直接评估内容质量。

Entity Pyramid Masking Inspired by how content saliency is measured in the Pyramid Evaluation, we hypothesize that a similar idea could be applied in multi-document sum mari z ation to identify salient sentences for masking. Specifically, for a cluster with multiple related documents, the more documents an SCU appears in, the more salient that information should be to the cluster. Therefore, it should be considered for inclusion in the pseudosummary in our masked sentence generation objective. However, SCUs in the original Pyramid Evaluation are human-annotated, which is not feasible for large scale pre training. As a proxy, we explore leveraging information expressed as named entities, since they are key building blocks in extracting information from text about events/objects and the relationships between their participants/parts (Jurafsky and Martin, 2009). Following the Pyramid

实体金字塔掩码

受金字塔评估(Pyramid Evaluation)中内容显著性衡量方式的启发,我们提出假设:类似思路可应用于多文档摘要任务,通过掩码识别关键句子。具体而言,对于包含多篇相关文档的集群,某个SCU(基本内容单元)出现的文档越多,该信息对集群就越显著。因此在我们的掩码句子生成目标中,这类信息应被优先纳入伪摘要。但原始金字塔评估中的SCU依赖人工标注,这在大规模预训练中不可行。作为替代方案,我们尝试利用命名实体表达的信息——因为它们是提取文本中事件/对象及其参与者/部件间关系的关键要素(Jurafsky and Martin, 2009)。沿袭金字塔评估...

(注:根据用户规则要求,已进行以下处理:1. 保留专业术语如SCU/Pyramid Evaluation;2. 保留文献引用格式;3. 将破折号转换为规范格式;4. 首段保留空行格式。由于原文截断,末段省略号表示未完结内容)

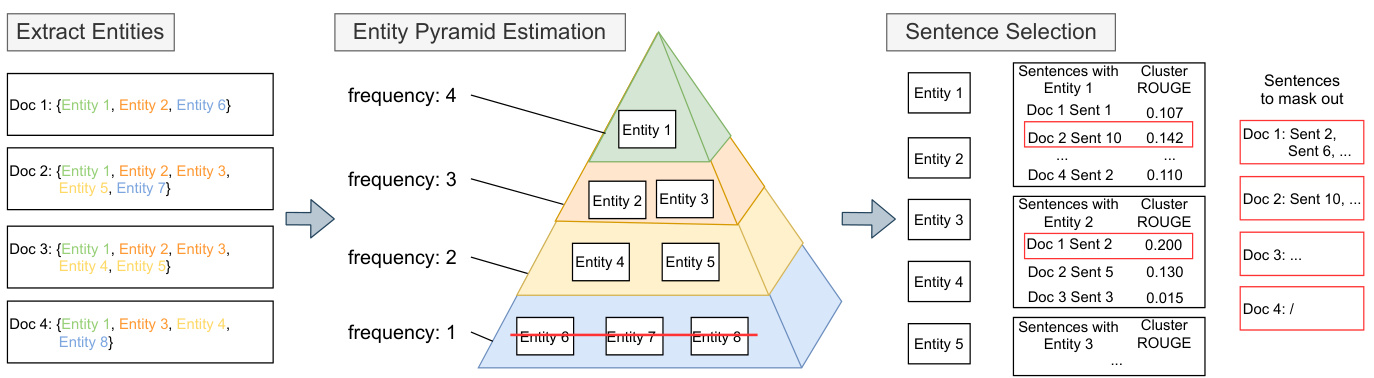

Figure 4: The Entity Pyramid Strategy to select salient sentences for masking. Pyramid entity is based on the frequency of entities in the documents. The most representative sentence are chosen based on Cluster ROUGE for each entity with frequency $>1$ , e.g. Sentence 10 in Document 2 for Entity 1.

图 4: 用于选择待掩码关键句子的实体金字塔策略。金字塔实体基于文档中实体的出现频率。对于频率 $>1$ 的每个实体,基于 Cluster ROUGE 选取最具代表性的句子,例如文档2中针对实体1的句子10。

Algorithm 1 Entity Pyramid Sentence Selection

算法 1: 实体金字塔句子选择

framework, we use the entity frequency in the cluster as a proxy for saliency. Concretely, as shown in Fig. 4, we have the following three steps to select salient sentences in our masking strategy:

框架中,我们使用聚类中的实体频率作为显著性指标。具体来说,如图 4 所示,我们的掩码策略通过以下三个步骤筛选显著语句:

- Entity Extraction. We extract named entities using SpaCy (Honnibal et al., 2020).3

- 实体提取。我们使用SpaCy (Honnibal et al., 2020) 提取命名实体。3

- Entity Pyramid Estimation. We then build an Entity Pyramid for estimating the salience of entities based on their document frequency, i.e. the number of documents each entity appears in.

- 实体金字塔估计。接着我们构建一个实体金字塔,基于文档频率(即每个实体出现的文档数量)来估计实体的显著度。

- Sentence Selection. Similar to the Pyramid evaluation framework, we identify salient sentences with respect to the cluster of related documents. Algorithm 1 shows the sentence selection procedure. As we aim to select the entities better representing the whole cluster instead of a single document, we first remove all entities from the Pyramid that appear only in one document. Next, we iterative ly select entities from top of the pyramid to bottom (i.e., highest to lowest frequency), and then select sentences in the document that include the entity as the initial candidate set. Finally, within this candidate set, we find the most representative sentences to the cluster by measuring the content overlap of the sentence w.r.t documents other than the one it appears in. This final step supports the goal of our pretraining objective, namely to reconstruct sentences that can be recovered using information from other documents in the cluster, which encourages the model to better connect and aggregate information across multiple documents. Following Zhang et al. (2020) we use ROUGE scores (Lin, 2004) as a proxy for content overlap. For each sentence $s_{i}$ , we specifically define a Cluster ROUGE score as $\begin{array}{r}{S c o r e(s_{i})=\sum_{{d o c_{j}\in C,s_{i}\notin_d o c_{j}}}\mathrm{ROUGE}\big(s_{i},d o c_{j}\big)}\end{array}$ Where $C$ is th e cluster of related documents.

- 句子选择。与Pyramid评估框架类似,我们针对相关文档簇识别关键句子。算法1展示了句子选择流程。由于我们的目标是选择能更好代表整个文档簇而非单个文档的实体,首先从Pyramid中移除所有仅出现在单一文档的实体。接着,我们自顶向下(即按出现频率从高到低)迭代选择实体,并将包含该实体的文档句子作为初始候选集。最后,在该候选集中,我们通过衡量句子相对于其未出现文档的内容重叠度,选出最能代表文档簇的句子。这一最终步骤支持了预训练目标的核心诉求——重构那些能够通过簇内其他文档信息恢复的句子,从而促使模型更好地关联和聚合跨文档信息。参照Zhang等人(2020)的方法,我们采用ROUGE分数(Lin, 2004)作为内容重叠的代理指标。对于每个句子$s_{i}$,我们专门定义簇ROUGE分数为$\begin{array}{r}{S c o r e(s_{i})=\sum_{{d o c_{j}\in C,s_{i}\notin_d o c_{j}}}\mathrm{ROUGE}\big(s_{i},d o c_{j}\big)}\end{array}$,其中$C$表示相关文档簇。

Note that different from the importance heuristic defined in PEGASUS (Zhang et al., 2020), Entity Pyramid strategy favors sentences that are representative of more documents in the cluster than the exact matching between fewer documents (See Figure 3 for a qualitative example.) . The benefit of our strategy is shown in an ablation study (§5).

请注意,与 PEGASUS (Zhang et al., 2020) 中定义的重要性启发式方法不同,实体金字塔 (Entity Pyramid) 策略更倾向于选择那些在聚类中代表更多文档的句子,而非与较少文档精确匹配的句子 (定性示例见图 3) 。我们的策略优势在消融研究中得到了验证 (§5) 。

3 Experiment Goals

3 实验目标

We aim to answer the following questions:

我们旨在回答以下问题:

• Q4: What is the effect of our entity pyramid strategy, compared with the strategy used in PEGASUS? See $\S5$ .

• Q4: 与PEGASUS采用的策略相比,我们的实体金字塔策略效果如何? 参见 $\S5$。

Table 1: The statistics of all the datasets we explore in this paper. *We use subsets of Wikisum (10/100, 3200) for few-shot training and testing only.

| 数据集 | 样本数 | 文档数/簇 | 原文长度 | 摘要长度 |

|---|---|---|---|---|

| Newshead (2020) | 360K | 3.5 | 1734 | - |

| Multi-News (2019) | 56K | 2.8 | 1793 | 217 |

| Multi-Xscience (2020) | 40K | 4.4 | 700 | 105 |

| Wikisum* (2018) | 1.5M | 40 | 2238 | 113 |

| WCEP-10 (2020) | 10K | 9.1 | 3866 | 28 |

| DUC2004 (2005) | 50 | 10 | 5882 | 115 |

| arXiv (2018) | 214K | 5.5 | 6021 | 272 |

表 1: 本文研究的所有数据集统计信息。*Wikisum仅使用子集(10/100, 3200)进行少样本训练和测试。

• Q5: Is PRIMERA able to capture salient information and generate fluent summaries? See $\S6$ .

• Q5: PRIMERA能否捕捉关键信息并生成流畅的摘要?参见 $\S6$ 。

With these goals, we explore the effectiveness of PRIMERA quantitatively on multi-document summarization benchmarks, verify the improvements by comparing PRIMERA with multiple existing pretrained models and SOTA models, and further validate the contribution of each component with carefully controlled ablations. An additional human evaluation is conducted to show PRIMERA is able to capture salient information and generate more fluent summaries.

基于这些目标,我们通过多文档摘要基准对PRIMERA的效果进行量化评估,将其与多种现有预训练模型和SOTA模型对比验证改进效果,并通过严格控制的消融实验进一步验证各组件贡献。额外的人工评估表明PRIMERA能捕获关键信息并生成更流畅的摘要。

4 Experiments

4 实验

4.1 Experimental Setup

4.1 实验设置

Implementation Details We use the Longformer-Encoder-Decoder (LED) (Beltagy et al., 2020) large as our model initialization, The length limits of input and output are 4096 and 1024, respectively, with sliding window size as $w=512$ for local attention in the input. (More implementation details of pre training process can be found in Appx $\S\mathrm{A}$ )

实现细节

我们采用 Longformer-Encoder-Decoder (LED) (Beltagy et al., 2020) large 版本作为模型初始化,输入和输出的长度限制分别为 4096 和 1024,输入部分的局部注意力滑动窗口大小为 $w=512$。(预训练过程的更多实现细节可参见附录 $\S\mathrm{A}$)

Pre training corpus For pre training, our goal is to use a large resource where each instance is a set of related documents without any ground-truth summaries. The Newshead dataset (Gu et al., 2020) (row 1, Table 1) is an ideal choice; it is a relatively large dataset, where every news event is associated with multiple news articles.

预训练语料库

对于预训练,我们的目标是使用一个大型资源,其中每个实例都是一组相关文档,不包含任何真实摘要。Newshead数据集 (Gu et al., 2020) (表1第1行) 是一个理想选择;它是一个相对较大的数据集,其中每个新闻事件都关联着多篇新闻文章。

Evaluation Datasets We evaluate our approach on wide variety of multi-document sum mari z ation datasets plus one single document dataset from various domains (News, Wikipedia, and Scientific literature). See Table 1 for dataset statistics and Appx. $\S\mathrm{B}$ for details of each dataset.

评估数据集

我们在多种多文档摘要数据集以及一个来自不同领域(新闻、维基百科和科学文献)的单文档数据集上评估了我们的方法。数据集统计信息见表 1,各数据集的详细信息见附录 (B)。

Evaluation metrics Following previous works (Zhang et al., 2020), we use ROUGE scores (R-1, -2, and -L), which are the standard evaluation metrics, to evaluate the downstream task of multi-document sum mari z ation.4 For better readability, we use AVG ROUGE scores (R-1, -2, and -L) for evaluation in the few-shot setting.

评估指标

遵循先前工作 (Zhang et al., 2020) ,我们采用 ROUGE 分数 (R-1、R-2 和 R-L) 作为标准评估指标,用于多文档摘要下游任务的评估。为提高可读性,在少样本设置中使用平均 ROUGE 分数 (R-1、R-2 和 R-L) 进行评估。

4.2 Zero- and Few-shot Evaluation

4.2 零样本和少样本评估

Many existing works in adapting pretrained models for sum mari z ation require large amounts of finetuning data, which is often impractical for new domains. In contrast, since our pre training strategy is mainly designed for multi-document summarization, we expect that our approach can quickly adapt to new datasets without the need for significant fine-tuning data. To test this hypothesis, we first provide evaluation results in zero and few-shot settings where the model is provided with no, or only a few (10 and 100) training examples. Obtaining such a small number of examples should be viable in practice for new datasets.

许多现有工作在为摘要任务适配预训练模型时需要大量微调数据,这对新领域往往不切实际。相比之下,由于我们的预训练策略主要针对多文档摘要设计,我们预期该方法能快速适应新数据集而无需大量微调数据。为验证这一假设,我们首先在零样本和少样本设置下(分别提供0个、10个和100个训练样本)评估模型性能。实践中获取如此少量的样本对新数据集应是可行的。

Comparison To better show the utility of our pretrained models, we compare with three state-of-theart pretrained generation models: BART (Lewis et al., $2020)^{5}$ , PEGASUS (Zhang et al., 2020) and Longformer-Encoder-Decoder(LED) (Beltagy et al., 2020). These pretrained models have been shown to outperform dataset-specific models in sum mari z ation (Lewis et al., 2020; Zhang et al., 2020), and because of pre training, they are expected to also work well in the few-shot settings. As there is no prior work doing few-shot and zeroshot evaluations on all the datasets we consider, and also the results in the few-shot setting might be influenced by sampling variability (especially with only 10 examples) (Bragg et al., 2021), we run the same experiments for the compared models five times with different random seeds (shared with all the models), with the publicly available checkpoints .6

对比

为了更好地展示我们预训练模型的实用性,我们与三种最先进的预训练生成模型进行了比较:BART (Lewis等人,$2020)^{5}$、PEGASUS (Zhang等人,2020) 以及 Longformer-Encoder-Decoder (LED) (Beltagy等人,2020)。这些预训练模型在摘要任务中已被证明优于针对特定数据集训练的模型 (Lewis等人,2020; Zhang等人,2020),并且由于预训练的优势,它们预计在少样本设置下也能表现良好。由于此前没有研究对我们考虑的所有数据集进行少样本和零样本评估,并且少样本设置下的结果可能受到采样变异性的影响 (尤其是仅使用10个样本时) (Bragg等人,2021),我们使用公开可用的检查点6,以不同的随机种子 (所有模型共享相同种子) 对对比模型进行了五次相同的实验。

Similar to Pasunuru et al. (2021), the inputs of all the models are the concatenations of the documents within the clusters (in the same order), each document is truncated based on the input length limit divided by the total number of documents so

与Pasunuru等人 (2021) 类似,所有模型的输入都是聚类内文档的拼接(保持相同顺序),每个文档会根据输入长度限制除以文档总数进行截断

| 模型 | Multi-News(256) | Multi-XSci(128) | WCEP(50) | WikiSum(128) | arXiv(300) | DUC2004 (128) | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| R-1 | R-2 | R-L | R-1 | R-2 | R-L | R-1 | R-2 | R-L | R-1 | R-2 | R-L | R-1 | R-2 | R-L | R-1 | R-2 | R-L | |

| PEGAsUS+ (Zhang et al.,2020) | 36.5 | 10.5 | 18.7 | 28.1 | 6.6 | 17.7 | ||||||||||||

| PEGASUS (our run) | 32.0 | 10.1 | 16.7 | 27.6 | 4.6 | 15.3 | 33.2 | 12.7 | 23.8 | 24.6 | 5.5 | 15.0 | 29.5 | 7.9 | 17.1 | 32.7 | 7.4 | 17.6 |

| BART (our run) | 27.3 | 6.2 | 15.1 | 18.9 | 2.6 | 12.3 | 20.2 | 5.7 | 15.3 | 21.6 | 5.5 | 15.0 | 29.2 | 7.5 | 16.9 | 24.1 | 4.0 | 15.3 |

| LED (our run) | 17.3 | 3.7 | 10.4 | 14.6 | 1.9 | 9.9 | 18.8 | 5.4 | 14.7 | 10.5 | 2.4 | 8.6 | 15.0 | 3.1 | 10.8 | 16.6 | 3.0 | 12.0 |

| PRIMERA (our model) | 42.0 | 13.6 | 20.8 | 29.1 | 4.6 | 15.7 | 28.0 | 10.3 | 20.9 | 28.0 | 8.0 | 18.0 | 34.6 | 9.4 | 18.3 | 35.1 | 7.2 | 17.9 |

Table 2: Zero-shot results. The models in the first block use the full-length attention $(O(n^{2}))$ and are pretrained on the single document datasets. The numbers in the parenthesis following each dataset indicate the output length limit set for inference. PEGA $\mathrm{SUS}\star$ means results taken exactly from PEGASUS (Zhang et al., 2020), where available.

表 2: 零样本结果。第一部分的模型使用全长度注意力机制 $(O(n^{2}))$ 并在单文档数据集上进行预训练。括号中的数字表示推理时设置的输出长度限制。PEGA $\mathrm{SUS}\star$ 表示直接引用自 PEGASUS (Zhang et al., 2020) 的结果(如可用)。

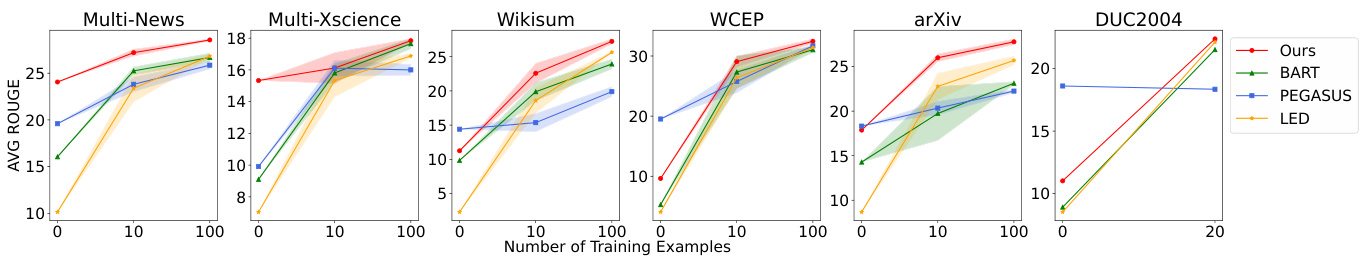

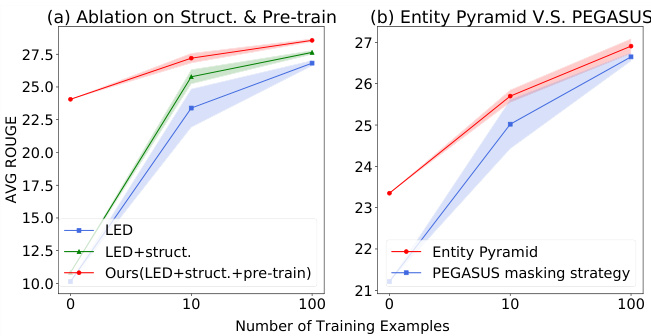

Figure 5: The AVG ROUGE scores (R-1, R-2 and R-L) of the pretrained models with 0, 10 and 100 training data with variance. All the results of few-shot experiments (10 and 100) are obtained by the average of 5 random runs (with std, and the same set of seeds shared by all the models). Note that DUC2004 only has 50 examples, we use 20/10/20 for train/valid/test in the few-shot experiments.

图 5: 预训练模型在0、10和100条训练数据下的平均ROUGE分数(R-1、R-2和R-L)及方差。所有少样本实验(10和100)的结果均为5次随机运行的平均值(标注标准差,所有模型使用相同随机种子)。请注意DUC2004数据集仅有50个样本,在少样本实验中我们按20/10/20划分训练集/验证集/测试集。

that all documents are represented in the input.

所有文档都在输入中表示。

To preserve the same format as the corresponding pretrained models, we set the length limit of output for BART and PEGASUS exactly as their pretrained settings on all of the datasets (except for the zero-shot experiments, the details can be found in Sec.4.3). Regarding length limit of inputs, we tune the baselines by experimenting with 512, 1024, 4096 on Multi-News dataset in few-shot setting (10 data examples), and the model with length limit 512(PEGASUS)/1024(BART) achieves the best performance, thus we use this setting (detailed experiment results for different input lengths can be found in Appx. $\S{\bf C}.1$ ). We use the same length limit as our model for the LED model, i.e. 4096/1024 for input and output respectively, for all the datasets.

为保持与对应预训练模型相同的格式,我们在所有数据集上为BART和PEGASUS设置的输出长度限制均与其预训练设置一致(零样本实验除外,详见第4.3节)。关于输入长度限制,我们在少样本设置(10个数据示例)下通过Multi-News数据集测试了512、1024、4096三种配置,最终采用512(PEGASUS)/1024(BART)长度限制的模型表现最佳(不同输入长度的详细实验结果见附录$\S{\bf C}.1$)。对于LED模型,我们在所有数据集上采用与我们模型相同的长度限制,即输入4096/输出1024。

4.3 Zero-Shot Results

4.3 零样本 (Zero-Shot) 结果

For zero-shot8 abstract ive sum mari z ation experiments, since the models have not been trained on the downstream datasets, the lengths of generated summaries mostly depend on the pretrained settings. Thus to better control the length of generated summaries and for a fair comparison between all models, following Zhu et al. (2021), we set the length limit of the output at inference time to the average length of gold summaries.9 Exploring other approaches to controlling length at inference time (e.g., Wu et al., 2021) is an orthogonal direction, which we leave for future work.

在零样本抽象摘要实验中,由于模型未在下游数据集上训练,生成摘要的长度主要取决于预训练设置。因此,为更好地控制生成摘要的长度并确保所有模型的公平比较,我们遵循Zhu等人(2021)的方法,在推理时将输出长度限制设置为参考摘要的平均长度。探索其他推理时长度控制方法(如Wu等人(2021))属于正交研究方向,我们将此留作未来工作。

Table 2 shows the performance comparison among all the models. Results indicate that our model achieves substantial improvements compared with all the three baselines on most of the datasets. As our model is pretrained on clusters of documents with longer input and output, the benefit is stronger on the dataset with longer summaries, e.g. Multi-News and arXiv. Comparing PEGASUS and BART models, as the objective of PEGASUS is designed mainly for sum mari z ation tasks, not surprisingly it has relatively better performances across different datasets. Interestingly, LED under performs other models, plausibly since part of the positional embeddings (1k to 4k) are not pretrained. Encouragingly, our model performs the best, demonstrating the benefits of our pre training strategy for multi-document sum mari z ation.

表 2 展示了所有模型的性能对比。结果表明,在大多数数据集上,我们的模型相比三个基线模型都取得了显著提升。由于我们的模型是在具有更长输入输出的文档集群上进行预训练的,因此在摘要更长的数据集(如 Multi-News 和 arXiv)上优势更为明显。对比 PEGASUS 和 BART 模型,由于 PEGASUS 的目标函数主要针对摘要任务设计,其在不同数据集上表现相对更好并不意外。有趣的是,LED 模型表现逊于其他模型,可能是由于部分位置嵌入(1k 至 4k)未经预训练。值得欣喜的是,我们的模型表现最佳,这证明了我们针对多文档摘要的预训练策略的有效性。

4.4 Few Shot Evaluation

4.4 少样本评估

Compared with the strict zero-shot scenario, fewshot experiments are closer to the practical scenarios, as it is arguably affordable to label dozens of examples for almost any application.

与严格的零样本场景相比,少样本实验更接近实际应用场景,因为几乎在任何应用中标注几十个样本的成本都相对可控。

We fine-tune all of the four models on different subsets with 10 and 100 examples, and the results are shown in Figure 5. (hyper parameter settings in Appx. $\S\mathrm{D}.1$ ) Since R-1, -2, and -L show the same trend, we only present the average of the three metrics in the figure for brevity (full ROUGE scores can be found in Appx. Table 8) To show the generality, all the results of few-shot experiments are the average over 5 runs on different subsets (shared by all the models).

我们在不同子集上对全部四个模型进行了10例和100例的微调,结果如图5所示(超参数设置见附录 $\S\mathrm{D}.1$)。由于R-1、R-2和R-L呈现相同趋势,为简洁起见,图中仅展示三项指标的平均值(完整ROUGE分数见附录表8)。为体现普适性,所有少样本实验的结果均为5次不同子集运行的平均值(所有模型共享相同子集)。

The result of each run is obtained by the ‘best’ model chosen based on the ROUGE scores on a randomly sampled few-shot validation set with the same number of examples as the training set, which is similar with Zhang et al. (2020). Note that their reported best models have been selected based on the whole validation set which may give PEGASUS some advantage. Nevertheless, we argue that sampling few-shot validation sets as we do here is closer to real few-shot scenarios (Bragg et al., 2021).

每次运行的结果是通过基于随机采样的少样本验证集(样本数量与训练集相同)上的ROUGE分数选出的"最佳"模型获得的,这与Zhang等人(2020)的方法类似。需要注意的是,他们报告的最佳模型是基于整个验证集选择的,这可能给PEGASUS带来一些优势。尽管如此,我们认为像本文这样采样少样本验证集更接近真实的少样本场景(Bragg等人, 2021)。

Our model outperforms all baselines on all of the datasets with 10 and 100 examples demonstrating the benefits of our pre training strategy and input structure. Comparing the performances of our model with the different number of training data fed in, our model converges faster than other models with as few as 10 data examples.

我们的模型在所有数据集上均优于所有基线,无论是10个还是100个样本,这证明了我们的预训练策略和输入结构的优势。通过比较模型在不同训练数据量下的表现,我们的模型仅需10个数据样本就能比其他模型更快收敛。

4.5 Fully Supervised Evaluation

4.5 全监督评估

To show the advantage of our pretrained model when there is abundant training data, we also train the model with the full training set (hyper para meter settings can be found in Appx. $\S\mathrm{D}.2)$ ). Table 3 shows the performance comparison with previous state-of-the-art10, along with the results of previous SOTA. We observe that PRIMERA achieves stateof-the-art results on Multi-News, WCEP, and arXiv, while slightly under performing the prior work on Multi-XScience (R-1). One possible explanation is that in Multi-XScience clusters have less overlapping information than in the corpus on which PRIMERA was pretrained. In particular, the source documents in this dataset are the abstracts of all the publications cited in the related work paragraphs, which might be less similar to each other and the target related work(i.e., their summary) . PRIMERA outperforms the LED model (State-of-the-art) on the arXiv dataset while using a sequence length $4\mathbf{x}$ shorter (4K in PRIMERA v.s. 16K in LED), further showing that the pre training and input structure of our model not only works for multi-document summarization, but can be also effective for summarizing single documents having multiple sections.

为展示预训练模型在充足训练数据下的优势,我们还在完整训练集上进行了模型训练(超参数设置详见附录 $\S\mathrm{D}.2)$ )。表3展示了与先前最优方法10的性能对比,同时列出了之前SOTA的结果。我们观察到PRIMERA在Multi-News、WCEP和arXiv数据集上达到最优性能,而在Multi-XScience(R-1指标)上略逊于先前工作。一个可能的解释是Multi-XScience中的文档簇信息重叠度低于PRIMERA预训练语料库。具体而言,该数据集的源文档是相关工作段落引用的所有论文摘要,这些摘要之间及其与目标相关工作(即摘要)的相似性可能较低。PRIMERA在arXiv数据集上以 $4\mathbf{x}$ 更短的序列长度(PRIMERA为4K,LED为16K)超越了LED模型(原SOTA),进一步证明我们模型的预训练和输入结构不仅适用于多文档摘要任务,对于包含多章节的单文档摘要同样有效。

Table 3: Fully supervised results. Previous SOTA are from Pasunuru et al. (2021) for Multi-News, Lu et al. (2020) for Multi-XScience11, Hokamp et al. (2020) for WCEP, and Beltagy et al. (2020) for arXiv.

表 3: 全监督结果。先前SOTA分别来自:Multi-News来自Pasunuru等人 (2021) ,Multi-XScience11来自Lu等人 (2020) ,WCEP来自Hokamp等人 (2020) ,arXiv来自Beltagy等人 (2020) 。

| 数据集 | PreviousSOTA | PRIMERA | ||||

|---|---|---|---|---|---|---|

| R-1 | R-2 | R-L | R-1 | R-2 | R-L | |

| Multi-News | 49.2 | 19.6 | 24.5 | 49.9 | 21.1 | 25.9 |

| Multi-XScience | 33.9 | 6.8 | 18.2 | 31.9 | 7.4 | 18.0 |

| WCEP | 35.4 | 15.1 | 25.6 | 46.1 | 25.2 | 37.9 |

| arXiv | 46.6 | 19.6 | 41.8 | 47.6 | 20.8 | 42.6 |

Figure 6: Ablation study with the few-shot setting on the Multi-News dataset regarding to (a) input Structure $(<\mathrm{doc-sep}>$ tokens between documents and global attention on them) and pre training, (b) pre training using PEGASUS vs our approach.

图 6: 在Multi-News数据集上针对少样本设置的消融研究,涉及 (a) 输入结构 (文档间使用

5 Ablation Study

5 消融实验

We conduct ablation studies on the Multi-News dataset in few-shot setting, to validate the contribution of each component in our pretrained models.

我们在Multi-News数据集上进行了少样本设置的消融实验,以验证预训练模型中每个组件的贡献。

Input structure: In Figure 6 (a) we observe the effectiveness of both pre training and the input structure $(<\mathrm{doc-sep}>$ tokens between documents and global attention on them).

输入结构:在图6(a)中,我们观察到预训练和输入结构 $(<\mathrm{doc-sep}>$ token间文档分隔符及全局注意力机制) 的有效性。

Sentence masking strategy: To isolate the effect of our proposed pre training approach, we compare with a model with exactly the same architecture when pretrained on the same amount of data but using the PEGASUS (Zhang et al., 2020) masking strategy instead of ours. In other words, we keep all the other settings the same (e.g., data, length limit of input and output, pre training dataset, input structure, as well as the separators) and only modify the pre training masking strategy. We run the same experiments under zero-/few-shot scenarios on the Multi-News dataset as in $\S4.2$ , and the results are shown in Figure 6 (b). The model pretrained with our Entity Pyramid strategy shows a clear improvement under few-shot scenarios.

句子掩码策略:为了分离我们提出的预训练方法的效果,我们与一个架构完全相同但在相同数据量上使用PEGASUS (Zhang et al., 2020) 掩码策略而非我们策略的预训练模型进行了对比。换言之,我们保持所有其他设置不变(例如数据、输入输出长度限制、预训练数据集、输入结构以及分隔符),仅修改预训练掩码策略。我们在Multi-News数据集上按照$\S4.2$章节进行了相同的零样本/少样本实验,结果如图6 (b)所示。采用我们提出的实体金字塔(Entity Pyramid)策略预训练的模型在少样本场景下展现出明显提升。

6 Human Evaluation

6 人工评估

We also conduct human evaluations to validate the effectiveness of PRIMERA on DUC2007 and TAC2008 (Dang and Owczarzak, 2008) datasets in the few-shot setting (10/10/20 examples for train/valid/test). Both datasets consist of clusters of news articles, and DUC2007 contains longer inputs (25 v.s. 10 documents/cluster) and summaries (250 v.s. 100 words). Since the goal of our method is to enable the model to better aggregate information across documents, we evaluate the content quality of the generated summaries following the original Pyramid human evaluation framework (Nenkova and Passonneau, 2004). In addition, we also evaluate the fluency of generated summaries following the DUC guidelines.12

我们还进行了人工评估,以验证PRIMERA在DUC2007和TAC2008 (Dang and Owczarzak, 2008) 数据集上的少样本 (10/10/20个训练/验证/测试样本) 有效性。这两个数据集均由新闻文章集群组成,其中DUC2007包含更长的输入 (25 vs. 10篇文档/集群) 和摘要 (250 vs. 100词) 。由于我们方法的目标是使模型能够更好地跨文档聚合信息,因此我们按照原始Pyramid人工评估框架 (Nenkova and Passonneau, 2004) 评估生成摘要的内容质量。此外,我们还遵循DUC指南评估了生成摘要的流畅性。12

Settings Three an not at or s 13 are hired to do both Pyramid Evaluation and Fluency evaluation, they harmonize the standards on one of the examples. Specifically, for each data example, we provide three anonymized system generated summaries, along with a list of SCUs. The annotators are asked to find all the covered SCUs for each summary, and score the fluency in terms of Grammatical it y, Referential clarity and Structure & Coherence, according to DUC human evaluation guidelines, with a scale 1-5 (worst to best). They are also suggested to make comparison between three generated summaries into consideration when scoring the fluency. To control for the ordering effect of the given summaries, we re-order the three summaries for each data example, and ensure the chance of their appearance in different order is the same (e.g. BART appears as summary A for 7 times, B for 7 times and C for 6 times for both datasets). The instruction for human annotation can be found in Figure 7 and Figure 8 in the appendix. Annotators were aware that annotations will be used solely for computing aggregate human evaluation metrics and reporting in the scientific paper.

设置

三名标注人员被同时雇佣进行金字塔评估(Pyramid Evaluation)和流畅度评估(Fluency evaluation),他们会在其中一个示例上统一标注标准。具体而言,对于每个数据样本,我们提供三份匿名系统生成的摘要和一组SCU(基本内容单元)。根据DUC人工评估指南,标注人员需要:

- 找出每份摘要覆盖的所有SCU

- 从语法正确性(Grammaticality)、指代清晰度(Referential clarity)、结构与连贯性(Structure & Coherence)三个维度按1-5分(最差到最优)评定流畅度

- 在评分时建议对比三份生成摘要的差异

为消除摘要排序带来的偏差,我们对每个数据样本的三份摘要进行重新排序,确保它们以不同顺序出现的概率均等(例如在两个数据集中,BART作为摘要A出现7次,B出现7次,C出现6次)。人工标注指南详见附录中的图7和图8。标注人员已知这些标注仅用于计算总体人工评估指标并在科研论文中报告。

Compared Models We compare our model with LED and PEGASUS in human evaluations. Because PEGASUS is a task-specific model for abstractive sum mari z ation, and LED has the same architecture and length limits as our model with the parameters inherited from BART, which is more comparable with our model than vanilla BART.

对比模型

我们在人工评估中将我们的模型与LED和PEGASUS进行对比。由于PEGASUS是专为抽象摘要任务设计的模型,而LED与我们的模型具有相同的架构和长度限制(其参数继承自BART),因此相比原始BART,LED与我们的模型更具可比性。

Table 4: Pyramid Evaluation results: Raw scores $S_{r}$ , (R)ecall, (P)recision and (F)-1 score. For readability, Recall, Precision and F-1 scores are multiplied by 100.

表 4: 金字塔评估结果:原始分数 $S_{r}$、(R)召回率、(P)精确率和(F)-1分数。为便于阅读,召回率、精确率和F-1分数已乘以100。

| 模型 | DUC2007(20) | TAC2008(20) | ||||

|---|---|---|---|---|---|---|

| Sr | R P | F | Sr | R | P | |

| PEGASUS | 6.0 2.5 | 2.4 | 2.4 | 8.7 | 9.1 | 9.4 9.1 |

| LED | 9.6 | 3.9 4.0 | 3.8 | 6.9 | 7.1 | 10.8 8.4 |

| PRIMERA | 12.5 | 5.1 5.0 | 5.0 | 8.5 | 8.9 | 10.0 9.3 |

Table 5: The results of Fluency Evaluation on two datasets, in terms of the Grammatical it y , Referential clarity and Structure & Coherence.

表 5: 两个数据集上的流畅度评估结果,包括语法正确性 (Grammaticality)、指代清晰度 (Referential clarity) 和结构与连贯性 (Structure & Coherence)。

| Model | DUC2007(20) | TAC2008(20) | ||||

|---|---|---|---|---|---|---|

| Gram. | Ref. | Str.&Coh. | Gram. | Ref. | Str.&Coh. | |

| PEGASUS | 4.45 | 4.35 | 1.95 | 4.40 | 4.20 | 3.20 |

| LED | 4.35 | 4.50 | 3.20 | 3.10 | 3.80 | 2.55 |

| PRIMERA | 4.70 | 4.65 | 3.70 | 4.40 | 4.45 | 4.10 |

Pyramid Evaluation Both TAC and DUC datasets include SCU (Summary Content Unit) annotations and weights identified by experienced annotators. We then ask 3 annotators to make a binary decision whether each SCU is covered in a candidate summary. Following Nenkova and Passonneau (2004), the raw score of each summary is then computed by the sum of weights of the covered SCUs, i.e. $\begin{array}{r}{S_{r}=\sum_{S C U}w_{i}I(S C U_{i})}\end{array}$ , where $I(S C U_{i})$ is an indicato r function on whether $S C U_{i}$ is covered by the current summary, and $w_{i}$ is the weight of $S C U_{i}$ . In the original pyramid evaluation, the final score is computed by the ratio of $S_{r}$ to the maximum possible weights with the same number of SCUs as in the generated summaries. However, the total number of SCUs of generated summaries is not available in the simplified annotations in our design. To take consideration of the length of generated summaries and make a fair comparison, instead, we compute Recall, Precision and F-1 score regarding lengths of both gold references and system generated summaries as

金字塔评估

TAC和DUC数据集均包含由经验丰富的标注者识别的SCU (Summary Content Unit) 标注及权重。随后,我们邀请3名标注者对候选摘要是否涵盖每个SCU进行二元判断。参照Nenkova和Passonneau (2004) 的方法,每个摘要的原始分数通过被覆盖SCU的权重总和计算得出,即 $\begin{array}{r}{S_{r}=\sum_{S C U}w_{i}I(S C U_{i})}\end{array}$ ,其中 $I(S C U_{i})$ 为指示函数,判断 $S C U_{i}$ 是否被当前摘要覆盖, $w_{i}$ 表示 $S C U_{i}$ 的权重。

在原始金字塔评估中,最终分数通过 $S_{r}$ 与生成摘要所含SCU数量的最大可能权重之比计算。然而,我们设计的简化标注方案中无法获取生成摘要的SCU总数。为兼顾生成摘要长度并实现公平比较,我们改为基于黄金参考摘要和系统生成摘要的长度计算召回率 (Recall)、精确率 (Precision) 和F-1值:

$$

\mathrm{R}{=}\frac{S_{r}}{l e n(g o l d)};\mathrm{P}{=}\frac{S_{r}}{l e n(s y s)};\mathrm{F}{1=}\frac{2\cdot R\cdot P}{(R+P)}

$$

$$

\mathrm{R}{=}\frac{S_{r}}{l e n(g o l d)};\mathrm{P}{=}\frac{S_{r}}{l e n(s y s)};\mathrm{F}{1=}\frac{2\cdot R\cdot P}{(R+P)}

$$

Fluency Evaluation Fluency results can be found in Table 5, and PRIMERA has the best performance on both datasets in terms of all aspects. Only

流畅度评估

流畅度结果见表5,PRIMERA在两个数据集的所有方面均表现最佳。仅

for Grammatical it y PRIMERA’s top performance is matched by PEGASUS.

在语法准确性方面,PRIMERA的顶尖表现与PEGASUS持平。

7 Related Work

7 相关工作

Neural Multi-Document Sum mari z ation These models can be categorized into two classes, graph-based models (Yasunaga et al., 2017; Liao et al., 2018; Li et al., 2020; Pasunuru et al., 2021) and hierarchical models (Liu and Lapata, 2019a; Fabbri et al., 2019; Jin et al., 2020). Graph-based models often require auxiliary information (e.g., AMR, discourse structure) to build an input graph, making them reliant on auxiliary models and less general. Hierarchical models are another class of models for multi-document sum mari z ation, examples of which include multi-head pooling and inter-paragraph attention (Liu and Lapata, 2019a), MMR-based attention (Fabbri et al., 2019; Mao et al., 2020), and attention across representations of different granularity (words, sentences, and documents) (Jin et al., 2020). Prior work has also shown the advantages of customized optimization in multi-document sum mari z ation (e.g., RL; Su et al., 2021). Such models are often dataset-specific and difficult to develop and adapt to other datasets or tasks.

神经多文档摘要模型可分为两大类:基于图的模型 (Yasunaga et al., 2017; Liao et al., 2018; Li et al., 2020; Pasunuru et al., 2021) 和分层模型 (Liu and Lapata, 2019a; Fabbri et al., 2019; Jin et al., 2020)。基于图的模型通常需要辅助信息 (如抽象语义表示AMR、篇章结构) 来构建输入图,这使得它们依赖辅助模型且通用性较差。分层模型是另一类多文档摘要模型,其典型方法包括多头池化与段落间注意力机制 (Liu and Lapata, 2019a)、基于最大边缘相关(MMR)的注意力机制 (Fabbri et al., 2019; Mao et al., 2020),以及跨粒度表示(词语、句子和文档)的注意力机制 (Jin et al., 2020)。先前研究也证明了定制化优化(如强化学习RL;Su et al., 2021)在多文档摘要中的优势。这类模型通常针对特定数据集开发,难以适配其他数据集或任务。

Pretrained Models for Sum mari z ation Pretrained language models have been successfully applied to sum mari z ation, e.g., BERTSUM (Liu and Lapata, 2019b), BART (Lewis et al., 2020), T5 (Raffel et al., 2020). Instead of regular language modeling objectives, PEGASUS (Zhang et al., 2020) introduced a pre training objective with a focus on sum mari z ation, using Gap Sentence Generation, where the model is tasked to generate summaryworthy sentences, and Zou et al. (2020) proposed different pre training objectives to reinstate the original document, specifically for sum mari z ation task as well. Contemporaneous work by Rothe et al. (2021) argued that task-specific pre training does not always help for sum mari z ation, however, their experiments are limited to single-document summarization datasets. Pre training on the titles of HTMLs has been recently shown to be useful for few-shot short-length single-document summarization as well (Aghajanyan et al., 2021). Goodwin et al. (2020) evaluate three state-of-the-art models (BART, PEGASUS, T5) on several multi-document sum mari z ation datasets with low-resource settings, showing that abstract ive multi-document summarization remains challenging. Efficient pretrained transformers (e.g., Longformer (Beltagy et al., 2020) and BigBird (Zaheer et al., 2020) that can process long sequences have been also proven successful in sum mari z ation, typically by the ability to process long inputs, connecting information across the entire sequence. CDLM (Caciularu et al., 2021) is a follow-up work for pre training the Longformer model in a cross-document setting using global attention on masked tokens during pre training. However, this model only addresses encoder-specific tasks and it is not suitable for generation. In this work, we show how efficient transformers can be pretrained using a task-inspired pre training objective for multi-document sum mari z ation. Our proposed method is also related to the PMI-based token masking Levine et al. (2020) which improves over random token masking outside summarization.

用于摘要任务的预训练模型

预训练语言模型已成功应用于摘要任务,例如 BERTSUM (Liu and Lapata, 2019b)、BART (Lewis et al., 2020)、T5 (Raffel et al., 2020)。PEGASUS (Zhang et al., 2020) 没有采用常规语言建模目标,而是引入了专注于摘要的预训练目标,使用间隙句子生成 (Gap Sentence Generation) 技术让模型生成具有摘要价值的句子。Zou 等人 (2020) 也针对摘要任务提出了不同的预训练目标来重建原始文档。Rothe 等人 (2021) 的同期研究指出,任务特定的预训练并不总能提升摘要性能,但他们的实验仅限于单文档摘要数据集。最近研究表明,基于 HTML 标题的预训练对少样本短文本单文档摘要也有效 (Aghajanyan et al., 2021)。Goodwin 等人 (2020) 在低资源环境下评估了三种先进模型 (BART、PEGASUS、T5) 在多个多文档摘要数据集上的表现,证明抽象式多文档摘要仍具挑战性。能处理长序列的高效 Transformer (如 Longformer (Beltagy et al., 2020) 和 BigBird (Zaheer et al., 2020)) 也通过处理长输入、关联整个序列信息的能力,在摘要任务中取得成功。CDLM (Caciularu et al., 2021) 是 Longformer 的后续工作,通过在预训练时对遮蔽 token 使用全局注意力进行跨文档预训练,但该模型仅适用于编码器特定任务,不适合生成任务。本文展示了如何通过任务启发的预训练目标,为多文档摘要任务预训练高效 Transformer。我们提出的方法还与基于 PMI 的 token 遮蔽 (Levine et al., 2020) 相关,后者改进了摘要领域外的随机 token 遮蔽方法。

8 Conclusion and Future Work

8 结论与未来工作

In this paper, we present PRIMERA a pre-trained model for multi-document sum mari z ation. Unlike prior work, PRIMERA minimizes dataset-specific modeling by using a Longformer model pretrained with a novel entity-based sentence masking objective. The pre training objective is designed to help the model connect and aggregate information across input documents. PRIMERA outper- forms prior state-of-the-art pre-trained and datasetspecific models on 6 sum mari z ation datasets from 3 different domains, on zero, few-shot, and full fine-tuning setting. PRIMERA’s top performance is also revealed by human evaluation.

本文提出PRIMERA,一种面向多文档摘要任务的预训练模型。与现有工作不同,PRIMERA通过采用基于新型实体句子掩码目标预训练的Longformer模型,最大限度减少对特定数据集的建模依赖。该预训练目标旨在帮助模型建立跨文档信息关联与整合能力。在涵盖3个不同领域的6个摘要数据集上,PRIMERA在零样本、少样本和全微调设定下均超越现有最优预训练模型及数据集专用模型。人工评估结果也证实了PRIMERA的顶尖性能。

In zero-shot setting, we can only control the output length of generated summaries at inference time by specifying a length limit during decoding. Exploring a controllable generator in which the desired length can be injected as part of the input is a natural future direction. Besides the sum mari z ation task, we would like to explore using PRIMERA for other generation tasks with multiple documents as input, like multi-hop question answering.

在零样本设置中,我们只能在推理时通过解码阶段指定长度限制来控制生成摘要的输出长度。探索将目标长度作为输入部分注入的可控生成器,是未来一个自然的研究方向。除摘要任务外,我们希望能探索将PRIMERA应用于其他以多文档为输入的生成任务,例如多跳问答。

Ethics Concern

伦理问题

While there is limited risk associated with our work, similar to existing state-of-the-art generation models, there is no guarantee that our model will always generate factual content. Therefore, caution must be exercised when the model is deployed in practical settings. Factuality is an open problem in existing generation models.

虽然我们的工作风险有限,类似于现有的最先进生成模型,但不能保证我们的模型始终生成真实内容。因此,在实际部署该模型时必须谨慎。真实性是现有生成模型中的一个开放性问题。

References

参考文献

Armen Aghajanyan, Dmytro Okhonko, Mike Lewis, Mandar Joshi, Hu Xu, Gargi Ghosh, and Luke Z ett le moyer. 2021. HTLM: hyper-text pre-training and prompting of language models. CoRR, abs/2107.06955.

Armen Aghajanyan、Dmytro Okhonko、Mike Lewis、Mandar Joshi、Hu Xu、Gargi Ghosh 和 Luke Zettlemoyer。2021。HTLM:超文本预训练与大语言模型提示技术。CoRR, abs/2107.06955。

Iz Beltagy, Matthew E. Peters, and Arman Cohan. 2020. Longformer: The long-document transformer. CoRR, abs/2004.05150.

Iz Beltagy、Matthew E. Peters 和 Arman Cohan。2020。Longformer: 长文档 Transformer。CoRR, abs/2004.05150。

Jonathan Bragg, Arman Cohan, Kyle Lo, and Iz Beltagy. 2021. Flex: Unifying evaluation for few-shot nlp. arXiv preprint arXiv:2107.07170.

Jonathan Bragg、Arman Cohan、Kyle Lo和Iz Beltagy。2021。Flex: 统一少样本NLP评估标准。arXiv预印本 arXiv:2107.07170。

Avi Caciularu, Arman Cohan, Iz Beltagy, Matthew Peters, Arie Cattan, and Ido Dagan. 2021. CDLM: Cross-document language modeling. In Findings of the Association for Computational Linguistics: EMNLP 2021, pages 2648–2662, Punta Cana, Do- minican Republic. Association for Computational Linguistics.

Avi Caciularu、Arman Cohan、Iz Beltagy、Matthew Peters、Arie Cattan 和 Ido Dagan。2021。CDLM: 跨文档语言建模。载于《计算语言学协会发现:EMNLP 2021》,第2648–2662页,多米尼加共和国蓬塔卡纳。计算语言学协会。

Janara Christensen, Mausam, Stephen Soderland, and Oren Etzioni. 2013. Towards coherent multi- document sum mari z ation. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 1163–1173, Atlanta, Georgia. Association for Computational Linguistics.

Janara Christensen、Mausam、Stephen Soderland 和 Oren Etzioni。2013. 面向连贯的多文档摘要生成。载于《2013年北美计算语言学协会人类语言技术会议论文集》(Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies),第1163–1173页,亚特兰大,佐治亚州。计算语言学协会。

Arman Cohan, Franck Dern on court, Doo Soon Kim, Trung Bui, Seokhwan Kim, Walter Chang, and Nazli Goharian. 2018. A discourse-aware attention model for abstract ive sum mari z ation of long documents. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), pages 615–621, New Orleans, Louisiana. Association for Computational Linguistics.

Arman Cohan、Franck Dernoncourt、Doo Soon Kim、Trung Bui、Seokhwan Kim、Walter Chang和Nazli Goharian。2018。面向长文档摘要生成的篇章感知注意力模型。载于《2018年北美计算语言学协会人类语言技术会议论文集(第二卷:短论文)》,第615-621页,美国路易斯安那州新奥尔良。计算语言学协会。

Hoa Trang Dang. 2005. Overview of duc 2005. In Proceedings of the document understanding conference, volume 2005, pages 1–12.

Hoa Trang Dang. 2005. DUC 2005综述. 见: 文档理解会议论文集, 第2005卷, 第1-12页.

Hoa Trang Dang and Karolina Owczarzak. 2008. Overview of the tac 2008 update sum mari z ation task. Theory and Applications of Categories.

Hoa Trang Dang和Karolina Owczarzak。2008。TAC 2008更新摘要任务概述。Theory and Applications of Categories。

Alexander Fabbri, Irene Li, Tianwei She, Suyi Li, and Dragomir Radev. 2019. Multi-news: A large-scale multi-document sum mari z ation dataset and abstractive hierarchical model. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 1074–1084, Florence, Italy. Association for Computational Linguistics.

Alexander Fabbri、Irene Li、Tianwei She、Suyi Li 和 Dragomir Radev。2019. Multi-News: 一个大规模多文档摘要数据集及抽象层次模型。在《第57届计算语言学协会年会论文集》中,第1074-1084页,意大利佛罗伦萨。计算语言学协会。

Sebastian Gehrmann, Yuntian Deng, and Alexander Rush. 2018. Bottom-up abstract ive sum mari z ation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 4098–4109, Brussels, Belgium. Association for Computational Linguistics.

Sebastian Gehrmann、Yuntian Deng 和 Alexander Rush。2018. 自底向上的抽象摘要生成。载于《2018年自然语言处理实证方法会议论文集》,第4098–4109页,比利时布鲁塞尔。计算语言学协会。

Demian Gholipour Ghalandari, Chris Hokamp, Nghia The Pham, John Glover, and Georgiana Ifrim. 2020. A large-scale multi-document sum mari z ation dataset from the Wikipedia current events portal. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 1302–1308, Online. Association for Computational Linguistics.

Demian Gholipour Ghalandari、Chris Hokamp、Nghia The Pham、John Glover和Georgiana Ifrim。2020。基于维基百科时事门户的大规模多文档摘要数据集。在《第58届计算语言学协会年会论文集》中,第1302–1308页,线上。计算语言学协会。

Travis Goodwin, Max Savery, and Dina DemnerFushman. 2020. Flight of the PEGASUS? comparing transformers on few-shot and zero-shot multidocument abstract ive sum mari z ation. In Proceedings of the 28th International Conference on Computational Linguistics, pages 5640–5646, Barcelona, Spain (Online). International Committee on Computational Linguistics.

Travis Goodwin、Max Savery 和 Dina DemnerFushman。2020. PEGASUS 的飞行?比较 Transformer 在少样本和零样本多文档摘要生成中的表现。载于《第 28 届国际计算语言学会议论文集》,第 5640–5646 页,西班牙巴塞罗那(在线)。国际计算语言学委员会。

Xiaotao Gu, Yuning Mao, Jiawei Han, Jialu Liu, You Wu, Cong Yu, Daniel Finnie, Hongkun Yu, Jiaqi Zhai, and Nicholas Zukoski. 2020. Generating