Combining EfficientNet and Vision Transformers for Video Deepfake Detection

Abstract. Deepfakes are the result of digital manipulation to forge realistic yet fake imagery. With the astonishing advances in deep generative models, fake images or videos are nowadays obtained using variational autoencoders (VAEs) or Generative Adversarial Networks (GANs). These technologies are becoming more accessible and accurate, resulting in fake videos that are very difficult to detect. Traditionally, Convolutional Neural Networks (CNNs) have been used to perform video deepfake detection, with the best results obtained using methods based on EfficientNet B7. In this study, we focus on video deepfake detection on faces, given that most methods are becoming extremely accurate in the generation of realistic human faces. Specifically, we combine various types of Vision Transformers with a convolutional EfficientNet B0 used as a feature extractor, obtaining results comparable to some very recent methods that use Vision Transformers. Unlike the state-of-the-art approaches, we use neither distillation nor ensemble methods. Furthermore, we present a straightforward inference procedure based on a simple voting scheme for handling multiple faces in the same video shot. The best model achieved an AUC of 0.951 and an F1 score of 88.0%, very close to the state-of-the-art on the DeepFake Detection Challenge (DFDC). The code for reproducing our results is publicly available here: https://github.com/davide-coccomini/Combining-EfficientNet-and-Vision-Transformers-for-Video-Deepfake-Detection

Keywords: Deep Fake Detection $\cdot$ Transformer Networks $\cdot$ Deep Learning

1 Introduction

With the recent advances in generative deep learning techniques, it is nowadays possible to forge highly realistic and credible misleading videos. These methods have been used to generate numerous fake news and revenge porn videos, becoming a severe problem in modern society [6]. These fake videos are known as deepfakes. Given the astonishing realism obtained by recent models in the generation of human faces, deepfakes are mainly obtained by transposing one person's face onto another's. The results are so realistic that it is almost as if the person being inserted were actually present in the video, and the replaced actors are made to say things they never actually said [38].

The evolution of deepfake generation techniques and their increasing accessibility force the research community to find effective methods to distinguish a manipulated video from a real one. At the same time, more and more models based on Transformers are gaining ground in the field of Computer Vision, showing excellent results in image processing [21,17], document retrieval [27], and efficient visual-textual matching [30,32], mainly for use in large-scale multi-modal retrieval systems [1,31].

In this paper, we analyze different solutions based on combinations of convolutional networks, particularly the EfficientNet B0, with different types of Vision Transformers and compare the results with the current state-of-the-art. Unlike Vision Transformers, CNNs retain an important architectural prior, spatial locality, which is crucial for discovering image patch abnormalities and maintaining good data efficiency. CNNs, in fact, have a long-established record of success on many tasks, ranging from image classification [13,40] and object detection [35,2,8] to abstract visual reasoning [28,29].

In this paper, we also propose a simple yet effective voting mechanism to perform inference on videos. We show that this approach can lead to better and more stable results.

2 Related Works

2.1 Deepfake Generation

There are mainly two generative approaches to obtain realistic faces: Generative Adversarial Networks (GANs) [15] and Variational Autoencoders (VAEs) [23].

GANs employ two distinct networks: the discriminator, which must identify whether a video is fake, and the generator, which modifies the video in a sufficiently credible way to deceive its counterpart. With GANs, very credible and realistic results have been obtained, and over time, numerous approaches have been introduced, such as StarGAN [7] and DiscoGAN [22]; the best results in this field have been obtained with StyleGAN-V2 [20].
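For reference, the adversarial game between the two networks is commonly written as the following minimax objective from the standard GAN formulation. The symbols $G$ (generator), $D$ (discriminator), $x$ (a real sample), and $z$ (a noise vector) are the conventional ones and are not notation used elsewhere in this paper:

$$\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$

The discriminator maximizes this value by scoring real samples high and generated samples low, while the generator minimizes it by producing samples that the discriminator scores as real.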

VAE-based solutions, instead, make use of a system consisting of two encoder-decoder pairs, each of which is trained to deconstruct and reconstruct one of the two faces to be exchanged. Subsequently, the decoding parts are switched, which allows the reconstruction of the target person's face. The best-known uses of this technique are DeepFaceLab [34], DFaker$^1$, and DeepFake-tf$^2$.

2.2 Deepfake Detection

The problem of deepfake detection is of widespread interest beyond the visual domain. For example, the recent work in [12] analyzes deepfakes in tweets to find and counter false content in social networks.

In an attempt to address the problem of deepfake detection in videos, numerous datasets have been produced over the years. These datasets are grouped into three generations: the first generation consists of DF-TIMIT [24], UADFV [41] and FaceForensics++ [36]; the second generation includes datasets such as the Google Deepfake Detection Dataset [11] and Celeb-DF [25]; and the third generation comprises the DFDC dataset [9] and DeeperForensics [19]. With each generation, the datasets grow larger and contain more frames.

In particular, on the DFDC dataset, which is the largest and most complete, multiple experiments were carried out to obtain an effective method for deepfake detection. Very good results were obtained with an ensemble of EfficientNet B7 models in [37]. Other noteworthy methods include the work in [33], which attempted to identify spatio-temporal anomalies by combining an EfficientNet with a Gated Recurrent Unit (GRU). Some efforts to capture spatio-temporal inconsistencies were made in [26] using 3D CNNs and in [3], which presented a method that exploits optical flow to detect video glitches. Some more classical methods have also been proposed to perform deepfake detection. In particular, the authors of [16] proposed a method based on K-nearest neighbors, while the work in [41] exploited SVMs. Of note is the very recent work of Giudice et al. [14], which presented an innovative method for identifying so-called GAN Specific Frequencies (GSF) that represent a unique fingerprint of different generative architectures. By exploiting the Discrete Cosine Transform (DCT), they manage to identify anomalous frequencies.

More recently, methods based on Vision Transformers have been proposed. Notably, the method presented in [39] obtained good results by combining Transformers with a convolutional network, used to extract patches from faces detected in videos.

The state of the art was recently improved by distilling an EfficientNet B7 pre-trained on the DFDC dataset into a Vision Transformer [18]. In this case, the Vision Transformer patches are combined with patches extracted from the pre-trained EfficientNet B7 via global pooling and then passed to the Transformer Encoder. A distillation token is then added to the Transformer network to transfer the knowledge acquired by the EfficientNet B7.

3 Method

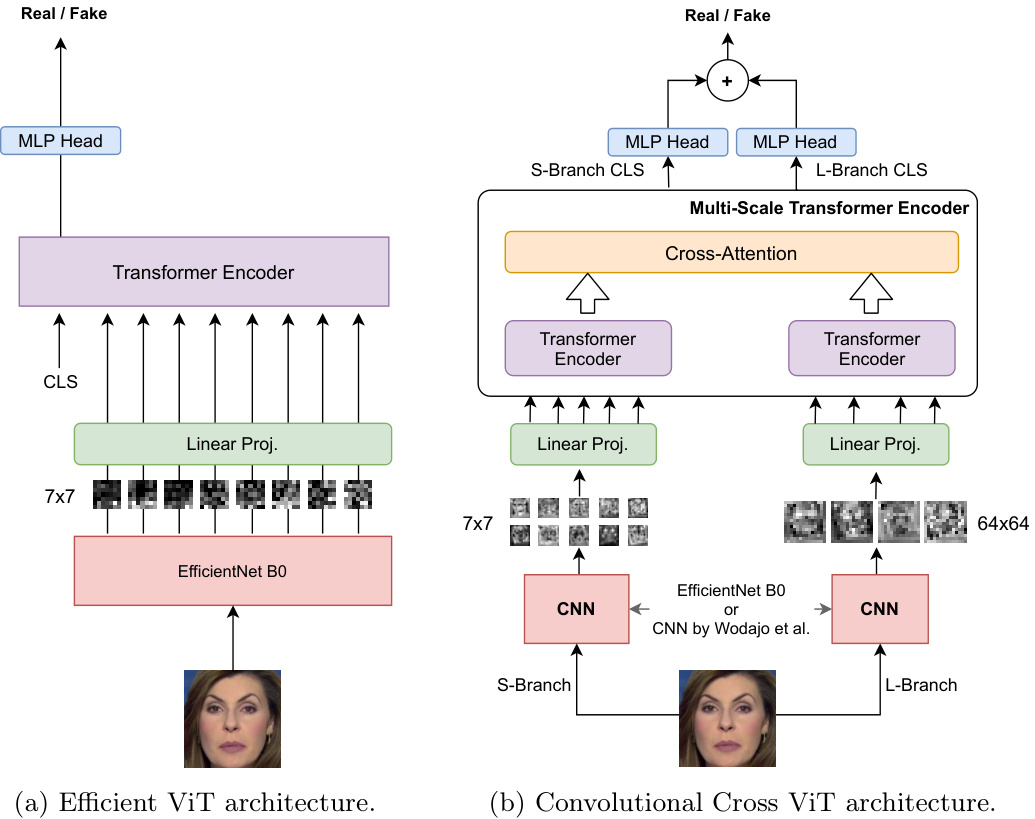

The proposed methods analyze the faces extracted from the source video to determine whether they have been manipulated. For this reason, faces are pre-extracted using a state-of-the-art face detector, MTCNN [42]. We propose two mixed convolutional-transformer architectures that take as input a pre-extracted face and output the probability that the face has been manipulated. The two presented architectures are trained in a supervised way to discern real from fake examples. We therefore frame the detection task as a binary classification problem. Specifically, we propose the Efficient ViT and the Convolutional Cross ViT, explained in more detail in the following paragraphs.

The proposed models are trained on a per-face basis and are then used at inference time to draw a conclusion on the whole video shot by aggregating the inferred outputs both over time and across multiple faces, as explained in Section 4.3.
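The aggregation step can be sketched as follows. Section 4.3 is not part of this excerpt, so the rule used here is only an assumption: per-frame scores are averaged for each face track, and the video is flagged as fake if any track's mean exceeds a threshold. The function name `classify_video`, the track identifiers, and the default threshold of 0.5 are hypothetical.

```python
from statistics import mean


def classify_video(face_scores, threshold=0.5):
    """Aggregate per-face, per-frame fake probabilities into one video verdict.

    face_scores maps a face-track id to the list of fake probabilities the
    model produced for that face over time. The rule below (mean over time,
    then "any track above threshold") is an assumed voting scheme, not
    necessarily the one described in Section 4.3.
    """
    # Aggregate in time: one mean score per face track (skip empty tracks).
    track_means = {
        track: mean(scores) for track, scores in face_scores.items() if scores
    }
    # Aggregate across faces: one manipulated face makes the video fake.
    is_fake = any(score > threshold for score in track_means.values())
    return is_fake, track_means


if __name__ == "__main__":
    scores = {"actor_a": [0.1, 0.2, 0.3], "actor_b": [0.9, 0.8, 0.7]}
    print(classify_video(scores))
```

Averaging over time smooths out isolated misclassified frames, while the per-track decision keeps a single manipulated face from being diluted by several untouched faces in the same shot.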

The Efficient ViT. The Efficient ViT is composed of two blocks: a convolutional module that works as a feature extractor, and a Transformer Encoder, in a setup very similar to the Vision Transformer (ViT) [10]. Considering the promising results of EfficientNet, we use an EfficientNet B0, the smallest of the EfficientNet networks, as the convolutional extractor for processing the input faces. Specifically, the EfficientNet produces a visual feature for each chunk of the input face. Each chunk is $7\times7$ pixels. After a linear projection, the feature from each spatial location is further processed by a Vision Transformer. The CLS token is used to produce the binary classification score. The architecture is illustrated in Figure 1a. The EfficientNet B0 feature extractor is initialized with pre-trained weights and fine-tuned so that the last layers of the network perform an extraction better suited to this specific downstream task. The features extracted by the EfficientNet B0 convolutional network simplify the training of the Vision Transformer, as the CNN features already embed important low-level and localized information from the image.
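As a rough illustration of how a convolutional feature map becomes a Transformer input sequence, the sketch below turns each spatial location into one token and prepends a CLS token. It is a simplified, assumption-laden example: the feature map is a plain nested list in channels-first layout, the row-major token order is an arbitrary choice, and the linear projection and the Transformer Encoder themselves are omitted. The name `feature_map_to_tokens` is hypothetical.

```python
def feature_map_to_tokens(feature_map, cls_token):
    """Flatten a [C][H][W] feature map into a token list with a leading CLS.

    Each spatial location (row, col) yields one token holding the C channel
    values at that location. The CLS token must have the same length C.
    """
    channels = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    if len(cls_token) != channels:
        raise ValueError("CLS token length must match the channel count")

    tokens = [list(cls_token)]  # position 0 is reserved for the CLS token
    for row in range(height):
        for col in range(width):
            tokens.append([feature_map[c][row][col] for c in range(channels)])
    return tokens


if __name__ == "__main__":
    # Toy map with 2 channels over a 2x3 grid; values encode (channel, row, col).
    fmap = [[[c * 100 + r * 10 + col for col in range(3)] for r in range(2)]
            for c in range(2)]
    tokens = feature_map_to_tokens(fmap, cls_token=[0, 0])
    print(len(tokens), tokens[1], tokens[-1])
```

After the encoder has processed such a sequence, only the output at position 0 (the CLS token) would be fed to the classification head.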

The Convolutional Cross ViT. Limiting the architecture to small patches only, as in the Efficient ViT, may not be the ideal choice, as artifacts introduced by deepfake generation methods may arise both locally and globally. For this reason, we also introduce the Convolutional Cross ViT architecture. The Convolutional Cross ViT builds upon both the Efficient ViT and the multi-scale Transformer architecture of [5]. In more detail, the Convolutional Cross ViT uses two distinct branches: the S-branch, which deals with smaller patches, and the L-branch, which works on larger patches to obtain a wider receptive field. The visual tokens output by the Transformer Encoders of the two branches are combined through cross-attention, allowing direct interaction between the two paths. Finally, the CLS tokens corresponding to the outputs of the two branches are used to produce two separate logits. These logits are summed, and a final sigmoid produces the final probability. A detailed overview of this architecture is shown in Fig. 1b. For the Convolutional Cross ViT, we use two different CNN backbones. The former is the EfficientNet B0, which processes $7\times7$ image patches for the S-branch and $54\times54$ for the L-branch. The latter is the CNN by Wodajo et al. [39], which handles $7\times7$ image patches for the S-branch and $64\times64$ for the L-branch.
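The final fusion step, in which the two branch logits are summed and passed through a sigmoid, can be sketched in a few lines. The function name `fuse_branch_logits` and its scalar inputs are illustrative assumptions; in a real model the logits would come from the heads attached to the two CLS tokens.

```python
import math


def fuse_branch_logits(s_logit, l_logit):
    """Sum the S-branch and L-branch logits and map the sum to a probability."""
    total = s_logit + l_logit
    # Numerically stable sigmoid: never exponentiate a large positive number.
    if total >= 0:
        return 1.0 / (1.0 + math.exp(-total))
    exp_total = math.exp(total)
    return exp_total / (1.0 + exp_total)


if __name__ == "__main__":
    print(fuse_branch_logits(1.5, 0.5))   # both branches lean towards "fake"
    print(fuse_branch_logits(2.0, -2.0))  # the branches cancel out
```

Summing logits rather than averaging probabilities lets a confident branch outweigh an uncertain one, since a logit near zero contributes little to the total.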

4 Experiments

We compared the presented architectures against some state-of-the-art methods on two widely used datasets. In particular, we considered Convolutional ViT [39], ViT with distillation [18], and Selim EfficientNet B7 [37], the winner of the DeepFake Detection Challenge (DFDC). Note that the results for Convolutional ViT [39] are not reported in the original paper; they were obtained by running the test code on the DFDC test set with the pre-trained model released by the authors.

Fig. 1: The proposed architectures. Notice that for the Convolutional Cross ViT in (b), we experimented with both EfficientNet B0 and the convolutional architecture of [39] as feature extractors.

4.1 Datasets and Face Extraction

We initially conducted some tests on FaceForensics++. The dataset is composed of original and fake videos generated through different deepfake generation techniques. For evaluation, we considered the videos generated in the Deepfakes, Face2Face, FaceShifter, FaceSwap and NeuralTextures sub-datasets. We also used the DFDC test set containing 5000 videos. The model trained on the entire training set, which includes fake videos of all considered methods of FaceForensics