$\infty$-former: Infinite Memory Transformer

Abstract

Transformers are unable to model long-term memories effectively, since the amount of computation they need to perform grows with the context length. While variations of efficient transformers have been proposed, they all have a finite memory capacity and are forced to drop old information. In this paper, we propose the $\infty$-former, which extends the vanilla transformer with an unbounded long-term memory. By making use of a continuous-space attention mechanism to attend over the long-term memory, the $\infty$-former’s attention complexity becomes independent of the context length, trading off memory length with precision. In order to control where precision is more important, the $\infty$-former maintains “sticky memories,” being able to model arbitrarily long contexts while keeping the computation budget fixed. Experiments on a synthetic sorting task, language modeling, and document-grounded dialogue generation demonstrate the $\infty$-former’s ability to retain information from long sequences.

1 Introduction

When reading or writing a document, it is important to keep in memory the information previously read or written. Humans have a remarkable ability to remember long-term context, keeping in memory the relevant details (Carroll, 2007; Kuhbandner, 2020). Recently, transformer-based language models have achieved impressive results by increasing the context size (Radford et al., 2018, 2019; Dai et al., 2019; Rae et al., 2019; Brown et al., 2020). However, while humans process information sequentially, updating their memories continuously, and recurrent neural networks (RNNs) update a single memory vector over time, transformers do not: they exhaustively query every representation associated with past events. Thus, the amount of computation they need to perform grows with the length of the context, and, consequently, transformers are computationally limited in how much information can fit into memory. For example, a vanilla transformer requires quadratic time to process an input sequence and linear time to attend to the context when generating every new word.

Several variations have been proposed to address this problem (Tay et al., 2020b). Some use sparse attention mechanisms, either with data-dependent patterns (Kitaev et al., 2020; Vyas et al., 2020; Tay et al., 2020a; Roy et al., 2021; Wang et al., 2021) or data-independent patterns (Child et al., 2019; Beltagy et al., 2020; Zaheer et al., 2020); others reduce the self-attention complexity (Katharopoulos et al., 2020; Choromanski et al., 2021; Peng et al., 2021; Jaegle et al., 2021); and others cache past representations in a memory (Dai et al., 2019; Rae et al., 2019). These models are able to reduce the attention complexity and, consequently, to scale up to longer contexts. However, as their complexity still depends on the context length, they cannot deal with unbounded context.

In this paper, we propose the $\infty$-former (infinite former; Fig. 1): a transformer model extended with an unbounded long-term memory (LTM), which allows the model to attend to arbitrarily long contexts. The key for making the LTM unbounded is a continuous-space attention framework (Martins et al., 2020) which trades off the number of information units that fit into memory (basis functions) with the granularity of their representations. In this framework, the input sequence is represented as a continuous signal, expressed as a linear combination of $N$ radial basis functions (RBFs). By doing this, the $\infty$-former’s attention complexity is $\mathcal{O}(L^{2}+L\times N)$ while the vanilla transformer’s is $\mathcal{O}(L\times(L+L_{\mathrm{LTM}}))$, where $L$ and $L_{\mathrm{LTM}}$ correspond to the transformer input size and the long-term memory length, respectively (details in §3.1.1). Thus, this representation comes with two significant advantages: (i) the context can be represented using a number of basis functions $N$ smaller than the number of tokens, reducing the attention computational cost; and (ii) $N$ can be fixed, making it possible to represent unbounded context in memory, as described in §3.2 and Fig. 2, without increasing its attention complexity. The price, of course, is a loss in resolution: using a smaller number of basis functions leads to lower precision when representing the input sequence as a continuous signal, as shown in Fig. 3.

To mitigate the problem of losing resolution, we introduce the concept of “sticky memories” (§3.3), in which we attribute a larger space in the LTM’s signal to regions of the memory that are accessed more frequently. This creates a notion of “permanence” in the LTM, allowing the model to better capture long contexts without losing the relevant information. The idea takes inspiration from long-term potentiation and plasticity in the brain (Mills et al., 2014; Bamji, 2005).

To sum up, our contributions are the following:

• We propose the $\infty$-former, in which we extend the transformer model with a continuous long-term memory (§3.1). Since the attention computational complexity is independent of the context length, the $\infty$-former is able to model long contexts.

• We propose a procedure that allows the model to keep unbounded context in memory (§3.2).

• We introduce sticky memories, a procedure that enforces the persistence of important information in the LTM (§3.3).

• We perform empirical comparisons in a synthetic task (§4.1), which considers increasingly long sequences, in language modeling (§4.2), and in document-grounded dialogue generation (§4.3). These experiments show the benefits of using an unbounded memory.

2 Background

2.1 Transformer

A transformer (Vaswani et al., 2017) is composed of several layers, each of which encompasses a multi-head self-attention layer followed by a feed-forward layer, along with residual connections (He et al., 2016) and layer normalization (Ba et al., 2016).

Let us denote the input sequence as $\boldsymbol{X}=[x_{1},\dots,x_{L}]\in\mathbb{R}^{L\times e}$, where $L$ is the input size and $e$ is the embedding size of the attention layer. The queries $Q$, keys $K$, and values $V$ to be used in the multi-head self-attention computation are obtained by linearly projecting the input, or the output of the previous layer, $X$, for each attention head $h$:

$$

Q_{h}=X_{h}W^{Q_{h}},\quad K_{h}=X_{h}W^{K_{h}},\quad V_{h}=X_{h}W^{V_{h}},

$$

where $W^{Q_{h}},W^{K_{h}},W^{V_{h}}\in\mathbb{R}^{d\times d}$ are learnable projection matrices, $d=e/H$, and $H$ is the number of heads. Then, the context representation $Z_{h}\in\mathbb{R}^{L\times d}$ that corresponds to each attention head $h$ is obtained as:

$$

Z_{h}=\mathrm{softmax}\left(\frac{Q_{h}K_{h}^{\top}}{\sqrt{d}}\right)V_{h},

$$

where the softmax is performed row-wise. The head context representations are concatenated to obtain the final context representation $\boldsymbol{Z}\in\mathbb{R}^{L\times e}$:

$$

Z=[Z_{1},\dots,Z_{H}]W^{R},

$$

where $W^{R}\in\mathbb{R}^{e\times e}$ is another projection matrix that aggregates the representations of all heads.

2.2 Continuous Attention

Continuous attention mechanisms (Martins et al., 2020) have been proposed to handle arbitrary continuous signals, where the attention probability mass function over words is replaced by a probability density over a signal. This allows time intervals or compact segments to be selected.

To perform continuous attention, the first step is to transform the discrete text sequence represented by $X\in\mathbb{R}^{L\times e}$ into a continuous signal. This is done by expressing it as a linear combination of basis functions. To do so, each $x_{i}$, with $i\in\{1,\ldots,L\}$, is first associated with a position in an interval, $t_{i}\in[0,1]$, e.g., by setting $t_{i}=i/L$. Then, we obtain a continuous-space representation $\bar{X}(t)\in\mathbb{R}^{e}$, for any $t\in[0,1]$, as:

$$

\bar{\boldsymbol{X}}(t)=\boldsymbol{B}^{\top}\boldsymbol{\psi}(t),

$$

where $\psi(t)\in\mathbb{R}^{N}$ is a vector of $N$ RBFs, e.g., $\psi_{j}(t) = \mathcal{N}(t;\mu_{j},\sigma_{j})$ , with $\mu_{j} \in~[0,1]$ , and $\boldsymbol{B}\in\mathbb{R}^{N\times e}$ is a coefficient matrix. $B$ is obtained with multivariate ridge regression (Brown et al., 1980) so that $\bar{X}(t_{i})\approx x_{i}$ for each $i\in[L]$ , which leads to the closed form (see App. A for details):

$$

\boldsymbol{B}^{\intercal}=\boldsymbol{X}^{\intercal}\boldsymbol{F}^{\intercal}(\boldsymbol{F}\boldsymbol{F}^{\intercal}+\lambda\boldsymbol{I})^{-1}=\boldsymbol{X}^{\intercal}\boldsymbol{G},

$$

where $F=[\psi(t_{1}),\dots,\psi(t_{L})]\in\mathbb{R}^{N\times L}$ packs the basis vectors for the $L$ locations. As $G\in\mathbb{R}^{L\times N}$ depends only on $F$, it can be computed offline.
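As a rough illustration of the closed form above, the following NumPy sketch fits the coefficient matrix $B$ for a toy sequence. The sizes, the evenly spaced RBF centres, the shared width `sigma` (treated here as a standard deviation), and the value of `lam` are assumptions made for this example, not settings taken from the paper; `rbf_basis` and `regression_matrix` are hypothetical helper names.

```python
import numpy as np

def rbf_basis(t, mu, sigma):
    """Evaluate N Gaussian RBFs at the positions t; returns F of shape (N, len(t))."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    mu = np.asarray(mu, dtype=float)[:, None]
    sigma = np.asarray(sigma, dtype=float)[:, None]
    return np.exp(-0.5 * ((t[None, :] - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))

def regression_matrix(F, lam):
    """G = F^T (F F^T + lam I)^{-1}, shape (L, N); it depends only on F."""
    N = F.shape[0]
    return F.T @ np.linalg.inv(F @ F.T + lam * np.eye(N))

# Hypothetical sizes and hyperparameters, chosen only for illustration.
L, e, N, lam = 64, 8, 16, 1e-3
rng = np.random.default_rng(0)
t = np.arange(1, L + 1) / L            # positions t_i = i / L
mu = np.linspace(0.0, 1.0, N)          # RBF centres in [0, 1]
sigma = np.full(N, 0.05)               # one shared width (assumption)

F = rbf_basis(t, mu, sigma)            # (N, L)
G = regression_matrix(F, lam)          # (L, N), reusable for any X of length L
X = np.sin(2.0 * np.pi * t)[:, None] * rng.normal(size=(1, e))  # toy sequence, (L, e)
B = (X.T @ G).T                        # (N, e), i.e. B^T = X^T G
X_bar = (B.T @ F).T                    # signal evaluated back at the t_i, (L, e)
```

Since `G` is a function of the positions and basis functions only, the per-sequence cost reduces to the single matrix product `X.T @ G`.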

Having converted the input sequence into a continuous signal ${\bar{X}}(t)$ , the second step is to attend over this signal. To do so, instead of having a discrete probability distribution over the input sequence as in standard attention mechanisms (like in Eq. 2), we have a probability density $p$ , which can be a Gaussian $\mathcal{N}(t;\mu,\sigma^{2})$ , where $\mu$ and $\sigma^{2}$ are computed by a neural component. A unimodal Gaussian distribution encourages each attention head to attend to a single region, as opposed to scattering its attention through many places, and enables tractable computation. Finally, having $p$ , we can compute the context vector $c$ as:

$$

c=\mathbb{E}_{p}\left[\bar{X}(t)\right].

$$
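The expectation above has a simple closed form when both the density and the basis functions are Gaussian. The sketch below assumes $\psi_j(t)=\mathcal{N}(t;\mu_j,\sigma_j^2)$ with `sigma_j` as a standard deviation, and it integrates over the whole real line rather than over $[0,1]$, which is a simplification of this example. Under those assumptions $\mathbb{E}_p[\psi_j(t)]=\mathcal{N}(\mu;\mu_j,\sigma^2+\sigma_j^2)$, and by linearity $c=B^{\top}\mathbb{E}_p[\psi(t)]$. The function names and toy values are hypothetical.

```python
import numpy as np

def expected_basis(mu, var, mu_j, sigma_j):
    """E_{t ~ N(mu, var)}[psi_j(t)] for psi_j(t) = N(t; mu_j, sigma_j^2).

    Uses the Gaussian product identity, integrating over the whole real line.
    """
    total_var = var + sigma_j ** 2
    return np.exp(-0.5 * (mu - mu_j) ** 2 / total_var) / np.sqrt(2.0 * np.pi * total_var)

def context_vector(B, mu, var, mu_j, sigma_j):
    """c = E_p[X_bar(t)] = B^T E_p[psi(t)], with B of shape (N, e)."""
    return B.T @ expected_basis(mu, var, mu_j, sigma_j)

# Toy coefficients and attention parameters (illustrative values only).
N, e = 16, 4
rng = np.random.default_rng(0)
B = rng.normal(size=(N, e))
mu_j = np.linspace(0.0, 1.0, N)
sigma_j = np.full(N, 0.05)
c = context_vector(B, mu=0.3, var=0.01, mu_j=mu_j, sigma_j=sigma_j)  # shape (e,)
```

No sampling or numerical integration is needed: the cost of one context vector is a single product with the $N\times e$ coefficient matrix.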

Martins et al. (2020) introduced the continuous attention framework for RNNs. In the following section (§3.1), we will explain how it can be used for transformer multi-head attention.

3 Infinite Memory Transformer

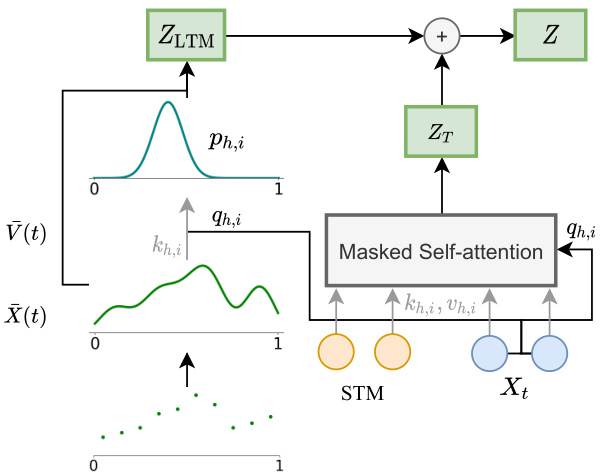

To allow the model to access long-range context, we propose extending the vanilla transformer with a continuous LTM, which stores the input embeddings and hidden states of the previous steps. We also consider the possibility of having two memories: the LTM and a short-term memory (STM), which consists in an extension of the transformer’s hidden states and is attended to by the transformer’s self-attention, as in the transformer-XL (Dai et al., 2019). A diagram of the model is shown in Fig. 1.

为了让模型能够访问长距离上下文,我们提出用连续长时记忆 (LTM) 扩展基础 Transformer,该模块存储了先前步骤的输入嵌入和隐藏状态。我们还考虑采用双记忆机制的可能性:长时记忆 (LTM) 和短时记忆 (STM),其中短时记忆通过扩展 Transformer 的隐藏状态实现,并像 Transformer-XL (Dai et al., 2019) 那样参与 Transformer 的自注意力计算。模型结构如图 1 所示。

3.1 Long-term Memory

3.1 长期记忆

For simplicity, let us first assume that the longterm memory contains an explicit input discrete sequence $X$ that consists of the past text sequence’s input embeddings or hidden states,2 depending on the layer3 (we will later extend this idea to an unbounded memory in $\S3.2)$ .

为简化问题,我们首先假设长期记忆存储着一个显式的离散输入序列$X$,该序列由过往文本序列的输入嵌入或隐藏状态组成(具体取决于网络层,我们将在$\S3.2$节将此概念扩展至无界内存)。

First, we need to transform $X$ into a continuous approximation ${\bar{X}}(t)$ . We compute ${\bar{X}}(t)$ as:

首先,我们需要将 $X$ 转换为连续近似 ${\bar{X}}(t)$ 。计算 ${\bar{X}}(t)$ 的公式为:

$$

\bar{\boldsymbol{X}}(t)=\boldsymbol{B}^{\top}\boldsymbol{\psi}(t),

$$

$$

\bar{\boldsymbol{X}}(t)=\boldsymbol{B}^{\top}\boldsymbol{\psi}(t),

$$

where $\psi(t)\in\mathbb{R}^{N}$ are basis functions and coefficients $\boldsymbol{B}\in\mathbb{R}^{N\times e}$ are computed as in Eq. 5,

其中 $\psi(t)\in\mathbb{R}^{N}$ 是基函数,系数 $\boldsymbol{B}\in\mathbb{R}^{N\times e}$ 按式5计算,

Figure $1\colon\infty$ -former’s attention diagram with sequence of text, $X_{t}$ , of size $L=2$ and STM of size $L_{\mathrm{STM}}=$ 2. Circles represent input embeddings or hidden states (depending on the layer) for head $h$ and query $i$ . Both the self-attention and the attention over the LTM are performed in parallel for each head and query.

图 $1\colon\infty$ -former 在文本序列 $X_{t}$ (长度为 $L=2$) 和 STM (长度为 $L_{\mathrm{STM}}=2$) 情况下的注意力机制示意图。圆圈表示头 $h$ 查询 $i$ 对应的输入嵌入或隐藏状态(具体取决于网络层)。每个头和查询都会并行执行自注意力机制和 LTM 注意力计算。

$B^{\top}=X^{\top}G$ . Then, we can compute the LTM keys, $K_{h}\in\mathbb{R}^{N\times d}$ , and values, $V_{h}\in\mathbb{R}^{N\times d}$ , for each attention head $h$ , as:

$B^{\top}=X^{\top}G$ 。接着,我们可以计算每个注意力头 $h$ 的长时记忆键 $K_{h}\in\mathbb{R}^{N\times d}$ 和值 $V_{h}\in\mathbb{R}^{N\times d}$ ,公式如下:

$$

K_{h}=B_{h}W^{K_{h}},\quad V_{h}=B_{h}W^{V_{h}},

$$

where $W^{K_{h}},W^{V_{h}}\in\mathbb{R}^{d\times d}$ are learnable projection matrices. For each query $q_{h,i}$, with $i\in\{1,\ldots,L\}$, we use a parameterized network, which takes as input the attention scores, to compute $\mu_{h,i}\in\,]0,1[$ and $\sigma_{h,i}^{2}\in\mathbb{R}_{>0}$:

$$

\begin{array}{r l}\mu_{h,i}&=\mathrm{sigmoid}\left(\mathrm{affine}\left(\frac{K_{h}q_{h,i}}{\sqrt{d}}\right)\right),\\ \sigma_{h,i}^{2}&=\mathrm{softplus}\left(\mathrm{affine}\left(\frac{K_{h}q_{h,i}}{\sqrt{d}}\right)\right).\end{array}

$$

Then, using the continuous softmax transformation (Martins et al., 2020), we obtain the probability density $p_{h,i}$ as $\mathcal{N}(t;\mu_{h,i},\sigma_{h,i}^{2})$ .

Finally, having the value function $\bar{V}_{h}(t)$ given as $\bar{V}_{h}(t)=V_{h}^{\top}\psi(t)$, we compute the head-specific representation vectors as in Eq. 6:

$$

z_{h,i}=\mathbb{E}_{p_{h,i}}\left[\bar{V}_{h}\right]=V_{h}^{\top}\mathbb{E}_{p_{h,i}}\left[\psi(t)\right],

$$

which form the rows of matrix $Z_{\mathrm{LTM},h}\in\mathbb{R}^{L\times d}$ that goes through an affine transformation, $Z_{\mathrm{LTM}}=[Z_{\mathrm{LTM},1},\dots,Z_{\mathrm{LTM},H}]W^{O}$ .

The long-term representation, $Z_{\mathrm{LTM}}$, is then added to the transformer context vector, $Z_{\mathrm{T}}$, to obtain the final context representation $\boldsymbol{Z}\in\mathbb{R}^{L\times e}$:

$$

Z=Z_{\mathrm{T}}+Z_{\mathrm{LTM}},

$$

which will be the input to the feed-forward layer.
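The per-head LTM attention described in this subsection can be sketched as follows. This is only an illustration under stated assumptions: each `affine` map is taken to be a single linear layer from the $N$ attention scores to one scalar (the parameters `w_mu`, `b_mu`, `w_var`, `b_var` are hypothetical), the basis functions are Gaussian with standard deviations `sigma_j`, and the expectation is computed over the whole real line. `B_h` stands for the per-head slice of the coefficient matrix, of shape $N\times d$.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softplus(x):
    return np.logaddexp(0.0, x)

def ltm_head_attention(Q, B_h, W_k, W_v, w_mu, b_mu, w_var, b_var, mu_j, sigma_j):
    """Continuous attention of L queries over the LTM for one head.

    Q: (L, d) queries; B_h: (N, d) basis coefficients for this head.
    Returns Z_LTM,h of shape (L, d) plus the Gaussian parameters per query.
    """
    d = Q.shape[1]
    K = B_h @ W_k                               # (N, d) LTM keys
    V = B_h @ W_v                               # (N, d) LTM values
    scores = Q @ K.T / np.sqrt(d)               # (L, N); row i is K q_i / sqrt(d)
    mu = sigmoid(scores @ w_mu + b_mu)          # (L,), each in ]0, 1[
    var = softplus(scores @ w_var + b_var)      # (L,), each > 0
    # E_p[psi_j(t)] for Gaussian p and Gaussian psi_j, per query and basis function.
    total_var = var[:, None] + sigma_j[None, :] ** 2
    exp_psi = np.exp(-0.5 * (mu[:, None] - mu_j[None, :]) ** 2 / total_var)
    exp_psi /= np.sqrt(2.0 * np.pi * total_var)
    return exp_psi @ V, mu, var                 # rows are z_{h,i} = V^T E_p[psi]

# Toy shapes and random parameters (illustrative only).
L, N, d = 5, 16, 4
rng = np.random.default_rng(0)
Q, B_h = rng.normal(size=(L, d)), rng.normal(size=(N, d))
W_k, W_v = rng.normal(size=(d, d)), rng.normal(size=(d, d))
w_mu, w_var = 0.1 * rng.normal(size=N), 0.1 * rng.normal(size=N)
mu_j, sigma_j = np.linspace(0.0, 1.0, N), np.full(N, 0.05)
Z, mu, var = ltm_head_attention(Q, B_h, W_k, W_v, w_mu, 0.0, w_var, 0.0, mu_j, sigma_j)
```

The score matrix has shape $L\times N$ regardless of how much context the LTM summarizes, which is what the complexity discussion in §3.1.1 relies on.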

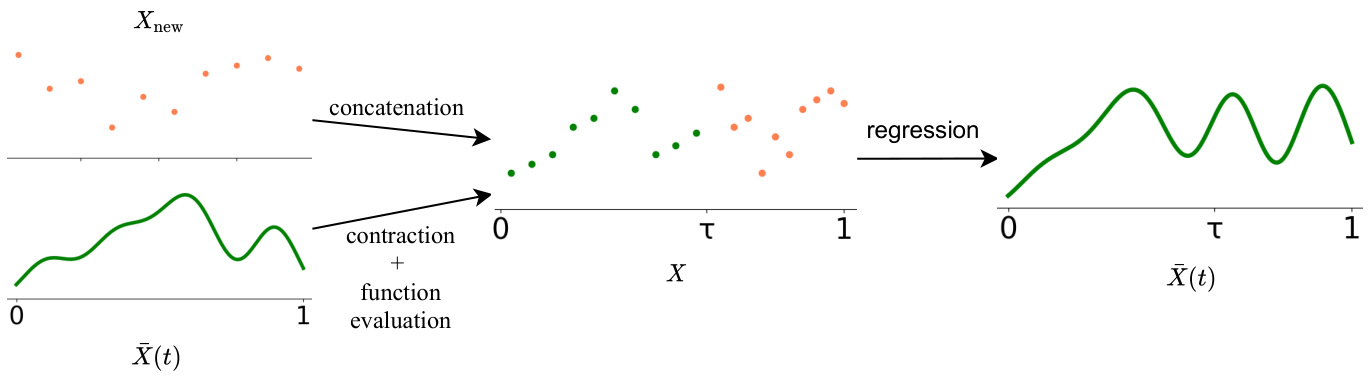

Figure 2: Diagram of the unbounded memory update procedure. This is performed in parallel for each embedding dimension, and repeated throughout the input sequence. We propose two alternatives to select the positions used for the function evaluation: linearly spaced or sticky memories.

3.1.1 Attention Complexity

As the $\infty$-former makes use of a continuous-space attention framework (Martins et al., 2020) to attend over the LTM signal, the size of its key matrix, $K_{h}\in\mathbb{R}^{N\times d}$, depends only on the number of basis functions $N$, and not on the length of the context being attended to. Thus, the $\infty$-former’s attention complexity is also independent of the context’s length. It corresponds to $\mathcal{O}(L\times(L+L_{\mathrm{STM}})+L\times N)$ when also using a short-term memory and $\mathcal{O}(L^{2}+L\times N)$ when only using the LTM, both $\ll\mathcal{O}(L\times(L+L_{\mathrm{LTM}}))$, which would be the complexity of a vanilla transformer attending to the same context. For this reason, the $\infty$-former can attend to arbitrarily long contexts without increasing the amount of computation needed.
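To make the comparison concrete, the sketch below counts query-key score evaluations per head and layer, dropping constant factors. The function names are hypothetical, and the sizes plugged in are the ones reported for the sorting experiments in §4.1 ($L=1{,}024$, STM of 1,024, $N=1{,}024$), used here only as sample inputs.

```python
def vanilla_cost(L, L_ltm):
    """Score evaluations for a vanilla transformer attending to L_ltm past tokens."""
    return L * (L + L_ltm)

def inf_former_cost(L, N, L_stm=0):
    """Score evaluations for the infinity-former: self-attention (+STM) plus N basis functions."""
    return L * (L + L_stm) + L * N

# The infinity-former cost does not change as the attended context grows.
fixed = inf_former_cost(L=1024, N=1024, L_stm=1024)
for context in (4_000, 8_000, 16_000):
    print(context, vanilla_cost(1024, context), fixed)
```

The vanilla count grows linearly with the attended context, while the $\infty$-former count is determined by $L$, $L_{\mathrm{STM}}$, and $N$ alone.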

3.2 Unbounded Memory

When representing the memory as a discrete sequence, extending it requires storing the new hidden states in memory. In a vanilla transformer, this is not feasible for long contexts due to the high memory requirements. However, the $\infty$-former can attend to unbounded context without increasing memory requirements by using continuous attention, as described next and shown in Fig. 2.

To be able to build an unbounded representation, we first sample $M$ locations in $[0,1]$ and evaluate $\bar{X}(t)$ at those locations. These locations can simply be linearly spaced, or sampled according to the region importance, as described in §3.3.

Then, we concatenate the corresponding vectors with the new vectors coming from the short-term memory. For that, we first need to contract this function by a factor of $\tau\in]0,1[$ to make room for the new vectors. We do this by defining:

$$

\bar{X}^{\mathrm{contracted}}(t)=\bar{X}(t/\tau)=B^{\top}\psi(t/\tau).

$$

Then, we can evaluate ${\bar{X}}(t)$ at the $M$ locations $0\leq t_{1},t_{2},\dots,t_{M}\leq\tau$ as:

$$

x_{m}=B^{\top}\psi(t_{m}/\tau),\quad{\mathrm{for~}}m\in[M],

$$

and define a matrix $X_{\mathrm{past}}=[x_{1},x_{2},\ldots,x_{M}]^{\top}\in\mathbb{R}^{M\times e}$ with these vectors as rows. After that, we concatenate this matrix with the new vectors $X_{\mathrm{new}}$, obtaining:

$$

X=\left[X_{\mathrm{past}}^{\top},X_{\mathrm{new}}^{\top}\right]^{\top}\in\mathbb{R}^{(M+L)\times e}.

$$

Finally, we simply need to perform multivariate ridge regression to compute the new coefficient matrix $\boldsymbol{B}\in\mathbb{R}^{N\times e}$, via $B^{\top}=X^{\top}G$, as in Eq. 5. To do this, we need to associate the vectors in $X_{\mathrm{past}}$ with positions in $[0,\tau]$ and those in $X_{\mathrm{new}}$ with positions in $]\tau,1]$, so that we obtain the matrix $G\in\mathbb{R}^{(M+L)\times N}$. We consider the vector positions to be linearly spaced.
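One update of the unbounded memory (contract, evaluate at $M$ locations, concatenate, refit) might look like the sketch below. It uses linearly spaced locations, Gaussian RBFs with a shared width, and illustrative values for `tau`, `M`, and `lam`; all of these, along with the helper names, are assumptions of the example rather than the paper's configuration.

```python
import numpy as np

def rbf_basis(t, mu, sigma):
    """Gaussian RBFs evaluated at positions t; returns shape (N, len(t))."""
    t = np.asarray(t, dtype=float)
    return np.exp(-0.5 * ((t[None, :] - mu[:, None]) / sigma[:, None]) ** 2) / (
        sigma[:, None] * np.sqrt(2.0 * np.pi))

def update_ltm(B, X_new, mu, sigma, tau=0.5, M=32, lam=1e-3):
    """Return new coefficients B after absorbing X_new (L, e) into the memory."""
    L = X_new.shape[0]
    # Evaluate the contracted signal at t_m in [0, tau]: x_m = B^T psi(t_m / tau).
    t_past = np.linspace(0.0, tau, M)
    X_past = (B.T @ rbf_basis(t_past / tau, mu, sigma)).T       # (M, e)
    # New vectors get linearly spaced positions in ]tau, 1].
    t_new = tau + (1.0 - tau) * np.arange(1, L + 1) / L
    X = np.concatenate([X_past, X_new], axis=0)                 # (M + L, e)
    F = rbf_basis(np.concatenate([t_past, t_new]), mu, sigma)   # (N, M + L)
    G = F.T @ np.linalg.inv(F @ F.T + lam * np.eye(len(mu)))    # (M + L, N)
    return (X.T @ G).T                                          # (N, e)

# Feed several chunks; the memory stays at N x e no matter how many arrive.
N, e, L = 16, 4, 32
mu, sigma = np.linspace(0.0, 1.0, N), np.full(N, 0.05)
rng = np.random.default_rng(0)
B = np.zeros((N, e))                    # start from an empty memory
for _ in range(4):
    B = update_ltm(B, rng.normal(size=(L, e)), mu, sigma)
```

Each call compresses everything seen so far into the first fraction `tau` of the interval, so older content is represented at progressively lower resolution.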

3.3 Sticky Memories

When extending the LTM, we evaluate its current signal at $M$ locations in $[0,1]$, as shown in Eq. 14. These locations can be linearly spaced. However, some regions of the signal can be more relevant than others, and should consequently be given a larger “memory space” in the next step LTM’s signal. To take this into account, we propose sampling the $M$ locations according to the signal’s relevance at each region (see Fig. 6 in App. B). To do so, we construct a histogram based on the attention given to each interval of the signal on the previous step. For that, we first divide the signal into $D$ linearly spaced bins $\{d_{1},\ldots,d_{D}\}$. Then, we compute the probability given to each bin, $p(d_{j})$ for $j\in\{1,\ldots,D\}$, as:

$$

p(d_{j})\propto\sum_{h=1}^{H}\sum_{i=1}^{L}\int_{d_{j}}\mathcal{N}(t;\mu_{h,i},\sigma_{h,i}^{2})~d t,

$$

where $H$ is the number of attention heads and $L$ is the sequence length. Note that Eq. 16’s integral can be evaluated efficiently using the erf function:

$$

\int_{a}^{b}\mathcal{N}(t;\mu,\sigma^{2})\,dt=\frac{1}{2}\left(\mathrm{erf}\left(\frac{b-\mu}{\sigma\sqrt{2}}\right)-\mathrm{erf}\left(\frac{a-\mu}{\sigma\sqrt{2}}\right)\right).

$$

Then, we sample the $M$ locations at which the LTM’s signal is evaluated according to $p$. By doing so, we evaluate the LTM’s signal at the regions that were considered more relevant by the previous step’s attention and, consequently, attribute a larger space in the new LTM’s signal to the memories stored in those regions.
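The histogram-and-sample procedure can be sketched with the standard library alone. The inputs `mus` and `variances` stand for the attention parameters $\mu_{h,i}$ and $\sigma_{h,i}^{2}$ flattened over heads and queries. Drawing each location uniformly inside its sampled bin is an assumption of this example, since the text does not say how a location is placed within a bin; the function names are hypothetical.

```python
import math
import random

def gaussian_mass(a, b, mu, var):
    """Integral of N(t; mu, var) over [a, b], computed with the erf function."""
    s = math.sqrt(2.0 * var)
    return 0.5 * (math.erf((b - mu) / s) - math.erf((a - mu) / s))

def bin_probabilities(mus, variances, D):
    """Normalized attention mass in each of D linearly spaced bins of [0, 1]."""
    edges = [j / D for j in range(D + 1)]
    mass = [
        sum(gaussian_mass(edges[j], edges[j + 1], m, v) for m, v in zip(mus, variances))
        for j in range(D)
    ]
    total = sum(mass)
    return [m / total for m in mass]

def sample_locations(probs, M, rng):
    """Draw M locations in [0, 1]: pick a bin by its probability, then a point inside it."""
    D = len(probs)
    bins = rng.choices(range(D), weights=probs, k=M)
    return sorted((j + rng.random()) / D for j in bins)

# Two queries attend around t = 0.25 and one around t = 0.85 (toy values).
probs = bin_probabilities([0.25, 0.25, 0.85], [0.001, 0.001, 0.001], D=10)
locations = sample_locations(probs, M=20, rng=random.Random(0))
```

Regions that received more attention are sampled more densely, so after the refit they occupy a larger share of the contracted signal.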

3.4 Implementation and Learning Details

Discrete sequences can be highly irregular and, consequently, difficult to convert into a continuous signal through regression. Because of this, before applying multivariate ridge regression to convert the discrete sequence $X$ into a continuous signal, we use a simple convolutional layer (with stride $=1$ and width $=3$) as a gate to smooth the sequence:

$$

\tilde{X}=\mathrm{sigmoid}\left(\mathrm{CNN}(X)\right)\odot X.

$$
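A minimal NumPy sketch of such a gate is given below. The filter layout `W` of shape `(3, e, e)`, the bias `b`, and the zero padding that keeps the sequence length unchanged are assumptions of this example; the text only fixes the stride and the width.

```python
import numpy as np

def smoothing_gate(X, W, b):
    """X_tilde = sigmoid(CNN(X)) * X with a width-3, stride-1 convolution over positions.

    X: (L, e) sequence; W: (3, e, e) filter taps; b: (e,) bias.
    Zero padding on both ends keeps the output length equal to L.
    """
    L = X.shape[0]
    X_pad = np.pad(X, ((1, 1), (0, 0)))
    conv = sum(X_pad[k:k + L] @ W[k] for k in range(3)) + b
    gate = 1.0 / (1.0 + np.exp(-conv))      # elementwise gate in (0, 1)
    return gate * X

# Toy input with random filter weights (illustrative only).
rng = np.random.default_rng(0)
X = rng.normal(size=(10, 4))
X_tilde = smoothing_gate(X, 0.1 * rng.normal(size=(3, 4, 4)), np.zeros(4))
```

Because the gate lies strictly between 0 and 1, every entry of the gated sequence has a magnitude no larger than the corresponding entry of the input.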

To train the model we use the cross-entropy loss. Given a sequence of text $X$ of length $L$ as input, a language model outputs a probability distribution over the next word, $p(x_{t+1}\mid x_{t},\ldots,x_{t-L})$. Given a corpus of $T$ tokens, we train the model to minimize its negative log-likelihood:

$$

\mathcal{L}_{\mathrm{NLL}}=-\sum_{t=0}^{T-1}\log p(x_{t+1}\mid x_{t},\ldots,x_{t-L}).

$$

Additionally, in order to avoid having uniform distributions over the LTM, we regularize the continuous attention given to the LTM by minimizing the Kullback-Leibler (KL) divergence, $D_{\mathrm{KL}}$, between the attention probability density, $\mathcal{N}(\mu_{h},\sigma_{h}^{2})$, and a Gaussian prior, $\mathcal{N}(\mu_{0},\sigma_{0}^{2})$. As different heads can attend to different regions, we set $\mu_{0}=\mu_{h}$, regularizing only the attention variance, and get:

$$

\begin{aligned}\mathcal{L}_{\mathrm{KL}}&=\sum_{t=0}^{T-1}\sum_{h=1}^{H}D_{\mathrm{KL}}\left(\mathcal{N}(\mu_{h},\sigma_{h}^{2})\,\|\,\mathcal{N}(\mu_{h},\sigma_{0}^{2})\right)\\&=\sum_{t=0}^{T-1}\sum_{h=1}^{H}\frac{1}{2}\left(\frac{\sigma_{h}^{2}}{\sigma_{0}^{2}}-\log\left(\frac{\sigma_{h}^{2}}{\sigma_{0}^{2}}\right)-1\right).\end{aligned}

$$

Thus, the final loss that is minimized corresponds to:

$$

\mathcal{L}=\mathcal{L}_{\mathrm{NLL}}+\lambda_{\mathrm{KL}}\mathcal{L}_{\mathrm{KL}},

$$

where $\lambda_{\mathrm{KL}}$ is a hyperparameter that controls the amount of KL regularization.

4 Experiments

To understand if the $\infty$-former is able to model long contexts, we first performed experiments on a synthetic task, which consists of sorting tokens by their frequencies in a long sequence (§4.1). Then, we performed experiments on language modeling (§4.2) and document-grounded dialogue generation (§4.3) by fine-tuning a pre-trained language model.

4.1 Sorting

In this task, the input consists of a sequence of tokens sampled according to a token probability distribution (which is not known to the system). The goal is to generate the tokens in decreasing order of their frequencies in the sequence. One example can be:

To understand whether the long-term memory is being used effectively, rather than the transformer sorting only by modeling the most recent tokens, we design the token probability distribution to change over time: namely, we set it as a mixture of two distributions, $p=\alpha p_{0}+(1-\alpha)p_{1}$, where the mixture coefficient $\alpha\in[0,1]$ is progressively increased from 0 to 1 as the sequence is generated. The vocabulary has 20 tokens and we experiment with sequences of length 4,000, 8,000, and 16,000.
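A data generator for this task could look like the following sketch. The linear schedule for $\alpha$, the way $p_0$ and $p_1$ are drawn, and breaking frequency ties by token id are assumptions of the example; the text only states that $\alpha$ increases progressively from 0 to 1. The function names are hypothetical.

```python
import random
from collections import Counter

def frequency_order(tokens):
    """Distinct tokens sorted by decreasing frequency (ties broken by token id)."""
    counts = Counter(tokens)
    return sorted(counts, key=lambda tok: (-counts[tok], tok))

def random_distribution(vocab_size, rng):
    """A random probability vector over the vocabulary."""
    weights = [rng.random() for _ in range(vocab_size)]
    total = sum(weights)
    return [w / total for w in weights]

def make_sorting_example(length, vocab_size, rng):
    """Sample tokens from p = alpha * p0 + (1 - alpha) * p1 with alpha rising from 0 to 1."""
    p0 = random_distribution(vocab_size, rng)
    p1 = random_distribution(vocab_size, rng)
    tokens = []
    for t in range(length):
        alpha = t / max(length - 1, 1)          # assumed linear schedule
        probs = [alpha * a + (1.0 - alpha) * b for a, b in zip(p0, p1)]
        tokens.append(rng.choices(range(vocab_size), weights=probs, k=1)[0])
    return tokens, frequency_order(tokens)

tokens, target = make_sorting_example(length=200, vocab_size=20, rng=random.Random(0))
```

Since the distribution at the start of the sequence differs from the one at the end, a model that only sees the most recent tokens estimates the overall frequencies from a biased sample.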

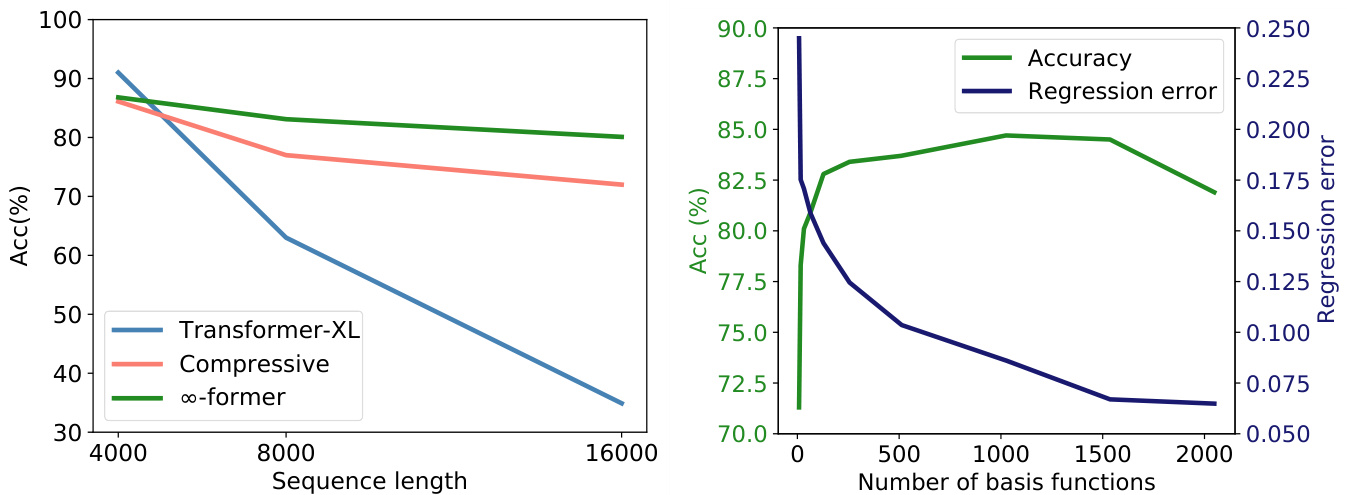

Figure 3: Left: Sorting task accuracy for sequences of length 4,000, 8,000, and 16,000. Right: Sorting task accuracy vs regression mean error, when varying the number of basis functions, for sequences of length 8,000.

Baselines. We consider the transformer-XL (Dai et al., 2019) and the compressive transformer (Rae et al., 2019) as baselines. The transformer-XL consists of a vanilla transformer (Vaswani et al., 2017) extended with a short-term memory which is composed of the hidden states of the previous steps. The compressive transformer is an extension of the transformer-XL: besides the short-term memory, it has a compressive long-term memory which is composed of the old vectors of the short-term memory, compressed using a CNN. Both the transformer-XL and the compressive transformer require relative positional encodings. In contrast, there is no need for positional encodings in the memory in our approach since the memory vectors represent basis coefficients in a predefined continuous space.

For all models we used a transformer with 3 layers and 6 attention heads, input size $L=1,024$, and memory size 2,048. For the compressive transformer, both memories have size 1,024. For the $\infty$-former, we also consider an STM of size 1,024 and an LTM with $N=1,024$ basis functions, so that all models have the same computational cost. Further details are described in App. C.1.

我们使用的所有模型均为3层6注意力头的Transformer架构,输入尺寸$L=1,024$,记忆体容量2,048。其中压缩Transformer的两个记忆体容量均为1,024。对于$\infty$-former,我们还配置了容量1,024的短期记忆(STM)和含$N=1,024$个基函数的长期记忆(LTM),确保各模型计算成本一致。更多细节详见附录C.1。

Results. As can be seen in the left plot of Fig. 3, the transformer-XL achieves a slightly higher accuracy than the compressive transformer and the $\infty$-former for a short sequence length (4,000). This is because the transformer-XL is able to keep almost the entire sequence in memory. However, its accuracy degrades rapidly when the sequence length is increased. The accuracy of both the compressive transformer and the $\infty$-former also decreases as the sequence length grows, as expected. However, the decrease is less pronounced for the $\infty$-former, which indicates that it is better at modeling long sequences.

结果。如图 3 左图所示,在短序列长度 (4,000) 下,transformer-XL 的准确率略高于 compressive transformer 和 $\infty$-former。这是因为 transformer-XL 能够将几乎整个序列保留在内存中。然而,当序列长度增加时,其准确率会迅速下降。正如预期的那样,compressive transformer 和 $\infty$-former 在增加序列长度时也会导致准确率下降。但对于 $\infty$-former 而言,这种下降并不显著,这表明它在建模长序列方面表现更优。

Regression error analysis. To better understand the trade-off between the $\infty$-former's memory precision and its computational efficiency, we analyze how its regression error and sorting accuracy vary when varying the number of basis functions used, on the sorting task with input sequences of length 8,000. As can be seen in the right plot of Fig. 3, the sorting accuracy is negatively correlated with the regression error, which in turn decreases as the number of basis functions grows. It can also be observed that, when the number of basis functions is increased substantially, the regression error reaches a plateau and the accuracy starts to drop. We posit that the latter is caused by the model having a harder time selecting the locations it should attend to. This shows that, as expected, when increasing the $\infty$-former's efficiency or increasing the size of the context being modeled, the memory loses precision.

回归误差分析。为了更好地理解 $\infty$-former 的记忆精度与计算效率之间的权衡,我们分析了在长度为 8,000 的输入序列排序任务中,其回归误差和排序准确率如何随使用的基函数数量变化而变化。如图 3 右图所示,排序准确率与回归误差呈负相关,而回归误差随基函数数量的增加而减小。还可以观察到,当大幅增加基函数数量时,回归误差达到平台期,而准确率开始下降。我们认为后者是由于模型在选择应关注的位置时面临更困难的任务。这表明,正如预期的那样,当提高 $\infty$-former 的效率或增加建模上下文的大小时,记忆会失去精度。
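The relation between the number of basis functions and the regression error can be illustrated with a minimal sketch. It assumes a ridge regression of a one-dimensional toy signal onto evenly spaced Gaussian RBFs on $[0,1]$; the function name `rbf_regression_error`, the width rule `1 / num_basis`, and the ridge penalty value are illustrative choices, not the paper's settings.

```python
import numpy as np

def rbf_regression_error(signal, num_basis, ridge=1e-3):
    """Mean squared error of a ridge fit of `signal` onto Gaussian RBFs."""
    length = signal.shape[0]
    # Map the discrete positions 0..length-1 to points in [0, 1].
    t = (np.arange(length) + 0.5) / length
    # Assumption: centers are evenly spaced and share a single width.
    centers = np.linspace(0.0, 1.0, num_basis)
    width = 1.0 / num_basis
    # F[i, j] is the value of basis function j at position t[i].
    F = np.exp(-0.5 * ((t[:, None] - centers[None, :]) / width) ** 2)
    # Ridge regression: coeffs = (F^T F + ridge * I)^{-1} F^T signal.
    gram = F.T @ F + ridge * np.eye(num_basis)
    coeffs = np.linalg.solve(gram, F.T @ signal)
    reconstruction = F @ coeffs
    return float(np.mean((reconstruction - signal) ** 2))

# Toy signal: a mix of two sinusoids sampled at 256 positions.
grid = (np.arange(256) + 0.5) / 256
toy_signal = np.sin(2 * np.pi * 3 * grid) + 0.5 * np.cos(2 * np.pi * 7 * grid)
error_few = rbf_regression_error(toy_signal, num_basis=4)
error_many = rbf_regression_error(toy_signal, num_basis=64)
```

With only a few wide basis functions the fit cannot follow the oscillations of the toy signal, whereas a larger basis reconstructs it much more closely, which is the precision side of the trade-off discussed above.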

4.2 Language Modeling

4.2 语言建模

To understand if long-term memories can be used to extend a pre-trained language model, we fine-tune GPT-2 small (Radford et al., 2019) on Wikitext-103 (Merity et al., 2017) and a subset of PG-19 (Rae et al., 2019) containing the first 2,000 books ($\approx 200$ million tokens) of the training set. To do so, we extend GPT-2 with a continuous long-term memory ($\infty$-former) and a compressed memory (compressive transformer) with a positional bias, based on Press et al. (2021).$^{8}$

为了验证长期记忆能否用于扩展预训练语言模型,我们在Wikitext-103 (Merity et al., 2017) 和PG-19训练集前2000本书籍(约2亿token)子集 (Rae et al., 2019) 上对GPT-2 small (Radford et al., 2019) 进行微调。具体实现中,我们基于 Press 等人 (2021) 的方法$^{8}$,通过以下两种方式扩展GPT-2:带位置偏置的连续长期记忆 ($\infty$-former) 和压缩记忆 (compressive transformer) 。

Table 1: Perplexity on Wikitext-103 and PG19.

表 1: Wikitext-103 和 PG19 上的困惑度。

| | Wikitext-103 | PG19 |
|---|---|---|
| GPT-2 | 16.85 | 33.44 |
| Compressive | 16.87 | 33.09 |
| $\infty$-former | 16.64 | 32.61 |
| $\infty$-former (SM) | 16.61 | 32.48 |

For these experiments, we consider transformers with input size $L=512$. For the compressive transformer, we use a compressed memory of size 512, and for the $\infty$-former, we consider an LTM with $N=512$ Gaussian RBFs and a memory threshold of 2,048 tokens, so that the two models have the same computational budget. Further details and hyperparameters are described in App. C.2.

在这些实验中,我们考虑输入尺寸为 $L=512$ 的 Transformer。对于压缩 Transformer,我们使用大小为 512 的压缩记忆;对于 $\infty$ -former,我们考虑具有 $N=512$ 个高斯径向基函数 (Gaussian RBFs) 的长时记忆 (LTM) 和 2,048 个 Token 的记忆阈值,确保两种模型的计算预算相同。更多细节和超参数详见附录 C.2。

Results. The results reported in Table 1 show that the $\infty$-former leads to perplexity improvements on both Wikitext-103 and PG19, while the compressive transformer only has a slight improvement on the latter. The improvements obtained by the $\infty$-former are larger on the PG19 dataset, which can be explained by the nature of the datasets: books have more long-range dependencies than Wikipedia articles (Rae et al., 2019).

结果。表1中报告的结果表明,$\infty$-former在Wikitext-103和PG19上都带来了困惑度(perplexity)提升,而压缩Transformer(compressive transformer)仅在后一个数据集上有轻微改进。$\infty$-former在PG19数据集上获得的改进更大,这可以通过数据集的特性来解释:书籍比维基百科文章具有更长程的依赖关系(Rae等人,2019)。
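For readers less familiar with the metric reported in Table 1, the following is a minimal sketch of how perplexity is conventionally computed from per-token log-probabilities. The function name `perplexity` is illustrative, and the sketch assumes natural-log probabilities averaged over tokens; the paper's exact evaluation protocol is described in its appendix, not here.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp of the average negative log-likelihood per token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)

# A model that assigns probability 0.25 to every token behaves like a
# uniform choice among four options.
uniform_ppl = perplexity([math.log(0.25)] * 8)
```

Lower values indicate that the model assigns higher probability to the observed text, so the reductions in Table 1 correspond to better predictions.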

4.3 Document Grounded Dialogue

4.3 文档基础对话

In document grounded dialogue generation, besides the dialogue history, models have access to a document concerning the conversation’s topic. In the CMU Document Grounded Conversation dataset (CMU-DoG) (Zhou et al., 2018), the dialogues are about movies and a summary of the movie is given as the auxiliary document; the auxiliary document is divided into parts that should be considered for the different utterances of the dialogue. In this paper, to evaluate the usefulness of the long-term memories, we make this task slightly more challenging by only giving the models access to the document before the start of the dialogue.

在基于文档的对话生成任务中,除了对话历史外,模型还能获取与话题相关的文档。CMU基于文档的对话数据集(CMU-DoG) (Zhou et al., 2018)中的对话以电影为主题,并提供了电影摘要作为辅助文档;该辅助文档被划分为多个部分,分别对应对话中不同话语的参考内容。本文为评估长期记忆的有效性,通过仅在对话开始前向模型提供文档的方式,略微提升了该任务的挑战性。

We fine-tune GPT-2 small (Radford et al., 2019) using an approach based on Wolf et al. (2019). To allow the model to keep the whole document in memory, we extend GPT-2 with a continuous LTM ($\infty$-former) with $N=512$ basis functions. As baselines, we use GPT-2, with and without access (GPT-2 w/o doc) to the auxiliary document, with input size $L=512$, and GPT-2 with a compressed memory with attention positional biases (compressive), of size 512. Further details and hyperparameters are stated in App. C.3.

我们采用基于Wolf等人(2019)的方法对GPT-2 small (Radford等人,2019)进行微调。为了让模型能够将整个文档保留在内存中,我们通过一个包含$N=512$个基函数的连续LTM( $\infty$ -former)对GPT-2进行了扩展。作为基线,我们使用了GPT-2(在有/无辅助文档访问权限(GPT-2 w/o doc)的情况下),输入尺寸为$L=512$,以及带有注意力位置偏置的压缩内存(compressive)、尺寸为512的GPT-2。更多细节和超参数见附录C.3。

Table 2: Results on CMU-DoG dataset.

表 2: CMU-DoG数据集上的结果。

| | PPL | F1 | Rouge-1 | Rouge-L | Meteor |
|---|---|---|---|---|---|
| GPT-2 w/o doc | 19.43 | 7.82 | 12.18 | 10.17 | 6.10 |
| GPT-2 | 18.53 | 8.64 | 14.61 | 12.03 | 7.15 |
| Compressive | 18.02 | 8.78 | 14.74 | 12.14 | 7.29 |
| $\infty$-former | 18.02 | 8.92 | 15.28 | 12.51 | 7.52 |
| $\infty$-former (SM) | 18.04 | 9.01 | 15.37 | 12.56 | 7.55 |

To evaluate the models we use the metrics: perplexity, F1 score, Rouge-1 and Rouge-L (Lin, 2004), and Meteor (Banerjee and Lavie, 2005).

为评估模型性能,我们采用以下指标:困惑度 (perplexity)、F1分数、Rouge-1与Rouge-L (Lin, 2004) 以及Meteor (Banerjee and Lavie, 2005)。
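As an illustration of the F1 metric listed above, here is a minimal sketch of a token-overlap F1 between a generated utterance and a reference. The text does not specify the tokenization or normalization used, so lowercasing and whitespace splitting are assumptions, and the function name `unigram_f1` is hypothetical.

```python
from collections import Counter

def unigram_f1(prediction, reference):
    """Token-overlap F1 between a generated and a reference utterance."""
    # Assumption: lowercase and split on whitespace.
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    # Multiset intersection counts each shared token at most as often
    # as it appears in both utterances.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

score = unigram_f1("it is a comedy", "it is a christmas comedy")
```

Scores such as those in Table 2 would then be obtained by averaging this quantity over the test utterances and, under the usual convention, multiplying by 100.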

Results. As shown in Table 2, by keeping the whole auxiliary document in memory, the $\infty$ -former and the compressive transformer are able to generate better utterances, according to all metrics. While the compressive and $\infty$ -former achieve essentially the same perplexity in this task, the $\infty$ -former achieves consistently better scores on all other metrics. Also, using sticky memories leads to slightly better results on those metrics, which suggests that attributing a larger space in the LTM to the most relevant tokens can be beneficial.

结果。如表 2 所示,通过将整个辅助文档保留在内存中,$\infty$-former 和 compressive transformer 能够根据所有指标生成更好的话语。虽然 compressive 和 $\infty$-former 在此任务中基本达到了相同的困惑度 (perplexity),但 $\infty$-former 在所有其他指标上始终获得更好的分数。此外,使用 sticky memories 在这些指标上带来了略微更好的结果,这表明在 LTM 中为最相关的 token 分配更大的空间可能是有益的。

Analysis. In Fig. 4, we show examples of utterances generated by the $\infty$-former along with the excerpts from the LTM that receive higher attention throughout the utterances' generation. In these examples, we can clearly see that these excerpts are highly pertinent to the answers being generated. Also, in Fig. 5, we can see that the phrases which are attributed larger spaces in the LTM, when using sticky memories, are relevant to the conversations.

分析。在图4中,我们展示了由$\infty$-former生成的语句示例,以及在整个语句生成过程中获得较高关注度的长期记忆(LTM)片段。通过这些示例可以清晰看出,这些片段与正在生成的答案高度相关。此外,在图5中可见,当使用粘性记忆(sticky memories)时,那些在LTM中被分配较大空间的短语都与对话内容相关。

5 Related Work

5 相关工作

Continuous attention. Martins et al. (2020) introduced 1D and 2D continuous attention, using Gaussians and truncated parabolas as densities. They applied it to RNN-based document classification, machine translation, and visual question answering. Several other works have also proposed the use of (discretized) Gaussian attention for natural language processing tasks: Guo et al. (2019) proposed a Gaussian prior to the self-attention mechanism to bias the model to give higher attention to nearby words, and applied it to natural language inference; You et al. (2020) proposed the use

连续注意力。Martins等人 (2020) 提出了使用高斯分布和截断抛物线作为密度函数的1D和2D连续注意力机制,并将其应用于基于RNN的文档分类、机器翻译和视觉问答任务。其他多项研究也提出了将(离散化)高斯注意力用于自然语言处理任务:Guo等人 (2019) 在自注意力机制中引入高斯先验,使模型更关注邻近词语,并将其应用于自然语言推理任务;You等人 (2020) 提出了...

Cast: Macaulay Culkin as Kevin. Joe Pesci as Harry. Daniel Stern as Marv. John Heard as Peter. Roberts Blossom as Marley.

演员表:

Macaulay Culkin 饰 Kevin

Joe Pesci 饰 Harry

Daniel Stern 饰 Marv

John Heard 饰 Peter

Roberts Blossom 饰 Marley

The film stars Macaulay Culkin as Kevin McCallister, a boy who is mistakenly left behind when his family flies to Paris for their Christmas vacation. Kevin initially relishes being home alone, but soon has to contend with two would-be burglars played by Joe Pesci and Daniel Stern. The film also features Catherine O'Hara and John Heard as Kevin's parents.

影片由麦考利·卡尔金饰演凯文·麦卡利斯特,一个在家人飞往巴黎过圣诞假期时被意外落下的男孩。凯文起初享受独自在家的自由,但很快不得不面对由乔·佩西和丹尼尔·斯特恩饰演的两名企图行窃的盗贼。凯文的父母则由凯瑟琳·欧哈拉和约翰·赫德出演。

Movie Name: Home Alone. Rating: Rotten Tomatoes: 62% and average: 5.5/10, Metacritic Score: 63/100, Cinema Score: A. Year: 1990. The McCallister family is preparing to spend Christmas in Paris, gathering at Peter and Kate's home outside of Chicago on the night before their departure. Peter and Kate's youngest son, eight-year-old Kevin, is being ridiculed by his siblings and cousins. A fight with his older brother, Buzz, results in Kevin getting sent to the third floor of the house for punishment, where he wishes that his

电影名称:小鬼当家。评分:烂番茄:62%,平均分:5.5/10;Metacritic评分:63/100;影院评分:A。年份:1990。McCallister一家正准备前往巴黎过圣诞节,出发前一晚聚集在Peter和Kate位于芝加哥郊外的家中。Peter和Kate的小儿子、八岁的Kevin正被兄弟姐妹和表亲们嘲笑。与哥哥Buzz的一场争执导致Kevin被罚到房子的三楼,在那里他许愿让他的...

Previous utterance: Or maybe rent, anything is reason to celebrate..I would like to talk about a movie called "Home Alone"

上句:或者也许是房租,任何事都值得庆祝...我想聊聊一部叫《小鬼当家》的电影

Answer: Macaulay Culkin is the main actor and it is a comedy.

答案:Macaulay Culkin是主演,这是一部喜剧。

Previous utterance: That sounds like a great movie. Any more details?

上一条对话:听起来是部很棒的电影。能再详细说说吗?

Answer: The screenplay came out in 1990 and has been on the air for quite a while.

答:该剧本于1990年问世,并已播出相当长一段时间。

Figure 4: Examples of answers generated by the $\infty$-former on a dialogue about the movie “Home Alone”. The excerpts from the LTM that receive the most attention throughout each utterance's generation are highlighted in the corresponding color.

图 4: $\infty$ -former在关于电影《小鬼当家》的对话中生成的回答示例。在生成话语过程中,从长时记忆(LTM)中提取的、受到更多关注的部分分别以对应颜色高亮显示。

Toy Story: Tom Hanks as Woody | animated buddy comedy | Toy Story was the first feature length computer animated film | produced by Pixar | toys pretend to be lifeless whenever humans are present | focuses on the relationship between Woody and Gold | fashioned pull string cowboy doll

玩具总动员:Tom Hanks配音Woody | 动画伙伴喜剧 | 首部全电脑动画长片 | 皮克斯制作 | 人类在场时玩具装成无生命体 | 聚焦Woody与Gold的关系 | 拉线牛仔玩偶造型

La La Land: Ryan Gosling | Emma Stone as Mia | Hollywood | the city of Los Angeles | Metacritics: 93/100 | 2016 | During a gig at a restaurant Sebastian slips into a passionate jazz | despite warning from the owner | Mia overhears the music as she passes by | for his disobedience

爱乐之城: Ryan Gosling | Emma Stone 饰演 Mia | 好莱坞 | 洛杉矶 | Metacritics评分: 93/100 | 2016年 | Sebastian在餐厅演出时不顾老板警告即兴演奏激情爵士乐 | 路过的Mia被琴声吸引

Figure 5: Phrases that hold larger spaces of the LTM, when using sticky memories, for two dialogue examples (in App. E).

图 5: 使用粘性记忆时,两个对话示例中占据长期记忆 (LTM) 更大空间的短语 (见附录 E)。

of hard-coded Gaussian attention as input-agnostic self-attention layer for machine translation; Dubois et al. (2020) proposed using Gaussian attention as a location attenti