GPTScore: Evaluate as You Desire


Jinlan Fu$^{1}$, See-Kiong Ng$^{1}$, Zhengbao Jiang$^{2}$, Pengfei Liu$^{2}$

Abstract


Generative Artificial Intelligence (AI) has enabled the development of sophisticated models that are capable of producing high-caliber text, images, and other outputs through the utilization of large pre-trained models. Nevertheless, assessing the quality of the generation is an even more arduous task than the generation itself, and this issue has not been given adequate consideration recently. This paper proposes a novel evaluation framework, GPTSCORE, which utilizes the emergent abilities (e.g., zero-shot instruction) of generative pre-trained models to score generated texts. We explore 19 pre-trained models in this paper, ranging in size from 80M (e.g., FLAN-T5-small) to 175B (e.g., GPT3). Experimental results on four text generation tasks, 22 evaluation aspects, and the corresponding 37 datasets demonstrate that this approach lets us evaluate texts along whatever aspect we desire, simply through natural language instructions. This property helps us overcome a long-standing challenge in text evaluation: how to achieve customized, multi-faceted evaluation without the need for annotated samples. We make our code publicly available.$^{1}$

1. Introduction


The advent of generative pre-trained models, such as GPT3 (Brown et al., 2020), has precipitated a shift from analytical AI to generative AI across multiple domains (Sequoia, 2022). Take text as an example: the use of a large pre-trained model with appropriate prompts (Liu et al., 2021) has achieved superior performance in tasks defined both in academia (Sanh et al., 2021) and in real-world scenarios (Ouyang et al., 2022). While text generation technology is advancing rapidly, techniques for evaluating the quality of these texts lag far behind. This is especially evident in the following ways:

(a) Existing studies evaluate text quality with limited aspects (e.g., semantic equivalence, fluency) (Fig. 1-(a)), which are usually prohibitively costly to customize, making it harder for users to evaluate the aspects they need (Freitag et al., 2021).

(b) A handful of studies have examined multi-aspect evaluation (Yuan et al., 2021; Scialom et al., 2021; Zhong et al., 2022) but have not given adequate attention to the definition of the evaluation aspects and the latent relationships among them. Instead, the evaluation of an aspect is either empirically bound to metric variants (Yuan et al., 2021) or learned from supervised signals (Zhong et al., 2022).

(c) Recently proposed evaluation methods (Mehri & Eskénazi, 2020; Rei et al., 2020; Li et al., 2021; Zhong et al., 2022) usually require a complicated training procedure or costly manual annotation of samples (Fig. 1-(a,b)). The time needed for annotation and training to accommodate each new evaluation demand from the user makes these methods hard to use in industrial settings.

Figure 1. An overview of text evaluation approaches.


In this paper, we demonstrate the ability of very large pre-trained language models (e.g., GPT-3) to achieve multi-aspect, customized, and training-free evaluation (Fig. 1-(c)). In essence, the approach uses the pre-trained model's zero-shot instruction (Chung et al., 2022) and in-context learning (Brown et al., 2020; Min et al., 2022) abilities to deal with complex and ever-changing evaluation needs, thereby addressing at the same time several evaluation challenges that have plagued the field for many years.

Specifically, given a text generated from a certain context and the desired evaluation aspects (e.g., fluency), the high-level idea of the proposed framework is that a text of higher quality with respect to a given aspect is more likely to be generated from the given context than a lower-quality one, where “likely” is measured by the conditional generation probability. As illustrated in Fig. 2, to capture users' true desires, an evaluation protocol is first established based on (a) the task specification, which typically outlines how the text is generated (e.g., generate a response for a human based on the conversation), and (b) the aspect definition, which documents the details of the desired evaluation aspects (e.g., the response should be intuitive to understand). Subsequently, each evaluation sample is presented together with the evaluation protocol and, optionally, a moderate number of exemplar samples, which can facilitate the model's learning. Lastly, a large generative pre-trained model is used to calculate how likely the text is to be generated under this evaluation protocol, which gives rise to our model's name: GPTSCORE. Given the plethora of pre-trained models, we instantiate our framework with different backbones: GPT2 (Radford et al., 2019), OPT (Zhang et al., 2022b), FLAN (Chung et al., 2022), and GPT3 (instruction-based (Ouyang et al., 2022)), chosen for their superior capacity for zero-shot instruction and their aptitude for in-context learning.

Figure 2. The framework of GPTSCORE. We include two evaluation aspects, relevance (REL) and informativeness (INF), in this figure and use the evaluation of relevance (REL) in the text summarization task to exemplify our framework.

Experimentally, we covered almost all common natural language generation tasks in NLP, and the results show the power of this new paradigm. The main observations are as follows: (1) Evaluating texts with generative pre-trained models can be more reliable when the models are instructed with the definitions of the task and the aspect, which provides a degree of flexibility to accommodate various evaluation criteria. Furthermore, adding exemplar samples through in-context learning further improves the evaluation. (2) Different evaluation aspects exhibit certain correlations. Combining an aspect's definition with those of other highly correlated aspects can improve evaluation performance. (3) The performance of GPT3-text-davinci-003, which is tuned based on human feedback, is inferior to that of GPT3-text-davinci-001 in the majority of the evaluation settings, which calls for deeper exploration of the working mechanism of human feedback-based instruction learning (e.g., when it will fail).

2. Preliminaries


2.1. Text Evaluation


Text evaluation aims to assess the quality of a hypothesis text $h$ in terms of a certain aspect $a$ (e.g., fluency), which is either measured manually with different protocols (Nenkova & Passonneau, 2004; Bhandari et al., 2020; Fabbri et al., 2021; Liu et al., 2022) or quantified by diverse automated metrics (Lin, 2004; Papineni et al., 2002; Zhao et al., 2019; Zhang et al., 2020; Yuan et al., 2021):

$$

y=f(h,a,S)

$$


where (1) $h$ represents the text to be evaluated (the hypothesis text, e.g., the generated summary in the text summarization task). (2) $a$ denotes the evaluation aspect (e.g., fluency). (3) $S$ is a collection of additional texts that are optionally used depending on the scenario. For example, it could be a source document or a reference summary in the text summarization task. (4) The function $f(\cdot)$ could be instantiated as a human evaluation process or as an automated evaluation metric.

2.2. Meta Evaluation


Meta evaluation aims to evaluate the reliability of automated metrics by calculating how well automated scores $(y_{\mathrm{auto}})$ correlate with human judgments $(y_{\mathrm{human}})$ using a correlation function $g\big(y_{\mathrm{auto}},y_{\mathrm{human}}\big)$ such as Spearman correlation. In this work, we adopt two widely used correlation measures: (1) Spearman correlation $(\rho)$ (Zar, 2005) measures the monotonic relationship between two variables based on their ranked values. (2) Pearson correlation $(r)$ (Mukaka, 2012) measures the linear relationship between two variables based on their raw data values.
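The difference between the two correlation measures can be illustrated with a small sketch. This is not the paper's implementation: the helper names `pearson`, `rank`, and `spearman` and the toy scores are assumptions made for illustration, written in plain Python so that no external library is needed.

```python
from math import sqrt


def pearson(x, y):
    """Pearson r: linear relationship computed on the raw values."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


def rank(values):
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rho: Pearson correlation applied to the ranks."""
    return pearson(rank(x), rank(y))


# Hypothetical scores: automated scores (e.g., log-probabilities) and human ratings.
y_auto = [-1.2, -0.8, -2.5, -0.3]
y_human = [3, 4, 1, 5]
rho = spearman(y_auto, y_human)  # both lists induce the same ordering
r = pearson(y_auto, y_human)     # strongly, but not perfectly, linear
```

In this toy case the two score lists rank the four texts identically, so the rank-based measure reaches its maximum while the value-based measure stays slightly below it.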

2.3. Evaluation Strategy


Evaluation strategies define different aggregation methods for calculating the correlation scores. Specifically, suppose that for each source text $s_{i}$, $i\in\{1,2,\cdots,n\}$ (e.g., documents in the text summarization task or dialogue histories in the dialogue generation task), there are $J$ system outputs $h_{i,j}$, where $j\in\{1,2,\cdots,J\}$. $f_{\mathrm{auto}}$ is an automatic scoring function (e.g., ROUGE (Lin, 2004)), and $f_{\mathrm{human}}$ is the gold human scoring function. For a given evaluation aspect $a$, the meta-evaluation metric $F$ can be formulated as follows.

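Sample-level means that a correlation value is calculated for each sample separately, based on the outputs of multiple systems, and then averaged across all samples:

$$

F_{f_{\mathrm{auto}},f_{\mathrm{human}}}^{\mathrm{sample}}=\frac{1}{n}\sum_{i=1}^{n}g\Big(\left[f_{\mathrm{auto}}(h_{i,1}),\cdots,f_{\mathrm{auto}}(h_{i,J})\right],\left[f_{\mathrm{human}}(h_{i,1}),\cdots,f_{\mathrm{human}}(h_{i,J})\right]\Big)

$$

where $g$ can be instantiated as Spearman or Pearson correlation.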

Dataset-level indicates that the correlation value is calculated on system outputs of all $n$ samples.


$$

\begin{aligned}F_{f_{\mathrm{auto}},f_{\mathrm{human}}}^{\mathrm{data}}=g\Big(&\left[f_{\mathrm{auto}}(h_{1,1}),\cdots,f_{\mathrm{auto}}(h_{n,J})\right],\\&\left[f_{\mathrm{human}}(h_{1,1}),\cdots,f_{\mathrm{human}}(h_{n,J})\right]\Big)\end{aligned}

$$

In this work, we select the evaluation strategy for a specific task based on previous works (Yuan et al., 2021; Zhang et al., 2022a). We use the sample-level evaluation strategy for the text summarization, data-to-text, and machine translation tasks. For the dialogue response generation task, we use the dataset-level evaluation strategy.
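The two aggregation strategies described in this subsection can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the paper's code: the function names `sample_level` and `dataset_level`, the nested-list layout (`scores[i][j]` holds the score of system output $h_{i,j}$), and the toy numbers are all hypothetical, and Pearson correlation stands in for $g$.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation, used here as the correlation function g."""
    mean_x, mean_y = mean(x), mean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def sample_level(auto, human, g=pearson):
    """Correlate the J outputs of each source separately, then average over sources."""
    return mean(g(auto_i, human_i) for auto_i, human_i in zip(auto, human))


def dataset_level(auto, human, g=pearson):
    """Pool the scores of all n * J outputs and compute a single correlation."""
    flat_auto = [score for row in auto for score in row]
    flat_human = [score for row in human for score in row]
    return g(flat_auto, flat_human)


# Hypothetical scores for n = 2 sources with J = 3 system outputs each.
auto = [[0.1, 0.2, 0.3], [0.9, 0.8, 0.7]]
human = [[1, 2, 3], [3, 2, 1]]
per_sample = sample_level(auto, human)   # each source is ranked perfectly
pooled = dataset_level(auto, human)      # the offset between sources lowers the pooled value
```

The toy data is chosen so that the metric orders the outputs of each source correctly but uses a different score range per source; the sample-level strategy ignores that offset, whereas the dataset-level strategy is penalized by it.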

3. GPTSCORE


3.1. Generative Pre-trained Language Models


Existing pre-trained language models can be classified into the following three categories: (a) encoder-only models (e.g., BERT (Devlin et al., 2019), RoBERTa (Liu et al., 2019)) that encode inputs with bidirectional attention; (b) encoder-decoder models (e.g., BART (Lewis et al., 2020), T5 (Raffel et al., 2020)) that encode inputs with bidirectional attention and generate outputs autoregressively; (c) decoder-only models (e.g., GPT2 (Radford et al., 2019), GPT3 (Brown et al., 2020), PaLM (Chowdhery et al., 2022)) that generate the entire text sequence autoregressively. Pre-trained models with decoding abilities (b, c) have attracted much attention since they show impressive performance on zero-shot instruction and in-context learning. Specifically, given a prompt text $\pmb{x}=\{x_{1},x_{2},\cdots,x_{n}\}$, a generative pre-trained language model can generate a textual continuation $\pmb{y}=\{y_{1},y_{2},\cdots,y_{m}\}$ with the following generation probability:

$$

p(\pmb{y}|\pmb{x},\theta)=\prod_ {t=1}^{m}p(y_ {t}|\pmb{y}_ {<t},\pmb{x},\theta)

$$


Emergent Ability. Recent works progressively reveal a variety of emergent abilities of generative pre-trained language models under appropriate tuning or prompting methods, such as in-context learning (Min et al., 2022), chain-of-thought reasoning (Wei et al., 2022), and zero-shot instruction (Ouyang et al., 2022). One core commonality of these abilities is that they allow customized requirements to be handled with few or even zero annotated examples. It is the appearance of these abilities that allows us to reinvent text evaluation as evaluation from a textual description, which makes the evaluation customizable, multi-faceted, and training-free.

3.2. Generative Pre-training Score (GPTScore)

The core idea of GPTSCORE is that a generative pre-trained model will assign a higher probability to high-quality generated text that follows a given instruction and context. In our method, the instruction is composed of the task description $d$ and the aspect definition $a$. Specifically, suppose that the text to be evaluated is $\pmb{h}=\{h_{1},h_{2},\cdots,h_{m}\}$ and the context information is $S$ (e.g., source text or reference text); then GPTSCORE is defined as the following conditional probability:

$$

\mathrm{GPTScore}(h|d,a,S)=\sum_ {t=1}^{m}w_ {t}\log p(h_ {t}|h_ {<t},T(d,a,S),\theta),

$$


where $w_ {t}$ is the weight of the token at position $t$ . In our work, we treat each token equally. $T(\cdot)$ is a prompt template that defines the evaluation protocol, which is usually task-dependent and specified manually through prompt engineering.

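The weighted sum in this definition can be sketched in a few lines, assuming the per-token log-probabilities $\log p(h_{t}|h_{<t},T(d,a,S),\theta)$ have already been obtained from some language model. The function name `gptscore`, the example values, and in particular the choice of uniform weights $w_{t}=1/m$ (one way to treat each token equally; the text does not state the normalization) are assumptions made for illustration.

```python
def gptscore(token_logprobs, weights=None):
    """Weighted sum of the log-probabilities of the hypothesis tokens.

    token_logprobs[t] is assumed to hold log p(h_t | h_<t, T(d, a, S), theta),
    i.e. the model's log-probability of hypothesis token t given the filled
    prompt template and the preceding hypothesis tokens.
    """
    m = len(token_logprobs)
    if m == 0:
        raise ValueError("the hypothesis must contain at least one token")
    if weights is None:
        # Assumption: equal treatment of tokens is realized as w_t = 1/m.
        weights = [1.0 / m] * m
    if len(weights) != m:
        raise ValueError("one weight per hypothesis token is required")
    return sum(w * lp for w, lp in zip(weights, token_logprobs))


# Hypothetical log-probabilities for two candidate summaries under the same prompt.
fluent_summary = [-0.2, -0.1, -0.3]
disfluent_summary = [-1.5, -2.0, -0.9]
better = gptscore(fluent_summary) > gptscore(disfluent_summary)
```

With $w_{t}=1/m$ the score is the average token log-likelihood, so hypotheses of different lengths remain comparable; setting every weight to 1 would instead give the plain sequence log-likelihood.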

Few-shot with Demonstration. A generative pre-trained language model can perform tasks better when the input is prefixed with a few annotated samples (i.e., demonstrations). Our proposed framework supports this flexibly by extending the prompt template $T$ with demonstrations.

Choice of Prompt Template. Prompt templates define how the task description, aspect definition, and context are organized. Mining desirable prompts is itself a non-trivial task, and there is extensive research on it (Liu et al., 2021; Fu et al., 2022). In this work, for the GPT3-based models, we opt for prompts that are officially provided by OpenAI.$^{2}$ For instruction-based pre-trained models, we use prompts from Natural Instruction (Wang et al., 2022), since it is the main training source for those instruction-based pre-trained models. Taking the evaluation of fluency in the text summarization task as an example, based on the prompt provided by OpenAI,$^{3}$ the task prompt is “{Text} Tl;dr {Summary}”, the definition of fluency is “Is the generated text well-written and grammatical?” (in Tab. 1), and the final prompt template is then “Generate a fluent and grammatical summary for the following text: {Text} Tl;dr {Summary}”, where demonstrations can be introduced by repeatedly instantiating “{Text} Tl;dr {Summary}”. In Appendix D, we list the prompts for the various aspects of all tasks studied in this work, and we leave a more comprehensive exploration of prompt engineering as future work.
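How the fluency template and its demonstrations fit together can be sketched as below. The instruction string and the “{Text} Tl;dr {Summary}” pattern are taken from the example in the text; the function name `build_prompt`, the newline separator between parts, and the example texts are assumptions, and the exact layout used in the experiments may differ.

```python
INSTRUCTION = "Generate a fluent and grammatical summary for the following text:"


def build_prompt(text, demonstrations=(), instruction=INSTRUCTION):
    """Fill the template up to the point where the hypothesis summary starts.

    demonstrations is a sequence of (text, summary) pairs; each pair is one
    instantiation of "{Text} Tl;dr {Summary}" placed before the evaluated sample.
    The newline separator is an assumption of this sketch.
    """
    parts = [instruction]
    for demo_text, demo_summary in demonstrations:
        parts.append(f"{demo_text} Tl;dr {demo_summary}")
    # The hypothesis is scored as the continuation of this prefix.
    parts.append(f"{text} Tl;dr")
    return "\n".join(parts)


# Instruction-only prompt, then a prompt with one hypothetical demonstration.
instruction_prompt = build_prompt("A storm hit the coast on Monday.")
demonstration_prompt = build_prompt(
    "A storm hit the coast on Monday.",
    demonstrations=[("The city council met to vote on the budget.", "Council votes on budget.")],
)
```

The returned string is only the conditioning prefix; the summary under evaluation would be appended after the final “Tl;dr”, and the log-probabilities of its tokens would be used for scoring.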

| Aspect | Task | Definition |
|---|---|---|
| Semantic Coverage (COV) | Summ | How many semantic content units from the reference text are covered by the generated text? |
| Factuality (FAC) | Summ | Does the generated text preserve the factual statements of the source text? |
| Consistency (CON) | Summ, Diag | Is the generated text consistent in the information it provides? |
| Informativeness (INF) | Summ, D2T, Diag | How well does the generated text capture the key ideas of its source text? |
| Coherence (COH) | Summ, Diag | How much does the generated text make sense? |
| Relevance (REL) | Diag, Summ, D2T | How well is the generated text relevant to its source text? |
| Fluency (FLU) | Diag, Summ, D2T, MT | Is the generated text well-written and grammatical? |
| Accuracy (ACC) | MT | Are there inaccuracies, missing, or unfactual content in the generated text? |
| Multidimensional Quality Metrics (MQM) | MT | How is the overall quality of the generated text? |
| Interest (INT) | Diag | Is the generated text interesting? |
| Engagement (ENG) | Diag | Is the generated text engaging? |
| Specific (SPE) | Diag | Is the generated text generic or specific to the source text? |
| Correctness (COR) | Diag | Is the generated text correct or was there a misunderstanding of the source text? |
| Semantically appropriate (SEM) | Diag | Is the generated text semantically appropriate? |
| Understandability (UND) | Diag | Is the generated text understandable? |
| Error Recovery (ERR) | Diag | Is the system able to recover from errors that it makes? |
| Diversity (DIV) | Diag | Is there diversity in the system responses? |
| Depth (DEP) | Diag | Does the system discuss topics in depth? |
| Likeability (LIK) | Diag | Does the system display a likeable personality? |
| Flexibility (FLE) | Diag | Is the system flexible and adaptable to the user and their interests? |
| Inquisitiveness (INQ) | Diag | Is the system inquisitive throughout the conversation? |

Table 1. The definition of aspects evaluated in this work. Semantic App. denotes the semantically appropriate aspect. Diag, Summ, D2T, and MT denote dialogue response generation, text summarization, data-to-text, and machine translation, respectively.

Selection of Scoring Dimension. GPTSCORE has different variants depending on which text the hypothesis is conditioned on. For example, given a generated hypothesis, we can calculate GPTSCORE either based on the source text (i.e., src->hypo, $p(\mathrm{hypo}|\mathrm{src})$) or based on the gold reference (i.e., ref->hypo, $p(\mathrm{hypo}|\mathrm{ref})$). In this paper, the criteria for choosing a GPTSCORE variant are mainly designed to align with the protocol of the human judgments (Liu et al., 2022) that are used to evaluate the reliability of automated metrics. We detail this for the different human judgment datasets in the experiment section.

4. Experimental Settings


4.1. Tasks, Datasets, and Aspects


To achieve a comprehensive evaluation, in this paper we cover a broad range of natural language generation tasks: Dialogue Response Generation, Text Summarization, Data-to-Text, and Machine Translation, which involve 37 datasets and 22 evaluation aspects in total. Tab. 8 summarizes the tasks, datasets, and evaluation aspects considered by each dataset. The definitions of the different aspects can be found in Tab. 1. More detailed descriptions of the datasets can be found in Appendix B.

(1) Dialogue Response Generation aims to automatically generate an engaging and informative response based on the dialogue history. Here, we use the FED (Mehri & Eskénazi, 2020) datasets and consider both turn-level and dialogue-level evaluations.

(2) Text Summarization is the task of automatically generating an informative and fluent summary for a given long text. Here, we consider the following four datasets, covering 10 aspects: SummEval (Bhandari et al., 2020), REALSumm (Bhandari et al., 2020), NEWSROOM (Grusky et al., 2018), and QAGS_XSUM (Wang et al., 2020).

(3) Data-to-Text aims to automatically generate a fluent and factual description for a given table. We consider the BAGEL (Mairesse et al., 2010) and SFRES (Wen et al., 2015) datasets.

(4) Machine Translation aims to translate a sentence from one language to another. We consider a sub-dataset of Multidimensional Quality Metrics (MQM) (Freitag et al., 2021), namely MQM-2020 (Chinese->English).

4.2. Scoring Models


ROUGE (Lin, 2004) is a popular automatic generation evaluation metric. We consider three variants: ROUGE-1, ROUGE-2, and ROUGE-L.

PRISM (Thompson & Post, 2020) is a reference-based evaluation method designed for machine translation with pre-trained paraphrase systems.

BERTScore (Zhang et al., 2020) uses contextual representations from BERT to calculate the similarity between the generated text and the reference text.

MoverScore (Zhao et al., 2019) considers both contextual representations and Word Mover's Distance (WMD; Kusner et al., 2015).

DynaEval (Zhang et al., 2021) is a unified automatic evaluation framework for dialogue response generation tasks at the turn level and the dialogue level.

BARTScore (Yuan et al., 2021) is a text-scoring model based on BART (Lewis et al., 2020) without fine-tuning. BARTScore+CNN (Yuan et al., 2021) is based on BART fine-tuned on the CNNDM dataset (Hermann et al., 2015). BARTScore+CNN+Para (Yuan et al., 2021) is based on BART fine-tuned on CNNDM and Paraphrase 2.0 (Hu et al., 2019).

GPTSCORE is our evaluation method, which is designed based on different pre-trained language models. Specifically, we consider GPT3, OPT, FLAN-T5, and GPT2 in this work. Five variants are explored for each framework. For a fair comparison with decoder-only models such as GPT3 and OPT, only four GPT2 variants, each with a parameter size of at least 350M, are considered. Tab. 2 shows all model variants used in this paper and their numbers of parameters.

| GPT3 | Param. | OPT | Param. |
|---|---|---|---|
| text-ada-001 | 350M | OPT-350M | 350M |
| text-babbage-001 | 1.3B | OPT-1.3B | 1.3B |
| text-curie-001 | 6.7B | OPT-6.7B | 6.7B |
| text-davinci-001 | 175B | OPT-13B | 13B |
| text-davinci-003 | 175B | OPT-66B | 66B |

| FLAN-T5 | Param. | GPT2 | Param. |
|---|---|---|---|
| FT5-small | 80M | GPT2-M | 355M |
| FT5-base | 250M | GPT2-L | 774M |
| FT5-L | 770M | GPT2-XL | 1.5B |
| FT5-XL | 3B | GPT-J-6B | 6B |
| FT5-XXL | 11B | | |

Table 2. Pre-trained backbones used in this work.

4.3. Scoring Dimension


Specifically, (1) for aspects INT, ENG, SPE, REL, COR, SEM, UND, and FLU of the FED-Turn datasets from the open-domain dialogue generation task, we choose the src->hypo variant, since the human judgments of the evaluated dataset (i.e., FED-Turn) are also created based on the source. (2) For aspects COH, CON, and INF from SummEval and Newsroom, since the data annotators labeled the data based on the source and hypothesis texts, we choose src->hypo for these aspects.

(3) For aspects INF, NAT, and QUA from the data-to-text task, we choose ref->hypo, because the source of the data-to-text task is not in a standard text format and would be hard for the scoring function to handle. (4) For aspects ACC, FLU, and MQM from the machine translation task, we also choose ref->hypo, because the source text in machine translation is in a different language from the translated text (hypo), and in this work we mainly consider the evaluation of English text. In the future, we can consider designing a scoring function based on BLOOM (Scao et al., 2022) that can evaluate texts in a cross-lingual setting.

4.4. Evaluation Dataset Construction


Unlike previous works (Matiana et al., 2021; Xu et al., 2022a;b; Castricato et al., 2022) that only consider the overall text quality, we focus on evaluating multi-dimensional text quality. In this work, we studied 37 datasets according to 22 evaluation aspects. Due to the expensive API cost of GPT3, we randomly extract and construct sub-datasets for meta-evaluation. For the MQM dataset, since many aspects of samples lack human scores, we extract samples with human scores in ACC, MQM, and FLU as much as possible.


5. Experiment Results


In this work, we focus on exploring whether language models with different structures and sizes can work in the following three scenarios: (a) vanilla (VAL): without instruction or demonstration; (b) instruction (IST): with instruction but without demonstration; (c) instruction+demonstration (IDM): with both instruction and demonstration.

Significance Tests To examine the reliability and validity of the experiment results, we conducted the significance test based on boots trapping.4 Our significance test is to check (1) whether the performance of IST (IDM) is significantly better than VAL, and values achieved with the IST (IDM) settings will be marked $\dagger$ if it passes the significant test (p-value $_ {<0.05}$ ). (2) whether the performance of IDM is significantly better than IST, if yes, mark the value with IDM setting with $\ddagger$ .

显著性检验

为检验实验结果的可靠性和有效性,我们基于自助法(bootstrapping)进行了显著性检验。显著性检验用于验证:(1) IST(IDM)的性能是否显著优于VAL,若通过显著性检验(p值<0.05),则IST(IDM)的数值会被标记为$\dagger$;(2) IDM的性能是否显著优于IST,若是,则IDM的数值会被标记为$\ddagger$。
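A bootstrap-based comparison of two evaluators can be sketched as follows. This section does not spell out the exact procedure, so the paired resampling scheme, the function names, and the toy scores below are all assumptions made for illustration; only the use of bootstrapping and the $p < 0.05$ threshold come from the text.

```python
import random
from typing import List, Sequence


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:  # degenerate resample: correlation undefined
        return 0.0
    return cov / (vx * vy) ** 0.5


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation = Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def paired_bootstrap_p(human, base, improved, n_resamples=1000, seed=0):
    """Fraction of resamples in which `improved` does not beat `base`."""
    rng = random.Random(seed)
    n = len(human)
    not_better = 0
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        h = [human[i] for i in idx]
        rho_base = spearman(h, [base[i] for i in idx])
        rho_improved = spearman(h, [improved[i] for i in idx])
        if rho_improved <= rho_base:
            not_better += 1
    return not_better / n_resamples


# Synthetic scores: the "IST" evaluator tracks the human scores perfectly,
# the "VAL" evaluator gets the sign wrong on every other sample.
human = [float(i) for i in range(1, 21)]
ist_scores = [2 * h for h in human]
val_scores = [h if i % 2 == 0 else -h for i, h in enumerate(human)]
p_value = paired_bootstrap_p(human, val_scores, ist_scores)
```

Under this scheme a value would receive a $\dagger$ when `p_value` falls below 0.05; resampling the same indices for both evaluators keeps the comparison paired.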

Average Performance. Due to space limitations, we report only the average performance of the GPT3-based, GPT2-based, OPT-based, and FT5-based models. The full results of all variants can be found in Appendix E.

平均性能

由于篇幅限制,我们仅保留基于GPT3、GPT2、OPT和FT5模型的平均性能数据。各类变体的完整结果详见附录E。

5.1. Text Summarization

5.1. 文本摘要

We evaluate 28 scoring functions on the text summarization task: 9 baseline models (e.g., ROUGE-1) and 19 variants of GPTScore (e.g., GPT3-d01).

28个模型的评估结果(9个基线模型(如ROUGE-1)和19个GPTScore变体(如GPT3-d01))

Table 3. Spearman correlation of different aspects on text summarization datasets. VAL and IST abbreviate vanilla and instruction, respectively. Values with $^\dagger$ denote that the evaluator with instruction significantly outperforms the vanilla evaluator. Values in bold are the best performance in a set of variants (e.g., the GPT3 family).

| Model | SummEval COH (VAL / IST) | SummEval CON (VAL / IST) | SummEval FLU (VAL / IST) | SummEval REL (VAL / IST) | RSumm COV (VAL / IST) |
|---|---|---|---|---|---|
| ROUGE-1 | 14.1 / - | 20.8 / - | 14.8 / - | 26.2 / - | 46.4 / - |
| ROUGE-2 | 9.1 / - | 17.2 / - | 12.0 / - | 17.4 / - | 37.3 / - |
| ROUGE-L | 12.9 / - | 19.8 / - | 17.6 / - | 24.7 / - | 45.1 / - |
| BERTSc | 25.9 / - | 19.7 / - | 23.7 / - | 34.7 / - | 38.4 / - |
| MoverSc | 11.5 / - | 18.0 / - | 15.7 / - | 24.8 / - | 34.4 / - |
| PRISM | 26.5 / - | 29.9 / - | 26.1 / - | 25.2 / - | 32.3 / - |
| BARTSc | 29.7 / - | 30.8 / - | 24.6 / - | 28.9 / - | 43.1 / - |
| +CNN | 42.5 / - | 35.8 / - | 38.1 / - | 35.9 / - | 42.9 / - |
| +CNN+Para | 42.5 / - | 37.0 / - | 40.5 / - | 33.9 / - | 40.9 / - |
| GPT3-a01 | 39.3 / 39.8 | 39.7 / 40.5 | 36.1 / 35.9 | 28.2 / 27.6 | 29.5 / 29.8† |
| GPT3-b01 | 42.7 / 45.2 | 41.0 / 41.4 | 37.1 / 39.1 | 32.0 / 33.4 | 35.0 / 35.2 |
| GPT3-c01 | 41.3 / 40.8 | 44.6 / 45.1 | 38.9 / 39.5 | 31.6 / 33.2 | 36.1 / 45.1 |
| GPT3-d03 | 43.7 / 43.4 | 45.2 / 44.9 | 41.1 / 40.3 | 36.3 / 38.1 | 35.2 / 38.0† |
| GPT2-M | 36.0 / 39.2† | 34.6 / 35.3† | 28.1 / 30.7 | 28.3 / 28.3 | 41.8 / 43.3† |
| GPT2-L | 36.4 / 39.8 | 33.7 / 34.4 | 29.4 / 31.5 | 27.8 / 28.1 | 39.6 / 41.3 |
| GPT2-XL | 35.3 / 39.9 | 35.9 / 36.1 | 31.2 / 33.1 | 28.1 / 28.0 | 40.4 / 41.0† |
| GPT-J-6B | 35.5 / 39.5† | 42.7 / 42.8† | 35.5 / 37.4† | 31.5 / 31.9† | 42.8 / 43.7† |
| OPT350m | 33.4 / 37.6 | 34.9 / 35.5 | 29.6 / 31.4 | 29.5 / 28.6 | 40.2 / 42.3† |
| OPT-1.3B | 35.0 / 37.8 | 40.0 / 42.0 | 33.6 / 35.9 | 33.5 / 34.2 | 42.0 / 39.7 |
| OPT-6.7B | 35.7 / 36.8 | 42.1 / 45.7 | 35.5 / 37.6 | 35.4 / 35.4 | 38.0 / 41.9 |
| OPT-13B | 33.5 / 34.7† | 42.5 / 45.2 | 35.6 / 37.3 | 33.6 / 33.9 | 37.6 / 41.0† |
| OPT-66B | 32.0 / 35.9 | 44.0 / 45.3 | 36.3 / 38.0 | 33.4 / 33.7 | 40.3 / 41.3 |
| FT5-small | 35.0 / 35.4 | 37.0 / 38.0† | 35.6 / 34.7 | 27.3 / 28.0† | 33.6 / 35.7† |
| FT5-base | 39.2 / 39.9† | 36.7 / 37.2† | 37.3 / 36.5 | 29.5 / 31.2 | 36.7 / 38.6 |
| FT5-L | | | | | |
| FT5-XL | 42.8 / 47.0 | 41.0 / 43.6 | 39.7 / 42.1 | 31.4 / 34.4 | 34.8 / 43.8 |
| FT5-XXL | 42.1 / 45.6 | 43.7 / 43.8 | 39.8 / 42.4 | 32.8 / 34.3 | 40.2 / 41.1 |
| Avg. | 38.0 / 40.2 | 40.4 / 41.4 | 35.8 / 37.2 | 31.3 / 32.2 | 37.4 / 39.8 |

表 3: 文本摘要数据集中不同方面的Spearman相关性。VAL和IST分别是vanilla和instruction的缩写。带$^\dagger$标记的值表示带instruction的评估器显著优于vanilla版本。加粗数值表示在一组变体中(例如GPT3家族)表现最佳。


Results for the text summarization task on the SummEval and RealSumm datasets are shown in Tab. 3. Due to space limitations, we move the results on the NEWSROOM and QXSUM datasets to Appendix E. Fig. 3 shows the evaluation results of five GPT3 variants on four text summarization datasets, where QXSUM uses the Pearson correlation and the other datasets use the Spearman correlation. The main observations are summarized as follows:

在SummEval和RealSumm数据集上文本摘要任务的评分函数如表3所示。由于篇幅限制,我们将NEWSROOM和QXSUM数据集的性能结果移至附录E。图3展示了五种GPT3变体模型在四个文本摘要数据集上的评估结果,其中QXSUM使用皮尔逊相关系数(Pearson correlation),其他数据集使用斯皮尔曼相关系数(Spearman correlation)指标。主要观察结果总结如下:

(1) Evaluators with instruction significantly improve performance (values with $\dagger$ in Tab. 3). Moreover, small models with instruction perform comparably to supervised learning models. For example, OPT350m, FT5-small, and FT5-base outperform BARTScore+CNN on the CON aspect when using instructions. (2) The benefit from instruction is more stable for decoder-only models. In Tab. 3, for the average Spearman scores of the GPT2 and OPT models, 9 out of 10 aspects improve over the vanilla setting (VAL) when instruction (IST) is used, whereas equipping the encoder-decoder FT5 model with instruction (IST) fails to achieve gains on the NEWSROOM dataset. (3) For the GPT3-based models, (a) GPT3-d01 is only marginally, though significantly, better than GPT3-c01, a variant that tries to balance power and speed; (b) GPT3-d03 performs significantly better than GPT3-d01. Both conclusions can be observed in Fig. 3, and both have passed the significance test at $p<0.05$.

(1) 使用指令的评估器显著提升了性能 (表3中标有$\dagger$的值)。更重要的是,配备指令的小模型展现出与监督学习模型相当的性能。例如,OPT350m、FT5-small和FT5-base在使用指令时,在CON维度上超越了BARTScore+CNN。(2) 指令对仅解码器(decoder-only)模型的增益更稳定。如表3所示,GPT2和OPT模型的平均Spearman分数中,有9个维度在使用指令(IST)时优于原始设置(VAL),而FT5编码器-解码器模型在NEWSROOM数据集上配备指令(IST)未能获得提升。(3) 对于基于GPT3的模型:(a) GPT3-d01的性能仅略微显著优于兼顾效率与速度的GPT3-c01;(b) GPT3-d03显著优于GPT3-d01。这些结论可从图3中观察到,且均通过$p<0.05$显著性检验。

Figure 3. Evaluation results of five GPT3 variants on four text summarization datasets. Panels: SummEval (COH, CON, FLU, REL), RSumm (COV), NEWSROOM (COH, FLU, REL, INF), and QXSUM (FAC); the x-axis of each panel contrasts the VAL and IST settings.

5.2. Machine Translation

5.2. 机器翻译

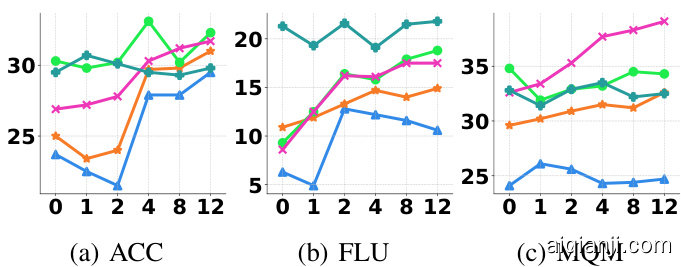

The average sample-level Spearman $(\rho)$ scores of the GPT3-based, GPT2-based, OPT-based, and FT5-based models on the MQM-2020 machine translation dataset are shown in Tab. 4, where values with $\dagger$ denote that the evaluator equipped with IST (or IDM) significantly outperforms the VAL setting, and values with $\ddagger$ denote that the evaluator equipped with IDM (the combination of IST and DM) significantly outperforms the IST setting. The Spearman correlations for the GPT3-based variants are shown in Fig. 4. The full evaluation results of 28 models (including 9 baseline scoring models, such as ROUGE-1) can be found in Tab. 14. Following Thompson & Post (2020) and Yuan et al. (2021), we treat the evaluation of machine translation as a paraphrasing task. The main observations are listed as follows:

基于GPT3、GPT2、OPT和FT5的模型在MQM-2020机器翻译数据集上的平均样本级Spearman $(\rho)$ 分数如表4所示,其中带 $\dagger$ 的值表示配备IST(或IDM)的评估器显著优于VAL设置,而 $\ddagger$ 表示配备IDM(IST与DM的组合)的评估器显著优于IST设置。基于GPT3变体的Spearman相关性如图4所示。28个模型(包括ROUGE-1等9个基线评分模型)的完整评估结果可在表14中找到。遵循Thompson & Post (2020)和Yuan et al. (2021)的方法,我们将机器翻译评估视为复述任务。主要观察结果如下:

(1) The introduction of instruction (IST) significantly improves performance on the three aspects ACC, FLU, and MQM. In Tab. 4, the average performance of the 19 GPTSCORE-based evaluators with instruction (IST) significantly exceeds that of the vanilla setting (VAL). (2) The combination of instruction and demonstration (IDM) brings gains for evaluators with different model structures. In Tab. 4, the performance of GPT3, GPT2, OPT, and FT5 improves considerably when instruction and demonstration (IDM) are introduced. (3) The evaluator built on GPT3-c01 achieves performance comparable to GPT3-d01 and GPT3-d03, as shown in Fig. 4. Since GPT3-d01 and GPT3-d03 are the most expensive variants of GPT3, the cheaper and comparable GPT3-c01 is a good choice for the machine translation task.

(1) 指令(IST)的引入在ACC、FLU和MQM三个不同方面显著提升了性能。在表4中,19个基于GPTSCORE的评估器在使用指令(IST)时的平均性能明显优于原始版本(VAL)。

(2) 指令与示例演示(IDM)的结合为不同模型结构的评估器带来了性能提升。在表4中,GPT3、GPT2、OPT和FT5在引入指令和示例演示(IDM)后性能大幅提高。

(3) 基于GPT3-c01构建的评估器达到了与GPT3-d01和GPT3-d03相当的性能。如图4所示,由于GPT3-d01和GPT3-d03是GPT3最昂贵的变体,更经济且性能相当的GPT3-c01是机器翻译任务的理想选择。

| Model | ACC VAL | ACC IST | ACC IDM | FLU VAL | FLU IST | FLU IDM | MQM VAL | MQM IST | MQM IDM |
|---|---|---|---|---|---|---|---|---|---|
| GPT3 | 27.2 | 27.1 | 29.7†,‡ | 11.3 | 10.4 | 16.4† | 30.3 | 31.2 | 32.3†,‡ |
| GPT2 | 25.8 | 27.0 | 30.3†,‡ | 9.8 | 10.8† | 15.8†,‡ | 30.1 | 30.3† | 33.5†,‡ |
| OPT | 28.7 | 29.4 | 30.3†,‡ | 10.0 | 12.2 | 16.3†,‡ | 32.5 | 34.6 | 35.1†,‡ |
| FT5 | 27.7 | 27.8 | 28.3†,‡ | 9.6 | 11.0 | 15.4†,‡ | 31.0 | 32.3 | 32.3 |

Table 4. Average Spearman correlation of the GPT3-based, GPT2-based, OPT-based, and FT5-based models on the machine translation task of the MQM-2020 dataset.

表 4: 基于GPT3、GPT2、OPT和FT5的模型在MQM-2020数据集机器翻译任务中的平均Spearman相关性。

Figure 4. Experimental results for GPT3-based variants in the machine translation task. Here, blue, orange, green, pink, and cyan dots denote that GPTSCORE is built on a01, b01, c01, d01, and d03, respectively. The red lines denote the average performance of the GPT3-based variants.

图 4: 基于GPT3变体在机器翻译任务中的实验结果。图中蓝色、橙色、绿色、粉色和青色圆点分别表示GPTSCORE基于a01、b01、c01、d01和d03构建。红色直线表示基于GPT3变体的平均性能。

5.3. Data to Text

5.3. 数据到文本

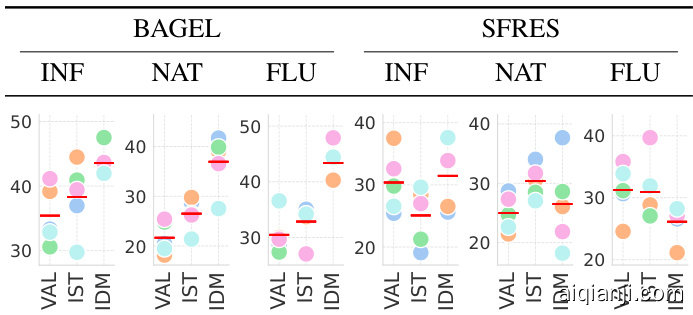

We consider the BAGEL and SFRES datasets for the evaluation of the data-to-text task. The average Spearman correlations of the GPT3-based, GPT2-based, OPT-based, and FT5-based models are listed in Tab. 5. VAL, IST, and IDM denote the vanilla setting, the setting with instruction, and the setting with both instruction and demonstration, respectively. Due to space limitations, the detailed performance of each evaluator considered in this work is reported in Tab. 15 and Tab. 16.

我们采用BAGEL和SFRES数据集评估数据到文本生成任务。基于GPT3、GPT2、OPT和FT5模型的平均Spearman相关系数列于表5。VAL、IST和IDM分别表示原始设置、使用指令设置以及同时使用指令和示例设置的三种配置。由于篇幅限制,本研究中各评估器的详细性能指标可参见表15和表16。

The main observations are listed as follows:

主要观察结果如下:

(1) Introducing instruction (IST) can significantly improve performance, and introducing demonstration (DM) improves it further. In Tab. 5, the average performance on the three aspects improves significantly when instruction is used, and adding demonstration yields further significant gains on NAT and FLU. (2) Decoder-only models are better at exploiting demonstration. In Tab. 5, compared with the encoder-decoder model FT5, the decoder-only models GPT2 and OPT improve more on the NAT and FLU aspects after DM is introduced, and this holds for both BAGEL and SFRES. (3) GPT3 has strong compatibility with unformatted text. Named entities in the BAGEL dataset are replaced with special tokens (e.g., X and Y). For example, in “X is a cafe restaurant”, “X” denotes the name of the cafe. When IST and DM are introduced (IDM), the GPT3 variants achieve much higher average performance than GPT2, OPT, and FT5.

(1) 引入指令(IST)能显著提升性能,而引入示例(DM)会进一步改善表现。在表5中,适应指令后三个方面的平均性能均有显著提升,且在NAT和FLU方面使用示例的性能进一步显著提高。(2) 仅解码器(decoder-only)模型更擅长利用示例实现高性能。在表5中,与编码器-解码器(encoder-decoder)模型FT5相比,引入DM后GPT2和OPT在NAT和FLU方面的性能提升更为显著,这一现象在BAGEL和SFRES数据集上均成立。(3) GPT3对非格式化文本具有强兼容性。BAGEL数据集的命名实体被替换为特殊token(如X和Y),例如"X is a cafe restaurant"中"X"代表咖啡馆名称。当引入IST和DM(IDM)时,GPT3变体的平均性能远高于GPT2、OPT和FT5。

| Dataset | Model | INF VAL | INF IST | INF IDM | NAT VAL | NAT IST | NAT IDM | FLU VAL | FLU IST | FLU IDM |
|---|---|---|---|---|---|---|---|---|---|---|
| BAGEL | GPT3 | 35.4 | 38.3† | 43.6†,‡ | 21.7 | 26.5 | 36.9†,‡ | 30.5 | 32.9† | 43.4†,‡ |
| BAGEL | GPT2 | 40.8 | 43.2† | 40.2 | 31.4 | 33.0† | 33.5†,‡ | 36.7 | 39.3† | 41.3†,‡ |
| BAGEL | OPT | 38.7 | 39.3† | 38.6 | 31.4 | 30.0 | 33.7†,‡ | 37.7 | 37.1 | 41.5†,‡ |
| BAGEL | FT5 | 41.5 | 41.5 | 39.1 | 26.5 | 29.7† | 28.6† | 38.1 | 41.1† | 40.3† |
| BAGEL | Avg. | 39.1 | 40.6† | 40.3† | 27.7 | 29.8† | 33.2†,‡ | 35.8 | 37.6† | 41.6†,‡ |
| SFRES | GPT3 | 30.4 | 25.1 | 31.5†,‡ | 25.0 | 30.4 | 26.5 | 31.2 | 30.9 | 26.1 |
| SFRES | GPT2 | 22.5 | 25.1† | 20.5 | 31.0 | 31.9† | 37.0†,‡ | 20.0 | 33.1 | 36.2†,‡ |
| SFRES | OPT | 25.2 | 26.9† | 24.3 | 26.2 | 30.0† | 36.6†,‡ | 21.3 | 25.6† | 30.6†,‡ |
| SFRES | FT5 | 24.0 | 21.9 | 19.7 | 34.3 | 34.6† | 36.8†,‡ | 22.0 | 17.8 | 19.7 |
| SFRES | Avg. | 25.5 | 24.7 | 24.0 | 29.1 | 31.7 | 34.2†,‡ | 23.6 | 26.8 | 28.2†,‡ |

Table 5. Average Spearman correlation of the models based on GPT3, GPT2, OPT, and FT5 on the BAGEL and SFRES datasets in the data-to-text task.

表5: 基于GPT3、GPT2、OPT和FT5的模型在BAGEL与SFRES数据集上进行数据到文本任务时的Spearman相关系数平均值

5.4. Dialogue Response Generation

5.4. 对话响应生成

To test whether GPTSCORE can generalize to more aspects, we choose dialogue response generation as a testbed, since this task usually requires evaluating generated texts along a variety of dimensions (e.g., “interesting” and “fluent”). To reduce the computational cost, in this experiment we focus on GPT3-based metrics, since they achieved superior performance in the previous experiments.

为了测试GPTSCORE是否能泛化到更多方面,我们选择对话响应生成任务作为测试平台,该任务通常需要从多个维度(即"有趣"和"流畅")评估生成文本。为降低计算成本,本实验聚焦于基于GPT3的指标,因为我们在先前实验中观察到它们已取得优异性能。

Tab. 6 shows the Spearman correlation of different aspects on FED turn- and dialogue-level datasets. The main observations are listed as follows.

表 6 展示了 FED 对话轮次和对话级别数据集上不同方面的 Spearman 相关性。主要观察结果如下。

Figure 5. Experimental results for GPT3-based variants in the data-to-text task. Here, blue, orange, green, pink, and cyan dots denote that GPTSCORE is built on a01, b01, c01, d01, and d03, respectively. The red lines denote the average performance of the GPT3-based variants.

图 5: 基于GPT3的变体在数据到文本任务中的实验结果。其中,蓝色、橙色、绿色、粉色和青色圆点分别表示GPTSCORE基于a01、b01、c01、d01和d03构建。红色直线表示基于GPT3变体的平均性能。

(1) The performance of GPT3-d01 is much better than that of GPT3-d03, even though both have the same model size. The average Spearman correlation of GPT3-d01 exceeds that of GPT3-d03 by 40.8 on the FED turn-level dataset and by 5.5 on the FED dialogue-level dataset. (2) The GPT3-based models demonstrate stronger generalization ability. BART-based models failed in the evaluation of the dialogue generation task, while GPT3-a01, with 350M parameters, achieved performance comparable to the FED and DE models on both the FED turn-level and dialogue-level datasets.

(1) GPT3-d01的性能远优于GPT3-d03,尽管两者模型规模相同。在FED话轮级数据集上,GPT3-d01的平均Spearman相关系数比GPT3-d03高出40.8;在FED对话级数据集上则高出5.5。(2) 基于GPT3的模型展现出更强的泛化能力。基于BART的模型在对话生成任务评估中失效,而参数量为3.5亿的GPT3-a01在FED话轮级和对话级数据集上都达到了与FED、DE模型相当的性能。

6. Ablation Study

6. 消融实验

6.1. Effectiveness of Demonstration

6.1. 演示的有效性

To investigate the relationship between the demonstration sample size (denoted as K) and the evaluation performance, we choose the machine translation task and the GPT3-based variants, with model sizes ranging from 350M to 175B, for further study.

为了研究演示样本量(记为K)与评估性能之间的关系,我们选择机器翻译任务和基于GPT3的变体模型(模型规模从350M到175B不等)进行进一步研究。

The change in Spearman correlation on the MQM-2020 dataset with different demonstration sample sizes is shown in Fig. 6. The main observations are summarized as follows: (1) The use of demonstration significantly improves the evaluation performance, and this holds for all three aspects. (2) There is an upper bound on the performance gains from demonstration. For example, when $K > 4$, the performance on ACC is hard to improve further. (3) When DM contains only a few samples (such as $K = 1$), small models (e.g., GPT3-a01) are prone to performance degradation due to the one-sidedness of the given examples.

图 6 展示了 MQM-2020 数据集中斯皮尔曼相关系数随不同演示样本量的变化情况。主要观察结果总结如下:(1) 演示样本的使用显著提升了评估性能,这一现象在三个方面均成立。(2) 引入演示样本带来的性能增益存在上限。例如当 $K > 4$ 时,ACC 性能难以进一步提升。(3) 当 DM (Demonstration) 仅含少量样本时 (如 $K = 1$),小型模型 (如 GPT3-a01) 容易因给定示例的片面性导致性能下降。

6.2. Partial Order of Evaluation Aspect

6.2. 评估方面的偏序关系

To explore the correlation between aspects, we conducted an empirical analysis with INT (interesting) on the dialogue response generation task of the FED-Turn dataset. Specifically, we take INT as the target aspect and then combine the definitions of other aspects with the definition of INT to form the final evaluation protocols. The x-axis of Fig. 7-(a) is the aspect order obtained from the Spearman correlation between the human scores of INT and those of each other aspect. Fig. 7-(b) shows how the Spearman correlation of INT changes as the INT definition is modified; the scoring function is GPT3-c01.

为了探究各维度之间的相关性,我们以FED-Turn数据集的对话响应生成任务为对象,针对INT(interesting)维度进行了实证分析。具体而言,将INT设为目标维度后,将其他维度的定义与INT定义结合作为最终评估方案。图7-(a)的x轴表示基于INT分数与该维度人工评分的Spearman相关系数所获得的维度排序。图7-(b)则展示了修改INT定义后的Spearman相关系数,评分函数采用GPT3-c01。

| Aspect | Baseline | GPTScore | |||||||

| BT BTC BTCP FED DE a01 b01 c01 d01 | |||||||||

| FEDdialogue-level 1.7 -14.9 9-18.9 25.7 43.7 18.7 15.0 22.5 56.9 13.4 | |||||||||

| COH ERR CON DIV DEP LIK UND FLE INF INQ | 9.4 -12.2 -13.7 2.6 -6.7 -10.2 13.3 -2.5 -13.9 8.2 -6.6 -17.6 9.9 -6.3 -11.8 -11.5 -17.6 -18.2 9.3 -10.2 -10.3 | 12.0 30.2 35.2 16.8 21.3 45.7 9.40 11.6 36.7 33.7 9.918.4 32.9 18.1 13.7 37.8 14.9 5.20 21.5 62.8 -6.6 10.9 49.8 9.00 12.9 28.2 66.9 34.1 37.4 41.6 26.2 22.0 32.1 63.4 18.4 -0.3 36.5 31.2 40.0 40.0 52.4 19.6 | |||||||

| Avg. 5.8 | -8.5 -14.020.4 39.8 25.3 21.3 28.6 54.3 13.5 | ||||||||

| FEDturn-level INT 15.9 -3.3 -10.1 32.4 32.7 16.6 6.4 30.8 50.1 22.4 | |||||||||

| ENG SPE REL COR SEM UND FLU Avg. | 8.3 11.9 7.6 10.0 12.0 14.0 12.8 | -7.9 10.0 1.8 18.8 8.1 17.2 5.7 | 1.1 -2.5 -16.2 19.4 12.4 26.1 4.5 28.4 7.7 | 26.2 -9.4 1.3 | 20.2 20.0 11.9 25.6 16.1 9.9 25.4 38.3 32.8 | 6.8 | 24.0 30.0 10.2 6.2 29.4 49.6 35.5 14.1 34.6 33.7 16.1 31.7 21.4 15.1 19.9 26.3 8.6 10.3 23.8 45.2 38.0 24.2 29.7 11.2 27.0 43.4 42.8 8.123.1 44.440.5 6.6 14.8 23.4 36.5 31.1 -13.4 17.1 16.5 5.7 14.0 16.0 36.7 | ||

Table 6. Spearman correlation of different aspects on the FED turn- and dialogue-level datasets. BT, BTC, BTCP, and DE denote BARTSCORE, BARTSCORE+CNN, BARTSCORE+CNN+Para, and the DynaEval model, respectively. Values in bold indicate the best performance.

表 6: FED对话级和轮次级数据集上不同方面的Spearman相关性。BT、BTC、BTCP和DE分别表示BARTSCORE、BARTSCORE+CNN、BARTSCORE+CNN+Para和DynaEval模型。加粗数值表示最佳性能。

The following table illustrates the definition composition process, where $\mathrm{Sp}$ denotes Spearman.

下表展示了定义组合过程,其中 $\mathrm{Sp}$ 表示 Spearman。

| X | Aspect | Aspect Definition | Sp |
|---|---|---|---|
| 1 | INT | Is this response interesting to the conversation? | 30.8 |
| 3 | INT, ENG, SPE | Is this an interesting response that is specific and engaging? | 48.6 |

Specifically, the definition of INT is “Is this response interesting to the conversation?” at $x = 1$ in Fig. 7-(b). When INT is combined with ENG and SPE (at $x = 3$ in Fig. 7-(b)), its definition can be “Is this an interesting response that is specific and engaging?”. The new aspect definition boosts the performance from 30.8 (at $x = 1$ in Fig. 7-(b)) to 48.6 (at $x = 3$ in Fig. 7-(b)). The best performance of 51.4 (at $x = 5$ in Fig. 7-(b)) is achieved after combining five aspects (INT, ENG, SPE, COR, REL), which already exceeds the 50.1 of the most potent scoring model, GPT3-d01, with an aspect definition built only on INT. Therefore, combining definitions with other highly correlated aspects can improve evaluation performance.

具体来说,INT的定义在图7-(b)中$x = 1$处为"这个回应是否对对话有趣?"。当INT与ENG、SPE(在图7-(b)中$x = 3$处)结合时,其定义可变为"这是一个既有趣又具体且引人入胜的回应吗?"。这一新的维度定义将性能从30.8(图7-(b)中$x = 1$处)提升至48.6(图7-(b)中$x = 3$处)。最佳性能51.4(图7-(b)中$x = 5$处)是通过结合五个维度(INT、ENG、SPE、COR、REL)实现的,这已经超过了仅基于INT维度定义的最强评分模型GPT3-d01的50.1分。因此,将定义与其他高度相关的维度结合可以提升评估性能。
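The composition procedure can be sketched in two steps: order the candidate aspects by how strongly their human scores correlate with the target aspect, then fold the top-ranked ones into the target definition. In the sketch below, the correlation values, the adjective mapping, and the sentence template are hypothetical placeholders; in the paper the combined definitions are written as natural-language questions, not generated by a template.

```python
from typing import Dict, List


def order_aspects(corr_with_target: Dict[str, float]) -> List[str]:
    """Sort aspects by descending correlation with the target aspect."""
    return sorted(corr_with_target, key=corr_with_target.get, reverse=True)


def compose_definition(target_adj: str, extra_adjs: List[str]) -> str:
    """Fold extra aspect adjectives into the target definition (toy template)."""
    if not extra_adjs:
        return f"Is this response {target_adj} to the conversation?"
    if len(extra_adjs) == 1:
        tail = extra_adjs[0]
    else:
        tail = ", ".join(extra_adjs[:-1]) + " and " + extra_adjs[-1]
    article = "an" if target_adj[0] in "aeiou" else "a"
    return f"Is this {article} {target_adj} response that is {tail}?"


# Hypothetical correlations between the human scores of INT and other aspects.
corr_with_int = {"REL": 0.30, "ENG": 0.70, "COR": 0.40, "SPE": 0.60}
adjectives = {"ENG": "engaging", "SPE": "specific",
              "COR": "correct", "REL": "relevant"}

ordered = order_aspects(corr_with_int)
base_definition = compose_definition("interesting", [])
combined = compose_definition("interesting",
                              [adjectives[a] for a in ordered[:2]])
```

Each composed definition would then be used as the instruction of the scoring model, and its Spearman correlation with the human INT scores measured as in Fig. 7-(b).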

Figure 6. Results of the GPT3 family models with different numbers of examples (K) in the demonstration on the MQM-2020 dataset. Here, blue, orange, green, red, and cyan lines denote that GPTSCORE is built on GPT3-a01, GPT3-b01, GPT3-c01, GPT3-d01, and GPT3-d03, respectively.

图 6: GPT3系列模型在MQM-2020数据集上使用不同数量示例(K)的演示结果。其中,蓝线、橙线、绿线、红线和青线分别表示GPTSCORE基于GPT3-a01、GPT3-b01、GPT3-c01、GPT3-d01和GPT3-d03构建。

Figure 7. (a) Descending order of Spearman correlation between INT and other aspects’ human scoring. (b) The Spearman correlation of INT changes as its aspect definition is modified in combination with other aspects. The scoring model is GPT3-c01.

图 7: (a) INT与其他方面人工评分的Spearman相关性降序排列。(b) INT的Spearman相关性随其方面定义与其他方面组合修改而变化。评分模型为GPT3-c01。

7. Conclusion

7. 结论

In this paper, we propose to leverage the emergent abilities of generative pre-trained models to address intricate and ever-changing evaluation requirements. The proposed framework, GPTSCORE, is studied on multiple pre-trained language models with different structures, including GPT3 with a model size of 175B. GPTSCORE has multiple benefits: it is customizable, multi-faceted, and training-free, which enables us to flexibly craft a metric that supports 22 evaluation aspects on 37 datasets without any learning process while still attaining competitive performance. This work opens a new way to audit generative AI by utilizing generative AI.

本文提出利用生成式预训练模型 (Generative Pre-training Models) 的涌现能力来解决复杂多变的评估需求。所提出的GPTSCORE框架在多种不同结构的预训练语言模型上进行了研究,包括模型规模达175B的GPT3。GPTSCORE具备三大优势:可定制性、多维度评估和无须训练,使我们能够灵活构建支持37个数据集中22个评估维度的指标,且无需任何学习过程即可获得具有竞争力的性能。这项工作为利用生成式AI审计生成式AI开辟了新途径。

Acknowledgements

致谢

We thank Chen Zhang for helpful discussion and feedback. This research / project is supported by the National Research Foundation, Singapore under its Industry Alignment Fund – Pre-positioning (IAF-PP) Funding Initiative. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of National Research Foundation, Singapore. Pengfei Liu is supported by a grant from the Singapore Defence Science and Technology Agency.

我们感谢Chen Zhang的有益讨论和反馈。本研究/项目由新加坡国家研究基金会在其产业联盟基金-预定位(IAF-PP)资助计划下支持。本材料中表达的任何观点、发现、结论或建议均为作者个人观点,不代表新加坡国家研究基金会的立场。Pengfei Liu的研究得到了新加坡国防科技局的一项资助支持。

References

参考文献

Adiwardana, D., Luong, M., So, D. R., Hall, J., Fiedel, N., Thoppilan, R., Yang, Z., Kulshreshtha, A., Nemade, G., Lu, Y., and Le, Q. V. Towards a human-like open-domain chatbot. CoRR, abs/2001.09977, 2020. URL https://arxiv.org/abs/2001.09977.

Adiwardana, D., Luong, M., So, D. R., Hall, J., Fiedel, N., Thoppilan, R., Yang, Z., Kulshreshtha, A., Nemade, G., Lu, Y., and Le, Q. V. 迈向类人开放域聊天机器人。CoRR, abs/2001.09977, 2020. URL https://arxiv.org/abs/2001.09977.

Bhandari, M., Gour, P. N., Ashfaq, A., Liu, P., and Neubig, G. Re-evaluating evaluation in text summarization. In Webber, B., Cohn, T., He, Y., and Liu, Y. (eds.), Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, November 16-20, 2020, pp. 9347–9359. Association for Computational Linguistics, 2020. doi: 10.18653/v1/2020.emnlp-main.751. URL https://doi.org/10.18653/v1/2020.emnlp-main.751.

Bhandari, M., Gour, P. N., Ashfaq, A., Liu, P., and Neubig, G. 重新评估文本摘要中的评估方法。见 Webber, B., Cohn, T., He, Y., and Liu, Y. (编), 《2020年自然语言处理实证方法会议论文集》, EMNLP 2020, 线上, 2020年11月16-20日, 第9347–9359页。计算语言学协会, 2020. doi: 10.18653/v1/2020.emnlp-main.751. URL https://doi.org/10.18653/v1/2020.emnlp-main.751.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., and Amodei, D. Language models are few-shot learners. CoRR, abs/2005.14165, 2020. URL https://arxiv.org/abs/2005.14165.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., and Amodei, D. 大语言模型是少样本学习者。CoRR, abs/2005.14165, 2020. URL https://arxiv.org/abs/2005.14165.

Castricato, L., Havrilla, A., Matiana, S., Pieler, M., Ye, A., Yang, I., Frazier, S., and Riedl, M. O. Robust preference learning for storytelling via contrastive reinforcement learning. CoRR, abs/2210.07792, 2022. doi: 10.48550/arXiv.2210.07792. URL https://doi.org/10.48550/arXiv.2210.07792.

Castricato, L., Havrilla, A., Matiana, S., Pieler, M., Ye, A., Yang, I., Frazier, S., and Riedl, M. O. 通过对比强化学习实现故事创作的鲁棒性偏好学习. CoRR, abs/2210.07792, 2022. doi: 10.48550/arXiv.2210.07792. URL https://doi.org/10.48550/arXiv.2210.07792.

Chowdhery, A., Narang, S., Devlin, J., Bosma, M., Mishra, G., Roberts, A., Barham, P., Chung, H. W., Sutton, C., Gehrmann, S., Schuh, P., Shi, K., Tsvyashchenko, S., Maynez, J., Rao, A., Barnes, P., Tay, Y., Shazeer, N., Prabhakaran, V., Reif, E., Du, N., Hutchinson, B., Pope, R., Bradbury, J., Austin, J., Isard, M., Gur-Ari, G., Yin, P., Duke, T., Levskaya, A., Ghemawat, S., Dev, S., Michalewski, H., Garcia, X., Misra, V., Robinson, K., Fedus, L., Zhou, D., Ippolito, D., Luan, D., Lim, H., Zoph, B., Spiridonov, A., Sepassi, R., Dohan, D., Agrawal, S., Omernick, M., Dai, A. M., Pillai, T. S., Pellat, M., Lewkowycz, A., Moreira, E., Child, R., Polozov, O., Lee, K., Zhou, Z., Wang, X., Saeta, B., Diaz, M., Firat, O., Catasta, M., Wei, J., Meier-Hellstern, K., Eck, D., Dean, J., Petrov, S., and Fiedel, N. PaLM: Scaling language modeling with pathways. CoRR, abs/2204.02311, 2022. doi: 10.48550/arXiv.2204.02311. URL https://doi.org/10.48550/arXiv.2204.02311.

Chowdhery, A., Narang, S., Devlin, J., Bosma, M., Mishra, G., Roberts, A., Barham, P., Chung, H. W., Sutton, C., Gehrmann, S., Schuh, P., Shi, K., Tsvyashchenko, S., Maynez, J., Rao, A., Barnes, P., Tay, Y., Shazeer, N., Prabhakaran, V., Reif, E., Du, N., Hutchinson, B., Pope, R., Bradbury, J., Austin, J., Isard, M., Gur-Ari, G., Yin, P., Duke, T., Levskaya, A., Ghemawat, S., Dev, S., Michalewski, H., Garcia, X., Misra, V., Robinson, K., Fedus, L., Zhou, D., Ippolito, D., Luan, D., Lim, H., Zoph, B., Spiridonov, A., Sepassi, R., Dohan, D., Agrawal, S., Omernick, M., Dai, A. M., Pillai, T. S., Pellat, M., Lewkowycz, A., Moreira, E., Child, R., Polozov, O., Lee, K., Zhou, Z., Wang, X., Saeta, B., Diaz, M., Firat, O., Catasta, M., Wei, J., Meier-Hellstern, K., Eck, D., Dean, J., Petrov, S. 和 Fiedel, N. PaLM: 使用Pathways扩展语言建模。CoRR, abs/2204.02311, 2022. doi: 10.48550/arXiv.2204.02311. URL https://doi.org/10.48550/arXiv.2204.02311.

Chung, H. W., Hou, L., Longpre, S., Zoph, B., Tay, Y., Fedus, W., Li, E., Wang, X., Dehghani, M., Brahma, S., et al. Scaling instruction-finetuned language models. arXiv preprint arXiv:2210.11416, 2022.

Chung, H. W., Hou, L., Longpre, S., Zoph, B., Tay, Y., Fedus, W., Li, E., Wang, X., Dehghani, M., Brahma, S., 等. 指令微调语言模型的规模化研究. arXiv预印本 arXiv:2210.11416, 2022.

Devlin, J., Chang, M., Lee, K., and Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Burstein, J., Doran, C., and Solorio, T. (eds.), Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, June 2-7, 2019, Volume 1 (Long and Short Papers), pp. 4171–4186. Association for Computational Linguistics, 2019. doi: 10.18653/v1/n19-1423. URL https://doi.org/10.18653/v1/n19-1423.

Devlin, J., Chang, M., Lee, K., 和 Toutanova, K. BERT: 面向语言理解的深度双向Transformer预训练。载于 Burstein, J., Doran, C., 和 Solorio, T. (编), 《2019年北美计算语言学协会会议: 人类语言技术论文集》, NAACL-HLT 2019, 美国明尼苏达州明尼阿波利斯, 2019年6月2-7日, 第1卷(长文与短文), 第4171–4186页。计算语言学协会, 2019年。doi: 10.18653/v1/n19-1423。URL https://doi.org/10.18653/v1/n19-1423。

Fabbri, A. R., Kryscinski, W., McCann, B., Xiong, C., Socher, R., and Radev, D. R. Summeval: Reevaluating summarization evaluation. Trans. Assoc. Comput. Linguistics, 9:391–409, 2021. doi: 10.1162/tacl_a_00373. URL https://doi.org/10.1162/tacl_a_00373.

Fabbri, A. R., Kryscinski, W., McCann, B., Xiong, C., Socher, R., 和 Radev, D. R. Summeval: 重新评估摘要生成评测方法。Trans. Assoc. Comput. Linguistics, 9:391–409, 2021. doi: 10.1162/tacl_a_00373. URL https://doi.org/10.1162/tacl_a_00373.

Freitag, M., Foster, G. F., Grangier, D., Ratnakar, V., Tan, Q., and Macherey, W. Experts, errors, and context: A large-scale study of human evaluation for machine translation. CoRR, abs/2104.14478, 2021. URL https://arxiv.org/abs/2104.14478.

Freitag, M., Foster, G. F., Grangier, D., Ratnakar, V., Tan, Q., and Macherey, W. 专家、错误与语境:一项关于机器翻译人类评估的大规模研究。CoRR, abs/2104.14478, 2021. URL https://arxiv.org/abs/2104.14478.

Fu, J., Ng, S.-K., and Liu, P. Polyglot prompt: Multilingual multitask PrompTraining. arXiv preprint arXiv:2204.14264, 2022.

Grusky, M., Naaman, M., and Artzi, Y. Newsroom: A dataset of 1.3 million summaries with diverse extractive strategies. In Walker, M. A., Ji, H., and Stent, A. (eds.), Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2018, New Orleans, Louisiana, USA, June 1-6, 2018, Volume 1 (Long Papers), pp. 708–719. Association for Computational Linguistics, 2018. doi: 10.18653/v1/n18-1065. URL https://doi.org/10.18653/v1/n18-1065.

Hermann, K. M., Kociský, T., Grefenstette, E., Espeholt, L., Kay, W., Suleyman, M., and Blunsom, P. Teaching machines to read and comprehend. In Cortes, C., Lawrence, N. D., Lee, D. D., Sugiyama, M., and Garnett, R. (eds.), Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, December 7-12, 2015, Montreal, Quebec, Canada, pp. 1693–1701, 2015. URL https://proceedings.neurips.cc/paper/2015/hash/afdec7005cc9f14302cd0474fd0f3c96-Abstract.html.

Hu, J. E., Singh, A., Holzenberger, N., Post, M., and Durme, B. V. Large-scale, diverse, paraphrastic bitexts via sampling and clustering. In Bansal, M. and Villavicencio, A. (eds.), Proceedings of the 23rd Conference on Computational Natural Language Learning, CoNLL 2019, Hong Kong, China, November 3-4, 2019, pp. 44–54. Association for Computational Linguistics, 2019. doi: 10.18653/v1/K19-1005. URL https://doi.org/10.18653/v1/K19-1005.

Kusner, M. J., Sun, Y., Kolkin, N. I., and Weinberger, K. Q. From word embeddings to document distances. In Bach, F. R. and Blei, D. M. (eds.), Proceedings of the 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6-11 July 2015, volume 37 of JMLR Workshop and Conference Proceedings, pp. 957–966. JMLR.org, 2015. URL http://proceedings.mlr.press/v37/kusnerb15.html.

Lewis, M., Liu, Y., Goyal, N., Ghazvininejad, M., Mohamed, A., Levy, O., Stoyanov, V., and Zettlemoyer, L. BART: denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. In Jurafsky, D., Chai, J., Schluter, N., and Tetreault, J. R. (eds.), Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, July 5-10, 2020, pp. 7871–7880. Association for Computational Linguistics, 2020. doi: 10.18653/v1/2020.acl-main.703. URL https://doi.org/10.18653/v1/2020.acl-main.703.

Li, Z., Zhang, J., Fei, Z., Feng, Y., and Zhou, J. Conversations are not flat: Modeling the dynamic information flow across dialogue utterances. In Zong, C., Xia, F., Li, W., and Navigli, R. (eds.), Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP 2021, (Volume 1: Long Papers), Virtual Event, August 1-6, 2021, pp. 128–138. Association for Computational Linguistics, 2021. doi: 10.18653/v1/2021.acl-long.11. URL https://doi.org/10.18653/v1/2021.acl-long.11.

Lin, C.-Y. Rouge: A package for automatic evaluation of summaries. In Text summarization branches out, pp. 74–81, 2004.

Liu, P., Yuan, W., Fu, J., Jiang, Z., Hayashi, H., and Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. arXiv preprint arXiv:2107.13586, 2021.

Liu, Y., Ott, M., Goyal, N., Du, J., Joshi, M., Chen, D., Levy, O., Lewis, M., Zettlemoyer, L., and Stoyanov, V. Roberta: A robustly optimized BERT pretraining approach. CoRR, abs/1907.11692, 2019. URL http://arxiv.org/abs/1907.11692.

Liu, Y., Fabbri, A. R., Liu, P., Zhao, Y., Nan, L., Han, R., Han, S., Joty, S., Wu, C.-S., Xiong, C., et al. Revisiting the gold standard: Grounding summarization evaluation with robust human evaluation. arXiv preprint arXiv:2212.07981, 2022.

Mairesse, F., Gasic, M., Jurcícek, F., Keizer, S., Thomson, B., Yu, K., and Young, S. J. Phrase-based statistical language generation using graphical models and active learning. In Hajic, J., Carberry, S., and Clark, S. (eds.), ACL 2010, Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics, July 11-16, 2010, Uppsala, Sweden, pp. 1552–1561. The Association for Computer Linguistics, 2010. URL https://aclanthology.org/P10-1157/.

Matiana, S., Smith, J. R., Teehan, R., Castricato, L., Biderman, S., Gao, L., and Frazier, S. Cut the CARP: fishing for zero-shot story evaluation. CoRR, abs/2110.03111, 2021. URL https://arxiv.org/abs/2110.03111.

Mehri, S. and Eskénazi, M. Unsupervised evaluation of interactive dialog with dialogpt. In Pietquin, O., Muresan, S., Chen, V., Kennington, C., Vandyke, D., Dethlefs, N., Inoue, K., Ekstedt, E., and Ultes, S. (eds.), Proceedings of the 21th Annual Meeting of the Special Interest Group on Discourse and Dialogue, SIGdial 2020, 1st virtual meeting, July 1-3, 2020, pp. 225–235. Association for Computational Linguistics, 2020. URL https://aclanthology.org/2020.sigdial-1.28/.

Min, S., Lyu, X., Holtzman, A., Artetxe, M., Lewis, M., Hajishirzi, H., and Zettlemoyer, L. Rethinking the role of demonstrations: What makes in-context learning work? CoRR, abs/2202.12837, 2022. URL https://arxiv.org/abs/2202.12837.

Mukaka, M. M. A guide to appropriate use of correlation coefficient in medical research. Malawi medical journal, 24(3):69–71, 2012.

Nenkova, A. and Passonneau, R. Evaluating content selection in summarization: The pyramid method. In Proceedings of the Human Language Technology Conference of the North American Chapter of the Association for Computational Linguistics: HLT-NAACL 2004, pp. 145–152, Boston, Massachusetts, USA, May 2 - May 7, 2004. Association for Computational Linguistics. URL https://aclanthology.org/N04-1019.

Ouyang, L., Wu, J., Jiang, X., Almeida, D., Wainwright, C. L., Mishkin, P., Zhang, C., Agarwal, S., Slama, K., Ray, A., et al. Training language models to follow instructions with human feedback. arXiv preprint arXiv:2203.02155, 2022.

Pang, B., Nijkamp, E., Han, W., Zhou, L., Liu, Y., and Tu, K. Towards holistic and automatic evaluation of open-domain dialogue generation. In Jurafsky, D., Chai, J., Schluter, N., and Tetreault, J. R. (eds.), Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, July 5-10, 2020, pp. 3619–3629. Association for Computational Linguistics, 2020. doi: 10.18653/v1/2020.acl-main.333. URL https://doi.org/10.18653/v1/2020.acl-main.333.

Papineni, K., Roukos, S., Ward, T., and Zhu, W. Bleu: a method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, July 6-12, 2002, Philadelphia, PA, USA, pp. 311–318. ACL, 2002. doi: 10.3115/1073083.1073135. URL https://aclanthology.org/P02-1040/.

Popovic, M. chrf: character n-gram f-score for automatic MT evaluation. In Proceedings of the Tenth Workshop on Statistical Machine Translation, WMT@EMNLP 2015, 17-18 September 2015, Lisbon, Portugal, pp. 392–395. The Association for Computer Linguistics, 2015. doi: 10.18653/v1/w15-3049. URL https://doi.org/10.18653/v1/w15-3049.

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., Sutskever, I., et al. Language models are unsupervised multitask learners. OpenAI blog, 1(8):9, 2019.

Raffel, C., Shazeer, N., Roberts, A., Lee, K., Narang, S., Matena, M., Zhou, Y., Li, W., and Liu, P. J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res., 21:140:1–140:67, 2020. URL http://jmlr.org/papers/v21/20-074.html.

Rei, R., Stewart, C., Farinha, A. C., and Lavie, A. COMET: A neural framework for MT evaluation. CoRR, abs/2009.09025, 2020. URL https://arxiv.org/abs/2009.09025.

Sanh, V., Webson, A., Raffel, C., Bach, S. H., Sutawika, L., Alyafeai, Z., Chaffin, A., Stiegler, A., Scao, T. L., Raja, A., et al. Multitask prompted training enables zero-shot task generalization. arXiv preprint arXiv:2110.08207, 2021.
