G-EVAL: NLG Evaluation using GPT-4 with Better Human Alignment

Yang Liu Dan Iter Yichong Xu Shuohang Wang Ruochen Xu Chenguang Zhu

Microsoft Cognitive Services Research {yaliu10, iterdan, yicxu, shuowa, ruox, chezhu}@microsoft.com

Abstract

The quality of texts generated by natural language generation (NLG) systems is hard to measure automatically. Conventional reference-based metrics, such as BLEU and ROUGE, have been shown to have relatively low correlation with human judgments, especially for tasks that require creativity and diversity. Recent studies suggest using large language models (LLMs) as reference-free metrics for NLG evaluation, which have the benefit of being applicable to new tasks that lack human references. However, these LLM-based evaluators still have lower human correspondence than medium-size neural evaluators. In this work, we present G-EVAL, a framework that uses large language models with chain-of-thoughts (CoT) and a form-filling paradigm to assess the quality of NLG outputs. We experiment with two generation tasks, text summarization and dialogue generation. We show that G-EVAL with GPT-4 as the backbone model achieves a Spearman correlation of 0.514 with human judgments on the summarization task, outperforming all previous methods by a large margin. We also analyze the behavior of LLM-based evaluators and highlight the potential concern that they are biased towards LLM-generated texts.

1 Introduction

Evaluating the quality of natural language generation systems is a challenging problem even when large language models can generate high-quality and diverse texts that are often indistinguishable from human-written texts (Ouyang et al., 2022). Traditional automatic metrics, such as BLEU (Papineni et al., 2002), ROUGE (Lin, 2004), and METEOR (Banerjee and Lavie, 2005), are widely used for NLG evaluation, but they have been shown to have relatively low correlation with human judgments, especially for open-ended generation tasks. Moreover, these metrics require associated reference outputs, which are costly to collect for new tasks.

Recent studies propose directly using LLMs as reference-free NLG evaluators (Fu et al., 2023; Wang et al., 2023). The idea is to use the LLMs to score the candidate output based on its generation probability without any reference target, under the assumption that the LLMs have learned to assign higher probabilities to high-quality and fluent texts. However, the validity and reliability of using LLMs as NLG evaluators have not been systematically investigated. In addition, meta-evaluations show that these LLM-based evaluators still have lower human correspondence than medium-size neural evaluators (Zhong et al., 2022). Thus, there is a need for a more effective and reliable framework for using LLMs for NLG evaluation.

In this paper, we propose G-EVAL, a framework that uses LLMs with chain-of-thoughts (CoT) (Wei et al., 2022) to evaluate the quality of generated texts in a form-filling paradigm. By feeding only the Task Introduction and the Evaluation Criteria as a prompt, we ask LLMs to generate a CoT of detailed Evaluation Steps. Then we use the prompt along with the generated CoT to evaluate the NLG outputs. The evaluator output is formatted as a form. Moreover, the probabilities of the output rating tokens can be used to refine the final metric. We conduct extensive experiments on three meta-evaluation benchmarks of two NLG tasks: text summarization and dialogue generation. The results show that G-EVAL can outperform existing NLG evaluators by a large margin in terms of correlation with human evaluations. Finally, we analyze the behavior of LLM-based evaluators and highlight the potential issue of LLM-based evaluators having a bias towards LLM-generated texts.

Figure 1: The overall framework of G-EVAL. We first input Task Introduction and Evaluation Criteria to the LLM, and ask it to generate a CoT of detailed Evaluation Steps. Then we use the prompt along with the generated CoT to evaluate the NLG outputs in a form-filling paradigm. Finally, we use the probability-weighted summation of the output scores as the final score.

To summarize, our main contributions in this paper are:

2 Method

G-EVAL is a prompt-based evaluator with three main components: 1) a prompt that contains the definition of the evaluation task and the desired evaluation criteria, 2) a chain-of-thoughts (CoT) that is a set of intermediate instructions generated by the LLM describing the detailed evaluation steps, and 3) a scoring function that calls LLM and calculates the score based on the probabilities of the return tokens.

Prompt for NLG Evaluation. The prompt is a natural language instruction that defines the evaluation task and the desired evaluation criteria. For example, for text summarization, the prompt can be:

You will be given one summary written for a news article. Your task is to rate the summary on one metric.

Please make sure you read and understand these instructions carefully. Please keep this document open while reviewing, and refer to it as needed.

The prompt should also contain evaluation criteria customized for the NLG task at hand, such as coherence, conciseness, or grammar. For example, for evaluating coherence in text summarization, we add the following content to the prompt:

Evaluation Criteria:

Coherence (1-5) - the collective quality of all sentences. We align this dimension with the DUC quality question of structure and coherence whereby “the summary should be well-structured and well-organized. The summary should not just be a heap of related information, but should build from sentence to sentence to a coherent body of information about a topic.”

Auto Chain-of-Thoughts for NLG Evaluation. The chain-of-thoughts (CoT) is a sequence of intermediate representations that are generated by the LLM during the text generation process. For evaluation tasks, some criteria need more detailed evaluation instructions beyond the simple definition, and it is time-consuming to manually design such evaluation steps for each task. We find that the LLM can generate such evaluation steps by itself. The CoT can provide more context and guidance for the LLM to evaluate the generated text, and can also help to explain the evaluation process and results. For example, for evaluating coherence in text summarization, we add a line of “Evaluation Steps:” to the prompt and let the LLM generate the following CoT automatically:

1. Read the news article carefully and identify the main topic and key points.
2. Read the summary and compare it to the news article. Check if the summary covers the main topic and key points of the news article, and if it presents them in a clear and logical order.
3. Assign a score for coherence on a scale of 1 to 5, where 1 is the lowest and 5 is the highest based on the Evaluation Criteria.

Scoring Function. The scoring function calls the LLM with the designed prompt, the auto CoT, the input context, and the target text that needs to be evaluated. Unlike GPTScore (Fu et al., 2023), which uses the conditional probability of generating the target text as an evaluation metric, G-EVAL directly performs the evaluation task with a form-filling paradigm. For example, for evaluating coherence in text summarization, we concatenate the prompt, the CoT, the news article, and the summary, and then call the LLM to output a score from 1 to 5 for each evaluation aspect, based on the defined criteria.
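The two prompts described in this section can be sketched as plain string templates. This is a minimal illustration, not the authors' implementation: the function names, the section labels "Source Text:" and "Summary:", and the closing "Evaluation Form (scores ONLY):" slot are assumptions made for the example. Only the "Evaluation Criteria:" and "Evaluation Steps:" lines come from the text above.

```python
def build_cot_prompt(task_intro: str, criteria: str) -> str:
    """Prompt that asks the LLM to write its own evaluation steps (auto CoT)."""
    return (
        f"{task_intro}\n\n"
        f"Evaluation Criteria:\n\n{criteria}\n\n"
        "Evaluation Steps:\n"
    )


def build_scoring_prompt(
    task_intro: str,
    criteria: str,
    steps: str,
    article: str,
    summary: str,
    aspect: str = "Coherence",
) -> str:
    """Concatenate prompt, CoT, article and summary, ending with an open form slot."""
    return (
        f"{task_intro}\n\n"
        f"Evaluation Criteria:\n\n{criteria}\n\n"
        f"Evaluation Steps:\n\n{steps}\n\n"
        f"Source Text:\n\n{article}\n\n"   # hypothetical label
        f"Summary:\n\n{summary}\n\n"       # hypothetical label
        f"Evaluation Form (scores ONLY):\n\n- {aspect}:"  # hypothetical form slot
    )
```

The first prompt would be sent once per task and aspect to obtain the evaluation steps. The second would then be sent once per (article, summary) pair, with the model expected to complete the form slot with a rating.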

However, we notice this direct scoring function has two issues:

To address these issues, we propose using the probabilities of output tokens from LLMs to normalize the scores and take their weighted summation as the final result. Formally, given a set of scores (e.g., from 1 to 5) predefined in the prompt, $S=\{s_{1},s_{2},\dots,s_{n}\}$, the probability of each score $p(s_{i})$ is calculated by the LLM, and the final score is:

$$
\mathrm{score}=\sum_{i=1}^{n}p(s_{i})\times s_{i}
$$

This method obtains more fine-grained, continuous scores that better reflect the quality and diversity of the generated texts.
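As a worked instance of the formula, the following sketch computes the probability-weighted score for one hypothetical rating distribution. The function name and the example probabilities are invented for illustration, and renormalizing over the rating tokens is an assumption about how leftover probability mass might be handled.

```python
from typing import Mapping


def weighted_score(probs: Mapping[int, float]) -> float:
    """Return sum_i p(s_i) * s_i, renormalizing p over the rating scale."""
    total = sum(probs.values())
    if total <= 0:
        raise ValueError("at least one rating must have positive probability")
    return sum(score * p / total for score, p in probs.items())


# Hypothetical distribution over the ratings 1..5 for a single summary.
example = {1: 0.0, 2: 0.05, 3: 0.25, 4: 0.60, 5: 0.10}
print(round(weighted_score(example), 2))  # 3.75
```

A direct integer rating for this distribution would be 4, the same as for many other summaries. The weighted score of 3.75 separates it from a summary whose probability mass leans towards 5.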

3 Experiments

Following Zhong et al. (2022), we meta-evaluate our evaluator on three benchmarks, SummEval, Topical-Chat and QAGS, of two NLG tasks, summarization and dialogue response generation.

3.1 Implementation Details

We use OpenAI’s GPT family as our LLMs, including GPT-3.5 (text-davinci-003) and GPT-4. For GPT-3.5, we set the decoding temperature to 0 to increase the model’s determinism. For GPT-4, as it does not support the output of token probabilities, we set $n=20$, $\mathit{temperature}=1$, $\mathit{top\_p}=1$ to sample 20 times and estimate the token probabilities. We use G-EVAL-4 to indicate G-EVAL with GPT-4 as the backbone model, and G-EVAL-3.5 to indicate G-EVAL with GPT-3.5 as the backbone model. Example prompts for each task are provided in the Appendix.
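When token probabilities are unavailable, the sampling procedure above amounts to a frequency estimate of $p(s_i)$. The sketch below shows one way this could be done. It assumes each sampled reply is a bare rating string and that unparseable replies are simply discarded; the paper does not specify either detail, and the function name and sample data are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def estimate_probs(samples: Iterable[str], scale=range(1, 6)) -> Dict[int, float]:
    """Estimate p(s_i) as the fraction of valid sampled replies equal to s_i."""
    valid = {str(s): s for s in scale}
    counts = Counter()
    for reply in samples:
        text = reply.strip()
        if text in valid:          # assumption: replies that are not a rating are dropped
            counts[valid[text]] += 1
    n = sum(counts.values())
    if n == 0:
        raise ValueError("no sample contained a valid rating")
    return {s: counts[s] / n for s in valid.values()}


# Hypothetical replies from 20 samples drawn with n=20, temperature=1, top_p=1.
replies = ["4"] * 12 + ["3"] * 5 + ["5"] * 2 + ["N/A"]
probs = estimate_probs(replies)
score = sum(s * p for s, p in probs.items())  # probability-weighted rating
```

With 20 samples the estimated probabilities move in steps of about 0.05, so the resulting score is only an approximation of the one that true token probabilities would give.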

| Metrics | Coherence $\rho$ | Coherence $\tau$ | Consistency $\rho$ | Consistency $\tau$ | Fluency $\rho$ | Fluency $\tau$ | Relevance $\rho$ | Relevance $\tau$ | AVG $\rho$ | AVG $\tau$ |
|---|---|---|---|---|---|---|---|---|---|---|
| ROUGE-1 | 0.167 | 0.126 | 0.160 | 0.130 | 0.115 | 0.094 | 0.326 | 0.252 | 0.192 | 0.150 |
| ROUGE-2 | 0.184 | 0.139 | 0.187 | 0.155 | 0.159 | 0.128 | 0.290 | 0.219 | 0.205 | 0.161 |
| ROUGE-L | 0.128 | 0.099 | 0.115 | 0.092 | 0.105 | 0.084 | 0.311 | 0.237 | 0.165 | 0.128 |
| BERTScore | 0.284 | 0.211 | 0.110 | 0.090 | 0.193 | 0.158 | 0.312 | 0.243 | 0.225 | 0.175 |
| MoverScore | 0.159 | 0.118 | 0.157 | 0.127 | 0.129 | 0.105 | 0.318 | 0.244 | 0.191 | 0.148 |
| BARTScore | 0.448 | 0.342 | 0.382 | 0.315 | 0.356 | 0.292 | 0.356 | 0.273 | 0.385 | 0.305 |
| UniEval | 0.575 | 0.442 | 0.446 | 0.371 | 0.449 | 0.371 | 0.426 | 0.325 | 0.474 | 0.377 |
| GPTScore | 0.434 | - | 0.449 | - | 0.403 | - | 0.381 | - | 0.417 | - |
| G-EVAL-3.5 | 0.440 | 0.335 | 0.386 | 0.318 | 0.424 | 0.347 | 0.385 | 0.293 | 0.401 | 0.320 |
| *- Probs* | 0.359 | 0.313 | 0.361 | 0.344 | 0.339 | 0.323 | 0.327 | 0.288 | 0.346 | 0.317 |
| G-EVAL-4 | 0.582 | 0.457 | 0.507 | 0.425 | 0.455 | 0.378 | 0.547 | 0.433 | 0.514 | 0.418 |
| *- Probs* | 0.560 | 0.472 | 0.501 | 0.459 | 0.438 | 0.408 | 0.511 | 0.444 | 0.502 | 0.446 |
| - CoT | 0.564 | 0.454 | 0.493 | 0.413 | 0.403 | 0.334 | 0.538 | 0.427 | 0.500 | 0.407 |

Table 1: Summary-level Spearman $(\rho)$ and Kendall-Tau $(\tau)$ correlations of different metrics on SummEval benchmark. G-EVAL without probabilities (italicized) should not be considered as a fair comparison to other metrics on $\tau$ , as it leads to many ties in the scores. This results in a higher Kendall-Tau correlation, but it does not fairly reflect the true evaluation ability. More details are in Section 4.

3.2 Benchmarks

We adopt three meta-evaluation benchmarks to measure the correlation between G-EVAL and human judgments.

SummEval (Fabbri et al., 2021) is a benchmark that compares different evaluation methods for summarization. It gives human ratings for four aspects of each summary: fluency, coherence, consistency and relevance. It is built on the CNN/DailyMail dataset (Hermann et al., 2015).

Topical-Chat (Mehri and Eskenazi, 2020) is a testbed for meta-evaluating different evaluators on dialogue response generation systems that use knowledge. Following Zhong et al. (2022), we use its human ratings on four aspects: naturalness, coherence, engagingness and groundedness.

QAGS (Wang et al., 2020) is a benchmark for evaluating hallucinations in the summarization task. It aims to measure the consistency dimension of summaries on two different summarization datasets.

3.3 Baselines

We evaluate G-EVAL against various evaluators that achieved state-of-the-art performance.

BERTScore (Zhang et al., 2019) measures the similarity between two texts based on the contextualized embedding from BERT (Devlin et al., 2019).

MoverScore (Zhao et al., 2019) improves BERTScore by adding soft alignments and new aggregation methods to obtain a more robust similarity measure.

BARTScore (Yuan et al., 2021) is a unified evaluator that scores text using the average likelihood under the pretrained encoder-decoder model BART (Lewis et al., 2020). It can predict different scores depending on the formats of source and target.

FactCC and QAGS (Kryscinski et al., 2020; Wang et al., 2020) are two evaluators that measure the factual consistency of generated summaries. FactCC is a BERT-based classifier that predicts whether a summary is consistent with the source document. QAGS is a question-answering based evaluator that generates questions from the summary and checks if the answers can be found in the source document.

USR (Mehri and Eskenazi, 2020) is an evaluator that assesses dialogue response generation from different perspectives. It has several versions that assign different scores to each target response.

UniEval (Zhong et al., 2022) is a unified evaluator that can evaluate different aspects of text generation as QA tasks. It uses a pretrained T5 model (Raffel et al., 2020) to encode the evaluation task, source and target texts as questions and answers, and then computes the QA score as the evaluation score. It can also handle different evaluation tasks by changing the question format.

GPTScore (Fu et al., 2023) is a new framework that evaluates texts with generative pre-training models like GPT-3. It assumes that a generative pre-training model will assign a higher probability to high-quality generated text following a given instruction and context. Unlike G-EVAL, GPTScore formulates the evaluation task as a conditional generation problem instead of a form-filling problem.

3.4 Results for Summarization

We adopt the same approach as Zhong et al. (2022) to evaluate different summarization metrics using summary-level Spearman and Kendall-Tau correlation. The first part of Table 1 shows the results of metrics that compare the semantic similarity between the model output and the reference text. These metrics perform poorly on most dimensions. The second part shows the results of metrics that use neural networks to learn from human ratings of summary quality. These metrics have much higher correlations than the similarity-based metrics, suggesting that they are more reliable for summarization evaluation.
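The text does not spell out how the summary-level correlation is computed. The sketch below assumes the common convention that a correlation is computed per source document across the summaries produced by different systems and then averaged over documents. The function names and toy scores are hypothetical, and Spearman's $\rho$ is implemented directly (Pearson correlation on average ranks) to keep the example dependency-free.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def summary_level_spearman(metric_scores, human_scores):
    """Average the per-document Spearman correlation over all documents."""
    return mean(spearman(m, h) for m, h in zip(metric_scores, human_scores))


# Two hypothetical documents, each with summaries from four systems.
metric = [[0.9, 0.4, 0.6, 0.1], [0.2, 0.8, 0.5, 0.7]]
human = [[5, 2, 4, 1], [1, 4, 3, 5]]
print(round(summary_level_spearman(metric, human), 3))  # 0.9
```

In the toy data the metric ranks the first document's summaries exactly as the human ratings do ($\rho=1$) and swaps one adjacent pair in the second ($\rho=0.8$), giving an average of 0.9.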

In the last part of Table 1, which corresponds to GPT-based evaluators, GPTScore also uses GPTs to evaluate summaries but relies on GPT’s conditional probabilities of the given target. G-EVAL-4 substantially surpasses all previous state-of-the-art evaluators on the SummEval benchmark. G-EVAL-4 achieves much higher human correspondence than G-EVAL-3.5 on both Spearman and Kendall-Tau correlation, which indicates that the larger model size of GPT-4 is beneficial for summarization evaluation. G-EVAL also outperforms GPTScore on several dimensions, demonstrating the effectiveness of the simple form-filling paradigm.

3.5 Results for Dialogue Generation

We use the Topical-Chat benchmark from Mehri and Eskenazi (2020) to measure how well different evaluators agree with human ratings on the quality of dialogue responses. We calculate the Pearson and Spearman correlation for each turn of the dialogue. Table 2 shows that similarity-based metrics have good agreement with humans on how engaging and grounded the responses are, but not on the other aspects. With respect to the learning-based evaluators, before G-EVAL, UniEval predicts scores that are most consistent with human judgments across all aspects.

As shown in the last part, G-EVAL also substantially surpasses all previous state-of-the-art evaluators on the Topical-Chat benchmark. Notably, G-EVAL-3.5 achieves results similar to those of G-EVAL-4. This indicates that this benchmark is relatively easy for the G-EVAL model.

3.6 Results on Hallucinations

Advanced NLG models often produce text that does not match the context input (Cao et al., 2018), and recent studies find that even powerful LLMs suffer from the problem of hallucination. This has motivated recent research on evaluators that measure the consistency aspect in summarization (Kryscinski et al., 2020; Wang et al., 2020; Cao et al., 2020; Durmus et al., 2020). We test on the QAGS meta-evaluation benchmark, which includes two different summarization datasets: CNN/DailyMail and XSum (Narayan et al., 2018). Table 3 shows that BARTScore performs well on the more extractive subset (QAGS-CNN), but has low correlation on the more abstractive subset (QAGS-Xsum). UniEval has good correlation on both subsets of the data.

On average, G-EVAL-4 outperforms all state-of-the-art evaluators on QAGS, with a large margin on QAGS-Xsum. G-EVAL-3.5, on the other hand, failed to perform well on this benchmark, which indicates that the consistency aspect is sensitive to the LLM’s capacity. This result is consistent with Table 1.

4 Analysis

| Metrics | Naturalness $r$ | Naturalness $\rho$ | Coherence $r$ | Coherence $\rho$ | Engagingness $r$ | Engagingness $\rho$ | Groundedness $r$ | Groundedness $\rho$ | AVG $r$ | AVG $\rho$ |
|---|---|---|---|---|---|---|---|---|---|---|
| ROUGE-L | 0.176 | 0.146 | 0.193 | 0.203 | 0.295 | 0.300 | 0.310 | 0.327 | 0.243 | 0.244 |
| BLEU-4 | 0.180 | 0.175 | 0.131 | 0.235 | 0.232 | 0.316 | 0.213 | 0.310 | 0.189 | 0.259 |
| METEOR | 0.212 | 0.191 | 0.250 | 0.302 | 0.367 | 0.439 | 0.333 | 0.391 | 0.290 | 0.331 |
| BERTScore | 0.226 | 0.209 | 0.214 | 0.233 | 0.317 | 0.335 | 0.291 | 0.317 | 0.262 | 0.273 |
| USR | 0.337 | 0.325 | 0.416 | 0.377 | 0.456 | 0.465 | 0.222 | 0.447 | 0.358 | 0.403 |
| UniEval | 0.455 | 0.330 | 0.602 | 0.455 | 0.573 | 0.430 | 0.577 | 0.453 | 0.552 | 0.417 |
| G-EVAL-3.5 | 0.532 | 0.539 | 0.519 | 0.544 | 0.660 | 0.691 | 0.586 | 0.567 | 0.574 | 0.585 |
| G-EVAL-4 | 0.549 | 0.565 | 0.594 | 0.605 | 0.627 | 0.631 | 0.531 | 0.551 | 0.575 | 0.588 |

Table 2: Turn-level Pearson $(r)$ and Spearman $(\rho)$ correlations of different metrics on Topical-Chat benchmark.

Will G-EVAL prefer LLM-based outputs? One concern about using an LLM as an evaluator is that it may prefer the outputs generated by the LLM itself, rather than high-quality human-written texts. To investigate this issue, we conduct an experiment on the summarization task, where we compare the evaluation scores of LLM-generated and human-written summaries. We use the dataset collected in Zhang et al. (2023), where they first ask freelance writers to write high-quality summaries for news articles, and then ask annotators to compare human-written summaries and LLM-generated summaries (using GPT-3.5, text-davinci-003).

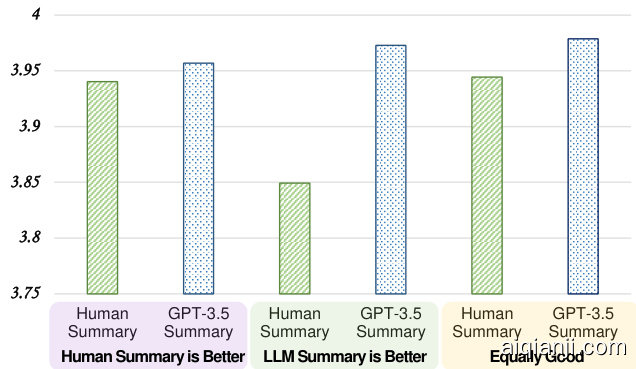

The dataset can be divided into three categories: 1) human-written summaries that are rated higher than GPT-3.5 summaries by human judges, 2) human-written summaries that are rated lower than GPT-3.5 summaries by human judges, and 3) human-written summaries that are rated as equally good as GPT-3.5 summaries by human judges. We use G-EVAL-4 to evaluate the summaries in each category, and compare the averaged scores.

The results are shown in Figure 2. We can see that G-EVAL-4 assigns higher scores to human-written summaries when human judges also prefer human-written summaries, and assigns lower scores when human judges prefer GPT-3.5 summaries. However, G-EVAL-4 always gives higher scores to GPT-3.5 summaries than to human-written summaries, even when human judges prefer human-written summaries. We propose two potential reasons for this phenomenon:

- NLG outputs from high-quality systems are inherently difficult to evaluate. The authors of the original paper found that inter-annotator agreement on judging human-written and LLM-generated summaries is very low, with Krippendorff’s alpha at 0.07.

- G-EVAL may have a bias towards the LLM-generated summaries because the model could share the same concept of evaluation criteria during generation and evaluation.

Our work should be considered a preliminary study on this issue, and more research is needed to fully understand the behavior of LLM-based evaluators and to reduce their inherent bias towards LLM-generated text. We highlight this concern because LLM-based evaluators may lead to self-reinforcement of LLMs if the evaluation score is used as a reward signal for further tuning. This could result in the LLMs over-fitting to their own evaluation criteria, rather than the true evaluation criteria of the NLG tasks.

Figure 2: Averaged G-EVAL-4 scores for human-written summaries and GPT-3.5 summaries, divided by human judges’ preference.

The Effect of Chain-of-Thoughts. We compare the performance of G-EVAL with and without chain-of-thoughts (CoT) on the SummEval benchmark. Table 1 shows that G-EVAL-4 with CoT has higher correlation than G-EVAL-4 without CoT on all dimensions, especially for fluency. This suggests that CoT can provide more context and guidance for the LLM to evaluate the generated text, and can also help to explain the evaluation process and results.

The Effect of Probability Normalization. We compare the performance of G-EVAL with and without probability normalization on the SummEval benchmark. Table 1 shows that, on Kendall-Tau correlation, G-EVAL-4 with probabilities is inferior to G-EVAL-4 without probabilities on SummEval. We believe this is related to the calculation of Kendall-Tau correlation, which is based on the number of concordant and discordant pairs. Direct scoring without probabilities can lead to many ties, which are not counted as either concordant or discordant. This may result in a higher Kendall-Tau correlation, but it does not reflect the model’s true capacity for evaluating the generated texts. On the other hand, probability normalization yields more fine-grained, continuous scores that better capture the subtle differences between generated texts. This is reflected by the higher Spearman correlation of G-EVAL-4 with probabilities, which is based on the rank order of the scores.
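The effect of ties can be reproduced on a toy example. The sketch below assumes the tau-b variant of Kendall's coefficient, which excludes tied pairs from the denominator; the text does not state which variant was used, and the scores are invented for illustration.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall tau-b: (C - D) / sqrt((n0 - ties_x) * (n0 - ties_y))."""
    concordant = discordant = ties_x = ties_y = n_pairs = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        n_pairs += 1
        dx, dy = x1 - x2, y1 - y2
        if dx == 0:
            ties_x += 1
        if dy == 0:
            ties_y += 1
        if dx * dy > 0:
            concordant += 1
        elif dx * dy < 0:
            discordant += 1
    denom = sqrt((n_pairs - ties_x) * (n_pairs - ties_y))
    if denom == 0:
        raise ValueError("tau-b is undefined when one input is constant")
    return (concordant - discordant) / denom


human = [1, 2, 3, 4, 5, 6]                   # hypothetical human ranking
fine = [2.2, 2.1, 3.1, 2.9, 4.2, 4.1]        # hypothetical continuous scores
coarse = [round(s) for s in fine]            # same scores as integers: [2, 2, 3, 3, 4, 4]

tau_fine = kendall_tau_b(fine, human)        # 3 discordant pairs out of 15
tau_coarse = kendall_tau_b(coarse, human)    # those 3 pairs become ties
print(round(tau_fine, 3), round(tau_coarse, 3))  # 0.6 0.894
```

The continuous scores order three adjacent pairs incorrectly, and each is counted as discordant. After rounding, the same three pairs are tied and drop out of the computation, so the coarse scores obtain the higher coefficient even though they carry strictly less information.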

| Metrics | QAGS-CNN $r$ | QAGS-CNN $\rho$ | QAGS-CNN $\tau$ | QAGS-XSUM $r$ | QAGS-XSUM $\rho$ | QAGS-XSUM $\tau$ | Average $r$ | Average $\rho$ | Average $\tau$ |
|---|---|---|---|---|---|---|---|---|---|
| ROUGE-2 | 0.459 | 0.418 | 0.333 | 0.097 | 0.083 | 0.068 | 0.278 | 0.250 | 0.200 |
| ROUGE-L | 0.357 | 0.324 | 0.254 | 0.024 | -0.011 | -0.009 | 0.190 | 0.156 | 0.122 |
| BERTScore | 0.576 | 0.505 | 0.399 | 0.024 | 0.008 | 0.006 | 0.300 | 0.256 | 0.202 |
| MoverScore | 0.414 | 0.347 | 0.271 | 0.054 | 0.044 | 0.036 | 0.234 | 0.195 | 0.153 |
| FactCC | 0.416 | 0.484 | 0.376 | 0.297 | 0.259 | 0.212 | 0.356 | 0.371 | 0.294 |
| QAGS | 0.545 | - | - | 0.175 | - | - | 0.375 | - | - |

| BARTScore | 0.735 | 0.680 | 0.557 | |||||