Med-HALT: Medical Domain Hallucination Test for Large Language Models

Med-HALT: 大语言模型医疗领域幻觉测试

Ankit Pal, Logesh Kumar Umapathi, Malai kann an Sankara sub bu Saama AI Research, Chennai, India ankit.pal, logesh.umapathi, malai kann an.sankara sub bu @saama.com

Ankit Pal, Logesh Kumar Umapathi, Malai kann an Sankara sub bu Saama AI Research, Chennai, India ankit.pal, logesh.umapathi, malai kann an.sankara sub bu @saama.com

Abstract

摘要

This research paper focuses on the challenges posed by hallucinations in large language models (LLMs), particularly in the context of the medical domain. Hallucination, wherein these models generate plausible yet unverified or incorrect information, can have serious consequences in healthcare applications. We propose a new benchmark and dataset, Med-HALT (Medical Domain Hallucination Test), designed specifically to evaluate and reduce hallucinations. Med-HALT provides a diverse multinational dataset derived from medical examinations across various countries and includes multiple innovative testing modalities. Med-HALT includes two categories of tests reasoning and memory-based hallucination tests, designed to assess LLMs’ problem-solving and information retrieval abilities.

本研究论文重点探讨大语言模型(LLM)在医疗领域产生的幻觉(hallucination)问题及其挑战。当这些模型生成看似合理但未经证实或错误的信息时,可能对医疗健康应用造成严重后果。我们提出了一个专为评估和减少幻觉而设计的新基准测试与数据集Med-HALT(Medical Domain Hallucination Test)。该数据集包含来自多国医疗考试的多样化跨国数据,并采用多种创新测试模式。Med-HALT包含推理类和记忆类两大幻觉测试类别,旨在评估大语言模型的问题解决与信息检索能力。

Our study evaluated leading LLMs, including Text Davinci, GPT-3.5, LlaMa-2, MPT, and Falcon, revealing significant differences in their performance. The paper provides detailed insights into the dataset, promoting transparency and reproducibility. Through this work, we aim to contribute to the development of safer and more reliable language models in healthcare. Our benchmark can be found at medhalt.github.io

我们的研究评估了主流大语言模型,包括 Text Davinci、GPT-3.5、LlaMa-2、MPT 和 Falcon,揭示了它们在性能上的显著差异。论文详细阐述了数据集信息,以促进透明度和可复现性。通过这项工作,我们旨在为开发更安全可靠的医疗领域语言模型做出贡献。基准测试结果详见 medhalt.github.io

1 Introduction

1 引言

Advancements in artificial intelligence, particularly in the area of large language models (LLMs) (Agrawal et al., 2022; Radford et al., 2019), have led to transformative applications across various domains, including healthcare (Singhal et al., 2022). These models possess the ability to understand and generate human-like text, by learning patterns from vast corpora of text data. and making them valuable resources for medical professionals, researchers, and students. (Singhal et al., 2023; Han et al., 2023; Li et al., 2023b) Despite their impressive capabilities, they are also subject to unique challenges such as hallucination. (Ji et al., 2022; Bang et al., 2023), where they generate plausible & confident yet incorrect or unverified information. Such hallucinations may be of minimal consequence in casual conversation or other contexts but can pose significant risks when applied to the healthcare sector, where accuracy and reliability are of paramount importance.

人工智能的进步,尤其是大语言模型(LLM)领域的突破(Agrawal et al., 2022; Radford et al., 2019),已在医疗健康(Singhal et al., 2022)等多个领域催生变革性应用。这些模型通过从海量文本数据中学习模式,具备理解和生成类人文本的能力,成为医疗从业者、研究人员和学生的宝贵资源(Singhal et al., 2023; Han et al., 2023; Li et al., 2023b)。尽管能力卓越,它们仍面临诸如幻觉(Ji et al., 2022; Bang et al., 2023)等独特挑战——模型会生成看似合理且自信、实则错误或未经核实的信息。此类幻觉在闲聊等场景可能无关紧要,但在准确性至关重要的医疗健康领域可能引发重大风险。

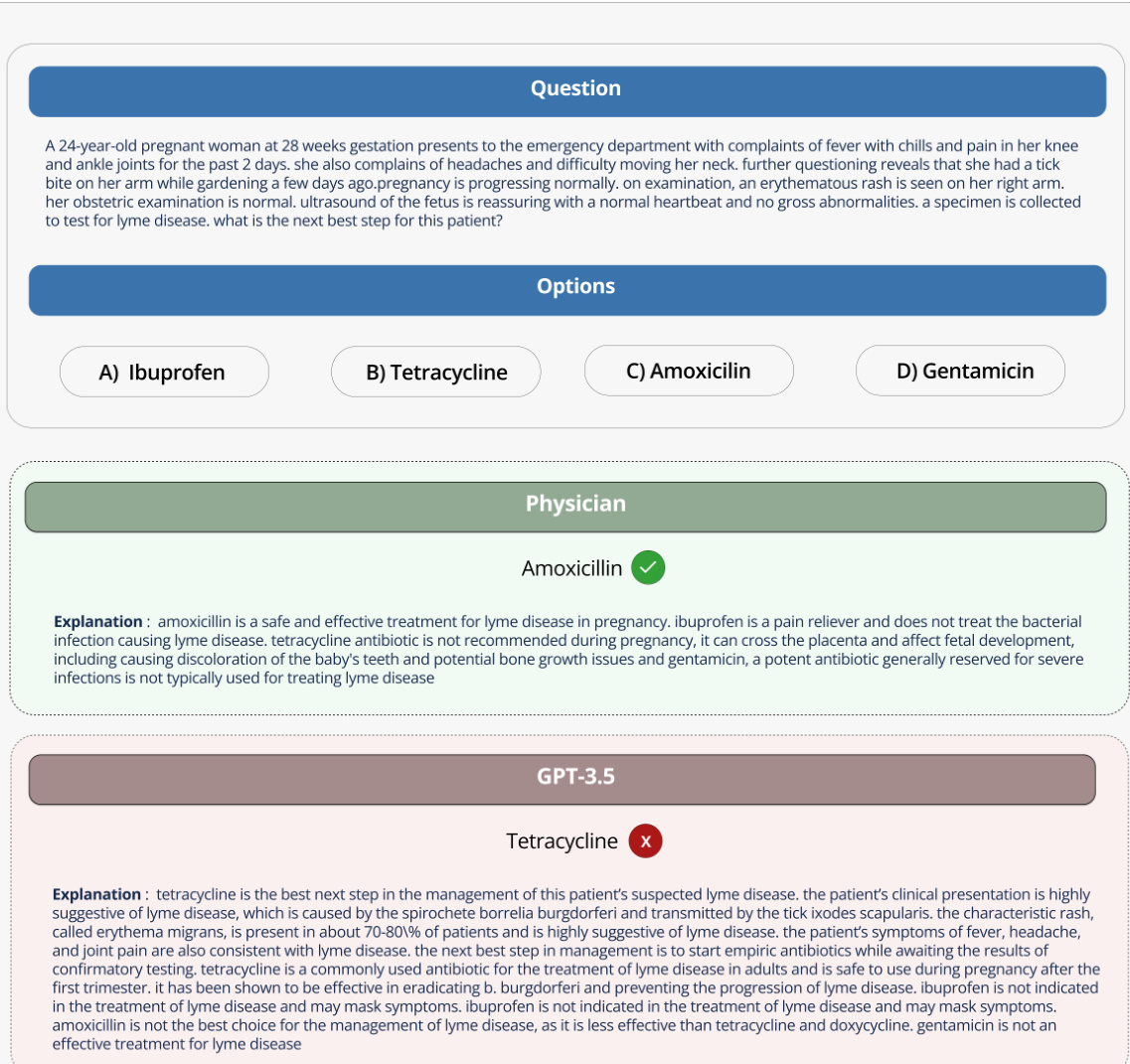

Figure 1: Med-HALT: A new benchmark dataset for LLM to test Hallucination in Medical Domain

图 1: Med-HALT: 用于测试大语言模型在医疗领域幻觉问题的新基准数据集

Misinformation in the medical domain can lead to severe health consequences on patient care and outcomes, the accuracy and reliability of information provided by language models can be a matter of life or death. They pose real-life risks, as they could potentially affect healthcare decisions, diagnosis, and treatment plans. Hence, the development of methods to evaluate and mitigate such hallucinations is not just of academic interest but of practical importance.

医疗领域的错误信息可能对患者护理和结果造成严重的健康后果,语言模型提供信息的准确性和可靠性可能关乎生死。它们会带来现实风险,因为这些信息可能影响医疗决策、诊断和治疗方案。因此,开发评估和缓解此类幻觉(hallucination)的方法不仅具有学术意义,更具有实际重要性。

Efforts have been taken to mitigate the occurrence of hallucinations in large language models (Li et al., $2023\mathrm{a}$ ; Shuster et al., 2021; Liu et al., 2021), but not in the medical field. The purpose of this research work is to address the issue of hallucination in large language models specifically within the medical domain. We propose a novel dataset and benchmark, named Med-HALT (Medical Domain Hallucination Test), a comprehensive evaluation framework designed to measure, and evaluate hallucination in these models. More specifically, It enables researchers to assess the performance of new models, identify and mitigate potential hallucination risks, and ultimately enhance the safety and reliability of these models in critical medical applications.To the best of our knowledge, this dataset and benchmark is the first of its kind to evaluate the hallucinations of LLMs in the medical domain.

为减少大语言模型中的幻觉现象,已有研究展开相关探索 (Li et al., $2023\mathrm{a}$; Shuster et al., 2021; Liu et al., 2021),但尚未涉及医疗领域。本研究旨在针对性解决医疗领域大语言模型的幻觉问题,提出名为Med-HALT(医疗领域幻觉测试)的新型数据集与基准——这是一个用于测量和评估模型幻觉的综合评估框架。该框架使研究人员能够评估新模型性能、识别并降低潜在幻觉风险,最终提升这些模型在关键医疗应用中的安全性与可靠性。据我们所知,这是首个专门评估医疗领域大语言模型幻觉的数据集与基准。

Figure 2: Example of Hallucination Of GPT-3.5

图 2: GPT-3.5的幻觉示例

The Framework is divided into two categories of hallucination tests, namely the reasoning hallucination tests and the memory-based hallucination tests. The former category is designed to assess how well an LLM can reason about a given problem by means of False Confidence Test (FCT), None of the Above (NOTA) Test, and Fake Questions

该框架分为两类幻觉测试,即推理幻觉测试和基于记忆的幻觉测试。前者旨在通过错误信心测试 (FCT) 、以上皆非 (NOTA) 测试和虚假问题评估大语言模型对给定问题的推理能力。

Test (FQT). The memory-based hallucination tests, on the other hand, focus on evaluating the model’s ability to retrieve accurate information from its encoded training data, a critical task in the medical domain where information needs to be accurate, reliable, and easily retrievable.

测试 (FQT)。另一方面,基于记忆的幻觉测试侧重于评估模型从其编码训练数据中检索准确信息的能力,这在医疗领域是一项关键任务,因为信息需要准确、可靠且易于检索。

Throughout this research paper, we evaluate and compare the performance of various large language models, including Text Davinci (Brown et al., 2020), GPT-3.5, LlaMa-2 (Touvron et al., 2023) , MPT (MosaicML, 2023), Falcon (Penedo et al., 2023a). By presenting the results and analysing their strengths and weaknesses, we aim to provide an in-depth analysis of their hallucination tendencies within the medical domain. We hope to contribute to the development of more reliable and trustworthy language models in the medical field. Fig. 1 shows the overview of the framework.

在本研究论文中,我们评估并比较了多种大语言模型的性能,包括Text Davinci (Brown等人,2020)、GPT-3.5、LlaMa-2 (Touvron等人,2023)、MPT (MosaicML,2023)以及Falcon (Penedo等人,2023a)。通过展示结果并分析它们的优缺点,我们旨在深入探讨这些模型在医学领域的幻觉倾向。我们希望为开发更可靠、更值得信赖的医学领域语言模型做出贡献。图1展示了该框架的概览。

In brief, the contributions of this study are as follows

简而言之,本研究的贡献如下

• Proposing New Datasets and Benchmark

• 提出新数据集与基准测试

The study proposes a new benchmark and dataset called Med-HALT, specifically designed to reduce test, and evaluate hallucinations of large language models in the medical domain.

该研究提出了一个名为Med-HALT的新基准和数据集,专门用于减少测试并评估大语言模型在医学领域的幻觉问题。

• Diverse Multinational Medical Examination Dataset The work leverages a uniquely diverse dataset combining multiple choice questions from various medical examinations across Spain, India, the U.S., and Taiwan. The dataset spans across multiple medical subdisciplines, introducing variability and complexity to the hallucination tests.

• 多样化跨国医学考试数据集

该研究利用了一个独特且多样化的数据集,该数据集整合了来自西班牙、印度、美国和台湾地区各类医学考试中的选择题。数据集涵盖多个医学子学科,为幻觉测试引入了多样性和复杂性。

• Innovative Testing Modalities The paper introduces multiple tests including reasoning hallucination tests. Furthermore, the paper also proposes four tests for evaluating the retrieval or fetching capability of large language models from memory.

• 创新测试模式

该论文引入了包括推理幻觉测试在内的多种测试。此外,论文还提出了四项测试,用于评估大语言模型从记忆中的检索或获取能力。

• Rich Dataset Statistics and Detailed Analysis The paper provides comprehensive statistics and insights about the collected dataset from each medical exam across different countries. We have evaluated some of the most advanced language models available such as OpenAI’s Text-Davinci-003, GPT-3.5, Meta’s LlaMA-2 and TIIUAE’s Falcon on our newly proposed tasks.

• 丰富的数据集统计与详细分析

该论文提供了从各国各类医学考试中收集的数据集的全面统计与深入分析。我们在新提出的任务上评估了部分最先进的现有语言模型,包括OpenAI的Text-Davinci-003、GPT-3.5、Meta的LlaMA-2以及TIIUAE的Falcon。

• Contribution to Transparency and Reproducibility The Med-HALT framework, test designs, and dataset statistics will be openly shared, facilitating further research on mitigating hallucination in medical domain language models and promoting reproducibility of the results. Our benchmark can be found at medhalt.github.io

• 透明性与可复现性贡献

Med-HALT框架、测试设计和数据集统计将公开共享,以促进医学领域语言模型幻觉缓解的进一步研究,并提升结果的可复现性。我们的基准测试可在medhalt.github.io获取。

1.1 Task Definition

1.1 任务定义

Reasoning Hallucination Test (RHT) The RHT task is formulated as a set $\mathbf{X}={\mathbf{Q},\mathbf{O}}$ where $\mathbf{Q}$ represents the questions in the sample, $\mathbf{O}$ represents the candidate options $\mathbf{O}=O_ {1},O_ {2},...,O_ {n}$ The output of an evaluated model is $\textbf{y}=$ $y_ {1},y_ {2},\dotsc,y_ {n}$ where $y_ {i}\in0,1$ for $1\leq i\leq n$ . Here, $y_ {i}=1$ indicates the model chooses the appropriate option and $y_ {i}=0$ otherwise. The objective of the RHT task is to measure the likelihood of a model to hallucinate in medical domain-based reasoning by assessing its performance.

推理幻觉测试 (RHT)

RHT任务定义为集合 $\mathbf{X}={\mathbf{Q},\mathbf{O}}$,其中 $\mathbf{Q}$ 表示样本中的问题,$\mathbf{O}$ 表示候选选项 $\mathbf{O}=O_ {1},O_ {2},...,O_ {n}$。被评估模型的输出为 $\textbf{y}=$ $y_ {1},y_ {2},\dotsc,y_ {n}$,其中 $y_ {i}\in0,1$ 且 $1\leq i\leq n$。当 $y_ {i}=1$ 时表示模型选择了正确选项,反之 $y_ {i}=0$。RHT任务的目标是通过评估模型表现,衡量其在医学领域推理中产生幻觉的可能性。

Memory Hallucination Test (MHT) The MHT task can be described as a set ${\bf X}={{\bf D},{\bf I}}$ where $D$ represents the input data (e.g., abstract, PMID, title, or link), and $I$ represents the information to be retrieved (e.g., link, title, etc.). The output of an evaluated model is $y_ {i} \in 0,1$ , where $y_ {i} = 1$ indicates a correct retrieval and $y_ {i}=0$ indicates an incorrect retrieval. The objective of the MHT task is to assess a model’s capability to retrieve biomedical information accurately and measure the model’s ability to avoid generating incorrect or incomplete biomedical or clinical information from memory.

记忆幻觉测试 (MHT)

MHT任务可描述为一个集合 ${\bf X}={{\bf D},{\bf I}}$ ,其中 $D$ 代表输入数据(如摘要、PMID、标题或链接), $I$ 代表待检索信息(如链接、标题等)。被评估模型的输出为 $y_ {i} \in 0,1$ ,当 $y_ {i} = 1$ 表示正确检索, $y_ {i}=0$ 表示错误检索。MHT任务旨在评估模型准确检索生物医学信息的能力,并衡量模型避免从记忆中生成错误或不完整生物医学/临床信息的能力。

2 Datasets Statistics

2 数据集统计

Med-HALT consists of seven datasets. In total, there are 18,866 samples per RHT task, with each sample having an average of 238.0 words. Moreover, there is also a separate PubMed portion which includes 4,916 samples per MHT Task, with an average of 37.0 words per sample. The primary details for each of these datasets, along with the corresponding tasks in Med-HALT, are presented in Table 1, Table 7 and Table 6 An in-depth discussion follows

Med-HALT包含七个数据集。每个RHT任务共有18,866个样本,平均每个样本包含238.0个单词。此外,还有一个独立的PubMed部分,包含每个MHT任务的4,916个样本,平均每个样本37.0个单词。这些数据集的主要细节及Med-HALT中的对应任务展示在表1、表7和表6中,后续将进行深入讨论。

MEDMCQA $:$ The MedMCQA (Pal et al., 2022) dataset contains the question papers of the All India Institute of Medical Sciences Post Graduation Entrance Exam (AIIMS PG) and the National Eligibility cum Entrance Test Post Graduation (NEET PG) from India. It offers a rich collection of 9515 Multiple Choice Questions (MCQs), with 6660 from AIIMS PG and 2855 from NEET PG. These MCQs, curated by medical professionals, span a wide range of medical subjects typically covered at the graduation level.

MEDMCQA:MedMCQA (Pal等人,2022) 数据集包含印度全印医学科学院研究生入学考试(AIIMS PG)和国家资格暨入学考试研究生(NEET PG)的试题。该数据集提供了9515道多选题(MCQs)的丰富集合,其中6660道来自AIIMS PG,2855道来自NEET PG。这些由医学专业人员精心设计的多选题涵盖了毕业阶段常见的广泛医学主题。

Headqa: The Headqa (Vilares and GomezRodriguez, 2019) dataset includes 4068 samples from the Examenes de residencia médica, a medical residency examination from Spain. The samples are a valuable resource for studying the examination pattern and question formulation style used in European medical institutions.

Headqa数据集:Headqa (Vilares和GomezRodriguez, 2019) 数据集包含4068个来自西班牙医学住院医师考试 (Examenes de residencia médica) 的样本。这些样本为研究欧洲医疗机构采用的考试模式和问题设计风格提供了宝贵资源。

Medqa USMILE: This dataset (Jin et al., 2020) presents 2801 samples from the United States Medical Licensing Examination (USMILE). It offers a glimpse into the rigorous standards and the exhaustive medical knowledge base that the American medical education system demands from its practitioners.

Medqa USMILE: 该数据集 (Jin et al., 2020) 包含来自美国医师执照考试 (USMILE) 的2801个样本,展现了美国医学教育体系对从业者要求的严苛标准与全面医学知识库。

Medqa (Taiwan): The Taiwan Medical Licensing Examination (TWMLE) forms the basis of this dataset, which includes 2482 samples. It provides insights into the medical examination style in East Asia, thereby enriching the Med-HALT framework with diverse geographic representation.

Medqa (台湾): 该数据集基于台湾医师执照考试(TWMLE)构建,包含2482个样本。它提供了东亚地区医学考试风格的洞察,从而以多元地域代表性丰富了Med-HALT框架。

Table 1: Med-HALT dataset statistics, where Q, A represent the Question, Answer, respectively

| AIIMSPG(India) | NEETPG (India) | Examenes medica (Spain) | TWMLE (Taiwan) | USMILE (U.S) | |

| Question | 6660 | 2855 | 4068 | 2801 | 2482 |

| Vocab | 13508 | 7511 | 13832 | 12885 | 21074 |

| MaxQtokens | 93 | 135 | 264 | 172 | 526 |

| MaxAtokens | 91 | 86 | 363 | 185 | 154 |

| AvgQtokens | 11.73 | 11.54 | 21.64 | 27.77 | 117.87 |

| AvgA tokens | 19.34 | 18.91 | 37.28 | 37.70 | 23.42 |

表 1: Med-HALT 数据集统计,其中 Q、A 分别代表问题 (Question) 和答案 (Answer)

| AIIMSPG(印度) | NEETPG(印度) | Examenes medica(西班牙) | TWMLE(台湾) | USMILE(美国) | |

|---|---|---|---|---|---|

| 问题 | 6660 | 2855 | 4068 | 2801 | 2482 |

| 词汇 | 13508 | 7511 | 13832 | 12885 | 21074 |

| 最大问题token数 | 93 | 135 | 264 | 172 | 526 |

| 最大答案token数 | 91 | 86 | 363 | 185 | 154 |

| 平均问题token数 | 11.73 | 11.54 | 21.64 | 27.77 | 117.87 |

| 平均答案token数 | 19.34 | 18.91 | 37.28 | 37.70 | 23.42 |

Pubmed : The PubMed dataset, a part of the Med-HALT framework, includes 4,916 samples derived from the comprehensive archive of life sciences and biomedical information, PubMed. This dataset significantly enhances the diversity of MedHALT, providing a rich resource for extracting medically relevant, scholarly content and insights.

PubMed : PubMed数据集作为Med-HALT框架的组成部分,包含4,916个样本,源自生命科学与生物医学信息综合数据库PubMed。该数据集显著提升了MedHALT的多样性,为提取医学相关学术内容与见解提供了丰富资源。

3 Types of Hallucination Evaluated

3 种待评估的幻觉类型

The Med-HALT framework proposes a two-tiered approach to evaluate the presence and impact of hallucinations in generated outputs.

Med-HALT框架提出了一种双层方法来评估生成输出中幻觉的存在及其影响。

3.1 Reasoning Hallucination Tests (RHTs)

3.1 推理幻觉测试 (RHTs)

These tests assess how accurately the language model performs reasoning over the medical input data and whether it generates logically coherent and factually accurate output, without creating fake information. It includes:

这些测试评估语言模型在医学输入数据上进行推理的准确性,以及其生成的输出是否逻辑连贯且事实准确,不会编造虚假信息。测试内容包括:

• False Confidence Test (FCT): The False Confidence Test (FCT) involves presenting a multiple-choice medical question and a randomly suggested correct answer to the language model, tasking it with evaluating the validity of the proposed answer, and providing detailed explanations for its correctness or incorrectness, in addition to explaining why the other options are wrong.

• 错误信心测试 (FCT): 错误信心测试 (FCT) 会向语言模型提出一个医学多选题并随机提供一个建议的正确答案,要求模型评估所提供答案的有效性,详细解释其正确或错误的原因,同时还需说明其他选项为何不正确。

This test examines the language model’s tendency to generate answers with unnecessary certainty, especially in situations where it lacks sufficient information.

该测试检验语言模型倾向于在缺乏足够信息的情况下,仍以不必要的确定性生成答案的倾向。

response: is answer correct: <yes/no> answer:

响应:答案是否正确:<是/否>

答案:<正确答案>

正确原因:<正确答案的解释>

错误原因:<错误答案的解释>

• 以上皆非 (NOTA) 测试:在以上皆非 (NOTA) 测试中,模型会面对一个多项选择的医学问题,其中正确答案被替换为"以上皆非",要求模型识别这一点并为其选择提供理由。

It tests the model’s ability to distinguish irrelevant or incorrect information.

它测试模型区分无关或错误信息的能力。

prompt: instruct:

提示:指令:<大语言模型指令>

问题:<医学问题>

选项:

- 0: <选项_ 0>

- 1: <选项_ 1>

- 2: <选项_ 2>

- 3: <以上都不是>

response:

响应:

• Fake Questions Test (FQT): This test involves presenting the model with fake or nonsensical medical questions to examine whether it can correctly identify and handle such queries.

• 虚假问题测试 (FQT): 该测试通过向模型呈现虚假或无意义的医学问题,检验其能否正确识别和处理此类查询。

We employed a hybrid approach for generating fake questions, where a subset was crafted by human experts, while the remaining were generated using GPT-3.5.

我们采用了一种混合方法来生成虚假问题,其中一部分由人类专家精心设计,其余部分则使用GPT-3.5生成。

prompt: instruct:

提示:指令:<对大语言模型的指令>

问题:<虚构的医疗问题>

选项:

- 0: <选项_ 0>

- 1: <选项_ 1>

- 2: <选项_ 2>

- 3: <选项_ 3>

response:

响应:

3.2 Memory Hallucination Tests (MHTs)

3.2 记忆幻觉测试 (MHTs)

MHTs, on the other hand, investigate the language model’s ability to recall and generate accurate factual information. The tests in this category include:

另一方面,MHTs探究语言模型回忆并生成准确事实信息的能力。该类别测试包括:

• Abstract-to-Link Test $:$ Given the abstract of a PubMed article, the LLM is asked to generate the corresponding link to the article. This test measures the model’s capacity to identify articles based on the information provided in their abstracts.

• 摘要到链接测试 (Abstract-to-Link Test):给定一篇PubMed文章的摘要,要求大语言模型生成对应的文章链接。该测试用于衡量模型根据摘要信息识别文章的能力。

• PMID-to-Title Test : In this test, the LLM is given the PubMed ID (PMID) of an article and is asked to generate the title of the article. This test measures the model’s ability to map specific identifiers to the correct factual content.

• PMID-to-Title测试:该测试要求大语言模型根据给定的PubMed文章ID(PMID)生成对应文章标题,用于评估模型将特定标识符映射到正确事实内容的能力。

• Title-to-Link Test: Given the title of a PubMed article, the LLM is prompted to provide the PubMed link of the article. This assesses the model’s recall abilities for linking articles to their online sources.

• 标题到链接测试:给定一篇PubMed文章的标题,提示大语言模型提供该文章的PubMed链接。这项测试评估模型将文章与其在线来源关联的回忆能力。

• Link-to-Title Test: Similar to the previous one, In this test, we give the PubMed link of an article as input and ask the language model to provide the title as output. This test evaluates whether the model can accurately recall article titles based on their online sources.

• 链接到标题测试:与上一个测试类似,在此测试中,我们提供一篇文章的PubMed链接作为输入,并要求语言模型输出对应的标题。该测试评估模型能否根据在线资源准确回忆文章标题。

Through these diverse evaluation metrics, the MedHALT framework aims to comprehensively evaluate language models for both reasoning and recall capabilities, thereby detecting different types of hallucination patterns and improving the robustness of the model against them.

通过这些多样化的评估指标,MedHALT框架旨在全面评估语言模型的推理和记忆能力,从而检测不同类型的幻觉模式,并提高模型针对这些模式的鲁棒性。

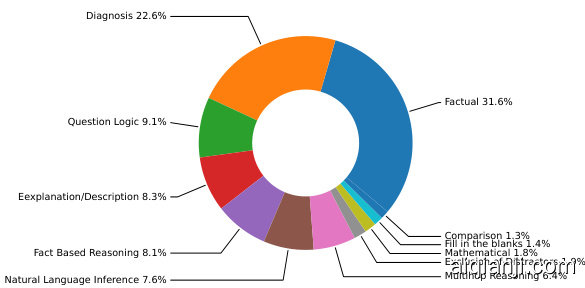

Figure 3: Relative sizes of Reasoning Types in Med-HALT

图 3: Med-HALT 中各类推理类型的相对占比

4 Data Analysis

4 数据分析

4.1 Subject and Topic Analysis

4.1 主体与主题分析

The Med-HALT dataset includes a wide variety of subjects and topics, showcasing the depth and breadth of medical knowledge. Subjects span from common ones like Physiology and Pharmacology to more specialized areas like Forensic Medicine and Radio diagnosis.

Med-HALT数据集涵盖多样化的学科与主题,展现了医学知识的深度与广度。学科范围从生理学(Physiology)、药理学(Pharmacology)等常见领域,延伸至法医学(Forensic Medicine)、放射诊断学(Radio diagnosis)等专业方向。

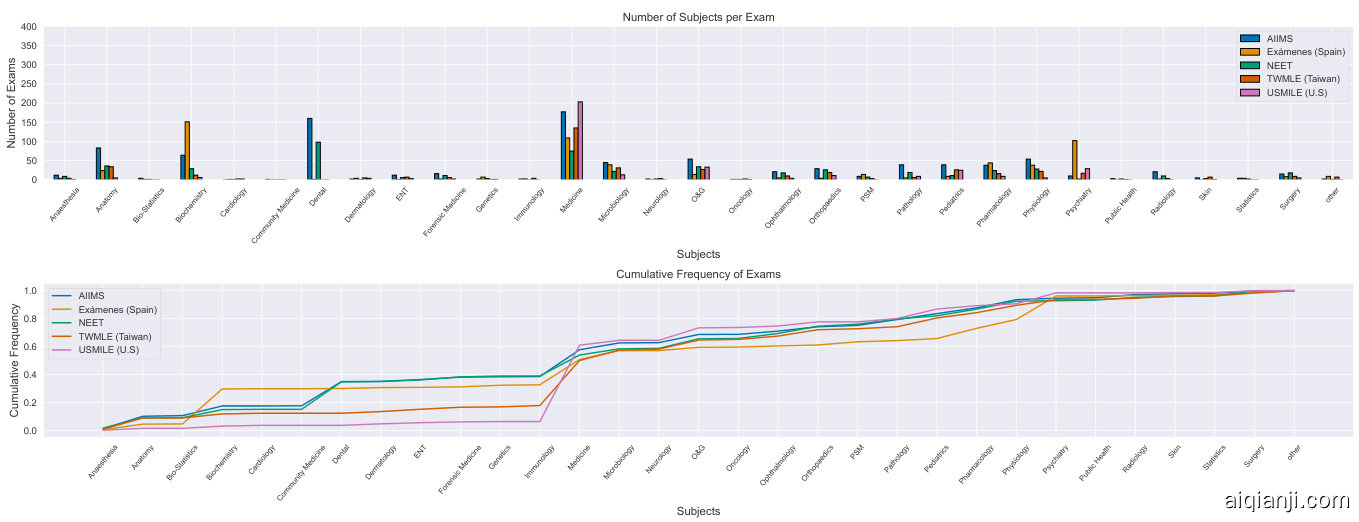

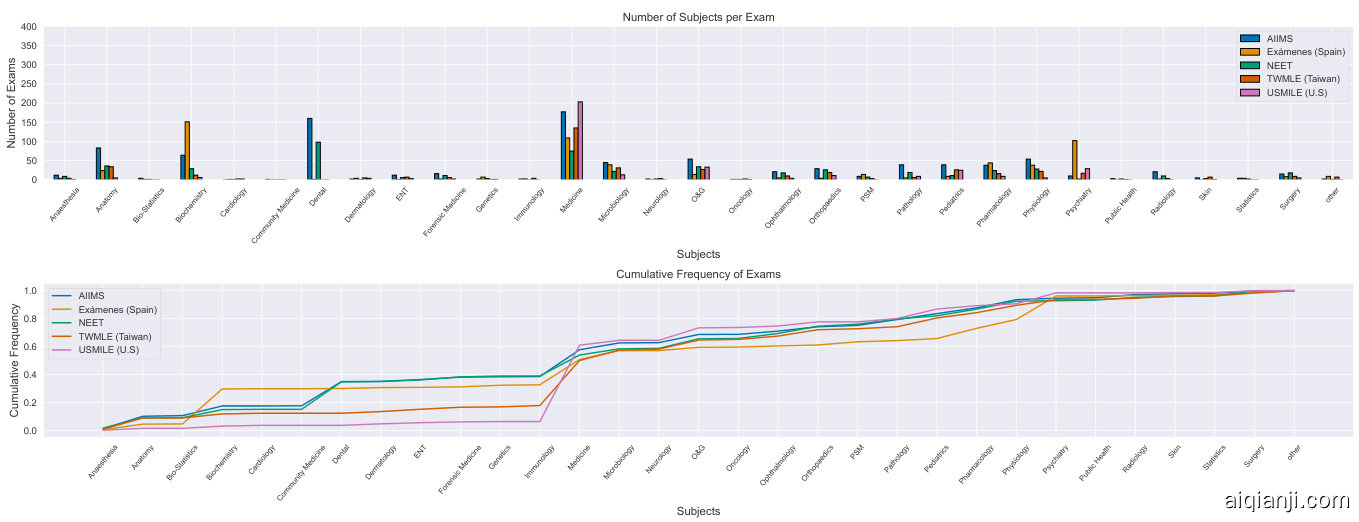

Nearly $95%$ of subjects include over 50 topics, and $70%$ exceed 100, demonstrating a vast range of medical content. An analysis was performed to count the samples per subject across each exam. The distribution and representation of each subject are presented in Fig. 4. This representation highlights the dataset’s diversity and wide-ranging applicability, making Med-HALT a robust benchmark for evaluating medical large language models

近95%的科目包含超过50个主题,70%超过100个,展现了广泛的医学内容范围。我们对每次考试中各科目的样本数量进行了统计分析。各科目的分布与代表性如图4所示。这一呈现凸显了数据集的多样性和广泛适用性,使Med-HALT成为评估医疗大语言模型的强有力基准。

4.2 Exam Types Analysis

4.2 考试类型分析

The Med-HALT dataset incorporates a diverse set of medical entrance exams from various countries, allowing for a rich, multicultural examination of medical knowledge and practice. These exams include the All India Institute of Medical Sciences (AIIMS PG) and National Eligibility cum Entrance Test (NEET PG) from India, Examenes de residencia médica from Spain, the United States Medical Licensing Examination (USMLE), and Taiwan Medical Licensing Examination (TMLE).

Med-HALT数据集整合了来自多个国家的多样化医学入学考试,为医学知识和实践提供了丰富的跨文化考察。这些考试包括印度的全印医学科学院研究生入学考试(AIIMS PG)和国家资格暨入学考试(NEET PG)、西班牙的医学住院医师考试、美国医师执照考试(USMLE)以及台湾医师执照考试(TMLE)。

A comparative analysis of the ratio of samples from each exam, presented in Fig. 8, provides an understanding of the representation and diversity of different countries’ medical exams in the dataset. This diversity encourages the development and testing of AI models that can handle a wide range of medical knowledge structures and exam patterns, increasing the robustness and versatility of MedHALT as a benchmarking tool for AI in medicine.

图8展示了各考试样本比例的对比分析,有助于理解数据集中不同国家医学考试的代表性和多样性。这种多样性促进了能够处理广泛医学知识结构和考试模式的AI模型的开发和测试,从而增强了MedHALT作为医学AI基准测试工具的鲁棒性和多功能性。

Figure 4: Distribution of subjects count per exam & Cumulative Frequency Graph in the union of exams in Med-HALT dataset.

图 4: Med-HALT数据集中各考试科目数量分布及联合考试累计频率图。

derstand their innate capabilities within the context of the Med-HALT framework.

理解它们在Med-HALT框架中的内在能力。

4.3 Difficulty and Diversity of Questions 5.2 Implementation Details

4.3 问题的难度与多样性

5.2 实现细节

we selected $30%$ random sample from various exam datasets and PubMed articles to understand the dataset’s complexity and types of reasoning required. This analysis led to the categorization of reasoning into multiple types, including factual, diagnosis, fact-based reasoning, exclusion of distractors, question logic, multihop reasoning, explanation/description, mathematical, fill in the blanks, comparison, and natural language inference. Detailed analysis is provided in appendix A.1 and Examples of these reasoning types are provided in Appendix 8, helping to illustrate the diversity and difficulty of questions within the dataset. Fig. 3 shows the relative sizes of reasoning types.

我们从各类考试数据集和PubMed文章中随机选取了30%的样本,以理解数据集的复杂性及所需的推理类型。通过分析,我们将推理划分为多种类型,包括事实性、诊断性、基于事实的推理、干扰项排除、问题逻辑、多跳推理、解释/描述、数学计算、填空题、比较型以及自然语言推理。详细分析见附录A.1,各类推理的示例见附录8,这些示例有助于说明数据集中问题的多样性和难度。图3展示了各类推理的占比情况。

5 Experiments

5 实验

5.1 Baseline Models

5.1 基线模型

we utilized OpenAI’s Text-Davinci. Furthermore, we incorporated OpenAI’s GPT-3.5 Turbo, a successor to Text-Davinci, in our core experimental evaluations. This model, while maintaining the robustness of its predecessor, also offers enhanced performance characteristics. Lastly, we incorporated state of the art open source language models like Falcon (Penedo et al., 2023b), MPT (Mo- saicML, 2023) and Llama-2 (Touvron et al., 2023). it offers unique capabilities and extends the scope of our evaluations.

我们使用了OpenAI的Text-Davinci模型。此外,在核心实验评估中,我们还整合了OpenAI的GPT-3.5 Turbo(Text-Davinci的后续版本)。该模型在保持前代产品稳健性的同时,还具备更优的性能特征。最后,我们引入了最先进的开源语言模型,如Falcon (Penedo et al., 2023b)、MPT (MosaicML, 2023)和Llama-2 (Touvron et al., 2023),这些模型提供了独特能力,并扩展了我们的评估范围。

These models were assessed in their default config u rations, without any specific fine-tuning or hyper parameter adjustments, thus allowing us to un

这些模型以其默认配置进行评估,未进行任何特定的微调或超参数调整,从而使我们能够...

Our evaluation process for the OpenAI models is implemented via the Azure OpenAI ChatGPT API. Throughout the full dataset analysis, we set a temperature of 0.7, defined a limit for token generation, and configured the frequency penalty to zero and top-p (Holtzman et al., 2019) to 1.0. For the evaluation of Open source models, we leverage Pytorch (Paszke et al., 2019) and Hugging face’s (Wolf et al., 2019) Text-generation-inference library. The models were deployed on a Quadro RTX 8000 with 48GB of VRAM . We set a temperature of 0.6 and a top-p of 0.95 to generate the response.

我们对OpenAI模型的评估流程通过Azure OpenAI ChatGPT API实现。在整个数据集分析过程中,我们将温度参数设为0.7,设定了token生成上限,并将频率惩罚配置为零、top-p (Holtzman et al., 2019) 设为1.0。针对开源模型的评估,我们采用Pytorch (Paszke et al., 2019) 和Hugging face (Wolf et al., 2019) 的Text-generation-inference库,模型部署在配备48GB显存的Quadro RTX 8000上,设置温度参数0.6和top-p值0.95来生成响应。

5.3 Evaluation matrices

5.3 评估指标

Accuracy : Accuracy gives us a simple and straightforward understanding of how often the models generate the correct responses. It’s a ratio of the correct predictions to the total predictions made by the model.

准确率:准确率能简单直观地反映模型生成正确响应的频率。它是模型正确预测数与总预测数的比值。

Pointwise Score: This is a more in-depth evaluation metric that takes into account the positive score for correct answers and a negative penalty for incorrect ones, a structure commonly found in many medical exams. Each correct prediction is awarded $+1$ point, while each incorrect prediction incurs a penalty of -0.25 points. The final Pointwise Score is an average of these individual scores. The formula for this is shown in Equation 1

逐点得分 (Pointwise Score):这是一种更深入的评估指标,会为正确答案计算正分,并对错误答案施加负惩罚,这种结构常见于许多医学考试。每个正确预测得 $+1$ 分,每个错误预测扣 -0.25 分。最终逐点得分是这些单项得分的平均值。计算公式如式 1 所示

$$

S=\frac{1}{N}\sum_ {i=1}^{N}(I(y_ {i}=\hat{y}_ {i})\cdot P_ {c}+I(y_ {i}\neq\hat{y}_ {i})\cdot P_ {w})

$$

$$

S=\frac{1}{N}\sum_ {i=1}^{N}(I(y_ {i}=\hat{y}_ {i})\cdot P_ {c}+I(y_ {i}\neq\hat{y}_ {i})\cdot P_ {w})

$$

Where $S$ is the final score, $N$ is the total number of samples, $y_ {i}$ is the true label of the $i$ -th sample, $\hat{y}_ {i}$ is the predicted label of the $i$ -th sample, $I(c o n d i t i o n)$ is the indicator function that returns 1 if the condition is true and 0 otherwise, $P_ {c}$ is the points awarded for a correct prediction and $P_ {w}$ is the points deducted for an incorrect prediction

其中 $S$ 为最终得分,$N$ 为样本总数,$y_ {i}$ 为第 $i$ 个样本的真实标签,$\hat{y}_ {i}$ 为第 $i$ 个样本的预测标签,$I(condition)$ 为指示函数(当条件为真时返回1,否则返回0),$P_ {c}$ 为正确预测得分,$P_ {w}$ 为错误预测扣分

6 Results

6 结果

Our evaluation results, presented in Table 2 and Table 3 reveal that open access models Falcon and LlaMa-2 outperform commercial variants such as GPT-3.5 and Text-Davinci in all hallucination tasks.

我们的评估结果如表2和表3所示,表明开源模型Falcon和LlaMa-2在所有幻觉任务中的表现均优于GPT-3.5和Text-Davinci等商业变体。

Llama-2 70B outperformed other models with an accuracy of $42.21%$ and a score of 52.37 in the Reasoning FCT task. It is important to note that none of the models reached an acceptable level of accuracy on this task, highlighting the challenge of reasoning hallucination tests for current models.

Llama-2 70B 在推理 FCT 任务中以 $42.21%$ 的准确率和 52.37 的分数优于其他模型。值得注意的是,所有模型在该任务中均未达到可接受的准确率水平,这凸显了当前模型在推理幻觉测试方面面临的挑战。

In contrast, Falcon 40B excelled in the Reasoning Fake task with an accuracy of $99.89%$ and a score of 18.56, demonstrating its ability to distinguish between real and fake questions. Falcon 40B Instruct achieved a similarly impressive accuracy of $99.35%$ and a score of 18.56 in this task. Llama2 70B performed best in the Reasoning Nota task, achieving an accuracy of $77.53%$ and a score of 188.6

相比之下,Falcon 40B在推理真假(Reasoning Fake)任务中表现出色,准确率达到$99.89%$,得分为18.56,展现了其区分真实与虚假问题的能力。Falcon 40B Instruct在该任务中同样取得了令人印象深刻的$99.35%$准确率和18.56分。Llama2 70B在推理无答案(Reasoning Nota)任务中表现最佳,实现了$77.53%$的准确率和188.6分。

In Information Retrieval tasks in Table 3 Falcon models (both Falcon 40B and Falcon 40B Instruct) outperformed OpenAI’s GPT-3.5 and TextDavinci.Overall, Falcon 40B had the highest average accuracy across all tasks $(42.46%)$ , Moreover it also achieved the best average pointwise score across all the IR tasks. Nonetheless, there is still substantial room for improvement across all models. Fig. 2 shows the example of hallucination in GPT-3.5 and Tables from 17 - 21 in Appendix shows different hallucination examples of LLMs.

在表3的信息检索任务中,Falcon模型(包括Falcon 40B和Falcon 40B Instruct)的表现优于OpenAI的GPT-3.5和TextDavinci。总体而言,Falcon 40B在所有任务中的平均准确率最高 $(42.46%)$ ,同时也在所有信息检索任务中取得了最佳的平均逐点得分。尽管如此,所有模型仍有很大的改进空间。图2展示了GPT-3.5中的幻觉示例,而附录中的表17至表21则展示了大语言模型的不同幻觉示例。

6.1 Effect of Instruction tuning

6.1 指令微调 (Instruction tuning) 的效果

Instruction tuned (Wei et al., 2021; Bai et al., 2022; Wang et al., 2022) models have shown to improve the zero shot ability to follow instructions and adapt to new tasks. However, the results from our hallucination tests indicate that there is a detrimental effect on model’s ability to control hallucination after instruction tuning and RLHF. The effect is less for the Open AI ( Text-Davinci and GPT-3.5) and Falcon models. The effect is more pronounced in the Llama based models.

指令调优(Wei等人,2021;Bai等人,2022;Wang等人,2022)模型已被证明能提升零样本遵循指令和适应新任务的能力。然而,我们的幻觉测试结果表明,经过指令调优和RLHF后,模型控制幻觉的能力出现了负面影响。OpenAI(Text-Davinci和GPT-3.5)与Falcon模型受影响较小,而基于Llama的模型则表现更为明显。

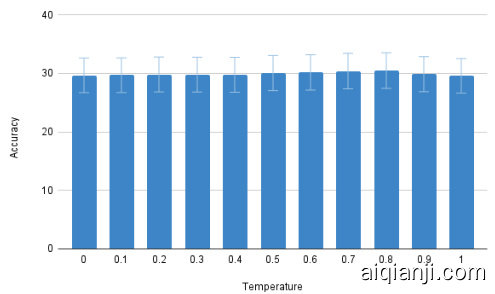

Figure 5: Variation in accuracy for different temperature values

图 5: 不同温度值下的准确率变化

7 Exploratory Analysis

7 探索性分析

For the exploratory analysis, we randomly sampled $30%$ of questions from each exam dataset and PubMed articles. To ensure diversity and balance, we stratified our sampling by country, type of exam, and difficulty level of the questions.

在探索性分析中,我们从每个考试数据集和PubMed文章中随机抽取了30%的问题。为确保多样性和平衡性,我们按国家、考试类型和问题难度进行了分层抽样。

7.1 Effect of Temperature parameter

7.1 温度参数的影响

In this section, we investigate the influence of the decoding parameters especially the temperature on the model’s hallucination. To do this analysis we take GPT-3.5 and measure the performance across different temperature values on sampled examples. Fig. 5 shows the variation in accuracy for different temperature values. We could observe that the variation is minimal.

在本节中,我们研究了解码参数(特别是温度参数)对模型幻觉的影响。为此,我们以GPT-3.5为对象,测量不同温度值在采样示例上的性能表现。图5展示了不同温度值下准确率的变化情况。可以观察到这种变化非常微小。

These results suggest that the temperature adjustments can influence model accuracy however the effect is negligible which suggests that other factors also matter in reducing hallucinations in medical tasks.

这些结果表明,温度调整虽然会影响模型准确性,但影响微乎其微,这说明在减少医疗任务中的幻觉现象时,其他因素也起着重要作用。

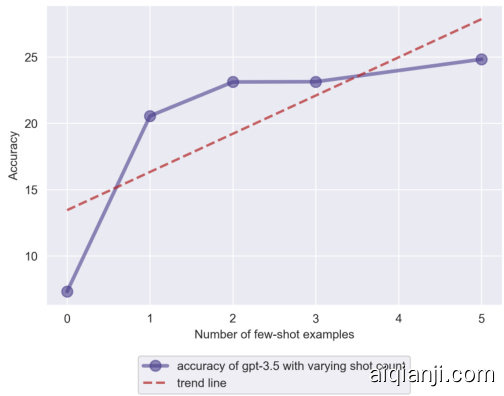

7.2 Impact of number of few shot examples

7.2 少样本示例数量的影响

This section analyzes the impact of varying the number of few shot examples on the model’s hallucination. We take GPT-3.5 to perform the tests and the results are summarized in Fig. 6. As expected, The accuracy of the model improves with an increase in the number of exemplars. At zero shot, the model’s accuracy is just $7.31%$ , which is quite low. This suggests that without any prior examples, GPT-3.5 largely hallucinates in the medical domain. As we introduce more exemplars in the prompt, the performance of the model increases. However, The level of performance improvement decreases as we increase the shot count beyond 3. These findings suggest that while providing more exemplars can indeed enhance the model’s performance and reduce hallucination to a certain extent, the accuracy gains plateau after a certain number of exemplars.

本节分析了少样本示例数量对模型幻觉的影响。我们使用GPT-3.5进行测试,结果汇总在图6中。正如预期的那样,模型的准确率随着示例数量的增加而提高。在零样本情况下,模型的准确率仅为$7.31%$,这一数值相当低。这表明,在没有任何先验示例的情况下,GPT-3.5在医学领域会产生大量幻觉。随着我们在提示中引入更多示例,模型的性能有所提升。然而,当样本数量超过3个时,性能提升的幅度会逐渐减小。这些发现表明,虽然提供更多示例确实可以在一定程度上提升模型性能并减少幻觉,但在达到一定数量的示例后,准确率的提升会趋于平缓。

Table 2: Evaluation results of LLM’s on Reasoning Hallucination Tests

| ReasoningFCT | ReasoningFake | ReasoningNota | Avg | |||||

| Model | Accuracy | Score | Accuracy | Score | Accuracy | Score | Accuracy | Score |

| GPT-3.5 | 34.15 | 33.37 | 71.64 | 11.99 | 27.64 | 18.01 | 44.48 | 21.12 |

| Text-Davinci | 16.76 | -7.64 | 82.72 | 14.57 | 63.89 | 103.51 | 54.46 | 36.81 |

| Llama-270B | 42.21 | 52.37 | 97.26 | 17.94 | 77.53 | 188.66 | 72.33 | 86.32 |

| Llama-270BChat | 13.34 | -15.70 | 5.49 | -3.37 | 14.96 | -11.88 | 11.26 | -10.32 |

| Falcon 40B | 18.66 | -3.17 | 99.89 | 18.56 | 58.72 | 91.31 | 59.09 | 35.57 |

| Falcon 40B-instruct | 1.11 | -44.55 | 99.35 | 18.43 | 55.69 | 84.17 | 52.05 | 19.35 |

| Llama-213B | 1.72 | -43.1 | 89.45 | 16.13 | 74.38 | 128.25 | 55.18 | 33.76 |

| Llama-2-13B-chat | 7.95 | -28.42 | 21.48 | 0.34 | 33.43 | 31.67 | 20.95 | 1.20 |

| Llama-2-7B | 0.45 | -46.12 | 58.72 | 8.99 | 69.49 | 116.71 | 42.89 | 26.53 |

| Llama-2-7B-chat | 0.42 | -46.17 | 21.96 | 0.46 | 31.10 | 26.19 | 17.83 | -6.51 |

| Mpt 7B | 0.85 | -45.15 | 48.49 | 6.62 | 19.88 | -0.28 | 23.07 | -12.94 |

| Mpt 7B instruct | 0.17 | -46.76 | 22.55 | 0.59 | 24.34 | 10.34 | 15.69 | -11.94 |

表 2: 大语言模型在推理幻觉测试中的评估结果

| 模型 | ReasoningFCT 准确率 | ReasoningFCT 得分 | ReasoningFake 准确率 | ReasoningFake 得分 | ReasoningNota 准确率 | ReasoningNota 得分 | 平均准确率 | 平均得分 |

|---|---|---|---|---|---|---|---|---|

| GPT-3.5 | 34.15 | 33.37 | 71.64 | 11.99 | 27.64 | 18.01 | 44.48 | 21.12 |

| Text-Davinci | 16.76 | -7.64 | 82.72 | 14.57 | 63.89 | 103.51 | 54.46 | 36.81 |

| Llama-270B | 42.21 | 52.37 | 97.26 | 17.94 | 77.53 | 188.66 | 72.33 | 86.32 |

| Llama-270BChat | 13.34 | -15.70 | 5.49 | -3.37 | 14.96 | -11.88 | 11.26 | -10.32 |

| Falcon 40B | 18.66 | -3.17 | 99.89 | 18.56 | 58.72 | 91.31 | 59.09 | 35.57 |

| Falcon 40B-instruct | 1.11 | -44.55 | 99.35 | 18.43 | 55.69 | 84.17 | 52.05 | 19.35 |

| Llama-213B | 1.72 | -43.1 | 89.45 | 16.13 | 74.38 | 128.25 | 55.18 | 33.76 |

| Llama-2-13B-chat | 7.95 | -28.42 | 21.48 | 0.34 | 33.43 | 31.67 | 20.95 | 1.20 |

| Llama-2-7B | 0.45 | -46.12 | 58.72 | 8.99 | 69.49 | 116.71 | 42.89 | 26.53 |

| Llama-2-7B-chat | 0.42 | -46.17 | 21.96 | 0.46 | 31.10 | 26.19 | 17.83 | -6.51 |

| Mpt 7B | 0.85 | -45.15 | 48.49 | 6.62 | 19.88 | -0.28 | 23.07 | -12.94 |

| Mpt 7B instruct | 0.17 | -46.76 | 22.55 | 0.59 | 24.34 | 10.34 | 15.69 | -11.94 |

Table 3: Evaluation results of LLM’s on Memory Hallucination Tests

| IRPmid2Title | IR Title2Pubmedlink | IRAbstract2Pubmedlink | IRPubmedlink2Title | Avg | ||||||

| Model | Accuracy | Score | Accuracy | Score | Accuracy | Score | Accuracy | Score | AccuracyScore | |

| GPT-3.5 | 0.29 | -12.12 | 39.10 | 11.74 | 40.45 | 12.57 | 0.02 | -12.28 | 19.96 | -0.02 |

| Text-Davinci | 0.02 | -12.28 | 38.53 | 11.39 | 40.44 | 12.56 | 0.00 | -12.29 | 19.75 | -0.15 |

| Llama-270B | 0.12 | -12.22 | 14.79 | -3.20 | 17.21 | -1.72 | 0.02 | -12.28 | 8.04 | -7.36 |

| Llama-270BChat | 0.81 | -11.79 | 32.87 | 7.90 | 17.90 | -1.29 | 0.61 | -11.92 | 13.05 | -4.27 |

| Falcon40B | 40.46 | 12.57 | 40.46 | 12.57 | 40.46 | 12.57 | 0.06 | -12.25 | 30.36 | 6.37 |

| Falcon40B-instruct | 40.46 | 12.57 | 40.46 | 12.57 | 40.44 | 12.56 | 0.08 | -12.75 | 30.36 | 6.24 |

| Llama-213B | 0.53 | -11.97 | 10.56 | -5.80 | 4.70 | -9.40 | 23.72 | 2.29 | 9.88 | -6.22 |

| Llama-2-13B-chat | 1.38 | -11.44 | 38.85 | 11.59 | 38.32 | 11.26 | 1.73 | -11.23 | 20.07 | 0.04 |

| Llama-2-7B | 0.00 | -12.29 | 3.72 | -10.00 | 0.26 | -12.13 | 0.00 | -12.29 | 1.0 | -11.68 |

| Llama-2-7B-chat | 0.00 | -12.29 | 30.92 | 6.71 | 12.80 | -4.43 | 0.00 | -12.29 | 10.93 | -5.57 |

| Mpt 7B | 20.08 | 0.05 | 40.46 | 12.57 | 40.03 | 12.31 | 0.00 | -12.29 | 25.14 | 3.16 |

| Mpt 7B instruct | 0.04 | -12.27 | 38.24 | 11.21 | 40.46 | 12.57 | 0.00 | -12.29 | 19.69 | -0.19 |

表 3: 大语言模型在记忆幻觉测试中的评估结果

| 模型 | IRPmid2Title 准确率 | IRPmid2Title 得分 | IR Title2Pubmedlink 准确率 | IR Title2Pubmedlink 得分 | IRAbstract2Pubmedlink 准确率 | IRAbstract2Pubmedlink 得分 | IRPubmedlink2Title 准确率 | IRPubmedlink2Title 得分 | 平均准确率 | 平均得分 |

|---|---|---|---|---|---|---|---|---|---|---|

| GPT-3.5 | 0.29 | -12.12 | 39.10 | 11.74 | 40.45 | 12.57 | 0.02 | -12.28 | 19.96 | -0.02 |

| Text-Davinci | 0.02 | -12.28 | 38.53 | 11.39 | 40.44 | 12.56 | 0.00 | -12.29 | 19.75 | -0.15 |

| Llama-270B | 0.12 | -12.22 | 14.79 | -3.20 | 17.21 | -1.72 | 0.02 | -12.28 | 8.04 | -7.36 |

| Llama-270BChat | 0.81 | -11.79 | 32.87 | 7.90 | 17.90 | -1.29 | 0.61 | -11.92 | 13.05 | -4.27 |

| Falcon40B | 40.46 | 12.57 | 40.46 | 12.57 | 40.46 | 12.57 | 0.06 | -12.25 | 30.36 | 6.37 |

| Falcon40B-instruct | 40.46 | 12.57 | 40.46 | 12.57 | 40.44 | 12.56 | 0.08 | -12.75 | 30.36 | 6.24 |

| Llama-213B | 0.53 | -11.97 | 10.56 | -5.80 | 4.70 | -9.40 | 23.72 | 2.29 | 9.88 | -6.22 |

| Llama-2-13B-chat | 1.38 | -11.44 | 38.85 | 11.59 | 38.32 | 11.26 | 1.73 | -11.23 | 20.07 | 0.04 |

| Llama-2-7B | 0.00 | -12.29 | 3.72 | -10.00 | 0.26 | -12.13 | 0.00 | -12.29 | 1.0 | -11.68 |

| Llama-2-7B-chat | 0.00 | -12.29 | 30.92 | 6.71 | 12.80 | -4.43 | 0.00 | -12.29 | 10.93 | -5.57 |

| Mpt 7B | 20.08 | 0.05 | 40.46 | 12.57 | 40.03 | 12.31 | 0.00 | -12.29 | 25.14 | 3.16 |

| Mpt 7B instruct | 0.04 | -12.27 | 38.24 | 11.21 | 40.46 | 12.57 | 0.00 | -12.29 | 19.69 | -0.19 |

Figure 6: Accuracy for different number of shots/examples

图 6: 不同样本数量下的准确率

7.3 Sensitivity to Prompt Framing

7.3 提示框架的敏感性

Our analysis in Table 4. shows that prompt framing influences the performance of large language models in Med-HALT tasks. As the prompts are changed from ambiguous to more specific and direct, the accuracy of the tasks improved. The details of the prompt and examples are shown in appendix Table 9 - 15

表4的分析表明,提示词框架会影响大语言模型在Med-HALT任务中的表现。当提示词从模糊表述调整为更具体直接的指令时,任务准确率得到提升。提示词细节及示例详见附录表9至表15

These results demonstrate the importance of careful and strategic prompt design and stress the necessity for explicit, directed prompts to ensure that these models generate useful, accurate, and safe information.

这些结果表明了精心设计提示策略的重要性,并强调了明确、有针对性的提示对于确保这些模型生成有用、准确且安全信息的必要性。

Table 4: Accuracy for different prompt variants

| Prompt Variant | Accuracy |

| PromptVariant 0 | 24.44 |

| Prompt Variant 1 | 22.97 |

| Prompt Variant 2 | 25.48 |

表 4: 不同提示变体的准确率

| 提示变体 | 准确率 |

|---|---|

| PromptVariant 0 | 24.44 |

| Prompt Variant 1 | 22.97 |

| Prompt Variant 2 | 25.48 |

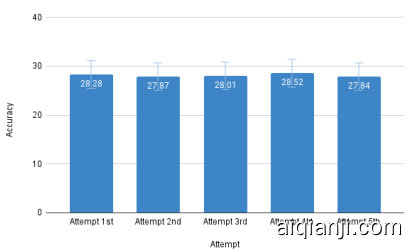

7.4 Repetition Experiments

7.4 重复实验

While the generation of the open source models can be controlled and made repeatable by setting seed and other required parameters, The commercial variants like OpenAI does not allow for that level of control. As a result, the generations from these APIs may differ even with the same input and parameters. To assess the consistency and accuracy of the GPT-3.5 model on our benchmark, we repeated a sample of questions multiple times. Across multiple attempts, the model’s performance remained relatively stable with slight fluctuations. The highest accuracy was on the fourth attempt at $28.52%$ , while the lowest was on the second and fifth tries, around $27.87%$ . Results are presented in Fig. 7 Despite these minor variances, such discrepancies raise concerns in sensitive applications such as healthcare.

虽然开源模型的生成可以通过设置种子和其他必要参数来控制并实现可重复性,但像OpenAI这样的商业版本不允许这种程度的控制。因此,即使输入和参数相同,这些API的生成结果也可能存在差异。为了评估GPT-3.5模型在我们基准测试中的一致性和准确性,我们对部分问题进行了多次重复测试。在多次尝试中,模型表现保持相对稳定,仅有轻微波动。最高准确率出现在第四次尝试,达到$28.52%$,而最低准确率出现在第二次和第五次尝试,约为$27.87%$。结果如图7所示。尽管存在这些微小差异,此类偏差在医疗等敏感应用中仍会引发担忧。

Figure 7: Visualisation of accuracy values for repeated experiments

图 7: 重复实验准确率数值的可视化

7.5 Brittleness of LLMs

7.5 大语言模型(LLM)的脆弱性

During our evaluation we found that the LLMs were sensitive to prompt framing and decoding parameters. Altering the parameters even slightly resulted in models that earlier produced correct examples to hallucinate with wrong answers. This warrants for more research in this area to make LLMs more robust to all these settings. The applications using the LLMs to recognize these shortcomings and use the models with responsibility, especially in critical domains like Healthcare.

在我们的评估过程中,发现大语言模型(LLM)对提示框架和解码参数非常敏感。即使轻微调整参数,也会导致先前能生成正确示例的模型产生错误答案的幻觉。这表明需要在该领域开展更多研究,以增强大语言模型对所有设置的鲁棒性。使用大语言模型的应用需识别这些缺陷,并负责任地使用模型,特别是在医疗保健等关键领域。

8 Conclusion

8 结论

This research advances our understanding of hallucination in large language models (LLMs) within the medical domain, introducing the Med-HALT dataset and benchmark as a comprehensive tool for evaluating and mitigating such issues. Our comparative analysis of models, including OpenAI’s Text-Davinci, GPT-3.5, Llama-2, and Falcon, has revealed considerable room for improvement.

本研究推进了医学领域大语言模型(LLM)幻觉问题的理解,提出了Med-HALT数据集和基准作为评估与缓解此类问题的综合工具。通过对OpenAI的Text-Davinci、GPT-3.5、Llama-2和Falcon等模型的对比分析,我们发现这些模型仍有显著改进空间。

References

参考文献

Monica Agrawal, Stefan Hegselmann, Hunter Lang, Yoon Kim, and David Sontag. 2022. Large language models are few-shot clinical information extractors.

Monica Agrawal、Stefan Hegselmann、Hunter Lang、Yoon Kim 和 David Sontag. 2022. 大语言模型 (Large Language Model) 是少样本临床信息提取器。

Yuntao Bai, Andy Jones, Kamal Ndousse, Amanda Askell, Anna Chen, Nova DasSarma, Dawn Drain, Stanislav Fort, Deep Ganguli, Tom Henighan, Nicholas Joseph, Saurav Kadavath, Jackson Kernion, Tom Conerly, Sheer El-Showk, Nelson Elhage, Zac Hatfield-Dodds, Danny Hernandez, Tristan Hume, Scott Johnston, Shauna Kravec, Liane Lovitt, Neel Nanda, Catherine Olsson, Dario Amodei, Tom Brown, Jack Clark, Sam McCandlish, Chris Olah, Ben Mann, and Jared Kaplan. 2022. Training a helpful and harmless assistant with reinforcement learning from human feedback.

Yuntao Bai、Andy Jones、Kamal Ndousse、Amanda Askell、Anna Chen、Nova DasSarma、Dawn Drain、Stanislav Fort、Deep Ganguli、Tom Henighan、Nicholas Joseph、Saurav Kadavath、Jackson Kernion、Tom Conerly、Sheer El-Showk、Nelson Elhage、Zac Hatfield-Dodds、Danny Hernandez、Tristan Hume、Scott Johnston、Shauna Kravec、Liane Lovitt、Neel Nanda、Catherine Olsson、Dario Amodei、Tom Brown、Jack Clark、Sam McCandlish、Chris Olah、Ben Mann 和 Jared Kaplan。2022. 基于人类反馈强化学习训练有益无害的助手。

Yejin Bang, Samuel Cah yaw i jaya, Nayeon Lee, Wenliang Dai, Dan Su, Bryan Wilie, Holy Lovenia, Ziwei Ji, Tiezheng Yu, Willy Chung, Quyet V. Do, Yan Xu, and Pascale Fung. 2023. A multitask, multilingual, multimodal evaluation of chatgpt on reasoning, hallucination, and interactivity. ArXiv, abs/2302.04023.

Yejin Bang、Samuel Cahyawijaya、Nayeon Lee、Wenliang Dai、Dan Su、Bryan Wilie、Holy Lovenia、Ziwei Ji、Tiezheng Yu、Willy Chung、Quyet V. Do、Yan Xu 和 Pascale Fung。2023。ChatGPT 在推理、幻觉和交互性上的多任务、多语言、多模态评估。ArXiv,abs/2302.04023。

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neel a kant an, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language models are few-shot learners. CoRR, abs/2005.14165.

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever 和 Dario Amodei。2020。语言模型是少样本学习者。CoRR, abs/2005.14165。

Tianyu Han, Lisa C Adams, Jens-Michalis Papaioannou, Paul Grundmann, Tom Oberhauser, Alexander Loser, Daniel Truhn, and Keno K Bressem. 2023. Medalpaca–an open-source collection of medical conversational ai models and training data. arXiv preprint arXiv:2304.08247.

Tianyu Han、Lisa C Adams、Jens-Michalis Papaioannou、Paul Grundmann、Tom Oberhauser、Alexander Loser、Daniel Truhn 和 Keno K Bressem。2023。MedAlpaca——一个开源的医疗对话AI模型及训练数据集合。arXiv预印本 arXiv:2304.08247。

Ari Holtzman, Jan Buys, Maxwell Forbes, and Yejin Choi. 2019. The curious case of neural text degeneration. CoRR, abs/1904.09751.

Ari Holtzman、Jan Buys、Maxwell Forbes 和 Yejin Choi。2019. 神经文本退化的奇特案例。CoRR, abs/1904.09751。

Ziwei Ji, Nayeon Lee, Rita Frieske, Tiezheng Yu, Dan Su, Yan Xu, Etsuko Ishii, Yejin Bang, Wenliang Dai, Andrea Madotto, and Pascale Fung. 2022. Survey of hallucination in natural language generation. ACM Computing Surveys, 55:1 – 38.

Jiwei Zi、Nayeon Lee、Rita Frieske、Tiezheng Yu、Dan Su、Yan Xu、Etsuko Ishii、Yejin Bang、Wenliang Dai、Andrea Madotto 和 Pascale Fung。2022。自然语言生成中的幻觉现象综述。ACM Computing Surveys,55:1–38。

Di Jin, Eileen Pan, Nassim Oufattole, Wei-Hung Weng, Hanyi Fang, and Peter Szolovits. 2020. What disease does this patient have? a large-scale open domain question answering dataset from medical exams. ArXiv, abs/2009.13081.

Di Jin、Eileen Pan、Nassim Oufattole、Wei-Hung Weng、Hanyi Fang 和 Peter Szolovits。2020。该患者患有何种疾病?基于医学考试的大规模开放领域问答数据集。ArXiv,abs/2009.13081。

Junyi Li, Xiaoxue Cheng, Wayne Xin Zhao, Jianyun Nie, and Ji rong Wen. 2023a. Halueval: A largescale hallucination evaluation benchmark for large language models. ArXiv, abs/2305.11747.

Junyi Li、Xiaoxue Cheng、Wayne Xin Zhao、Jianyun Nie和Ji rong Wen。2023a。Halueval: 大语言模型幻觉评估的大规模基准。ArXiv, abs/2305.11747。

Yunxiang Li, Zihan Li, Kai Zhang, Ruilong Dan, Steve Jiang, and You Zhang. 2023b. Chatdoctor: A medical chat model fine-tuned on a large language model meta-ai (llama) using medical domain knowledge. Cureus, 15(6).

Yunxiang Li、Zihan Li、Kai Zhang、Ruilong Dan、Steve Jiang 和 You Zhang。2023b。ChatDoctor:基于大语言模型Meta-AI(LLaMA) 并利用医学领域知识微调的医疗对话模型。Cureus, 15(6)。

Tianyu Liu, Yizhe Zhang, Chris Brockett, Yi Mao, Zhifang Sui, Weizhu Chen, and Bill Dolan. 2021. A token-level reference-free hallucination detection benchmark for free-form text generation. arXiv preprint arXiv:2104.08704.

Tianyu Liu, Yizhe Zhang, Chris Brockett, Yi Mao, Zhifang Sui, Weizhu Chen, and Bill Dolan. 2021. 面向自由文本生成的Token级无参考幻觉检测基准. arXiv preprint arXiv:2104.08704.

MosaicML. 2023. Introducing mpt-30b: Raising the bar for open-source foundation models. Accessed: 2023-06-22.

MosaicML. 2023. 发布MPT-30B:提升开源基础模型标杆。访问于: 2023-06-22。

Ankit Pal. 2022. Promptify: Structured output from llms. https://github.com/promptslab/ Promptify. Prompt-Engineering components for NLP tasks in Python.

Ankit Pal. 2022. Promptify: 从大语言模型(LLM)生成结构化输出。https://github.com/promptslab/Promptify。基于Python语言的NLP任务提示工程组件。

Ankit Pal, Logesh Kumar Umapathi, and Malai kann an Sankara sub bu. 2022. Medmcqa: A large-scale multisubject multi-choice dataset for medical domain question answering. In Proceedings of the Conference on Health, Inference, and Learning, volume 174 of Proceedings of Machine Learning Research, pages 248–260. PMLR.

Ankit Pal、Logesh Kumar Umapathi 和 Malai kann an Sankara sub bu. 2022. Medmcqa: 一个面向医疗领域问答的大规模多学科多选题数据集. 在《健康、推理与学习会议论文集》中, 第174卷《机器学习研究论文集》, 第248–260页. PMLR.

Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zach DeVito, Martin Raison, Alykhan Tejani, Sasank Chi lam kurt hy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. 2019. Pytorch: An imperative style, high-performance deep learning library. ArXiv, abs/1912.01703.

Adam Paszke、Sam Gross、Francisco Massa、Adam Lerer、James Bradbury、Gregory Chanan、Trevor Killeen、Zeming Lin、Natalia Gimelshein、Luca Antiga、Alban Desmaison、Andreas Kopf、Edward Yang、Zach DeVito、Martin Raison、Alykhan Tejani、Sasank Chilamkurthy、Benoit Steiner、Lu Fang、Junjie Bai 和 Soumith Chintala。2019。PyTorch:一种命令式风格的高性能深度学习库。ArXiv,abs/1912.01703。

Guilherme Penedo, Quentin Malartic, Daniel Hesslow, Ruxandra Cojocaru, Alessandro Cappelli, Hamza Alobeidli, Baptiste Pannier, Ebtesam Almazrouei, and Julien Launay. 2023a. The refinedweb dataset for falcon llm: Outperforming curated corpora with web data, and web data only.

Guilherme Penedo、Quentin Malartic、Daniel Hesslow、Ruxandra Cojocaru、Alessandro Cappelli、Hamza Alobeidli、Baptiste Pannier、Ebtesam Almazrouei 和 Julien Launay。2023a。Falcon大语言模型的RefinedWeb数据集:仅用网络数据超越精选语料库。

Guilherme Penedo, Quentin Malartic, Daniel Hesslow, Ruxandra Cojocaru, Alessandro Cappelli, Hamza Alobeidli, Baptiste Pannier, Ebtesam Almazrouei, and Julien Launay. 2023b. The RefinedWeb dataset for Falcon LLM: outperforming curated corpora with web data, and web data only. arXiv preprint arXiv:2306.01116.

Guilherme Penedo、Quentin Malartic、Daniel Hesslow、Ruxandra Cojocaru、Alessandro Cappelli、Hamza Alobeidli、Baptiste Pannier、Ebtesam Almazrouei 和 Julien Launay。2023b。Falcon大语言模型的RefinedWeb数据集:仅用网络数据超越精选语料库。arXiv预印本arXiv:2306.01116。

Alec Radford, Jeff Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever. 2019. Language models are unsupervised multitask learners.

Alec Radford、Jeff Wu、Rewon Child、David Luan、Dario Amodei 和 Ilya Sutskever。2019。语言模型是无监督多任务学习器。

Kurt Shuster, Spencer Poff, Moya Chen, Douwe Kiela, and Jason Weston. 2021. Retrieval augmentation reduces hallucination in conversation. CoRR, abs/2104.07567.

Kurt Shuster、Spencer Poff、Moya Chen、Douwe Kiela 和 Jason Weston。2021。检索增强减少对话中的幻觉。CoRR,abs/2104.07567。

K. Singhal, Shekoofeh Azizi, and Tao Tu. 2022. Large language models encode clinical knowledge. ArXiv, abs/2212.13138.

K. Singhal、Shekoofeh Azizi 和 Tao Tu。2022。大语言模型 (Large Language Model) 编码临床知识。ArXiv,abs/2212.13138。

K. Singhal, Tao Tu, and Juraj Gottweis. 2023. Towards expert-level medical question answering with large language models. ArXiv, abs/2305.09617.

K. Singhal、Tao Tu 和 Juraj Gottweis。2023。迈向基于大语言模型的专家级医学问答系统。ArXiv,abs/2305.09617。

Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava, Shruti Bhosale, Dan Bikel, Lukas Blecher, Cristian Canton Ferrer, Moya Chen, Guillem Cucurull, David Esiobu, Jude Fernandes, Jeremy Fu, Wenyin Fu, Brian Fuller, Cynthia Gao, Vedanuj Goswami, Naman Goyal, Anthony Hartshorn, Saghar Hosseini, Rui Hou, Hakan Inan, Marcin Kardas, Viktor Kerkez, Madian Khabsa, Isabel Kloumann, Artem Korenev, Punit Singh Koura, Marie-Anne Lachaux, Thibaut Lavril, Jenya Lee, Di- ana Liskovich, Yinghai Lu, Yuning Mao, Xavier Martinet, Todor Mihaylov, Pushkar Mishra, Igor Molybog, Yixin Nie, Andrew Poulton, Jeremy Reizen- stein, Rashi Rungta, Kalyan Saladi, Alan Schelten,

Hugo Touvron、Louis Martin、Kevin Stone、Peter Albert、Amjad Almahairi、Yasmine Babaei、Nikolay Bashlykov、Soumya Batra、Prajjwal Bhargava、Shruti Bhosale、Dan Bikel、Lukas Blecher、Cristian Canton Ferrer、Moya Chen、Guillem Cucurull、David Esiobu、Jude Fernandes、Jeremy Fu、Wenyin Fu、Brian Fuller、Cynthia Gao、Vedanuj Goswami、Naman Goyal、Anthony Hartshorn、Saghar Hosseini、Rui Hou、Hakan Inan、Marcin Kardas、Viktor Kerkez、Madian Khabsa、Isabel Kloumann、Artem Korenev、Punit Singh Koura、Marie-Anne Lachaux、Thibaut Lavril、Jenya Lee、Diana Liskovich、Yinghai Lu、Yuning Mao、Xavier Martinet、Todor Mihaylov、Pushkar Mishra、Igor Molybog、Yixin Nie、Andrew Poulton、Jeremy Reizenstein、Rashi Rungta、Kalyan Saladi、Alan Schelten

Ruan Silva, Eric Michael Smith, Ranjan Subramanian, Xiaoqing Ellen Tan, Binh Tang, Ross Taylor, Adina Williams, Jian Xiang Kuan, Puxin Xu, Zheng Yan, Iliyan Zarov, Yuchen Zhang, Angela Fan, Melanie Kambadur, Sharan Narang, Aurelien Ro- driguez, Robert Stojnic, Sergey Edunov, and Thomas Scialom. 2023. Llama 2: Open foundation and finetuned chat models.

Ruan Silva、Eric Michael Smith、Ranjan Subramanian、Xiaoqing Ellen Tan、Binh Tang、Ross Taylor、Adina Williams、Jian Xiang Kuan、Puxin Xu、Zheng Yan、Iliyan Zarov、Yuchen Zhang、Angela Fan、Melanie Kambadur、Sharan Narang、Aurelien Rodriguez、Robert Stojnic、Sergey Edunov 和 Thomas Scialom。2023。Llama 2:开放基础与微调对话模型。

David Vilares and Carlos Gomez-Rodriguez. 2019. Head-qa: A healthcare dataset for complex reasoning. ArXiv, abs/1906.04701.

David Vilares 和 Carlos Gomez-Rodriguez. 2019. Head-QA: 一个用于复杂推理的医疗数据集. ArXiv, abs/1906.04701.

Yizhong Wang, Yeganeh Kordi, Swaroop Mishra, Alisa Liu, Noah A. Smith, Daniel Khashabi, and Hannaneh Hajishirzi. 2022. Self-instruct: Aligning language model with self generated instructions.

Yizhong Wang、Yeganeh Kordi、Swaroop Mishra、Alisa Liu、Noah A. Smith、Daniel Khashabi 和 Hannaneh Hajishirzi。2022。Self-instruct: 通过自生成指令对齐语言模型。

Jason Wei, Maarten Bosma, Vincent Y. Zhao, Kelvin Guu, Adams Wei Yu, Brian Lester, Nan Du, An- drew M. Dai, and Quoc V. Le. 2021. Finetuned language models are zero-shot learners. CoRR, abs/2109.01652.

Jason Wei、Maarten Bosma、Vincent Y. Zhao、Kelvin Guu、Adams Wei Yu、Brian Lester、Nan Du、Andrew M. Dai 和 Quoc V. Le。2021。微调语言模型是零样本学习器。CoRR,abs/2109.01652。

Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pier- ric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, and Jamie Brew. 2019. Hugging face’s transformers: State-of-the-art natural language processing. ArXiv, abs/1910.03771.

Thomas Wolf、Lysandre Debut、Victor Sanh、Julien Chaumond、Clement Delangue、Anthony Moi、Pier-ric Cistac、Tim Rault、Rémi Louf、Morgan Funtowicz 和 Jamie Brew。2019. Hugging Face 的 Transformers:最先进的自然语言处理技术。ArXiv, abs/1910.03771。

A Med-HALT Selection Criteria

医疗HALT筛选标准

The datasets of Med-HALT were selected in alignment with the following key criteria:

Med-HALT的数据集根据以下关键标准进行筛选:

Domain-Specificity: The datasets utilized in MedHALT should ideally be related to the medical field. They should contain a broad variety of medical topics and discussions to challenge the language models sufficiently.

领域专一性:MedHALT 使用的数据集应尽可能与医学领域相关,需涵盖广泛的医学主题和讨论内容,以充分挑战大语言模型。

Authenticity: The data should be derived from realworld medical literature and resources. It’s crucial for the data to reflect genuine, non-hallucinated medical knowledge to ground the study in reality and enable the creation of reliable outputs.

真实性:数据应源自现实世界的医学文献和资源。关键在于数据必须反映真实、非虚构的医学知识,以确保研究立足于现实,并产出可靠成果。

Grounded ness vs. Hallucination: The datasets should ideally contain both grounded and hallucinated examples. The inclusion of both types would facilitate the direct examination of hallucination detection and mitigation techniques.

真实性与幻觉:数据集应理想地同时包含真实案例和幻觉案例。纳入这两种类型将有助于直接检验幻觉检测与缓解技术。

Size & Diversity: The datasets should be large and diverse enough to ensure the robustness of the findings. Small datasets might lead to overfitting and might not represent the complexities of real-world medical literature adequately. Diverse datasets, containing various medical topics, can help ensure the generality of the results.

规模与多样性:数据集应足够庞大且多样,以确保研究结果的稳健性。小型数据集可能导致过拟合,且可能无法充分体现现实世界医学文献的复杂性。涵盖多种医学主题的多样化数据集有助于确保结果的普适性。

Accessibility: The datasets should be publicly available and well-documented, ensuring that the study is reproducible and that other researchers can build upon the work in Med-HALT.

可访问性:数据集应公开可用且文档完善,确保研究可复现,并使其他研究人员能够在Med-HALT的基础上开展工作。

Difficulty: The datasets should pose a significant challenge for state-of-the-art language models

难度:数据集应对最先进的大语言模型构成重大挑战

A.1 Difficulty and Diversity of Questions

A.1 问题的难度与多样性

In order to gain a comprehensive understanding of the dataset’s complexity and the types of reasoning required, We conducted an in-depth analysis of a representative sample from each of the exam datasets and PubMed articles. a sample of $30%$ questions from each exam dataset and PubMed articles was randomly selected and manually analyzed. This analysis helped categorize the reasoning required to answer the questions into various types:

为了全面理解数据集的复杂性及所需的推理类型,我们对各考试数据集和PubMed文章的代表性样本进行了深入分析。从每个考试数据集和PubMed文章中随机抽取30%的问题样本进行人工分析,将解答问题所需的推理类型划分为以下几类:

Factual: These are straightforward questions with fact-based answers, often requiring direct recall of established medical knowledge.

事实性:这类问题通常基于事实作答,往往需要直接回忆已有的医学知识。

Diagnosis: These questions requires identifying the correct cause of a given disease or condition, requiring both a depth of medical knowledge and the ability to apply it in a diagnostic context.

诊断:这些问题需要识别特定疾病或状况的正确病因,既要求具备深厚的医学知识,又需能在诊断情境中应用这些知识。

Fact-Based Reasoning: This type of question requires the application of established facts to reason through a novel problem or scenario.

基于事实的推理 (Fact-Based Reasoning):这类问题需要运用既定事实来推理解决新问题或场景。

Exclusion of Distract or s: These questions involve identifying and eliminating incorrect or less suitable options to arrive at the correct answer.

排除干扰项:这类问题需要识别并剔除错误或不合适的选项,从而得出正确答案。

Question Logic: These questions test reasoning ability by requiring the test-taker to guide through complex question structures, often involving multiple sub-questions or conditions.

问题逻辑:这类问题通过要求测试者引导完成复杂的提问结构来考察推理能力,通常涉及多个子问题或条件。

Multihop Reasoning: These questions require synthesizing information from multiple passages to reach a correct answer

多跳推理 (Multihop Reasoning):这类问题需要综合多段信息才能得出正确答案

Explanation/Description: These are the questions that require a detailed definition, explanation, or description of a specific term or phenomenon

说明/描述:这些问题需要详细定义、解释或描述特定术语或现象

Mathematical: These questions requires mathematical critical thinking and logical reasoning, often involving calculations or statistical reasoning

数学类:这些问题需要数学批判性思维和逻辑推理能力,通常涉及计算或统计推理

Fill in the Blanks: In these questions, the responder selects the most appropriate term or phrase to complete a given statement

填空题:在这些问题中,回答者需选择最合适的术语或短语来完成给定陈述

Comparison: These questions require comparing and contrasting different options or scenarios

对比:这些问题需要比较和对比不同的选项或情景

Natural Language Inference: This category includes questions that require understanding implied information, correlations, and logical inferences in a given text. Fig. 3 illustrates these reasoning types and their corresponding proportions within the sampled dataset.

自然语言推理:这类问题需要理解给定文本中的隐含信息、关联性和逻辑推理。图 3 展示了这些推理类型及其在抽样数据集中的对应比例。

Figure 8: Relative sizes of Exam Types in Med-HALT

图 8: Med-HALT 中考试类型的相对大小

Table 8 shows the examples of different reasoning types in the dataset.

表 8: 数据集中不同推理类型的示例。

B Parsing Output and Handling Exceptions

B 解析输出与异常处理

A major element of our study is the reliance on structured, valid JSON output from large language models (LLMs) in response to our tasks and prompts. However, ensuring that these models return the expected output format is a challenge. There are instances where the LLMs did not adhere strictly to the provided output format, resulting in malformed JSON outputs that need to be correctly parsed and processed. When handling these parsing exceptions, we have adopted a multi-process strategy to ensure robustness and correctness of our analysis:

我们研究的一个重要环节是依赖大语言模型 (LLM) 针对任务和提示生成结构化、有效的 JSON 输出。然而确保这些模型返回预期输出格式存在挑战。实践中存在 LLM 未严格遵循指定输出格式的情况,导致需要正确解析和处理格式错误的 JSON 输出。在处理这些解析异常时,我们采用多进程策略来保证分析的鲁棒性和正确性:

Basic Parsing In evaluating the models’ ability to follow instructions, we used the Promptify (Pal, 2022) Module. This direct parsing approach works for a significant proportion of the samples.

基础解析

在评估模型遵循指令的能力时,我们使用了 Promptify (Pal, 2022) 模块。这种直接解析方法适用于大部分样本。

Escaped Character Handling To handle cases where the output contained both single and double quotes, we used a regex-based escaping function to properly format the string before running Promptify. This handles instances such as ”The patient’s symptoms are . . . ”, which could cause errors in the parsing process.

转义字符处理

为处理输出中同时包含单引号和双引号的情况,我们在运行Promptify前使用基于正则表达式的转义函数对字符串进行格式化。该方法可正确处理类似"患者症状为..."的实例,避免解析过程中出现错误。

Counting Unparsable Outputs However, for several prompts a high ratio of outputs remained unparseable even after using above methods. In these cases, rather than continuously re-prompting, we counted each malformed output as a failure of the model to follow instructions. This allowed us to calculate the rate at which models deviated from the requested output format across prompts.

然而,对于部分提示词,即使采用上述方法后仍有较高比例的输出无法解析。针对这些情况,我们不再持续重新生成提示,而是将每个格式错误的输出视为模型未能遵循指令的失败案例。这使得我们能够计算模型在不同提示下偏离指定输出格式的频率。

Specific numbers on instruction following errors per model are presented in Table 5. While not a direct measure of hallucination, a model’s tendency

各模型在指令遵循错误上的具体数据见表5。虽然这不是对幻觉的直接衡量,但模型的倾向性

Table 5: Format exception handling error ratio for LLM Outputs

| ReasoningFCT | ReasoningFake | Reasoning Nota | IRPmid2Title | IRTitle2Pubmedlink | Abstract2Pubmedlink | IRPubmedlink2Title | |

| GPT-3.5 | 2.24% | 3.19% | 1.28% | 2.42% | 2.03% | 1.97% | 1.06% |

| Text-Davinci | 1.31% | 2.24% | 0.8% | 1.60% | 1.76% | 1.93% | 0.4% |

| Falcon 40B | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Falcon40B-instruct | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| LlaMa-27B | 0.04% | 0 | 0.01% | 0 | 0 | 0 | 0 |

| LlaMa-27B-chat | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| LlaMa-2 13B | 0.01% | 0 | 0 | 0 | 0 | 0 | 0 |

| LlaMa-2 70B | 0 | 0 | 0 | 0 | 0< |