Metapath and Entity-Aware Graph Neural Network for Recommendation

Abstract. In graph neural networks (GNNs), message passing iteratively aggregates each node's information from its direct neighbors while neglecting the sequential nature of multi-hop node connections. Such sequential node connections, e.g., metapaths, capture critical insights for downstream tasks. Concretely, in recommender systems (RSs), disregarding these insights leads to inadequate distillation of collaborative signals. In this paper, we employ collaborative subgraphs (CSGs) and metapaths to form metapath-aware subgraphs, which explicitly capture sequential semantics in graph structures. We propose the metaPath and Entity-Aware Graph Neural Network (PEAGNN), which trains multilayer GNNs to perform metapath-aware information aggregation on such subgraphs. The aggregated information from different metapaths is then fused using an attention mechanism. Finally, PEAGNN yields node and subgraph representations, which are used to train an MLP that predicts scores for target user-item pairs. To leverage the local structure of CSGs, we present entity-awareness, which acts as a contrastive regularizer on node embeddings. Moreover, PEAGNN can be combined with prominent layers such as GAT, GCN and GraphSage. Our empirical evaluation shows that the proposed technique outperforms competitive baselines on several datasets for recommendation tasks. Further analysis demonstrates that PEAGNN also learns meaningful metapath combinations from a given set of metapaths.

1 Introduction

Integrating content information for user preference prediction remains a challenging task in the development of recommender systems. In spite of their effectiveness, most collaborative filtering (CF) methods [23,16,28,32] are still incapable of modeling content information such as user profiles and item features [20,25]. Several methods have been proposed to address this problem. Most of them fall into two categories: factorization methods and graph-based methods. Factorization methods such as the factorization machine (FM) [26], the neural factorization machine (NFM) [14] and Wide&Deep models [6] fuse the numerical features of each individual training sample. These methods yield competitive performance on several datasets. However, they neglect the dependencies among the content information. Graph-based methods such as NGCF [37], KGAT [36], KGCN [35], Multi-GCCF [33] and LGC [15] represent recommender systems with graph-structured data and exploit the graph structure to enhance the node-level representations for better recommendation performance [3,37,42,31].

Note that learning such node-level representations loses the correspondences and interactions between the content information of users and items, because the node embeddings are learned independently, as indicated by Zhang et al. [44]. Another disadvantage of previous GNN-based methods is that the sequential nature of connectivity relations is either ignored (knowledge-graph-based methods) or mixed up (GNN-based methods) without explicit modelling of multi-hop structure.

A natural way to capture the inter- and intra-relations between content features and user-item pairs is to explore the high-order information encoded by metapaths [7,43]. A metapath denotes a set of composite relations designed for representing multi-hop structure and sequential semantics. To the best of our knowledge, only a limited number of efforts have been made to enhance GNNs with metapaths. A prominent metapath-based method is MAGNN [10], which aggregates intra-metapath information for each path instance. As a consequence, MAGNN suffers from high memory consumption. MEIRec [8] utilizes the structural information in metapaths to improve the node-level representation for intent recommendation, but the method fails to generalize when no user intent or query is available.

To overcome these limitations, we propose the MetaPath- and Entity-Aware Graph Neural Network (PEAGNN), a unified GNN framework that aggregates information over multiple metapath-aware subgraphs and fuses the aggregated information with an attention mechanism to obtain node representations. As a first step, we extract an $h$-hop enclosing collaborative subgraph (CSG). Each CSG is centered at a user-item pair and aims to suppress the influence of feature nodes from other user-item interactions. Such local subgraphs contain rich semantic and collaborative information about user-item interactions. One major difference between the CSGs in our work and the subgraphs proposed by Zhang et al. [44] is that their subgraphs neglect side information by excluding all feature entity nodes.

As a second step, the CSG is decomposed into $\gamma$ metapath-aware subgraphs based on the schema of the selected metapaths. After that, PEAGNN updates the node representations of the given CSG and outputs a CSG graph-level representation, which distills the collective user-item pattern and sequential semantics encoded in the CSG. A multi-layer perceptron is then trained to predict the recommendation score of a user-item pair. To further exploit the local structure of CSGs, we introduce entity-awareness, a contrastive regularizer that pulls the user and item nodes closer to their connected feature entity nodes while simultaneously pushing them apart from the unconnected ones. PEAGNN learns by jointly minimizing the Bayesian Personalized Rank (BPR) loss and the entity-aware loss. Furthermore, PEAGNN can be easily combined with any graph convolution layer such as GAT, GCN and GraphSage.

In contrast to existing metapath-based approaches [7,8,10,34,43], PEAGNN avoids the high computational cost of explicit metapath reconstruction. This is achieved by metapath-guided propagation: the information is propagated along the metapaths "on the fly". This is the primary reason for the computational efficiency of PEAGNN, as it does not need to apply message passing on the recommendation graph. Moreover, because metapath-guided propagation is performed only on individual metapath-aware subgraphs, redundant information propagated from other interactions (subgraphs) is avoided. We discuss this in detail in Sec. 3.

2 Related Work

GNNs are designed for learning on graph-structured data [29,5,22]. They employ a message passing algorithm that iteratively passes messages between nodes to update node representations using the underlying graph structure. An additional pooling layer is typically used to extract a graph representation for graph-level tasks, e.g., graph classification or clustering. GNNs have achieved state-of-the-art performance on node classification [22], graph representation learning [12] and RSs [39]. In RSs, relations such as user-item interactions and user-item features can be represented as multi-typed edges in the graph. Several recent works have proposed GNNs to solve recommendation tasks [37,36,3]. NGCF [37] embeds bipartite graphs of users and items into node representations to capture collaborative signals. GCMC [3] proposed a graph auto-encoder framework, which produces latent features of users and items through a form of differentiable message passing on the user-item graph. KGAT [36] proposed a knowledge-graph-based attentive propagation, which enhances the node features by modeling high-order connectivity information. Multi-GCCF [33] explicitly incorporates multiple graphs in the embedding learning process and considers the intrinsic difference between user nodes and item nodes when performing graph convolution.

Prior to GNNs, several efforts were made to explicitly guide recommender learning with metapaths [18,34,41]. Heitmann et al. [18] utilized linked data from heterogeneous data sources to enhance collaborative filtering for the cold-start problem. Sun et al. [34] converted recommendation tasks to relation prediction problems and tackled them with metapath-based relation reasoning. Yu et al. [41] employed a matrix factorization framework over metapath similarity matrices to perform recommendation. Hu et al. [19] proposed user- and item-metapath based co-attention to fuse metapath information for recommendation but ignored the inter-metapath interactions. Zhao et al. [46] fed the semantics encoded by meta-graphs into a factorization model but neglected the contribution of each individual meta-graph. However, only a limited number of works have attempted to enhance GNNs with metapaths. Two recent works are prominent in this regard: MAGNN [10] and MEIRec [8]. MAGNN aggregates intra-metapath information for each path instance, which leads to unaffordable memory consumption. MEIRec devised a GNN to perform metapath-guided propagation, but it fails to generalize when no user intent is available and does not distinguish the contributions of metapaths. In contrast to these methods, our method saves computation time and memory by adopting a stepwise information propagation over metapath-aware subgraphs. Moreover, unlike existing approaches [44,10,8], PEAGNN employs collaborative subgraphs (CSGs) to separate the semantics introduced by different metapaths and fuses those semantics according to the learned metapath importance.

3 Methodology

3.1 Task description

We formulate the recommendation task as follows. Given a heterogeneous information network (HIN) that includes user-item historical interactions as well as their feature entity nodes, the aim is to learn heterogeneous node representations and graph-level representations from the given collaborative subgraphs. The graph-level representations are used by a prediction model to predict the interaction score of a user-item pair.

3.2 Overview of PEAGNN

3.2 PEAGNN概述

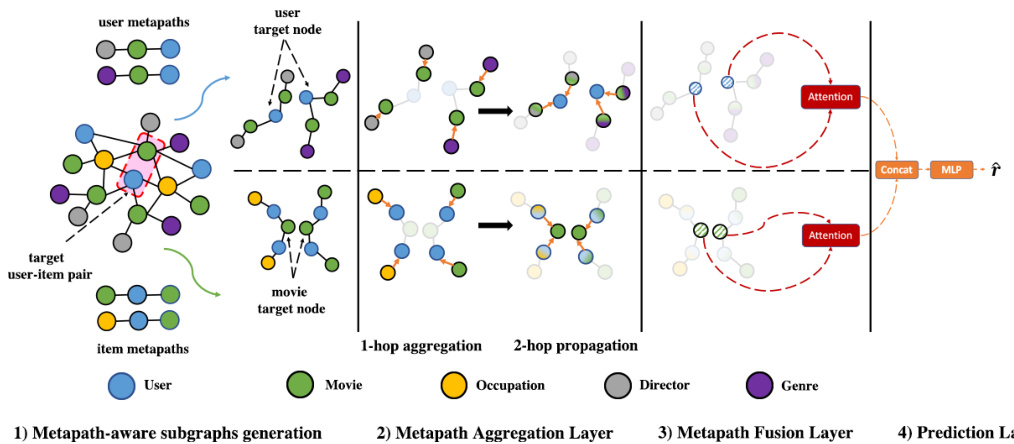

PEAGNN is a unified GNN framework, which exploits and fuses rich sequential semantics in selected metapaths. To leverage the underlying local structure of the graph for recommendation, we introduce an entity-aware regularize r that distinguishes users and items from their unrelated features in a contrastive fashion. Figure 1 illustrates the PEAGNN framework, which consists of three components:

PEAGNN是一个统一的GNN框架,它利用并融合了选定元路径中丰富的序列语义。为了利用图的底层局部结构进行推荐,我们引入了一个实体感知正则化器(regularizer),以对比方式区分用户和物品与其无关特征。图1展示了PEAGNN框架,该框架包含三个组件:

Fig. 1: Illustration of the proposed PEAGNN model on the MovieLens dataset. Subfigure (1) shows the metapath-aware subgraphs generated from a CSG with the given user- and item metapaths. Subfigures (2), (3) and (4) illustrate the metapath-aware information aggregation and fusion workflow of the PEAGNN model. For simplicity, we have only adopted 2-hop metapaths.

图 1: 基于MovieLens数据集提出的PEAGNN模型示意图。子图(1)展示了从CSG生成的元路径感知子图,包含给定的用户和物品元路径。子图(2)、(3)和(4)说明了PEAGNN模型的元路径感知信息聚合与融合流程。为简化展示,仅采用2跳元路径。

1. A Metapath Aggregation Layer, which explicitly aggregates information on metapath-aware subgraphs.
2. A Metapath Fusion Layer, which fuses the aggregated node representations from multiple metapath-aware subgraphs using an attention mechanism.
3. A Prediction Layer, which reads out the graph-level representations of CSGs and estimates the likelihood of potential user-item interactions.

3.3 Metapath Aggregation Layer

The sequential semantics encoded by metapaths reveal different aspects of the connected objects. Appropriate modelling of metapaths can improve the expressiveness of node representations. Our aim is to learn node representations that preserve the sequential semantics in metapaths. PEAGNN saves memory and computation time by performing a step-wise information propagation over metapath-aware subgraphs. This is in contrast to Fan et al. [8], who consider each individual path as input.

Metapath-aware Subgraph

A metapath-aware subgraph is a directed graph induced from the corresponding CSG by following one specific metapath. As the goal is to learn metapath-aware user-item representations for recommendation, it is intuitive to choose metapaths that end with either a user or an item node. This ensures that the information aggregation on metapath-aware subgraphs always ends on the nodes of primary interest.

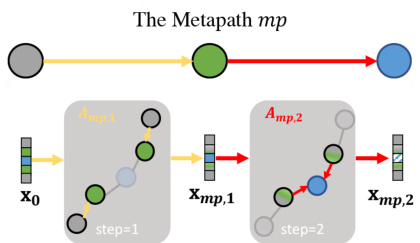

Information Propagation on Metapath-aware Subgraphs

PEAGNN trains a GNN model to perform step-wise information aggregation on metapath-aware subgraphs. By stacking multiple GNN layers, PEAGNN is capable not only of explicitly exploring the multi-hop connectivity in a metapath but also of capturing the collaborative signal effectively. Fig. 2 illustrates the flow of information propagation on a metapath-aware subgraph generated from the metapath $mp$. Here, $X_{mp,k}$ denotes the node representations on the metapath $mp$ after the $k$-th propagation step, and $A_{mp,k}$ is the adjacency matrix of the metapath $mp$ at step $k$. We use orange and red to highlight the edges being propagated at a given aggregation step. Considering the high-order semantics revealed by multi-hop metapaths, we stack multiple GNN layers and recurrently aggregate the representations on the metapaths, so that the high-order semantics are injected into the node representations. The metapath-aware information aggregation is as follows:
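Written out under the definitions in this paragraph (a reconstruction; the layer symbol $\mathrm{GNN}_{k}$ is an assumed name for the $k$-th stacked GNN layer, and this is not necessarily the authors' exact notation), the step-wise aggregation can be expressed as:

$$X_{mp,k} = \mathrm{GNN}_{k}\left(X_{mp,k-1},\, A_{mp,k}\right), \qquad k = 1,\dots,N, \qquad X_{mp,0} = X_{0}$$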

where $X_{0}$ denotes the initial node embeddings. Without loss of generality, by stacking $N$ GNN layers we take into account $N$-hop neighbour information from the metapath-aware subgraph. Thus, the node representations in the metapath-aware subgraph are given by:
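In the notation used above, and stated as a reconstruction from the surrounding definitions rather than the authors' exact formula, the output is simply the representation after the last of the $N$ steps:

$$X_{mp} = X_{mp,N}$$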

$X_{mp}$ is the output node representation of the last step on the metapath $mp$.

Fig. 2: Information propagation on a metapath-aware subgraph
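The step-wise propagation described in this section can be illustrated with a minimal sketch. It rests on several assumptions that are not from the paper: a plain mean over in-neighbours stands in for the GNN layer (GAT, GCN or GraphSage), each step's adjacency $A_{mp,k}$ is given as a list of directed edges, and the names `propagate_step` and `propagate_metapath` are hypothetical.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Edge = Tuple[int, int]  # directed edge (source node, target node)


def propagate_step(x: Dict[int, Vector], edges: List[Edge]) -> Dict[int, Vector]:
    """One aggregation step on the edges active at this step.

    Assumption: a mean over the target's own vector and its in-neighbours
    replaces the learned GNN layer used in the paper.
    """
    incoming: Dict[int, List[Vector]] = {}
    for src, dst in edges:
        incoming.setdefault(dst, []).append(x[src])
    out = dict(x)  # nodes without incoming edges keep their representation
    for dst, msgs in incoming.items():
        stacked = msgs + [x[dst]]
        dim = len(x[dst])
        out[dst] = [sum(v[d] for v in stacked) / len(stacked) for d in range(dim)]
    return out


def propagate_metapath(x0: Dict[int, Vector], steps: List[List[Edge]]) -> Dict[int, Vector]:
    """Apply one aggregation step per hop of the metapath, in order."""
    x = x0
    for edges in steps:
        x = propagate_step(x, edges)
    return x


# Toy 2-hop metapath genre -> movie -> user (node 0: genre, 1: movie, 2: user).
x0 = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 0.0]}
steps = [[(0, 1)], [(1, 2)]]
x_mp = propagate_metapath(x0, steps)
print(x_mp[2])
```

Because the edges are applied hop by hop, the genre information reaches the user node only through the movie node, which is the sequential behaviour the metapath is meant to preserve.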

3.4 Metapath Fusion Layer

After information aggregation within the metapath-aware subgraphs, the metapath fusion layer combines and fuses the semantic information revealed by all metapaths. Assume that for a node $v$, a set of node representations $\{\mathbf{x}_{mp_{1}}^{v},\mathbf{x}_{mp_{2}}^{v},\ldots,\mathbf{x}_{mp_{\gamma}}^{v}\}$ is aggregated from $\gamma$ metapaths. The semantics disclosed by different metapaths are not equally important to node representations, so the contribution of every metapath should be adjusted accordingly. Therefore, we leverage soft attention to learn the importance of each metapath, instead of adopting element-wise mean, max or add operators. Note that PEAGNN applies a node-wise attentive fusion of the metapath-aggregated node representations. This is in contrast to previous works, which employ a fixed attention factor for all nodes and consequently fail to capture node-specific metapath preferences. For a given target node $v$, we concatenate its representations from the $\gamma$ metapath-aware subgraphs, denoted as $\mathbf{H}_{v}=[\mathbf{x}_{mp_{1}}^{v};\mathbf{x}_{mp_{2}}^{v};\ldots;\mathbf{x}_{mp_{\gamma}}^{v}]$. The metapath fusion is performed as follows:

where $\mathbf{c}_{v}$ is a vector of metapath importance scores and $\mathbf{W}$ is a matrix of learnable parameters. We then normalize the metapath importance scores using the softmax function to obtain the attention factor of each metapath:
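Spelled out, the softmax normalization takes the standard form below. The entry notation $c_{v,i}$ for the $i$-th component of $\mathbf{c}_{v}$ is an assumption introduced here for illustration:

$$att_{mp_{i}}^{v} = \frac{\exp\left(c_{v,i}\right)}{\sum_{j=1}^{\gamma}\exp\left(c_{v,j}\right)}$$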

where $att_{mp_{i}}^{v}$ denotes the normalized attention factor of metapath $mp_{i}$ on the node $v$. With the learned attention factors, we can fuse all metapath-aggregated node representations into the final metapath-aware node representation, $\mathbf{e}_{v}$, as:
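A fusion consistent with this description is the attention-weighted sum $\mathbf{e}_{v}=\sum_{i=1}^{\gamma} att_{mp_{i}}^{v}\,\mathbf{x}_{mp_{i}}^{v}$. The sketch below illustrates it for a single node. The scoring step is an assumption: each metapath representation is scored by a dot product with a hypothetical vector `w`, which stands in for the learnable matrix $\mathbf{W}$ whose exact use is not specified here.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_metapaths(reps: List[List[float]], w: List[float]) -> Tuple[List[float], List[float]]:
    """Fuse the gamma metapath representations of one node.

    reps: one vector per metapath-aware subgraph for this node.
    w:    hypothetical scoring vector (assumption, stands in for W).
    Returns the attention factors and the fused representation e_v.
    """
    scores = [sum(wi * xi for wi, xi in zip(w, rep)) for rep in reps]
    att = softmax(scores)
    dim = len(reps[0])
    fused = [sum(a * rep[d] for a, rep in zip(att, reps)) for d in range(dim)]
    return att, fused


reps = [[1.0, 0.0], [0.0, 1.0]]
att_equal, e_equal = fuse_metapaths(reps, [0.0, 0.0])  # equal scores
att_skew, e_skew = fuse_metapaths(reps, [1.0, 0.0])    # favours metapath 1
print(att_equal, e_equal)
```

Since the scores are computed per node, two nodes can weight the same metapaths differently, which is the node-wise behaviour contrasted above with a fixed attention factor.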

3.5 Prediction Layer

Next, we read out the node representations of a CSG into a graph-level feature vector. Many pooling methods have been investigated in existing works, such as SumPool, MeanPool, SortPool [45] and DiffPool [40]. We adopt a different pooling strategy, which concatenates the aggregated representations of the center user node, $\mathbf{e}_{u}$, and the center item node, $\mathbf{e}_{i}$, of the CSG.
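Using the same concatenation notation as for $\mathbf{H}_{v}$ (a reconstruction from the description, with $\mathbf{e}_{g}$ denoting the graph-level representation), this readout can be written as:

$$\mathbf{e}_{g} = [\mathbf{e}_{u};\mathbf{e}_{i}]$$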

After obtaining the graph-level representation of a CSG, we use a 2-layer MLP to compute the matching score of a user-item pair. Let $\mathcal{G}_{u,i}$ denote a CSG; the prediction function for the interaction score of user $u$ and item $i$ can then be expressed as follows:
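A 2-layer MLP of this kind has the generic form below, where $\mathbf{e}_{g}$ is the graph-level representation of $\mathcal{G}_{u,i}$. The score symbol $s(u,i)$ is an assumed name, and the expression is a reconstruction from the parameter list rather than the authors' exact formula:

$$s(u,i) = \mathbf{w}_{2}^{\top}\,\sigma\left(\mathbf{w}_{1}^{\top}\mathbf{e}_{g} + \mathbf{b}_{1}\right) + \mathbf{b}_{2}$$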

where $\mathbf{w}_{1}$, $\mathbf{w}_{2}$, $\mathbf{b}_{1}$ and $\mathbf{b}_{2}$ are the trainable parameters of the MLP, which maps the graph-level representation $\mathbf{e}_{g}$ to a scalar matching score, and $\sigma$ is a non-linear activation function (e.g., ReLU).
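The readout and scoring steps can be sketched together as follows. This is an illustrative toy, not the authors' implementation: the function names are hypothetical, the weights are hand-picked rather than trained, and the first layer is stored row-wise so that each row produces one hidden unit.

```python
from typing import List


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def linear(W: List[List[float]], b: List[float], x: List[float]) -> List[float]:
    """Affine map; each row of W yields one output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def predict_score(e_u: List[float], e_i: List[float],
                  W1: List[List[float]], b1: List[float],
                  w2: List[float], b2: float) -> float:
    """Concatenate user and item representations, then apply a 2-layer MLP."""
    e_g = e_u + e_i  # list concatenation = [e_u; e_i]
    hidden = relu(linear(W1, b1, e_g))
    return sum(w * h for w, h in zip(w2, hidden)) + b2


# Hand-picked toy parameters (assumptions for illustration only).
e_u, e_i = [1.0, 2.0], [3.0, -1.0]
W1 = [[1.0, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.0, 1.0]]
b1 = [0.0, 0.0]
w2, b2 = [2.0, 5.0], 0.5
score = predict_score(e_u, e_i, W1, b1, w2, b2)
print(score)
```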

3.6 Graph-level representation for recommendation

Compared to previous GNN-based methods such as NGCF, KGAT, KGCN, Multi-GCCF and LGC, which use node-level representations for recommendation, PEAGNN predicts the matching score of a user-item pair by mapping its corresponding metapath-aware subgraph to a scalar, as shown in Fig. 1. As shown by Zhang et al. [44], methods using node-level representations suffer from the over-smoothness problem [24,22]. Because their node-level representations are learned independently, they fail to model the correspondence of the structural proximity of a node pair. On the other hand, a graph-level GNN with sufficient rounds of message passing can better capture the interactions between the local structures of two nodes [38].

3.7 Training Objective

3.7 训练目标

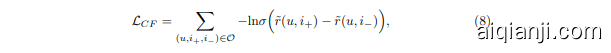

To train model parameters in an end-to-end manner, we minimize the pairwise Bayesian Personalized Rank (BPR) loss [27], which has been widely used in RSs. The BPR loss can be expressed as follows:

为了以端到端方式训练模型参数,我们最小化成对贝叶斯个性化排序 (Bayesian Personalized Rank, BPR) 损失 [27],该损失函数在推荐系统中被广泛使用。BPR损失可表示为:
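In its standard pairwise form, and using the assumed score symbol $s(u,i)$ for the output of the prediction layer, the BPR loss reads as below. The logistic function is written out as $\mathrm{sigmoid}$ to avoid confusion with the activation $\sigma$ of the MLP; any additional regularization term the authors may include is not shown:

$$\mathcal{L}_{BPR} = \sum_{(u,i_{+},i_{-})\in\mathcal{O}} -\ln \mathrm{sigmoid}\left(s(u,i_{+}) - s(u,i_{-})\right)$$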

where $\mathcal{O}=\{(u,i_{+},i_{-})\mid(u,i_{+})\in R_{+},(u,i_{-})\in R_{-}\}$ is the training set, $R_{+}$ is the set of observed user-item interactions (positive samples) and $R_{-}$ is the set of unobserved user-item interactions (negative samples). The detailed training procedure is given in Algorithm 1.
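A minimal sketch of the pairwise BPR loss over a batch of triples is given below. It assumes the scores for the positive and negative items have already been computed by the prediction layer; the function names are illustrative, and the loss is summed over the batch.

```python
import math
from typing import List


def neg_log_sigmoid(x: float) -> float:
    """Compute -ln(sigmoid(x)) in a numerically stable way."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def bpr_loss(pos_scores: List[float], neg_scores: List[float]) -> float:
    """Sum of -ln sigmoid(s(u, i+) - s(u, i-)) over the sampled triples."""
    return sum(neg_log_sigmoid(sp - sn) for sp, sn in zip(pos_scores, neg_scores))


tie = bpr_loss([0.0], [0.0])          # model cannot tell the items apart
good = bpr_loss([5.0], [0.0])         # positive item ranked higher
bad = bpr_loss([0.0], [5.0])          # negative item ranked higher
print(tie, good, bad)
```

The loss shrinks as the positive item's score exceeds the negative item's score by a larger margin, so minimizing it pushes observed interactions above unobserved ones in the ranking.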

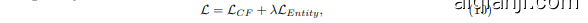

Although user and item representations can be derived by information aggregation and fusion on metapath-aware subgraphs, the local structural proximity of user (item) nodes is still missing. To this end, we propose entity-awareness to regularize the local structure of user (item) nodes. The idea of entity-awareness is to distinguish items or users from their unrelated feature entities in the embedding space. Specifically, entity-awareness is a distance-based contrastive regularization term that pulls the related feature entity nodes closer to the corresponding user (item) nodes while pushing the unrelated ones apart. The regularization term is defined as follows:

where $\mathbf{x}_{f_{+},u},\mathbf{x}_{f_{-},u}$ denote the observed and unobserved feature entity embeddings of user $u$, $\mathbf{x}_{f_{+},i_{+}},\mathbf{x}_{f_{-},i_{-}}$ denote the observed and unobserved feature entity embeddings of positive item $i$, and $d(\cdot,\cdot)$ is a distance measure on the embedding space. The total loss is the weighted sum of these two losses:
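With $\mathcal{L}_{BPR}$ and $\mathcal{L}_{Entity}$ as assumed names for the BPR loss and the entity-awareness term, the weighted sum described here can be written as:

$$\mathcal{L} = \mathcal{L}_{BPR} + \lambda\,\mathcal{L}_{Entity}$$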

where $\lambda$ is the weight of the entity-awareness term. We use the mini-batch Adam optimizer [21]. For a batch randomly sampled from the training set $\mathcal{O}$, we establish the representations by performing information aggregation and fusion on the embeddings, and then update the model parameters via back-propagation.
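The sketch below shows one possible instance of a distance-based contrastive term and the weighted total loss. The exact form of the authors' regularizer is not reproduced here, so everything in it is an assumption: Euclidean distance is used for $d(\cdot,\cdot)$, the per-node term is $-\ln \mathrm{sigmoid}(d(\text{node}, f_{-}) - d(\text{node}, f_{+}))$, and the function names are hypothetical.

```python
import math
from typing import List


def euclidean(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def neg_log_sigmoid(x: float) -> float:
    """Compute -ln(sigmoid(x)) in a numerically stable way."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def entity_aware_term(node: List[float], pos_feat: List[float],
                      neg_feat: List[float]) -> float:
    """Small when the related feature is closer to the node than the unrelated one."""
    margin = euclidean(node, neg_feat) - euclidean(node, pos_feat)
    return neg_log_sigmoid(margin)


def total_loss(bpr: float, entity: float, lam: float) -> float:
    """Weighted sum of the BPR loss and the entity-awareness term."""
    return bpr + lam * entity


user = [0.0, 0.0]
related, unrelated = [0.0, 0.0], [3.0, 4.0]
well_placed = entity_aware_term(user, related, unrelated)   # margin = 5
misplaced = entity_aware_term(user, unrelated, related)     # margin = -5
print(well_placed, misplaced, total_loss(1.0, 2.0, 0.5))
```

In this toy, the term is small when the user's related feature entity lies nearer than the unrelated one and large in the opposite case, which matches the pull-closer and push-apart behaviour described for entity-awareness.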

4 Experiments

We evaluate the effectiveness of our approach through experiments on public datasets. Our experiments aim to address the following research questions:

- RQ1: How does PEAGNN perform compared to other baseline methods?
- RQ2: How does the entity-awareness affect the performance of PEAGNN?
- RQ3: What is the impact of different metapaths in recommendation tasks?