Retrieval as Attention: End-to-end Learning of Retrieval and Reading within a Single Transformer

Retrieval as Attention: 端到端学习单Transformer内的检索与阅读

Abstract

摘要

Systems for knowledge-intensive tasks such as open-domain question answering (QA) usually consist of two stages: efficient retrieval of relevant documents from a large corpus and detailed reading of the selected documents to generate answers. Retrievers and readers are usually modeled separately, which necessitates a cumbersome implementation and is hard to train and adapt in an end-to-end fashion. In this paper, we revisit this design and eschew the separate architecture and training in favor of a single Transformer that performs Retrieval as Attention (ReAtt), and end-toend training solely based on supervision from the end QA task. We demonstrate for the first time that a single model trained end-toend can achieve both competitive retrieval and QA performance, matching or slightly outperforming state-of-the-art separately trained retrievers and readers. Moreover, end-to-end adaptation significantly boosts its performance on out-of-domain datasets in both supervised and unsupervised settings, making our model a simple and adaptable solution for knowledgeintensive tasks. Code and models are available at https://github.com/jzbjyb/ReAtt.

知识密集型任务(如开放域问答( QA ))的系统通常包含两个阶段:从大型语料库中高效检索相关文档,以及对所选文档进行细读以生成答案。检索器( retriever )和阅读器( reader )通常分开建模,这导致实现过程繁琐且难以进行端到端训练与适配。本文重新审视这一设计,摒弃了分离的架构与训练方式,转而采用单一Transformer模型,通过注意力机制实现检索( Retrieval as Attention, ReAtt ),并仅基于最终QA任务的监督进行端到端训练。我们首次证明,端到端训练的单一模型可同时实现具有竞争力的检索与问答性能,匹配或略微超越当前最优的分离式检索器与阅读器组合。此外,端到端适配在监督和无监督场景下均显著提升了模型在领域外数据集的表现,使其成为知识密集型任务的简洁且适应性强的解决方案。代码与模型详见 https://github.com/jzbjyb/ReAtt。

1 Introduction

1 引言

Knowledge-intensive tasks such as question answering (QA), fact checking, and dialogue generation require models to gather relevant information from potentially enormous knowledge corpora (e.g., Wikipedia) and generate answers based on gathered evidence. A widely used solution is to first retrieve a small number of relevant documents from the corpus with a bi-encoder architecture which encodes queries and documents independently for efficiency purposes, then read the retrieved documents in a more careful and expansive way with a cross-encoder architecture which encodes queries and documents jointly (Lee et al., 2019; Guu et al., 2020; Lewis et al., 2020; Izacard et al., 2022). The distinction between retrieval and reading leads to the widely adopted paradigm of treating retrievers and readers separately. Retrievers and readers are usually two separate models with heterogeneous architectures and different training recipes, which is cumbersome to train. Even though two models can be combined in an ad-hoc way for downstream tasks, it hinders effective end-to-end learning and adaptation to new domains.

知识密集型任务(如问答系统(QA)、事实核查和对话生成)要求模型从潜在庞大的知识库(如维基百科)中收集相关信息,并基于收集到的证据生成答案。一种广泛采用的解决方案是首先通过双编码器架构(bi-encoder)从语料库中检索少量相关文档(该架构独立编码查询和文档以提高效率),然后通过交叉编码器架构(cross-encoder)以更细致和全面的方式阅读检索到的文档(该架构联合编码查询和文档)(Lee et al., 2019; Guu et al., 2020; Lewis et al., 2020; Izacard et al., 2022)。检索与阅读的区分导致了广泛采用的将检索器和阅读器分开处理的范式。检索器和阅读器通常是两个独立的模型,具有异构架构和不同的训练方案,这使得训练过程繁琐。尽管这两个模型可以临时组合用于下游任务,但它阻碍了有效的端到端学习以及对新领域的适应。

There have been several attempts to connect up reader and retriever training (Lee et al., 2019; Guu et al., 2020; Lewis et al., 2020; Sachan et al., 2021; Lee et al., 2021a; Izacard et al., 2022). How- ever, retrievers in these works are not learned in a fully end-to-end way. They require either initialization from existing supervised ly trained dense retrievers (Lewis et al., 2020), or expensive unsupervised retrieval pre training as warm-up (Lee et al., 2019; Guu et al., 2020; Sachan et al., 2021; Lee et al., 2021a; Izacard et al., 2022). The reliance on retrieval-specific warm-up and the ad-hoc combination of retrievers and readers makes them less of a unified solution and potentially hinders their domain adaptation ability. With the ultimate goal of facilitating downstream tasks, retriever and reader should instead be fused more organically and learned in a fully end-to-end way.

已有多次尝试将阅读器 (reader) 和检索器 (retriever) 的训练结合起来 (Lee et al., 2019; Guu et al., 2020; Lewis et al., 2020; Sachan et al., 2021; Lee et al., 2021a; Izacard et al., 2022) 。然而,这些工作中的检索器并非以完全端到端的方式学习。它们要么需要从现有有监督训练的稠密检索器初始化 (Lewis et al., 2020) ,要么依赖昂贵的无监督检索预训练作为预热 (Lee et al., 2019; Guu et al., 2020; Sachan et al., 2021; Lee et al., 2021a; Izacard et al., 2022) 。这种对检索专用预热的依赖以及检索器与阅读器的临时组合,使得它们难以成为统一解决方案,并可能削弱其领域适应能力。以下游任务为最终目标,检索器与阅读器应当以更有机的方式融合,并通过完全端到端的方式进行学习。

In this paper, we focus on one of the most important knowledge-intensive tasks, open-domain QA. We ask the following question: is it possible to perform both retrieval and reading within a single Transformer model, and train the model in a fully end-to-end fashion to achieve competitive performance from both perspectives? Such a single-model end-to-end solution eliminates the need for retrieval-specific annotation and warm-up and simplifies retrieval-augmented training, making adaptation to new domains easier. Based on the analogy between self-attention which relates different tokens in a single sequence (Vaswani et al., 2017) and the goal of retrieval which is to relate queries with relevant documents, we hypothesize that self-attention could be a natural fit for retrieval, and it allows an organic fusion of retriever and reader within a single Transformer.

本文聚焦于最重要的知识密集型任务之一——开放域问答(open-domain QA)。我们提出以下问题:是否有可能在单个Transformer模型中同时执行检索和阅读任务,并以完全端到端的方式训练该模型,从而在两方面都取得有竞争力的性能?这种单模型端到端解决方案无需针对检索进行专门标注和预热,简化了检索增强训练,使适应新领域变得更加容易。基于自注意力机制(用于关联单个序列中的不同token)与检索目标(关联查询与相关文档)之间的类比,我们假设自注意力可能天然适合检索任务,并允许在单个Transformer中实现检索器与阅读器的有机融合。

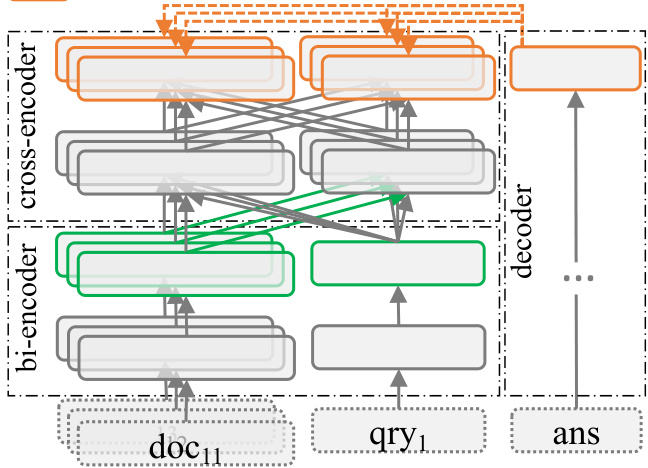

Specifically, we start from a encode-decoder T5 (Raffel et al., 2020) and use it as both retriever and reader. We use the first $B$ encoder layers as bi-encoder to encode queries and documents independently, and the attention score at layer $B+1$ (denoted as retrieval attention) to compute relevance scores, as shown in Fig. 1. We found that directly using self-attention for retrieval underperforms strong retrievers, which we conjecture is because self-attention pretrained on local context is not sufficient to identify relevant information in the large representation space of the whole corpus. To solve this, we propose to compute retrieval attention between a query and a large number of documents and adjust the retrieval attention across documents. For each query, we compute retrieval attention over both close documents that potentially contain positive and hard negative documents, and documents of other queries in the same batch as random negatives. The retrieval attention is adjusted by minimizing its discrepancy from the cross-attention between the decoder and encoder (denoted as target attention), which is indicative of the usefulness of each document in generating answers (Izacard and Grave, 2021a). The resulting Retrieval as Attention model (ReAtt) is a single T5 trained based on only QA annotations and simultan e ou sly learns to promote useful documents through cross-document adjustment.

具体而言,我们从编码器-解码器架构的T5 (Raffel et al., 2020) 出发,将其同时用作检索器和阅读器。前 $B$ 个编码层作为双编码器独立编码查询和文档,第 $B+1$ 层的注意力分数(称为检索注意力)用于计算相关性分数,如图 1 所示。我们发现直接使用自注意力进行检索的效果弱于强检索器,推测原因是基于局部上下文预训练的自注意力不足以在全语料库的大规模表征空间中识别相关信息。为此,我们提出在查询与大量文档间计算检索注意力,并跨文档调整该注意力。对于每个查询,我们既计算其与可能包含正样本和困难负样本的邻近文档间的检索注意力,也计算其与同批次其他查询文档(作为随机负样本)间的检索注意力。通过最小化检索注意力与解码器-编码器间交叉注意力(称为目标注意力)的差异来调整检索注意力,后者能反映各文档对生成答案的有用性 (Izacard and Grave, 2021a)。最终形成的检索即注意力模型 (ReAtt) 是仅基于问答标注训练的单一T5模型,通过跨文档调整同步学习提升有用文档的排序。

We train ReAtt on Natural Questions dataset (NQ) (Kwiatkowski et al., 2019) in a fully end-toend manner. It achieves both competitive retrieval and QA performance, matching or slightly outperforming state-of-the-art retriever ColBERT-NQ (Khattab et al., 2020) trained with explicit retrieval annotations and strong QA model FiD (Izacard and Grave, 2021b,a), demonstrating for the first time end-to-end training can produce competitive retriever and reader within a single model. To further test ReAtt’s generalization and end-to-end adaptation ability, we conduct zero-shot, supervised, and unsupervised adaptation experiments on 7 datasets from the BEIR benchmark (Thakur et al., 2021). In all settings, end-to-end adaptation improves the retrieval performance usually by a large margin, contextual representation (CR) CR for retrieval attention CR for target attention

我们在Natural Questions数据集(NQ) (Kwiatkowski等人,2019)上以完全端到端的方式训练ReAtt。该方法在检索和问答性能上均达到竞争力水平,匹配或略微超过使用显式检索标注训练的最先进检索器ColBERT-NQ (Khattab等人,2020)以及强效问答模型FiD (Izacard和Grave,2021b,a),首次证明端到端训练可以在单一模型中产出具有竞争力的检索器和阅读器。为进一步测试ReAtt的泛化能力和端到端适应能力,我们在BEIR基准(Thakur等人,2021)的7个数据集上进行了零样本、监督和无监督适应实验。在所有设置中,端到端适应通常能大幅提升检索性能,上下文表征(CR) CR用于检索注意力 CR用于目标注意力

self-/cross-attention retrieval (self-)attention target (cross-)attention

自注意力/交叉注意力检索 (自)注意力目标 (交叉)注意力

Figure 1: Illustration of Retrieval as Attention (ReAtt) with the first $B{=}2$ encoder layers as bi-encoder (i.e., retriever) and the rest $L{-}B{=}2$ layers as cross-encoder. During training, the retrieval attention between a query $\pmb{q}_{1}$ and documents $d_{11,12,13}$ is adjusted by minimizing its discrepancy from the target attention. For simplicity, we use a single arrow to represent attention of a single head between multiple tokens.

图 1: 检索即注意力 (ReAtt) 示意图,其中前 $B{=}2$ 个编码器层作为双编码器 (即检索器),其余 $L{-}B{=}2$ 层作为交叉编码器。训练过程中,通过最小化查询 $\pmb{q}_{1}$ 与文档 $d_{11,12,13}$ 间检索注意力与目标注意力的差异来调整注意力权重。为简化表示,我们使用单箭头表示多头注意力中单个注意力头对多 token 的注意力分布。

achieving comparable or superior performance to strong retrieval adaptation and pre training methods.

达到与强大的检索适应和预训练方法相当或更优的性能。

2 Retrieval as Attention (ReAtt)

2 检索即注意力 (ReAtt)

With the goal of developing a single Transformer that can perform both retrieval and reading, and the analogy between retrieval and self-attention, we first introduce architecture changes to allow retrieval as attention $(\S:2.2)$ , then examine how well attention as-is can be directly used to perform retrieval $(\S:2.3)$ .

为了开发一个能够同时执行检索和阅读的单一Transformer,并基于检索与自注意力(self-attention)之间的类比,我们首先引入架构变更以实现检索即注意力功能(§2.2),随后检验原始注意力机制直接用于检索任务的效果(§2.3)。

2.1 Formal Definition

2.1 形式化定义

We first briefly define the task of retrieval and question answering. As mentioned in the introduction, queries and documents need to be represented independently for efficient retrieval which implies a bi-encoder architecture that has no interaction between queries and documents. Without loss of generality, we use $E_{d}=\mathrm{biencoder}(d)$ to denote one or multiple representations generated by a bi-encoder based on a document from a corpus $d\in\mathcal{D}$ , and likewise $E_{q}=\mathrm{biencoder}(q)$ to denote query representations.1 The top $\mathbf{\nabla\cdot}\mathbf{k}$ documents most relevant to a query are retrieved by $\mathcal{D}_{q}^{\mathrm{ret}}=\arg\mathrm{topk}_{d\in\mathcal{D}}r(E_{q},E_{d})$ , where function $r$ computes relevance based on query and document representations which can be as simple as a dot product if queries and documents are encoded into a single vector, and $\mathcal{D}_{\boldsymbol{q}}^{\mathrm{ret}}$ stands for the returned documents. We consider encoder-decoder-based generative question answering in this paper, which jointly represents queries and retrieved documents with the encoder $E_{q,d}=\operatorname{crossencoder}(q,d)$ , and generates the answer $\textbf{\em a}$ auto regressive ly with the decoder $P^{\mathrm{gen}}({\pmb a}|{\pmb q},{\pmb d})=P^{\mathrm{gen}}({\pmb a}|E_{{\pmb q},{\pmb d}})$ . To handle multiple retrieved documents, we follow the fusion-in-decoder model (FiD) (Izacard and Grave, 2021b) which encodes each query-document pair independently and fuse these representations in decoder through cross-attention $P^{\mathrm{gen}}(a|\pmb{q},T_{\pmb{q}}^{\mathrm{ret}})~=$ $P^{\mathrm{gen}}(a|E_{q,d_{1}},...,E_{q,d_{|\mathcal{D}_{q}^{\mathrm{ret}}|}})$ . Negative log likelihood (NLL) is used in optimization $\begin{array}{r l}{{\mathcal{L}}_{\mathrm{QA}}}&{{}=}\end{array}$ $-\log P^{\mathrm{gen}}({\pmb a}|{\pmb q},\mathcal{D}_{{\pmb q}}^{\mathrm{ret}})$ .

我们首先简要定义检索与问答任务。如引言所述,为了实现高效检索,查询和文档需要独立表示,这意味着采用双编码器架构,查询与文档之间不存在交互。在不失一般性的情况下,我们使用$E_{d}=\mathrm{biencoder}(d)$表示基于语料库文档$d\in\mathcal{D}$通过双编码器生成的一个或多个表示,同理$E_{q}=\mathrm{biencoder}(q)$表示查询表示。通过$\mathcal{D}_{q}^{\mathrm{ret}}=\arg\mathrm{topk}_{d\in\mathcal{D}}r(E_{q},E_{d})$检索与查询最相关的前$\mathbf{\nabla\cdot}\mathbf{k}$篇文档,其中函数$r$基于查询和文档表示计算相关性(若查询和文档被编码为单个向量,则可简化为点积),$\mathcal{D}_{\boldsymbol{q}}^{\mathrm{ret}}$代表返回的文档。本文研究基于编码器-解码器的生成式问答,该方法通过编码器$E_{q,d}=\operatorname{crossencoder}(q,d)$联合表示查询与检索文档,并通过解码器自回归生成答案$\textbf{\em a}$:$P^{\mathrm{gen}}({\pmb a}|{\pmb q},{\pmb d})=P^{\mathrm{gen}}({\pmb a}|E_{{\pmb q},{\pmb d}})$。为处理多篇检索文档,我们采用解码器融合模型(FiD)(Izacard and Grave, 2021b),该模型独立编码每个查询-文档对,并通过解码器的交叉注意力融合这些表示:$P^{\mathrm{gen}}(a|\pmb{q},T_{\pmb{q}}^{\mathrm{ret}})~=$$P^{\mathrm{gen}}(a|E_{q,d_{1}},...,E_{q,d_{|\mathcal{D}_{q}^{\mathrm{ret}}|}})$。优化时使用负对数似然(NLL):$\begin{array}{r l}{{\mathcal{L}}_{\mathrm{QA}}}&{{}=}\end{array}$$-\log P^{\mathrm{gen}}({\pmb a}|{\pmb q},\mathcal{D}_{{\pmb q}}^{\mathrm{ret}})$。

2.2 Leveraging Attention for Retrieval

2.2 利用注意力机制进行检索

Next, we introduce our method that directly uses self-attention between queries and documents as retrieval scores.

接下来,我们介绍直接利用查询与文档间自注意力 (self-attention) 作为检索分数的方法。

Putting the Retriever into Transformers As illustrated in Fig. 1, we choose T5 (Raffel et al., 2020) as our base model, use the first $B$ layers of the encoder as the bi-encoder “retriever” by disabling self-attention between queries and documents, and the remaining $L-B$ layers as the crossencoder “reader”. We use the self-attention paid from query tokens to document tokens at the $B+1$ - th layer as the retrieval score, which is denoted as retrieval attention (green arrows in Fig. 1). It is computed based on the independent query and document contextual representations from the last ( $B$ - th) layer of the bi-encoder (green blocks in Fig. 1). Formally for an $H$ -head Transformer, document and query representations are:

将检索器融入Transformer

如图1所示,我们选择T5 (Raffel et al., 2020)作为基础模型,通过禁用查询和文档之间的自注意力机制,将编码器的前$B$层作为双编码器"检索器",剩余$L-B$层作为交叉编码器"阅读器"。我们使用查询token到文档token在第$B+1$层的自注意力作为检索分数,称为检索注意力(图1中绿色箭头)。该分数基于双编码器最后一层(第$B$层)生成的独立查询和文档上下文表示计算得出(图1中绿色块)。对于$H$头Transformer,文档和查询的表示形式为:

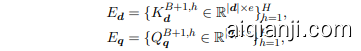

where $K$ and $Q$ are key and query vectors of the token sequence used in self-attention, $|d|$ and $|q|$ are document and query length, and $e$ is the dimensionality of each head. The retrieval attention matrix from query tokens to document before softmax for one head is computed by:

其中 $K$ 和 $Q$ 是自注意力(self-attention)中使用的token序列的键向量和查询向量,$|d|$ 和 $|q|$ 分别表示文档和查询的长度,$e$ 是每个注意力头的维度。单个注意力头在softmax前的查询token到文档的检索注意力矩阵计算公式为:

Directly using attention for retrieval can not only leverage its ability to identify relatedness, it is also a natural and simple way to achieve both retrieval and reading in a single Transformer with minimal architectural changes, which facilitates our final goal of end-to-end learning.

直接利用注意力机制进行检索不仅能发挥其识别相关性的能力,也是以最小架构改动在单一Transformer中同时实现检索与阅读的自然简洁方案,这有助于我们实现端到端学习的最终目标。

From Token Attention to Document Relevance Given the token-level attention scores $A_{\pmb{q},\pmb{d}}^{B+1,h}$ d , the relevance between $\pmb q$ and $^d$ is computed by avg-max aggregation: choosing the most relevant document token for each query token (i.e., max) then averaging across query tokens:

从Token注意力到文档相关性

给定Token级别的注意力分数$A_{\pmb{q},\pmb{d}}^{B+1,h}$,通过avg-max聚合计算$\pmb q$与$^d$之间的相关性:为每个查询Token选择最相关的文档Token(即max),然后对所有查询Token取平均:

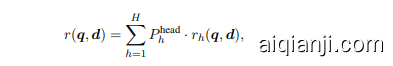

where 1 and 0 refer to the dimension over which the operation is applied. This is similar to the MaxSim and sum operators used in ColBERT (Khattab and Zaharia, 2020), with the intuition that a relevant document should match as many query tokens as possible with the best-matching token. The final relevance is a weighted sum over all heads:

其中1和0表示操作所应用的维度。这与ColBERT (Khattab和Zaharia, 2020)中使用的MaxSim和求和操作类似,其直观思想是相关文档应尽可能多地匹配查询token,并以最佳匹配token为准。最终相关性是所有头部的加权和:

where $P_{h}$ is a learnable weight that sums to one. As explained in the next section, we empirically find only a few attention heads with non-random retrieval performance, and among them one particular head is significantly better than the others. Given this observation, we introduce a low temperature $\tau$ to promote this sparsity $\begin{array}{r}{P_{h}^{\mathrm{head}}=\frac{\exp\left(w_{h}/\tau\right)}{\sum_{h^{\prime}}\exp\left(w_{h}^{\prime}/\tau\right)}}\end{array}$ which always ends with a single head with the great majority of the weight, which is denoted as retrieval head $h^{*}$ . As a result, the learned head weights are practically a head selector, a fact that can also be exploited to make test-time retrieval more efficient.

其中 $P_{h}$ 是一个可学习的权重且总和为一。如下一节所述,我们通过实验发现仅有少数注意力头具有非随机的检索性能,且其中某一个特定头显著优于其他头。基于这一观察,我们引入低温参数 $\tau$ 来促进稀疏性 $\begin{array}{r}{P_{h}^{\mathrm{head}}=\frac{\exp\left(w_{h}/\tau\right)}{\sum_{h^{\prime}}\exp\left(w_{h}^{\prime}/\tau\right)}}\end{array}$,最终总会有一个头占据绝大部分权重,该头被记为检索头 $h^{*}$。因此,学习到的头权重实际上充当了头选择器的作用,这一特性也可用于提升测试时检索的效率。

End-to-end Retrieval with Attention To perform retrieval over a corpus, we first generate key vectors KB+1,h∗ of retrieval head for all document tokens offline and index them with FAISS library (Johnson et al., 2021). For each query token, we issue its vector (Q qB+1,h∗) to the index to retrieve top $\cdot K^{\prime}$ document tokens, which yields a filtered set of documents, each of which has at least one token retrieved by a query token. We then fetch all tokens of filtered documents, compute relevance scores following Eq. 1, and return top $K$ documents with the highest scores $r_{h^{*}}(\pmb{q},\pmb{d})$ . This is similar to the two-stage retrieval in ColBERT (Khattab and Zaharia, 2020), and we reuse their successful practice in index compression and search approximation to make test-time retrieval efficient, which we refer to Santhanam et al. (2021) for details.

端到端注意力检索

为了在语料库上执行检索,我们首先生成所有文档token的检索头关键向量KB+1,h∗,并使用FAISS库(Johnson et al., 2021)离线建立索引。对于每个查询token,我们将其向量(Q qB+1,h∗)发送到索引以检索top $\cdot K^{\prime}$个文档token,从而得到一个过滤后的文档集合,其中每个文档至少有一个token被查询token检索到。接着我们获取所有过滤文档的token,按照公式1计算相关性分数,并返回得分$r_{h^{*}}(\pmb{q},\pmb{d})$最高的top $K$个文档。该方法与ColBERT(Khattab and Zaharia, 2020)中的两阶段检索类似,我们复用其在索引压缩和搜索近似方面的成功实践来提升测试时检索效率,具体细节可参考Santhanam et al. (2021)。

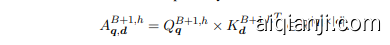

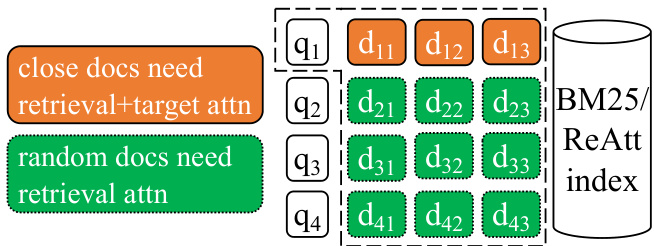

Figure 2: Illustration of approximate attention over the corpus with $\scriptstyle|{\mathcal{Q}}|=4$ queries in a batch and $K{=}3$ close documents per query. We use $\pmb{q}_{1}$ as an example to illustrate the required computation, where close documents require both retrieval and target attention while random documents only require retrieval attention.

图 2: 语料库近似注意力机制示意图,其中批次包含 $\scriptstyle|{\mathcal{Q}}|=4$ 个查询,每个查询对应 $K{=}3$ 篇相关文档。以 $\pmb{q}_{1}$ 为例展示计算过程:相关文档需同时进行检索注意力和目标注意力计算,而随机文档仅需检索注意力计算。

2.3 How Good is Attention As-is?

2.3 注意力机制的现状表现如何?

To examine this question, we use T5-large and test queries from the Natural Question dataset (NQ), retrieve 100 documents with BM25, compute relevance scores $r_{h}(\boldsymbol{q},d)$ with half layers $\mathit{\Theta}^{\prime}B=12\mathit{\Theta}.$ ) as bi-encoder, and measure its correlation with the gold binary annotation. We found that among $H=24$ heads, 4 heads have non-trivial correlations of 0.137, 0.097, 0.082, and 0.059. We fur- ther perform end-to-end retrieval over Wikipedia using the best head, achieving top-10 retrieval accuracy of $43.5%$ , inferior to $55.5%$ of BM25. This demonstrates that there are indeed heads that can relate queries with relevant documents, but they are not competitive. We hypothesize that because self-attention is usually trained by comparing and relating tokens in a local context (512/1024 tokens) it cannot effectively identify relevant tokens in the enormous representation space of a corpus with millions of documents. This discrepancy motivates us to compute retrieval attention between queries and potentially all documents (i.e., attention over the corpus), and adjust attention across documents to promote useful ones.

为了探究这个问题,我们使用T5-large模型并测试来自Natural Question数据集(NQ)的查询,通过BM25检索100篇文档,用半层参数$\mathit{\Theta}^{\prime}B=12\mathit{\Theta}.$ )作为双编码器计算相关性分数$r_{h}(\boldsymbol{q},d)$,并测量其与黄金二元标注的关联性。发现在$H=24$个注意力头中,有4个头显示出0.137、0.097、0.082和0.059的显著相关性。我们进一步使用最佳头在维基百科上进行端到端检索,获得了$43.5%$的top-10检索准确率,低于BM25的$55.5%$。这表明确实存在能将查询与相关文档关联的注意力头,但其性能不具备竞争力。我们假设由于自注意力机制通常通过比较和关联局部上下文(512/1024个token)中的token进行训练,它无法有效识别包含数百万文档的语料库巨大表示空间中的相关token。这种差异促使我们计算查询与所有潜在文档之间的检索注意力(即对整个语料库的注意力),并通过调整文档间注意力来提升有用文档的权重。

3 Learning Retrieval as Attention

3 将检索学习作为注意力机制

We first approximate attention over the corpus at training time by sub-sampling a manageable number of documents for each query containing both potentially relevant and random documents $(\S:3.1)$ . Next, we introduce our end-to-end training objective that optimizes a standard QA loss while also adding supervision to promote attention over documents that are useful for the end task $(\S3.2)$ .

我们首先通过在训练时对每个查询子采样一定数量的文档来近似语料库的注意力机制,这些文档包含潜在相关和随机文档 $(\S:3.1)$。接着,我们介绍了端到端的训练目标,该目标在优化标准问答损失的同时,还通过监督机制促进对最终任务有用的文档的注意力 $(\S3.2)$。

3.1 Approximate Attention over the Corpus

3.1 语料库上的近似注意力机制

Encoding the entire corpus and computing attention between the query and all documents is very expensive. To make it practical, we propose to subsample a small set of documents for each query to approximate the whole corpus. Inspired by negative sampling methods used in dense retriever training (Karpukhin et al., 2020; Xiong et al., 2021; Khattab and Zaharia, 2020), we sub-sample both (1) documents close to queries that can be either relevant or hard negatives, and (2) random documents that are most likely to be easy negatives. This allows the model to distinguish between relevant and hard negative documents, while simultaneously preventing it from losing its ability to distinguish easy negatives, which form the majority of the corpus.

对整个语料库进行编码并计算查询与所有文档之间的注意力机制成本极高。为实现高效处理,我们提出为每个查询子采样少量文档来近似整个语料库。受稠密检索器训练中负采样方法的启发 [20][21][22],我们同时采样两类文档:(1) 与查询接近的文档(可能是相关文档或困难负样本),(2) 随机文档(极可能为简单负样本)。这种方法使模型既能区分相关文档与困难负样本,又能保持对语料库中占主体的简单负样本的判别能力。

Iterative Close Document Sub-sampling To sample documents close to a query $\mathcal{D}_{\pmb q}^{\mathrm{close}}$ , we start from widely used lexical retriever BM25 (Robertson and Zaragoza, 2009) to retrieve $K=100$ documents, as shown by the orange blocks in Fig. 2. We set $K$ to a relatively large number to better approximate the local region, inspired by Izacard and Grave (2021b)’s findings that QA performance increases as more documents are used.

迭代式邻近文档子采样

为采样与查询 $\mathcal{D}_{\pmb q}^{\mathrm{close}}$ 邻近的文档,我们首先采用广泛使用的词法检索器BM25 (Robertson and Zaragoza, 2009) 检索 $K=100$ 篇文档,如图2中橙色模块所示。受Izacard和Grave (2021b) 关于问答性能随文档数量增加而提升的研究启发,我们将 $K$ 设为较大值以更好地近似局部区域。

This fixed set of close documents can become outdated and no longer close to the query anymore as the retrieval attention gets better. To provide dynamic close sub-samples, we re-index the corpus and retrieve a new set of $K$ documents using the current retrieval attention after each iteration. It is similar in spirit to the hard negative mining methods used in Karpukhin et al. (2020); Khattab et al. (2020), with a major difference that we do not manually or heuristic ally annotate documents but instead learn from the end loss with cross-document adjustment, which will be explained in $\S~3.2$ .

随着检索注意力机制的改进,这组固定的相近文档可能会过时,不再与查询密切相关。为了提供动态的相近子样本,我们在每次迭代后重新索引语料库,并使用当前检索注意力机制检索一组新的$K$篇文档。这与Karpukhin等人(2020)和Khattab等人(2020)使用的困难负样本挖掘方法在理念上相似,但主要区别在于我们不是通过人工或启发式方法标注文档,而是通过跨文档调整从最终损失中学习,这将在$\S~3.2$中详细说明。

In-batch Random Document Sub-sampling We use close documents of other queries in the same batch as the random documents of the current query $\mathcal{D}{\pmb q}^{\mathrm{random}}=\cup{\pmb q^{\prime}\in\mathcal{Q}\wedge\pmb q^{\prime}\neq\pmb q}\mathcal{D}{\pmb q^{\prime}}^{\mathrm{close}}$ where $\mathcal{Q}$ contains all queries in a batch, as shown by the green blocks in Fig. 2, which has the advantage of reusing document representations across queries. This is similar to the in-batch negatives used in DPR (Karpukhin et al., 2020) with a major difference that we reuse a token representations $(K{d}^{B+1,h},1\leq$ $h\leq H,$ ) across queries instead of a single-vector document representation.

批内随机文档子采样

我们使用同一批次中其他查询的相近文档作为当前查询的随机文档 $\mathcal{D}{\pmb q}^{\mathrm{random}}=\cup{\pmb q^{\prime}\in\mathcal{Q}\wedge\pmb q^{\prime}\neq\pmb q}\mathcal{D}{\pmb q^{\prime}}^{\mathrm{close}}$ ,其中 $\mathcal{Q}$ 包含批次中的所有查询,如图 2 中的绿色块所示。这种方法具有跨查询复用文档表示的优势,类似于 DPR (Karpukhin et al., 2020) 中使用的批内负样本,但主要区别在于我们复用 token 表示 $(K{d}^{B+1,h},1\leq$ $h\leq H,$ ) 而非单向量文档表示。

3.2 Cross-document Adjustment with Decoder-to-Encoder Attention Distillation

3.2 基于解码器到编码器注意力蒸馏的跨文档调整

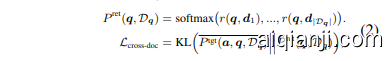

Given the sub-sampled $|\mathcal{Q}|\times K$ documents $\mathcal{D}{q}=$ Dcqlose ∪ Drqandom for each query q, we compute the retrieval attention-based relevance scores $r(\mathbf{q},d)$ and adjust them across multiple documents $d\in\mathcal{D}{q}$ only relying on end task supervision. Since retrieval is simply a means to achieve the downstream task, documents useful for downstream tasks should be promoted by retrieval. Inspired by reader-to-retriever distillation (Izacard and Grave, 2021a; Yang and Seo, 2020), we measure document usefulness based on cross-attention between decoder and encoder, and minimize retrieval attention’s discrepancy from it through distillation. In contrast to Izacard and Grave (2021a) that learns two models iterative ly and alternatively, we optimize QA and distillation loss in a single model simultaneously.

给定每个查询q的子采样$|\mathcal{Q}|\times K$文档$\mathcal{D}{q}=$ Dcqlose ∪ Drqandom,我们计算基于检索注意力的相关性分数$r(\mathbf{q},d)$,并仅依赖端任务监督在多个文档$d\in\mathcal{D}{q}$间调整这些分数。由于检索只是实现下游任务的手段,对下游任务有用的文档应通过检索得到提升。受读者到检索器蒸馏的启发[20][21],我们基于解码器与编码器间的交叉注意力衡量文档有用性,并通过蒸馏最小化检索注意力与此的差异。与[20]中迭代交替学习两个模型不同,我们在单一模型中同时优化问答和蒸馏损失。

Minimizing KL-divergence Between Retrieval and Target Attention Specifically, we denote cross-attention before softmax of the first position/token of the last decoder layer as target attention $C_{{\pmb a},{\pmb q},\mathcal{D}{{\pmb q}}}\in\mathbb{R}^{H\times|\mathcal{D}{{\pmb q}}|\times(|{\pmb d}|+|{\pmb q}|)}$ where $\textbf{\em a}$ is the answer, $|\mathcal{D}{q}|$ is the number of sub-sampled documents to be fused by the decoder $(\S\enspace2.1)$ , and $|d|$ is document length.2 To aggregate tokenlevel target attention into document-level distribution $P^{\mathrm{tgi}}(a,q,\mathcal{D}{q})\in\mathbb{R}^{|\mathcal{D}{q}|}$ , we first perform soft- max over all tokens in all query-document pairs $(|\mathcal{D}{q}|\times(|d|+|q|))$ , sum over tokens of each querydocument pair $(|d|+|q|)$ , then average across multiple heads $(H)$ :

最小化检索与目标注意力间的KL散度

具体而言,我们将最后一个解码器层首位置/token在softmax前的交叉注意力定义为目标注意力 $C_{{\pmb a},{\pmb q},\mathcal{D}{{\pmb q}}}\in\mathbb{R}^{H\times|\mathcal{D}{{\pmb q}}|\times(|{\pmb d}|+|{\pmb q}|)}$ ,其中 $\textbf{\em a}$ 是答案, $|\mathcal{D}{q}|$ 表示需通过解码器融合的子采样文档数量 $(\S\enspace2.1)$ , $|d|$ 为文档长度。为将token级目标注意力聚合为文档级分布 $P^{\mathrm{tgi}}(a,q,\mathcal{D}{q})\in\mathbb{R}^{|\mathcal{D}{q}|}$ ,我们首先对所有查询-文档对的token $(|\mathcal{D}{q}|\times(|d|+|q|))$ 执行softmax,再对每个查询-文档对的token $(|d|+|q|)$ 求和,最后在多头注意力 $(H)$ 上取平均:

Given relevance scores obtained from retrieval attention, the final cross-document adjustment loss is the KL-divergence between relevance distribution $P^{\mathrm{ret}}$ and target distribution $P^{\mathrm{tgt}}$ :

给定从检索注意力获取的相关性分数,最终的跨文档调整损失是相关性分布 $P^{\mathrm{ret}}$ 与目标分布 $P^{\mathrm{tgt}}$ 之间的KL散度:

where the overline indicates stop gradient back propagation to target distributions. Our final loss combines QA loss and cross-document adjustment loss with $\alpha$ as combination weight.

其中上划线表示对目标分布停止梯度反向传播。我们的最终损失结合了QA损失和跨文档调整损失,以$\alpha$作为组合权重。

Zero Target Attention for Random Documents For a batch with $|\mathcal{Q}|$ queries, we need to compute retrieval attention and target attention between $|\mathcal{Q}|\times|\mathcal{Q}|\times K$ query-document pairs. This is both computation- and memory-intensive when batch size is large, especially for target attention because it requires $L-B$ layers of joint encoding of querydocument pairs in the cross-encoder. To alleviate this, we make a simple and effective assumption that in-batch random documents are not relevant to the current query thus having zero target attention: $P^{\mathrm{tgt}}(\pmb{a},\pmb{q},\mathcal{D}{\pmb{q}}^{\mathrm{random}})\in\mathbb{R}^{|\mathcal{D}{\pmb{q}}^{\mathrm{random}}|}\leftarrow0$ rqandom| ← 0. As a result, we only need to run cross-encoder and decoder for $K$ close documents of each query, as shown in Fig. 2. In Appendix A we will introduce our efficient implementation to make it possible to run a large batch size over a limited number of GPUs.

随机文档的零目标注意力

对于包含$|\mathcal{Q}|$个查询的批次,我们需要计算$|\mathcal{Q}|\times|\mathcal{Q}|\times K$个查询-文档对的检索注意力和目标注意力。当批次规模较大时,这在计算和内存上都十分密集,尤其是目标注意力,因为它需要在交叉编码器中对查询-文档对进行$L-B$层的联合编码。为了缓解这一问题,我们提出一个简单有效的假设:批次内的随机文档与当前查询不相关,因此目标注意力为零:$P^{\mathrm{tgt}}(\pmb{a},\pmb{q},\mathcal{D}{\pmb{q}}^{\mathrm{random}})\in\mathbb{R}^{|\mathcal{D}{\pmb{q}}^{\mathrm{random}}|}\leftarrow0$。这样一来,我们只需为每个查询的$K$个相近文档运行交叉编码器和解码器,如图2所示。附录A将介绍我们的高效实现方案,使得在有限GPU数量下运行大批次规模成为可能。

3.3 Domain Adaptation Methods

3.3 领域自适应方法

One of the major benefits of a single end-to-end trainable model is that given a new corpus from a new domain, possibly without retrieval annotations, we can easily adapt it by end-to-end training. This section describes how we adapt ReAtt under different setups.

单端到端可训练模型的主要优势之一是,给定来自新领域的语料库(可能没有检索标注),我们可以通过端到端训练轻松适应它。本节描述了我们如何在不同设置下调整ReAtt。

We consider adapting ReAtt with (1) QA supervision, (2) information retrieval (IR) supervision, or (3) unsupervised adaptation where we only have access to the document corpus. Although our goal is to learn retrieval through downstream tasks instead of retrieval supervision, being able to consume retrieval annotations is helpful when retrieval supervision is indeed available. To do so, we convert retrieval task with annotations in the form of query-document-relevance triples $\langle\pmb{q},\pmb{d},l\rangle$ into a generative task: given a query, the target is to generate its relevant document and the corresponding relevance with the following format “relevance: $l$ . $\overrightarrow{d}^{\cdot}$ . If a query has multiple relevant documents, we follow Izacard and Grave (2021b) to randomly sample one of them. For unsupervised adaptation, with simplicity as our primary goal, we randomly choose one sentence from a document and mask one entity, which is considered as the “query”, and have our model generate the masked entity as the “answer”, similar to salient span masking (SSM) used in Guu et al. (2020).

我们考虑通过以下三种方式对ReAtt进行适配:(1) 问答监督,(2) 信息检索(IR)监督,或(3) 仅能访问文档语料的无监督适配。虽然我们的目标是通过下游任务而非检索监督来学习检索,但当确实存在检索标注时,能够利用这些标注是有帮助的。为此,我们将带有查询-文档-相关性三元组$\langle\pmb{q},\pmb{d},l\rangle$形式的检索任务转化为生成式任务:给定一个查询,目标是生成其相关文档及对应相关性,格式为"relevance: $l$ . $\overrightarrow{d}^{\cdot}$"。若一个查询有多个相关文档,我们遵循Izacard和Grave (2021b) 的做法随机采样其中一个。对于无监督适配,出于简洁性考虑,我们随机选择文档中的一个句子并掩码一个实体作为"查询",让模型生成被掩码的实体作为"答案",类似Guu等人(2020) 使用的显著跨度掩码(SSM)方法。

4 In-domain Experiments

4 域内实验

In this section, we examine if supervised ly training ReAtt end-to-end with only QA supervision yields both competitive retrieval and QA performance.

在本节中,我们探讨仅通过问答监督端到端训练ReAtt是否能同时获得具有竞争力的检索和问答性能。

Datasets, Baselines, and Metrics We train our model using the Natural Questions dataset (NQ). We compare retrieval performance with lexical models BM25 (Robertson and Zaragoza, 2009), passage-level dense retrievers DPR, ANCE, coCondenser, FiD-KD, YONO (with and without retrieval pre training) (Karpukhin et al., 2020; Oguz et al., 2021; Xiong et al., 2021; Gao and Callan, 2022; Izacard and Grave, 2021a; Lee et al., 2021a), and token/phrase-level dense retrievers Dense Phrase, ColBERT, ColBERT-NQ (Lee et al., 2021b; Khat- tab and Zaharia, 2020; Khattab et al., 2020).3 Among them ColBERT-NQ, FiD-KD and YONO are the most fair-to-compare baselines because of either similar token-level retrieval granularity (ColBERT-NQ) or similar end-to-end training settings (FiD-KD and YONO). We report top $\mathbf{\nabla\cdot}\mathbf{k}$ retrieval accuracy $(\mathbf{R}@\mathbf{k})$ , the fraction of queries with at least one retrieved document containing answers. We compare QA performance with ORQA, REALM, RAG, FiD, EMDR2, YONO, UnitedQA, and R2-D2 (Lee et al., 2019; Guu et al., 2020; Lewis et al., 2020; Izacard and Grave, 2021b,a; Sachan et al., 2021; Lee et al., 2021a; Cheng et al., 2021; Fajcik et al., 2021) using exact match (EM), among which FiD, $\mathrm{EMDR^{2}}$ , and YONO are the most fair-to-compare baselines because they have similar model sizes and training settings.

数据集、基线模型与评估指标

我们使用自然问题数据集(NQ)训练模型。在检索性能方面,与以下基线模型进行对比:词法模型BM25 (Robertson and Zaragoza, 2009)、段落级稠密检索器DPR/ANCE/coCondenser/FiD-KD/YONO(含/不含检索预训练)(Karpukhin et al., 2020; Oguz et al., 2021; Xiong et al., 2021; Gao and Callan, 2022; Izacard and Grave, 2021a; Lee et al., 2021a),以及Token/短语级稠密检索器Dense Phrase/ColBERT/ColBERT-NQ (Lee et al., 2021b; Khattab and Zaharia, 2020; Khattab et al., 2020)。其中ColBERT-NQ、FiD-KD和YONO因具有相似的Token级检索粒度(ColBERT-NQ)或端到端训练设置(FiD-KD/YONO)而成为最公平的比较基线。

我们报告Top $\mathbf{\nabla\cdot}\mathbf{k}$检索准确率$(\mathbf{R}@\mathbf{k})$,即至少检索到一篇包含答案文档的查询占比。在问答性能方面,与ORQA/REALM/RAG/FiD/EMDR2/YONO/UnitedQA/R2-D2 (Lee et al., 2019; Guu et al., 2020; Lewis et al., 2020; Izacard and Grave, 2021b,a; Sachan et al., 2021; Lee et al., 2021a; Cheng et al., 2021; Fajcik et al., 2021)通过精确匹配(EM)进行对比,其中FiD、$\mathrm{EMDR^{2}}$和YONO因模型规模和训练设置相近成为最公平的比较基线。

5 Implementation Details of ReAtt

5 ReAtt 实现细节

ReAtt is based on T5-large with $B=12$ encoder layers as bi-encoder and temperatures $\tau=0.001$ to select the best retrieval head. We retrieve $K=100$ close documents for each query, and use a batch size of $|\mathcal{Q}|=64\$ queries to obtain in-batch random documents. We use $\alpha=8$ to combine crossdocument adjustment loss with QA loss. We use AdamW with a learning rate of 5e-5, $10%$ steps of warmup, and linear decay. We first warmup crossattention’s ability to distinguish documents by only using the QA loss for 3K steps, then train with the combined losses (Eq. 3) for 4 iterations, where the first iteration uses close documents returned by BM25, and the following 3 iterations use close documents returned by the previous ReAtt model (denoted as ReAtt BM25). Each iteration has 8K update steps and takes $\sim1.5$ days on a single node with $8\times\mathrm{Al00}$ GPUs with 80GB memory. Since DPR (Karpukhin et al., 2020) achieves stronger performance than BM25, training with close documents returned by DPR can potentially reduce training time. We experimented with training on close documents from DPR for a single iteration with 16K steps (denoted as ReAtt DPR). Since both approaches achieve similar performance (Tab. 1 and Tab. 2) and ReAtt DPR is cheaper to train, we use it in other experimental settings.

ReAtt基于T5-large架构,采用$B=12$层编码器作为双编码器,并通过温度参数$\tau=0.001$选择最佳检索头。我们为每个查询检索$K=100$篇相关文档,使用批量大小为$|\mathcal{Q}|=64$的查询组获取批次内随机文档。通过$\alpha=8$将跨文档调整损失与问答损失相结合,采用学习率5e-5的AdamW优化器,包含$10%$的预热步数和线性衰减策略。

训练分两个阶段:

- 前3K步仅使用问答损失预热跨注意力机制的文档区分能力

- 后续进行4轮迭代训练(公式3),首轮使用BM25返回的相关文档,后三轮采用前序ReAtt模型(标记为ReAtt BM25)返回的文档。每轮迭代包含8K更新步,在配备$8\times\mathrm{A100}$(80GB显存)的单个节点上耗时约$\sim1.5$天。

由于DPR [Karpukhin et al., 2020]性能优于BM25,我们尝试用DPR返回的文档进行单轮16K步训练(标记为ReAtt DPR)。如表1和表2所示,两种方案性能相近,但ReAtt DPR训练成本更低,故在其他实验设置中采用该方案。

Table 1: Retrieval performance on NQ. PT is retrieval pre training. Fair-to-compare baselines are highlighted with background color. Best performance is in bold.

表 1: NQ数据集上的检索性能。PT表示检索预训练。公平比较基线已用背景色高亮显示,最佳性能以粗体标注。

| 模型 | R@1 | R@5 | R@20 | R@100 | #Params. |

|---|---|---|---|---|---|

| 监督式检索器 | |||||

| BM25 | 23.9 | 45.9 | 63.8 | 78.9 | - |

| DPR | 45.9 | 68.1 | 80.0 | 85.9 | 220M |

| DPRnew | 52.5 | 72.2 | 81.3 | 87.3 | 220M |

| DPR-PAQ | - | 74.2 | 84.0 | 89.2 | 220M |

| ANCE | - | - | 81.9 | 87.5 | 220M |

| coCondenser | - | 75.8 | 84.3 | 89.0 | 220M |

| DensePhrase | 51.1 | 69.9 | 78.7 | - | 330M |

| ColBERT | - | - | 79.1 | - | 110M |

| ColBERT-NQ | 54.3 | 75.7 | 85.6 | 90.0 | 110M |

| 半监督/无监督检索器 | |||||

| FiD-KD | 49.4 | 73.8 | 84.3 | 89.3 | 220M |

| YONOw/o PT | - | 72.3 | 82.2 | - | 165M |

| YONOw/PT | - | 75.3 | 85.2 | 90.2 | 165M |

| ReAtt DPR | 54.6 | 77.2 | 86.1 | 90.7 | 165M |

| ReAtt BM25 | 55.8 | 77.4 | 86.0 | 90.4 | 165M |

At test-time, we save key vectors of all tokens in the corpus and use exact index from FAISS (i.e., faiss.Index Flat IP) to perform inner-product search. We retrieve $K^{\prime}=2048$ document tokens for each query token and return top-100 documents with the highest aggregated scores (Eq. 1) to generate answers. We found compressing index with clustering and quantization proposed by Santhanam et al. (2021) can greatly reduce search latency and index size with a minor retrieval accuracy loss.

在测试时,我们保存语料库中所有token的关键向量,并使用FAISS (即faiss.Index Flat IP) 的精确索引执行内积搜索。对于每个查询token,我们检索 $K^{\prime}=2048$ 个文档token,并返回聚合分数 (公式1) 最高的前100个文档以生成答案。我们发现,采用Santhanam等人 (2021) 提出的聚类和量化方法压缩索引,能在检索精度损失较小的情况下显著降低搜索延迟和索引大小。

5.1 Overall Results

5.1 总体结果

We compare ReAtt with various retrievers and readers in Tab. 1 and Tab. 2. ReAtt achieves both slightly better retrieval performance than the strongest retriever baseline ColBERT-NQ (Khattab et al., 2020) and comparable QA performance than the strong reader baseline FiD-KD (Izacard and Grave, 2021a) on NQ, demonstrating for the first time that fully end-to-end training using QA supervision can produce both competitive retrieval and QA performance. Compared to another singlemodel architecture YONO (Lee et al., 2021a), ReAtt offers better performance without cumbersome pre training to warm-up retrieval.

我们在表1和表2中将ReAtt与多种检索器和阅读器进行对比。在NQ数据集上,ReAtt不仅以微弱优势超越了最强检索基线ColBERT-NQ (Khattab et al., 2020)的检索性能,还与强阅读基线FiD-KD (Izacard and Grave, 2021a)的问答性能相当,首次证明完全基于问答监督的端到端训练可以同时获得有竞争力的检索和问答性能。相比另一种单模型架构YONO (Lee et al., 2021a),ReAtt无需繁琐的检索预热预训练即可实现更优性能。

Table 2: QA performance on NQ. PT is retrieval pretraining. Fair-to-compare baselines are highlighted. Best performance is in bold.

表 2: NQ问答性能对比。PT表示检索预训练。公平比较基线已高亮显示,最佳性能加粗标注。

| Models | EM | #Params. |

|---|---|---|

| ORQA (Lee et al., 2019) | 33.3 | 330M |

| REALM (Guu et al., 2020) | 40.4 | 330M |

| RAG (Lewis et al., 2020) | 44.5 | 220M |

| FiD (Izacard and Grave, 2021b) | 51.4 | 990M |

| FiD-KD (Izacard and Grave, 2021a) | 54.4 | 990M |

| EMDR2 (Sachan et al., 2021) | 52.5 | 440M |

| YONO w/o Pr (Lee et al., 2021a) | 42.4 | 440M |

| YONO w/ Pr (Lee et al., 2021a) | 53.2 | 440M |

| UnitedQA (Cheng et al., 2021) | 54.7 | 1.870B |

| R2-D2 (Fajcik et al., 2021) | 55.9 | 1.290B |

| ReAttDPR | 54.0 | 770M |

| ReAttBM25 | 54.7 | 770M |

5.2 Ablations

5.2 消融实验

We perform ablation experiments to understand the contribution of each component. Due to resource limitations, all ablations are trained with 2K steps per iteration. We use ReAtt trained with $B{=}12$ bi-encoder layers, $|\mathcal{Q}|{=}16$ batch size, and $\scriptstyle\alpha=8$ cross-document loss weight as the baseline, remove one component or modify one hyperparameter at a time to investigate its effect. As shown in Tab. 3, we found: 1. Only using QA loss without cross-document adjustment (#2) improves retrieval performance over the original T5 (#3), but cross-document adjustment is necessary to achieve further improvement (#1). 2. Iterative ly retrieving close documents with the current model is helpful (#5 vs #1). 3. In-batch random documents are beneficial (#4 vs #1), and a larger batch size leads to larger improvements (#8-11). 4. A larger weight on cross-document adjustment loss improves retrieval performance but hurts QA performance, with $4{\sim}8$ achieving a good trade-off (#12-15). 5. A small number of bi-encoder layers (#6) significantly hurts retrieval while a large number of layers (#7) significantly hurts QA, suggesting choosing equal numbers of layers in bi-encoder and cross-encoder.

我们通过消融实验来理解各组件的贡献。由于资源限制,所有消融实验每次迭代仅训练2K步。以使用 $B{=}12$ 层双向编码器、批次大小 $|\mathcal{Q}|{=}16$ 、跨文档损失权重 $\scriptstyle\alpha=8$ 训练的ReAtt作为基线,每次移除一个组件或调整一个超参数以观察其影响。如 表3 所示,我们发现:1. 仅使用QA损失而不进行跨文档调整 (#2) 比原始T5 (#3) 提升了检索性能,但需跨文档调整才能进一步优化 (#1)。2. 迭代检索当前模型关联文档有助于提升效果 (#5 vs #1)。3. 批次内随机文档具有增益效果 (#4 vs #1),且批次越大提升越显著 (#8-11)。4. 增大跨文档调整损失权重会提升检索性能但损害QA性能,权重 $4{\sim}8$ 可实现较好平衡 (#12-15)。5. 双向编码器层数过少 (#6) 会严重损害检索性能,层数过多 (#7) 则显著影响QA性能,建议双向编码器与交叉编码器采用相同层数。

6 Out-of-domain Generalization and Adaptation

6 域外泛化与适应

In this section, we examine both zero-shot retrieval performance on out-of-domain datasets and ReAtt’s end-to-end adaptability in supervised (QA, IR) and unsupervised settings.

在本节中,我们研究了零样本检索在域外数据集上的性能,以及ReAtt在监督(问答、信息检索)和无监督设置中的端到端适应性。

6.1 Datasets, Baselines, and Metrics

6.1 数据集、基线方法与评估指标

We choose 7 datasets from BEIR (Thakur et al., 2021), a benchmark covering diverse domains and tasks. On each dataset we compare ReAtt with different types of retrievers including BM25, DPR, and ColBERT. We consider 2 QA datasets (BioASQ and FiQA (Tsa tsar on is et al., 2015; Maia et al., 2018)) and one IR dataset (MS MARCO (Nguyen et al., 2016)) to evaluate su- pervised adaptation capability, and 4 other datasets (CQ AD up Stack, TREC-COVID, SCIDOCS, SciFact (Hoogeveen et al., 2015; Voorhees et al., 2020; Cohan et al., 2020; Wadden et al., 2020)) to eval- uate unsupervised adaptation capability. Detailed statistics are listed in Tab. 8. We report nDCG $@10$ to measure retrieval performance and EM to measure QA performance. We group all baselines into three categories and denote them with different colors in the following tables:

我们从涵盖多领域和多任务的基准测试BEIR (Thakur et al., 2021)中选取了7个数据集。在每个数据集上,我们将ReAtt与不同类型的检索器进行比较,包括BM25、DPR和ColBERT。我们选用2个问答数据集(BioASQ和FiQA (Tsa tsar on is et al., 2015; Maia et al., 2018))和1个信息检索数据集(MS MARCO (Nguyen et al., 2016))来评估监督适应能力,以及4个其他数据集(CQADupStack、TREC-COVID、SCIDOCS、SciFact (Hoogeveen et al., 2015; Voorhees et al., 2020; Cohan et al., 2020; Wadden et al., 2020))来评估无监督适应能力。详细统计数据见表8。我们采用nDCG $@10$来衡量检索性能,用EM来衡量问答性能。我们将所有基线方法分为三类,并在下表中用不同颜色标注:

Table 3: Ablations by removing one component or changing one hyper parameter from the ReAtt baseline.

表 3: 从ReAtt基线中移除一个组件或改变一个超参数的消融实验

| # | 方法 | R@1 | R@5 | R@20 | R@100 | EM |

|---|---|---|---|---|---|---|

| 1 | ReAtt基线 (B=12, | Q | =16, α=8) | 41.9 | 68.8 | 82.5 |

| 移除单个组件 | ||||||

| 2 | - 跨文档损失 | 21.7 | 49.0 | 71.5 | 83.5 | 46.0 |

| 3 | - QA (=T5) | 13.2 | 33.7 | 53.6 | 67.7 | 3.0 |

| 4 | - 批内负采样 | 38.1 | 66.0 | 80.3 | 87.6 | 46.7 |

| 5 | - 迭代训练 | 41.2 | 68.3 | 82.0 | 88.4 | 45.0 |

| 双编码器不同层数B | ||||||

| 6 | B=6 | 19.1 | 42.1 | 62.4 | 78.1 | 40.3 |

| 7 | B=18 | 38.2 | 63.8 | 79.3 | 87.4 | 35.2 |

| 不同批次大小Q | ||||||

| 8 | Q | =4 | 39.4 | 66.1 | 80.7 | |

| 9 | Q | =8 | 40.7 | 67.1 | 82.1 | |

| 10 | Q | =32 | 43.6 | 69.4 | 82.8 | |

| 11 | Q | =64 | 45.5 | 71.0 | 83.3 | |

| 不同跨文档损失权重α | ||||||

| 12 | α=1 | 37.4 | 65.4 | 80.9 | 88.0 | 47.3 |

| 13 | α=2 | 39.7 | 66.9 | 81.7 | 88.4 | 47.4 |

| 14 | α=4 | 40.9 | 68.0 | 82.1 | 88.8 | 46.9 |

| 15 | α=16 | 42.0 | 68.8 | 82.5 | 88.8 | 45.5 |

• Supervised adaptation models are trained with downstream task supervision, including RAG trained on BioASQ, Contriever fine-tuned on FiQA, and docT5query, ANCE, ColBERT, and Contriever fine-tuned on MS MARCO (Nogueira and Lin, 2019; Xiong et al., 2021; Khattab and Zaharia, 2020; Izacard et al., 2021). • Unsupervised adaptation models are trained on domain corpus in an unsupervised way such as contrastive learning or pseudo query generation, including SimCSE and TSDAE $^+$ GPL (Gao et al., 2021c; Wang et al., 2021a,b).

• 监督适应模型 (supervised adaptation models) 通过下游任务监督进行训练,包括在BioASQ上训练的RAG、在FiQA上微调的Contriever,以及在MS MARCO上微调的docT5query、ANCE、ColBERT和Contriever (Nogueira and Lin, 2019; Xiong et al., 2021; Khattab and Zaharia, 2020; Izacard et al., 2021)。

• 无监督适应模型 (unsupervised adaptation models) 通过对比学习或伪查询生成等无监督方式在领域语料库上训练,包括SimCSE和TSDAE$^+$GPL (Gao et al., 2021c; Wang et al., 2021a,b)。

• Pre training models are trained on corpora with

• 预训练模型在包含

Table 4: $\mathrm{nDCG}@10$ of zero-shot and supervised adaptation experiments on two QA and one IR datasets. We use colors to denote categories: pre training, unsupervised adaptation, and supervised adaptation. Baselines comparable to ReAtt are highlighted with blue background color. We also show the improvement of ReAtt over zero-shot performance in subscript.

表 4: 两个问答数据集和一个信息检索数据集的零样本和监督适应实验的 $\mathrm{nDCG}@10$ 结果。我们使用颜色区分类别: 预训练、无监督适应和监督适应。与 ReAtt 相当的基线用蓝色背景高亮显示。下标展示了 ReAtt 相比零样本性能的提升。

Table 5: RAG and ReAtt on BioASQ. Each indent indicates fine-tuning one more component than its parent with performance difference colored with green/red. ∗ denotes fine-tuning conducted sequentially instead of jointly with the current component.

表 5: BioASQ上的RAG与ReAtt对比。每级缩进表示比父项多微调一个组件,性能差异用绿色/红色标注。∗表示微调是顺序执行而非与当前组件联合进行。

| #消融实验 | nDCG@1@5 | EM | |

|---|---|---|---|

| 1 | RAG | 14.6 13.0 | 1.3 |

| 2 | +reader | 14.6 13.0 | 27.5 26.2 |

| 3 | + qry enc (e2e) | 0.0 0.0 -13.0 | 25.7 -1.9 |

| 4 | + doc/qry enc | 29.4 27.1 14.1 | 5.0 3.7 |

| 5 | + reader (pipe) | 29.4 27.1 | 27.8 22.8 |

| 6 | + qry enc | 23.3 23.2 -4.0 | 26.2 -1.6 |

| 7 | T5 | 49.2 47.7 | 0.0 |

| 8 | +e2e | 75.2 73.5 25.7 | 44.4 44.4 |

| 9 | ReAtt | 72.8 70.1 | 17.2 |

| 10 | +e2e | 77.4 75.4 5.3 | 47.2 30.0 |

Table 6: $\mathrm{nDCG}@10$ of zero-shot and unsupervised adaptation on four datasets. Format is similar to Tab. 4

表 6: $\mathrm{nDCG}@10$ 在四个数据集上的零样本和无监督适应性能。格式与表 4 类似

| 任务数据集 | QA BioASQ FiQA | 检索 MSMARCO | |

|---|---|---|---|

| 零样本性能 | |||

| BM25 | 68.1 | 23.6 | 22.8 |

| DPR | 14.1 | 11.2 | 17.7 |

| ColBERT-NQ | 65.5 | 23.8 | 32.8 |

| ReAtt | 71.1 | 30.1 | 32.3 |

| 额外训练 | |||

| Contriever | 32.9 | docT5query | |

| SimCSE | 58.1 | 31.4 | ANCE |

| TSDAE+GPL | 61.6 | 34.4 | ColBERT |

| Contrieverw/FT | 38.1 | Contriever | |

| ReAtt | +5.876.9 | +8.538.6 | ReAtt +7.639.9 |

out direct exposure to the target domain, such as Contriever (Izacard et al., 2021) trained with contrastive learning on Wikipedia and CCNet.

在没有直接接触目标领域的情况下,例如通过对比学习在Wikipedia和CCNet上训练的Contriever (Izacard et al., 2021)。

We highlight baselines in the same category as ReAtt in the following tables since comparison between them is relatively fair. Details of adaptation of ReAtt can be found in Appendix B.

我们在以下表格中突出显示与ReAtt同类型的基线方法,因为它们之间的比较相对公平。ReAtt的适配细节详见附录B。

6.2 Experimental Results

6.2 实验结果

Results of supervised and unsupervised adaptation are listed in Tab. 4, Tab. 5, and Tab. 6 respectively.

监督式和无监督式适配的结果分别列于表4、表5和表6。

Zero-shot Generalization Ability As shown in Tab. 4 and Tab. 6, the zero-shot performance of ReAtt is significantly better than other zero-shot baselines on two QA datasets and one fact checking dataset $(+3.0/+6.5/+4.5$ on BioASQ/FiQA/SciFact than the second best), and overall comparable on the rest of datasets $(-0.5/-0.6/-3.0/-1.0$ on MS

零样本泛化能力

如表 4 和表 6 所示,ReAtt 在两个问答数据集和一个事实核查数据集上的零样本性能显著优于其他零样本基线(在 BioASQ/FiQA/SciFact 上比第二名高 $(+3.0/+6.5/+4.5$),其余数据集上的表现总体相当(在 MS 上为 $(-0.5/-0.6/-3.0/-1.0$)。

| 方法 | CQA.TRECC | SCIDOCS | SciFact |

|---|---|---|---|

| BM25 | 29.9 | 65.6 | 15.8 |

| DPR | 15.3 | 33.2 | 7.7 |

| ANCE | 29.6 | 65.4 | 12.2 |

| ColBERT-NQ | 33.9 | 48.9 | 15.6 |

| ReAtt | 33.3 | 62.6 | 14.8 |

| 零样本性能 | |||

| Contriever | 34.5 | 59.6 | 16.5 |

| SimCSE | 29.0 | 68.3 | |

| TSDAE+GPL | 35.1 | 74.6 | |

| ReAtt | +3.336.6 | +13.476.0 | +1.015.8 |

MARCO/CQA./TRECC./SCIDOCS than the best which is usually BM25), demonstrating that our end-to-end training with QA loss on NQ produces a robust retriever. We conjecture that the superior performance on QA datasets can be attributed to our end-to-end training using QA loss which learns retrieval that better aligns with the end task than training with retrieval annotations.

MARCO/CQA./TRECC./SCIDOCS 上优于通常表现最佳的 BM25),这表明我们在 NQ 数据集上采用问答(QA)损失进行的端到端训练能够产生一个鲁棒的检索器。我们推测,在问答数据集上的优异表现可归因于使用 QA 损失进行的端到端训练,这种训练方式学习到的检索比基于检索标注训练更能与最终任务对齐。

Retrieval Adaptation with QA Supervision As shown in the left-hand side of Tab. 4, end-toend adaptation with QA supervision significantly improves ReAtt’s retrieval performance by 5.8/8.5 on BioASQ/FiQA, achieving similar performance as Contriever fine-tuned on FiQA, and better performance than other unsupervised methods, confirming the end-to-end adaptability of our methods.

检索适应与问答监督

如表 4 左侧所示,通过问答监督的端到端适应显著提升了 ReAtt 的检索性能,在 BioASQ/FiQA 上分别提高了 5.8/8.5 分,达到与 FiQA 微调的 Contriever 相近的性能,并优于其他无监督方法,证实了我们方法的端到端适应性。

End-to-end QA Adaptation We perform endto-end adaptation on BioASQ and compare with RAG as a baseline, which combines DPR as retriever and BART as reader, and DPR has a query and document encoder. Since updating document encoder requires corpus re-indexing, it is fixed during fine-tuning. We found end-to-end fine-tuning fails on RAG. To understand why, we conduct a rigorous experiment that breaks down each component of RAG to find the failure point in Tab. 5.

端到端问答适配

我们在BioASQ上执行端到端适配,并以RAG(结合DPR作为检索器、BART作为阅读器)作为基线进行对比。由于更新文档编码器需要重新索引语料库,该组件在微调过程中保持固定。实验发现端到端微调在RAG上失效。为探究原因,我们通过表5的严格实验拆解RAG各组件以定位故障点。

表5:

Starting from the initial model trained on NQ (#1), we first fine-tune the reader while fixing the query encoder (#2), and as expected QA performance improves. However fine-tuning both query encoder and reader (end-to-end #3) makes the retriever collapse with zero relevant documents returned, indicating end-to-end fine-tuning does not work for RAG on new domains. In order to improve both retrieval and QA, we need to fine-tune RAG in a pipeline manner: first fine-tune the retriever (both query and doc encoder) similarly to DPR using retrieval annotations (#4), then finetune the reader (#5). With the DPR-like fine-tuned retriever, end-to-end fine-tuning of query encoder and reader still fails (#6), although the retriever does not completely collapse.

从在NQ上训练的初始模型(#1)开始,我们首先固定查询编码器微调阅读器(#2),如预期那样QA性能得到提升。然而同时微调查询编码器和阅读器(端到端#3)会导致检索器崩溃,返回零相关文档,这表明端到端微调不适用于新领域的RAG。为了同时改进检索和QA性能,我们需要采用流水线方式微调RAG:首先类似DPR使用检索标注微调检索器(包括查询和文档编码器)(#4),然后微调阅读器(#5)。即使采用类DPR微调后的检索器,查询编码器和阅读器的端到端微调仍然失败(#6),不过此时检索器未完全崩溃。

End-to-end fine-tuning of ReAtt improves retrieval and QA simultaneously. Fine-tuning starting from ReAtt trained on NQ is better than starting from T5, indicating the capability learned in NQ could be transferred to BioASQ. Comparing RAG and ReAtt, we identify several keys that enable endto-end adaptation. (1) ReAtt relying on token-level attention has a strong initial performance, (2) crossdocument adjustment over both close and random documents in ReAtt provides a better gradient estimation than only using retrieved documents in RAG, (3) distillation-based loss in ReAtt might be more effective than multiplying the retrieval probability into the final generation probability.

端到端微调 ReAtt 可同时提升检索和问答性能。从在 NQ 数据集上训练的 ReAtt 开始微调优于从 T5 开始,表明在 NQ 中学到的能力可迁移至 BioASQ。对比 RAG 和 ReAtt 时,我们发现了实现端到端适应的几个关键因素:(1) 依赖 token 级注意力的 ReAtt 具备更强的初始性能,(2) ReAtt 中对密切关联文档和随机文档进行的跨文档调整,比 RAG 仅使用检索文档能提供更好的梯度估计,(3)