Hierarchical Prompting Taxonomy: A Universal Evaluation Framework for Large Language Models Aligned with Human Cognitive Principles

Devichand Budagam$^{1}$, Ashutosh Kumar$^{2}$, Mahsa Khoshnoodi$^{3}$, Sankalp KJ$^{4}$, Vinija Jain$^{5,7}$, Aman Chadha$^{6,7}$

$^{1}$Indian Institute of Technology Kharagpur, India; $^{2}$Rochester Institute of Technology, USA; $^{3}$Researcher, Fatima Fellowship; $^{4}$AI Institute, University of South Carolina, USA; $^{5}$Meta, USA; $^{6}$Amazon GenAI, USA; $^{7}$Stanford University, USA

Abstract

Assessing the effectiveness of large language models (LLMs) in performing different tasks is crucial for understanding their strengths and weaknesses. This paper presents the Hierarchical Prompting Taxonomy (HPT), grounded in human cognitive principles and designed to assess LLMs by examining the cognitive demands of various tasks. The HPT utilizes the Hierarchical Prompting Framework (HPF), which arranges five unique prompting strategies in a hierarchical order based on the cognitive demand they place on LLMs relative to human mental capabilities. It assesses the complexity of tasks with the Hierarchical Prompting Index (HPI), which demonstrates the cognitive competencies of LLMs across diverse datasets and offers insights into the cognitive demands that datasets place on different LLMs. This approach enables a comprehensive evaluation of an LLM's problem-solving abilities and the intricacy of a dataset, offering a standardized metric for task complexity. Extensive experiments with multiple datasets and LLMs show that HPF enhances LLM performance by 2% to 63% compared to baseline performance, with GSM8k being the most cognitively complex task among reasoning and coding tasks, with an average HPI of 3.20, confirming the effectiveness of HPT. To support future research and reproducibility in this domain, the implementations of HPT and HPF are publicly available.

Code: https://github.com/devichand579/HPT

1 Introduction

Large Language Models (LLMs) have revolutionized natural language processing (NLP), enabling significant advancements in a wide range of applications. Conventional evaluation frameworks often apply a standard prompting approach to assess different LLMs, regardless of the complexity of the task, which may result in biased and suboptimal outcomes. Moreover, applying the same prompting approach across all samples within a dataset, without considering each sample's relative complexity, compounds this unfairness. To achieve a more balanced evaluation framework, it is essential to account for both the task-solving ability of LLMs and the varying cognitive complexities of the dataset samples. This limitation highlights the need for more sophisticated evaluation methods that can adapt to varying levels of task complexity.

Within this study, complexity is defined as the cognitive demands associated with solving a task or the cognitive load introduced by a prompting strategy on LLMs. Henceforth, the term "complexity" will be applied solely in this context. Task complexity, within the realm of human cognition, pertains to the cognitive requirements that a task imposes, which includes the diverse levels of mental effort necessary for processing, analyzing, and synthesizing information. According to Sweller (1988), tasks become more complex as they require greater cognitive resources, engaging working memory in more demanding processes such as reasoning and problem-solving. Similarly, Anderson et al. (2014) highlight that human cognitive abilities span a continuum from basic recall to higher-order thinking, with increasing difficulty correlating to tasks that demand analysis, synthesis, and evaluation. When applied to LLMs, the complexity of prompting strategies can be systematically evaluated by mapping them onto this human cognitive hierarchy. This alignment allows for an assessment of how LLMs perform tasks that reflect varying degrees of cognitive load, thereby providing a structured framework for understanding the cognitive demands associated with various tasks. By imposing this cognitive complexity framework on LLMs, this paper establishes a universal evaluation method, grounded in human cognitive principles, that enables more precise comparisons of model performance across tasks with varying levels of difficulty.

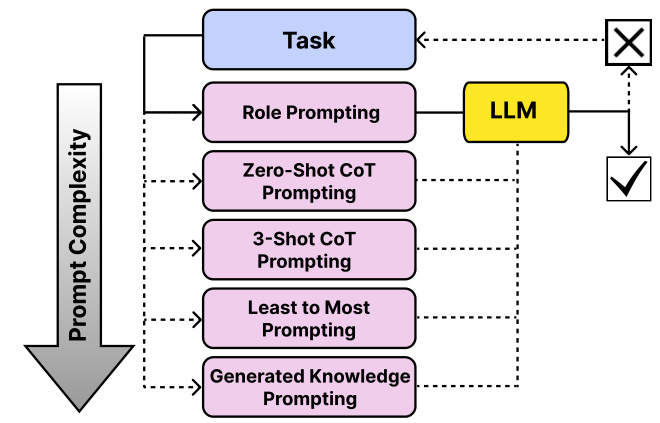

Figure 1: The Hierarchical Prompting Framework includes five distinct prompting strategies, each designed for a different level of task complexity, to ensure the appropriate prompt is selected for the given task. A $\checkmark$ indicates task completion, while a $\times$ signifies task incompletion.

This paper introduces the HPT, a set of rules that maps human cognitive principles onto prompting strategies in order to assess their complexity. It employs the HPF shown in Figure 1, a prompt selection framework that selects the prompt imposing the optimal cognitive load on the LLM for solving the task. HPF enhances the interaction with LLMs and improves performance across various tasks by ensuring prompts resonate with human cognitive principles. The main contributions of this paper are as follows:

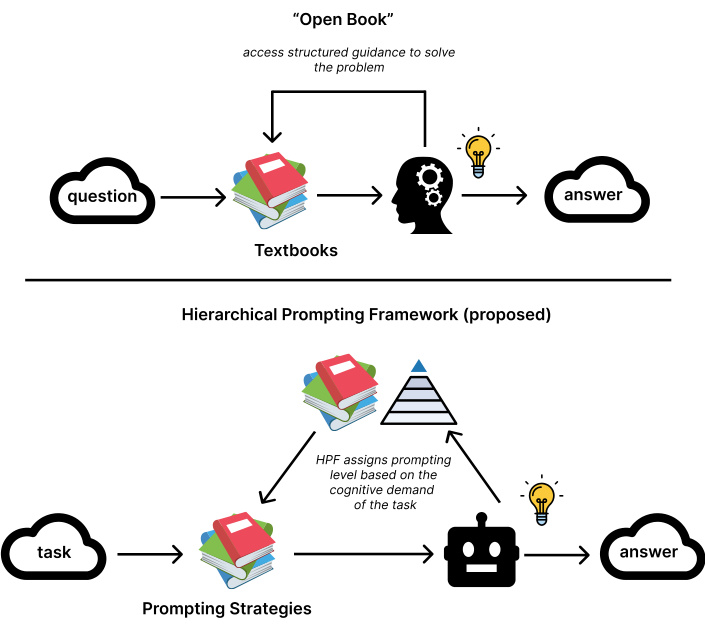

HPF can be effectively compared to an "Open Book" examination, as shown in Figure 2, where questions represent tasks and textbooks serve as prompting strategies. In this analogy, the exam questions vary in complexity, from simple factual recall to intricate analytical problems, analogous to the tasks in HPT that are evaluated based on their cognitive demands. Similarly, textbooks provide structured guidance for solving these questions, much like the HPF organizes prompts in increasing levels of complexity to support LLMs. For example, a straightforward glossary lookup corresponds to a low-complexity task, while solving a multi-step analytical problem requiring synthesis of concepts represents a high-complexity task. The effort a student invests in answering a question mirrors the HPI, which measures the cognitive load placed on the LLM. Just as students perform better with structured resources like textbooks, LLMs improve with well-designed hierarchical prompting strategies, enabling them to tackle progressively complex tasks effectively.

The remainder of the paper is structured as follows: Section 2 reviews the related work on prompting and evaluation in LLMs. Section 3 details the HPT and its associated frameworks. Section 4 outlines the experimental setup, results, and ablation studies. Section 5 concludes the paper. Section 6 discusses the ethical impact of the work.

Figure 2: Analogical framework comparing the HPF with the "Open Book" examination methodology. The diagram illustrates how HPF components (below) mirror traditional educational assessment elements (above), with parallel relationships between task complexity levels, resource utilization (prompts/textbooks), and performance metrics (HPI/student effort). This comparison demonstrates how LLM task complexity scales similarly to educational assessment complexity, from simple lookup tasks to complex synthesis problems.

2 Related Work

The advent of LLMs has revolutionized NLP by demonstrating significant improvements in few-shot and zero-shot learning capabilities. Brown et al. (2020) introduced GPT-3, a 175-billion-parameter autoregressive model, showcasing its ability to perform a wide range of tasks such as question-answering, reading comprehension, translation, and natural language inference without fine-tuning. This study highlighted the potential of very large models for in-context learning while also identifying limitations in commonsense reasoning and specific comprehension tasks. Similarly, Liu et al. (2021) surveyed prompt-based learning, emphasizing the role of prompt engineering in leveraging pre-trained models for few-shot and zero-shot adaptation to new tasks with minimal labeled data.

2.1 Prompt Engineering

Prompting plays a vital role in unlocking the full potential of LLMs. By designing specific input prompts, the LLM's responses can be guided, significantly influencing the quality and relevance of the output. Effective prompting strategies have enhanced LLM performance on tasks ranging from simple question-answering to complex reasoning and problem-solving.

Recent research has explored various approaches to prompting and reasoning evaluation in LLMs. Chain-of-Thought (CoT) prompting (Wei et al. 2022b) elicits step-by-step reasoning, improving performance on complex tasks. Specializing smaller models (Fu et al. 2023) and using large models as reasoning teachers (Ho, Schmid, and Yun 2022) have demonstrated the potential for enhancing reasoning capabilities.

Emergent abilities in LLMs, which appear suddenly at certain scale thresholds, have also been a topic of interest. Wei et al. (2022a) examined these abilities in few-shot prompting, discussing the underlying factors and implications for future scaling. Complementing this, Kojima et al. (2022) demonstrated that LLMs could exhibit multi-step reasoning capabilities in a zero-shot setting by simply modifying the prompt structure, thus highlighting their potential as general reasoning engines.

Yao et al. (2023) introduced the Tree-of-Thoughts framework, enabling LLMs to deliberate over coherent text units and perform heuristic searches for complex reasoning tasks. This approach generalizes over chain-of-thought prompting and has shown significant performance improvements in tasks requiring planning and search, such as creative writing and problem-solving games.

Kong et al. (2024) introduced role-play prompting to improve zero-shot reasoning by constructing role-immersion interactions, which implicitly trigger chain-of-thought processes and enhance performance across diverse reasoning benchmarks. Progressive-hint prompting (Zheng et al. 2023) has been proposed to conceptualize answer generation and guide LLMs toward correct responses. Metacognitive prompting (Wang and Zhao 2024) incorporates self-aware evaluations to enhance understanding abilities.

These works collectively highlight the advancements in leveraging LLMs through innovative prompting techniques, addressing their emergent abilities, reasoning capabilities, interaction strategies, robustness, and evaluation methodologies. Despite significant advancements, the current LLM research reveals several limitations, particularly in terms of prompt design, handling complex reasoning tasks, and evaluating model performance across diverse scenarios. While promising, the emergent abilities of LLMs often lack predictability and control, and the robustness of these LLMs in the face of misleading prompts remains a concern.

2.2 Prompt Optimization and Selection

The challenge of optimizing prompts for LLMs has been addressed in several key studies, each contributing unique methodologies to enhance model performance and efficiency. Shen et al. (2023) introduce PFLAT, a metric utilizing flatness regularization to quantify prompt utility, which leads to improved results in classification tasks. Do et al. (2024) propose a structured three-step methodology comprising data clustering, prompt generation, and evaluation, effectively balancing generality and specificity in prompt selection. ProTeGi (Pryzant et al. 2023) offers a non-parametric approach inspired by gradient descent, leveraging natural language "gradients" to iteratively refine prompts. Wang et al. (2024) present PromISe, which transforms prompt optimization into an explicit chain of thought, employing self-introspection and refinement techniques. Zhou et al. (2023b) proposed DYNAICL, a framework for efficient prompting that dynamically allocates in-context examples based on a meta-controller's predictions, achieving better performance-efficiency tradeoffs compared to uniform example allocation.

These studies aim to automate prompt design, moving away from traditional manual trial-and-error methods while emphasizing efficiency and scalability across various tasks and models. They report significant improvements in LLM performance, with enhancements ranging from 5% to 31% across different benchmarks. This body of work underscores the increasing importance of prompt optimization and selection in unlocking the potential of LLMs and points toward future research avenues, such as exploring theoretical foundations, integrating multiple optimization techniques, and distinguishing between task-specific and general-purpose strategies.

2.3 Evaluation Benchmarks

To facilitate the evaluation and understanding of LLM capabilities, Zhu et al. (2024) introduced PromptBench, a unified library encompassing a variety of LLMs, datasets, evaluation protocols, and adversarial prompt attacks. This modular and extensible tool aims to support collaborative research and advance the comprehension of LLM strengths and weaknesses. Further exploring reasoning capabilities, Qiao et al. (2023) categorized various prompting methods and evaluated their effectiveness across different model scales and reasoning tasks, identifying key open questions for achieving robust and generalizable reasoning. Wang et al. (2021) introduced a multi-task benchmark for the robustness evaluation of LLMs that extends the original GLUE benchmark (Wang et al. 2018) to assess model robustness against adversarial inputs. It incorporates perturbed versions of existing GLUE tasks, using perturbations such as paraphrasing, negation, and noise, to test models' abilities on challenging data. The study highlights that despite their success on clean datasets, state-of-the-art models often struggle with adversarial examples, underscoring the importance of robustness evaluations in model development.

3 Hierarchical Prompting Taxonomy

3.1 Governing Rules

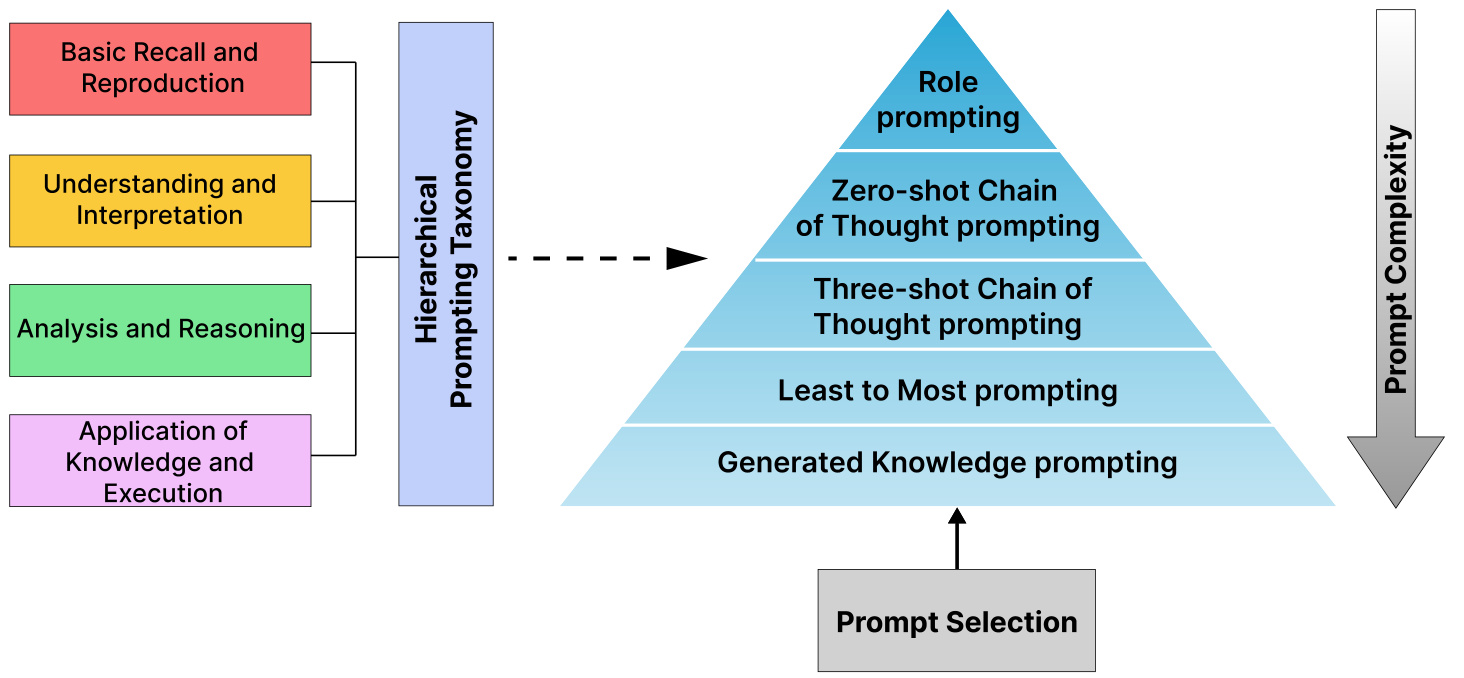

Figure 3 illustrates the HPT, a taxonomy that systematically reflects human cognitive functions as outlined in Bloom (1956). Each rule embodies complex cognitive processes based on established principles from learning and psychology.

Figure 3: Hierarchical Prompting Taxonomy: A taxonomy designed to assess the complexity of prompting strategies based on four criteria: Basic Recall and Reproduction, Understanding and Interpretation, Analysis and Reasoning, and Application of Knowledge and Execution.

than mere understanding because it requires examining structure and identifying patterns and connections.

- Application of Knowledge and Execution: This mirrors the application and evaluation stages of Bloom (1956), where individuals must not only understand and analyze but also use knowledge to perform multi-step tasks, solve complex problems, and execute decisions. It represents the most cognitively complex tasks, which require synthesis of information and practical decision-making, highlighting the critical leap from understanding theory to executing it in practice.

In HPT, the progression from basic recall to application of knowledge reflects increasing cognitive complexity, consistent with educational and cognitive frameworks, where more advanced cognitive processes build on foundational ones, demanding deeper engagement and mental effort.

3.2 Hierarchical Prompting Framework

The HPF consists of five prompting strategies, each assigned a complexity level. These levels are determined by the degree to which the strategies are shaped by the four principles of the HPT. The complexity levels of the prompting strategies are assigned based on human assessment of their relative cognitive loads over a set of 7 different tasks, so that the cognitive demands placed on LLMs are aligned with those placed on humans. This approach enables the assessment of tasks in terms of their complexity and the cognitive load they impose on both humans and LLMs by utilizing HPI. Section 4.4 examines the hierarchical structure of the HPF in conjunction with the LLM-as-a-Judge framework, validating that the cognitive demands on LLMs can be aligned with those of humans.

The set of five prompting strategies was chosen from a diverse range of existing strategies to populate the framework, guided by a human judgment policy that prioritizes comprehensiveness in cognitive demands rather than the sheer number of strategies. See Appendix A for more details. Consequently, the HPF can be replicated or expanded with other relevant prompting strategies that exhibit similar cognitive demands, making the framework adaptable. The five prompting strategies, listed from least to most complex, are as follows:

This approach requires recalling prior prompts, interpreting previous responses, and analyzing them to effectively solve the task, resulting in a highly cognitively demanding strategy.

- Generated Knowledge Prompting (GKP) (Liu et al. 2022): Prompts that require integrating external knowledge to generate relevant information represent the most complex and cognitively demanding strategy. This approach is strongly influenced by rules 2, 3, and 4, as it involves correlating knowledge with the prompt and applying and analyzing external information, making it the most cognitively demanding within the HPT framework. In the experiments, Llama-3 8B is used to generate external knowledge.

3.3 Hierarchical Prompting Index

HPI is an evaluation metric for assessing the task complexity of LLMs over different datasets, which is influenced by the HPT rules. A lower HPI for a dataset suggests that the corresponding LLM is more adept at solving the task with fewer cognition processes. For each dataset instance, we begin with the least complex prompting strategy and progressively move through the HPF prompting strategies until the instance is resolved. The HPI corresponds to the complexity level of the prompting strategy where the LLM first tackles the instance.

Algorithm 1: HPI Metric

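    HPI_List = []
    for each sample i in the evaluation dataset do
        for each level r in the HPF do
            if the LLM resolves the task then
                HPI_List[i] = r
                break
            end if
        end for
        if the LLM failed to resolve the task then
            HPI_List[i] = m + HPI_Dataset
        end if
    end for

$\mathrm{HPI} = \frac{1}{n}\sum_{j=1}^{n} \mathrm{HPI\_List}[j]$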

$m$ is the total number of levels in the HPF, and $n$ is the total number of samples in the evaluation dataset. $\mathrm{HPI}_{\mathrm{Dataset}}$ represents the penalty introduced into the framework by human assessments. For further details on human annotation, see Appendix A.
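The HPI computation described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `hierarchical_prompting_index`, the `solves` callback, and the numbering of levels from 1 to $m$ are assumptions made for this example, and the dataset penalty is passed in as a plain number.

```python
from typing import Callable, Sequence


def hierarchical_prompting_index(
    samples: Sequence[object],
    solves: Callable[[object, int], bool],
    num_levels: int = 5,
    dataset_penalty: float = 0.0,
) -> float:
    """Average, over all samples, of the first HPF level that solves each sample.

    `solves(sample, level)` is a hypothetical callback that prompts the LLM at
    the given level and reports whether the sample was resolved.
    Levels are assumed to be numbered 1..num_levels (an assumption of this sketch).
    """
    if not samples:
        raise ValueError("evaluation dataset is empty")
    scores = []
    for sample in samples:
        # If every level fails, the sample is charged m + HPI_Dataset.
        score = num_levels + dataset_penalty
        for level in range(1, num_levels + 1):
            if solves(sample, level):
                score = level  # first level at which the sample is resolved
                break
        scores.append(score)
    return sum(scores) / len(scores)


# Toy usage: each sample stores the (made-up) first level that solves it,
# or None when no level does.
toy_samples = [1, 3, None, 2]


def toy_solver(first_level, level):
    return first_level is not None and level >= first_level


hpi = hierarchical_prompting_index(
    toy_samples, toy_solver, num_levels=5, dataset_penalty=1.0
)
```

In the toy run, the unsolved sample contributes $5 + 1 = 6$, so the scores are 1, 3, 6, and 2, and the resulting HPI is their mean.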

4 Results

4.1 Experimental Setup

Datasets

The experiments utilized a diverse set of datasets, including MMLU, GSM8k, HumanEval, BoolQ, CSQA, SamSum, and IWSLT en-fr, covering areas such as reasoning, coding, mathematics, question-answering, summarization, and machine translation, to evaluate the framework's robustness and applicability. For further details on evaluation dataset sizes, see Appendix A.

Reasoning: MMLU (Hendrycks et al. 2021) includes multiple-choice questions across 57 subjects, covering areas like humanities, social sciences, physical sciences, basic mathematics, U.S. history, computer science, and law. CommonSenseQA (CSQA) (Talmor et al. 2019) contains 12,000 questions to assess commonsense reasoning.

Coding: HumanEval (Chen et al. 2021a) features 164 coding challenges, each with a function signature, docstring, body, and unit tests, designed to avoid training data overlap with LLMs.

Mathematics: Grade School Math 8K (GSM8k) (Cobbe et al. 2021) comprises 8.5K diverse math problems for multi-step reasoning, focusing on basic arithmetic and early algebra.

Question-Answering: BoolQ (Clark et al. 2019) consists of 16,000 True/False questions based on Wikipedia passages for binary reading comprehension.

Summarization: SamSum (Gliwa et al. 2019) features 16,000 human-generated chat logs with summaries for dialogue summarization.

Machine Translation: IWSLT-2017 en-fr (IWSLT) (Cettolo et al. 2017) is a parallel corpus with thousands of English-French sentence pairs from TED Talks for translation tasks.

Large Language Models

For the evaluation, LLMs with parameter sizes ranging from 7 billion to 12 billion from top open-source models and top proprietary models were selected to determine the effectiveness of the proposed framework across varied parameter scales and architectures.

Additional Evaluation Metrics

- Coding: The Pass@k (Chen et al. 2021b) metric measures the probability of at least one correct solution among the top k outputs, used for evaluating code generation.
- Summarization: ROUGE-L (Lin 2004) evaluates the longest common subsequence between generated text and reference, focusing on sequence-level similarity for summaries and translations.
- Machine Translation: BLEU (Papineni et al. 2002) is a precision-based metric that assesses machine-generated text quality by comparing n-grams with reference texts.
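To make the Pass@k definition concrete, the sketch below uses the widely cited unbiased estimator $1 - \binom{n-c}{k} / \binom{n}{k}$, where $n$ is the number of generated samples per problem and $c$ the number that pass the unit tests. Whether the experiments here use exactly this estimator is an assumption; the function name `pass_at_k` is chosen for illustration only.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k drawn samples is correct.

    n: total samples generated for a problem
    c: number of those samples that pass all unit tests
    k: number of samples drawn without replacement
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples exist, so any draw of k contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# With k = 1 the estimate reduces to the fraction of correct samples, c / n.
example = pass_at_k(n=10, c=3, k=1)
```

For $k = 1$, as in the pass@1 scores reported later, the estimator is simply the share of generated solutions that pass.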

In the experiments, thresholds of 0.15 and 0.20 were established for the summarization and machine translation tasks to define the conditions required for task completion at each complexity level of the HPF. These thresholds allowed for iterative refinement of HPF prompting strategies.
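As an illustration of how a score threshold can act as a completion criterion, the following sketch computes a simple ROUGE-L F-measure from the longest common subsequence and compares it with a threshold. Several details are assumptions made for this example rather than facts from the paper: whitespace tokenization, lowercasing, the balanced F-measure variant, and the helper names `lcs_length`, `rouge_l_f1`, and `is_resolved`.

```python
from typing import Sequence


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence, using one rolling DP row."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """Balanced F-measure of LCS-based precision and recall (assumed variant)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def is_resolved(candidate: str, reference: str, threshold: float = 0.15) -> bool:
    """Treat a sample as completed at the current HPF level if the score clears the threshold."""
    return rouge_l_f1(candidate, reference) >= threshold


score = rouge_l_f1("the cat sat on the mat", "the cat lay on the mat")
```

A check of this kind would play the role of the "LLM resolves the task" test for generation tasks, where exact-match accuracy is not applicable.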

4.2 Results on Standard Benchmarks: MMLU, GSM8k, and HumanEval

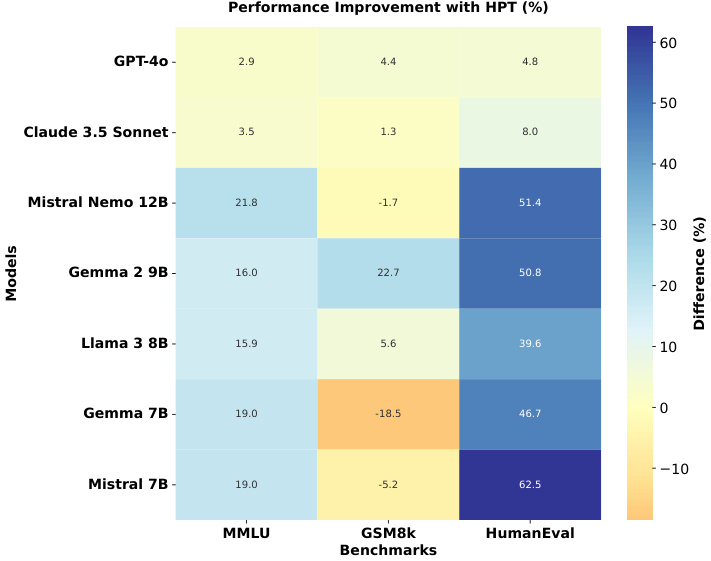

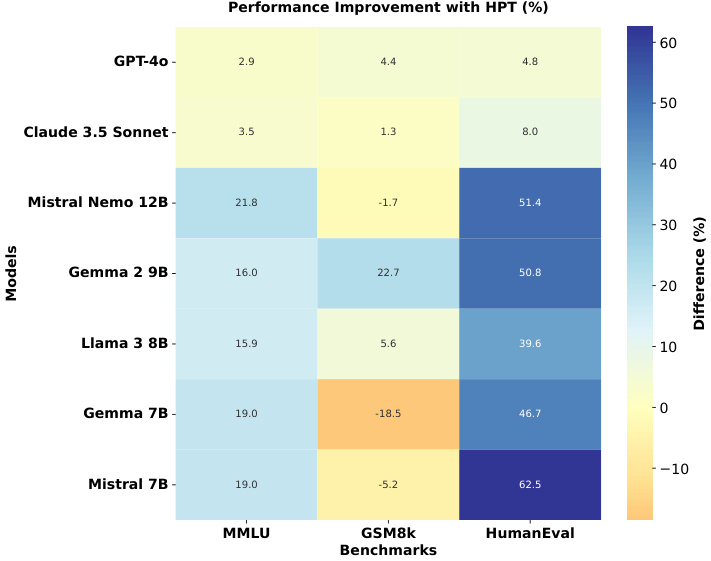

The evaluation of HPF effectiveness, as shown in Figure 4, spans three standard benchmarks: MMLU, GSM8k, and HumanEval. On the MMLU benchmark, which tests general knowledge across multiple domains, all models showed notable improvements over their baseline performance. Mistral-Nemo 12B demonstrated the most substantial MMLU enhancement (+21.8%), while Claude 3.5 Sonnet achieved a consistent improvement of 3.5%. In mathematical reasoning, assessed through GSM8k, the results revealed a correlation with model scale. Larger models like GPT-4 and Claude 3.5 Sonnet showed modest gains (+4.4% and +1.3%, respectively), while smaller models exhibited more variable performance. The HumanEval benchmark, which assesses code generation capabilities, revealed the most dramatic improvements across all models. Mistral 7B achieved an exceptional 62.5% improvement in HumanEval scores, followed by Mistral-Nemo 12B with an impressive 51.4% improvement and Gemma-2 9B with a 50.8% enhancement. These findings indicate that HPF improves performance across all benchmarks for most of the LLMs. Its impact is particularly pronounced in programming tasks, suggesting that the technique may be especially valuable for enhancing code-related capabilities.

Table 1 highlights the improved performance of various LLMs on MMLU, with all models showing an HPI below three. This indicates that reasoning over most MMLU samples requires minimal cognitive effort for these models, compared to baseline multi-shot CoT methods (5-shot), which typically require more than five examples and are more cognitively demanding according to HPT. Interestingly, while Claude 3.5 Sonnet achieves the highest MMLU accuracy, GPT-4o records the best HPI score, showing that minimal cognitive effort does not necessarily equate to the best performance. The enhancement in GSM8k is relatively smaller compared to MMLU, with decreased performance for both Mistral 7B and Gemma 7B. The high HPI values for Gemma 7B and Mistral 7B indicate that none of the five prompting strategies in HPF posed significant cognitive challenges for these LLMs, highlighting a limitation of the HPF. As shown in Table 2, Claude 3.5 Sonnet achieves a perfect pass@1 of 1.00 with low HPI values, outperforming GPT-4o, which scores 0.95 but has a higher HPI. Gemma 7B struggles with the lowest pass@1 of 0.79 and the highest HPI of 3.71, indicating a need for more complex prompting strategies.

Interestingly, HPF significantly enhanced the performance of most LLMs across three benchmark datasets, even when the HPI difference was less than 1 relative to the best performing LLMs. This highlights that tailoring the prompting strategy to align with the complexity of each dataset instance can lead to substantial improvements, achieving performance levels comparable to state-of-the-art LLMs such as GPT-4o and Claude 3.5 Sonnet on these benchmarks.

4.3 Results on Other Datasets

Table 1 presents the performance of LLMs on the BoolQ and CSQA datasets. Notably, no significant insights emerge from the results, aside from GPT-4o performing unexpectedly poorly, which contrasts with its typical performance. With most LLMs achieving near-perfect scores, the BoolQ dataset appears to lack the complexity needed to serve as an effective benchmark for modern LLMs, as they perform exceptionally well even with minimal cognitive prompting strategies. This underscores the utility of HPF in evaluating dataset complexities relative to an LLM, offering researchers valuable insights for designing more challenging and robust benchmarks.

Figure 4: Performance Comparison of HPT-based Evaluation vs. Standard Evaluation: Performance improvements (in %) when using HPT-based evaluation compared to standard evaluation across three benchmarks: MMLU, GSM8k, and HumanEval. Positive values indicate performance gains with HPT, while negative values indicate performance decreases. The baseline standard evaluation scores are sourced from the Hugging Face leaderboard and official research reports.

Figure 5: Hierarchy of prompting strategies with the LLM-as-a-Judge framework, with GPT-4o as the judge.

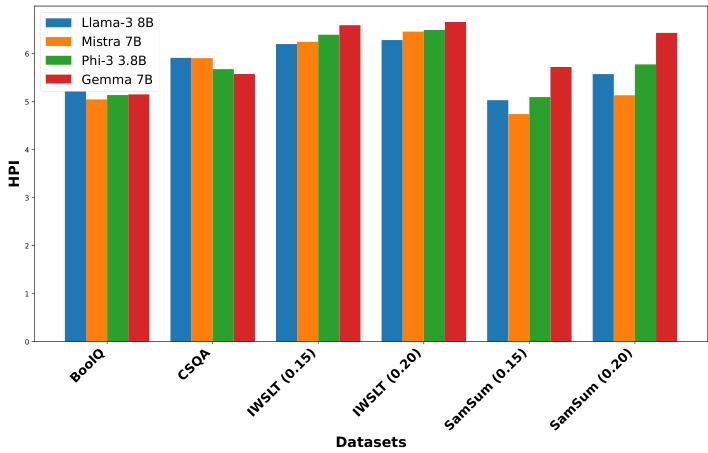

Table 3 presents the performance of LLMs on the IWSLT and SamSum datasets at varying thresholds. GPT-4o consistently achieved the highest scores across all thresholds, while most models, except Gemma 7B, performed similarly. Interestingly, Claude 3.5 Sonnet, which excelled in reasoning tasks, did not perform as strongly in summarization and translation tasks. The threshold selection is guided by the observed performance plateau across most LLMs as the threshold increases. For a detailed explanation of the threshold selection process, please refer to Appendix B.
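To make the role of the threshold concrete, the following is a minimal sketch, assuming that a sample counts as solved at the first HPF level whose metric score (BLEU or ROUGE-L) reaches the threshold. The function name, the `>=` comparison, and the per-level scores are illustrative assumptions, not the authors' implementation.

```python
from typing import Optional, Sequence


def first_level_meeting_threshold(
    scores: Sequence[float], threshold: float
) -> Optional[int]:
    """Return the 1-based index of the first level whose score reaches the threshold.

    `scores` holds one metric value per prompting level, ordered from the
    simplest to the most complex strategy. Returns None if no level qualifies.
    """
    for level, score in enumerate(scores, start=1):
        if score >= threshold:  # assumed comparison; the paper may use a strict one
            return level
    return None


# Hypothetical per-level scores for a single translation sample.
scores_by_level = [0.12, 0.17, 0.21, 0.25, 0.30]

level_at_015 = first_level_meeting_threshold(scores_by_level, 0.15)
level_at_020 = first_level_meeting_threshold(scores_by_level, 0.20)
print(level_at_015, level_at_020)  # prints "2 3"
```

Under this assumption, raising the threshold can only keep the solving level the same or push it higher, which is consistent with the HPI values in Table 3 being larger at 0.20 than at 0.15 for every model.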

Table 1: HPI (lower is better) and accuracy of LLMs across MMLU, GSM8K, BoolQ, and CSQA datasets. Blue indicates datasets where the LLM with the best HPI does not achieve the best performance. Green indicates the LLM with the best performance over the maximum number of datasets.

| Model | MMLU HPI | MMLU Accuracy | GSM8k HPI | GSM8k Accuracy | BoolQ HPI | BoolQ Accuracy | CSQA HPI | CSQA Accuracy |
|---|---|---|---|---|---|---|---|---|
| GPT-4o | 1.81 | 91.61 | 1.71 | 96.43 | 1.32 | 96.82 | 1.65 | 92.54 |
| Claude 3.5 Sonnet | 1.84 | 92.16 | 1.35 | 97.72 | 1.20 | 99.81 | 2.01 | 86.15 |
| Mistral-Nemo 12B | 2.45 | 89.75 | 3.01 | 86.80 | 1.75 | 99.87 | 2.06 | 90.17 |
| Gemma-2 9B | 2.34 | 87.28 | 2.17 | 91.28 | 1.30 | 98.28 | 1.94 | 88.86 |
| Llama-3 8B | 2.84 | 82.63 | 2.34 | 86.20 | 1.37 | 99.30 | 2.43 | 84.76 |
| Gemma 7B | 2.93 | 83.31 | 6.70 | 27.88 | 1.45 | 99.42 | 2.50 | 83.78 |
| Mistral 7B | 2.89 | 81.45 | 5.11 | 46.93 | 1.41 | 98.07 | 2.49 | 82.06 |
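The caption's "blue" condition (the model with the best HPI is not the model with the best accuracy) can be checked directly from the numbers in Table 1. The snippet below is a small illustrative check; the dictionary layout and the function name `mismatched_datasets` are our own choices, and only the numeric values come from the table.

```python
# (HPI, accuracy) per model and dataset, copied from Table 1.
TABLE_1 = {
    "GPT-4o":            {"MMLU": (1.81, 91.61), "GSM8k": (1.71, 96.43), "BoolQ": (1.32, 96.82), "CSQA": (1.65, 92.54)},
    "Claude 3.5 Sonnet": {"MMLU": (1.84, 92.16), "GSM8k": (1.35, 97.72), "BoolQ": (1.20, 99.81), "CSQA": (2.01, 86.15)},
    "Mistral-Nemo 12B":  {"MMLU": (2.45, 89.75), "GSM8k": (3.01, 86.80), "BoolQ": (1.75, 99.87), "CSQA": (2.06, 90.17)},
    "Gemma-2 9B":        {"MMLU": (2.34, 87.28), "GSM8k": (2.17, 91.28), "BoolQ": (1.30, 98.28), "CSQA": (1.94, 88.86)},
    "Llama-3 8B":        {"MMLU": (2.84, 82.63), "GSM8k": (2.34, 86.20), "BoolQ": (1.37, 99.30), "CSQA": (2.43, 84.76)},
    "Gemma 7B":          {"MMLU": (2.93, 83.31), "GSM8k": (6.70, 27.88), "BoolQ": (1.45, 99.42), "CSQA": (2.50, 83.78)},
    "Mistral 7B":        {"MMLU": (2.89, 81.45), "GSM8k": (5.11, 46.93), "BoolQ": (1.41, 98.07), "CSQA": (2.49, 82.06)},
}

DATASETS = ["MMLU", "GSM8k", "BoolQ", "CSQA"]


def mismatched_datasets(table: dict) -> list:
    """Return datasets where the lowest-HPI model is not the highest-accuracy model."""
    result = []
    for dataset in DATASETS:
        best_hpi_model = min(table, key=lambda m: table[m][dataset][0])
        best_acc_model = max(table, key=lambda m: table[m][dataset][1])
        if best_hpi_model != best_acc_model:
            result.append(dataset)
    return result


print(mismatched_datasets(TABLE_1))  # prints ['MMLU', 'BoolQ']
```

On MMLU, GPT-4o has the lowest HPI while Claude 3.5 Sonnet has the highest accuracy; on BoolQ, Claude 3.5 Sonnet has the lowest HPI while Mistral-Nemo 12B has the highest accuracy. On GSM8k and CSQA the two criteria select the same model.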

Table 2: HPI (lower is better) and Pass@1 of LLMs on the HumanEval dataset. Blue indicates datasets where the LLM with the best HPI does not achieve the best performance. Green indicates the LLMs with the best performance over the dataset.

| Model | HumanEval HPI |
|---|---|
| GPT-4o | 2.25 |
| Claude 3.5 Sonnet | 1.04 |
| Mistral-Nemo 12B | 2.07 |
| Gemma-2 9B | 1.01 |
| Llama-3 8B | 1.03 |
| Gemma 7B | 3.71 |
| Mistral 7B | 1.10 |

4.4 Complexity Levels with LLM-as-a-Judge

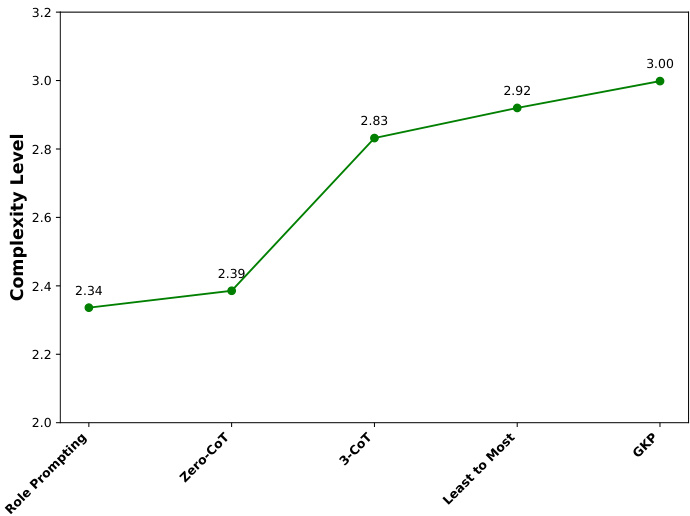

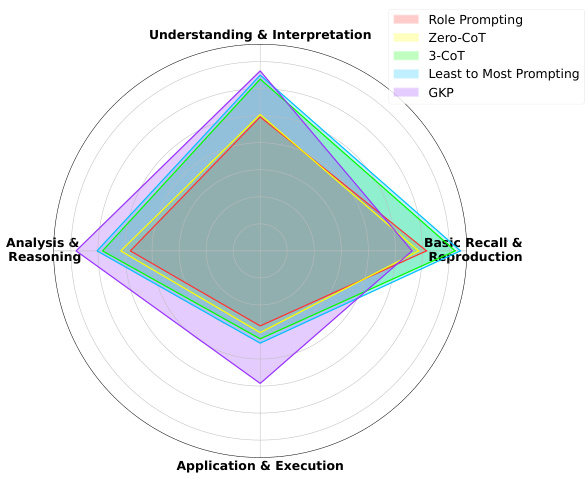

This study evaluated prompting strategies by assessing how GPT-4o, as the LLM judge, replicates the hierarchical complexity levels of these strategies using a systematic scoring approach across tasks. Figure 5 shows a consistent hierarchy with less variability than human judges, indicating a strong alignment between LLM and human judgment. These results validate the proposed framework and demonstrate the correspondence between human cognitive principles and LLM behavior. Figure 6 shows the scoring distribution across the four HPT rules for each strategy. Further details on dataset specifications and the scoring method are in Appendix C.

4.5 Parallels with System 1 and System 2 Thinking

HPF aligns closely with the principles of System 1 and System 2 thinking from dual-process cognitive theories (Booch et al. 2021). HPT categorizes tasks and HPF structures prompts based on their cognitive complexity, mirroring how humans allocate cognitive resources. For tasks with low cognitive demands, HPF employs simple prompts that parallel System 1 thinking. These tasks, like fact recall or basic classification, require minimal reasoning, allowing the LLM to respond quickly and efficiently without extensive computation. For instance, asking an LLM to "identify the capital of a country" is analogous to a person retrieving a familiar fact using System 1.

Figure 6: Scoring distribution for each of the four rules of the HPT for the prompting strategies in the HPF.

In contrast, tasks with high cognitive demands involve prompts that guide the LLM through complex reasoning, abstraction, or multi-step problem-solving—analogous to System 2 thinking. Examples include generating logical arguments or solving intricate problems, where deliberate and resource-intensive processes are necessary. Just as System 2 engages when a problem exceeds the capacity of System 1, higher levels of HPF are invoked for tasks requiring deeper analysis.

HPF explicitly measures this transition with HPI, assessing the cognitive load required for each task. By tailoring prompting strategies to task complexity, HPF optimizes LLM performance, much like humans adaptively switch between System 1 and System 2 based on the situation. This parallel highlights how HPT bridges computational strategies with human-like cognitive models, enabling more nuanced task evaluation and resource allocation.
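The escalation from simple to complex prompting described here can be sketched as a loop over ordered strategies. This is a minimal illustration under stated assumptions, not the authors' code: each strategy is modelled as a hypothetical callable that reports whether the task was solved, the per-sample HPI is taken to be the 1-based level of the first success, and an unsolved sample is assumed to receive the number of levels plus a `penalty` (Table 1 reports HPI values above five, which suggests some penalty beyond the top level, but the exact rule used here is an assumption).

```python
from statistics import mean
from typing import Callable, Sequence

# Hypothetical interface: a strategy returns True if the model's answer
# under that prompting strategy is judged correct for the given task.
Strategy = Callable[[str], bool]


def sample_hpi(task: str, strategies: Sequence[Strategy], penalty: float) -> float:
    """Escalate through the levels and return the first level that solves the task."""
    for level, solves in enumerate(strategies, start=1):
        if solves(task):
            return float(level)
    # Assumed rule for tasks that no level solves.
    return len(strategies) + penalty


def dataset_hpi(tasks: Sequence[str], strategies: Sequence[Strategy], penalty: float) -> float:
    """Average the per-sample HPI over a dataset."""
    return mean(sample_hpi(task, strategies, penalty) for task in tasks)


def make_toy_strategies(n_levels: int) -> list:
    """Toy stand-ins: level k 'solves' any task whose difficulty is at most k."""
    return [lambda task, k=k: int(task) <= k for k in range(1, n_levels + 1)]


toy_strategies = make_toy_strategies(5)
toy_tasks = ["1", "2", "9"]  # task strings encode a made-up difficulty
print(dataset_hpi(toy_tasks, toy_strategies, penalty=1.0))  # prints 3.0
```

In the toy run, the first two tasks are solved at levels 1 and 2, and the third is never solved, so it contributes 5 + 1.0 = 6.0, giving a mean of 3.0. A lower dataset-level value therefore means that simpler, System 1-like prompts were usually enough.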

Table 3: HPI (lower is better), BLEU score for IWSLT, and ROUGE-L score for SamSum, of LLMs with threshold

| Model | IWSLT HPI (0.15) | IWSLT HPI (0.20) | IWSLT BLEU (0.15) | IWSLT BLEU (0.20) | SamSum HPI (0.15) | SamSum HPI (0.20) | SamSum ROUGE-L (0.15) | SamSum ROUGE-L (0.20) |
|---|---|---|---|---|---|---|---|---|
| GPT-4o | 2.66 | 3.08 | 0.32 | 0.32 | 1.11 | 1.21 | 0.30 | 0.29 |
| Claude 3.5 Sonnet | 4.63 | 4.87 | 0.20 | 0.20 | 1.25 | 1.60 | 0.23 | 0.23 |
| Mistral-Nemo 12B | 2.87 | 3.40 | 0.27 | 0.27 | 1.19 | 1.47 | 0.23 | 0.24 |
| Gemma-2 9B | 4.40 | 4.75 | 0.21 | 0.20 | 1.30 | 1.86 | 0.22 | 0.22 |
| Llama-3 8B | 3.40 | 3.92 | 0.24 | 0.23 | 1.30 | 1.72 | 0.22 | 0.22 |
| Gemma 7B | 5.39 | 5.84 | 0.08 | 0.09 | 3.31 | 5.03 | 0.11 | 0.10 |
| Mistral 7B | 3.52 | 4.14 | 0.22 | 0.22 | 1.26 | 1.68 | 0.21 | 0.22 |

4.6 Adaptive HPF

The Adaptive HPF automates the selection of the optimal complexity level in the HPF using a prompt-selector (Llama 3 8B in a zero-shot setting), bypassing the iterative steps. Figure 7 shows that Adaptive HPF yields higher HPI but lower evaluation scores than the standard HPF. This suggests that Adaptive HPF struggles to select the optimal complexity level, likely due to hallucinations by the prompt-selector when choosing the prompting strategy. For more results and ablation studies, see Appendix E.
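A rough sketch of this selection step follows, assuming the prompt-selector is a callable that returns a single 1-based level and that only that level is attempted. The names `adaptive_sample_hpi` and `select_level`, the handling of out-of-range selections, and the `penalty` for failures are all hypothetical; the section does not specify how the authors treat a failed or invalid selection.

```python
from typing import Callable, Sequence

# Hypothetical interfaces (not from the paper's code):
Strategy = Callable[[str], bool]       # True if the task is solved at this level
Selector = Callable[[str, int], int]   # (task, number of levels) -> chosen 1-based level


def adaptive_sample_hpi(
    task: str,
    strategies: Sequence[Strategy],
    select_level: Selector,
    penalty: float,
) -> float:
    """Try only the level chosen by the selector instead of escalating level by level."""
    n_levels = len(strategies)
    level = select_level(task, n_levels)
    # A hallucinated, out-of-range level is treated as a failure (assumption).
    if not 1 <= level <= n_levels:
        return n_levels + penalty
    if strategies[level - 1](task):
        return float(level)
    return n_levels + penalty  # assumed rule: no retry at other levels


# Toy stand-ins: level k solves any task whose made-up difficulty is at most k.
toy_strategies = [lambda task, k=k: int(task) <= k for k in range(1, 6)]


def always_level_two(task: str, n_levels: int) -> int:
    """A deliberately naive selector that ignores the task."""
    return 2


print(adaptive_sample_hpi("1", toy_strategies, always_level_two, penalty=1.0))  # prints 2.0
print(adaptive_sample_hpi("3", toy_strategies, always_level_two, penalty=1.0))  # prints 6.0
```

The toy run shows the two failure modes a weak selector can introduce: overshooting on an easy task (level 2 where level 1 would have sufficed) raises the HPI, and undershooting on a harder task leaves it unsolved, which lowers the evaluation score. Both effects point in the direction reported for Figure 7.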

Figure 7: HPI of datasets for LLMs in Adaptive HPF.

5 Conclusion

The HPT provides a strong and efficient approach for assessing LLMs by focusing solely on the cognitive demands of different tasks. The results emphasize that the HPF is effective in evaluating diverse datasets, using the most cognitively effective prompting strategies tailored to task complexity, which results in improved LLM performance across multiple datasets. This method not only offers in-depth insight into an LLM's problem-solving abilities but also suggests that dynamically choosing suitable prompting strategies can enhance LLM performance, setting the stage for future improvements in LLM evaluation methods. This study paves the way for formulating and designing evaluation methods grounded in human cognitive principles, aligning them with the problem-solving capabilities of LLMs. Additionally, it facilitates the development of more robust benchmarks and in-context learning methodologies to effectively assess LLM performance across various tasks.

6 Ethical Statement

The $\mathrm{HPI}_{Dataset}$ values assigned by experts to the datasets (MMLU, GSM8k, HumanEval, BoolQ, CSQA, IWSLT, and SamSum) may introduce bias into the comparative analysis. This potential bias stems from the subjective nature of expert scoring, which can be influenced by individual experience and perspective. Despite this, the datasets utilized in this study are publicly available and widely recognized in the research community, thereby minimizing the risk of unanticipated ethical issues. Nevertheless, it is crucial to acknowledge the possibility of scoring bias to ensure transparency and integrity in the analysis.

References

参考文献

AI@Meta. 2024. Llama 3 Model Card. https://github.com/meta-llama/llama3/blob/main/MODEL_CARD.md.

Anderson, L.; Krathwohl, D.; Cruikshank, K.; Airasian, P.; Raths, J.; Pintrich, P.; Mayer, R.; and Wittrock, M. 2014. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's. Always learning. Pearson. ISBN 9781292042848.

Anthropic. 2024. Claude 3.5 Sonnet. https://www.anthropic.com/claude-3-5-sonnet. Accessed: 2024-09-16.

Bloom, B. 1956. Taxonomy of Educational Objectives: The Classification of Educational Goals. Number v. 1 in Taxonomy of Educational Objectives: The Classification of Educational Goals. Longmans, Green. ISBN 9780679302094.

Booch, G.; Fabiano, F.; Horesh, L.; Kate, K.; Lenchner, J.; Linck, N.; Loreggia, A.; Murgesan, K.; Mattei, N.; Rossi, F.; et al. 2021. Thinking fast and slow in AI. In Proceedings of

A Human Annotation and Judgement Policy

A.1 Human Annotation Policy

$\mathsf{H P I}_{D a t a