Delete, Retrieve, Generate: A Simple Approach to Sentiment and Style Transfer


Abstract


We consider the task of text attribute transfer: transforming a sentence to alter a specific attribute (e.g., sentiment) while preserving its attribute-independent content (e.g., changing “screen is just the right size” to “screen is too small”). Our training data includes only sentences labeled with their attribute (e.g., positive or negative), but not pairs of sentences that differ only in their attributes, so we must learn to disentangle attributes from attribute-independent content in an unsupervised way. Previous work using adversarial methods has struggled to produce high-quality outputs. In this paper, we propose simpler methods motivated by the observation that text attributes are often marked by distinctive phrases (e.g., “too small”). Our strongest method extracts content words by deleting phrases associated with the sentence’s original attribute value, retrieves new phrases associated with the target attribute, and uses a neural model to fluently combine these into a final output. On human evaluation, our best method generates grammatical and appropriate responses on 22% more inputs than the best previous system, averaged over three attribute transfer datasets: altering sentiment of reviews on Yelp, altering sentiment of reviews on Amazon, and altering image captions to be more romantic or humorous.

1 Introduction


The success of natural language generation (NLG) systems depends on their ability to carefully control not only the topic of produced utterances, but also attributes such as sentiment and style. The desire for more sophisticated, controllable NLG has led to increased interest in text attribute transfer, the task of editing a sentence to alter specific attributes, such as style, sentiment, and tense (Hu et al., 2017; Shen et al., 2017; Fu et al., 2018). In each of these cases, the goal is to convert a sentence with one attribute (e.g., negative sentiment) to one with a different attribute (e.g., positive sentiment), while preserving all attribute-independent content (e.g., what properties of a restaurant are being discussed). Typically, aligned sentences with the same content but different attributes are not available; systems must learn to disentangle attributes and content given only unaligned sentences labeled with attributes.

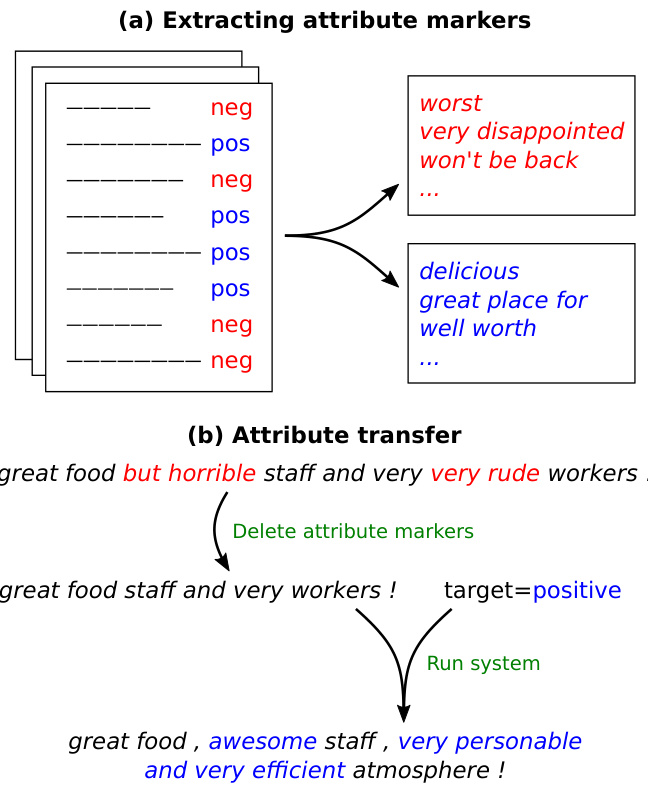

Figure 1: An overview of our approach. (a) We identify attribute markers from an unaligned corpus. (b) We transfer attributes by removing markers of the original attribute, then generating a new sentence conditioned on the remaining words and the target attribute.


Previous work has attempted to use adversarial networks (Shen et al., 2017; Fu et al., 2018) for this task, but—as we demonstrate—their outputs tend to be low-quality, as judged by human raters. These models are also difficult to train (Salimans et al., 2016; Arjovsky and Bottou, 2017; Bousmalis et al., 2017).


In this work, we propose a set of simpler, easier-to-train systems that leverage an important observation: attribute transfer can often be accomplished by changing a few attribute markers (words or phrases in the sentence that are indicative of a particular attribute) while leaving the rest of the sentence largely unchanged. Figure 1 shows an example in which the sentiment of a sentence can be altered by changing a few sentiment-specific phrases but keeping other words fixed.

With this intuition, we first propose a simple baseline that already outperforms prior adversarial approaches. Consider a sentiment transfer (negative to positive) task. First, from unaligned corpora of positive and negative sentences, we identify attribute markers by finding phrases that occur much more often within sentences of one attribute than the other (e.g., “worst” and “very disappointed” are negative markers). Second, given a sentence, we delete any negative markers in it, and regard the remaining words as its content. Third, we retrieve a sentence with similar content from the positive corpus.

We further improve upon this baseline by incorporating a neural generative model, as shown in Figure 1. Our neural system extracts content words in the same way as our baseline, then generates the final output with an RNN decoder that conditions on the extracted content and the target attribute. This approach has significant benefits at training time, compared to adversarial networks: having already separated content and attribute, we simply train our neural model to reconstruct sentences in the training data as an auto-encoder.

We test our methods on three text attribute transfer datasets: altering sentiment of Yelp reviews, altering sentiment of Amazon reviews, and altering image captions to be more romantic or humorous. Averaged across these three datasets, our simple baseline generated grammatical sentences with appropriate content and attribute 23% of the time, according to human raters; in contrast, the best adversarial method achieved only 12%. Our best neural system in turn outperformed our baseline, achieving an average success rate of 34%. Our code and data, including newly collected human reference outputs for the Yelp and Amazon domains, can be found at https://github.com/lijuncen/Sentiment-and-Style-Transfer.

2 Problem Statement


We assume access to a corpus of labeled sentences $\mathcal{D}=\{(x_{1},v_{1}),\ldots,(x_{m},v_{m})\}$, where $x_{i}$ is a sentence and $v_{i}\in\mathcal{V}$, the set of possible attributes (e.g., for sentiment, $\mathcal{V}=\{\text{“positive”},\text{“negative”}\}$). We define $\mathcal{D}_{v}=\{x:(x,v)\in\mathcal{D}\}$, the set of sentences in the corpus with attribute $v$. Crucially, we do not assume access to a parallel corpus that pairs sentences with different attributes and the same content.

Our goal is to learn a model that takes as input $(x,v^{\mathrm{tgt}})$ where $x$ is a sentence exhibiting source (original) attribute $v^{\mathrm{src}}$ , and $v^{\mathrm{tgt}}$ is the target attribute, and outputs a sentence $y$ that retains the content of $x$ while exhibiting $v^{\mathrm{tgt}}$ .


3 Approach


As a motivating example, suppose we wanted to change the sentiment of “The chicken was delicious.” from positive to negative. Here the word “delicious” is the only sentiment-bearing word, so we just need to replace it with an appropriate negative sentiment word. More generally, we find that the attribute is often localized to a small fraction of the words, an inductive bias not captured by previous work.


How do we know which negative sentiment word to insert? The key observation is that the remaining content words provide strong cues: given “The chicken was ...”, one can infer that a taste-related word like “bland” fits, but a word like “rude” does not, even though both have negative sentiment. In other words, while the deleted sentiment words do contain non-sentiment information too, this information can often be recovered using the other content words.

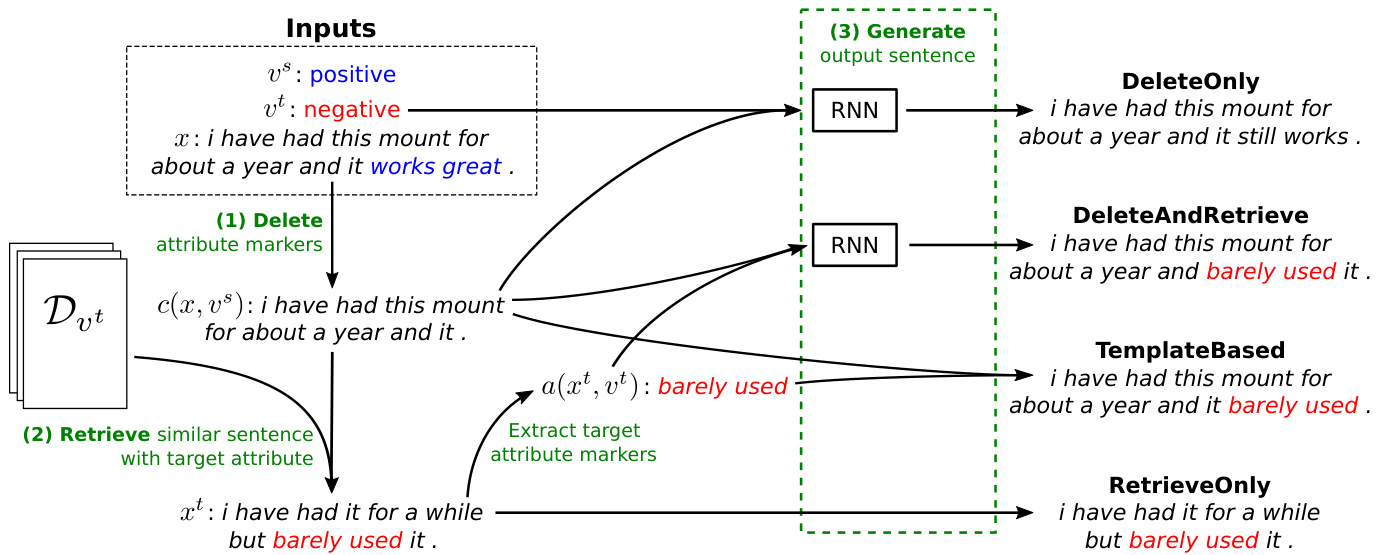

In the rest of this section, we describe our four systems: two baselines (RETRIEVE ONLY and TEMPLATE BASED) and two neural models (DELETEONLY and DELETE AND RETRIEVE). An overview of all four systems is shown in Figure 2. Formally, the main components of these systems are as follows:

在本节剩余部分,我们将描述四个系统:两个基线模型(RETRIEVE ONLY 和 TEMPLATE BASED)以及两个神经模型(DELETEONLY 和 DELETE AND RETRIEVE)。所有四个系统的概览如图 2 所示。这些系统的主要组件如下:

Figure 2: Our four proposed methods on the same sentence, taken from the AMAZON dataset. Every method uses the same procedure (1) to separate attribute and content by deleting attribute markers; they differ in the construction of the target sentence. RETRIEVE ONLY directly returns the sentence retrieved in (2). TEMPLATE BASED combines the content with the target attribute markers in the retrieved sentence by slot filling. DELETE AND RETRIEVE generates the output from the content and the retrieved target attribute markers with an RNN. DELETEONLY generates the output from the content and the target attribute with an RNN.

图 2: 我们在同一句子上提出的四种方法(取自AMAZON数据集)。所有方法都采用相同的步骤(1)通过删除属性标记来分离属性和内容,区别在于目标句子的构建方式。RETRIEVE ONLY直接返回步骤(2)检索到的句子。TEMPLATE BASED通过槽填充将内容与检索到的目标属性标记组合。DELETE AND RETRIEVE使用RNN根据内容和检索到的目标属性标记生成输出。DELETEONLY使用RNN根据内容和目标属性生成输出。

- Delete: All 4 systems use the same procedure to separate the words in $x$ into a set of attribute markers $a(x,v^{\mathrm{src}})$ and a sequence of content words $c(x,v^{\mathrm{src}})$ .


- Retrieve: 3 of the 4 systems look through the corpus and retrieve a sentence $x^{\mathrm{tgt}}$ that has the target attribute $v^{\mathrm{tgt}}$ and whose content is similar to that of $x$ .


- Generate: Given the content $c(x,v^{\mathrm{src}})$ , target attribute $v^{\mathrm{tgt}}$ , and (optionally) the retrieved sentence $x^{\mathrm{tgt}}$ , each system generates $y$ , either in a rule-based fashion or with a neural sequence-to-sequence model.


We describe each component in detail below.


3.1 Delete


We propose a simple method to delete attribute markers ($n$-grams) that have the most discriminative power. Formally, for any $v\in\mathcal{V}$, we define the salience of an $n$-gram $u$ with respect to $v$ by its (smoothed) relative frequency in $\mathcal{D}_{v}$:

$$
s(u,v)=\frac{\mathrm{count}(u,\mathcal{D}_{v})+\lambda}{\left(\sum_{v^{\prime}\in\mathcal{V},v^{\prime}\ne v}\mathrm{count}(u,\mathcal{D}_{v^{\prime}})\right)+\lambda},
$$

where $\mathrm{count}(u,\mathcal{D}_{v})$ denotes the number of times an $n$-gram $u$ appears in $\mathcal{D}_{v}$, and $\lambda$ is the smoothing parameter. We declare $u$ to be an attribute marker for $v$ if $s(u,v)$ is larger than a specified threshold $\gamma$. The attribute markers can be viewed as discriminative features for a Naive Bayes classifier.
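The salience score can be illustrated with a minimal Python sketch. The toy corpus, the helper names (`ngrams`, `count_ngrams`, `salience`, `attribute_markers`), the maximum n-gram length, and the values of $\lambda$ and $\gamma$ are all assumptions chosen for illustration, not settings taken from the paper.

```python
from collections import Counter

def ngrams(tokens, max_n):
    """Yield every n-gram (as a tuple) of length 1..max_n."""
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            yield tuple(tokens[i:i + n])

def count_ngrams(sentences, max_n=2):
    """count(u, D_v) for every n-gram u in the sentences of one attribute."""
    counts = Counter()
    for sentence in sentences:
        counts.update(ngrams(sentence.split(), max_n))
    return counts

def salience(u, v, counts, lam=1.0):
    """s(u, v): smoothed count in D_v over the summed counts in all other D_v'."""
    own = counts[v][u]
    other = sum(c[u] for attr, c in counts.items() if attr != v)
    return (own + lam) / (other + lam)

def attribute_markers(v, counts, lam=1.0, gamma=3.0):
    """All n-grams whose salience for attribute v exceeds the threshold gamma."""
    return {u for u in counts[v] if salience(u, v, counts, lam) > gamma}

# Toy corpus (hypothetical data, for illustration only).
corpus = {
    "positive": ["the food was delicious", "delicious food and great service",
                 "the staff was great", "great and delicious"],
    "negative": ["the food was bland", "the staff was rude",
                 "bland and rude", "rude staff and bland food"],
}
counts = {v: count_ngrams(sents, max_n=2) for v, sents in corpus.items()}
positive_markers = attribute_markers("positive", counts)
negative_markers = attribute_markers("negative", counts)
```

On this toy corpus, words such as "the" or "food" occur equally often under both attributes and receive a salience of 1, so only the sentiment-bearing unigrams pass the threshold.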

We define $a(x,v^{\mathrm{src}})$ to be the set of all source attribute markers in $x$, and define $c(x,v^{\mathrm{src}})$ as the sequence of words after deleting all markers in $a(x,v^{\mathrm{src}})$ from $x$. For example, for “The chicken was delicious,” we would delete “delicious” and consider “The chicken was ...” to be the content (Figure 2, Step 1).
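As a rough sketch of the delete step, the function below splits a tokenized sentence into the markers found and the remaining content words. The marker set is passed in as a hypothetical input, and preferring the longest marker at each position is an assumption of this sketch; the text above does not say how overlapping markers are resolved.

```python
def delete_markers(tokens, markers, max_n=4):
    """Split tokens into (attribute markers found, remaining content words).

    `markers` is a set of n-gram tuples. At each position the longest
    matching marker is removed (an assumption of this sketch).
    """
    found, content = [], []
    i = 0
    while i < len(tokens):
        for n in range(min(max_n, len(tokens) - i), 0, -1):
            candidate = tuple(tokens[i:i + n])
            if candidate in markers:
                found.append(candidate)
                i += n
                break
        else:
            # No marker starts here, so the word is treated as content.
            content.append(tokens[i])
            i += 1
    return found, content

# Hypothetical marker sets, for illustration only.
a1, c1 = delete_markers("the chicken was delicious".split(), {("delicious",)})
a2, c2 = delete_markers("the waiter was very rude".split(),
                        {("rude",), ("very", "rude")})
```

The markers are returned as a list in sentence order rather than as a set, which is convenient for the slot-filling and encoding steps described later.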

3.2 Retrieve


To decide what words to insert into $c(x,v^{\mathrm{src}})$, one useful strategy is to look at similar sentences with the target attribute. For example, negative sentences that use phrases similar to “The chicken was ...” are more likely to contain “bland” than “rude.” Therefore, we retrieve sentences of similar content and use target attribute markers in them for insertion.

Formally, we retrieve $x^{\mathrm{tgt}}$ according to:


$$
x^{\mathrm{tgt}}=\operatorname*{argmin}_{x^{\prime}\in\mathcal{D}_{v^{\mathrm{tgt}}}}d(c(x,v^{\mathrm{src}}),c(x^{\prime},v^{\mathrm{tgt}})),
$$

where $d$ may be any distance metric comparing two sequences of words. We experiment with two options: (i) TF-IDF weighted word overlap and (ii) Euclidean distance using the content embeddings in Section 3.3 (Figure 2, Step 2).

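The retrieval step can be sketched as a nearest-neighbor search over content words. The exact form of the TF-IDF weighted overlap is not given here, so the distance below (one minus an IDF-weighted Jaccard overlap) is only one plausible instantiation; the function names and the toy data are likewise assumptions.

```python
import math
from collections import Counter

def idf_weights(contents):
    """Smoothed inverse document frequency for every word in `contents`."""
    n = len(contents)
    df = Counter(w for c in contents for w in set(c))
    return {w: math.log(n / df[w]) + 1.0 for w in df}

def overlap_distance(c1, c2, idf):
    """1 minus the IDF-weighted Jaccard overlap of two content-word lists."""
    s1, s2 = set(c1), set(c2)
    union = sum(idf.get(w, 1.0) for w in s1 | s2)
    if union == 0:
        return 1.0  # both contents are empty
    shared = sum(idf.get(w, 1.0) for w in s1 & s2)
    return 1.0 - shared / union

def retrieve(content, target_contents, idf):
    """Index of the target-attribute sentence whose content is closest."""
    return min(range(len(target_contents)),
               key=lambda i: overlap_distance(content, target_contents[i], idf))

# Content of negative sentences after deleting their markers (toy data).
target_contents = [["the", "chicken", "was"],
                   ["the", "waiter", "was"],
                   ["the", "screen", "is"]]
idf = idf_weights(target_contents)
best = retrieve(["my", "chicken", "was"], target_contents, idf)
```

Here the rarer word "chicken" carries more weight than "the" or "was", so the query is matched to the first target sentence.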

3.3 Generate


Finally, we describe how each system generates $y$ (Figure 2, Step 3).


RETRIEVEONLY returns the retrieved sentence $x^{\mathrm{tgt}}$ verbatim. This is guaranteed to produce a grammatical sentence with the target attribute, but its content might not be similar to $x$.

TEMPLATEBASED replaces the attribute markers deleted from the source sentence $a(x,v^{\mathrm{src}})$ with those of the target sentence $a(x^{\mathrm{tgt}},v^{\mathrm{tgt}})$. This strategy relies on the assumption that if two attribute markers appear in similar contexts, they are roughly syntactically exchangeable. For example, “love” and “don’t like” appear in similar contexts (e.g., “i love this place.” and “i don’t like this place.”), and exchanging them is syntactically valid. However, this naive swapping of attribute markers can result in ungrammatical outputs.
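A minimal sketch of slot filling follows. How markers are paired when the source and retrieved sentences contain different numbers of them is not specified in this section; the sketch assumes left-to-right pairing, leaves a slot empty when the target markers run out, and drops any surplus target markers. The function name and inputs are hypothetical.

```python
def template_fill(tokens, source_markers, target_markers):
    """Replace each source marker in `tokens` with the next target marker.

    source_markers: set of n-gram tuples to delete from the sentence.
    target_markers: list of n-gram tuples from the retrieved sentence,
                    consumed left to right (an assumption of this sketch).
    """
    ordered = sorted(source_markers, key=len, reverse=True)  # longest first
    out, i, slot = [], 0, 0
    while i < len(tokens):
        for marker in ordered:
            if tuple(tokens[i:i + len(marker)]) == marker:
                if slot < len(target_markers):
                    out.extend(target_markers[slot])
                slot += 1
                i += len(marker)
                break
        else:
            out.append(tokens[i])
            i += 1
    return out

filled = template_fill("i love this place".split(),
                       {("love",)},
                       [("don't", "like")])
```

Because the swap ignores the surrounding syntax, a marker that needs a different function word or position in the sentence will yield an ungrammatical result, which is the weakness the neural systems aim to address.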

DELETEONLY first embeds the content $c(x,v^{\mathrm{src}})$ into a vector using an RNN. It then concatenates the final hidden state with a learned embedding for $v^{\mathrm{tgt}}$ , and feeds this into an RNN decoder to generate $y$ . The decoder attempts to produce words indicative of the source content and target attribute, while remaining fluent.


DELETEANDRETRIEVE is similar to DELETEONLY, but uses the attribute markers of the retrieved sentence $x^{\mathrm{tgt}}$ rather than the target attribute $v^{\mathrm{tgt}}$. Like DELETEONLY, it encodes $c(x,v^{\mathrm{src}})$ with an RNN. It then encodes the sequence of attribute markers $a(x^{\mathrm{tgt}},v^{\mathrm{tgt}})$ with another RNN. The RNN decoder uses the concatenation of this vector and the content embedding to generate $y$.

DELETEANDRETRIEVE combines the advantages of TEMPLATEBASED and DELETEONLY. Unlike TEMPLATEBASED, DELETEANDRETRIEVE can pick a better place to insert the given attribute markers, and can add or remove function words to ensure grammaticality. Compared to DELETEONLY, DELETEANDRETRIEVE has a stronger inductive bias towards using target attribute markers that are likely to fit in the current context. Guu et al. (2018) showed that retrieval strategies like ours can help neural generative models. Finally, DELETEANDRETRIEVE gives us finer control over the output; for example, we can control the degree of sentiment by deciding whether to add “good” or “fantastic” based on the retrieved sentence $x^{\mathrm{tgt}}$.

3.4 Training


We now describe how to train DELETEANDRETRIEVE and DELETEONLY. Recall that at training time, we do not have access to ground truth outputs that express the target attribute. Instead, we train DELETEONLY to reconstruct the sentences in the training corpus given their content and original attribute value by maximizing:


$$
L(\theta)=\sum_{(x,v^{\mathrm{src}})\in\mathcal{D}}\log p(x\mid c(x,v^{\mathrm{src}}),v^{\mathrm{src}};\theta).
$$

For DELETEANDRETRIEVE, we could similarly learn an auto-encoder that reconstructs $x$ from $c(x,v^{\mathrm{src}})$ and $a(x,v^{\mathrm{src}})$. However, this results in a trivial solution: because $a(x,v^{\mathrm{src}})$ and $c(x,v^{\mathrm{src}})$ are known to come from the same sentence, the model merely learns to stitch the two sequences together without any smoothing. Such a model would fare poorly at test time, when we may need to alter some words to fluently combine $a(x^{\mathrm{tgt}},v^{\mathrm{tgt}})$ with $c(x,v^{\mathrm{src}})$. To address this train/test mismatch, we adopt a denoising method similar to the denoising auto-encoder (Vincent et al., 2008). During training, we apply some noise to $a(x,v^{\mathrm{src}})$ by randomly altering each attribute marker in it independently with probability 0.1. Specifically, we replace an attribute marker with another randomly selected attribute marker of the same attribute and word-level edit distance 1 if such a noising marker exists, e.g., “was very rude” to “very rude”, which produces $a^{\prime}(x,v^{\mathrm{src}})$.

Therefore, the training objective for DELETEANDRETRIEVE is to maximize:

$$
L(\theta)=\sum_{(x,v^{\mathrm{src}})\in\mathcal{D}}\log p(x\mid c(x,v^{\mathrm{src}}),a^{\prime}(x,v^{\mathrm{src}});\theta).
$$
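The noising step that produces $a^{\prime}(x,v^{\mathrm{src}})$ can be sketched as follows. The function names, the representation of markers as word tuples, and the toy marker vocabulary are assumptions; only the probability 0.1 and the word-level edit distance of 1 come from the description above.

```python
import random

def word_edit_distance(a, b):
    """Word-level Levenshtein distance between two token tuples."""
    prev = list(range(len(b) + 1))
    for i, wa in enumerate(a, 1):
        cur = [i]
        for j, wb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # delete wa
                           cur[j - 1] + 1,              # insert wb
                           prev[j - 1] + (wa != wb)))   # substitute
        prev = cur
    return prev[-1]

def noise_markers(markers, same_attribute_markers, p=0.1, rng=random):
    """Independently replace each marker, with probability p, by a random
    marker of the same attribute at word-level edit distance 1 (if any)."""
    noised = []
    for m in markers:
        candidates = [u for u in same_attribute_markers
                      if word_edit_distance(m, u) == 1]
        if candidates and rng.random() < p:
            noised.append(rng.choice(candidates))
        else:
            noised.append(m)
    return noised

# Hypothetical negative-marker vocabulary, for illustration only.
negative_vocab = [("very", "rude"), ("terrible",)]
original = [("was", "very", "rude")]
always = noise_markers(original, negative_vocab, p=1.0)
never = noise_markers(original, negative_vocab, p=0.0)
```

Setting `p=1.0` or `p=0.0` makes the behavior deterministic for inspection; during training the value 0.1 would be used.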

4 Experiments


We evaluated our approach on three domains: flipping sentiment of Yelp reviews (YELP) and Amazon reviews (AMAZON), and changing image captions to be romantic or humorous (CAPTIONS). We compared our four systems to human references and three previously published adversarial approaches. As judged by human raters, both of our baselines outperform all three adversarial methods. Moreover, DELETEANDRETRIEVE outperforms all other automatic approaches.

Table 1: Dataset statistics.


| Dataset | Attribute | Train | Dev | Test |
|---|---|---|---|---|
| YELP | Positive | 270K | 2000 | 500 |
| YELP | Negative | 180K | 2000 | 500 |
| CAPTIONS | Romantic | 6000 | 300 | 0 |
| CAPTIONS | Humorous | 6000 | 300 | 0 |
| CAPTIONS | Factual | 0 | 0 | 300 |
| AMAZON | Positive | 277K | 985 | 500 |
| AMAZON | Negative | 278K | 1015 | 500 |

4.1 Datasets


First, we describe the three datasets we use, all of which are also commonly used in prior work. All datasets are randomly split into train, development, and test sets (Table 1).

YELP Each example is a sentence from a business review on Yelp, and is labeled as having either positive or negative sentiment.


AMAZON Similar to YELP, each example is a sentence from a product review on Amazon, and is labeled as having either positive or negative sentiment (He and McAuley, 2016).


CAPTIONS In the CAPTIONS dataset (Gan et al., 2017), each example is a sentence that describes an image, and is labeled as either factual, romantic, or humorous. We focus on the task of converting factual sentences into romantic and humorous ones. Unlike YELP and AMAZON, CAPTIONS is actually an aligned corpus—it contains captions for the same image in different styles. Our systems do not use these alignments, but we use them as gold references for evaluation.


CAPTIONS is also unique in that we reconstruct romantic and humorous sentences during training, whereas at test time we are given factual captions. We assume these factual captions carry only content, and therefore do not look for and delete factual attribute markers; the model essentially only inserts romantic or humorous attribute markers as appropriate.

4.2 Human References


To supply human reference outputs to which we could compare the system outputs for YELP and AMAZON, we hired crowd workers on Amazon Mechanical Turk to write gold outputs for all test sentences. Workers were instructed to edit a sentence to flip its sentiment while preserving its content.


Our delete-retrieve-generate approach relies on the prior knowledge that to accomplish attribute transfer, a small number of attribute markers should be changed, and most other words should be kept the same. We analyzed our human reference data to understand the extent to which humans follow this pattern. We measured whether humans preserved words our system marks as content, and changed words our system marks as attribute-related (Section 3.1). We define the content word preservation rate $S_{c}$ as the average fraction of words our system marks as content that were preserved by humans, and the attribute-related word change rate $S_{a}$ as the average fraction of words our system marks as attribute-related that were changed by humans:

$$
\begin{aligned}
S_{c}&=\frac{1}{|\mathcal{D}_{\mathrm{test}}|}\sum_{(x,v^{\mathrm{src}},y^{*})\in\mathcal{D}_{\mathrm{test}}}\frac{|c(x,v^{\mathrm{src}})\cap y^{*}|}{|c(x,v^{\mathrm{src}})|},\\
S_{a}&=1-\frac{1}{|\mathcal{D}_{\mathrm{test}}|}\sum_{(x,v^{\mathrm{src}},y^{*})\in\mathcal{D}_{\mathrm{test}}}\frac{|a(x,v^{\mathrm{src}})\cap y^{*}|}{|a(x,v^{\mathrm{src}})|},
\end{aligned}
$$

where $\mathcal{D}_{\mathrm{test}}$ is the test set, $y^{*}$ is the human reference sentence, and $|\cdot|$ denotes the number of non-stopwords. Higher values of $S_{c}$ and $S_{a}$ indicate that humans preserve content words and change attribute-related words, in line with the inductive bias of our model. $S_{c}$ is 0.61, 0.71, and 0.50 on YELP, AMAZON, and CAPTIONS, respectively; $S_{a}$ is 0.72 on YELP and 0.54 on AMAZON (not applicable on CAPTIONS).
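The two rates can be computed with a short Python sketch. It treats each sentence as a set of word types, takes the stopword list as a hypothetical input, and skips examples whose denominator would be zero; these choices are assumptions, as are the toy examples.

```python
def preservation_rates(examples, stopwords=frozenset()):
    """Return (S_c, S_a) for (content, marker_words, reference) word lists."""
    sc_terms, sa_terms = [], []
    for content, marker_words, reference in examples:
        ref = set(reference) - stopwords
        c = set(content) - stopwords
        a = set(marker_words) - stopwords
        if c:  # fraction of content words the human kept
            sc_terms.append(len(c & ref) / len(c))
        if a:  # fraction of attribute words the human kept
            sa_terms.append(len(a & ref) / len(a))
    s_c = sum(sc_terms) / len(sc_terms)
    s_a = 1.0 - sum(sa_terms) / len(sa_terms)
    return s_c, s_a

# Toy examples (hypothetical data): system content words, system attribute
# words, and the words of the human reference.
examples = [
    (["the", "chicken", "was"], ["delicious"],
     ["the", "chicken", "was", "bland"]),
    (["whole", "experience"], ["below", "average"],
     ["experience", "above", "average"]),
]
s_c, s_a = preservation_rates(examples, stopwords=frozenset({"the", "was"}))
```

In the second example the human dropped "whole" and kept "average", so each rate falls from 1.0 to 0.75, mirroring the kinds of deviation analyzed below.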

To understand why humans sometimes deviated from the inductive bias of our model, we randomly sampled 50 cases from YELP where humans changed a content word or preserved an attribute-related word. Of the changed content words, 70% were unimportant words (e.g., “whole” was deleted from “whole experience”), and another 18% were paraphrases (e.g., “charge” became “price”); the remaining 12% were errors where the system mislabeled an attribute-related word as a content word (e.g., “old” became “new”). Of the preserved attribute-related words, 84% did pertain to sentiment but remained fixed due to changes in the surrounding context (e.g., “don’t like” became “like”, and “below average” became “above average”); the remaining 16% were mistagged by our system as being attribute-related (e.g., “walked out”).

4.3 Previous Methods


We compare with three previous models, all of which use adversarial training. STYLEEMBEDDING (Fu et al., 2018) learns a vector encoding of the source sentence such that a decoder can use it to reconstruct the sentence, but a discriminator, which tries to identify the source attribute using this encoding, fails. They use a basic MLP discriminator and an LSTM decoder. MULTIDECODER (Fu et al., 2018) is similar to STYLEEMBEDDING, except that it uses a different decoder for each attribute value. CROSSALIGNED (Shen et al., 2017) also encodes the source sentence into a vector, but the discriminator looks at the hidden states of the RNN decoder instead. The system is trained so that the discriminator cannot distinguish these hidden states from those obtained by forcing the decoder to output real sentences from the target domain; this objective encourages the real and generated target sentences to look similar at a population level.

Table 2: Human evaluation results on all three datasets. We show average human ratings for grammaticality (Gra), content preservation (Con), and target attribute match (Att) on a 1 to 5 Likert scale, as well as overall success rate (Suc). On all three datasets, DELETEANDRETRIEVE is the best overall system, and all four of our methods outperform previous work.

| System | YELP Gra | YELP Con | YELP Att | YELP Suc | AMAZON Gra | AMAZON Con | AMAZON Att | AMAZON Suc | CAPTIONS Gra | CAPTIONS Con | CAPTIONS Att | CAPTIONS Suc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CROSSALIGNED | 2.8 | 2.9 | 3.5 | 14% | 3.2 | 2.5 | 2.9 | 7% | 3.9 | 2.0 | 3.2 | 16% |
| STYLEEMBEDDING | 3.5 | 3.7 | 2.1 | 9% | 3.2 | 2.9 | 2.8 | 11% | 3.3 | 2.9 | 3.0 | 17% |
| MULTIDECODER | 2.8 | 3.1 | 3.0 | 8% | 3.0 | 2.6 | 2.8 | 7% | 3.4 | 2.8 | 3.2 | 18% |
| RETRIEVEONLY | 4.2 | 2.7 | 4.2 | 25% | 3.8 | 2.8 | 3.1 | 17% | 4.2 | 2.6 | 3.8 | 27% |
| TEMPLATEBASED | 3.0 | 3.9 | 3.9 | 21% | 3.4 | 3.6 | 3.1 | 19% | 3.3 | 4.1 | 3.5 | 33% |
| DELETEONLY | 3.0 | 3.7 | 3.9 | 24% | 3.7 | 3.8 | 3.2 | 24% | 3.6 | 3.5 | 3.5 | 32% |
| DELETEANDRETRIEVE | 3.3 | 3.7 | 4.0 | 29% | 3.9 | 3.7 | 3.4 | 29% | 3.8 | 3.5 | 3.9 | 43% |
| Human | 4.6 | 4.5 | 4.5 | 75% | 4.2 | 4.0 | 3.7 | 44% | 4.3 | 3.9 | 4.0 | 56% |
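As a quick arithmetic check, the averaged success rates quoted in the introduction can be recomputed from the Suc columns of Table 2. The snippet below is only a worked calculation; the dictionary layout and variable names are illustrative.

```python
# Success rates (%) on YELP, AMAZON, CAPTIONS, copied from Table 2.
success = {
    "CROSSALIGNED": (14, 7, 16),
    "STYLEEMBEDDING": (9, 11, 17),
    "MULTIDECODER": (8, 7, 18),
    "RETRIEVEONLY": (25, 17, 27),
    "TEMPLATEBASED": (21, 19, 33),
    "DELETEONLY": (24, 24, 32),
    "DELETEANDRETRIEVE": (29, 29, 43),
}
averages = {name: sum(rates) / len(rates) for name, rates in success.items()}

adversarial = ("CROSSALIGNED", "STYLEEMBEDDING", "MULTIDECODER")
best_adversarial = max(averages[name] for name in adversarial)
best_ours = averages["DELETEANDRETRIEVE"]
gap = round(best_ours) - round(best_adversarial)

for name, avg in averages.items():
    print(f"{name:18s} {avg:5.1f}%")
```

After rounding, RETRIEVEONLY averages 23%, the best adversarial system 12%, and DELETEANDRETRIEVE 34%, which matches the figures in the introduction and the 22-point gap stated in the abstract.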

4.4 Experimental Details


For our methods, we use 128-dimensional word vectors and a single-layer GRU with 512 hidden units for both encoders and the decoder. We use the maxout activation function (Goodfellow et al., 2013). All parameters are initialized by sampling from a uniform distribution between $-0.1$ and 0.1. For optimization, we use Adadelta (Zeiler, 2012) with a minibatch size of 256.


For attribute marker extraction, we cons