End-to-End Spatio-Temporal Action Localisation with Video Transformers

基于视频Transformer的端到端时空动作定位

Abstract

摘要

The most performant spatio-temporal action localisation models use external person proposals and complex external memory banks. We propose a fully end-to-end, purely transformer-based model that directly ingests an input video and outputs tubelets, i.e. a sequence of bounding boxes and the action classes at each frame. Our flexible model can be trained with either sparse bounding-box supervision on individual frames or full tubelet annotations, and in both cases it predicts coherent tubelets as the output. Moreover, our end-to-end model requires no additional pre-processing in the form of proposals, or post-processing in terms of non-maximal suppression. We perform extensive ablation experiments, and significantly advance the state-of-the-art results on four different spatio-temporal action localisation benchmarks with both sparse keyframes and full tubelet annotations.

性能最优的时空动作定位模型通常依赖外部人物提议框和复杂的外部记忆库。我们提出了一种完全端到端、纯Transformer架构的模型,可直接输入视频并输出管状体(tubelet)——即一系列边界框序列及每帧对应的动作类别。该灵活模型既支持基于单帧稀疏边界框标注的训练,也可利用完整管状体标注进行训练,且两种情况下均能预测出连贯的管状体输出。此外,我们的端到端模型既不需要提议框等预处理步骤,也无需非极大值抑制等后处理操作。通过大量消融实验,我们在四个时空动作定位基准测试(包含稀疏关键帧标注和完整管状体标注两种设置)上显著提升了当前最优性能。

1. Introduction

1. 引言

Spatio-temporal action localisation is an important problem with applications in advanced video search engines, robotics and security among others. It is typically formulated in one of two ways: either predicting the bounding boxes and actions performed by an actor at a single keyframe, given neighbouring frames as spatio-temporal context [18, 29], or predicting a sequence of bounding boxes and actions (i.e. “tubes”) for each actor at each frame in the video [22, 48].

时空动作定位是一个重要问题,在高级视频搜索引擎、机器人和安全等领域有广泛应用。它通常以两种方式之一进行建模:首先,在给定相邻帧作为时空上下文的情况下,预测单个关键帧中行为者的边界框和执行的动作 [18, 29];或者,为视频中每一帧的每个行为者预测一系列边界框和动作(即"管道")[22, 48]。

The most performant models [3, 14, 39, 61], particularly for the first, keyframe-based formulation of the problem, employ a two-stage pipeline inspired by the Fast-RCNN object detector [17]: They first run a separate person detector to obtain proposals. Features from these proposals are then aggregated and classified according to the actions of interest. These models have also been supplemented with memory banks containing long-term contextual information from other frames [39, 54, 60, 61], and/or detections of other potentially relevant objects [2, 54] to capture additional scene context, achieving state-of-the-art results.

性能最佳的模型 [3, 14, 39, 61](特别是针对基于关键帧的第一种问题表述方案)采用了受 Fast-RCNN 目标检测器 [17] 启发的两阶段流程:首先运行独立的人物检测器获取候选区域,随后聚合这些区域的特征并根据目标动作进行分类。这些模型还通过添加存储长期上下文信息的记忆库 [39, 54, 60, 61] 和/或检测其他潜在相关对象 [2, 54] 来捕获额外场景上下文,从而实现了最先进的性能。

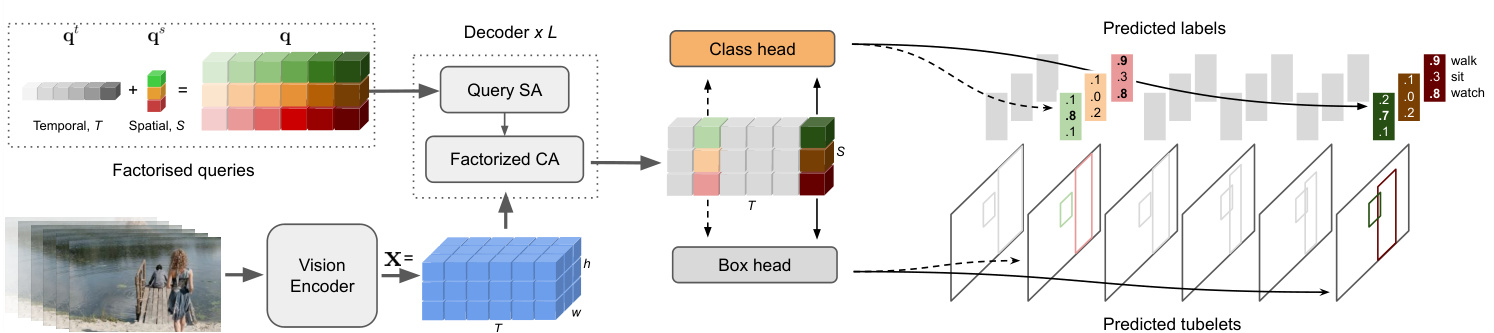

Figure 1. We propose an end-to-end Spatio-Temporal Action Recognition model named STAR. Our model is end-to-end in that it does not require any external region proposals to predict tubelets – sequences of bounding boxes associated with a given person in every frame and their corresponding action classes. Our model can be trained with either sparse box annotations on selected keyframes, or full tubelet supervision.

图 1: 我们提出了一种名为STAR的端到端时空动作识别模型。该模型的端到端特性体现在无需任何外部区域提议即可预测管段(tubelets)——即视频每帧中与特定人物关联的边界框序列及其对应动作类别。我们的模型既可通过关键帧稀疏框标注进行训练,也可采用完整管段监督方式。

Whilst proposal-free algorithms, which do not require external person detectors, have been developed for detection at both the keyframe level [8, 26, 52] and the tubelet level [23, 65], their performance has typically lagged behind their proposal-based counterparts. Here, we show for the first time that an end-to-end trainable spatio-temporal model outperforms a two-stage approach.

虽然无需外部人物检测器的无提案算法 (proposal-free algorithms) 已在关键帧级别 [8, 26, 52] 和短管级别 [23, 65] 实现双模态检测,但其性能通常落后于基于提案的算法。本文首次证明端到端可训练的时空模型性能优于两阶段方法。

As shown in Fig. 1, we propose our Spatio-Temporal Action TransformeR (STAR), which consists of a pure-transformer architecture and is based on the DETR [6] detection model. Our model is “end-to-end” in that it requires neither pre-processing in the form of proposals nor post-processing in the form of non-maximal suppression (NMS), in contrast to the majority of prior work. The initial stage of the model is a vision encoder. This is followed by a decoder that processes learned latent queries, which represent each actor in the video, into output tubelets, i.e. a sequence of bounding boxes and action classes at each time step of the input video clip. Our model is versatile in that we can train it with either fully-labelled tube annotations, or with sparse keyframe annotations (when only a limited number of keyframes are labelled). In the latter case, our network still predicts tubelets, and learns to associate detections of an actor from one frame to the next without explicit supervision. This behaviour is facilitated by our formulation of factorised queries, decoder architecture and tubelet matching in the loss, which all contain temporal inductive biases.

如图 1 所示,我们提出了时空动作 Transformer (Spatio-Temporal Action TransformeR, STAR) ,该模型采用纯 Transformer 架构,并基于 DETR [6] 检测模型构建。我们的模型是"端到端"的,与大多数现有工作不同,它既不需要以候选框形式进行预处理,也不需要通过非极大值抑制 (NMS) 进行后处理。模型的第一阶段是视觉编码器,随后解码器会将学习到的潜在查询 (latent queries) 处理为输出管段 (tubelets) —— 即输入视频片段每个时间步的边界框序列和动作类别。我们的模型具有通用性,既可以使用完整标注的管段数据进行训练,也可以使用稀疏关键帧标注 (仅标记少量关键帧) 进行训练。在后一种情况下,我们的网络仍能预测管段,并学会在没有显式监督的情况下将演员的检测结果在帧间关联起来。这种能力得益于我们对分解查询 (factorised queries) 的表述、解码器架构以及损失函数中的管段匹配机制,这些设计都包含了时序归纳偏置。

We conduct thorough ablation studies of these modelling choices. Informed by these experiments, we achieve state-of-the-art results on both keyframe-based action localisation datasets like AVA [18] and AVA-Kinetics [29], and also tubelet-based datasets like UCF101-24 [48] and JHMDB [22]. In particular, we achieve a Frame mAP of 44.6 on AVA-Kinetics, outperforming previous published work [39] by 8.2 points, and a recent foundation model [58] by 2.1 points. In addition, our Video AP50 on UCF101-24 surpasses prior work [65] by 11.6 points. Moreover, our state-of-the-art results are achieved with a single forward pass through the model, using only a video clip as input, and without any separate external person detectors providing proposals [3, 58, 61], complex memory banks [39, 61, 65], or additional object detectors [2, 54], as used by the prior state-of-the-art. Furthermore, we outperform these complex, prior, state-of-the-art two-stage models whilst also having additional functionality in that our model predicts tubelets, that is, temporally consistent bounding boxes at each frame of the input video clip.

我们对这些建模选择进行了全面的消融研究。通过这些实验,我们在基于关键帧的动作定位数据集(如 AVA [18] 和 AVA-Kinetics [29])以及基于管状片段的数据集(如 UCF101-24 [48] 和 JHMDB [22])上均取得了最先进的成果。具体而言,我们在 AVA-Kinetics 上实现了 44.6 的帧 mAP,比之前发表的工作 [39] 高出 8.2 分,比近期的基础模型 [58] 高出 2.1 分。此外,我们在 UCF101-24 上的 Video AP50 比之前的工作 [65] 高出 11.6 分。更重要的是,我们的最先进成果仅需对模型进行一次前向传播,仅使用视频片段作为输入,无需任何单独的外部人物检测器提供提议 [3, 58, 61]、复杂的记忆库 [39, 61, 65] 或额外的物体检测器 [2, 54],而这些在之前的最先进方法中均有使用。此外,我们的模型不仅超越了这些复杂的、之前最先进的两阶段模型,还具备额外的功能——能够预测管状片段,即在输入视频片段的每一帧上生成时间一致的目标框。

2. Related Work

2. 相关工作

Models for spatio-temporal action localisation have typically built upon advances in object detectors for images. The most performant methods for action localisation [3, 14, 39, 54, 61] are based on “two-stage” detectors like Fast-RCNN [17]. These models use external, pre-computed person detections, and use them to ROI-pool features which are then classified into action classes. Although these models are cumbersome in that they require an additional model and backbone to first detect people, and therefore additional detection training data as well, they are currently the leading approaches on datasets such as AVA [18]. Such models using external proposals are also particularly suited to datasets such as AVA [18] as each person is exhaustively labelled as performing an action, and therefore there are fewer false-positives from using action-agnostic person detections compared to datasets such as UCF101 [48].

时空动作定位模型通常建立在图像目标检测技术进展的基础上。当前性能最优的动作定位方法 [3, 14, 39, 54, 61] 都基于 FastRCNN [17] 等"两阶段"检测器。这些模型使用外部预计算的人物检测框进行 ROI (Region of Interest) 特征池化,再将特征分类为动作类别。尽管这类模型需要额外的人物检测模型和骨干网络,还需额外的检测训练数据,但目前在 AVA [18] 等数据集上仍保持领先优势。使用外部候选框的模型特别适合 AVA [18] 这类数据集,因为其中每个人都标注了执行动作,相比 UCF101 [48] 等数据集,使用动作无关的人物检测框产生的误报更少。

The performance of these two-stage models has further been improved by incorporating more contextual information using feature banks extracted from additional frames in the video [39, 54, 60, 61] or by utilising detections of additional objects in the scene [2, 5, 57, 64]. Both of these cases require significant additional computation and complexity to train additional auxiliary models and to precompute features from them that are then used during training and inference of the localisation model.

通过利用从视频额外帧中提取的特征库 [39, 54, 60, 61] 或利用场景中其他物体的检测结果 [2, 5, 57, 64] 来融入更多上下文信息,这类两阶段模型的性能得到了进一步提升。这两种情况都需要大量额外计算和复杂度,以训练额外的辅助模型并预计算其特征,这些特征随后用于定位模型的训练和推理。

Our proposed method, in contrast, is end-to-end in that it directly produces detections without any additional inputs besides a video clip. Moreover, it outperforms these prior works without resorting to external proposals or memory banks, showing that a transformer backbone is sufficient to capture long-range dependencies in the input video. In addition, unlike previous two-stage methods, our method directly predicts tubelets: a sequence of bounding boxes and actions for each frame of the input video, and can do so even when we do not have full tubelet annotations available.

相比之下,我们提出的方法是端到端的,它仅需输入视频片段即可直接生成检测结果。此外,该方法无需依赖外部提案或记忆库就能超越先前工作,这表明Transformer主干网络足以捕捉输入视频中的长程依赖关系。与以往两阶段方法不同,我们的方法能直接预测小管段(tubelets):为输入视频每一帧生成边界框序列及对应动作,且即使在没有完整小管段标注的情况下也能实现这一目标。

A number of proposal-free action localisation models have also been developed [8, 16, 23, 26, 52, 65]. These methods are based upon alternative object detection architectures such as SSD [34], CentreNet [66], YOLO [41], DETR [6] and Sparse-RCNN [53]. However, in contrast to our approach, they have been outperformed by their proposal-based counterparts. Moreover, some of these methods [16, 26, 52] also consist of separate network backbones for learning video feature representations and proposals for a keyframe, and are thus effectively two networks trained jointly, and cannot predict tubelets either.

已开发出多种无提议(proposal-free)动作定位模型[8, 16, 23, 26, 52, 65]。这些方法基于替代性目标检测架构,如SSD[34]、CentreNet[66]、YOLO[41]、DETR[6]和Sparse-RCNN[53]。但与我们的方法相比,它们的性能始终不及基于提议(proposal-based)的对应方法。此外,其中部分方法[16, 26, 52]仍采用独立网络主干来学习视频特征表示和关键帧提议,本质上仍是联合训练的两个独立网络,同样无法预测管状片段(tubelets)。

Among prior works that do not use external proposals, and also directly predict tubelets [23, 30, 31, 46, 47, 65], our work is the most similar to TubeR [65] given that our model is also based on DETR. Our model, however, is purely transformer-based (including the encoder) and achieves substantially higher performance without requiring external memory banks precomputed offline like [65]. Furthermore, unlike TubeR, we also demonstrate how our model can predict tubelets (i.e. predictions at every frame of the input video), even when we only have sparse keyframe supervision (i.e. ground truth annotation for a limited number of frames) available.

在不使用外部提案并直接预测视频片段 (tubelet) 的先前研究中 [23, 30, 31, 46, 47, 65],我们的工作与 TubeR [65] 最为相似,因为我们的模型同样基于 DETR (Detection Transformer) 。然而,我们的模型完全基于 Transformer (包括编码器) ,且无需像 [65] 那样依赖离线预计算的外部记忆库即可实现显著更高的性能。此外,与 TubeR 不同,我们还证明了即使在仅有稀疏关键帧监督 (即仅对有限帧提供真实标注) 的情况下,我们的模型仍能预测视频片段 (即对输入视频的每一帧进行预测) 。

Finally, we note that DETR has also been extended as a proposal-free method to address different localisation tasks in video such as video instance segmentation [59], temporal localisation [35, 38, 62] and moment retrieval [28].

最后,我们注意到DETR也被扩展为一种无提案方法,用于解决视频中的不同定位任务,如视频实例分割 [59]、时序定位 [35,38,62] 和片段检索 [28]。

3. Spatio-Temporal Action Transformer

3. 时空动作Transformer

Our proposed model ingests a sequence of video frames, and directly predicts tubelets (a sequence of bounding boxes and action labels). In contrast to leading spatio-temporal action recognition models, our model does not use external person detections [3, 39, 55, 61] or external memory banks [39, 60, 65] to achieve strong results.

我们提出的模型接收一系列视频帧,并直接预测小管序列(一组边界框和动作标签)。与领先的时空动作识别模型不同,我们的模型无需借助外部人物检测 [3, 39, 55, 61] 或外部记忆库 [39, 60, 65] 即可取得优异效果。

As summarised in Fig. 2, our model consists of a vision encoder (Sec. 3.1), followed by a decoder which processes learned query tokens into output tubelets (Sec. 3.2). We incorporate temporal inductive biases into our decoder to improve accuracy and tubelet prediction with weaker supervision. Our model is inspired by the DETR architecture [6] for object detection in images, and is also trained with a setbased loss and Hungarian matching. We detail our loss, and how we can train with either sparse keyframe supervision or full tubelet supervision, in Sec. 3.3.

如图 2 所示,我们的模型由视觉编码器 (Sec. 3.1) 和将学习到的查询 token 处理为输出管状片段 (Sec. 3.2) 的解码器组成。我们在解码器中引入时间归纳偏置,以提升弱监督下的准确性和管状片段预测能力。该模型受 DETR [6] 图像目标检测架构启发,同样采用基于集合的损失函数和匈牙利匹配进行训练。我们在 Sec. 3.3 详细阐述了损失函数设计,以及如何通过稀疏关键帧监督或完整管状片段监督进行训练。

Figure 2. Our model processes a fixed-length video clip, and for each frame, outputs tubelets (i.e. linked bounding boxes with associated action class probabilities). It consists of a transformer-based vision encoder which outputs a video representation, $\mathbf{x}\in\mathbb{R}^{T\times h\times w\times d}$ . The video representation, along with learned queries, $\mathbf{q}$ (which are factorised into spatial ${\bf q}^{s}$ and temporal components $\mathbf{q}^{t}$ ) are decoded into tubelets by a decoder of $L$ layers followed by shallow box and class prediction heads.

图 2: 我们的模型处理固定长度的视频片段,并为每帧输出管状检测区域 (即带有关联动作类别概率的链接边界框)。该模型包含基于Transformer的视觉编码器,可输出视频表征 $\mathbf{x}\in\mathbb{R}^{T\times h\times w\times d}$。视频表征与学习到的查询向量 $\mathbf{q}$ (分解为空间分量 ${\bf q}^{s}$ 和时间分量 $\mathbf{q}^{t}$) 通过 $L$ 层解码器解码为管状检测区域,最后经过浅层框预测头和类别预测头。

3.1. Vision Encoder

3.1. 视觉编码器

The vision backbone processes an input video, $\mathbf{X}\in\mathbb{R}^{T\times H\times W\times 3}$, to produce a feature representation of the input video, $\mathbf{x}\in\mathbb{R}^{t\times h\times w\times d}$. Here, $T$, $H$ and $W$ are the original temporal-, height- and width-dimensions of the input video respectively, whilst $t$, $h$ and $w$ are the spatio-temporal dimensions of their feature representation, and $d$ its latent dimension. As we use a transformer, specifically the ViViT Factorised Encoder [1], these spatio-temporal dimensions depend on the patch size when tokenising the input. To retain spatio-temporal information, we remove the spatial- and temporal-aggregation steps in the original transformer backbone. If the temporal patch size is larger than 1, we bilinearly upsample the final feature map along the temporal axis to maintain the original temporal resolution.

视觉主干网络处理输入视频 $\mathbf{X}\in\mathbb{R}^{T\times H\times W\times 3}$,生成输入视频的特征表示 $\mathbf{x}\in\mathbb{R}^{t\times h\times w\times d}$。其中,$T$、$H$ 和 $W$ 分别表示输入视频的原始时间、高度和宽度维度,而 $t$、$h$ 和 $w$ 是其特征表示的时空维度,$d$ 为潜在维度。由于我们采用 Transformer (具体为 ViViT Factorised Encoder [1]),这些时空维度取决于输入 Token 化时的分块大小。为保留时空信息,我们移除了原始 Transformer 主干中的空间与时间聚合步骤。若时间分块大小超过 1,则沿时间轴对最终特征图进行双线性上采样以维持原始时间分辨率。
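The dependence of the feature-map size on the patch size can be illustrated with a small shape calculation. This is only a sketch under assumed settings: non-overlapping patches, and hypothetical values for the clip size, patch size and latent dimension that are not taken from the paper. The function name `feature_shape` is likewise illustrative.

```python
def feature_shape(T, H, W, patch_t, patch_hw, d):
    """Return (t, h, w, d) for non-overlapping patches of size patch_t x patch_hw x patch_hw."""
    if T % patch_t or H % patch_hw or W % patch_hw:
        raise ValueError("input dimensions must be divisible by the patch size")
    return (T // patch_t, H // patch_hw, W // patch_hw, d)


# Hypothetical settings: 32 frames of 160x160 pixels, 2x16x16 patches, d = 768.
t, h, w, d = feature_shape(T=32, H=160, W=160, patch_t=2, patch_hw=16, d=768)
print((t, h, w, d))  # (16, 10, 10, 768)

# With a temporal patch size of 2, the temporal axis must be upsampled by this
# factor to recover one feature slice per input frame.
upsample_factor = 32 // t
print(upsample_factor)  # 2
```

Under these assumptions the temporal upsampling restores $t=T$, which is why the decoder in Sec. 3.2 can index features and queries by the original frame index.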

3.2. Tubelet Decoder

3.2. Tubelet解码器

Our decoder processes the visual features, $\mathbf{x}\in\mathbb{R}^{T\times h\times w\times d}$, along with learned queries, $\mathbf{q}\in\mathbb{R}^{T\times S\times d}$, to output tubelets, $\mathbf{y}=(\mathbf{b},\mathbf{a})$, which are a sequence of bounding boxes, $\mathbf{b}\in\mathbb{R}^{T\times S\times 4}$, and corresponding actions, $\mathbf{a}\in\mathbb{R}^{T\times S\times C}$. Here, $S$ denotes the maximum number of bounding boxes per frame (padded with “background” as necessary) and $C$ denotes the number of output classes.

我们的解码器处理视觉特征 $\mathbf{x}\in\mathbb{R}^{T\times h\times w\times d}$ 和学习查询 $\mathbf{q}\in\mathbb{R}^{T\times S\times d}$ ,输出管状体 $\mathbf{y}=(\mathbf{b},\mathbf{a})$ ,即一系列边界框 $\mathbf{b}\in\mathbb{R}^{T\times S\times 4}$ 和对应动作 $\mathbf{a}\in\mathbb{R}^{T\times S\times C}$ 。其中 $S$ 表示每帧的最大边界框数量(必要时用“背景”填充), $C$ 表示输出类别数。

The idea of decoding learned queries into output detections using the transformer decoder architecture of Vaswani et al. [56] was used in DETR [6]. In summary, the decoder of [6, 56] consists of $L$ layers, each performing a series of self-attention operations on the queries, and cross-attention between the queries and encoder outputs.

将学习到的查询解码为输出检测的想法,使用 Vaswani 等人 [56] 提出的 Transformer 解码器架构,在 DETR [6] 中得到了应用。简而言之,[6, 56] 的解码器由 $L$ 层组成,每一层都对查询执行一系列自注意力操作,并在查询与编码器输出之间进行交叉注意力计算。

We modify the queries, self-attention and cross-attention operations for our spatio-temporal localisation scenario, as shown in Figs. 2 and 3, to include additional temporal inductive biases and to improve accuracy, as detailed below.

我们对查询、自注意力 (self-attention) 和交叉注意力 (cross-attention) 操作进行了修改,以适应时空局部化场景。如图 2 和图 3 所示,这些修改引入了额外的时序归纳偏置 (temporal inductive biases) ,并提高了准确性,具体细节如下。

Queries Queries, q, in DETR, are decoded using the encoded visual features, $\mathbf{x}$ , into bounding box predictions, and are analogous to the “anchors” used in other detection architectures such as Faster-RCNN [42].

查询

在 DETR 中,查询 (queries, q) 通过编码的视觉特征 $\mathbf{x}$ 解码为边界框预测,其作用类似于 Faster-RCNN [42] 等其他检测架构中使用的"锚点 (anchors)"。

The most straightforward way to define queries is to randomly initialise $\bar{\mathbf{q}}\in\mathbb{R}^{T\times S\times d}$ , where there are $S$ bounding boxes at each of the $T$ input frames in the video clip.

定义查询最直接的方法是随机初始化 $\bar{\mathbf{q}}\in\mathbb{R}^{T\times S\times d}$ ,其中视频片段的 $T$ 个输入帧中每帧包含 $S$ 个边界框。

However, we find it is more effective to factorise the queries into separate learned spatial, $\mathbf{q}^{s}\in\mathbb{R}^{S\times d}$, and temporal, $\mathbf{q}^{t}\in\mathbb{R}^{T\times d}$, parameters. To obtain the final tubelet queries, we simply repeat the spatial queries across all frames, and add them to their corresponding temporal embedding at each location, as shown in Fig. 2. More concretely, $\mathbf{q}_{ij}=\mathbf{q}_{i}^{t}+\mathbf{q}_{j}^{s}$, where $i$ and $j$ denote the temporal and spatial indices respectively.

然而,我们发现将查询分解为独立学习的空间参数 $\mathbf{q}^{s}\in\mathbb{R}^{S\times d}$ 和时间参数 $\mathbf{q}^{t}\in\mathbb{R}^{T\times d}$ 更为有效。如图 2 所示,为获得最终的管状查询 (tubelet queries),只需将空间查询在所有帧上重复,并与对应位置的时序嵌入相加。具体表示为 $\mathbf{q}_{ij}=\mathbf{q}_{i}^{t}+\mathbf{q}_{j}^{s}$,其中 $i$ 和 $j$ 分别表示时间和空间索引。

The factorised query representation means that the same spatial embedding is used across all frames. Intuitively, this encourages the $i^{t h}$ spatial query embedding, $\mathbf{q}_{i}^{s}$ , to bind to the same location across different frames of the video, and since objects typically have small displacements from frame to frame, may help to associate bounding boxes within a tubelet together. We verify this intuition empirically in the experimental section.

分解式查询表示意味着所有帧使用相同的空间嵌入。直观上,这促使第 $i^{t h}$ 个空间查询嵌入 $\mathbf{q}_{i}^{s}$ 在视频不同帧中绑定到同一位置,由于物体通常帧间位移较小,可能有助于将管状片段内的边界框关联起来。我们通过实验部分验证了这一直觉。
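The factorised query construction can be sketched in a few lines. This is a minimal illustration only: the embeddings are drawn at random rather than learned, plain Python lists stand in for tensors, and the function name `make_factorised_queries` and the sizes used are assumptions made for the example.

```python
import math
import random


def make_factorised_queries(T, S, d, seed=0):
    """Build tubelet queries q[i][j] = q_t[i] + q_s[j] from T temporal and S spatial embeddings."""
    rng = random.Random(seed)
    q_t = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(T)]  # temporal, T x d
    q_s = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(S)]  # spatial, S x d
    # The same spatial embedding is repeated across all frames and added to
    # the temporal embedding of each frame.
    q = [[[a + b for a, b in zip(q_t[i], q_s[j])] for j in range(S)] for i in range(T)]
    return q_t, q_s, q


T, S, d = 4, 3, 8  # hypothetical sizes
q_t, q_s, q = make_factorised_queries(T, S, d)

# Parameter count: (T + S) * d for factorised queries versus T * S * d otherwise.
print((T + S) * d, T * S * d)  # 56 96

# The change of a query between two frames does not depend on the spatial index,
# because the spatial embedding is shared across frames.
diff_j0 = [a - b for a, b in zip(q[1][0], q[0][0])]
diff_j2 = [a - b for a, b in zip(q[1][2], q[0][2])]
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(diff_j0, diff_j2)))  # True
```

Sharing $\mathbf{q}^{s}_{j}$ across frames is what ties the $j^{\mathrm{th}}$ query slot of every frame together, which is the property the tubelet interpretation relies on.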

Decoder layer The decoder layer in the original transformer [56] consists of self-attention on the queries, $\mathbf{q}$ , followed by cross-attention between the queries and the outputs of the encoder, $\mathbf{x}$ , and then a multilayer perceptron (MLP) layer [19, 56]. These operations can be denoted by

解码器层 原始Transformer [56]中的解码器层由查询的自注意力 (self-attention) $\mathbf{q}$、查询与编码器输出的交叉注意力 (cross-attention) $\mathbf{x}$ 以及多层感知机 (MLP) 层 [19, 56] 组成。这些操作可表示为

$$
\begin{aligned}
\mathbf{u}^{\ell} &= \mathrm{MHSA}(\mathbf{q}^{\ell})+\mathbf{q}^{\ell},\\
\mathbf{v}^{\ell} &= \mathrm{CA}(\mathbf{u}^{\ell},\mathbf{x})+\mathbf{u}^{\ell},\\
\mathbf{z}^{\ell} &= \mathrm{MLP}(\mathbf{v}^{\ell})+\mathbf{v}^{\ell},
\end{aligned}
$$


where $\mathbf{z}^{\ell}$ is the output of the $\ell^{\mathrm{th}}$ decoder layer, $\mathbf{u}$ and $\mathbf{v}$ are intermediate variables, MHSA denotes multi-headed self-attention and CA cross-attention. Note that the inputs to the MLP and self- and cross-attention operations are layer-normalised [4], which we omit here for clarity.

其中 $\mathbf{z}^{\ell}$ 是第 $\ell^{t h}$ 层解码器的输出,$\mathbf{u}$ 和 $\mathbf{v}$ 是中间变量,MHSA 表示多头自注意力机制 (multi-headed self-attention),CA 表示交叉注意力机制 (cross-attention)。请注意,MLP 以及自注意力和交叉注意力操作的输入都经过层归一化 [4],为清晰起见此处省略。

Figure 3. Our decoder layer consists of factorised self-attention (SA) (left) and cross-attention (CA) (right) operations designed to provide a spatio-temporal inductive bias and reduce computation. Both operations restrict attention to the same spatial and temporal slices as the query token, as illustrated by the receptive field (blue) for a given query token (magenta). Factorised SA consists of two operations, whilst in Factorised CA, there is one operation.

图 3: 我们的解码器层由分解自注意力 (SA) (左) 和交叉注意力 (CA) (右) 操作组成,旨在提供时空归纳偏置并减少计算量。如给定查询token (洋红色) 的感受野 (蓝色) 所示,两种操作都将注意力限制在与查询token相同的空间和时间切片上。分解SA包含两个操作,而分解CA仅包含一个操作。

In our model, we factorise the self- and cross-attention layers across space and time, as shown in Fig. 3, to introduce a temporal locality inductive bias and to increase model efficiency. Concretely, when applying MHSA, we first compute the queries, keys and values, over which we attend twice: first independently within each frame (i.e. at each time step), and then independently along the time axis at each spatial location. Similarly, we modify the cross-attention operation so that only tubelet queries and backbone features from the same time index attend to each other.

在我们的模型中,我们分别对空间和时间维度上的自注意力层和交叉注意力层进行分解(如图3所示),以引入时间局部性归纳偏置,同时提升模型效率。具体而言,在应用多头自注意力(MHSA)时,我们首先计算查询、键和值,然后进行两次注意力计算:第一次在每个时间步内独立处理各帧,第二次在每个空间位置沿时间轴独立处理。类似地,我们调整交叉注意力操作,使得仅相同时间索引的管状查询(tubelet queries)与骨干网络特征相互关注。
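To make the efficiency argument concrete, the sketch below counts query-key pairs for unfactorised and factorised attention. It is a back-of-the-envelope illustration derived from the description above, not a measurement from the paper; the values of $T$, $S$, $h$ and $w$ are hypothetical, and attention heads and constant factors are ignored.

```python
def self_attention_pairs(T, S):
    """Query-key pairs for self-attention over T x S tubelet queries."""
    full = (T * S) ** 2                   # every query attends to every query
    factorised = T * S * S + S * T * T    # within-frame pass + along-time pass
    return full, factorised


def cross_attention_pairs(T, S, h, w):
    """Query-key pairs for cross-attention between queries and T x h x w features."""
    full = (T * S) * (T * h * w)          # every query attends to all frames
    factorised = T * S * (h * w)          # each query attends to its own frame only
    return full, factorised


T, S, h, w = 32, 64, 10, 10  # hypothetical sizes
print(self_attention_pairs(T, S))         # (4194304, 196608)
print(cross_attention_pairs(T, S, h, w))  # (6553600, 204800)
```

For cross-attention the saving is exactly a factor of $T$, since each query only sees the features of its own frame.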

Localisation and classification heads We obtain the final predictions of the network, $\mathbf{y}=(\mathbf{b},\mathbf{a})$, by applying a small feed-forward network to the outputs of the decoder, $\mathbf{z}$, following DETR [6]. The sequence of bounding boxes, $\mathbf{b}$, is obtained with a 3-layer MLP, and is parameterised by the box center, width and height for each frame in the tubelet. A single-layer linear projection is used to obtain class logits, $\mathbf{a}$. As we predict a fixed number of $S$ bounding boxes per frame, and $S$ is more than the maximum number of ground truth instances in the frame, we also include an additional class label, $\varnothing$, which represents the “background” class to which tubelets with no action class can be assigned.

定位与分类头部

我们通过将一个小型前馈网络应用于解码器输出 $\mathbf{z}$,获得网络的最终预测 $\mathbf{y}=(\mathbf{b},\mathbf{a})$,遵循 DETR [6] 的方法。边界框序列 $\mathbf{b}$ 通过一个 3 层 MLP 获得,其参数由每个管状片段(tubelet)中帧的框中心、宽度和高度确定。使用单层线性投影获取类别逻辑值 $\mathbf{a}$。由于我们为每帧预测固定数量 $S$ 的边界框,且 $S$ 超过帧中真实实例的最大数量,因此我们还引入了一个额外的类别标签 $\varnothing$,代表“背景”类,无动作类别的管状片段可被分配至该类。

3.3. Training objective

3.3. 训练目标

Our model predicts bounding boxes and action classes at each frame of the input video. Many datasets, however, such as AVA [18], are only sparsely annotated at selected keyframes of the video. In order to leverage the available annotations, we compute our training loss, Eq. 4, only at the annotated frames of the video, after having matched the predictions to the ground truth. This is denoted as

我们的模型在输入视频的每一帧预测边界框和动作类别。然而许多数据集(如AVA [18])仅在视频选定的关键帧上进行了稀疏标注。为了利用现有标注,我们仅在视频的标注帧上计算训练损失(式4),并在将预测结果与真实标注匹配后进行。这一过程表示为

$$
\mathcal{L}(\mathbf{y},\hat{\mathbf{y}})=\frac{1}{|\mathcal{T}|}\sum_{t\in\mathcal{T}}\mathcal{L}_{\mathrm{frame}}(\mathbf{y}^{t},\hat{\mathbf{y}}^{t}), \tag{4}
$$


where $\mathcal{T}$ is the set of labelled frames; $\mathbf{y}$ and $\hat{\mathbf{y}}$ denote the ground truth and predicted tubelets after matching.

其中 $\mathcal{T}$ 是标记帧的集合;$\mathbf{y}$ 和 $\hat{\mathbf{y}}$ 分别表示匹配后的真实标注和预测管段。

Following DETR [6], our training loss at each frame, $\mathcal{L}_{\mathrm{frame}}$, is a sum of an $L_{1}$ regression loss on bounding boxes, the generalised IoU loss [43] on bounding boxes, and a cross-entropy loss on action labels:

遵循 DETR [6] 的方法,我们在每一帧的训练损失 $\mathcal{L}_{\mathrm{frame}}$ 由三部分组成:边界框的 $L_{1}$ 回归损失、边界框的广义 IoU 损失 [43] 以及动作标签的交叉熵损失:

$$
\begin{aligned}
\mathcal{L}_{\mathrm{frame}}(\mathbf{b}^{t},\hat{\mathbf{b}}^{t},\mathbf{a}^{t},\hat{\mathbf{a}}^{t})=\sum_{i}\;&\mathcal{L}_{\mathrm{box}}(\mathbf{b}_{i}^{t},\hat{\mathbf{b}}_{i}^{t})+\mathcal{L}_{\mathrm{iou}}(\mathbf{b}_{i}^{t},\hat{\mathbf{b}}_{i}^{t})\\
&+\mathcal{L}_{\mathrm{class}}(\mathbf{a}_{i}^{t},\hat{\mathbf{a}}_{i}^{t}).
\end{aligned} \tag{5}
$$

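The per-frame loss can be sketched for already-matched pairs as follows. This is an illustrative sketch under several assumptions: boxes are given as (centre x, centre y, width, height) with positive area, the generalised IoU loss is taken as $1-\mathrm{GIoU}$, and the class term is a softmax cross-entropy against a single integer label. The paper does not spell out these details here, and the function names are hypothetical.

```python
import math


def to_corners(box):
    """Convert (cx, cy, w, h) to (x0, y0, x1, y1)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def generalised_iou(box_a, box_b):
    """GIoU = IoU - (hull - union) / hull, where hull is the smallest enclosing box area."""
    ax0, ay0, ax1, ay1 = to_corners(box_a)
    bx0, by0, bx1, by1 = to_corners(box_b)
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    hull = (max(ax1, bx1) - min(ax0, bx0)) * (max(ay1, by1) - min(ay0, by0))
    return inter / union - (hull - union) / hull


def frame_loss(gt_boxes, pred_boxes, gt_labels, pred_logits):
    """Unweighted sum of L1, GIoU and cross-entropy terms over matched pairs in one frame."""
    total = 0.0
    for b, b_hat, a, logits in zip(gt_boxes, pred_boxes, gt_labels, pred_logits):
        l_box = sum(abs(x - y) for x, y in zip(b, b_hat))
        l_iou = 1.0 - generalised_iou(b, b_hat)
        m = max(logits)  # subtract the maximum for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(v - m) for v in logits))
        l_class = log_z - logits[a]
        total += l_box + l_iou + l_class
    return total


box = (0.5, 0.5, 0.2, 0.2)
# A perfect box with uniform logits over two classes leaves only the class term, log 2.
print(frame_loss([box], [box], [0], [[0.0, 0.0]]))
```

Predictions matched to the background class would contribute only a class term; that case is omitted from the sketch for brevity.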

Matching Set-based detection models such as DETR can make predictions in any order, which is why the predictions need to be matched to the ground truth before computing the training loss.

基于集合匹配的检测模型(如DETR)可以按任意顺序生成预测结果,因此在计算训练损失前需将预测值与真实标注进行匹配。

The first form of matching that we consider is to independently perform bipartite matching at each frame to align the model’s predictions to the ground truth (or the $\varnothing$ background class) before computing the loss. In this case, we use the Hungarian algorithm [27] to obtain $T$ permutations of $S$ elements, $\hat{\pi}^{t}\in\Pi^{t}$, one at each frame, where the permutation at the $t^{\mathrm{th}}$ frame minimises the per-frame loss,

我们考虑的第一种匹配形式是在计算损失之前,独立地在每一帧执行二分匹配,将模型的预测与真实值(或 $\varnothing$ 背景类)对齐。在这种情况下,我们使用匈牙利算法 [27] 在每一帧获得 $S$ 个元素的 $T$ 种排列 $\hat{\pi}^{t}\in\Pi^{t}$ ,其中第 $t$ 帧的排列使逐帧损失最小化。

$$
\hat{\pi}^{t}=\underset{\pi\in\Pi^{t}}{\arg\min}\;\mathcal{L}_{\mathrm{frame}}(\mathbf{y}^{t},\hat{\mathbf{y}}_{\pi(i)}^{t}).
$$


An alternative is to perform tubelet matching, where all queries with the same spatial index, ${\bf q}^{s}$ , must match to the same ground truth annotation across all frames of the input video. Here the permutation is obtained over $S$ elements as

另一种方法是执行小管匹配 (tubelet matching),其中所有具有相同空间索引 ${\bf q}^{s}$ 的查询必须与输入视频所有帧中的相同真实标注匹配。这里的排列是在 $S$ 个元素上获得的。

$$
\hat{\pi}=\underset{\pi\in\Pi}{\arg\min}\;\frac{1}{|\mathcal{T}|}\sum_{t\in\mathcal{T}}\mathcal{L}_{\mathrm{frame}}(\mathbf{y}^{t},\hat{\mathbf{y}}_{\pi(i)}^{t}).
$$


Intuitively, tubelet matching provides stronger supervision when we have full tubelet annotations available. Note that regardless of the type of matching that we perform, the loss computation and the overall model architecture remain the same. For simplicity, and to avoid additional hyperparameters, we do not weight the terms in Eq. 5 for either matching or loss calculation, as also done in [37].

直观上,当拥有完整的小管(tubelet)标注时,小管匹配能提供更强的监督信号。需要注意的是,无论采用何种匹配方式,损失计算和整体模型架构保持不变。为简化流程并避免引入额外超参数(如[37]的做法),我们在公式5的匹配和损失计算中均未设置权重项。
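The difference between the two matching schemes can be seen on a toy example. The sketch below is illustrative only: it enumerates all permutations by brute force instead of running the Hungarian algorithm (feasible only for very small $S$), uses an $L_{1}$ box cost alone as the matching cost, and all function names and box values are made up for the example.

```python
from itertools import permutations


def l1(box_a, box_b):
    return sum(abs(x - y) for x, y in zip(box_a, box_b))


def frame_cost(gt, pred, perm):
    """Cost of matching gt[i] to pred[perm[i]]; None entries in gt are background padding."""
    return sum(l1(g, pred[perm[i]]) for i, g in enumerate(gt) if g is not None)


def per_frame_matching(gt, pred, labelled):
    """One permutation per labelled frame, each minimising that frame's cost."""
    S = len(pred[0])
    return {t: min(permutations(range(S)), key=lambda p: frame_cost(gt[t], pred[t], p))
            for t in labelled}


def tubelet_matching(gt, pred, labelled):
    """A single permutation shared by all frames, minimising the mean cost over labelled frames."""
    S = len(pred[0])
    return min(permutations(range(S)),
               key=lambda p: sum(frame_cost(gt[t], pred[t], p) for t in labelled) / len(labelled))


# Two actors that stay in place over two frames; boxes are (cx, cy, w, h).
A, B = (0.2, 0.5, 0.2, 0.2), (0.8, 0.5, 0.2, 0.2)
gt = [[A, B], [A, B]]
pred = [
    [(0.2, 0.5, 0.2, 0.2), (0.8, 0.5, 0.2, 0.2)],  # frame 0: query 0 on A, query 1 on B
    [(0.6, 0.5, 0.2, 0.2), (0.4, 0.5, 0.2, 0.2)],  # frame 1: both queries drift towards the centre
]
print(per_frame_matching(gt, pred, labelled=[0, 1]))  # {0: (0, 1), 1: (1, 0)}
print(tubelet_matching(gt, pred, labelled=[0, 1]))    # (0, 1)
```

In this example per-frame matching swaps the assignment of the two queries at frame 1, whereas tubelet matching keeps each query bound to the same actor in both frames. In practice an assignment solver such as SciPy's `scipy.optimize.linear_sum_assignment` would replace the brute-force search.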

3.4. Discussion

3.4. 讨论

As our approach is based on DETR, it requires neither external proposals nor non-maximal suppression for post-processing. The idea of using DETR for action localisation has also been explored by TubeR [65] and WOO [8]. There are, however, a number of key differences: WOO does not detect tubelets at all, but only actions at the center keyframe.

由于我们的方法基于DETR (DEtection TRansformer) ,因此不需要外部提案或后处理中的非极大值抑制。TubeR [65] 和 WOO [8] 也探索了将DETR用于动作定位的想法。但存在几个关键差异:WOO完全不检测小管段 (tubelet) ,而仅检测中心关键帧的动作。

We also factorise our queries in the spatial and temporal dimensions (Sec. 3.2) to provide inductive biases urging spatio-temporal association. Moreover, we predict action classes separately for each time step in the tubelet, meaning that each of our queries binds to an actor in the video. TubeR, in contrast, parameterises queries such that they are each associated with separate actions (features are average-pooled over the tubelet, and then linearly classified into a single action class). This choice also means that TubeR requires an additional “action switch” head to predict when tubelets start and end, which we do not require as different time steps in a tubelet can have different action classes in our model. Furthermore, we show experimentally (Tab. 1) that TubeR’s parameterisation obtains lower accuracy. We also consider two types of matching in the loss computation (Sec. 3.3) unlike TubeR, with “tubelet matching” designed for predicting more temporally consistent tubelets. And in contrast to TubeR, we experimentally show how our decoder design allows our model to accurately predict tubelets even with weak, keyframe supervision.

我们还在空间和时间维度上对查询进行因子分解(第3.2节),以提供促使时空关联的归纳偏置。此外,我们为管状片段中的每个时间步单独预测动作类别,这意味着每个查询都与视频中的某个参与者绑定。相比之下,TubeR通过参数化查询使它们各自关联不同的动作(特征在管状片段上平均池化后,线性分类为单一动作类别)。这种选择还意味着TubeR需要一个额外的"动作切换"头来预测管状片段的开始和结束,而我们不需要,因为我们的模型中管状片段的不同时间步可以有不同的动作类别。此外,实验表明(表1),TubeR的参数化方法准确率较低。与TubeR不同,我们在损失计算中考虑了两种匹配类型(第3.3节),其中"管状片段匹配"旨在预测时间上更一致的管状片段。与TubeR相比,我们通过实验展示了解码器设计如何使模型即使在弱关键帧监督下也能准确预测管状片段。

Finally, TubeR requires additional complexity in the form of a “short-term context module” [65] and the external memory bank of [60] which is computed offline using a separate model to achieve strong results. As we show experimentally in the next section, we outperform TubeR without any additional modules, meaning that our model does indeed produce tubelets in an end-to-end manner.

最后,TubeR 需要以 "短期上下文模块" [65] 和 [60] 的外部记忆库形式增加额外复杂度,这些模块需通过离线计算的独立模型来实现优异效果。如下节实验所示,我们在无需任何附加模块的情况下超越了 TubeR,这表明我们的模型确实能以端到端方式生成 tubelet。

4. Experimental Evaluation

4. 实验评估

4.1. Experimental set-up

4.1. 实验设置

Datasets We evaluate on four spatio-temporal action localisation benchmarks. AVA and AVA-Kinetics contain sparse annotations at each keyframe, whereas UCF101-24 and JHMDB51-21 contain full tubelet annotations.

数据集

我们在四个时空动作定位基准上进行了评估。AVA和AVA-Kinetics在每个关键帧包含稀疏标注,而UCF101-24和JHMDB51-21则包含完整的管状(tubelet)标注。

AVA [18] consists of 430 15-minute video clips from movies. Keyframes are annotated at every second in the video, with about 210 000 labelled frames in the training set, and 57 000 in the validation set. There are 80 atomic actions labelled for every actor in the clip, of which 60 are used for evaluation [18]. Following standard practice, we report the Frame Average Precision (fAP) at an IoU threshold of 0.5 using the latest v2.2 annotations [18].

AVA [18] 数据集包含从电影中截取的430段15分钟视频片段。视频每秒标注关键帧,训练集包含约21万标注帧,验证集包含5.7万标注帧。每个片段中的演员被标注了80个原子动作,其中60个用于评估 [18]。按照标准做法,我们使用最新的v2.2标注 [18],在IoU阈值为0.5时报告帧平均精度 (fAP)。

AVA-Kinetics [29] is a superset of AVA, and adds detection annotations, following the AVA protocol, to a subset of Kinetics 700 [7] videos. Only a single keyframe in each 10-second Kinetics clip is labelled. In total, about 140 000 labelled keyframes are added to the training set of AVA, and 32 000 to its validation set. Once again, we follow standard practice in reporting the Frame AP at an IoU threshold of 0.5.

AVA-Kinetics [29] 是 AVA 的超集,它按照 AVA 协议为 Kinetics 700 [7] 视频的子集添加了检测标注。在 10 秒的 Kinetics 片段中,仅标注单个关键帧。训练集共新增约 14 万标注关键帧,AVA 验证集新增 3.2 万关键帧。我们再次采用标准做法,在 $0.5\mathrm{IoU}$ 阈值下报告帧级平均精度 (Frame AP)。

UCF101-24 [48] is a subset of UCF101, and annotates 24 action classes with full spatio-temporal tubes in 3 207 untrimmed videos. Note that actions are not labelled exhaustively as in AVA, and there may be people present in the video who are not performing any labelled action. Following standard practice, we use the corrected annotations of [46]. We report both the Frame AP, which evaluates the predictions at each frame independently, and the Video AP, which uses a 3D, spatio-temporal IoU to match predictions to targets. Because UCF101-24 videos are up to 900 frames long (median length of 164 frames) and our network processes $T=32$ frames at a time, we link tubelet predictions from our network into full-video tubes using the same causal linking algorithm as [23, 31] for fair comparison.

UCF101-24 [48] 是 UCF101 的子集,在 3,207 个未修剪视频中为 24 个动作类别标注了完整的时空管。请注意,与 AVA 不同,这些动作并未被详尽标注,视频中可能存在未执行任何标注动作的人物。按照标准做法,我们使用 [46] 修正后的标注。我们同时报告帧 AP (Frame AP) 和视频 AP (Video AP),其中帧 AP 独立评估每帧的预测结果,而视频 AP 则使用 3D 时空 IoU 来匹配预测与目标。由于 UCF101-24 的视频长度可达 900 帧(中位数为 164 帧),而我们的网络每次处理 $T=32$ 帧,因此我们采用与 [23,31] 相同的因果链接算法,将网络输出的短管预测连接成完整视频管,以确保公平比较。
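As an illustration of the 3D, spatio-temporal IoU used by the Video AP, the sketch below follows one common convention: the temporal IoU of the two tubes multiplied by the mean spatial IoU over the frames they share. The text above does not spell out the exact formula, so this convention, the `{frame_index: box}` tube representation and the function names are assumptions.

```python
def box_iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def tube_iou(pred, gt):
    """Spatio-temporal IoU of two tubes, each a dict {frame_index: box}.

    Assumed convention: temporal IoU times mean per-frame spatial IoU
    over the frames present in both tubes.
    """
    shared = set(pred) & set(gt)
    if not shared:
        return 0.0
    temporal = len(shared) / len(set(pred) | set(gt))
    spatial = sum(box_iou(pred[t], gt[t]) for t in shared) / len(shared)
    return temporal * spatial
```

Under this convention, a tube with perfectly placed boxes that covers only part of the ground-truth duration is still penalised, which is why Video AP is sensitive to how well boxes are associated across frames.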

Table 1. Comparison of detection architectures on AVA controlling for the same backbone (ViViT-B), resolution (160p) and training settings. Our end-to-end approach outperforms proposal-based ROI models. Binding each query to a person, rather than to an action (as done in TubeR [65]), also yields solid improvements.

表 1: 在相同骨干网络 (ViViT-B)、分辨率 (160p) 和训练设置下,AVA数据集上检测架构的对比。我们的端到端方法优于基于提案的ROI模型。将每个查询(query)绑定到人而非动作(如TubeR [65]所做)也带来了显著提升。

| Model | Proposals | AP50 |
|---|---|---|
| Two-stage ROI model | [60] | 25.2 |
| Query binds to action | None | 23.6 |
| Ours, query binds to person | None | 26.7 |

JHMDB51-21 [22] also contains full tube annotations in 928 trimmed videos. However, as the videos are shorter and at most 40 frames, we can process the entire clip with our network, and do not need to perform any linking.

JHMDB51-21 [22] 在928段剪辑视频中也包含完整的管状标注。但由于这些视频较短,最多只有40帧,我们可以直接用网络处理整个片段,无需进行任何链接操作。

Implementation details For our vision encoder backbone, we use the ViViT Factorised Encoder [1], where model sizes such as “Base” and “Large” follow the original definitions from [11, 12]. It is initialised from pretrained checkpoints, which are typically first pretrained on image datasets like ImageNet-21K [10] and then finetuned on video datasets like Kinetics [24]. Unless otherwise specified, our model processes $T=32$ frames, has $S=64$ spatial queries per frame, and uses a decoder latent dimensionality of $d=2048$. Exhaustive implementation details and training hyperparameters are included in the supplementary.

实现细节

对于视觉编码器主干,我们采用ViViT Factorised Encoder [1],其中模型尺寸(如"Base"和"Large")遵循[11, 12]的原始定义。该模型从预训练检查点初始化,通常先在ImageNet-21K [10]等图像数据集上预训练,再在Kinetics [24]等视频数据集上微调。除非另有说明,我们的模型处理$T=32$帧,每帧包含$S=64$个空间查询,解码器的潜在维度为$d=2048$。完整的实现细节和训练超参数见补充材料。

4.2. Ablation studies

4.2. 消融实验

We analyse the design choices in our model by conducting experiments on both AVA (with sparse per-frame supervision) and on UCF101-24 (where we can evaluate the quality of our predicted tubelets). Unless otherwise stated, our backbone is ViViT-Base pretrained on Kinetics 400, and the frame resolution is 160 pixels (160p) on the smaller side.

我们通过在 AVA (带有稀疏逐帧监督) 和 UCF101-24 (可评估预测管状体质量) 上进行实验来分析模型的设计选择。除非另有说明,我们的主干网络是在 Kinetics 400 上预训练的 ViViT-Base,帧分辨率较小边为 160 像素 (160p)。

Comparison of detection architectures Table 1 compares our model to two relevant baselines. The first is a two-stage Fast-RCNN model using external person detections from [60] (as used by [3, 13, 14, 60]). The second uses the query parameterisation of TubeR [65], where each query binds to an action, as described in Sec. 3.4. We control other experimental settings by using the same backbone (ViViT-Base) and resolution (160p).

检测架构对比

表 1 将我们的模型与两个相关基线进行比较:首先是一个使用来自 [60] 的外部人物检测的两阶段 Fast-RCNN 模型(如 [3, 13, 14, 60] 所用);其次,我们与 TubeR [65] 中使用的查询参数化方法进行对比,其中每个查询绑定一个动作,如第 3.4 节所述。我们通过使用相同的主干网络 (ViViT-Base) 和分辨率 (160p) 来控制其他实验设置。

Table 2. Comparison of independent and factorised queries on the AVA and UCF101-24 datasets. Factorised queries are particularly beneficial for predicting tubelets, as shown by the VideoAP on UCF101-24 which has full tube annotations. Both models use tubelet matching in the loss.

表 2: AVA 和 UCF101-24 数据集上独立查询与因子化查询的对比。如 UCF101-24 上带有完整管状标注的 VideoAP 所示,因子化查询对预测小管 (tubelet) 特别有利。两个模型在损失函数中都使用了小管匹配。

| Query type | AVA fAP | UCF101-24 fAP | UCF101-24 vAP20 | UCF101-24 vAP50 | UCF101-24 vAP50:95 |
|---|---|---|---|---|---|
| Independent queries | 25.2 | 85.6 | 86.3 | 59.5 | 28.9 |
| Factorised queries | 26.3 | 86.5 | 87.4 | 63.4 | 29.8 |

Table 3. Comparison of independent and tubelet matching for computing the loss on AVA and UCF101-24. Tubelet matching helps for tube-level evaluation metrics like the Video AP (vAP) on UCF101-24. Note that tubelet matching is actually still possible on AVA as the annotations are at 1fps with actor identities.

表 3: 独立匹配与管段匹配在AVA和UCF101-24数据集上计算损失的对比。管段匹配有助于提升UCF101-24的管级评估指标,如视频平均精度(vAP)。需要注意的是,由于AVA数据集以1fps频率标注了演员身份信息,因此在该数据集上仍可实现管段匹配。

| Matching | AVA fAP | UCF101-24 fAP | UCF101-24 vAP20 | UCF101-24 vAP50 | UCF101-24 vAP50:95 |
|---|---|---|---|---|---|
| Per-frame (independent) matching | 26.7 | 88.2 | 85.7 | 63.5 | 29.4 |
| Tubelet matching | 26.3 | 86.5 | 87.4 | 63.4 | 29.8 |

The first row of Tab. 1 shows that our end-to-end model improves upon a two-stage model by 1.5 points on AVA, emphasising the promise of our approach. Note that the proposals of [60] achieve an AP50 of 93.9 for person detection on the AVA validation set. They were obtained by first pretraining a Faster-RCNN [42] detector on COCO keypoints, and then finetuning on the person boxes from the training set of AVA, using a resolution of 1333 on the longer side. Our model is end-to-end, and does not require external proposals generated by a separate model at all.

表 1 的第一行显示,我们的端到端模型在 AVA 上将两阶段模型的性能提升了 1.5 个百分点,这凸显了我们方法的潜力。需要注意的是,[60] 提出的方案在 AVA 验证集上的人体检测 AP50 达到了 93.9。该方案首先在 COCO 关键点数据集上预训练 Faster-RCNN [42] 检测器,然后使用较长边为 1333 的分辨率在 AVA 训练集的人体框上进行微调。而我们的模型是端到端的,完全不需要依赖外部模型生成的候选框。

The second row of Tab. 1 compares our model, where each query represents a person and all of their actions (Sec. 3.2), to the approach of TubeR [65] (Sec. 3.4), where there is a separate query for each action being performed. We observe that this parameterisation has a substantial impact: our method outperforms the action-bound alternative by 3.1 points (26.7 vs. 23.6), motivating the design of our decoder.

表 1 的第二行将我们的模型 (每个查询代表一个人及其所有行为 (第 3.2 节)) 与 TubeR [65] 的方法 (第 3.4 节) (每个执行行为对应独立查询) 进行对比。我们观察到该参数设置具有显著影响,我们的方法以 3.1 分的优势大幅领先,这验证了解码器设计的合理性。

Query parameterisation Table 2 compares our independent and factorised query methods (Sec. 3.2) on AVA and UCF101-24. We observe that factorised queries consistently provide improvements on both the Frame AP and the Video AP across both datasets. As hypothesised in Sec. 3.2, we believe that this is due to the inductive bias present in this parameterisation. Note that we can only measure the Video AP on UCF101-24, as it has tubes labelled.

查询参数化

表 2 比较了我们在 AVA 和 UCF101-24 数据集上采用的独立查询与因子化查询方法 (见第 3.2 节) 。实验表明,因子化查询在两个数据集的帧级 AP (Frame AP) 和视频级 AP (Video AP) 指标上均取得稳定提升。如第 3.2 节假设所述,我们认为这得益于该参数化方式蕴含的归纳偏置。需要注意的是,由于只有 UCF101-24 标注了动作管 (tubes) ,视频级 AP 仅能在该数据集上进行评估。
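The difference between the two query parameterisations can be sketched as follows. Sec. 3.2 is not reproduced here, so the additive combination of a per-frame temporal embedding with a per-slot spatial embedding is an assumption made for illustration, as are all variable names; only $T=32$, $S=64$ and $d=2048$ come from the implementation details above.

```python
import numpy as np

T, S, d = 32, 64, 2048  # frames, spatial queries per frame, decoder width
rng = np.random.default_rng(0)

# Independent queries: one free embedding per (frame, spatial slot) pair.
independent = rng.standard_normal((T, S, d), dtype=np.float32)

# Factorised queries (assumed additive form): spatial slot s shares one
# embedding across all frames, and frame t shares one across all slots.
spatial = rng.standard_normal((S, d), dtype=np.float32)
temporal = rng.standard_normal((T, d), dtype=np.float32)
factorised = temporal[:, None, :] + spatial[None, :, :]  # shape (T, S, d)

n_independent = independent.size            # T * S * d learned values
n_factorised = spatial.size + temporal.size  # (T + S) * d learned values
```

In this sketch, slot `s` carries the same spatial embedding in every frame, which is one way the parameterisation could encourage a query to follow the same person through time.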

Matching for loss calculation As described in Sec. 3.3, when matching the predictions to the ground truth for loss computation, we can either independently match the outputs at each frame to the ground truths at each frame, or, we can match the entire predicted tubelets to the ground truth tubelets. Table 3 shows that tubelet matching does indeed improve the quality of the predicted tubelets, as shown by the Video AP on UCF101-24. However, this comes at the cost of the quality of per-frame predictions (i.e. Frame AP). This suggests that tubelet matching improves the association of bounding boxes predicted at different frames (hence higher Video AP), but may also impair the quality of the bounding boxes predicted at each frame (Frame AP). Note that it is technically possible for us to also perform tubelet matching on AVA, since AVA is annotated at 1fps with actor identities, and our model is input 32 frames at