A Parallel-Hierarchical Model for Machine Comprehension on Sparse Data

Kaheer Suleman

Abstract

Understanding unstructured text is a major goal within natural language processing. Comprehension tests pose questions based on short text passages to evaluate such understanding. In this work, we investigate machine comprehension on the challenging MCTest benchmark. Partly because of its limited size, prior work on MCTest has focused mainly on engineering better features. We tackle the dataset with a neural approach, harnessing simple neural networks arranged in a parallel hierarchy. The parallel hierarchy enables our model to compare the passage, question, and answer from a variety of trainable perspectives, as opposed to using a manually designed, rigid feature set. Perspectives range from the word level to sentence fragments to sequences of sentences; the networks operate only on word-embedding representations of text. When trained with a methodology designed to help cope with limited training data, our Parallel-Hierarchical model sets a new state of the art for MCTest, outperforming previous feature-engineered approaches slightly and previous neural approaches by a significant margin (over $15\%$ absolute).

1 Introduction

Humans learn in a variety of ways—by communication with each other, and by study, the reading of text. Comprehension of unstructured text by machines, at a near-human level, is a major goal for natural language processing. It has garnered significant attention from the machine learning research community in recent years.

Machine comprehension (MC) is evaluated by posing a set of questions based on a text passage (akin to the reading tests we all took in school). Such tests are objectively gradable and can be used to assess a range of abilities, from basic understanding to causal reasoning to inference (Richardson et al., 2013). Given a text passage and a question about its content, a system is tested on its ability to determine the correct answer (Sachan et al., 2015). In this work, we focus on MCTest, a complex but data-limited comprehension benchmark, whose multiple-choice questions require not only extraction but also inference and limited reasoning (Richardson et al., 2013). Inference and reasoning are important human skills that apply broadly, beyond language.

We present a parallel-hierarchical approach to machine comprehension designed to work well in a data-limited setting. There are many use-cases in which comprehension over limited data would be handy: for example, user manuals, internal documentation, legal contracts, and so on. Moreover, work towards more efficient learning from any quantity of data is important in its own right, for bringing machines more in line with the way humans learn. Typically, artificial neural networks require numerous parameters to capture complex patterns, and the more parameters, the more training data is required to tune them. Likewise, deep models learn to extract their own features, but this is a data-intensive process. Our model learns to comprehend at a high level even when data is sparse.

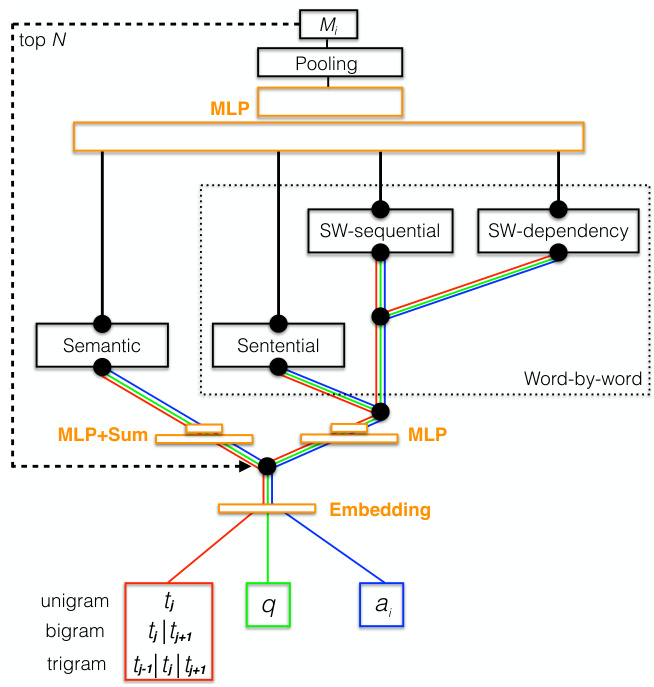

The key to our model is that it compares the question and answer candidates to the text using several distinct perspectives. We refer to a question combined with one of its answer candidates as a hypothesis (to be detailed below). The semantic perspective compares the hypothesis to sentences in the text viewed as single, self-contained thoughts; these are represented using a sum and transformation of word embedding vectors, similar to Weston et al. (2014). The word-by-word perspective focuses on similarity matches between individual words from the hypothesis and the text, at various scales. As in the semantic perspective, we consider matches over complete sentences. We also use a sliding window acting on a subsentential scale (inspired by the work of Hill et al. (2015)), which implicitly considers the linear distance between matched words. Finally, this word-level sliding window operates on two different views of text sentences: the sequential view, where words appear in their natural order, and the dependency view, where words are reordered based on a linearization of the sentence’s dependency graph. Words are represented throughout by embedding vectors (Mikolov et al., 2013). These distinct perspectives naturally form a hierarchy that we depict in Figure 1. Language is hierarchical, so it makes sense that comprehension relies on hierarchical levels of understanding.

The perspectives of our model can be considered a type of feature. However, they are implemented by parametric differentiable functions. This is in contrast to most previous efforts on MCTest, whose numerous hand-engineered features cannot be trained. Our model, significantly, can be trained end-to-end with backpropagation. To facilitate learning with limited data, we also develop a unique training scheme. We initialize the model’s neural networks to perform specific heuristic functions that yield decent (though not impressive) performance on the dataset. Thus, the training scheme gives the model a safe, reasonable baseline from which to start learning. We call this technique training wheels.

Computational models that comprehend (insofar as they perform well on MC datasets) have developed contemporaneously in several research groups (Weston et al., 2014; Sukhbaatar et al., 2015; Hill et al., 2015; Hermann et al., 2015; Kumar et al., 2015). Models designed specifically for MCTest include those of Richardson et al. (2013), and more recently Sachan et al. (2015), Wang and McAllester (2015), and Yin et al. (2016). In experiments, our Parallel-Hierarchical model achieves state-of-the-art accuracy on MCTest, outperforming these existing methods.

Below we describe related work, the mathematical details of our model, and our experiments, then analyze our results.

2 The Problem

In this section we borrow from Sachan et al. (2015), who laid out the MC problem nicely. Machine comprehension requires machines to answer questions based on unstructured text. This can be viewed as selecting the best answer from a set of candidates. In the multiple-choice case, candidate answers are predefined, but candidate answers may also be undefined yet restricted (e.g., to yes, no, or any noun phrase in the text) (Sachan et al., 2015).

For each question $q$, let $T$ be the unstructured text and $A=\{a_{i}\}$ the set of candidate answers to $q$. The machine comprehension task reduces to selecting the answer that has the highest evidence given $T$. As in Sachan et al. (2015), we combine an answer and a question into a hypothesis, $h_{i}=f(q,a_{i})$. To facilitate comparisons of the text with the hypotheses, we also break down the passage into sentences $t_{j}$, $T=\{t_{j}\}$. In our setting, $q$, $a_{i}$, and $t_{j}$ each represent a sequence of embedding vectors, one for each word and punctuation mark in the respective item.

3 Related Work

Machine comprehension is currently a hot topic within the machine learning community. In this section we will focus on the best-performing models applied specifically to MCTest, since it is somewhat unique among MC datasets (see Section 5). Generally, models can be divided into two categories: those that use fixed, engineered features, and neural models. The bulk of the work on MCTest falls into the former category.

Manually engineered features often require significant effort on the part of a designer, and/or various auxiliary tools to extract them, and they cannot be modified by training. On the other hand, neural models can be trained end-to-end and typically harness only a single feature: vector representations of words. Word embeddings are fed into a complex and possibly deep neural network which processes and compares text to question and answer. Among deep models, mechanisms of attention and working memory are common, as in Weston et al. (2014) and Hermann et al. (2015).

3.1 Feature-engineering models

Sachan et al. (2015) treated MCTest as a structured prediction problem, searching for a latent answer-entailing structure connecting question, answer, and text. This structure corresponds to the best latent alignment of a hypothesis with appropriate snippets of the text. The process of (latently) selecting text snippets is related to the attention mechanisms typically used in deep networks designed for MC and machine translation (Bahdanau et al., 2014; Weston et al., 2014; Hill et al., 2015; Hermann et al., 2015). The model uses event and entity coreference links across sentences along with a host of other features. These include specifically trained word vectors for synonymy; antonymy and class-inclusion relations from external database sources; dependencies and semantic role labels. The model is trained using a latent structural SVM extended to a multitask setting, so that questions are first classified using a pretrained top-level classifier. This enables the system to use different processing strategies for different question categories. The model also combines question and answer into a well-formed statement using the rules of Cucerzan and Agichtein (2005).

Our model is simpler than that of Sachan et al. (2015) in terms of the features it takes in, the training procedure (stochastic gradient descent vs. alternating minimization), question classification (we use none), and question-answer combination (simple concatenation or mean vs. a set of rules).

Wang and McAllester (2015) augmented the baseline feature set from Richardson et al. (2013) with features for syntax, frame semantics, coreference chains, and word embeddings. They combined features using a linear latent-variable classifier trained to minimize a max-margin loss function. As in Sachan et al. (2015), questions and answers are combined using a set of manually written rules. The method of Wang and McAllester (2015) achieved the previous state of the art, but has significant complexity in terms of the feature set.

Space does not permit a full description of all models in this category, but see also Smith et al. (2015) and Narasimhan and Barzilay (2015).

Despite its relative lack of features, the Parallel-Hierarchical model improves upon the feature-engineered state of the art for MCTest by a small amount (about $1\%$ absolute) as detailed in Section 5.

3.2 Neural models

Neural models have, to date, performed relatively poorly on MCTest. This is because the dataset is sparse and complex.

Yin et al. (2016) investigated deep-learning approaches concurrently with the present work. They measured the performance of the Attentive Reader (Hermann et al., 2015) and the Neural Reasoner (Peng et al., 2015), both deep, end-to-end recurrent models with attention mechanisms, and also developed an attention-based convolutional network, the HABCNN. Their network operates on a hierarchy similar to our own, providing further evidence of the promise of hierarchical perspectives. Specifically, the HABCNN processes text at the sentence level and the snippet level, where the latter combines adjacent sentences (as we do through an $n$-gram input). Embedding vectors for the question and the answer candidates are combined and encoded by a convolutional network. This encoding modulates attention over sentence and snippet encodings, followed by max-pooling to determine the best matches between question, answer, and text. As in the present work, matching scores are given by cosine similarity. The HABCNN also makes use of a question classifier.

Despite the shared concepts between the HABCNN and our approach, the Parallel-Hierarchical model performs significantly better on MCTest (more than $15\%$ absolute) as detailed in Section 5. Other neural models tested in Yin et al. (2016) fare even worse.

4 The Parallel-Hierarchical Model

Let us now define our machine comprehension model in full. We first describe each of the perspectives separately, then describe how they are combined. Below, we use subscripts to index elements of sequences, like word vectors, and superscripts to indicate whether elements come from the text, question, or answer. In particular, we use the subscripts $k,m,n,p$ to index sequences from the text, question, answer, and hypothesis, respectively, and superscripts $t,q,a,h$ . We depict the model schematically in Figure 1.

4.1 Semantic Perspective

The semantic perspective is similar to the Memory Networks approach for embedding inputs into memory space (Weston et al., 2014). Each sentence of the text is a sequence of $d$-dimensional word vectors: $t_{j}=\{\mathbf{t}_{k}\}$, $\mathbf{t}_{k}\in\mathbb{R}^{d}$. The semantic vector $\mathbf{s}^{t}$ is computed by embedding the word vectors into a $D$-dimensional space using a two-layer network that implements a weighted sum followed by an affine transformation and a nonlinearity; i.e.,

$$
\mathbf{s}^{t}=f\left(\mathbf{A}^{t}\sum_{k}\omega_{k}\mathbf{t}_{k}+\mathbf{b}_{A}^{t}\right).
$$

Figure 1: Schematic of the Parallel-Hierarchical model. SW stands for “sliding window.” MLP represents a general neural network.

Here $\mathbf{A}^{t}\in\mathbb{R}^{D\times d}$ is a matrix, $\mathbf{b}_{A}^{t}\in\mathbb{R}^{D}$ is a bias vector, and $f$ is the leaky ReLU function. The scalar $\omega_{k}$ is a trainable weight associated with each word in the vocabulary. These scalar weights implement a kind of exogenous or bottom-up attention that depends only on the input stimulus (Mayer et al., 2004). They can, for example, learn to perform the function of stopword lists in a soft, trainable way, to nullify the contribution of unimportant filler words.

The semantic representation of a hypothesis is formed analogously, except that we combine the question word vectors $\mathbf{q}_{m}$ and answer word vectors $\mathbf{a}_{n}$ as a single sequence $\{\mathbf{h}_{p}\}=\{\mathbf{q}_{m},\mathbf{a}_{n}\}$. For the semantic vector $\mathbf{s}^{h}$ of the hypothesis, we use a distinct transformation matrix $\mathbf{A}^{h}\in\mathbb{R}^{D\times d}$ and bias vector $\mathbf{b}_{A}^{h}\in\mathbb{R}^{D}$.

These transformations map a text sentence and a hypothesis into a common space where they can be compared. We compute the semantic match between text sentence and hypothesis using the cosine similarity,

$$
M^{\mathrm{sem}}=\cos(\mathbf{s}^{t},\mathbf{s}^{h}).
$$
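
As a rough illustration of the semantic perspective, the following NumPy sketch computes $\mathbf{s}^{t}$, $\mathbf{s}^{h}$, and their cosine match $M^{\mathrm{sem}}$. The function names, the leaky ReLU slope of 0.01, the toy dimensions, and the random parameters are assumptions made for this example; the text above does not specify them.

```python
import numpy as np

def leaky_relu(x, slope=0.01):
    # slope is an assumed value; the paper only names the leaky ReLU
    return np.where(x > 0, x, slope * x)

def semantic_vector(word_vecs, word_weights, A, b):
    """s = f(A @ sum_k w_k * t_k + b) for one sentence or hypothesis.

    word_vecs: (num_words, d), word_weights: (num_words,),
    A: (D, d), b: (D,)
    """
    pooled = (word_weights[:, None] * word_vecs).sum(axis=0)  # weighted sum
    return leaky_relu(A @ pooled + b)                         # affine + nonlinearity

def cosine(u, v, eps=1e-8):
    # eps guards against division by zero for all-zero vectors
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v) + eps))

# Toy usage with random (untrained) parameters.
rng = np.random.default_rng(0)
d, D = 4, 3
text_sentence = rng.normal(size=(5, d))   # 5 words in a text sentence
hypothesis = rng.normal(size=(6, d))      # question words followed by answer words
A_t, b_t = rng.normal(size=(D, d)), np.zeros(D)
A_h, b_h = rng.normal(size=(D, d)), np.zeros(D)   # separate parameters for the hypothesis

s_t = semantic_vector(text_sentence, np.ones(5), A_t, b_t)
s_h = semantic_vector(hypothesis, np.ones(6), A_h, b_h)
m_sem = cosine(s_t, s_h)
```

In a trained model the per-word weights would be looked up from a vocabulary-sized table rather than set to one, so that filler words can be down-weighted.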

4.2 Word-by-Word Perspective

The first step in building the word-by-word perspective is to transform word vectors from a text sentence, question, and answer through respective neural functions. For the text, $\tilde{\mathbf{t}}_{k}=f\left(\mathbf{B}^{t}\mathbf{t}_{k}+\mathbf{b}_{B}^{t}\right)$, where $\mathbf{B}^{t}\in\mathbb{R}^{D\times d}$, $\mathbf{b}_{B}^{t}\in\mathbb{R}^{D}$, and $f$ is again the leaky ReLU. We transform the question and the answer to $\tilde{\mathbf{q}}_{m}$ and $\tilde{\mathbf{a}}_{n}$ analogously using distinct matrices and bias vectors. In contrast with the semantic perspective, we keep the question and answer candidates separate in the word-by-word perspective. This is because matches to answer words are inherently more important than matches to question words, and we want our model to learn to use this property.

4.2.1 Sentential

Inspired by the work of Wang and Jiang (2015) in paraphrase detection, we compute matches between hypotheses and text sentences at the word level. This computation uses the cosine similarity as before:

$$
\begin{aligned}
c_{km}^{q} &= \cos(\tilde{\mathbf{t}}_{k},\tilde{\mathbf{q}}_{m}),\\
c_{kn}^{a} &= \cos(\tilde{\mathbf{t}}_{k},\tilde{\mathbf{a}}_{n}).
\end{aligned}
$$

The word-by-word match between a text sentence and question is determined by taking the maximum over $k$ (finding the text word that best matches each question word) and then taking a weighted mean over $m$ (finding the average match over the full question):

$$
M^{q}=\frac{1}{Z}\sum_{m}\omega_{m}\max_{k}c_{km}^{q}.
$$

Here, $\omega_{m}$ is the word weight for the question word and $Z$ normalizes these weights to sum to one over the question. We define the match between a sentence and answer candidate, $M^{a}$ , analogously. Finally, we combine the matches to question and answer according to

$$
M^{\mathrm{word}}=\alpha_{1}M^{q}+\alpha_{2}M^{a}+\alpha_{3}M^{q}M^{a}.
$$

Here the $\alpha$ are trainable parameters that control the relative importance of the terms.
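
The sentential word-by-word match can be sketched in NumPy as follows. This is a minimal illustration, assuming the word vectors passed in have already been transformed (the $\tilde{\mathbf{t}}_{k}$, $\tilde{\mathbf{q}}_{m}$, $\tilde{\mathbf{a}}_{n}$ above); the function names and toy inputs are hypothetical.

```python
import numpy as np

def cosine_matrix(X, Y, eps=1e-8):
    """Pairwise cosine similarities; result[k, m] = cos(X[k], Y[m])."""
    Xn = X / (np.linalg.norm(X, axis=1, keepdims=True) + eps)
    Yn = Y / (np.linalg.norm(Y, axis=1, keepdims=True) + eps)
    return Xn @ Yn.T

def word_match(text_vecs, other_vecs, word_weights):
    """Weighted mean over question (or answer) words of the best text-word match."""
    c = cosine_matrix(text_vecs, other_vecs)   # c[k, m]
    best = c.max(axis=0)                        # max over text words k
    z = word_weights.sum()                      # Z normalizes the weights to sum to one
    return float((word_weights * best).sum() / z)

def combine_matches(m_q, m_a, alpha):
    """M_word = a1 * M_q + a2 * M_a + a3 * M_q * M_a."""
    a1, a2, a3 = alpha
    return a1 * m_q + a2 * m_a + a3 * m_q * m_a

# Toy usage: two text words, two question words, one answer word.
t = np.array([[1.0, 0.0], [0.0, 1.0]])
q = np.array([[1.0, 0.0], [1.0, 1.0]])
a = np.array([[0.0, 2.0]])
m_q = word_match(t, q, np.array([1.0, 1.0]))
m_a = word_match(t, a, np.array([1.0]))
m_word = combine_matches(m_q, m_a, alpha=(1.0, 1.0, 1.0))
```

The product term lets the score reward sentences that match the question and the answer at the same time, which a purely additive combination cannot express.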

4.2.2 Sequential Sliding Window

The sequential sliding window is related to the original MCTest baseline by Richardson et al. (2013). Our sliding window decays from its focus word according to a Gaussian distribution, which we extend by assigning a trainable weight to each location in the window. This modification enables the window to use information about the distance between word matches; the original baseline used distance information through a predefined function.

The sliding window scans over the words of the text as one continuous sequence, without sentence breaks. Each window is treated like a sentence in the previous subsection, but we include a location-based weight $\lambda(k)$ . This weight is based on a word’s position in the window, which, given a window, depends on its global position $k$ . The cosine similarity is adapted as

$$
s_{km}^{q}=\lambda(k)\cos(\tilde{\mathbf{t}}_{k},\tilde{\mathbf{q}}_{m}),
$$

for the question and analogously for the answer. We initialize the location weights with a Gaussian and fine-tune them during training. The final matching score, denoted as $M^{\mathrm{sws}}$, is computed as in (5) and (6), the expressions for $M^{q}$ and $M^{\mathrm{word}}$, with $s_{km}^{q}$ replacing $c_{km}^{q}$.
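
A small NumPy sketch of the location-weighted window follows. It assumes the cosine matrix between text words and question words is already available, uses a hypothetical window width and Gaussian standard deviation (neither is given in the text), and returns one question-match score per window position; how window scores are pooled afterwards is left out.

```python
import numpy as np

def gaussian_location_weights(width, sigma=1.0):
    """Initial location weights: a Gaussian centred on the window's middle word.

    width and sigma are assumed values for illustration.
    """
    offsets = np.arange(width) - (width - 1) / 2.0
    return np.exp(-0.5 * (offsets / sigma) ** 2)

def sliding_window_scores(cos_km, lam, word_weights):
    """Score every window position over a passage treated as one word sequence.

    cos_km: (K, M) cosines between each text word k and each question word m.
    lam: (W,) location weights; requires K >= W.
    """
    num_text_words, _ = cos_km.shape
    width = len(lam)
    z = word_weights.sum()
    scores = []
    for start in range(num_text_words - width + 1):
        # s[k, m] = lambda(k) * cos(t_k, q_m) for words inside this window
        s = lam[:, None] * cos_km[start:start + width]
        best = s.max(axis=0)                       # best in-window match per question word
        scores.append(float((word_weights * best).sum() / z))
    return np.array(scores)

lam = gaussian_location_weights(3)
cos_km = np.array([[0.0], [1.0], [0.0], [0.0]])   # one question word, four text words
scores = sliding_window_scores(cos_km, lam, np.array([1.0]))
```

In the toy input the matching word scores highest when it sits at the window centre and is discounted when it falls at the window edge, which is how the window encodes distance from the focus word.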

4.2.3 Dependency Sliding Window

The dependency sliding window operates identically to the sequential sliding window, but on a different view of the text passage. The output of this component is $M^{\mathrm{swd}}$, formed analogously to $M^{\mathrm{sws}}$.

The dependency perspective uses the Stanford Dependency Parser (Chen and Manning, 2014) as an auxiliary tool. Thus, the dependency graph can be considered a fixed feature. Moreover, linearization of the dependency graph, because it relies on an eigendecomposition, is not differentiable. However, we handle the linearization in data preprocessing so that the model sees only reordered word-vector inputs.

Specifically, we run the Stanford Dependency Parser on each text sentence to build a dependency graph. This graph has $n_{w}$ vertices, one for each word in the sentence. From the dependency graph we form the Laplacian matrix $\mathbf{L}\in\mathbb{R}^{n_{w}\times n_{w}}$ and determine its eigenvectors. The second eigenvector $\mathbf{u}_{2}$ of the Laplacian, the one associated with its second-smallest eigenvalue, is known as the Fiedler vector. It is the solution to the minimization

$$
\operatorname*{minimize}\sum_{i,j=1}^{N}\eta_{ij}(g(v_{i})-g(v_{j}))^{2},
$$

where $v_{i}$ are the vertices of the graph, $g(v_{i})$ is the position on the line assigned to vertex $v_{i}$, and $\eta_{ij}$ is the weight of the edge from vertex $i$ to vertex $j$ (Golub and Van Loan, 2012). The Fiedler vector maps a weighted graph onto a line such that connected nodes stay close, modulated by the connection weights. This enables us to reorder the words of a sentence based on their proximity in the dependency graph. The reordering of the words is given by the ordered index set

$$
I=\arg\mathrm{sort}(\mathbf{u}_{2}).
$$

To give an example of how this works, consider the following sentence from MCTest and its dependency-based reordering:

Sliding-window-based matching on the original sentence will answer the question Who called the police? with Mrs. Mustard. The dependency reordering enables the window to determine the correct answer, Jenny.
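
The Fiedler-vector reordering described in this subsection can be sketched with NumPy as below. The sketch assumes an undirected, connected graph with unit edge weights supplied as a list of index pairs; the edge list here is a toy example, not parser output, and the function name is hypothetical.

```python
import numpy as np

def fiedler_order(num_words, edges, weight=1.0):
    """Reorder word indices by the Fiedler vector of the graph Laplacian.

    edges: iterable of (i, j) word-index pairs, treated as undirected.
    weight: assumed uniform edge weight.
    """
    adjacency = np.zeros((num_words, num_words))
    for i, j in edges:
        adjacency[i, j] = weight
        adjacency[j, i] = weight
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    # eigh returns eigenvalues in ascending order for symmetric matrices,
    # so column 1 is the eigenvector of the second-smallest eigenvalue.
    _, eigvecs = np.linalg.eigh(laplacian)
    u2 = eigvecs[:, 1]
    return np.argsort(u2)

# Toy graph: a chain linking words 2 - 0 - 3 - 1.
order = fiedler_order(4, [(2, 0), (0, 3), (3, 1)])
```

Because an eigenvector is only defined up to sign, the chain may come back in either direction (2, 0, 3, 1 or 1, 3, 0, 2); a preprocessing pipeline would need some convention to fix the orientation, which the text does not describe.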

4.3 Combining Distributed Evidence

It is important in comprehension to synthesize information found throughout a document. MCTest was explicitly designed to ensure that it could not be solved by lexical techniques alone, but would instead require some form of inference or limited reasoning (Richardson et al., 2013). It therefore includes questions where the evidence for an answer spans several sentences.

To perform synthesis, our model also takes in $n$-grams of sentences, i.e., sentence pairs and triples strung together. The model treats these exactly as it does single sentences, applying all functions detailed above. A later pooling operation combines scores across all $n$-grams (including the single-sentence input). This is described in the next subsection.

With $n$-grams, the model can combine information distributed across contiguous sentences. In some cases, however, the required evidence is spread across distant sentences. To give our model some capacity to deal with this scenario, we take the top $N$ sentences as scored by all the preceding functions, and then repeat the scoring computations viewing these top $N$ as a single sentence.
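
The two ways of assembling multi-sentence inputs can be illustrated with plain Python. This is a sketch under stated assumptions: sentences are token lists, the per-sentence scores are given, and the selected top $N$ sentences are joined in document order (the text does not say which order is used). Function names are hypothetical.

```python
def sentence_ngrams(sentences, max_n=3):
    """Build contiguous sentence n-grams (singles, pairs, triples by default).

    sentences: list of token lists. Returns (start_index, n, merged_tokens) tuples.
    """
    ngrams = []
    for n in range(1, max_n + 1):
        for start in range(len(sentences) - n + 1):
            merged = [tok for sent in sentences[start:start + n] for tok in sent]
            ngrams.append((start, n, merged))
    return ngrams

def top_n_as_one(sentences, scores, top_n):
    """Join the top_n highest-scoring sentences into one pseudo-sentence."""
    ranked = sorted(range(len(sentences)), key=lambda j: scores[j], reverse=True)
    keep = sorted(ranked[:top_n])   # assumption: restore document order
    return [tok for j in keep for tok in sentences[j]]

sentences = [["a"], ["b"], ["c"]]
ngrams = sentence_ngrams(sentences)
merged = top_n_as_one(sentences, scores=[0.1, 0.9, 0.5], top_n=2)
```

Each merged token list would then be scored by the same perspectives as a single sentence, so no new parameters are needed to handle distributed evidence.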

The reasoning behind these approaches can be explained well in a probabilistic setting. If we consider our similarity scores to model the likelihood of a text sentence given a hypothesis, $p(t_{j}|h_{i})$, then the $n$-gram and top $N$ approaches model a joint probability $p(t_{j_{1}},t_{j_{2}},\ldots,t_{j_{k}}|h_{i})$. We cannot model the joint probability as a product of individual terms (score values) because distributed pieces of evidence are likely not independent.

4.4 Combining Perspectives

We use a multilayer perceptron to combine $M^{\mathrm{sem}}$ , $M^{\mathrm{word}}$ , $M^{\mathrm{swd}}$ , and $M^{\mathrm{sws}}$ as a final matc