Multi-Stream Keypoint Attention Network for Sign Language Recognition and Translation

Abstract

Sign language is a non-vocal means of communication that conveys information and meaning through gestures, facial expressions, and body movements. Most current approaches to sign language recognition (SLR) and translation rely on RGB video inputs, which are vulnerable to background variations. A keypoint-based strategy not only mitigates the effect of background changes but also substantially reduces the computational demands of the model. Nevertheless, contemporary keypoint-based methods fail to fully exploit the implicit knowledge embedded in keypoint sequences. To tackle this challenge, we draw inspiration from human cognition, which recognizes sign language by analyzing the interplay between gesture configurations and supplementary elements. We propose a multi-stream keypoint attention network to model keypoint sequences produced by an off-the-shelf keypoint estimator. To facilitate interaction across multiple streams, we investigate diverse methods such as keypoint fusion strategies, head fusion, and self-distillation. The resulting framework, denoted MSKA-SLR, is extended into a sign language translation (SLT) model by simply adding an extra translation network. We carry out comprehensive experiments on well-known benchmarks, namely Phoenix-2014, Phoenix-2014T, and CSL-Daily, to demonstrate the efficacy of our method. Notably, we achieve new state-of-the-art performance on the sign language translation task of Phoenix-2014T. The code and models are available at: https://github.com/sutwangyan/MSKA.

Keywords: Sign Language Recognition, Sign Language Translation, Self-Attention, Self-Distillation, Keypoint

1 Introduction

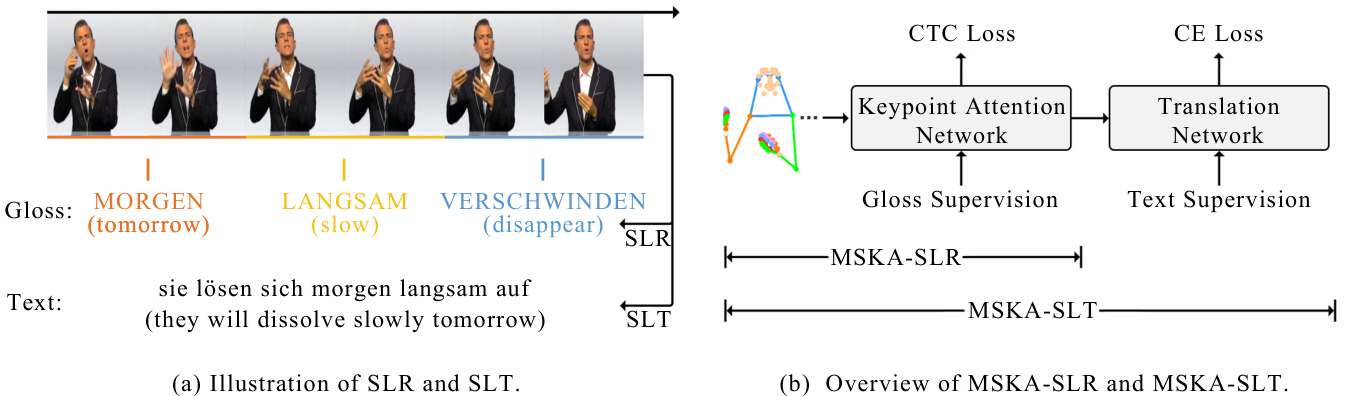

Sign language, a form of communication that uses gestures, expressions, and body movements, has been studied extensively Bungeroth and Ney (2004); Starner et al (1998); Tamura and Kawasaki (1988). For the deaf and mute community, sign language is the primary mode of communication. It is of profound significance, offering this community an effective medium to convey thoughts, emotions, and needs and thereby facilitating their active participation in social interactions. Sign language has a unique structure that incorporates the shape, direction, and placement of gestures, along with facial expressions. Its grammar differs from that of spoken language in both grammatical structure and word order. To bridge this gap, some sign language translation (SLT) approaches introduce gloss sequences as an intermediate step before text generation. The transition from visual input to gloss sequences constitutes sign language recognition (SLR). Fig. 1(a) depicts both the SLR and SLT tasks.

Gestures play a pivotal role in sign language recognition and translation. However, the hands occupy only a small portion of the video, which makes them vulnerable to background changes and to the fast hand movements of signing, and therefore makes sign language features hard to learn. Owing to their robustness and computational efficiency, some methods instead represent gestures with keypoints. Typically, keypoints are extracted from sign language videos with an off-the-shelf keypoint estimator; the keypoint sequences are then regionally cropped and used as model input, allowing a more precise focus on the characteristics of hand shapes. The TwoStream method, described in Chen et al (2022b), enhances feature extraction by converting keypoints into heatmaps and applying 3D convolution. SignBERT $^+$, detailed in Hu et al (2023a), represents hand keypoints as a graph and employs graph convolutional networks to extract gesture features. A key drawback of these approaches, however, is that they do not adequately exploit the correlations among keypoints.

To address this challenge, we introduce a network framework that relies entirely on the interplay among keypoints for sign language recognition and translation. Our method is inspired by the innate human tendency, when interpreting sign language, to focus on gesture configurations and on the dynamic interconnection between the hands and other body parts. The proposed multi-stream keypoint attention (MSKA) network can be extended to sign language translation by adding a supplementary translation network; the resulting system is designated MSKA-SLT, as illustrated in Fig. 1(b).

In summary, our contributions primarily consist of the following three aspects:

- To the best of our knowledge, we are the first to propose multi-stream keypoint attention built purely from attention modules, without manually designed traversal rules or graph topologies.

- We propose to decouple the keypoint sequences into four streams (left hand, right hand, face, and whole body), each focusing on a specific aspect of the skeleton sequence. By fusing different types of features, the model gains a more comprehensive understanding for sign language recognition and translation.

- We conducted extensive experiments to validate the proposed method, demonstrating encouraging improvements in sign language recognition tasks on the three prevalent benchmarks, i.e., Phoenix-2014 Koller et al (2015), Phoenix-2014T Camgoz et al (2018) and CSL-Daily Zhou et al (2021a). Moreover, we achieved new state-of-the-art performance in the translation task of Phoenix-2014T.

2 Related Work

2.1 Sign Language Recognition and Translation

Sign language recognition is a prominent research domain in the realm of computer vision, with the goal of deriving sign glosses through the analysis of video or image data. 2D CNNs are frequently utilized architectures in computer vision to analyze image data, and they have garnered extensive use in research pertaining to sign language recognition Cihan Camgoz et al (2017); Niu and Mak (2020); Cui et al (2019); Zhou et al (2021b); Hu et al (2023b,c); Guo et al (2023).

STMC Zhou et al (2021b) proposed a spatiotemporal multi-cue network to address visual sequence learning. CorrNet Hu et al (2023b) captures crucial body movement trajectories by analyzing correlation maps between consecutive frames; it uses 2D CNNs to extract image features, followed by a set of 1D CNNs to capture temporal characteristics. AdaBrowse Hu et al (2023c) introduced an adaptive model that dynamically selects the most informative subsequence of the input video by modeling this selection as a sequential decision task. CTCA Guo et al (2023) builds a dual-path network with two branches that perceive local and global temporal context, respectively. By extending 2D CNNs along the temporal dimension, 3D CNNs can process the spatio-temporal information in video data directly, which helps capture the dynamics of sign language movements and improves recognition accuracy Li et al (2020); Pu et al (2019); Chen et al (2022a). MMTLB Chen et al (2022a) uses a pre-trained S3D Xie et al (2018) network to extract features from sign language videos for recognition, followed by a translation network for sign language translation.

Recent gloss decoders have predominantly used either Hidden Markov Models (HMM) Koller et al (2017, 2018, 2020) or Connectionist Temporal Classification (CTC) Cheng et al (2020); Min et al (2021); Zhou et al (2021b), building on their success in automatic speech recognition. We opted for CTC because it is straightforward to implement. Since the CTC loss provides only weak, sentence-level supervision, Cui et al (2019); Zhou et al (2019); Chen et al (2022b) iteratively derive fine-grained pseudo-labels from CTC outputs to strengthen frame-level supervision. Additionally, Min et al (2021) achieve frame-level knowledge distillation by aligning the entire model with the visual encoder.

Fig. 1: (a) A sign language video from the Phoenix-2014T dataset, shown with its gloss sequence and the corresponding text. Sign language recognition (SLR) trains models to produce the gloss sequence corresponding to a sign language video, whereas sign language translation (SLT) generates the text corresponding to the video. (b) MSKA-SLT is built on top of MSKA-SLR to perform SLT. Keypoint sequences are shown in coordinate form.

In this study, our distillation process leverages the multi-stream architecture to incorporate ensemble knowledge into each individual stream, thereby improving interaction and coherence among the streams. Sign language translation (SLT) generates textual output directly from sign language videos. Many existing methods frame this task as a neural machine translation (NMT) problem, using a visual encoder to extract visual features and feeding them into a translation network for text generation Camgoz et al (2018, 2020b); Chen et al (2022a); Li et al (2020); Zhou et al (2021a); Xie et al (2018); Chen et al (2022b). We adopt mBART Liu et al (2020) as our translation network, given its strong performance in SLT Chen et al (2022a,b). To obtain satisfactory results, gloss supervision is commonly employed in SLT. This involves pre-training the multi-stream attention network on SLR Camgoz et al (2020b); Zhou et al (2021b,a) and jointly training SLR and SLT Zhou et al (2021b,a).

2.2 Introducing Keypoints into SLR and SLT

Exploiting keypoints effectively to improve SLR and SLT remains challenging. Camgoz et al (2020a) introduce a multi-channel transformer that models both inter- and intra-contextual relationships among distinct sign articulators within the transformer network while preserving channel-specific information. Papadimitriou and Potamianos (2020) present an end-to-end deep learning method based on the fusion of multiple spatio-temporal feature streams, together with a fully convolutional encoder-decoder for prediction. TwoStream-SLR Chen et al (2022b) proposes a dual-stream framework that incorporates domain knowledge such as hand shapes and body movements by modeling the raw video and the keypoint sequences separately; it uses off-the-shelf keypoint estimators to generate keypoint sequences and explores several techniques for interaction between the two streams. SignBERT $^+$ Hu et al (2023a) incorporates graph convolutional networks (GCN) into hand pose representations and combines them with a self-supervised pre-trained hand pose model to improve sign language understanding. It trains on large amounts of sign language data with multi-level masked modeling (at the joint, frame, and clip levels), capturing multi-level contextual information. $\mathrm{C^{2}}$SLR Zuo and Mak (2024) enforces consistency between the learned attention masks and pose keypoint heatmaps so that the visual module concentrates on informative regions.

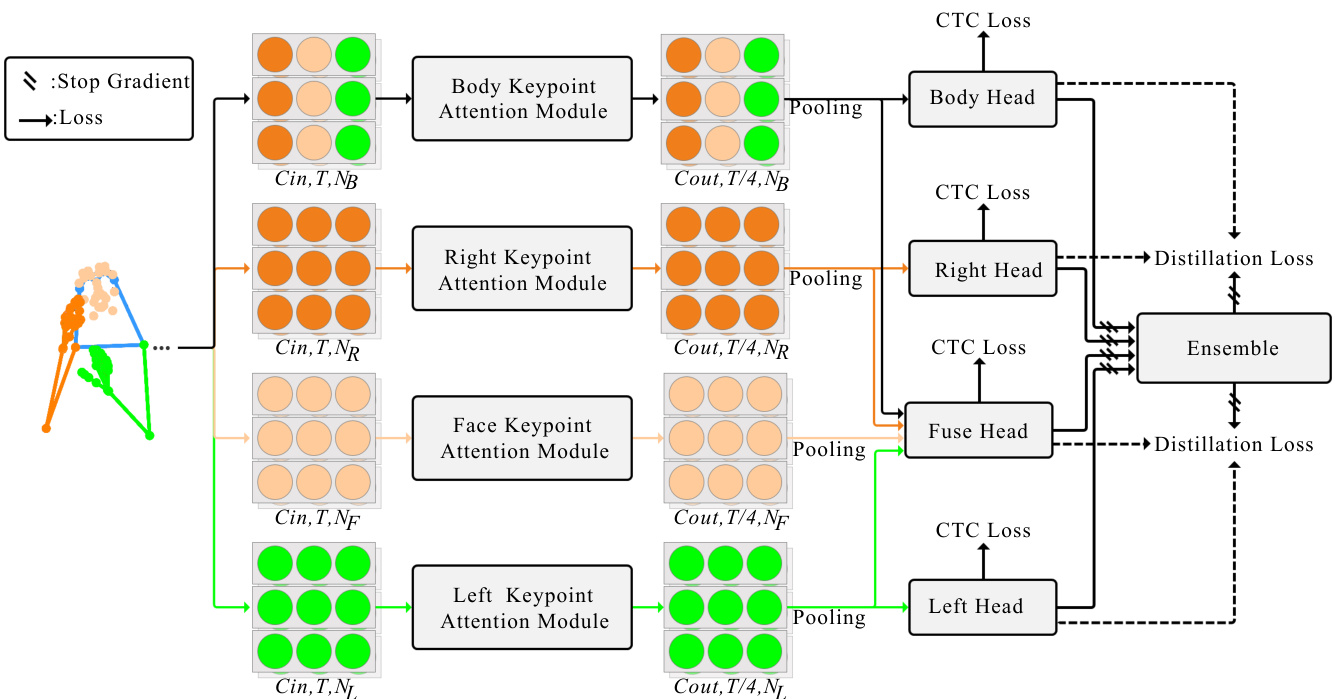

Fig. 2: The overview of our MSKA-SLR. The whole network is jointly supervised by the CTC losses and the self-distillation losses. Keypoints are represented in coordinate format.

2.3 Self-attention mechanism

Self-attention is the foundational component of the transformer architecture Vaswani et al (2017); Dai et al (2019) and a prevalent approach in natural language processing (NLP). It operates on a set of queries $Q$, keys $K$, and values $V$, each of dimensionality $C$, packed into matrices for efficient computation. The mechanism first computes the dot products between the queries and all keys, divides each by $\sqrt{C}$, and applies a softmax function to obtain the weights on the values Vaswani et al (2017). Mathematically, this process can be formulated as follows:

$$

\mathrm{Attention}(Q,K,V)=\mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{C}}\right)V

$$
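The formula above can be checked with a minimal NumPy sketch. The function names are ours, and the row-max subtraction inside the softmax is a standard numerical-stability step, not something the text specifies.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max before exponentiating for numerical stability.
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q: np.ndarray, K: np.ndarray, V: np.ndarray):
    """Q: (n_q, C), K: (n_k, C), V: (n_k, C_v). Returns (output, weights)."""
    C = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(C)        # query-key dot products divided by sqrt(C)
    weights = softmax(scores, axis=-1)   # each query's weights over the keys sum to 1
    return weights @ V, weights          # weighted sum of the values


rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))
K = rng.normal(size=(5, 8))
V = rng.normal(size=(5, 3))
out, w = scaled_dot_product_attention(Q, K, V)
print(out.shape)  # (4, 3)
```

If all keys are identical, every query assigns the uniform weight 1/5 to the five values, and each output row becomes the mean of $V$.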

2.4 Multi-Stream Networks

In this work, we model keypoint sequences directly with attention modules. To mitigate data scarcity and better capture glosses across different body parts, we further introduce multi-stream attention to drive meaningful extraction of local features. Modeling the interactions among distinct streams is challenging. I3D Carreira and Zisserman (2017) adopts late fusion, simply averaging the predictions of its two streams. Other approaches use early fusion, merging the intermediate features of each stream through lateral connections Feichtenhofer et al (2019), concatenation Zhou et al (2021b), or addition Cui et al (2019). In this study, we use lateral connections so that the streams complement one another. In addition, our self-distillation method integrates knowledge from multiple streams into the generated pseudo-targets, achieving a deeper interaction.

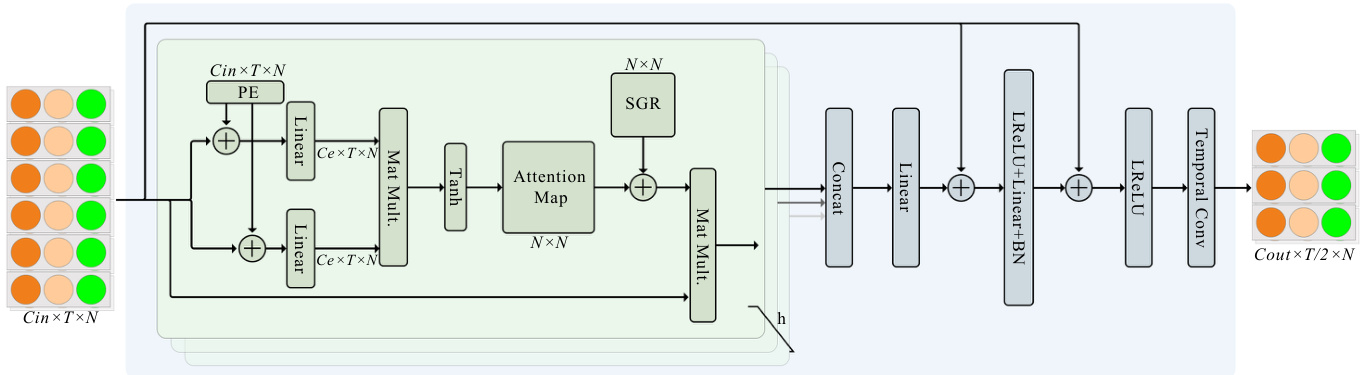

Fig. 3: Illustration of the attention module, using the body attention module as an example; the other attention modules are analogous. The green rounded rectangle represents a single-head self-attention module. There are $h$ self-attention heads in total, whose outputs are concatenated and fed into two linear layers to obtain the output. LReLU denotes leaky ReLU Maas et al (2013).

3 Proposed Method

In this section, we initially present the data augmentation techniques for keypoint sequences. Subsequently, we elaborate on the individual components of MSKA-SLR. Finally, we outline the composition of MSKA-SLT.

3.1 Keypoint augmentation

Sign language video datasets are typically small, which makes data augmentation important. Unlike prior works such as Guo et al (2023); Hu et al (2023c,b); Chen et al (2022b), our input consists of keypoint sequences, so we apply augmentations analogous to those used in image-related tasks directly to the keypoints. We use HRNet Wang et al (2020) to extract keypoints from sign language videos; the raw coordinates are expressed relative to the top-left corner of the image, with the positive $X$ and $Y$ axes pointing right and down, respectively. Before augmentation, we move the origin to the center of the image and normalize the coordinates with $((x/W,(H-y)/H)-0.5)/0.5$, so that the positive $X$ and $Y$ axes point right and up, respectively, and points inside the image fall in $[-1,1]$. Here $x$ and $y$ denote the coordinates of a point, and $H$ and $W$ denote the height and width of the image.
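The normalization can be sketched as follows, assuming coordinates are stored as an array whose last axis is $(x, y)$ in pixels; the function name and the 640×480 frame size are illustrative only.

```python
import numpy as np


def normalize_keypoints(xy: np.ndarray, W: float, H: float) -> np.ndarray:
    """Map pixel coordinates (origin top-left, y pointing down) to [-1, 1]
    with the origin at the image centre and y pointing up:
    ((x / W, (H - y) / H) - 0.5) / 0.5."""
    x = xy[..., 0] / W
    y = (H - xy[..., 1]) / H          # flip so that +Y points upwards
    return (np.stack([x, y], axis=-1) - 0.5) / 0.5


pts = np.array([[0.0, 0.0], [320.0, 240.0], [640.0, 480.0]])  # 640x480 frame
normed = normalize_keypoints(pts, W=640, H=480)
print(normed)
# top-left -> (-1, 1), centre -> (0, 0), bottom-right -> (1, -1)
```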

1) Temporal resampling: we adjust the temporal length of each keypoint sequence within the interval $[\times0.5\mathrm{-}\times1.5]$, selecting valid frames randomly.
2) Scaling: the coordinates of every point in the keypoint set are multiplied by a scaling factor.
3) Translation: the given translation vector is added to the coordinates of every point in the keypoint set.
4) Rotation: we construct a matrix from the rotation angle. Given a two-dimensional point $P(x,y)$, the rotated point $P^{\prime}(x^{\prime},y^{\prime})$ for a counterclockwise rotation by an angle $\theta$ about the origin is

$$

\begin{bmatrix} x^{\prime} \\ y^{\prime} \end{bmatrix}=\begin{bmatrix} \cos(\theta) & -\sin(\theta) \\ \sin(\theta) & \cos(\theta) \end{bmatrix}\begin{bmatrix} x \\ y \end{bmatrix}

$$

Here $\cos(\theta)$ and $\sin(\theta)$ are the cosine and sine of the rotation angle $\theta$. The matrix multiplication rotates the two-dimensional point $(x,y)$ counterclockwise about the origin by $\theta$, yielding the rotated point $(x^{\prime},y^{\prime})$.
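The four augmentations can be sketched in NumPy, assuming a sequence of normalized coordinates with shape `(T, N, 2)`. The text does not say how frames are chosen during temporal resampling; drawing them at random with replacement and keeping them in temporal order is our assumption, as are the function names and example parameters.

```python
import numpy as np


def temporal_resample(seq: np.ndarray, rng: np.random.Generator,
                      low: float = 0.5, high: float = 1.5) -> np.ndarray:
    """Change the length of a (T, N, 2) sequence by a random factor in [low, high].
    Assumption: frames are drawn at random with replacement, kept in temporal order."""
    T = seq.shape[0]
    new_T = max(1, int(round(T * rng.uniform(low, high))))
    idx = np.sort(rng.integers(0, T, size=new_T))
    return seq[idx]


def scale(seq: np.ndarray, s: float) -> np.ndarray:
    return seq * s                           # every coordinate times the factor


def translate(seq: np.ndarray, t) -> np.ndarray:
    return seq + np.asarray(t, dtype=float)  # same (tx, ty) added to every point


def rotate(seq: np.ndarray, theta: float) -> np.ndarray:
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])          # counterclockwise rotation about the origin
    return seq @ R.T                         # points are row vectors, so p' = p R^T


rng = np.random.default_rng(0)
seq = rng.uniform(-1.0, 1.0, size=(100, 79, 2))   # normalized (T, N, 2) coordinates
aug = rotate(translate(scale(temporal_resample(seq, rng), 1.1), (0.05, -0.02)),
             np.deg2rad(10.0))
print(aug.shape)  # (new_T, 79, 2) with 50 <= new_T <= 150
```

Only the $(x, y)$ channels are transformed here; if a confidence channel is carried along, it would be split off before these geometric steps.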

3.2 SLR

3.2.1 Keypoint decoupling

We observe that the different parts of the keypoint sequence of a single sign language video should convey the same semantic information. We therefore divide the keypoint sequence into four sub-sequences (left hand, right hand, face, and whole body) and process them independently. Markers of different colors in Fig. 2 denote the distinct keypoint sub-sequences. This decomposition helps the model capture the relationships between body parts more accurately and provides more diverse information. Handling the sub-sequences separately also lets the model focus on the key features of each part. As our experiments show, this keypoint decoupling strategy improves SLR predictions.
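Keypoint decoupling amounts to slicing the joint axis. The sketch below assumes a *hypothetical* ordering of the 79 selected keypoints (11 upper body, then 26 face, then 21 left hand, then 21 right hand); the real index layout is not given in the text. It also assumes that the whole-body stream keeps all 79 points.

```python
import numpy as np

# HYPOTHETICAL ordering of the 79 selected keypoints, for illustration only:
# [0, 11) upper body, [11, 37) face, [37, 58) left hand, [58, 79) right hand.
LEFT_HAND = slice(37, 58)
RIGHT_HAND = slice(58, 79)
FACE = slice(11, 37)


def decouple(x: np.ndarray) -> dict:
    """Split a (C, T, 79) keypoint tensor into four streams along the joint axis."""
    assert x.shape[-1] == 79, "expects the 79-keypoint subset"
    return {
        "left": x[..., LEFT_HAND],
        "right": x[..., RIGHT_HAND],
        "face": x[..., FACE],
        "body": x,  # assumption: the whole-body stream keeps all 79 keypoints
    }


streams = decouple(np.zeros((3, 64, 79)))
print({name: s.shape for name, s in streams.items()})
# {'left': (3, 64, 21), 'right': (3, 64, 21), 'face': (3, 64, 26), 'body': (3, 64, 79)}
```

Each stream is then passed to its own attention module, so the left-hand module sees only 21 joints and its attention map is 21×21.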

3.2.2 Keypoint attention module

We employ HRNet Wang et al (2020), trained on the COCO-WholeBody Jin et al (2020) dataset, to generate 133 keypoints covering the hands, mouth, eyes, and body trunk. Following Chen et al (2022b), we use a subset of 79 keypoints: 42 hand keypoints, 11 upper-body keypoints covering the shoulders, elbows, and wrists, and 26 facial keypoints (10 mouth keypoints and 16 others). Concretely, we denote the keypoint sequence as an array of shape $C\times T\times N$, where the $C$ channels of each entry are $\left[x_{t}^{n},y_{t}^{n},c_{t}^{n}\right]$: $(x_{t}^{n},y_{t}^{n})$ and $c_{t}^{n}$ denote the coordinates and the confidence of the $n$-th keypoint in the $t$-th frame, $T$ is the number of frames, and $N$ is the total number of keypoints.
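The $C\times T\times N$ layout can be produced from per-frame estimator output with a selection followed by a transpose. The index list below is *hypothetical*. We believe the standard COCO-WholeBody ordering is body 0-16, feet 17-22, face 23-90, left hand 91-111, and right hand 112-132, so we take body points 0-10 and the 42 hand points from that ordering. The 26 face indices are a placeholder run, not the subset the paper actually uses.

```python
import numpy as np

# HYPOTHETICAL 79-index selection into the 133 COCO-WholeBody keypoints, for
# illustration only: 11 body points, a placeholder run of 26 face points, and the
# 42 hand points. The actual face subset used by the paper is not reproduced here.
HYPOTHETICAL_KEEP = list(range(0, 11)) + list(range(23, 49)) + list(range(91, 133))


def to_model_input(raw: np.ndarray, keep_idx) -> np.ndarray:
    """raw: (T, 133, 3) estimator output, each row [x, y, confidence].
    Returns the selected keypoints as a (C=3, T, N) array."""
    sub = raw[:, keep_idx, :]              # (T, N, 3)
    return np.transpose(sub, (2, 0, 1))    # (3, T, N): channels are x, y, c


T = 16
raw = np.random.default_rng(0).random((T, 133, 3))
X = to_model_input(raw, HYPOTHETICAL_KEEP)
print(len(HYPOTHETICAL_KEEP), X.shape)  # 79 (3, 16, 79)
```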

Since the attention modules of all streams are analogous, we use the body keypoint attention module as an example. The complete attention module is depicted in Fig. 3, where the green rounded rectangle outlines the single-head attention computation. The input $\textbf{\textit{X}}\in\mathbb{R}^{C\times T\times N}$ is first enriched with spatial positional encodings and then projected by two linear mapping functions into embeddings in $\mathbb{R}^{C_{e}\times T\times N}$, where $C_{e}$ is usually smaller than $C_{out}$ to alleviate feature redundancy and reduce computational complexity. The attention map is then subjected to spatial global regularization. Note that when computing the attention map, we use the Tanh activation function instead of the softmax used in Vaswani et al (2017): the output of Tanh is not restricted to positive values, which allows negative correlations and provides more flexibility Shi et al (2020). Finally, the attention map is element-wise multiplied with the original input to obtain the output features.

To let the model jointly attend to information from different representation subspaces, the module performs attention with $h$ heads. The outputs of all heads are concatenated and mapped to the output space. Following Vaswani et al (2017), we add a feed-forward layer at the end to generate the final output, using leaky ReLU Maas et al (2013) as the non-linear activation function. The module also includes two residual connections, which stabilize network training and integrate different features, as illustrated in Fig. 3. Finally, we employ a 2D convolution to extract temporal features. Everything inside the blue rounded rectangle constitutes one complete keypoint attention module. Note that the weights of different keypoint attention modules are not shared.
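The following NumPy forward pass is a minimal sketch of one keypoint attention module. It is written loosely in the spirit of the Tanh-based spatial attention of Shi et al (2020), but it is not the authors' implementation. Several details are our assumptions:

- pooling the joint-to-joint affinities over channels and frames and dividing by $C_e \cdot T$;
- the leaky-ReLU slope of 0.01;
- where the residuals sit;
- how the $N\times N$ map is applied. The text calls this step element-wise multiplication. Because the map is $N\times N$ and the input is $C\times T\times N$, we apply it as a product over the joint axis.

Positional encoding and the temporal 2D convolution are omitted.

```python
import numpy as np


def leaky_relu(z: np.ndarray, slope: float = 0.01) -> np.ndarray:
    return np.where(z > 0, z, slope * z)


def attention_head(x, Wq, Wk, A_global, alpha=1.0):
    """One spatial attention head (sketch).
    x: (C, T, N); Wq, Wk: (Ce, C); A_global: (N, N) shared learnable matrix."""
    q = np.einsum("ec,ctn->etn", Wq, x)   # query embedding, (Ce, T, N)
    k = np.einsum("ec,ctn->etn", Wk, x)   # key embedding, (Ce, T, N)
    Ce, T = q.shape[0], q.shape[1]
    # Joint-to-joint affinities pooled over channels and frames. Tanh (not softmax)
    # keeps the sign, so negative correlations between joints are representable.
    A = alpha * np.tanh(np.einsum("etn,etm->nm", q, k) / (Ce * T)) + A_global
    # Re-weight the input over the joint axis with the (N, N) map.
    return np.einsum("ctn,nm->ctm", x, A)


def keypoint_attention_module(x, heads, W_out, W_ff1, W_ff2):
    """heads: list of (Wq, Wk, A_global); W_out: (C, h*C); W_ff1: (F, C); W_ff2: (C, F)."""
    multi = np.concatenate([attention_head(x, *p) for p in heads], axis=0)  # (h*C, T, N)
    y = x + leaky_relu(np.einsum("dc,ctn->dtn", W_out, multi))     # residual connection 1
    hidden = leaky_relu(np.einsum("fc,ctn->ftn", W_ff1, y))        # feed-forward, (F, T, N)
    return y + np.einsum("cf,ftn->ctn", W_ff2, hidden)             # residual connection 2


rng = np.random.default_rng(1)
C, Ce, T, N, h, F = 3, 4, 5, 6, 2, 8
x = rng.normal(size=(C, T, N))
heads = [(rng.normal(size=(Ce, C)), rng.normal(size=(Ce, C)), np.zeros((N, N)))
         for _ in range(h)]
y = keypoint_attention_module(x, heads, rng.normal(size=(C, h * C)),
                              rng.normal(size=(F, C)), rng.normal(size=(C, F)))
print(y.shape)  # (3, 5, 6)
```

As a sanity check, zero query/key projections make the Tanh term vanish, so a head with an identity global matrix returns its input unchanged.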

3.2.3 Position encoding

The keypoint sequences are arranged into a tensor and fed into the neural network. Because the elements of this tensor have no predetermined order or structure, we need a positional encoding mechanism that gives every joint a unique label. Following Vaswani et al (2017); Shi et al (2020), we use sine and cosine functions of different frequencies as encoding functions:

$$

\begin{aligned}
PE(p,2i)&=\sin\left(p/10000^{2i/C_{in}}\right)\\
PE(p,2i+1)&=\cos\left(p/10000^{2i/C_{in}}\right)
\end{aligned}

$$

where $p$ is the position of the element and $i$ indexes the dimensions of the positional encoding vector. Positional encoding allows the model to capture the position of each element in the sequence: its periodic nature gives distinct positions different representations, which helps the model understand the relative positions of elements. Joints within a single frame are encoded sequentially, while the same joint in different frames shares a common encoding. Note that, unlike Shi et al (2020), we introduce positional encoding only for the spatial dimension. Temporal features are extracted with 2D convolution, which already accounts for temporal continuity, so no additional temporal encoding is needed.
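The spatial-only encoding can be sketched as below. It assumes an even channel count $C_{in}$ so that the sine and cosine terms pair up; the function name is ours. The encoding depends only on the joint index and is broadcast over frames, so the same joint receives the same code in every frame.

```python
import numpy as np


def joint_positional_encoding(N: int, C_in: int) -> np.ndarray:
    """Sinusoidal encoding of the joint index p = 0..N-1 (spatial only).
    Returns shape (C_in, 1, N): the singleton axis broadcasts over frames,
    so the same joint gets the same code in every frame."""
    assert C_in % 2 == 0, "sin/cos pairs need an even channel count"
    p = np.arange(N)[:, None]                       # (N, 1) joint positions
    i = np.arange(C_in // 2)[None, :]               # (1, C_in/2) pair index
    angle = p / np.power(10000.0, 2 * i / C_in)     # (N, C_in/2)
    pe = np.zeros((N, C_in))
    pe[:, 0::2] = np.sin(angle)                     # PE(p, 2i)
    pe[:, 1::2] = np.cos(angle)                     # PE(p, 2i + 1)
    return pe.T[:, None, :]


pe = joint_positional_encoding(N=79, C_in=64)
x = np.zeros((64, 32, 79)) + pe                     # (C_in, T, N) features + PE
print(pe.shape, np.allclose(x[:, 0], x[:, 31]))      # (64, 1, 79) True
```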

3.2.4 Spatial global regularization

For action detection on skeleton data, the underlying idea is to exploit known information: each joint of the human body has unique physical or semantic attributes that remain invariant across all frames and data instances. Building on this prior, spatial global regularization encourages the model to learn more general attention patterns and thus to adapt better to diverse data samples. It is implemented with a global attention matrix of size $N\times N$ that represents the universal relationships among the body joints. This global attention matrix is shared across all data instances and optimized jointly with the other network parameters during training.
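The sharing of the global matrix can be illustrated with broadcasting. In this sketch, zero initialization of the global matrix and the random per-sample maps are assumptions for illustration. Because a single matrix is added to every sample's map, its gradient is the upstream gradient summed over the batch, which is how it comes to encode relationships common to all samples.

```python
import numpy as np


def regularize(sample_maps: np.ndarray, A_global: np.ndarray) -> np.ndarray:
    """sample_maps: (B, N, N) data-dependent attention maps, one per sample.
    A_global: (N, N) single learnable matrix shared by all samples.
    Broadcasting adds the same global joint-relationship prior to every map."""
    return sample_maps + A_global[None, :, :]


def grad_wrt_global(upstream: np.ndarray) -> np.ndarray:
    """Because one matrix is added to every sample, the gradient that reaches
    A_global is the upstream gradient summed over the batch."""
    return upstream.sum(axis=0)


B, N = 4, 79
rng = np.random.default_rng(0)
maps = np.tanh(rng.normal(size=(B, N, N)))
A_global = np.zeros((N, N))            # assumed init; learned with the other parameters
out = regularize(maps, A_global)
g = grad_wrt_global(np.ones((B, N, N)))
print(out.shape, g[0, 0])              # (4, 79, 79) 4.0
```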

3.2.5 Head Network

The output feature of the final attention block is spatially pooled to $T/4\times256$ and then fed into the head network shown in Fig. 2. The primary objective of the head network is to further capture temporal context. It consists of a temporal linear layer, a batch normalization layer, and a ReLU layer, followed by a temporal convolutional block containing two temporal convolutional layers (kernel size 3, stride 1), a linear translation layer, and another ReLU layer. The resulting feature, called the gloss representation, has dimensions $T/4\times512$. A linear classifier and a softmax function are then used to obtain gloss probabilities. We use the connectionist temporal classification (CTC) loss $\mathcal{L}_{CTC}^{body}$ to optimize the body attention module.
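To make the CTC objective concrete, the sketch below computes the CTC negative log-likelihood of one gloss sequence with the standard forward (alpha) recursion in log space. It assumes per-frame log-probabilities from the classifier, with index 0 as the blank. In practice a library implementation such as PyTorch's `torch.nn.CTCLoss` would normally be used; this version only shows what that loss computes.

```python
import numpy as np


def ctc_neg_log_likelihood(log_probs: np.ndarray, target, blank: int = 0) -> float:
    """log_probs: (T, V) per-frame log-softmax over the gloss vocabulary (incl. blank).
    target: gloss indices without blanks. Returns -log p(target | input)."""
    ext = [blank]
    for g in target:                        # interleave blanks: b g1 b g2 ... b
        ext += [g, blank]
    S, T = len(ext), log_probs.shape[0]
    alpha = np.full((T, S), -np.inf)        # log forward variables
    alpha[0, 0] = log_probs[0, ext[0]]      # start with a blank ...
    if S > 1:
        alpha[0, 1] = log_probs[0, ext[1]]  # ... or with the first gloss
    for t in range(1, T):
        for s in range(S):
            cands = [alpha[t - 1, s]]                   # stay on the same symbol
            if s >= 1:
                cands.append(alpha[t - 1, s - 1])       # advance by one
            # Skipping a blank is allowed only between two different glosses.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                cands.append(alpha[t - 1, s - 2])
            alpha[t, s] = np.logaddexp.reduce(cands) + log_probs[t, ext[s]]
    end = [alpha[T - 1, S - 1]]             # finish on the trailing blank ...
    if S > 1:
        end.append(alpha[T - 1, S - 2])     # ... or on the last gloss
    return -float(np.logaddexp.reduce(end))


lp = np.log(np.full((2, 2), 0.5))   # T=2 frames, vocabulary {0: blank, 1: gloss}
print(round(ctc_neg_log_likelihood(lp, [1]), 4))  # 0.2877 (= -log 0.75)
```

With two frames and uniform probabilities, three alignments collapse to the single gloss `[1]` (`1 1`, `0 1`, `1 0`), giving probability 0.75. A repeated gloss `[1, 1]` needs a blank in between and therefore at least three frames, so its loss is infinite here.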

3.2.6 Fuse Head and Ensemble

Each keypoint attention module has its own head network. To fully exploit our multi-stream architecture, we add an auxiliary fuse head that integrates the outputs of the different streams. The fuse head has the same configuration as the other heads, such as the body head, and is likewise supervised by a CTC loss, denoted $\mathcal{L}_{CTC}^{fuse}$. The predicted frame-level gloss probabilities are averaged, and the resulting ensemble is used to produce the gloss sequence. As the experiments show, this ensemble of the outputs of multiple streams improves predictions.
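The ensemble step can be sketched as averaging the per-frame probability matrices of the heads and decoding the result. The text does not specify the decoder. Greedy best-path CTC decoding (take the per-frame argmax, merge repeats, drop blanks) is used here as a simple stand-in; beam search is a common alternative. Index 0 is assumed to be the blank.

```python
import numpy as np


def ensemble_probs(stream_probs) -> np.ndarray:
    """Average per-frame gloss probabilities from several heads, each (T, V)."""
    return np.mean(np.stack(stream_probs, axis=0), axis=0)


def ctc_greedy_decode(probs: np.ndarray, blank: int = 0) -> list:
    """Best-path decoding: argmax per frame, merge repeats, then drop blanks."""
    best = probs.argmax(axis=1)
    out, prev = [], None
    for g in best:
        if g != prev and g != blank:
            out.append(int(g))
        prev = g
    return out


left = np.array([[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.1, 0.3, 0.6]])
fuse = np.array([[0.5, 0.4, 0.1], [0.3, 0.6, 0.1], [0.2, 0.2, 0.6]])
avg = ensemble_probs([left, fuse])
print(ctc_greedy_decode(avg))  # [1, 2]
```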

3.2.7 Self-Distillation

3.2.7 自蒸馏

We adopt frame-level self-distillation Chen et al (2022b), which uses the predicted frame gloss probabilities as pseudo-targets. In addition to the coarse-grained CTC loss, this provides extra fine-grained supervision. Following our multi-stream design, we use the average gloss probability of the four head networks as the pseudo-target that guides the learning of each stream. Formally, we minimize the KL divergence between the pseudo-targets and the predictions of each of the four head networks. We call this the frame-level self-distillation loss, since it not only provides frame-level supervision but also distills knowledge from the final ensemble into each individual stream.

采用帧级自蒸馏 (Chen et al, 2022b) 方法,将预测的帧手语词概率作为伪目标。除了粗粒度的 CTC (Connectionist Temporal Classification) 损失外,还提供了细粒度的额外监督。根据多流设计,我们使用四个头网络的平均手语词概率作为伪目标来指导每个流的学习过程。正式而言,我们最小化伪目标与四个头网络预测之间的 KL (Kullback-Leibler) 散度。这一过程被称为帧级自蒸馏损失,它不仅提供帧级监督,还将最终集成模型的知识蒸馏到每个独立流中。
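The function below is a minimal NumPy sketch of the frame-level self-distillation term $\mathcal{L}_{Dist}$. The KL direction (pseudo-target first), the reduction (mean over frames, sum over the four heads), and the absence of a temperature are assumptions, since the text does not specify them. In training, the pseudo-target would be detached from the computation graph so that gradients flow only through each head's own prediction.

```python
import numpy as np

def frame_self_distillation_loss(head_probs, eps=1e-8):
    """head_probs: (H, T, V) frame gloss probabilities from H heads
    (e.g. left, right, body, fuse). Returns sum over heads of the
    frame-averaged KL(pseudo_target || head)."""
    head_probs = np.asarray(head_probs, dtype=float)
    target = head_probs.mean(axis=0)   # (T, V) pseudo-target; treated as a constant in training
    kl = (target * (np.log(target + eps) - np.log(head_probs + eps))).sum(axis=-1)  # (H, T)
    return kl.mean(axis=1).sum()

# Four toy heads, one frame, three classes; disagreeing heads give a positive loss.
heads = np.array([[[0.9, 0.05, 0.05]], [[0.1, 0.8, 0.1]], [[0.3, 0.3, 0.4]], [[0.5, 0.25, 0.25]]])
print(frame_self_distillation_loss(heads))
```

The loss vanishes only when every head already agrees with the ensemble average, so it pulls each stream toward the consensus of all four.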

3.2.8 Loss Function

3.2.8 损失函数

The overall loss of MSKA-SLR consists of two parts: 1) the CTC losses applied to the outputs of the left stream ($\mathcal{L}_{CTC}^{left}$), right stream ($\mathcal{L}_{CTC}^{right}$), body stream ($\mathcal{L}_{CTC}^{body}$), and fuse stream ($\mathcal{L}_{CTC}^{fuse}$); and 2) the distillation loss ($\mathcal{L}_{Dist}$). We formulate the recognition loss as follows:

MSKA-SLR的整体损失由两部分组成:1) 应用于左流 ($\mathcal{L}_{CTC}^{left}$)、右流 ($\mathcal{L}_{CTC}^{right}$)、身体流 ($\mathcal{L}_{CTC}^{body}$) 和融合流 ($\mathcal{L}_{CTC}^{fuse}$) 输出的CTC损失;2) 蒸馏损失 ($\mathcal{L}_{Dist}$)。我们将识别损失公式化如下:

$$
\mathcal{L}_{SLR}=\mathcal{L}_{CTC}^{left}+\mathcal{L}_{CTC}^{right}+\mathcal{L}_{CTC}^{body}+\mathcal{L}_{CTC}^{fuse}+\mathcal{L}_{Dist}
$$

$$
\mathcal{L}_{SLR}=\mathcal{L}_{CTC}^{left}+\mathcal{L}_{CTC}^{right}+\mathcal{L}_{CTC}^{body}+\mathcal{L}_{CTC}^{fuse}+\mathcal{L}_{Dist}
$$
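Each CTC term in the recognition loss is the negative log-likelihood of the target gloss sequence, summed over all frame-level alignments that collapse to it. The pure-Python sketch below computes this with the standard CTC forward algorithm and then adds the four CTC terms and the distillation term as in $\mathcal{L}_{SLR}$. It works in probability space for readability; practical implementations work in log space to avoid underflow on long sequences. The function names and the blank index 0 are assumptions.

```python
import math

def ctc_nll(probs, target, blank=0):
    """Negative log-likelihood of `target` under CTC via the forward algorithm.
    probs: T rows of per-frame class probabilities; target: gloss ids (no blanks)."""
    ext = [blank]
    for g in target:
        ext += [g, blank]                      # interleave blanks: _ g1 _ g2 _ ...
    S, T = len(ext), len(probs)
    alpha = [probs[0][blank]] + [0.0] * (S - 1)
    if S > 1:
        alpha[1] = probs[0][ext[1]]
    for t in range(1, T):
        new = [0.0] * S
        for s in range(S):
            a = alpha[s] + (alpha[s - 1] if s >= 1 else 0.0)
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                a += alpha[s - 2]              # skip a blank between two distinct glosses
            new[s] = a * probs[t][ext[s]]
        alpha = new
    p = alpha[-1] + (alpha[-2] if S > 1 else 0.0)   # end on last gloss or trailing blank
    return -math.log(p)

def recognition_loss(head_probs, target, distill_loss):
    """L_SLR: CTC losses of the left, right, body and fuse heads plus L_Dist."""
    return sum(ctc_nll(p, target) for p in head_probs) + distill_loss

# T=2 frames, classes {0: blank, 1: gloss}; valid paths "11", "1_", "_1".
probs = [[0.4, 0.6], [0.3, 0.7]]
print(math.exp(-ctc_nll(probs, [1])))   # 0.42 + 0.18 + 0.28 = 0.88 up to rounding
```

The skip rule is what lets CTC emit two different glosses on consecutive frames while still requiring a blank between repeated glosses.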

We have now introduced all components of MSKA-SLR. Once training is complete, MSKA-SLR predicts the gloss sequence using the fuse head network.

截至目前,我们已经介绍了MSKA-SLR的所有组件。训练完成后,MSKA-SLR能够通过融合头网络预测手语词序列。

3.3 SLT

3.3 SLT

Previous methods commonly framed sign language translation (SLT) as a neural machine translation (NMT) problem in which the input to the translation network is visual information. We follow this approach: we append a multi-layer perceptron (MLP) with two hidden layers, followed by a translation network, to the proposed MSKA-SLR framework to perform SLT. The resulting network is named MSKA-SLT, and its architecture is illustrated in Fig. 1(b). We use mBART Liu et al (2020) as the translation network because of its strong performance on cross-lingual translation tasks. To fully exploit our multi-stream architecture, we attach the MLP and the translation network to the fuse head. The input to the MLP is the features encoded by the fuse head network, namely the gloss representations. The translation loss is the standard sequence-to-sequence cross-entropy loss Vaswani et al (2017). The overall MSKA-SLT loss combines the recognition loss (Eq. 4) and the translation loss $\mathcal{L}_{T}$:

传统方法常将手语翻译(SLT)任务视为神经机器翻译(NMT)问题,其翻译网络的输入是视觉信息。本研究沿用这一思路,在提出的MSKA-SLR框架后添加一个具有两个隐藏层的多层感知机(MLP)及翻译网络,从而完成SLT。按此方式构建的网络命名为MSKA-SLT,其架构如图1(b)所示。我们选择mBART (Liu et al, 2020) 作为翻译网络,因其在跨语言翻译任务中表现优异。为充分发挥我们设计的多流架构,我们在融合头后添加了MLP和翻译网络。MLP的输入为融合头网络编码的特征,即手语词表征。翻译损失采用标准的序列到序列交叉熵损失 (Vaswani et al, 2017)。MSKA-SLT的损失包含公式4所示的识别损失和翻译损失 $\mathcal{L}_{T}$,具体如下:

$$
\mathcal{L}_{SLT}=\mathcal{L}_{SLR}+\mathcal{L}_{T}
$$

$$
\mathcal{L}_{SLT}=\mathcal{L}_{SLR}+\mathcal{L}_{T}
$$
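The sketch below illustrates the MSKA-SLT wiring under stated assumptions: a two-hidden-layer MLP maps the $T/4\times512$ gloss representations to 1024-dimensional vectors (the hidden size of mBART-large) that would serve as the translation network's input embeddings, and the translation loss is token-level cross-entropy. The hidden widths, the ReLU activations, the random placeholder decoder logits, the 50-token toy vocabulary, and the placeholder recognition-loss value are all hypothetical; mBART itself is not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(0)

def mlp_bridge(gloss_repr, weights):
    """Two-hidden-layer MLP: (T/4, 512) gloss representations -> (T/4, 1024) embeddings."""
    h = gloss_repr
    for i, (w, b) in enumerate(weights):
        h = h @ w + b
        if i < len(weights) - 1:               # ReLU after each hidden layer, linear output
            h = np.maximum(h, 0.0)
    return h

def token_cross_entropy(logits, target_ids):
    """Mean sequence-to-sequence cross-entropy over target tokens (teacher forcing)."""
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(target_ids)), target_ids].mean()

dims = [512, 1024, 1024, 1024]   # input, hidden 1, hidden 2, mBART-large d_model (widths assumed)
weights = [(rng.normal(0, 0.02, (a, b)), np.zeros(b)) for a, b in zip(dims[:-1], dims[1:])]
embeds = mlp_bridge(rng.normal(size=(20, 512)), weights)   # would be fed to mBART's encoder

logits = rng.normal(size=(7, 50))          # placeholder decoder logits: 7 tokens, 50-token vocab
target = rng.integers(0, 50, size=7)       # placeholder reference token ids
loss_T = token_cross_entropy(logits, target)
loss_SLR = 3.2                             # placeholder recognition-loss value
loss_SLT = loss_SLR + loss_T               # L_SLT = L_SLR + L_T
print(embeds.shape, loss_SLT)
```

Because the MLP's output width matches the translation model's embedding size, the pretrained translation network can consume the sign features in place of token embeddings without architectural changes.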

4 Experiments

4 实验

Implementation Details

实现细节

To demonstrate the generalization of our method, we use the same configuration for all experiments unless otherwise specified. The network employs four streams, each consisting of 8 attention blocks with 6 attention heads per block. The output channels of the eight blocks are 64, 64, 128, 128, 256, 256, 256, and 256, respectively. For SLR, we use the Adam optimizer with a weight decay of $1e-3$ and an initial learning rate of $1e-3$, together with a cosine annealing schedule over 100 epochs. The batch size is 8. Following Chen et al (2022a,b), we initialize the translation network with mBART-large-cc25 pretrained on CC25. During inference, we use a beam width of 5 for both the CTC decoder and the SLT decoder. For SLT, we train for 40 epochs with an initial learning rate of $1e-3$ for the MLP and $1e-5$ for MSKA-SLR and the translation network in MSKA-SLT; the other hyper-parameters are the same as for MSKA-SLR. All models are trained on a single NVIDIA RTX 3090 GPU.

为验证方法的泛化性,除非另有说明,所有实验均保持相同配置。网络采用四流架构,每流包含8个注意力块,每块配置6个注意力头。输出通道数依次设置为:64、64、128、128、256、256、256和256。针对手语识别(SLR)任务,我们采用100个周期的余弦退火调度,使用权重衰减为$1e-3$的Adam优化器,初始学习率为$1e-3$,批大小设为8。参照Chen等人(2022a,b)的方案,翻译网络采用在CC25上预训练的mBART-large-cc25进行初始化。推理阶段CTC解码器与手语翻译(SLT)解码器的束宽均设为5。针对SLT任务,共训练40个周期,多层感知器(MLP)初始学习率为$1e-3$,MSKA-SLT中MSKA-SLR及翻译网络的初始学习率为$1e-5$。其余超参数与MSKA-SLR保持一致。所有模型均在单块NVIDIA RTX 3090 GPU上完成训练。
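For reference, the hyper-parameters above can be collected into a single configuration together with a cosine annealing learning-rate function. The dictionary keys are hypothetical names, and the schedule assumes per-epoch updates, a minimum learning rate of 0, and no warm-up, none of which is stated in the text.

```python
import math

# Values are taken from the text; the key names are hypothetical.
CONFIG = {
    "num_streams": 4,
    "blocks_per_stream": 8,
    "attention_heads": 6,
    "out_channels": [64, 64, 128, 128, 256, 256, 256, 256],
    "slr": {"epochs": 100, "optimizer": "Adam", "lr": 1e-3, "weight_decay": 1e-3, "batch_size": 8},
    "slt": {"epochs": 40, "lr_mlp": 1e-3, "lr_slr_and_translator": 1e-5},
    "beam_width": 5,
    "translation_init": "mBART-large-cc25",
}

def cosine_lr(epoch, total_epochs, base_lr, min_lr=0.0):
    """Cosine annealing from base_lr at epoch 0 down to min_lr at epoch total_epochs."""
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * epoch / total_epochs))

slr = CONFIG["slr"]
schedule = [cosine_lr(e, slr["epochs"], slr["lr"]) for e in range(slr["epochs"])]
print(schedule[0], schedule[50], schedule[-1])
```

The learning rate starts at $1e-3$, passes half that value at the midpoint, and decays smoothly toward zero by the final epoch.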