DFORMER: RETHINKING RGBD REPRESENTATION LEARNING FOR SEMANTIC SEGMENTATION

ABSTRACT

We present DFormer, a novel RGB-D pretraining framework for learning transferable representations for RGB-D segmentation tasks. DFormer has two key innovations: 1) unlike previous works that encode RGB-D information with an RGB-pretrained backbone, we pretrain the backbone on image-depth pairs from ImageNet-1K, so DFormer is endowed with the capacity to encode RGB-D representations; 2) DFormer comprises a sequence of RGB-D blocks, which are tailored to encode both RGB and depth information through a novel building block design. DFormer thereby avoids the mismatched encoding of the 3D geometry relationships in depth maps by RGB-pretrained backbones, a problem that is widespread in existing methods but has not been resolved. We finetune the pretrained DFormer with a lightweight decoder head on two popular RGB-D tasks, i.e., RGB-D semantic segmentation and RGB-D salient object detection. Experimental results show that DFormer achieves new state-of-the-art performance on both tasks, across two RGB-D semantic segmentation datasets and five RGB-D salient object detection datasets, with less than half of the computational cost of the current best methods. Our code is available at: https://github.com/VCIP-RGBD/DFormer.

1 INTRODUCTION

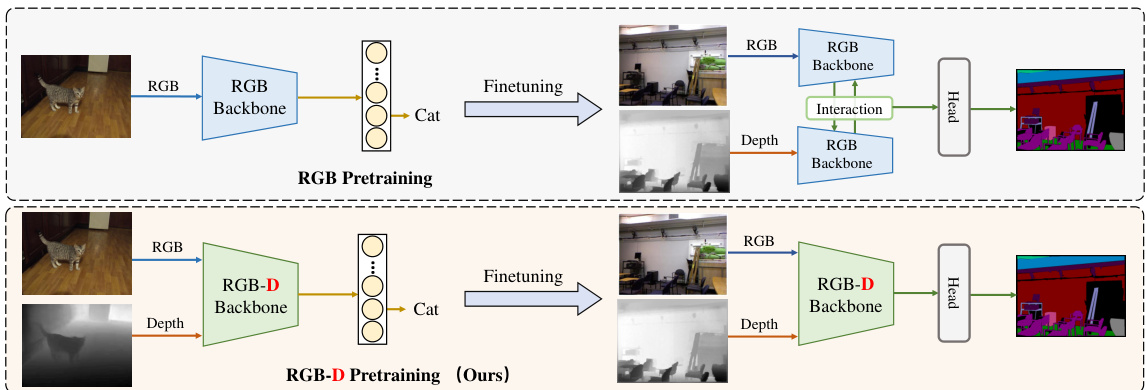

Figure 1: Comparisons between the existing popular training pipeline and ours for RGB-D segmentation. RGB pretraining: recent mainstream methods adopt two RGB-pretrained backbones to separately encode RGB and depth information and fuse them at each stage. RGB-D pretraining: the RGB-D backbone in DFormer learns transferable RGB-D representations during pretraining and is then finetuned for segmentation.

With the widespread use of 3D sensors, RGB-D data is becoming increasingly accessible. Incorporating 3D geometric information makes it easier to distinguish instances from their context, which facilitates RGB-D research on high-level scene understanding. Meanwhile, RGB-D data also shows considerable potential in a large number of applications, e.g., SLAM (Wang et al., 2023), autonomous driving (Huang et al., 2022), and robotics (Marchal et al., 2020). Therefore, RGB-D research has attracted great attention over the past few years.

Fig. 1 (top) shows the pipeline of current mainstream RGB-D methods. The features of the RGB images and depth maps are extracted separately by two individual RGB-pretrained backbones, and the two modalities interact with each other during this process. Although existing methods (Wang et al., 2022; Zhang et al., 2023b) have achieved excellent performance on several benchmark datasets, three issues cannot be ignored: i) the backbones in RGB-D tasks take an image-depth pair as input, which is inconsistent with the single-image input used in RGB pretraining and causes a large representation distribution shift; ii) the interactions between the RGB and depth branches are densely performed during finetuning, which may destroy the representation distribution within the pretrained RGB backbones; iii) the dual backbones in RGB-D networks incur more computational cost than standard RGB methods, which is inefficient. We argue that an important cause of these issues is the pretraining manner: depth information is not considered during pretraining.

Taking the above issues into account, a straightforward question arises: Is it possible to specifically design an RGB-D pre training framework to eliminate this gap? This motivates us to present a novel RGB-D pre training framework, termed DFormer, as shown in Fig. 1 (bottom). During pre training, we consider taking imagedepth pairs 1, not just RGB images, as input and propose to build interactions between RGB and depth features within the building blocks of the encoder. Therefore, the inconsistency between the inputs of pre training and finetuning can be naturally avoided. In addition, during pretraining, the RGB and depth features can efficiently interact with each other in each building block, avoiding the heavy interaction modules outside the backbones, which is mostly adopted in current dominant methods. Furthermore, we also observe that the depth information only needs a small portion of channels to encode. There

考虑到上述问题,一个直接的问题随之产生:能否专门设计一个RGB-D预训练框架来消除这一差距?这促使我们提出了一种新颖的RGB-D预训练框架,称为DFormer,如图1(底部)所示。在预训练期间,我们考虑将图像-深度对1而不仅仅是RGB图像作为输入,并提议在编码器的构建块中建立RGB和深度特征之间的交互。因此,可以自然地避免预训练和微调输入之间的不一致性。此外,在预训练期间,RGB和深度特征可以在每个构建块中高效地相互交互,避免了当前主流方法中大多采用的骨干网络外部繁重的交互模块。此外,我们还观察到深度信息仅需要一小部分通道进行编码。

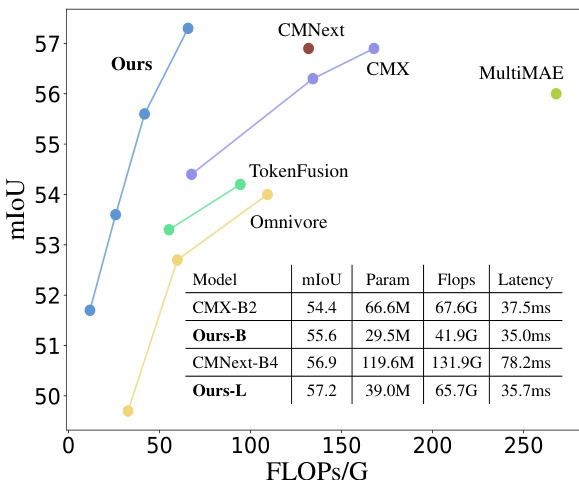

Figure 2: Performance vs. computational cost on the NYUDepthv2 dataset (Silberman et al., 2012). DFormer achieves the state-of-the-art $57.2%$ mIoU and the best trade-off compared to other methods.

图 2: NYUDepthv2数据集 (Silberman et al., 2012) 上的性能与计算成本对比。DFormer以57.2%的mIoU达到当前最优性能,并展现出最佳的性能-计算成本平衡。

is no need to use a whole RGB pretrained backbone to extract depth features as done in previous works. As the interaction starts from the pre training stage, the interaction efficiency can be largely improved compared to previous works as shown in Fig. 2.

We demonstrate the effectiveness of DFormer on two popular RGB-D downstream tasks, i.e., semantic segmentation and salient object detection. By adding a lightweight decoder on top of our pretrained RGB-D backbone, DFormer sets new state-of-the-art records at a lower computational cost than previous methods. Remarkably, our largest model, DFormer-L, achieves a new state-of-the-art result of 57.2% mIoU on NYU Depthv2 with less than half of the computation of the second-best method, CMNext (Zhang et al., 2023b). Meanwhile, our lightweight model DFormer-T achieves 51.8% mIoU on NYU Depthv2 with only 6.0M parameters and 11.8G FLOPs. Compared to other recent models, our approach achieves the best trade-off between segmentation performance and computation.

Our main contributions are summarized as follows:

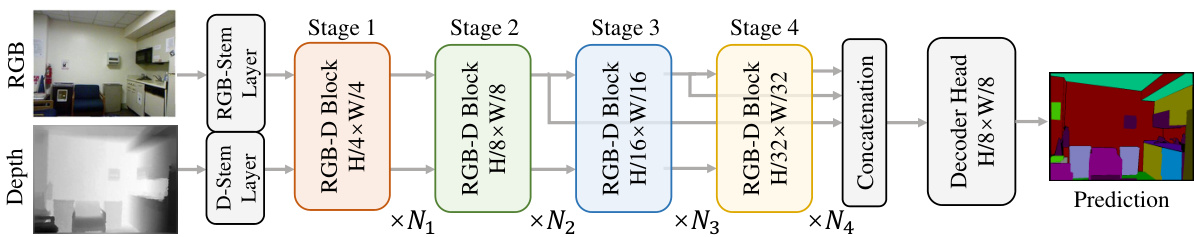

Figure 3: Overall architecture of the proposed DFormer. First, we use the pretrained DFormer to encode the RGB-D data. Then, the features from the last three stages are concatenated and delivered to a lightweight decoder head for final prediction. Note that only the RGB features from the encoder are used in the decoder.

2 PROPOSED DFORMER

Fig. 3 illustrates the overall architecture of our DFormer, which follows the popular encoder-decoder framework. In particular, the hierarchical encoder is designed to generate high-resolution coarse features and low-resolution fine features, and a lightweight decoder is employed to transform these visual features into task-specific predictions.

Given an RGB image and the corresponding depth map with a spatial size of $H\times W$, they are first processed separately by two parallel stem layers, each consisting of two convolutions with kernel size $3\times3$ and stride 2. Then, the RGB features and depth features are fed into the hierarchical encoder to encode multi-scale features at $\{1/4, 1/8, 1/16, 1/32\}$ of the original image resolution. Next, we pretrain this encoder on image-depth pairs from ImageNet-1K with a classification objective to generate transferable RGB-D representations. Finally, we send the visual features from the pretrained RGB-D encoder to the decoder to produce predictions, e.g., segmentation maps with a spatial size of $H\times W$. In the rest of this section, we describe the encoder, the RGB-D pretraining framework, and the task-specific decoder in detail.
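As a quick sanity check on these resolutions, the sketch below computes the feature-map size of each encoder stage. It assumes padding 1 for every 3×3, stride-2 convolution (the padding is not stated here), and `conv_out` and `stage_resolutions` are illustrative helper names, not part of the DFormer code.

```python
from __future__ import annotations


def conv_out(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Output length of a strided convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def stage_resolutions(height: int, width: int) -> list[tuple[int, int]]:
    """(h, w) of the four encoder stages for an H x W input.

    Assumption: every 3x3, stride-2 convolution uses padding 1.
    """
    h, w = height, width
    for _ in range(2):  # stem: two 3x3 stride-2 convolutions -> 1/4 resolution
        h, w = conv_out(h), conv_out(w)
    sizes = [(h, w)]
    for _ in range(3):  # one down-sampling convolution between consecutive stages
        h, w = conv_out(h), conv_out(w)
        sizes.append((h, w))
    return sizes


print(stage_resolutions(480, 640))  # [(120, 160), (60, 80), (30, 40), (15, 20)]
```

For the 480×640 NYUDepthv2 input this yields exactly 1/4, 1/8, 1/16, and 1/32 of the input size; for sizes not divisible by 32 (e.g., 530×730), the padded convolutions round up.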

2.1 HIERARCHICAL ENCODER

As shown in Fig. 3, our hierarchical encoder is composed of four stages, which are utilized to generate multi-scale RGB-D features. Each stage contains a stack of RGB-D blocks. Two convolutions with kernel size $3\times3$ and stride 2 are used to down-sample RGB and depth features, respectively, between two consecutive stages.

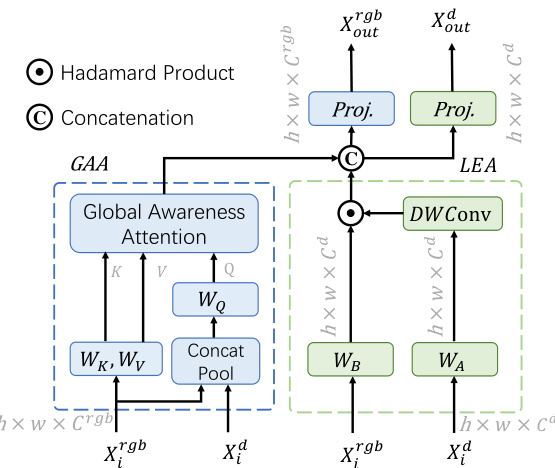

Building Block. Our building block is mainly composed of the global awareness attention (GAA) module and the local enhancement attention (LEA) module, and it builds interaction between the RGB and depth modalities. GAA incorporates depth information and aims to enhance object localization from a global perspective, while LEA adopts a large-kernel convolution to capture local cues from the depth features, which refine the details of the RGB representations. The details of the interaction modules are shown in Fig. 4.

Figure 4: Diagrammatic details of the interactions between RGB and depth features.

Our GAA fuses depth and RGB features to build relationships across the whole scene, enhancing 3D awareness and further helping to capture semantic objects. Unlike the self-attention mechanism (Vaswani et al., 2017), whose computation grows quadratically with the number of pixels or tokens, GAA down-samples the query ($Q$) to a fixed size, which reduces the computational complexity. Tab. 7 shows that fusing depth features into $Q$ is adequate: also combining them with the key ($K$) or value ($V$) increases computation without improving performance. Therefore, $Q$ is computed from the concatenation of the RGB and depth features, while $K$ and $V$ are extracted from the RGB features only. Given the RGB features $X_{i}^{rgb}$ and depth features $X_{i}^{d}$, this process can be formulated as:

$$
Q=\mathrm{Linear}(\mathrm{Pool}_{k\times k}([X_{i}^{rgb},X_{i}^{d}])),\quad K=\mathrm{Linear}(X_{i}^{rgb}),\quad V=\mathrm{Linear}(X_{i}^{rgb}), \tag{1}
$$

where $[\cdot,\cdot]$ denotes concatenation along the channel dimension, $\mathrm{Pool}_{k\times k}(\cdot)$ performs adaptive average pooling over the spatial dimensions to a size of $k\times k$, and $\mathrm{Linear}$ is a linear transformation. Based on the generated $Q\in\mathbb{R}^{k\times k\times C^{d}}$, $K\in\mathbb{R}^{h\times w\times C^{d}}$, and $V\in\mathbb{R}^{h\times w\times C^{d}}$, where $h$ and $w$ are the height and width of the features in the current stage, we formulate GAA as follows:

$$
X_{GAA}=\mathrm{UP}\left(V\cdot\mathrm{Softmax}\left(\frac{Q^{\top}K}{\sqrt{C^{d}}}\right)\right), \tag{2}
$$

where $\mathrm{UP}(\cdot)$ is a bilinear upsampling operation that converts the spatial size from $k\times k$ to $h\times w$. In practice, Eqn. 2 can also be extended to a multi-head version, as in the original self-attention (Vaswani et al., 2017), to augment the feature representations.
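The following NumPy sketch traces the GAA equations above for a single head. It writes features as row vectors, so the attention is computed as $\mathrm{Softmax}(QK^{\top}/\sqrt{C^{d}})$ applied to $V$, which is our reading of the formula rather than the authors' code. The weight matrices are random stand-ins for the learned `Linear` layers, and k = 7, the channel sizes, and all helper names are hypothetical. Because only k×k pooled queries attend to the h×w keys, the attention matrix has shape (k²)×(hw) instead of (hw)×(hw).

```python
import numpy as np


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def adaptive_avg_pool(x: np.ndarray, k: int) -> np.ndarray:
    """Adaptive average pooling of an (h, w, c) map to (k, k, c)."""
    h, w, c = x.shape
    out = np.empty((k, k, c))
    for i in range(k):
        h0, h1 = (i * h) // k, ceil_div((i + 1) * h, k)
        for j in range(k):
            w0, w1 = (j * w) // k, ceil_div((j + 1) * w, k)
            out[i, j] = x[h0:h1, w0:w1].mean(axis=(0, 1))
    return out


def bilinear_resize(x: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinear resize of an (h, w, c) map using half-pixel sample centres."""
    in_h, in_w, _ = x.shape
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None, None]
    wx = (xs - x0)[None, :, None]
    top = x[y0][:, x0] * (1 - wx) + x[y0][:, x1] * wx
    bottom = x[y1][:, x0] * (1 - wx) + x[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def gaa(x_rgb, x_d, w_q, w_k, w_v, k=7):
    """Single-head GAA sketch.

    x_rgb: (h, w, C_rgb); x_d: (h, w, C_d)
    w_q: (C_rgb + C_d, C_d); w_k, w_v: (C_rgb, C_d)  (hypothetical weights)
    Returns the (h, w, C_d) output and the (k*k, h*w) attention map.
    """
    h, w, _ = x_rgb.shape
    c_d = w_q.shape[1]
    pooled = adaptive_avg_pool(np.concatenate([x_rgb, x_d], axis=-1), k)
    q = pooled.reshape(k * k, -1) @ w_q       # queries: pooled RGB + depth
    key = x_rgb.reshape(h * w, -1) @ w_k      # keys: RGB only
    v = x_rgb.reshape(h * w, -1) @ w_v        # values: RGB only
    attn = softmax(q @ key.T / np.sqrt(c_d))  # (k*k, h*w), rows sum to 1
    out = (attn @ v).reshape(k, k, c_d)       # one feature vector per query cell
    return bilinear_resize(out, h, w), attn   # UP(.) back to h x w


rng = np.random.default_rng(0)
h, w, c_rgb, c_d = 28, 28, 64, 32
x_rgb = rng.standard_normal((h, w, c_rgb))
x_d = rng.standard_normal((h, w, c_d))
w_q = 0.05 * rng.standard_normal((c_rgb + c_d, c_d))
w_k = 0.05 * rng.standard_normal((c_rgb, c_d))
w_v = 0.05 * rng.standard_normal((c_rgb, c_d))
x_gaa, attn = gaa(x_rgb, x_d, w_q, w_k, w_v, k=7)
print(x_gaa.shape, attn.shape)  # (28, 28, 32) (49, 784)
```

A multi-head version would split the $C^{d}$ channels of `q`, `key`, and `v` into groups and run the same computation per group.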

We also design the LEA module to capture more local details as a supplement to the GAA module. Unlike most previous works, which fuse the RGB and depth features by addition or concatenation, we apply a large-kernel depth-wise convolution to the depth features and use the resulting features as attention weights to reweight the RGB features via a simple Hadamard product, inspired by Hou et al. (2022). This is reasonable because adjacent pixels with similar depth values often belong to the same object, so the 3D geometry information can be easily embedded into the RGB features. Specifically, LEA is computed as follows:

$$
X_{LEA}=\mathrm{DConv}_{k\times k}(\mathrm{Linear}(X_{i}^{d}))\odot\mathrm{Linear}(X_{i}^{rgb}), \tag{3}
$$

where $\mathrm{DConv}_{k\times k}$ is a depth-wise convolution with kernel size $k\times k$ and $\odot$ is the Hadamard product.
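A NumPy sketch of the LEA computation follows. The 7×7 kernel, the channel sizes, and the projection shapes are hypothetical; in particular, both linear projections map to $C^{d}$ channels here (an assumption) so that the Hadamard product is defined. The depth-wise convolution is written as a "same"-padded cross-correlation, which is what deep-learning frameworks compute under the name convolution.

```python
import numpy as np


def depthwise_conv2d(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """'Same'-padded depth-wise convolution (cross-correlation, as in DL frameworks).

    x: (h, w, c); kernels: (k, k, c) with odd k, one k x k filter per channel.
    """
    k = kernels.shape[0]
    pad = k // 2
    h, w, c = x.shape
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))  # zero padding
    out = np.zeros((h, w, c))
    for dy in range(k):
        for dx in range(k):
            out += xp[dy:dy + h, dx:dx + w] * kernels[dy, dx]  # per-channel weights
    return out


def lea(x_rgb, x_d, w_d, w_rgb, kernels):
    """LEA sketch: DConv_kxk(Linear(X_d)) ⊙ Linear(X_rgb).

    w_d: (C_d, C_d) and w_rgb: (C_rgb, C_d) are hypothetical projections that
    bring both branches to C_d channels; kernels: (k, k, C_d).
    """
    weights = depthwise_conv2d(x_d @ w_d, kernels)  # large-kernel depth cues
    return weights * (x_rgb @ w_rgb)                # Hadamard re-weighting of RGB


rng = np.random.default_rng(0)
x_rgb = rng.standard_normal((16, 16, 64))
x_d = rng.standard_normal((16, 16, 32))
w_d = rng.standard_normal((32, 32))
w_rgb = rng.standard_normal((64, 32))
kernels = rng.standard_normal((7, 7, 32)) / 49  # hypothetical 7x7 kernel
x_lea = lea(x_rgb, x_d, w_d, w_rgb, kernels)
print(x_lea.shape)  # (16, 16, 32)
```

The depth branch only produces weights; the values being reweighted come entirely from the RGB projection, which mirrors the "depth guides RGB" reading of the LEA equation.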

To preserve diverse appearance information, we also build a base module that transforms the RGB features $X_{i}^{rgb}$ into $X_{Base}$, which has the same spatial size as $X_{GAA}$ and $X_{LEA}$. $X_{Base}$ is computed as follows:

$$
X_{Base}=\mathrm{DConv}_{k\times k}(\mathrm{Linear}(X_{i}^{rgb}))\odot\mathrm{Linear}(X_{i}^{rgb}). \tag{4}
$$

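Finally, the features $X_{GAA}\in\mathbb{R}^{h_i\times w_i\times C_i^{d}}$, $X_{LEA}\in\mathbb{R}^{h_i\times w_i\times C_i^{d}}$, and $X_{Base}\in\mathbb{R}^{h_i\times w_i\times C_i^{rgb}}$ are fused together by concatenation and linear projection to update the RGB features $X_{out}^{rgb}$ and the depth features $X_{out}^{d}$.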
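The sketch below computes $X_{Base}$ and then concatenates it with $X_{GAA}$ and $X_{LEA}$ and projects the result back to $C^{rgb}$ channels to form $X_{out}^{rgb}$. The weights are random stand-ins, $X_{GAA}$ and $X_{LEA}$ are replaced by random arrays of the stated shapes, the 7×7 kernel and channel sizes are hypothetical, and the update of the depth features $X_{out}^{d}$ is not shown because its exact form is not specified in this section.

```python
import numpy as np


def depthwise_conv2d(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """'Same'-padded depth-wise convolution; x: (h, w, c), kernels: (k, k, c), k odd."""
    k = kernels.shape[0]
    pad = k // 2
    h, w, c = x.shape
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    out = np.zeros((h, w, c))
    for dy in range(k):
        for dx in range(k):
            out += xp[dy:dy + h, dx:dx + w] * kernels[dy, dx]
    return out


def base_module(x_rgb, w_a, w_b, kernels):
    """X_Base = DConv(Linear(X_rgb)) ⊙ Linear(X_rgb); keeps C_rgb channels."""
    return depthwise_conv2d(x_rgb @ w_a, kernels) * (x_rgb @ w_b)


def fuse(x_gaa, x_lea, x_base, w_out):
    """Concatenate along channels, then project back to C_rgb channels."""
    fused = np.concatenate([x_gaa, x_lea, x_base], axis=-1)  # (h, w, 2*C_d + C_rgb)
    return fused @ w_out                                      # (h, w, C_rgb)


rng = np.random.default_rng(0)
h, w, c_rgb, c_d = 8, 8, 64, 32
x_rgb = rng.standard_normal((h, w, c_rgb))
x_gaa = rng.standard_normal((h, w, c_d))  # stand-in for the GAA output
x_lea = rng.standard_normal((h, w, c_d))  # stand-in for the LEA output
x_base = base_module(x_rgb,
                     rng.standard_normal((c_rgb, c_rgb)),
                     rng.standard_normal((c_rgb, c_rgb)),
                     rng.standard_normal((7, 7, c_rgb)) / 49)
x_out_rgb = fuse(x_gaa, x_lea, x_base, rng.standard_normal((2 * c_d + c_rgb, c_rgb)))
print(x_base.shape, x_out_rgb.shape)  # (8, 8, 64) (8, 8, 64)
```

The channel bookkeeping is the main point: the concatenation has $2C^{d}+C^{rgb}$ channels, and the projection restores $C^{rgb}$ so that blocks can be stacked.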

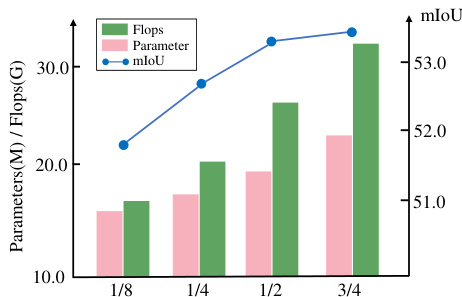

Overall Architecture. We empirically observe that encoding depth features requires fewer parameters than encoding RGB features, because depth maps carry less semantic information; this is verified in Fig. 6 and discussed in detail in the experimental part. To reduce the complexity of our RGB-D block, we therefore use a small portion of the channels to encode the depth information. By varying the configurations of the RGB-D blocks in each stage, we design a series of DFormer encoder variants, termed DFormer-T, DFormer-S, DFormer-B, and DFormer-L, which share the same architecture but differ in model size. DFormer-T is a lightweight encoder for fast inference, while DFormer-L is the largest one and attains the best performance. For detailed configurations, readers can refer to Tab. 14.

2.2 RGB-D PRETRAINING

The purpose of RGB-D pretraining is to endow the backbone with the ability to model the interaction between the RGB and depth modalities and to generate transferable representations with rich semantic and spatial information. To this end, we first apply a depth estimator, e.g., AdaBins (Bhat et al., 2021), to the ImageNet-1K dataset (Russakovsky et al., 2015) to generate a large number of image-depth pairs. Then, we add a classifier head on top of the RGB-D encoder to build a classification network for pretraining. In particular, the RGB features from the last stage are flattened along the spatial dimensions and fed into the classifier head. The standard cross-entropy loss is used as the optimization objective, and the network is pretrained on the RGB-D data for 300 epochs, as in ConvNeXt (Liu et al., 2022). Following previous works (Liu et al., 2022; Guo et al., 2022b), we use AdamW (Loshchilov & Hutter, 2019) as the optimizer with a learning rate of 1e-3 and a weight decay of 5e-2, and the batch size is set to 1024. More specific settings for each variant of DFormer are described in the appendix.
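To make the pretraining objective concrete, here is a NumPy sketch of the classification head and loss. The text only says that the last-stage RGB features are flattened along the spatial dimensions; averaging the flattened tokens (global average pooling), the 512-channel feature size, and all names below are assumptions. The dictionary simply collects the hyperparameters stated above.

```python
import numpy as np

# Hyperparameters stated in the text; the key names are illustrative.
PRETRAIN_CONFIG = {
    "data": "ImageNet-1K image-depth pairs",
    "epochs": 300,
    "optimizer": "AdamW",
    "lr": 1e-3,
    "weight_decay": 5e-2,
    "batch_size": 1024,
}


def classifier_logits(x_rgb_last: np.ndarray, w_cls: np.ndarray, b_cls: np.ndarray) -> np.ndarray:
    """x_rgb_last: (h, w, C) last-stage RGB features -> (num_classes,) logits.

    Flattens the spatial dims, then averages the tokens (global average
    pooling is an assumption) before a linear classifier.
    """
    tokens = x_rgb_last.reshape(-1, x_rgb_last.shape[-1])  # (h*w, C)
    pooled = tokens.mean(axis=0)                            # (C,)
    return pooled @ w_cls + b_cls


def cross_entropy(logits: np.ndarray, label: int) -> float:
    """Standard softmax cross-entropy for one sample (log-sum-exp stabilised)."""
    z = logits - logits.max()
    log_probs = z - np.log(np.exp(z).sum())
    return float(-log_probs[label])


rng = np.random.default_rng(0)
x_last = rng.standard_normal((7, 7, 512))  # hypothetical last-stage RGB features
w_cls = np.zeros((512, 1000))              # zero weights -> uniform prediction
b_cls = np.zeros(1000)
loss = cross_entropy(classifier_logits(x_last, w_cls, b_cls), label=3)
print(f"{loss:.4f}")  # 6.9078, i.e. ln(1000)
```

With an untrained (zero) classifier the loss equals ln(1000), the usual starting point for 1000-way ImageNet classification.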

Table 1: Results on NYU Depth V2 (Silberman et al., 2012) and SUN-RGBD (Song et al., 2015). Some methods do not report results or settings on the SUN-RGBD dataset, so we reproduce them with the same training configuration. † indicates results from our implementation. All backbones are pretrained on ImageNet-1K.

| Model | Backbone | Params | NYUDepthv2 input size | NYUDepthv2 FLOPs | NYUDepthv2 mIoU (%) | SUN-RGBD input size | SUN-RGBD FLOPs | SUN-RGBD mIoU (%) | Code |
|---|---|---|---|---|---|---|---|---|---|
| ACNet19 (Hu et al.) | ResNet-50 | 116.6M | 480×640 | 126.7G | 48.3 | 530×730 | 163.9G | 48.1 | Link |
| SGNet20 (Chen et al.) | ResNet-101 | 64.7M | 480×640 | 108.5G | 51.1 | 530×730 | 151.5G | 48.6 | Link |
| SA-Gate20 (Chen et al.) | ResNet-101 | 110.9M | 480×640 | 193.7G | 52.4 | 530×730 | 250.1G | 49.4 | Link |
| CEN20 (Wang et al.) | ResNet-101 | 118.2M | 480×640 | 618.7G | 51.7 | 530×730 | 790.3G | 50.2 | Link |
| CEN20 (Wang et al.) | ResNet-152 | 133.9M | 480×640 | 664.4G | 52.5 | 530×730 | 849.7G | 51.1 | Link |
| ShapeConv21 (Cao et al.) | ResNeXt-101 | 86.8M | 480×640 | 124.6G | 51.3 | 530×730 | 161.8G | 48.6 | Link |
| ESANet21 (Seichter et al.) | ResNet-34 | 31.2M | 480×640 | 34.9G | 50.3 | 480×640 | 34.9G | 48.2 | Link |
| FRNet22 (Zhou et al.) | ResNet-34 | 85.5M | 480×640 | 115.6G | 53.6 | 530×730 | 150.0G | 51.8 | Link |
| PGDENet22 (Zhou et al.) | ResNet-34 | 100.7M | 480×640 | 178.8G | 53.7 | 530×730 | 229.1G | 51.0 | Link |
| EMSANet22 (Seichter et al.) | ResNet-34 | 46.9M | 480×640 | 45.4G | 51.0 | 530×730 | 58.6G | 48.4 | Link |
| TokenFusion22 (Wang et al.) | MiT-B2 | 26.0M | 480×640 | 55.2G | 53.3 | 530×730 | 71.1G | 50.3 | Link |
| TokenFusion22 (Wang et al.) | MiT-B3 | 45.9M | 480×640 | 94.4G | 54.2 | 530×730 | 122.1G | 51.0† | Link |
| MultiMAE22 (Bachmann et al.) | ViT-B | 95.2M | 640×640 | 267.9G | 56.0 | 640×640 | 267.9G | 51.1 | Link |
| Omnivore22 (Girdhar et al.) | Swin-T | 29.1M | 480×640 | 32.7G | 49.7 | 530×730 | - | - | Link |
| Omnivore22 (Girdhar et al.) | Swin-S | 51.3M | 480×640 | 59.8G | 52.7 | 530×730 | - | - | Link |
| Omnivore22 (Girdhar et al.) | Swin-B | 95.7M | 480×640 | 109.3G | 54.0 | 530×730 | - | - | Link |
| CMX22 (Zhang et al.) | MiT-B2 | 66.6M | 480×640 | 67.6G | 54.4 | 530×730 | 86.3G | 49.7 | Link |
| CMX22 (Zhang et al.) | MiT-B4 | 139.9M | 480×640 | 134.3G | 56.3 | 530×730 | 173.8G | 52.1 | Link |
| CMX22 (Zhang et al.) | MiT-B5 | 181.1M | 480×640 | 167.8G | 56.9 | 530×730 | 217.6G | 52.4 | Link |
| CMNext23 (Zhang et al.) | MiT-B4 | 119.6M | 480×640 | 131.9G | 56.9 | 530×730 | 170.3G | 51.9† | Link |
| DFormer-T | Ours-T | 6.0M | 480×640 | 11.8G | 51.8 | 530×730 | 15.1G | 48.8 | Link |
| DFormer-S | Ours-S | 18.7M | 480×640 | 25.6G | 53.6 | 530×730 | 33.0G | 50.0 | Link |
| DFormer-B | Ours-B | 29.5M | 480×640 | 41.9G | 55.6 | 530×730 | 54.1G | 51.2 | Link |
| DFormer-L | Ours-L | 39.0M | 480×640 | 65.7G | 57.2 | 530×730 | 83.3G | 52.5 | Link |

2.3 TASK-SPECIFIC DECODER

To apply DFormer to downstream tasks, we simply add a lightweight decoder on top of the pretrained RGB-D backbone to build the task-specific network. After finetuning on the corresponding benchmark datasets, the task-specific network produces strong predictions without extra designs such as fusion modules (Chen et al., 2020a; Zhang et al., 2023a).

Take RGB-D semantic segmentation as an example. Following SegNeXt (Guo et al., 2022a), we adopt a lightweight Hamburger head (Geng et al., 2021) to aggregate the multi-scale RGB features from the last three stages of our pretrained encoder. Note that our decoder uses only the $X^{rgb}$ features, whereas other methods (Zhang et al., 2023a; Wang et al., 2022; Zhang et al., 2023b) mostly design modules that fuse the features of both modalities, $X^{rgb}$ and $X^{d}$, for the final predictions. We will show in our experiments that, thanks to our powerful RGB-D pretrained encoder, the $X^{rgb}$ features already capture the 3D geometry cues from the depth modality efficiently, so delivering the depth features $X^{d}$ to the decoder is not necessary.
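The sketch below shows only the feature-aggregation step in front of the decoder: RGB features from the last three stages are brought to a common resolution and concatenated along the channel dimension, as in Fig. 3. Resizing to the 1/8 grid, using nearest-neighbour upsampling (chosen here for brevity), and the channel widths are all assumptions, and the Hamburger head itself is not reproduced.

```python
import numpy as np


def upsample_nearest(x: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling of an (h, w, c) map by an integer factor."""
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)


def aggregate_rgb_features(stage_feats: list) -> np.ndarray:
    """Resize stage-2..4 RGB features to the stage-2 (1/8) grid and concatenate.

    Only X^rgb is used; the depth features X^d are not passed to the decoder.
    """
    target_h = stage_feats[0].shape[0]
    resized = [upsample_nearest(f, target_h // f.shape[0]) for f in stage_feats]
    return np.concatenate(resized, axis=-1)


# Hypothetical stage-2..4 RGB features for a 480x640 input (1/8, 1/16, 1/32).
feats = [np.ones((60, 80, 128)), np.ones((30, 40, 256)), np.ones((15, 20, 512))]
print(aggregate_rgb_features(feats).shape)  # (60, 80, 896)
```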

3 EXPERIMENTS

3.1 RGB-D SEMANTIC SEGMENTATION

Datasets & implementation details. Following the common experimental settings of RGB-D semantic segmentation methods (Xie et al., 2021; Guo et al., 2022a), we finetune and evaluate DFormer on two widely used datasets, i.e., NYUDepthv2 (Silberman et al., 2012) and SUN-RGBD (Song et al., 2015). Following SegNeXt (Guo et al., 2022a), we employ Hamburger (Geng et al., 2021), a lightweight head, as the decoder of our RGB-D semantic segmentation network. During finetuning, we adopt only two common data augmentation strategies, i.e., random horizontal flipping and random scaling (from 0.5 to 1.75). The training images are cropped and resized to $480\times640$ and $480\times480$ for the NYU Depthv2 and SUN-RGBD benchmarks, respectively. Cross-entropy loss is used as the optimization objective. We use AdamW (Loshchilov & Hutter, 2019) as the optimizer with an initial learning rate of 6e-5 and a poly decay schedule, and the weight decay is set to 1e-2. For evaluation, we use mean Intersection over Union (mIoU), averaged across semantic categories, as the primary metric of segmentation performance. Following recent works (Zhang et al., 2023a; Wang et al., 2022; Zhang et al., 2023b), we adopt multi-scale (MS) flip inference with scales $\{0.5, 0.75, 1, 1.25, 1.5\}$.
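The poly schedule and the mIoU metric mentioned above can be sketched as follows. The poly exponent of 0.9 and the ignore label 255 are common conventions that we assume here; they are not stated in the text. `mean_iou` accumulates a confusion matrix and averages the per-class IoU over the classes that appear in the prediction or the ground truth.

```python
import numpy as np


def poly_lr(step: int, max_steps: int, base_lr: float = 6e-5, power: float = 0.9) -> float:
    """Poly decay: base_lr * (1 - step / max_steps) ** power (power is assumed)."""
    return base_lr * (1 - step / max_steps) ** power


def mean_iou(pred: np.ndarray, gt: np.ndarray, num_classes: int, ignore_index: int = 255) -> float:
    """mIoU from integer label maps; pixels labelled ignore_index are skipped."""
    valid = gt != ignore_index
    pred, gt = pred[valid].astype(np.int64), gt[valid].astype(np.int64)
    conf = np.bincount(gt * num_classes + pred, minlength=num_classes ** 2)
    conf = conf.reshape(num_classes, num_classes)     # rows: ground truth, cols: prediction
    tp = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp  # TP + FP + FN per class
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / union                              # NaN for classes absent from both
    return float(np.nanmean(iou))


print(poly_lr(0, 1000), poly_lr(1000, 1000))  # 6e-05 0.0
print(round(mean_iou(np.array([0, 1, 1, 1]), np.array([0, 0, 1, 1]), num_classes=2), 4))  # 0.5833
```

In the toy example, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.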

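Table 2: Quantitative comparisons on RGB-D SOD benchmarks. M: mean absolute error (lower is better); F: max F-measure, S: S-measure, E: max E-measure (higher is better). The best results are highlighted.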

| Model | Params (M) | FLOPs (G) | DES (135) M | DES F | DES S | DES E | NLPR (300) M | NLPR F | NLPR S | NLPR E | NJU2K (500) M | NJU2K F | NJU2K S | NJU2K E | STERE (1,000) M | STERE F | STERE S | STERE E | SIP (929) M |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BBSNet21 (Zhai et al.) | 49.8 | 31.3 | .021 | .942 | .934 | .955 | .023 | .927 | .930 | .953 | .035 | .931 | .920 | .941 | .041 | .919 | .908 | .931 | .055 |
| DCF21 (Ji et al.) | 108.5 | 54.3 | .024 | .910 | .905 | .941 | .022 | .918 | .924 | .958 | .036 | .922 | .912 | .946 | .039 | .911 | .902 | .940 | .052 |
| DSA2F21 (Sun et al.) | 36.5 | 172.7 | .021 | .896 | .920 | .962 | .024 | .897 | .918 | .950 | .039 | .901 | .903 | .923 | .036 | .898 | .904 | .933 | - |
| CMINet21 (Zhang et al.) | - | - | .016 | .944 | .940 | .975 | .020 | .931 | .932 | .959 | .028 | .940 | .929 | .954 | .032 | .925 | .918 | .946 | .040 |
| DSNet21 (Wen et al.) | 172.4 | 141.2 | .021 | .939 | .928 | .956 | .024 | .925 | .926 | .951 | .034 | .929 | .921 | .946 | .036 | .922 | .914 | .941 | .052 |
| UTANet21 (Zhao et al.) | 48.6 | 27.4 | .026 | .921 | .900 | .932 | .020 | .928 | .932 | .964 | .037 | .915 | .902 | .945 | .033 | .921 | .910 | .948 | .048 |
| BIANet21 (Zhang et al.) | 49.6 | 59.9 | .017 | .948 | .942 | .972 | .022 | .926 | .928 | .957 | .034 | .932 | .923 | .945 | .038 | .916 | .908 | .935 | .046 |
| SPNet21 (Zhou et al.) | 150.3 | 68.1 | .014 | .950 | .945 | .980 | .021 | .925 | .927 | .959 | .028 | .935 | .925 | .954 | .037 | .915 | .907 | .944 | .043 |
| VST21 (Liu et al.) | 83.3 | 31.0 | .017 | .940 | .943 | .978 | .024 | .920 | .932 | .962 | .035 | .920 | .922 | .951 | .038 | .907 | .913 | .951 | .040 |
| RD3D+22 (Chen et al.) | 28.9 | 43.3 | .017 | .946 | .950 | .982 | .022 | .921 | .933 | .964 | .033 | .928 | .928 | .955 | .037 | .905 | .914 | .946 | .046 |
| BPGNet22 (Yang et al.) | 84.3 | 138.6 | .020 | .932 | .937 | .973 | .024 | .914 | .927 | .959 | .034 | .926 | .923 | .953 | .040 | .904 | .907 | .944 | - |
| C2DFNet22 (Zhang et al.) | 47.5 | 21.0 | .020 | .937 | .922 | .948 | .021 | .926 | .928 | .956 | .038 | .911 | .902 | .938 | .053 | .894 | - | - | - |
| MVSalNet22 (Zhou et al.) | - | - | .019 | .942 | .937 | .973 | .022 | .931 | .930 | .960 | .036 | .923 | .912 | .944 | .036 | .921 | .913 | .944 | - |
| SPSN22 (Lee et al.) | 37.0 | 100.3 | .017 | .942 | .937 | .973 | .023 | .917 | .923 | .956 | .032 | .927 | .918 | .949 | .035 | .909 | .906 | .941 | .043 |
| HiDANet23 (Wu et al.) | 130.6 | 71.5 | .013 | .952 | .946 | .980 | .021 | .929 | .930 | .961 | .029 | .939 | .926 | .954 | .035 | .921 | .911 | .946 | .043 |
| DFormer-T | 5.9 | 4.5 | .016 | .947 | .941 | .975 | .021 | .931 | .932 | .960 | .028 | .937 | .927 | .953 | .033 | .921 | .915 | .945 | .039 |
| DFormer-S | 18.5 | 10.1 | .016 | .950 | .939 | .970 | .020 | .937 | .936 | .965 | .026 | .941 | .931 | .960 | .031 | .928 | .920 | .951 | .041 |
| DFormer-B | 29.3 | 16.7 | .013 | .957 | .948 | .982 | .019 | .933 | .936 | .965 | .025 | .941 | .933 | .960 | .029 | .931 | .925 | .951 | .035 |
| DFormer-L | 38.8 | 26.2 | .013 | .956 | .948 | .980 | .016 | .939 | .942 | .971 | .023 | .946 | .937 | .964 | .030 | .929 | .923 | .952 | .032 |

Table 3: RGB-D pretraining. "RGB+RGB" pretraining replaces the depth maps with RGB images during pretraining, so the input channels of the depth stem layer are changed from 1 to 3; during finetuning, the depth map is duplicated three times to match.

| Pretraining | Finetuning | mIoU (%) |
|---|---|---|
| RGB+RGB | RGB+D | 53.3 |
| RGB+D (Ours) | RGB+D | 55.6 |

Table 4: Different inputs of the decoder head for DFormer-B. "$X^{rgb}+X^{d}$" means that RGB and depth features are used simultaneously; specifically, the features of both modalities from the last three stages are used as the input of the decoder head.

| Decoder input | #Params (M) | FLOPs (G) | mIoU (%) |
|---|---|---|---|
| $X^{rgb}$ (Ours) | 29.5 | 41.9 | 55.6 |
| $X^{rgb}+X^{d}$ | 30.8 | 44.8 | 55.5 |

Comparison with state-of-the-art methods. We compare DFormer with 13 recent RGB-D semantic segmentation methods on the NYUDepthv2 (Silberman et al., 2012) and SUN-RGBD (Song et al., 2015) datasets. These methods are chosen according to three criteria: a) recently published, b) representative, and c) with open-source code. As shown in Tab. 1, DFormer achieves new state-of-the-art performance on both benchmark datasets. We also plot the performance-efficiency curves of different methods on the validation set of NYUDepthv2 (Silberman et al., 2012) in Fig. 2, which shows that DFormer achieves a much better trade-off between performance and computation than other methods. In particular, DFormer-L yields 57.2% mIoU with 39.0M parameters and 65.7G FLOPs, while the recent state-of-the-art method CMX with a MiT-B2 backbone, at a similar FLOP budget, achieves only 54.4% mIoU using 66.6M parameters and 67.6G FLOPs. Notably, DFormer-B outperforms CMX (MiT-B2) by 1.2% mIoU with less than half of its parameters and about 62% of its FLOPs (29.5M, 41.9G vs. 66.6M, 67.6G). Moreover, the qualitative comparisons between the semantic segmentation results of DFormer and CMNext (Zhang et al., 2023b) in Fig. 14 of the appendix further demonstrate the advantage of our method. The experiments on SUN-RGBD (Song et al., 2015) show similar advantages of DFormer over other methods. These consistent improvements indicate that our RGB-D backbone builds the interaction between RGB and depth features more efficiently, and hence yields better performance with even lower computational cost.
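The efficiency comparisons in this paragraph and in the introduction can be checked directly from the NYUDepthv2 columns of Table 1; the snippet below is only that arithmetic, with the numbers copied from the table.

```python
# (params in M, FLOPs in G, NYUDepthv2 mIoU in %) copied from Table 1.
table1 = {
    "DFormer-B": (29.5, 41.9, 55.6),
    "DFormer-L": (39.0, 65.7, 57.2),
    "CMX (MiT-B2)": (66.6, 67.6, 54.4),
    "CMNext (MiT-B4)": (119.6, 131.9, 56.9),
}


def compare(ours: str, theirs: str) -> tuple:
    """Return (params ratio, FLOPs ratio, mIoU gain) of `ours` relative to `theirs`."""
    p0, f0, m0 = table1[ours]
    p1, f1, m1 = table1[theirs]
    return round(p0 / p1, 3), round(f0 / f1, 3), round(m0 - m1, 1)


print(compare("DFormer-B", "CMX (MiT-B2)"))     # (0.443, 0.62, 1.2)
print(compare("DFormer-L", "CMNext (MiT-B4)"))  # (0.326, 0.498, 0.3)
```

Both ratios against CMNext are below 0.5, consistent with the "less than half of the computations" claim for DFormer-L.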

3.2 RGB-D SALIENT OBJECT DETECTION


Dataset & implementation details. We finetune and test DFormer on five popular RGB-D salient object detection datasets. The finetuning set consists of 2,185 samples: 1,485 are from NJU2K-train (Ju et al., 2014) and the remaining 700 are from NLPR-train (Peng et al., 2014). The model is evaluated on five datasets, i.e., DES (Cheng et al., 2014) (135 images), NLPR-test (Peng et al., 2014) (300), NJU2K-test (Ju et al., 2014) (500), STERE (Niu et al., 2012) (1,000), and SIP (Fan et al., 2020) (929). For performance evaluation, we adopt the four standard metrics of this task, i.e., Structure-measure (S) (Fan et al., 2017), mean absolute error (M) (Perazzi et al., 2012), max F-measure (F) (Margolin et al., 2014), and max E-measure (E) (Fan et al., 2018).
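Of the four metrics, MAE and max F-measure are simple enough to sketch directly. The NumPy code below is a minimal illustration. It follows conventions that are common in the salient object detection literature, which we assume here rather than take from this paper: saliency maps are normalized to [0, 1], the F-measure uses $\beta^2 = 0.3$, and the maximum is taken over 256 uniformly spaced thresholds. S-measure and E-measure involve region- and alignment-based terms and are omitted.

```python
import numpy as np


def mae(pred: np.ndarray, gt: np.ndarray) -> float:
    """Mean absolute error between a saliency map in [0, 1] and a binary mask."""
    return float(np.mean(np.abs(pred.astype(np.float64) - gt.astype(np.float64))))


def max_f_measure(pred: np.ndarray, gt: np.ndarray,
                  beta2: float = 0.3, num_thresholds: int = 256) -> float:
    """Binarize `pred` at each threshold and return the best F-beta score."""
    gt = gt.astype(bool)
    best = 0.0
    for t in np.linspace(0.0, 1.0, num_thresholds):
        binary = pred >= t
        tp = np.logical_and(binary, gt).sum()
        precision = tp / binary.sum() if binary.sum() > 0 else 0.0
        recall = tp / gt.sum() if gt.sum() > 0 else 0.0
        if precision + recall == 0:
            continue  # no true positives at this threshold
        f = (1 + beta2) * precision * recall / (beta2 * precision + recall)
        best = max(best, float(f))
    return best


gt = np.array([[1, 0], [0, 1]])
print(mae(np.full((2, 2), 0.5), gt), max_f_measure(gt.astype(float), gt))
```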


Comparisons with state-of-the-art methods. We compare our DFormer with 11 recent RGB-D salient object detection methods on the five popular test datasets. As shown in Tab. 2, our DFormer is able to surpass all competitors with the least computational cost. More importantly, our DFormer

与现有最优方法的对比。我们将DFormer与11种最新的RGB-D显著目标检测方法在五个常用测试数据集上进行比较。如表2所示,DFormer能以最低计算成本超越所有竞争对手。更重要的是,我们的DFormer

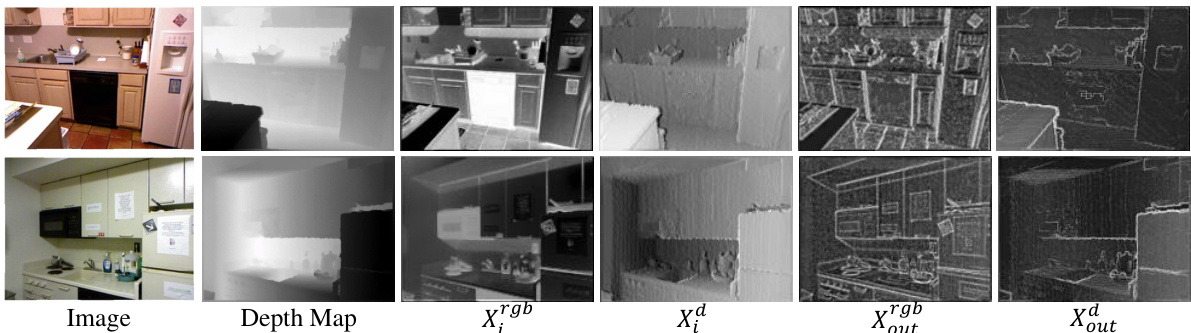

Figure 5: Visualization s of the feature maps around the last RGB-D block of the first stage.

图 5: 第一阶段最后一个RGB-D块周围特征图的可视化。

Table 5: Ablation results on the components of the RGB-D block in DFormer-S.

| Model | #Params | FLOPs | mIoU (%) |

| DFormer-S | 18.7M | 25.6G | 53.6 |

| w/oBase | 14.6M | 19.6G | 52.1 |

| w/oGAA | 16.5M | 21.7G | 52.3 |

| w/oLEA | 16.3M | 23.3G | 52.6 |

表 5: DFormer-S 中 RGB-D 模块组件的消融实验结果

| 模型 | 参数量 (#Params) | 计算量 (FLOPs) | mIoU (%) |

|---|---|---|---|

| DFormer-S | 18.7M | 25.6G | 53.6 |

| w/oBase | 14.6M | 19.6G | 52.1 |

| w/oGAA | 16.5M | 21.7G | 52.3 |

| w/oLEA | 16.3M | 23.3G | 52.6 |

Table 7: Different fusion methods for $Q$ , $K$ , and $V$ . We can see that there is no need to fuse RGB and depth features for $K$ and $V$ .

表 7: 针对 $Q$、$K$ 和 $V$ 的不同融合方法。可以看出,无需为 $K$ 和 $V$ 融合 RGB 和深度特征。

| 融合变量 | 参数量 | 计算量 (FLOPs) | mIoU (%) |

|---|---|---|---|

| 无 | 18.5M | 25.4G | 53.2 |

| (sno) Xquo | 18.7M | 25.6G | 53.6 |

| Q, K, V | 19.3M | 28.5G | 53.6 |

Table 6: Ablation on GAA in the DFormer-S. $\mathbf{\nabla}\times\mathbf{\nabla}\times\mathbf{\nabla}$ means the adaptive pooling size of $Q$ .

表 6: DFormer-S 中 GAA 的消融实验。$\mathbf{\nabla}\times\mathbf{\nabla}\times\mathbf{\nabla}$ 表示 $Q$ 的自适应池化大小。

| 核尺寸 | 参数量 | FLOPs | mIoU (%) |

|---|---|---|---|

| 3×3 | 18.7M | 22.0G | 52.7 |

| 5×5 | 18.7M | 23.3G | 53.1 |

| 7×7 | 18.7M | 25.6G | 53.6 |

| 9×9 | 18.7M | 28.8G | 53.6 |

Table 8: Different fusion manners in LEA module. Fusion manner refers to the operation to fuse the RGB and depth information in LEA (Eqn. 3).

表 8: LEA模块中的不同融合方式。融合方式指的是在LEA (Eqn. 3)中融合RGB和深度信息的操作。

| 融合方式 | #Params | FLOPs | mIoU(%) |

|---|---|---|---|

| Addition | 18.6M | 25.1G | 53.1 |

| Concatenation | 19.0M | 26.4G | 53.3 |

| Hadamard | 18.7M | 25.6G | 53.6 |

T yields comparable performance to the recent state-of-the-art method SPNet (Zhou et al., 2021a) with less than $10%$ computational cost (5.9M, 4.5G vs 150.3M, 68.1G). The significant improvement further illustrates the strong performance of DFormer.

T 的性能与近期最先进的方法 SPNet (Zhou et al., 2021a) 相当,但计算成本不到其 10% (5.9M, 4.5G vs 150.3M, 68.1G)。这一显著改进进一步证明了 DFormer 的强大性能。

3.3 ABLATION STUDY AND ANALYSIS


We perform ablation studies to investigate the effectiveness of each component. All experiments here are conducted under the RGB-D semantic segmentation setting on NYU DepthV2 (Silberman et al., 2012).


RGB-D vs. RGB pre training. To demonstrate the necessity of RGB-D pre training, we replace the depth maps with RGB images during pre training, a setting we call RGB pre training. Specifically, RGB pre training changes the number of input channels of the depth stem layer from 1 to 3, and during finetuning the single-channel depth map is replicated three times to match. Note that in finetuning, the input modalities and the model structure are identical in both settings. As shown in Tab. 3, our RGB-D pre training brings a 2.3% mIoU improvement for DFormer-B over RGB pre training on NYU DepthV2. We argue that this is because RGB-D pre training avoids the mismatched encoding of the 3D geometry features of depth maps caused by pretrained RGB backbones, and enhances the interaction efficiency between the two modalities. Tab. 12 and Fig. 7 in the appendix also support this. These results indicate that the RGB-D representation capacity learned during RGB-D pre training is crucial for segmentation accuracy.
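The finetuning input for the RGB-pretrained variant can be pictured as follows: the one-channel depth map is copied into three channels so that it fits a stem expecting three inputs. Because convolution is linear, three identical input channels are equivalent to a single channel passed through a kernel summed over the input channels. The replicated input therefore carries no extra information, and the gap between the two settings comes from what the pretrained weights have learned. The NumPy sketch below checks this with a hypothetical 1×1 stem; the real stem's kernel size and stride are not given here, but the argument holds for any linear convolution.

```python
import numpy as np


def replicate_depth(depth: np.ndarray) -> np.ndarray:
    """Copy an (H, W) depth map into a (3, H, W) tensor, one copy per channel."""
    return np.repeat(depth[None, :, :], 3, axis=0)


def pointwise_stem(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1x1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


rng = np.random.default_rng(0)
depth = rng.random((4, 5))
w_rgb_stem = rng.standard_normal((8, 3))  # hypothetical 3-input-channel stem weights

out_replicated = pointwise_stem(replicate_depth(depth), w_rgb_stem)
# Same result from a 1-channel stem whose kernel is summed over the 3 input channels.
out_single = pointwise_stem(depth[None], w_rgb_stem.sum(axis=1, keepdims=True))
print(np.allclose(out_replicated, out_single))
```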


Input features of the decoder. Benefiting from RGB-D pre training, the features of the RGB branch already fuse the information of both modalities effectively. Thus, our decoder uses only the RGB features $X^{rgb}$, which contain expressive clues, instead of both $X^{rgb}$ and $X^{d}$. As shown in Tab. 4, using only $X^{rgb}$ saves computational cost without a performance drop, whereas other methods usually need both $X^{rgb}$ and $X^{d}$. This difference further suggests that our RGB-D pre training pipeline and block are well suited to RGB-D segmentation.
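To see why feeding only $X^{rgb}$ saves computation, the hypothetical calculation below counts the channels entering the decoder across four encoder stages. The stage widths are illustrative placeholders, not DFormer's actual configuration. The depth width follows the default ratio $C^{d}/C^{rgb} = 1/2$ chosen in the channel-ratio ablation of this section. With that ratio, concatenating $X^{d}$ would enlarge the decoder input by 50%, and the cost of any per-pixel projection over those inputs grows in proportion.

```python
# Hypothetical per-stage RGB widths (placeholders, not DFormer's real config).
RGB_CHANNELS = [64, 128, 256, 512]
DEPTH_RATIO = 0.5  # C^d / C^rgb, the default chosen in the channel-ratio ablation


def decoder_input_channels(use_depth: bool) -> int:
    """Total channels entering the decoder when using X^rgb only or X^rgb plus X^d."""
    total = 0
    for c_rgb in RGB_CHANNELS:
        c_d = int(c_rgb * DEPTH_RATIO)
        total += c_rgb + (c_d if use_depth else 0)
    return total


rgb_only = decoder_input_channels(use_depth=False)
both = decoder_input_channels(use_depth=True)
print(rgb_only, both, both / rgb_only)
```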


Components in our RGB-D block. Our RGB-D block is composed of a base module, a GAA module, and an LEA module. We remove each of these three components from DFormer in turn, with the results shown in Tab. 5. Removing any one of them lowers mIoU by 1.0% to 1.5%, so all three are essential for DFormer. Moreover, we visualize the features around the RGB-D block in Fig. 5, where the output features capture more comprehensive details. As shown in Tab. 7, in the GAA module, fusing the depth features into $Q$ alone is adequate: further fusing them into $K$ and $V$ brings no improvement (53.6% in both cases) but adds 0.6M parameters and 2.9G FLOPs. As shown in Tab. 6, the performance of DFormer rises as the fixed pooling size of GAA increases and saturates at $7\times7$ (53.6%); a $9\times9$ pooling size adds FLOPs without further gain. We also replace the fusion operation in LEA with two alternatives, i.e., concatenation and addition. As shown in Tab. 8, using the depth features processed by a large-kernel depth-wise convolution as attention weights to reweight the RGB features via a simple Hadamard product achieves the best performance (53.6% vs 53.3% and 53.1%).
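To make the two attention modules concrete, the NumPy sketch below follows the description in this ablation. GAA pools a depth-fused query to a fixed $7\times7$ grid while taking $K$ and $V$ from RGB features only. LEA turns depth features into weights with a large-kernel depth-wise convolution and applies them to the RGB features with a Hadamard product. This is a simplified illustration, not the authors' implementation, and Eqn. 3 is not reproduced here. The following are all our assumptions: fusing depth into the query by addition, a 1×1 projection of depth features to the RGB width, a single attention head, nearest-neighbour upsampling, and no normalization or output projection. All function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def project(w, x):
    """Per-pixel linear map (1x1 conv): w is (C_out, C_in), x is (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)


def depthwise_conv2d(x, kernels):
    """Zero-padded 'same' depth-wise cross-correlation; kernels: (C, k, k), k odd."""
    c, h, w = x.shape
    p = kernels.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c, h, w))
    for i in range(kernels.shape[-1]):
        for j in range(kernels.shape[-1]):
            out += kernels[:, i, j, None, None] * xp[:, i:i + h, j:j + w]
    return out


def adaptive_avg_pool(x, size):
    """Average-pool (C, H, W) to (C, size, size); assumes H and W divisible by size."""
    c, h, w = x.shape
    return x.reshape(c, size, h // size, size, w // size).mean(axis=(2, 4))


def lea_sketch(x_rgb, x_d, w_d, kernels):
    """Large-kernel depth-wise conv on projected depth gives weights for the RGB features."""
    attn = depthwise_conv2d(project(w_d, x_d), kernels)
    return x_rgb * attn  # Hadamard product


def gaa_sketch(x_rgb, x_d, w_q, w_k, w_v, w_d, pool=7):
    """Depth is fused into Q only; K and V come from the RGB features alone."""
    c, h, w = x_rgb.shape
    fused = x_rgb + project(w_d, x_d)  # fusion by addition is an assumption
    q = project(w_q, adaptive_avg_pool(fused, pool)).reshape(c, -1).T  # (pool*pool, C)
    k = project(w_k, x_rgb).reshape(c, -1).T  # (H*W, C)
    v = project(w_v, x_rgb).reshape(c, -1).T  # (H*W, C)
    attn = softmax(q @ k.T / np.sqrt(c), axis=-1)  # (pool*pool, H*W)
    out = (attn @ v).T.reshape(c, pool, pool)
    # Upsample back to (H, W) by nearest-neighbour repetition.
    return out.repeat(h // pool, axis=1).repeat(w // pool, axis=2)


rng = np.random.default_rng(0)
C, Cd, H, W = 8, 4, 14, 14  # depth width C^d = C^rgb / 2
x_rgb, x_d = rng.standard_normal((C, H, W)), rng.standard_normal((Cd, H, W))
w_d = rng.standard_normal((C, Cd))
w_q, w_k, w_v = (rng.standard_normal((C, C)) for _ in range(3))
print(lea_sketch(x_rgb, x_d, w_d, rng.standard_normal((C, 7, 7))).shape)
print(gaa_sketch(x_rgb, x_d, w_q, w_k, w_v, w_d).shape)
```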


Table 9: Comparison under RGB-only pretraining. 'NYU' and 'SUN' denote mIoU (%) on NYU DepthV2 and SUN-RGBD, respectively.


| Model | #Params | FLOPs | NYU | SUN |
|---|---|---|---|---|
| CMX (MiT-B2) | 66.6M | 67.6G | 54.4 | 49.7 |
| DFormer-B | 29.5M | 41.9G | 53.3 | 49.5 |
| DFormer-L | 39.0M | 65.7G | 55.4 | 50.6 |

Table 10: Comparison under RGB-D pretraining. 'NYU' and 'SUN' denote mIoU (%) on NYU DepthV2 and SUN-RGBD, respectively.


| Model | #Params | FLOPs | NYU | SUN |
|---|---|---|---|---|
| CMX (MiT-B2) | 66.6M | 67.6G | 55.8 | 51.1 |
| DFormer-B | 29.5M | 41.9G | 55.6 | 51.2 |
| DFormer-L | 39.0M | 65.7G | 57.2 | 52.5 |

Channel ratio between RGB and depth. RGB images contain information about object color, texture, shape, and surroundings. In contrast, depth images typically convey the distance from each pixel to the camera. Here, we investigate the channel ratio used to encode the depth information. In Fig. 6, we present the performance of DFormer-S with different channel ratios, i.e., $C^{d}/C^{rgb}$. When the channel ratio exceeds 1/2, the improvement is trivial while the computational burden increases significantly. Therefore, we set the ratio to 1/2 by default.
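A rough cost model helps explain why widening the depth branch quickly becomes expensive. Assume, as a simplification of ours rather than a measurement, that the depth branch mirrors the RGB branch with every width scaled by $r = C^{d}/C^{rgb}$. Dense layers such as 1×1 convolutions and linear layers cost roughly $C_{in} \cdot C_{out}$ per pixel and so scale with $r^2$, while depth-wise layers scale with $r$. The depth branch's cost relative to the RGB branch then lies between these two bounds.

```python
def relative_depth_branch_cost(ratio: float) -> tuple[float, float]:
    """Depth-branch cost relative to the RGB branch for width ratio r = C^d / C^rgb,
    under the mirrored-branch assumption: (dense layers ~ r**2, depth-wise layers ~ r)."""
    return ratio ** 2, ratio


for r in (0.25, 0.5, 0.75, 1.0):
    dense, depthwise = relative_depth_branch_cost(r)
    print(f"r={r}: dense x{dense:.4f}, depth-wise x{depthwise:.2f}")
```

Under this model, moving from $r = 1/2$ to $r = 1$ quadruples the cost of the dense layers in the depth branch, which is consistent with the small accuracy gain above 1/2 not justifying the extra computation.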


Figure 6: Performance on different channel ratios $C^{d}/C^{r g b}$ based on DFormer-S. $C^{r g b}$ is fixed and we adjust $C^{d}$ to get different ratios.


Applying RGB-D pre training to CMX. To verify the effect of RGB-D pre training on other methods and to make the comparison fairer, we pretrain CMX (MiT-B2) on the RGB-D data of ImageNet. As shown in Tab. 10, this improves its mIoU by about 1.4% on both NYU DepthV2 and SUN-RGBD. Under RGB-D pre training, DFormer-L still outperforms CMX (MiT-B2) by 1.4% mIoU on both datasets with about 41% fewer parameters. We attribute this to the pretrained fusion weights within DFormer, which achieve better and more efficient fusion of RGB-D data. In addition, we report RGB-pretrained DFormers in Tab. 9 for further insight. A similar pattern appears under RGB-only pre training: DFormer-L surpasses CMX (MiT-B2) by 1.0% on NYU DepthV2 and 0.9% on SUN-RGBD, although DFormer-B trails it slightly.
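The gains discussed here can be read off Tab. 9 and Tab. 10. The short script below transcribes only the mIoU values from those two tables. It computes each model's gain from RGB-D over RGB-only pre training, and DFormer-L's margin over CMX (MiT-B2) within each setting.

```python
# mIoU (%) on (NYU DepthV2, SUN-RGBD), transcribed from Tab. 9 and Tab. 10.
RGB_PRETRAIN = {"CMX (MiT-B2)": (54.4, 49.7), "DFormer-B": (53.3, 49.5), "DFormer-L": (55.4, 50.6)}
RGBD_PRETRAIN = {"CMX (MiT-B2)": (55.8, 51.1), "DFormer-B": (55.6, 51.2), "DFormer-L": (57.2, 52.5)}


def gain(model: str) -> tuple[float, ...]:
    """mIoU gain of RGB-D over RGB-only pre training on (NYU, SUN)."""
    return tuple(round(d - r, 1) for r, d in zip(RGB_PRETRAIN[model], RGBD_PRETRAIN[model]))


def margin(table: dict, model: str, baseline: str = "CMX (MiT-B2)") -> tuple[float, ...]:
    """mIoU margin of `model` over `baseline` within one pre-training setting."""
    return tuple(round(m - b, 1) for m, b in zip(table[model], table[baseline]))


for name in RGBD_PRETRAIN:
    print(name, "gain from RGB-D pre training:", gain(name))
print("DFormer-L vs CMX, RGB-D pre training:", margin(RGBD_PRETRAIN, "DFormer-L"))
print("DFormer-L vs CMX, RGB pre training:", margin(RGB_PRETRAIN, "DFormer-L"))
```

The DFormer-B gain on NYU DepthV2 (2.3%) is the same figure reported in the RGB-D vs. RGB pre training ablation above.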


Discussion on the generalization to other modalities. Through RGB-D pre training, DFormer learns to make RGB and depth features interact. To verify whether this interaction still works when depth is replaced by another modality, we apply DFormer to benchmarks with other modalities, i.e., RGB-T on MFNet (Ha et al., 2017) and RGB-L on KITTI-360 (Liao et al., 2021). As shown in Tab. 11 (comparisons with more methods are given in Tab. 15 and Tab. 16), RGB-D pre training still improves performance with these modalities (by 0.8% mIoU on MFNet and 0.9% on KITTI-360 for DFormer-L). Nevertheless, the improvement is smaller than on RGB-D scenes (1.8% on NYU DepthV2, see Tab. 9 and Tab. 10).

Table 11: Results on the RGB-T semantic segmentation benchmark MFNet (Ha et al., 2017) and the RGB-L semantic segmentation benchmark KITTI-360 (Liao et al., 2021). '(RGB)' and '(RGBD)' denote RGB-only and RGB-D pretraining, respectively.

| Model | #Params | FLOPs | MFNet | KITTI-360 |
|---|---|---|---|---|
| CMX-B2 | 66.6M | 67.6G | 58.2 | 64.3 |
| CMX-B4 | 139.9M | 134.3G | 59.7 | 65.5* |
| CMNeXt-B2 | 65.1M | 65.5G | 58.4* | 65.3 |
| CMNeXt-B4 | 135.6M | 132.6G | 59.9 | 65.6* |
| Ours-L(RGB) | 39.0M | 65.7G | 59.5 | 65.2 |
| Ours-L(RGBD) | 39.0M | 65.7G | 60.3 | 66.1 |

To address this limitation, a natural solution is to further scale the pre training of DFormer to other modalities. Two ways of coping with the lack of large-scale datasets for other modalities are worth trying: synthesizing pseudo-modal data, and pre training separately on single-modality datasets. For the former, existing generation methods can produce pseudo data for other modalities. For example, Pseudo-lidar (Wang et al., 2019) proposes a method to generate pseudo-LiDAR data from depth maps, and N-ImageNet (Kim et al., 2021) obtains event data for ImageNet. Collecting data and training modality generators for more modalities is also worth exploring. For the latter, we can separately pretrain a model for the supplementary modality and then combine it with the RGB model. We will explore these methods to bring more significant improvements for DFormer in more multimodal scenes.
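The pseudo-LiDAR idea mentioned above rests on back-projecting each depth pixel into 3D with the pinhole camera model: $X = (u - c_x) Z / f_x$ and $Y = (v - c_y) Z / f_y$, where $(u, v)$ is the pixel position, $Z$ its depth, and $f_x, f_y, c_x, c_y$ the camera intrinsics. The NumPy sketch below shows only this conversion step. The intrinsics in the example are made-up values, and the downstream use of the points (Wang et al. feed them to LiDAR-based 3D detectors) is beyond this sketch.

```python
import numpy as np


def depth_to_points(depth: np.ndarray, fx: float, fy: float,
                    cx: float, cy: float) -> np.ndarray:
    """Back-project a metric depth map (H, W) to an (N, 3) point cloud in camera
    coordinates with the pinhole model; pixels with depth <= 0 are dropped."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]  # v: row index, u: column index
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]


# Made-up intrinsics for illustration only.
depth = np.array([[2.0, 2.0], [0.0, 2.0]])
print(depth_to_points(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```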


4 RELATED WORK


RGB-D Scene Parsing. In recent years, with the rise of deep learning technologies, e.g., CNNs (He et al., 2016) and Transformers (Vaswani et al., 2017; Li et al., 2023a), significant progress has been made in scene parsing (Xie et al., 2021; Yin et al., 2022; Chen et al., 2023; Zhang et al., 2023d), one of the core pursuits of computer vision. However, most methods still struggle with challenging real-world scenes (Li et al., 2023b; Sun et al., 2020), because they rely only on RGB images, which provide distinct colors and textures but no 3D geometric information. To overcome these challenges, researchers combine images with depth maps for a more comprehensive understanding of scenes.


Semantic segmentation and salient object detection are two active areas in RGB-D scene parsing. The former aims to produce a per-pixel category prediction for a given scene, while the latter aims to capture the most attention-grabbing objects. To achieve interaction and alignment between the RGB-D modalities, the dominant methods invest considerable effort in building fusion modules that bridge the RGB and depth features extracted by two parallel pretrained backbones. For example, methods like CMX (Zhang et al., 2023a), Token Fusion (Wang et al., 2022), and HiDANet (Wu et al., 2023) dynamically fuse the RGB-D representations from RGB and depth encoders and aggregate them in the decoder. Indeed, the evolution of fusion manners has dramatically pushed the performance boundary in these RGB-D scene parsing applications. Nevertheless, the three common issues discussed in Sec. 1 remain unresolved. Another line of work focuses on the design of operators (Wang & Neumann, 2018; Wu et al., 2020; Cao et al., 2021; Chen et al., 2021a) to extract complementary information from RGB-D modalities. For instance, methods like ShapeConv (Cao et al., 2021), SGNet (Chen et al., 2021a), and Z-ACN (Wu et al., 2020) propose depth-aware convolutions, which efficiently integrate RGB features with 3D spatial information to greatly enhance the capability of perceiving geometry. Although these methods are efficient, their improvements are usually limited because they insufficiently extract and utilize the 3D geometry information in the depth modality.


Multi-modal Learning. The great success of the pretrain-and-finetune paradigm in natural language processing and computer vision has been extended to the multi-modal domain, where the learned transferable representations have exhibited remarkable performance on a wide variety of downstream tasks. Existing multi-modal learning methods cover a large number of modalities, e.g., image and text (Castrejon et al., 2016; Chen et al., 2020b; Radford et al., 2021; Zhang et al., 2021c; Wu et al., 2022), text and video (Akbari et al., 2021), text and 3D mesh (Zhang et al., 2023c), and image, depth, and video (Girdhar et al., 2022). These methods