TALKNCE: IMPROVING ACTIVE SPEAKER DETECTION WITH TALK-AWARE CONTRASTIVE LEARNING

ABSTRACT

The goal of this work is Active Speaker Detection (ASD), the task of determining whether a person is speaking in a series of video frames. Previous works have addressed the task by exploring network architectures, while learning effective representations has been less explored. In this work, we propose TalkNCE, a novel talk-aware contrastive loss. The loss is applied only to the parts of the full segments in which a person on the screen is actually speaking. This encourages the model to learn effective representations through the natural correspondence between speech and facial movements. Our loss can be jointly optimized with the existing objectives for training ASD models without the need for additional supervision or training data. The experiments demonstrate that our loss can be easily integrated into existing ASD frameworks and improves their performance. Our method achieves state-of-the-art performance on the AVA-Active Speaker and ASW datasets.

Index Terms— Active Speaker Detection, Multi-Modal Speech Processing, InfoNCE loss

1. INTRODUCTION

In recent years, the way we communicate has shifted from in-person exchanges to online audio-visual interactions. With this paradigm shift, identifying the active speaker has become crucial for effective communication and for understanding conversations in context. In multi-modal conversations, active speaker detection (ASD) serves as a fundamental preprocessing module for speech-related tasks, including audio-visual speech recognition [5], speech separation [6, 7], and speaker diarisation [8, 9].

ASD in real-world scenarios is a challenging task that requires effectively integrating audio-visual information and leveraging its long-term relationships. To meet these requirements, ASD architectures are designed to produce corresponding audio and visual features and to analyze them over long periods to capture crucial temporal context. General ASD frameworks begin with modality-specific encoders that extract embeddings, followed by audio-visual fusion techniques that enable interaction between the heterogeneous modalities. A prevalent fusion method uses a cross-attention mechanism [2, 4, 10] to connect the visual stream with the synchronized audio stream. After the two features are combined, self-attention [2, 4] or RNN-based architectures [3, 11] are employed to track the active speaker consistently throughout the utterance. These approaches exploit the complementary information of both modalities. However, learning high-quality representations for the multi-modal ASD task has been less explored.

Fig. 1: Performance comparison on the AVA-Active Speaker [1] validation set. Our TalkNCE loss consistently improves the performance of existing ASD models [2, 3, 4].

In this paper, we focus on learning strong phonetic representations for the ASD task. To this end, we propose a novel supervised talk-aware contrastive learning strategy built on a new loss function, the TalkNCE loss. The loss acts on audio and visual features in a frame-wise rather than a chunk-wise manner [12, 13] in order to learn the transient phonetic representations required for audio-visual matching. Furthermore, the proposed loss is computed only on the active speaking sections, which are extracted using the ASD labels. The supervision imposed by the TalkNCE loss enables the encoders to concentrate on the fine details of audio-visual correspondence, thus enhancing the effect of audio-visual fusion. In contrast to approaches that use pre-trained audio-visual embeddings [11, 14], our models are trained end to end by combining the proposed TalkNCE loss with the existing ASD classification losses. Our contrastive learning strategy is model-agnostic: as shown in Fig. 1, adding the TalkNCE loss improves the performance of several existing ASD models. In particular, when combined with [4], our method outperforms the previous state-of-the-art ASD method on the AVA-Active Speaker and ASW datasets.

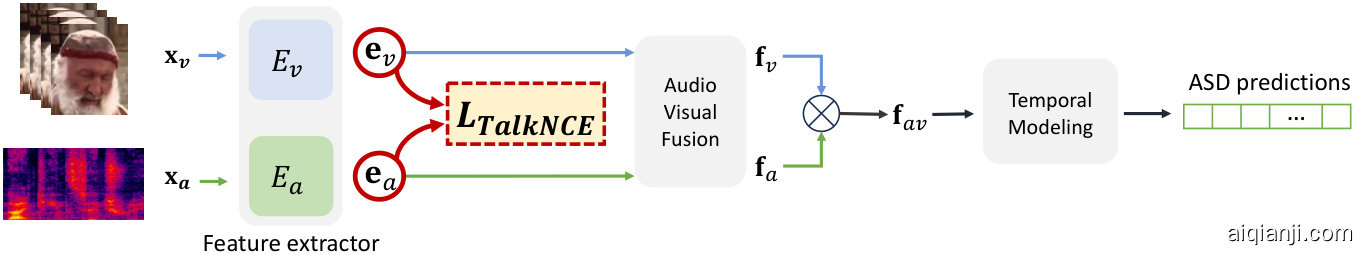

Fig. 2: General framework for the active speaker detection task. Our loss is applied to the audio and visual embeddings before fusion. $\otimes$ denotes the concatenation of two features along the temporal dimension.

Our contributions can be summarized as follows: (1) We propose the TalkNCE loss, a novel contrastive loss for ASD that forces the model to exploit information from the alignment of the audio and visual streams. (2) The proposed loss can be plugged into multiple existing ASD frameworks, and the additional supervision it imposes improves their performance without any additional data. (3) Our method achieves state-of-the-art performance on the AVA-Active Speaker and ASW datasets.

2. METHOD

2.1. Preliminaries

This section describes the general ASD framework widely used in the literature [1, 2, 3, 4, 15]. As shown in Fig. 2, ASD frameworks usually consist of feature extractors, audio-visual fusion, and temporal modeling. Given the input video frames, every face is detected, cropped, stacked, and converted to grayscale images $\mathbf{x}_{v}$. The corresponding audio waveform is converted into a mel-spectrogram $\mathbf{x}_{a}$ via the short-time Fourier transform. The audio encoder $E_{a}(\cdot)$ and the visual encoder $E_{v}(\cdot)$ then encode the inputs into audio and visual embeddings, respectively:

$$
\mathbf{e}_{a}=E_{a}(\mathbf{x}_{a}),\quad\mathbf{e}_{v}=E_{v}(\mathbf{x}_{v})
$$
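The preprocessing described above can be sketched in NumPy as follows. This is a minimal illustration, not the pipeline of any cited work: the ITU-R BT.601 luma weights for grayscale conversion, the 16 kHz sampling rate, the 25 ms window with a 10 ms hop (about 100 audio frames per second, i.e. roughly four per frame if the video runs at 25 fps), and the 80 mel bands are all assumptions chosen for the example.

```python
import numpy as np

def to_grayscale(frames_rgb: np.ndarray) -> np.ndarray:
    """(T, H, W, 3) RGB face crops -> (T, H, W) grayscale, using ITU-R BT.601 luma weights."""
    return frames_rgb @ np.array([0.299, 0.587, 0.114])

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr: int, n_fft: int, n_mels: int) -> np.ndarray:
    """Triangular filters evenly spaced on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        left, center, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(wave, sr=16000, n_fft=512, win=400, hop=160, n_mels=80):
    """Short-time Fourier transform -> power spectrum -> mel filterbank -> log."""
    window = np.hanning(win)
    n_frames = 1 + (len(wave) - win) // hop
    frames = np.stack([wave[t * hop : t * hop + win] * window for t in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2  # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T           # (n_frames, n_mels)
    return np.log(mel + 1e-6)                                  # small floor avoids log(0)
```

In a real system the encoders $E_{a}$ and $E_{v}$ would consume these arrays; library routines (for example torchaudio or librosa mel transforms) would normally replace the hand-written filterbank.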

To integrate information across the two modalities, the two features are fed into an audio-visual fusion module. Recent methods such as TalkNet [2] and LoCoNet [4] use cross-attention layers for multi-modal fusion. Through cross-attention, the audio embedding is aligned with the visual information of the corresponding speaker. The attention-weighted features $\mathbf{f}_{a}$ and $\mathbf{f}_{v}$ are then concatenated to form the fused audio-visual features $\mathbf{f}_{av}$.
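A minimal NumPy sketch of bidirectional cross-attention fusion in the spirit of the methods above: each stream attends to the other with single-head scaled dot-product attention, and the attention-weighted features are concatenated frame by frame. Residual connections, multiple heads, and layer normalization are omitted, the projection matrices are random placeholders, and concatenation along the feature axis is an assumption of this sketch rather than a detail taken from TalkNet or LoCoNet.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query, context, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: each `query` frame attends to all `context` frames."""
    q, k, v = query @ w_q, context @ w_k, context @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (T_query, T_context), rows sum to 1
    return weights @ v

def fuse(e_a, e_v, params):
    """e_a, e_v: (T, D) audio / visual embeddings. Returns f_a, f_v and the fused f_av."""
    f_a = cross_attention(e_a, e_v, *params["audio_to_visual"])  # audio frames query the visual stream
    f_v = cross_attention(e_v, e_a, *params["visual_to_audio"])  # visual frames query the audio stream
    f_av = np.concatenate([f_a, f_v], axis=-1)                   # (T, 2 * D) if projections keep width D
    return f_a, f_v, f_av
```

The parameter names `audio_to_visual` and `visual_to_audio` are hypothetical; in practice each would hold learned query, key, and value projections.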

Fig. 3: Our TalkNCE loss is applied only to active speaking sections (blue) in a frame-wise manner. Audio and visual embeddings of synchronized video frames are positive pairs (green), while others are negative pairs (orange).

Finally, several works [2, 4, 3, 16, 17] employ a temporal modeling module that exploits longer context to predict the active speaker accurately. Besides the self-attention modules in LoCoNet [4], Gated Recurrent Units (GRU), Long Short-Term Memory (LSTM) networks, and Bidirectional LSTMs (BiLSTM) are frequently used for temporal modeling.
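As an illustration of temporal modeling, the following sketch runs a single-layer GRU over a sequence of fused per-frame features. It is a generic GRU written in NumPy (gate conventions differ slightly between libraries), not the temporal module of any specific cited model; the weights would normally be learned, and the flat parameter tuple is a hypothetical layout chosen for this example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_forward(x, params, h0=None):
    """Single-layer GRU over x of shape (T, D_in); returns all hidden states, shape (T, H).

    params = (W_z, U_z, b_z, W_r, U_r, b_r, W_h, U_h, b_h) with W_* (D_in, H), U_* (H, H), b_* (H,).
    """
    W_z, U_z, b_z, W_r, U_r, b_r, W_h, U_h, b_h = params
    h = np.zeros(U_z.shape[0]) if h0 is None else h0
    states = []
    for x_t in x:                                          # left-to-right over frames
        z = sigmoid(x_t @ W_z + h @ U_z + b_z)             # update gate
        r = sigmoid(x_t @ W_r + h @ U_r + b_r)             # reset gate
        h_cand = np.tanh(x_t @ W_h + (r * h) @ U_h + b_h)  # candidate state
        h = (1.0 - z) * h + z * h_cand                     # blend previous and candidate state
        states.append(h)
    return np.stack(states)
```

A per-frame speaking score could then come from a linear layer applied to each returned state; a BiLSTM would additionally run a backward pass and concatenate both directions.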

2.2. Contrastive Learning with TalkNCE Loss

The training objective typically used in the ASD task [1, 2, 3, 4, 15] can be represented as:

$$
\mathcal{L}_{model}=\mathcal{L}_{av}+\lambda_{a}\cdot\mathcal{L}_{a}+\lambda_{v}\cdot\mathcal{L}_{v},
$$

where $\mathcal{L}_{av}$ denotes the cross-entropy loss between the ground-truth labels and the final frame-level predictions after temporal modeling. $\mathcal{L}_{a}$ and $\mathcal{L}_{v}$ are auxiliary cross-entropy losses, weighted by $\lambda_{a}$ and $\lambda_{v}$, that are computed on the uni-modal features $\mathbf{f}_{a}$ and $\mathbf{f}_{v}$ to make the model use both modalities in a balanced manner.
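The objective $\mathcal{L}_{model}$ can be written out directly. The sketch below assumes two-class (speaking / not speaking) per-frame logits for each of the three heads and uses the same frame labels for all of them; the values of $\lambda_{a}$ and $\lambda_{v}$ are left to the caller because the text does not specify them.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean cross-entropy of per-frame logits (T, 2) against integer labels (T,) in {0, 1}."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

def asd_model_loss(logits_av, logits_a, logits_v, labels, lambda_a, lambda_v):
    """L_model = L_av + lambda_a * L_a + lambda_v * L_v (same frame labels for all heads, assumed)."""
    return (cross_entropy(logits_av, labels)
            + lambda_a * cross_entropy(logits_a, labels)
            + lambda_v * cross_entropy(logits_v, labels))
```

With uninformative (all-zero) logits every term equals $\log 2$, so the total is $(1+\lambda_{a}+\lambda_{v})\log 2$, which is a quick sanity check for an implementation.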

In addition to the cross-entropy losses, we introduce the TalkNCE loss, a talk-aware contrastive loss that supervises the encoders to learn audio-visual correspondence. The proposed loss pulls an audio-visual embedding pair together if both embeddings come from the same video frame and pushes them apart otherwise. This encourages the encoders to capture salient cues for audio-visual synchronization and to focus on fine-grained phonetic details, such as the frame-level correlation between the audio and visual modalities.

Table 1: Comparison of ASD performance on the AVA-Active Speaker validation set. ∗Results of previous methods are taken from [3]. E2E refers to end-to-end training.

| Method | Multi-candidate? | E2E? | mAP (%) |
|---|---|---|---|
| ASC* [15] | | | 87.1 |
| MAAS* [18] | | | 88.8 |
| TalkNet* [2] | | | 92.3 |
| ASDNet* [19] | | | 93.5 |
| EASEE-50* [16] | | | 94.1 |
| SPELL* [17] | | | 94.2 |
| Light-ASD* [3] | | | 94.1 |
| TS-TalkNet [10] | | | 93.9 |
| LoCoNet [4] | | | 95.2 |
| Ours | | | 95.5 |

Our new loss optimizes frame-level matching between paired audio and visual streams, as illustrated in Fig. 3. Given the audio and visual embeddings $\mathbf{e}_{a}$ and $\mathbf{e}_{v}$, the active speaking regions are extracted using the original ASD labels. Let $T_{act}$ be the length of the active speaking region, and let $\{\mathbf{e}_{a,i}\}_{i=1}^{T_{act}}$ and $\{\mathbf{e}_{v,i}\}_{i=1}^{T_{act}}$ be the frame-level audio and visual embeddings of that region. The TalkNCE loss $\mathcal{L}_{TalkNCE}$ is then defined as follows:

$$
\mathcal{L}_{TalkNCE}=-\frac{1}{T_{act}}\sum_{i=1}^{T_{act}}\log\frac{\exp(s_{i,i}/\tau)}{\sum_{j=1}^{T_{act}}\mathbb{1}_{[j\neq i]}\exp(s_{i,j}/\tau)},
$$

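where $s_{i,j}=\frac{\mathbf{e}_{v,i}^{\top}\mathbf{e}_{a,j}}{\lVert\mathbf{e}_{v,i}\rVert\,\lVert\mathbf{e}_{a,j}\rVert}$ is the similarity between the L2-normalized $i$-th visual embedding and $j$-th audio embedding, and $\tau$ is a temperature constant fixed to 1. Combining the proposed TalkNCE loss with the ASD loss, the final training objective used in this paper is formulated as follows: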

$$
\mathcal{L}=\mathcal{L}_{model}+\lambda\cdot\mathcal{L}_{TalkNCE},
$$

where $\lambda$ is the weight of the TalkNCE loss.
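The TalkNCE loss can be sketched in NumPy for a single face track. This sketch follows the equation above literally, including the indicator that removes $j = i$ from the denominator, and it assumes that $s_{i,j}$ is the cosine similarity of L2-normalized embeddings. Returning zero for tracks with fewer than two active frames (where no negatives exist) is a hypothetical design choice, not something stated in the text.

```python
import numpy as np

def talknce_loss(e_a, e_v, labels, tau=1.0, eps=1e-8):
    """TalkNCE for one face track, following the equation above.

    e_a, e_v: (T, D) frame-level audio / visual embeddings.
    labels:   (T,) binary ASD labels; only frames labelled 1 (actively speaking) are used.
    """
    active = np.asarray(labels).astype(bool)
    a, v = e_a[active], e_v[active]
    t_act = a.shape[0]
    if t_act < 2:
        return 0.0  # hypothetical choice: no negatives exist, so the track contributes nothing
    # Assumed reading of s_{i,j}: cosine similarity of L2-normalised embeddings.
    a = a / (np.linalg.norm(a, axis=1, keepdims=True) + eps)
    v = v / (np.linalg.norm(v, axis=1, keepdims=True) + eps)
    s = (v @ a.T) / tau                                          # s[i, j]: visual frame i vs. audio frame j
    positives = np.diag(s)                                       # synchronised pairs from the same frame
    negatives = np.where(np.eye(t_act, dtype=bool), -np.inf, s)  # indicator 1[j != i]
    row_max = negatives.max(axis=1, keepdims=True)               # stable log-sum-exp
    log_denom = row_max[:, 0] + np.log(np.exp(negatives - row_max).sum(axis=1))
    return float(np.mean(log_denom - positives))
```

The final objective is then `l_model + lam * talknce_loss(e_a, e_v, labels)` for a chosen weight `lam`; how per-track losses are averaged over a batch is likewise an assumption left open here.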

3. EXPERIMENTS

3.1. Datasets

The AVA-Active Speaker dataset [1] is a benchmark for active speaker detection built from Hollywood movies. It contains 262 videos spanning 38.5 hours, of which 120, 33, and 109 videos form the training, validation, and test sets, respectively. Because the videos come from movies, the dataset includes some dubbed videos.

Table 2: Performance of baseline models trained with and without the TalkNCE loss. †Results reproduced with the code released by the original works.

| Method | mAP (%) |
|---|---|
| TalkNet† | 92.0 |
| TalkNet + $\mathcal{L}_{TalkNCE}$ | 92.5 |
| Light-ASD† | 93.9 |
| Light-ASD + $\mathcal{L}_{TalkNCE}$ | 94.2 |
| LoCoNet† | 95.2 |
| LoCoNet + $\mathcal{L}_{TalkNCE}$ | 95.5 |

The Active Speakers in the Wild (ASW) dataset [11] is derived from the audio-only speaker diarisation dataset VoxConverse [9] by using a subset of its videos and exploiting its diarisation annotations. Unlike AVA-Active Speaker, ASW excludes dubbed videos to ensure synchronization between lip movements and speech. Videos in the dataset total 30.9 hours, and the ratio of an active track duration is $56