Pillars of Grammatical Error Correction: Comprehensive Inspection Of Contemporary Approaches In The Era of Large Language Models


Abstract


In this paper, we carry out experimental research on Grammatical Error Correction (GEC), delving into the nuances of single-model systems, comparing the efficiency of ensembling and ranking methods, and exploring the application of large language models to GEC as single-model systems, as parts of ensembles, and as ranking methods. We set new state-of-the-art performance with $F_{0.5}$ scores of 72.8 on CoNLL-2014-test and 81.4 on BEA-test. To support further advancements in GEC and ensure the reproducibility of our research, we make our code, trained models, and systems' outputs publicly available.

1 Introduction

Grammatical Error Correction (GEC) is the task of correcting human text for spelling and grammatical errors. There is a wide variety of GEC approaches and model architectures. In recent years, most systems have used Transformer-based architectures (Bryant et al., 2023). A current trend involves writing prompts for Large Language Models (LLMs) such as GPT-4 (OpenAI, 2023) that would generate grammatical corrections (Loem et al., 2023; Coyne et al., 2023; Wu et al., 2023; Fang et al., 2023).

The varied approaches within GEC each possess unique strengths and limitations. Combining several single-model GEC systems through ensembling or ranking may smooth out their weaknesses and lead to better overall performance (Susanto et al., 2014). Even quite simple ensembling methods, such as majority voting (Tarnavskyi et al., 2022) or logistic regression (Qorib et al., 2022), may work surprisingly well. Combining single-model systems is also often straightforward from an implementation perspective. Because only the outputs of the models are required for many ensembling algorithms, there is no need to retrain models or perform inference passes iteratively. A further review of related work is presented at the end of the paper and alongside the descriptions of the considered methods.

Our contributions are the following:

- Comprehensive comparison of GEC methods. We reproduce, evaluate, and compare the most promising existing methods in GEC, both single-model systems and ensembles. We show that the use of ensembling methods is crucial to obtaining state-of-the-art performance in GEC.

- Establishing new state-of-the-art baselines. We show that simple ensembling by majority vote outperforms more complex approaches and significantly boosts performance. We push the boundaries of GEC quality and achieve new state-of-the-art results on the two most common GEC evaluation datasets: $F_{0.5}=72.8$ on CoNLL-2014-test and $F_{0.5}=81.4$ on BEA-test.

- Exploring the application of LLMs for GEC. We thoroughly investigate different scenarios for leveraging large language models (LLMs) for GEC: 1) as single-model systems in a zero-shot setting, 2) as fine-tuned single-model systems, 3) as single-model systems within ensembles, and 4) as a combining algorithm for ensembles. To the best of our knowledge, we are the first to explore using GPT-4 to rank GEC edits, which contributes to a notable improvement in the Recall of ensemble systems.

- Commitment to open science. To foster transparency and encourage further research, we open-source all our models, their outputs on evaluation datasets, and the accompanying code. This ensures the reproducibility of our work and provides a foundation for future advancements in the field.

2 Data for Training and Evaluation


We use the following GEC datasets for training models (Table 1):


1. Lang-8, an annotated dataset from the Lang-8 Corpus of Learner English (Tajiri et al., 2012);
2. NUCLE, the National University of Singapore Corpus of Learner English (Dahlmeier et al., 2013);
3. FCE, the First Certificate in English dataset (Yannakoudakis et al., 2011);
4. W&I, the Write & Improve Corpus (Bryant et al., 2019), also known as BEA-Train.

We also use a larger synthetic version of Lang-8 with target sentences produced by the T5 model (Raffel et al., 2020):

5. cLang-8 (Rothe et al., 2021);

and synthetic data based on two monolingual datasets:

6. Troy-1BW (Tarnavskyi et al., 2022), produced from the One Billion Word Benchmark (Chelba et al., 2014);
7. Troy-Blogs (Tarnavskyi et al., 2022), produced from the Blog Authorship Corpus (Schler et al., 2006).

Table 1: Statistics of GEC datasets used in this work for training and evaluation.

| # | Dataset | Part | # sentences | # tokens | Edit ratio (%) |
|---|---|---|---|---|---|
| 1 | Lang-8 | Train | 1.04M | 11.86M | 42 |
| 2 | NUCLE | Train | 57.0k | 1.16M | 62 |
| 2 | NUCLE | Test | 1.3k | 30k | 90 |
| 3 | FCE | Train | 28.0k | 455k | 62 |
| 4 | W&I+LOCNESS | Train | 34.3k | 628.7k | 67 |
| 4 | W&I+LOCNESS | Dev | 4.4k | 85k | 64 |
| 4 | W&I+LOCNESS | Test | 4.5k | 62.5k | N/A |
| 5 | cLang-8 | Train | 2.37M | 28.0M | 58 |
| 6 | Troy-1BW | Train | 1.2M | 30.88M | 100 |
| 7 | Troy-Blogs | Train | 1.2M | 21.49M | 100 |

For evaluation, we use the current standard evaluation sets for the GEC domain: the test set from the CoNLL-2014 GEC Shared Task (Ng et al., 2014), and the dev and test components of the W&I+LOCNESS Corpus from the BEA-2019 GEC Shared Task (BEA-dev and BEA-test) (Bryant et al., 2019). For BEA-test, submissions were made through the current competition website. For each dataset, we report Precision, Recall, and $F_{0.5}$ scores. To ensure an apples-to-apples comparison with previously reported GEC results, we evaluate CoNLL-2014-test with M2scorer (Dahlmeier and Ng, 2012), and BEA-dev with ERRANT (Bryant et al., 2017).
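The $F_{0.5}$ score reported throughout weights Precision twice as heavily as Recall: $F_{\beta} = (1+\beta^2)\,P\,R \,/\, (\beta^2 P + R)$ with $\beta = 0.5$. The following is a minimal sketch of that formula only; it is not M2scorer or ERRANT, which also handle edit extraction and alignment. The function name `f_beta` is illustrative.

```python
def f_beta(precision: float, recall: float, beta: float = 0.5) -> float:
    """F-beta from Precision and Recall given on the same scale (e.g. percentages)."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# T5-11B on CoNLL-2014-test (Table 2): Precision 70.9, Recall 56.5.
score = f_beta(70.9, 56.5)
print(round(score, 1))  # 67.5, the F0.5 value listed for this system
```

Because the published Precision and Recall values are themselves rounded, recomputing $F_{0.5}$ from them can differ from a table entry in the last digit.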

3 Single-Model Systems


3.1 Large Language Models


We investigate the performance of open-source models from the LLaMa-2 family (Touvron et al., 2023), as well as two proprietary models: GPT-3.5 (Chat-GPT) and GPT-4 (OpenAI, 2023). For LLaMa, we work with four models: LLaMa-2-7B, LLaMa-2-13B, Chat-LLaMa-2-7B, and Chat-LLaMa-2-13B. That is, we use two LLaMa-2 model sizes, 7B and 13B. If the model is pre-trained for instruction following (Ouyang et al., 2022), this is denoted by "Chat-" in the model's name.

Chat-GPT and GPT-4 are accessed through the Microsoft Azure API. We use versions gpt-3.5-turbo-0613 and gpt-4-0613, respectively.

We explore two scenarios for performing GEC using LLMs: zero-shot prompting (denoted as "ZS") and fine-tuning (denoted as "FT").


3.1.1 Zero-Shot Prompting


Recent studies on prompting LLMs for GEC have shown that LLMs tend to produce more fluent rewrites (Coyne et al., 2023). At the same time, their performance measured by automated metrics such as MaxMatch (Dahlmeier and Ng, 2012) or ERRANT has been identified as inferior. We frequently observed that these automated metrics do not always correlate well with human scores. This makes LLMs used in zero-shot prompting mode potentially attractive, especially in conjunction with other systems in an ensemble.

For the Chat-LLaMa-2 models, we use a two-tiered prompting approach. First, we set the system prompt "You are a writing assistant. Please ensure that your responses consist only of corrected texts." to provide context. Then, we push the following instruction prompt to direct the model's focus toward the GEC task:

Fix grammatical errors for the following text.


Temperature is set to 1. For Chat-GPT and GPT-4 models, we employ a function-calling API with the "required" parameter. This guides the LLM to more accurately identify and correct any linguistic errors within the text, or to replicate the input text if it was already error-free, thus ensuring consistency in the models' responses. The instruction prompt for GPT models is:

Fix all mistakes in the text (spelling, punctuation, grammar, etc). If there are no errors, respond with the original text.

Additionally, we employ a form of chain-of-thought (CoT) prompting (Wei et al., 2022), which involves requesting reasoning from the model before it makes corrections by means of function calling.

Table 2: All single-model systems evaluated on CoNLL-2014-test, BEA-dev, and BEA-test datasets.

| # | System | CoNLL-2014-test Precision | CoNLL-2014-test Recall | CoNLL-2014-test F0.5 | BEA-dev Precision | BEA-dev Recall | BEA-dev F0.5 | BEA-test Precision | BEA-test Recall | BEA-test F0.5 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Chat-LLaMa-2-7B-ZS | 42.9 | 47.3 | 43.7 | 19.1 | 34.1 | 21.0 | - | - | - |
| 2 | Chat-LLaMa-2-13B-ZS | 49.1 | 56.1 | 50.4 | 30.6 | 45.0 | 32.7 | - | - | - |
| 3 | GPT-3.5-ZS | 56.2 | 57.7 | 56.5 | 37.4 | 50.6 | 39.4 | - | - | - |
| 4 | GPT-3.5-CoT-ZS | 56.0 | 58.7 | 56.5 | 36.4 | 50.8 | 38.5 | - | - | - |
| 5 | GPT-4-ZS | 59.0 | 55.4 | 58.2 | 42.5 | 45.0 | 43.0 | - | - | - |
| 6 | Chat-LLaMa-2-7B-FT | 75.5 | 46.8 | 67.2 | 58.3 | 46.0 | 55.3 | 72.3 | 67.4 | 71.2 |
| 7 | Chat-LLaMa-2-13B-FT | 77.3 | 45.6 | 67.9 | 59.8 | 46.1 | 56.4 | 74.6 | 67.8 | 73.1 |
| 8 | T5-11B | 70.9 | 56.5 | 67.5 | 60.9 | 51.1 | 58.6 | 73.2 | 71.2 | 72.8 |
| 9 | UL2-20B | 73.8 | 50.4 | 67.5 | 60.5 | 48.6 | 57.7 | 75.2 | 70.0 | 74.1 |
| 10 | GECToR-2024 | 75.0 | 44.7 | 66.0 | 64.6 | 37.2 | 56.3 | 77.7 | 59.0 | 73.1 |
| 11 | CTC-Copy | 72.6 | 47.0 | 65.5 | 58.3 | 38.0 | 52.7 | 71.7 | 59.9 | 69.0 |
| 12 | EditScorer | 78.5 | 39.4 | 65.5 | 67.3 | 36.1 | 57.4 | 81.0 | 56.1 | 74.4 |
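As an illustration of the two-tiered Chat-LLaMa-2 prompting described in this section, the sketch below assembles the quoted system prompt and instruction prompt into a role/content message list and into the publicly documented LLaMa-2 chat format. The helper names, the choice to join the instruction and the input text with a newline, and the use of this particular template are assumptions made for the example; the source does not specify them.

```python
from typing import Dict, List

# Both prompt strings are quoted from the text above.
SYSTEM_PROMPT = (
    "You are a writing assistant. "
    "Please ensure that your responses consist only of corrected texts."
)
INSTRUCTION = "Fix grammatical errors for the following text."


def build_messages(text: str) -> List[Dict[str, str]]:
    """Tier 1: system prompt. Tier 2: instruction followed by the input text."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        # Assumption: instruction and input text are separated by a newline.
        {"role": "user", "content": f"{INSTRUCTION}\n{text}"},
    ]


def to_llama2_chat(messages: List[Dict[str, str]]) -> str:
    """Render a system + user pair in the LLaMa-2 chat prompt layout."""
    system, user = messages[0]["content"], messages[1]["content"]
    return f"[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"


prompt = to_llama2_chat(build_messages("She go to school yesterday ."))
print(prompt)
```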

3.1.2 Fine-tuning the Large Language Models


Fine-tuning is a mainstream method for knowledge transfer. Since we have several available annotated GEC datasets, they may be used to fine-tune LLMs (Zhang et al., 2023b; Kaneko and Okazaki, 2023).


We use three datasets for fine-tuning — NUCLE, W&I, and cLang-8 (Table 1) — as they are commonly used in recent GEC research (Zhang et al., 2023b; Kaneko and Okazaki, 2023; Loem et al., 2023). We varied the datasets and their shares to find the best combination.


We use the Transformers library to conduct 1000–1200 updates with 250 warm-up steps, a batch size of 8, and a learning rate of $1e-5$. We fine-tune only LLaMa-2 models on the next-token prediction task, both the autocomplete and the instruction-following pre-trained versions (denoted as "Chat"). For the Chat-LLaMa-2 models, we use the following prompt:

Additionally, we perform an ablation study on the models’ size and the usefulness of the instructions (Appendix D, Table 11). Not surprisingly, our results indicate that instructions work better for "Chat" versions of models.


3.2 Sequence-to-Sequence models


In a sequence-to-sequence approach, GEC is considered a machine translation task, where errorful sentences correspond to the source language, and error-free sentences correspond to the target language (Grundkiewicz et al., 2019; Kiyono et al., 2019). In this work, we investigate two powerful Transformer-based Seq2Seq models: the open-sourced "T5-11B" (Rothe et al., 2021), and "UL2-20B", the instruction-tuned version of FLAN (Tay et al., 2022).

T5-11B is fine-tuned on train data for 500 updates with a batch size of 256 and a learning rate of $1e-4$. UL2-20B is fine-tuned on W&I+LOCNESS train data for 300 updates with a batch size of 16 and a learning rate of $5e-5$.

3.3 Edit-based Systems


Edit-based GEC systems produce explicit text changes, restoring error-free language from the errorful source text. Usually, such systems are based on encoder-only architectures and are non-autoregressive; therefore, they are less resource-consuming and more attractive for productization. In this work, we consider three publicly available open-source edit-based systems for GEC: GECToR, CTC-Copy, and EditScorer.

GECToR (Omelianchuk et al., 2020; Tarnavskyi et al., 2022) is a family of non-autoregressive sequence tagging GEC systems. The concept revolves around training Transformer-based, encoder-only models to generate corrective edits.

CTC-Copy (Zhang et al., 2023a) is another non-autoregressive text editing approach. It uses Connectionist Temporal Classification (CTC) (Graves et al., 2006), initially developed for automatic speech recognition, and introduces a novel text editing method by modeling the editing process with latent CTC alignments. This allows more flexible editing operations to be generated.

Table 3: GECToR fine-tuning experiments. We compare the performance of our fine-tuned model after stage I and stage II to the initial off-the-shelf model as a baseline.

| System | CoNLL-2014-test Precision | CoNLL-2014-test Recall | CoNLL-2014-test F0.5 | BEA-test Precision | BEA-test Recall | BEA-test F0.5 |
|---|---|---|---|---|---|---|
| GECToR-RoBERTa(L) (Tarnavskyi et al., 2022) | 70.1 | 42.7 | 62.2 | 80.6 | 52.3 | 72.7 |
| GECToR-FT-Stage-I | 75.2 | 44.1 | 65.9 | 78.1 | 57.7 | 72.9 |
| GECToR-FT-Stage-II (GECToR-2024) | 75.0 | 44.7 | 66.0 | 77.7 | 59.0 | 73.1 |

EditScorer (Sorokin, 2022) splits GEC into two steps: generating and scoring edits. We consider it a single-model system approach because all edits are generated by a single-model system.

We also attempt to reproduce the Seq2Edit approach (Stahlberg and Kumar, 2020), (Kaneko and Okazaki, 2023), but fail to achieve meaningful results. Please find more details in Appendix B.


For GECToR, we use the top-performing model, GECToR-RoBERTa(L) (Tarnavskyi et al., 2022). Since this model was not trained on cLang-8 data, we additionally fine-tune it on a mix of cLang-8, BEA, Troy-1BW, and Troy-Blogs data. We leverage the multi-stage fine-tuning approach from Omelianchuk et al. (2020). In stage I, a mix of the cLang-8, W&I+LOCNESS train (BEA-train), Troy-1BW, and Troy-Blogs datasets is used for fine-tuning; in stage II, the high-quality W&I+LOCNESS train dataset is used to finish the training. During stage I, we fine-tune the model for 5 epochs, early-stopping after 3 epochs, with each epoch equal to 10000 updates and a batch size of 256. During stage II, we further fine-tune the model for 4 epochs, with each epoch equal to 130 updates. The full list of hyperparameters for fine-tuning can be found in Appendix D, Table 7. We refer to this new, improved GECToR model as GECToR-2024.

For CTC-Copy, we use the official code with the RoBERTa encoder to train the English GEC model.

For EditScorer, we use the open-sourced code with the GECToR-XLNet(L) option from Tarnavskyi et al. (2022) to sample possible edits, and stage-wise decoding with the RoBERTa-Large encoder to rescore them.

3.4 Single-Model Systems Results


The performance of single-model GEC systems is presented in Table 2.


We see that all zero-shot approaches considered have $F_{0.5}$ scores lower than 60 on the CoNLL-2014-test dataset, which we assume to be a lower bound on satisfactory GEC quality. They all suffer from an overcorrection issue (Fang et al., 2023; Wu et al., 2023) that leads to poor Precision and inferior $F_{0.5}$ scores. Notably, GPT models show consistently better results than LLaMa. Implementing the chain-of-thought approach does not improve the quality.

Among the remaining approaches (LLMs with fine-tuning, sequence-to-sequence models, and edit-based systems), we do not see a clear winner. Not surprisingly, we observe that larger models (T5-11B, UL2-20B, Chat-LLaMa-2-7B-FT, Chat-LLaMa-2-13B-FT) have slightly higher Recall compared to smaller models (GECToR-2024, CTC-Copy, EditScorer). This is expressed in 1–2% higher $F_{0.5}$ scores on CoNLL-2014-test; however, the values on BEA-dev and BEA-test do not show the same behavior.

Additionally, we observe that simply scaling the model does not help achieve a breakthrough in benchmark scores. For example, a relatively small model such as GECToR-2024 ($\approx 300$M parameters) still performs well enough compared to much larger models ($\approx 7$–$20$B parameters). We hypothesize that the limiting factor for English GEC is the amount of high-quality data rather than model size. We have not been able to achieve an $F_{0.5}$ score of more than 68% / 59% / 75% on CoNLL-2014-test / BEA-dev / BEA-test, respectively, with any single-model system approach, which is consistent with previously published results.

For GECToR, after two stages of fine-tuning, we were able to improve the $F_{0.5}$ score of the top-performing single-model system by 3.8% on CoNLL-2014-test and by 0.4% on BEA-test, mostly due to the increase in Recall (Table 3).

Interestingly, we see a trend where larger models exhibit diminishing returns with multi-staged training approaches. Our exploration of various training data setups reveals that a simple and straightforward approach, focusing exclusively on the W&I+LOCNESS train dataset, performs on par with more complex configurations across both evaluation datasets.

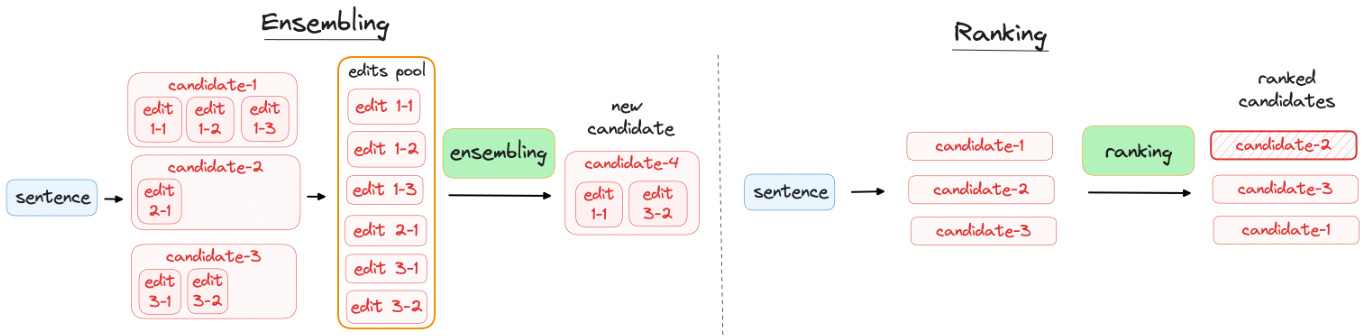

Figure 1: Combining the single-model systems’ outputs. Left: In ensembling, candidates (system outputs) are aggregated on an edit level. Right: In ranking, candidates (system outputs) are aggregated on a sentence level. We consider ranking to be a special case of ensembling.


4 Ensembling and Ranking of Single-Model Systems


Combining the outputs of single-model GEC systems can improve their quality. In this paper, we explore two combining methods: ensembling and ranking (Figure 1).


Ensembling combines outputs of single-model systems on an edit level. The ensemble method exploits the strengths of each model, potentially leading to more robust and accurate corrections than any single-model system could provide on its own.

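To make "edit level" concrete, the sketch below turns a system output into a list of token-span edits relative to the source sentence and applies them back. It uses Python's standard `difflib` as a stand-in; this is an assumption for illustration only and is not the ERRANT or M2 alignment used for the evaluations in this paper. The `Edit` tuple layout and both function names are hypothetical.

```python
from difflib import SequenceMatcher
from typing import List, Tuple

# (start token index, end token index, replacement text); start == end is an insertion.
Edit = Tuple[int, int, str]


def extract_edits(source: str, hypothesis: str) -> List[Edit]:
    """Token-level span edits that turn `source` into `hypothesis`."""
    src, hyp = source.split(), hypothesis.split()
    matcher = SequenceMatcher(a=src, b=hyp, autojunk=False)
    edits = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            edits.append((i1, i2, " ".join(hyp[j1:j2])))
    return edits


def apply_edits(source: str, edits: List[Edit]) -> str:
    """Apply non-overlapping edits right to left so earlier indices stay valid."""
    tokens = source.split()
    for start, end, replacement in sorted(edits, reverse=True):
        tokens[start:end] = replacement.split()
    return " ".join(tokens)


src = "She go to school yesterday ."
hyp = "She went to school yesterday ."
print(extract_edits(src, hyp))  # [(1, 2, 'went')]
```

Representing each system output as a set of such edits is what lets outputs from architecturally different systems be pooled, compared, and recombined.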

Ranking is a special case of ensembling that combines individual outputs on a sentence level. In this approach, the performance of each system’s candidate is assessed against a set of predefined criteria, and the most effective candidate is selected. Ranking maintains the internal coherence of each model’s output, potentially leading to more natural and readable corrections.


4.1 Oracle-Ensembling and Oracle-Ranking as Upper-Bound Baselines


To set the upper-bound baseline for our experiments in combining single models, we introduce two oracle systems: Oracle-Ensembling and Oracle-Ranking.


Oracle-Ensembling approximates an optimal combination of edits of available single-model systems. It is computationally challenging because the number of possible edit combinations grows exponentially with the number of edits. We use a heuristic to mitigate this; it optimizes Precision at the cost of reducing Recall.


Using golden references from evaluation sets, Oracle-Ensembling works as follows:


1. Aggregate the edits from all systems into a single pool.
2. Identify and select edits that are present in both the edit pool and the available annotation.
3. In the case of multiple annotations, obtain a set of edits for each annotation separately, then select the largest set of edits among the multiple annotations.
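The three steps above can be sketched as a few set operations. This is a minimal illustration under the assumption that system edits and gold annotation edits are already available as sets of comparable `(start, end, replacement)` tuples; the function name and data layout are hypothetical and not taken from the released code.

```python
from typing import List, Set, Tuple

Edit = Tuple[int, int, str]  # (start token index, end token index, replacement)


def oracle_ensemble(
    system_edits: List[Set[Edit]],
    annotations: List[Set[Edit]],
) -> Set[Edit]:
    """Keep pooled system edits that also occur in a gold annotation."""
    # Step 1: pool the edits of all systems.
    pool: Set[Edit] = set().union(*system_edits)
    # Step 2: intersect the pool with each annotation separately.
    per_annotation = [pool & gold for gold in annotations]
    # Step 3: with several annotations, keep the largest intersection.
    return max(per_annotation, key=len, default=set())


systems = [{(1, 2, "went")}, {(1, 2, "goes"), (4, 5, "")}]
gold = [{(1, 2, "went"), (4, 5, "")}, {(1, 2, "goes")}]
print(sorted(oracle_ensemble(systems, gold)))  # [(1, 2, 'went'), (4, 5, '')]
```

Since only edits confirmed by a reference are kept, every selected edit counts as correct for that annotation, which is why the heuristic favors Precision over Recall.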

Oracle-Ranking approximates an optimal output selection for available single-model systems. Again using golden references from evaluation sets, we use M2scorer to obtain $(F_{0.5}, n_{correct}, n_{proposed})$ for each system's output candidate against the available annotation. The output candidates are then sorted by $(+F_{0.5}, +n_{correct}, -n_{proposed})$ and the top one is selected.
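The selection rule can be written as a single comparison key: prefer a higher $F_{0.5}$, then more correct edits, then fewer proposed edits. In the sketch below the three statistics are assumed to have been computed beforehand (in the paper, by M2scorer); the `Candidate` type and the numbers are made up for illustration.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    system: str
    f05: float        # sentence-level F0.5 against the reference
    n_correct: int    # proposed edits that match the reference
    n_proposed: int   # all edits proposed by the system


def oracle_rank(candidates: List[Candidate]) -> Candidate:
    """Pick the top candidate under the key (+F0.5, +n_correct, -n_proposed)."""
    return max(candidates, key=lambda c: (c.f05, c.n_correct, -c.n_proposed))


candidates = [
    Candidate("system_a", f05=0.0, n_correct=0, n_proposed=3),
    Candidate("system_b", f05=0.0, n_correct=0, n_proposed=1),
]
# Both have F0.5 = 0 and no correct edits, so the one proposing fewer edits wins.
print(oracle_rank(candidates).system)  # system_b
```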

For our explorations into combining models' outputs, we select the seven single-model systems that show the best performance on CoNLL-2014-test (Table 2): Chat-LLaMa-2-7B-FT, Chat-LLaMa-2-13B-FT, T5-11B, UL2-20B, GECToR-2024, CTC-Copy, and EditScorer. As our selection criteria, we take i) systems of different types to maximize the diversity and ii) systems that have an $F_{0.5}$ score of at least 65 on CoNLL-2014-test. We refer to this set of models as "best 7".

4.2 Ensembling by Majority Votes on Edit Spans (Unsupervised)


To experiment with ensembling different GEC systems, we needed a method that is agnostic to model architecture and vocabulary size. Ensembling by majority votes (Tarnavskyi et al., 2022) on span-level edits satisfies this requirement and is simple to implement, so we decided to start with this approach. We use the same "best 7" set of models in our experiments.

Our majority-vote ensembling implementation consists of the following steps:


1. Initialization. a) Select the set of single-model systems for the ensemble. We denote the number of selected systems by $N_{sys}$. b) Set $N_{min}$, the threshold for the minimum number of edit suggestions to be accepted, $0 \leq N_{min} \leq N_{sys}$.
2. Extract all edit suggestions from all single-model systems of the ensemble.
3. For each edit suggestion $i$, calculate the number of single-model systems $n_{i}$ that triggered it.
4. Keep only those edit suggestions that are triggered more times than the $N_{min}$ threshold, i.e., those with $n_{i} > N_{min}$.
5. Iteratively apply the filtered edit suggestions, beginning with the edit suggestions with the most agreement across systems (greatest $n_{i}$) and ending with the edit suggestions where $n_{i}$ is lowest. Do not apply an edit suggestion if it overlaps with one of the edits applied on a previous iteration.
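The steps above can be sketched as follows. This is a minimal illustration, not the released implementation: edits are assumed to be `(start, end, replacement)` token-span tuples already extracted per system, the overlap test and the tie-breaking order among edits with equal votes are assumptions, and the function names are hypothetical.

```python
from collections import Counter
from typing import List, Set, Tuple

Edit = Tuple[int, int, str]  # (start token index, end token index, replacement)


def overlaps(a: Edit, b: Edit) -> bool:
    """True if two edits touch the same source span."""
    if a[:2] == b[:2]:
        return True  # identical span, including two insertions at one position
    return a[0] < b[1] and b[0] < a[1]


def majority_vote(system_edits: List[Set[Edit]], n_min: int) -> List[Edit]:
    # Steps 2-3: count how many systems proposed each edit (n_i).
    votes = Counter(edit for edits in system_edits for edit in edits)
    # Step 4: keep edits proposed by more than n_min systems.
    kept = [edit for edit, n in votes.items() if n > n_min]
    # Step 5: highest agreement first; ties are broken by position (an assumption).
    kept.sort(key=lambda edit: (-votes[edit], edit))
    applied: List[Edit] = []
    for edit in kept:
        if not any(overlaps(edit, previous) for previous in applied):
            applied.append(edit)
    return sorted(applied)


systems = [
    {(1, 2, "went"), (4, 5, "")},
    {(1, 2, "went")},
    {(1, 2, "goes"), (4, 5, "")},
]
print(majority_vote(systems, n_min=1))  # [(1, 2, 'went'), (4, 5, '')]
```

With `n_min=0` every edit passes the filter, but `(1, 2, "goes")` is still skipped because it overlaps the higher-voted `(1, 2, "went")`.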

4.3 Ensembling and Ranking by GRECO Model (Supervised Quality Estimation)


The quality estimation approach for combining single-model systems' outputs achieved two recent state-of-the-art results: logistic regression-based ESC (Edit-based System Combination) (Qorib et al., 2022), and its evolution, DeBERTa-based GRECO (Grammaticality scorer for re-ranking corrections) (Qorib and Ng, 2023). In this paper, we experiment with GRECO because it is open source and demonstrates state-of-the-art performance on the GEC task to