Revisiting Skeleton-based Action Recognition

重新审视基于骨架的动作识别

Abstract

摘要

Human skeleton, as a compact representation of human action, has received increasing attention in recent years. Many skeleton-based action recognition methods adopt GCNs to extract features on top of human skeletons. Despite the positive results shown in these attempts, GCN-based methods are subject to limitations in robustness, interoperability, and s cal ability. In this work, we propose PoseConv3D, a new approach to skeleton-based action recognition. PoseConv3D relies on a 3D heatmap vol- ume instead of a graph sequence as the base representation of human skeletons. Compared to GCN-based methods, PoseConv3D is more effective in learning s patio temporal features, more robust against pose estimation noises, and generalizes better in cross-dataset settings. Also, PoseConv3D can handle multiple-person scenarios without additional computation costs. The hierarchical features can be easily integrated with other modalities at early fusion stages, providing a great design space to boost the performance. PoseConv3D achieves the state-of-the-art on five of six standard skeleton-based action recognition benchmarks. Once fused with other modalities, it achieves the state-of-the-art on all eight multi-modality action recognition benchmarks. Code has been made available at: https://github.com/kenny m ck or mick/pyskl.

人体骨架作为人类动作的紧凑表示形式,近年来受到越来越多的关注。许多基于骨架的动作识别方法采用GCN(图卷积网络)在人体骨架上提取特征。尽管这些尝试显示出积极成果,但基于GCN的方法在鲁棒性、互操作性和可扩展性方面存在局限。本文提出PoseConv3D,一种基于骨架动作识别的新方法。该方法以3D热图体积而非图序列作为人体骨架的基础表示。与基于GCN的方法相比,PoseConv3D能更有效地学习时空特征,对姿态估计噪声具有更强鲁棒性,并在跨数据集场景中表现更优。此外,PoseConv3D无需额外计算成本即可处理多人场景。其分层特征可轻松与其他模态在早期融合阶段集成,为性能提升提供了广阔设计空间。PoseConv3D在六个标准骨架动作识别基准中的五个取得最优结果,当与其他模态融合时,在全部八个多模态动作识别基准上均达到最优。代码已开源:https://github.com/kennymckormick/pyskl。

1. Introduction

1. 引言

Action recognition is a central task in video understanding. Existing studies have explored various modalities for feature representation, such as RGB frames [6, 60, 65], optical flows [53], audio waves [69], and human skeletons [67, 71]. Among these modalities, skeleton-based action recognition has received increasing attention in recent years due to its action-focusing nature and compactness. In practice, human skeletons in a video are mainly represented as a sequence of joint coordinate lists, where the coordinates are extracted by pose estimators. Since only the pose information is included, skeleton sequences capture only action information while being immune to contextual nuisances, such as background variation and lighting changes.

动作识别是视频理解的核心任务。现有研究探索了多种特征表示模态,例如RGB帧 [6, 60, 65]、光流 [53]、音频波形 [69] 和人体骨骼 [67, 71]。在这些模态中,基于骨骼的动作识别近年来因其聚焦动作特性和紧凑性而受到越来越多的关注。实际应用中,视频中的人体骨骼主要表示为关节坐标列表的序列,这些坐标通过姿态估计器提取。由于仅包含姿态信息,骨骼序列仅捕获动作信息,同时不受背景变化和光照变化等上下文干扰的影响。

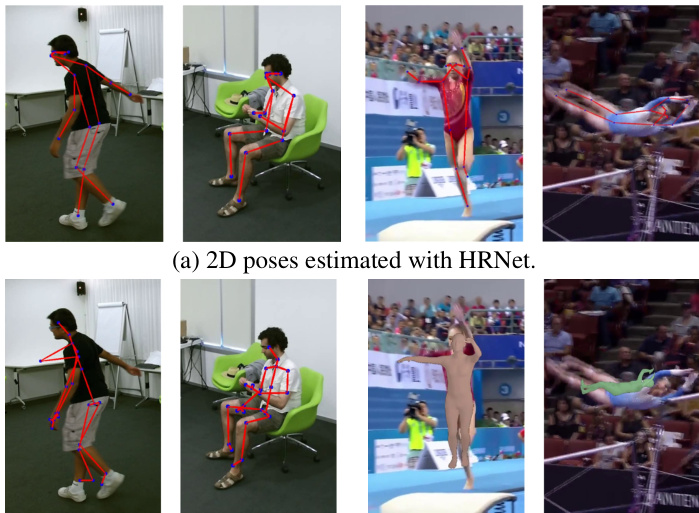

(b) 3D poses collected with Kinect. (c) 3D poses estimated with VIBE Figure 1. PoseConv3D takes 2D poses as inputs. In general, 2D poses are of better quality than 3D poses. We visualize 2D poses estimated with HRNet for videos in NTU-60 and FineGYM in (a). Apparently, their quality is much better than 3D poses collected by sensors (b) or estimated with state-of-the-art estimators (c).

图 1: PoseConv3D以2D姿态作为输入。通常情况下,2D姿态的质量优于3D姿态。我们使用HRNet对NTU-60和FineGYM视频中的2D姿态进行可视化展示 (a)。显然,其质量远高于通过传感器采集的3D姿态 (b) 或采用最先进估计算法生成的3D姿态 (c)。

Table 1. Differences between PoseConv3D and GCN.

表 1: PoseConv3D 与 GCN 的差异

| PreviousWork | PoseConv3D | |

|---|---|---|

| Input | 2D/3D Skeleton | 2D Skeleton |

| Format | Coordinates | 3D Heatmap Volumes |

| Architecture | GCN | 3D-CNN |

Among all the methods for skeleton-based action recognition [15, 63, 64], graph convolutional networks (GCN) [71] have been one of the most popular approaches. Specifically, GCNs regard every human joint at every timestep as a node. Neighboring nodes along the spatial and temporal dimensions are connected with edges. Graph convolution layers are then applied to the constructed graph to discover action patterns across space and time. Due to the good performance on standard benchmarks for skeletonbased action recognition, GCNs have been a standard approach when processing skeleton sequences.

在基于骨架的动作识别方法中[15, 63, 64],图卷积网络(GCN)[71]已成为最主流的方法之一。该方法将每个时间步上的人体关节视为节点,沿空间维度和时间维度连接相邻节点构成边,再通过图卷积层从构建的图中挖掘跨时空的动作模式。由于在骨架动作识别基准测试中表现优异,GCN已成为处理骨架序列的标准方法。

While encouraging results have been observed, GCNbased methods are limited in the following aspects: (1) Robustness: While GCN directly handles coordinates of human joints, its recognition ability is significantly affected by the distribution shift of coordinates, which can often occur when applying a different pose estimator to acquire the coordinates. A small perturbation in coordinates often leads to completely different predictions [74]. (2) Inter opera bility: Previous works have shown that representations from different modalities, such as RGB, optical flows, and skeletons, are complementary. Hence, an effective combination of such modalities can often result in a performance boost in action recognition. However, GCN is operated on an irregular graph of skeletons, making it difficult to fuse with other modalities that are often represented on regular grids, especially in the early stages. (3) S cal ability: In addition, since GCN regards every human joint as a node, the complexity of GCN scales linearly with the number of persons, limiting its applicability to scenarios that involve multiple persons, such as group activity recognition.

尽管已观察到鼓舞人心的成果,基于GCN的方法仍存在以下局限性:(1) 鲁棒性:虽然GCN直接处理人体关节坐标,但其识别能力极易受坐标分布偏移的影响。当使用不同姿态估计器获取坐标时,这种偏移经常发生。微小的坐标扰动常导致完全不同的预测结果[74]。(2) 跨模态互操作性:已有研究表明RGB、光流和骨骼等不同模态的表征具有互补性。有效组合这些模态通常能提升动作识别性能。但GCN基于不规则的骨骼图结构运行,难以与通常以规则网格表示的其他模态(尤其是早期阶段)进行融合。(3) 可扩展性:由于GCN将每个关节视为节点,其计算复杂度随人数线性增长,限制了其在多人场景(如群体活动识别)中的应用。

In this paper, we propose a novel framework PoseConv3D that serves as a competitive alternative to GCNbased approaches. In particular, PoseConv3D takes as input 2D poses obtained by modern pose estimators shown in Figure 1. The 2D poses are represented by stacks of heatmaps of skeleton joints rather than coordinates operated on a human skeleton graph. The heatmaps at different timesteps will be stacked along the temporal dimension to form a 3D heatmap volume. PoseConv3D then adopts a 3D convolutional neural network on top of the 3D heatmap volume to recognize actions. Main differences between PoseConv3D and GCN-based approaches are summarized in Table 1.

本文提出了一种新型框架PoseConv3D,可作为基于GCN方法的有效替代方案。具体而言,PoseConv3D以现代姿态估计器获取的2D姿态作为输入(如图1所示)。这些2D姿态通过骨骼关节热图堆栈表示,而非作用于人体骨骼图的坐标数据。不同时间步的热图将沿时间维度堆叠形成3D热图体。PoseConv3D随后在该3D热图体上采用3D卷积神经网络进行动作识别。PoseConv3D与基于GCN方法的主要差异总结于表1。

PoseConv3D can address the limitations of GCN-based approaches stated above. First, using 3D heatmap volumes is more robust to the up-stream pose estimation: we empirically find that PoseConv3D generalizes well across input skeletons obtained by different approaches. Also, PoseConv3D, which relies on heatmaps of the base representation, enjoys the recent advances in convolutional network architectures and is easier to integrate with other modalities into multi-stream convolutional networks. This characteristic opens up great design space to further improve the recognition performance. Finally, PoseConv3D can handle different numbers of persons without increasing computational overhead since the complexity over 3D heatmap volume is independent of the number of persons. To verify the efficiency and effectiveness of PoseConv3D, we conduct comprehensive studies across several datasets, including FineGYM [49], NTURGB-D [38], UCF101 [57], HMDB51 [29], Kinetics 400 [6], and Volleyball [23], where PoseConv3D achieves state-of-the-art performance compared to GCN-based approaches.

PoseConv3D能够解决上述基于GCN方法的局限性。首先,使用3D热图体积对上游姿态估计更具鲁棒性:我们通过实验发现,PoseConv3D在不同方法获得的输入骨骼上均表现出良好的泛化能力。此外,基于基础表示热图的PoseConv3D能够利用卷积网络架构的最新进展,并更容易与其他模态集成到多流卷积网络中。这一特性为提升识别性能开辟了广阔的设计空间。最后,由于3D热图体积的计算复杂度与人数无关,PoseConv3D可在不增加计算开销的情况下处理不同数量的人员。为验证PoseConv3D的效率和有效性,我们在多个数据集上进行了全面研究,包括FineGYM [49]、NTURGB-D [38]、UCF101 [57]、HMDB51 [29]、Kinetics 400 [6]和Volleyball [23],实验表明PoseConv3D相比基于GCN的方法实现了最先进的性能。

2. Related Work

2. 相关工作

3D-CNN for RGB-based action recognition. 3D-CNN is a natural extension of 2D-CNN for spatial feature learning to s patio temporal in videos. It has long been used in action recognition [24, 60]. Due to a large number of param- eters, 3D-CNN requires huge amounts of videos to learn good representation. 3D-CNN has become the mainstream approach for action recognition since I3D [6]. From then on, many advanced 3D-CNN architectures [17, 18, 61, 62] have been proposed by the action recognition community, which outperform I3D both in precision and efficiency. In this work, we first propose to use 3D-CNN with 3D heatmap volumes as inputs and obtain the state-of-the-art in skeletonbased action recognition.

基于RGB的3D-CNN动作识别方法。3D-CNN是2D-CNN在视频时空特征学习领域的自然延伸,长期应用于动作识别领域[24,60]。由于参数量庞大,3D-CNN需要海量视频数据才能学习到有效表征。自I3D[6]问世以来,3D-CNN已成为动作识别的主流方法。此后,动作识别领域相继提出了多种先进3D-CNN架构[17,18,61,62],在精度和效率上均超越了I3D。本研究首次提出采用3D热图体积作为输入的3D-CNN方案,在基于骨架的动作识别任务中取得了最先进性能。

GCN for skeleton-based action recognition. Graph convolutional network is widely adopted in skeleton-based action recognition [3,7,19,55,56,71]. It models human skele- ton sequences as s patio temporal graphs. ST-GCN [71] is a well-known baseline for GCN-based approaches, which combines spatial graph convolutions and interleaving temporal convolutions for s patio temporal modeling. Upon the baseline, adjacency powering is used for multiscale modeling [34, 40], while self-attention mechanisms improve the modeling capacity [31, 51]. Despite the great success of GCN in skeleton-based action recognition, it is also limited in robustness [74] and s cal ability. Besides, for GCNbased approaches, fusing features from skeletons and other modalities may need careful design [13].

基于骨骼动作识别的图卷积网络 (GCN)。图卷积网络在基于骨骼的动作识别领域被广泛采用 [3,7,19,55,56,71]。它将人体骨骼序列建模为时空图。ST-GCN [71] 是该领域著名的基线方法,通过结合空间图卷积和交错时序卷积实现时空建模。在此基线基础上,邻接矩阵幂运算被用于多尺度建模 [34, 40],而自注意力机制则提升了建模能力 [31, 51]。尽管GCN在骨骼动作识别中取得巨大成功,但其鲁棒性和可扩展性仍存在局限 [74]。此外,对于基于GCN的方法,骨骼与其他模态的特征融合可能需要精心设计 [13]。

CNN for skeleton-based action recognition. Another stream of work adopts convolutional neural networks for skeleton-based action recognition. 2D-CNN-based approaches first model the skeleton sequence as a pseudo image based on manually designed transformations. One line of works aggregates heatmaps along the temporal dimension into a 2D input with color encodings [10] or learned modules [1, 70]. Although carefully designed, information loss still occurs during the aggregation, which leads to inferior recognition performance. Other works [2,25,26,33,41] directly convert the coordinates in a skeleton sequence to a pseudo image with transformations, typically generate a 2D input of shape $K\times T$ , where $K$ is the number of joints, $T$ is the temporal length. Such input cannot exploit the locality nature of convolution networks, which makes these methods not as competitive as GCN on popular benchmarks [2]. Only a few previous works have adopted 3D-CNNs for skeleton-based action recognition. To construct the 3D input, they either stack the pseudo images of distance matrices [21, 36] or directly sum up the 3D skeletons into a cuboid [37]. These approaches also severely suffer from information loss and obtain much inferior performance to the state-of-the-art. Our work stacks heatmaps along the temporal dimension to form 3D heatmap volumes, preserving all information during this process. Besides, we use 3D-CNN instead of 2D-CNN due to its good capability for s patio temporal feature learning.

基于骨骼动作识别的CNN方法。另一类研究采用卷积神经网络(CNN)进行基于骨骼的动作识别。基于2D-CNN的方法首先通过人工设计的变换将骨骼序列建模为伪图像。部分研究通过颜色编码[10]或学习模块[1,70]将热图沿时间维度聚合为2D输入。尽管经过精心设计,聚合过程中仍存在信息损失,导致识别性能欠佳。其他研究[2,25,26,33,41]直接通过变换将骨骼序列坐标转换为伪图像,通常生成形状为$K\times T$的2D输入($K$表示关节数量,$T$表示时间长度)。这种输入无法利用卷积网络的局部性特征,使得这些方法在主流基准测试[2]上的表现不及图卷积网络(GCN)。仅有少数先前研究采用3D-CNN进行骨骼动作识别。为构建3D输入,这些方法要么堆叠距离矩阵的伪图像[21,36],要么直接将3D骨骼求和成立方体[37]。这些方法同样存在严重信息损失,其性能远低于最先进水平。本研究将热图沿时间维度堆叠形成3D热图体,在此过程中保留全部信息。此外,由于3D-CNN在时空特征学习方面的优势,我们选用3D-CNN而非2D-CNN。

3. Framework

3. 框架

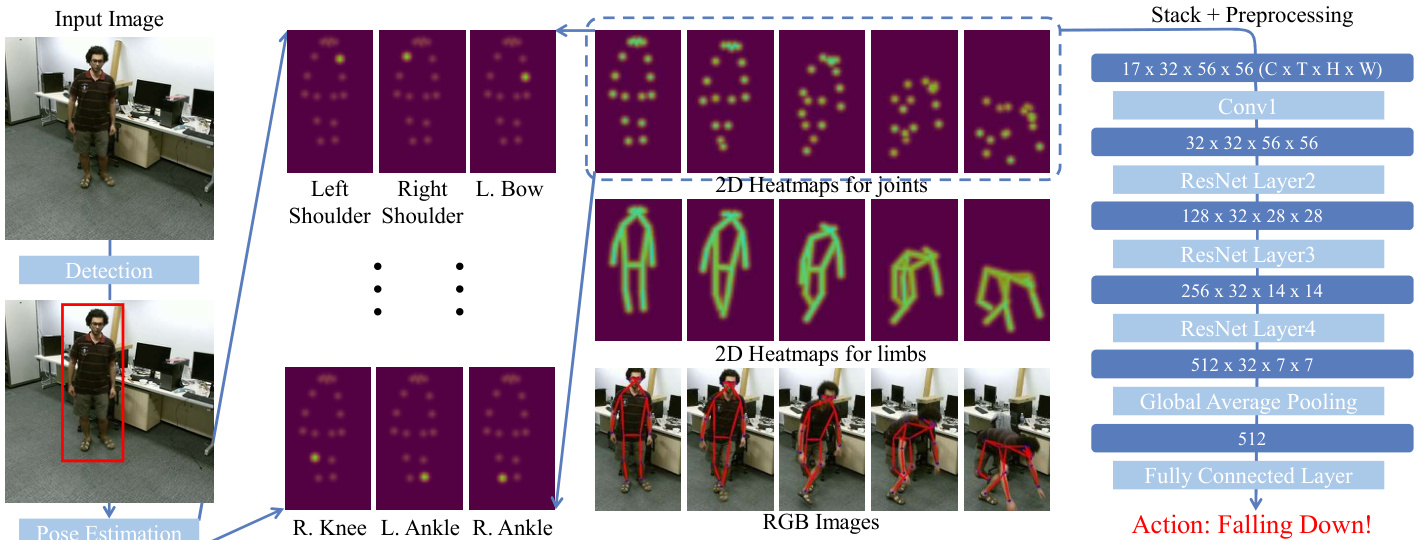

We propose PoseConv3D, a 3D-CNN-based approach for skeleton-based action recognition, which can be a competitive alternative to GCN-based approaches, outperforming GCN under various settings in terms of accuracy with improved robustness, interoperability, and s cal ability. An overview of PoseConv3D is depicted in Figure 2, and details of PoseConv3D will be covered in the following sections. We begin with a review of skeleton extraction, which is the basis of skeleton-based action recognition but is often overlooked in previous literature. We point out several aspects that should be considered when choosing a skeleton extractor and motivate the use of 2D skeletons in Pose Con v 3 D 1. Subsequently, we introduce 3D Heatmap Volume that is the representation of a 2D skeleton sequence used in PoseConv3D, followed by the structural designs of PoseConv3D, including a variant that focuses on the modality of human skeletons as well as a variant that combines the modalities of human skeletons and RGB frames to demonstrate the inte roper ability of PoseConv3D.

我们提出PoseConv3D,一种基于3D-CNN的骨架动作识别方法,可作为GCN方法的有力替代方案。该方案在多种设定下均以更高准确度超越GCN,并具备更强的鲁棒性、互操作性和可扩展性。图2展示了PoseConv3D的总体框架,具体细节将在后续章节展开。我们首先回顾骨架提取技术——这是骨架动作识别的基础,却常被前人研究忽视。文中指出选择骨架提取器时需考量的多个维度,并论证了PoseConv3D采用2D骨架的合理性。随后介绍3D热图体积(3D Heatmap Volume)这一PoseConv3D中使用的2D骨架序列表征方法,继而阐述PoseConv3D的结构设计:包括专注人体骨架模态的变体,以及融合人体骨架与RGB帧模态以展示其互操作能力的变体。

3.1. Good Practices for Pose Extraction

3.1. 姿态提取的最佳实践

Being a critical pre-processing step for skeleton-based action recognition, human skeleton or pose extraction largely affects the final recognition accuracy. However, its importance is often overlooked in previous literature, in which poses estimated by sensors [38, 48] or existing pose estimators [4,71] are used without considering the potential effects. Here we conduct a review on key aspects of pose extraction to find a good practice.

作为基于骨架的动作识别的关键预处理步骤,人体骨架或姿态提取在很大程度上影响着最终识别精度。然而,其重要性在以往文献中常被忽视——这些研究直接使用传感器[38, 48]或现有姿态估计器[4,71]输出的姿态数据,而未考虑潜在影响。本文通过系统梳理姿态提取的关键环节,旨在探索最佳实践方案。

In general, 2D poses are of better quality compared to 3D poses, as shown in Figure 1. We adopt 2D Top-Down pose estimators [44, 59, 68] for pose extraction. Compared to its 2D Bottom-Up counterparts [5, 8, 43], Top-Down methods obtain superior performance on standard benchmarks such as COCO-keypoints [35]. In most cases, we feed proposals predicted by a human detector to the Top-Down pose estimators, which is sufficient enough to generate 2D poses of good quality for action recognition. When only a few persons are of interest out of dozens of candidates 2, some priors are essential for skeleton-based action recognition to achieve good performance, e.g., knowing the interested person locations at the first frame of the video. In terms of the storage of estimated heatmaps, they are often stored as coordinate-triplets $(x,y,c)$ in previous literature, where $c$ marks the maximum score of the heatmap and $(x,y)$ is the corresponding coordinate of $c$ . In experiments, we find that coordinate-triplets $(x,y,c)$ help save the majority of storage space at the cost of little performance drop. The detailed ablation study is included in Sec D.1.

通常来说,2D姿态的质量优于3D姿态,如图 1 所示。我们采用 2D Top-Down(自上而下)姿态估计器 [44, 59, 68] 进行姿态提取。与 2D Bottom-Up(自下而上)方法 [5, 8, 43] 相比,Top-Down 方法在 COCO-keypoints [35] 等标准基准测试中表现更优。多数情况下,我们将人体检测器预测的候选框输入 Top-Down 姿态估计器,这足以生成适用于动作识别的高质量 2D 姿态。当需要从数十个候选者中筛选少数目标人物时,基于骨架的动作识别需依赖某些先验知识才能获得良好性能,例如知晓目标人物在视频首帧的位置。关于估计热图的存储方式,先前研究通常采用坐标三元组 $(x,y,c)$ ,其中 $c$ 表示热图最高分,$(x,y)$ 是对应 $c$ 的坐标。实验发现,坐标三元组 $(x,y,c)$ 能以微小性能损失为代价节省大部分存储空间。详细消融实验见附录 D.1 节。

3.2. From 2D Poses to 3D Heatmap Volumes

3.2. 从二维姿态到三维热图体积

After 2D poses are extracted from video frames, to feed into PoseConv3D, we reformulate them into a 3D heatmap volume. Formally, we represent a 2D pose as a heatmap of size $K\times H\times W$ , where $K$ is the number of joints, $H$ and $W$ are the height and width of the frame. We can directly use the heatmap produced by the Top-Down pose estimator as the target heatmap, which should be zero-padded to match the original frame given the corresponding bounding box. In case we have only coordinate-triplets $(x_{k},y_{k},c_{k})$ of skeleton joints, we can obtain a joint heatmap $\boldsymbol{J}$ by composing $K$ gaussian maps centered at every joint:

从视频帧中提取2D姿态后,为输入PoseConv3D网络,我们将其重构为3D热力图体积。形式上,我们将2D姿态表示为尺寸为$K\times H\times W$的热力图,其中$K$表示关节数量,$H$和$W$分别代表帧的高度与宽度。可直接采用Top-Down姿态估计器生成的热力图作为目标热力图,并根据对应边界框进行零填充以匹配原始帧尺寸。若仅有骨骼关节坐标三元组$(x_{k},y_{k},c_{k})$,可通过在每个关节中心合成$K$个高斯图来获得关节热力图$\boldsymbol{J}$:

$$

\begin{array}{r}{J_{k i j}=e^{-\frac{(i-x_{k})^{2}+(j-y_{k})^{2}}{2*\sigma^{2}}}*c_{k},}\end{array}

$$

$$

\begin{array}{r}{J_{k i j}=e^{-\frac{(i-x_{k})^{2}+(j-y_{k})^{2}}{2*\sigma^{2}}}*c_{k},}\end{array}

$$

$\sigma$ controls the variance of gaussian maps, and $(x_{k},y_{k})$ and $c_{k}$ are respectively the location and confidence score of the $k$ -th joint. We can also create a limb heatmap $\pmb{L}$ :

$\sigma$ 控制高斯图的方差,$(x_{k},y_{k})$ 和 $c_{k}$ 分别是第 $k$ 个关节的位置和置信度分数。我们还可以创建肢体热图 $\pmb{L}$:

$$

L_{k i j}=e^{-\frac{\mathcal{D}((i,j),s e g[a_{k},b_{k}])^{2}}{2*\sigma^{2}}}\operatorname*{min}\bigl(c_{a_{k}},c_{b_{k}}\bigr).

$$

$$

L_{k i j}=e^{-\frac{\mathcal{D}((i,j),s e g[a_{k},b_{k}])^{2}}{2*\sigma^{2}}}\operatorname*{min}\bigl(c_{a_{k}},c_{b_{k}}\bigr).

$$

The $k_{t h}$ limb is between two joints $a_{k}$ and $b_{k}$ . The function $\mathcal{D}$ calculates the distance from the point $(i,j)$ to the segment $\left[(x_{a_{k}},y_{a_{k}}),(x_{b_{k}},y_{b_{k}})\right]$ . It is worth noting that although the above process assumes a single person in every frame, we can easily extend it to the multi-person case, where we directly accumulate the $k$ -th gaussian maps of all persons without enlarging the heatmap. Finally, a 3D heatmap volume is obtained by stacking all heatmaps $_{\mathrm{~}}$ or $\pmb{L}$ ) along the temporal dimension, which thus has the size of $K\times T\times H\times W$ .

第 $k_{t h}$ 肢体位于关节 $a_{k}$ 和 $b_{k}$ 之间。函数 $\mathcal{D}$ 计算点 $(i,j)$ 到线段 $\left[(x_{a_{k}},y_{a_{k}}),(x_{b_{k}},y_{b_{k}})\right]$ 的距离。值得注意的是,虽然上述过程假设每帧中只有一个人,但我们可以轻松将其扩展到多人场景,此时直接累加所有人的第 $k$ 个高斯热图而无需扩大热图尺寸。最终通过沿时间维度堆叠所有热图 $_{\mathrm{~}}$ 或 $\pmb{L}$ ) 得到 3D 热图体积,其尺寸为 $K\times T\times H\times W$。

In practice, we further apply two techniques to reduce the redundancy of 3D heatmap volumes. (1) SubjectsCentered Cropping. Making the heatmap as large as the frame is inefficient, especially when the persons of interest only act in a small region. In such cases, we first find the smallest bounding box that envelops all the 2D poses across frames. Then we crop all frames according to the found box and resize them to the target size. Consequently, the size of the 3D heatmap volume can be reduced spatially while all 2D poses and their motion are kept. (2) Uniform Sampling. The 3D heatmap volume can also be reduced along the temporal dimension by sampling a subset of frames. Unlike previous works on RGB-based action recognition, where researchers usually sample frames in a short temporal window, such as sampling frames in a 64-frame temporal window as in SlowFast [18], we propose to use a uniform sampling strategy [65] for 3D-CNNs instead. In particular, to sample $n$ frames from a video, we divide the video into $n$ segments of equal length and randomly select one frame from each segment. The uniform sampling strategy is better at maintaining the global dynamics of the video. Our empirical studies show that the uniform sampling strategy is significantly beneficial for skeleton-based action recognition. More illustration about generating 3D heatmap volumes is provided in Sec B.

实践中,我们进一步应用两种技术来降低3D热图体积的冗余度。(1) 以主体为中心的裁剪。将热图保持与帧同尺寸是低效的,特别是当目标人物仅在小范围活动时。这种情况下,我们首先找到跨帧包裹所有2D姿态的最小边界框,然后根据该边界框裁剪所有帧并调整至目标尺寸。这样可以在保留所有2D姿态及其运动的同时,从空间维度减小3D热图体积。(2) 均匀采样。通过抽取帧子集,3D热图体积还能沿时间维度缩减。与基于RGB的动作识别研究不同(前人通常采用短时窗采样,如SlowFast [18]采用的64帧时窗采样),我们提出对3D-CNN采用均匀采样策略 [65]。具体而言,要从视频中采样$n$帧,我们将视频均分为$n$段,每段随机选取一帧。均匀采样策略能更好地保持视频的全局动态特征。实证研究表明,该策略对基于骨架的动作识别具有显著优势。更多关于3D热图生成的说明详见附录B节。

Figure 2. Our Framework. For each frame in a video, we first use a two-stage pose estimator (detection $^+$ pose estimation) for 2D human pose extraction. Then we stack heatmaps of joints or limbs along the temporal dimension and apply pre-processing to the generated 3D heatmap volumes. Finally, we use a 3D-CNN to classify the 3D heatmap volumes.

图 2: 我们的框架。对于视频中的每一帧,我们首先使用两阶段姿态估计器(检测 $^+$ 姿态估计)进行二维人体姿态提取。接着沿时间维度堆叠关节或肢体的热图,并对生成的3D热图体积进行预处理。最后,我们使用3D-CNN对3D热图体积进行分类。

Table 2. Evalution of PoseConv3D variants. ‘s’ indicates shallow (fewer layers); ‘HR’ indicates high-resolution (double height & width); ‘wd’ indicates wider network with double channel size.

表 2: PoseConv3D变体评估。's'表示浅层(较少层数);'HR'表示高分辨率(高度和宽度加倍);'wd'表示通道数加倍的宽网络。

| Backbone | Variant | NTU60-XSub | FLOPs | Params |

|---|---|---|---|---|

| SlowOnly | 93.7 | 15.9G | 2.0M | |

| SlowOnly | HR | 93.6 | 73.0G | 8.0M |

| SlowOnly | wd | 93.7 | 54.9G | 7.9M |

| C3D | 93.0 | 25.2G | 6.9M | |

| C3D | S | 92.9 | 16.8G | 3.4M |

| X3D | 92.6 | 1.1G | 531K | |

| X3D | S | 92.3 | 0.6G | 241K |

3.3. 3D-CNN for Skeleton-based Action Recognition

3.3. 基于骨架动作识别的3D-CNN

For skeleton-based action recognition, GCN has long been the mainstream backbone. In contrast, 3D-CNN, an effective network structure commonly used in RGB-based action recognition [6, 18, 20], is less explored in this direction. To demonstrate the power of 3D-CNN in capturing s patio temporal dynamics of skeleton sequences, we design two families of 3D-CNNs, namely PoseConv3D for the Pose modality and RGBPose-Conv3D for the $R G B{+}P o s e$ dual-modality.

对于基于骨架的动作识别,GCN长期以来一直是主流骨干网络。相比之下,3D-CNN这一在基于RGB的动作识别中常用的有效网络结构[6, 18, 20],在该领域却鲜少被探索。为了展示3D-CNN在捕捉骨架序列时空动态特性方面的能力,我们设计了两类3D-CNN架构:针对姿态模态的PoseConv3D,以及面向$RGB{+}Pose$双模态的RGBPose-Conv3D。

PoseConv3D. PoseConv3D focuses on the modality of human skeletons, which takes 3D heatmap volumes as input and can be instantiated with various 3D-CNN backbones. Two modifications are needed to adapt 3D-CNNs to skeleton-based action recognition: (1) down-sampling operations in early stages are removed from the 3D-CNN since the spatial resolution of 3D heatmap volumes does not need to be as large as RGB clips $4\times$ smaller in our setting); (2) a shallower (fewer layers) and thinner (fewer channels) network is sufficient to model s patio temporal dynamics of human skeleton sequences since 3D heatmap volumes are already mid-level features for action recognition. Based on these principles, we adapt three popular 3D-CNNs: C3D [60], SlowOnly [18], and X3D [17], to skeleton-based action recognition (Table 11 demonstrates the architectures of the three backbones as well as their variants). The different variants of adapted 3D-CNNs are evaluated on the NTURGB $^+$ D-XSub benchmark (Table 2). Adopting a lightweight version of 3D-CNNs can significantly reduce the computational complexity at the cost of a slight recognition performance drop $(\leq0.3%$ for all 3D backbones). In experiments, we use SlowOnly as the default backbone, considering its simplicity (directly inflated from ResNet) and good recognition performance. PoseConv3D can outperform representative GCN / 2D-CNN counterparts across various benchmarks, both in accuracy and efficiency. More importantly, the interoperability between PoseConv3D and popular networks for RGB-based action recognition makes it easy to involve human skeletons in multi-modality fusion.

PoseConv3D。PoseConv3D专注于人体骨骼模态,以3D热图体积作为输入,并可通过多种3D-CNN骨干网络实例化。为使3D-CNN适配基于骨骼的动作识别,需进行两项改进:(1) 移除3D-CNN早期阶段的下采样操作,因为3D热图体积的空间分辨率无需像RGB片段那么大(在我们的设置中小4倍);(2) 由于3D热图体积已是动作识别的中层特征,更浅(更少层数)更薄(更少通道)的网络足以建模人体骨骼序列的时空动态。基于这些原则,我们改造了三种主流3D-CNN:C3D [60]、SlowOnly [18]和X3D [17](表11展示了这三种骨干网络及其变体的架构)。改造后的3D-CNN不同变体在NTURGB$^+$D-XSub基准上进行了评估(表2)。采用轻量级3D-CNN版本可显著降低计算复杂度,仅需付出轻微识别性能下降的代价(所有3D骨干网络均≤0.3%)。实验中我们选择SlowOnly作为默认骨干网络,因其结构简单(直接从ResNet扩展而来)且识别性能良好。PoseConv3D在各类基准测试的准确率和效率上均优于代表性GCN/2D-CNN方案。更重要的是,PoseConv3D与基于RGB的动作识别通用网络之间的互操作性,使其能轻松融入多模态融合中的人体骨骼信息。

RGBPose-Conv3D. To show the interoperability of PoseConv3D, we propose RGBPose-Conv3D for the early fusion of human skeletons and RGB frames. It is a two-stream 3D-CNN with two pathways that respectively process RGB modality and Pose modality. While a detailed instantiation of RGBPose-Conv3D is included in Sec C.2, the architecture of RGBPose-Conv3D follows several principles in general: (1) the two pathways are asymmetrical due to the different characteristics of the two modalities: Compared to the RGB pathway, the pose pathway has a smaller channel width, a smaller depth, as well as a smaller input spatial resolution. (2) Inspired by SlowFast [18], bidirectional lateral connections between the two pathways are added to promote early-stage feature fusion between two modalities. To avoid over fitting, RGBPose-Conv3D is trained with two individual cross-entropy losses respectively for each pathway. In experiments, we find that early-stage feature fusion, achieved by lateral connections, leads to consistent improvement compared to late-fusion only.

RGBPose-Conv3D。为了展示PoseConv3D的互操作性,我们提出了RGBPose-Conv3D用于人体骨骼和RGB帧的早期融合。这是一个双流3D-CNN架构,包含分别处理RGB模态和姿态模态的两条通路。虽然附录C.2详细阐述了RGBPose-Conv3D的具体实现,但其架构总体上遵循以下原则:(1) 由于两种模态的特性差异,两条通路采用非对称设计:与RGB通路相比,姿态通路具有更小的通道宽度、更浅的深度以及更低的输入空间分辨率。(2) 受SlowFast [18]启发,我们在两条通路间添加了双向横向连接以促进早期特征融合。为避免过拟合,RGBPose-Conv3D采用双路独立交叉熵损失进行训练。实验表明,通过横向连接实现的早期特征融合相比仅用后期融合能带来持续的性能提升。

4. Experiments

4. 实验

4.1. Dataset Preparation

4.1. 数据集准备

We use six datasets in our experiments: FineGYM [49], NTURGB $+\mathrm{D}$ [38, 48], Kinetics400 [6, 71], UCF101 [57], HMDB51 [29] and Volleyball [23]. Unless otherwise specified, we use the Top-Down approach for pose extraction: the detector is Faster-RCNN [46] with the ResNet50 backbone, the pose estimator is HRNet [59] pre-trained on COCO-keypoint [35]. For all datasets except FineGYM, 2D poses are obtained by directly applying TopDown pose estimators to RGB inputs. We report the Mean Top-1 accuracy for FineGYM and Top-1 accuracy for other datasets. We adopt the 3D ConvNets implemented in MMAction2 [11] in experiments.

我们在实验中使用了六个数据集:FineGYM [49]、NTURGB+D [38, 48]、Kinetics400 [6, 71]、UCF101 [57]、HMDB51 [29] 和 Volleyball [23]。除非另有说明,我们采用自上而下 (Top-Down) 方法进行姿态提取:检测器为基于 ResNet50 骨干网络的 Faster-RCNN [46],姿态估计器为在 COCO-keypoint [35] 上预训练的 HRNet [59]。除 FineGYM 外,其他数据集的 2D 姿态均通过直接将 TopDown 姿态估计器应用于 RGB 输入获得。我们报告 FineGYM 的平均 Top-1 准确率和其他数据集的 Top-1 准确率。实验中采用了 MMAction2 [11] 实现的 3D ConvNets。

FineGYM. FineGYM is a fine-grained action recognition dataset with 29K videos of 99 fine-grained gymnastic action classes. During pose extraction, we compare three different kinds of person bounding boxes: 1. Person bounding boxes predicted by the detector (Detection); 2. GT bounding boxes for the athlete in the first frame, tracking boxes for the rest frames (Tracking). 3. GT bounding boxes for the athlete in all frames (GT). In experiments, we use human poses extracted with the third kind of bounding boxes unless otherwise noted.

FineGYM。FineGYM是一个细粒度动作识别数据集,包含99个细粒度体操动作类别的2.9万段视频。在姿态提取过程中,我们比较了三种不同的人物边界框:1. 检测器预测的人物边界框(Detection);2. 第一帧中运动员的真实边界框(GT),其余帧使用跟踪框(Tracking);3. 所有帧中运动员的真实边界框(GT)。实验中如无特别说明,均采用第三种边界框提取的人体姿态。

NTURGB $\mathbf{+D}$ . NTURGB $+\mathrm{D}$ is a large-scale human action recognition dataset collected in the lab. It has two versions, namely NTU-60 and NTU-120 (a superset of NTU60): NTU-60 contains 57K videos of 60 human actions, while NTU-120 contains 114K videos of 120 human actions. The datasets are split in three ways: Cross-subject (X-Sub), Cross-view (X-View, for NTU-60), Cross-setup (X-Set, for NTU-120), for which action subjects, camera views, camera setups are different in training and validation. The 3D skeletons collected by sensors are available for this dataset. Unless otherwise specified, we conduct experiments on the $\mathbf{X}$ -sub splits for NTU-60 and NTU-120.

NTURGB $\mathbf{+D}$。NTURGB $+\mathrm{D}$ 是一个在实验室环境下收集的大规模人类动作识别数据集,包含NTU-60和NTU-120(NTU60的超集)两个版本:NTU-60包含60类人类动作的57K段视频,NTU-120则包含120类动作的114K段视频。数据集提供三种划分方式:跨被试者(X-Sub)、跨视角(X-View,仅NTU-60)、跨设备(X-Set,仅NTU-120),其训练集与验证集在动作执行者、摄像机视角或采集设备配置上存在差异。该数据集提供传感器采集的3D骨骼数据。除非特别说明,我们的实验均在NTU-60和NTU-120的$\mathbf{X}$-sub划分上进行。

Kinetics 400, UCF101, and HMDB51. The three datasets are general action recognition datasets collected from the web. Kinetics 400 is a large-scale video dataset with 300K videos from 400 action classes. UCF101 and HMDB51 are smaller, contain 13K videos from 101 classes and 6.7K videos from 51 classes, respectively. We conduct experiments using 2D-pose annotations extracted with our TopDown pipeline.

Kinetics 400、UCF101和HMDB51。这三个数据集是从网络收集的通用动作识别数据集。Kinetics 400是一个大规模视频数据集,包含来自400个动作类别的30万段视频。UCF101和HMDB51规模较小,分别包含来自101个类别的1.3万段视频和来自51个类别的6700段视频。我们使用TopDown流程提取的2D姿态标注进行实验。

Volleyball. Volleyball is a group activity recognition dataset with 4830 videos of 8 group activity classes. Each frame contains approximately 12 persons, while only the center frame has annotations for GT person boxes. We use tracking boxes from [47] for pose extraction.

排球

排球是一个群体活动识别数据集,包含4830个视频,涵盖8种群体活动类别。每帧画面平均有12人,但仅中心帧标注了真实人物边界框(GT person boxes)。我们采用[47]的追踪框进行姿态提取。

4.2. Good properties of PoseConv3D

4.2. PoseConv3D 的良好特性

To elaborate on the good properties of 3D convolutional networks over graph networks, we compare PoseSlowOnly with MS-G3D [40], a representative GCN-based approach in multiple dimensions. Two models take exactly the same input (coordinate-triplets for GCN, heatmaps generated from coordinate-triplets for PoseConv3D).

为了详细说明3D卷积网络相对于图网络的优越特性,我们从多个维度将PoseSlowOnly与基于GCN的代表性方法MS-G3D [40]进行对比。两个模型采用完全相同的输入(GCN使用坐标三元组,PoseConv3D使用坐标三元组生成的热图)。

Performance & Efficiency. In performance comparison between PoseConv3D and GCN, we adopt the input shape $48\times56\times56$ for PoseConv3D. Table 3 shows that under such configuration, PoseConv3D is lighter than the GCN counterpart, both in the number of parameters and FLOPs. Though being light-weighted, PoseConv3D achieves competitive performance on different datasets. The 1-clip testing result is better than or comparable with a state-of-theart GCN while requiring much less computation. With 10-clip testing, PoseConv3D consistently outperforms the state-of-the-art GCN. Only PoseConv3D can take advantage of multi-view testing since it subsamples the entire heatmap volumes to form each input. Besides, PoseConv3D uses the same architecture and hyper parameters for different datasets, while GCN relies on heavy tuning of architectures and hyper parameters on different datasets [40].

性能与效率。在PoseConv3D与GCN的性能对比中,我们为PoseConv3D采用$48\times56\times56$的输入尺寸。表3显示,在此配置下,PoseConv3D在参数量和FLOPs方面均比GCN更轻量。尽管轻量化,PoseConv3D在不同数据集上仍具备竞争力:单片段测试结果优于或持平当前最优GCN,同时计算需求显著降低;十片段测试时PoseConv3D持续超越最优GCN。此外,PoseConv3D是唯一能利用多视角测试优势的方法,因其通过子采样完整热图体积生成每个输入。值得注意的是,PoseConv3D对不同数据集采用统一架构和超参数,而GCN需针对不同数据集进行繁重的架构调优和超参数调整 [40]。

Robustness. To test the robustness of both models, we can drop a proportion of keypoints in the input and see how such perturbation will affect the final accuracy. Since limb keypoints3 are more critical for gymnastics than the torso or face keypoints, we test both models by randomly dropping one limb keypoint in each frame with probability $p$ . In Table 4, we see that PoseConv3D is highly robust to input perturbations: dropping one limb keypoint per frame leads to a moderate drop (less than $1%$ ) in Mean-Top1, while for GCN, it’s $14.3%$ . Someone would argue that we can train GCN with the noisy input, similar to the dropout operation [58]. However, even under this setting, the Mean-Top1 accuracy of GCN still drops by $1.4%$ for the case $p=1$ . Besides, with robust training, there will be an additional $1.1%$ drop for the case $p=0$ . The experiment results show that PoseConv3D significantly outperforms GCN in terms of robustness for pose recognition.

鲁棒性。为测试两种模型的鲁棒性,我们可以在输入中随机丢弃一定比例的关键点,观察此类扰动如何影响最终准确率。由于体操动作中肢体关键点3比躯干或面部关键点更为重要,我们通过以概率$p$随机丢弃每帧中的一个肢体关键点来测试两种模型。表4显示,PoseConv3D对输入扰动具有高度鲁棒性:每帧丢弃一个肢体关键点仅导致Mean-Top1适度下降(小于$1%$),而GCN的降幅达$14.3%$。有人可能提出可以像dropout操作[58]那样用噪声输入训练GCN,但即使在此设置下,当$p=1$时GCN的Mean-Top1准确率仍会下降$1.4%$。此外,采用鲁棒训练会导致$p=0$时的准确率额外下降$1.1%$。实验结果表明,在姿态识别的鲁棒性方面,PoseConv3D显著优于GCN。

Generalization. To compare the generalization of GCN and 3D-CNN, we design a cross-model check on FineGYM.

泛化性。为了比较 GCN 和 3D-CNN 的泛化能力,我们在 FineGYM 数据集上设计了跨模型验证。

Table 3. PoseConv3D v.s. GCN. We compare the performance of PoseConv3D and GCN on several datasets. For PoseConv3D, we report the results of 1/10-clip testing. We exclude parameters and FLOPs of the FC layer, since it depends on the number of classes.

表 3: PoseConv3D 与 GCN 对比。我们在多个数据集上比较了 PoseConv3D 和 GCN 的性能。对于 PoseConv3D,我们报告了 1/10-clip 测试的结果。我们排除了全连接层 (FC) 的参数和 FLOPs,因为这取决于类别数量。

| 数据集 | MS-G3D Acc | MS-G3D Params | MS-G3D FLOPs | Pose-SlowOnly 1-clip | Pose-SlowOnly 10-clip | Pose-SlowOnly Params | Pose-SlowOnly FLOPs |

|---|---|---|---|---|---|---|---|

| FineGYM | 92.0 | 2.8M | 24.7G | 92.4 | 93.2 | 15.9G | |

| NTU-60 | 91.9 | 2.8M | 16.7G | 93.1 | 93.7 | 15.9G | |

| NTU-120 | 84.8 | 2.8M | 16.7G | 85.1 | 86.0 | 15.9G | |

| Kinetics400 | 44.9 | 2.8M | 17.5G | 44.8 | 46.0 | 15.9G |

Table 4. Recognition performance w. different dropping KP probabilities. PoseConv3D is more robust to input perturbations.

表 4: 不同关键点丢弃概率下的识别性能。PoseConv3D对输入扰动具有更强的鲁棒性。

| 方法 / p | 0 | 1/8 | 1/4 | 1/2 | 1 |

|---|---|---|---|---|---|

| MS-G3D | 92.0 | 91.0 | 90.2 | 86.5 | 77.7 |

| + 鲁棒训练 | 90.9 | 91.0 | 91.0 | 91.0 | 90.6 |

| Pose-SlowOnly | 92.4 | 92.4 | 92.3 | 92.1 | 91.5 |

Specifically, we use two models, i.e., HRNet (HigherQuality, or HQ for short) and MobileNet (Lower-Quality, LQ) for pose estimation and train two PoseConv3D on top, respectively. During testing, we feed LQ input into the model trained with HQ one and vice versa. From Table 5a, we see that the accuracy drops less when using lowerquality poses for both training & testing with PoseConv3D compared to GCN. Similarly, we can also vary the source of person boxes, using either GT boxes (HQ) or tracking results (LQ) for training and testing. The results are shown in Table 5b. The performance drop of PoseConv3D is also much smaller than GCN.

具体而言,我们采用HRNet(高质量,简称HQ)和MobileNet(低质量,简称LQ)两种模型进行姿态估计,并分别在其基础上训练两个PoseConv3D。测试时,我们将LQ输入数据输入到用HQ数据训练的模型中,反之亦然。从表5a可见,与GCN相比,PoseConv3D在训练和测试阶段均使用低质量姿态数据时,准确率下降幅度更小。类似地,我们还可以调整人物检测框的来源,在训练和测试时分别使用GT框(HQ)或跟踪结果(LQ)。结果如表5b所示,PoseConv3D的性能下降幅度也远小于GCN。

S cal ability. The computation of GCN scales linearly with the increasing number of persons in the video, making it less efficient for group activity recognition. We use an experiment on the Volleyball dataset [23] to prove that. Each video in the dataset contains 13 persons and 20 frames. For GCN, the corresponding input shape will be $13\times20\times17\times3$ , 13 times larger than the input for one person. Under such configuration, the number of parameters and FLOPs for GCN is $2.8\mathbf{M}$ and 7.2G $(13\times)$ . For PoseConv3D, we can use one single heatmap volume (with shape $17\times12\times56\times56)$ to represent all 13 persons4. The base channel-width of Pose-SlowOnly is set to 16, leading to only 0.52M parameters and 1.6 GFLOPs. Despite the much smaller parameters and FLOPs, PoseConv3D achieves $91.3%$ Top-1 accuracy on Volleyball-validation, $2.1%$ higher than the GCN-based approach.

可扩展性。GCN的计算量随着视频中人数增加而线性增长,导致其在群体活动识别中效率较低。我们通过Volleyball数据集[23]的实验验证了这一点:该数据集每段视频包含13人和20帧画面。对GCN而言,其输入张量维度将达到$13\times20\times17\times3$,是单人输入的13倍。在此配置下,GCN参数量为$2.8\mathbf{M}$,计算量为7.2G$(13\times)$。而PoseConv3D仅需单个热图体量(维度$17\times12\times56\times56)$即可表征全部13人4。当Pose-SlowOnly基础通道宽度设为16时,参数量仅0.52M,计算量1.6 GFLOPs。尽管参数量和计算量显著降低,PoseConv3D在Volleyball验证集上仍取得$91.3%$的Top-1准确率,较基于GCN的方法提升$2.1%$。

4.3. Multi-Modality Fusion with RGBPose-Conv3D

4.3. 基于 RGBPose-Conv3D 的多模态融合

The 3D-CNN architecture of PoseConv3D makes it more flexible to fuse pose with other modalities via some early fusion strategies. For example, in RGBPose-Conv3D, lateral connections between the $R G B$ -pathway and Pose-pathway are exploited for cross-modality feature fusion in the early stage. In practice, we first train two models for RGB and Pose modalities separately and use them to initialize the RGBPose-Conv3D. We continue to finetune the network for several epochs to train the lateral connections. The final prediction is achieved by late fusing the prediction scores from both pathways. RGBPose-Conv3D can achieve better fusing results with early+late fusion.

PoseConv3D的3D-CNN架构使其能够通过一些早期融合策略更灵活地将姿态与其他模态融合。例如,在RGBPose-Conv3D中,RGB通道和姿态通道之间的横向连接被用于早期的跨模态特征融合。实践中,我们首先分别训练RGB和姿态模态的两个模型,并用它们初始化RGBPose-Conv3D。然后继续微调网络几个周期以训练横向连接。最终预测通过后期融合两个通道的预测分数实现。RGBPose-Conv3D通过早期+后期融合可以获得更好的融合效果。

Table 5. Train/Test w. different pose annotations. PoseConv3D shows great generalization capability in the cross-PoseAnno setting (LQ for low-quality; HQ for high-quality).

(a) Train/Test w. Pose from different estimators.

表 5: 采用不同姿态标注的训练/测试。PoseConv3D 在跨姿态标注设置中展现出强大的泛化能力 (LQ 表示低质量;HQ 表示高质量)。

| Train→Test | |||

|---|---|---|---|

| HQ→LQ | LQ→HQ | LQ→LQ | |

| MS-G3D | 79.3 | 87.9 | 89.0 |

| PoseConv3D | 86.5 | 91.6 | 90.7 |

(a) 采用不同估计器生成姿态的训练/测试。

(b) Train/Test w. Pose extracted with different boxes.

| Train→Test | ||

|---|---|---|

| HQ→LQ LQ→HQ | LQ→LQ | |

| MS-G3D | 78.5 89.1 | 82.9 |

| PoseConv3D | 82.1 90.6 | 85.4 |

(b) 使用不同边界框提取姿态的训练/测试。

Table 6. The design of RGBPose-Conv3D. Bi-directional lateral connections outperform uni-directional ones in the early stage feature fusion.

表 6: RGBPose-Conv3D的设计。双向横向连接在早期特征融合中表现优于单向连接。

| 后期融合 | RGB→Pose | Pose→RGB | RGB↔Pose | |

|---|---|---|---|---|

| 1-clip | 92.6 | 93.0 | 93.4 | 93.6 |

| 10-clip | 93.4 | 93.7 | 93.8 | 94.1 |

Table 7. The universality of RGBPose-Conv3D. The early+late fusion strategy works both on RGB-dominant NTU-60 and Posedominant FineGYM.

表 7. RGBPose-Conv3D的通用性。早期+晚期融合策略在RGB主导的NTU-60和姿态主导的FineGYM上均有效。

| RGB | Pose | latefusion | early+late fusion | |

|---|---|---|---|---|

| FineGYM | 87.2/88.5 | 91.0/92.0 | 92.6/93.4 | 93.6/94.1 |

| NTU-60 | 94.1/94.9 | 92.8/93.2 | 95.5/96.0 | 96.2/96.5 |

We first compare uni-directional lateral connections and bi-directional lateral connections in Table 6. The result shows that bi-directional feature fusion is better than unidirectional ones for RGB and Pose. With bi-directional feature fusion in the early stage, the early+late fusion with 1-clip testing can outperform the late fusion with 10-clip testing. Besides, RGBPose-Conv3D also works in situations when the importance of two modalities is different. The pose modality is more important in FineGYM and vice versa in NTU-60. Yet we observe performance improvement by early+late fusion on both of them in Table 7. We demonstrate the detailed instantiation of RGBPose-Conv3D we used in Sec C.2.

我们首先在表6中比较了单向横向连接和双向横向连接。结果表明,对于RGB和姿态模态,双向特征融合优于单向融合。通过在早期阶段采用双向特征融合,1片段测试的早期+晚期融合效果能超越10片段测试的晚期融合。此外,RGBPose-Conv3D在两种模态重要性不同的场景下同样有效:在FineGYM数据集中姿态模态更重要,而在NTU-60中则相反。但如表7所示,我们观察到早期+晚期融合在这两个数据集上均能提升性能。具体实现细节将在C.2节展示。

Table 8. PoseConv3D is better or comparable to previous state-of-the-arts. With estimated high-quality 2D skeletons and the great capacity of 3D-CNN to learn s patio temporal features, PoseConv3D achieves superior performance across 5 out of 6 benchmarks. ${\cal J},{\cal L}$ means using joint/limb-based heatmaps. $^{++}$ denotes using the same human skeletons as ours. Numbers with * are reported by [49].

表 8. PoseConv3D优于或与现有最优方法相当。通过估计高质量2D骨骼和3D-CNN强大的时空特征学习能力,PoseConv3D在6个基准测试中的5个上实现了卓越性能。${\cal J},{\cal L}$表示使用基于关节/肢体的热图。$^{++}$表示使用与我们相同的人体骨骼。带*的数字来自[49]。

| 方法 | NTU60-XSub | NTU60-XView | NTU120-XSub | NTU120-XSet | Kinetics | FineGYM |

|---|---|---|---|---|---|---|

| ST-GCN [71] | 81.5 | 88.3 | 70.7 | 73.2 | 30.7 | 25.2* |

| AS-GCN [34] | 86.8 | 94.2 | 78.3 | 79.8 | 34.8 | |

| RA-GCN [54] | 87.3 | 93.6 | 81.1 | 82.7 | ||

| AGCN [51] | 88.5 | 95.1 | 36.1 | |||

| DGNN [50] | 89.9 | 96.1 | 36.9 | |||

| FGCN [72] | 90.2 | 96.3 | 85.4 | 87.4 | ||

| Shift-GCN [9] | 90.7 | 96.5 | 85.9 | 87.6 | ||

| DSTA-Net [52] | 91.5 | 96.4 | 86.6 | 89.0 | ||

| MS-G3D [40] | 91.5 | 96.2 | 86.9 | 88.4 | 38.0 | |

| MS-G3D ++ | 92.2 | 96.6 | 87.2 | 89.0 | 45.1 | 92.6 |

| PoseConv3D (J) | 93.7 | 96.6 | 86.0 | 89.6 | 46.0 | 93.2 |

| PoseConv3D (J + L) | 94.1 | 97.1 | 86.9 | 90.3 | 47.7 | 94.3 |

Table 9. Comparison to the state-of-the-art of Multi-Modality Action Recognition. Strong recognition performance is achieved on multiple benchmarks with multi-modality fusion. R, F, P indicate RGB, Flow, Pose.

表 9: 多模态动作识别的当前最优方法对比。通过多模态融合在多个基准测试中实现了强大的识别性能。R、F、P分别表示RGB、光流、姿态。

| 数据集 | 先前最优方法 | 本文方法 |

|---|---|---|

| FineGYM-99 | 87.7 (R) [30] | 95.6 (R + P) |

| NTU60(X-Sub/X-View) | 95.7 / 98.9 (R + P) [14] | 97.0 / 99.6 (R + P) |

| NTU120 (X-Sub /X-Set) | 90.7/ 92.5 (R + P) [12] | 95.3/96.4 (R + P) |

4.4. Comparisons with the state-of-the-art

4.4. 与最先进技术的比较

Skeleton-based Action Recognition. In Table 8, we compare PoseConv3D with prior works for skeleton-based action recognition. Prior works (Table 8 upper) use 3D skeletons collected with Kinect for NTURGB $+\mathrm{D}$ , 2D skeletons extracted with OpenPose for Kinetics (details for FineGYM skeleton data are unknown). PoseConv3D adopts 2D skeletons extracted with good practices introduced in Sec 3.1, which have better quality. We instantiate PoseConv3D with the SlowOnly backbone, feed 3D heatmap volumes of shape $48\times56\times56$ as inputs, and report the accuracy obtained by 10-clip testing. For a fair comparison, we also evaluate the state-of-the-art MS-G3D with our 2D human skeletons $(M S{-}G3D{+}{+})$ : $M S{\bf-}G3D{\bf+}{\bf+}$ directly takes the extracted coordinate-triplets $(x,y,c)$ as inputs, while PoseConv3D takes pseudo heatmaps generated from the coordinate-triplets as inputs. With high quality 2D human skeletons, $M S{-}G3D{+}{+}$ and PoseConv3D both achieve far better performance than previous state-of-the-arts, demonstrating the importance of the proposed practices for pose extraction in skeleton-based action recognition. When both take high-quality 2D poses as inputs, PoseConv3D outperforms the state-of-the-art MS-G3D across 5 of 6 benchmarks, showing its great s patio temporal feature learning capability. PoseConv3D achieves by far the best results on 3 of 4 NTURGB $+\mathrm{D}$ benchmarks. On Kinetics, PoseConv3D surpasses $\mathrm{MS-G3D++}$ by a noticeable margin, significantly outperforming all previous methods. Except for the baseline reported in [49], no work aims at skeleton-based action recognition on FineGYM before, while our work first improves the performance to a decent level.

基于骨架的动作识别。在表8中,我们将PoseConv3D与先前基于骨架动作识别的工作进行对比。先前工作(表8上半部分)使用Kinect采集的3D骨架处理NTURGB+D数据集,采用OpenPose提取的2D骨架处理Kinetics数据集(FineGYM骨架数据细节未知)。PoseConv3D采用第3.1节介绍的优化方法提取2D骨架,质量更优。我们以SlowOnly为主干网络实例化PoseConv3D,输入形状为48×56×56的3D热力图体积,并报告10片段测试的准确率。为公平对比,我们还用自提2D人体骨架评估了当前最优的MS-G3D $(MS-G3D++)$ :$MS{\bf-}G3D{\bf+}{\bf+}$ 直接使用提取的坐标三元组 $(x,y,c)$ 作为输入,而PoseConv3D使用坐标三元组生成的伪热力图作为输入。采用高质量2D人体骨架时,$MS-G3D++$ 和PoseConv3D均显著超越先前最优方法,证明所提姿态提取方案对骨架动作识别的重要性。当两者均采用高质量2D姿态输入时,PoseConv3D在6个基准测试中的5个超越当前最优MS-G3D,展现出色时空特征学习能力。PoseConv3D在4个NTURGB+D基准测试中的3个取得迄今最佳结果。在Kinetics数据集上,PoseConv3D明显超越 $\mathrm{MS-G3D++}$ ,显著优于所有先前方法。除[49]报告的基线外,此前未有工作针对FineGYM进行骨架动作识别,而本研究首次将其性能提升至较好水平。

(a) Mulit-modality action recognition with RGBPose-Conv3D. (b) Mulit-modality action recognition with LateFusion.5

(a) 基于RGBPose-Conv3D的多模态动作识别

(b) 基于LateFusion的多模态动作识别

| 数据集 | 先前最佳方法 | 我们的方法 (姿态) | 我们的方法 (融合) |

|---|---|---|---|

| Kinetics400 | 84.9 (R) [39] | 47.7 | 85.5 (R + P) |

| UCF101 | 98.6 (R + F) [16] | 87.0 | 98.8 (R + F + P) |

| HMDB51 | 83.8 (R + F) [16] | 69.3 | 85.0 (R + F + P) |

Multi-modality Fusion. As a powerful representation itself, skeletons are also complementary to other modalities, like RGB appearance. With multi-modality fusion (RGBPose-Conv3D or LateFusion), we achieve state-ofthe-art results across 8 different video recognition benchmarks. We apply the proposed RGBPose-Conv3D to FineGYM and 4 NTURGB $+\mathrm{D}$ benchmarks, using R50 as the backbone; 16, 48 as the temporal length for RGB/PosePathway. Table 9a shows that our early+late fusion achieves excellent performance across various benchmarks. We also try to fuse the predictions of PoseConv3D directly with other modalities with LateFusion. Table 9b shows that late fusion with the Pose modality can push the recognition precision to a new level. We achieve the new state-of-theart on three action recognition benchmarks: Kinetics 400, UCF101, and HMDB51. On the challenging Kinetics 400 benchmark, fusing with PoseConv3D predictions increases the recognition accuracy by $0.6%$ beyond the state-of-theart [39], which is strong evidence for the complement ari ty

多模态融合。作为一种强大的表征形式,骨架数据与其他模态(如RGB外观)具有互补性。通过多模态融合(RGBPose-Conv3D或LateFusion),我们在8个不同的视频识别基准测试中取得了最先进的结果。我们将提出的RGBPose-Conv3D应用于FineGYM和4个NTURGB $+\mathrm{D}$ 基准测试,采用R50作为主干网络,RGB/PosePathway的时间长度设为16和48。表9a显示我们的早期+晚期融合在各种基准测试中均表现出色。我们还尝试通过LateFusion将PoseConv3D的预测结果直接与其他模态融合。表9b表明,与姿态模态的晚期融合能将识别精度提升至新高度。我们在三个动作识别基准测试(Kinetics 400、UCF101和HMDB51)中实现了新的最先进水平。在具有挑战性的Kinetics 400基准测试中,与PoseConv3D预测结果的融合使识别准确率比现有最高水平[39]提升了 $0.6%$ ,这有力证明了互补性。

of the Pose modality.

姿态模态的

4.5. Ablation on Heatmap Processing

4.5. 热图处理消融实验

Subjects-Centered Cropping. Since the sizes and locations of persons can vary a lot in a dataset, focusing on the action subjects is the key to reserving as much information as possible with a relatively small $H\times W$ budget. To validate this, we conduct a pair of experiments on FineGYM with input size $32\times56\times56$ , with or without subjectscentered cropping. We find that subjects-centered cropping is helpful in data preprocessing, which improves the MeanTop1 by $1.0%$ , from $91.7%$ to $92.7%$ .

以主体为中心的裁剪。由于数据集中人物的尺寸和位置可能存在较大差异,在有限的 $H\times W$ 预算下,聚焦动作主体是保留最多信息的关键。为验证这一点,我们在FineGYM数据集上进行了两组输入尺寸为 $32\times56\times56$ 的实验(采用/不采用以主体为中心的裁剪)。结果表明,以主体为中心的裁剪能有效提升数据预处理效果,使MeanTop1准确率从 $91.7%$ 提升至 $92.7%$ ,增幅达 $1.0%$ 。

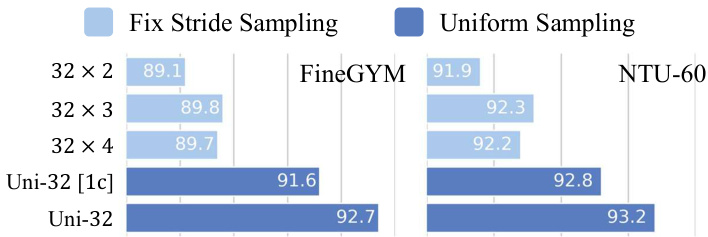

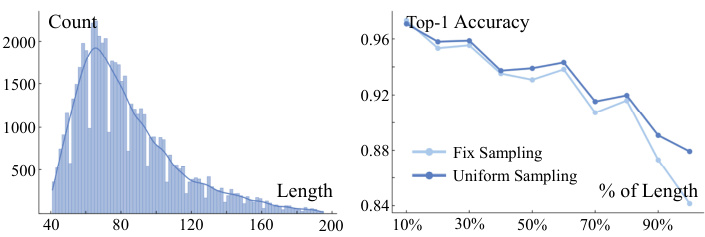

Uniform Sampling. The input sampled from a small temporal window may not capture the entire dynamic of the human action. To validate this, we conduct experiments on FineGYM and NTU-60. For fixed stride sampling, which samples from a fixed temporal window, we try to sample 32 frames with the temporal stride 2, 3, 4; for uniform sampling, we sample 32 frames uniformly from the entire clip. In testing, we adopt a fixed random seed when sampling frames from each clip to make sure the test results are reproducible. From Figure 3, we see that uniform sampling consistently outperforms sampling with fixed temporal strides. With uniform sampling, 1-clip testing can even achieve better results than fixed stride sampling with 10-clip testing. Note that the video length can vary a lot in NTU-60 and FineGYM. In a more detailed analysis, we find that uniform sampling mainly improves the recognition performance for longer videos in the dataset (Figure 4). Besides, uniform sampling also outperforms fixed stride sampling on RGBbased recognition on the two datasets6.

均匀采样。从较小时间窗口采样的输入可能无法完整捕捉人体动作的动态特性。为验证这一点,我们在FineGYM和NTU-60数据集上进行实验。对于固定步长采样(从固定时间窗口采样),我们尝试以2、3、4的时序步长采样32帧;而均匀采样则是从整个片段中均匀抽取32帧。测试时采用固定随机种子进行帧采样以确保结果可复现。如图3所示,均匀采样始终优于固定步长采样。采用均匀采样时,单片段测试效果甚至优于10片段固定步长采样。需注意NTU-60和FineGYM中视频长度差异较大,详细分析表明均匀采样主要提升数据集中较长视频的识别性能(图4)。此外,在这两个数据集的RGB识别任务上,均匀采样同样优于固定步长采样[6]。

Pseudo Heatmaps for Joints and Limbs. GCN approaches for skeleton-based action recognition usually ensemble results of multiple streams (joint, bone, etc.) to obtain better recognition performance [51]. The practice is also feasible for PoseConv3D. Based on the coordinates $(x,y,c)$ we saved, we can generate pseudo heatmaps for joints and limbs. In general, we find that both joint heatmaps and limb heatmaps are good inputs for 3D-CNNs. Ensembling the results from joint-PoseConv3D and limbPoseConv3D (namely PoseConv3D $(\pmb{J}+\pmb{L}))$ can lead to noticeable and consistent performance improvement.

关节与肢体的伪热图。基于骨架的动作识别GCN方法通常通过融合多流(关节、骨骼等)结果来提升识别性能[51]。这一做法同样适用于PoseConv3D。根据存储的坐标$(x,y,c)$,我们可以生成关节和肢体的伪热图。实验表明,关节热图和肢体热图都是3D-CNN的有效输入。融合关节PoseConv3D与肢体PoseConv3D(即PoseConv3D $(\pmb{J}+\pmb{L})$)的结果能带来显著且稳定的性能提升。

3D Heatmap Volumes v.s 2D Heatmap Aggregations. The 3D heatmap volume is a more ‘lossless’ 2D-pose representation, compared to 2D pseudo images aggregating heatmaps with color iz ation or temporal convolutions. PoTion [10] and PA3D [70] are not evaluated on popular benchmarks for skeleton-based action recognition, and there are no public implementations. In the preliminary study, we find that the accuracy of PoTion is much inferior $(\leq85%)$ to GCN or PoseConv3D $\mathrm{}\geq90%$ ). For an apple-to-apple comparison, we also re-implement PoTion, PA3D (with higher accuracy than reported) and evaluate them on UCF101, HMDB51, and NTURGB $+\mathrm{D}$ . PoseConv3D achieves much better recognition results with 3D heatmap volumes, than 2D-CNNs with 2D heatmap aggregations as inputs. With the lightweight X3D, PoseConv3D significantly outperforms 2D-CNNs, with comparable FLOPs and far fewer parameters (Table 10).

3D热图体积 vs 2D热图聚合。与通过着色或时序卷积聚合热图生成的2D伪图像相比,3D热图体积是一种更"无损"的2D姿态表示方法。PoTion [10] 和 PA3D [70] 未在基于骨架动作识别的流行基准测试中进行评估,且没有公开实现。初步研究表明,PoTion的准确率远低于GCN或PoseConv3D $(\leq85%\ vs\ \Delta all\geq90%)$。为进行公平对比,我们重新实现了PoTion和PA3D(精度高于原论文报告值),并在UCF101、HMDB51和NTURGB$+\mathrm{D}$数据集上进行评估。使用3D热图体积的PoseConv3D相比以2D热图聚合为输入的2D-CNNs取得了显著更好的识别效果。配合轻量级X3D框架时,PoseConv3D在计算量(FLOPs)相当但参数量大幅减少的情况下(表10),性能显著优于2D-CNNs。

Figure 3. Uniform Sampling outperforms Fix-Stride Sampling. All results are for 10-clip testing, except Uni-32[1c], which uses 1-clip testing.

图 3: 均匀采样 (Uniform Sampling) 优于固定步长采样 (Fix-Stride Sampling)。除 Uni-32[1c] 使用单片段测试外,其余结果均为 10 片段测试。

Figure 4. Uniform Sampling helps in modeling longer videos. L: The length distribution of NTU60-XSub val videos. R: Uniform Sampling improves the recognition accuracy of longer videos.

图 4: 均匀采样有助于建模更长视频。左: NTU60-XSub验证集视频长度分布。右: 均匀采样提高了较长视频的识别准确率。

Table 10. An apple-to-apple comparison between 3D heatmap volumes and 2D heatmap aggregations.

表 10: 3D热图体积与2D热图聚合的同类比较。

| 方法 | HMDB51 | UCF101 | NTU60-XSub | FLOPs | Params |

|---|---|---|---|---|---|

| PoTion[10] | 51.7 | 67.2 |