Single Frame Semantic Segmentation Using Multi-Modal Spherical Images


Abstract


In recent years, the research community has shown great interest in panoramic images, which offer a $360^{\circ}$ directional perspective. To fully realize their potential, multiple data modalities can be fed to a model and their complementary characteristics exploited for more robust and richer scene interpretation based on semantic segmentation. Existing research, however, has mostly concentrated on pinhole RGB-X semantic segmentation. In this study, we propose a transformer-based cross-modal fusion architecture to bridge the gap between multi-modal fusion and omnidirectional scene perception. We employ distortion-aware modules to address the extreme object deformations and panorama distortions that result from the equirectangular representation. Additionally, we conduct cross-modal interactions for feature rectification and information exchange before merging the features, in order to communicate long-range contexts for bi-modal and tri-modal feature streams. In thorough tests using combinations of four different modality types on three indoor panoramic-view datasets, our technique achieved state-of-the-art mIoU performance: $60.60\%$ on Stanford2D3DS [2] (RGB-HHA), $71.97\%$ on Structured3D [44] (RGB-D-N), and $35.92\%$ on Matterport3D [5] (RGB-D).

1. Introduction


With the increased availability of affordable commercial 3D sensing devices, researchers have in recent years become more interested in working with omnidirectional images, also often referred to as $360^{\circ}$, panoramic, or spherical images. In contrast to pinhole cameras, spherical images provide an ultra-wide $360^{\circ}\times180^{\circ}$ field of view (FoV), capturing more detailed spatial information about the entire scene in a single frame [14, 43]. Practical applications of such immersive and complete view perception include holistic and dense visual scene understanding [1], augmented and virtual reality (AR/VR) [26, 37], autonomous driving [11], and robot navigation [6].

Figure 1. Overview of our multi-modal panoramic segmentation architecture. The inputs are a combination of RGB, Depth, and Normals.

Generally, spherical images are represented using equirectangular projection (ERP) [38] or cubemap projection (CP) [31], which introduce additional challenges such as scene discontinuities, large image distortions, and object deformations, compounded by a lack of open-source datasets with diverse real-world scenarios. While extensive research has been conducted on pinhole-based learning methods [4,22,24,34,35], approaches tailored to ultra-wide panoramic images that inherently account for spherical deformations remain an open research topic. Furthermore, the scarcity of labeled panoramic data for model training, in both indoor and outdoor scenarios, has slowed progress in this domain.

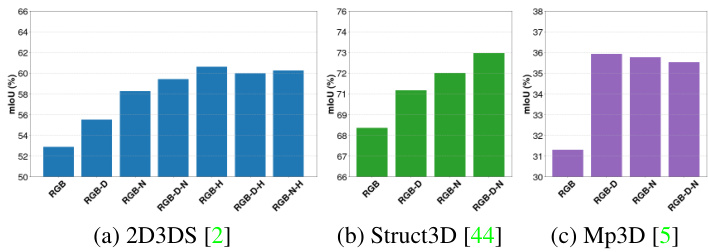

While previous panorama segmentation techniques have attained state-of-the-art performance on RGB-only images, they do not take advantage of complementary modalities to develop discriminative features in situations where it is difficult to discriminate based on texture information alone. With comprehensive cross-modal interactions for RGB-X modalities [22], our work extends the Trans4PASS+ [41] methodology to multi-modal panoramic semantic segmentation. On the Stanford2D3DS [2] dataset, we evaluate four distinct semantic segmentation tasks, namely RGB, RGB-Depth, RGB-Normal, and RGB-HHA, and reach a state-of-the-art mIoU of $60.60\%$ with RGB-HHA. For situations in which HHA is not accessible, we propose a tri-modal fusion architecture, which achieves a top mIoU of $75.86\%$ on Structured3D [44] (RGB-D-N) and $39.26\%$ on Matterport3D [5] (RGB-D-N). The performance of our system on the aforementioned indoor panoramic-view datasets is shown in Fig. 2.

Figure 2. Our cross-modal panoramic segmentation results with RGB, Depth, Normals, and HHA combinations on the Stanford2D3DS (left), Structured3D (middle), and Matterport3D (right) datasets.

In summary, we provide the following contributions:


- We investigate multi-modal panoramic semantic segmentation with four types of sensory data combinations for the first time.

- We explore the multi-modal fusion paradigm and introduce a tri-modal paradigm with cross-modal interactions for exploiting texture, depth, and geometry information in panoramas.

- On three indoor panoramic datasets that include RGB, Depth, Normal, and HHA sensor data combinations, our technique provides state-of-the-art performance.

2. Related Work


Semantic segmentation. Existing semantic segmentation designs typically follow a two-stage encoder-decoder paradigm [3, 8]. In the first stage, a backbone encoder module [15, 17, 36] creates a series of feature maps in order to capture high-level semantic information. A decoder module then gradually recovers the spatial information from the feature maps. In light of the success of the vision transformer (ViT) in image classification [12], recent research has focused on replacing convolutional backbones with transformer-based ones. Early studies mostly concentrated on the Transformer encoder design [9, 23, 33, 45], while later work avoided sophisticated decoders in favor of a lightweight All-MLP architecture [35], which improved efficiency, accuracy, and robustness.

Panoramic segmentation. Early methods for interpreting a scene holistically centered on applying perspective image-based models to distortion-mitigated wide field-of-view images. Eder et al. [13] propose a tangent image spherical representation, a distortion-mitigated, locally planar image grid tangent to a subdivided icosahedron. Lee et al. [21], on the other hand, use a spherical polyhedron to represent comparable omnidirectional views. Recent studies [25], however, use distortion-aware modules in the network architecture to operate directly on the equirectangular representation. Sun et al. [30] suggest a discrete transformation for predicting dense features after an effective height compression module for latent feature representation. In the same line of research, Zheng et al. [46] combine the complementary horizontal and vertical representations to improve the receptive field and learn the distortion distribution beforehand. In an encoder-decoder framework, Shen et al. [28] introduce a new panoramic transformer block to take the place of the conventional block. Panoramic distortion-aware and deformable modules [10] have also been added to the state-of-the-art UNet [27] and SegFormer [35] segmentation architectures to improve their performance in the spherical domain [14, 25, 40, 41].

Multimodal semantic segmentation. Fusion strategies leverage the advantages of several data sources and show notable performance improvements for image-based semantic segmentation [7,18]. The key contributions to understanding RGB-D scenes have concentrated on 1) creating new layers or operators based on the geometric properties of RGB-D data [4, 7, 32], and 2) creating specialized architectures for combining the complementary data streams at various stages [18, 20, 28, 30]. Because these approaches were created exclusively for the RGB-D modality, they perform less well when modalities other than depth maps are employed [42]. Recent studies have concentrated on fusion algorithms for RGB-X semantic segmentation that are adaptable across various sensing modality combinations [22, 34, 39]. In the omnidirectional realm, however, the integration of several modalities with cross-modal interactions is still an unresolved issue. The main challenge in this scenario is to recognize the distorted and deformed geometric structures in ultra-wide 360-degree images while taking advantage of a variety of complementary information. To jointly use the information from RGB, Depth, and Normals equirectangular images, we propose a framework that makes use of cross-modal interactions and panoramic perception abilities.

3. Methodology


Section 3.1 provides a summary of the framework we propose for panoramic multi-modal semantic segmentation.


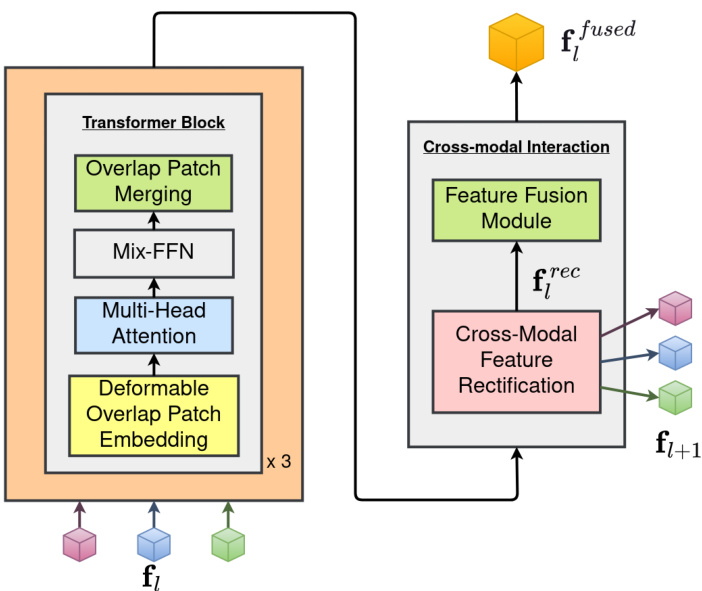

Figure 3. Panoramic encoder stage to extract RGB, Depth, and Normals features.


Although our framework may be used for bi-modal and tri-modal input scenarios, for simplicity we explain only the encoder and decoder architecture designs for cross-modal (RGB-Depth-Normals) panorama segmentation, in Sec. 3.2 and Sec. 3.3, respectively. Our design is based on Trans4PASS+ [41] and uses an extension of CMX [22] for feature extraction and fusion across ternary modal streams, in order to learn object deformations and panoramic image distortions. To keep the notation simple, we use $\mathbf{f}$ to represent multi-modal feature maps, i.e. $\mathbf{f}\in\{\mathbf{f}_{rgb},\mathbf{f}_{depth},\mathbf{f}_{normal}\}$, and omit the stage index $l$ for the inputs and outputs of network modules in the $l$-th encoder-decoder stage.

3.1. Framework Overview


Following Xie et al. [35], we propose the multi-modal panoramic segmentation architecture depicted in Fig. 1. The $H\times W\times3$ input image is first separated into patches. We provide panoramic hierarchical encoder stages to address the severe distortions in panoramas while allowing cross-modal interactions between RGB-Depth-Normals patch features, as described in Sec. 3.2. The encoder takes these patches as input and produces multi-level features at resolutions of $\{1/4,1/8,1/16,1/32\}$ of the original image. Finally, our panoramic decoder (see Sec. 3.3) receives these multi-level features and predicts the segmentation mask at a resolution of $H\times W\times N_{class}$, where $N_{class}$ is the number of object categories.
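To make the multi-level resolutions concrete, the following minimal sketch computes the spatial size of each encoder stage output for a given input size. The function name `pyramid_shapes` is hypothetical and only illustrates the $\{1/4,1/8,1/16,1/32\}$ downsampling factors; the $512\times1024$ input matches the evaluation size stated in Sec. 4.1.

```python
def pyramid_shapes(height, width, strides=(4, 8, 16, 32)):
    """Return the (height, width) of the feature map at each encoder stage.

    Each stage downsamples the input by its stride, i.e. 1/4, 1/8, 1/16, 1/32.
    """
    return [(height // s, width // s) for s in strides]


# Equirectangular input resized to 512 x 1024, as in the evaluation setup.
print(pyramid_shapes(512, 1024))
# [(128, 256), (64, 128), (32, 64), (16, 32)]
```

The channel width of each stage depends on the backbone and is deliberately left out of this sketch.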

3.2. Panoramic Hierarchical Encoding


Each stage of our encoding process for extracting hierarchical features is specifically designed and optimized for semantic segmentation. Figure 3 illustrates how our architecture incorporates the recently proposed Cross-Modal Feature Rectification Module (CM-FRM) and Feature Fusion Module (FFM) [22], as well as the Deformable Patch Embedding (DPE) module [40], to deal with the severe distortions in RGB, Depth, and Normals panoramas caused by the equirectangular representation.

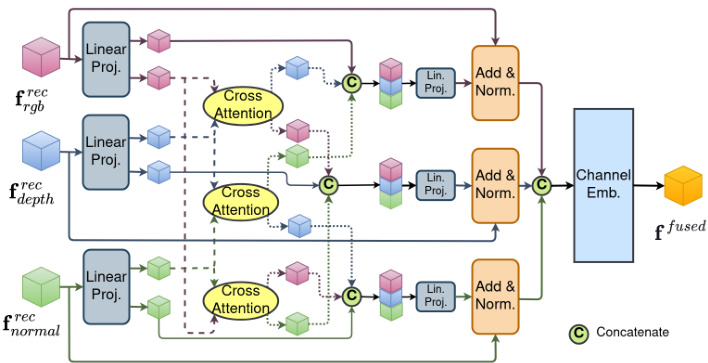

Figure 4. Cross-modal feature rectification module to calibrate RGB, Depth, and Normals features.


Deformable patch embedding. A typical Patch Embedding (PE) module [12, 35] divides an input image or feature map $\mathbf{f}\in\mathbb{R}^{H\times W\times C_{in}}$ into a flattened sequence of 2D patches of shape $s\times s$ each. Within a patch, the position offset with respect to a location $(i,j)$ is defined as $\Delta_{(i,j)}\in\left[-\frac{s}{2},\frac{s}{2}\right]\times\left[-\frac{s}{2},\frac{s}{2}\right]$, where $(i,j)\in[1,s]$. However, these fixed sample points fail to learn deformation-aware features and do not respect object shape distortions. To learn a data-dependent offset, we deploy the Deformable Patch Embedding (DPE) module proposed by Zhang et al. [40]. The offset is formulated in Eq. (1), using the deformable convolution operation $g(\cdot)$ [10] with a hyperparameter of $r=4$.

$$

\boldsymbol{\Delta}_{(i,j)}^{DPE}=\begin{bmatrix}\min\big(\max\big(-\frac{H}{r},\,g(\mathbf{f})_{(i,j)}\big),\,\frac{H}{r}\big)\\ \min\big(\max\big(-\frac{W}{r},\,g(\mathbf{f})_{(i,j)}\big),\,\frac{W}{r}\big)\end{bmatrix} \tag{1}

$$
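The clamping in Eq. (1) can be illustrated with a small sketch in plain Python. Here `raw_dy` and `raw_dx` are hypothetical stand-ins for the two offset components predicted by $g(\mathbf{f})$ at one location; the deformable convolution itself is not implemented, and the function names are assumptions made for illustration only.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return min(max(value, low), high)


def dpe_offset(raw_dy, raw_dx, height, width, r=4):
    """Clamp a predicted (vertical, horizontal) offset as in Eq. (1).

    The vertical component is limited to [-H/r, H/r] and the horizontal
    component to [-W/r, W/r].
    """
    return (
        clamp(raw_dy, -height / r, height / r),
        clamp(raw_dx, -width / r, width / r),
    )


# A vertical prediction of 200 exceeds H/r = 128 and is clipped;
# the horizontal prediction of -3.5 lies inside [-256, 256] and is kept.
print(dpe_offset(200.0, -3.5, height=512, width=1024))
# (128.0, -3.5)
```

With $r=4$, a learned offset can move a sampling point by at most a quarter of the feature map height or width.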

Cross-modal feature rectification. The data from complementary sensor modalities frequently contain noisy measurements. By utilizing features from a different modality, the noisy information can be filtered and calibrated. To this end, Liu et al. [22] present a Cross-Modal Feature Rectification Module (CM-FRM) that performs feature rectification between parallel streams at each stage of the feature extraction process. In our work, we extend this calibration scheme to ternary features from the RGB, Depth, and Normals panorama streams, as seen in Fig. 4. Our two-stage CM-FRM processes the input features channel-wise and spatial-wise to address noise and uncertainty in the RGB-Depth-Normals modalities, providing a comprehensive calibration for improved multi-modal feature extraction and interaction. While the spatial-wise rectification stage focuses on local calibration, the channel-wise rectification stage is more concerned with global calibration. As shown in Eq. (2), the noisy multi-modal input features are rectified using the obtained channel weights $\mathbf{f}_{channel}^{rec}$ and spatial weights $\mathbf{f}_{spatial}^{rec}$, with hyperparameters $\lambda_{c}=\lambda_{s}=0.5$.

Figure 5. Cross-modal feature fusion module to fuse RGB, Depth, and Normals features.


$$

\mathbf{f}^{rec}=\mathbf{f}+\lambda_{c}\,\mathbf{f}_{channel}^{rec}+\lambda_{s}\,\mathbf{f}_{spatial}^{rec} \tag{2}

$$
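As a minimal sketch of the residual combination in Eq. (2), the snippet below applies the update element-wise to flat lists of numbers. The function name `rectify` and the toy inputs are hypothetical; how the channel-wise and spatial-wise terms are computed from the other modalities is described in [22] and is not reproduced here.

```python
def rectify(f, f_channel_rec, f_spatial_rec, lambda_c=0.5, lambda_s=0.5):
    """Element-wise form of Eq. (2): f + lambda_c * channel + lambda_s * spatial."""
    return [
        x + lambda_c * c + lambda_s * s
        for x, c, s in zip(f, f_channel_rec, f_spatial_rec)
    ]


# Toy feature vector with made-up rectification terms.
print(rectify([1.0, 2.0], [0.5, -1.0], [1.0, 0.0]))
# [1.75, 1.5]
```

Because the input $\mathbf{f}$ is carried through unchanged, the rectification terms act as weighted corrections rather than replacements.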

Cross-modal feature fusion. To improve information interaction and combine the features into a single feature map, the rectified multi-modal feature maps $\mathbf{f}^{rec}$ are passed through a two-stage Feature Fusion Module (FFM) at the end of each encoder stage. As seen in Fig. 5, we use a ternary multi-head cross-attention mechanism to extend the information sharing stage of Liu et al. [22], allowing global information flow between the RGB, Depth, and Normals modalities. In the fusion stage, a channel embedding [22] combines the ternary features into a fused feature map $\mathbf{f}^{fused}$, which is passed on to the decoding step for semantic prediction.

3.3. Panoramic Token Mixer Decoder


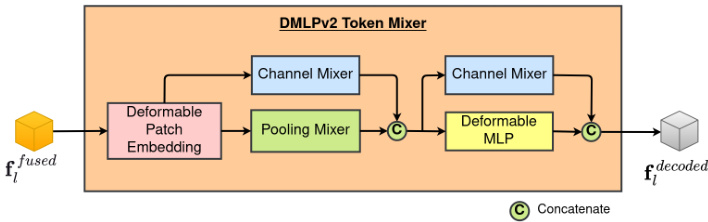

Figure 6. Panoramic decoder stage with fused features from RGB, Depth, and Normals modalities.


The Channel Mixer (CX) of DMLPv2 performs space-consistent yet channel-wise feature reweighting, strengthening the features by emphasizing informative channels. The Pooling Mixer (PX) and Deformable MLP (DMLP) of DMLPv2 focus on spatial-wise sampling using fixed and adaptive offsets, respectively. The non-parametric Pooling Mixer (PX) is implemented by an average pooling operator. The adaptive, data-dependent spatial offset $\Delta_{(i,j,c)}^{DMLP}$ is predicted channel-wise.

Finally, to output the prediction of the $N_{class}$ semantic masks, the decoded features from the four stages are concatenated and passed to a segmentation head module, as depicted in Fig. 1.

4. Experiments


4.1. Datasets


To evaluate our proposed cross-modal framework in indoor settings, we use three multi-modal equirectangular semantic segmentation datasets. In each of our tests, we resize the input image to $512\times1024$ and then compute the evaluation metrics, namely mean Intersection over Union (mIoU), pixel accuracy (aAcc), and mean accuracy (mAcc), using the MMSegmentation IoU script.
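For readers unfamiliar with the three metrics, the sketch below derives them from a confusion matrix in plain Python. It is an illustration of the standard definitions, not the MMSegmentation script itself; the function name `segmentation_metrics` is hypothetical, and skipping classes that never occur is an assumption of this sketch.

```python
def segmentation_metrics(conf):
    """Compute aAcc, mIoU and mAcc from a square confusion matrix.

    conf[i][j] is the number of pixels whose ground-truth class is i
    and whose predicted class is j.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    correct = sum(conf[i][i] for i in range(n))
    ious, accs = [], []
    for i in range(n):
        tp = conf[i][i]
        gt = sum(conf[i])                         # pixels labelled as class i
        pred = sum(conf[j][i] for j in range(n))  # pixels predicted as class i
        union = gt + pred - tp
        if union > 0:       # assumption: ignore classes absent from both
            ious.append(tp / union)
        if gt > 0:          # assumption: ignore classes absent from the labels
            accs.append(tp / gt)
    return {
        "aAcc": correct / total,
        "mIoU": sum(ious) / len(ious),
        "mAcc": sum(accs) / len(accs),
    }


# Two classes, 8 pixels, one mistake in each direction.
print(segmentation_metrics([[3, 1], [1, 3]]))
# {'aAcc': 0.75, 'mIoU': 0.6, 'mAcc': 0.75}
```

In this toy case each class has 3 true positives and a union of 5 pixels, so the per-class IoU is 0.6 even though 75% of the pixels are correct.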

Stanford2D3DS dataset [2] contains 1713 multi-modal equirectangular images with 13 object categories. We split the data from area 1 to area 6 for training and validation in a manner similar to Armeni et al. [2], using a 3-fold cross-validation scheme, and report the mean values across the folds. Furthermore, publicly accessible code is used to compute the panoramic HHA [16] modality from the corresponding depth and camera parameters.

Structured3D dataset [44] offers 196,515 synthetic, multi-modal, equirectangular images with a variety of lighting setups, annotated with the 40 NYU-Depthv2 [29] object categories. In line with Zheng et al. [44], we use the standard training, validation, and test splits: scene 00000 to scene 02999 for training, scene 03000 to scene 03249 for validation, and scene 03250 to scene 03499 for testing. For all of the tests we conduct, we use rendered raw lighting images with full furniture arrangements.
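The scene ranges above can be expressed as a small lookup. This is only a sketch of the split rule stated in the text; the function name `structured3d_split` is hypothetical, and the scene index is assumed to be available as an integer.

```python
def structured3d_split(scene_id):
    """Map a Structured3D scene index to its split, per the ranges in the text."""
    if 0 <= scene_id <= 2999:
        return "train"
    if 3000 <= scene_id <= 3249:
        return "val"
    if 3250 <= scene_id <= 3499:
        return "test"
    raise ValueError(f"scene index {scene_id} is outside 0..3499")


print(structured3d_split(2999), structured3d_split(3000), structured3d_split(3499))
# train val test
```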

Matterport3D dataset [5] contains 10800 panoramic views, each represented by 18 viewpoints per image frame, which necessitates an explicit conversion to an equirectangular format. In addition, the associated semantic annotations are spread among four files (xxx.house, xxx.ply, xxx.fsegs.json, and xxx.semseg.json). We employ the open-source matterport utils code for post-processing, where the mpview script is used to produce annotation images and the prepare pano script is used to stitch the 18 captured images into a 360-degree panorama. For our trials using the 40 object categories, we created our own training, validation, and test splits; refer to the appendix.

4.2. Implementation Details


We train our models on an RTX A6000 GPU using a pre-trained SegFormer MiT-B2 RGB backbone, with an initial learning rate of 6e-5 scheduled by the poly strategy with power 0.9 over the training epochs. We train for 200, 50, and 100 epochs for the Stanford2D3DS [2], Structured3D [44], and Matterport3D [5] experiments, respectively. The AdamW optimizer [19] is employed with the following parameters: batch size 4, epsilon 1e-8, weight decay 1e-2, and betas (0.9, 0.999). For image augmentation, we apply random horizontal flipping, random scaling to scales of $\{0.5,0.75,1,1.25,1.5,1.75\}$, and random cropping to $512\times512$. The Deformable Patch Embedding (DPE) module (see Sec. 3.2) is used for stage 1 of the panoramic encoder, and a conventional Overlapping Patch Embedding (OPE) module [35] for the other stages of our framework. More specific settings are described in the appendix.
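The poly learning-rate strategy is commonly written as $\text{lr}=\text{lr}_0\,(1-t/T)^{p}$. The sketch below evaluates it with the stated initial rate of 6e-5 and power 0.9. Whether the schedule is stepped per iteration or per epoch, and whether a minimum learning rate is applied, is not stated in the text, so `step` and `total_steps` are generic assumptions and the function name `poly_lr` is hypothetical.

```python
def poly_lr(step, total_steps, base_lr=6e-5, power=0.9):
    """Polynomial decay: base_lr * (1 - step / total_steps) ** power."""
    return base_lr * (1.0 - step / total_steps) ** power


# Learning rate at the start, middle and end of a 200-step schedule.
for step in (0, 100, 200):
    print(step, poly_lr(step, total_steps=200))
```

The rate starts at the base value, decays slightly faster than linearly in the middle of training, and reaches zero at the final step.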

We conducted our tests for the following fusion configurations: RGB-only, RGB-Depth, RGB-Normal, RGB-HHA, RGB-Depth-Normal, RGB-Depth-HHA, and RGB-Normal-HHA. For each combination, we use only the relevant pathways and modules in our encoding-decoding stages and skip the unnecessary parts of the framework. For example, in the CM-FRM and FFM modules discussed in Sec. 3.2, we employ bi-directional features for cross-modal interactions in the RGB-Depth scenario, whereas in the RGB-Depth-Normal scenario we use routes that lead to tri-directional interactions across the features.

4.3. Experiment Results and Analysis


We carry out comprehensive tests on multi-modal segmentation datasets for indoor settings to demonstrate the effectiveness of our proposed cross-modal fusion architecture for panoramas. We use the aforementioned training epochs, random crop size, and batch size to compare our method against the current state-of-the-art approaches Trans4PASS+ [41], HoHoNet [30], PanoFormer [28], CMNeXt [39], and TokenFusion [34]. For a detailed description of their implementation, see the corresponding works. While all other approaches have been reproduced under the conditions of our experiments, the CBFC [46] and Tangent [13] results reported here are taken from the original papers. The quantitative results and comparisons to the state of the art are presented in Table 1 and Table 2, with visualizations in Figure 2, Figure 7, and Figure 8.

Table 1. Results on Stanford2D3DS [2].

| Method | Modality | 3-fold validation mIoU (%) | mAcc (%) |
|---|---|---|---|
| Trans4PASS+ [41] | RGB | 52.04 | 63.98 |
| HoHoNet [30] | RGB | 51.99 | 62.97 |
| PanoFormer [28] | RGB | 52.35 | 64.31 |
| CBFC [46] | RGB | 52.20 | 65.60 |
| Tangent [13] | RGB | 45.60 | 65.20 |
| OURS | RGB | 52.87 | 63.96 |
| HoHoNet [30] | RGB-D | 56.73 | 68.23 |
| PanoFormer [28] | RGB-D | 57.03 | 68.08 |
| CBFC [46] | RGB-D | 56.70 | 70.80 |
| Tangent [13] | RGB-D | 52.50 | 70.10 |
| OURS | RGB-D | 55.49 | 68.57 |
| OURS | RGB-N | 58.24 | 68.79 |
| OURS | RGB-H | 60.60 | 70.68 |
| OURS | RGB-D-N | 59.43 | 69.03 |
| OURS | RGB-D-H | 59.99 | 70.44 |
| OURS | RGB-N-H | 60.24 | 70.61 |

Results on Stanford2D3DS. Table 1 presents a thorough comparison between our method and other current panoramic methods. Overall, our method delivers state-of-the-art performance when fusing complementary modalities for semantic segmentation. With RGB-Depth panoramas, our method produces results comparable to those of existing methods [13,28,30,46], and the results improve further when RGB, Depth, Normals, and HHA are combined. The highest mIoU, $60.60\%$, was reached with RGB-HHA fusion. By utilizing the complementary geometric, disparity, and textural information, the mIoU increased from RGB-only to gradually fusing Depth and Normals: $52.87\%\rightarrow55.49\%\rightarrow59.43\%$.
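As a worked reading of the Table 1 values for our method, the gains in percentage points of mIoU between modality combinations are:

$$

\begin{aligned}
55.49 - 52.87 &= 2.62 && \text{(RGB-D over RGB)}\\
59.43 - 55.49 &= 3.94 && \text{(RGB-D-N over RGB-D)}\\
59.43 - 52.87 &= 6.56 && \text{(RGB-D-N over RGB)}\\
60.60 - 52.87 &= 7.73 && \text{(RGB-H over RGB)}
\end{aligned}

$$

These differences are computed directly from the reported numbers and involve no additional experiments.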

Results on Structured3D. We further evaluate on Structured3D using only RGB, Depth, and Normals, as seen in Table 2. On the validation and test splits, our RGB-only model achieves state-of-the-art mIoU of $71.94\%$ and $68.34\%$, respectively. Additionally, by combining depth and normals data, we were able to outperform benchmark results on (validation, test) by $(+1.84,+1.83)$ for RGB-Depth, $(+2.44,+2.66)$ for RGB-Normals, and $(+3.92,+3.63)$ for RGB-Depth-Normals fusion.

Table 2. Results on Structured3D [44] and Matterport3D [5] datasets.

| Method | Modality | Structured3D val mIoU (%) | Structured3D test mIoU (%) | Matterport3D val mIoU (%) | Matterport3D test mIoU (%) |
|---|---|---|---|---|---|
| Trans4PASS+ [41] | RGB | 66.74 | 66.90 | 33.43 | 29.19 |
| HoHoNet [30] | RGB | 66.09 | 64.41 | 31.91 | 29.33 |
| | RGB | 55.57 | 54.87 | 30.04 | 26.87 |
| OURS | RGB | 71.94 | 68.34 | 35.15 | 31.30 |
| HoHoNet [30] | | 69.51 | 66.99 | 35.36 | 32.02 |
| | | 60.98 | 59.27 | 33.99 | 31. |