Evaluating surgical skills from kinematic data using convolutional neural networks

Abstract. The need for automatic surgical skills assessment is increasing, especially because manual feedback from senior surgeons observing junior surgeons is subjective and time-consuming. Automating surgical skills evaluation is therefore an important step towards improving surgical practice. In this paper, we designed a Convolutional Neural Network (CNN) that evaluates surgeon skills by extracting patterns from the surgeon's motions during robotic surgery. The proposed method is validated on the JIGSAWS dataset and achieves very competitive results, with 100% accuracy on the suturing and needle passing tasks. While leveraging the efficiency of CNNs, we also mitigate their black-box effect using the class activation map. This feature allows our method to automatically highlight which parts of the surgical task influenced the skill prediction, which can be used to explain the classification and to provide personalized feedback to the trainee.

Keywords: kinematic data, RMIS, deep learning, CNN

1 Introduction

Over the past one hundred years, the classical training program of Dr. William Halsted has governed surgical training in different parts of the world [15]. His teaching philosophy of “see one, do one, teach one” is still one of the most widely practiced methods today [1]. The idea is that trainees become expert surgeons by watching and assisting in mentored surgeries [15]. Although broadly used, these training methods lack an objective technique for evaluating surgical skill [9]. Conventional surgical skill assessment is currently based on checklists filled out by an expert surgeon observing the surgery [14]. In an attempt to evaluate surgical skills without relying on an expert's opinion, the Objective Structured Assessment of Technical Skills (OSATS) has been proposed and is used in clinical practice [12]. Unfortunately, this type of observational evaluation is still prone to several external and subjective variables: the checklists' development process, inter-rater reliability and evaluator bias [7].

Other studies have shown a strong relationship between postoperative outcomes and a surgeon's technical skill [2]. However, this type of approach suffers from the fact that a surgery's outcome also depends on the patient's physiological characteristics [9]. In addition, such data are very difficult to acquire, which makes outcome-based skill evaluation hard to apply in surgical training. Recent advances in surgical robotics, such as the da Vinci surgical robot (Intuitive Surgical Inc., Sunnyvale, CA), have enabled the collection of motion and video data from different surgical activities. Hence, an alternative to checklists and outcome-based methods is to extract global movement features from these motion data, such as the task completion time, speed, curvature, motion smoothness and other holistic features [3,19,9]. Although most of these methods are effective, it is not clear how they could provide detailed and constructive feedback that helps the trainee go beyond a simple classification into a category (e.g. novice, expert). This is problematic, since studies [8] have shown that feedback on medical practice allows surgeons to improve their performance and reach higher skill levels.
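
As a concrete illustration of such holistic features, the sketch below computes three of them (completion time, path length and mean speed) from a single manipulator's Cartesian trajectory sampled at a fixed frame rate. It is a minimal example under our own assumptions: the function name `global_motion_features` is hypothetical, and the exact feature definitions used in [3,19,9] may differ.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) position of one tool tip


def global_motion_features(traj: List[Point], fps: float = 30.0) -> Dict[str, float]:
    """Compute a few holistic motion features from one (x, y, z) trajectory.

    Illustrative only: these are generic definitions, not necessarily
    those of the cited works.
    """
    if len(traj) < 2:
        raise ValueError("need at least two samples")
    dt = 1.0 / fps
    # Completion time: number of inter-frame intervals times the sampling period.
    completion_time = (len(traj) - 1) * dt
    # Path length: sum of Euclidean distances between consecutive samples.
    path_length = sum(math.dist(a, b) for a, b in zip(traj, traj[1:]))
    # Mean speed: total distance travelled divided by elapsed time.
    mean_speed = path_length / completion_time
    return {
        "completion_time": completion_time,
        "path_length": path_length,
        "mean_speed": mean_speed,
    }
```

Each trial is reduced to a handful of scalars, which is exactly why such features are hard to turn into time-localized feedback for the trainee.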

Recently, a new field named Surgical Data Science [11] has emerged thanks to increasing access to large amounts of complex data pertaining to the patient, the staff, and the sensors that perceive patient- and procedure-related data such as videos and kinematic variables [5]. As an alternative to extracting global movement features, recent studies tend to manually break surgical tasks down into smaller segments called surgical gestures before the training phase, and then assess the performance of the surgical task based on the assessment of these gestures [13]. Although these methods obtain very accurate and promising surgical skill evaluation results, they require a huge number of labeled gestures for the training phase [13]. We have identified two main limitations of existing approaches that classify a surgeon's skill level from kinematic data. First, they lack an interpretable skill evaluation result that the trainee can use to reach higher skill levels. Second, current state-of-the-art Hidden Markov Models require gesture boundaries pre-defined by human annotators, which is time-consuming and prone to low inter-annotator reliability [16].

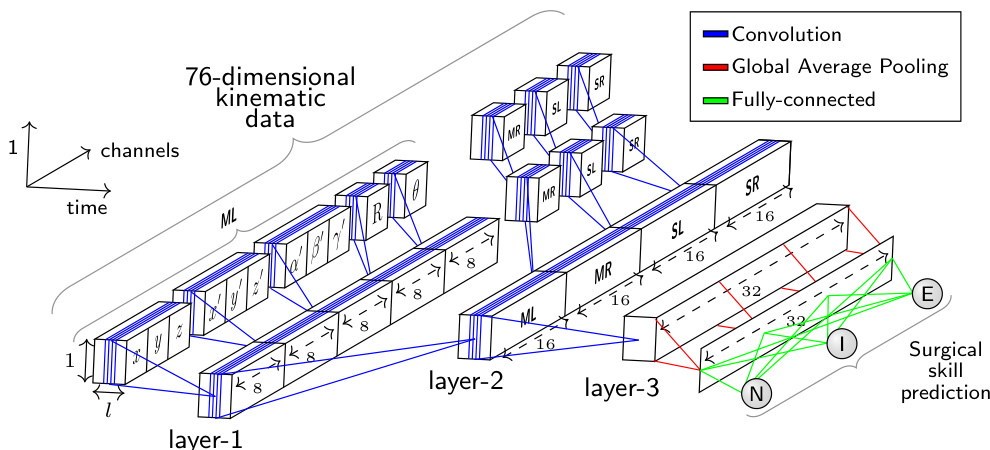

In this paper, we propose a new Convolutional Neural Network (CNN) architecture dedicated to surgical skill evaluation (Figure 1). By applying one-dimensional filters to the kinematic data, we mitigate the need to pre-define sensitive and unreliable gesture boundaries. The novel hierarchical structure of our deep learning model enables us to represent gestures in latent low-level variables (first and second layers), while also capturing global information related to the surgical skill level (third layer). To provide interpretable feedback, instead of ending with a fully-connected layer like most traditional approaches [18], we place a Global Average Pooling (GAP) layer, which lets us use the Class Activation Map (CAM) [18] to visualize which parts of the surgical task contributed most to the skill classification (Figure 2). We demonstrate the accuracy of our approach using a standardized experimental setup on the largest publicly available dataset for surgical data analysis: the JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS) [5]. The main contribution of our work is to show that deep learning can be used to understand the latent and complex structures of what constitutes surgical skill, especially since there is still much to be learned about what exactly a surgical skill is [9].

Fig. 1: The network architecture, whose input is a surgical task of variable length $l$ recorded by the four manipulators (ML: master left, MR: master right, SL: slave left, SR: slave right) of the da Vinci surgical system. The output is a surgical skill prediction (N: Novice, I: Intermediate, E: Expert).

2 Method

2.1 Dataset

We first briefly present the dataset used in this paper, since our method relies on its feature definitions. The JIGSAWS dataset [5] was collected from eight right-handed subjects with three different skill levels (Novice (N), Intermediate (I) and Expert (E)) performing three different surgical tasks (suturing, needle passing and knot tying) using the da Vinci surgical system. Each subject performed five trials of each task, and both kinematic and video data were recorded for each trial.

In our work, we focused only on the kinematic data, which are numeric variables from four manipulators: the left and right masters (controlled directly by the subject's hands) and the left and right slaves (controlled indirectly by the subject via the master manipulators). These kinematic variables (76 in total) are captured at 30 frames per second for each trial. We considered each trial as a multivariate time series (MTS) and designed a one-dimensional CNN that automatically learns useful features for surgical skill classification.
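
A minimal sketch of loading one trial as an MTS follows. It assumes, as an illustration rather than a statement about the dataset files, that a trial's kinematics are stored as plain text with one frame per line and 76 whitespace-separated values per frame; `parse_trial` and `duration_seconds` are hypothetical helper names.

```python
from typing import List

N_CHANNELS = 76  # kinematic variables per frame (4 manipulators x 19)
FPS = 30.0       # frames per second of the kinematic recording


def parse_trial(text: str) -> List[List[float]]:
    """Parse one trial into an MTS indexed as [time][channel].

    Assumes one frame per line with whitespace-separated values.
    """
    mts: List[List[float]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines
        values = [float(v) for v in line.split()]
        if len(values) != N_CHANNELS:
            raise ValueError(
                f"line {lineno}: expected {N_CHANNELS} values, got {len(values)}"
            )
        mts.append(values)
    return mts


def duration_seconds(mts: List[List[float]]) -> float:
    """Trial duration implied by its length l and the 30 Hz frame rate."""
    return len(mts) / FPS
```

The length $l$ (number of frames) varies from trial to trial, which is why the network must accept inputs of variable length.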

2.2 Architecture

Our approach takes inspiration from the recent success of CNNs for time series classification [17]. The proposed architecture (Figure 1) has been specifically designed to classify surgical skills using kinematic data. The input of the CNN is an MTS of variable length $l$ with 76 channels, and the output layer contains the surgical skill level (N, I, E). Compared to CNNs for image classification, where the network's input usually has two dimensions (width and height) and 3 channels (RGB), our network's input is a time series with one dimension (the length $l$ of the surgical task) and 76 channels (the kinematic variables $x, y, z, x^{\prime}$, etc.).

The main challenge we encountered when designing our network was the large number of input channels (76) compared to the 3 RGB channels of image classification. Therefore, instead of applying the convolutions over all 76 channels at once, we carry out different convolutions for each cluster and sub-cluster of channels, and we use domain knowledge to decide which channels are grouped together.

First, we divide the 76 variables into four clusters, such that each cluster contains the variables from one of the four manipulators: the first, second, third and fourth clusters correspond respectively to the master left (ML), master right (MR), slave left (SL) and slave right (SR) manipulators of the da Vinci surgical system. Each cluster thus contains 19 of the 76 kinematic variables.
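
The manipulator-level clustering amounts to slicing the 76 channels into four groups of 19. The sketch below assumes that each manipulator's columns are stored contiguously in the order ML, MR, SL, SR; that ordering and the helper `split_by_manipulator` are illustrative assumptions.

```python
from typing import Dict, List

MANIPULATORS = ("ML", "MR", "SL", "SR")  # assumed column order
VARS_PER_MANIPULATOR = 19


def split_by_manipulator(mts: List[List[float]]) -> Dict[str, List[List[float]]]:
    """Split an MTS indexed [time][76 channels] into four [time][19] clusters."""
    clusters: Dict[str, List[List[float]]] = {}
    for i, name in enumerate(MANIPULATORS):
        start = i * VARS_PER_MANIPULATOR
        stop = start + VARS_PER_MANIPULATOR
        # Keep the time axis intact; only the channel axis is sliced.
        clusters[name] = [frame[start:stop] for frame in mts]
    return clusters
```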

Next, each cluster of 19 variables is split into five sub-clusters, such that each sub-cluster contains variables that we hypothesize are highly correlated: the first sub-cluster holds the 3 Cartesian coordinates $(x, y, z)$; the second, the 3 linear velocities $(x^{\prime}, y^{\prime}, z^{\prime})$; the third, the 3 rotational velocities $(\alpha^{\prime}, \beta^{\prime}, \gamma^{\prime})$; the fourth, the 9 entries of the rotation matrix $R$; and the fifth, the single gripper angular velocity $(\theta)$.
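
The resulting 4 x 5 = 20 groups can be written as a table of column indices. The offsets below assume that, within each manipulator's 19 columns, the variables appear in the order position (3), rotation matrix (9), linear velocity (3), rotational velocity (3), gripper (1), and that manipulators are stored contiguously as ML, MR, SL, SR. This layout and the name `sub_cluster_columns` are our assumptions for illustration.

```python
from typing import Dict, List, Tuple

MANIPULATORS = ("ML", "MR", "SL", "SR")  # assumed column order
VARS_PER_MANIPULATOR = 19

# Assumed (offset, size) of each sub-cluster within one manipulator's 19 columns.
SUB_CLUSTERS: Dict[str, Tuple[int, int]] = {
    "position": (0, 3),              # x, y, z
    "linear_velocity": (12, 3),      # x', y', z'
    "rotational_velocity": (15, 3),  # alpha', beta', gamma'
    "rotation_matrix": (3, 9),       # the 9 entries of R
    "gripper": (18, 1),              # theta
}


def sub_cluster_columns() -> Dict[Tuple[str, str], List[int]]:
    """Map (manipulator, sub-cluster) to the global column indices it covers."""
    table: Dict[Tuple[str, str], List[int]] = {}
    for m, name in enumerate(MANIPULATORS):
        base = m * VARS_PER_MANIPULATOR
        for sub, (offset, size) in SUB_CLUSTERS.items():
            table[(name, sub)] = list(range(base + offset, base + offset + size))
    return table
```

Every one of the 76 columns lands in exactly one of the 20 groups, and each group is small (1 to 9 channels), which is what keeps the first-layer filters cheap.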

Figure 1 shows how the first-layer convolutions differ for each sub-cluster of channels. Following the same reasoning, the second-layer convolutions differ for each cluster of channels (ML, MR, SL and SR). In the third layer, however, the same convolutions are applied to all channels.
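
To make the three-level grouping concrete, the following untrained forward pass sketches the hierarchy in plain Python. Beyond the kernel size 3, stride 1 and ReLU given in the text, it assumes zero "same" padding (so the length $l$ is preserved), 8/16/32 filters in the three layers with a separate filter bank per sub-cluster (layer 1) and per manipulator cluster (layer 2), contiguous sub-cluster sizes (3, 9, 3, 3, 1), and random weights; `conv1d_relu` and `hierarchical_forward` are hypothetical names.

```python
import random
from typing import List

Matrix = List[List[float]]  # indexed as [channel][time]

# Assumed contiguous sub-cluster sizes within each manipulator's 19 channels.
SUB_SIZES = (3, 9, 3, 3, 1)
N_MANIPULATORS = 4  # ML, MR, SL, SR


def conv1d_relu(x: Matrix, n_filters: int, rng: random.Random, k: int = 3) -> Matrix:
    """Stride-1 1D convolution with zero 'same' padding, followed by ReLU.

    Weights are random (untrained) and only serve to illustrate shapes.
    """
    in_ch, length = len(x), len(x[0])
    pad = k // 2
    out: Matrix = []
    for _ in range(n_filters):
        w = [[rng.uniform(-0.5, 0.5) for _ in range(k)] for _ in range(in_ch)]
        bias = rng.uniform(-0.1, 0.1)
        row = []
        for t in range(length):
            s = bias
            for c in range(in_ch):
                for j in range(k):
                    tt = t + j - pad
                    if 0 <= tt < length:  # zero padding outside the series
                        s += w[c][j] * x[c][tt]
            row.append(max(0.0, s))  # ReLU
        out.append(row)
    return out


def hierarchical_forward(mts: Matrix, rng: random.Random, f1: int = 8) -> Matrix:
    """Map a [76][l] MTS to third-layer feature maps of shape [4 * f1][l]."""
    assert len(mts) == N_MANIPULATORS * sum(SUB_SIZES)
    col = 0
    layer2_out: Matrix = []
    for _ in range(N_MANIPULATORS):
        layer1_out: Matrix = []
        for size in SUB_SIZES:
            # Layer 1: a separate filter bank for each sub-cluster.
            layer1_out.extend(conv1d_relu(mts[col:col + size], f1, rng))
            col += size
        # Layer 2: a separate filter bank for each manipulator cluster.
        layer2_out.extend(conv1d_relu(layer1_out, 2 * f1, rng))
    # Layer 3: a single filter bank shared across all channels.
    return conv1d_relu(layer2_out, 4 * f1, rng)
```

Under these assumptions the channel count evolves as 76 → 160 (20 x 8) → 64 (4 x 16) → 32, while the time axis keeps length $l$ throughout.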

To reduce the number of parameters in our model and benefit from the CAM method [18], we replaced the fully-connected layer with a GAP operation after the third convolutional layer. This summarizes the MTS by shrinking its length from $l$ to 1, while preserving the number of channels of the third layer. The output layer is a fully-connected softmax layer with three neurons, one for each class (N, I, E).
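
Because GAP is linear, the class score can be redistributed over time. With $A_k(t)$ the $k$-th third-layer feature map and $w^c_k$ the softmax-layer weight linking filter $k$ to class $c$, the class activation map is $\mathrm{CAM}_c(t) = \sum_k w^c_k A_k(t)$ [18], and its mean over $t$ equals the class logit minus its bias. The plain-Python sketch below illustrates this; the function names and toy numbers are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # feature maps indexed as [filter][time]


def global_average_pool(feature_maps: Matrix) -> List[float]:
    """GAP: shrink each feature map from length l to a single value."""
    return [sum(row) / len(row) for row in feature_maps]


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def predict(feature_maps: Matrix, W: Matrix, b: List[float]) -> List[float]:
    """Class probabilities; W is [n_classes][n_filters], b is [n_classes]."""
    pooled = global_average_pool(feature_maps)
    logits = [sum(w * p for w, p in zip(wc, pooled)) + bc for wc, bc in zip(W, b)]
    return softmax(logits)


def class_activation_map(feature_maps: Matrix, W: Matrix, c: int) -> List[float]:
    """CAM_c(t) = sum_k W[c][k] * A_k(t): per-time-step contribution to class c."""
    length = len(feature_maps[0])
    return [
        sum(W[c][k] * feature_maps[k][t] for k in range(len(feature_maps)))
        for t in range(length)
    ]


# Toy example: two filters, four time steps, three classes (N, I, E).
A = [[1.0, 0.0, 0.0, 3.0], [0.0, 2.0, 2.0, 0.0]]
W = [[2.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [0.0, 0.0, 0.0]
probs = predict(A, W, b)
cam = class_activation_map(A, W, 0)
```

In the toy example the predicted class is the first one, and its CAM peaks at the last time step, which is the kind of temporal highlight used to explain a prediction.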

Without any cross-validation, we chose to use 8 filters in the first convolutional layer and then increased the number of filters by a factor of 2 in each deeper layer, thus balancing the number of parameters per layer while going deeper into the network. The Rectified Linear Unit (ReLU) activation function is used in all three convolutional layers, each with a filter size of 3 and a stride of 1.
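
The filter schedule can be turned into a parameter count. The sketch assumes, beyond what the text states, that each of the 20 sub-clusters has its own bank of 8 filters, each of the 4 clusters its own bank of 16, that the shared third layer has 32, and that every filter and output neuron has a bias. Under these assumptions the numbers are an illustration, not the model's reported size; `count_parameters` is a hypothetical helper.

```python
from typing import Dict, Sequence


def conv_params(in_ch: int, n_filters: int, k: int = 3) -> int:
    """One k x in_ch kernel plus one bias per filter."""
    return n_filters * (in_ch * k + 1)


def count_parameters(
    sub_sizes: Sequence[int] = (3, 3, 3, 9, 1),
    n_manip: int = 4,
    f1: int = 8,
    k: int = 3,
    n_classes: int = 3,
) -> Dict[str, int]:
    f2, f3 = 2 * f1, 4 * f1  # number of filters doubles at each deeper layer
    # Layer 1: one filter bank per sub-cluster, for every manipulator.
    layer1 = n_manip * sum(conv_params(c, f1, k) for c in sub_sizes)
    # Layer 2: one filter bank per manipulator over its 5 * f1 layer-1 channels.
    layer2 = n_manip * conv_params(len(sub_sizes) * f1, f2, k)
    # Layer 3: a shared filter bank over all n_manip * f2 channels.
    layer3 = conv_params(n_manip * f2, f3, k)
    # After GAP: fully-connected softmax layer, f3 inputs per class plus a bias.
    dense = n_classes * (f3 + 1)
    return {
        "layer1": layer1,
        "layer2": layer2,
        "layer3": layer3,
        "dense": dense,
        "total": layer1 + layer2 + layer3 + dense,
    }
```

Thanks to the GAP layer, the classifier head costs only 3 x (32 + 1) = 99 parameters, independent of the trial length $l$.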
