Exploring Self-Attention Mechanisms for Speech Separation

Abstract—Transformers have enabled impressive improvements in deep learning. They often outperform recurrent and convolutional models in many tasks while taking advantage of parallel processing. Recently, we proposed the SepFormer, which obtains state-of-the-art performance in speech separation on the WSJ0-2/3Mix datasets. This paper studies Transformers for speech separation in depth. In particular, we extend our previous findings on the SepFormer by providing results on more challenging noisy and noisy-reverberant datasets, such as LibriMix, WHAM!, and WHAMR!. Moreover, we extend our model to perform speech enhancement and provide experimental evidence on denoising and dereverberation tasks. Finally, we investigate, for the first time in speech separation, the use of efficient self-attention mechanisms such as Linformers, Longformers, and Reformers. We found that they reduce memory requirements significantly. For example, we show that the Reformer-based attention outperforms the popular Conv-TasNet model on the WSJ0-2Mix dataset while being faster at inference and comparable in terms of memory consumption.

Index Terms—speech separation, source separation, transformer, attention, deep learning.

I. INTRODUCTION

deep learning [1]. They contributed to a paradigm shift in sequence learning and made it possible to achieve unprecedented performance in many Natural Language Processing (NLP) tasks, such as language modeling [2] and machine translation [3], as well as in various computer vision applications [4], [5]. Transformers enable more accurate modeling of long-term dependencies, which makes them suitable for speech and audio processing as well. Indeed, they have recently been adopted for speech recognition, speaker verification, speech enhancement, and other tasks [6], [7].

Powerful sequence modeling is central to source separation as well, where long-term modeling has been found to significantly affect performance [8], [9]. However, speech separation typically involves long sequences on the order of tens of thousands of frames, and using Transformers poses a challenge because of the quadratic complexity of the standard self-attention mechanism [1]. For a sequence of length $N$, self-attention computes $N^{2}$ pairwise scores, a computation and memory bottleneck that becomes more pronounced when processing long signals. Mitigating the memory bottleneck of Transformers has been the object of intense research in recent years [10]–[13]. A popular way to address the problem is to attend to only a subset of elements. For instance, Sparse Transformer [10] employs local and dilated sliding windows over a fixed number of elements to learn short- and long-term dependencies. Longformer [11] augments the Sparse Transformer by adding global attention heads. Linformer [12] approximates the full attention matrix with a low-rank matrix multiplication, while Reformer [13] clusters the elements to attend to through an efficient locality-sensitive hashing function.
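
The quadratic growth is easy to quantify. The back-of-the-envelope sketch below, a calculation rather than a measurement, counts the entries of one full $N\times N$ attention matrix and the memory needed to store it, assuming 32-bit floats, a single head, and a single layer; the helper name `attention_matrix_bytes` and the sequence lengths are illustrative.

```python
def attention_matrix_bytes(n: int, heads: int = 1, bytes_per_value: int = 4) -> int:
    """Bytes needed to store the N x N attention weights of `heads` heads (one layer)."""
    return heads * n * n * bytes_per_value


# Illustrative sequence lengths, up to "tens of thousands of frames".
for n in (1_000, 10_000, 20_000):
    gib = attention_matrix_bytes(n) / 2**30
    print(f"N = {n:>6}: {n * n:>13,} weights, {gib:.2f} GiB per head and layer")
```

Doubling $N$ from 10,000 to 20,000 quadruples the storage, from about 0.37 GiB to about 1.49 GiB per head and per layer, before the activations and gradients needed for training are counted.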

To address this issue in speech separation, we recently proposed the SepFormer [14], a Transformer specifically designed for speech separation that inherits the dual-path processing pipeline originally proposed in [8] for recurrent neural networks (RNNs). Dual-path processing splits long sequences into chunks, thus naturally alleviating the quadratic complexity issue. Moreover, it combines short-term and long-term modeling through a cascade of two distinct stages, making it particularly appealing for audio processing. Unlike its predecessors, SepFormer employs a masking network composed only of Transformer encoder layers. This property significantly improves the parallelization and inference speed of the SepFormer compared to previous RNN-based methods.

This paper extends our previous studies on Transformer-based architectures for speech separation. In our previous work [14], we focused only on the standard WSJ0-2/3Mix benchmark datasets. Here, we propose additional experiments and insights on more realistic and challenging datasets, such as Libri2/3Mix [15], which includes long mixtures, and WHAM! [16] and WHAMR! [17], which feature noisy and noisy-reverberant conditions, respectively. Moreover, we adapted the SepFormer to perform speech enhancement and provide experimental evidence on the VoiceBank-DEMAND, WHAM!, and WHAMR! datasets. Another contribution of this paper is the investigation of different types of self-attention mechanisms for speech separation. We explore, for the first time in speech separation, three efficient self-attention mechanisms [8], namely Longformer [11], Linformer [12], and Reformer [13] attention, all of which have been found to yield results comparable to or even better than standard self-attention in natural language processing (NLP) applications.

We found that the Reformer and Longformer attention mechanisms exhibit a favorable trade-off between performance and memory requirements. For instance, the Reformer-based model turned out to be even more efficient in terms of memory usage and inference speed than the popular Conv-TasNet model, while yielding significantly better performance (16.7 dB versus 15.3 dB SI-SNR improvement). Despite these improvements in memory footprint and inference time, we found that the best speech separation performance is still achieved, by a large margin, with the regular self-attention mechanism used in the original SepFormer model, confirming the importance of full self-attention.

The training recipes for the main experiments are available in the SpeechBrain [18] toolkit. Moreover, the pretrained models for the WSJ0-2/3Mix, Libri2/3Mix, and WHAM!/WHAMR! datasets are available on the SpeechBrain Hugging Face page¹.

The contributions in this paper are summarized as follows,

The paper is organized as follows. Section II reviews related work. In Section III, we introduce the building blocks of Transformers and describe the three efficient attention mechanisms (Longformer, Linformer, and Reformer) explored in this work. In Section IV, we describe the SepFormer model in detail, with a focus on its dual-path processing pipeline. Section V describes the experimental setup. Finally, in Section VI, we present results on speech separation and speech enhancement, together with an experimental analysis of different types of self-attention.

II. RELATED WORKS

A. Deep learning for source separation

Thanks to advances in deep learning, tremendous progress has been made in source separation. Notable early works include Deep Clustering [19], where a recurrent neural network is trained with an affinity-matrix-based objective to estimate an embedding for each time-frequency bin of the mixture magnitude spectrogram; clustering these embeddings assigns each bin to a source. TasNet and Conv-TasNet [20], [21] achieved impressive performance by introducing time-domain processing, combined with utterance-level permutation-invariant training [22].

More recent works include Sudo rm -rf [23], which uses a U-Net-type architecture to reduce computational complexity, and the Dual-Path Recurrent Neural Network (DPRNN) [8]. The latter first introduced the highly effective dual-path processing framework adopted in this work. Other works focused more on training strategies, such as [24], which proposed to train the encoder-decoder pair and the masking network in two separate steps for time-domain separation. [25] reports impressive performance with a modified DPRNN model that uses a technique to determine the number of speakers. Wavesplit [26] further improved over [25] by leveraging speaker-identity information.

B. Transformers for source separation

At the time of writing, only a few papers exist on Transformers for source separation. These works include DPTNet [9], which adopts an architecture similar to DPRNN but adds a multi-head attention layer plus layer normalization in each block before the RNN part. SepFormer, in contrast, uses multiple Transformer encoder blocks without any RNN in each dual-path processing block. Our results in Section VI indicate that SepFormer outperforms DPTNet and is faster and more parallelizable at inference time due to the absence of recurrent operations.

In [27], the authors propose Transformers to deal with a multi-microphone, meeting-like scenario, where the amount of overlapping speech is low and powerful separation might not be needed. The main feature of that system is adapting the number of Transformer layers to the complexity of the input mixture. In [28], a multi-scale Transformer is proposed; as shown in Section VI, the SepFormer outperforms this method. Other Transformer-based source separation works include [29], which uses an architecture similar to SepFormer's but places RNNs before the self-attention blocks (like DPTNet). There also exist methods that leverage Transformers for continuous speech separation, which aims to separate sources in long, meeting-like audio recordings. Prominent examples include [27], [30], [31]. Moreover, recent concurrent works have explored efficient attention mechanisms for speech separation [32], [33]. We note that the separation network architectures we explore for this purpose differ from those of the aforementioned works.

III. TRANSFORMERS

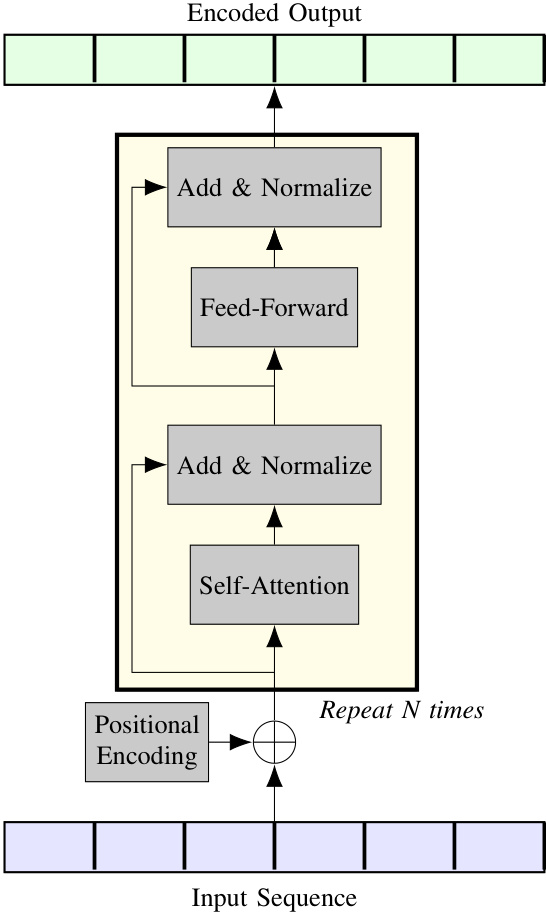

In this paper, we use the encoder part of the Transformer architecture, depicted in Fig. 1. The encoder turns an input sequence $X=\{x_{1},\dots,x_{T}\}\in\mathbb{R}^{F\times T}$ (where $F$ denotes the number of features and $T$ the signal length) into an output sequence $Y=\{y_{1},\dots,y_{T}\}\in\mathbb{R}^{F\times T}$ through a pipeline of computations involving positional embedding, multi-head self-attention, normalization, feed-forward layers, and residual connections.

A. Multi-head self-attention

The multi-head self-attention mechanism allows the Transformer to model dependencies across all the elements of the sequence. The first step computes the Query, Key, and Value matrices from the input sequence $X$ of length $T$ by multiplying it with weight matrices: $Q=W_{Q}X$, $K=W_{K}X$, $V=W_{V}X$, where $W_{Q},W_{K},W_{V}\in\mathbb{R}^{d_{\mathrm{model}}\times F}$. The attention layer consists of the following operations:

$$

{\mathrm{Attention}}(Q,K,V)={\mathrm{SoftMax}}\left({\frac{Q^{\top}K}{\sqrt{d_{k}}}}\right)V^{\top},

$$

where $d_{k}$ represents the latent dimensionality of the $Q$ and $K$ matrices. The attention weights are obtained from a scaled dot product between queries and keys: more similar query and key vectors yield larger dot products. The softmax function ensures that the attention weights lie between 0 and 1 and that the weights assigned by each query sum to 1. The attention mechanism thus produces $T$ weights for each input element (i.e., $T^{2}$ attention weights in total). In short, the softmax part of the attention layer computes relative importance weights, and this attention map is then multiplied with the value sequence $V$. Multi-head attention consists of several parallel attention layers and is computed as follows:

Fig. 1. The encoder of a standard Transformer. Positional embeddings are added to the input sequence. Then, N encoding layers based on multi-head self-attention, normalization, feed-forward transformations, and residual connections process it to generate an output sequence.

$$

\begin{aligned}
\mathrm{MultiHeadAttention}(Q,K,V) &= \mathrm{Concat}(\mathrm{Head}_{1},\dots,\mathrm{Head}_{h})\,W^{O},\\
\text{where}\quad \mathrm{Head}_{i} &= \mathrm{Attention}(Q_{i},K_{i},V_{i}),
\end{aligned}

$$

where $h$ is the number of parallel attention heads and $W^{O}\in~\mathbb{R}^{d_{\mathrm{model}}\times h F}$ is the matrix that combines the parallel attention heads, and $d_{\mathrm{model}}$ denotes the latent dimensionality of this combined model.
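
To make these equations concrete, here is a minimal pure-Python sketch of scaled dot-product attention and multi-head attention. It stores each sequence as a list of $T$ row vectors, i.e., as the transposes $Q^{\top}$, $K^{\top}$, $V^{\top}$ of the matrices in the equations, and it assumes, as many implementations do, that the $h$ heads are obtained by splitting the feature dimension into equal slices; the per-head input projections are omitted, and all function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows are time steps, columns are features


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention; q, k, v are T x d_k (i.e. Q^T, K^T, V^T)."""
    d_k = len(q[0])
    k_t = [list(col) for col in zip(*k)]  # d_k x T
    scores = matmul(q, k_t)  # T x T: one score per pair of positions
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # T x d_k


def multi_head_attention(q: Matrix, k: Matrix, v: Matrix, h: int, w_o: Matrix) -> Matrix:
    """Split features into h heads, attend per head, concatenate, project with W^O."""
    d = len(q[0])
    assert d % h == 0, "feature size must be divisible by the number of heads"
    step = d // h
    heads = []
    for i in range(h):
        cols = slice(i * step, (i + 1) * step)
        heads.append(attention([r[cols] for r in q], [r[cols] for r in k], [r[cols] for r in v]))
    concat = [sum((head[t] for head in heads), []) for t in range(len(q))]
    return matmul(concat, w_o)
```

The $T\times T$ `scores` matrix built inside `attention` is exactly the quadratic term that the memory-saving variants described later try to avoid.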

B. Feed-Forward Layers

The feed-forward component of the Transformer architecture is a two-layer perceptron, defined as follows:

$$

\mathrm{Feed-Forward}(x)=\mathrm{ReLU}(x W_{1}+b_{1})W_{2}+b_{2},

$$

where $x$ is the input. For sequences, this feed-forward transformation is applied to each time step separately. $W_{1}$ and $W_{2}$ are learnable matrices, and $b_{1}$ and $b_{2}$ are learnable bias vectors.
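
The position-wise behavior can be illustrated with a small pure-Python sketch: the same weights $W_1, b_1, W_2, b_2$ are applied to every time step, and no information flows between steps. Vectors are plain Python lists used as row vectors, matching the $xW_1$ convention of the equation; the function names are illustrative.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Row vector times matrix plus bias: x W + b."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]


def feed_forward(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    hidden = [max(0.0, v) for v in linear(x, w1, b1)]  # ReLU(x W1 + b1)
    return linear(hidden, w2, b2)  # ... W2 + b2


def feed_forward_sequence(seq: List[Vector], w1: Matrix, b1: Vector,
                          w2: Matrix, b2: Vector) -> List[Vector]:
    """Apply the same two-layer perceptron to every time step independently."""
    return [feed_forward(x, w1, b1, w2, b2) for x in seq]
```

Because each step is processed on its own, this layer parallelizes trivially over time; all mixing across time happens in the attention layer.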

C. Positional encoding

As the self-attention and feed-forward layers do not embed any notion of order, the Transformer uses a positional encoding scheme to inject sequence-ordering information. The positional encoding is defined as follows:

$$

\begin{aligned}
PE_{t,2i} &= \sin\left(t/10000^{2i/d_{\mathrm{model}}}\right),\\
PE_{t,2i+1} &= \cos\left(t/10000^{2i/d_{\mathrm{model}}}\right),
\end{aligned}

$$

where $t$ is the position and $i$ indexes pairs of latent dimensions. This encoding relies on sinusoids of a different frequency in each latent dimension to encode positional information [1].
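
A direct transcription of the encoding into pure Python is sketched below; row $t$ of the returned table is the vector added to the input at position $t$. The loop variable `even` plays the role of $2i$ in the formula, and the function name is illustrative.

```python
import math
from typing import List


def positional_encoding(num_positions: int, d_model: int) -> List[List[float]]:
    """Sinusoidal positional encoding; row t holds PE[t, 0], ..., PE[t, d_model - 1]."""
    pe = [[0.0] * d_model for _ in range(num_positions)]
    for t in range(num_positions):
        for even in range(0, d_model, 2):  # even = 2i
            angle = t / (10000 ** (even / d_model))
            pe[t][even] = math.sin(angle)  # PE[t, 2i]
            if even + 1 < d_model:  # guard for odd d_model
                pe[t][even + 1] = math.cos(angle)  # PE[t, 2i + 1]
    return pe
```

Low dimensions oscillate quickly with $t$ and high dimensions slowly, so each position receives a distinct pattern without any learned parameters.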

D. Reducing the memory bottleneck

Many alternative architectures have been proposed in the literature to mitigate the quadratic memory cost of self-attention. Popular variants are described in the following.

- Longformer: Longformer [11] aims to reduce the quadratic complexity by replacing full self-attention with a combination of local and global attention. Local attention captures dependencies among nearby elements, while global attention captures dependencies across all the elements. To keep the computational requirements manageable, global attention is computed only for a few special elements in the sequence.

- Linformer: Linformer [12] avoids the quadratic complexity by reducing the size of the time dimension of the matrices $K,V\in\mathbb{R}^{d_{\mathrm{model}}\times T}$. This is done by projecting the time dimension $T$ to a smaller dimensionality $k$ using projection matrices $P,F\in\mathbb{R}^{T\times k}$. The attention equation therefore becomes:

$$

\mathrm{Attention}(Q,K,V)=\mathrm{SoftMax}\left(\frac{Q^{\top}(KP)}{\sqrt{d_{k}}}\right)(VF)^{\top},

$$

which reduces the attention map from $T\times T$ to $T\times k$: for a fixed $k$, the cost of the softmax and of the product with the projected values grows only linearly with $T$.

- Reformer: Reformer [13] uses locality-sensitive hashing (LSH) to reduce the complexity of self-attention. LSH is used to find the vector pairs $(q,k)$, $q\in Q$, $k\in K$, that are close to each other. The intuition is that such pairs have larger dot products and contribute the most to the attention matrix. Therefore, the authors restrict the attention computation to the close pairs $(q,k)$ and ignore the others, saving time and memory. In addition, the Reformer, inspired by [34], implements reversible Transformer layers, which avoid storing the activations of every layer and thus remove the linear growth of memory with the number of layers. In speech, Reformer has been used, for example, for text-to-speech [35].
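
Of the three variants, the Linformer projection is the simplest to write down. The pure-Python sketch below follows the Linformer attention equation with sequences stored as $T$ row vectors (i.e., $Q^{\top}$, $K^{\top}$, $V^{\top}$); the projections $P$ and $F$ are passed in as plain $T\times k$ matrices rather than learned, the function names are illustrative, and Longformer and Reformer are not shown.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def linformer_attention(q_t: Matrix, k_t: Matrix, v_t: Matrix, p: Matrix, f: Matrix) -> Matrix:
    """q_t, k_t, v_t: T x d_k (transposes of Q, K, V); p, f: T x k projections along time."""
    d_k = len(q_t[0])
    k_proj = matmul(transpose(p), k_t)  # k x d_k, equals (K P)^T
    v_proj = matmul(transpose(f), v_t)  # k x d_k, equals (V F)^T
    scores = matmul(q_t, transpose(k_proj))  # T x k attention map instead of T x T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v_proj)  # T x d_k
```

With $T = 4000$ and $k = 256$, for example, the attention map holds about one million entries instead of sixteen million (illustrative values).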

IV. SEPARATION TRANSFORMER (SEPFORMER)

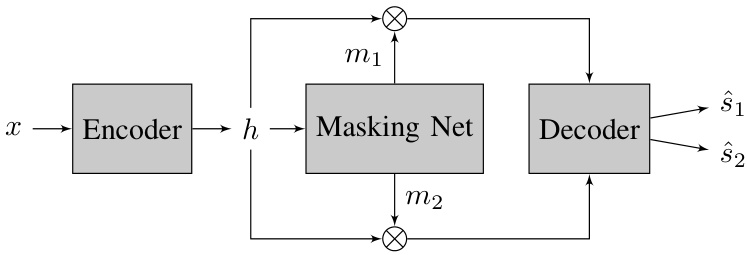

The SepFormer relies on the masking-based source separation framework popularized by [20], [21] (Figure 2). An input mixture $x\in\mathbb{R}^{T}$ is fed to the architecture. An encoder learns a latent representation $h\in\mathbb{R}^{F\times T^{\prime}}$. Afterwards, a masking network estimates the masks $m_{1},m_{2}\in\mathbb{R}^{F\times T^{\prime}}$. The separated time-domain signals for sources 1 and 2 are obtained by passing $m_{1}\odot h$ and $m_{2}\odot h$ through the decoder, where $\odot$ denotes element-wise multiplication. In the following, we explain each block in detail.

Fig. 2. The high-level description of the masking-based source separation pipeline: The encoder block learns a latent representation for the input signal. The masking network estimates optimal masks to separate the sources in the mixtures. The decoder finally reconstructs the estimated sources in the time domain using these masks. The self-attention-based modeling is applied inside the masking network.

A. Encoder

The encoder takes the time-domain mixture signal $x\in\mathbb{R}^{T}$ as input. It learns a time-frequency latent representation $h\in\mathbb{R}^{F\times T^{\prime}}$ using a single convolutional layer:

$$

h=\operatorname{ReLU}(\operatorname{Conv1d}(x)).

$$

As we will describe in Section VI-A, the stride factor of this convolution significantly affects the performance, speed, and memory usage of the model.
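
The effect of the stride on the sequence length seen by the masking network follows from the output-length formula of an unpadded 1-D convolution, $T' = \lfloor (T - L)/S \rfloor + 1$ for kernel size $L$ and stride $S$. The sketch below evaluates it for a 4-second mixture at 8 kHz; the kernel size $L = 2S$, the absence of padding, and the helper name are assumptions made for illustration, not the configuration used in the experiments.

```python
def encoder_frames(num_samples: int, kernel_size: int, stride: int) -> int:
    """Number of output frames T' of an unpadded Conv1d: floor((T - L) / S) + 1."""
    if num_samples < kernel_size:
        raise ValueError("the signal is shorter than the convolution kernel")
    return (num_samples - kernel_size) // stride + 1


num_samples = 4 * 8000  # 4 s of audio at 8 kHz (illustrative)
for stride in (2, 4, 8, 16):
    frames = encoder_frames(num_samples, kernel_size=2 * stride, stride=stride)
    print(f"stride {stride:>2}: T' = {frames:>5} frames")
```

Halving the stride roughly doubles $T'$, and with it the number of chunks the masking network must process, which is why the stride affects speed and memory as well as performance.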

B. Decoder

The decoder is a simple transposed convolutional layer with the same stride and kernel size as the encoder. Its input is the element-wise product of the mask $m_{k}$ of the $k$-th source and the encoder output $h$. The decoder computes:

$$

\widehat{s}_{k}=\mathrm{Conv1dTranspose}(m_{k}\odot h),

$$

where $\widehat{s}_{k}\in\mathbb{R}^{T}$ denotes the separated source $k$, and $\odot$ denotes element-wise multiplication.
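
The masking and decoding steps can be sketched in pure Python as follows. The decoder is written as a single-output-channel transposed convolution, which amounts to an overlap-add of decoder filters weighted by the masked latent frames; in a deep-learning framework this would typically be a layer such as PyTorch's `torch.nn.ConvTranspose1d`. The tiny filters and inputs are made up for illustration, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # F x T' (latent features x frames)


def apply_mask(mask: Matrix, h: Matrix) -> Matrix:
    """Element-wise product m_k ⊙ h of a mask and the encoder output."""
    return [[m * x for m, x in zip(mask_row, h_row)] for mask_row, h_row in zip(mask, h)]


def conv1d_transpose(x: Matrix, filters: Matrix, stride: int) -> List[float]:
    """Transposed 1-D convolution from F input channels to one output channel.

    x: F x T' masked latent frames; filters: F x L decoder filters.
    Output length: (T' - 1) * stride + L.
    """
    num_frames, kernel_size = len(x[0]), len(filters[0])
    out = [0.0] * ((num_frames - 1) * stride + kernel_size)
    for channel, filt in zip(x, filters):
        for t, value in enumerate(channel):
            for offset, weight in enumerate(filt):
                out[t * stride + offset] += value * weight  # overlap-add of weighted filters
    return out


def decode_source(mask: Matrix, h: Matrix, filters: Matrix, stride: int) -> List[float]:
    """s_k = Conv1dTranspose(m_k ⊙ h)."""
    return conv1d_transpose(apply_mask(mask, h), filters, stride)
```

Since the same $h$ and decoder filters are shared by all sources, only the mask $m_k$ differs between the estimated signals.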

C. Masking Network

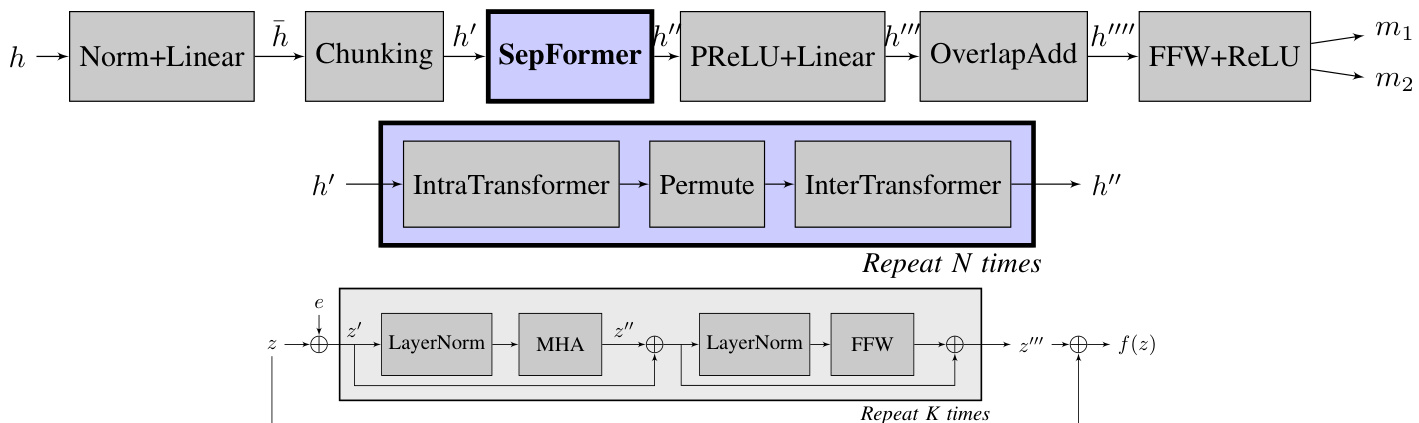

Figure 3 (top) shows the detailed architecture of the masking network (Masking Net). The masking network is fed with the encoded representation $h\in\mathbb{R}^{F\times T^{\prime}}$ and estimates a set of masks $\{m_{1},\dots,m_{N_s}\}$, one for each of the $N_s$ speakers in the mixture.

As in [21], the encoded input $h$ is normalized with layer normalization [36] and processed by a linear layer (with dimensionality $F$). Following the dual-path framework introduced in [8], we create overlapping chunks of size $C$ by splitting $h$ along the time axis with an overlap factor of 50%. We denote the output of the chunking operation by $h^{\prime}\in\mathbb{R}^{F\times C\times N_c}$, where $C$ is the length of each chunk and $N_c$ is the resulting number of chunks.
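
The chunking step can be sketched as follows for a sequence of frames, with a hop of $C/2$ for 50% overlap. Padding only the end so that the last chunk is full is an assumption of this sketch; the exact padding used in [8] may differ, and the function name is illustrative.

```python
from typing import List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def make_chunks(frames: Sequence[Frame], chunk_size: int,
                pad_value: Frame) -> Tuple[List[List[Frame]], int]:
    """Split frames into chunks of length chunk_size with 50% overlap (hop = chunk_size // 2).

    Returns the list of N_c chunks and the number of padding frames appended at the end.
    """
    if chunk_size < 2 or chunk_size % 2:
        raise ValueError("chunk_size must be an even number >= 2")
    hop = chunk_size // 2
    n = len(frames)
    # Pad so that the padded length equals chunk_size + (N_c - 1) * hop.
    pad = chunk_size - n if n <= chunk_size else (-(n - chunk_size)) % hop
    padded = list(frames) + [pad_value] * pad
    chunks = [padded[start:start + chunk_size]
              for start in range(0, len(padded) - chunk_size + 1, hop)]
    return chunks, pad
```

For the $F$-dimensional frames of $h$, each element of `frames` would be a feature vector and `pad_value` a zero vector; stacking the chunks gives the $F\times C\times N_c$ tensor $h'$.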

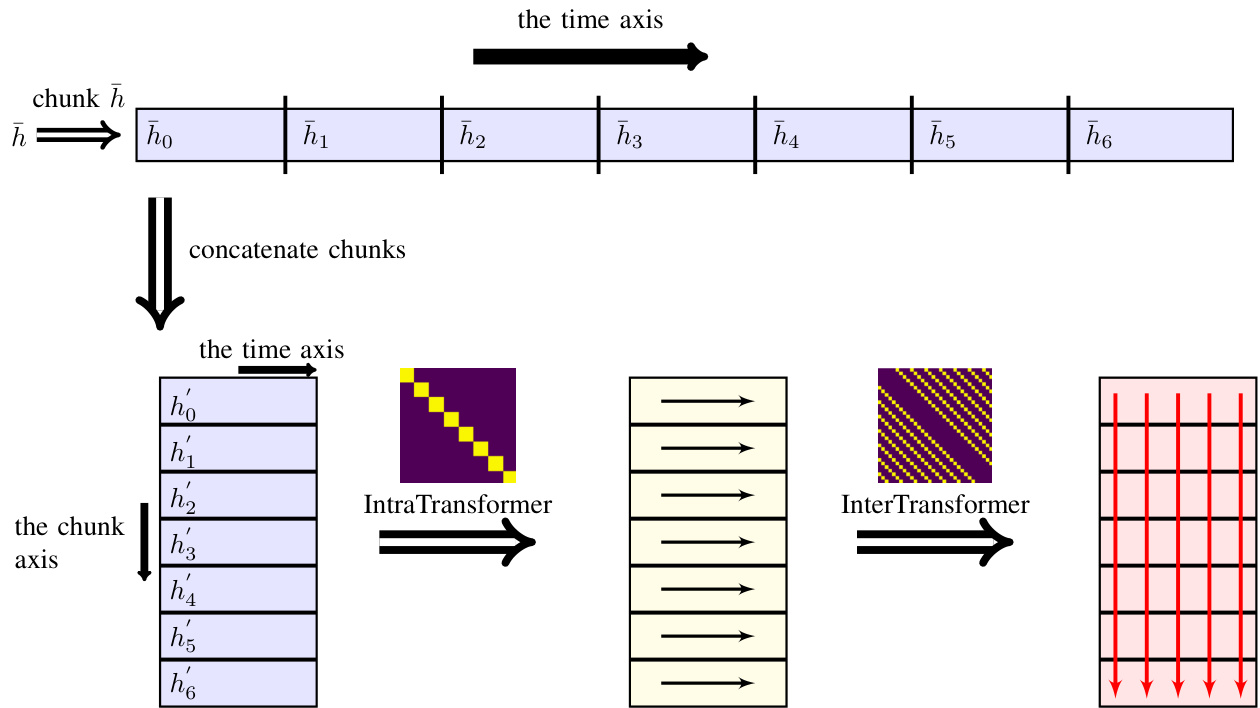

The representation $h^{\prime}$ feeds the SepFormer separator block, which is the main component of the masking network. This block, described in detail in Section IV-D, employs a pipeline of two Transformers that learn short- and long-term dependencies. Figure 4 illustrates the overall pipeline of the SepFormer separator block. Note that, for graphical convenience, non-overlapping chunks are used in the figure; the experiments in this paper use 50% overlapping chunks.

The output of the SepFormer, $h^{\prime\prime}\in\mathbb{R}^{F\times C\times N_c}$, is processed by a PReLU activation followed by a linear layer. We denote the output of this module by $h^{\prime\prime\prime}\in\mathbb{R}^{(F\times N_s)\times C\times N_c}$, where $N_s$ is the number of speakers. Afterward, we apply the overlap-add scheme described in [8] and obtain $h^{\prime\prime\prime\prime}\in\mathbb{R}^{F\times N_s\times T^{\prime}}$. We pass this representation through two feed-forward layers followed by a ReLU activation to obtain a mask $m_{k}$ for each of the speakers.
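
Overlap-add inverts the chunking by placing each chunk back at its start position, summing the overlapping parts, and discarding the padding. The sketch below works on a single feature sequence; the plain summation without normalization and the function name are assumptions for illustration.

```python
from typing import List


def overlap_add(chunks: List[List[float]], hop: int, length: int) -> List[float]:
    """Sum chunks that start every `hop` frames into one sequence and trim it to `length`."""
    chunk_size = len(chunks[0])
    out = [0.0] * ((len(chunks) - 1) * hop + chunk_size)
    for index, values in enumerate(chunks):
        start = index * hop
        for offset, value in enumerate(values):
            out[start + offset] += value
    return out[:length]
```

With 50% overlap, each interior frame receives contributions from two chunks and each edge frame from one; in the SepFormer this is applied for every speaker and every feature dimension.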

D. Dual-Path Processing Pipeline and SepFormer

The dual-path processing pipeline [8] (shown in Fig. 4) combines short- and long-term modeling while making long-sequence processing with a self-attention block feasible. In the context of the masking-based end-to-end source separation shown in Fig. 2, the latent representation $h\in\mathbb{R}^{F\times T^{\prime}}$ is divided into overlapping chunks.

A Transformer encoder is applied to each chunk separately. We call this block Intra Transformer (IntraT) and denote it by $f_{\mathrm{intra}}(.)$. This block effectively operates as a block-diagonal attention structure: it models the interactions among all elements within each chunk separately, so the attention memory requirements are bounded by the chunk size rather than by the sequence length. Another Transformer encoder models the inter-chunk interactions, i.e., the interactions between elements that occupy the same position in different chunks. We call this block Inter Transformer (InterT) and denote it by $f_{\mathrm{inter}}(.)$. Because this block attends to all elements that occupy the same position in each chunk, it effectively operates as a strided-attention structure. Mathematically, the overall transformation can be summarized as follows:

$$

h^{\prime\prime}=f_{\mathrm{inter}}(f_{\mathrm{intra}}(h^{\prime})).

$$

In the standard SepFormer model defined in [14], $f_{\mathrm{inter}}$ and $f_{\mathrm{intra}}$ are chosen to be full self-attention blocks. In Section IV-E, we describe the full self-attention block employed in SepFormer in detail.
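
The index bookkeeping of $h''=f_{\mathrm{inter}}(f_{\mathrm{intra}}(h'))$ can be sketched independently of the Transformers themselves. Below, $h'$ is stored as $N_c$ chunks of $C$ frames, and `f_intra`/`f_inter` are any functions mapping a sequence of frames to a sequence of the same length (in the SepFormer, Transformer encoders). The helper `attention_map_sizes` compares the number of attention weights of one full-attention pass over $T'$ frames with one IntraT plus one InterT pass, with the chunk count computed as for a 50%-overlap split; all names and sizes are illustrative.

```python
from typing import Callable, List, Tuple

Frame = List[float]
SeqFn = Callable[[List[Frame]], List[Frame]]  # length-preserving sequence model


def dual_path(chunks: List[List[Frame]], f_intra: SeqFn, f_inter: SeqFn) -> List[List[Frame]]:
    """Apply f_intra within each chunk, then f_inter across chunks at each position."""
    # IntraT: each chunk (length C) is processed on its own (block-diagonal attention).
    intra = [f_intra(chunk) for chunk in chunks]
    num_chunks, chunk_size = len(intra), len(intra[0])
    out: List[List[Frame]] = [[[] for _ in range(chunk_size)] for _ in range(num_chunks)]
    # InterT: frames at the same position c in every chunk form a length-N_c sequence
    # (strided attention).
    for c in range(chunk_size):
        across = f_inter([intra[n][c] for n in range(num_chunks)])
        for n in range(num_chunks):
            out[n][c] = across[n]
    return out


def attention_map_sizes(num_frames: int, chunk_size: int) -> Tuple[int, int]:
    """Attention weights for full attention over T' frames vs. one IntraT + one InterT pass."""
    hop = chunk_size // 2
    num_chunks = 1 if num_frames <= chunk_size else -(-(num_frames - chunk_size) // hop) + 1
    full = num_frames ** 2
    dual = num_chunks * chunk_size ** 2 + chunk_size * num_chunks ** 2
    return full, dual
```

With $T' = 4000$ frames and $C = 250$ (illustrative values), one IntraT plus one InterT pass needs about 2.2 million attention weights instead of 16 million for full attention.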

E. Full Self-Attention Transformer Encoder

The architecture for the full self-attention transformer encoder layers follows the original Transformer architecture [1]. Figure 3 (Bottom) shows the architecture used for the Intra Transformer and Inter Transformer blocks.

We use the variable $z$ to denote the input to the Transformer. First, a sinusoidal positional encoding $e$ is added to the input $z$:

$$

z^{\prime}=z+e.

$$

We follow the positional encoding definition in [1]. We then apply multiple Transformer layers. Inside each Transformer layer $g(.)$ , we first apply layer normalization, followed by multi-head attention (MHA):

$$

z^{\prime\prime}=\mathrm{MultiHeadAttention}(\mathrm{LayerNorm}(z^{\prime})).

$$

As proposed in [1], each attention head computes the scaled dot-product attention between all the sequence elements. The Transformer finally employs a feed-forward network (FFW), which is applied to each position independently:

$$

z^{\prime\prime\prime}=\operatorname{Feed-Forward}(\operatorname{LayerNorm}(z^{\prime\prime}+z^{\prime}))+z^{\prime\prime}+z^{\prime}.

$$

Fig. 3. (Top) The overall architecture proposed for the masking network. (Middle) The SepFormer Block. (Bottom) The transformer architecture $f(.)$ that is used both in the Intra Transformer block and in the Inter Transformer block.

Fig. 4. The dual-path processing employed in the SepFormer masking network. The input representation $\bar{h}$ is first chunked to get the chunks $\bar{h}_{0},\bar{h}_{1},\dots,\bar{h}_{6}$. Then the chunks are concatenated along another dimension (the chunk dimension). Afterward, we apply the Intra Transformer along the time dimension of each chunk and the Inter Transformer along the chunk dimension. As mentioned in Section IV-C, for graphical convenience, non-overlapping chunks are used in the figure; the experiments in this paper use chunks with 50% overlap. The attention maps show how elements of the input feature vectors are interconnected inside the Intra and Inter blocks.

The overall transformer block is therefore defined as follows:

$$

f(z)=g^{K}(z+e)+z,

$$

where $g^{K}(.)$ denotes $K$ stacked Transformer layers $g(.)$. We use $K=N_{\mathrm{intra}}$ layers for the IntraT and $K=N_{\mathrm{inter}}$ layers for the InterT. As shown in Figure 3 (Bottom) and Eq. (13), we add residual connections across the Transformer layers and across the whole Transformer architecture to improve gradient backpropagation. Note that all experiments presented in this paper use a non-causal attention mechanism.
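
The residual wiring of $g(.)$ and $f(.)$ can be checked with a small pure-Python sketch. The attention and feed-forward sublayers are passed in as arbitrary callables, and layer normalization omits its learnable gain and bias; these simplifications and all names are assumptions of the sketch, not the SepFormer implementation.

```python
import math
from typing import Callable, List, Tuple

Seq = List[List[float]]  # a sequence of frames (feature vectors)
SeqFn = Callable[[Seq], Seq]


def add(a: Seq, b: Seq) -> Seq:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def layer_norm(seq: Seq, eps: float = 1e-5) -> Seq:
    """Normalize each frame to zero mean and unit variance (no learnable gain or bias)."""
    out = []
    for frame in seq:
        mean = sum(frame) / len(frame)
        var = sum((x - mean) ** 2 for x in frame) / len(frame)
        out.append([(x - mean) / math.sqrt(var + eps) for x in frame])
    return out


def transformer_layer(z1: Seq, mha: SeqFn, ffw: SeqFn) -> Seq:
    """g(.): z'' = MHA(LN(z')); z''' = FFW(LN(z'' + z')) + z'' + z'."""
    z2 = mha(layer_norm(z1))
    residual = add(z2, z1)
    return add(ffw(layer_norm(residual)), residual)


def transformer_block(z: Seq, e: Seq, layers: List[Tuple[SeqFn, SeqFn]]) -> Seq:
    """f(z) = g^K(z + e) + z, where K = len(layers)."""
    x = add(z, e)
    for mha, ffw in layers:
        x = transformer_layer(x, mha, ffw)
    return add(x, z)
```

If every sublayer outputs zeros, each layer reduces to the identity and $f(z)$ collapses to $2z + e$, which makes the two levels of residual connections easy to see.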

其中 $g^{K}(.)$ 表示 $K$ 层 Transformer 层 $g(.)$。我们使用 $K=N_{\mathrm{{intra}}}$ 层作为 IntraT,$K=N_{\mathrm{{inter}}}$ 层作为 InterT。如图 3 (底部) 和公式 (13) 所示,我们在 Transformer 层之间以及 Transformer 架构中添加了残差连接以改善梯度反向传播。需要说明的是,本文实验中采用了非因果注意力机制。
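The composition $f(z)=g^{K}(z+e)+z$ can be sketched in a few lines of NumPy. This is a minimal single-head illustration, not the SepFormer implementation. Several parts are assumptions: we take $z'$ to be the layer-normalized input and $z''$ the attention output (their defining equations precede this excerpt), we model $e$ as the classic sinusoidal positional encoding, and the function names and parameter-dictionary keys are hypothetical. The attention is non-causal, as in the experiments.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize each time step over the feature axis (learned gain/bias omitted).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def self_attention(x, wq, wk, wv):
    # Single-head, non-causal scaled dot-product attention:
    # every time step may attend to every past and future step.
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def feed_forward(x, w1, w2):
    # Position-wise two-layer MLP with a ReLU non-linearity.
    return np.maximum(x @ w1, 0.0) @ w2


def transformer_layer(z, p):
    # g(.): assumes z' = LayerNorm(z) and z'' = Attention(z'), then
    # z''' = FeedForward(LayerNorm(z'' + z')) + z'' + z'.
    z1 = layer_norm(z)
    z2 = self_attention(z1, p["wq"], p["wk"], p["wv"])
    return feed_forward(layer_norm(z2 + z1), p["w1"], p["w2"]) + z2 + z1


def sinusoidal_encoding(length, dim):
    # Hypothetical choice of e: the sine/cosine positional encoding.
    pos = np.arange(length)[:, None]
    i = np.arange(dim)[None, :]
    angle = pos / np.power(10000.0, (2 * (i // 2)) / dim)
    return np.where(i % 2 == 0, np.sin(angle), np.cos(angle))


def f(z, layers):
    # f(z) = g^K(z + e) + z with K = len(layers); z has shape (time, features).
    h = z + sinusoidal_encoding(*z.shape)
    for p in layers:
        h = transformer_layer(h, p)
    return h + z
```

Each parameter dictionary holds `wq`, `wk`, `wv` of shape `(d, d)`, `w1` of shape `(d, d_ff)` and `w2` of shape `(d_ff, d)`. Under these assumptions the same `f` would serve as the IntraT with $N_{\mathrm{intra}}$ layers or as the InterT with $N_{\mathrm{inter}}$ layers; only the axis it is applied along differs.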

V. EXPERIMENTAL SETUP

V. 实验设置

A. Datasets

A. 数据集

In our experiments, we use the popular WSJ0-2mix and WSJ0-3mix datasets [19], where mixtures of two and three speakers are created by randomly mixing utterances from the WSJ0 corpus. The relative levels of the sources are sampled uniformly between 0 dB and 5 dB. Respectively, 30, 10, and 5 hours of speech are used for training (20k utterances), validation (5k utterances), and testing (3k utterances). The training and test sets are created with different sets of speakers. We use the 8 kHz version of the dataset in its 'min' variant, where the summed waveforms are truncated to the length of the shorter signal. These datasets have become the de facto standard benchmark for source separation algorithms.

在我们的实验中,使用了流行的WSJ0-2mix和WSJ0-3mix数据集[19],其中通过随机混合WSJ0语料库中的话语创建了两人和三人混合语音。源信号的相对电平在0 dB至5 dB之间均匀采样。训练集(20k条话语)、验证集($\mathrm{5k}$条话语)和测试集($3\mathrm{k}$条话语)分别使用了30小时、10小时和5小时的语音数据。训练集和测试集由不同的说话人组成。我们使用8kHz版本的"min"数据集,其中添加的波形被截断为较短信号的长度。这些数据集已成为源分离算法的事实标准基准。
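The 'min' mixing procedure with a random relative level can be sketched as follows. This is an illustration under assumptions, not the official WSJ0-2mix generation scripts: we normalize both sources to unit power and attenuate the second one, whereas the official scripts may distribute the level difference between the speakers differently. The function name `mix_two_sources` is hypothetical.

```python
import numpy as np


def mix_two_sources(s1, s2, rng):
    """Create a WSJ0-2mix-style mixture (sketch, not the official scripts)."""
    # 'min' variant: truncate both sources to the shorter length.
    n = min(len(s1), len(s2))
    s1, s2 = s1[:n], s2[:n]
    # Relative level drawn uniformly in [0, 5] dB.
    level_db = rng.uniform(0.0, 5.0)
    # Normalize both sources to unit power, then attenuate s2 so that
    # 10*log10(P1 / P2) == level_db (which source is louder is an assumption).
    s1 = s1 / np.sqrt(np.mean(s1 ** 2))
    s2 = s2 / np.sqrt(np.mean(s2 ** 2)) * 10 ** (-level_db / 20)
    return s1 + s2, np.stack([s1, s2]), level_db
```

The returned sources are the separation targets; the mixture is simply their sum, so the separator must undo exactly this linear combination.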

In addition to WSJ0-2/3Mix, we also provide experimental evidence on the WHAM! [16] and WHAMR! [17] datasets, which are derived from WSJ0-2Mix by adding environmental noise and environmental noise plus reverberation, respectively. In both WHAM! and WHAMR!, the environmental noise consists of ambient recordings from coffee shops, restaurants, and bars, collected by the authors of the original paper [16]. In WHAMR!, the reverberation time $RT_{60}$ is sampled uniformly at random from one of three categories (low, medium, and high), with distributions $\mathcal{U}(0.1,0.3)$, $\mathcal{U}(0.2,0.4)$, and $\mathcal{U}(0.4,1.0)$ seconds, respectively. More details regarding the $RT_{60}$ distribution of WHAMR! are given in the original paper [17].

除WSJ0-2/3 Mix数据集外,我们还提供了在WHAM! [16]和WHAMR! [17]数据集上的实验证据。这两个数据集本质上由WSJ0-2Mix数据集衍生而来,分别添加了环境噪声以及环境噪声加混响。WHAM!和WHAMR!数据集中的环境噪声包含咖啡馆、餐厅和酒吧的环境声,由原论文作者[16]采集。在WHAMR!数据集中,混响时间$RT_{60}$从低、中、高三个类别中随机均匀采样,其分布分别为$\mathcal{U}(0.1,0.3)$、$\mathcal{U}(0.2,0.4)$和$\mathcal{U}(0.4,1.0)$(单位为秒)。关于WHAMR!数据集$RT_{60}$分布的更多细节详见原论文[17]。

We also use the popular VoiceBank-DEMAND speech enhancement dataset [37] to compare the SepFormer architecture with other state-of-the-art denoising models. In addition, we provide experimental results on the LibriMix dataset [15], which contains longer and more challenging mixtures than the WSJ0-Mix datasets.

流行的语音增强 VoiceBank-DEMAND 数据集 [37] 也被用于比较 SepFormer 架构与其他最先进的降噪模型。我们还提供了 LibriMix 数据集 [15] 上的实验结果,该数据集包含比 WSJ0-Mix 数据集更长且更具挑战性的混合音频。

B. Architecture Details

B. 架构细节

The SepFormer encoder employs 256 convolutional filters with a kernel size of 16 samples and a stride of 8 samples. The decoder uses the same kernel size and stride as the encoder.

SepFormer编码器采用256个卷积滤波器,卷积核大小为16个样本,步长为8个样本。解码器使用与编码器相同的卷积核大小和步长。
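The encoder/decoder pair amounts to a strided 1-D convolution and its transposed (overlap-add) counterpart. The NumPy sketch below uses the stated sizes (256 filters, kernel 16, stride 8); the ReLU after the encoder, the absence of biases, and the names `encode`/`decode` are assumptions made for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

KERNEL, STRIDE, N_FILTERS = 16, 8, 256


def encode(x, w_enc):
    # x: (T,) waveform; w_enc: (N_FILTERS, KERNEL) filters.
    # Strided 1-D convolution: one KERNEL-sample frame every STRIDE samples.
    frames = sliding_window_view(x, KERNEL)[::STRIDE]  # (L, KERNEL)
    return np.maximum(frames @ w_enc.T, 0.0)  # assumed ReLU -> (L, N_FILTERS)


def decode(h, w_dec):
    # h: (L, N_FILTERS); w_dec: (N_FILTERS, KERNEL).
    # Transposed convolution = overlap-add of one KERNEL-sample frame per step.
    frames = h @ w_dec  # (L, KERNEL)
    out = np.zeros((len(frames) - 1) * STRIDE + KERNEL)
    for i, frame in enumerate(frames):
        out[i * STRIDE : i * STRIDE + KERNEL] += frame
    return out
```

For a 4-second utterance at 8 kHz (32,000 samples), the encoder yields $\lfloor(32000-16)/8\rfloor+1 = 3999$ frames of 256 features, and the decoder maps them back to $(3999-1)\cdot 8+16 = 32000$ samples. A stride of half the kernel means consecutive frames overlap by 50%.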

In our best models, the masking network processes chunks of size $C=250$ with 50% overlap between consecutive chunks, and employs 8 Transformer encoder layers in both the Intra Transformer and the Inter Transformer. The dual-path processing pipeline is repeated $N=2$ times. We use 8 parallel attention heads and 1024-dimensional position-wise feed-forward networks within each Transformer layer. The model has a total of 25.7 million parameters.

在我们的最佳模型中,掩码网络处理大小为 $C=250$ 的数据块,相邻块之间重叠50%,并在Intra Transformer和Inter Transformer中均采用8层Transformer编码器。这种双路径处理流程重复 $N=2$ 次。每个Transformer层使用8个并行注意力头和1024维的逐位置前馈网络。该模型总参数量为2570万。
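Chunking with $C=250$ and 50% overlap, the intra/inter processing order, and the final overlap-add can be sketched as below. The padding scheme (half a chunk at the front, enough at the end to cover every frame twice) and all function names are assumptions for illustration; `intra` and `inter` stand for any sequence model mapping `(length, F)` to `(length, F)`.

```python
import numpy as np


def chunk(h, C=250):
    # h: (L, F) encoded frames -> (S, C, F) chunks with 50% overlap (hop = C // 2).
    assert C % 2 == 0, "this sketch assumes an even chunk size"
    hop = C // 2
    L, F = h.shape
    # Pad hop frames at the front and enough at the end so that every frame
    # is covered by exactly two chunks and the padded length is a multiple of hop.
    pad_end = hop + (-L) % hop
    hp = np.concatenate([np.zeros((hop, F)), h, np.zeros((pad_end, F))])
    S = len(hp) // hop - 1
    return np.stack([hp[s * hop : s * hop + C] for s in range(S)])


def dual_path(chunks, intra, inter):
    # Intra: model each chunk along its own time axis (local context).
    x = np.stack([intra(c) for c in chunks])  # (S, C, F)
    # Inter: for each within-chunk position, model the sequence across chunks (global context).
    return np.stack([inter(x[:, c]) for c in range(x.shape[1])], axis=1)


def overlap_add(chunks, L):
    # Inverse of chunk(): sum overlapping chunks and strip the padding.
    S, C, F = chunks.shape
    hop = C // 2
    out = np.zeros(((S + 1) * hop, F))
    for s in range(S):
        out[s * hop : s * hop + C] += chunks[s]
    return out[hop : hop + L]
```

The design point is that the Intra Transformer only attends over $C=250$ frames and the Inter Transformer over $S \approx 2L/C$ chunks, so neither attention map grows with $L^2$. With identity processing, overlap-add returns twice the input, since every frame is covered by two chunks.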

C. Training Details

C. 训练细节

We use dynamic mixing (DM) data augmentation [26], which consists of creating new mixtures on the fly from single-speaker sources. In this work, we extend this powerful technique by applying speed perturbation to the sources before mixing them. The speed factor is randomly sampled between 95% (slow-down) and 105% (speed-up).

我们采用动态混音 (dynamic mixing, DM) 数据增强技术 [26],该方法通过实时混合单说话人音源生成新混合物。本研究在混音前引入速度扰动对该技术进行扩展,速度随机变化范围为降速95%至加速105%。
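A minimal sketch of dynamic mixing with speed perturbation follows. We implement speed perturbation as plain resampling by linear interpolation (an assumption; it also shifts pitch) and omit the random level adjustment a full DM pipeline would apply. The names `speed_perturb` and `dynamic_mix` are hypothetical.

```python
import numpy as np


def speed_perturb(x, factor):
    # Resample by linear interpolation: factor > 1 speeds up (shorter output),
    # factor < 1 slows down (longer output).
    n_out = int(round(len(x) / factor))
    t = np.arange(n_out) * factor
    return np.interp(t, np.arange(len(x)), x)


def dynamic_mix(pool, rng, n_spk=2, low=0.95, high=1.05):
    # Draw n_spk distinct single-speaker utterances from the pool on the fly.
    idx = rng.choice(len(pool), size=n_spk, replace=False)
    # Speed-perturb each source independently before mixing.
    sources = [speed_perturb(pool[i], rng.uniform(low, high)) for i in idx]
    # Truncate to the shortest source ('min'-style) and sum.
    n = min(len(s) for s in sources)
    sources = np.stack([s[:n] for s in sources])
    return sources.sum(axis=0), sources
```

Because a fresh mixture is drawn for every training example, the network rarely sees the same pair of utterances twice, which is what makes DM an effective regularizer.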

We use the Adam algorithm [38] as the optimizer, with a learning rate of $1.5\times10^{-4}$. After epoch 65 (epoch 85 with DM), the learning rate is halved whenever the validation performance does not improve for 3 successive epochs (5 epochs with DM). Gradient clipping is employed to limit the $l^{2}$-norm of the gradients to 5. During training, we use a batch size of 1 and optimize the scale-invariant signal-to-noise ratio (SI-SNR) [39] through an utterance-level permutation invariant loss [22], clipped at 30 dB [26]. The SI-SNR measures the energy of the target signal relative to the energy of the residual noise, computed from zero-mean versions of the estimate and the ground-truth signal. We also use the Signal-to-Distortion Ratio (SDR), which measures the energy of the signal relative to the combined energy of noise, artifacts, and interference, as defined in [40]. In our tables, we report SI-SNRi and SDRi, i.e., the improvements in SI-SNR and SDR over a baseline in which the mixture itself is used as the source estimate.

我们采用Adam算法[38]作为优化器,学习率为$1.5\times10^{-4}$。在第65个epoch后(使用DM时为第85个epoch后),若连续3个epoch(DM为5个epoch)未观察到验证性能提升,则将学习率减半。采用梯度裁剪将梯度的$l^{2}$-范数限制为5。训练时使用批量大小为1,并通过语句级排列不变损失[22]采用尺度不变信噪比(SI-SNR)[39],以$30\mathrm{dB}$[26]为裁剪阈值。SI-SNR通过零均值信号估计和真实信号,衡量信号能量与噪声能量的比值。我们还使用信号失真比(SDR)[40]来度量信号能量与噪声、伪影及干扰能量的比值。在表格中,我们报告SI-SNRi和SDRi值,即SI-SNR和SDR相对于基线(直接以混合信号作为源估计时得到的结果)的改进量。
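The SI-SNR, its utterance-level permutation invariant (PIT) version, and the SI-SNRi metric can be sketched in NumPy as follows. We realize the 30 dB limit by clamping the utterance-level SI-SNR before negating it; this is one possible reading of "clipping", and the function names and the `EPS` constant are assumptions.

```python
import itertools

import numpy as np

EPS = 1e-8


def si_snr(est, ref):
    # Zero-mean both signals, project the estimate onto the reference,
    # and compare the energy of that projection with the residual's energy.
    est = est - est.mean()
    ref = ref - ref.mean()
    s_target = (est @ ref) / (ref @ ref + EPS) * ref
    e_noise = est - s_target
    return 10 * np.log10((s_target @ s_target + EPS) / (e_noise @ e_noise + EPS))


def pit_si_snr_loss(ests, refs, clip_db=30.0):
    # Utterance-level PIT: try every assignment of estimates to references
    # and keep the one with the highest mean SI-SNR (perm[i] = estimate for ref i).
    best, best_perm = -np.inf, None
    for perm in itertools.permutations(range(len(refs))):
        score = np.mean([si_snr(ests[p], refs[i]) for i, p in enumerate(perm)])
        if score > best:
            best, best_perm = score, perm
    # Clamp at clip_db so that already well-separated utterances stop contributing gradient.
    return -min(best, clip_db), best_perm


def si_snr_improvement(est, ref, mixture):
    # SI-SNRi: gain over using the mixture itself as the estimate.
    return si_snr(est, ref) - si_snr(mixture, ref)
```

Enumerating permutations costs $n!$ evaluations, which is negligible for the two- and three-speaker settings considered here. Since the projection removes any gain, rescaling an estimate leaves its SI-SNR unchanged.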

We use automatic mixed precision to speed up training. Each model is trained for a maximum of 200 epochs. With automatic mixed precision, each epoch takes approximately 1.5 hours on a single NVIDIA V100 GPU with 32 GB of memory. The training recipes for the SepFormer on the WSJ0-2/3Mix, Libri2/3Mix, WHAM!, and WHAMR! datasets can be found in SpeechBrain$^{2}$.

我们使用自动混合精度 (automatic mixed precision) 来加速训练。每个模型最多训练200个周期,在配备32GB内存的NVIDIA V100 GPU上,使用自动混合精度时每个周期约需1.5小时。SepFormer在WSJ0-2/3Mix数据集、Libri2/3Mix数据集、WHAM!和WHAMR!数据集上的训练方案可在SpeechBrain$^{2}$中找到。
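The optimization settings given in the training details (halving after epoch 65 with a patience of 3 epochs, and global $l^{2}$-norm gradient clipping at 5) can be sketched framework-free as below. The class and function names are hypothetical, and we assume that non-improving epochs are counted from the start of training while halving is only allowed after `start_epoch`; the actual recipe may count differently.

```python
import numpy as np


class HalveOnPlateau:
    """Halve the learning rate when validation SI-SNR stops improving (sketch)."""

    def __init__(self, lr=1.5e-4, start_epoch=65, patience=3):
        # With dynamic mixing the text uses start_epoch=85 and patience=5.
        self.lr, self.start_epoch, self.patience = lr, start_epoch, patience
        self.best = -float("inf")
        self.bad_epochs = 0

    def step(self, epoch, val_si_snr):
        # Track consecutive epochs without improvement of the validation score.
        if val_si_snr > self.best:
            self.best, self.bad_epochs = val_si_snr, 0
        else:
            self.bad_epochs += 1
        # Annealing is only active after start_epoch.
        if epoch > self.start_epoch and self.bad_epochs >= self.patience:
            self.lr /= 2
            self.bad_epochs = 0
        return self.lr


def clip_grad_norm(grads, max_norm=5.0):
    # Rescale all gradients jointly so that their global l2-norm is at most max_norm.
    total = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    scale = min(1.0, max_norm / (total + 1e-6))
    return [g * scale for g in grads], total
```

Clipping the global norm (rather than each tensor separately) preserves the direction of the full gradient, which matters with a batch size of 1, where individual utterances can produce large gradient spikes.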

TABLE I BEST RESULTS ON THE WSJ0-2MIX DATASET (TEST-SET). DM STANDS FOR DYNAMIC MIXING.

*Only SI-SNR and SDR (without improvement) are reported.

**Our experimentation.

***Uses speaker IDs as additional information.

表 1: WSJ0-2MIX 数据集(测试集)上的最佳结果 (DM 代表动态混合)

| Model | SI-SNRi (dB) | SDRi (dB) | #Params | Stride |
|---|---|---|---|---|
| TasNet [20] | 10.8 | 11.1 | n.a. | 20 |
| SignPredictionNet [41] | 15.3 | 15.6 | 55.2M | 8 |
| Conv-TasNet [21] | 15.3 | 15.6 | 5.1M | 10 |
| Two-Step CTN [24] | 16.1 | n.a. | 8.6M | 10 |
| MGST [28] | 17.0 | 17.3 | n.a. | n.a. |
| DeepCASA [42] | 17.7 | 18.0 | 12.8M | 1 |
| FurcaNeXt [43] | n.a. | 18.4 | 51.4M | n.a. |
| DualPathRNN [8] | 18.8 | 19.0 | 2.6M | 1 |
| sudo rm -rf [23] | 18.9 | n.a. | 2.6M | 10 |
| VSUNOS [25] | 20.1 | 20.4 | 7.5M | 2 |
| DPTNet* [9] | 20.2 | 20.6 | 2.6M | 1 |
| DPTNet + DM** [9] | 20.6 | 20.8 | 26.2M | 8 |
| DPRNN + DM** [8] | 21.8 | 22.0 | 27.5M | 8 |
| SepFormer-CF + DM** | 21.9 | 22.1 | 38.5M | 8 |
| Wavesplit*** [26] | 21.0 | 21.2 | 29M | 1 |
| Wavesplit*** + DM [26] | 22.2 | 22.3 | 29M | 1 |
| SepFormer | 20.4 | 20.5 | 25.7M | 8 |
| SepFormer + DM | 22.3 | 22.4 | 25.7M | 8 |

*仅报告了SI-SNR和SDR(无改进值)

**我们的实验

***使用说话人ID作为附加信息

TABLE II ABLATION OF THE SEPFORMER ON WSJ0-2MIX (VALIDATION SET).

表 2 WSJ0-2MIX 验证集上 SEPFORMER 的消融实验

| SI-SNRi (dB) | N | $N_{\mathrm{intra}}$ | $N_{\mathrm{inter}}$ | #Heads | DFF | PosEnc | DM |
|---|---|---|---|---|---|---|---|
| 22.3 | 2 | 8 | 8 | 8 | 1024 | Yes | Yes |
| 20.5 | 2 | 8 | 8 | 8 | 1024 | Yes | No |
| 19.9 | 2 | 4 | 4 | 8 | 2048 | Yes | No |
| 19.4 | 2 | 4 | 4 | 8 | 2048 | No | No |
| 19.2 | 2 | 4 | 1 | 8 | 2048 | Yes | No |
| 18.3 | 2 | 1 | 4 | 8 | 2048 | Yes | No |
| 19.1 | 2 | 3 | 3 | 8 | 2048 | Yes | No |
| 19.0 | 2 | 3 | 3 | 8 | 2048 | No | No |

VI. RESULTS

VI. 结果

A. Results on WSJ0-2/3 Mix datasets

A. WSJ0-2/3 Mix 数据集上的结果

The WSJ0-2/3Mix datasets are standard benchmarks in the source separation literature. In Table I, we compare the performance achieved by the proposed SepFormer with the best results reported in the literature on the WSJ0-2mix dataset. With dynamic mixing, the SepFormer achieves an SI-SNR improvement (SI-SNRi) of 22.3 dB and a Signal-to-Distortion Ratio (SDR) [40] improvement (SDRi) of 22.4 dB on the test set, which is state-of-the-art performance. Even without dynamic mixing, the SepFormer outperforms previous systems, with the exception of Wavesplit, which leverages speaker identities as additional information.

WSJ0-2/3混合数据集是源分离文献中的标准基准。表1比较了所提出的SepFormer在WSJ0-2mix数据集上取得的性能与文献报道的最佳结果。采用动态混合时,SepFormer在测试集上实现了22.3 dB的SI-SNR提升(SI-SNRi)和22.4 dB的信号失真比(SDR)[40]提升(SDRi),达到了最先进的性能。在不使用动态混合的情况下,除利用说话人身份作为额外信息的Wavesplit外,SepFormer优于所有先前系统。

In Table II, we also study the effect of various hyperparameters and data augmentation strategies (the reported performance is computed on the validation set). We observe that the number of InterT and IntraT layers has an important impact on performance. The best performance is reached with 8 layers in both blocks and the dual-path block repeated twice ($N=2$). Notably, a respectable performance of 19.2 dB is obtained even when a single-layer transformer is used for the Inter Transformer. In contrast, when a single-layer transformer is used for the Intra Transformer, the performance drops to 18.3 dB. This suggests that the Intra Transformer, and thus local processing, has a greater influence on the final performance. Positional encoding also turns out to be helpful (see rows 3-4 and 7-8 of Table II); a similar outcome has been observed in [44] for speech enhancement. As for the number of attention heads, we observe only a slight performance difference between 8 and 16 heads. Finally, as expected, dynamic mixing improves performance.

在表 II 中,我们还研究了各种超参数和数据增强策略的影响(报告的性能是在验证集上计算的)。我们观察到 InterT 和 IntraT 块的数量对性能有重要影响。当两个块各使用 8 层并重复两次时,性能达到最佳。我们还想指出,即使 Inter Transformer 使用单层 Transformer,也能获得 19.2dB 的不错性能。相比之下,当 Intra Transformer 使用单层 Transformer 时,性能下降到 18.3 dB。这表明 Intra Transformer(即局部处理)对最终性能的影响更大。此外,位置编码也被证明是有帮助的(例如参见表 II 的第 3-4 行和第 7-8 行)。在语音增强任务中,[44] 也观察到了类似的结果。至于注意力头的数量,我们观察到 8 头和 16 头之间的性能差异较小。最后,正如预期的那样,动态混合提高了性能。

TABLE III BEST RESULTS ON THE WSJ0-3MIX DATASET.

表 III WSJ0-3MIX 数据集上的最佳结果

| Model | SI-SNRi (dB) | SDRi (dB) | #Params |
|---|---|---|---|
| Conv-TasNet [21] | 12.7 | 13.1 | 5.1M |
| DualPathRNN [8] | 14.7 | n.a. | 2.6M |
| VSUNOS [25] | 16.9 | n.a. | 7.5M |
| Wavesplit [26] | 17.3 | 17.6 | 29M |
| Wavesplit [26] + DM | 17.8 | 18.1 | 29M |
| SepFormer | 17.6 | 17.9 | 26M |
| SepFormer + DM | 19.5 | 19.7 | 26M |

To ascertain that the SepFormer performs better because of its architectural choices (and not just because it has more parameters or uses dynamic mixing), we compare it with DPTNet, DPRNN, and a SepFormer variant that uses a Conformer [7] block in the Intra Transformer. These three models are trained under the same conditions as the SepFormer (with dynamic mixing, a kernel size of 16, and a kernel stride of 8). Their test-set SI-SNRi results (shown in Table I) are 20.6 dB for DPTNet (26.2M parameters), 21.8 dB for DPRNN (27.5M parameters), and 21.9 dB for the SepFormer with a Conformer intra block (38.5M parameters; SepFormer-CF in Table I), all below the 22.3 dB of the SepFormer with dynamic mixing (25.7M parameters). We therefore observe that the better performance of the SepFormer is due to its architectural differences, and not just to having more parameters or being trained with dynamic mixing.

为了确认SepFormer的优越性能源于架构选择(而非仅因参数量更多或动态混音技术),我们将其与DPTNet、DPRNN以及采用Conformer [7] 模块替代Intra Transformer的SepFormer变体进行对比。这三个模型在相同条件下训练(均采用动态混音技术,卷积核尺寸为16,步长为8,与SepFormer一致)。测试集上的SI-SNRi结果如下(见表1):DPTNet(2620万参数)为20.6 dB,DPRNN(2750万参数)为21.8 dB,而采用Conformer模块的SepFormer(3850万参数,表1中标记为SepFormer-CF)达到