Multi-View Hyper complex Learning for Breast Cancer Screening

多视角超复数学习在乳腺癌筛查中的应用

Abstract—Traditionally, deep learning methods for breast cancer classification perform a single-view analysis. However, radiologists simultaneously analyze all four views that compose a mammography exam, owing to the correlations contained in mammography views, which present crucial information for identifying tumors. In light of this, some studies have started to propose multi-view methods. Nevertheless, in such existing architectures, mammogram views are processed as independent images by separate convolutional branches, thus losing correlations among them. To overcome such limitations, in this paper, we propose a methodological approach for multi-view breast cancer classification based on parameterized hyper complex neural networks. Thanks to hyper complex algebra properties, our networks are able to model, and thus leverage, existing correlations between the different views that comprise a mammogram, thus mimicking the reading process performed by clinicians. This happens because hyper complex networks capture both global properties, as standard neural models, as well as local relations, i.e., inter-view correlations, which real-valued networks fail at modeling. We define architectures designed to process two-view exams, namely PHResNets, and four-view exams, i.e., PHYSEnet and PHYBOnet. Through an extensive experimental evaluation conducted with publicly available datasets, we demonstrate that our proposed models clearly outperform real-valued counterparts and state-of-the-art methods, proving that breast cancer classification benefits from the proposed multi-view architectures. We also assess the method general iz ability beyond mammogram analysis by considering different benchmarks, as well as a finerscaled task such as segmentation. Full code and pretrained models for complete reproducibility of our experiments are freely available at https://github.com/ispamm/PHBreast.

摘要—传统上,用于乳腺癌分类的深度学习方法仅进行单视图分析。然而,由于乳腺X光检查视图间存在相关性,放射科医师会同时分析构成乳腺X光检查的所有四个视图,这些相关性为识别肿瘤提供了关键信息。鉴于此,一些研究开始提出多视图方法。然而,在此类现有架构中,乳腺X光视图被独立的卷积分支作为独立图像处理,从而丢失了它们之间的相关性。为克服这些限制,本文提出了一种基于参数化超复数神经网络的多视图乳腺癌分类方法。得益于超复数代数特性,我们的网络能够建模并利用构成乳腺X光的不同视图间的现有相关性,从而模拟临床医生的阅片过程。这是因为超复数网络既能像标准神经模型一样捕获全局属性,又能捕获实值网络无法建模的局部关系(即视图间相关性)。我们定义了处理双视图检查的架构PHResNets,以及处理四视图检查的架构PHYSEnet和PHYBOnet。通过在公开数据集上进行的广泛实验评估,我们证明所提模型明显优于实值对应模型和最先进方法,证实乳腺癌分类能从所提多视图架构中受益。我们还通过考虑不同基准测试及更精细的分割任务,评估了该方法在乳腺X光分析之外的泛化能力。实验完整复现所需的全部代码和预训练模型已开源:https://github.com/ispamm/PHBreast。

Index Terms—Multi-View Learning, Hyper complex Neural Networks, Hyper complex Algebra, Breast Cancer Screening

索引术语 - 多视图学习 (Multi-View Learning)、超复数神经网络 (Hypercomplex Neural Networks)、超复数代数 (Hypercomplex Algebra)、乳腺癌筛查 (Breast Cancer Screening)

I. INTRODUCTION

I. 引言

Among the different types of cancer that affect women worldwide, breast cancer alone accounts for almost one-third, making it by far the cancer with highest incidence among women [1]. For this reason, early detection of this disease is of extreme importance and, to this end, screening mammography is performed annually on all women above a certain age [2], [3]. During a mammography exam, two views of the breast are taken, thus capturing it from above, i.e., the cr a nio caudal (CC) view, and from the side, i.e., the medio lateral oblique (MLO) view. More in detail, the CC and MLO views of the same breast are known as ips i lateral views, while the same view of both breasts as bilateral views. Importantly, when reading a mammogram, radiologists examine the views by performing a double comparison, that is comparing ips i lateral views along with bilateral views, as each comparison provides valuable information. Such multi-view analysis has been found to be essential in order to make an accurate diagnosis of breast cancer [4], [5].

在全球影响女性的各类癌症中,仅乳腺癌就占近三分之一,成为目前女性发病率最高的癌症 [1]。因此,该疾病的早期检测至关重要。为此,所有达到特定年龄的女性每年都要接受乳腺X光筛查 [2][3]。

乳腺X光检查会拍摄乳房的两种视图:从上向下拍摄的头尾位 (CC) 视图,以及从侧面拍摄的内外侧斜位 (MLO) 视图。具体而言,同一乳房的CC和MLO视图称为同侧视图,而双侧乳房的相同视图则称为双侧视图。值得注意的是,放射科医生在解读乳腺X光片时,会通过双重对比进行分析——既比较同侧视图,又比较双侧视图,因为每种对比都能提供有价值的信息。研究发现,这种多视图分析对于准确诊断乳腺癌至关重要 [4][5]。

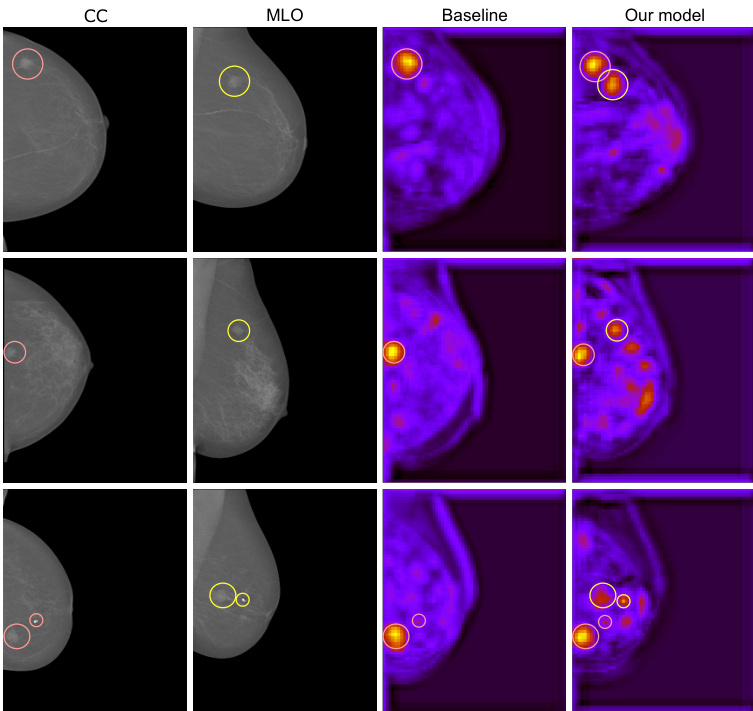

Fig. 1. Visualization of hyper complex multi-view learning. In the figure, there are the two views (CC and MLO) corresponding to one breast containing a malignant mass (highlighted in red and green in the respective views). Then, we show the activation maps of a baseline, i.e. the real-valued counterpart of our network (SEnet), and the proposed hyper complex architecture (PHYSEnet). Unlike the network in the real domain, ours learns from both views as it is visible that there are highlighted areas corresponding to both views.

图 1: 超复数多视图学习的可视化示意图。图中展示了包含恶性肿块(分别在CC和MLO视图中用红色和绿色高亮显示)的同一乳房对应的两个视图。随后,我们展示了基线模型(即我们网络中实值对应的SEnet)与提出的超复数架构(PHYSEnet)的激活图。与实域网络不同,我们的模型能从两个视图中学习,这体现在激活图中同时存在对应两个视图的高亮区域。

Recently, many works are employing deep learning (DL)- based methods in the medical field and, especially, for breast cancer classification and detection with encouraging results [6]–[17]. Inspired by the multi-view analysis performed by radiologists, several recent studies try to adopt a multi-view architecture in order to obtain a more robust and performing model [18]–[29].

近来,许多研究在医学领域采用基于深度学习 (DL) 的方法,特别是在乳腺癌分类和检测方面取得了令人鼓舞的成果 [6]–[17]。受放射科医师多视角分析的启发,近期多项研究尝试采用多视角架构以获得更鲁棒且性能更优的模型 [18]–[29]。

The general approach taken by these works consists in designing a neural network with different branches dedicated to processing the different views. However, there are several issues associated with this procedure. To begin with, a recent study [19] observes that this kind of model can favor one of the two views during learning, thus not truly taking advantage of the multi-view input. Additionally, the model might fail entirely in leveraging the correlated views and actually worsen the performance with respect to its single-view counterpart [19], [30]. Thus, it is made clear that improving the ability of deep networks to truly exploit the information contained in multiple views is still a largely open research question. To address and overcome these problems, we employ a novel technique in deep learning that relies on exotic algebraic systems, such as qua tern ions and, more in general, hyper complex ones.

这些研究采用的通用方法是设计一个具有不同分支的神经网络,专门用于处理不同视图。然而,这种方法存在若干问题。首先,最近的一项研究 [19] 指出,这类模型在学习过程中可能会偏向其中一个视图,从而无法真正利用多视图输入的优势。此外,模型可能完全无法利用相关视图,甚至导致性能比单视图版本更差 [19][30]。因此,如何提升深度网络真正利用多视图中信息的能力,仍然是一个亟待解决的研究问题。为解决并克服这些问题,我们采用了一种依赖非常规代数系统(如四元数及更广义的超复数)的深度学习新技术。

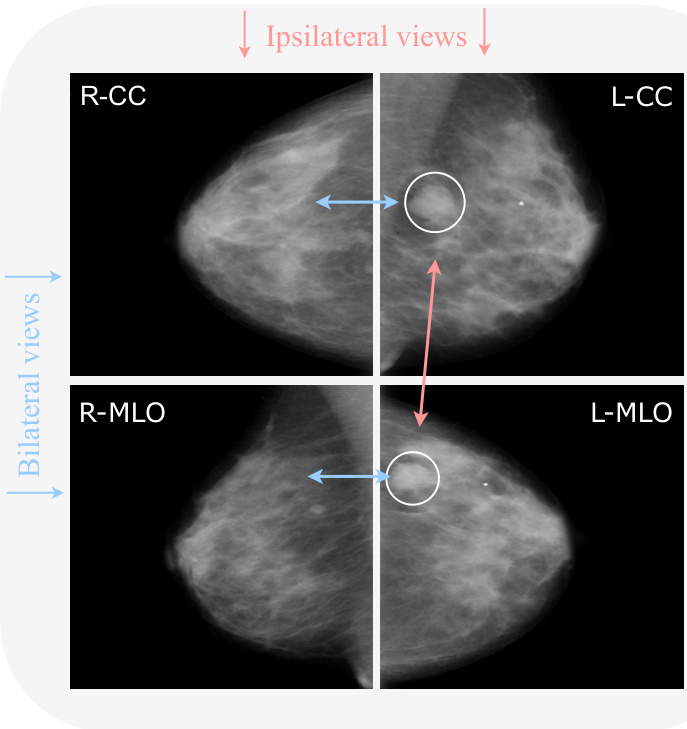

Fig. 2. Example of a mammography exam from the CBIS-DDSM dataset with the four views: left CC (L-CC), right CC (R-CC), left MLO (L-MLO), and right MLO (R-MLO). Horizontal couples are bilateral views (blue), while vertical couples are ips i lateral views (red). Arrows indicate the relations among views. Bilateral relations reveal an asymmetry (as there is a mass on the left that is not present on the right. Ips i lateral relations give a better view of the specific mass.

图 2: CBIS-DDSM数据集中乳腺X光检查的示例,包含四个视图:左头尾位 (L-CC)、右头尾位 (R-CC)、左斜侧位 (L-MLO) 和右斜侧位 (R-MLO)。水平成对的是双侧视图 (蓝色),垂直成对的是同侧视图 (红色)。箭头表示视图之间的关系。双侧关系显示出不对称性 (因为左侧有一个肿块而右侧没有)。同侧关系提供了该肿块的更清晰视图。

In recent years, quaternion neural networks (QNNs) have gained a lot of interest in a variety of applications [31]–[36]. The reason for this is the particular properties that characterize these models. As a matter of fact, thanks to the quaternion algebra rules on which these models are based (e.g., the Hamilton product), quaternion networks possess the capability of modeling interactions between input channels. Thus, they capture internal latent relations within them and additionally reduce the total number of parameters by $75%$ , while still attaining comparable performance to their real-valued counterparts. Furthermore, built upon the idea of QNNs, the recent parameterized hyper complex neural networks (PHNNs) generalize hyper complex multiplications as a sum of $n$ Kronecker products, where $n$ is a hyper parameter that controls in which domain the model operates [37], [38], going beyond quaternion algebra. Thus, they improve previous shortcomings by making these models applicable to any $n$ -dimensional input (instead of just 3D/4D as the quaternion domain) thanks to the introduction of the parameterized hyper complex multiplication (PHM) and convolutional (PHC) layer [37], [38].

近年来,四元数神经网络(QNNs)在各类应用中引起了广泛关注[31]–[36]。这源于该模型独特的性质:基于四元数代数规则(如Hamilton积)构建的网络能够建模输入通道间的交互关系,从而捕捉其内部潜在关联,并将参数量减少75%,同时保持与实数网络相当的性能。进一步地,基于QNNs思想提出的参数化超复数神经网络(PHNNs)将超复数乘法推广为n个Kronecker积之和,其中n作为超参数控制模型运算域[37][38],突破了四元数代数的限制。通过引入参数化超复数乘法(PHM)和卷积(PHC)层[37][38],这类模型可适用于任意n维输入(而非仅限于3D/4D的四元数域),从而改进了先前局限。

Motivated by the problems mentioned above and the benefits of hyper complex models, we propose a framework of multi-view learning for breast cancer classification based on PHNNs, taking a completely different approach with respect to the literature. More in detail, we propose a family of parameterized hyper complex ResNets (PHResNets) able to process ips i lateral views corresponding to one breast, i.e., two views. Additionally, we design two parameterized hypercomplex networks for four views, namely PHYBOnet and PHYSEnet. PHYBOnet involves a bottleneck with $n=4$ to process learned features in a joint fashion, performing a patient-level analysis. Instead, PHYSEnet is characterized by a shared encoder with $n=2$ to learn stronger representations of the ips i lateral views by sharing the weights between bilateral ones, focusing on a breast-level analysis.

受到上述问题和超复数模型优势的启发,我们提出了一种基于PHNNs的多视角乳腺癌分类框架,这与文献中的方法完全不同。具体而言,我们提出了一系列参数化超复数ResNet(PHResNet)模型,能够处理对应于单侧乳房(即两个视角)的同侧视图。此外,我们还设计了两种用于四视角的参数化超复数网络:PHYBOnet和PHYSEnet。PHYBOnet采用$n=4$的瓶颈结构以联合方式处理学习到的特征,实现患者级别的分析;而PHYSEnet则通过$n=2$的共享编码器,在双侧视图间共享权重来学习更强的同侧视图表征,侧重于乳房级别的分析。

The advantages of our approach are manifold. Firstly, instead of handling the m ammo graphic views independently, which results in losing significant correlations, our models process them as a unique component, without breaking the original nature of the exam. Secondly, thanks to hyper complex algebra properties, the proposed models are endowed with the capability of preserving existing latent relations between views by modeling and capturing their interactions, as shown in Fig. 1. In fact, hyper complex networks have been shown to effectively model not only global relations as standard deep learning networks but also local relations [32]. In the context of the mammography exam, these local relations are essential since they represent the complementary information contained in the multiple views, which are critical for a correct diagnosis and represent a fundamental step of the reading process performed by radiologists [4], [5]. In this sense, with the proposed approach in the hyper complex domain, we can say that our networks mimic the examination process of radiologists in real-life settings. Thirdly, our parameterized hyper complex networks are characterized by the number of free parameters halved, when $n=2$ , and reduced by $1/4$ , when $n=4$ , with respect to their real-valued counterparts. Finally, our proposed approach is both portable and flexible. It can be seamlessly applied to other medical exams involving multiple views or modalities, signifying its portability. Furthermore, any neural network can be easily defined within the hyper complex domain by simply integrating PHC layers, thus highlighting its flexibility. To the best of our knowledge, this is the first time that hyper complex models have been investigated for multi-view medical exams, particularly in the context of mammography.

我们方法的优势是多方面的。首先,与独立处理乳腺钼靶视图(这会导致重要相关性丢失)不同,我们的模型将其作为独特组件处理,同时保持检查的原始特性。其次,得益于超复数代数特性,所提模型能够通过建模和捕获视图间交互来保持潜在的现有关系,如图1所示。实际上,超复数网络不仅能像标准深度学习网络那样有效建模全局关系,还能建模局部关系[32]。在乳腺钼靶检查中,这些局部关系至关重要,因为它们代表了多视图包含的互补信息——这对正确诊断至关重要,也是放射科医师阅片过程的基本步骤[4][5]。就此而言,采用超复数域的所提方法,可以说我们的网络模拟了现实环境中放射科医师的检查过程。第三,当$n=2$时,我们的参数化超复数网络自由参数数量减半;当$n=4$时,参数数量减少$1/4$(相较于实数域对应模型)。最后,所提方法兼具可移植性和灵活性:它能无缝应用于其他涉及多视图或多模态的医学检查,体现其可移植性;此外,通过简单集成PHC层,任何神经网络都能轻松在超复数域中定义,彰显其灵活性。据我们所知,这是首次将超复数模型应用于多视图医学检查(尤其是乳腺钼靶)的研究。

We evaluate the effectiveness of our approach on two publicly available benchmark datasets of mammography images, namely CBIS-DDSM [39] and INbreast [2]. We conduct a meticulous experimental evaluation that demonstrates how our proposed models, owing to the aforementioned abilities, possess the means for properly leveraging information contained in multiple m ammo graphic views and thus exceed the performance of both real-valued baselines and state-of-the-art methods. Finally, we further validate the proposed method on two additional benchmark datasets, i.e., Chexpert [40] for chest $\mathrm{\DeltaX}$ -rays and BraTS19 [41], [42] for brain MRI, to prove the general iz ability of the proposed approach in different applications and medical exams, going beyond mammograms and classification.

我们在两个公开可用的乳腺X光影像基准数据集上评估了所提方法的有效性,分别是CBIS-DDSM [39]和INbreast [2]。通过细致的实验验证,我们证明了所提出的模型凭借前文所述能力,能够有效利用多视角乳腺X光影像中的信息,其性能不仅超越了实数基线方法,更优于当前最先进的技术。此外,我们还在另外两个基准数据集上进行了进一步验证:用于胸部X光的Chexpert [40]和用于脑部MRI的BraTS19 [41][42],以此证明该方法在乳腺X光分类之外的多种医学影像应用场景中具有普适性 (generalizability)。

The rest of the paper is organized as follows. Section II gives a detailed overview of the multi-view approach for breast cancer analysis, delving into why it is important to design a painstaking multi-view method. Section III provides theoretical aspects and concepts of hyper complex models, and Section IV presents the proposed method. The experiments are set up in Section V and evaluated in Section VI. A summary of the proposed models with specific data cases is provided in Section VIII, while conclusions are drawn in Section IX.

本文其余部分组织结构如下。第II节详细概述了乳腺癌分析的多视角方法,深入探讨为何需要精心设计多视角方法。第III节介绍超复数模型的理论基础和概念,第IV节阐述所提出的方法。实验设置见第V节,评估结果见第VI节。第VIII节通过具体数据案例对所提模型进行总结,第IX节给出结论。

II. MULTI-VIEW APPROACH IN BREAST CANCERANALYSIS

II. 乳腺癌分析中的多视角方法

There exist several types of medical exams for the detection process of breast cancer, such as mammography, ultrasound, biopsy, and so on. Among these, mammography is considered the best imaging method for breast cancer screening and the most effective for early detection [3]. A mammography exam comprises four X-ray images produced by the recording of two views for each breast: the CC view, which is a top to bottom view, and the MLO view, which is a side view. The diagnosis procedure adopted by radiologists consists in looking for specific abnormalities, the most common being masses, calcification s, architectural distortions of breast tissue, and a symmetries [2]. During the reading of a mammography exam, employing multiple views is crucial in order to make an accurate diagnosis, as they retain highly correlated characteristics, which, in reality, represent complementary information. Admittedly, comparing ips i lateral views (CC and MLO views of the same breast) helps to detect eventual tumors, as sometimes they are visible only in one of the two views, and additionally helps to analyze the 3D structure of masses. Whereas, studying bilateral views (same view of both breasts) helps to locate masses as a symmetries between them are an indicating factor [5]. An example of a complete mammogram exam with ips i lateral and bilateral views is shown in Fig. 2.

乳腺癌检测过程中存在多种医学检查方法,如乳腺X线摄影(mammography)、超声检查、活组织检查等。其中,乳腺X线摄影被视为乳腺癌筛查的最佳影像学方法,也是早期检测最有效的手段[3]。一次乳腺X线检查包含四张X光图像,通过每侧乳房两个视角拍摄获得:CC位(自上而下视角)和MLO位(侧视角)。放射科医师采用的诊断流程需寻找特定异常表现,最常见包括肿块、钙化灶、乳腺组织结构扭曲以及不对称征[2]。

解读乳腺X线影像时,采用多视角对比对精准诊断至关重要,因为这些视角间存在高度关联特征,实际构成互补信息。公认的是,同侧视图(同一乳房的CC位与MLO位)对比有助于发现潜在肿瘤(某些情况下肿瘤仅在单一视角可见),并能辅助分析肿块的三维结构;而双侧视图(双乳相同视角)对比则可通过不对称征象定位肿块,此类不对称具有指示意义[5]。图2展示了一个包含同侧与双侧视图的完整乳腺X线检查示例。

Given the multi-view nature of the exam and the multi-view approach employed by radiologists, many works are focusing on utilizing two or four views for the purpose of classifying breast cancer, with the goal of leveraging the information coming from ips i lateral and/or bilateral views. Indeed, studies have shown that a model employing multiple views can learn to generalize better compared to its single-view counterpart, increasing its disc rim i native power, while also reducing the number of false positives and false negatives [6], [9], [18].

考虑到乳腺检查的多视角特性以及放射科医师采用的多视角诊断方法,许多研究致力于利用双视角或四视图进行乳腺癌分类,旨在整合同侧和/或双侧乳腺影像的信息。研究表明,与单视角模型相比,多视角模型能够提升泛化能力、增强判别力,同时降低假阳性与假阴性率 [6] [9] [18]。

Several works show the advantages of leveraging multiple views by adopting simple approaches. For instance, one approach involves simply averaging the predictions generated by the same model when provided with two ips i lateral views [6], which can be classified as a late-fusion approach. However, late-fusion methods ignore local interactions between views [29]. In contrast to late-fusion, also early-fusion techniques have been employed. These techniques involve the concatenation of feature vectors into a single vector [43]. Nevertheless, the issue with early fusion is that it treats the resulting vector as a unimodal input, potentially failing to fully exploit the complementary nature of the views [44], [45]. Instead, more complex approaches consist in designing multi-view architectures that exploit correlations for learning and not just at inference time, like late-fusion methods. Recent techniques such as these propose architectures comprising multiple convolutional neural networks (CNNs) paths or columns, where each column processes a different view and their output is subsequently concatenated together and fed to a number of fully connected layers to obtain the final output [18]–[25]. A recent paper proposes an approach to utilize the complete mammography exam, thus comprised of four views [18]. Alternatively, a number of works [20]–[22], [26] adopt the same idea of having multiple columns but instead focus on using just two ips i lateral views.

多项研究表明,采用简单方法利用多视图具有优势。例如,一种方法仅对同一模型提供的双侧视图预测结果进行平均处理[6],可归类为后融合(late-fusion)方法。但后融合方法忽略了视图间的局部交互[29]。与后融合不同,早期融合技术也被采用,其通过将特征向量拼接为单一向量实现[43]。然而早期融合的问题在于将结果向量视为单模态输入,可能无法充分利用视图的互补特性[44][45]。更复杂的方法则是设计多视图架构,不仅在推理阶段、更在学习阶段利用视图相关性。这类最新技术提出包含多个卷积神经网络(CNN)路径或分支的架构,每个分支处理不同视图,输出结果经拼接后输入全连接层获得最终输出[18][25]。近期有论文提出利用完整乳腺X光检查(含四个视图)的方法[18]。另有多项研究[20][22][26]采用相同多分支思路,但仅聚焦于使用双侧视图。

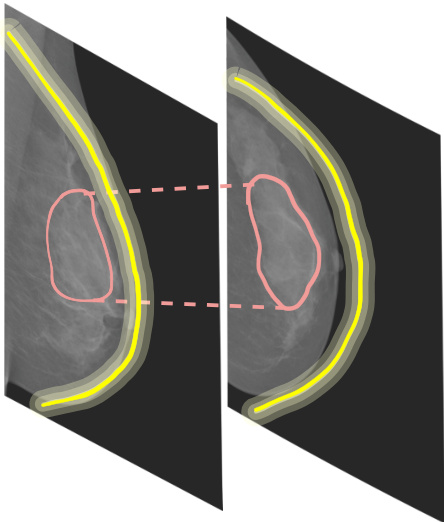

Fig. 3. Global and local relations. Global relations are intra-view features, e.g., the shape of the breast (depicted in shades of yellow). Local relations are inter-view features, e.g., textures (depicted in red).

图 3: 全局与局部关系。全局关系是视图内部特征 (如乳房的形状,用黄色阴影表示)。局部关系是视图间特征 (如纹理,用红色表示)。

Even though multimodal methods have been employed in a variety of recent deep learning works for breast cancer analysis, not much attention has been paid to how the DL model actually leverages information contained in the multiple views. Indeed, a recent study shows that such multimodal approaches might actually fail to exploit such information, leading to a counter-intuitive situation in which the single-view counterpart outperforms the multi-view one [19]. Therefore, just employing an architecture with multiple columns for each view is not enough to really leverage the knowledge coming from the correlated inputs [19]. Even in other applications of multimodal learning (e.g., involving speech or text), it is a common phenomenon that DL networks that are not properly modeled, fail to utilize the information contained in the different input modalities [30]. Although the context may differ, this underlying issue is a common concern in the multimodal and multi-view scenarios. Therefore, when processing multi-view exams, a painstaking and meticulous method has to be developed. To this end, we propose a method based on hyper complex algebra, whose properties are described in the following sections.

尽管多模态方法已广泛应用于近期乳腺癌分析的深度学习研究中,但人们很少关注深度学习模型如何真正利用多视角中包含的信息。事实上,最新研究表明,这类多模态方法可能实际上未能有效利用此类信息,导致单视角模型反超多视角模型的违反直觉现象 [19]。因此,仅采用为每个视角配备多分支的架构并不足以真正利用相关输入的知识 [19]。即便在其他多模态学习应用中(如涉及语音或文本的场景),建模不当的深度学习网络无法利用不同输入模态信息的现象也十分普遍 [30]。虽然具体情境不同,但这一根本问题在多模态和多视角场景中普遍存在。因此,在处理多视角检查时,必须开发细致周密的方法。为此,我们提出了一种基于超复数代数的方法,其特性将在后续章节详述。

III. QUATERNION AND HYPER COMPLEX NEURALNETWORKS

III. 四元数与超复数神经网络

Quaternion and hyper complex neural networks have their foundations in a hyper complex number system $\mathbb{H}$ equipped with its own algebra rules to regulate additions and multiplications. Hyper complex numbers generalize a plethora of algebraic systems, including complex numbers $\mathbb{C}$ , qua tern ions $\mathbb{Q}$ and octonions $\mathbb{O}$ , among others. A generic hyper complex number is defined as

四元数与超复数神经网络的基础在于超复数系统 $\mathbb{H}$,该系统拥有独立的代数规则来规范加法和乘法运算。超复数推广了多种代数体系,包括复数 $\mathbb{C}$、四元数 $\mathbb{Q}$ 和八元数 $\mathbb{O}$ 等。通用超复数的定义为

$$

h=h_{0}+h_{1}\hat{\imath}{1}+...+h_{i}\hat{\imath}{i}+...+h_{n-1}\hat{\imath}_{n-1},

$$

$$

h=h_{0}+h_{1}\hat{\imath}{1}+...+h_{i}\hat{\imath}{i}+...+h_{n-1}\hat{\imath}_{n-1},

$$

whereby $h_{0},\ldots,h_{n-1}$ are the real-valued coefficients and $\hat{\i}{i},\dots,\hat{\i}{n-1}$ the imaginary units. The first coefficient $h_{0}$ represents the real component, while the remaining ones compose the imaginary part. Therefore, the algebraic subsets of $\mathbb{H}$ are identified by the number of imaginary units and by the algebraic rules that govern the interactions among them. For instance, a complex number has just one imaginary unit, while a quaternion has three imaginary units. In the latter domain, the vector product is not commutative so the Hamilton product has been introduced to multiply two qua tern ions. Interestingly, a real number can be expressed through eq. (1) by setting $i=0$ and considering the real part only. Being hyper complex algebras part of the Cayley-Dickson algebras, they always have a dimension equal to a power of 2, therefore it is important to note that subset domains exist solely at predefined dimensions, i.e., $n=2,4,8,16,\dots$ , while no algebra rules have been discovered yet for other values.

其中 $h_{0},\ldots,h_{n-1}$ 为实值系数,$\hat{\i}{i},\dots,\hat{\i}{n-1}$ 为虚数单位。首项系数 $h_{0}$ 表示实部,其余系数构成虚部。因此,$\mathbb{H}$ 的代数子集可通过虚数单位数量及其相互作用规则来定义。例如,复数仅含一个虚数单位,而四元数包含三个虚数单位。在后者的数域中,由于向量乘法不满足交换律,汉密尔顿积被引入以实现四元数乘法。值得注意的是,实数可通过令 $i=0$ 并仅保留实部,由公式 (1) 表示。作为凯莱-迪克森代数中的超复数代数,其维度总是2的幂次方,因此需注意子数域仅存在于预设维度(即 $n=2,4,8,16,\dots$),目前尚未发现其他维度的代数规则。

The addition operation is performed through an elementwise addition of terms, i.e., $h+p=(h_{0}+p_{0})+(h_{1}+p_{1})+...+$ $(h_{i}+p_{i})\hat{\imath}{i}+...+(h_{n-1}+p_{n-1})\hat{\imath}{n-1}$ . Similarly, the product between a quaternion and a scalar value $\alpha\in\mathbb R$ can be formulated with $\alpha h=\alpha h_{0}+\alpha h_{1}+...+\alpha h_{i}\hat{\imath}{i}+...+\alpha h_{n-1}\hat{\imath}{n-1}$ by multiplying the scalar to real-valued components. Nevertheless, with the increasing of $n$ , Cayley-Dickson algebras lose some properties regarding the vector multiplication, and more specific formulas need to be introduced to model this operation because of imaginary units interplays [46]. Indeed, as an example, qua tern ions and octonions products are not commutative due to imaginary unit properties for which $\hat{\i}{1}\hat{\i}{2}\neq\hat{\i}{2}\hat{\i}{1}$ . Therefore, quaternion convolutional neural network (QCNN) layers are based on the Hamilton product, which organizes the filter weight matrix to be encapsulated into a quaternion as $\mathbf{W}=\mathbf{W}{0}+\mathbf{W}{1}\boldsymbol{\hat{\imath}}{1}+\mathbf{W}{2}\boldsymbol{\hat{\imath}}{2}+\mathbf{W}{3}\boldsymbol{\hat{\imath}}{3}$ and to perform convolution with the quaternion input ${\bf x}={\bf x}{0}+{\bf x}{1}\hat{\imath}{1}+{\bf x}{2}\hat{\imath}{2}+{\bf x}{3}\hat{\imath}_{3}$ as:

加法运算通过逐项元素相加实现,即 $h+p=(h_{0}+p_{0})+(h_{1}+p_{1})+...+$ $(h_{i}+p_{i})\hat{\imath}{i}+...+(h_{n-1}+p_{n-1})\hat{\imath}{n-1}$。类似地,四元数与标量值 $\alpha\in\mathbb R$ 的乘积可表示为 $\alpha h=\alpha h_{0}+\alpha h_{1}+...+\alpha h_{i}\hat{\imath}{i}+...+\alpha h_{n-1}\hat{\imath}{n-1}$,即对实值分量进行标量乘法。然而随着 $n$ 增大,Cayley-Dickson代数在向量乘法方面会损失部分性质,由于虚数单位的相互作用,需要引入更特定的公式来建模该运算 [46]。例如,四元数和八元数的乘法不具备交换性,因为虚数单位满足 $\hat{\i}{1}\hat{\i}{2}\neq\hat{\i}{2}\hat{\i}{1}$。因此,四元数卷积神经网络 (QCNN) 层基于汉密尔顿积实现,其将滤波器权重矩阵封装为四元数形式 $\mathbf{W}=\mathbf{W}{0}+\mathbf{W}{1}\boldsymbol{\hat{\imath}}{1}+\mathbf{W}{2}\boldsymbol{\hat{\imath}}{2}+\mathbf{W}{3}\boldsymbol{\hat{\imath}}{3}$,并与四元数输入 ${\bf x}={\bf x}{0}+{\bf x}{1}\hat{\imath}{1}+{\bf x}{2}\hat{\imath}{2}+{\bf x}{3}\hat{\imath}_{3}$ 进行如下卷积运算:

$$

\mathbf{W}\mathbf{x}=\left[\begin{array}{c c c c}{\mathbf{W}{0}}&{-\mathbf{W}{1}}&{-\mathbf{W}{2}}&{-\mathbf{W}{3}}\ {\mathbf{W}{1}}&{\mathbf{W}{0}}&{-\mathbf{W}{3}}&{\mathbf{W}{2}}\ {\mathbf{W}{2}}&{\mathbf{W}{3}}&{\mathbf{W}{0}}&{-\mathbf{W}{1}}\ {\mathbf{W}{3}}&{-\mathbf{W}{2}}&{\mathbf{W}{1}}&{\mathbf{W}{0}}\end{array}\right]*\left[\begin{array}{c c c c}{\mathbf{x}{0}}\ {\mathbf{x}{1}}\ {\mathbf{x}{2}}\ {\mathbf{x}_{3}}\end{array}\right].

$$

$$

\mathbf{W}\mathbf{x}=\left[\begin{array}{c c c c}{\mathbf{W}{0}}&{-\mathbf{W}{1}}&{-\mathbf{W}{2}}&{-\mathbf{W}{3}}\ {\mathbf{W}{1}}&{\mathbf{W}{0}}&{-\mathbf{W}{3}}&{\mathbf{W}{2}}\ {\mathbf{W}{2}}&{\mathbf{W}{3}}&{\mathbf{W}{0}}&{-\mathbf{W}{1}}\ {\mathbf{W}{3}}&{-\mathbf{W}{2}}&{\mathbf{W}{1}}&{\mathbf{W}{0}}\end{array}\right]*\left[\begin{array}{c c c c}{\mathbf{x}{0}}\ {\mathbf{x}{1}}\ {\mathbf{x}{2}}\ {\mathbf{x}_{3}}\end{array}\right].

$$

Processing multidimensional inputs with QCNNs has several advantages. Indeed, due to the reusing of filter sub matrices $\mathbf{W}_{i}$ , $\begin{array}{l l l}{i}&{=}&{0,\ldots,3}\end{array}$ in eq. (2), QCNNs are defined with $1/4$ free parameters with respect to real-valued counterparts with the same architecture structure. Moreover, sharing the filter sub matrices among input components allows QCNNs to capture internal relations in input dimensions and to preserve correlations among them [32], [47]. However, this approach is limited to 4D inputs, thus various knacks are usually employed to apply QCNNs to different 3D inputs, such as RGB color images. In these cases, a padding channel is concatenated to the three-channel image to build a 4D image adding, however, useless information. Similarly, when processing grayscale images with QCNNs, the single channel has to be replicated in order to be fed into the quaternionvalued network, without annexing any additional information for the model. Recently, novel approaches proposed to parameter ize hyper complex multiplications and convolutions to maintain QCNNs and hyper complex algebras advantages, while extending their applicability to any $n\mathrm{D}$ input [37], [38]. The core idea of these methods is to develop the filter matrix $\mathbf{W}$ as a parameterized sum of Kronecker products:

使用QCNN处理多维输入具有多重优势。由于在公式(2)中重复使用滤波器子矩阵 $\mathbf{W}_{i}$ ( $i=0,\ldots,3$ ),QCNN的自由参数数量仅为同架构实值网络的1/4。此外,通过在输入分量间共享滤波器子矩阵,QCNN能够捕捉输入维度间的内在关联并保持其相关性[32][47]。但该方法仅适用于4D输入,因此通常需要特殊技巧将QCNN应用于RGB彩色图像等3D输入——此时需通过填充通道构建4D图像,但会引入冗余信息。类似地,处理灰度图像时需复制单通道以适配四元数网络,同样未增添有效信息。最新研究提出参数化超复数乘法和卷积运算的方法[37][38],在保留QCNN和超复数代数优势的同时,将其适用范围扩展至任意$n\mathrm{D}$输入。这些方法的核心思想是将滤波器矩阵$\mathbf{W}$构建为克罗内克积的参数化组合:

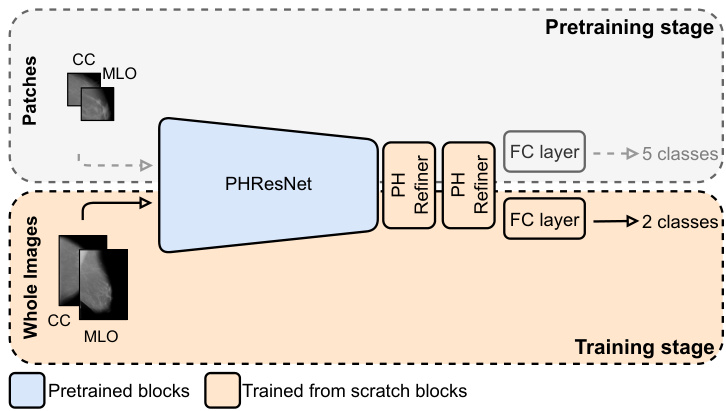

Fig. 4. Training pipeline and PHResNet overview. We employ a pertaining stage to overcome issues presented in Section efsubsec:training. During this stage, the PHResNet is trained on patches extracted from the original mammogram. They are classified into five classes: background or normal, benign and malignant calcification s and masses, respectively. At downstream time, i.e., when training on whole images, two PH convolutional refiner blocks are added and trained from scratch together with the final classification layer.

图 4: 训练流程与PHResNet架构概览。我们采用预训练阶段来解决第efsubsec:training节所述问题。在此阶段,PHResNet通过从原始乳腺X光片中提取的 patches 进行训练,这些 patches 被分为五类:背景/正常组织、良性钙化、恶性钙化、良性肿块和恶性肿块。在下游阶段(即整图训练时),会新增两个PH卷积精炼块 (PH convolutional refiner blocks) 并与最终分类层一起从头开始训练。

$$

\mathbf{W}=\sum_{i=0}^{n}\mathbf{A}{i}\otimes\mathbf{F}_{i},

$$

$$

\mathbf{W}=\sum_{i=0}^{n}\mathbf{A}{i}\otimes\mathbf{F}_{i},

$$

whereby $n$ is a tunable or user-defined hyper parameter that determines the domain in which the model operates (i.e., $n=4$ for the quaternion domain, $n=8$ for octonions, and so on). The matrices $\mathbf{A}{i}$ encode the algebra rules, that is the filter organization for convolutional layers, while the matrices $\mathbf{F}_{i}$ enclose the weight filters. Both these elements are completely learned from data during training, thus grasping algebra rules or adapting them, if no algebra exists for the specific value of $n$ , directly from inputs. The PHC layer is malleable to operate in any $n\mathrm{D}$ domain by easily setting the hyper parameter $n$ , thus extending QCNNs advantages to every multidimensional input. Indeed, PHNNs can process color images in their natural domain $(n:=:3)$ ) without adding any uninformative channel (as previously done for QCNNs), while still exploiting latent relations between channels. Moreover, due to the data-driven fashion in which this approach operates, PHNNs with $n=4$ outperform QCNNs both in terms of prediction accuracy and training as well as inference time [37], [38]. Furthermore, PHC layers employ $1/n$ free parameters with respect to real-valued counterparts, so the user can govern both the domain and the parameters reduction by simply setting the value of $n$ .

其中 $n$ 是一个可调或用户定义的超参数,用于确定模型运行的域(即四元数域对应 $n=4$,八元数对应 $n=8$,以此类推)。矩阵 $\mathbf{A}{i}$ 编码代数规则,即卷积层的滤波器组织结构,而矩阵 $\mathbf{F}_{i}$ 则包含权重滤波器。这两类元素均在训练过程中完全从数据中学习,从而掌握代数规则或直接根据输入调整规则(若特定 $n$ 值不存在对应代数)。通过简单设置超参数 $n$,PHC 层可灵活适应任意 $n\mathrm{D}$ 域,从而将 QCNNs 的优势扩展到所有多维输入。实际上,PHNNs 能在自然域 $(n=3)$ 处理彩色图像,无需添加任何无信息通道(如 QCNNs 先前所做),同时仍能利用通道间的潜在关联。此外,由于该方法采用数据驱动方式,$n=4$ 的 PHNNs 在预测精度、训练及推理时间方面均优于 QCNNs [37][38]。值得注意的是,PHC 层使用的自由参数数量仅为实数对应版本的 $1/n$,因此用户仅需设定 $n$ 值即可同时控制运算域和参数量缩减。

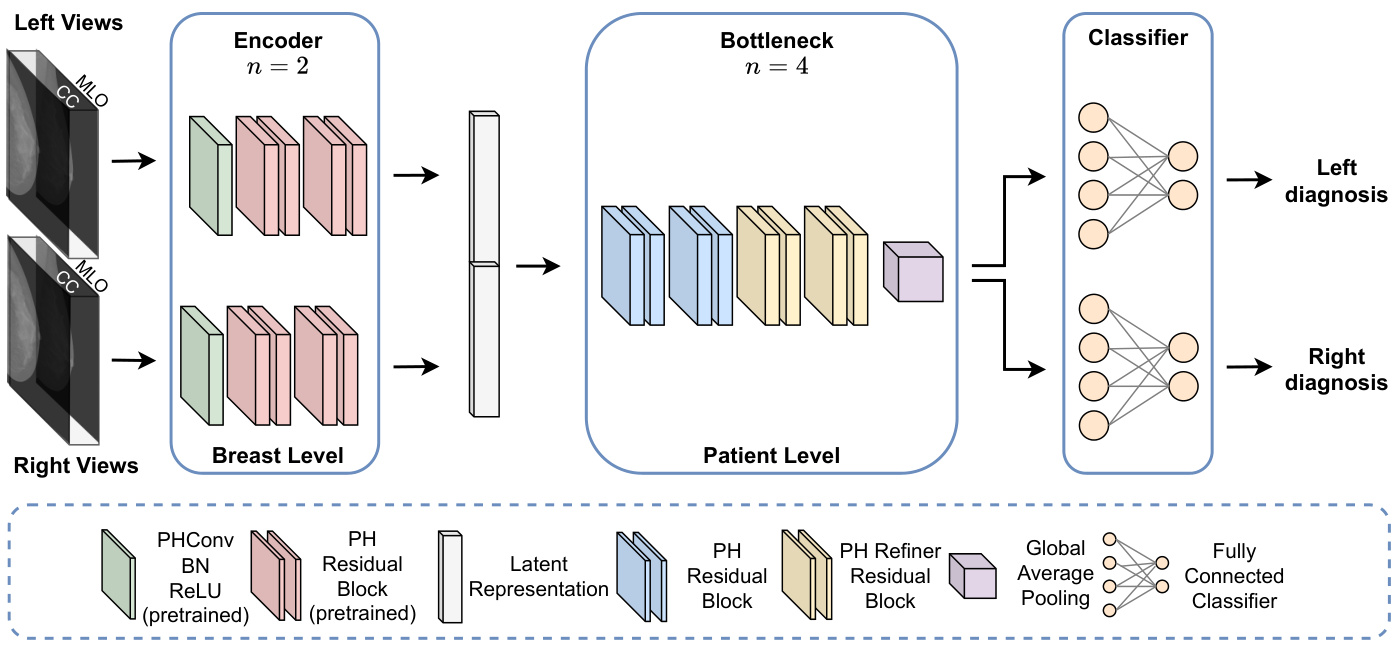

Fig. 5. PHYBOnet architecture. The model first performs a breast-level analysis by taking as input two pairs of ips i lateral views that are handled by two pretrained PH encoder branches with $n=2$ . The learned latent representations are then processed in a joint fashion by four PH residual blocks with $n=4$ Finally, the outputs from the two branches are fed to a separate final fully connected layer after a global average pooling operation.

图 5: PHYBOnet架构。该模型首先通过输入两对同侧视图进行乳腺级别分析,这些视图由两个预训练的PH编码器分支处理($n=2$)。学习到的潜在表征随后通过四个PH残差块($n=4$)以联合方式处理。最后,两个分支的输出在经过全局平均池化操作后,分别馈入独立的最终全连接层。

The reason behind the success of hyper complex models is their ability to model not only global relations as standard neural networks but also local relations. Global dependencies are related to spatial relations among pixels of the same level (i.e., for an RGB image, the R, G, and B channels are different levels). Instead, local dependencies implicitly link different levels. In the latter case, implicit local relations for an RGB image are the natural link that exists within the three-channel components defining a pixel. This kind of correlation among the different levels of the input is usually not taken into account by conventional neural networks that operate in the real domain, as they consider the pixels at different levels as sets of de correlated points [32]. Considering the multiple dimensions/levels of a sample as unrelated components may break the inner nature of the data, losing information about the inner structure of the sample. Instead, PHNNs can explore both global and local relations, not losing crucial information. Indeed, global relations are modeled as in conventional models, e.g., with convolutional operations. Rather, thanks to the formulation of convolution defined in Eq. 2, the filter sub matrices are shared among input dimensions, i.e., the different levels, thus hyper complex networks are endowed with the ability to model also local relations. In the context of mammography, the different views can be regarded as the color channels of an RGB image. Thus, global relations are intraview features, such as the shape of the breast. In contrast, local relations are inter-view features, thus encompassing the interdependencies found across the various views. A depiction of such relations in the context of a mammography exam can be seen in Fig. 3. Indeed, local relations are an inherent part of multi-view data. Nevertheless, standard neural networks have been shown to ignore such relations [32]. Therefore, hyper complex algebra may be a powerful tool to leverage mammogram views as it is able to describe multidimensional data preserving its inner structure [38].

超复数模型成功的原因在于它们不仅能像标准神经网络那样建模全局关系,还能捕捉局部关联。全局依赖性与同层级像素间的空间关系相关(例如RGB图像中R、G、B通道属于不同层级),而局部依赖性则隐式连接不同层级。以RGB图像为例,其隐含的局部关系就是构成像素的三个通道组件之间天然存在的联系。传统实数域神经网络通常忽略这种输入数据不同层级间的相关性,它们将不同层级的像素视为互不关联的点集[32]。若将样本的多维度/层级视为无关组件,可能会破坏数据的内在特性,丢失样本内部结构信息。相比之下,PHNN(超复数神经网络)能同时探索全局与局部关系,避免关键信息丢失。其中全局关系通过常规操作(如卷积运算)建模,而借助公式(2)定义的卷积形式,滤波器子矩阵在输入维度(即不同层级)间共享,因此超复数网络也具备建模局部关系的能力。

在乳腺X光检查场景中,不同视角可类比RGB图像的色彩通道:全局关系对应视角内特征(如乳房形状),局部关系则对应跨视角特征,涵盖不同视图间的相互依赖关系。图3展示了乳腺检查中这类关系的示意图。实际上,局部关系是多视图数据与生俱来的特性,但标准神经网络已被证明会忽略这种关联[32]。因此,超复数代数可能是利用乳腺X光视图的强大工具,因其能描述多维数据同时保留内在结构[38]。

IV. PROPOSED METHOD

IV. 提出的方法

In the following section, we expound the proposed approach and we delineate the structure of our models and the training recipes we adopt. More in detail, we design ad hoc networks for two-view and four-view exams, i.e., a complete mammography exam.

在以下部分,我们将阐述所提出的方法,并详细说明模型结构及采用的训练方案。具体而言,我们针对双视图检查和四视图检查(即完整的乳腺X光检查)设计了专用网络。

A. Multi-view PHResNet

A. 多视角 PHResNet

The core idea of our method is to take advantage of the information contained in multiple views through PHC layers in order to obtain a more performant and robust classifier for breast cancer.

我们方法的核心思想是通过PHC层利用多视图中的信息,以获得性能更强、鲁棒性更好的乳腺癌分类器。

ResNets are among the most widespread models for medical image classification [6], [9], [15], [18], [19], [48], [49]. They are characterized by residual connections that ensure proper gradient propagation during training. A ResNet block is typically defined by:

ResNets 是医学图像分类领域应用最广泛的模型之一 [6], [9], [15], [18], [19], [48], [49]。其核心特征是通过残差连接确保训练过程中的梯度正常传播。典型的 ResNet 块定义为:

$$

\begin{array}{r}{\mathbf{y}=\mathcal{F}(\mathbf{x})+\mathbf{x},}\end{array}

$$

$$

\begin{array}{r}{\mathbf{y}=\mathcal{F}(\mathbf{x})+\mathbf{x},}\end{array}

$$

where $\mathcal F(\ensuremath{\mathbf{x}})$ is usually composed by interleaving convolutional layers, batch normalization (BN) and ReLU activation functions. When equipped with PHC layers, real-valued convolu- tions are replaced with PHC to build PHResNets, therefore $\mathcal{F}(\mathbf{x})$ becomes:

其中 $\mathcal F(\ensuremath{\mathbf{x}})$ 通常由交替的卷积层、批归一化 (BN) 和 ReLU 激活函数组成。当配备 PHC 层时,实值卷积被替换为 PHC 以构建 PHResNets,因此 $\mathcal{F}(\mathbf{x})$ 变为:

$$

\mathcal{F}(\mathbf{x})=\mathrm{BN}\left(\mathrm{PHC}\left(\mathrm{ReLU}\left(\mathrm{BN}\left(\mathrm{PHC}\left(\mathbf{x}\right)\right)\right)\right)\right),

$$

$$

\mathcal{F}(\mathbf{x})=\mathrm{BN}\left(\mathrm{PHC}\left(\mathrm{ReLU}\left(\mathrm{BN}\left(\mathrm{PHC}\left(\mathbf{x}\right)\right)\right)\right)\right),

$$

in which $\mathbf{x}$ can be any multidimensional input with correlated components. It is worth noting that PH models generalize realvalued counterparts and also mono dimensional inputs. As a

其中 $\mathbf{x}$ 可以是具有相关分量的任意多维输入。值得注意的是,PH模型不仅推广了实值对应模型,还适用于单维输入。

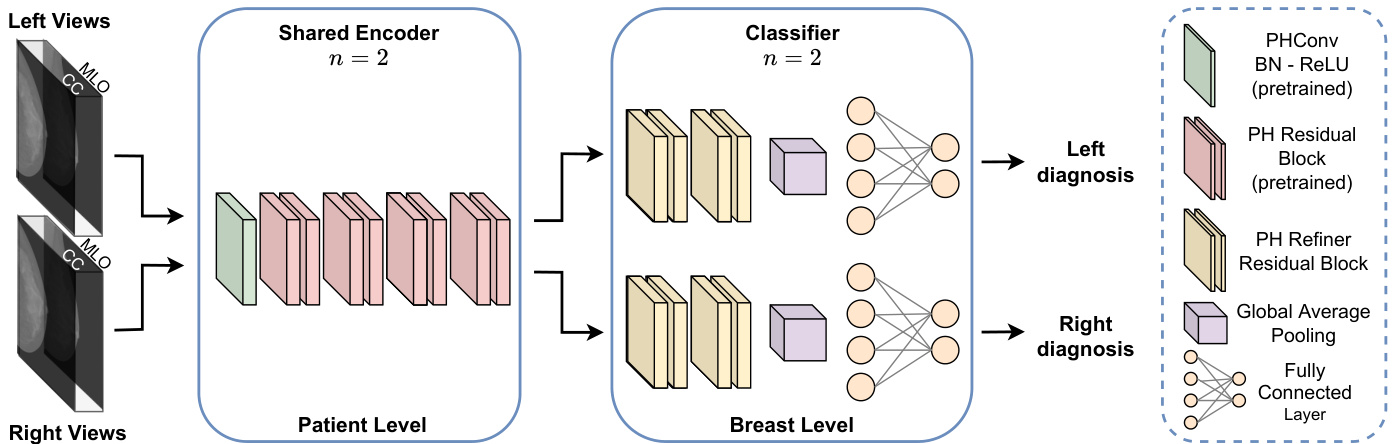

Fig. 6. PHYSEnet architecture. The model comprises an initial deep patient-level framework that takes as input two pairs of ips i lateral views which are processed by a pretrained shared PHResNet18 with $n=2$ that serves as the encoder. Ultimately, the two learned latent representations are fed to the respective classification branch composed of PH refiner residual blocks with global average pooling and the final fully connected layer to perform the breast-level learning.

图 6: PHYSEnet架构。该模型包含一个初始的深度患者级框架,输入为两对同侧视图,由预训练的共享PHResNet18 (n=2) 作为编码器进行处理。最终,两个学习到的潜在表征被送入各自的分类分支,该分支由带有全局平均池化的PH细化残差块和最终的全连接层组成,以执行乳腺级别的学习。

matter of fact, a PH model with $n=1$ is equivalent to a real-valued model receiving 1D inputs.

事实上,当 $n=1$ 时,PH 模型等价于接收一维输入的实值模型。

B. PH architectures for two views

B. 双视图的PH架构

The proposed multi-view architecture in the case of two views is straightforward. We employ PHResNets and we fix the hyper parameter $n=2$ . The model is depicted in Fig. 4 together with the training strategy we deploy. The two views of the same breast, i.e., ips i lateral views, are fed to the network as a multidimensional input (channel-wise) enabling PHC layers to exploit their correlations. We adopt ips i lateral views because, as mentioned in Section VI-B, they help in both the detection and classification process, unlike bilateral views which aid only in the former.

所提出的双视图多视角架构较为简单。我们采用PHResNets并固定超参数$n=2$。该模型及训练策略如图4所示。同一乳房的两个视角(即同侧视图)以多维输入(通道维度)形式馈入网络,使PHC层能够利用其相关性。我们选用同侧视图是因为,如第VI-B节所述,它们同时有助于检测和分类过程,而异侧视图仅对前者有帮助。

Ultimately, the model produces a binary prediction indicating the presence of either a malignant or benign/normal finding (depending on the dataset). In such a manner, the model is able to process the two mammograms as a unique entity by preserving the original multidimensional structure as elucidated in Section III [32]. As a consequence, it is endowed with the capacity to exploit both global and local relations, i.e., the interactions between the highly correlated views, mimicking the diagnostic process of radiologists.

最终,该模型会生成一个二元预测,用于指示是否存在恶性或良性/正常发现(具体取决于数据集)。通过这种方式,模型能够将两张乳腺X光片作为一个独特实体进行处理,同时保留原始的多维结构,如第三节 [32] 所述。因此,该模型具备利用全局和局部关系的能力,即高度相关视图之间的相互作用,从而模拟放射科医师的诊断过程。

C. PH architectures for four views

C. 四视图的PH架构

- PHYBOnet: The first model we propose is shown in Fig. 5, namely Parameterized Hyper complex Bottleneck network (PHYBOnet). PHYBOnet is based on an initial breastlevel focus and a consequent patient-level one, through the following components: two encoder branches for each breast side with $n~=~2$ , a bottleneck with the hyper parameter $n=4$ and two final classifier layers that produce the binary prediction relative to the corresponding side. Each encoder takes as input two views (CC and MLO) and has the objective of learning a latent representation of the ips i lateral views. The learned latent representations are then merged together and processed by the bottleneck which has $n$ set to 4 in order for PHC layers to grasp correlations among them. Indeed, in this way we additionally obtain a light network since the number of parameters is reduced by $1/4$ .

- PHYBOnet:我们提出的第一个模型如图5所示,即参数化超复数瓶颈网络 (PHYBOnet)。该模型基于初始乳房层级关注和后续患者层级关注,通过以下组件实现:两侧乳房各设两个编码器分支($n~=~2$),一个超参数为$n=4$的瓶颈层,以及两个最终分类层用于生成对应侧的二元预测。每个编码器以两个视图(CC和MLO)作为输入,旨在学习同侧视图的潜在表征。习得的潜在表征经合并后由瓶颈层处理(此处$n$设为4),使PHC层能捕捉其间的相关性。实际上,这种方式还实现了网络轻量化,因为参数量减少了$1/4$。

- PHYSEnet: The second architecture we present, namely Parameterized Hyper complex Shared Encoder network (PHYSEnet), is depicted in Fig. 6. It has a broader focus on the patient-level analysis through an entire PHResNet18 with $n=$ 2 as the encoder model, which takes as input two ips i lateral views. The weights of the encoder are shared between left and right inputs to jointly analyze the whole information of the patient. Then, two final classification branches, consisting of residual blocks and a final fully connected layer, perform a breast-level analysis to output the final prediction for each breast. This design allows the model to leverage information from both ips i lateral and bilateral views. Indeed, thanks to PHC layers, ips i lateral information is leveraged as in the case of two views, while by sharing the encoder between the two sides, the model is also able to take advantage of bilateral information.

- PHYSEnet:我们提出的第二种架构,即参数化超复数共享编码器网络 (PHYSEnet),如图 6 所示。它通过一个完整的 PHResNet18(其中 $n=$ 2 作为编码器模型)更广泛地关注患者层面的分析,该模型以两个同侧视图作为输入。编码器的权重在左右输入之间共享,以共同分析患者的全部信息。然后,两个最终分类分支(由残差块和一个最终全连接层组成)执行乳房层面的分析,输出每个乳房的最终预测。这种设计使模型能够利用同侧和双侧视图的信息。事实上,得益于 PHC 层,同侧信息可以像两个视图的情况一样被利用,而通过在两侧共享编码器,模型还能够利用双侧信息。

D. Training procedure

D. 训练流程

Training a classifier from scratch for this kind of task is very difficult for a number of reasons. To begin, neural models require huge volumes of data for training, but there are only a handful of publicly available datasets for breast cancer, which also present a limited number of examples. Additionally, a lesion occupies only a tremendously small portion of the original image, thus making it arduous to be detected by a model [6].

针对此类任务从头训练分类器存在诸多困难。首先,神经网络模型需要海量训练数据,但公开可用的乳腺癌数据集寥寥无几,且样本数量有限。此外,病灶在原图像中占比极小,导致模型检测难度极大 [6]。

To overcome these challenges, we deploy an ad hoc pretraining strategy, illustrated in Fig. 4 divided into two main steps. First, we pretrain the model on patches of mammograms and, second, we involve the pretrained weights to initialize the network for training on whole images. Specifically, in the first step patches are extracted from the original mammograms by taking 20 patches for each lesion present in the dataset: 10 of background or normal tissue and 10 around the region of interest (ROI) in question. Aiming to utilize this classifier for the training of whole mammograms with two views, we also require two views at the patch level. The definition of two views for patches is straightforward. For all lesions that are visible in both views of the breast, patches around that lesion are taken for both views. Thus, the patch classifier takes as input two-view $224\times224$ patches of the original mammogram, concatenated along the channel dimension and classifies them into one of the following five classes: ext it background or normal, benign calcification, malignant calcification, benign mass, and malignant mass [6]. At downstream time, i.e., when training on whole images, the network is initialized with the pretrained weights to classify exams as either malignant or benign. Indeed, pre training on patches is a way to exploit the fine-grained detail characteristic of mammograms which is crucial for discriminating between malignant and benign findings, that would otherwise be lost due to the resizing of images [6], [18]. Such training strategy plays a determining role in boosting the performance of the models as we demonstrate in the experimental Section VI.

为克服这些挑战,我们采用了一种专门的预训练策略,如图4所示,该策略分为两个主要步骤。首先,我们在乳腺X光片的小块上进行模型预训练;其次,利用预训练权重初始化网络,以在整个图像上进行训练。具体而言,第一步从原始乳腺X光片中提取小块,数据集中每个病灶对应提取20个小块:10个来自背景或正常组织,10个来自相关感兴趣区域(ROI)周边。为了使该分类器能用于训练包含两个视角的完整乳腺X光片,我们在小块层面也要求两个视角。定义小块的双视角很简单:对于乳房两个视角中都可见的所有病灶,分别从两个视角提取该病灶周边的小块。因此,小块分类器的输入是原始乳腺X光片双视角的$224\times224$小块(沿通道维度拼接),并将其分类为以下五类之一:背景或正常组织、良性钙化、恶性钙化、良性肿块和恶性肿块[6]。在下游阶段(即在整个图像训练时),网络使用预训练权重初始化,用于将检查分类为恶性或良性。实际上,小块预训练是利用乳腺X光片细粒度细节特征的方法,这对区分恶性和良性结果至关重要,否则这些细节会因图像尺寸调整而丢失[6][18]。如实验章节VI所示,这种训练策略对提升模型性能具有决定性作用。

V. EXPERIMENTAL SETUP

五、实验设置

In this section, we describe the experimental setup of our work that comprises the datasets we consider, the metrics employed for evaluation, model architectures details and training hyper parameters.

在本节中,我们将介绍实验设置,包括使用的数据集、评估指标、模型架构细节以及训练超参数。

A. Data

A. 数据

We validate the proposed method with two publicly available datasets of mammography images, whose sample summary is presented in Tab. I. We additionally demonstrate the general iz ability and flexibility of the proposed method on two additional tasks and datasets described herein.

我们使用两个公开可用的乳腺X光影像数据集验证了所提出的方法,其样本摘要如表1所示。此外,我们还通过本文描述的两个额外任务和数据集,展示了所提方法的泛化能力和灵活性。

- CBIS-DDSM: The Curated Breast Imaging Subset of DDSM (CBIS-DDSM) [39] is an updated and standardized version of the Digital Database for Screening Mammography (DDSM). It contains 2478 scanned film mammography images in the standard DICOM format and provides both pixellevel and whole-image labels from biopsy-proven pathology results. Furthermore, for each lesion, the type of abnormality is reported: calcification (753 cases) or mass (891 cases). Importantly, the dataset does not contain healthy cases but only positive ones (i.e., benign or malignant), where for the majority of them a biopsy was requested by the radiologist in order to make a final diagnosis, meaning that the dataset is mainly comprised of the most difficult cases. Additionally, the dataset provides the data divided into splits containing only masses and only calcification s, respectively, in turn split into official training and test sets, characterized by the same level of difficulty. CBIS-DDSM is employed for the training of the patch classifier as well as whole-image classifier in the twoview scenario. It is not used for four-view experiments as the official training/test splits do not contain enough full-exam cases and creating different splits would result in data leakage between patches and whole images. Finally, the images are resized to $600\times500$ and are augmented with a random rotation between $-25$ and $+25$ degrees and a random horizontal and vertical flip [6], [8].

- CBIS-DDSM:DDSM精选乳腺影像子集 (CBIS-DDSM) [39] 是数字化乳腺筛查数据库 (DDSM) 的标准化升级版本。该数据集包含2478张标准DICOM格式的扫描胶片乳腺X光影像,并提供基于活检病理结果的像素级和全图标注。每处病灶均标注异常类型:钙化灶 (753例) 或肿块 (891例)。值得注意的是,该数据集仅包含阳性病例 (即良性或恶性),不含健康病例,其中多数病例需放射科医师通过活检确诊,表明数据集主要由疑难病例构成。此外,数据集按病灶类型划分为仅含肿块和仅含钙化灶的子集,每个子集又分为官方训练集和测试集,且难度水平保持一致。CBIS-DDSM用于双视角场景下的局部分类器和全图分类器训练,未用于四视角实验,因其官方训练/测试划分未包含足够完整检查病例,且重新划分会导致局部图像与全图数据泄露。最终图像统一调整为$600×500$分辨率,并采用随机旋转 ($-25$至$+25$度) 及随机水平/垂直翻转进行数据增强 [6][8]。

- INbreast: The second dataset employed in this study, INbreast [2], is a database of full-field digital mammography (FFDM) images. It contains 115 mammography exams for a total of 410 images, involving several types of lesions, among which are masses and calcification s. The data splits are not provided, thus they are manually created by splitting the dataset patient-wise in a stratified fashion, using $20%$ of the data for testing. Finally, INbreast does not provide pathological confirmation of malignancy but BI-RADS labels. Therefore, in the aim of obtaining binary labels, we consider BI-RADS categories 4, 5 and 6 as positive and 1, 2 as negative, whilst ruling out category 3 following the approach of [6]. INbreast is utilized for experiments in both the two-view and fourview scenarios and the same preprocessing as CBIS-DDSM is applied. Finally, as can be seen in Tab. I, the dataset is highly imbalanced. To tackle this issue, in addition to using the same data augmentation techniques employed for CBIS-DDSM, we deploy a weighted loss during training, as elaborated in Section V-C.

- INbreast: 本研究采用的第二个数据集INbreast [2]是一个全视野数字乳腺摄影(FFDM)图像数据库。它包含115例乳腺检查,共计410张图像,涉及多种类型的病变,包括肿块和钙化。该数据集未提供预设划分,因此我们采用分层方式按患者手动划分数据集,其中20%数据用于测试。需要注意的是,INbreast未提供恶性肿瘤的病理学确认,而是采用BI-RADS标注。为获得二分类标签,我们参照[6]的方法,将BI-RADS 4、5、6类视为阳性,1、2类视为阴性,同时排除3类病例。该数据集被用于双视图和四视图场景的实验,并采用与CBIS-DDSM相同的预处理流程。如表1所示,该数据集存在严重不平衡问题。为此,除了采用与CBIS-DDSM相同的数据增强技术外,我们还在训练阶段使用了加权损失函数(详见第V-C节)。

TABLE I DATA DISTRIBUTION FOR SPLITS OF CBIS-DDSM AND FOR INBREAST WHEN CONSIDERING TWO AND FOUR VIEWS. IN THE WHOLE TABLE, TWO VIEWS OF THE SAME BREAST ARE COUNTED AS ONE INSTANCE.

表 1: CBIS-DDSM 和 INbreast 数据集在考虑双视图和四视图时的数据分布。全表中,同一乳房的两个视图计为一个实例。

| CBIS-DDSM | |||||

|---|---|---|---|---|---|

| Mass split | Mass-calc split | ||||

| Malignant | Benign | Malignant | Benign/Normal | ||

| Train | 254 | 255 | 480 | 582 | |

| Test | 59 | 83 | 104 | 149 | |

| INbreast | |||||

| Two views | Four views | ||||

| Malignant | Benign | Malignant | Benign/Normal | ||

| Train | 34 | 89 | 85 | ||

| Test | 14 | 39 | 8 | 22 |

- CheXpert: The CheXpert [40] dataset contains 224,316 chest X-rays with both frontal and lateral views and provides labels corresponding to 14 common chest radio graphic observations. Images are resized to $320\times320$ [40] for training and the same augmentation operations as before are applied.

- CheXpert: CheXpert [40] 数据集包含224,316张包含正位和侧位视图的胸部X光片,并提供14种常见胸部放射学观察结果的标签。训练时将图像尺寸调整为$320\times320$ [40],并应用与之前相同的增强操作。

- BraTS19: BraTS19 [41], [42], [50] is a dataset of multimodal brain MRI scans, providing four modalities for each exam, segmentation maps, demographic information (such as age) and overall survival (OS) data as the number of days, for 210 patients. For training, volumes are resized to $128\times128\times128$ and augmented via random scaling between 1 and 1.1 [51] for the task of overall survival prediction. Rather, for segmentation, 2D axial slices are extracted from the volumes and the original shape of $240\times240$ is maintained.

- BraTS19: BraTS19 [41], [42], [50] 是一个多模态脑部MRI扫描数据集,为210名患者提供每次检查的四种模态、分割图、人口统计信息(如年龄)以及以天数为单位的总生存期 (OS) 数据。在训练中,为总生存期预测任务,体积被调整为 $128\times128\times128$ 并通过1到1.1之间的随机缩放进行增强 [51]。而对于分割任务,则从体积中提取2D轴向切片,并保持原始 $240\times240$ 的形状。

B. Evaluation metrics

B. 评估指标

We adopt AUC (Area Under the ROC Curve) as the main performance metric to evaluate our models, as it is one of the most common metrics employed in medical imaging tasks [6], [18]–[20]. The ROC curve summarizes the trade-off between True Positive Rate (TPR) and False Positive Rate (FPR) for a predictive model using different probability thresholds. To further assess network performance, we additionally evaluate our models in terms of classification accuracy. Finally, we employ the Dice score for the segmentation task, which measures the pixel-wise agreement between a predicted mask and its corresponding ground truth.

我们采用AUC (Area Under the ROC Curve)作为评估模型的主要性能指标,因为这是医学影像任务中最常用的指标之一 [6], [18]–[20]。ROC曲线总结了预测模型在不同概率阈值下真阳性率(TPR)与假阳性率(FPR)之间的权衡关系。为进一步评估网络性能,我们还通过分类准确率对模型进行评价。最后,在分割任务中采用Dice分数衡量预测掩膜与真实标注之间的像素级吻合度。

C. Training details

C. 训练细节

We train our models to minimize the binary cross-entropy loss using Adam optimizer [52], with a learning rate of $10^{-5}$ for classification experiments and $2\times10^{-4}$ for segmentation. Regarding two-view models, the batch size is 8 for PHResNet18, 2 for PHResNet50 and 32 for PHUNet. Instead, for four-view models the batch size is set to 4. Notably, in our initial experiments concerning four-view exams, we employed a standard cross-entropy loss. However, the models struggled to generalize due to the high class imbalance within the dataset. Consequently, to address this issue and achieve meaningful results, we adopted a weighted loss [53], [54] assigning a weight to positive examples equal to the number of benign/normal examples divided by the number of positive examples. We adopt two regular iz ation techniques: weight decay at $5\times10^{-4}$ and early stopping.

我们训练模型时使用Adam优化器[52]最小化二元交叉熵损失,分类实验的学习率为$10^{-5}$,分割任务则为$2\times10^{-4}$。对于双视图模型,PHResNet18的批次大小为8,PHResNet50为2,PHUNet为32;而四视图模型的批次大小统一设为4。值得注意的是,在初期四视图检查实验中,我们采用标准交叉熵损失函数,但由于数据集中类别高度不平衡,模型难以泛化。为此,我们改用加权损失[53][54]以取得有效结果——将良性/正常样本数除以阳性样本数作为阳性样本权重。我们采用两种正则化技术:权重衰减系数设为$5\times10^{-4}$,并实施早停策略。

- Two-view architectures: We perform the experimental validation with two ResNet backbones: PHResNet18 and PHResNet50. The networks have the same structure as in the original paper [38] with some variations. In the first convolutional layer, the number of channels in input is set to be equal to the hyper parameter $n~=~2$ and we omit the max pooling operation since we apply a global average pooling operation, after which we add 4 refiner residual blocks constructed with the bottleneck design [55]. Ultimately, the output of such blocks is fed to the final fully connected layer responsible for classification. The backbone network is initialized with the patch classifier weights, while the refiner blocks and final layer are trained from scratch, following the approach of [6]. In both hyper complex and real domains, the models take as input two views as if they were a single multidimensional (channel-wise) entity.

- 双视图架构:我们采用两种ResNet主干网络进行实验验证:PHResNet18和PHResNet50。这些网络结构与原论文[38]基本一致,但存在一些调整。在第一个卷积层中,输入通道数设置为等于超参数$n~=~2$,并省略了最大池化操作(因为我们采用了全局平均池化)。随后添加了4个采用瓶颈结构[55]设计的细化残差块。这些块的输出最终送入负责分类的全连接层。主干网络使用补丁分类器的权重进行初始化,而细化块和最终层则遵循[6]的方法从头开始训练。无论在超复数域还是实数域,模型都将两个视图作为单一多维(通道维度)实体输入。

- Four-view architectures: Here, we expound the architectural details of the proposed models when considering as input the whole mammogram exam. Also in this case, we use as baselines the respective real-valued counterparts, the bottleneck model (BOnet) and the shared encoder network (SEnet), for which the structural details are the same as in the hyper complex domain.

- 四视图架构:在此,我们详细阐述了将整个乳腺X光检查作为输入时所提出模型的架构细节。同样地,我们采用各自的实数域对应模型作为基线,即瓶颈模型 (BOnet) 和共享编码器网络 (SEnet) ,其结构细节与超复数域中的模型保持一致。

The first proposed model is PHYBOnet. We start from a PHResNet18 and divide its blocks such that the first part of the network serves as an encoder for each side and the remaining blocks compose the bottleneck. Thus, the encoders comprise a first $3\times3$ convolutional layer with a stride of 1, together with batch normalization and ReLU, and the first 4 residual blocks of ResNet18, as shown in Fig. 5. Then, the bottleneck is composed of 8 residual blocks and a global average pooling layer, with the first 4 residual blocks being the standard remaining blocks of ResNet18, and the last 4 the refiner residual blocks employed also in the architecture with two views. Finally, the two outputs are fed to the respective fully connected layer, each responsible to produce the prediction related to its side. The second proposed architecture, PHYSEnet, presents as shared encoder model a whole PHResNet18, while the two classifier branches are comprised of the 4 refiner blocks with a global average pooling layer and the final classification layer. For both proposed models, the choice of PHResNet18 as backbone instead of PHResNet50 is motivated by the results obtained in the experimental evaluation in the two-view scenario described in Subsection VI-B2.

首个提出的模型是PHYBOnet。我们从PHResNet18出发,将其模块划分,使网络的前半部分作为各侧的编码器,剩余模块构成瓶颈部分。因此,编码器包含一个步长为1的初始3×3卷积层(带批归一化和ReLU激活)以及ResNet18的前4个残差块,如图5所示。随后,瓶颈部分由8个残差块和全局平均池化层构成,其中前4个残差块是ResNet18的标准剩余模块,后4个为双视图架构中也采用的精炼残差块。最终,两个输出分别送入各自的全连接层,各负责生成对应侧的预测结果。第二个提出的架构PHYSEnet采用完整的PHResNet18作为共享编码器模型,两个分类器分支则由4个精炼块(含全局平均池化层)和最终分类层组成。选择PHResNet18而非PHResNet50作为这两个模型的骨干网络,是基于第VI-B2小节所述双视图场景实验评估结果而定的。

TABLE II RESULTS FOR PATCH CLASS IF I ERS ON CBIS DATASET CONTAINING BOTH MASS AND CALC. THE PHRESNETS OUTPERFORM REAL-VALUED COUNTERPARTS.

表 2: 包含肿块和钙化的 CBIS 数据集上 PATCH CLASS IF I ERS 的结果。PHResNet 优于实值对应模型。

| 模型 | 准确率 (%) |

|---|---|

| ResNet18 | 74.942 |

| PHResNet18 | 76.825 |

| ResNet50 | 75.989 |

| PHResNet50 | 77.338 |

At training time, both for PHYBOnet and PHYSEnet, the encoder portions of the networks are initialized with the patch classifier weights, while the rest is trained from scratch. Rather, in a second set of experiments conducted only with PHYSEnet, the whole architecture is initialized with the weights of the best whole-image two-view classifier trained on CBIS-DDSM.

在训练阶段,无论是PHYBOnet还是PHYSEnet,网络的编码器部分均使用补丁分类器的权重进行初始化,其余部分则从头开始训练。而在仅针对PHYSEnet进行的第二组实验中,整个架构采用在CBIS-DDSM上训练的最佳全图像双视图分类器的权重进行初始化。

VI. EXPERIMENTAL EVALUATION

六、实验评估

In this section, we p