Subpixel Heatmap Regression for Facial Landmark Localization


Abstract


Deep learning models based on heatmap regression have revolutionized the task of facial landmark localization, with existing models working robustly under large poses, non-uniform illumination and shadows, occlusions and self-occlusions, low resolution and blur. However, despite their wide adoption, heatmap regression approaches suffer from discretization-induced errors related to both the heatmap encoding and decoding process. In this work we show that these errors have a surprisingly large negative impact on facial alignment accuracy. To alleviate this problem, we propose a new approach for the heatmap encoding and decoding process by leveraging the underlying continuous distribution. To take full advantage of the newly proposed encoding-decoding mechanism, we also introduce a Siamese-based training that enforces heatmap consistency across various geometric image transformations. Our approach offers noticeable gains across multiple datasets, setting a new state-of-the-art result in facial landmark localization. Code alongside the pretrained models will be made available here.

1 Introduction


This paper addresses the popular task of localizing landmarks (or keypoints) on the human face, also known as facial landmark localization or face alignment. The current state-of-the-art is represented by fully convolutional networks trained to perform heatmap regression [5, 16, 24, 42, 46, 48]. Such methods can work robustly under large poses, non-uniform illumination and shadows, occlusions and self-occlusions [3, 5, 24, 43] and even very low resolution [6]. However, despite their wide adoption, heatmap-based regression approaches suffer from discretization-induced errors. Although this is generally known, very few papers study the problem [29, 44, 47]. In this paper, we show that this overlooked problem actually has a surprisingly large negative impact on the accuracy of the model.

In particular, as working at high resolutions is prohibitive in terms of computation and memory, heatmap regression networks typically make predictions at $\frac{1}{4}$ of the input resolution [5]. Note that the input image may already be a downsampled version of the original facial image. Large discretization errors are introduced by the heatmap construction process, which discretizes all values into a grid, and by the subsequent estimation process, which consists of finding the coordinates of the maximum. This in turn causes at least two problems: (a) the encoding process forces the network to learn randomly displaced points, and (b) the inference process of the decoder is done on a discrete grid, failing to account for the continuous underlying Gaussian distribution of the heatmap.

To alleviate the above problem, in this paper, we make the following contributions:


2 Related work


Most recent efforts on improving the accuracy of face alignment fall into one of the following two categories: network architecture improvements and loss function improvements.


Network architectural improvements: The first work to popularize and make use of encoder-decoder models with heatmap-based regression for face alignment was that of Bulat & Tzimiropoulos [5], where the authors adapted an HourGlass network [31] with 4 stages and the Hierarchical Block of [4] for face alignment. Subsequent works generally preserved the same style of U-Net [37] and Hourglass structures, with notable differences in [43, 48, 53], where the authors used ResNets [19] adapted for dense pixel-wise predictions. More specifically, in [53], the authors removed the last fully connected layer and the global pooling operation from a ResNet model and then attempted to recover the lost resolution using a series of convolutional and deconvolutional layers. In [48], Wang et al. expanded upon this by introducing a novel structure that connects high-to-low convolution streams in parallel, maintaining high-resolution representations through the entire model. Building on top of [5], in CU-Net [46] and DU-Net [45] Tang et al. combined U-Nets with DenseNet-like [20] architectures, connecting the $i$-th U-Net with all previous ones via skip connections.

Loss function improvements: The standard loss typically used for heatmap regression is a pixel-wise $\ell_{2}$ or $\ell_{1}$ loss [2, 3, 5, 42, 46, 48]. Feng et al. [16] argued that more attention should be paid to small and medium range errors during training, introducing the Wing loss, which amplifies the impact of the errors within a defined interval by switching from an $\ell_{1}$ to a modified log-based loss. Improving upon this, in [49], the authors introduced the Adaptive Wing Loss, a loss capable of updating its curvature based on the ground truth pixels. The predictions are further aided by the integration of coordinate encoding via CoordConv [28] into the model. In [24], Kumar et al. introduced the so-called LUVLi loss that jointly optimizes the location of the keypoints, the uncertainty, and the visibility likelihood. Albeit for human pose estimation, [29] proposes an alternative to heatmap-based regression by introducing a differentiable soft-argmax function applied globally to the output features. However, the lack of structure induced by a Gaussian prior hinders its accuracy.

Contrary to the aforementioned works, we attempt to address the quantization-induced error by proposing a simple continuous approach to the heatmap encoding and decoding process. In this direction, [44] proposes an analytic solution to obtain the fractional shift by assuming that the generated heatmap follows a Gaussian distribution, and applies this to stabilize facial landmark localization in video. A similar assumption is made by [47], which solves an optimization problem to obtain the subpixel solution. Finally, [29] uses a global soft-argmax. Our method is most similar to [29], which we compare against in Section 4.

3 Method


3.1 Preliminaries


Given a training sample $(\mathbf{X},\mathbf{y})$, with $\mathbf{y}\in\mathbb{R}^{k\times2}$ denoting the coordinates of the $k$ landmarks in the corresponding image $\mathbf{X}$, current facial landmark localization methods encode the target ground truth coordinates as a set of $k$ heatmaps, each containing a 2D Gaussian centered at the corresponding landmark:

$$
\mathcal{G}_{i,j,k}(\mathbf{y})=\frac{1}{2\pi\sigma^{2}}e^{-\frac{1}{2\sigma^{2}}\left[(i-\tilde{y}_{k}^{[1]})^{2}+(j-\tilde{y}_{k}^{[2]})^{2}\right]}, \tag{1}
$$

where $y_{k}^{[1]}$ and $y_{k}^{[2]}$ are the spatial coordinates of the $k$-th point, and $\tilde{y}_{k}^{[1]}$ and $\tilde{y}_{k}^{[2]}$ are their scaled, quantized versions:

$$
(\tilde{y}_{k}^{[1]},\tilde{y}_{k}^{[2]})=\left(\left\lfloor\tfrac{1}{s}y_{k}^{[1]}\right\rceil,\left\lfloor\tfrac{1}{s}y_{k}^{[2]}\right\rceil\right), \tag{2}
$$

where $\lfloor\cdot\rceil$ is the rounding operator and $1/s$ is the scaling factor used to scale the image to a pre-defined resolution. $\sigma$ is the standard deviation of the Gaussian, a fixed value that is task and dataset dependent. For a given set of landmarks $\mathbf{y}$, Eq. 1 produces a corresponding heatmap $\mathcal{H}\in\mathbb{R}^{k\times W_{hm}\times H_{hm}}$.
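As a worked illustration of the rounding step in Eq. 2, consider a single landmark. The coordinates and the factor $s=4$ below are assumed values chosen only to make the arithmetic concrete:

$$
\begin{aligned}
\mathbf{y} &= (101.3,\ 57.9), \qquad s = 4 \\
\tfrac{1}{s}\mathbf{y} &= (25.325,\ 14.475) \\
\tilde{\mathbf{y}} &= (25,\ 14) \\
s\,\tilde{\mathbf{y}}-\mathbf{y} &= (-1.3,\ -1.9), \qquad \lVert s\,\tilde{\mathbf{y}}-\mathbf{y}\rVert_{2}=\sqrt{1.69+3.61}\approx 2.30\ \mathrm{px}
\end{aligned}
$$

In general, rounding can displace each coordinate by up to $s/2$ pixels in the input image before the network has made any prediction.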

Heatmap-based regression overcomes the lack of spatial and contextual information in direct coordinate regression. Not only are such representations easier to learn, by allowing visually similar parts to produce proportionally high responses instead of predicting a unique value, but they are also more interpretable and semantically meaningful.

3.2 Continuous Heatmap Encoding


Despite the advantages of heatmap regression, one key inherent issue with the approach has been overlooked: the heatmap generation process introduces relatively high quantization errors. This is a direct consequence of the trade-offs made during the generation process: since generating the heatmap predictions at the original image resolution is prohibitive, the localization process involves cropping and re-scaling the facial images such that the final predicted heatmaps are typically at a $64\times64\mathrm{px}$ resolution [5]. As described in Section 3.1, this process re-scales and quantizes the landmark coordinates as $\tilde{\mathbf{y}}=\mathrm{quantize}(\frac{1}{s}\mathbf{y})$, where round or floor is the quantization function. However, there is no need to quantize. One can simply create a Gaussian located at:

$$
(\tilde{y}_{k}^{[1]},\tilde{y}_{k}^{[2]})=\left(\tfrac{1}{s}y_{k}^{[1]},\tfrac{1}{s}y_{k}^{[2]}\right), \tag{3}
$$

and then sample it over a regular spatial grid. This will completely remove the quantization error introduced previously and will only add some aliasing due to the sampling process.
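The difference between the two encodings can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `encode_heatmap`, the (x, y) point convention with `heatmap[y, x]` indexing, the $64\times64$ grid, $s=4$ and $\sigma=1$ are all illustrative choices rather than details given in the text.

```python
import numpy as np

def encode_heatmap(point_xy, size=64, scale=4.0, sigma=1.0, quantize=False):
    """Sample a 2D Gaussian (Eq. 1 form) on a size x size grid.

    point_xy is an (x, y) landmark in input-image pixels (assumed convention).
    With quantize=True the centre is rounded to the grid (standard encoding);
    with quantize=False the centre keeps its sub-pixel position (continuous encoding).
    """
    center = np.asarray(point_xy, dtype=np.float64) / scale
    if quantize:
        # np.round resolves exact .5 ties to the nearest even integer.
        center = np.round(center)
    grid = np.arange(size, dtype=np.float64)
    gx = np.exp(-((grid - center[0]) ** 2) / (2.0 * sigma ** 2))
    gy = np.exp(-((grid - center[1]) ** 2) / (2.0 * sigma ** 2))
    # Rows index y and columns index x, so the outer product is gy (rows) by gx (columns).
    return np.outer(gy, gx) / (2.0 * np.pi * sigma ** 2)

hm_quantized = encode_heatmap((101.3, 57.9), quantize=True)
hm_continuous = encode_heatmap((101.3, 57.9), quantize=False)
```

Both heatmaps peak at the same pixel, but only the continuous one is asymmetric around it, so the sub-pixel offset remains encoded in the neighbouring values.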


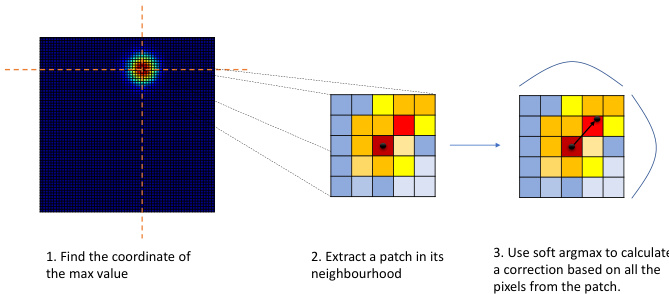

Figure 1: Proposed heatmap decoding. Given a predicted heatmap, (1) we find the location of the maximum, and (2) crop a $d\times d$ patch around it. Finally, (3) we apply a soft-argmax on the patch and retrieve a correction that is applied to the location estimated at step (1).

3.3 Continuous Heatmap Decoding with Local Soft-argmax


Currently, the typical landmark localization process from 2D heatmaps consists of finding the location of the pixel with the highest value [5]. This is typically followed by a heuristic correction of $0.25\mathrm{px}$ toward the location of the second highest neighboring pixel. The goal of this adjustment is to partially compensate for the effect induced by the quantization process: on one side by the heatmap generation process itself (as described in Section 3.2) and, on the other side, by the coarse nature of the predicted heatmap, which uses the maximum value alone as the location of the point. We note that, despite the fact that the ground truth heatmaps are affected by quantization errors, the network generally learns to adjust its predictions to some extent, making the subsequent heuristic correction work well in practice.
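A sketch of this standard decoding is given below. It assumes the commonly used variant in which each axis is shifted by 0.25 px toward the larger of its two neighbours along that axis; the function name `decode_argmax_quarter` is hypothetical.

```python
import numpy as np

def decode_argmax_quarter(heatmap):
    """Argmax decoding followed by a 0.25 px heuristic shift per axis."""
    height, width = heatmap.shape
    row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    x, y = float(col), float(row)
    # Shift toward the larger horizontal neighbour (no shift on ties or at the border).
    if 0 < col < width - 1:
        x += 0.25 * float(np.sign(heatmap[row, col + 1] - heatmap[row, col - 1]))
    # Shift toward the larger vertical neighbour.
    if 0 < row < height - 1:
        y += 0.25 * float(np.sign(heatmap[row + 1, col] - heatmap[row - 1, col]))
    return x, y

toy = np.zeros((5, 5))
toy[2, 2] = 1.0   # maximum
toy[2, 3] = 0.5   # larger neighbour to the right
toy[1, 2] = 0.3   # larger neighbour above
x_hat, y_hat = decode_argmax_quarter(toy)
```

The correction has a fixed magnitude regardless of how asymmetric the heatmap is, which is the limitation the decoding proposed in this section targets.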

Rather than using the above heuristic, we propose to predict the location of the keypoint by analyzing the pixels in its neighbourhood and exploiting the known targeted Gaussian distribution. For a given heatmap $\mathcal{H}_{k}$, we first find the coordinates corresponding to the maximum value, $(\hat{y}_{k}^{[1]},\hat{y}_{k}^{[2]})=\arg\max\mathcal{H}_{k}$, and then, around this location, we select a small square matrix $h_{k}$ of size $d\times d$, where $l=\frac{d}{2}$. Then, we predict an offset $(\Delta\hat{y}_{k}^{[1]},\Delta\hat{y}_{k}^{[2]})$ by finding a soft continuous maximum value within the selected matrix, effectively retrieving a correction, using a local soft-argmax:

$$
\big(\Delta\hat{y}_{k}^{[1]},\Delta\hat{y}_{k}^{[2]}\big)=\sum_{m,n}\mathrm{softmax}(\tau h_{k})_{m,n}\,(m,n), \tag{4}
$$

where $\tau$ is the temperature that controls the resulting probability map, and $(m,n)$ are the indices that iterate over the pixel coordinates of the patch $h_{k}$. The softmax is defined as:

$$
\mathrm{softmax}(h)_{m,n}=\frac{e^{h_{m,n}}}{\sum_{m^{\prime},n^{\prime}}e^{h_{m^{\prime},n^{\prime}}}} \tag{5}
$$

The final prediction is then obtained as $(\hat{y}_{k}^{[1]}+\Delta\hat{y}_{k}^{[1]}-l,\ \hat{y}_{k}^{[2]}+\Delta\hat{y}_{k}^{[2]}-l)$. The three-step process is illustrated in Fig. 1.
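The three steps can be sketched in NumPy as follows. This is a minimal illustration rather than a reference implementation: the function name `local_soft_argmax`, the use of the integer floor of $d/2$ for odd $d$, the border padding and the synthetic test heatmap are all assumptions.

```python
import numpy as np

def local_soft_argmax(heatmap, d=5, tau=10.0):
    """Decode one heatmap: argmax, d x d crop, soft-argmax correction."""
    l = d // 2  # half window size; taking the floor of d/2 for odd d is an assumption
    # Step 1: integer location of the maximum (row = y, col = x).
    row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    # Step 2: crop a d x d patch centred on the maximum. The map is padded with its
    # minimum value so that peaks near the border still yield a full window.
    padded = np.pad(heatmap, l, mode="constant", constant_values=float(heatmap.min()))
    patch = padded[row:row + d, col:col + d]
    # Step 3: temperature softmax over the patch, then the expected patch index.
    logits = tau * patch
    prob = np.exp(logits - logits.max())  # subtracting the max only improves stability
    prob /= prob.sum()
    idx = np.arange(d, dtype=np.float64)
    dx = float((prob.sum(axis=0) * idx).sum())  # expected column index
    dy = float((prob.sum(axis=1) * idx).sum())  # expected row index
    return float(col) + dx - l, float(row) + dy - l

# Synthetic heatmap in the form of Eq. 1 (sigma = 1) centred at x = 25.325, y = 14.475.
grid = np.arange(64, dtype=np.float64)
gx = np.exp(-((grid - 25.325) ** 2) / 2.0)
gy = np.exp(-((grid - 14.475) ** 2) / 2.0)
heatmap = np.outer(gy, gx) / (2.0 * np.pi)
x_hat, y_hat = local_soft_argmax(heatmap)
```

For this synthetic input the estimate moves from the integer argmax (25, 14) toward the true centre. How closely it matches depends on $\tau$, $\sigma$, $d$ and the scale of the heatmap values.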

3.4 Siamese consistency training


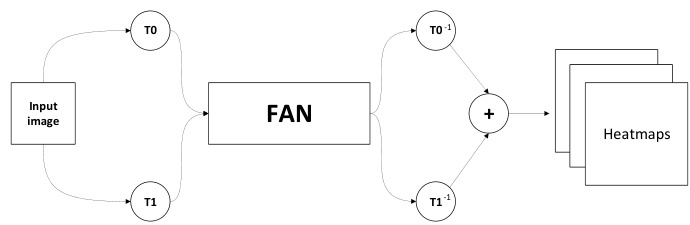

The face alignment training procedure has remained largely unchanged since the very first deep learning methods of [5, 65]. Herein, we propose to deviate from this paradigm by adopting a Siamese-based training, where two different random augmentations of the same image are passed through the network, producing a set of heatmaps for each. We then revert the transformation of each of these heatmaps and combine them via element-wise summation.

Figure 2: Siamese transformation-invariant training. $T_{0}$ and $T_{1}$ are two randomly sampled data augmentation transformations applied to the input image. After passing the augmented images through the network, a set of heatmaps is produced for each. Finally, the transformations are reversed and the two outputs are merged.

The advantages of this training process are twofold. Firstly, convolutional networks are not invariant under arbitrary affine transformations and, as such, relatively small variations in the input space can result in large differences in the output. Therefore, by optimizing jointly and combining the two predictions, we can improve the consistency of the predictions.

Secondly, while previously the 2D Gaussians were always centered around an integer pixel location due to the quantization of the coordinates via rounding, the newly proposed heatmap generation can place the center in-between pixels (i.e. at a sub-pixel location). As such, to avoid small sub-pixel inconsistencies and misalignment introduced by the data augmentation process, we adopt the above-mentioned Siamese-based training. Our approach, depicted in Fig. 2, defines the output heatmaps $\hat{\mathcal{H}}$ as:

$$
\hat{\mathcal{H}}=T_{0}^{-1}\big(\Phi(T_{0}(\mathbf{X}_{i}),\theta)\big)+T_{1}^{-1}\big(\Phi(T_{1}(\mathbf{X}_{i}),\theta)\big), \tag{6}
$$

where $\Phi$ is the network for heatmap regression with parameters $\theta$. $T_{0}$ and $T_{1}$ are two random transformations applied to the input image $\mathbf{X}_{i}$, and $T_{0}^{-1}$ and $T_{1}^{-1}$ denote their inverses.
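The merging step can be sketched as below. Everything here is a simplified stand-in: a horizontal flip and a 90-degree rotation are used only because they are exactly invertible on a pixel grid (the augmentations in Section 5.1 are richer), `toy_phi` is a placeholder for the network $\Phi$, and the input and output share one resolution.

```python
import numpy as np

def siamese_heatmaps(image, phi, t0, t0_inv, t1, t1_inv):
    """Merge two views: T0^{-1}(phi(T0(X))) + T1^{-1}(phi(T1(X)))."""
    return t0_inv(phi(t0(image))) + t1_inv(phi(t1(image)))

def hflip(a):
    # Horizontal flip; it is its own inverse. For real landmark heatmaps a flip
    # would also require swapping left/right landmark channels (omitted here).
    return a[..., ::-1]

def rot90(a):
    return np.rot90(a, k=1, axes=(-2, -1))

def rot90_inv(a):
    return np.rot90(a, k=-1, axes=(-2, -1))

def toy_phi(a):
    # Placeholder "network": a pointwise map, hence equivariant to both transforms.
    return 0.5 * a

rng = np.random.default_rng(0)
image = rng.random((64, 64))
merged = siamese_heatmaps(image, toy_phi, hflip, hflip, rot90, rot90_inv)
```

With an equivariant placeholder the two reverted outputs coincide, so `merged` equals twice `toy_phi(image)`. A real network is not exactly equivariant, and the two reverted outputs generally differ.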

4 Ablation studies


4.1 Comparison with other landmarks localization losses


Beyond comparisons with recently proposed methods for face alignment in Section 6 (e.g. [16, 24, 49]), herein we compare our approach against a few additional baselines.


Heatmap prediction with coordinate correction: In DeepCut [33], for human pose estimation, the authors propose to add a coordinate refinement layer that predicts a $(\Delta\hat{y}_{k}^{[1]},\Delta\hat{y}_{k}^{[2]})$ displacement that is then added to the integer predictions generated by the heatmaps. To implement this, we added a global pooling operation followed by a fully connected layer and then trained it jointly using an $\ell_{2}$ loss. We attempted two different variants: one where the ground truth is statically constructed by measuring the heatmap encoding errors, and another where it is dynamically constructed at runtime by measuring the error between the heatmap prediction and the ground truth. As Table 1 shows, these learned corrections offer minimal improvements on top of the standard heatmap regression loss and are noticeably worse than the accuracy achieved by the proposed method. This shows that predicting sub-pixel errors using a second branch is less effective than constructing better heatmaps in the first place.

Table 1: Comparison between various loss baselines on the 300W test set.

| Method | NME$_{box}$ |
|---|---|
| $\ell_{2}$ heatmap regression | 2.32 |
| Coordinate correction (static gt) | 2.27 |
| Coordinate correction (dynamic gt) | 2.30 |
| Global soft-argmax | 3.19 |
| Local soft-argmax (Ours) | 2.04 |

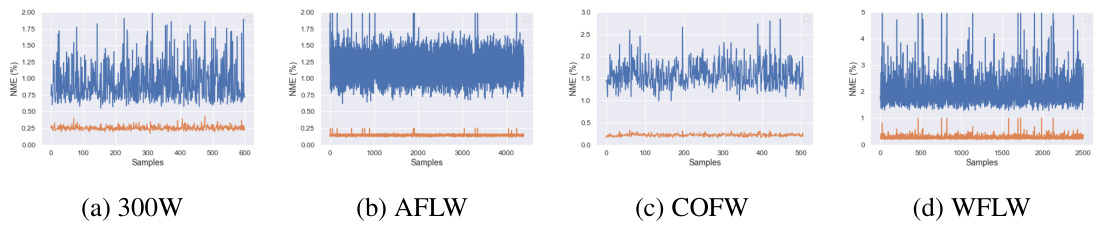

Figure 3: NME after encoding and then decoding of the ground truth heatmaps for various datasets using our proposed approach (orange) and the standard one [5] (blue). Notice that our approach significantly reduces the error rate across all samples from the datasets.


Global soft-argmax: In [29], the authors propose to predict the locations of the points of interest on the human body by estimating their position using a global soft-argmax as a differentiable alternative to taking the argmax. At first glance this is akin to the idea proposed in this work, the local soft-argmax. However, applying soft-argmax globally leads to semantically unstructured outputs [29] that hurt the performance. Even adding a Gaussian prior is insufficient for achieving high accuracy on face alignment. As the results from Table 1 show, our simple improvement, namely the proposed local soft-argmax, is the key idea for obtaining highly accurate results.
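For contrast with the local variant, a global soft-argmax can be sketched as below. This only illustrates one property of the global operator on a synthetic Gaussian heatmap and is not a reproduction of the experiment in Table 1; the function name, $\tau=10$ and the synthetic input are assumptions.

```python
import numpy as np

def global_soft_argmax(heatmap, tau=10.0):
    """Expected (x, y) under a temperature softmax taken over the whole heatmap."""
    height, width = heatmap.shape
    logits = tau * heatmap
    prob = np.exp(logits - logits.max())
    prob /= prob.sum()
    x = float((prob.sum(axis=0) * np.arange(width)).sum())
    y = float((prob.sum(axis=1) * np.arange(height)).sum())
    return x, y

# Synthetic heatmap in the form of Eq. 1 (sigma = 1) centred at x = 10, y = 12.
grid = np.arange(64, dtype=np.float64)
gx = np.exp(-((grid - 10.0) ** 2) / 2.0)
gy = np.exp(-((grid - 12.0) ** 2) / 2.0)
heatmap = np.outer(gy, gx) / (2.0 * np.pi)
x_global, y_global = global_soft_argmax(heatmap)
```

On such an input every background pixel still receives non-zero softmax weight, so the estimate is pulled away from the peak toward the centre of the map. Restricting the operator to a small window around the maximum removes most of that background mass.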

4.2 Effect of method’s components


Herein, we explore the impact of each of our method's components on the overall performance of the network. As the results from Table 2 show, starting from the baseline introduced in [5], the addition of the proposed heatmap encoding and decoding process significantly improves the accuracy. Analyzing this result in tandem with Fig. 3 makes the source of these gains apparent. In particular, Fig. 3 shows the heatmap encoding and decoding process of the baseline method [5], as well as of our method, applied directly to the ground truth landmarks (i.e. these are not the network's predictions). As shown in Fig. 3, simply encoding and decoding the heatmaps corresponding to the ground truth alone induces a high NME for [5]. While the training procedure is able to compensate for this, these inaccurate representations hinder the learning process. Furthermore, due to the sub-pixel errors introduced, the performance in the high accuracy regime of the cumulative error curve degrades.
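The size of the round-trip error for the standard pipeline can be estimated with a short simulation. This is a sketch under simplifying assumptions rather than the procedure behind Fig. 3: landmarks are drawn uniformly over a $256\times256$ image, $s=4$, decoding is a plain argmax (which returns the rounded centre of a symmetric Gaussian), grid clipping at the border is ignored, and the 220 px normalizer is the approximate face size from Section 5.1.

```python
import numpy as np

def standard_round_trip_error(points, scale=4.0):
    """Per-point error (input-image px) after quantized encoding and argmax decoding."""
    points = np.asarray(points, dtype=np.float64)
    # Encoding rounds the rescaled coordinate; argmax decoding returns that rounded
    # location, which is then mapped back to input-image pixels.
    decoded = np.round(points / scale) * scale
    return np.linalg.norm(decoded - points, axis=-1)

rng = np.random.default_rng(0)
landmarks = rng.uniform(0.0, 256.0, size=(100_000, 2))
errors = standard_round_trip_error(landmarks)
mean_error_px = float(errors.mean())
nme_box_percent = 100.0 * mean_error_px / 220.0  # assumed 220 px box normalizer
```

Under these assumptions the mean displacement is about 1.5 px, i.e. roughly 0.7% of the assumed box size, before any network error is added.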

Table 2: Effect of the proposed components on the WFLW dataset.

| Method | NME$_{ic}$ (%) |
|---|---|
| Baseline [5] | 4.20 |
| + proposed hm | 3.90 |
| + proposed hm (w/o 3.3) | 4.00 |
| + siamese training | 3.72 |

The rest of the gains are achieved by switching to the proposed Siamese training that reduces the discrepancies between multiple views of the same image while also reducing potential sub-pixel displacements that may occur between the image and the heatmaps.


4.3 Local window size


In this section, we analyze the relation between the local soft-argmax window size and the model's accuracy. As the results from Table 3 show, the optimal window has a size of $5\times5\mathrm{px}$, which corresponds to the size of the generated Gaussian (i.e., most of the non-zero values will be contained within this window). Furthermore, as the window size increases, the amount of noise and background pixels also increases and hence the accuracy decreases. The same value is used across all datasets. Note that explicitly using the local window loss during training does not improve the performance further, which suggests that the pixel-wise loss alone is sufficient if the encoding process is accurate.

5 Experimental setup


Datasets: We performed extensive evaluations to quantify the effectiveness of the proposed method. We trained and/or tested our method on the following datasets: 300W [38] (constructed in [38] using images from LFPW [1], AFW [64], HELEN [25] and iBUG [39]), 300W-LP [65], Menpo [58], COFW-29 [7], COFW-68 [17], AFLW [22], WFLW [50] and 300VW [41]. For a detailed description of each dataset see the supplementary material.

Table 3: Effect of window size on the 300W test set.

| Window size | none | 3×3 | 5×5 | 7×7 |
|---|---|---|---|---|
| NME$_{box}$ | 2.21 | 2.06 | 2.04 | 2.07 |

Metrics: Depending on the evaluation protocol of each dataset we used one or more of the following metrics:


Normalized Mean Error (NME): measures the point-to-point normalized Euclidean distance. Depending on the testing protocol, the normalization factor varies. In this paper, we distinguish between the following types: $d_{ic}$, computed as the inter-ocular distance [38]; $d_{box}$, computed as the geometric mean of the ground truth bounding box dimensions [5], $d=\sqrt{w_{bbox}\cdot h_{bbox}}$; and finally $d_{diag}$, defined as the diagonal of the bounding box.
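The bounding-box variants of the metric can be sketched as follows. The function names, the toy landmarks and the $100\times100$ box are illustrative assumptions, and the value is reported as a percentage to match the tables.

```python
import numpy as np

def nme_percent(pred, gt, norm):
    """Mean point-to-point Euclidean distance divided by a normalizer, in percent."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    return float(100.0 * np.linalg.norm(pred - gt, axis=-1).mean() / norm)

def d_box(width, height):
    """Geometric mean of the bounding box sides."""
    return float(np.sqrt(width * height))

def d_diag(width, height):
    """Diagonal of the bounding box."""
    return float(np.hypot(width, height))

gt = np.array([[30.0, 40.0], [60.0, 40.0], [45.0, 70.0]])
pred = gt + np.array([3.0, 4.0])  # every point is displaced by exactly 5 px
nme_box = nme_percent(pred, gt, d_box(100.0, 100.0))
nme_diag = nme_percent(pred, gt, d_diag(100.0, 100.0))
```

The same predictions yield different values under the two normalizers, which is why results are only comparable within one protocol.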

Area Under the Curve (AUC): The AUC is computed by measuring the area under the cumulative error curve up to a given user-defined cut-off threshold.

Failure Rate (FR): The failure rate is defined as the percentage of images whose NME is larger than a given (large) threshold.
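Both quantities can be computed from the per-image NME values, as in the sketch below. Dividing the area by the threshold so that the AUC lies in $[0,1]$ is an assumed convention, and the function name and toy values are illustrative.

```python
import numpy as np

def auc_and_failure_rate(errors, threshold):
    """AUC of the cumulative error curve on [0, threshold] and failure rate (%)."""
    errors = np.asarray(errors, dtype=np.float64)
    # The area under the empirical cumulative error curve on [0, threshold]
    # equals the mean of max(threshold - e, 0) over all images.
    auc = np.clip(threshold - errors, 0.0, None).mean() / threshold
    failure_rate = 100.0 * (errors > threshold).mean()
    return float(auc), float(failure_rate)

auc, fr = auc_and_failure_rate([2.0, 5.0, 12.0], threshold=10.0)
```

Images above the threshold contribute nothing to the AUC and are counted once in the failure rate, so the two metrics summarize the low-error and high-error ends of the same distribution.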

5.1 Training details


For training the models used throughout this paper, we largely followed the common best practices from the literature. During training we applied the following augmentation techniques: random rotation (between $\pm30^{\circ}$), image flipping, color jittering (between 0.6 and 1.4) and scale jittering (between 0.85 and 1.15). The models were trained for 50 epochs using a step scheduler that dropped the learning rate at epochs 25 and 40, starting from a learning rate of 0.0001. Finally, we used Adam [21] for optimization. The predicted heatmaps were at a resolution of $64\times64\mathrm{px}$, i.e. $4\times$ smaller than the input images, which were resized to $256\times256\mathrm{px}$ with the face size being approximately equal to $220\times220\mathrm{px}$. The network was optimized using an $\ell_{2}$ pixel-wise loss. For the heatmap decoding process, the temperature of the soft-argmax $\tau$ was set to 10 for all datasets; slightly higher values perform similarly. Values that are too high or too small would, respectively, ignore or overly emphasise the pixels found around the coordinates of the maximum. All the experiments were implemented using PyTorch [32] and Kornia [35].
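The step schedule can be written down explicitly, as in the sketch below. The decay factor `gamma=0.1` and the zero-based epoch indexing are assumptions, since the text states only the initial learning rate and the epochs at which it is dropped.

```python
def learning_rate(epoch, base_lr=1e-4, milestones=(25, 40), gamma=0.1):
    """Step schedule: multiply the rate by gamma at each milestone epoch.

    gamma is an assumed value; the text gives only base_lr and the milestones.
    """
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** drops

schedule = [learning_rate(e) for e in range(50)]
```

In PyTorch the same behaviour is usually obtained with a multi-step scheduler attached to the Adam optimizer; the plain function above only makes the resulting per-epoch values explicit.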

Network architecture: All models trained throughout this work, unless otherwise specified, follow a 2-stack Hourglass-based architecture with a width of 256 channels, operating at a resolution of $256\times256\mathrm{px}$, as introduced in [5]. Inside the hourglass, the features are downsampled to $4\times4\mathrm{px}$ and then upsampled back, with skip connections linking features found at the same resolution. The network is constructed using the building block from [4], as in [5]. For more details regarding the network structure see [5, 31].

6 Comparison against state-of-the-art


Herein, we compare against the current state-of-the-art face alignment methods across a plethora of datasets. Throughout this section, the best result in each table is marked in bold and red, and the second best in bold and blue. The important finding of this section is that, by means of two simple improvements, (a) improving the heatmap encoding and decoding process and (b) including the Siamese training, we managed to obtain results that are significantly better than all recent prior work, setting a new state-of-the-art.

Comparison on WFLW: On WFLW, following its evaluation protocol, we report results in terms of $\mathrm{NME}_{ic}$, $\mathrm{AUC}_{ic}^{10}$ and $\mathrm{FR}_{ic}^{10}$. As the results from Table 10 show, our method improves the previous best results of [24] by more than $0.5\%$ for $\mathrm{NME}_{ic}$ and $5\%$ in terms of $\mathrm{AUC}_{ic}^{10}$, while almost halving the failure rate. This shows that our method offers improvements in the high accuracy regime while also reducing the overall failure ratio for difficult images.

Comparison on AFLW: Following [24], we report results in terms of $\mathrm{NME}_{diag}$, $\mathrm{NME}_{box}$ and $\mathrm{AUC}_{box}^{7}$. As the results from Table 11 show, we improve across all metrics on top of the current best result, even on this nearly saturated dataset.

Table 4: Results on the WFLW (a) and 300W (b) datasets.

(a) Comparison against the state-of-the-art on WFLW in terms of $\mathrm{NME}_{inter-ocular}$, $\mathrm{AUC}_{ic}^{10}$ and $\mathrm{FR}_{ic}^{10}$.

| Method | NME$_{ic}$ (%) | AUC$_{ic}^{10}$ | FR$_{ic}^{10}$ (%) |
|---|---|---|---|
| Wing [16] | 5.11 | 0.554 | 6.00 |
| MHHN [47] | 4.77 | | |
| DeCaFa [10] | 4.62 | 0.563 | 4.84 |
| AVS [34] | 4.39 | 0.591 | 4.08 |
| AWing [49] | 4.36 | 0.572 | 2.84 |
| LUVLi [24] | 4.37 | 0.577 | 3.12 |
| GCN [26] | 4.21 | 0.589 | 3.04 |
| Ours | 3.72 | 0.631 | 1.55 |

(b) Comparison against the state-of-the-art on the 300W Common, Challenge and Full datasets (i.e. Split II) in terms of $\mathrm{NME}_{inter-ocular}$.

| Method | Common | Challenge | Full |
|---|---|---|---|
| Teacher [11] | 2.91 | 5.91 | 3.49 |
| DU-Net [46] | 2.97 | 5.53 | 3.47 |
| DeCaFa [10] | 2.93 | 5.26 | 3.39 |
| HR-Net [43] | 2.87 | 5.15 | 3.32 |
| HG-HSLE [66] | 2.85 | 5.03 | 3.28 |
| AWing [49] | 2.72 | 4.52 | 3.07 |
| LUVLi [24] | 2.76 | 5.16 | 3.23 |
| Ours | 2.61 | 4.13 | 2.94 |

(a) 在 WFLW 数据集上关于 ${\mathrm{NME}}{inter-ocular}\