OmniPose: A Multi-Scale Framework for Multi-Person Pose Estimation

Abstract

We propose OmniPose, a single-pass, end-to-end trainable framework that achieves state-of-the-art results for multi-person pose estimation. Using a novel waterfall module, the OmniPose architecture leverages multi-scale feature representations that increase the effectiveness of backbone feature extractors, without the need for postprocessing. OmniPose incorporates contextual information across scales and joint localization with Gaussian heatmap modulation at the multi-scale feature extractor to estimate human pose with state-of-the-art accuracy. The multi-scale representations obtained by the improved waterfall module in OmniPose leverage the efficiency of progressive filtering in the cascade architecture, while maintaining multi-scale fields-of-view comparable to spatial pyramid configurations. Our results on multiple datasets demonstrate that OmniPose, with an improved HRNet backbone and waterfall module, is a robust and efficient architecture for multi-person pose estimation.

1. Introduction

Human pose estimation is an important task in computer vision that has generated high interest in methods for 2D [33], [23], [34], [4], [31] and 3D [29], [41], [1] pose estimation, on a single frame [5] or a video sequence [13], and for single [34] or multiple subjects [6]. The main challenges of pose estimation, especially in multi-person settings, are due to the large number of degrees of freedom of the human body and the occurrence of joint occlusions. To overcome the difficulty of detecting occluded joints, methods commonly rely on statistical and geometric models to estimate them [26], [24]. Anchor poses are also used to overcome occlusion [29], but they can limit the generalization power of the model and its ability to handle unforeseen poses.

Inspired by recent advances in multi-scale approaches for semantic segmentation [9], [37], and building on state-of-the-art results in 2D pose estimation by HRNet [31] and UniPose [4], we propose OmniPose, an expanded single-stage network that is end-to-end trainable and generates state-of-the-art results without requiring multiple iterations, intermediate supervision, anchor poses, or postprocessing. A central aspect of our architecture is an expanded multi-scale feature representation that combines an improved HRNet feature extractor with an advanced Waterfall Atrous Spatial Pooling (WASPv2) module. The WASPv2 module combines the cascaded atrous convolution approach with a larger field-of-view (FOV) and is integrated with the network decoder, offering significant improvements in accuracy.

Figure 1. Pose estimation examples with our OmniPose method.

Our OmniPose framework predicts the locations of multiple people's joints from the contextual information captured by the multi-scale feature representation in our network. This contextual approach allows the network to include information from the entire frame and, consequently, does not require post-analysis based on statistical or geometric methods. In addition, the waterfall atrous module allows better detection of shapes, resulting in a more accurate estimation of occluded joints. Examples of pose estimation with OmniPose are shown in Figure 1.

2. Related Work

In recent years, deep learning methods based on Convolutional Neural Networks (CNNs) have achieved superior results in human pose estimation [33], [34], [6], [31], [29] compared to early works [28], [36]. The popular Convolutional Pose Machine (CPM) [34] proposed an architecture that refines joint detection through a sequence of stages in the network. Building upon [34], the OpenPose method [6] integrated the concept of Part Affinity Fields (PAF).

Multi-scale representations have been successfully used in backbone structures for pose estimation. Stacked hourglass networks [23] use cascaded hourglass modules for pose estimation. Expanding on the hourglass structure, the multi-context approach in [14] relies on an hourglass backbone that is augmented with Hourglass Residual Units (HRU) to increase the receptive FOV. Postprocessing with Conditional Random Fields (CRFs) is used to model the relations between detected joints. However, CRFs add complexity, which requires high computational power and reduces speed.

The High-Resolution Network (HRNet) [31] includes both high- and low-resolution representations. HRNet benefits from the larger FOV of its multi-resolution processing, a capability that we achieve in a simpler fashion with our WASPv2 module. An analogous approach to HRNet is used by the Multi-Stage Pose Network (MSPN) [19], where the HRNet structure is combined with cross-stage feature aggregation and coarse-to-fine supervision.

UniPose [4] combined bounding box generation and pose estimation in a single-pass network. This was achieved with the WASP module, which significantly increases the multi-scale representation and FOV of the network, allowing the method to extract a greater amount of contextual information.

More recently, the HRNet structure was combined with multi-resolution pyramids in [12] to further explore multi-scale features. The Distribution-Aware coordinate Representation of Keypoints (DARK) method [38] aims to reduce the error introduced when the decoder stage converts heatmaps into keypoint coordinates with an HRNet backbone.

Aiming to use contextual information for pose estimation, Cascade Prediction Fusion (CPF) [39] uses graphical components to exploit context. Similarly, Cascade Feature Aggregation (CFA) [30] uses semantic information for pose estimation with a cascade approach. In a related context, Generative Adversarial Networks (GANs) were used in [7] to learn dependencies and contextual information for pose estimation.

2.1. Multi-Scale Feature Representations

A challenge for CNN-based pose estimation, as well as for semantic segmentation methods, is the significant reduction of resolution caused by pooling. Fully Convolutional Networks (FCN) [21] addressed this problem by deploying upsampling strategies across deconvolution layers that increase the size of the feature maps back to the dimensions of the input image. In DeepLab, dilated or atrous convolutions [8] were used to increase the size of the receptive fields in the network and avoid downsampling in a multi-scale framework. The Atrous Spatial Pyramid Pooling (ASPP) approach assembles atrous convolutions with different rates in four parallel branches, whose outputs are combined and then upsampled by a factor of eight with fast bilinear interpolation. This configuration recovers the feature maps at the original image resolution. The increased resolution and FOV of the ASPP network can benefit the contextual detection of body parts during pose estimation.
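
As a minimal illustration of why atrous convolutions enlarge the FOV without adding weights, the following pure-Python sketch implements a 1-D, single-channel atrous convolution and computes the span of ASPP-style parallel branches. It is not taken from DeepLab. The rates 6, 12, 18, 24 are an illustrative assumption, and a real ASPP operates on 2-D, multi-channel feature maps.

```python
def effective_kernel_size(k, d):
    """Input span covered by a k-tap kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def dilated_conv1d(x, w, d):
    """'Valid' 1-D atrous convolution (cross-correlation, as in CNN layers).

    Tap j of the kernel reads x[i + j * d], so a 3-tap kernel with rate d
    covers 2 * d + 1 samples while still using only 3 weights.
    """
    span = effective_kernel_size(len(w), d)
    return [
        sum(w[j] * x[i + j * d] for j in range(len(w)))
        for i in range(len(x) - span + 1)
    ]


# ASPP-style layout: four parallel branches all read the same input, so the
# field of view of each branch is simply its own effective kernel size.
ASPP_RATES = [6, 12, 18, 24]  # illustrative rates (assumption)
parallel_fov = [effective_kernel_size(3, d) for d in ASPP_RATES]
print(parallel_fov)  # [13, 25, 37, 49]
```

For example, `dilated_conv1d(list(range(10)), [1, 0, -1], 2)` compares samples four positions apart and returns `[-4, -4, -4, -4, -4, -4]`.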

Improving upon [8], the waterfall architecture of the WASP module incorporates multi-scale features without immediately parallelizing the input stream [3], [4]. Instead, it creates a waterfall flow by first processing the input through a filter and then creating a new branch. The WASP module goes beyond the cascade approach by combining the streams from all its branches with an average pooling of the original input to achieve a multi-scale representation.

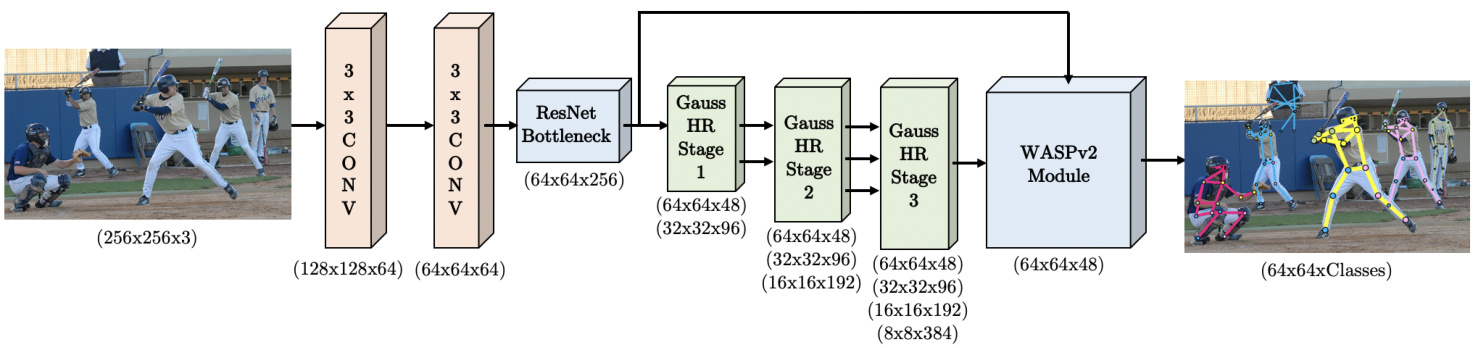

Figure 2. OmniPose framework for multi-person pose estimation. The input color image is fed through the improved HRNet backbone and WASPv2 module to generate one heatmap per joint or class.

3. OmniPose Architecture

The proposed OmniPose framework, illustrated in Figure 2, is a single-pass network with a single output branch for pose estimation of multiple person instances. OmniPose incorporates improvements in feature representation from multi-scale approaches [31], [38] and an encoder-decoder structure combined with spatial pyramid pooling [10] and our proposed advanced waterfall module (WASPv2).

The processing pipeline of the OmniPose architecture is shown in Figure 2. The input image is first fed into a deep CNN backbone, our modified version of HRNet [31]. The resulting feature maps are processed by our WASPv2 decoder module, which generates K heatmaps, one for each joint, with the corresponding confidence maps. The integrated WASPv2 decoder generates detections for both visible and occluded joints while maintaining high resolution throughout the network.

Our architecture includes several innovations to increase accuracy. The first is the use of atrous convolutions in the waterfall architecture of the WASPv2 module, which increases the network's capacity to compute multi-scale contextual information. This is accomplished by probing the feature maps at multiple dilation rates, resulting in a larger FOV in the encoder. Second, our architecture integrates the decoding process within the WASPv2 module without requiring a separate decoder. Additionally, our network shows a good ability to detect shapes by combining spatial pyramids with our modified HRNet feature extraction, as indicated by its state-of-the-art (SOTA) results. Finally, the modularity of the OmniPose framework enables easy implementation and training.

OmniPose leverages the large number of feature maps at multiple scales in the proposed WASPv2 module. In addition, we improved the backbone network by replacing the upsampling operations in the transition stages of the original HRNet architecture with Gaussian-modulated deconvolutions. The modified HRNet feature extractor is followed by the improved multi-scale waterfall configuration of the WASPv2 decoder. This configuration further improves joint detection by applying Gaussian heatmap modulation at the decoder stage and by fully integrating the decoder into the module.

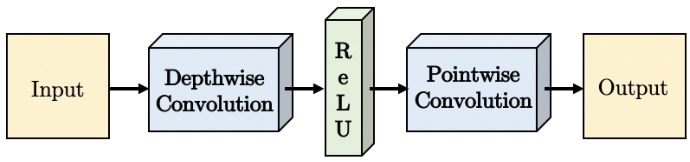

To reduce the computational cost and the number of parameters, we use separable convolutions to replace the initial two strided convolution layers of our model and the atrous convolutions in the WASPv2 module. Figure 3 shows the implementation of the separable strided convolution. It consists of a spatial (depth-wise) convolution over the individual channels of the feature maps, followed by a rectified linear unit (ReLU) activation function and a point-wise convolution that combines all the channels of the feature maps.
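
The following is a minimal pure-Python sketch of the depth-wise, ReLU, and point-wise structure in Figure 3, together with the weight counts it saves. The nested-list tensor layout, the function names, and the absence of biases and normalization are assumptions for illustration only. This is not OmniPose's implementation.

```python
def conv2d_single(channel, kernel, stride):
    """'Valid' 2-D cross-correlation of one channel with one k x k kernel."""
    k = len(kernel)
    out_h = (len(channel) - k) // stride + 1
    out_w = (len(channel[0]) - k) // stride + 1
    return [
        [
            sum(kernel[a][b] * channel[i * stride + a][j * stride + b]
                for a in range(k) for b in range(k))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def separable_conv(x, depthwise, pointwise, stride=2):
    """Depth-wise conv -> ReLU -> point-wise (1x1) conv.

    x:         input feature maps, shape [C_in][H][W]
    depthwise: one k x k kernel per input channel (no channel mixing)
    pointwise: weights of shape [C_out][C_in], mixing channels per pixel
    """
    # 1) spatial filtering, each channel with its own kernel
    dw = [conv2d_single(ch, ker, stride) for ch, ker in zip(x, depthwise)]
    # 2) ReLU between the two stages
    dw = [[[max(0.0, v) for v in row] for row in ch] for ch in dw]
    h, w = len(dw[0]), len(dw[0][0])
    # 3) 1x1 convolution combining all channels at every position
    return [
        [[sum(p[c] * dw[c][i][j] for c in range(len(dw))) for j in range(w)]
         for i in range(h)]
        for p in pointwise
    ]


def weight_counts(k, c_in, c_out):
    """Weights (biases ignored) of a standard vs. a depth-wise separable conv."""
    standard = k * k * c_in * c_out
    separable = k * k * c_in + c_in * c_out
    return standard, separable


std, sep = weight_counts(3, 64, 64)
print(std, sep, round(sep / std, 3))  # 36864 4672 0.127
```

For k = 3 and 64 input and output channels, the separable layer needs 4,672 weights instead of 36,864. The ratio equals 1/C_out + 1/k² (about 0.127), which is the kind of saving the separable layers are meant to provide.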

Figure 3. Implementation of our separable convolution. The cascade of depth-wise convolution, ReLU activation, and point-wise convolution replace the standard convolution in order to reduce the number of parameters and computations in the network.

3.1. WASPv2 Module

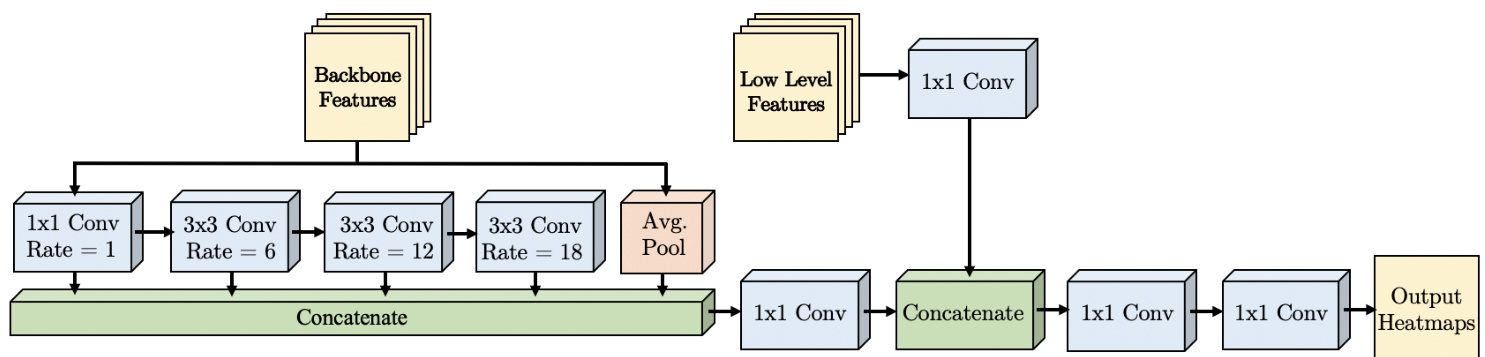

The proposed advanced “Waterfall Atrous Spatial Pyramid” module, or WASPv2, shown in Figure 4, generates an efficient multi-scale representation that helps OmniPose achieve SOTA results. Our improved WASPv2 module expands the feature extraction through its multi-level architecture. It increases the FOV of the network with consistent high resolution processing of the feature maps in all its branches, which contributes to higher accuracy. In addition, WASPv2 generates the final heatmaps for joint localization without the requirement of an additional decoder module, interpolation or pooling operations.

Figure 4. The proposed WASPv2 advanced waterfall module. The inputs are 48 feature maps from the modified HRNet backbone and low-level features from the initial layers of the framework.

The WASPv2 architecture relies on atrous convolutions to maintain a large FOV, performing a cascade of atrous convolutions at increasing rates to gain efficiency. In contrast to ASPP [10], WASPv2 does not immediately parallelize the input stream. Instead, it creates a waterfall flow by first processing the input through a filter and then creating a new branch. In addition, WASPv2 goes beyond the cascade approach by combining the streams from all its branches with an average pooling of the original input to achieve a multi-scale representation.
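
One rough way to see why the cascade is efficient is to compare receptive fields. The sketch below assumes stride-1 3×3 atrous layers and the dilation rates $d_i = [1, 6, 12, 18]$ listed for WASPv2. It counts the span along one axis only and ignores the backbone's own receptive field. Treat it as a back-of-the-envelope calculation, not a measurement of the actual network.

```python
def effective_kernel_size(k, d):
    """Span of a k-tap kernel with dilation d along one axis."""
    return k + (k - 1) * (d - 1)


def parallel_fov(rates, k=3):
    """ASPP-style: every branch filters the module input directly."""
    return [effective_kernel_size(k, d) for d in rates]


def waterfall_fov(rates, k=3):
    """Waterfall-style cascade: branch i filters the output of branch i - 1.

    For stride-1 layers, stacking grows the receptive field additively:
    RF_i = RF_{i-1} + (effective kernel size of layer i) - 1.
    """
    fov, rf = [], 1
    for d in rates:
        rf += effective_kernel_size(k, d) - 1
        fov.append(rf)
    return fov


RATES = [1, 6, 12, 18]  # dilation rates d_i given for WASPv2
print(parallel_fov(RATES))   # [3, 13, 25, 37]
print(waterfall_fov(RATES))  # [3, 15, 39, 75]
```

With the same four rates, the deepest waterfall branch spans 75 pixels per axis, versus 37 for the widest parallel branch. Each branch still uses a single 3×3 kernel.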

Expanding upon the original WASP module [4], WASPv2 incorporates the decoder in an integrated unit, shown in Figure 4. It processes both the waterfall branches, with their different dilation rates, and the low-level features at the same, higher resolution, resulting in a more accurate and refined response. The WASPv2 module output $f_{\text{WASPv2}}$ is described as follows:

$$
f_{\text{Waterfall}} = K_{1} \circledast \left( \sum_{i=1}^{4} \left( K_{d_{i}} \circledast f_{i-1} \right) + AP(f_{0}) \right)
$$

$$
f_{\text{WASPv2}} = K_{1} \circledast \left( K_{1} \circledast f_{LLF} + f_{\text{Waterfall}} \right)
$$

where $\circledast$ represents convolution, $f_{0}$ is the input feature map, $f_{i}$ is the feature map resulting from the $i$-th atrous convolution, $AP$ is the average pooling operation, and $f_{LLF}$ are the low-level feature maps. $K_{1}$ and $K_{d_{i}}$ represent convolutions with kernel sizes $1\times1$ and $3\times3$, respectively, the latter with dilation rates $d_{i} = [1, 6, 12, 18]$, as shown in Figure 4. After concatenation, the feature maps are combined with the low-level features. The last $1\times1$ convolution reduces the number of feature maps to the number of joints for pose estimation.
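
To make the data flow of the two WASPv2 equations concrete, here is a deliberately tiny sketch. It is a single-channel, 1-D toy in which each $K_{d_i}$ is a 3-tap atrous kernel and each $K_1$ (a 1×1 convolution) reduces to a scalar weight. Several choices are assumptions rather than details from the text:

- $AP$ is treated as global average pooling broadcast back to full length.
- The branches are summed, following the equations as written.
- All function names are hypothetical.

The actual module operates on multi-channel 2-D feature maps.

```python
def atrous_same(x, w, d):
    """3-tap 1-D atrous convolution with zero 'same' padding."""
    n = len(x)

    def at(i):
        return x[i] if 0 <= i < n else 0.0

    return [w[0] * at(i - d) + w[1] * at(i) + w[2] * at(i + d) for i in range(n)]


def global_avg_pool(x):
    """Assumed form of AP(f_0): global mean broadcast back to the input length."""
    mean = sum(x) / len(x)
    return [mean] * len(x)


def waspv2_1d(f0, f_llf, kernels, rates=(1, 6, 12, 18), k1=(1.0, 1.0, 1.0)):
    """Single-channel 1-D toy of the two WASPv2 equations (hypothetical helper).

    kernels: four 3-tap kernels standing in for K_{d_i}
    k1:      three scalars standing in for the 1x1 convolutions K_1
    """
    # Waterfall: f_i = K_{d_i} * f_{i-1}, so each branch feeds the next one.
    branches, f = [], f0
    for w, d in zip(kernels, rates):
        f = atrous_same(f, w, d)
        branches.append(f)
    pooled = global_avg_pool(f0)
    # f_Waterfall = K_1 * (sum_i K_{d_i} * f_{i-1} + AP(f_0))
    f_waterfall = [k1[0] * (sum(col) + p) for col, p in zip(zip(*branches), pooled)]
    # f_WASPv2 = K_1 * (K_1 * f_LLF + f_Waterfall)
    return [k1[2] * (k1[1] * l + v) for l, v in zip(f_llf, f_waterfall)]


identity = [0.0, 1.0, 0.0]
out = waspv2_1d([1.0, 2.0, 3.0, 4.0], [0.0] * 4, [identity] * 4)
print(out)  # [6.5, 10.5, 14.5, 18.5]
```

With identity kernels every branch reproduces $f_0$, so the output is $4 f_0$ plus the pooled mean (2.5), which makes the summation structure easy to check.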

Unlike the previous version of WASP, our WASPv2 integrates the feature maps from the low-level features and the first part of the waterfall module at the same resolution, converting the score maps from the WASPv2 module into heatmaps corresponding to body joints. Due to the higher resolution afforded by the modified HRNet backbone, the WASPv2 module directly outputs the final heatmaps without requiring an additional decoder module or bilinear interpolation to resize the output to the original input size.

Aiming to reduce the computational complexity and size of the network, and inspired by [10], our WASPv2 module uses separable atrous convolutions in its feature extraction waterfall branches. This further reduces the number of parameters and the computational cost of the framework.

3.2. Gaussian Heatmap Modulation

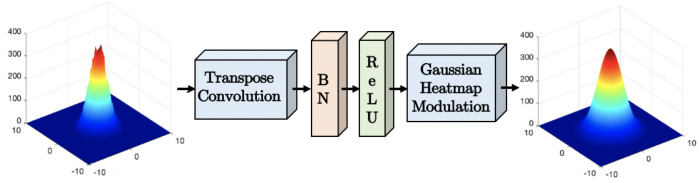

Conventional interpolation or upsampling methods in the decoding stage of the network cause an inevitable loss of resolution and, consequently, of accuracy, limiting the potential of the network. Motivated by recent results with distribution-aware modulation [38], we apply Gaussian heatmap modulation in all interpolation stages of our network. The result is a more accurate and robust network that reduces the localization error caused by interpolation.

Gaussian interpolation allows the network to achieve sub-pixel resolution in peak localization by following the expected Gaussian pattern of the feature response. This produces a smoother response and more accurate peak prediction for joints, because it suppresses false positives from noisy responses during joint detection.
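
The section does not detail how the sub-pixel peak is read out. One standard technique is shown here only as a stand-in; DARK [38] instead uses a Taylor expansion of the log-heatmap around its maximum. The technique relies on the fact that the logarithm of a Gaussian is a parabola. Fitting a parabola, per axis, through the log-values at the integer maximum and its two neighbours recovers the exact sub-pixel peak of a noise-free Gaussian heatmap. The helper names are hypothetical.

```python
import math


def gaussian_heatmap(width, height, cx, cy, sigma):
    """Noise-free Gaussian heatmap with a (possibly sub-pixel) centre."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def parabola_offset(left, centre, right, eps=1e-10):
    """Vertex offset (in pixels) of a parabola fitted to three log-values."""
    la, lb, lc = (math.log(max(v, eps)) for v in (left, centre, right))
    denom = la - 2 * lb + lc
    return 0.0 if denom == 0 else 0.5 * (la - lc) / denom


def subpixel_peak(hm):
    """Integer argmax refined along x and y by a log-parabola fit."""
    h, w = len(hm), len(hm[0])
    py, px = max(((y, x) for y in range(h) for x in range(w)),
                 key=lambda p: hm[p[0]][p[1]])
    dx = parabola_offset(hm[py][px - 1], hm[py][px], hm[py][px + 1]) if 0 < px < w - 1 else 0.0
    dy = parabola_offset(hm[py - 1][px], hm[py][px], hm[py + 1][px]) if 0 < py < h - 1 else 0.0
    return px + dx, py + dy


hm = gaussian_heatmap(32, 32, cx=10.3, cy=20.7, sigma=3.0)
print(subpixel_peak(hm))  # approximately (10.3, 20.7)
```

A plain argmax on this heatmap would return (10, 21), an error of 0.3 pixels per axis. That quantization error is what sub-pixel localization removes.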

Figure 5 illustrates the modulation of a feature map response in our improved HRNet feature extractor. We apply a transposed convolution to the feature map $f_{D}$ with a Gaussian kernel $K$, shown in Equation (2), so that the shape of the response approximates the expected label of the dataset during training. The feature maps $f_{G}$ after the Gaussian convolution are:

$$
f_{G} = K \circledast f_{D} \tag{2}
$$

This behavior is learned and reproduced by the network during all parts of training, validation, and testing.

Following convolution with the Gaussian kernel, the modulated interpolation output is rescaled to $f_{G_{s}}$ by mapping $f_{G}$ back to the response range of the original feature map $f_{D}$, i.e., onto $[0, \max(f_{D})]$:

$$
f_{G_{s}} = \frac{f_{G} - \min(f_{G})}{\max(f_{G}) - \min(f_{G})} \cdot \max(f_{D})
$$

Our Gaussian heatmap modulation approach allows for better localization of the coordinates during the transposed convolutions, by overcoming the quantization error naturally inherited from the increase in resolution.
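
A minimal pure-Python sketch of Equation (2) and the subsequent rescaling follows. It applies a stride-2 transposed convolution with a fixed, normalized Gaussian kernel, then min-max rescales the result onto $[0, \max(f_D)]$. The kernel size (3×3), $\sigma = 1$, the stride, and the lack of padding or cropping are assumptions for illustration. The text indicates that this behavior is learned during training, which this fixed-kernel sketch does not model.

```python
import math


def gaussian_kernel(size, sigma):
    """Normalised size x size Gaussian kernel K."""
    c = (size - 1) / 2
    k = [[math.exp(-((i - c) ** 2 + (j - c) ** 2) / (2 * sigma ** 2))
          for j in range(size)] for i in range(size)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def transposed_conv2d(f, kernel, stride=2):
    """f_G = K (*) f_D as a scatter-add transposed convolution (no padding/cropping).

    Each input value is spread over the output through the Gaussian kernel,
    so the upsampled map gets a smooth, Gaussian-shaped response.
    """
    h, w, k = len(f), len(f[0]), len(kernel)
    out = [[0.0] * ((w - 1) * stride + k) for _ in range((h - 1) * stride + k)]
    for i in range(h):
        for j in range(w):
            for a in range(k):
                for b in range(k):
                    out[i * stride + a][j * stride + b] += f[i][j] * kernel[a][b]
    return out


def rescale(f_g, f_d):
    """Rescaling step: min-max normalise f_G, then scale by max(f_D)."""
    lo = min(map(min, f_g))
    hi = max(map(max, f_g))
    peak = max(map(max, f_d))
    if hi == lo:  # constant map: the rescaling is undefined, return zeros
        return [[0.0] * len(row) for row in f_g]
    return [[(v - lo) / (hi - lo) * peak for v in row] for row in f_g]


f_d = [[0.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 0.0]]
f_gs = rescale(transposed_conv2d(f_d, gaussian_kernel(3, sigma=1.0)), f_d)
print(len(f_gs), len(f_gs[0]), f_gs[3][3])  # 7 7 2.0
```

The 3×3 input is upsampled to 7×7. Its single peak becomes a Gaussian bump whose maximum is restored to the original peak value of 2.0 by the rescaling.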

Figure 5. Illustration of the proposed transposed convolution with Gaussian modulation, which replaces the upsampling stages of the network.

3.3. OmniPose-Lite

We introduce OmniPose-Lite, a lightweight version of OmniPose suitable for mobile and embedded platforms, which drastically reduces the memory requirements and the number of operations required for computation. Inspired by the results of MobileNet [17], OmniPose-Lite leverages the reduced computational complexity and size of separable convolutions.

We implemented separable strided convolutions, as shown in Figure 3, for all convolutional layers of the original HRNet backbone, and atrous separable convolutions in the WASPv2 decoder. This reduces the network's computation by 74.3%, from 22.6 GFLOPs to 5.8 GFLOPs for an input image of size 256×256. In addition, OmniPose-Lite reduces the number of parameters by 71.4%, from 67.9M to 19.4M.
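
The quoted reductions follow directly from the reported totals. A quick arithmetic check, using only the numbers stated above:

```python
def percent_reduction(before, after):
    """Relative reduction in percent."""
    return 100.0 * (before - after) / before


gflops_cut = percent_reduction(22.6, 5.8)   # GFLOPs for a 256x256 input
params_cut = percent_reduction(67.9, 19.4)  # parameters, in millions
print(f"{gflops_cut:.1f}% fewer GFLOPs, {params_cut:.1f}% fewer parameters")
# 74.3% fewer GFLOPs, 71.4% fewer parameters
```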

The reduced number of operations of OmniPose-Lite, combined with its smaller number of parameters, allows the OmniPose architecture to be deployed in mobile applications without a large computational burden.

4. Datasets

We performed multi-person experiments on two datasets: Common Objects in Context (COCO) [20] and MPII [2]. The COCO dataset [20] is composed of over 200K images containing over 250K instances of the person class. The labelled poses contain 17 keypoints. It is considered a challenging dataset due to the large number of images with a diverse set of scales and occlusions for poses in the wild.

The MPII dataset [2] contains approximately 25K images with annotated body joints of over 40K subjects. The images are collected from YouTube videos covering 410 everyday activities. The dataset contains frames with joint annotations, head and torso orientations, and body part occlusions.

In order to better train our network for joint detection, ideal Gaussian maps were generated at the joint locations in the ground truth. These maps are more effective for training than single points at the joint locations, and were used to train our network to generate Gaussian heatmaps corresponding to the location of each joint. Gaussians with different $\sigma$ values were considered and a value of $\sigma=3$ was adopted, resulting in a well defined Gaussian curve for both the ground truth and predicted outputs. This value of $\sigma$ also allows enough separation between joints in the image.
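
A minimal sketch of the ground-truth targets described above follows, assuming heatmap-pixel coordinates and $\sigma = 3$. Returning an all-zero map for an unannotated joint is an assumption not stated in the text. The function names are hypothetical.

```python
import math


def gaussian_target(width, height, joint, sigma=3.0):
    """Ideal ground-truth heatmap: a 2-D Gaussian centred on the joint.

    joint: (x, y) in heatmap pixels, or None for an unannotated joint, which
    yields an all-zero map (an assumption, not specified in the text).
    """
    if joint is None:
        return [[0.0] * width for _ in range(height)]
    jx, jy = joint
    return [
        [math.exp(-((x - jx) ** 2 + (y - jy) ** 2) / (2 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def make_targets(joints, width, height, sigma=3.0):
    """One target heatmap per joint, matching one predicted heatmap per joint."""
    return [gaussian_target(width, height, j, sigma) for j in joints]


targets = make_targets([(20, 10), (29, 10), None], width=64, height=48)
print(round(targets[0][10][23], 3))  # one sigma away: exp(-0.5), about 0.607
print(round(targets[0][10][29], 3))  # 9 px (3 sigma) away: about 0.011
```

At 3σ = 9 pixels a Gaussian has fallen to about 1% of its peak. This is one way to read the remark that σ = 3 leaves enough separation between joints.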

5. Experiments

OmniPose experiments follow the metrics defined by each dataset and the procedures applied in [31], [12], and [38].

5.1. Metrics

For the evaluation of OmniPose, various metrics were used depending on previously reported results and the available ground truth for each dataset. The first metric used is the Percentage of Correct Keypoints (PCK). This metric considers the prediction of