Are Transformers More Robust Than CNNs?

Transformer比CNN更鲁棒吗?

Abstract

摘要

Transformer emerges as a powerful tool for visual recognition. In addition to demonstrating competitive performance on a broad range of visual benchmarks, recent works also argue that Transformers are much more robust than Convolutions Neural Networks (CNNs). Nonetheless, surprisingly, we find these conclusions are drawn from unfair experimental settings, where Transformers and CNNs are compared at different scales and are applied with distinct training frameworks. In this paper, we aim to provide the first fair in-depth comparisons between Transformers and CNNs, focusing on robustness evaluations.

Transformer 成为视觉识别的强大工具。除了在广泛的视觉基准测试中展现出有竞争力的性能外,近期研究还认为 Transformer 比卷积神经网络 (CNN) 更加鲁棒。然而,令人惊讶的是,我们发现这些结论源自不公平的实验设置——Transformer 和 CNN 在不同规模下进行比较,并采用了不同的训练框架。本文旨在首次公平 深入地比较 Transformer 和 CNN,重点关注鲁棒性评估。

With our unified training setup, we first challenge the previous belief that Transformers outshine CNNs when measuring adversarial robustness. More surprisingly, we find CNNs can easily be as robust as Transformers on defending against adversarial attacks, if they properly adopt Transformers’ training recipes. While regarding generalization on out-of-distribution samples, we show pretraining on (external) large-scale datasets is not a fundamental request for enabling Transformers to achieve better performance than CNNs. Moreover, our ablations suggest such stronger generalization is largely benefited by the Transformer’s self-attention-like architectures per se, rather than by other training setups. We hope this work can help the community better understand and benchmark the robustness of Transformers and CNNs. The code and models are publicly available at https://github.com/ytongbai/ViTs-vs-CNNs.

通过统一的训练设置,我们首先挑战了先前关于Transformer在对抗鲁棒性方面优于CNN的观点。更令人惊讶的是,我们发现如果CNN适当采用Transformer的训练方案,它们可以轻松达到与Transformer相当的对抗攻击防御能力。而在分布外样本的泛化性方面,我们证明(外部)大规模数据集的预训练并非使Transformer性能超越CNN的必要条件。此外,消融实验表明,这种更强的泛化能力主要得益于Transformer本身的自注意力架构,而非其他训练设置。我们希望这项工作能帮助学界更好地理解和评估Transformer与CNN的鲁棒性。代码和模型已开源:https://github.com/ytongbai/ViTs-vs-CNNs。

1 Introduction

1 引言

Convolutional Neural Networks (CNNs) have been the widely-used architecture for visual recognition in recent years [16, 21, 22, 36, 38]. It is commonly believed the key to such success is the usage of the convolutional operation, as it introduces several useful inductive biases (e.g., translation equivalence) to models for benefiting object recognition. Interestingly, recent works alternatively suggest that it is also possible to build successful recognition models without convolutions [3, 32, 56]. The most representative work in this direction is Vision Transformer (ViT) [12], which applies the pure self-attention-based architecture to sequences of images patches and attains competitive performance on the challenging ImageNet classification task [33] compared to CNNs. Later works [26, 45] further expand Transformers with compelling performance on other visual benchmarks, including COCO detection and instance segmentation [23], ADE20K semantic segmentation [57].

卷积神经网络 (Convolutional Neural Networks, CNNs) 近年来已成为视觉识别领域广泛采用的架构 [16, 21, 22, 36, 38]。人们普遍认为其成功的关键在于卷积运算的运用,因为它为物体识别模型引入了若干有益的归纳偏置 (inductive biases) (例如平移等效性)。有趣的是,近期研究提出另一种可能性:无需卷积也能构建成功的识别模型 [3, 32, 56]。该方向最具代表性的工作是 Vision Transformer (ViT) [12],它将纯基于自注意力 (self-attention) 的架构应用于图像块序列,在具有挑战性的 ImageNet 分类任务 [33] 上取得了与 CNN 相媲美的性能。后续研究 [26, 45] 进一步扩展了 Transformer 在其他视觉基准测试中的卓越表现,包括 COCO 检测与实例分割 [23]、ADE20K 语义分割 [57]。

The dominion of CNNs on visual recognition is further challenged by the recent findings that Transformers appear to be much more robust than CNNs. For example, Shao et al. [35] observe that the usage of convolutions may introduce a negative effect on models’ adversarial robustness, while migrating to Transformer-like architectures (e.g., the Conv-Transformer hybrid model or the pure Transformer) can help secure models’ adversarial robustness. Similarly, B hoja napa lli et al. [4] report that, if pre-trained on sufficiently large datasets, Transformers exhibit considerably stronger robustness than CNNs on a spectrum of out-of-distribution tests (e.g., common image corruptions [17], texture-shape cue conflicting stimuli [13]).

CNN在视觉识别领域的统治地位正受到Transformer表现更稳健的最新研究挑战。例如,Shao等人[35]发现卷积结构可能对模型对抗鲁棒性产生负面影响,而转向类Transformer架构(如卷积-Transformer混合模型或纯Transformer)能有效提升对抗鲁棒性。同样,Bhojanapalli等人[4]指出,当在足够大规模数据集上预训练时,Transformer在一系列分布外测试(如图像损坏[17]、纹理-形状线索冲突刺激[13])中展现出显著优于CNN的鲁棒性。

35th Conference on Neural Information Processing Systems (NeurIPS 2021), Sydney, Australia.

第35届神经信息处理系统大会 (NeurIPS 2021),澳大利亚悉尼。

Though both [4] and [35] claim that Transformers are preferable to CNNs in terms of robustness, we find that such conclusion cannot be strongly drawn based on their existing experiments. Firstly, Transformers and CNNs are not compared at the same model scale, e.g., a small CNN, ResNet50 ${\sim}25$ million parameters), by default is compared to a much larger Transformer, ViT-B ( ${\sim}86$ million parameters), for these robustness evaluations. Secondly, the training frameworks applied to Transformers and CNNs are distinct from each other (e.g., training datasets, number of epochs, and augmentation strategies are all different), while little efforts are devoted on ablating the corresponding effects. In a nutshell, due to these inconsistent and unfair experiment settings, it remains an open question whether Transformers are truly more robust than CNNs.

尽管[4]和[35]都声称Transformer在鲁棒性方面优于CNN,但我们发现基于现有实验无法得出这一强结论。首先,Transformer与CNN的模型规模未保持一致,例如默认将小型CNN(ResNet50,约2500万参数)与更大的Transformer(ViT-B,约8600万参数)进行鲁棒性评估。其次,两者采用的训练框架存在差异(如训练数据集、训练轮次和数据增强策略均不同),却鲜少研究对这些影响因素进行消融实验。简而言之,由于这些不一致且不公平的实验设置,Transformer是否真正比CNN更具鲁棒性仍是一个悬而未决的问题。

To answer it, in this paper, we aim to provide the first benchmark to fairly compare Transformers to CNNs in robustness evaluations. We particularly focus on the comparisons between Small Data-efficient image Transformer (DeiT-S) [41] and ResNet-50 [16], as they have similar model capacity (i.e., $\sim22$ million parameters vs. ${\sim}25$ million parameters) and achieve similar performance on ImageNet (i.e., $76.8%$ top-1 accuracy vs. $76.9%$ top-1 accuracy1). Our evaluation suite accesses model robustness in two ways: 1) adversarial robustness, where the attackers can actively and aggressively manipulate inputs to approximate the worst-case scenario; 2) generalization on out-of-distribution samples, including common image corruptions (ImageNet-C [17]), texture-shape cue conflicting stimuli (Stylized-ImageNet [13]) and natural adversarial examples (ImageNet-A [19]).

为了回答这个问题,本文旨在提供首个公平比较Transformer与CNN在鲁棒性评估中的基准。我们特别关注小型数据高效图像Transformer (DeiT-S) [41] 与ResNet-50 [16] 的对比,因为二者具有相近的模型容量(即$\sim22$百万参数 vs. ${\sim}25$百万参数)且在ImageNet上表现相当(即$76.8%$ top-1准确率 vs. $76.9%$ top-1准确率1)。我们的评估套件通过两种方式检验模型鲁棒性:1) 对抗鲁棒性,攻击者可主动激进地操纵输入以模拟最坏情况;2) 分布外样本的泛化能力,包括常见图像损坏(ImageNet-C [17])、纹理-形状线索冲突刺激(Stylized-ImageNet [13])和自然对抗样本(ImageNet-A [19])。

With this unified training setup, we present a completely different picture from previous ones [4, 35]. Regarding adversarial robustness, we find that Transformers actually are no more robust than CNNs— if CNNs are allowed to properly adopt Transformers’ training recipes, then these two types of models will attain similar robustness on defending against both perturbation-based adversarial attacks and patch-based adversarial attacks. While for generalization on out-of-distribution samples, we find Transformers can still substantially outperform CNNs even without the needs of pre-training on sufficiently large (external) datasets. Additionally, our ablations show that adopting Transformer’s self-attention-like architecture is the key for achieving strong robustness on these out-of-distribution samples, while tuning other training setups will only yield subtle effects here. We hope this work can serve as a useful benchmark for future explorations on robustness, using different network architectures, like CNNs, Transformers, and beyond [24, 40].

通过这种统一的训练设置,我们呈现出了与之前研究[4,35]截然不同的图景。在对抗鲁棒性方面,我们发现若允许CNN(卷积神经网络)充分采用Transformer的训练方案,这两类模型在抵御基于扰动的对抗攻击和基于补丁的对抗攻击时,其鲁棒性表现将趋于相当。而在分布外样本的泛化能力上,即使无需在足够大规模(外部)数据集上进行预训练,Transformer仍能显著优于CNN。此外,我们的消融实验表明,采用类Transformer的自注意力架构是实现分布外样本强鲁棒性的关键,而调整其他训练设置仅会产生细微影响。我们希望这项工作能为未来探索CNN、Transformer等不同网络架构的鲁棒性研究提供有价值的基准[24,40]。

2 Related Works

2 相关工作

Vision Transformer. Transformers, invented by Vaswani et al. in 2017 [44], have largely advanced the field of natural language processing (NLP). With the introduction of self-attention module, Transformer can effectively capture the non-local relationships between all input sequence elements, achieving the state-of-the-art performance on numerous NLP tasks [5, 10, 11, 29, 30, 51].

Vision Transformer。Transformer 由 Vaswani 等人在 2017 年提出 [44],极大地推动了自然语言处理 (NLP) 领域的发展。通过引入自注意力 (self-attention) 模块,Transformer 能够有效捕捉所有输入序列元素之间的非局部关系,在众多 NLP 任务上实现了最先进的性能 [5, 10, 11, 29, 30, 51]。

The success of Transformer on NLP also starts to get witnessed in computer vision. The pioneering work, ViT [12], demonstrates that the pure Transformer architectures are able to achieve exciting results on several visual benchmarks, especially when extremely large datasets (e.g., JFT-300M [37]) are available for pre-training. This work is then subsequently improved by carefully curating the training pipeline and the distillation strategy to Transformers [41], enhancing the Transformers’ token iz ation module [52], building multi-resolution feature maps on Transformers [26, 45], designing parameter-efficient Transformers for scaling [43, 49, 54], etc. In this work, rather than focusing on furthering Transformers on standard visual benchmarks, we aim to provide a fair and comprehensive study of their performance when testing out of the box.

Transformer在NLP领域的成功也开始在计算机视觉领域得到验证。开创性工作ViT [12]证明,纯Transformer架构能够在多个视觉基准测试上取得令人振奋的结果,尤其是在使用超大规模数据集(如JFT-300M [37])进行预训练时。后续研究通过精心设计训练流程和蒸馏策略[41]、改进Transformer的token化模块[52]、构建多分辨率特征图[26,45]、设计参数高效的扩展型Transformer[43,49,54]等方式持续优化该架构。本研究不专注于提升Transformer在标准视觉基准上的表现,而是旨在对其开箱即用的性能进行公平全面的评估。

Robustness Evaluations. Conventional learning paradigm assumes training data and testing data are drawn from the same distribution. This assumption generally does not hold, especially in the real-world case where the underlying distribution is too complicated to be covered in a (limitedsized) dataset. To properly access model performance in the wild, a set of robustness generalization benchmarks have been built, e.g., ImageNet-C [17], Stylized-ImageNet [13], ImageNet-A [19], etc. Another standard surrogate for testing model robustness is via adversarial attacks, where the attackers deliberately add small perturbations or patches to input images, for approximating the worst-case evaluation scenario [14, 39]. In this work, both robustness generalization and adversarial robustness are considered in our robustness evaluation suite.

稳健性评估。传统学习范式假设训练数据和测试数据来自同一分布。这一假设通常不成立,尤其在现实场景中,底层分布过于复杂而无法被(有限规模的)数据集完全覆盖。为准确评估模型在真实环境中的表现,研究者构建了一系列稳健性泛化基准,例如ImageNet-C [17]、Stylized-ImageNet [13]、ImageNet-A [19]等。另一种测试模型稳健性的标准替代方法是通过对抗攻击,攻击者故意在输入图像中添加微小扰动或补丁,以模拟最坏情况评估场景[14, 39]。本工作中,我们的稳健性评估套件同时考虑了泛化稳健性和对抗稳健性。

Concurrent to ours, both B hoja napa lli et al. [4] and Shao et al. [35] conduct robustness comparisons between Transformers and CNNs. Nonetheless, we find their experimental settings are unfair, e.g., models are compared at different capacity [4, 35] or are trained under distinct frameworks [35]. In this work, our comparison carefully align the model capacity and the training setups, which draws completely different conclusions from the previous ones.

与我们同时,Bhojanapalli等人[4]和Shao等人[35]也对Transformer和CNN进行了鲁棒性比较。然而,我们发现他们的实验设置存在不公平之处,例如模型在不同容量[4,35]或不同框架下训练[35]的情况下进行比较。在本工作中,我们的比较仔细对齐了模型容量和训练设置,从而得出了与之前完全不同的结论。

3 Settings

3 设置

3.1 Training CNNs and Transformers

3.1 训练CNN和Transformer

Convolutional Neural Networks. ResNet [16] is a milestone architecture in the history of CNN. We choose its most popular instantiation, ResNet-50 (with ${\sim}25$ million parameters), as the default CNN architecture. To train CNNs on ImageNet, we follow the standard recipe of [15, 31]. Specifically, we train all CNNs for a total of 100 epochs, using momentum-SGD optimizer; we set the initial learning rate to 0.1, and decrease the learning rate by $10\times$ at the 30-th, 60-th, and 90-th epoch; no regular iz ation except weight decay is applied.

卷积神经网络。ResNet [16] 是CNN发展史上的里程碑架构。我们选择其最流行的实例化版本ResNet-50(约2500万参数)作为默认CNN架构。在ImageNet上训练CNN时,我们遵循[15, 31]的标准方案:使用动量SGD优化器训练100个epoch,初始学习率设为0.1,并在第30、60、90个epoch时将学习率降低10倍;除权重衰减外不施加其他正则化措施。

Vision Transformer. ViT [12] successfully introduces Transformers from natural language processing to computer vision, achieving excellent performance on several visual benchmarks compared to CNNs. In this paper, we follow the training recipe of DeiT [41], which successfully trains ViT on ImageNet without any external data, and set DeiT-S (with ${\sim}22$ million parameters) as the default Transformer architecture. Specifically, we train all Transformers using AdamW optimizer [27]; we set the initial learning rate to 5e-4, and apply the cosine learning rate scheduler to decrease it; besides weight decay, we additionally adopt three data augmentation strategies (i.e., RandAug [9], MixUp [55] and CutMix [53]) to regularize training (otherwise DeiT-S will attain significantly lower ImageNet accuracy due to over fitting [6]).

Vision Transformer。ViT [12] 成功将 Transformer 从自然语言处理领域引入计算机视觉,在多个视觉基准测试中相比 CNN 取得了优异表现。本文遵循 DeiT [41] 的训练方案(该方案无需外部数据即可在 ImageNet 上成功训练 ViT),并采用 DeiT-S(约 2200 万参数)作为默认 Transformer 架构。具体实现中,我们使用 AdamW 优化器 [27] 训练所有 Transformer:初始学习率设为 5e-4,并采用余弦学习率调度器进行衰减;除权重衰减外,还额外应用三种数据增强策略(RandAug [9]、MixUp [55] 和 CutMix [53])来规范训练(否则 DeiT-S 会因过拟合 [6] 导致 ImageNet 准确率显著下降)。

Note that different from the standard recipe of DeiT (which applies 300 training epochs by default), we hereby train Transformers only for a total of 100 epochs, i.e., same as the setup in ResNet. We also remove {Erasing, Stochastic Depth, Repeated Augmentation}, which were applied in the original DeiT framework, in this basic 100 epoch schedule, for preventing over-regular iz ation in training. Such trained DeiT-S yields $76.8%$ top-1 ImageNet accuracy, which is similar to the ResNet-50’s performance ( $76.9%$ top-1 ImageNet accuracy).

需要注意的是,与DeiT的标准配方(默认采用300个训练周期)不同,我们在此仅对Transformer进行了总计100个周期的训练,即与ResNet的设置相同。在此基础100周期训练计划中,我们还移除了原始DeiT框架中采用的{擦除、随机深度、重复增强}等策略,以防止训练过程中的过度正则化。经过这样训练的DeiT-S在ImageNet上达到了76.8%的top-1准确率,与ResNet-50的性能相近(76.9%的top-1 ImageNet准确率)。

3.2 Robustness Evaluations

3.2 鲁棒性评估

Our experiments mainly consider two types of robustness here, i.e., robustness on adversarial examples and robustness on out-of-distribution samples.

我们的实验主要考虑两种鲁棒性,即对抗样本 (adversarial examples) 的鲁棒性和分布外样本 (out-of-distribution samples) 的鲁棒性。

Adversarial Examples, which are crafted by adding human-imperceptible perturbations or smallsized patches to images, can lead deep neural networks to make wrong predictions. In addition to the very popular PGD attack [28], our robustness evaluation suite also contains: A) AutoAttack [8], which is an ensemble of diverse attacks (i.e., two variants of PGD attack, FAB attack [7] and Square Attack [1]) and is parameter-free; and B) Texture Patch Attack (TPA) [50], which uses a predefined texture dictionary of patches to fool deep neural networks.

对抗样本 (Adversarial Examples) 是通过在图像上添加人眼难以察觉的扰动或小块补丁来制作的,会导致深度神经网络做出错误预测。除了非常流行的 PGD 攻击 [28] 之外,我们的鲁棒性评估套件还包含:A) AutoAttack [8],这是一种由多种攻击组成的集成方法 (即两种 PGD 攻击变体、FAB 攻击 [7] 和 Square 攻击 [1]),且无需参数调整;以及 B) 纹理补丁攻击 (TPA) [50],它使用预定义的纹理补丁字典来欺骗深度神经网络。

Recently, several benchmarks of out-of-distribution samples have been proposed to evaluate how deep neural networks perform when testing out of the box. Particularly, our robustness evaluation suite contains three such benchmarks: A) ImageNet-A [19], which are real-world images but are collected from challenging recognition scenarios (e.g., occlusion, fog scene); B) ImageNet-C [17], which is designed for measuring model robustness against 75 distinct common image corruptions; and C) Stylized-ImageNet [13], which creates texture-shape cue conflicting stimuli by removing local texture cues from images while retaining their global shape information.

最近,为了评估深度神经网络在开箱即用测试中的表现,研究者们提出了多个分布外样本基准。具体而言,我们的鲁棒性评估套件包含三个此类基准:A) ImageNet-A [19],这些是真实世界的图像,但采集自具有挑战性的识别场景(例如遮挡、雾天场景);B) ImageNet-C [17],旨在衡量模型对75种不同常见图像损坏的鲁棒性;以及C) Stylized-ImageNet [13],通过移除图像的局部纹理线索同时保留其全局形状信息,创建了纹理-形状线索冲突的刺激。

4 Adversarial Robustness

4 对抗鲁棒性

In this section, we investigate the robustness of Transformers and CNNs on defending against adversarial attacks, using ImageNet validation set (with 50,000 images). We consider both perturbation-based attacks (i.e., PGD and AutoAttack) and patch-based attacks (i.e., TPA) for robustness evaluations.

在本节中,我们使用ImageNet验证集(包含50,000张图像)研究Transformer和CNN在防御对抗攻击方面的鲁棒性。我们考虑基于扰动的攻击(即PGD和AutoAttack)和基于补丁的攻击(即TPA)来进行鲁棒性评估。

4.1 Robustness to Perturbation-Based Attacks

4.1 抗扰动攻击鲁棒性

Following [35], we first report the robustness of ResNet-50 and DeiT-S on defending against AutoAttack. We verify that, when applying with a small perturbation radius $\epsilon=0.001$ , DeiT-S indeed achieves higher robustness than ResNet-50, i.e., $22.1%$ vs. $17.8%$ as shown in Table 1.

遵循[35],我们首先报告了ResNet-50和DeiT-S在防御AutoAttack方面的鲁棒性。验证发现,当应用较小的扰动半径$\epsilon=0.001$时,DeiT-S确实比ResNet-50具有更高的鲁棒性,即如表1所示,$22.1%$对比$17.8%$。

However, when increasing the perturbation radius to 4/255, a more challenging but standard case studied in previous works [34, 46, 47], both models will be circumvented completely, i.e., $0%$ robustness on defending against AutoAttack. This is mainly due to that both models are not adversarial ly trained [14, 28], which is an effective way to secure model robustness against adversarial attacks, and we will study it next.

然而,当扰动半径增加到4/255时(这是先前研究[34, 46, 47]中探讨的一个更具挑战性但标准的情况),两个模型都会被完全规避,即在防御AutoAttack时鲁棒性为$0%$。这主要是因为两个模型都没有经过对抗训练[14, 28](这是确保模型对抗攻击鲁棒性的有效方法),我们接下来将对此进行研究。

Table 1: Performance of ResNet-50 and DeiT-S on defending against AutoAttack, using ImageNet validation set. We note both models are completely broken when setting perturbation radius to 4/255.

| Clean | PerturbationRadius | ||

| 0.001 | 4/255 | ||

| ResNet-50 | 76.9 | 17.8 | 0.0 |

| DeiT-S | 76.8 | 22.1 | 0.0 |

表 1: ResNet-50 和 DeiT-S 在 ImageNet 验证集上防御 AutoAttack 的性能表现。我们注意到当扰动半径设置为 4/255 时,两个模型完全失效。

| Clean | PerturbationRadius | ||

|---|---|---|---|

| 0.001 | 4/255 | ||

| ResNet-50 | 76.9 | 17.8 | 0.0 |

| DeiT-S | 76.8 | 22.1 | 0.0 |

4.1.1 Adversarial Training

4.1.1 对抗训练 (Adversarial Training)

Adversarial training [14, 28], which trains models with adversarial examples that are generated on-the-fly, aims to optimize the following min-max framework:

对抗训练 [14, 28] 通过动态生成对抗样本来训练模型,旨在优化以下最小-最大框架:

$$

\arg\operatorname*{min}{\theta}\mathbb{E}_{(x,y)\sim\mathbb{D}}\biggl[\underset{\epsilon\in\mathbb{S}}{\operatorname*{max}}L(\theta,x+\epsilon,y)\biggr],

$$

$$

\arg\operatorname*{min}{\theta}\mathbb{E}_{(x,y)\sim\mathbb{D}}\biggl[\underset{\epsilon\in\mathbb{S}}{\operatorname*{max}}L(\theta,x+\epsilon,y)\biggr],

$$

where $\mathbb{D}$ is the underlying data distribution, $L(\cdot,\cdot,\cdot)$ is the loss function, $\theta$ is the network parameter, $x$ is a training sample with the ground-truth label $y,\epsilon$ is the added adversarial perturbation, and $\mathbb{S}$ is the allowed perturbation range. Following [46, 48], the adversarial training here applies single-step PGD (PGD-1) to generate adversarial examples (for lowering training cost), with the constrain that maximum per-pixel change $\epsilon=4/255$ .

其中 $\mathbb{D}$ 是底层数据分布,$L(\cdot,\cdot,\cdot)$ 是损失函数,$\theta$ 是网络参数,$x$ 是带有真实标签 $y$ 的训练样本,$\epsilon$ 是添加的对抗扰动,$\mathbb{S}$ 是允许的扰动范围。根据 [46, 48],这里的对抗训练采用单步 PGD (PGD-1) 生成对抗样本 (以降低训练成本),并限制最大每像素变化 $\epsilon=4/255$。

Adversarial Training on Transformers. We apply the setup above to adversarial ly train both ResNet-50 and DeiT-S. However, surprisingly, this default setup works for ResNet-50 but will collapse the training with DeiT-S, i.e., the robustness of such trained DeiT-S is merely ${\sim}4%$ when evaluating against PGD-5. We identify the issue is over-regular iz ation—when combining strong data augmentation strategies (i.e., RangAug, Mixup and CutMix) with adversarial attacks, the yielded training samples are too hard to be learnt by DeiT-S.

Transformers 对抗训练。我们将上述设置应用于对抗性训练 ResNet-50 和 DeiT-S。然而出乎意料的是,这一默认设置对 ResNet-50 有效,却会导致 DeiT-S 的训练崩溃——即当使用 PGD-5 进行评估时,如此训练的 DeiT-S 鲁棒性仅为 ${\sim}4%$。我们发现问题在于过正则化 (over-regularization):当将强数据增强策略 (即 RangAug、Mixup 和 CutMix) 与对抗攻击结合时,生成的训练样本对 DeiT-S 而言过于困难而无法学习。

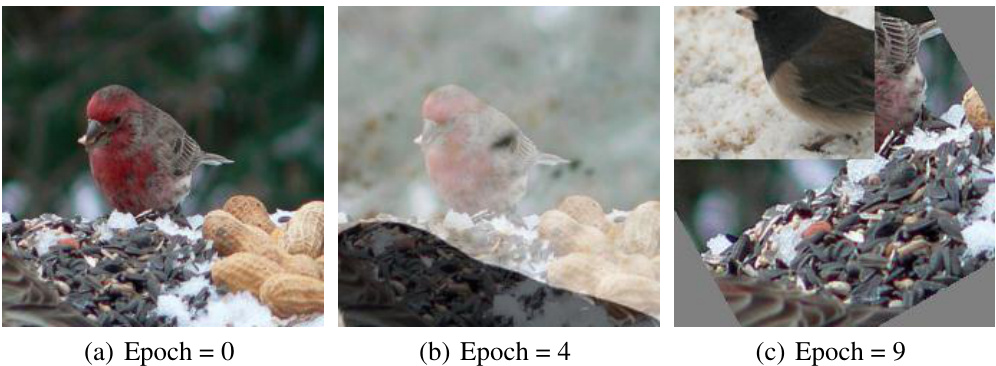

Figure 1: The illustration of the proposed augmentation warm-up strategy. At the beginning of adversarial training (from epoch $=0$ to epoch $_{=9}$ ), we progressively increase the augmentation strength.

图 1: 提出的增强预热策略示意图。在对抗训练开始时 (从 epoch $=0$ 到 epoch $_{=9}$),我们逐步增强数据扩增强度。

To ease this observed training difficulty, we design a curriculum of the applied augmentation strategies. Specifically, as shown in Figure 1, at the first 10 epoch, we progressively enhance the augmentation strength (e.g., gradually changing the distortion magnitudes in RandAug from 1 to 9) to warmup the training process. Our experiment verifies this curriculum enables a successful adversarial training—DeiT-S now attains ${\sim}44%$ robustness (boosted from ${\sim}4%$ ) on defending against PGD-5.

为缓解观察到的训练困难,我们设计了数据增强策略的渐进课程。具体而言,如图1所示,在前10个训练周期内,我们逐步增强数据增强强度(例如将RandAug中的失真幅度从1逐渐提升至9)以实现训练预热。实验验证该课程方案能成功实现对抗训练——DeiT-S模型在防御PGD-5攻击时鲁棒性达到${\sim}44%$(从${\sim}4%$显著提升)。

Transformers with CNNs’ Training Recipes. Interestingly, an alternative way to address the observed training difficulty is directly adopting CNN’s recipes to train Transformers [35], i.e., applying M-SGD with step decay learning rate scheduler and removing strong data augmentation strategies (like Mixup). Though this setup can stabilize the adversarial training process, it significantly hurts the overall performance of DeiT-S—the clean accuracy drops to $59.9%$ $(-6.6%)$ , and the robustness on defending against PGD-100 drops to $31.9%$ $(-8.4%)$ .

采用CNN训练方案的Transformer。有趣的是,解决训练难题的另一种方法是直接采用CNN的训练方案来训练Transformer [35],即使用带动量的随机梯度下降(M-SGD)配合阶梯式学习率衰减策略,并移除强数据增强方法(如Mixup)。虽然这种设置能稳定对抗训练过程,但会显著损害DeiT-S模型的整体性能——其干净准确率降至$59.9%$ $(-6.6%)$,防御PGD-100攻击的鲁棒性降至$31.9%$ $(-8.4%)$。

One reason for this degenerated performance is that strong data augmentation strategies are not included in CNNs’ recipes, therefore Transformers will be easily overfitted during training [6]. Another key factor here is the incompatibility between the SGD optimizer and Transformers. As explained in [25], compared to SGD, adaptive optimizers (like AdamW) are capable of assigning different learning rates to different parameters, resulting in consistent update magnitudes even with unbalanced gradients. This property is crucial for enabling successful training of Transformers, given the gradients of attention modules are highly unbalanced.

性能退化的一个原因是,CNN的训练方案中未包含强数据增强策略,因此Transformer在训练过程中容易过拟合[6]。另一个关键因素是SGD优化器与Transformer之间的不兼容性。如[25]所述,与SGD相比,自适应优化器(如AdamW)能够为不同参数分配不同学习率,即使在梯度不平衡的情况下也能保持一致的更新幅度。考虑到注意力模块的梯度高度不平衡,这一特性对成功训练Transformer至关重要。

CNNs with Transformers’ Training Recipes. As shown in Table 2, adversarial ly trained ResNet50 is less robust than adversarial ly trained DeiT-S, i.e., $32.26%$ vs. $40.32%$ on defending against PGD-100. It motivates us to explore whether adopting Transformers’ training recipes to CNNs can enhance CNNs’ adversarial training. Interestingly, if we directly apply AdamW to ResNet-50, the adversarial training will collapses. We also explore the possibility of adversarial ly training ResNet-50 with strong data augmentation strategies (i.e., RandAug, Mixup and CutMix). However, we find ResNet-50 will be overly regularized in adversarial training, leading to very unstable training process, sometimes may even collapse completely.

采用Transformer训练方法的CNN。如表2所示,经过对抗训练的ResNet50鲁棒性低于对抗训练的DeiT-S(即防御PGD-100攻击时准确率为32.26% vs 40.32%)。这促使我们探索:将Transformer的训练方案应用于CNN是否能增强CNN的对抗训练效果。有趣的是,若直接在ResNet-50上使用AdamW优化器,对抗训练会出现崩溃现象。我们还尝试用强数据增强策略(如RandAug、Mixup和CutMix)对ResNet-50进行对抗训练,但发现该模型在对抗训练中会因过度正则化导致训练过程极不稳定,有时甚至完全崩溃。

Though Transformers’ optimizer and augmentation strategies cannot improve CNNs’ adversarial training, we find Transformers’ choice of activation functions matters. Unlike the widely-used activation function in CNNs is ReLU, Transformers by default use GELU [18]. As suggested in [47], ReLU significantly weakens adversarial training due to its non-smooth nature; replacing ReLU with its smooth approximations (e.g., GELU, SoftPlus) can strengthen adversarial training. We verify that by replacing ReLU with Transformers’ activation function (i.e., GELU) in ResNet-50. As shown in Table 2, adversarial training now can be significantly enhanced, i.e., ResNet $50+$ GELU substantially outperforms its ReLU counterpart by $8.01%$ on defending against PGD-100. Moreover, we note the usage of GELU enables ResNet-50 to match DeiT-S in adversarial robustness, i.e., $40.27%$ vs. $40.32%$ for defending against PGD-100, and $35.51%$ vs. $35.50%$ for defending against AutoAttack, challenging the previous conclusions [4, 35] that Transformers are more robust than CNNs on defending against adversarial attacks.

虽然Transformer的优化器和数据增强策略无法提升CNN的对抗训练效果,但我们发现Transformer的激活函数选择至关重要。与CNN普遍使用ReLU不同,Transformer默认采用GELU [18]。如[47]所述,ReLU因其非平滑特性会显著削弱对抗训练效果,而改用平滑近似函数(如GELU、SoftPlus)能增强对抗训练。我们在ResNet-50中将ReLU替换为Transformer的激活函数(即GELU)进行验证。如表2所示,对抗训练效果得到显著提升:ResNet $50+$ GELU在防御PGD-100攻击时的表现比ReLU版本高出$8.01%$。值得注意的是,使用GELU使ResNet-50在对抗鲁棒性上达到了与DeiT-S相当的水平(防御PGD-100时为$40.27%$ vs. $40.32%$,防御AutoAttack时为$35.51%$ vs. $35.50%$),这对先前认为Transformer比CNN更能抵御对抗攻击的结论[4,35]提出了挑战。

Table 2: The performance of ResNet-50 and DeiT-S on defending against adversarial attacks (with $\epsilon=4$ ). After replacing ReLU with DeiT’s activation function GELU in ResNet-50, its robustness can match the robustness of DeiT-S.

表 2: ResNet-50 和 DeiT-S 在防御对抗攻击 ( $\epsilon=4$ ) 时的性能表现。将 ResNet-50 中的 ReLU 替换为 DeiT 的激活函数 GELU 后,其鲁棒性可达到与 DeiT-S 相当的水平。

| 激活函数 | 干净准确率 | PGD-5 | PGD-10 | PGD-50 | PGD-100 | AutoAttack | |

|---|---|---|---|---|---|---|---|

| ResNet-50 | ReLU GELU | 66.77 | 38.70 44.01 | 34.19 | 32.47 | 32.26 | 26.41 |

| DeiT-S | GELU | 67.38 66.50 | 43.95 | 40.98 41.03 | 40.28 40.34 | 40.27 40.32 | 35.51 35.50 |

4.2 Robustness to Patch-Based Attacks

4.2 基于图像块攻击的鲁棒性

We next study the robustness of CNNs and Transformers on defending against patch-based attacks. We choose Texture Patch Attack (TPA) [50] as the attacker. Note that different from typical patchbased attacks which apply monochrome patches, TPA additionally optimizes the pattern of the patches to enhance attack strength. By default, we set the number of attacking patches to 4, limit the largest manipulated area to $10%$ of the whole image area, and set the attack mode as the non-targeted attack. For ResNet-50 and DeiT-S, we do not consider adversarial training here as their vanilla counterparts already demonstrate non-trivial performance on defending against TPA.

我们接下来研究CNN和Transformer在抵御基于补丁攻击时的鲁棒性。选择纹理补丁攻击(TPA) [50]作为攻击方法。需注意,与典型使用单色补丁的攻击不同,TPA还会优化补丁图案以增强攻击强度。默认设置攻击补丁数量为4个,最大篡改区域限制为整图面积的$10%$,攻击模式设为非定向攻击。对于ResNet-50和DeiT-S,此处不考虑对抗训练,因其原始版本在防御TPA时已展现出显著性能。

Table 3: Performance of ResNet-50 and DeiT-S on defending against Texture Patch Attack.

表 3: ResNet-50 和 DeiT-S 在防御纹理块攻击 (Texture Patch Attack) 时的性能表现

| 架构 | 干净准确率 (Clean Acc) | 纹理块攻击 (TexturePatch Attack) |

|---|---|---|

| ResNet-50 | 76.9 | 19.7 |

| DeiT-S | 76.8 | 47.7 |

Interestingly, as shown in Table 3, though both models attain similar clean image accuracy, DeiT-S substantially outperforms ResNet-50 by $28%$ on defending against TPA. We conjecture such huge performance gap is originated from the differences in training setups; more specifically, it may be resulted by the fact DeiT-S by default use strong data augmentation strategies while ResNet-50 use none of them. The augmentation strategies like CutMix already naïvely introduce occlusion or image/patch mixing during training, therefore are potentially helpful for securing model robustness against patch-based adversarial attacks.

有趣的是,如表 3 所示,尽管两种模型在干净图像准确率上表现相近,但 DeiT-S 在防御 TPA 时比 ResNet-50 高出 28%。我们推测如此巨大的性能差距源于训练设置的差异;更具体地说,这可能是因为 DeiT-S 默认使用了强大的数据增强策略,而 ResNet-50 完全没有使用。像 CutMix 这样的增强策略在训练过程中会天然引入遮挡或图像/块混合,因此可能有助于提升模型针对基于块的对抗攻击的鲁棒性。

To verify the hypothesis above, we next ablate how strong augmentation strategies in DeiT-S (i.e., RandAug, Mixup and CutMix) affect ResNet-50’s robustness. We report the results in Table 4. Firstly, we note all augmentation strategies can help ResNet-50 achieve stronger TPA robustness, with improvements ranging from $+4.6%$ to $+32.7%$ . Among all these augmentation strategies, CutMix stands as the most effective one to secure model’s TPA robustness, i.e., CutMix alone can improve TPA robustness by $29.4%$ . Our best model is obtained by using both CutMix and RandAug, reporting $52.4%$ TPA robustness, which is even stronger than DeiT-S $47.7%$ TPA robustness). This observation still holds by using stronger TPA with 10 patches (increased from 4), i.e., ResNet-50 now attains $34.5%$ TPA robustness, outperforming DeiT-S by $5.6%$ . These results suggest that Transformers are also no more robust than CNNs on defending against patch-based adversarial attacks.

为验证上述假设,我们接下来消融研究了DeiT-S中的强数据增强策略(即RandAug、Mixup和CutMix)对ResNet-50鲁棒性的影响。结果如表4所示。首先,我们发现所有增强策略都能帮助ResNet-50获得更强的TPA(Token-Patch Attack)鲁棒性,提升幅度从$+4.6%$到$+32.7%$不等。其中CutMix是提升模型TPA鲁棒性最有效的策略,仅使用CutMix即可将TPA鲁棒性提高$29.4%$。最佳模型通过同时使用CutMix和RandAug获得,其TPA鲁棒性达到$52.4%$,甚至超过了DeiT-S的$47.7%$)。这一结论在使用10个补丁(从4个增加)的更强TPA攻击时依然成立,此时ResNet-50的TPA鲁棒性达到$34.5%$,比DeiT-S高出$5.6%$。这些结果表明,在防御基于补丁的对抗攻击时,Transformer并不比CNN更具鲁棒性。

Table 4: Performance of ResNet-50 trained with different augmentation strategies on defending against Texture Patch Attack. We note 1) all augmentation strategies can improve model robustness, and 2) CutMix is the most effective augmentation strategy to secure model robustness.

表 4: 采用不同数据增强策略训练的ResNet-50在抵御纹理块攻击(Texture Patch Attack)时的性能表现。我们注意到:1) 所有增强策略都能提升模型鲁棒性;2) CutMix是保障模型鲁棒性最有效的数据增强策略。

| 数据增强策略 | RandAug | MixUp | CutMix | 干净准确率(CleanAcc) | 纹理块攻击(TexturePatchAttack) |

|---|---|---|---|---|---|

| × | × | × | 76.9 | 19.7 | |

| × | × | 77.5 | 24.3 (+4.6) | ||

| × | 75.9 | 31.5 (+11.8) | |||

| × | 77.2 | 49.1 (+29.4) | |||

| < | 75.7 | 31.7 (+12.0) | |||

| 76.7 | 52.4 (+32.7) | ||||

| 77.1 | 39.8 (+20.1) | ||||

| 76.4 | 48.6 (+28.9) |

5 Robustness on Out-of-distribution Samples

5 分布外样本的鲁棒性

In addition to adversarial robustness, we are also interested in comparing the robustness of CNNs and Transformers on out-of-distribution samples. We hereby select three datasets, i.e., ImageNet-A, ImageNet-C and Stylized ImageNet, to capture the different aspects of out-of-distribution robustness.

除了对抗鲁棒性,我们还对比较CNN和Transf