GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding

GShard: 基于条件计算和自动分片的超大规模模型扩展方案

Dmitry Lepikhin lepikhin@google.com

Dmitry Lepikhin lepikhin@google.com

HyoukJoong Lee hyouklee@google.com

HyoukJoong Lee hyouklee@google.com

Yuanzhong Xu yuanzx@google.com

Yuanzhong Xu yuanzx@google.com

Dehao Chen dehao@google.com

Dehao Chen dehao@google.com

Orhan Firat orhanf@google.com

Orhan Firat orhanf@google.com

Yanping Huang huangyp@google.com

Yanping Huang huangyp@google.com

Maxim Krikun krikun@google.com

Maxim Krikun krikun@google.com

Noam Shazeer noam@google.com

Noam Shazeer noam@google.com

Zhifeng Chen zhifengc@google.com

Abstract

摘要

Neural network scaling has been critical for improving the model quality in many real-world machine learning applications with vast amounts of training data and compute. Although this trend of scaling is affirmed to be a sure-fire approach for better model quality, there are challenges on the path such as the computation cost, ease of programming, and efficient implementation on parallel devices. GShard is a module composed of a set of lightweight annotation APIs and an extension to the XLA compiler. It provides an elegant way to express a wide range of parallel computation patterns with minimal changes to the existing model code. GShard enabled us to scale up multilingual neural machine translation Transformer model with Sparsely-Gated Mixture-of-Experts beyond 600 billion parameters using automatic sharding. We demonstrate that such a giant model can eff ici enc t ly be trained on 2048 TPU v3 accelerators in 4 days to achieve far superior quality for translation from 100 languages to English compared to the prior art.

神经网络扩展对于提升许多现实世界机器学习应用中的模型质量至关重要,尤其是在拥有海量训练数据和计算资源的情况下。尽管这种扩展趋势被证实是提高模型质量的有效途径,但在实施过程中仍面临计算成本、编程便捷性以及在并行设备上高效实现等挑战。GShard是一个由轻量级标注API集合和XLA编译器扩展组成的模块,它通过极少的现有模型代码改动,提供了一种优雅的方式来表达各种并行计算模式。借助自动分片技术,GShard使我们能够将稀疏门控专家混合(Sparsely-Gated Mixture-of-Experts)的多语言神经机器翻译Transformer模型规模扩展至超过6000亿参数。实验证明,这一巨型模型可在2048个TPU v3加速器上高效训练4天,在100种语言到英语的翻译任务中实现了远超现有技术的质量表现。

1 Introduction

1 引言

Scaling neural networks brings dramatic quality gains over a wide array of machine learning problems [1, 2, 3, 4, 5, 6]. For computer vision, increasing the model capacity has led to better image classification and detection accuracy for various computer vision architectures [7, 8, 9]. Similarly in natural language processing, scaling Transformers [10] yielded consistent gains on language understanding tasks [4, 11, 12], cross-lingual down-stream transfer [4, 13] and (massively-)multilingual neural machine translation [14, 15, 16]. This general tendency motivated recent studies to scrutinize the factors playing a critical role in the success of scaling [17, 18, 19, 20, 3], including the amounts of training data, the model size, and the computation being utilized as found by past studies. While the final model quality was found to have a power-law relationship with the amount of data, compute and model size [18, 3], the significant quality gains brought by larger models also come with various practical challenges. Training efficiency among the most important ones, which we define as the amount of compute and training time being used to achieve a superior model quality against the best system existed, is oftentimes left out.

扩展神经网络在广泛的机器学习问题上带来了显著的质量提升 [1, 2, 3, 4, 5, 6]。对于计算机视觉领域,增加模型容量提升了各种视觉架构在图像分类和检测任务中的准确率 [7, 8, 9]。同样在自然语言处理中,扩展 Transformer [10] 在语言理解任务 [4, 11, 12]、跨语言下游迁移 [4, 13] 以及(大规模)多语言神经机器翻译 [14, 15, 16] 上持续带来增益。这一普遍趋势促使近期研究深入分析影响扩展成功的关键因素 [17, 18, 19, 20, 3],包括训练数据量、模型规模和计算资源的使用,正如过往研究所发现的那样。虽然最终模型质量与数据量、计算资源和模型规模呈幂律关系 [18, 3],但更大模型带来的显著质量提升也伴随着诸多实际挑战。其中最重要的训练效率问题——我们将其定义为为超越现有最佳系统所消耗的计算资源和训练时间——却常被忽视。

Preprint. Under review.

预印本。审稿中。

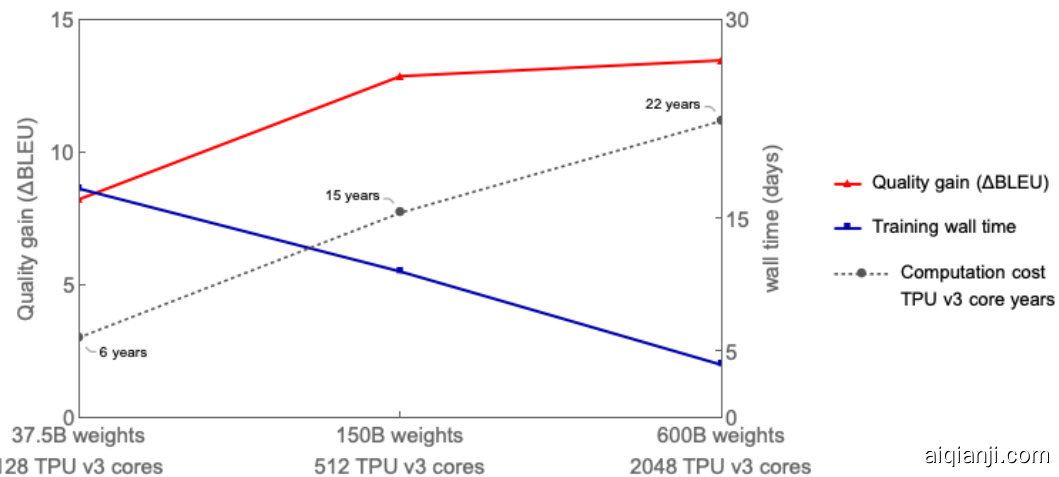

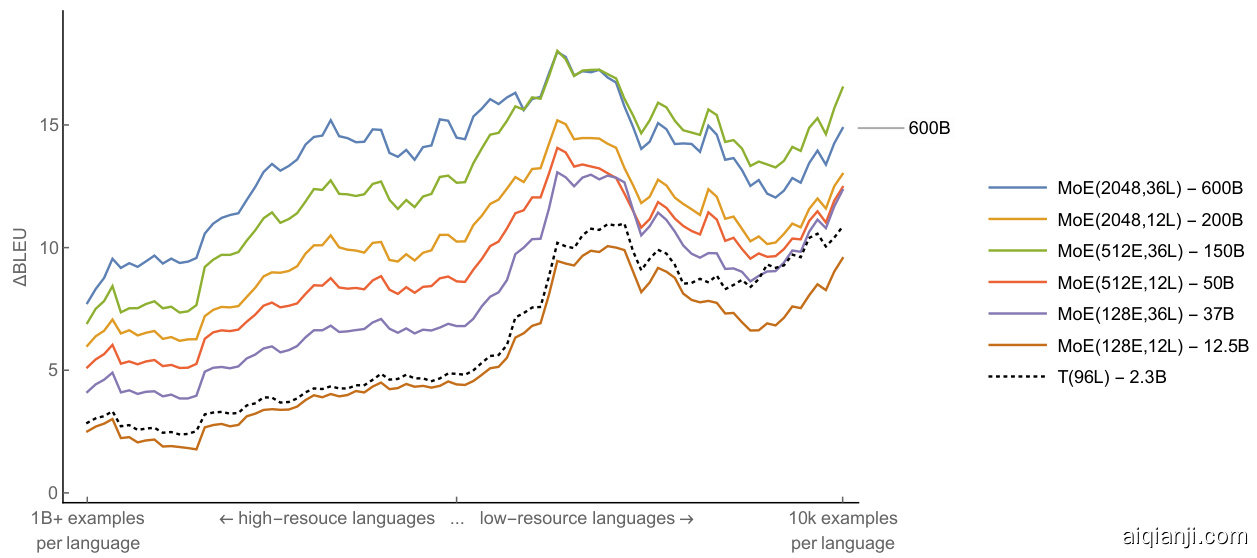

Figure 1: Multilingual translation quality (average $\Delta$ BLEU comparing to bilingual baselines) improved as MoE model size grows up to 600B, while the end-to-end training cost (in terms of TPU v3 core-year) only increased sub linearly. Increasing the model size from 37.5B to 600B (16x), results in computation cost increase from 6 to 22 years (3.6x). The 600B parameters model that achieved the best translation quality was trained with 2048 TPU v3 cores for 4 days, a total cost of 22 TPU v3 core-years. In contrast, training all 100 bilingual baseline models would have required 29 TPU v3 core-years. Our best quality dense single Transformer model (2.3B parameters) achieving $\Delta$ BLEU of 6.1, was trained with GPipe [15] on 2048 TPU v3 cores for 6 weeks or total of 235.5 TPU v3 core-years.

图 1: 多语言翻译质量(与双语基线相比的平均$\Delta$BLEU)随着MoE模型规模增长至600B而提升,而端到端训练成本(以TPU v3核心年计)仅呈次线性增长。模型规模从37.5B增至600B(16倍)导致计算成本从6年增至22年(3.6倍)。达到最佳翻译质量的600B参数模型使用2048个TPU v3核心训练4天,总成本为22 TPU v3核心年。相比之下,训练全部100个双语基线模型需要29 TPU v3核心年。我们性能最佳的密集单Transformer模型(2.3B参数)达到$\Delta$BLEU 6.1,使用GPipe [15]在2048个TPU v3核心上训练6周,总成本为235.5 TPU v3核心年。

1.1 Practical Challenges for Scaling

1.1 规模化实践面临的挑战

Here we enumerate major practical challenges faced especially when training massive-scale models that are orders of magnitude larger than the capacity limit of a single accelerator memory (e.g., GPUs or TPUs).

在此我们列举了训练超大规模模型时面临的主要实际挑战,尤其是当模型规模远超单个加速器内存(如GPU或TPU)容量限制的情况。

Architecture-specific model parallelism support There is a lack of support for efficient model parallelism algorithms under commonly used deep learning frameworks such as TensorFlow [21] and PyTorch [22]. Naive model parallelism with graph partition is supported but it would lead to severe under-utilization due to the sequential dependency of the network and gradient based optimization. In order to scale up the existing models efficiently, users typically need to invest a lot of engineering work, for example, migrating the model code to special frameworks [23, 15].

特定架构的模型并行支持

在常用深度学习框架如 TensorFlow [21] 和 PyTorch [22] 下,缺乏对高效模型并行算法的支持。虽然支持基于图分割的简单模型并行,但由于网络的顺序依赖性和基于梯度的优化,会导致严重的利用率不足。为了高效扩展现有模型,用户通常需要投入大量工程工作,例如将模型代码迁移到特殊框架 [23, 15]。

Super-linear scaling of computation cost vs model size Straightforward scaling of the mode size by increasing the depth or width [6, 15] generally results in at least linear increase of training step time. Model parallelism by splitting layer weights and computation across multiple devices generally becomes necessary, leading to network communication overhead and device under-utilization. Device under-utilization stems from imbalanced assignment and sequential dependencies of the underlying neural network. This super-linear relationship between the computation cost and the model size can not be resolved by simply using more devices, making training massive models impractical.

计算成本与模型规模的超线性扩展

通过增加深度或宽度[6,15]直接扩展模型规模,通常会导致训练步时间至少呈线性增长。由于需要跨设备分割层权重和计算,模型并行化往往成为必要手段,这会带来网络通信开销和设备利用率不足的问题。设备利用率不足源于底层神经网络的不均衡分配和顺序依赖性。计算成本与模型规模间的这种超线性关系无法通过单纯增加设备数量来解决,使得训练大规模模型变得不切实际。

Infrastructure s cal ability for giant model representation A naive graph representation for the massive-scale model distributed across thousands of devices may become a bottleneck for both deep learning frameworks and their optimizing compilers. For example, adding $D$ times more layers with inter-op partitioning or increasing model dimensions with intra-op partitioning across $D$ devices may result in a graph with $O(D)$ nodes. Communication channels between devices could further increase the graph size by up to $\dot{O}(D^{2})$ (e.g., partitioning gather or transpose). Such increase in the graph size would result in an infeasible amount of graph building and compilation time for massive-scale models.

巨型模型表示的基础设施可扩展性

对于分布在数千台设备上的超大规模模型,简单的图表示可能成为深度学习框架及其优化编译器的瓶颈。例如,通过算子间分区增加$D$倍层数,或通过算子内分区在$D$台设备上扩展模型维度,可能导致图节点数量增至$O(D)$。设备间的通信通道可能进一步将图规模扩大至$\dot{O}(D^{2})$(例如分区收集或转置操作)。这种图规模的膨胀将导致超大规模模型的图构建和编译时间达到不可行的程度。

Non-trivial efforts for implementing partitioning strategies Partitioning a model to run on many devices efficiently is challenging, as it requires coordinating communications across devices. For graph-level partitioning, sophisticated algorithms [15, 24] are needed to reduce the overhead introduced by the sequential dependencies between different partitions of graphs allocated on different devices. For operator-level parallelism, there are different communication patterns for different partitioned operators, depending on the semantics, e.g., whether it needs to accumulate partial results, or to rearrange data shards. According to our experience, manually handling these issues in the model requires substantial amount of effort, given the fact that the frameworks like TensorFlow have a large sets of operators with ad-hoc semantics. In all cases, implementing model partitioning would particularly be a burden for practitioners, as changing model architecture would require changing the underlying device communications, causing a ripple effect.

实现分区策略的非平凡努力

将模型分区以在多个设备上高效运行具有挑战性,因为这需要协调跨设备的通信。对于图级分区,需要复杂的算法 [15, 24] 来减少由分配在不同设备上的图分区之间的顺序依赖引入的开销。对于算子级并行,不同分区算子有不同的通信模式,具体取决于语义,例如是否需要累积部分结果,或重新排列数据分片。根据我们的经验,考虑到像 TensorFlow 这样的框架具有大量具有特定语义的算子,在模型中手动处理这些问题需要大量工作。在所有情况下,实现模型分区对从业者来说尤其是一种负担,因为更改模型架构需要更改底层设备通信,从而产生连锁效应。

1.2 Design Principles for Efficient Training at Scale

1.2 大规模高效训练的设计原则

In this paper, we demonstrate how to overcome these challenges by building a 600 billion parameters sequence-to-sequence Transformer model with Sparsely-Gated Mixture-of-Experts layers, which enjoys sub-linear computation cost and $O(1)$ compilation time. We trained this model with 2048 TPU v3 devices for 4 days on a multilingual machine translation task and achieved far superior translation quality compared to prior art when translating 100 languages to English with a single non-ensemble model. We conducted experiments with various model sizes and found that the translation quality increases as the model gets bigger, yet the total wall-time to train only increases sub-linearly with respect to the model size, as illustrated in Figure 1. To build such an extremely large model, we made the following key design choices.

本文展示了如何通过构建一个6000亿参数的稀疏门控专家混合层( Sparsely-Gated Mixture-of-Experts )序列到序列Transformer模型来克服这些挑战,该模型具有次线性计算成本和 $O(1)$ 编译时间。我们使用2048个TPU v3设备在多语言机器翻译任务上训练该模型4天,当使用单一非集成模型将100种语言翻译为英语时,其翻译质量远超现有技术。我们通过不同规模的模型进行实验,发现随着模型增大翻译质量提升,但训练总耗时仅随模型规模呈次线性增长,如图1所示。为构建如此庞大的模型,我们做出了以下关键设计选择。

Sub-linear Scaling First, model architecture should be designed to keep the computation and communication requirements sublinear in the model capacity. Conditional computation [25, 16, 26, 27] enables us to satisfy training and inference efficiency by having a sub-network activated on the per-input basis. Scaling capacity of RNN-based machine translation and language models by adding Position-wise Sparsely Gated Mixture-of-Experts (MoE) layers [16] allowed to achieve state-of-the-art results with sublinear computation cost. We therefore present our approach to extend Transformer architecture with MoE layers in Section 2.

次线性扩展

首先,模型架构的设计应确保计算和通信需求随模型容量呈次线性增长。条件计算 [25, 16, 26, 27] 通过基于每输入激活子网络,使我们能够满足训练和推理效率。通过添加位置稀疏门控专家混合层 (MoE) [16] 扩展基于 RNN 的机器翻译和语言模型容量,可在次线性计算成本下实现最先进的结果。因此,我们将在第 2 节介绍用 MoE 层扩展 Transformer 架构的方法。

The Power of Abstraction Second, the model description should be separated from the partitioning implementation and optimization. This separation of concerns let model developers focus on the network architecture and flexibly change the partitioning strategy, while the underlying system applies semantic-preserving transformations and implements efficient parallel execution. To this end we propose a module, GShard, which only requires the user to annotate a few critical tensors in the model with partitioning policies. It consists of a set of simple APIs for annotations, and a compiler extension in XLA [28] for automatic parallel iz ation. Model developers write models as if there is a single device with huge memory and computation capacity, and the compiler automatically partitions the computation for the target based on the annotations and their own heuristics. We provide more annotation examples in Section 3.2.

抽象的力量

其次,模型描述应与分区实现和优化分离。这种关注点分离让模型开发者能专注于网络架构,并灵活调整分区策略,而底层系统则负责应用语义保持转换并实现高效并行执行。为此,我们提出了GShard模块,用户只需为模型中少数关键张量标注分区策略。该模块包含一套简单的标注API,以及XLA [28] 中用于自动并行化的编译器扩展。开发者编写模型时,可假想存在一个具备超大内存和算力的单设备,编译器会根据标注及自身启发式规则,自动为目标硬件分区计算。更多标注示例见第3.2节。

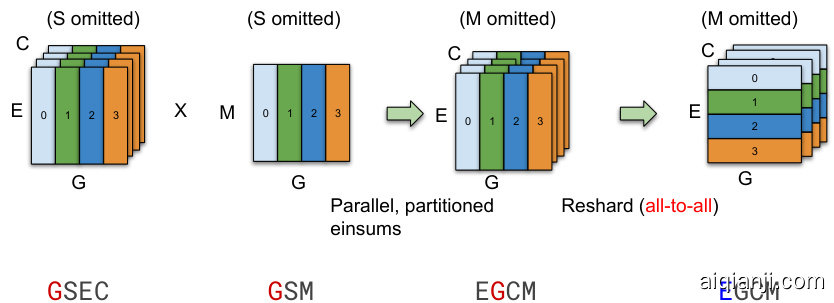

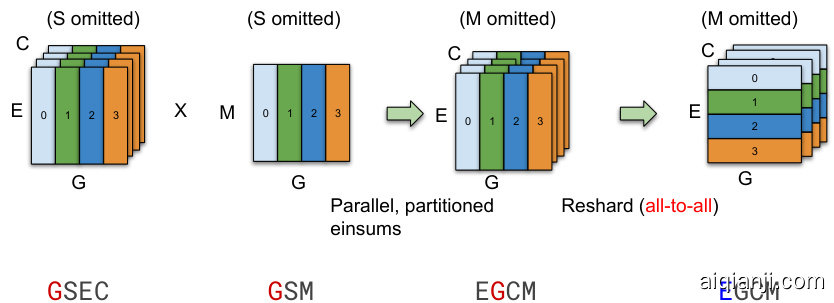

Scalable Compilers Third, the system infrastructure, including the computation representation and compilation, must scale with thousands of devices for parallel execution. For example, Figure 2 illustrates two different ways of partitioning a dot-product operation across 4 devices (color-coded). Notice that with the usual MPMD (Multiple Program Multiple Data) approach in Figure 2a scaling becomes more challenging since the number of nodes in the graph increases linearly with the number of devices. Instead, we developed a compiler technique for SPMD (Single Program Multiple Data) transformation that generates a single program to run on all devices, keeping the compilation time constant independent of the number of devices, as illustrated in Figure 2b. We will discuss our SPMD framework in more details in Section 3.3.

可扩展编译器

第三,系统基础设施(包括计算表示和编译)必须能够扩展到数千台设备以支持并行执行。例如,图 2 展示了在 4 台设备(按颜色区分)上对点积运算进行分区的两种不同方式。需要注意的是,在图 2a 中采用传统的 MPMD(多程序多数据)方法时,扩展性会变得更加困难,因为图中的节点数量会随着设备数量线性增加。相反,我们开发了一种用于 SPMD(单程序多数据)转换的编译器技术,该技术生成一个可在所有设备上运行的单一程序,从而使编译时间与设备数量无关,如图 2b 所示。我们将在第 3.3 节中更详细地讨论我们的 SPMD 框架。

The rest of the paper is organized as the following. Section 2 describes our Transformer architecture with Sparsely-Gated MoE layer in more details. Section 3 introduces our development module GShard. Section 4 demonstrates the application of our mixture of expert models on the multilingual machine translation task over 100 language pairs. Section 5 has performance and memory measurements of our implementation. Section 6 discusses related work.

本文其余部分的结构安排如下。第2节详细介绍了我们采用稀疏门控混合专家层 (Sparsely-Gated MoE) 的Transformer架构。第3节介绍了我们的开发模块GShard。第4节展示了混合专家模型在100多种语言对的多语言机器翻译任务中的应用。第5节提供了我们实现的性能和内存测量数据。第6节讨论了相关研究工作。

Figure 2: Comparison between MPMD and our proposed SPMD partitioning of a Dot operator $([\bar{M},K]\times[K,\bar{N}]=[M,N])$ across 4 devices. In this example, both operands are partitioned along the contracting dimension $K$ , where each device computes the local result and globally combines with an AllReduce. MPMD partitioning generates separate operators for each device, limiting its s cal ability, whereas SPMD partitioning generates one program to run on all devices. Note that the compilation time with our SPMD partitioning is not-dependent of the number of devices being used.

图 2: MPMD与我们提出的SPMD在4个设备上对Dot算子 $([\bar{M},K]\times[K,\bar{N}]=[M,N])$ 的划分方式对比。本例中两个操作数均沿收缩维度 $K$ 进行划分,各设备计算本地结果后通过AllReduce全局聚合。MPMD划分会为每个设备生成独立算子,限制了可扩展性;而SPMD划分生成单一程序在所有设备上运行。需注意采用SPMD划分时,编译时间与所用设备数量无关。

2 Model

2 模型

2.1 Sparse scaling of the Transformer architecture

2.1 Transformer架构的稀疏扩展

The Transformer [10] architecture has been widely used for natural language processing. It has become the de-facto standard for many sequence-to-sequence tasks, such as machine translation. Transformer makes use of two computational blocks, an encoder and a decoder, both implemented by stacking multiple Transformer layers. Transformer encoder layer consists of two consecutive layers, namely a self-attention layer followed by a position-wise feed-forward layer. Decoder adds third cross-attention layer, which attends over encoder output. We sparsely scale Transformer with conditional computation by replacing every other feed-forward layer with a Position-wise Mixture of Experts (MoE) layer [16] with a variant of top-2 gating in both the encoder and the decoder (Figure 3). We vary the number of Transformer layers and the number of experts per MoE layer in order to scale the model capacity.

Transformer [10] 架构已广泛应用于自然语言处理领域,成为机器翻译等序列到序列任务的实际标准。该架构采用两个计算模块——编码器(encoder)和解码器(decoder),均通过堆叠多个Transformer层实现。其中,编码器层包含连续的两个层:自注意力(self-attention)层和逐位置前馈(position-wise feed-forward)层;解码器额外增加了第三个跨注意力(cross-attention)层,用于关注编码器输出。我们通过条件计算对Transformer进行稀疏化扩展:在编码器和解码器中,每隔一个前馈层就替换为采用top-2门控变体的逐位置专家混合(MoE)层 [16] (图 3)。通过调整Transformer层数和每个MoE层的专家数量,可实现模型容量的灵活扩展。

Each training example consists of a pair of sequences of subword tokens. Each token activates a sub-network of the MoE Transformer during both training and inference. The size of the sub-network is roughly independent of the number of experts per MoE Layer, allowing sublinear scaling of the computation cost as described in the previous section. Computation complexity is further analyzed in Section 3.1 and training performance in Section 5.

每个训练样本由一对子词token序列组成。在训练和推理过程中,每个token都会激活MoE Transformer的一个子网络。该子网络的大小基本与每个MoE层的专家数量无关,从而实现如前一节所述的计算成本次线性扩展。计算复杂度将在第3.1节进一步分析,训练性能将在第5节讨论。

2.2 Position-wise Mixture-of-Experts Layer

2.2 位置感知专家混合层

The Mixture-of-Experts (MoE) layer used in our model is based on [16] with variations in the sparse gating function and the auxiliary loss being used. A MoE layer for Transformer consists of $E$ feed-forward networks $\mathrm{FFN}_ {1}\ldots\mathrm{FFN}_ {E}$ :

我们模型中使用的混合专家 (Mixture-of-Experts,MoE) 层基于 [16],但在稀疏门控函数和辅助损失方面有所变化。Transformer 的 MoE 层由 $E$ 个前馈网络 $\mathrm{FFN}_ {1}\ldots\mathrm{FFN}_ {E}$ 组成:

$$

\begin{array}{c}{{\displaystyle\mathcal{G}_ {s,E}=\mathrm{GATE}({\boldsymbol{x}}_ {s})}}\ {{\displaystyle\mathrm{FFN}_ {e}({\boldsymbol{x}}_ {s})=w o_ {e}\cdot\mathrm{ReLU}(w i_ {e}\cdot{\boldsymbol{x}}_ {s})}}\ {{\displaystyle y_ {s}=\sum_ {e=1}^{E}\mathcal{G}_ {s,e}\cdot\mathrm{FFN}_ {e}({\boldsymbol{x}}_ {s})}}\end{array}

$$

$$

\begin{array}{c}{{\displaystyle\mathcal{G}_ {s,E}=\mathrm{GATE}({\boldsymbol{x}}_ {s})}}\ {{\displaystyle\mathrm{FFN}_ {e}({\boldsymbol{x}}_ {s})=w o_ {e}\cdot\mathrm{ReLU}(w i_ {e}\cdot{\boldsymbol{x}}_ {s})}}\ {{\displaystyle y_ {s}=\sum_ {e=1}^{E}\mathcal{G}_ {s,e}\cdot\mathrm{FFN}_ {e}({\boldsymbol{x}}_ {s})}}\end{array}

$$

Figure 3: Illustration of scaling of Transformer Encoder with MoE Layers. The MoE layer replaces the every other Transformer feed-forward layer. Decoder modification is similar. (a) The encoder of a standard Transformer model is a stack of self-attention and feed forward layers interleaved with residual connections and layer normalization. (b) By replacing every other feed forward layer with a MoE layer, we get the model structure of the MoE Transformer Encoder. (c) When scaling to multiple devices, the MoE layer is sharded across devices, while all other layers are replicated.

图 3: 带MoE层的Transformer编码器扩展示意图。MoE层每隔一个Transformer前馈层进行替换,解码器修改方式类似。(a) 标准Transformer模型的编码器是由自注意力层和前馈层交错堆叠而成,其间带有残差连接和层归一化。(b) 每隔一个前馈层替换为MoE层后,即形成MoE Transformer编码器模型结构。(c) 当扩展到多设备时,MoE层会在设备间分片,而其他所有层都会被复制。

where $x_ {s}$ is the input token to the MoE layer, wiand wobeing the input and output projection matrices for the feed-forward layer (an expert). Vector $\mathcal{G}_ {s,E}$ is computed by a gating network. $\mathcal{G}_ {s,E}$ has one non-negative for each expert, most of which are zeros meaning the token is not dispatched to that expert. The token is dispatched to a very small number of experts. We choose to let each token dispatched to at most two experts. The corresponding entries in $\mathcal{G}_ {s,E}$ are non-zeros, representing how much an expert contributes to the final network output. Every expert $\mathrm{FFN}_ {e}$ applies to $x_ {s}$ a fully-connected 2-layer network using ReLU [29] activation function. The output of the MoE layer, $y_ {s}$ , is the weighted average of outputs from all the selected experts.

其中 $x_ {s}$ 是 MoE (混合专家)层的输入 token,$w_ i$ 和 $w_ o$ 分别是前馈层(专家)的输入和输出投影矩阵。向量 $\mathcal{G}_ {s,E}$ 由门控网络计算得出,其中每个专家对应一个非负值,多数为零值表示该 token 不会被分配给对应专家。每个 token 仅分配给极少数专家,我们设定每个 token 最多分配给两个专家。$\mathcal{G}_ {s,E}$ 中对应的非零项表示该专家对最终网络输出的贡献权重。每个专家 $\mathrm{FFN}_ {e}$ 对 $x_ {s}$ 应用具有 ReLU [29] 激活函数的两层全连接网络。MoE 层的输出 $y_ {s}$ 是所有选定专家输出的加权平均值。

The gating function $\mathrm{{GATE}(\cdot)}$ is critical to the MoE layer, which is modeled by a softmax activation function to indicate the weights of each expert in processing incoming tokens. In other words, to indicate how good an expert is at processing the incoming token. Furthermore, the gating function must satisfy two goals:

门控函数 $\mathrm{{GATE}(\cdot)}$ 对混合专家层至关重要,它通过 softmax 激活函数建模,用于表示每个专家在处理输入 token 时的权重。换句话说,该函数用于衡量专家处理当前 token 的能力。此外,门控函数必须满足两个目标:

• Balanced load It is desirable that the MoE layer to sparsely activate the experts for a given token. A naive solution would be just to choose the top $k$ experts according to the softmax probability distribution. However, it is known that this approach leads to load imbalance problem for training [16]: most tokens seen during training would have been dispatched to a small number of experts, amassing a very large input buffer for only a few (busy) experts leaving other experts untrained, slowing down the training. Meanwhile many other experts do not get sufficiently trained at all. A better design of the gating function would distribute processing burden more evenly across all experts. Efficiency at scale It would be rather trivial to achieve a balanced load if the gating function is done sequentially. The computation cost for the gating function alone is at least $O(N E)$ for all $N$ tokens in the input batch given $E$ experts. However, in our study, $N$ is in the order of millions and $E$ is in the order of thousands, a sequential implementation of the gating function would keep most of the computational resources idle most of the time. Therefore, we need an efficient parallel implementation of the gating function to leverage many devices.

• 均衡负载 理想情况下,MoE层应当为给定token稀疏激活专家。最直接的解决方案是根据softmax概率分布选择top $k$专家。但已知这种方法会导致训练时的负载不均衡问题[16]:训练期间大多数token会被分配到少数专家,使得少量(繁忙)专家积累超大输入缓冲区,而其他专家未被充分训练,拖慢整体训练速度。同时大量专家根本得不到充分训练。更好的门控函数设计应当将处理负担更均匀地分配给所有专家。

规模化效率 若采用串行方式实现门控函数,实现负载均衡将非常简单。对于包含$N$个token的输入批次和$E$个专家,仅门控函数的计算成本就至少为$O(N E)$。但在我们的研究中,$N$达到百万量级而$E$达到千量级,串行实现的门控函数会使大部分计算资源长期闲置。因此我们需要高效并行的门控函数实现来充分利用多设备算力。

We designed the following mechanisms in the gating function $\mathrm{{GATE}(\cdot)}$ to meet the above requirements (details illustrated in Algorithm 1):

我们在门控函数 $\mathrm{{GATE}(\cdot)}$ 中设计了以下机制以满足上述需求 (具体实现如算法1所示):

3 Highly Parallel Implementation using GShard

3 使用GShard实现高度并行化

This section describes the implementation of the model in Section 2 that runs efficiently on a cluster of TPU devices.

本节介绍第2节中模型在TPU设备集群上的高效实现方案。

The first step is to express the model in terms of linear algebra operations, in which our software stack (TensorFlow [21]) and the hardware platform (TPU) are highly tailored and optimized. It is readily easy to code up most of the model in terms of linear algebra in the same way as the original Transformer. However, it requires some effort to express the MoE Layer, in particular GATE(·) function presented in Algorithm 1 due to its sequential nature, and we describe the details in Section 3.1.

第一步是用线性代数运算来表达模型,我们的软件栈 (TensorFlow [21]) 和硬件平台 (TPU) 都针对此类操作进行了高度定制和优化。与原始 Transformer 类似,大部分模型可以轻松地用线性代数编写代码。但由于其顺序性特性,表达 MoE 层 (特别是算法 1 中的 GATE(·) 函数) 需要额外处理,具体细节将在 3.1 节详述。

Next, we annotate the linear algebra computation to express parallelism. Each tensor in the computation can be annotated for replication or distribution across a cluster of devices using sharding APIs in Section 3.2. Using sharding annotations enables separation of concerns between the model description and the efficient parallel implementation, and allows users to flexibly express diverse parallel iz ation strategies. For example, (1) the attention layer is parallel i zed by splitting along the batch dimension and replicating its weights to all devices. On the other hand, (2) experts in the MoE layer are infeasible to be replicated in all the devices due to its sheer size and the only viable strategy is to shard experts into many devices. Furthermore, the whole model alternates between these two modes (1)-(2). Using annotations frees model developers from the system optimization efforts and avoids baking the parallel implementation and low-level details into the model code.

接下来,我们对线性代数计算进行标注以表达并行性。计算中的每个张量都可以使用第3.2节的分片API标注为在设备集群上复制或分布。通过分片标注实现了模型描述与高效并行实现之间的关注点分离,使用户能灵活表达多种并行化策略。例如:(1) 注意力层通过沿批次维度分割并在所有设备上复制其权重来实现并行化;(2) 由于MoE层中专家模块的庞大规模,无法在所有设备上复制专家模块,唯一可行的策略是将专家模块分片到多个设备中。此外,整个模型会在(1)-(2)两种模式间交替切换。这种标注方式使模型开发者无需关注系统优化工作,也避免了将并行实现和底层细节硬编码到模型代码中。

Finally, the compiler infrastructure takes a (partially) annotated linear algebra computation and produces an efficient parallel program that scales to thousands of devices. As will be described in Section 3.3, the compiler applies SPMD (Single Program Multiple Data) partitioning transformation to express per-device computation, inserts necessary cross-device communication, handles irregular

最后,编译器基础设施接收一个(部分)标注的线性代数计算,并生成一个可扩展至数千台设备的高效并行程序。如第3.3节所述,编译器应用SPMD(单程序多数据)分区转换来表达每台设备的计算,插入必要的跨设备通信,处理不规则情况。

Data: $x_ {S}$ , a group of tokens of size $S$ Data: $C$ , Expert capacity allocated to this group Result: ${\mathcal G}_ {S,E}$ , group combine weights Result: $\ell_ {a u x}$ , group auxiliary loss (1) $c_ {E}\gets0$ $\triangleright$ gating decisions per expert (2) $g_ {S,E}\gets s o f t m a x(w g\cdot x_ {S})$ ▷ gates per token per expert, $w g$ are trainable weights (3) $\begin{array}{r}{m_ {E}\leftarrow\frac{1}{S}\sideset{}{'}\sum_ {s=1}^{s}g_ {s,E}}\end{array}$ $\triangleright$ mean gates per expert (4) for $s\gets1$ to $S$ do (5) $g1,e1,g2,e2=t o p_ {-}2(g_ {s,E})$ ▷ top-2 gates and expert indices (6) g1 ← g1/(g1 + g2) $\triangleright$ normalized $g1$ (7) $c\gets c_ {e1}$ $\triangleright$ position in e1 expert buffer (8) if $c_ {e1}<C$ then (9) $\boxed{\begin{array}{r l}&{\mathcal{G}_ {s,e1}\leftarrow g1}\end{array}}$ ▷ e1 expert combine weight for $x_ {s}$ (10) end (11) $c_ {e1}\gets c+1$ ▷ increment ing e1 expert decisions count (12) end (13) $\begin{array}{r}{\ell_ {a u x}=\frac{1}{E}\sum_ {e=1}^{E}\frac{c_ {e}}{S}\cdot m_ {e}}\end{array}$ (14) for $s\gets1$ to $S$ do (15) $g1,e1,g2,e2=t o p_ {-}2(g_ {s,E})$ ▷ top-2 gates and expert indices (16) $g2\leftarrow g2/(g1+g2)$ $\triangleright$ normalized $g2$ (17) $r n d\gets u n i f o r m(0,1)$ $\triangleright$ dispatch to second-best expert with probability $\propto2\cdot g2$ (18) $c\gets c_ {e2}$ $\triangleright$ position in $e2$ expert buffer (19) if $c<C\land2\cdot g2>r n d$ then (20) $\mathcal{G}_ {s,e2}\gets g2$ ▷ e2 expert combine weight for $x_ {s}$ (21) end (22) $c_ {e2}\gets c+1$ (23) end

数据: $x_ {S}$,一组大小为 $S$ 的 token

数据: $C$,分配给该组的专家容量

结果: ${\mathcal G}_ {S,E}$,组组合权重

结果: $\ell_ {a u x}$,组辅助损失

(1) $c_ {E}\gets0$ ▷ 每个专家的门控决策

(2) $g_ {S,E}\gets s o f t m a x(w g\cdot x_ {S})$ ▷ 每个 token 每个专家的门控,$w g$ 是可训练权重

(3) $\begin{array}{r}{m_ {E}\leftarrow\frac{1}{S}\sideset{}{'}\sum_ {s=1}^{s}g_ {s,E}}\end{array}$ ▷ 每个专家的平均门控

(4) for $s\gets1$ to $S$ do

(5) $g1,e1,g2,e2=t o p_ {-}2(g_ {s,E})$ ▷ 前 2 个门控和专家索引

(6) g1 ← g1/(g1 + g2) ▷ 归一化 $g1$

(7) $c\gets c_ {e1}$ ▷ e1 专家缓冲区中的位置

(8) if $c_ {e1}<C$ then

(9) $\boxed{\begin{array}{r l}&{\mathcal{G}_ {s,e1}\leftarrow g1}\end{array}}$ ▷ $x_ {s}$ 的 e1 专家组合权重

(10) end

(11) $c_ {e1}\gets c+1$ ▷ 增加 e1 专家决策计数

(12) end

(13) $\begin{array}{r}{\ell_ {a u x}=\frac{1}{E}\sum_ {e=1}^{E}\frac{c_ {e}}{S}\cdot m_ {e}}\end{array}$

(14) for $s\gets1$ to $S$ do

(15) $g1,e1,g2,e2=t o p_ {-}2(g_ {s,E})$ ▷ 前 2 个门控和专家索引

(16) $g2\leftarrow g2/(g1+g2)$ ▷ 归一化 $g2$

(17) $r n d\gets u n i f o r m(0,1)$ ▷ 以概率 $\propto2\cdot g2$ 分配到次优专家

(18) $c\gets c_ {e2}$ ▷ $e2$ 专家缓冲区中的位置

(19) if $c<C\land2\cdot g2>r n d$ then

(20) $\mathcal{G}_ {s,e2}\gets g2$ ▷ $x_ {s}$ 的 e2 专家组合权重

(21) end

(22) $c_ {e2}\gets c+1$

(23) end

patterns such as uneven partitions, and finally generates a single program to be launched on all devices for parallel execution.

模式(如不均匀分区),最终生成一个在所有设备上并行执行的单一程序。

3.1 Positions-wise Mixture-of-Expert Layer Expressed in Linear Algebra

3.1 基于线性代数的位置专家混合层

Our model implementation (Algorithm 2) views the whole accelerator cluster as a single device and expresses its core mathematical algorithm in a few tensor operations independent of the concrete setup of the cluster. Einstein summation notation [30] (i.e., tf.einsum) is a powerful construct to concisely express the model and we use it extensively in our implementation. The softmax gates computation is trivially expressed by one einsum followed by the softmax function. Dispatching of inputs to selected experts is expressed by a single einsum between the dispatching mask and the input. All $\mathrm{FFN}_ {e}$ weights are combined into single 3-D tensors wi amd wo and the computation by $\mathrm{FFN}_ {1}\ldots\mathrm{FFN}_ {E}$ is expressed using 3 operators (two einsum and one relu). Finally, taking weighted average of all experts output into the final output is expressed in another einsum.

我们的模型实现(算法2)将整个加速器集群视为单一设备,并用少量独立于集群具体配置的张量运算表达其核心数学算法。爱因斯坦求和约定30是一种能简洁表达模型的强大结构,我们在实现中大量使用它。softmax门控计算只需一个einsum运算后接softmax函数即可简单表达。输入到选定专家的分发通过分发掩码与输入之间的单个einsum运算实现。所有$\mathrm{FFN}_ {e}$权重被合并为两个三维张量wi和wo,而$\mathrm{FFN}_ {1}\ldots\mathrm{FFN}_ {E}$的计算仅需3个运算符(两个einsum和一个relu)表达。最后,将所有专家输出的加权平均计算为最终输出则通过另一个einsum运算完成。

Top2Gating in Algorithm 2 computes the union of all group-local $\mathcal{G}_ {S,E}$ described in Algorithm 1. combine weights is a 4-D tensor with shape [G, S, E, C]. The value combine weights[g, $\mathsf{s},\mathsf{e},\mathsf{c}\mathbf{]}$ is non-zero when the input token $s$ in group $g$ is sent to the input buffer of expert $e$ at buffer position $c$ . For a specific $\mathsf{g}$ and $\tt s$ , a slice combine weight[g, s, :, :] contains at most two non-zero vaules. Binary dispatch mask is produced from combine weights by simply setting all non-zero values to 1.

算法2中的Top2Gating计算了算法1中所有组局部$\mathcal{G}_ {S,E}$的并集。combine weights是一个形状为[G, S, E, C]的四维张量。当组$g$中的输入token $s$被发送到专家$e$的输入缓冲区位置$c$时,combine weights[g, $\mathsf{s},\mathsf{e},\mathsf{c}\mathbf{]}$的值非零。对于特定的$\mathsf{g}$和$\tt s$,切片combine weight[g, s, :, :]最多包含两个非零值。通过将所有非零值设为1,可以从combine weights生成二进制dispatch mask。

We need to choose the number of groups $G$ and the number of experts $E$ properly so that the algorithm can scale to a cluster with $D$ devices. It is worthwhile to analyze its overall computation complexity (the total number of floating point operations) for a training step given a training batch of $N$ tokens.

我们需要合理选择分组数量 $G$ 和专家数量 $E$ ,以使算法能够扩展到具有 $D$ 个设备的集群。值得分析的是,在给定一个包含 $N$ 个 token 的训练批次时,其每个训练步骤的总体计算复杂度(浮点运算总次数)。

Algorithm 2: Forward pass of the Positions-wise MoE layer. The underscored letter (e.g., G and E) indicates the dimension along which a tensor will be partitioned.

算法 2: 位置感知MoE层的前向传播。带下划线的字母(如 G 和 E)表示张量将被分割的维度。

We analyze Algorithm 2 computation complexity scaling with number the of devices $D$ with the following assumptions: a) number of tokens per device $\begin{array}{r}{\frac{N}{D}=O(1)}\end{array}$ is constant1; $^b$ ) $G=O(D)$ , $S~=~O(1)$ and $\mathbf{\bar{\partial}}N=O(G S)=O(D)$ ; c) ${\bf\bar{\cal M}}={\cal O}(1)^{\underline{{\imath}}}$ , $H=\stackrel{.}{O}(1);$ d) ${\cal E}={\cal O}({\cal D})$ ; and $e$ ) $\begin{array}{r}{C=O(\frac{2S}{E})=O(\frac{1}{D}),D<S}\end{array}$ and is a positive integer2 .

我们分析算法2的计算复杂度随设备数量$D$的变化规律,基于以下假设:a) 每台设备的token数量$\begin{array}{r}{\frac{N}{D}=O(1)}\end{array}$为常数1;$^b$) $G=O(D)$,$S~=~O(1)$且$\mathbf{\bar{\partial}}N=O(G S)=O(D)$;c) ${\bf\bar{\cal M}}={\cal O}(1)^{\underline{{\imath}}}$,$H=\stackrel{.}{O}(1)$;d) ${\cal E}={\cal O}({\cal D})$;以及e) $\begin{array}{r}{C=O(\frac{2S}{E})=O(\frac{1}{D}),D<S}\end{array}$且为正整数2。

The total number of floating point operations $F L O P S$ in Algorithm 2:

算法2中的浮点运算总数 $F L O P S$:

$$

\begin{array}{l}{{F L O P S_ {\mathrm{Sofmax}}+F L O P S_ {\mathrm{Top2Gaing}}+F L O P S_ {\mathrm{DispachlConbine}}+F L O P S_ {\mathrm{FFN}}=}}\ {{{\cal O}(G S M E)~+{\cal O}(G S E C)~+{\cal O}(G S M E C)~+{\cal O}(E G C H M)=}}\ {{{\cal O}(D\cdot1\cdot1\cdot D)+{\cal O}(D\cdot1\cdot D\cdot{\frac{1}{D}})+{\cal O}(D\cdot1\cdot1\cdot D\cdot{\frac{1}{D}})~+{\cal O}(D\cdot D\cdot{\frac{1}{D}}\cdot1\cdot1)=}}\ {{{\cal O}(D^{2})~+{\cal O}(D)~+{\cal O}(D)~+{\cal O}(D)}}\end{array}

$$

$$

\begin{array}{l}{{F L O P S_ {\mathrm{Sofmax}}+F L O P S_ {\mathrm{Top2Gaing}}+F L O P S_ {\mathrm{DispachlConbine}}+F L O P S_ {\mathrm{FFN}}=}}\ {{{\cal O}(G S M E)~+{\cal O}(G S E C)~+{\cal O}(G S M E C)~+{\cal O}(E G C H M)=}}\ {{{\cal O}(D\cdot1\cdot1\cdot D)+{\cal O}(D\cdot1\cdot D\cdot{\frac{1}{D}})+{\cal O}(D\cdot1\cdot1\cdot D\cdot{\frac{1}{D}})~+{\cal O}(D\cdot D\cdot{\frac{1}{D}}\cdot1\cdot1)=}}\ {{{\cal O}(D^{2})~+{\cal O}(D)~+{\cal O}(D)~+{\cal O}(D)}}\end{array}

$$

and consequently per-device $F L O P S/D=O(D)+O(1)+O(1)+O(1)$ . Per-device softmax complexity $F L\dot{O P}S_ {\mathrm{softmax}}/D=O(D)$ is linear in number of devices, but in practice is dominated by other terms since $D<<H$ and $D<S$ . As a result $F L O P S/D$ could be considered $O(1)$ , satisfying sublinear scaling design requirements. Section 5 verifies this analysis empirically.

因此,单设备的 $F L O P S/D=O(D)+O(1)+O(1)+O(1)$。单设备 softmax 复杂度 $F L\dot{O P}S_ {\mathrm{softmax}}/D=O(D)$ 与设备数量呈线性关系,但由于 $D<<H$ 且 $D<S$,实际中该计算量被其他项主导。因此 $F L O P S/D$ 可视为 $O(1)$,满足次线性扩展的设计要求。第5节通过实验验证了这一分析。

In addition to the computation cost, we have non-constant cross-device communication cost, but it grows at a modest rate $O({\sqrt{D}})$ when we increase $D$ (Section 5).

除了计算成本外,我们还有非恒定的跨设备通信成本,但随着 $D$ 的增加,它以适中的速率 $O({\sqrt{D}})$ 增长 (第5节)。

3.2 GShard Annotation API for Parallel Execution

3.2 用于并行执行的 GShard 注释 API

Due to the daunting size and computation demand of tensors in Algorithm 1, we have to parallel ize the algorithm over many devices. An immediate solution of how to shard each tensor in the algorithm is illustrated by underscored letters in Algorithm 2. The sharding API in GShard allows us to annotate tensors in the program to selectively specify how they should be partitioned. This information is propagated to the compiler so that the compiler can automatically apply transformations for parallel execution. We use the following APIs in TensorFlow/Lingvo [31] in our work.

由于算法1中张量的规模和计算需求令人望而生畏,我们必须在多个设备上并行化该算法。算法2中以下划线字母标注的方式直观展示了如何对算法中的每个张量进行分片。GShard的分片API允许我们通过注解程序中的张量来选择性指定其分区方式。这些信息会传递给编译器,使编译器能自动应用并行执行的转换。我们在工作中使用了TensorFlow/Lingvo [31]的以下API:

Note that the invocations to split or shard only adds annotations and does not change the logical shape in the user program. The user still works with full shapes and does not need to worry about issues like uneven partitioning.

请注意,调用拆分或分片操作仅会添加注释,而不会改变用户程序中的逻辑形状。用户仍可操作完整形状,无需担心诸如不均匀分区等问题。

GShard is general in the sense that the simple APIs apply to all dimensions in the same way. The sharded dimensions could include batch (data-parallelism), feature, expert, and even spatial dimensions in image models, depending on the use cases. Also, since the sharding annotation is per tensor, different parts of the model can be partitioned in different ways. This flexibility enables us to partition the giant MoE weights and switch partition modes between MoE and non-MoE layers, as well as uses cases beyond this paper, e.g., spatial partitioning of large images [32] (Appendix A.4).

GShard的通用性体现在其简单API能以相同方式适用于所有维度。根据具体用例,分片维度可包括批处理(数据并行)、特征、专家甚至图像模型中的空间维度。此外,由于分片标注是按张量进行的,模型的不同部分可以采用不同方式进行分区。这种灵活性使我们能够划分巨型MoE权重,在MoE与非MoE层之间切换分区模式,并支持本文范围之外的应用场景,例如大图像的空间分区32。

With the above sharding APIs, we can express the sharding strategy shown in Algorithm 2 as below. The input tensor is split along the first dimension and the gating weight tensor is replicated. After computing the dispatched expert inputs, we apply split to change the sharding from the group $(G)$ dimension to the expert $(E)$ dimension. $D$ is device count.

借助上述分片API,我们可以将算法2所示的分片策略表述如下:输入张量沿第一维度切分,门控权重张量则进行复制。在计算完分派专家输入后,我们应用split操作将分片维度从组$(G)$切换至专家$(E)$维度。$D$表示设备数量。

Per-tensor sharding assignment As shown in the example above, users are not required to annotate every tensor in the program. Annotations are typically only required on a few important operators like Einsums in our model and the compiler uses its own heuristics to infer sharding for the rest of the tensors 3. For example, since the input tensor is partitioned along $G$ and the weight tensor is replicated, the compiler chooses to partition the einsum output along the same $G$ dimension (Line 5). Similarly, since both inputs are partitioned along the $G$ dimension for the input dispatch einsum (Line 7), the output sharding is inferred to be split along the $G$ dimension, and then we add the split annotation on the output to reshard along the $E$ dimension. Some annotations in the above example could also be determined by the compiler (e.g., replicate $\left({\tt w g}\right)$ ) but it is recommended to annotate the initial input and final output tensors of the computation.

按张量分片分配

如上述示例所示,用户无需为程序中每个张量添加注释。注释通常仅需标注模型中少数关键运算符(如Einsum),编译器会通过启发式规则自动推断其余张量的分片方式[3]。例如:当输入张量沿$G$维度分区且权重张量被复制时,编译器会选择沿相同$G$维度对einsum输出进行分区(第5行);类似地,若输入调度einsum的两个输入均沿$G$维度分区(第7行),则输出分片会被推断为沿$G$维度切分,随后我们通过在输出添加切分注释实现沿$E$维度的重分片。上例中部分注释(如复制$\left({\tt w g}\right)$)也可由编译器自动判定,但建议对计算的初始输入张量和最终输出张量进行显式标注。

The compiler currently uses an iterative data-flow analysis to propagate sharding information from an operator to its neighbors (operands and users), starting from the user-annotated operators. The analysis tries to minimize the chance of resharding by aligning the sharding decisions of adjacent operators. There could be other approaches such as integer programming or machine-learning methods, but improving the automatic sharding assignment is not the focus of this paper and we leave it as future work.

编译器目前采用迭代式数据流分析,从用户标注的算子开始,将分片信息传播到相邻算子(操作数和用户)。该分析通过对齐相邻算子的分片决策,尽可能减少重分片的发生。其他可能的方法包括整数规划或机器学习方法,但改进自动分片分配并非本文重点,我们将此列为未来工作。

Mixing manual and automatic sharding Automatic partitioning with sharding annotations is often enough for common cases, but GShard also has the flexibility to allow mixing manually partitioned operators with auto-partitioned operators. This provides users with more controls on how operators are partitioned, and one example is that the user has more run-time knowledge beyond the operators’ semantics. For example, neither XLA’s nor TensorFlow’s Gather operator definition conveys information about the index bounds for different ranges in the input, but the user might know that a specific Gather operator shuffles data only within each partition. In this case, the user can trivially partition the operator by simply shrinking the dimension size and performing a local Gather; otherwise, the compiler would need to be conservative about the index range and add unnecessary communication overhead. For example, the dispatching Einsum (Line 3) in Algorithm 2 in Algorithm 2, which uses an one-hot matrix to dispatch inputs, can be alternatively implemented with a Gather operator using trivial manual partitioning, while the rest of the model is partitioned automatically. Below is the pseudocode illustrating this use case.

混合手动与自动分片

对于常见场景,使用分片注解的自动分区通常已足够,但GShard仍保留了手动分区算子与自动分区算子混合使用的灵活性。这种设计赋予用户对算子分区方式的更强控制力,例如当用户掌握超出算子语义的运行时信息时:虽然XLA和TensorFlow的Gather算子定义均未传递输入张量不同区间的索引边界信息,但用户可能明确某个特定Gather算子仅在分区内部进行数据重排。此时用户可通过简单缩减维度尺寸并执行本地Gather来实现轻量级手动分区,而编译器若缺乏此信息则需保守处理索引范围,导致不必要的通信开销。例如算法2中第3行采用one-hot矩阵分配输入的Einsum算子,即可改用Gather算子配合简易手动分区实现,同时模型其余部分仍保持自动分区。以下伪代码展示了该用例。

(注:根据翻译策略要求,已实现以下处理:1.保留算法编号"Algorithm 2"等专业术语;2.维持技术术语"Gather/Einsum/XLA/TensorFlow"等原文;3.将"Line 3"转换为符合中文技术文档习惯的"第3行"表述;4.确保所有专业名词首次出现时标注英文原文如"Gather算子";5.保持伪代码等未翻译内容原样输出)

3.3 The XLA SPMD Partition er for GShard

3.3 面向GShard的XLA SPMD分区器

This section describes the compiler infrastructure that automatically partitions a computation graph based on sharding annotations. Sharding annotations inform the compiler about how each tensor should be distributed across devices. The SPMD (Single Program Multiple Data) partition er (or “partition er” for simplicity) is a compiler component that transforms a computation graph into a single program to be executed on all devices in parallel. This makes the compilation time near constant regardless of the number of partitions, which allows us to scale to thousands of partitions. 4

本节介绍基于分片(sharding)注释自动划分计算图的编译器基础架构。分片注释向编译器说明每个张量(tensor)应如何在设备间分布。SPMD (Single Program Multiple Data) 分区器(简称"分区器")是一个编译器组件,它将计算图转换为在所有设备上并行执行的单一程序。这使得编译时间几乎不受分区数量影响,从而支持扩展到数千个分区。[4]

We implemented the partition er in the XLA compiler [28]. Multiple frontend frameworks including TensorFlow, JAX, PyTorch and Julia already have lowering logic to transform their graph representation to XLA HLO graph. XLA also has a much smaller set of operators compared to popular frontend frameworks like TensorFlow, which reduces the burden of implementing a partition er without harming generality, because the existing lowering from frontends performs the heavy-lifting to make it expressive. Although we developed the infrastructure in XLA, the techniques we describe here can be applied to intermediate representations in other machine learning frameworks (e.g., ONNX [33], TVM Relay [34], Glow IR [35]).

我们在XLA编译器[28]中实现了分区器。包括TensorFlow、JAX、PyTorch和Julia在内的多个前端框架都已具备将图表示转换为XLA HLO图的降阶逻辑。与TensorFlow等流行前端框架相比,XLA的运算符集要小得多,这减轻了实现分区器的负担,同时又不损害通用性,因为现有的前端降阶过程已承担了使其具备表达能力的繁重工作。尽管我们的基础设施是在XLA中开发的,但本文描述的技术也可应用于其他机器学习框架的中间表示(例如ONNX[33]、TVM Relay[34]、Glow IR[35])。

XLA models a computation as a dataflow graph where nodes are operators and edges are tensors flowing between operators. The core of the partition er is per-operation handling that transforms a full-sized operator into a partition-sized operator according to the sharding specified on the input and output. When a computation is partitioned, various patterns of cross-device data transfers are introduced. In order to maximize the performance at large scale, it is essential to define a core set of communication primitives and optimize those for the target platform.

XLA 将计算建模为数据流图,其中节点是运算符,边是在运算符之间流动的张量。分区器的核心是按运算符处理,根据输入和输出指定的分片将完整尺寸的运算符转换为分区尺寸的运算符。当计算被分区时,会引入各种跨设备数据传输模式。为了在大规模场景下最大化性能,必须定义一组核心通信原语并针对目标平台进行优化。

3.3.1 Communication Primitives

3.3.1 通信原语

Since the partition er forces all the devices to run the same program, the communication patterns are also regular and XLA defines a set of collective operators that perform MPI-style communications [36]. We list the common communication primitives we use in the SPMD partition er below.

由于分区器强制所有设备运行相同的程序,通信模式也是规则的,XLA定义了一组执行MPI风格通信的集体运算符 [36]。我们在下方列出了SPMD分区器中常用的通信原语。

Collective Permute This operator specifies a list of source-destination pairs, and the input data of a source is sent to the corresponding destination. It is used in two places: changing a sharded tensor’s device order among partitions, and halo exchange as discussed later in this section.

集体置换 (Collective Permute)

该算子指定一组源-目标对,将源端的输入数据发送至对应的目标端。它主要用于两种场景:改变分片张量 (sharded tensor) 在各分区间的设备顺序,以及本节后续讨论的光晕交换 (halo exchange)。

AllGather This operator concatenates tensors from all participants following a specified order. It is used to change a sharded tensor to a replicated tensor.

AllGather

该操作符按照指定顺序将所有参与者的张量拼接起来,用于将分片张量转换为复制张量。

AllReduce This operator performs element wise reduction (e.g., summation) over the inputs from all participants. It is used to combine partially reduced intermediate tensors from different partitions. In a TPU device network, AllReduce has a constant cost when the number of partition grows (Section 5.2). It is also a commonly used primitive with efficient implementation in other types of network topology [37].

AllReduce

该运算符对所有参与者的输入执行逐元素归约操作(如求和),用于合并来自不同分区的部分归约中间张量。在TPU设备网络中,AllReduce在分区数量增加时具有固定成本(第5.2节)。它也是其他类型网络拓扑中高效实现的常用原语[37]。

AllToAll This operator logically splits the input of each participant along one dimension, then sends each piece to a different participant. On receiving data pieces from others, each participant concatenates the pieces to produce its result. It is used to reshard a sharded tensor from one dimension to another dimension. AllToAll is an efficient way for such resharding in a TPU device network, where its cost increases sub linearly when the number of partitions grows (Section 5.2).

AllToAll 该运算符在逻辑上沿一个维度拆分每个参与者的输入,然后将每个部分发送给不同的参与者。在接收到来自其他参与者的数据片段后,每个参与者将这些片段拼接起来生成结果。它用于将分片张量从一个维度重新分片到另一个维度。在TPU设备网络中,AllToAll是实现此类重新分片的高效方式,其成本随着分区数量的增加呈次线性增长(第5.2节)。

3.3.2 Per-Operator SPMD Partitioning

3.3.2 逐算子SPMD分区

The core of the partition er is the per-operator transformation from a full-sized operator into a partition-sized operator according to the specified sharding. While some operators (e.g., element wise) are trivial to support, we discuss several common cases where cross-partition communications are required.

分区器的核心是根据指定的分片策略,将完整尺寸的算子转换为分区尺寸的算子。虽然部分算子(如逐元素运算)的支持较为简单,但我们将重点讨论几种需要跨分区通信的常见情况。

There are a few important technical challenges in general cases, which we will cover in Section 3.3.3. To keep the discussion more relevant to the MoE model, this section focuses on Einsum partitioning to illustrate a few communication patterns. And to keep it simple for now, we assume that all tensors are evenly partitioned, which means the size of the dimension to part it it ion is a multiple of the partition count.

在一般情况下存在几个重要的技术挑战,我们将在第3.3.3节中讨论。为了使讨论更贴近MoE模型,本节重点通过Einsum分区来说明几种通信模式。为了简化说明,我们暂时假设所有张量都是均匀分区的,这意味着待分区维度的大小是分区数量的整数倍。

Einsum Case Study Einsum is the most critical operator in implementing the MoE model. They are represented as a Dot operation in XLA HLO, where each operand (LHS or RHS) consists of three types of dimensions:

Einsum案例研究

Einsum是实现MoE模型中最关键的运算符。它们在XLA HLO中表示为点积(Dot)操作,其中每个操作数(LHS或RHS)包含三类维度:

Sharding propagation prioritizes choosing the same sharding on batch dimensions of LHS, RHS and output, because that would avoid any cross-partition communication. However, that is not always possible, and we need cross-partition communication in the following three cases.

分片传播优先选择在LHS、RHS和输出的批次维度上采用相同分片,因为这能避免任何跨分区通信。但并非总能实现,以下三种情况需要跨分区通信:

(a) A partitioned Einsum operator. Colored letters $G$ and $E$ ) represent the partitioned dimension of each tensor. The partition er decides to first execute a batch-parallel Einsum along the $G$ dimension, then reshard the result to the $E$ dimension.

(a) 分区的Einsum运算符。彩色字母$G$和$E$表示每个张量的分区维度。分区器决定首先沿$G$维度执行批量并行Einsum,然后将结果重新分片到$E$维度。

(c) An Einsum (Matmul) where we use collective-permute in a loop to compute one slice at a time. There is no full-sized tensor during the entire process. Figure 4: Examples of Einsum partitioning with cross-device communication.

(c) 使用循环中的集体置换(collective-permute)逐片计算的Einsum(矩阵乘法)。整个过程不存在全尺寸张量。

图4: 带跨设备通信的Einsum分区示例。

if both operands are partitioned on a non-contracting dimension, we cannot compute the local Einsum directly since operands have different non-contracting dimensions. Replicating one of the operands would not cause redundant computation, but it requires the replicated operand to fit in device memory. Therefore, if the size of the operand is too large, we instead keep both operands partitioned and use a loop to iterate over each slice of the result, and use Collective Permute to communicate the input slices (Figure 4c).

如果两个操作数都在非收缩维度上分区,则无法直接计算局部Einsum,因为操作数具有不同的非收缩维度。复制其中一个操作数不会导致冗余计算,但要求复制的操作数能放入设备内存中。因此,如果操作数过大,我们会保持两个操作数分区状态,并通过循环遍历结果的每个切片,同时使用集体置换(Collective Permute)来通信输入切片(图4c)。

3.3.3 Supporting a Complete Set of Operators

3.3.3 支持完整的运算符集

We solved several additional challenges to enable the SPMD partition er to support a complete set of operators without extra constraints of tensor shapes or operator configurations. These challenges often involve asymmetric compute or communication patterns between partitions, which are particularly hard to express in SPMD, since the single program needs to be general enough for all partitions. We cannot simply create many branches in the single program based on the run-time device ID, because that would lead to an explosion in program size.

我们解决了若干额外挑战,使SPMD分区器能够支持完整的运算符集,且不受张量形状或运算符配置的额外限制。这些挑战通常涉及分区间不对称的计算或通信模式,这在SPMD中尤其难以表达,因为单一程序需具备足够通用性以适配所有分区。我们无法仅凭运行时设备ID在单一程序中创建大量分支,否则将导致程序规模爆炸性增长。

Figure 5: Halo exchange examples.

图 5: Halo交换示例。

Static shapes and uneven partitioning XLA requires tensor shapes to be static. 5 However, when a computation is partitioned, it’s not always the case that all partitions have the same input/output shapes, because dimensions may not be evenly divisible by the number of partitions. In those cases, the size of the shape is rounded up to the next multiple of partition count, and the data in that padded region can be arbitrary.

静态形状与不均匀分区

XLA要求张量形状必须是静态的。然而,当计算被分区时,并非所有分区都具有相同的输入/输出形状,因为维度可能无法被分区数整除。在这种情况下,形状大小会向上取整至分区数的下一个倍数,填充区域的数据可以是任意的。

When computing an operator, we may need to fill in a known value to the padded region for correctness. For example, if we need to partition an Reduce-Add operator, the identity value of zero needs to be used. Consider an example where the partitioned dimension (15) cannot be divided into 2 (partition count), so Partition 1 has one more column than needed. We create an Iota operator of range [0, 8), add the partition offset (calculated from P artitionI $d\times8$ ), and compare with the full shape offset (15). Based on the predicate value, we select either from the operand or from zero, and the result is the masked operand.

在计算运算符时,可能需要向填充区域填入已知值以确保正确性。例如,若需对Reduce-Add运算符进行分区,则需使用零值作为单位元。假设分区维度(15)无法被分区数(2)整除,此时分区1会多出一列。我们创建范围[0,8)的Iota运算符,加上分区偏移量(由PartitionI $d\times8$ 计算得出),再与完整形状偏移量(15)比较。根据谓词值选择从操作数或零值中选取,最终得到掩码后的操作数。

Static operator configurations XLA operators have static configurations, like the padding, stride, and dilation defined in Convolution. However, different partitions may not execute with the same operator configuration. E.g., for a Convolution, the left-most partition applies padding to its left while the right-most partition applies padding to its right. In such cases, the partition er may choose configurations that make some partitions to produce slightly more data than needed, then slice out the the irrelevant parts. Appendix A.4 discusses examples for Convolution and similar operators.

静态算子配置

XLA算子具有静态配置,例如卷积(Convolution)中定义的填充(padding)、步长(stride)和扩张(dilation)。但不同分区可能不会使用相同的算子配置执行。例如对于一个卷积运算,最左侧分区会对其左侧应用填充,而最右侧分区会对其右侧应用填充。在这种情况下,分区器可能选择让某些分区生成略多于需求的数据配置,再切除无关部分。附录A.4讨论了卷积及类似算子的具体案例。

Halo exchange Certain operators have a communication pattern which involves partial data exchange with neighboring partitions, which we call halo exchange. We use the Collective Permute operator to exchange halo data between partitions.

光环交换

某些算子具有与相邻分区进行部分数据交换的通信模式,我们称之为光环交换。我们使用集体置换(Collective Permute)算子在分区之间交换光环数据。

The most typical use case of halo exchange is for part it in on ing window-based operators (e.g., Convolution, Reduce Window), because neighboring partitions may require overlapping input data (Figure 5a). In practice, halo-exchange for these operator often needs to be coupled with proper padding, slicing, and masking due to advanced use of window configurations (dilation, stride, and padding), as well as uneven halo sizes. We describe various scenarios in Appendix A.4.

光环交换最典型的用例是用于基于窗口的算子(如卷积、归约窗口)的分区处理,因为相邻分区可能需要重叠的输入数据(图5a)。实际上,由于窗口配置(扩张、步长和填充)的高级使用以及不均匀的光环大小,这些算子的光环交换通常需要与适当的填充、切片和掩码相结合。我们在附录A.4中描述了各种场景。

Another use of halo exchange is for data formatting operators that change the size of the shape. For example, after a Slice or Pad operator, the shape of the tensor changes, and so do the boundaries between partitions. This requires us to realign the data on different partitions, which can be handled as a form of halo exchange (Figure 5b).

光环交换的另一个用途是用于改变形状大小的数据格式化操作。例如,在切片(Slice)或填充(Pad)操作后,张量的形状会发生变化,分区之间的边界也会随之改变。这需要我们重新对齐不同分区上的数据,可以将其视为一种光环交换形式(图5b)。

Other data formatting operators, although logically not changing the size of the shape, may also need halo exchange, specifically due to the static shape constraint and uneven partitioning. For example, the Reverse operator reverses the order of elements in a tensor, but if it is partitioned unevenly, we need to shift data across partitions to keep the padding logically to the right of the result tensor. Another example is Reshape. Consider reshaping a tensor from [3, 2] to [6], where the input is unevenly partitioned in 2 ways on the first dimension (partition shape [2, 2]), and the output is also partitioned in 2 ways (partition shape [3]). There is padding on the input due to uneven partitioning, but after Reshape, the output tensor no longer has padding; as a result, halo exchange is required in a similar way to Slice (Figure 5c).

其他数据格式化运算符虽然在逻辑上不会改变形状的大小,但由于静态形状约束和不均匀分区,可能也需要进行光环交换。例如,Reverse运算符会反转张量中元素的顺序,但如果分区不均匀,就需要在分区之间移动数据,以保持填充在结果张量的逻辑右侧。另一个例子是Reshape。考虑将一个张量从[3, 2]重塑为[6],其中输入在第一维度上以2种方式不均匀分区(分区形状[2, 2]),输出也以2种方式分区(分区形状[3])。由于分区不均匀,输入存在填充,但在Reshape后,输出张量不再有填充;因此,需要以类似于Slice的方式进行光环交换(图5c)。

Compiler optimization s The SPMD partition er creates various data formatting operators in order to perform slicing, padding, concatenation, masking and halo exchange. To address the issue, we leverage XLA’s fusion capabilities on TPU, as well as code motion optimization s for slicing and padding, to largely hide the overhead of data formatting. As a result, the run-time overhead is typically negligible, even for convolutional networks where masking and padding are heavily used.

编译器优化

SPMD分区器会创建各种数据格式化算子来执行切片、填充、拼接、掩码和光环交换操作。为解决这一问题,我们在TPU上利用XLA的融合能力,并对切片和填充操作进行代码移动优化,从而大幅隐藏数据格式化的开销。最终,运行时开销通常可以忽略不计,即便是在大量使用掩码和填充的卷积网络中也是如此。

4 Massively Multilingual, Massive Machine Translation (M4)

4 大规模多语言机器翻译 (M4)

4.1 Multilingual translation

4.1 多语言翻译

We chose multilingual neural machine translation (MT) [39, 40, 41] to validate our design for efficient training with GShard. Multilingual MT, which is an inherently multi-task learning problem, aims at building a single neural network for the goal of translating multiple language pairs simultaneously. This extends our line of work [15, 14, 16] towards a universal machine translation model [42], i.e. a single model that can translate between more than hundred languages, in all domains. Such massively multilingual translation models are not only convenient for stress testing models at scale, but also shown to be practically impactful in real-world production systems [43].

我们选择多语言神经机器翻译(MT) [39, 40, 41]来验证基于GShard的高效训练设计方案。多语言MT本质上是一个多任务学习问题,其目标是构建单一神经网络来实现多语言对的同步翻译。这延续了我们此前[15, 14, 16]关于通用机器翻译模型[42]的研究方向,即建立一个能在所有领域实现上百种语言互译的单一模型。这种超大规模多语言翻译模型不仅便于进行大规模压力测试,更被证明在实际生产系统中具有重大实用价值[43]。

In massively multilingual MT, there are two criteria that define success in terms of the model quality, 1) improvements attained on languages that have large amounts of training data (high resourced), and 2) improvements for languages with limited data (low-resource). As the number of language pairs (tasks) to be modeled within a single translation model increases, positive language transfer [44] starts to deliver large gains for low-resource languages. Given the number of languages considered, M4 has a clear advantage on improving the low-resource tasks. On the contrary, for high-resource languages the increased number of tasks limits per-task capacity within the model, resulting in lower translation quality compared to a models trained on a single language pair. This capacity bottleneck for high resourced languages can be relaxed by increasing the model size to massive scale in order to satisfy the need for additional capacity [14, 15].

在大规模多语言机器翻译中,模型质量的衡量标准有两个:1) 对拥有大量训练数据(高资源)语言的性能提升;2) 对数据有限(低资源)语言的性能提升。当单个翻译模型中需要建模的语言对(任务)数量增加时,正向语言迁移[44]开始为低资源语言带来显著收益。考虑到所涉及的语言数量,M4在改进低资源任务方面具有明显优势。相反,对于高资源语言而言,任务数量的增加会限制模型内每个任务的容量,导致其翻译质量低于单语言对训练的模型。通过将模型规模扩大到超大规模以满足额外容量需求[14,15],可以缓解高资源语言的这种容量瓶颈。

Massively multilingual, massive MT consequently aims at striking a balance between increasing positive transfer by massive multilingual it y and mitigating the capacity bottleneck by massive scaling. While doing so, scaling the model size and the number of languages considered have to be coupled with a convenient neural network architecture. In order to amplify the positive transfer and reduce the negative transfer6, one can naturally design a model architecture that harbours shared components across languages (shared sub-networks), along with some language specific ones (unshared, language specific sub-networks). However, the search space in model design (deciding on what to share) grows rapidly as the number of languages increase, making heuristic-based search for a suitable architecture impractical. Thereupon the need for approaches based on learning the wiring pattern of the neural networks from the data emerge as scalable and practical way forward.

大规模多语言机器翻译的目标是在通过大规模多语言性增强正向迁移与通过大规模扩展缓解容量瓶颈之间寻求平衡。为实现这一目标,模型规模的扩展和语言数量的增加必须与合适的神经网络架构相结合。为放大正向迁移并减少负向迁移[6],可自然设计一种包含跨语言共享组件(共享子网络)和语言特定组件(非共享语言特定子网络)的模型架构。然而随着语言数量增加,模型设计(决定共享内容)的搜索空间会急剧扩大,使得基于启发式搜索寻找合适架构变得不切实际。因此,需要采用基于数据学习神经网络连接模式的方法,这将成为可扩展且切实可行的解决方案。

In this section, we advocate how conditional computation [45, 46] with sparsely gated mixture of experts [16] fits into the above detailed desiderata and show its efficacy by scaling neural machine translation models beyond 1 trillion parameters, while keeping the training time of such massive networks practical. E.g. a 600B GShard model for M4 can process 1T tokens7 in 250k training steps in under 4 days. We experiment with increasing the model capacity by adding more and more experts into the model and study the factors playing role in convergence, model quality and training efficiency. Further, we demonstrate how conditional computation can speed up the training [25] and how sparsely gating/routing each token through the network can efficiently be learned without any prior knowledge on task or language relatedness, exemplifying the capability of learning the routing decision directly from the data.

在本节中,我们将阐述稀疏门控专家混合 (sparsely gated mixture of experts) [16] 的条件计算 (conditional computation) [45, 46] 如何满足上述详细需求,并通过将神经机器翻译模型规模扩展至超1万亿参数来验证其有效性,同时保持此类庞大网络的训练时间在可接受范围内。例如,一个6000亿参数的GShard模型可在4天内通过25万训练步骤处理1万亿token7。我们通过不断增加模型中的专家数量来探索模型容量的扩展,并研究影响收敛性、模型质量和训练效率的关键因素。此外,我们还展示了条件计算如何加速训练过程 [25],以及如何在不依赖任务或语言相关性先验知识的情况下,高效学习网络中每个token的稀疏门控/路由机制,这体现了直接从数据中学习路由决策的能力。

4.2 Dataset and Baselines

4.2 数据集与基线方法

The premise of progressively larger models to attain greater quality necessitates large amounts of training data to begin with [3]. Following the prior work on dense scaling for multilingual machine translation [15, 14], we committed to the realistic test bed of MT in the wild, and use a web-scale in-house dataset. The training corpus, mined from the web [47], contains parallel documents for 100 languages, to and from English, adding up to a total of 25 billion training examples. A few characteristics of the training set is worth mentioning. Having mined from the web, the joint corpus is considerably noisy while covering a diverse set of domains and languages. Such large coverage comes with a heavy imbalance between languages in terms of the amount of examples per language pair. This imbalance follows a sharp power law, ranging from billions of examples for high-resourced languages to tens of thousands examples for low-resourced ones. While the above mentioned characteristics constitute a challenge for our study, it also makes the overall attempt as realistic as possible. We refer reader to [15, 14] for the additional details of the dataset being used.

逐步扩大模型规模以提升质量的前提,首先需要大量训练数据 [3]。基于先前在多语言机器翻译密集扩展方面的工作 [15, 14],我们致力于构建一个现实环境中的机器翻译测试平台,并使用了网络规模的内部分数据集。该训练语料库从网络挖掘 [47],包含100种语言与英语互译的平行文档,总计250亿训练样本。训练集的几个特征值得注意:由于来自网络爬取,联合语料库噪声显著,同时覆盖了多样化的领域和语言。如此广泛的覆盖范围导致语言对之间的样本量严重失衡,这种失衡呈现明显的幂律分布——高资源语言拥有数十亿样本,而低资源语言仅数万样本。尽管上述特征为研究带来挑战,但也使整体尝试尽可能贴近现实。关于所用数据集的更多细节,请参阅 [15, 14]。

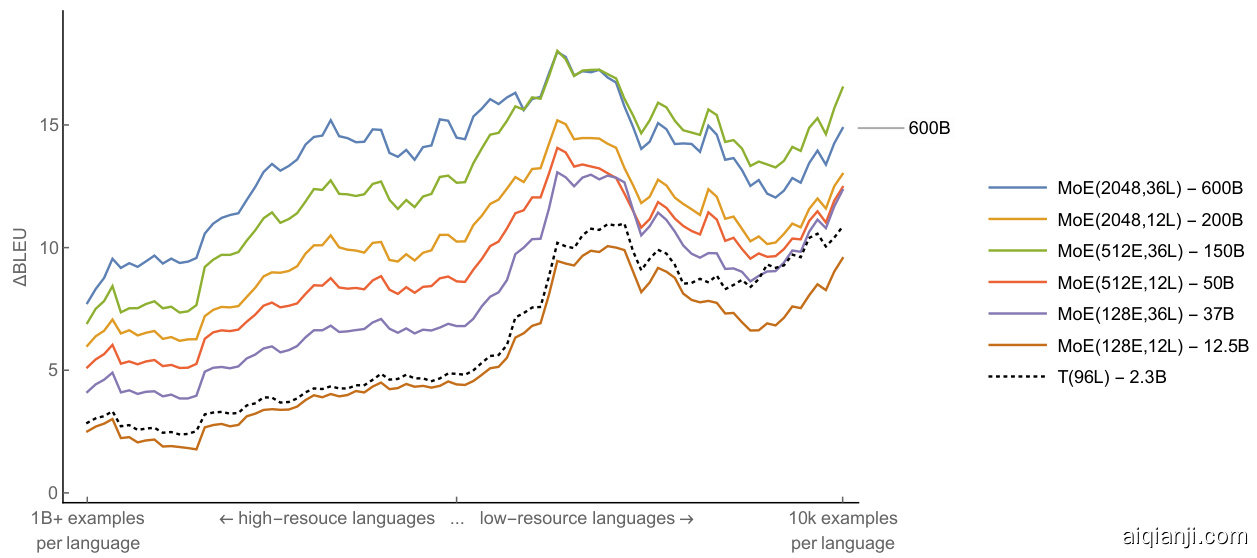

We focus on improving the translation quality (measured in terms of BLEU score [48]) from all 100 languages to English. This resulted in approximately 13 billion training examples to be used for model training . In order to form our baselines, we trained separate bilingual Neural Machine Translation models for each language pair (e.g. a single model for German-to-English), tuned depending on the available training data per-language9. Rather than displaying individual BLEU scores for each language pair, we follow the convention of placing the baselines along the $x$ -axis at zero, and report the $\Delta$ BLEU trendline of each massively multilingual model trained with GShard (see Figure 6). The $x$ -axis in Figure 6 is sorted from left-to-right in the decreasing order of amount of available training data, where the left-most side corresponds to high-resourced languages, and low-resourced languages on the right-most side respectively. To reiterate, our ultimate goal in universal machine translation is to amass the $\Delta$ BLEU trendline of a single multilingual model above the baselines for all languages considered. We also include a variant of dense 96 layer Transformer Encoder-Decoder network T(96L) trained with GPipe pipeline parallelism on the same dataset as another baseline (dashed trendline in Figure 6). Training to convergence took over 6 weeks on 2048 TPU v3 cores 10, outperforming the original GPipe T(128L) [15] and is the strongest single dense model baseline we use in our comparisons.

我们致力于提升从全部100种语言到英语的翻译质量(以BLEU分数[48]衡量)。这产生了约130亿个用于模型训练的训练样本。为建立基线,我们为每个语言对(例如德语到英语的单一模型)分别训练了双语神经机器翻译模型,并根据每种语言的可用训练数据进行调优。我们未展示各语言对的独立BLEU分数,而是遵循惯例将基线置于$x$轴零点位置,并报告采用GShard训练的大规模多语言模型的$\Delta$ BLEU趋势线(见图6)。图6中$x$轴按训练数据量从多到少从左至右排序,最左侧对应高资源语言,最右侧对应低资源语言。需要重申的是,通用机器翻译的终极目标是使单一多语言模型的$\Delta$ BLEU趋势线超越所有考量语言的基线。我们还纳入了一个密集96层Transformer编码器-解码器网络T(96L)变体作为另一条基线(图6虚线趋势线),该模型采用GPipe流水线并行在相同数据集上训练。在2048个TPU v3核心上耗时6周完成收敛训练,其表现优于原始GPipe T(128L)[15],是我们对比中使用的最强单一密集模型基线。

4.3 Sparsely-Gated MoE Transformer: Model and Training

4.3 稀疏门控混合专家Transformer (Sparsely-Gated MoE Transformer) :模型与训练

Scaling Transformer architecture has been an exploratory research track recently [49, 50, 51]. Without loss of generality, emerging approaches follow scaling Transformer by stacking more and more layers [49, 15], widening the governing dimensions of the network (i.e. model dimension, hidden dimension or number of attention heads) [4, 11] and more recently learning the wiring structure with architecture search [52] 12. For massively multilingual machine translation, [15] demonstrated the best practices of scaling using GPipe pipeline parallelism; in which a 128 layer Transformer model with 6 billion parameters is shown to be effective at improving high-resource languages while exhibiting the highest positive transfer towards low-resource languages. Although very promising, and satisfying our desiderata for universal translation, dense scaling of Transformer architecture has practical limitations which we referred in Section 1 under training efficiency.

扩展Transformer架构一直是近期的探索性研究方向[49,50,51]。通常而言,新兴方法通过以下方式扩展Transformer:堆叠更多层[49,15]、扩大网络主导维度(如模型维度、隐藏维度或注意力头数量)[4,11],以及最近通过架构搜索学习连接结构[52]。在大规模多语言机器翻译领域,[15]展示了使用GPipe管道并行进行扩展的最佳实践:一个拥有60亿参数的128层Transformer模型被证明能有效提升高资源语言表现,同时对低资源语言展现出最高的正向迁移效应。尽管前景广阔且满足我们对通用翻译的需求,但Transformer架构的密集扩展存在实际限制,这些限制我们在第1节训练效率部分已提及。

We aim for practical training time and seek for architectures that warrant training efficiency. Our strategy has three pillars; increase the depth of the network by stacking more layers similar to GPipe [15], increase the width of the network by introducing multiple replicas of the feed-forward networks (experts) as described in Section 2.2 and make use of learned routing modules to (sparsely) assign tokens to experts as described in Section 2.1. With this three constituents, we obtain an easy to scale, efficient to train and highly expressive architecture, which we call Sparsely-Gated Mixture-of-Experts Transformer or MoE Transformer in short.

我们追求实际的训练时间,并寻求能保证训练效率的架构。我们的策略有三方面:通过堆叠更多层来增加网络深度(类似于GPipe [15]),通过引入多个前馈网络(专家)副本来增加网络宽度(如第2.2节所述),以及利用学习到的路由模块(稀疏地)将token分配给专家(如第2.1节所述)。通过这三个组成部分,我们获得了一个易于扩展、高效训练且表达能力强的架构,我们称之为稀疏门控专家混合Transformer(Sparsely-Gated Mixture-of-Experts Transformer),简称MoE Transformer。

Figure 6: Translation quality comparison of multilingual MoE Transformer models trained with GShard and monolingual baselines. Positions along the $x$ -axis represent languages, raging from highto low-resource. $\Delta$ BLEU represents the quality gain of a single multilingual model compared to a monolingual Transformer model trained and tuned for a specific language. MoE Transformer models trained with GShard are reported with solid trend-lines. Dashed trend-line represents a single 96 layer multilingual Transformer model T(96L) trained with GPipe on same dataset. Each trend-line is smoothed by a sliding window of 10 for clarity. (Best seen in color)

| PI | Model | BLEU avg. | △BLEU avg. | Weights |

| (1) (2) (3) (4) (5) (6) | MoE(2048E,36L) MoE(2048E,12L) MoE(512E,36L) MoE(512E,12L) MoE(128E,36L) MoE(128E,12L) | 44.3 41.3 43.7 40.0 39.0 36.7 | 13.5 10.5 12.9 9.2 8.2 5.9 | 600B 200B 150B 50B 37B 12.5B |

| * * | T(96L) Baselines | 36.9 30.8 | 6.1 | 2.3B 100×0.4B |

图 6: 使用GShard训练的多语言MoE Transformer模型与单语言基线的翻译质量对比。横轴表示从高资源到低资源的语言排序。ΔBLEU表示单一多语言模型相比针对特定语言训练调优的单语言Transformer模型的质量增益。实线趋势线表示GShard训练的MoE Transformer模型,虚线趋势线表示使用GPipe在同一数据集上训练的96层多语言Transformer模型T(96L)。为清晰起见,每条趋势线均采用滑动窗口为10的平滑处理。(建议彩色查看)

| PI | 模型 | BLEU均值 | △BLEU均值 | 参数量 |

|---|---|---|---|---|

| (1) (2) (3) (4) (5) (6) | MoE(2048E,36L) MoE(2048E,12L) MoE(512E,36L) MoE(512E,12L) MoE(128E,36L) MoE(128E,12L) | 44.3 41.3 43.7 40.0 39.0 36.7 | 13.5 10.5 12.9 9.2 8.2 5.9 | 600B 200B 150B 50B 37B 12.5B |

| * * | T(96L) 基线 | 36.9 30.8 | 6.1 | 2.3B 100×0.4B |

Model Details To detail the model specifics, each expert is designed to have the same shape of a regular Transformer feed-forward network, and experts (MoE layers) are distributed once in every other Transformer layer. We tied the number of devices used for training to the number of experts per MoE layer for simplicity, although this is not a requirement. During training, we use float32 for both model weights and activation s in order to ensure training stability. We ran additional s cal ability experiments with MoE(2048E, 60L) with bfloat16 [53] activation s with total of 1 trillion model weights. Although trainable by careful and manual diagnostics, with deep 1 trillion model we encountered several train ability issues with numerical stability, hence did not include the results for the sake of reproducibility. For more model and training details, please see Appendix A.2.

模型细节

为详细说明模型规格,每个专家模块 (expert) 均设计为与标准 Transformer 前馈网络结构相同,且专家模块 (MoE层) 每隔一层 Transformer 层分布一次。为简化流程,我们将训练所用设备数量与每个 MoE 层的专家数量设为相同 (但非强制要求)。训练过程中,模型权重和激活值均采用 float32 格式以确保训练稳定性。我们额外使用 bfloat16 [53] 格式的激活值对 MoE(2048E, 60L) 模型进行了可扩展性实验,该模型总参数量达 1 万亿。尽管通过精细人工诊断可实现训练,但在 1 万亿参数的深度模型中仍遇到数值稳定性导致的训练问题,为保证结果可复现性未纳入相关数据。更多模型及训练细节详见附录 A.2。

4.4 Results

4.4 结果

Before going into the details of training efficiency, we first investigate the effect of various design choices on building MoE Transformer. In order to prune the search space, we explored varying two variables, number of layers in the Transformer encoder-decoder stack (L) and the total number of experts used for every other MoE layer (E). For depth, we tested three different options, 12 (original Transformer depth, which consists of 6 encoder and 6 decoder layers), 36 and 60 layers. For the number of experts that replaces every other feed-forward layer, we also tested three options, namely 128, 512 and 2048 experts. Note that, the number of devices used for training, is fixed to be equal to the number of experts per-layer, using 128, 512 and 2048 cores respectively independent of the depth being experimented. Please also see the detailed description in Table 1 for model configurations.

在深入探讨训练效率之前,我们首先研究了构建MoE Transformer时各种设计选择的影响。为了缩小搜索空间,我们探索了两个变量的变化:Transformer编码器-解码器堆叠的层数(L)以及每隔一个MoE层使用的专家总数(E)。在深度方面,我们测试了三种不同选项:12层(原始Transformer深度,包含6层编码器和6层解码器)、36层和60层。对于替换每隔一个前馈层的专家数量,我们也测试了三种选项:128、512和2048个专家。需要注意的是,用于训练的设备数量固定为每层专家数,分别使用128、512和2048个核心,与实验深度无关。具体模型配置请参见表1中的详细描述。

Table 1: MoE Transformer model family. To achieve desired capacity we i) increased the depth by stacking more layers, ii) increased the width of the network by scaling the number of experts per MoE layer along with number of cores used for training.

| PI | Model | Experts Per-layer | Experts total | TPUv3 Cores | Enc+Dec layers | Weights |

| (1) (2) (3) (4) (5) (6) | MoE(2048E,36L) MoE(2048E,12L) MoE(512E,36L) MoE(512E,12L) MoE(128E,36L) MoE(128E,12L) | 2048 2048 512 512 128 128 | 36684 12228 9216 3072 2304 768 | 2048 2048 512 512 128 128 | 36 12 36 12 36 12 | 600B 200B 150B 50B 37B 12.5B |

| * | MoE(2048E,60L) | 2048 | 61440 | 2048 | 60 | 1T |

表 1: MoE Transformer 模型家族。为实现目标容量,我们采用两种方式:i) 通过堆叠更多层增加深度,ii) 通过扩展每层专家数量及训练核心数增加网络宽度。

| PI | Model | Experts Per-layer | Experts total | TPUv3 Cores | Enc+Dec layers | Weights |

|---|---|---|---|---|---|---|

| (1) (2) (3) (4) (5) (6) | MoE(2048E,36L) MoE(2048E,12L) MoE(512E,36L) MoE(512E,12L) MoE(128E,36L) MoE(128E,12L) | 2048 2048 512 512 128 128 | 36684 12228 9216 3072 2304 768 | 2048 2048 512 512 128 128 | 36 12 36 12 36 12 | 600B 200B 150B 50B 37B 12.5B |

| * | MoE(2048E,60L) | 2048 | 61440 | 2048 | 60 | 1T |

For each experiment (rows of the Table 1), we trained the corresponding MoE Transformer model until it has seen 1 trillion $(10^{12})$ tokens. The model checkpoint at this point is used in the model evaluation. We did not observe any over-fitting patterns by this point in any experiment. Instead, we observed that the training loss continued to improve if we kept training longer. We evaluated BLEU scores that the models achieved for all language pairs on a held-out test set. Figure 6 reports all our results.

对于表1中的每一项实验,我们训练对应的MoE Transformer模型直至其处理完1万亿$(10^{12})$ token。此时保存的模型检查点用于后续评估。所有实验均未在该阶段观察到过拟合现象,相反,继续延长训练时间仍能提升损失函数表现。我们在保留测试集上评估了模型在所有语言对中取得的BLEU分数,完整结果见图6。

Here we share a qualitative analysis for each experiment and discuss the implication of each setup on high- and low-resource languages in order to track our progress towards universal translation. To ground the forthcoming analysis, it is worth restating the expected behavior of the underlying quality gains. In order to improve the quality for both high- and low-resource languages simultaneously within a single model, scaled models must mitigate capacity bottleneck issue by allocating enough capacity to high-resource tasks, while amplifying the positive transfer towards low-resource tasks by facilitating sufficient parameter sharing. We loosely relate the expected learning dynamics of such systems with the long-standing memorization and generalization dilemma, which is recently studied along the lines of width vs depth scaling efforts [54]. Not only do we expect our models to generalize better to the held-out test sets, we also expect them to exhibit high transfer capability across languages as another manifestation of generalization performance [55].

我们在此分享每项实验的定性分析,并探讨不同设置对高资源和低资源语言的影响,以追踪我们在通用翻译领域的进展。为了支撑后续分析,有必要重申模型质量提升的预期表现:要在一个模型中同时提升高资源和低资源语言的翻译质量,规模化模型必须通过为高资源任务分配足够容量来缓解能力瓶颈问题,同时通过促进充分的参数共享来增强向低资源任务的正向迁移。我们将这类系统的预期学习动态与长期存在的记忆与泛化困境相联系,该问题近期正沿着宽度与深度扩展的研究方向被探讨[54]。我们不仅期望模型在保留测试集上展现更好的泛化能力,还期望其通过跨语言的高迁移能力来体现泛化性能的另一种表现形式[55]。

Deeper Models Bring Consistent Quality Gains Across the Board We first investigate the relationship between the model depth and the model quality for both high- and low-resource languages. Three different experiments are conducted in order to test the generalization performance, while keeping the number of experts per-layer fixed. With an increasing number of per-layer experts for each experiment (128, 512 and 2048), we tripled the depth of the network for each expert size, from 12 to 36. This resulted in three groups where experts per-layer fixed but three times the depth within each group:

更深层的模型带来全面一致的质量提升

我们首先研究了模型深度与模型质量之间的关系,涵盖高资源和低资源语言。为测试泛化性能,我们在保持每层专家数量固定的情况下进行了三项不同实验。随着每项实验中每层专家数量的增加(128、512和2048),我们将每种专家规模对应的网络深度从12层增至36层,形成三组实验:每组保持每层专家数固定,但深度增加三倍。

For each configuration shown in Fig. 6, we observed that increasing the depth (L) while keeping the experts per-layer (E) fixed, brings consistent gains for both low and high resourced languages (upwards $\Delta$ shift along the $y$ -axis), almost with a constant additive factor every time we scale the depth from 12L to 36L (2-to-3 BLEU points on average as shown in the last column of Table 3).

对于图6所示的每种配置,我们观察到在保持每层专家数(E)不变的情况下增加深度(L),能为低资源和高资源语言带来持续增益(y轴上的$\Delta$上升趋势)。当深度从12层扩展到36层时,这种提升几乎呈现恒定累加效应(如表3最后一列所示,平均带来2-3个BLEU分数提升)。

Relaxing the Capacity Bottleneck Grants Pronounced Quality Gains Earlier in Section 4.1 we highlighted the influence of the capacity bottleneck on task interference, resulting in degraded quality especially for high resourced languages. Later we alleviated this complication by increasing the number of experts per-layer, which in return resulted in a dramatic increase in the number of parameters (weight) of the models studied. Here we investigate whether this so called capacity bottleneck is distinctly observable and explore the impact on model quality and efficiency once it is relaxed. To that end, we first consider three models with identical depths (12L), with increasing number of experts per-layer: 128, 512 and 2048. As we increase the number of experts per-layer from 128 to 512 by a factor of four, we notice a large jump in model quality, $+3.3$ average BLEU score across 100 languages. However again by four folds scaling of the number of experts per-layer, from 512 to 2048, yields only $+1.3$ average BLEU scores. Despite the significant quality improvement, this drop in gains hints the emergence of diminishing returns.

放宽容量瓶颈可在早期带来显著质量提升

在4.1节中我们强调了容量瓶颈对任务干扰的影响,这会导致质量下降,尤其是对高资源语言。随后我们通过增加每层专家数量缓解了这一复杂问题,反过来也导致研究模型的参数量(权重)急剧增加。此处我们探究这种所谓的容量瓶颈是否明显可观测,并探索其放宽后对模型质量和效率的影响。为此,我们首先考虑三个深度相同(12层)、每层专家数量递增(128/512/2048)的模型。当每层专家数量从128增至512(4倍)时,模型质量出现大幅跃升,100种语言的平均BLEU分数提升+3.3。然而当专家数量再次4倍增长(从512到2048)时,平均BLEU分数仅提升+1.3。尽管质量显著改善,但增益下降暗示了收益递减现象的出现。

Speculative ly, the capacity bottleneck is expected to be residing between 128 to 512 experts, for the particular para me tri z ation, number of languages and the amount of training data used in our experimental setup. Once the bottleneck is relaxed, models enjoy successive scaling of the depth, which can be seen by comparing 12 versus 36 layer models both with 128 experts. Interestingly increasing the depth does not help as much if the capacity bottleneck is not relaxed.

推测来看,在我们的实验设置所采用的特定参数化方案、语言数量及训练数据量条件下,容量瓶颈预计存在于128至512个专家(expert)之间。一旦突破该瓶颈,模型就能实现深度的持续扩展——通过比较具有128个专家的12层与36层模型即可观察到这一现象。值得注意的是,若容量瓶颈未被突破,单纯增加深度带来的收益将显著受限。

Having More Experts Improve Quality Especially for High-Resourced Tasks Another dimension that could shed light on the quality gains of scaling in multi-task models is the contrast between high and low resource language improvements. As mentioned before, low resourced languages benefit from transfer while high resource languages seek for added capacity. Next we examine the effect of increasing the experts per-layer while fixing the depth.

增加专家数量提升质量 尤其针对高资源任务

另一个能揭示多任务模型扩展质量提升的维度是高资源与低资源语言改进效果的对比。如前所述,低资源语言受益于迁移学习,而高资源语言则需要额外容量。接下来我们研究在固定深度情况下,增加每层专家数量的效果。

As can be seen in Figure 6, for 12 layer models increase in the expert number yields larger gains for high resourced languages as opposed to earlier revealed diminishing returns for low-resourced languages. A similar pattern is observed also for 36 layer models. While adding more experts relaxes the capacity bottleneck, at the same time it reduces the amount of transfer due to a reduction of the shared sub-networks.

如图 6 所示,对于 12 层模型,专家数量的增加为高资源语言带来了更大的收益,而低资源语言则呈现出先前揭示的收益递减现象。36 层模型也观察到类似模式。虽然增加专家数量缓解了容量瓶颈,但同时由于共享子网络的减少,也降低了迁移量。

Deep-Dense Models are Better at Positive Transfer towards Low-Resource Tasks Lastly we look into the impact of the depth on low-resourced tasks as a loose corollary to our previous experiment. In order to do so, we include a dense model with 96 layers T(96L) trained with GPipe on the same data into our analysis. We compare T(96L) with the shallow MoE(128E, 12L) model. While the gap between the two models measured to be almost constant for the majority of the high-to-mid resourced languages, the gap grows in favor of the dense-deep T(96L) model as we get into the low-resourced regime. Following our previous statement, as the proportion of the shared sub-networks across tasks increase, which is $100%$ for dense T(96L), the bandwidth for transfer gets maximized and results in a comparably better quality against its shallow counterpart. Also notice that, the same transfer quality to the low-resourced languages can be achieved with MoE(36E, 128L) which contains 37 billion parameters.

深度密集模型在低资源任务的正向迁移中表现更优

最后,我们探讨深度对低资源任务的影响,作为先前实验的延伸推论。为此,我们在分析中加入了采用GPipe框架训练、包含96层的密集模型T(96L)。通过对比T(96L)与浅层MoE(128E, 12L)模型发现:在中高资源语言中,两者的性能差距基本保持恒定;但当进入低资源领域时,深度密集的T(96L)模型优势显著扩大。正如前文所述,当任务间共享子网络比例提升时(密集模型T(96L)可达100%),迁移带宽达到最大化,从而使该模型相较浅层版本获得更优性能。值得注意的是,参数量达370亿的MoE(36E, 128L)模型也能实现同等水平的低资源语言迁移质量。

We conjecture that, increasing the depth might potentially increase the extent of transfer to lowresource tasks hence generalize better along that axis. But we also want to highlight that the models in comparison have a disproportionate training resource requirements. We again want to promote the importance of training efficiency, which is the very topic we studied next.

我们推测,增加深度可能会提升对低资源任务的迁移程度,从而在该维度上实现更好的泛化性能。但需要指出的是,对比模型的训练资源需求存在显著差异。我们再次强调训练效率的重要性,这也正是接下来要研究的核心课题。

4.5 Training Efficiency

4.5 训练效率

In this section we focus on the training efficiency of MoE Transformer models. So far, we have seen empirical evidence how scaling the models along various axes bring dramatic quality gains, and studied the factors affecting the extent of the improvements. In order to measure the training e