Tree of Clarifications: Answering Ambiguous Questions with Retrieval-Augmented Large Language Models

Gangwoo Kim$^{1}$, Sungdong Kim$^{2,3,4}$, Byeongguk Jeon$^{1}$, Joonsuk Park$^{2,3,5}$, Jaewoo Kang$^{1}$

$^{1}$Korea University, $^{2}$NAVER Cloud, $^{3}$NAVER AI Lab, $^{4}$KAIST AI, $^{5}$University of Richmond

{gangwookim, bkjeon1211, kangj}@korea.ac.kr, sungdong.kim@navercorp.com, park@joonsuk.org

Abstract

Questions in open-domain question answering are often ambiguous, allowing multiple interpretations. One approach to handling them is to identify all possible interpretations of the ambiguous question (AQ) and to generate a long-form answer addressing them all, as suggested by Stelmakh et al. (2022). While this provides a comprehensive response without bothering the user for clarification, considering multiple dimensions of ambiguity and gathering the corresponding knowledge remains a challenge. To cope with the challenge, we propose a novel framework, TREE OF CLARIFICATIONS (TOC): it recursively constructs a tree of disambiguations for the AQ, via few-shot prompting that leverages external knowledge, and uses it to generate a long-form answer. TOC outperforms existing baselines on ASQA in a few-shot setup across all metrics, while surpassing fully-supervised baselines trained on the whole training set in terms of Disambig-F1 and Disambig-ROUGE. Code is available at github.com/gankim/tree-of-clarifications.

1 Introduction

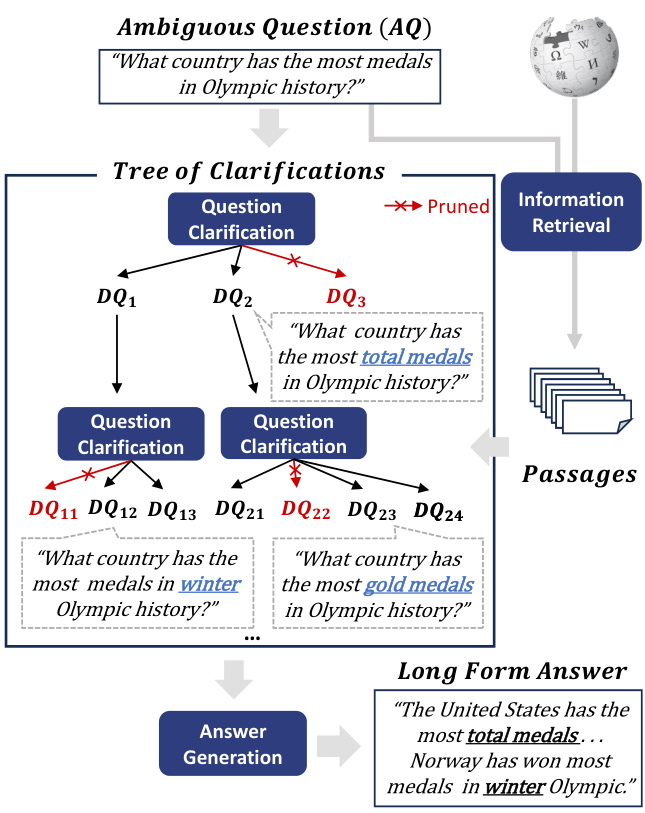

In open-domain question answering (ODQA), users often ask ambiguous questions (AQs), which can be interpreted in multiple ways. To handle AQs, several approaches have been proposed, such as providing individual answers to disambiguated questions (DQs) for all plausible interpretations of the given AQ (Min et al., 2020) or asking a clarification question (Guo et al., 2021). Among them, we adopt that of Stelmakh et al. (2022), which provides a comprehensive response without bothering the user for clarification: the task is to identify all DQs of the given AQ and generate a long-form answer addressing all the DQs (see Figure 1).

There are two main challenges to this task: (1) the AQ may need to be clarified by considering multiple dimensions of ambiguity. For example, the AQ “what country has the most medals in Olympic history” in Figure 1 can be clarified with respect to the type of medals—gold, silver, or bronze—or Olympics—summer or winter; and (2) substantial knowledge is required to identify DQs and respective answers. For example, it requires knowledge to be aware of the existence of different types of medals and the exact counts for each country.

Figure 1: Overview of TREE OF CLARIFICATIONS. (1) Relevant passages for the ambiguous question (AQ) are retrieved. (2) Leveraging the passages, disambiguated questions (DQs) for the AQ are recursively generated via few-shot prompting and pruned as necessary. (3) A long-form answer addressing all DQs is generated.

To address these challenges and provide a long-form answer to the AQ, we propose a novel framework, TREE OF CLARIFICATIONS (TOC): it recursively constructs a tree of DQs for the AQ, via few-shot prompting that leverages external knowledge, and uses it to generate a long-form answer. More specifically, relevant passages for the AQ are first retrieved. Then, leveraging the passages, DQs for the AQ are recursively generated via few-shot prompting and pruned as necessary. Lastly, a long-form answer addressing all DQs is generated. The tree structure promotes exploring DQs that target particular dimensions of clarification, addressing the first challenge, and the external sources offer additional knowledge to cope with the second challenge.

Experiments demonstrate that our proposed use of LLMs, with retrieval augmentation and guidance to pursue diverse paths of clarification, results in a new state of the art on ASQA (Stelmakh et al., 2022), a long-form QA benchmark for AQs. TOC outperforms existing baselines on ASQA in a few-shot setup across all metrics. In addition, this 5-shot performance surpasses that of the fully-supervised baselines trained on the whole training set by 7.3 and 2.9 in terms of Disambig-F1 and Disambig-ROUGE, respectively.

The main contribution of this work is proposing a novel framework, TREE OF CLARIFICATIONS (TOC), for generating long-form answers to AQs in ODQA, advancing the state-of-the-art on the ASQA benchmark. TOC introduces two main innovations:

• It guides LLMs to explore diverse paths of clarification of the given AQ in a tree structure, with the ability to prune unhelpful DQs.

• To the best of our knowledge, it is the first to combine retrieval systems with LLMs for generating long-form answers to AQs.

2 Related Work

A line of studies (Min et al., 2020, 2021; Gao et al., 2021; Shao and Huang, 2022) extends the retrieve-and-read frameworks dominant in the ODQA task (Chen et al., 2017; Karpukhin et al., 2020; Lewis et al., 2020; Izacard and Grave, 2021) to clarify the AQ and generate DQs with their corresponding answers. However, these approaches require fine-tuning models on a large-scale training set. In contrast, our framework enables an LLM to generate a comprehensive response addressing all DQs via few-shot prompting.

Recent studies introduce LLM-based methods to generate a long-form answer to the AQ. Amplayo et al. (2023) suggest optimal prompts specifically engineered for the task. Kuhn et al. (2022) prompt LLMs to clarify ambiguous questions selectively. However, these studies do not utilize external information to ensure the factual correctness of the disambiguations, thereby potentially increasing the risk of hallucinations from LLMs. Moreover, their results could be bounded by the inherent parametric knowledge of the LLM. Concurrently, Lee et al. (2023) automatically generate clarifying questions to resolve ambiguity.

Our framework involves a recursive tree architecture, inspired by several prior studies. Min et al. (2021) propose a tree-decoding algorithm to autoregressively rerank passages in ambiguous QA. Gao et al. (2021) iteratively explore additional interpretations and verify them in a round-trip manner. Concurrently, extending chain-of-thought prompting (Wei et al., 2022), Yao et al. (2023) apply a tree architecture to reasoning tasks for deductive or mathematical problems. In contrast, TOC recursively clarifies questions and introduces a self-verification method to prune unhelpful DQs.

3 Tree of Clarifications

We introduce a novel framework, TREE OF CLARIFICATIONS (TOC), as illustrated in Figure 1. We first devise retrieval-augmented clarification (RAC; Sec. 3.1), a basic component that clarifies the AQ and generates DQs based on relevant passages. TOC explores various fine-grained interpretations, represented as a tree structure (TS; Sec. 3.2), by recursively performing RAC and pruning unhelpful DQs. Lastly, it aggregates the tree and generates a long-form answer addressing all valid interpretations.

3.1 Retrieval-Augmented Clarification (RAC)

We first retrieve relevant Wikipedia documents for the AQ using two retrieval systems: ColBERT (Khattab and Zaharia, 2020) and the Bing search engine$^{1}$. ColBERT is a recent dense retriever that offers effective and efficient zero-shot search. Following Khattab et al. (2022), we use the off-the-shelf model pre-trained on MS MARCO (Bajaj et al., 2016). We additionally include the Bing search engine to promote the diversity of the retrieved Wikipedia passages. Finally, we obtain over 200 passages by combining the passages retrieved by each system.

After collecting a passage set for the AQ, we rerank the passages, choose the top $k$, and add them to the prompt. We use Sentence-BERT (Reimers and Gurevych, 2019) pre-trained on MS MARCO as the reranker backbone. For the in-context learning setup, we dynamically choose $k$-shot examples with nearest-neighbor search$^{2}$ and add them to the prompt. We start from the instruction of Amplayo et al. (2023) and revise it for our setup. Given the prompt with the relevant passages and the AQ, the LLM generates all possible DQs and their corresponding answers.
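The RAC prompt assembly described above might be sketched as follows. This is a minimal illustration, not the authors' implementation: a bag-of-words cosine similarity stands in for both the Sentence-BERT reranker and the nearest-neighbor exemplar search, and the names `build_rac_prompt`, `top_k`, `INSTRUCTION`, and the prompt layout are all assumptions made for the example.

```python
import math
import re
from collections import Counter

# Hypothetical instruction text; the paper adapts the instruction of Amplayo et al. (2023).
INSTRUCTION = "Rewrite the ambiguous question into disambiguated questions and answer each one."


def bow(text):
    """Lowercased bag-of-words counts (toy stand-in for a learned encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query, candidates, k, text_of=lambda c: c):
    """Return the k candidates most similar to the query, best first."""
    q = bow(query)
    return sorted(candidates, key=lambda c: cosine(q, bow(text_of(c))), reverse=True)[:k]


def build_rac_prompt(aq, passages, exemplars, k_passages=5, k_shots=5):
    """Assemble instruction, nearest few-shot exemplars, top passages, and the AQ."""
    shots = top_k(aq, exemplars, k_shots, text_of=lambda ex: ex["question"])
    context = top_k(aq, passages, k_passages)
    parts = [INSTRUCTION]
    for ex in shots:
        parts.append(f"Question: {ex['question']}\nDisambiguations:\n{ex['disambiguations']}")
    parts.append("\n".join(f"Passage {i}: {p}" for i, p in enumerate(context, start=1)))
    parts.append(f"Question: {aq}\nDisambiguations:")
    return "\n\n".join(parts)
```

The returned string would then be sent to the LLM, whose completion lists the DQs and their answers. Swapping `cosine` for a real reranker score only requires changing the `key` used in `top_k`.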

3.2 Tree Structure (TS)

To effectively explore the diverse dimensions of ambiguity, we introduce a recursive tree structure of clarifications. Starting from the root node with the AQ, it progressively inserts child nodes by recursively performing RAC, each of which contains a disambiguated question-answer pair. In each expansion step, passages are reranked again with respect to the current query. This allows each step to focus on its own DQ, encouraging TOC to cover a wider range of knowledge. Exploration of the tree ends when a termination condition is satisfied: the tree reaches either the maximum number of valid nodes or the maximum depth. We use breadth-first search (BFS) by default, so the resulting tree can cover broader interpretations$^{4}$.
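A breadth-first expansion with the two termination conditions named above could look like the sketch below. It is an illustration under stated assumptions: `clarify` is a caller-supplied stand-in for one RAC step (reranking plus an LLM call), the `Node` class and `build_tree` function are hypothetical names, and the default `max_depth=3` is a placeholder rather than a value given in the text.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Node:
    question: str
    answer: str = ""
    depth: int = 0
    children: list = field(default_factory=list)


def build_tree(aq, clarify, max_nodes=10, max_depth=3):
    """Expand the AQ breadth-first until a termination condition holds.

    `clarify(question)` returns a list of (dq, answer) pairs for one RAC step.
    """
    root = Node(aq)
    queue = deque([root])
    n_nodes = 0  # number of DQ nodes inserted so far (root excluded)
    while queue and n_nodes < max_nodes:
        node = queue.popleft()
        if node.depth >= max_depth:
            continue  # maximum depth reached: do not expand this node
        for dq, answer in clarify(node.question):
            if n_nodes >= max_nodes:
                break  # maximum number of nodes reached
            child = Node(dq, answer, node.depth + 1)
            node.children.append(child)
            queue.append(child)
            n_nodes += 1
    return root
```

Because the queue is first-in, first-out, all DQs at one depth are expanded before any of their children, which is what lets a small node budget still cover several dimensions of ambiguity.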

| Model | D-F1 | R-L | DR |
|---|---|---|---|
| Fully-supervised | | | |
| T5-Large Closed-Book | 7.4 | 33.5 | 15.7 |
| T5-Large w/ JPR | 26.4 | 43.0 | 33.7 |
| PaLM w/ Soft Prompt Tuning | 27.8 | 37.4 | 32.1 |
| Few-shot Prompting (5-shot) | | | |
| PaLM\* | 25.3 | 34.5 | 29.6 |
| GPT-3\* | 25.0 | 31.8 | 28.2 |
| Tree of Clarifications (TOC; Ours) | | | |
| GPT-3 + RAC | 31.1 | 39.6 | 35.1 |
| GPT-3 + RAC + TS | 32.4 | 40.0 | 36.0 |
| GPT-3 + RAC + TS w/ Pruning | 33.7 | 39.7 | 36.6 |

\* From Amplayo et al. (2023).

Table 1: Evaluation results for long-form QA on ambiguous questions from the development set of ASQA (Stelmakh et al., 2022). Baselines are either fully-supervised or 5-shot prompted. Note that the TOC framework consists of retrieval-augmented clarification (RAC) and tree structure (TS).

Pruning with Self-Verification. To remove unhelpful nodes, we design a pruning method inspired by recent studies on self-verification (Kadavath et al., 2022; Cole et al., 2023). Specifically, we check the factual coherency between the answers in a target node and the AQ in the root node. By doing so, we discard generated DQs that ask about facts different from, or irrelevant to, the original question. For example, given the AQ “Who will host the next world cup 2022?”, the generated disambiguation “DQ: Who hosted the world cup 2018? A: Russia” is a factually consistent question-answer pair, but it changes the original scope of the AQ$^{5}$. We perform self-verification by prompting the LLM to determine whether the current node should be pruned. Prompted with the AQ, the answer to the target DQ, and the answer-containing passage, the LLM identifies whether the given answer could be a correct answer to the AQ.
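The self-verification step under "Pruning with Self-Verification" can be sketched as a filter over candidate nodes. Everything here is illustrative: the prompt wording, the True/False answer format, and the names `verification_prompt` and `prune` are assumptions, and `llm` is any caller-supplied function that maps a prompt string to the model's text output.

```python
def verification_prompt(aq, answer, passage):
    """Build a yes/no verification prompt (hypothetical wording)."""
    return (
        f"Passage: {passage}\n"
        f"Question: {aq}\n"
        f"Proposed answer: {answer}\n"
        "Could the proposed answer be a correct answer to the question? "
        "Answer True or False:"
    )


def prune(aq, nodes, llm):
    """Keep only nodes whose answer the LLM accepts as a possible answer to the AQ.

    `nodes` is a list of dicts with keys "dq", "answer", and "passage".
    """
    kept = []
    for node in nodes:
        verdict = llm(verification_prompt(aq, node["answer"], node["passage"]))
        if verdict.strip().lower().startswith("true"):
            kept.append(node)
    return kept
```

Note that the prompt contains the root AQ rather than the node's own DQ, so a pair such as the 2018 World Cup example is rejected even though it is internally consistent.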

Answer Generation. Once the tree of clarifications is constructed, TOC aggregates all valid nodes and generates a comprehensive long-form answer to the AQ. It selects the disambiguations in the retained nodes of the resulting tree, together with the relevant passages. If the number of nodes is insufficient, we undo the pruning steps in BFS order, starting from the nodes closest to the root. Passages that contain the answers of valid nodes are prioritized. Finally, it generates a long-form answer, encoding the AQ, the selected disambiguations, and the relevant passages$^{6}$.
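The selection logic of the answer-generation step might be sketched as below. This is a simplified reading of the description, not the authors' code: `select_for_answer`, `min_nodes`, and `max_passages` are hypothetical names and thresholds, nodes are assumed to be listed in BFS order with a boolean `pruned` flag, and "contains the answer" is approximated by a case-insensitive substring match.

```python
def select_for_answer(nodes, passages, min_nodes=3, max_passages=5):
    """Pick disambiguations and passages to encode in the final prompt.

    `nodes`: dicts with keys "dq", "answer", "pruned", listed in BFS order.
    `passages`: candidate passage strings, already in reranked order.
    """
    selected = [n for n in nodes if not n["pruned"]]
    if len(selected) < min_nodes:
        # Undo pruning for the nodes closest to the root first (BFS order).
        for n in nodes:
            if len(selected) >= min_nodes:
                break
            if n["pruned"]:
                selected.append(n)
    answers = [n["answer"].lower() for n in selected]
    # Stable sort: passages containing a selected answer move to the front,
    # and the original ranking is preserved within each group.
    ranked = sorted(passages, key=lambda p: not any(a in p.lower() for a in answers))
    return selected, ranked[:max_passages]
```

The selected question-answer pairs and passages would then be concatenated with the AQ to form the final long-form generation prompt.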

4 Experiment

4.1 Experimental Setup

Datasets. All baselines and our framework are evaluated on ASQA (Stelmakh et al., 2022), a long-form QA dataset built upon the 6K ambiguous questions identified in AmbigNQ (Min et al., 2020). More details are in Appendix A.1.

Evaluation Metrics. We use three evaluation metrics, following Stelmakh et al. (2022). (1) Disambig-F1 (D-F1) measures the factual correctness of generated predictions. It extracts short answers to each DQ and computes their F1 accuracy. (2) ROUGE-L (R-L) measures the lexical overlap between the reference and predicted long-form answers. (3) The DR score is the geometric mean of D-F1 and R-L and assesses overall performance. For validating intermediate nodes, we additionally use Answer-F1, which measures the accuracy of the short answers generated during disambiguation. Further details are in Appendix A.2.
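As a quick check on the third metric, the DR score can be computed directly as the geometric mean of D-F1 and ROUGE-L. The function name `dr_score` is ours; the inputs below are the rounded TOC rows of Table 1, so values computed this way may differ from a reported DR in the last digit for rows whose unrounded inputs are not available.

```python
import math


def dr_score(disambig_f1, rouge_l):
    """Geometric mean of Disambig-F1 and ROUGE-L."""
    return math.sqrt(disambig_f1 * rouge_l)


# Rounded (D-F1, R-L) pairs for the three TOC rows of Table 1.
for name, d_f1, r_l in [
    ("GPT-3 + RAC", 31.1, 39.6),
    ("GPT-3 + RAC + TS", 32.4, 40.0),
    ("GPT-3 + RAC + TS w/ Pruning", 33.7, 39.7),
]:
    print(f"{name}: DR = {dr_score(d_f1, r_l):.1f}")
```

Because a geometric mean is pulled toward the smaller input, a model with a high ROUGE-L but a low D-F1 (such as the closed-book T5-Large row) still receives a low DR.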

Table 2: Ablation study on all components of retrieval-augmented clarification (RAC).

| Model | D-F1 | R-L | DR |
|---|---|---|---|
| GPT-3 (Baseline) | 24.2 | 36.0 | 29.5 |
| GPT-3 w/ RAC | 31.1 | 39.6 | 35.1 |
| - Disambiguations | 30.5 | 37.3 | 33.7 |
| - Bing Search Engine | 28.5 | 37.4 | 32.7 |
| - Retrieval Systems | 25.6 | 35.1 | 30.0 |

Baselines. Stelmakh et al. (2022) propose fine-tuned baselines. They fine-tune T5-large (Raffel et al., 2020) on the whole training set to generate long-form answers. Models are evaluated in the closed-book setup or combined with JPR (Min et al., 2021), a task-specific dense retriever for ambiguous QA that enhances DPR (Karpukhin et al., 2020). On the other hand, Amplayo et al. (2023) propose a prompt engineering method to adapt LLMs to the ASQA benchmark. They employ PaLM (Chowdhery et al., 2022) and InstructGPT (Ouyang et al., 2022), which either learn soft prompts or adopt in-context learning with few-shot examples. They conduct experiments in the closed-book setup. Note that they share the same backbone as our models, GPT-3 with 175B parameters (text-davinci-002).

4.2 Experimental Results

TOC outperforms fully-supervised and few-shot prompting baselines. Table 1 shows the long-form QA performance of the baselines and TOC on the development set of ASQA. Among the baselines, using the whole training set (Fully-supervised) performs better than Few-shot Prompting on all metrics, which implies that the long-form QA task is challenging in the few-shot setup. In the closed-book setup, GPT-3 is competitive with T5-large with JPR in D-F1 score, showing the LLM's strong reasoning ability over its inherent knowledge.

Among our models, the LLM with RAC outperforms all other baselines in D-F1 and DR scores, which indicates the importance of leveraging external knowledge when clarifying AQs. Employing the tree structure (TS) helps the model explore diverse interpretations, improving D-F1 and DR scores by 1.3 and 0.9, respectively. When pruning the tree with our proposed self-verification (TS w/ Pruning), the model achieves state-of-the-art performance in D-F1 and DR scores, surpassing the previous few-shot baseline by 8.4 and 7.0. Notably, it outperforms the best model in the fully-supervised setup (T5-large with JPR) by 7.3 and 2.9. In the experiment, T5-Large in the closed-book setup achieves performance comparable to the LLM baselines in ROUGE-L score despite its poor D-F1 score. This reconfirms the observation of Krishna et al. (2021) on the limitations of the ROUGE-L metric.

Table 3: Ablated results with and without pruning methods. The number of retained DQs after pruning and Answer-F1 are reported.

| Filtration | #(DQs) | Answer-F1 |
|---|---|---|
| w/o Pruning (None) | 12,838 | 40.9 |
| w/ Pruning | | |
| + Deduplication | 10,598 | 40.1 |
| + Self-Verification | 4,239 | 59.3 |
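The D-F1 and DR gains quoted in the Table 1 discussion can be re-derived from the reported scores with simple subtraction. The dictionary below copies the relevant Table 1 rows; `gain` is a helper name introduced for this check. Under this arithmetic, the 8.4 and 7.0 gap over "the previous few-shot baseline" matches the PaLM 5-shot row.

```python
# (D-F1, DR) scores copied from Table 1.
table1 = {
    "T5-Large w/ JPR": {"D-F1": 26.4, "DR": 33.7},
    "PaLM (5-shot)": {"D-F1": 25.3, "DR": 29.6},
    "GPT-3 + RAC": {"D-F1": 31.1, "DR": 35.1},
    "GPT-3 + RAC + TS": {"D-F1": 32.4, "DR": 36.0},
    "GPT-3 + RAC + TS w/ Pruning": {"D-F1": 33.7, "DR": 36.6},
}


def gain(model, baseline):
    """Return (D-F1 gain, DR gain) of `model` over `baseline`, to one decimal."""
    return tuple(
        round(table1[model][metric] - table1[baseline][metric], 1)
        for metric in ("D-F1", "DR")
    )


print(gain("GPT-3 + RAC + TS", "GPT-3 + RAC"))                  # effect of the tree structure
print(gain("GPT-3 + RAC + TS w/ Pruning", "PaLM (5-shot)"))     # vs. few-shot baseline
print(gain("GPT-3 + RAC + TS w/ Pruning", "T5-Large w/ JPR"))   # vs. best fully-supervised
```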

Integrating retrieval systems largely contributes to accurate and diverse disambiguations. Table 2 displays the ablation study measuring the contribution of each proposed component. When disambiguations are removed from the few-shot training examples, the ROUGE-L score degrades significantly, which shows the importance of this intermediate step in providing a complete answer. Integrating retrieval systems (i.e., the Bing search engine and ColBERT) largely improves model performance, especially in the D-F1 score. This indicates that using external knowledge is key to enhancing the factual correctness of clarification. We report an intrinsic evaluation of each retrieval system in Appendix B.

Our pruning method precisely identifies helpful disambiguations from the tree. Table 3 shows an intrinsic evaluation of the generated disambiguations, where all baselines are evaluated with the Answer-F1 score, which measures the F1 accuracy of the answer to the target DQ. Compared to the baseline, the valid nodes that pass self-verification contain more accurate disambiguations, achieving a much higher Answer-F1 score (+18.4). On the other hand, using deduplication alone does not improve the accuracy, indicating the efficacy of our proposed self-verification method.

5 Discussion

Ambiguity Detection. TOC is designed to clarify AQs without bothering users; hence, it does not explicitly identify whether the given question is ambiguous. It tries to perform clarification even if the question cannot be disambiguated any further, often generating duplicate or irrelevant DQs$^{7}$. However, we could presume a question to be unambiguous if it can no longer be disambiguated$^{8}$. In TOC, when it fails to disambiguate the given question or all generated disambiguations are pruned, the question could be regarded as unambiguous.

Computational Complexity. Although TOC requires multiple LLM calls, the maximum number of calls is fewer than 20 per question. Exploration of the tree ends when it obtains the pre-defined number of valid nodes (10 in our experiments). Since the clarification process generates two to five disambiguations for each question, the termination condition is satisfied in a few steps when the pruning method is not used. Failing to expand three times in a row also terminates the exploration. Pruning steps consume fewer tokens since they encode a single passage without few-shot exemplars. Compared to existing ensemble methods such as self-consistency (Wei et al., 2022), which cannot be directly applied to the generative task, TOC achieves state-of-the-art performance with a comparable number of LLM calls.

Generalizability. The key idea of TOC could potentially be generalized to other tasks and model architectures. It has a model-agnostic structure that could effectively explore diverse paths of recursive reasoning, which would be helpful for tasks that require multi-step reasoning, such as multi-hop QA. Future work might investigate the generalizability of TOC to diverse tasks, datasets, and LM architectures.

6 Conclusion

In this work, we propose a novel framework, TREE OF CLARIFICATIONS. It recursively builds a tree of disambiguations for the AQ via few-shot prompting with external knowledge and utilizes it to generate a long-form answer. Our framework explores diverse dimensions of interpretations of ambiguity. Experimental results demonstrate that TOC successfully guides LLMs to traverse diverse paths of clarification for a given AQ within the tree structure and to generate comprehensive answers. We hope this work sheds light on building robust clarification models that can be generalized to real-world scenarios.

Limitations

Although TOC is a model-agnostic framework that could be combined with other components, our study is limited in demonstrating its generalizability to different kinds or sizes of LLMs. In addition, the experiments are conducted on only one benchmark, ASQA (Stelmakh et al., 2022). Although TOC enables the LLM to explore diverse reasoning paths by iteratively prompting it, the cost of multiple prompting is not negligible.

We tried the recent prompting method, chain of thought (Wei et al., 2022), but it failed to enhance performance in our pilot experiments. This might indicate that the disambiguation process requires external knowledge, which shows the importance of document-grounded or retrieval-augmented systems. Future work could suggest other pruning methods that identify unhelpful DQs more effectively. The performance could be further enhanced by using a state-of-the-art reranker from the answer sentence selection task, as proposed by recent works (Garg et al., 2020; Lauriola and Moschitti, 2021).

Acknowledgements

The first author, Gangwoo Kim, has been supported by the Hyundai Motor Chung Mong-Koo Foundation. This research was supported by the National Research Foundation of Korea (NRF-2023R1A2C3004176, RS-2023-00262002), the MSIT (Ministry of Science and ICT), Korea, under the ICT Creative Consilience program (IITP-2022-2020-0-01819) supervised by the IITP (Institute for Information & communications Technology Planning & Evaluation), and the Electronics and Telecommunications Research Institute (RS-2023-00220195).

References

Reinald Kim Amplayo, Kellie Webster, Michael Collins, Dipanjan Das, and Shashi Narayan. 2023. Query refinement prompts for closed-book long-form question answering. Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics.

Payal Bajaj, Daniel Campos, Nick Craswell, Li Deng, Jianfeng Gao, Xiaodong Liu, Rangan Majumder, Andrew McNamara, Bhaskar Mitra, Tri Nguyen, et al. 2016. MS MARCO: A human generated machine reading comprehension dataset. 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain.

Danqi Chen, Adam Fisch, Jason Weston, and Antoine Bordes. 2017. Reading Wikipedia to answer open-domain questions. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1870–1879.

Aakanksha Chowdhery, Sharan Narang, Jacob Devlin, Maarten Bosma, Gaurav Mishra, Adam Roberts, Paul Barham, Hyung Won Chung, Charles Sutton, Sebastian Gehrmann, et al. 2022. PaLM: Scaling language modeling with pathways. arXiv preprint arXiv:2204.02311.

Jeremy R Cole, Michael JQ Zhang, Daniel Gillick, Julian Martin Eisenschlos, Bhuwan Dhingra, and Jacob Eisenstein. 2023. Selectively answering ambiguous questions. arXiv preprint arXiv:2305.14613.

Yifan Gao, Henghui Zhu, Patrick Ng, Cicero dos Santos, Zhiguo Wang, Feng Nan, Dejiao Zhang, Ramesh Nallapati, Andrew O Arnold, and Bing Xiang. 2021. Answering ambiguous questions through generative evidence fusion and round-trip prediction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 3263–3276.

Siddhant Garg, Thuy Vu, and Alessandro Moschitti. 2020. TANDA: Transfer and adapt pre-trained transformer models for answer sentence selection. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pages 7780–7788.

Meiqi Guo, Mingda Zhang, Siva Reddy, and Malihe Alikhani. 2021. Abg-CoQA: Clarifying ambiguity in conversational question answering. In 3rd Conference on Automated Knowledge Base Construction.

Gautier Izacard and Édouard Grave. 2021. Leveraging passage retrieval with generative models for open domain question answering. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, pages 874–880.

Jeff Johnson, Matthijs Douze, and Hervé Jégou. 2019. Billion-scale similarity search with GPUs. IEEE Transactions on Big Data, 7(3):535–547.

Saurav Kadavath, Tom Conerly, Amanda Askell, Tom Henighan, Dawn Drain, Ethan Perez, Nicholas Schiefer, Zac Hatfield Dodds, Nova DasSarma, Eli Tran-Johnson, et al. 2022. Language models (mostly) know what they know. arXiv preprint arXiv:2207.05221.

Vladimir Karpukhin, Barlas Oguz, Sewon Min, Patrick Lewis, Ledell Wu, Sergey Edunov, Danqi Chen, and Wen-tau Yih. 2020. Dense passage retrieval for open-domain question answering. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 6769–6781.

Omar Khattab, Keshav Santhanam, Xiang Lisa Li, David Hall, Percy Liang, Christopher Potts, and Matei Zaharia. 2022. Demonstrate-search-predict: Composing retrieval and language models for knowledge-intensive NLP. arXiv preprint arXiv:2212.14024.

Omar Khattab and Matei Zaharia. 2020. ColBERT: Efficient and effective passage search via contextualized late interaction over BERT. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pages 39–48.

Kalpesh Krishna, Aurko Roy, and Mohit Iyyer. 2021. Hurdles to progress in long-form question answering. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 4940–4957.

Lorenz Kuhn, Yarin Gal, and Sebastian Farquhar. 2022. Clam: Selective clarification for ambiguous questions with large language models. arXiv preprint arXiv:2212.07769.

Tom Kwiatkowski, Jennimaria Palomaki, Olivia Redfield, Michael Collins, Ankur Parikh, Chris Alberti, Danielle Epstein, Illia Polosukhin, Jacob Devlin, Kenton Lee, et al. 2019. Natural questions: A benchmark for question answering research. Transactions of the Association for Computational Linguistics, 7:452–466.

Ivano Lauriola and Alessandro Moschitti. 2021. Answer sentence selection using local and global context in transformer models. In European Conference on Information Retrieval, pages 298–312. Springer.

Dongryeol Lee, Segwang Kim, Minwoo Lee, Hwanhee Lee, Joonsuk Park, Sang-Woo Lee, and Kyomin Jung. 2023. Asking clarification questions to handle ambiguity in open-domain qa. arXiv preprint arXiv:2305.13808.

Patrick