Improving Language Models via Plug-and-Play Retrieval Feedback

通过即插即用检索反馈改进语言模型

Wenhao $\mathbf{Y}\mathbf{u}^{\pmb{\phi}}$ , Zhihan Zhang♣, Zhenwen Liang♣ Meng Jiang♣, Ashish Sabharwal♠ ♣University of Notre Dame; ♠Allen Institute for Artificial Intelligence ♣wyu1@nd.edu; ♠ashishs@allenai.org

Wenhao $\mathbf{Y}\mathbf{u}^{\pmb{\phi}}$ , Zhihan Zhang♣, Zhenwen Liang♣, Meng Jiang♣, Ashish Sabharwal♠

♣圣母大学; ♠艾伦人工智能研究所

♣wyu1@nd.edu; ♠ashishs@allenai.org

Abstract

摘要

Large language models (LLMs) exhibit remarkable performance across various NLP tasks. However, they often generate incorrect or hallucinated information, which hinders their practical applicability in real-world scenarios. Human feedback has been shown to effectively enhance the factuality and quality of generated content, addressing some of these limitations. However, this approach is resourceintensive, involving manual input and supervision, which can be time-consuming and expensive. Moreover, it cannot be provided during inference, further limiting its practical utility in dynamic and interactive applications. In this paper, we introduce REFEED, a novel pipeline designed to enhance LLMs by providing automatic retrieval feedback in a plugand-play framework without the need for expensive fine-tuning. REFEED first generates initial outputs, then utilizes a retrieval model to acquire relevant information from large document collections, and finally incorporates the retrieved information into the in-context demonstration for output refinement, thereby addressing the limitations of LLMs in a more efficient and cost-effective manner. Experiments on four knowledge-intensive benchmark datasets demonstrate our proposed REFEED could improve over $+6.0%$ under zero-shot setting and $+2.5%$ under few-shot setting, compared to baselines without using retrieval feedback.

大语言模型(LLM)在各种自然语言处理任务中展现出卓越性能,但仍常产生错误或虚构信息,制约了其实际应用价值。人类反馈虽能有效提升生成内容的真实性与质量,但存在资源消耗大、需人工介入监督等缺陷,且无法在推理阶段实时提供。本文提出REFEED创新框架,通过即插即用式自动检索反馈机制增强大语言模型,无需昂贵微调。该框架首先生成初始输出,随后利用检索模型从海量文档库获取相关信息,最终将检索结果融入上下文示例以实现输出优化,从而以更高性价比解决大语言模型的固有缺陷。在四个知识密集型基准数据集上的实验表明,相较于未使用检索反馈的基线模型,REFEED在零样本设定下可实现超过+6.0%的性能提升,在少样本设定下提升幅度达+2.5%。

1 Introduction

1 引言

Large language models (LLMs) have demonstrated exceptional performance in various NLP tasks, utilizing in-context learning to eliminate the need for task-specific fine-tuning (Brown et al., 2020; Chowdhery et al., 2022; OpenAI, 2023). Such models are typically trained on extensive datasets, capturing a wealth of world or domain-specific knowledge within their parameters.

大语言模型 (LLM) 在各种 NLP 任务中展现出卓越性能,通过上下文学习 (in-context learning) 避免了针对特定任务进行微调的需求 (Brown et al., 2020; Chowdhery et al., 2022; OpenAI, 2023)。这类模型通常在庞大数据集上训练,将丰富的世界知识或领域知识编码至参数中。

Despite these achievements, LLMs exhibit certain shortcomings, particularly when confronted with complex reasoning and knowledge-intensive tasks (Zhang et al., 2023; Yu et al., 2023). One prominent drawback is their propensity to hallucinate content, generating information not grounded by world knowledge, leading to untrustworthy outputs and a diminished capacity to provide accurate information (Yu et al., 2022b; Manakul et al., 2023; Alkaissi and McFarlane, 2023). Another limitation of LLMs is the quality and scope of the knowledge they store. The knowledge embedded within an LLM may be incomplete or out-of-date due to the reliability of the sources in the pre-training corpus (Lazaridou et al., 2022; Shi et al., 2023). The vastness of the information landscape exacerbates this issue, making it difficult for models to maintain a comprehensive and up-to-date understanding of world facts. Moreover, LLMs cannot “memorize” all world information, especially struggling with the long tail of knowledge from their training corpus (Mallen et al., 2022; Kandpal et al., 2022). This inherent limitation compels them to balance the storage and retrieval of diverse and rare knowledge against focusing on more frequently encountered information, leading to potential inaccuracies when addressing questions related to less common topics or requiring nuanced understanding.

尽管取得了这些成就,大语言模型(LLM)仍存在某些缺陷,尤其是在处理复杂推理和知识密集型任务时[20][21]。一个显著缺点是容易产生幻觉内容,生成缺乏现实依据的信息,导致输出不可靠且提供准确信息的能力下降[22][23][24]。另一个局限在于其存储知识的质量和范围。由于预训练语料库来源的可靠性问题,大语言模型中内嵌的知识可能不完整或过时[25][26]。海量信息环境加剧了这一难题,使得模型难以保持对世界事实全面且及时的理解。此外,大语言模型无法"记忆"所有世界信息,尤其难以掌握训练语料中的长尾知识[27][28]。这种固有局限迫使模型在存储检索多样罕见知识,与聚焦高频信息之间寻求平衡,导致处理冷门话题或需要细致理解的问题时可能出现偏差。

Existing methods for enhancing the factuality of language models involve adjusting model outputs based on human feedback, followed by reinforcement learning-based fine-tuning (Nakano et al., 2021; Campos and Shern, 2022; Ouyang et al., 2022; Liu et al., 2023). While this approach simu- lates human-to-human task learning environments, fine-tuning LLMs, can be exceedingly costly due to the exponential growth in LLM size and the necessity for annotators to provide extensive feedback. Over-reliance on positively-rated data may limit the model’s ability to identify and rectify negative attributes or errors, potentially hampering its capacity to generalize to unseen data and novel scenarios. Furthermore, once LLMs are fine-tuned, they are unable to receive real-time feedback during inference or facilitate immediate error correction.

现有提升语言模型事实性的方法包括基于人类反馈调整模型输出,随后进行基于强化学习的微调 (Nakano et al., 2021; Campos and Shern, 2022; Ouyang et al., 2022; Liu et al., 2023)。虽然这种方法模拟了人类间任务学习环境,但由于大语言模型规模的指数级增长以及标注者需提供大量反馈,微调大语言模型的成本可能极其高昂。过度依赖正面评分数据可能限制模型识别和纠正负面属性或错误的能力,进而影响其对未见数据和新场景的泛化能力。此外,大语言模型完成微调后,在推理阶段无法接收实时反馈或实现即时纠错。

In this paper, we aim to provide automatic feedback in a plug-and-play manner without the need for fine-tuning LLMs. We explore two primary research questions: First, can we employ a retrieval method to provide feedback on individual generated outputs without relying on human annotators? Second, can we integrate the feedback to refine previous outputs in a plug-and-play manner, circumventing the expensive fine-tuning of language models? With regards to the two questions posed, we propose a novel pipeline for improving language model inference through automatic retrieval feedback, named REFEED, in a plug-and-play framework. Specifically, the language model generates initial outputs, followed by a retrieval model using the original query and generated outputs as a new query to retrieve relevant information from large document collections like Wikipedia. The retrieved information enables the language model to reconsider the generated outputs and refine them, potentially producing a new output (though it may remain the same if no changes are made).

本文旨在以即插即用(plug-and-play)方式提供自动反馈,无需对大语言模型(LLM)进行微调。我们探讨两个核心研究问题:首先,能否采用检索方法对单个生成输出提供反馈,而无需依赖人工标注?其次,能否以即插即用方式整合反馈来优化先前输出,从而规避语言模型的高成本微调?针对这两个问题,我们提出名为REFEED的创新流程,通过自动检索反馈在即插即用框架中改进语言模型推理。具体而言,语言模型首先生成初始输出,随后检索模型以原始查询和生成输出作为新查询,从Wikipedia等大型文档集合中检索相关信息。检索到的信息促使语言模型重新审视生成输出并进行优化,可能产生新输出(若未作修改则保持原样)。

Notably, compared to retrieve-then-read methods (Lewis et al., 2020; Lazaridou et al., 2022; Shi et al., 2023), our method benefits from more relevant documents retrieved from the corpus to directly elucidate the relationship between query and outputs. Besides, without generating the initial output, the supporting document cannot be easily retrieved due to the lack of lexical or semantic overlap with the question. We discuss the comparison in more detail in related work and experiments.

值得注意的是,与先检索后阅读的方法 [Lewis et al., 2020; Lazaridou et al., 2022; Shi et al., 2023] 相比,我们的方法通过从语料库中检索更相关的文档来直接阐明查询与输出之间的关系。此外,若不生成初始输出,由于缺乏与问题的词汇或语义重叠,支持文档将难以被检索到。我们将在相关工作与实验部分进行更详细的对比分析。

To further enhance our proposed REFEED pipeline, we introduce two innovative modules within this framework. Firstly, we diversify the initial generation step to produce multiple output candidates, enabling the model to determine the most reliable answer by analyzing the diverse set of retrieved documents. Secondly, we adopt an ensemble approach that merges language model outputs before and after retrieval feedback using a perplexity ranking method, as the retrieval feedback may occasionally mislead the language model (refer to the case study in Figure 5 for details).

为进一步优化我们提出的REFEED流程,我们在该框架中引入了两个创新模块。首先,我们在初始生成阶段实现多样化输出,通过生成多个候选答案使模型能够基于检索到的多样化文档集确定最可靠的回答。其次,我们采用集成策略,使用困惑度排序方法将检索反馈前后的语言模型输出进行融合,因为检索反馈有时可能误导语言模型(具体案例分析参见图5)。

Overall, our main contributions can be summarized as follows:

总体而言,我们的主要贡献可概括如下:

- We propose a novel pipeline utilizing retrieval feedback, named REFEED to improve large language models in a plug-and-play manner.

- 我们提出了一种利用检索反馈的新型流程,名为 REFEED,以即插即用的方式改进大语言模型。

- We design two novel modules to advance the REFEED pipeline, specifically diversifying generation outputs and ensembling initial and postfeedback answers.

- 我们设计了两个创新模块来优化REFEED流程,具体包括:生成结果多样化处理,以及初始答案与反馈后答案的集成。

- Our experiments on three challenging knowledge-intensive tasks demonstrate that REFEED can achieve state-of-the-art performance under the few-shot setting.

- 在三个具有挑战性的知识密集型任务上的实验表明,REFEED 能够在少样本设置下实现最先进的性能。

2 Related Work

2 相关工作

2.1 Solving Knowledge-intensive Tasks via Retrieve-then-Read Pipeline

2.1 通过检索-阅读流程解决知识密集型任务

Mainstream methods for solving knowledgeintensive NLP tasks employ a retrieve-then-read model pipeline. Given an input query, a retriever is employed to search a large evidence corpus (e.g., Wikipedia) for relevant documents that may contain the answer. Subsequently, a reader is used to scrutinize the retrieved documents and predict an answer. Recent research has primarily focused on improving either the retriever (Karpukhin et al., 2020; Qu et al., 2021; Sachan et al., 2022) or the reader (Izacard and Grave, 2021; Yu et al., 2022a; Ju et al., 2022), or training the entire system endto-end (Singh et al., 2021; Shi et al., 2023). Early retrieval methods largely employed sparse retrievers, such as BM25 (Chen et al., 2017). Recently, ORQA (Lee et al., 2019) and DPR (Karpukhin et al., 2020) have revolutionized the field by using dense contextual i zed vectors for document indexing, resulting in superior performance compared to traditional approaches. Recently, several work proposed to replace the retrieval model with a large language model as retriever, owing to the powerful knowledge memorization cap a bil ties (Yu et al., 2023; Sun et al., 2023). However, these methods may still be prone to hallucination issues and are unable to access up-to-date information. Notably, compared to retrieve-then-read pipelines like RePLUG (Shi et al., 2023), our method benefits from more relevant documents retrieved from the corpus to directly elucidate the relationship between query and outputs. Additionally, without generating the initial output, the text supporting the output cannot be easily identified due to the lack of lexical or semantic overlap with the question.

解决知识密集型NLP任务的主流方法采用检索-阅读的模型流程。给定输入查询时,首先使用检索器在大型证据语料库(如维基百科)中搜索可能包含答案的相关文档,随后通过阅读器对检索到的文档进行细读并预测答案。近期研究主要集中于改进检索器(Karpukhin et al., 2020; Qu et al., 2021; Sachan et al., 2022)或阅读器(Izacard and Grave, 2021; Yu et al., 2022a; Ju et al., 2022),或训练端到端系统(Singh et al., 2021; Shi et al., 2023)。早期检索方法多采用稀疏检索器如BM25(Chen et al., 2017),而ORQA(Lee et al., 2019)和DPR(Karpukhin et al., 2020)通过使用稠密上下文向量进行文档索引实现了性能突破。近期研究(Yu et al., 2023; Sun et al., 2023)利用大语言模型强大的知识记忆能力替代传统检索模型,但这些方法仍存在幻觉问题且无法获取最新信息。值得注意的是,相较于RePLUG(Shi et al., 2023)等检索-阅读流程,我们的方法能从语料库中检索更相关的文档来直接阐明查询与输出的关系。此外,若不生成初始输出,由于缺乏与问题的词汇或语义重叠,支持输出的文本将难以识别。

2.2 Aligning Language Model with Instructions via Human Feedback

2.2 通过人类反馈对齐语言模型与指令

Human feedback plays a crucial role in evaluating language model performance, addressing accuracy, fairness, and bias issues, and offering insights for model improvement to better align with human expectations. Recognizing the significance of integrating human feedback into language models, researchers have developed and tested various humanin-the-loop methodologies (Nakano et al., 2021; Campos and Shern, 2022; Ouyang et al., 2022; Liu et al., 2023; Scheurer et al., 2023). Instruct- GPT (Ouyang et al., 2022) was a trailblazer in this domain, utilizing reinforcement learning from human feedback (RLHF) to fine-tune GPT-3 to adhere to a wide range of written instructions. It trained a reward model (RM) on this dataset to predict the preferred model output based on human labelers’ preferences. The RM then served as a reward function, and the supervised learning baseline was fine-tuned to maximize this reward using the PPO algorithm. Liu et al. (2023) proposed converting all forms of feedback into sentences, which were subsequently used to fine-tune the model. This approach leveraged the language comprehension capabilities of language models.

人类反馈在评估语言模型性能、解决准确性、公平性和偏见问题以及为模型改进提供见解以更好地符合人类期望方面发挥着至关重要的作用。认识到将人类反馈整合到语言模型中的重要性,研究人员开发并测试了各种人在回路方法 (Nakano et al., 2021; Campos and Shern, 2022; Ouyang et al., 2022; Liu et al., 2023; Scheurer et al., 2023)。Instruct-GPT (Ouyang et al., 2022) 是该领域的先驱,利用基于人类反馈的强化学习 (RLHF) 来微调 GPT-3 以遵循各种书面指令。它在该数据集上训练了一个奖励模型 (RM) ,根据人类标注者的偏好预测首选模型输出。随后,RM 作为奖励函数,监督学习基线使用 PPO 算法进行微调以最大化此奖励。Liu et al. (2023) 提出将所有形式的反馈转换为句子,随后用于微调模型。这种方法利用了语言模型的语言理解能力。

Although these approaches have shown promising results in enhancing language model performance on specific tasks, they also present several significant limitations. These methods rely on human-annotated data and positively-rated model generations for fine-tuning pre-trained language models, which can involve considerable costs and time investments. Moreover, by relying exclusively on positively-rated data, the model’s capacity to identify and address negative attributes or errors may be limited, consequently reducing its generalizability to novel and unseen data.

尽管这些方法在提升语言模型针对特定任务的表现上展现出良好效果,但也存在若干显著局限。这些方法依赖于人工标注数据和对模型生成结果的正向评分来微调预训练语言模型,可能涉及高昂成本与时间投入。此外,由于仅依赖正向评分数据,模型识别和处理负面属性或错误的能力会受到限制,从而降低其对未见过新数据的泛化能力。

2.3 Comparative Analysis of Concurrent Related Work

2.3 并发相关工作的对比分析

In light of rapid advancements in the field, numerous concurrent works have adopted a similar philosophy, employing automated feedback to enhance language model performance (He et al., 2023; Peng et al., 2023). While these studies jointly validate the efficacy and practicality of incorporating retrieval feedback, it is crucial to emphasize the differences between our work and these contemporary investigations. He et al. (2023) initially demonstrated that retrieval feedback could bolster faithfulness in chain-of-thought reasoning. However, their study is confined to commonsense reasoning tasks, and the performance improvement observed is not substantial. In contrast, our work predominantly targets knowledge-intensive NLP tasks, wherein external evidence assumes a more critical role in providing valuable feedback to augment model performance. Peng et al. (2023) proposed utilizing ChatGPT to generate feedback based on retrieved evidence. In comparison, our research demonstrates that retrieved documents can be directly employed as feedback to refine language model outputs, significantly enhancing the efficiency of this method. Simultaneously, building on the fundamental retrieval feedback concept, we introduce two novel modules, i.e., diversifying generation outputs and ensembling initial and post-feedback answers. In comparison to existing research, our proposed REFEED methodology offers a distinctive contribution to the ongoing discourse. As we persist in exploring this avenue of inquiry, we foresee future studies refining these techniques, ultimately achieving even greater performance gains.

鉴于该领域的快速发展,许多同期研究采用了相似的思路,利用自动化反馈提升语言模型性能 (He et al., 2023; Peng et al., 2023)。虽然这些研究共同验证了引入检索反馈的有效性和实用性,但必须强调我们的工作与这些当代研究之间的差异。He et al. (2023) 首次证明检索反馈可以增强思维链推理的可信度,但其研究仅限于常识推理任务,且观察到的性能提升并不显著。相比之下,我们的工作主要针对知识密集型 NLP 任务,其中外部证据在提供有价值的反馈以增强模型性能方面发挥着更关键的作用。Peng et al. (2023) 提出利用 ChatGPT 基于检索证据生成反馈,而我们的研究表明检索文档可直接作为反馈来优化语言模型输出,显著提升了该方法的效率。同时,在基础检索反馈概念的基础上,我们引入了两个新模块——多样化生成输出以及集成初始反馈后答案。与现有研究相比,我们提出的 REFEED 方法为当前讨论做出了独特贡献。随着我们持续探索这一研究方向,我们预见未来研究将完善这些技术,最终实现更大的性能提升。

3 Proposed Method

3 提出的方法

In this section, we provide an in-depth description of our innovative plug-and-play retrieval feedback (REFEED) pipeline, specifically designed to tackle a variety of knowledge-intensive tasks (§3.1). The pipeline operates by initially prompting a language model (e.g., Instruct GP T) to generate an answer in response to a given query, followed by the retrieval of documents from extensive document collections, such as Wikipedia. Subsequently, the pipeline refines the initial answer by incorporating the information gleaned from the retrieved documents.

在本节中,我们将深入介绍创新的即插即用检索反馈 (REFEED) 流程,该流程专为处理各类知识密集型任务而设计 (§3.1)。其运作机制是:首先提示语言模型 (例如 Instruct GPT) 根据给定查询生成答案,随后从大规模文档集合 (如 Wikipedia) 中检索相关文档,最终通过整合检索文档中的信息来优化初始答案。

Besides, we introduce two novel modules based on our REFEED framework. The first module aims to diversify the initial generation step, producing multiple output candidates. This enables the model to identify the most reliable answer by examining the broad range of retrieved documents. The second module employs an ensemble approach that combines language model outputs from both before and after the retrieval feedback process. This is achieved using a perplexity ranking method, which mitigates the risk of retrieval feedback inadvertently misleading the language model.

此外,我们基于REFEED框架引入了两个创新模块。第一个模块旨在多样化初始生成步骤,产生多个输出候选,使模型能够通过检查广泛检索到的文档范围来识别最可靠的答案。第二个模块采用集成方法,结合检索反馈过程前后的语言模型输出,通过困惑度(perplexity)排序方法实现,从而降低检索反馈无意中误导语言模型的风险。

3.1 Proposed Method: REFEED

3.1 提出方法:REFEED

Background. Traditional large language models, such as GPT-3.5 based architectures, have primarily focused on encoding an input query and predicting the corresponding output answer (Brown et al., 2020; Ouyang et al., 2022). In this process, the question $q$ , when combined with a text prompt, serves as input to the model, which then generates the answer. This can be represented as $p(a|q,\theta)$ , where $\theta$ denotes the pre-trained model parameters. In practical scenarios, the maximum a posteriori estimation (MAP) serves as the final answer, as illustrated by $\hat{a}=\mathrm{argmax}_ {a}p(a|q,\theta)$ . However, this direct approach to eliciting answers from large language models often leads to suboptimal performance. This is because it does not fully exploit the wealth of supplementary world knowledge available to the model (Levine et al., 2022). To address this limitation, recent research has explored methods to improve model performance by incorporating an additional auxiliary variable, corresponding to a retrieved document $(d)$ . This extension modifies the model formulation to $\begin{array}{r}{p(a|q)=\sum_{i}p(a|d_{i},q)p(d_{i}|q)}\end{array}$ , marginal- izing over all pos sible documents. In practice, it is infeasible to compute the sum over all possible documents $(d)$ due to the vast number of potential sources. Consequently, the most common approach involves approximating the sum over $d$ using the $k$ highest ranked documents, and providing all these documents as part of the input. We assume, w.l.o.g., that these documents are $d_{1},\ldots,d_{k}$ , yielding $\begin{array}{r}{p(\bar{a}|q)=\sum_{i=1}^{k}p(a|d_{i},q)p(d_{i}|q)}\end{array}$ . This technique is referre d to as the retrieve-then-read pipeline (Lazaridou et al., 2022; Shi et al., 2023).

背景。传统的大语言模型(如基于GPT-3.5架构的模型)主要专注于对输入查询进行编码并预测相应的输出答案(Brown等人,2020;Ouyang等人,2022)。在此过程中,问题$q$与文本提示结合后作为模型输入,模型随后生成答案。这可以表示为$p(a|q,\theta)$,其中$\theta$表示预训练模型参数。在实际场景中,最大后验估计(MAP)作为最终答案,如$\hat{a}=\mathrm{argmax}_ {a}p(a|q,\theta)$所示。然而,这种直接从大语言模型中获取答案的方法往往导致性能欠佳,因为它未能充分利用模型可用的丰富辅助世界知识(Levine等人,2022)。为解决这一局限,近期研究探索通过引入额外辅助变量(对应检索文档$(d)$)来提升模型性能。这一扩展将模型公式修改为$\begin{array}{r}{p(a|q)=\sum_{i}p(a|d_{i},q)p(d_{i}|q)}\end{array}$,对所有可能文档进行边缘化处理。实践中,由于潜在来源数量庞大,计算所有可能文档$(d)$的和不可行。因此,最常见的方法是使用$k$个排名最高的文档近似求和$d$,并将这些文档全部作为输入部分。假设这些文档为$d_{1},\ldots,d_{k}$,则得到$\begin{array}{r}{p(\bar{a}|q)=\sum_{i=1}^{k}p(a|d_{i},q)p(d_{i}|q)}\end{array}$。该技术被称为检索-阅读管道(Lazaridou等人,2022;Shi等人,2023)。

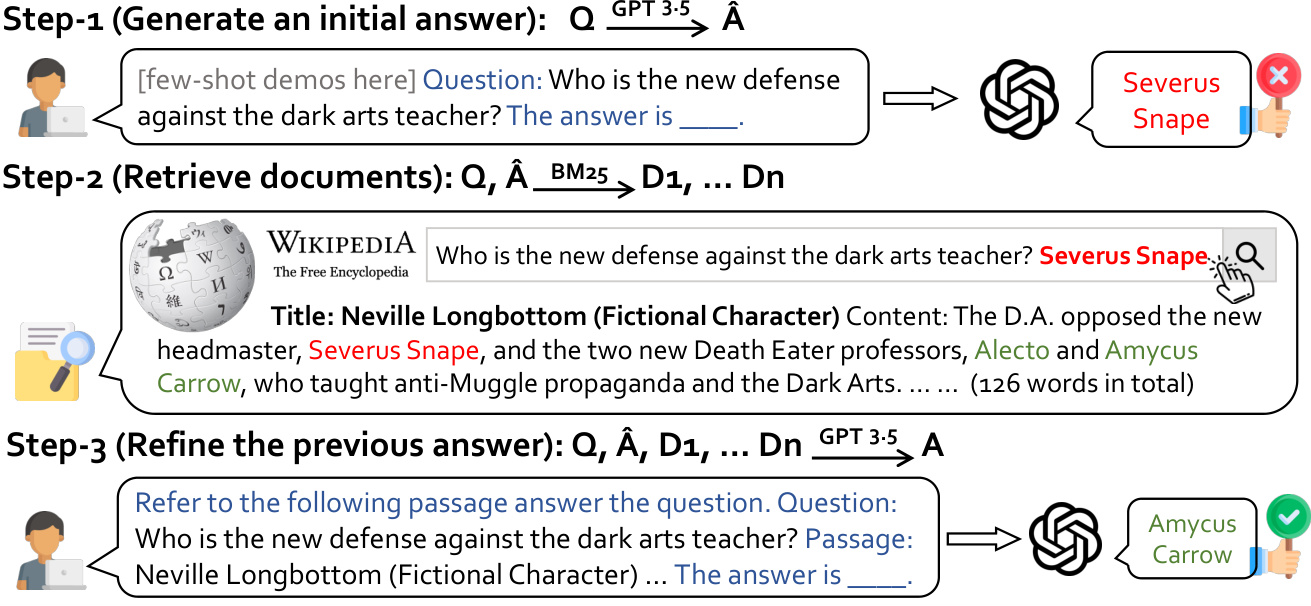

Figure 1: REFEED operates by initially prompting a large language model to generate an answer in response to a given query, followed by the retrieval of documents from extensive document collections. Subsequently, the pipeline refines the initial answer by incorporating the information gleaned from the retrieved documents.

图 1: REFEED 的工作流程是首先提示一个大语言模型根据给定查询生成答案,随后从大量文档集合中检索相关文档。接着,该流程通过整合从检索到的文档中提取的信息来优化初始答案。

3.1.1 Basic Pipeline

3.1.1 基础流程

Contrary to traditional methods mentioned above, REFEED is designed to offer feedback via retrieval targeted specifically to individually generated outputs. It can be formulated as $p(a|q)=$ $\begin{array}{r}{\sum_{i}p(a|d_{i},q,\widehat{a})p(d_{i}|\widehat{a},q)p(\widehat{a}|q)}\end{array}$ , where $\widehat{a}$ represents the init ibal outpbut, $a$ isb the final ou tpbut, and $d_{i}$ is conditioned not only on $q$ but also on $\widehat{a}$ Thus, $d_{i}$ is intended to provide feedback spec ibfically on $\widehat{a}$ as the output, rather than providing general i nb formation to the query $q$ . As in the case of the retrieve-and-read pipeline, we retain only the top $k=10$ highest ranked documents: $\begin{array}{r}{p(a|q)=\sum_{i=1}^{k}p(a|d_{i},q,\widehat{a})p(d_{i}|\widehat{a},q)p(\widehat{a}|q)}\end{array}$ .

与传统方法不同,REFEED旨在通过检索为单个生成输出提供针对性反馈。其公式可表示为 $p(a|q)=$ $\begin{array}{r}{\sum_{i}p(a|d_{i},q,\widehat{a})p(d_{i}|\widehat{a},q)p(\widehat{a}|q)}\end{array}$ ,其中 $\widehat{a}$ 代表初始输出,$a$ 是最终输出,$d_{i}$ 不仅以 $q$ 为条件,还以 $\widehat{a}$ 为条件。因此,$d_{i}$ 旨在针对 $\widehat{a}$ 作为输出提供特定反馈,而非为查询 $q$ 提供通用信息。与检索-读取流程类似,我们仅保留排名前 $k=10$ 的文档:$\begin{array}{r}{p(a|q)=\sum_{i=1}^{k}p(a|d_{i},q,\widehat{a})p(d_{i}|\widehat{a},q)p(\widehat{a}|q)}\end{array}$ 。

This method enables ab smootbh in te gb ration of feedback to refine previous outputs in a plug-andplay fashion, eliminating the need for costly finetuning. REFEED takes advantage of a collection of relevant documents retrieved from an extensive textual corpus, facilitating the direct elucidation of relationships between queries and outputs. Additionally, without generating an initial output, it becomes difficult to retrieve text that supports the output due to the absence of lexical or semantic overlap with the question. Essentially, REFEED functions by first prompting a large language model to produce an answer in response to a given query, followed by the retrieval of documents from external sources. The pipeline then refines the initial answer by incorporating information obtained from the retrieved documents. The three-step process is illustrated in Figure 1 and outlined below.

该方法能够流畅地整合反馈以优化先前输出,实现即插即用式改进,无需昂贵的微调。REFEED利用从海量文本语料库中检索的相关文档集合,直接阐明查询与输出间的关联。此外,若未生成初始输出,由于缺乏与问题的词汇或语义重叠,将难以检索到支持输出的文本。本质上,REFEED的运行机制分为三步:(1) 首先提示大语言模型根据给定查询生成答案,(2) 随后从外部源检索文档,(3) 最终结合检索文档信息优化初始答案。该流程如图1所示并概述如下。

Step 1: Generate an Initial Answer. In this initial step, our primary objective is to prompt a language model to generate an answer based on the given

步骤1:生成初始答案。在这一初始步骤中,我们的主要目标是提示语言模型根据给定的

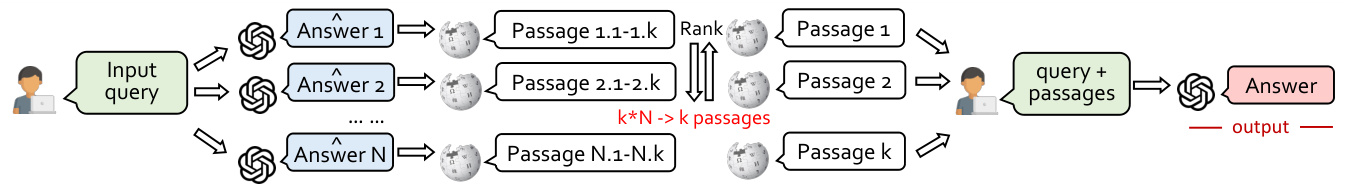

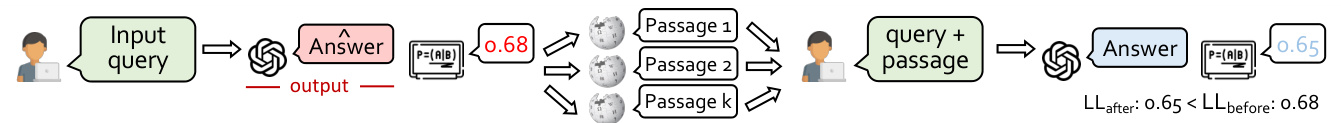

Figure 2: Rather than generating only one initial answer, we prompt the language model to sample multiple answers, allowing for a more comprehensive retrieval feedback based on different answers.

图 2: 我们不再仅生成一个初始答案,而是提示大语言模型生成多个答案样本,从而基于不同答案获得更全面的检索反馈。

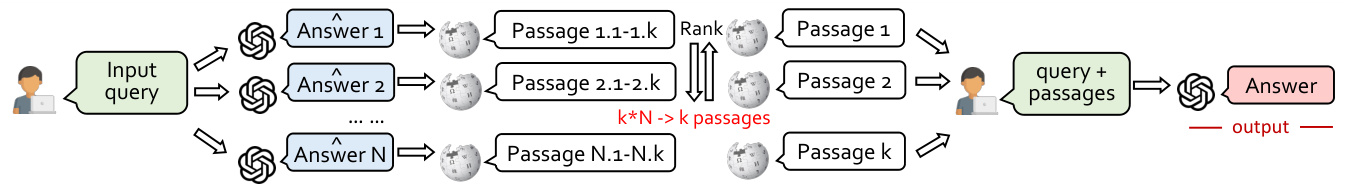

Figure 3: By employing an ensemble method that evaluates initial and refined answers with retrieval feedback, we enhance the assessment of answer trustworthiness, ensuring a more accurate output.

图 3: 通过采用集成方法评估带有检索反馈的初始答案和优化答案,我们增强了对答案可信度的评估,确保输出更准确。

question. To achieve this, various decoding strategies can be employed, e.g., greedy decoding and sampling methods. In our experiments, we opted for greedy decoding due to its simplicity and reproducibility, allowing more consistent performance across multiple runs. This step is essential for establishing a foundation upon which the following steps can build and refine the initial answer.

问题。为实现这一目标,可采用多种解码策略,例如贪婪解码和采样方法。在实验中,我们选择了贪婪解码,因其简单且可复现,能在多次运行中保持更一致的性能。这一步骤至关重要,为后续步骤建立基础,以便在初始答案基础上进行改进。

Step 2: Retrieve Supporting Documents. The second step in our pipeline involves utilizing a retrieval model (e.g., BM25) to acquire a set of document from an extensive document collection, such as Wikipedia. In our experiments, we retrieve top10 documents, which offers a balanced trade-off between computational efficiency for step 3 inference and the inclusion of sufficient information. The primary goal of this step is to identify relevant information that can corroborate or refute the relationship between the question and the initially generated answer. By extracting pertinent information from these vast sources, the pipeline can effec- tively leverage external knowledge to improve the accuracy and reliability of the generated response.

步骤2:检索支持文档。我们流程的第二步涉及利用检索模型(如BM25)从海量文档集合(例如维基百科)中获取一组文档。实验中我们检索前10篇文档,在第三步推理的计算效率与信息充分性之间实现了平衡。此步骤的主要目标是识别能够证实或反驳问题与初始生成答案之间关联的相关信息。通过从这些庞大来源中提取关键信息,该流程能有效利用外部知识提升生成响应的准确性与可靠性。

Step 3: Refine the Previous Answer. The final step of our pipeline focuses on refining the previously generated answer by taking into account the document retrieved in step 2. During this stage, the language model evaluates the retrieved information and adjusts the initial answer accordingly, ensuring that the response is more accurate. This refinement process may involve rephrasing, expanding, or even changing the answer based on the newfound knowledge. By incorporating the insights gleaned from the retrieved document, the refined answer is better equipped to address the initial query comprehensively and accurately, resulting in an improved overall performance. This step is critical for bridging the gap between the initial generation and the wealth of external knowledge, ultimately producing a high-quality, well-informed output.

步骤3:优化先前答案。我们流程的最后一步重点在于结合第二步检索到的文档来优化先前生成的答案。在此阶段,语言模型会评估检索到的信息,并据此调整初始答案,确保回答更加准确。这一优化过程可能涉及根据新发现的知识对答案进行改写、扩展甚至完全更改。通过整合从检索文档中获得的洞见,优化后的答案能更全面、准确地回应初始查询,从而提升整体表现。该步骤对于弥合初始生成内容与外部知识库之间的差距至关重要,最终产出高质量、信息充分的输出结果。

3.1.2 Enhanced Modules

3.1.2 增强模块

Diverse Answer Generation. Diversity in answer generation plays a pivotal role in the first step of our REFEED pipeline. Rather than merely generating a single answer with the highest probability, we implement sampling methods to produce a set of potential answers. This approach fosters diversity in the generated outputs and enables a more comprehensive retrieval feedback based on diverse answers. As a result, a more refined and accurate final response is produced that effectively addresses the given question.

多样化答案生成。答案生成的多样性在我们REFEED流程的第一步中起着关键作用。我们并非仅生成概率最高的单一答案,而是采用采样方法产生一组潜在答案。这种方法促进了生成结果的多样性,并能基于多样化答案实现更全面的检索反馈。最终产生更精细准确的最终回答,从而有效解决给定问题。

To elaborate, we input the question $q$ along with a text prompt into the model, which subsequently samples multiple distinct answers, denoted as $p(a_{j}|q,\theta)$ . We then utilize the $n$ generated answers as input queries for the retrieval process, i.e., $[q,a_{1}],\cdot\cdot\cdot,[q,a_{n}]$ . This stage is realized by multiple decoding passes, wherein the input query is fed into the language model with nucleus sampling. This strategy increases the probability of obtaining a more diverse set of retrieved documents encompassing a broader spectrum of relevant information. Formally, it can be represented as $\begin{array}{r}{p(a|q)=\sum_{i,j}p(a|d_{i,j},q,\widehat{a}_ {j})p(d_{i,j}|\widehat{a}_ {j},q)p(\widehat{a}_{j}|q)}\end{array}$ .

具体来说,我们将问题$q$和文本提示输入模型,随后模型采样生成多个不同答案,记为$p(a_{j}|q,\theta)$。接着将这$n$个生成答案作为检索过程的输入查询,即$[q,a_{1}],\cdot\cdot\cdot,[q,a_{n}]$。该阶段通过多次解码过程实现,其中输入查询通过核心采样送入语言模型。此策略提高了获取更多样化检索文档的概率,从而涵盖更广泛的相关信息。其形式化表示为$\begin{array}{r}{p(a|q)=\sum_{i,j}p(a|d_{i,j},q,\widehat{a}_ {j})p(d_{i,j}|\widehat{a}_ {j},q)p(\widehat{a}_{j}|q)}\end{array}$。

Consid ering the limita tbions on thbe numbber of documents, or say context length of language model input, that can be employed in STEP 3, we merge all retrieved documents (across different $\widehat{a}_ {j}$ ), rank them based on query-document similari tby scores, and retain only the top $k$ documents for further processing. In our experiments, we use $k=10$ , which offers a balanced trade-off between computational efficiency and the inclusion of diverse information. Furthermore, to account for the possibility of different queries retrieving identical documents, we perform de-duplication to ensure that the set of retrieved documents remains diverse and relevant. Lastly, when computing the final answer, we provide all $n$ generated answers as well as the aforementioned top $k$ documents as part of the prompt. Formally, this can be represented as By incorporating diversity in answer generation in STEP 1, we effectively broaden the potential answer space, facilitating the exploration of a wider variety of possible solutions. $p(a|q)=$ $\begin{array}{r}{\sum_{i=1}^{k}\sum_{j=1}^{n}p(a|d_{i,j},q,\widehat{a}_ {j})p(d_{i,j}|\widehat{a}_ {j},q)p(\widehat{a}_{j}|{q})}\end{array}$ .

考虑到步骤3中可使用的文档数量(即语言模型输入的上下文长度)限制,我们将所有检索到的文档(跨不同$\widehat{a}_ {j}$)合并,根据查询-文档相似度评分进行排序,并仅保留前$k$个文档进行后续处理。实验中设定$k=10$,以在计算效率与信息多样性之间取得平衡。此外,针对不同查询可能检索到相同文档的情况,我们执行去重操作以确保文档集的多样性和相关性。最后,在计算最终答案时,我们将所有$n$个生成答案及前述前$k$个文档作为提示词的一部分。其形式化表示为:通过在步骤1中引入答案多样性生成机制,我们有效扩展了潜在答案空间,促进了对更广泛可能解的探索。$p(a|q)=$$\begin{array}{r}{\sum_{i=1}^{k}\sum_{j=1}^{n}p(a|d_{i,j},q,\widehat{a}_ {j})p(d_{i,j}|\widehat{a}_ {j},q)p(\widehat{a}_{j}|{q})}\end{array}$。

Ensembling Initial and Post-Feedback Answers.

集成初始答案与反馈后答案

Retrieval feedback serves as a crucial component in obtaining relevant information to validate the accuracy of initially generated answers. Nonetheless, there may be instances where the retrieved documents inadvertently mislead the language model, causing a correct answer to be revised into an incorrect one (see examples in Figure 5). To address this challenge, we introduce an ensemble technique that considers both the initial answers and the revised answers post-retrieval feedback, ultimately improving the overall generation performance.

检索反馈作为获取相关信息以验证初始生成答案准确性的关键环节,其重要性不言而喻。然而,检索到的文档有时可能会误导语言模型,导致原本正确的答案被修改为错误答案(具体示例见图5)。为解决这一问题,我们采用了一种集成技术,综合考虑初始答案和经过检索反馈修正后的答案,从而提升整体生成性能。

In ensemble process, we utilize average negative log-likelihood to rank the generated answers before (i.e., $\begin{array}{r}{\mathrm{LL}_ {\mathrm{before}}(a|q)=\frac{1}{t}\sum_{i=1}^{t}p(x_{i}|x_{<i},q))}\end{array}$ and after incorporating retrieved documents (i.e., $\begin{array}{r}{\mathrm{LL}_ {\mathrm{after}}(a|q)=\frac{1}{t}\sum_{i=1}^{t}p(x_{i}|x_{<i},q,\widehat{a},d))}\end{array}$ . If the log-likelihood of an answer before r betrieval feedback is higher than that after retrieval feedback, we retain the initially generated answer. On the other hand, if the log-likelihood is lower after retrieval feedback, we choose the refined answer. This strategy allows for a more informed assessment of the trustworthiness of answer before and after retrieval feedback, ensuring a more accurate final response.

在集成过程中,我们利用平均负对数似然对生成的答案进行排序,包括检索前(即 $\begin{array}{r}{\mathrm{LL}_ {\mathrm{before}}(a|q)=\frac{1}{t}\sum_{i=1}^{t}p(x_{i}|x_{<i},q))}\end{array}$)和整合检索文档后(即 $\begin{array}{r}{\mathrm{LL}_ {\mathrm{after}}(a|q)=\frac{1}{t}\sum_{i=1}^{t}p(x_{i}|x_{<i},q,\widehat{a},d))}\end{array}$)。若某答案在检索反馈前的对数似然高于检索反馈后,则保留初始生成的答案;反之则选择优化后的答案。该策略能更有效地评估检索反馈前后答案的可信度,从而确保最终响应的准确性。

4 Experiments

4 实验

In this section, we conduct comprehensive experiments on three knowledge-intensive NLP tasks, including single-hop QA (i.e., NQ (Kwiatkowski et al., 2019), TriviaQA (Joshi et al., 2017)), multi- hop QA (i.e., HotpotQA (Yang et al., 2018)) and dialogue system (i.e., WoW (Dinan et al., 2019)). In single-hop QA datasets, we employ the same splits as previous approaches (Karpukhin et al., 2020; Izacard and Grave, 2021). With regard to the HotpotQA and WoW datasets, our approach involves the usage of dataset splits provided by the

在本节中,我们对三个知识密集型自然语言处理任务进行了全面实验,包括单跳问答(即NQ (Kwiatkowski et al., 2019)、TriviaQA (Joshi et al., 2017))、多跳问答(即HotpotQA (Yang et al., 2018))以及对话系统(即WoW (Dinan et al., 2019))。在单跳问答数据集中,我们采用了与先前方法相同的划分方式 (Karpukhin et al., 2020; Izacard and Grave, 2021)。对于HotpotQA和WoW数据集,我们的方法使用了由

KILT challenge (Petroni et al., 2021).

KILT挑战赛 (Petroni et al., 2021)。

To thoroughly assess the performance of our model, we employ a variety of evaluation metrics, taking into consideration the professional standards established in the field. For the evaluation of open-domain QA, we use exact match (EM) and F1 score for evaluating model performance (Zhu et al., 2021). For EM score, an answer is deemed correct if its normalized form – obtained through the normalization procedure delineated by (Karpukhin et al., 2020) – corresponds to any acceptable answer in the provided list. Similar to EM score, F1 score treats the prediction and ground truth as bags of tokens, and compute the average overlap between the prediction and ground truth answer. Besides, we also incorporate Recall $\ @\mathrm{K}$ $(\mathbf{R}\ @\mathbf{K})$ as an intermediate evaluation metric, which is calculated as the percentage of top-K retrieved or generated documents containing the correct answer. This metric has been widely adopted in previous research (Karpukhin et al., 2020; Sachan et al., 2022; Yu et al., 2023), thereby establishing its credibility within the field. When evaluating open-domain dialogue systems, we adhere to the guidelines set forth by the KILT benchmark (Petroni et al., 2021), which recommends using a combination of F1 and Rouge-L (R-L) scores as evaluation metrics. This approach ensures a comprehensive and rigorous assessment of our model’s performance, aligning with professional standards and best practices.

为全面评估模型性能,我们采用多种评价指标,并参考该领域制定的专业标准。针对开放域问答任务,我们使用精确匹配(EM)和F1分数评估模型表现(Zhu et al., 2021)。EM分数的判定标准是:当答案经过(Karpukhin et al., 2020)描述的规范化处理后,与参考答案列表中的任一可接受答案相符即视为正确。与EM分数类似,F1分数将预测结果和标准答案视为token集合,计算两者间的平均重叠度。此外,我们还引入召回率@K(R@K)作为中间评价指标,该指标计算前K个检索或生成文档中包含正确答案的百分比。该指标已被多项研究广泛采用(Karpukhin et al., 2020; Sachan et al., 2022; Yu et al., 2023),在领域内具有公认可信度。评估开放域对话系统时,我们遵循KILT基准测试(Petroni et al., 2021)的指导原则,推荐采用F1分数与Rouge-L(R-L)分数相结合的评估方案。这种方法确保了对模型性能进行全面严谨的评估,符合专业标准与最佳实践。

4.1 Backbone Language Model

4.1 骨干语言模型

Codex: OpenAI Codex, i.e., code-davinci-002, a sophisticated successor to the GPT-3 model, has undergone extensive training utilizing an immense quantity of data. This data comprises not only natural language but also billions of lines of source code obtained from publicly accessible repositories, such as those found on GitHub. As a result, the Codex model boasts unparalleled proficiency in generating human-like language and understanding diverse programming languages.

Codex: OpenAI Codex (即 code-davinci-002) 作为 GPT-3 模型的先进后继者,通过海量数据进行了广泛训练。这些数据不仅包含自然语言,还包括从 GitHub 等公开代码库获取的数十亿行源代码。因此,Codex 模型在生成类人语言和理解多种编程语言方面展现出无与伦比的能力。

Text-davinci-003: Building on the foundation laid by previous Instruct GP T models, OpenAI’s text-davinci-003 represents a significant advancement in the series. This cutting-edge model showcases considerable progress in multiple areas, including the ability to generate superior quality written content, an enhanced capacity to process and execute complex instructions, and an expanded capability to create coherent, long-form narratives.

Text-davinci-003:在先前Instruct GPT模型奠定的基础上,OpenAI的text-davinci-003实现了该系列的重大突破。这一尖端模型在多个领域展现出显著进步,包括生成更优质文本内容的能力、处理和执行复杂指令的增强性能,以及构建连贯长篇叙事的扩展能力。

| Models | NQ EM | TriviaQA | HotpotQA | Wow | ||||

| F1 | EM | F1 | EM | F1 | F1 | R-L | ||

| *closebookmethodswithoutusingretriever | ||||||||

| TD-003 (Ouyang et al., 2022) | 29.9 | 35.4 | 65.8 | 73.2 | 26.0 | 28.2 | 14.2 | 13.3 |

| GenRead (Yu et al.,2023) | 32.5 | 42.0 | 66.2 | 73.9 | 36.4 | 39.9 | 14.7 | 13.5 |

| *open book methods with usingretriever | ||||||||

| Retrieve-then-Read | 31.7 | 41.2 | 61.4 | 67.4 | 35.2 | 38.0 | 14.6 | 13.4 |

| REFEED (Ours) | 39.6 | 48.0 | 68.9 | 75.2 | 41.5 | 45.1 | 15.1 | 14.0 |

Table 1: REFEED achieves SoTA performance on three zero-shot knowledge intensive NLP tasks. The backbone model is Text-Davinci-003 (TD-003), which is trained to follow human instructions.

| 模型 | NQ EM | F1 | TriviaQA EM | F1 | HotpotQA EM | F1 | Wow F1 | R-L |

|---|---|---|---|---|---|---|---|---|

| *闭卷方法(不使用检索器) | ||||||||

| TD-003 (Ouyang et al., 2022) | 29.9 | 35.4 | 65.8 | 73.2 | 26.0 | 28.2 | 14.2 | 13.3 |

| GenRead (Yu et al.,2023) | 32.5 | 42.0 | 66.2 | 73.9 | 36.4 | 39.9 | 14.7 | 13.5 |

| *开卷方法(使用检索器) | ||||||||

| Retrieve-then-Read | 31.7 | 41.2 | 61.4 | 67.4 | 35.2 | 38.0 | 14.6 | 13.4 |

| REFEED (Ours) | 39.6 | 48.0 | 68.9 | 75.2 | 41.5 | 45.1 | 15.1 | 14.0 |

表 1: REFEED在三个零样本知识密集型NLP任务上达到SoTA性能。主干模型为Text-Davinci-003 (TD-003),该模型经过训练以遵循人类指令。

| Models | NQ EM F1 | TriviaQA EM F1 | HotpotQA EM F1 | Wow F1 R-L | ||||

| Backbone Language Model: Text-Davinci-003 (TD-003) | ||||||||

| *closebook methods without usingretriever | ||||||||

| TD-003 (Ouyang et al., 2022) | 36.5 | 46.3 | 71.2 | 76.5 | 31.2 | 37.5 | 14.1 | 13.3 |

| GenRead (Yu et al., 2023) | 38.2 | 47.3 | 71.4 | 76.8 | 36.6 | 47.5 | 14.7 | 14.1 |

| *open book methods with using retriever | ||||||||

| Retrieve-then-Read | 34.3 | 45.6 | 66.5 | 70.6 | 35.2 | 46.8 | 14.5 | 13.8 |

| REFEED (Ours) | 40.1 | 50.0 | 71.8 | 77.2 | 41.5 | 54.2 | 15.1 | 14.3 |

| Backbone Language Model: Code-Davinci-002 (Codex) | ||||||||

| *close book methods without using retriever | ||||||||

| Codex (Ouyang et al., 2022) | 41.6 | 52.8 | 73.3 | 79.2 | 32.5 | 42.8 | 16.9 | 14.7 |

| GenRead (Yu et al., 2023) | 44.2 | 55.2 | 73.7 | 79.6 | 37.5 | 48.8 | 17.2 | 15.1 |

| *open book methods with using retriever | ||||||||

| Retrieve-then-Read | 43.9 | 54.9 | 75.5 | 81.7 | 41.5 | 53.7 | 17.0 | 14.9 |

| REFEED (Ours) | 46.4 | 57.0 | 76.6 | 82.7 | 43.5 | 56.5 | 17.6 | 15.5 |

Table 2: REFEED achieved SoTA performance on three few-shot knowledge intensive NLP tasks.

| 模型 | NQ EM F1 | TriviaQA EM F1 | HotpotQA EM F1 | Wow F1 R-L |

|---|---|---|---|---|

| 主干语言模型: Text-Davinci-003 (TD-003) | ||||

| 闭卷方法(不使用检索器) | ||||

| TD-003 (Ouyang et al., 2022) | 36.5 | 46.3 | 71.2 | 76.5 |

| GenRead (Yu et al., 2023) | 38.2 | 47.3 | 71.4 | 76.8 |

| 开卷方法(使用检索器) | ||||

| Retrieve-then-Read | 34.3 | 45.6 | 66.5 | 70.6 |

| REFEED (Ours) | 40.1 | 50.0 | 71.8 | 77.2 |

| 主干语言模型: Code-Davinci-002 (Codex) | ||||

| 闭卷方法(不使用检索器) | ||||

| Codex (Ouyang et al., 2022) | 41.6 | 52.8 | 73.3 | 79.2 |

| GenRead (Yu et al., 2023) | 44.2 | 55.2 | 73.7 | 79.6 |

| 开卷方法(使用检索器) | ||||

| Retrieve-then-Read | 43.9 | 54.9 | 75.5 | 81.7 |

| REFEED (Ours) | 46.4 | 57.0 | 76.6 | 82.7 |

表 2: REFEED 在三个少样本知识密集型 NLP 任务上取得了 SoTA 性能。

After careful consideration, we ultimately decided against employing ChatGPT and GPT-4 as the foundational models for our project. The primary reason for this decision is OpenAI’s announcement that both models will be subject to ongoing updates in their model parameters 1. These continual modifications would lead to nonreproducible experiments, potentially compromising the reliability of our research outcomes.

经过慎重考虑,我们最终决定不采用ChatGPT和GPT-4作为项目的基础模型。这一决定的主要原因是OpenAI宣布这两款模型的参数将持续更新[1],这些持续改动会导致实验无法复现,可能影响研究结果的可靠性。

4.2 Baseline Methods

4.2 基线方法

In our comparative analysis, we assess our proposed model against two distinct groups of baseline methodologies. The first group encompasses closed-book models, including Instruct

在我们的对比分析中,我们评估了提出的模型与两组不同的基线方法。第一组包括闭卷模型,如Instruct

GPT (Ouyang et al., 2022) and GenRead (Yu et al., 2023), which operate without the assistance of any external supporting documents during the inference process. Each of these baseline methods adheres to a uniform input format, specifically utilizing the structure: [prompt words; question].

GPT (Ouyang et al., 2022) 和 GenRead (Yu et al., 2023) 在推理过程中无需任何外部辅助文档支持。这些基线方法均采用统一的输入格式,具体结构为: [提示词; 问题]。

The second group of models adheres to a retrieve-then-read pipeline (Lazaridou et al., 2022; Shi et al., 2023), which entails a two-stage process. In the initial stage, a retriever component is employed to identify and extract a select number of relevant documents pertaining to a given question from an extensive corpus, such as Wikipedia. Subsequently, a reader component is tasked with inferring a conclusive answer based on the content gleaned from the retrieved documents. Similar to the first group, all baseline methods within this second group adhere to a standardized input format, which is defined as: [prompt words; passage; question]. As RePLUG are not a open-source model, we implemented it independently, which may result in slightly different outcomes compared to the performance reported in their respective papers.

第二类模型遵循检索-阅读流程 (Lazaridou et al., 2022; Shi et al., 2023),包含两个阶段。第一阶段使用检索组件从大规模语料库(如维基百科)中识别并提取与给定问题相关的若干文档;随后,阅读组件基于检索到的文档内容推断出最终答案。与第一类模型类似,该组所有基线方法都采用标准化输入格式:[提示词;段落;问题]。由于RePLUG并非开源模型,我们独立实现了该模型,可能导致与原始论文报告的性能存在细微