SelfCheckGPT: Zero-Resource Black-Box Hallucination Detection for Generative Large Language Models

Potsawee Manakul, Adian Liusie, Mark J. F. Gales

ALTA Institute, Department of Engineering, University of Cambridge

pm574@cam.ac.uk, al826@cam.ac.uk, mjfg@eng.cam.ac.uk

Abstract

Generative Large Language Models (LLMs) such as GPT-3 are capable of generating highly fluent responses to a wide variety of user prompts. However, LLMs are known to hallucinate facts and make non-factual statements, which can undermine trust in their output. Existing fact-checking approaches either require access to the output probability distribution (which may not be available for systems such as ChatGPT) or external databases that are interfaced via separate, often complex, modules. In this work, we propose "SelfCheckGPT", a simple sampling-based approach that can be used to fact-check the responses of black-box models in a zero-resource fashion, i.e. without an external database. SelfCheckGPT leverages the simple idea that if an LLM has knowledge of a given concept, sampled responses are likely to be similar and contain consistent facts. However, for hallucinated facts, stochastically sampled responses are likely to diverge and contradict one another. We investigate this approach by using GPT-3 to generate passages about individuals from the WikiBio dataset, and manually annotate the factuality of the generated passages. We demonstrate that SelfCheckGPT can: i) detect non-factual and factual sentences; and ii) rank passages in terms of factuality. We compare our approach to several baselines and show that our approach has considerably higher AUC-PR scores in sentence-level hallucination detection and higher correlation scores in passage-level factuality assessment compared to grey-box methods.

1 Introduction

Large Language Models (LLMs) such as GPT-3 (Brown et al., 2020) and PaLM (Chowdhery et al., 2022) are capable of generating fluent and realistic responses to a variety of user prompts. They have been used in many applications such as automatic tools to draft reports, virtual assistants and summarization systems. Despite the convincing and realistic nature of LLM-generated texts, a growing concern with LLMs is their tendency to hallucinate facts. It has been widely observed that models can confidently generate fictitious information, and worryingly there are few, if any, existing approaches to suitably identify LLM hallucinations.

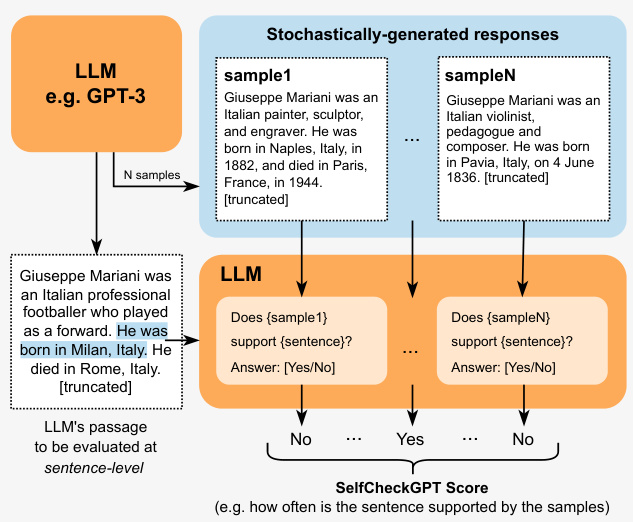

Figure 1: SelfCheckGPT with Prompt. Each LLM-generated sentence is compared against stochastically generated responses with no external database. The comparison can be made, for example, through LLM prompting as shown above.

A possible approach to hallucination detection is to leverage existing intrinsic uncertainty metrics to determine the parts of the output sequence that the system is least certain of (Yuan et al., 2021; Fu et al., 2023). However, uncertainty metrics such as token probability or entropy require access to token-level probability distributions, information that may not be available to users, for example when systems are accessed through limited external APIs. An alternative approach is to leverage fact-verification approaches, where evidence is retrieved from an external database to assess the veracity of a claim (Thorne et al., 2018; Guo et al., 2022). However, facts can only be assessed relative to the knowledge present in the database. Additionally, hallucinations are observed over a wide range of tasks beyond pure fact verification (Kryscinski et al., 2020; Maynez et al., 2020).

In this paper, we propose SelfCheckGPT, a sampling-based approach that can detect whether responses generated by LLMs are hallucinated or factual. To the best of our knowledge, SelfCheckGPT is the first work to analyze model hallucination of general LLM responses, and is the first zero-resource hallucination detection solution that can be applied to black-box systems. The motivating idea of SelfCheckGPT is that when an LLM has been trained on a given concept, the sampled responses are likely to be similar and contain consistent facts. However, for hallucinated facts, stochastically sampled responses are likely to diverge and may contradict one another. By sampling multiple responses from an LLM, one can measure information consistency between the different responses and determine if statements are factual or hallucinated. Since SelfCheckGPT only leverages sampled responses, it has the added benefit that it can be used for black-box models, and it requires no external database. Five variants of SelfCheckGPT for measuring informational consistency are considered: BERTScore, question-answering, $n$-gram, NLI, and LLM prompting. Through analysis of annotated articles generated by GPT-3, we show that SelfCheckGPT is a highly effective hallucination detection method that can even outperform grey-box methods, and serves as a strong first baseline for the increasingly important problem of LLM hallucination.

2 Background and Related Work

2.1 Hallucination of Large Language Models

Hallucination has been studied in text generation tasks, including summarization (Huang et al., 2021) and dialogue generation (Shuster et al., 2021), as well as in a variety of other natural language generation tasks (Ji et al., 2023). Self-consistency decoding has been shown to improve chain-of-thought prompting performance on complex reasoning tasks (Wang et al., 2023). Further, Liu et al. (2022) introduce a hallucination detection dataset; however, its texts are obtained by perturbing factual texts and thus may not reflect true LLM hallucination.

Recently, Azaria and Mitchell (2023) trained a multi-layer perceptron classifier where an LLM’s hidden representations are used as inputs to predict the truthfulness of a sentence. However, this is a white-box approach: it uses the internal states of the LLM, which may not be available through API calls, and it requires labelled data for supervised training. Another recent approach is self-evaluation (Kadavath et al., 2022), where an LLM is prompted to evaluate its previous prediction, e.g., to predict the probability that its generated response/answer is true.

2.2 Sequence Level Uncertainty Estimation

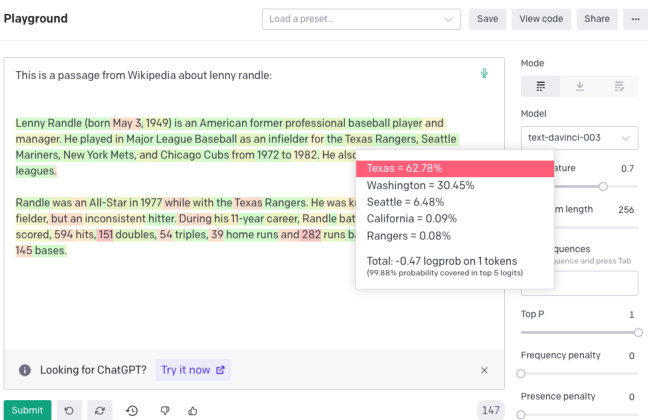

Token probabilities have been used as an indication of model certainty. For example, OpenAI’s GPT-3 web interface allows users to display token probabilities (as shown in Figure 2), and further uncertainty estimation approaches based on aleatoric and epistemic uncertainty have been studied for autoregressive generation (Xiao and Wang, 2021; Malinin and Gales, 2021). Additionally, conditional language model scores have been used to evaluate properties of texts (Yuan et al., 2021; Fu et al., 2023). Recently, semantic uncertainty has been proposed to address uncertainty in free-form generation tasks where probabilities are attached to concepts instead of tokens (Kuhn et al., 2023).

Figure 2: Example of OpenAI’s GPT-3 web interface with output token-level probabilities displayed.

2.3 Fact Verification

Existing fact-verification approaches follow a multi-stage pipeline of claim detection, evidence retrieval and verdict prediction (Guo et al., 2022; Zhong et al., 2020). Such methods, however, require access to external databases and can have considerable inference costs.

3 Grey-Box Factuality Assessment

This section introduces methods that can be used to determine the factuality of LLM responses in a zero-resource setting when one has full access to output distributions. We use ‘factual’ to describe statements that are grounded in valid information, i.e. when hallucinations are avoided, and ‘zero-resource’ when no external database is used.

3.1 Uncertainty-based Assessment

To understand how the factuality of a generated response can be determined in a zero-resource setting, we consider LLM pre-training. During pre-training, the model is trained with next-word prediction over massive corpora of textual data. This gives the model a strong understanding of language (Jawahar et al., 2019; Raffel et al., 2020), powerful contextual reasoning (Zhang et al., 2020), as well as world knowledge (Liusie et al., 2023). Consider the input "Lionel Messi is a _". Since Messi is a world-famous athlete who may have appeared multiple times in pre-training, the LLM is likely to know who Messi is. Therefore, given the context, the token "footballer" may be assigned a high probability while other professions such as "carpenter" may be considered improbable. However, for a different input such as "John Smith is a _", the system will be unsure of the continuation, which may result in a flat probability distribution. During inference, this is likely to lead to a non-factual word being generated.

This insight allows us to understand the connection between uncertainty metrics and factuality. Factual sentences are likely to contain tokens with higher likelihood and lower entropy, while hallucinations are likely to come from positions with flat probability distributions with high uncertainty.

Token-level Probability

Given the LLM’s response $R$, let $i$ denote the $i$-th sentence in $R$, $j$ denote the $j$-th token in the $i$-th sentence, $J$ be the number of tokens in the sentence, and $p_{ij}$ be the probability of the word generated by the LLM at the $j$-th token of the $i$-th sentence. Two probability metrics are used:

$$
\begin{aligned}
\operatorname{Avg}(-\log p) &= -\frac{1}{J}\sum_{j}\log p_{ij}\\
\operatorname{Max}(-\log p) &= \max_{j}\left(-\log p_{ij}\right)
\end{aligned}
$$

$\operatorname{Avg}(-\log p)$ measures the sentence’s average token-level likelihood, while $\operatorname{Max}(-\log p)$ measures the sentence’s likelihood by assessing the least likely token in the sentence.
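
To make the two probability metrics concrete, here is a minimal Python sketch. It assumes the probabilities $p_{ij}$ of the tokens actually generated in one sentence are already available as a list (for example, from an interface that exposes token probabilities); the function name `sentence_logprob_scores` and the example probabilities are hypothetical.

```python
import math
from typing import Sequence, Tuple


def sentence_logprob_scores(token_probs: Sequence[float]) -> Tuple[float, float]:
    """Return (Avg(-log p), Max(-log p)) for one sentence.

    token_probs holds p_ij, the probability the LLM assigned to each token j
    it actually generated in sentence i, so J = len(token_probs).
    """
    if not token_probs:
        raise ValueError("a sentence needs at least one token")
    neg_log_probs = [-math.log(p) for p in token_probs]
    avg_score = sum(neg_log_probs) / len(neg_log_probs)  # mean over the J tokens
    max_score = max(neg_log_probs)  # surprisal of the least likely token
    return avg_score, max_score


# Hypothetical token probabilities: the second sentence has one unlikely token.
print(sentence_logprob_scores([0.9, 0.8, 0.95]))
print(sentence_logprob_scores([0.9, 0.05, 0.95]))
```

Higher values of either score mean lower likelihood and, following the argument above, a higher chance that the sentence is hallucinated. The Max variant reacts strongly to a single unlikely token, while the Avg variant dilutes it across the sentence.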

Entropy

The entropy of the output distribution is:

$$
\mathcal{H}_{ij}=-\sum_{\tilde{w}\in\mathcal{W}}p_{ij}(\tilde{w})\log p_{ij}(\tilde{w})
$$

where $p_{ij}(\tilde{w})$ is the probability of the word $\tilde{w}$ being generated at the $j$-th token of the $i$-th sentence, and $\mathcal{W}$ is the set of all possible words in the vocabulary. Similar to the probability-based metrics, two entropy-based metrics are used:

$$
\operatorname{Avg}(\mathcal{H})=\frac{1}{J}\sum_{j}\mathcal{H}_{ij};\quad\operatorname{Max}(\mathcal{H})=\max_{j}\left(\mathcal{H}_{ij}\right)
$$
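
The entropy metrics can be sketched the same way. This sketch assumes each token position comes with an explicit, possibly truncated, distribution over candidate words; when only the top few candidates are available (such as the top-5 tokens exposed by the GPT-3 interface), the result only approximates $\mathcal{H}_{ij}$ over the full vocabulary $\mathcal{W}$. The helper names are hypothetical, and the distributions are made up to mirror the Messi / John Smith example in Section 3.1.

```python
import math
from typing import Dict, List, Sequence, Tuple


def token_entropy(dist: Dict[str, float]) -> float:
    """H_ij = -sum_w p_ij(w) log p_ij(w), summed over the listed candidate words."""
    return -sum(p * math.log(p) for p in dist.values() if p > 0.0)


def sentence_entropy_scores(dists: Sequence[Dict[str, float]]) -> Tuple[float, float]:
    """Return (Avg(H), Max(H)) for one sentence, given one distribution per token."""
    entropies: List[float] = [token_entropy(d) for d in dists]
    return sum(entropies) / len(entropies), max(entropies)


# Made-up next-token distributions: peaked for Messi, flat for John Smith.
messi = {"footballer": 0.97, "player": 0.02, "carpenter": 0.01}
smith = {"footballer": 0.25, "lawyer": 0.25, "carpenter": 0.25, "singer": 0.25}
print(token_entropy(messi), token_entropy(smith))  # the flat distribution has higher entropy
print(sentence_entropy_scores([messi, smith]))
```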

4 Black-Box Factuality Assessment

A drawback of grey-box methods is that they require output token-level probabilities. Though this may seem a reasonable requirement, such token-level information may not be available for massive LLMs that are only accessible through limited API calls (such as ChatGPT). Therefore, we consider black-box approaches, which remain applicable even when only text-based responses are available.

Proxy LLMs

A simple way to approximate the grey-box approaches is to use a proxy LLM, i.e. another LLM that we have full access to, such as LLaMA (Touvron et al., 2023). A proxy LLM can be used to approximate the output token-level probabilities of the black-box LLM generating the text. In the next section, we propose SelfCheckGPT, which is also a black-box approach.

5 SelfCheckGPT

SelfCheckGPT is our proposed black-box zero-resource hallucination detection scheme, which operates by comparing multiple sampled responses and measuring consistency.

Notation: Let $R$ refer to an LLM response drawn from a given user query. SelfCheckGPT draws a further $N$ stochastic LLM response samples $\{S^{1},S^{2},\dots,S^{n},\dots,S^{N}\}$ using the same query, and then measures the consistency between the response and the stochastic samples. We design SelfCheckGPT to predict the hallucination score of the $i$-th sentence, $S(i)$, such that $S(i)\in[0.0,1.0]$, where $S(i)\to0.0$ if the $i$-th sentence is grounded in valid information and $S(i)\to1.0$ if the $i$-th sentence is hallucinated. The following subsections describe each of the SelfCheckGPT variants.

5.1 SelfCheckGPT with BERTScore

Let $\mathcal{B}(\cdot,\cdot)$ denote the BERTScore between two sentences. SelfCheckGPT with BERTScore finds the average BERTScore of the $i$-th sentence with the most similar sentence from each drawn sample:

$$
S_{\mathrm{BERT}}(i)=1-\frac{1}{N}\sum_{n=1}^{N}\max_{k}\left(\mathcal{B}(r_{i},s_{k}^{n})\right)
$$

where $r_{i}$ represents the $i$-th sentence in $R$ and $s_{k}^{n}$ represents the $k$-th sentence in the $n$-th sample $S^{n}$. This way, if the information in a sentence appears in many drawn samples, one may assume that the information is factual, whereas if the statement appears in no other sample, it is likely a hallucination. In this work, RoBERTa-Large (Liu et al., 2019) is used as the backbone of BERTScore.
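
The aggregation in $S_{\mathrm{BERT}}(i)$ can be sketched as follows. To stay self-contained, the sketch swaps BERTScore for a hypothetical unigram-overlap F1 (`overlap_f1`), which is *not* BERTScore; a real BERTScore function (with a RoBERTa-Large backbone, as above) would be passed through the `sim` parameter instead. Sentence splitting of the samples is assumed to have been done already, and all names here are illustrative.

```python
from typing import Callable, List, Sequence

# A sentence-similarity function returning a value in [0, 1].
Similarity = Callable[[str, str], float]


def overlap_f1(a: str, b: str) -> float:
    """Hypothetical stand-in for BERTScore: F1 over unique lowercase words.

    Real BERTScore matches contextual token embeddings, not exact words.
    """
    ta, tb = set(a.lower().split()), set(b.lower().split())
    common = len(ta & tb)
    if common == 0:
        return 0.0
    precision, recall = common / len(tb), common / len(ta)
    return 2 * precision * recall / (precision + recall)


def selfcheck_bertscore(sentence: str,
                        samples: Sequence[List[str]],
                        sim: Similarity = overlap_f1) -> float:
    """S_BERT(i) = 1 - (1/N) * sum_n max_k sim(r_i, s_k^n).

    `samples` holds N sampled passages, each already split into sentences.
    """
    best_per_sample = [max((sim(sentence, s) for s in passage), default=0.0)
                       for passage in samples]
    return 1.0 - sum(best_per_sample) / len(best_per_sample)


samples = [
    ["Messi is an Argentine footballer.", "He plays as a forward."],
    ["Lionel Messi is an Argentine footballer."],
]
print(selfcheck_bertscore("Messi is an Argentine footballer.", samples))  # close to 0
print(selfcheck_bertscore("Messi was born in Paris.", samples))           # much higher
```

Taking the maximum per sample means a sentence only needs one good match in each sample to count as supported there; the outer average then rewards claims that recur across many samples.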

5.2 SelfCheckGPT with Question Answering

We also consider using the automatic multiple-choice question answering generation (MQAG) framework (Manakul et al., 2023) to measure consistency for SelfCheckGPT. MQAG assesses consistency by generating multiple-choice questions over the main generated response, which an independent answering system can attempt to answer while conditioned on the other sampled responses. If questions on consistent information are queried, the answering system is expected to predict similar answers. MQAG consists of two stages: question generation G and question answering A. For the sentence $r_{i}$ in the response $R$, we draw questions $q$ and options $\mathbf{o}$:

$$
q,\mathbf{o}\sim P_{\mathtt{G}}(q,\mathbf{o}\mid r_{i},R)
$$

The answering stage A selects the answers:

$$
\begin{aligned}
a_{R} &= \operatorname*{argmax}_{k}\left[P_{\mathtt{A}}(o_{k}\mid q,R,\mathbf{o})\right]\\
a_{S^{n}} &= \operatorname*{argmax}_{k}\left[P_{\mathtt{A}}(o_{k}\mid q,S^{n},\mathbf{o})\right]
\end{aligned}
$$

We compare whether $a_{R}$ is equal to $a_{S^{n}}$ for each sample in $\{S^{1},\dots,S^{N}\}$, yielding the number of matches $N_{\mathtt{m}}$ and the number of non-matches $N_{\mathtt{n}}$. A simple inconsistency score for the $i$-th sentence and question $q$ based on the match/non-match counts is defined as $S_{\mathrm{QA}}(i,q)=\frac{N_{\mathtt{n}}}{N_{\mathtt{m}}+N_{\mathtt{n}}}$. To take into account the answerability of generated questions, we show in Appendix B that we can modify the inconsistency score by applying soft-counting, resulting in:

$$
S_{\mathrm{QA}}(i,q)=\frac{\gamma_{2}^{N_{\mathtt{n}}'}}{\gamma_{1}^{N_{\mathtt{m}}'}+\gamma_{2}^{N_{\mathtt{n}}'}}
$$

where $N_{\mathtt{m}}'$ is the effective match count and $N_{\mathtt{n}}'$ is the effective mismatch count, with $\gamma_{1}$ and $\gamma_{2}$ defined in Appendix B.1. Ultimately, SelfCheckGPT with QA is the average of the inconsistency scores across $q$:

$$
S_{\mathrm{QA}}(i)=\mathbb{E}_{q}\left[S_{\mathrm{QA}}(i,q)\right]
$$

5.3 SelfCheckGPT with n-gram

Given samples $\{S^{1},\dots,S^{N}\}$ generated by an LLM, one can use the samples to create a new language model that approximates the LLM. In the limit as $N$ gets sufficiently large, the new language model will converge to the LLM that generated the responses. We can therefore approximate the LLM’s token probabilities using the new language model.

In practice, due to time and/or cost constraints, only a limited number of samples $N$ can be drawn. Consequently, we train a simple $n$-gram model using the samples $\{S^{1},\dots,S^{N}\}$ as well as the main response $R$ (which is assessed), where we note that including $R$ can be considered as a smoothing method where the count of each token in $R$ is increased by 1. We then compute the average of the negative log-probabilities of the tokens in the $i$-th sentence of $R$:

$$
S_{n\text{-gram}}^{\mathrm{Avg}}(i)=-\frac{1}{J}\sum_{j}\log\tilde{p}_{ij}
$$

where $\tilde{p}_{ij}$ is the probability of the $j$-th token of the $i$-th sentence computed using the $n$-gram model. Similar to the grey-box approach, we can also use the maximum of the negative log-probabilities:

$$
S_{n\text{-gram}}^{\mathrm{Max}}(i)=\max_{j}\left(-\log\tilde{p}_{ij}\right)
$$
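
A minimal sketch of the $n$-gram variant with $n=1$ (a unigram model) and whitespace tokenization, both assumptions made for illustration; the text above leaves $n$ general, and a real system would use a proper tokenizer. The function name and example tokens are hypothetical. Because the tokens of $R$ are counted along with the samples, no token of $R$ ever receives zero probability.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def unigram_selfcheck(response: Sequence[List[str]],
                      samples: Sequence[List[str]]) -> List[Tuple[float, float]]:
    """Return (S^Avg, S^Max) for each sentence of the main response R.

    response: R as a list of tokenized sentences.
    samples:  the N sampled passages, each as a flat list of tokens.
    """
    counts: Counter = Counter()
    for sample in samples:
        counts.update(sample)
    for sentence in response:  # training on R too: every token of R gets count >= 1
        counts.update(sentence)
    total = sum(counts.values())
    scores = []
    for sentence in response:
        neg_logs = [-math.log(counts[tok] / total) for tok in sentence]
        scores.append((sum(neg_logs) / len(neg_logs), max(neg_logs)))
    return scores


response = [["messi", "is", "a", "footballer"], ["he", "was", "born", "in", "paris"]]
samples = [["messi", "is", "a", "footballer", "born", "in", "rosario"],
           ["messi", "is", "a", "footballer", "from", "rosario"]]
for avg, mx in unigram_selfcheck(response, samples):
    print(f"avg={avg:.3f} max={mx:.3f}")
```

The second sentence scores higher on both measures because "he", "was" and "paris" appear only in $R$ itself, so their probability comes solely from the smoothing count.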

5.4 SelfCheckGPT with NLI

Natural Language Inference (NLI) determines whether a hypothesis follows from a premise, classifying the pair as entailment, neutral, or contradiction. NLI measures have been used to measure faithfulness in summarization, where Maynez et al. (2020) use a textual entailment classifier trained on MNLI (Williams et al., 2018) to determine whether a summary contradicts its context. Inspired by NLI-based summary assessment, we consider using the NLI contradiction score as a SelfCheckGPT score.

For SelfCheck-NLI, we use DeBERTa-v3-large (He et al., 2023) fine-tuned on MNLI as the NLI model. The input for NLI classifiers is typically the premise concatenated with the hypothesis, which for our methodology is the sampled passage $S^{n}$ concatenated with the sentence to be assessed, $r_{i}$. Only the logits associated with the ‘entailment’ and ‘contradiction’ classes are considered:

$$
P(\mathrm{contradict}\mid r_{i},S^{n})=\frac{\exp(z_{c})}{\exp(z_{e})+\exp(z_{c})}
$$

where $z_{e}$ and $z_{c}$ are the logits of the ‘entailment’ and ‘contradiction’ classes, respectively. This normalization ignores the neutral class and ensures that the probability is bounded between 0.0 and 1.0. The SelfCheckGPT with NLI score for the $i$-th sentence is then defined as the average contradiction probability over the $N$ samples:

$$
S_{\mathrm{NLI}}(i)=\frac{1}{N}\sum_{n=1}^{N}P(\mathrm{contradict}\mid r_{i},S^{n})
$$
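
The contradiction probability and its average over samples can be sketched as below. The sketch assumes the entailment and contradiction logits $(z_e, z_c)$ have already been obtained from an NLI model for each (premise $S^n$, hypothesis $r_i$) pair; running DeBERTa-v3-large itself is not shown, and which output index corresponds to which class in a given checkpoint should be checked rather than assumed. The function names and example logits are hypothetical.

```python
import math
from typing import Sequence, Tuple


def p_contradict(z_e: float, z_c: float) -> float:
    """Two-way softmax over the entailment and contradiction logits.

    The neutral logit is simply not used. Subtracting the max keeps exp() stable.
    """
    m = max(z_e, z_c)
    e, c = math.exp(z_e - m), math.exp(z_c - m)
    return c / (e + c)


def selfcheck_nli(logits_per_sample: Sequence[Tuple[float, float]]) -> float:
    """S_NLI(i): mean of P(contradict | r_i, S^n) over the N samples.

    logits_per_sample[n] = (z_e, z_c) for premise S^n and hypothesis r_i.
    """
    probs = [p_contradict(z_e, z_c) for z_e, z_c in logits_per_sample]
    return sum(probs) / len(probs)


# Hypothetical logits: two samples support r_i, one contradicts it.
print(selfcheck_nli([(3.0, -2.0), (2.5, -1.0), (-1.0, 2.0)]))
```

Subtracting the larger logit before exponentiating does not change the ratio but avoids overflow for large logits.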

5.5 SelfCheckGPT with Prompt

LLMs have recently been shown to be effective in assessing information consistency between a document and its summary in zero-shot settings (Luo et al., 2023). Thus, we query an LLM to assess whether the $i$-th sentence is supported by sample $S^{n}$ (as the context) using the following prompt.

Initial investigation showed that GPT-3 (text-davinci-003) outputs either Yes or No 98% of the time, while any remaining outputs can be set to N/A. The output from prompting when comparing the $i$-th sentence against sample $S^{n}$ is converted to a score $x_{i}^{n}$ through the mapping {Yes: 0.0, No: 1.0, N/A: 0.5}. The final inconsistency score is then calculated as:

$$
S_{\mathrm{Prompt}}(i)=\frac{1}{N}\sum_{n=1}^{N}x_{i}^{n}
$$

SelfCheckGPT-Prompt is illustrated in Figure 1. Note that our initial investigations found that less capable models such as GPT-3 (text-curie-001) or LLaMA failed to effectively perform consistency assessment via such prompting.
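
The score mapping and averaging can be sketched as follows. The sketch assumes the raw text returned by the LLM for each of the $N$ comparisons has already been collected (the query itself is not shown), and the parsing rule, which reads only the first word case-insensitively and treats anything other than Yes or No as N/A, is an assumption made for this sketch; the text above only specifies the {Yes: 0.0, No: 1.0, N/A: 0.5} mapping. Function names are hypothetical.

```python
from typing import Sequence

# {Yes: 0.0, No: 1.0}; anything else is treated as N/A and scored 0.5.
SCORE_MAP = {"yes": 0.0, "no": 1.0}
NA_SCORE = 0.5


def answer_to_score(raw_output: str) -> float:
    """Map one free-form LLM reply to x_i^n using only its first word (assumed rule)."""
    words = raw_output.strip().lower().split()
    first = words[0].strip(".,!:;") if words else ""
    return SCORE_MAP.get(first, NA_SCORE)


def selfcheck_prompt(raw_outputs: Sequence[str]) -> float:
    """S_Prompt(i): mean of x_i^n over the N sample comparisons."""
    return sum(answer_to_score(o) for o in raw_outputs) / len(raw_outputs)


print(selfcheck_prompt(["Yes", "No.", "yes", "I am not sure"]))  # 0.375
```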

6 Data and Annotation

As there are currently no standard hallucination detection datasets available, we evaluate our hallucination detection approaches by 1) generating synthetic Wikipedia articles using GPT-3 on the individuals/concepts from the WikiBio dataset (Lebret et al., 2016); 2) manually annotating the factuality of the passages at the sentence level; and 3) evaluating each system’s ability to detect hallucinations.

WikiBio is a dataset where each input contains the first paragraph (along with tabular information) of the Wikipedia article on a specific concept. We rank the WikiBio test set by paragraph length and randomly sample 238 articles from the top 20% of longest articles (to avoid selecting very obscure concepts). GPT-3 (text-davinci-003) is then used to generate a Wikipedia article on each concept, using the prompt "This is a Wikipedia passage about {concept}:". Table 1 provides the statistics of the GPT-3 generated passages.

Table 1: The statistics of the WikiBio GPT-3 dataset, where the number of tokens is based on the OpenAI GPT-2 tokenizer.

| #Passages | #Sentences | #Tokens/passage |
|---|---|---|
| 238 | 1908 | 184.7±36.9 |

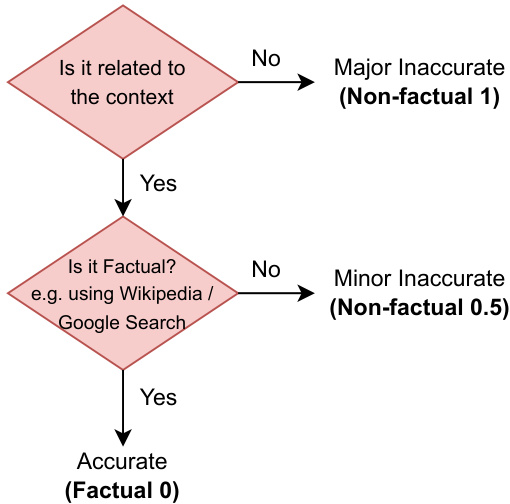

We then annotate the sentences of the generated passages using the guidelines shown in Figure 3 such that each sentence is classified as either:

- Major Inaccurate (Non-Factual, 1): The sentence is entirely hallucinated, i.e. the sentence is unrelated to the topic.
- Minor Inaccurate (Non-Factual, 0.5): The sentence contains some non-factual information, but the sentence is related to the topic.
- Accurate (Factual, 0): The information presented in the sentence is accurate.

Of the 1908 annotated sentences, 761 (39.9%) were labelled major-inaccurate, 631 (33.1%) minor-inaccurate, and 516 (27.0%) accurate. 201 sentences in the dataset had annotations from two different annotators. To obtain a single label for this subset, the agreed label is used if both annotators agree; if they disagree, the worst-case label is selected (e.g., {minor inaccurate, major inaccurate} is mapped to major inaccurate). The inter-annotator agreement, as measured by Cohen’s $\kappa$ (Cohen, 1960), has $\kappa$ values of 0.595 and 0.748 for the 3-class and 2-class scenarios, indicating moderate and substantial agreement (Viera et al., 2005), respectively.
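
The label-merging rule and the agreement statistic can be sketched as below. Encoding the three classes as 1, 0.5 and 0 (as in the annotation scheme) turns the worst-case rule into a simple `max`, and the 2-class view groups both inaccurate labels as non-factual. Cohen’s $\kappa$ compares the observed agreement $p_o$ with the agreement $p_e$ expected by chance from each annotator’s label frequencies, $\kappa = (p_o - p_e)/(1 - p_e)$. The helper names and the two annotators’ labels are made up for illustration.

```python
from collections import Counter
from typing import Sequence

# Numeric labels from the annotation scheme: larger means less factual.
ACCURATE, MINOR, MAJOR = 0.0, 0.5, 1.0


def merge_labels(label_a: float, label_b: float) -> float:
    """Agreed label if equal, else the worst case; max() covers both cases."""
    return max(label_a, label_b)


def to_two_class(label: float) -> int:
    """2-class view: minor- and major-inaccurate both count as non-factual (1)."""
    return int(label > ACCURATE)


def cohens_kappa(x: Sequence, y: Sequence) -> float:
    """Cohen's kappa = (p_o - p_e) / (1 - p_e); undefined when p_e == 1."""
    n = len(x)
    p_o = sum(a == b for a, b in zip(x, y)) / n  # observed agreement
    cx, cy = Counter(x), Counter(y)
    p_e = sum(cx[k] * cy[k] for k in cx) / (n * n)  # chance agreement
    return (p_o - p_e) / (1 - p_e)


ann_1 = [MAJOR, MINOR, ACCURATE, MAJOR]
ann_2 = [MAJOR, MAJOR, ACCURATE, MINOR]
print([merge_labels(a, b) for a, b in zip(ann_1, ann_2)])  # [1.0, 1.0, 0.0, 1.0]
print(cohens_kappa(ann_1, ann_2))
print(cohens_kappa([to_two_class(l) for l in ann_1], [to_two_class(l) for l in ann_2]))
```

In this toy example the annotators disagree only on the severity of inaccurate sentences, so the 3-class $\kappa$ is low while the 2-class $\kappa$ is perfect, which illustrates why the 2-class agreement reported above is higher.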

Figure 3: Flowchart of our annotation process

Furthermore, passage-level scores are obtained by averaging the sentence-level labels in each passage. The distribution of passage-level scores is shown in Figure 4, where we observe a large peak at $+1.0$ . We refer to the points at this peak as total hallucination, which occurs when the information of the response is unrelated to the real concept and is entirely fabricated by the LLM.
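
Passage-level scoring is a plain average of the sentence labels; the short sketch below uses hypothetical helper names and made-up labels. Since no sentence label exceeds 1, a passage lands on the peak at 1.0 exactly when every one of its sentences is labelled major-inaccurate, which is what the check for total hallucination tests directly.

```python
from statistics import mean
from typing import Sequence


def passage_score(sentence_labels: Sequence[float]) -> float:
    """Passage-level score: mean of sentence labels (0 accurate, 0.5 minor, 1 major)."""
    return mean(sentence_labels)


def is_total_hallucination(sentence_labels: Sequence[float]) -> bool:
    """Every sentence is major-inaccurate, i.e. the passage score is 1.0."""
    return all(label == 1.0 for label in sentence_labels)


print(passage_score([0.0, 0.5, 1.0, 1.0]))      # 0.625
print(is_total_hallucination([1.0, 1.0, 1.0]))  # True
```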

Figure 4: Document factuality scores histogram plot

$N{=}20$ samples. For the proxy LLM approach, we use LLaMA (Touvron et al., 2023), one of the bestperforming open-source LLMs currently available. For Self Check GP T-Prompt, we consider both GPT3 (which is the same LLM that is used to generate passages) as well as the newly released ChatGPT (gpt-3.5-turbo). More details about the systems in Self Check GP T and results using other proxy LLMs can be found in the appendix.

$N{=}20$ 个样本。对于代理大语言模型方法,我们采用当前性能最优的开源大语言模型LLaMA (Touvron et al., 2023)。在Self Check GPT提示法中,我们同时测试了GPT-3(即用于生成文本的同一大语言模型)和新发布的ChatGPT (gpt-3.5-turbo)。关于Self Check GPT系统的更多细节及其他代理大语言模型的实验结果详见附录。

7.1 Sentence-level Hallucination Detection

7.1 句子级幻觉检测

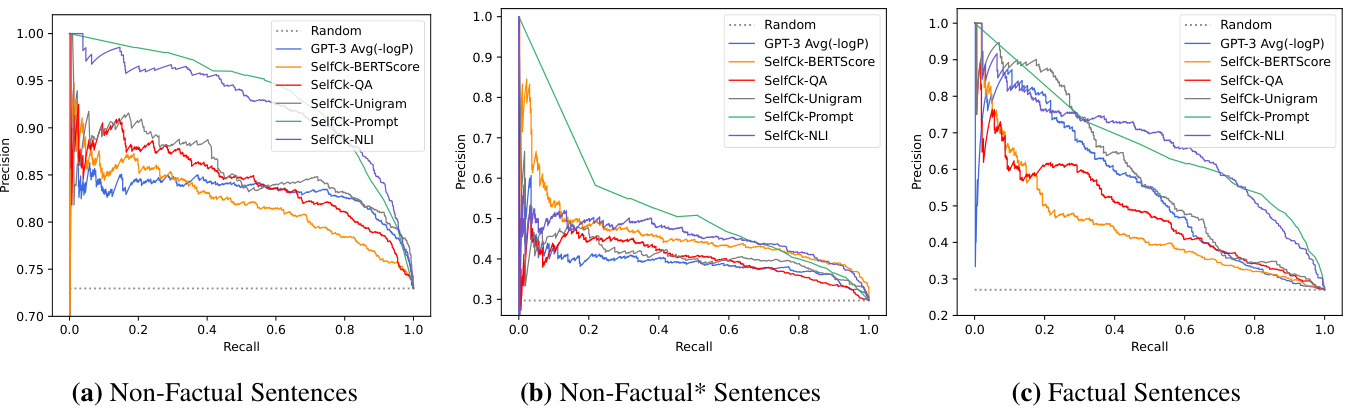

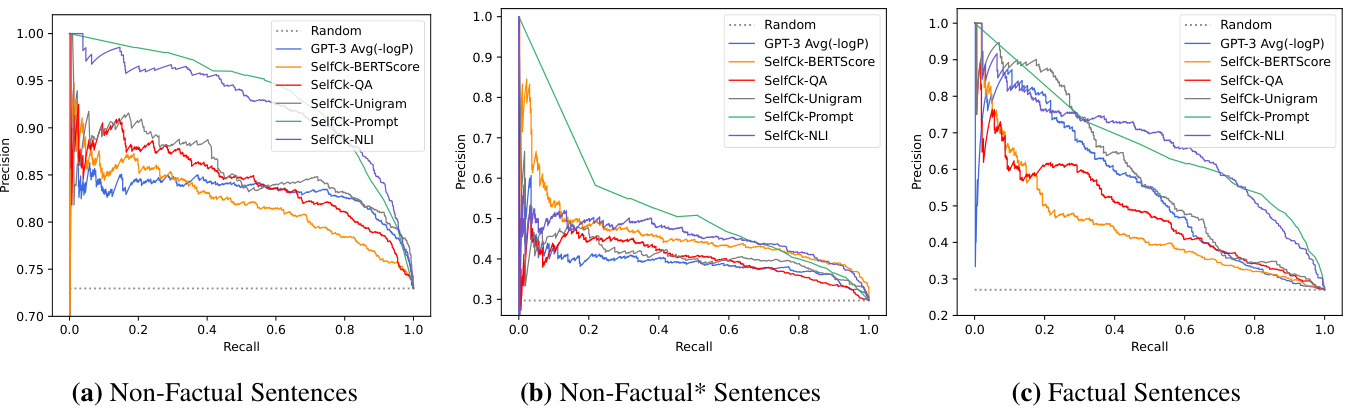

First, we investigate whether our hallucination detection methods can identify the factuality of sentences. In detecting non-factual sentences, both major-inaccurate labels and minor-inaccurate labels are grouped together into the non-factual class, while the factual class refers to accurate sentences. In addition, we consider a more challenging task of detecting major-inaccurate sentences in passages that are not total hallucination passages, which we refer to as non-factual∗.5 Figure 5 and Table 2 show the performance of our approaches, where the following observations can be made:

首先,我们研究幻觉检测方法能否识别句子的真实性。在检测非事实性句子时,主要不准确标签和次要不准确标签均归入非事实类,而事实类指准确句子。此外,我们考虑更具挑战性的任务:检测非完全幻觉段落中的主要不准确句子(记为non-factual∗)。图5和表2展示了我们方法的性能表现,可得出以下观察结论:

- LLM’s probabilities $p$ correlate well with factuality. Our results show that probability measures (from the LLM generating the texts) are strong baselines for assessing factuality. Factual sentences can be identified with an AUC-PR of 53.97, significantly better than the random baseline of 27.04, with the AUC-PR for hallucination detection also increasing from 72.96 to 83.21. This supports the hypothesis that when the LLMs are uncertain about generated information, generated tokens often have higher uncertainty, paving a promising direction for hallucination detection approaches. Also, the probability $p$ measure performs better than the entropy $\mathcal{H}$ measure of top-5 tokens.

- 大语言模型的概率 $p$ 与事实性高度相关。我们的结果表明,(由生成文本的大语言模型提供的)概率指标是评估事实性的强基线。事实性句子的AUC-PR可达53.97,显著优于随机基线27.04,幻觉检测的AUC-PR也从72.96提升至83.21。这验证了以下假设:当大语言模型对生成信息不确定时,生成token往往具有更高不确定性,为幻觉检测方法指明了可行方向。此外,概率 $p$ 指标的检测效果优于top-5 token的熵 $\mathcal{H}$ 指标。

7 Experiments

7 实验

The generative LLM used to generate passages for our dataset is GPT-3 (text-davinci-003), the stateof-the-art system at the time of creating and annotating the dataset. To obtain the main response, we set the temperature to 0.0 and use standard beam search decoding. For the stochastic ally generated samples, we set the temperature to 1.0 and generate

用于为我们的数据集生成段落的大语言模型是GPT-3 (text-davinci-003),这是创建和标注数据集时最先进的系统。为了获得主要响应,我们将温度设置为0.0并使用标准束搜索解码。对于随机生成的样本,我们将温度设置为1.0并生成

- Proxy LLMs perform noticeably worse than the generating LLM (GPT-3). For the proxy LLM (LLaMA), the entropy $\mathcal{H}$ measures outperform the probability measures. This suggests that richer uncertainty information can improve factuality/hallucination detection performance, and that the entropy computed over only the top-5 tokens (as for GPT-3) is likely insufficient. In addition, with other proxy LLMs such as GPT-NeoX or OPT-30B, performance is near that of the random baseline. We believe this poor performance occurs because different LLMs have different generation patterns. As a result, even common tokens may receive a low probability when the response is dissimilar to the generation style of the proxy LLM. We note that a weighted conditional LM score such as BARTScore (Yuan et al., 2021) could be incorporated in future investigations.

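The four grey-box scores in Table 2 can be computed from per-token log-probabilities and per-position candidate distributions. The following is an illustrative sketch, not the authors' code. Two details are assumptions: the input format, and computing entropy over the top-$k$ probabilities exactly as returned, without renormalising. For a proxy LLM whose full distribution is available, the same function applies with the full distribution passed in place of the top-$k$ list.

```python
import math
from typing import Dict, Sequence


def grey_box_scores(
    token_logprobs: Sequence[float],
    topk_probs: Sequence[Sequence[float]],
) -> Dict[str, float]:
    """Sentence-level uncertainty scores from an LLM's token distributions.

    token_logprobs: log p of each generated token in the sentence.
    topk_probs: per position, probabilities of the candidate tokens
        (e.g. the top-5 returned by an API); entropy uses them as given.
    Higher values indicate more uncertainty, i.e. a likelier hallucination.
    """
    neg_logp = [-lp for lp in token_logprobs]
    # Entropy H = -sum p log p at each position; zero-probability entries are skipped.
    entropies = [-sum(p * math.log(p) for p in probs if p > 0.0) for probs in topk_probs]
    return {
        "avg_neg_logp": sum(neg_logp) / len(neg_logp),   # Avg(-log p)
        "max_neg_logp": max(neg_logp),                   # Max(-log p)
        "avg_entropy": sum(entropies) / len(entropies),  # Avg(H)
        "max_entropy": max(entropies),                   # Max(H)
    }
```

The Max variants flag a sentence on the basis of its single least certain token. The Avg variants dilute that token across the whole sentence.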

Figure 5: PR curves for detecting non-factual and factual sentences in the GPT-3-generated WikiBio passages.

Table 2: AUC-PR for sentence-level detection tasks. Passage-level ranking performance is measured by the Pearson correlation coefficient and Spearman's rank correlation coefficient with respect to human judgements. The results of other proxy LLMs, in addition to LLaMA, can be found in the appendix. †The GPT-3 API returns the top-5 tokens' probabilities, which are used to compute entropy.

| Method | NonFact (AUC-PR) | NonFact* (AUC-PR) | Factual (AUC-PR) | Pearson (passage) | Spearman (passage) |
|---|---|---|---|---|---|
| Random | 72.96 | 29.72 | 27.04 | | |
| GPT-3 (text-davinci-003)'s probabilities (LLM, grey-box) | | | | | |
| Avg($-\log p$) | 83.21 | 38.89 | 53.97 | 57.04 | 53.93 |
| Avg($\mathcal{H}$)† | 80.73 | 37.09 | 52.07 | 55.52 | 50.87 |
| Max($-\log p$) | 87.51 | 35.88 | 50.46 | 57.83 | 55.69 |
| Max($\mathcal{H}$)† | 85.75 | 32.43 | 50.27 | 52.48 | 49.55 |
| LLaMA-30B's probabilities (Proxy LLM, black-box) | | | | | |
| Avg($-\log p$) | 75.43 | 30.32 | 41.29 | 21.72 | 20.20 |
| Avg($\mathcal{H}$) | 80.80 | 39.01 | 42.97 | 33.80 | 39.49 |
| Max($-\log p$) | 74.01 | 27.14 | 31.08 | -22.83 | -22.71 |
| Max($\mathcal{H}$) | 80.92 | 37.32 | 37.90 | 35.57 | 38.94 |
| SelfCheckGPT (black-box) | | | | | |
| w/ BERTScore | 81.96 | 45.96 | 44.23 | 58.18 | 55.90 |
| w/ QA | 84.26 | 40.06 | 48.14 | 61.07 | 59.29 |
| w/ Unigram (max) | 85.63 | 41.04 | 58.47 | 64.71 | 64.91 |
| w/ NLI | 92.50 | 45.17 | 66.08 | 74.14 | 73.78 |
| w/ Prompt | 93.42 | 53.19 | 67.09 | 78.32 | 78.30 |

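Table 2 reports AUC-PR. One common estimator is step-wise average precision, the quantity computed by scikit-learn's `average_precision_score`. This section does not state whether the authors used this estimator or a trapezoidal area, so the sketch below is an assumption. It is consistent with the Random row, however. An uninformative detector gives every sentence the same score, so its AUC-PR equals the positive-class prevalence: $1392/1908 \approx 72.96\%$ of sentences are non-factual (761 major- plus 631 minor-inaccurate), and $516/1908 \approx 27.04\%$ are factual.

```python
from typing import Sequence


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise area under the precision-recall curve.

    labels: 1 for the class being detected (e.g. non-factual), else 0.
    scores: higher means "more likely positive"; tied scores share one threshold.
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive example")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    ap, prev_recall, i = 0.0, 0.0, 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every item tied at this threshold before reading off precision/recall.
        while i < len(ranked) and ranked[i][0] == threshold:
            tp += ranked[i][1]
            fp += 1 - ranked[i][1]
            i += 1
        recall, precision = tp / total_pos, tp / (tp + fp)
        ap += (recall - prev_recall) * precision  # area of this recall step
        prev_recall = recall
    return ap
```

For the Factual column, one would presumably flip the labels (accurate = 1) and negate the hallucination scores, so that higher means "more likely factual".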

- SelfCheckGPT outperforms grey-box approaches. SelfCheckGPT-Prompt considerably outperforms the grey-box approaches (including GPT-3's output probabilities) as well as the other black-box approaches. Even other variants of SelfCheckGPT, including BERTScore, QA, and $n$-gram, outperform the grey-box approaches in most setups. Interestingly, despite being the least computationally expensive method, SelfCheckGPT with unigram (max) works well across different setups. Essentially, when assessing a sentence, this method picks out the token with the lowest occurrence count across all the samples. This suggests that if a token appears only a few times (or once) within the generated samples ($N{=}20$), it is likely to be non-factual.


- SelfCheckGPT with $n$-gram. Across 1-gram to 5-gram models, simply finding the least likely token/$n$-gram is more effective than computing the average $n$-gram score of the sentence (details in appendix Table 7). Additionally, as $n$ increases, the performance of SelfCheckGPT with $n$-gram (max) drops.


- SelfCheckGPT with NLI. The NLI-based method outperforms all black-box and grey-box baselines, and its performance is close to that of the Prompt method. Because SelfCheckGPT with Prompt can be computationally heavy, SelfCheckGPT with NLI may be the most practical method: it provides a good trade-off between performance and computation.

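SelfCheckGPT with unigram (max), as described in the observations above, can be sketched as follows. Fit unigram counts on the stochastic samples. Score each token of a sentence by its negative log-probability, then take the maximum (or the average, for comparison). This is a hypothetical illustration, and tokenisation is left to the caller. Adding the main response to the counts, so that no sentence token has a zero count, is an assumption not stated in this section.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def unigram_scores(
    sentence: Sequence[str],
    samples: Sequence[Sequence[str]],
    main_response: Sequence[str],
) -> Dict[str, float]:
    """Score one tokenised sentence of the main response with a unigram model.

    Counts come from the samples plus the main response (an assumption), so every
    token of `sentence` has a non-zero count; `sentence` must come from `main_response`.
    """
    counts: Counter = Counter(main_response)
    for sample in samples:
        counts.update(sample)
    total = sum(counts.values())
    # -log p(token) under the relative-frequency unigram model
    neg_logp: List[float] = [-math.log(counts[tok] / total) for tok in sentence]
    return {"avg": sum(neg_logp) / len(neg_logp), "max": max(neg_logp)}
```

In a toy case where the sentence is "born in Nice" and the samples mention only "Paris" and "Lyon", the token "Nice" is the rarest. It therefore determines the max score, which matches the intuition that a detail absent from the samples is a likely hallucination.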

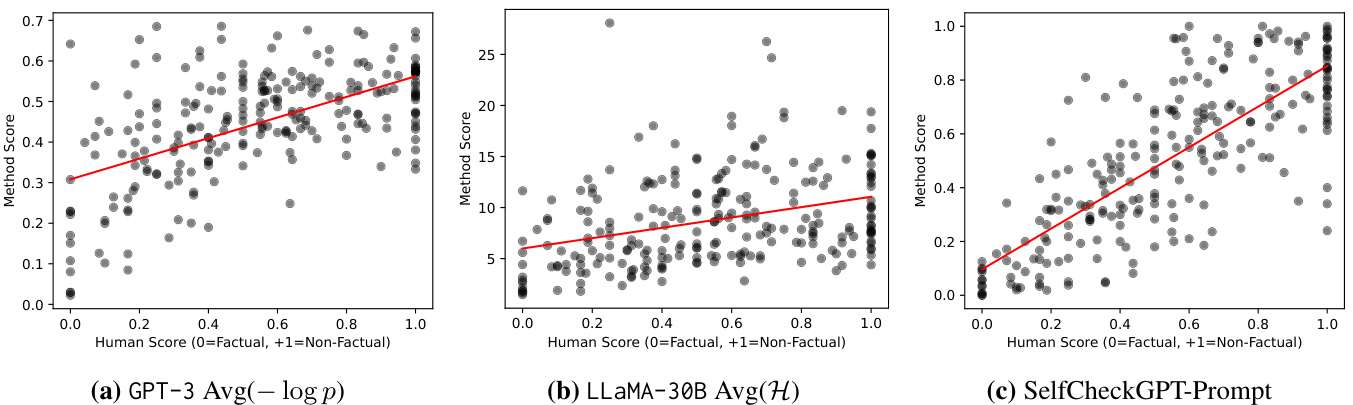

Figure 6: Scatter plot of passage-level scores, where the Y-axis shows method scores and the X-axis shows human scores. Correlations are reported in Table 2. The scatter plots of other SelfCheckGPT variants are provided in Figure 10 in the appendix.


7.2 Passage-level Factuality Ranking


Previous results demonstrate that SelfCheckGPT is an effective approach for predicting sentence-level factuality. An additional consideration is whether SelfCheckGPT can also be used to determine the overall factuality of passages. Passage-level factuality scores are calculated by averaging the sentence-level scores over all sentences:


$$
\mathcal{S}_{\mathrm{passage}}=\frac{1}{|R|}\sum_{i}\mathcal{S}(i) \tag{12}
$$


where $\mathcal{S}(i)$ is the sentence-level score of sentence $i$, and $|R|$ is the number of sentences in the passage. Since human judgement is somewhat subjective, averaging the sentence-level labels yields less noisy ground truths. Note that for $\operatorname{Avg}(-\log p)$ and $\operatorname{Avg}(\mathcal{H})$, we compute the average over all tokens in a passage. For $\operatorname{Max}(-\log p)$ and $\operatorname{Max}(\mathcal{H})$, by contrast, we first take the maximum over tokens at the sentence level and then average over all sentences following Equation 12.

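The two passage-level aggregation rules described above can be made concrete with token log-probabilities. This is a minimal sketch with hypothetical function names. A passage is represented as a list of sentences, each a list of token log-probabilities.

```python
from typing import Sequence


def passage_avg_neg_logp(sentences: Sequence[Sequence[float]]) -> float:
    """Avg(-log p) at passage level: the mean over all tokens in the passage."""
    neg_logp = [-lp for sentence in sentences for lp in sentence]
    return sum(neg_logp) / len(neg_logp)


def passage_max_neg_logp(sentences: Sequence[Sequence[float]]) -> float:
    """Max(-log p) at passage level: max per sentence, then the mean over sentences (Equation 12)."""
    per_sentence = [max(-lp for lp in sentence) for sentence in sentences]
    return sum(per_sentence) / len(per_sentence)
```

For the passage `[[-1.0, -1.0], [-2.0]]`, the token-level average is 4/3, while the mean of the per-sentence maxima is 1.5. With token-level averaging, longer sentences carry more weight.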

Our results in Table 2 and Figure 6 show that all SelfCheckGPT methods correlate better with human judgements than the other baselines, including the grey-box probability and entropy methods. The margin is small for BERTScore (58.18 vs. 57.83 Pearson for Max($-\log p$)) and large for NLI and Prompt. SelfCheckGPT-Prompt is the best-performing method, achieving the highest Pearson correlation of 78.32. Unsurprisingly, the proxy LLM approach again achieves considerably lower correlations.

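Passage-level ranking quality is measured with Pearson and Spearman correlations between method scores and human scores. A dependency-free sketch follows; in practice, `scipy.stats.pearsonr` and `scipy.stats.spearmanr` compute the same coefficients. The values in Table 2 appear to be these coefficients multiplied by 100. Tied values receive averaged ranks, which is the conventional definition of Spearman's coefficient.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next value ties with the first one in it.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: the Pearson correlation of tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

Spearman's coefficient depends only on the ordering of passages, so it rewards a method that ranks passages correctly even when its scores are not linearly related to the human scores.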

7.3 Ablation Studies


External Knowledge (instead of SelfCheck)


If external knowledge is available, one can measure the informational consistency between the LLM response and the information source. In this experiment, we use the first paragraph of each concept that is available in WikiBio.⁶


Table 3: Performance when using SelfCheckGPT samples versus externally stored knowledge.

| Method | NonFact (AUC-PR) | NonFact* (AUC-PR) | Factual (AUC-PR) | Pearson (passage) | Spearman (passage) |
|---|---|---|---|---|---|
| SelfCk-BERT | 81.96 | 45.96 | 44.23 | 58.18 | 55.90 |
| WikiBio+BERT | 81.32 | 40.62 | 49.15 | 58.71 | 55.80 |
| SelfCk-QA | 84.26 | 40.06 | 48.14 | 61.07 | 59.29 |
| WikiBio+QA | 84.18 | 45.40 | 52.03 | 57.26 | 53.62 |
| SelfCk-1gm | 85.63 | 41.04 | 58.47 | 64.71 | 64.91 |
| WikiBio+1gm | 80.43 | 31.47 | 40.53 | 28.67 | 26.70 |
| SelfCk-NLI | 92.50 | 45.17 | 66.08 | 74.14 | 73.78 |
| WikiBio+NLI | 91.18 | 48.14 | 71.61 | 78.84 | 80.00 |
| SelfCk-Prompt | 93.42 | 53.19 | 67.09 | 78.32 | 78.30 |
| WikiBio+Prompt | 93.59 | 65.26 | 73.11 | 85.90 | 86.11 |


Our findings in Table 3 show the following. First, SelfCheckGPT with BERTScore/QA, using self-samples, can yield comparable or even better performance than when using the reference passage. Second, SelfCheckGPT with $n$-gram shows a large performance drop when using the WikiBio passages instead of self-samples. This failure is attributed to the fact that the WikiBio reference text alone is not sufficient to train an $n$-gram model. Third, in contrast, SelfCheckGPT with NLI/Prompt can benefit considerably when access to retrieved information is available. Nevertheless, in practice, it is infeasible to have an external database for every possible use case of LLM generation.


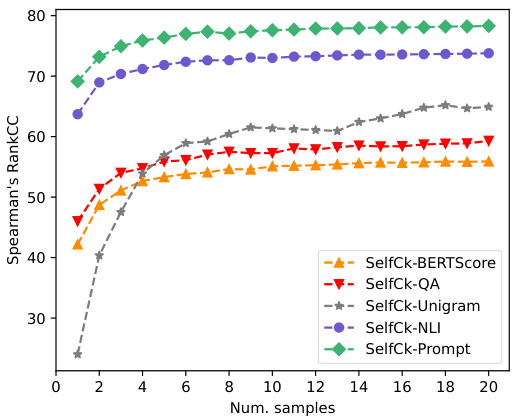

The Impact of the Number of Samples


Although sample-based methods are expected to perform better when more samples are drawn, each additional sample increases the computational cost. We therefore investigate how performance varies with the number of samples. Our results in Figure 7 show that the performance of SelfCheckGPT increases smoothly as more samples are used, with diminishing gains as the number of samples grows. SelfCheckGPT with $n$-gram requires the largest number of samples before its performance reaches a plateau.


Figure 7: The performance of SelfCheckGPT methods on ranking passages (Spearman's) versus the number of samples.


The Choice of LLM for SelfCheckGPT-Prompt


We investigate whether the LLM generating the text can self-check its own text. We conduct this ablation using a reduced set of samples ($N{=}4$).


Table 4: Comparison of GPT-3 (text-davinci-003) and ChatGPT (gpt-3.5-turbo) as the prompt-based text evaluator in SelfCheckGPT-Prompt. †Taken from Table 2 for comparison.

| Text-Gen | SelfCk-Prompt | N | Pearson | Spearman |
|---|---|---|---|---|
| GPT-3 | ChatGPT | 20 | 78.32 | 78.30 |
| GPT-3 | ChatGPT | 4 | 76.47 | 76.41 |
| GPT-3 | GPT-3 | 4 | 73.11 | 74.69 |
| †SelfCheck w/ unigram (max) | | 20 | 64.71 | 64.91 |
| †SelfCheck w/ NLI | | 20 | 74.14 | 73.78 |


The results in Table 4 show that GPT-3 can self-check its own text. It also outperforms the unigram method even when using only 4 samples (73.11 vs. 64.71 Pearson). However, ChatGPT shows a slight improvement over GPT-3 in evaluating whether the sentence is supported by the context (76.47 vs. 73.11 Pearson at $N{=}4$). More details are in Appendix C.

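A prompt-based consistency check of the kind compared in Table 4 can be sketched as a loop over samples. For each sample, ask an evaluator LLM whether that sample supports the sentence, then average the answers. Several details are assumptions for illustration: the prompt wording, the answer-to-score mapping (Yes → 0.0, No → 1.0, anything else → 0.5), and the `ask_llm` callable. `ask_llm` is a hypothetical stand-in for whichever evaluator (GPT-3 or ChatGPT) is being tested.

```python
from typing import Callable, Sequence

# Hypothetical prompt wording; the exact template is an assumption.
PROMPT_TEMPLATE = (
    "Context: {context}\n\n"
    "Sentence: {sentence}\n\n"
    "Is the sentence supported by the context above? Answer Yes or No.\n\n"
    "Answer: "
)


def prompt_score(
    sentence: str,
    samples: Sequence[str],
    ask_llm: Callable[[str], str],
) -> float:
    """Mean inconsistency over samples (assumed mapping: Yes -> 0.0, No -> 1.0, else 0.5)."""
    votes = []
    for sample in samples:
        reply = ask_llm(PROMPT_TEMPLATE.format(context=sample, sentence=sentence))
        words = reply.strip().lower().split()
        first = words[0].strip(".,:;!") if words else ""  # "No." -> "no", "Not sure" -> "not"
        if first == "yes":
            votes.append(0.0)
        elif first == "no":
            votes.append(1.0)
        else:
            votes.append(0.5)
    return sum(votes) / len(votes)
```

Swapping the evaluator only requires passing a different `ask_llm`. The loop makes one evaluator call per sample for every sentence, so each sentence costs $N$ calls. This is why the Prompt variant is the most expensive, and why the $N{=}4$ ablation is cheaper.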

8 Conclusions


This paper is the first work to consider the task of hallucination detection for general large language model responses. We propose SelfCheckGPT, a zero-resource approach that is applicable to any black-box LLM without the need for external resources, and demonstrate the efficacy of our method. SelfCheckGPT outperforms a range of grey-box and black-box baseline detection methods at both the sentence and passage levels. We further release an annotated dataset for GPT-3 hallucination detection with sentence-level factuality labels.


Limitations


In this study, the 238 GPT-3-generated texts were predominantly passages about individuals in the WikiBio dataset. To further investigate the nature of LLM hallucination, this study could be extended to a wider range of concepts, e.g., generated texts about locations and objects. Further, this work considers factuality at the sentence level, but a single sentence may contain both factual and non-factual information. For example, the subsequent work by Min et al. (2023) performs a fine-grained factuality evaluation by decomposing sentences into atomic facts. Finally, SelfCheckGPT with Prompt, clearly the best self-check method, is computationally heavy. Its cost may be impractical in some settings, and making it more efficient is left for future work.


Ethics Statement


As this work addresses the issue of LLM’s hallucination, we note that if hallucinated contents are not detected, they could lead to misinformation.


Acknowledgments


This work is supported by Cambridge University Press & Assessment (CUP&A), a department of The Chancellor, Masters, and Scholars of the University of Cambridge, and the Cambridge Commonwealth, European & International Trust. We would like to thank the anonymous reviewers for their helpful comments.


References


Amos Azaria and Tom Mitchell. 2023. The internal state of an LLM knows when it's lying. arXiv preprint arXiv:2304.13734.


Iz Beltagy, Matthew E. Peters, and Arman Cohan. 2020. Longformer: The long-document transformer.


Sidney Black, Stella Biderman, Eric Hallahan, Quentin Anthony, Leo Gao, Laurence Golding, Horace He, Connor Leahy, Kyle McDonell, Jason Phang, Michael Pieler, Usvsn Sai Prashanth, Shivanshu Purohit, Laria Reynolds, Jonathan Tow, Ben Wang, and Samuel Weinbach. 2022. GPT-NeoX-20B: An open-source autoregressive language model. In Proceedings of BigScience Episode #5 – Workshop on Challenges & Perspectives in Creating Large Language Models, pages 95–136, virtual+Dublin. Association for Computational Linguistics.


Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. 2020. Language models are few-shot learners. Advances in Neural Information Processing Systems, 33:1877–1901.


Aakanksha Chowdhery, Sharan Narang, Jacob Devlin, Maarten Bosma, Gaurav Mishra, Adam Roberts, Paul Barham, Hyung Won Chung, Charles Sutton, Sebastian Gehrmann, et al. 2022. PaLM: Scaling language modeling with pathways. arXiv preprint arXiv:2204.02311.
