Toward Efficient Language Model Pretraining and Downstream Adaptation via Self-Evolution: A Case Study on SuperGLUE

Abstract

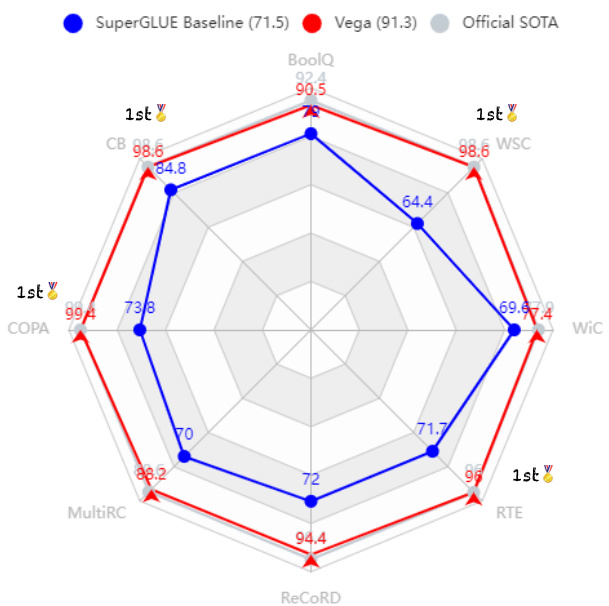

This technical report briefly describes our JDExplore d-team's Vega v2 submission to the SuperGLUE leaderboard. SuperGLUE is more challenging than the widely used General Language Understanding Evaluation (GLUE) benchmark, containing eight difficult language understanding tasks that cover question answering, natural language inference, word sense disambiguation, coreference resolution, and reasoning. [Method] Instead of arbitrarily increasing the size of a pretrained language model (PLM), our aim is to 1) fully extract knowledge from the input pretraining data given a certain parameter budget, e.g., 6B, and 2) effectively transfer this knowledge to downstream tasks. To achieve goal 1), we propose self-evolution learning for PLMs to wisely predict the informative tokens that should be masked, and supervise the masked language modeling (MLM) process with rectified smooth labels. For goal 2), we leverage the prompt transfer technique to improve the low-resource tasks by transferring knowledge from the foundation model and related downstream tasks to the target task. [Results] According to our submission record (Oct. 2022), with our optimized pretraining and fine-tuning strategies, our 6B Vega model achieved new state-of-the-art performance on 4 of the 8 tasks, sitting atop the SuperGLUE leaderboard on Oct. 8, 2022, with an average score of 91.3.

Figure 1: Vega v2 achieves state-of-the-art records on 4 out of 8 tasks among all submissions, producing the best average score of 91.3 and significantly outperforming the competitive official SuperGLUE baseline (Wang et al., 2019a, BERT$^{++}$).

1 Introduction

The last several years have witnessed notable progress across many natural language processing (NLP) tasks, led by pretrained language models (PLMs) such as bidirectional encoder representations from transformers (BERT) (Devlin et al., 2019), OpenAI GPT (Radford et al., 2019), and its most renowned evolution, GPT-3 (Brown et al., 2020). The unifying theme of these methods is that they conduct self-supervised learning on massive, easy-to-acquire unlabeled text corpora during the pretraining stage and are then fine-tuned effectively on a small amount of labeled data in the target tasks. This "pretraining then fine-tuning" paradigm has been widely adopted by academia and industry, and the main research and development direction involves scaling foundation models up to extremely large sizes, such as Google's 540B PaLM (Chowdhery et al., 2022b), to determine the upper capacity bounds of foundation models.

In this context, SuperGLUE (Wang et al., 2019a), a more challenging version of the general language understanding evaluation (GLUE) benchmark (Wang et al., 2018), has become the most influential and prominent evaluation benchmark for the foundation model community. Most high-performing models on the GLUE/SuperGLUE leaderboards bring new insights and better practices that help guide future research and applications.

We recently submitted our 6B Vega v2 model to the SuperGLUE leaderboard and, as seen in Figure 1, obtained state-of-the-art records on 4 out of 8 tasks, sitting atop the leaderboard as of Oct. 8, 2022, with an average score of 91.3. Encouragingly, our 6B model, with deliberately optimized pretraining and downstream adaptation strategies, substantially outperforms the 540B PaLM (Chowdhery et al., 2022b), showing the effectiveness and parameter efficiency of our Vega model. This technical report briefly describes how we build our model under a fixed parameter budget, i.e., 6B, from several aspects: the backbone framework (§2.1), the efficient pretraining process (§2.2), and the downstream adaptation approach (§2.3). To fully extract knowledge from the given pretraining data into PLMs, we propose a self-evolution learning mechanism (Figure 2) to wisely predict the informative tokens that should be masked and to supervise the masked language modeling process with rectified smooth labels. To effectively transfer the knowledge to different downstream tasks, especially low-resource tasks such as CB, COPA, and WSC, we design a knowledge distillation-based prompt transfer method (Zhong et al., 2022b) (Figure 3) to achieve better performance with improved robustness.

The remainder of this report is organized as follows. Section 2 introduces the main approaches we use. Section 3 reviews the task descriptions and data statistics and presents the experimental settings and major results. Section 4 concludes.

2 Approaches

In this section, we describe the main techniques in our model, including the backbone framework in $\S2.1$, the efficient pretraining approaches in $\S2.2$, and the downstream adaptation technique in $\S2.3$.

2.1 Backbone Framework

Vanilla transformers (Vaswani et al., 2017) enjoy appealing scalability as large-scale PLM backbones (Devlin et al., 2019; Raffel et al., 2020; Brown et al., 2020; Zan et al., 2022b); for example, T5 and GPT-3 flexibly scale their feed-forward dimensions and layers up to 65,534 and 96, respectively. We therefore employ a vanilla transformer, i.e., multi-head self-attention followed by a fully connected feed-forward network, as our major backbone framework. As encoder-only PLMs have an overwhelming advantage over the existing methods on the SuperGLUE leaderboard, we train our large model in an encoder-only fashion to facilitate downstream language understanding tasks. Given our PLM parameter budget of 6 billion, we empirically configure the model with 24 layers, a hidden size of 4096, an FFN size of 16,384, and 32 attention heads with a head size of 128. In addition, He et al. (2021) empirically demonstrated the necessity of computing self-attention with disentangled matrices based on token contents and relative positions, namely disentangled attention, which is adopted in Vega v2.
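
As a rough sanity check on the configuration above, the following sketch tallies the main weight matrices of a standard transformer encoder with these dimensions. It is a back-of-the-envelope estimate rather than the authors' own accounting: it assumes four hidden-by-hidden attention projections and two feed-forward matrices per layer, and it ignores biases, layer norms, embeddings, and the additional position projections used by disentangled attention. The function name `estimate_encoder_params` is hypothetical.

```python
def estimate_encoder_params(layers, hidden, ffn):
    """Rough count of weight-matrix parameters in a vanilla transformer encoder.

    Assumption: each layer has four hidden x hidden attention projections
    (query, key, value, output) and two hidden x ffn feed-forward matrices.
    Biases, layer norms, embeddings, and disentangled-attention position
    projections are deliberately left out.
    """
    attention = 4 * hidden * hidden
    feed_forward = 2 * hidden * ffn
    return layers * (attention + feed_forward)


# Configuration reported for Vega v2 in the text.
LAYERS, HIDDEN, FFN, HEADS, HEAD_SIZE = 24, 4096, 16384, 32, 128

# The head count times the head size should recover the hidden size.
heads_match_hidden = HEADS * HEAD_SIZE == HIDDEN

core_params = estimate_encoder_params(LAYERS, HIDDEN, FFN)
print(f"heads x head size == hidden size: {heads_match_hidden}")
print(f"core weight matrices: {core_params / 1e9:.2f}B parameters")
```

Under these simplifying assumptions the core matrices account for roughly 4.8B parameters; the components excluded from this estimate would presumably make up the rest of the 6B budget.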

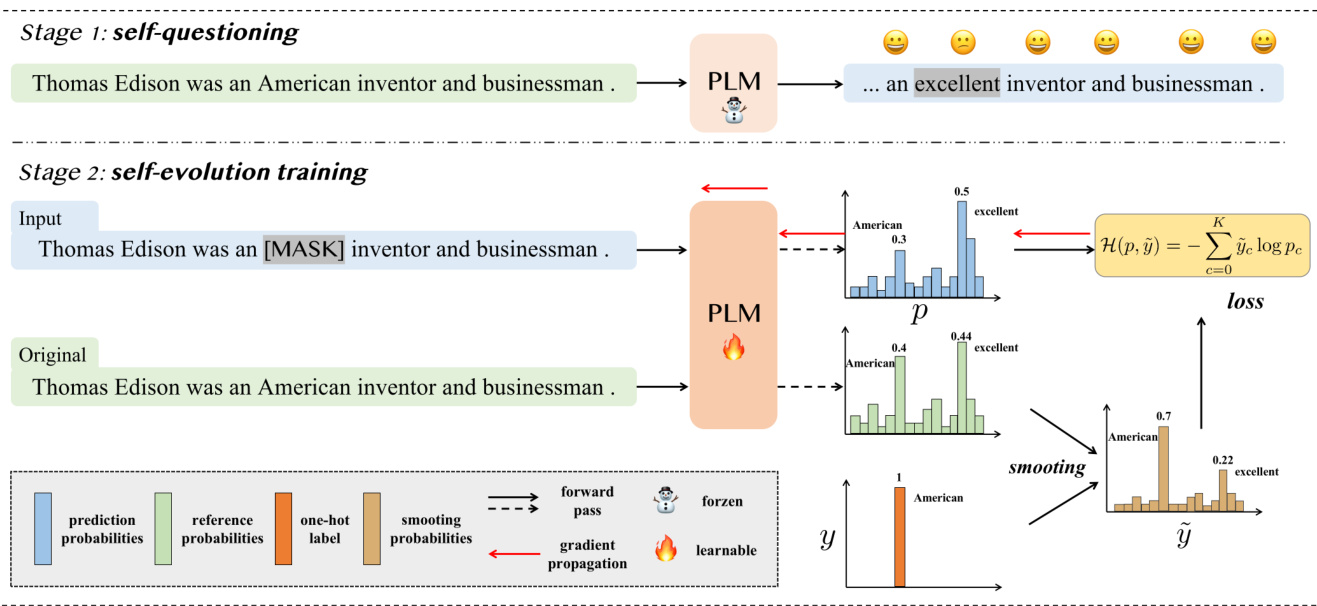

Figure 2: Overview of the proposed self-evolution learning mechanism for PLMs.

2.2 Efficient Pretraining

Recall that our aim is not to arbitrarily increase the model scale, but to help PLMs store the informative knowledge derived from the pretraining data. To approach this goal, we first revisit the representative self-supervision objective, masked language modeling (MLM) (Devlin et al., 2019), and then propose a novel self-evolution learning mechanism that enables our PLM to wisely predict the informative tokens that should be masked and trains the model with smooth self-evolution labels.

Masked Language Modeling. MLM is a widely used self-supervision objective for large-scale pretraining on large amounts of text to learn contextual word representations. In practice, MLM randomly selects a subset of tokens from a sentence and replaces them with a special mask token, i.e., [MASK]. However, such a random masking procedure is usually suboptimal, as the masked tokens are sometimes too easy to guess from local cues or shallow patterns alone. Hence, some prior works focused on more informative masking strategies, such as span-level masking (Joshi et al., 2020), entity-level masking (Sun et al., 2019), and pointwise mutual information (PMI)-based masking (Sadeq et al., 2022). These efforts achieved better performance than vanilla random masking, which inspires us to explore further approaches for fully extracting knowledge from pretraining data.

Self-Evolution Learning. Based on the above motivations, we propose a novel self-evolution learning mechanism for PLMs, as illustrated in Figure 2. Unlike prior works that designed masking strategies to train language models from scratch, our self-evolution learning approach encourages a given "naive" PLM to find patterns (tokens) that are informative but not yet learned well, and then to fix them. Specifically, our self-evolution learning mechanism has two stages, as follows.

Stage 1 is self-questioning. Given a vanilla PLM (e.g., one trained with the random masking objective), we first feed the original training samples into the PLM and make it re-predict the output probabilities for each token. As the PLM has seen these samples and learned from them in the pretraining stage, it can make deterministic and correct predictions for most of the tokens, which we denote as learned tokens. However, for some tokens, such as "American" in the sentence "Thomas Edison was an American inventor and businessman", the PLM tends to predict a different token, here "excellent" (the probability of "excellent" is 0.44, while the probability of "American" is 0.4). We attribute this phenomenon to the fact that the PLM has not learned this knowledge-intensive pattern and only makes its prediction based on local cues. We refer to these harder and more informative tokens as neglected tokens. After all training samples are fed into the PLM, we obtain a set of neglected tokens for each training sample. Note that this procedure is conducted offline and does not update the parameters of the original PLM.
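
The self-questioning step can be sketched in a few lines. The selection rule used here is an assumption made for illustration, since the text does not give the exact criterion: a token counts as neglected when the model's most likely candidate differs from the original token. The function name `find_neglected_tokens` and all probabilities other than those quoted for "American" and "excellent" are hypothetical.

```python
def find_neglected_tokens(tokens, predicted_probs):
    """Return (position, token, top_prediction) for tokens the model misses.

    tokens: list of original tokens.
    predicted_probs: one dict per position, mapping candidate -> probability.
    Assumption: a token is "neglected" when the model's most likely
    candidate differs from the original token.
    """
    neglected = []
    for position, (token, probs) in enumerate(zip(tokens, predicted_probs)):
        top_prediction = max(probs, key=probs.get)
        if top_prediction != token:
            neglected.append((position, token, top_prediction))
    return neglected


# Toy probabilities; only the two values for position 3 come from the text.
tokens = ["Edison", "was", "an", "American", "inventor"]
predicted_probs = [
    {"Edison": 0.90, "Tesla": 0.10},
    {"was": 0.95, "is": 0.05},
    {"an": 0.97, "the": 0.03},
    {"excellent": 0.44, "American": 0.40, "English": 0.16},
    {"inventor": 0.85, "engineer": 0.15},
]

# No model parameters are touched: this is a read-only, offline pass.
neglected = find_neglected_tokens(tokens, predicted_probs)
print(neglected)
```

A confidence or entropy threshold could replace the simple top-prediction test; which rule works best is an empirical question that this sketch does not settle.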

| | Algorithm 1: Transductive Fine-tuning |
| --- | --- |
| | Input: Fine-tuned (FT) model $M$, downstream seed data $D$ |
| | Output: Transductively FT model $M$ |
| 1 | $t := 0$ |
| 2 | while not converged do |
| 3 | Estimate $D$ with $M$ and get $D_M$ |
| 4 | Tune $M$ on $D \cup D_M$ and get $M'$, then $M = M'$ |
| 5 | $t$ += 1 |
| 6 | end |

Stage 2 is self-evolution training. Given the neglected tokens obtained in stage 1, we select them for masking and encourage the PLM to learn from these informative patterns, thus continuously improving its capability. Intuitively, we can make the PLM learn to predict these tokens by minimizing the loss between the predicted probabilities and the one-hot labels. However, neglected tokens are diverse: for a given masked token, there can be more than one reasonable prediction. If we force the PLM to promote one specified ground truth over all others, the other reasonable "ground truths" become false negatives that may plague the training process or cause a performance decrease (Li et al., 2022).

Hence, we propose a novel self-evolution training method to help the PLM learn from the informative tokens without damaging its diversification ability. In practice, we feed the masked sentence and the original sentence into the PLM and obtain the prediction probabilities $p$ and the reference probabilities $r$ for the [MASK] token, respectively. Then, we merge $r$ and the one-hot label $y$ as $\tilde{y}=(1-\alpha)y+\alpha r$, where $\tilde{y}$ denotes the smoothed label probabilities and $\alpha$ is a weighting factor that is empirically set to 0.5. Finally, we use the cross-entropy loss function to minimize the difference between $p$ and $\tilde{y}$. In this way, unlike the hard supervision of the one-hot labels $y$, the PLM can benefit more from the smooth and informative labels $\tilde{y}$.
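
To make the label merging concrete, the sketch below applies $\tilde{y}=(1-\alpha)y+\alpha r$ to a three-word toy vocabulary and evaluates the cross-entropy against a prediction $p$. The vocabulary, the values of $r$ and $p$, and the helper names `smooth_label` and `cross_entropy` are illustrative assumptions, not values or code from the report.

```python
import math


def smooth_label(one_hot, reference, alpha=0.5):
    """Merge the one-hot label y with the reference probabilities r:
    y_tilde = (1 - alpha) * y + alpha * r."""
    return [(1 - alpha) * y + alpha * r for y, r in zip(one_hot, reference)]


def cross_entropy(target, predicted):
    """Cross-entropy H(target, predicted) = -sum_i target_i * log(predicted_i)."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)


# Hypothetical three-word vocabulary: ["American", "excellent", "English"].
y = [1.0, 0.0, 0.0]       # one-hot label for "American"
r = [0.40, 0.44, 0.16]    # reference probabilities (illustrative)
p = [0.50, 0.35, 0.15]    # prediction at the [MASK] position (illustrative)

y_tilde = smooth_label(y, r, alpha=0.5)
loss_smooth = cross_entropy(y_tilde, p)
loss_one_hot = cross_entropy(y, p)

print("smoothed label:", [round(v, 2) for v in y_tilde])
print("loss with smoothed label:", round(loss_smooth, 4))
print("loss with one-hot label:", round(loss_one_hot, 4))
```

With $\alpha = 0.5$ the smoothed label keeps most of its mass on the ground-truth token while still assigning non-zero mass to the other plausible candidates, so the model is no longer pushed to drive their probabilities to zero.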

2.3 Downstream Adaptation

In addition to the above efficient pretraining methods, we also introduce some useful fine-tuning strategies for effectively adapting Vega v2 to downstream tasks. Specifically, two main problems hinder the adaptation performance of a model. 1) The first is the domain gap between the training and test sets, which leads to poor performance on the target test sets. 2) The second is limited training data: the CB task, for example, consists of only 250 training samples, and such limited data can hardly update all the parameters of a PLM effectively. Note that in addition to the strategies listed below, we also designed and implemented other methods from different perspectives to improve the generalization and efficiency of models, e.g., the FSAM optimizer for PLMs (Zhong et al., 2022c), SparseAdapter (He et al., 2022), and continued training with downstream data (Zan et al., 2022a). Although these approaches help to some extent, they do not provide complementary benefits on top of the listed approaches, so they are not described here.

Transductive Fine-tuning. Regarding the domain or linguistic style gap between the training and test sets (the first problem), we adopt a transductive fine-tuning strategy to improve the target domain performance, which is a common practice in machine translation evaluations (Wu et al., 2020; Ding and Tao, 2021) and some domain adaptation applications (Liu et al., 2020). The transductive fine-tuning technique is shown in Algorithm 1. Whether to conduct transductive fine-tuning depends on the downstream performance achieved in practice.
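
The control flow of Algorithm 1 can be sketched as a generic loop. Everything concrete here is an assumption made for illustration: the callables `estimate` and `tune`, the round budget that stands in for the unspecified convergence test, and the toy one-dimensional threshold "model" used to exercise the loop. None of it reflects how Vega v2 is actually fine-tuned.

```python
def transductive_fine_tune(model, seed_data, estimate, tune, max_rounds=5):
    """Generic loop following the structure of Algorithm 1.

    estimate(model, seed_data) -> model-labeled copy of the seed data (D_M)
    tune(model, data)          -> a newly tuned model (M')
    Assumption: convergence means the tuned model no longer changes,
    or the round budget is exhausted.
    """
    rounds = 0
    while rounds < max_rounds:
        estimated = estimate(model, seed_data)           # get D_M
        new_model = tune(model, seed_data + estimated)   # tune on D u D_M
        rounds += 1                                      # t += 1
        if new_model == model:                           # nothing changed
            break
        model = new_model                                # M = M'
    return model, rounds


# Toy stand-ins: the "model" is a decision threshold on a single feature.
def estimate(threshold, data):
    """Label every input with the current model's own prediction."""
    return [(x, int(x >= threshold)) for x, _ in data]


def tune(threshold, data):
    """Move the threshold to the midpoint between the two class means."""
    positives = [x for x, label in data if label == 1]
    negatives = [x for x, label in data if label == 0]
    if not positives or not negatives:
        return threshold
    pos_mean = sum(positives) / len(positives)
    neg_mean = sum(negatives) / len(negatives)
    return (pos_mean + neg_mean) / 2


seed_data = [(0.0, 0), (1.0, 0), (4.0, 1), (5.0, 1)]
final_threshold, rounds_used = transductive_fine_tune(
    0.5, seed_data, estimate, tune
)
print(final_threshold, rounds_used)
```

In a realistic setting `estimate` would run the fine-tuned PLM over the downstream inputs and `tune` would perform a fine-tuning pass; the loop structure is the only part this sketch is meant to convey.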

Prompt-Tuning. To address the second problem, we replace the vanilla fine-tuning process with a more parameter-efficient method, prompt-tuning (Lester et al., 2021), for low-resource tasks. Despite the success of prompts in many NLU tasks (Wang et al., 2022; Zhong et al., 2022a), directly using prompt-tuning might lead to poor results, as this approach is sensitive to the initialization of the prompt's parameters (Zhong et al., 2022b). An intuitive approach, termed prompt transfer (Vu et al., 2022), is to initialize the prompt on the target task with the trained prompts from similar source tasks. Unfortunately, such a vanilla prompt transfer approach usually achieves suboptimal performance, as (i) the prompt transfer process is highly dependent on the similarity of the source-target pair and (ii) directly tuning a prompt initialized with the source prompt on the target task might lead to forgetting the useful general knowledge learned from the source task.

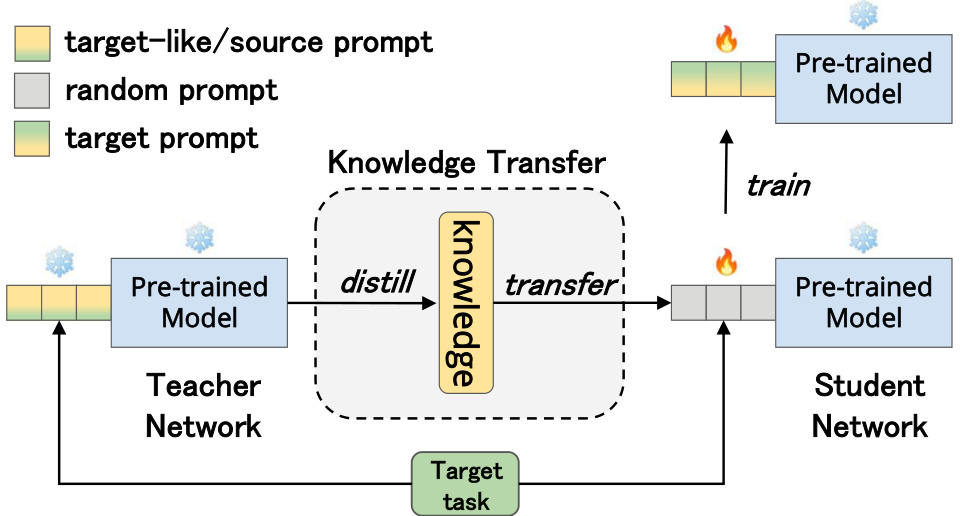

Figure 3: The architecture of our proposed KD-based prompt transfer method.

To this end, we introduce a novel prompt transfer framework (Zhong et al., 2022b) to tackle the above problems. For (i), we propose a new metric to accurately predict prompt transfer ability. In practice, the metric first maps the source and target tasks into a shared semantic space to obtain their task embeddings based on the source and target soft prompts, and then measures the prompt transfer ability via the similarity of the corresponding task embeddings. In our preliminary experiments, we found that this metric could appropriately choose which source tasks should be used for a target task. For instance, when performing prompt transfer among the SuperGLUE tasks, WSC is a better source task for the CB task, while COPA benefits more from the RTE task.
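
The idea of ranking source tasks by task-embedding similarity can be sketched as follows. The mapping into the shared semantic space is not specified in the text, so this example assumes the simplest possible choice: mean pooling over the soft prompt's token vectors, followed by cosine similarity. The helper names, the task names `source_a` and `source_b`, and the prompt values are all made up for illustration.

```python
import math


def task_embedding(soft_prompt):
    """Average the prompt token vectors into a single task embedding.
    Assumption: mean pooling; the actual mapping is not given in the text."""
    length = len(soft_prompt)
    dim = len(soft_prompt[0])
    return [sum(vec[i] for vec in soft_prompt) / length for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_source_tasks(target_prompt, source_prompts):
    """Sort source task names by similarity to the target, best first."""
    target = task_embedding(target_prompt)
    scores = {
        name: cosine_similarity(target, task_embedding(prompt))
        for name, prompt in source_prompts.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Made-up 2-token, 3-dimensional soft prompts, for illustration only.
target_prompt = [[1.0, 0.0, 1.0], [1.0, 0.0, 1.0]]
source_prompts = {
    "source_a": [[0.9, 0.1, 1.1], [1.1, -0.1, 0.9]],  # close to the target
    "source_b": [[0.0, 1.0, 0.0], [0.0, 1.0, 0.2]],   # nearly orthogonal
}

ranking = rank_source_tasks(target_prompt, source_prompts)
print(ranking)
```

The highest-ranked source task would then supply the prompt used to initialize, or in the KD-based variant to teach, the target prompt.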

Regarding (ii), inspired by the knowledge distillation (KD) paradigm (Hinton et al., 2015; Liu et al., 2021; Rao et al., 2022) that leverages a powerful teacher model to guide the training process of a student model, we propose a KD-based prompt transfer method that leverages the KD technique to transfer the knowledge from the source prompt to the target prompt in a subtle manner, thus effectively alleviating the problem of prior knowledge forgetting. An illustration of our proposed method is shown in Figure 3. More specifically, our KD-based prompt transfer approach first uses the PLM with the source prompt as the teacher network and the PLM with the randomly initialized prompt as the student network. Then, the student network is trained using the supervision signals from both the ground-truth labels in the target task and the soft targets predicted by the te