From Matching to Generation: A Survey on Generative Information Retrieval

从匹配到生成:生成式信息检索综述

XIAOXI LI and JIAJIE JIN, Renmin University of China, China YUJIA ZHOU, Tsinghua University, China YUYAO ZHANG and PEITIAN ZHANG, Renmin University of China, China YUTAO ZHU and ZHICHENG DOU∗, Renmin University of China, China

李晓曦和靳佳杰,中国人民大学,中国

周雨佳,清华大学,中国

张雨瑶和张沛天,中国人民大学,中国

朱玉涛和窦志成*,中国人民大学,中国

Information Retrieval (IR) systems are crucial tools for users to access information, which have long been dominated by traditional methods relying on similarity matching. With the advancement of pre-trained language models, generative information retrieval (GenIR) emerges as a novel paradigm, attracting increasing attention. Based on the form of information provided to users, current research in GenIR can be categorized into two aspects: (1) Generative Document Retrieval (GR) leverages the generative model’s parameters for memorizing documents, enabling retrieval by directly generating relevant document identifiers without explicit indexing. (2) Reliable Response Generation employs language models to directly generate information users seek, breaking the limitations of traditional IR in terms of document granularity and relevance matching while offering flexibility, efficiency, and creativity to meet practical needs. This paper aims to systematically review the latest research progress in GenIR. We will summarize the advancements in GR regarding model training and structure, document identifier, incremental learning, etc., as well as progress in reliable response generation in aspects of internal knowledge memorization, external knowledge augmentation, etc. We also review the evaluation, challenges and future developments in GenIR systems. This review aims to offer a comprehensive reference for researchers, encouraging further development in the GenIR field. 1

信息检索 (IR) 系统是用户获取信息的关键工具,长期以来一直由依赖相似性匹配的传统方法主导。随着预训练语言模型的发展,生成式信息检索 (GenIR) 作为一种新范式出现,吸引了越来越多的关注。根据向用户提供信息的形式,当前 GenIR 的研究可分为两个方面:(1) 生成式文档检索 (GR) 利用生成模型的参数记忆文档,无需显式索引即可通过直接生成相关文档标识符实现检索。(2) 可靠响应生成利用语言模型直接生成用户寻求的信息,打破传统 IR 在文档粒度和相关性匹配方面的限制,同时提供灵活性、效率和创造性以满足实际需求。本文旨在系统梳理 GenIR 的最新研究进展。我们将总结 GR 在模型训练与结构、文档标识符、增量学习等方面的进展,以及可靠响应生成在内部知识记忆、外部知识增强等方面的进展。我们还回顾了 GenIR 系统的评估、挑战与未来发展。本综述旨在为研究者提供全面参考,推动 GenIR 领域的进一步发展。[1]

CCS Concepts: • Information systems $\rightarrow$ Retrieval models and ranking.

CCS概念:• 信息系统 $\rightarrow$ 检索模型与排序

Additional Key Words and Phrases: Generative Information Retrieval; Generative Document Retrieval; Reliable Response Generation

附加关键词与短语:生成式信息检索 (Generative Information Retrieval);生成式文档检索 (Generative Document Retrieval);可靠响应生成

1 INTRODUCTION

1 引言

Information retrieval (IR) systems are crucial for navigating the vast sea of online information in today’s digital landscape. From using search engines such as Google [76], Bing [196], and Baidu [209], to engaging with question-answering or dialogue systems like ChatGPT [209] and Bing Chat [197], and discovering content via recommendation platforms like Amazon [4] and

信息检索 (IR) 系统在当今数字环境中对海量在线信息的导航至关重要。从使用 Google [76]、Bing [196] 和百度 [209] 等搜索引擎,到与 ChatGPT [209] 和 Bing Chat [197] 等问答或对话系统互动,再到通过 Amazon [4] 等内容推荐平台发现信息

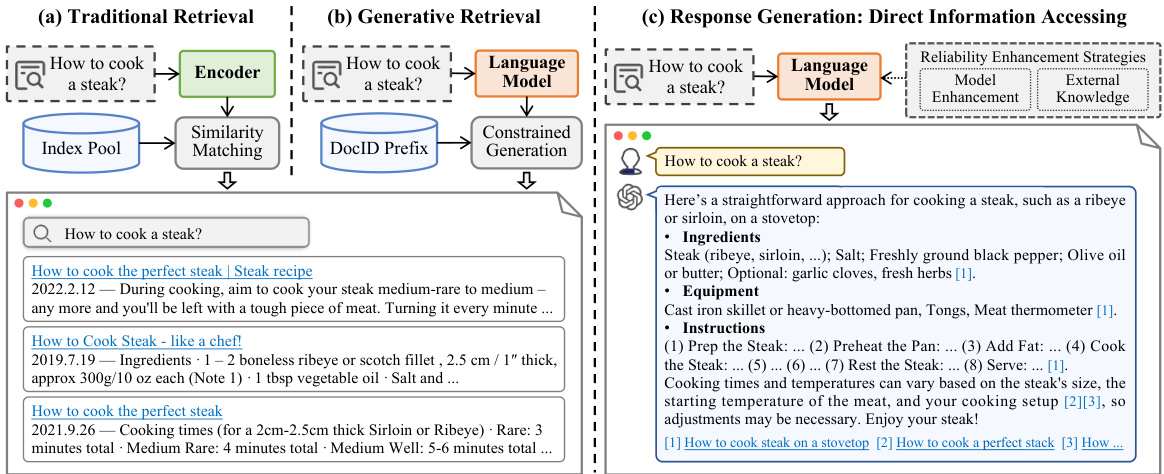

Fig. 1. Exploring IR Evolution: From Traditional to Generative Methods - This diagram illustrates the shift from traditional similarity-based document matching (a) to GenIR techniques. Current GenIR methods can be categorized into two types: generative retrieval (b), which retrieves documents by directly generating relevant DocIDs constrained by a DocID prefix tree; and response generation (c), which directly generates reliable and user-centric answers.

图 1: 探索信息检索(IR)演进:从传统方法到生成式方法 - 该图展示了从基于相似度的传统文档匹配(a)到生成式信息检索(GenIR)技术的转变。当前GenIR方法可分为两类:生成式检索(b)通过受文档ID前缀树约束直接生成相关DocID来检索文档;响应生成(c)直接生成可靠且以用户为中心的答案。

YouTube [77], IR technologies are integral to our everyday online experiences. These systems are reliable and play a key role in spreading knowledge and ideas globally.

YouTube [77],信息检索(IR)技术已成为我们日常在线体验不可或缺的组成部分。这些系统稳定可靠,在全球知识传播和思想交流中发挥着关键作用。

Traditional IR systems primarily rely on sparse retrieval methods based on word-level matching. These methods, which include Boolean Retrieval [242], BM25 [238], SPLADE [65], and UniCOIL [163], establish connections between vocabulary and documents, offering high retrieval efficiency and robust system performance. With the rise of deep learning, dense retrieval methods such as DPR [117] and ANCE [324], based on the bidirectional encoding representations from the BERT model [121], capture the deep semantic information of documents, significantly improving retrieval precision. Although these methods have achieved leaps in accuracy, they rely on large-scale document indices [57, 187] and cannot be optimized in an end-to-end way. Moreover, when people search for information, what they really need is a precise and reliable answer. This document ranking list-based IR approach still requires users to spend time summarizing their required answers, which is not ideal enough for information seeking [195].

传统信息检索(IR)系统主要依赖于基于词级匹配的稀疏检索方法。这些方法包括布尔检索(Boolean Retrieval)[242]、BM25 [238]、SPLADE [65]和UniCOIL [163],它们建立了词汇与文档之间的联系,具有高检索效率和强大的系统性能。随着深度学习的兴起,基于BERT模型(Bidirectional Encoder Representations from Transformers)[121]的双向编码表示的密集检索方法,如DPR [117]和ANCE [324],能够捕捉文档的深层语义信息,显著提高了检索精度。尽管这些方法在准确性上实现了飞跃,但它们依赖于大规模文档索引[57, 187],且无法以端到端的方式进行优化。此外,当人们搜索信息时,真正需要的是一个精确可靠的答案。这种基于文档排序列表的信息检索方法仍然需要用户花费时间总结所需答案,对于信息寻求来说还不够理想[195]。

With the development of Transformer-based pre-trained language models such as T5 [231], BART [138], and GPT [228], they have demonstrated their strong text generation capabilities. In recent years, large language models (LLMs) have brought about revolutionary changes in the field of AI-generated content (AIGC) [19, 359]. Based on large pre-training corpora and advanced training techniques like RLHF [36], LLMs [8, 105, 209, 286] have made significant progress in natural language tasks, such as dialogue [209, 282] and question answering [174, 225]. The rapid development of LLMs is transforming IR systems, giving rise to a new paradigm of generative information retrieval (GenIR), which achieves IR goals through generative approaches.

随着基于Transformer的预训练语言模型(如T5 [231]、BART [138]和GPT [228])的发展,它们展现了强大的文本生成能力。近年来,大语言模型(LLM)为AI生成内容(AIGC)领域带来了革命性变革[19, 359]。基于大规模预训练语料和RLHF [36]等先进训练技术,大语言模型[8, 105, 209, 286]在对话[209, 282]、问答[174, 225]等自然语言任务中取得显著进展。大语言模型的快速发展正在重塑信息检索(IR)系统,催生出通过生成式方法实现IR目标的新范式——生成式信息检索(GenIR)。

As envisioned by Metzler et al. [195], in order to build an IR system that can respond like a domain expert, the system should not only provide accurate responses but also include source citations to ensure the credibility of the results. To achieve this, GenIR models must possess both sufficient memorized knowledge and the ability to recall the associations between knowledge and source documents, which could be the final goal of GenIR systems. Currently, research in GenIR primarily focuses on two main patterns: (1) Generative Document Retrieval (GR), which involves retrieving documents by generating their identifiers; and (2) Reliable Response Generation, which entails directly generating user-centric responses with reliability enhancement strategies. Noting that although these two methods have not yet been integrated technically, they represent two primary forms by which IR systems present information to users in generative manners: either by generating lists of document identifiers or by generating reliable and user-centric responses. Figure 1 illustrates the difference between these two forms. These strategies are essential to the next generation of information retrieval and constitute the central focus of this survey.

正如 Metzler 等人 [195] 所设想的那样,要构建一个能像领域专家一样响应的信息检索 (IR) 系统,该系统不仅要提供准确的响应,还应包含来源引用以确保结果的可信度。为实现这一目标,生成式信息检索 (GenIR) 模型必须同时具备足够的记忆知识以及回忆知识与源文档关联的能力,这可能是 GenIR 系统的终极目标。目前 GenIR 的研究主要聚焦于两种模式:(1) 生成式文档检索 (Generative Document Retrieval, GR),即通过生成文档标识符来检索文档;(2) 可靠响应生成,即通过可靠性增强策略直接生成以用户为中心的响应。值得注意的是,虽然这两种方法在技术上尚未融合,但它们代表了信息检索系统以生成方式向用户呈现信息的两种主要形式:要么生成文档标识符列表,要么生成可靠且以用户为中心的响应。图 1 展示了这两种形式的区别。这些策略对下一代信息检索至关重要,也是本综述的核心焦点。

Generative document retrieval, a new retrieval paradigm based on generative models, is garnering increasing attention. This approach leverages the parametric memory of generative models to directly generate document identifiers (DocIDs) related to the documents [18, 281, 307, 371]. Figure 1 illustrates this transition, where traditional IR systems match queries to documents based on an indexed database (Figure 1(a)), while generative methods use language models to retrieve by directly generating relevant document identifiers (Figure 1(b)). Specifically, GR assigns a unique identifier to each document, which can be numeric-based or text-based, and then trains a generative retrieval model to learn the mapping from queries to the relevant DocIDs. This allows the model to index documents using its internal parameters. During inference, GR models use constrained beam search to limit the generated DocIDs to be valid within the corpus, ranking them based on generation probability to produce a ranked list of DocIDs. This eliminates the need for large-scale document indexes in traditional methods, enabling end-to-end training of the model.

生成式文档检索 (Generative document retrieval) 是一种基于生成模型的新型检索范式,正受到越来越多的关注。该方法利用生成模型的参数化记忆直接生成与文档相关的文档标识符 (DocIDs) [18, 281, 307, 371]。图 1 展示了这一转变:传统信息检索系统通过索引数据库匹配查询与文档 (图 1(a)),而生成式方法则通过语言模型直接生成相关文档标识符进行检索 (图 1(b))。具体而言,生成式检索会为每个文档分配唯一标识符 (可以是数字或文本形式),然后训练生成式检索模型学习从查询到相关 DocIDs 的映射关系,使模型能够利用内部参数对文档建立索引。在推理阶段,生成式检索模型通过约束束搜索将生成的 DocIDs 限制在语料库有效范围内,并根据生成概率进行排序,最终输出排序后的 DocIDs 列表。这种方法消除了传统方法对大规模文档索引的依赖,实现了模型的端到端训练。

Recent studies on generative retrieval have delved into model training and structure [6, 153, 281, 307, 365, 369, 372], document identifier design [18, 265, 281, 288, 330], continual learning on dynamic corpora [80, 124, 192], downstream task adaptation [27, 28, 152], multi-modal generative retrieval [157, 178, 357], and generative recommend er systems [74, 233, 304]. The progress in GR is shifting retrieval systems from matching to generation. It has also led to the emergence of workshops [10] and tutorials [279]. However, there is currently no comprehensive review that systematically organizes the research, challenges, and prospects of this emerging field.

近期关于生成式检索的研究深入探讨了模型训练与结构[6,153,281,307,365,369,372]、文档标识符设计[18,265,281,288,330]、动态语料库的持续学习[80,124,192]、下游任务适配[27,28,152]、多模态生成式检索[157,178,357]以及生成式推荐系统[74,233,304]。生成式检索的进步正推动检索系统从匹配转向生成,并催生了专题研讨会[10]和教程[279]。然而,目前尚无系统梳理这一新兴领域研究进展、挑战与前景的全面综述。

Reliable response generation is also a promising direction in the IR field, offering usercentric and accurate answers that directly meet users’ needs. Since LLMs are particularly adept at following instructions [359], capable of generating customized responses, and can even cite the knowledge sources [204, 223], making direct response generation a new and intuitive way to access information [54, 75, 241, 315, 367]. As illustrated in Figure 1, the generative approach marks a significant shift from traditional IR systems, which return a ranked list of documents (as shown in Figure 1(a,b)). Instead, response generation methods (depicted in Figure 1(c)) offer a more dynamic form of information access by directly generating detailed, user-centric responses, thereby providing a richer and more immediate understanding of the information need behind the users’ queries.

可靠响应生成也是信息检索(IR)领域一个极具前景的方向,它能提供以用户为中心且精准的答案,直接满足用户需求。由于大语言模型(LLM)特别擅长遵循指令[359],能够生成定制化响应,甚至可以引用知识来源[204, 223],这使得直接响应生成成为了一种新颖直观的信息获取方式[54, 75, 241, 315, 367]。如图1所示,这种生成式方法标志着与传统IR系统的重大转变——传统系统返回的是文档排序列表(如图1(a,b)所示),而响应生成方法(如图1(c)所示)通过直接生成详细的、以用户为中心的响应,提供了更具动态性的信息获取形式,从而使用户能更丰富、更即时地理解查询背后的信息需求。

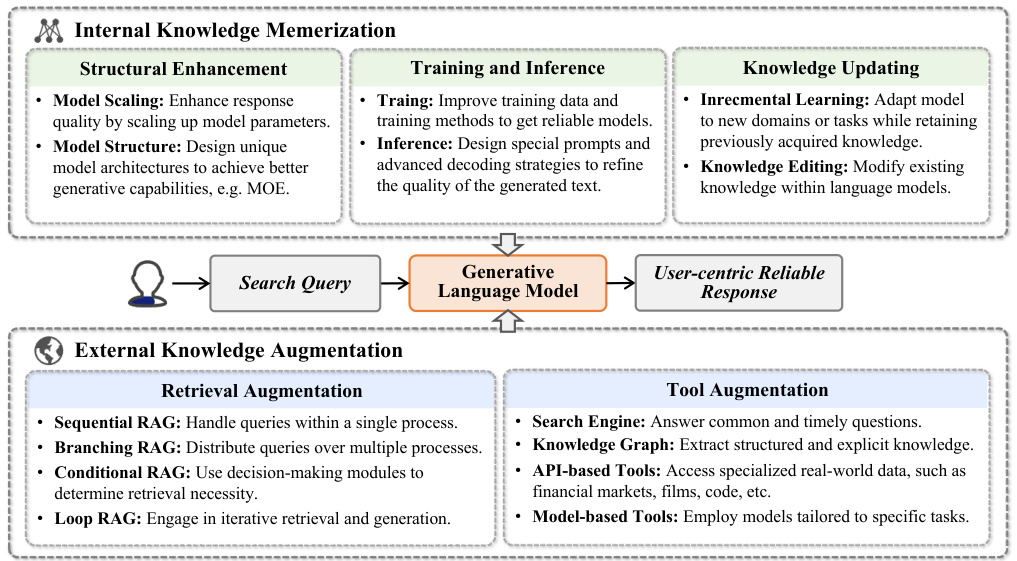

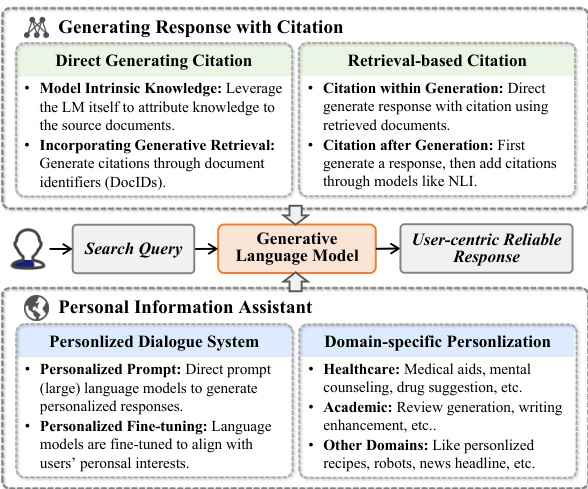

However, the responses generated by language models may not always be reliable. They have the potential to generate irrelevant answers [85], contradict factual information [90, 104], provide outdated data [291], or generate toxic content [93, 263]. Consequently, these limitations render them unsuitable for many scenarios that require accurate and up-to-date information. To address these challenges, the academic community has developed strategies across four key aspects: enhancing internal knowledge [16, 37, 56, 119, 132, 193, 243, 267, 285]; augmenting external knowledge [5, 113, 139, 151, 204, 245, 333]; generating responses with citation [129, 142, 156, 204, 314]; and improving personal information assistance [149, 172, 295, 327]. Despite these efforts, there is still a lack of a systematic review that organizes the existing research under this new paradigm of generative information access.

然而,语言模型生成的回答并不总是可靠的。它们可能产生无关答案[85]、与事实矛盾[90, 104]、提供过时数据[291]或生成有害内容[93, 263]。这些局限性使其难以适用于需要精准时效信息的场景。针对这些问题,学术界从四个关键方向提出了解决方案:增强内部知识[16, 37, 56, 119, 132, 193, 243, 267, 285];扩充外部知识[5, 113, 139, 151, 204, 245, 333];生成带引用的回答[129, 142, 156, 204, 314];以及改进个人信息辅助[149, 172, 295, 327]。尽管已有这些探索,目前仍缺乏系统性综述来梳理生成式信息获取新范式下的研究成果。

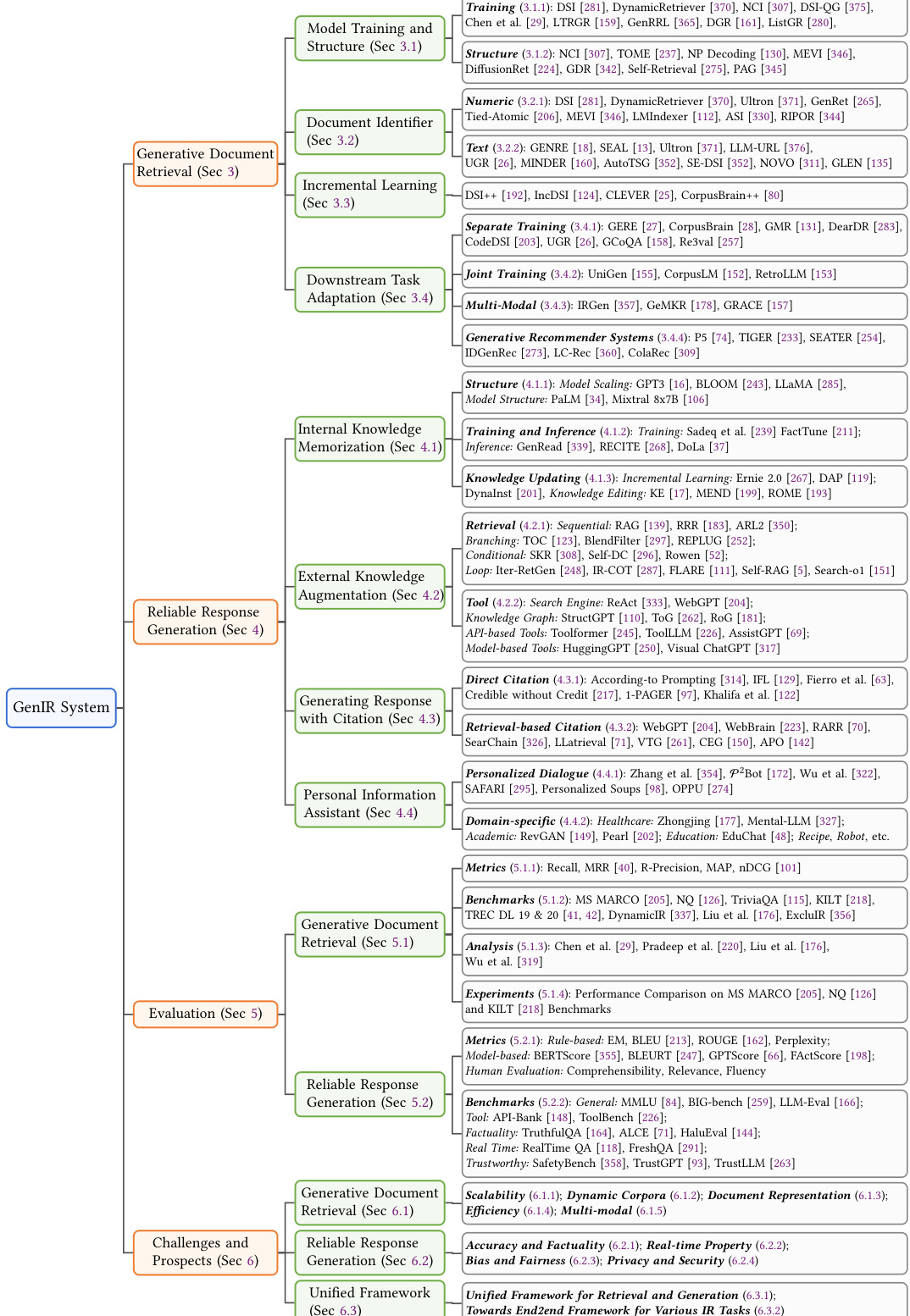

This review will systematically review the latest research progress and future developments in the field of GenIR, as shown in Figure 2, which displays the classification of research related to the GenIR system. We will introduce background knowledge in Section 2, generative document retrieval technologies in Section 3, direct information accessing with generative language models in Section 4, evaluation in Section 5, current challenges and future directions in Section 6, respectively. Section 7 will summarize the content of this review. This article is the first to systematically organize the research, evaluation, challenges and prospects of generative IR, while also looking forward to the potential and importance of GenIR’s future development. Through this review, readers will gain a deep understanding of the latest progress in developing GenIR systems and how it shapes the future of information access. The main contribution of this survey is summarized as follows:

本综述将系统梳理GenIR领域的最新研究进展与未来发展,如图2所示,该图展示了GenIR系统相关研究的分类。我们将在第2节介绍背景知识,第3节讨论生成式文档检索技术,第4节探讨基于生成式语言模型的直接信息获取,第5节分析评估方法,第6节分别阐述当前挑战与未来方向。第7节将总结本综述内容。本文首次系统梳理了生成式IR的研究、评估、挑战与前景,同时展望了GenIR未来发展的潜力与重要性。通过本综述,读者将深入了解开发GenIR系统的最新进展及其如何塑造信息获取的未来。本调查的主要贡献总结如下:

Fig. 2. Taxonomy of research on generative information retrieval: investigating generative document retrieval, reliable response generation, evaluation, challenges and prospects.

图 2: 生成式信息检索研究分类:涵盖生成式文档检索、可靠响应生成、评估方法、挑战与前景。

• First comprehensive survey on generative information retrieval (GenIR): This survey is the first to comprehensively organize the techniques, evaluation, challenges, and prospects on the emerging field of GenIR, providing a deep understanding of the latest progress in developing GenIR systems and its future in shaping information access. • Systematic categorization and in-depth analysis: The survey offers a systematic categorization of research related to GenIR systems, including generative document retrieval, reliable response generation. It provides an in-depth analysis of each category, covering model training and structure, document identifier, etc. in generative document retrieval; internal knowledge memorization, external knowledge enhancement, etc. for reliable response generation. • Comprehensive review of evaluation metrics and benchmarks: The survey reviews a range of widely used evaluation metrics and benchmark datasets for accessing GenIR methods, alongside analysis on the effectiveness and weaknesses of existing GenIR methods. • Discussions of current challenges and future directions: The survey identifies and discusses the current challenges faced in the GenIR field. We also provide potential solutions for each challenge and outline future research directions for building GenIR systems.

• 首次关于生成式信息检索 (Generative Information Retrieval, GenIR) 的综合综述:本综述首次全面梳理了新兴领域GenIR的技术、评估、挑战与前景,深入阐释了GenIR系统开发的最新进展及其在重塑信息获取方式的未来潜力。

• 系统性分类与深度分析:综述对GenIR相关研究进行了系统分类,包括生成式文档检索、可靠响应生成等方向。针对每类研究提供深度分析,涵盖生成式文档检索中的模型训练与结构、文档标识符等技术细节;可靠响应生成中的内部知识记忆、外部知识增强等关键方法。

• 评估指标与基准的全面评述:综述系统梳理了评估GenIR方法的常用指标和基准数据集,并分析了现有GenIR方法的有效性与局限性。

• 当前挑战与未来方向探讨:综述明确指出了GenIR领域面临的挑战,针对每项挑战提出潜在解决方案,并规划了构建GenIR系统的未来研究方向。

2 BACKGROUND AND PRELIMINARIES

2 背景与基础知识

Information retrieval techniques aim at efficiently obtaining, processing, and understanding information from massive data. Technological advancements have continuously driven the evolution of these methods: from early keyword-based sparse retrieval to deep learning-based dense retrieval, and more recently, to generative retrieval, large language models, and their augmentation techniques. Each advancement enhances retrieval accuracy and efficiency, catering to the complex and diverse query needs of users.

信息检索技术旨在从海量数据中高效获取、处理和理解信息。技术进步持续推动着这些方法的演进:从早期基于关键词的稀疏检索,到基于深度学习的稠密检索,再到最近的生成式检索、大语言模型及其增强技术。每一次进步都提升了检索的准确性和效率,以满足用户复杂多样的查询需求。

2.1 Traditional Information Retrieval

2.1 传统信息检索

Sparse Retrieval. In the field of traditional information retrieval, sparse retrieval techniques implement fast and accurate document retrieval through the inverted index method. Inverted indexing technology maps each unique term to a list of all documents containing that term, providing an efficient means for information retrieval in large document collections. Among these methods, TF-IDF (Term Frequency-Inverse Document Frequency) [235] is a particularly important statistical tool used to assess the importance of a word in a document collection, thereby widely applied in various traditional retrieval systems.

稀疏检索 (Sparse Retrieval)。在传统信息检索领域,稀疏检索技术通过倒排索引 (inverted index) 方法实现快速精准的文档检索。倒排索引技术将每个唯一词项映射到包含该词项的所有文档列表,为海量文档集合中的信息检索提供了高效手段。其中,TF-IDF (词频-逆文档频率) [235] 是评估词项在文档集合中重要性的重要统计工具,因而被广泛应用于各类传统检索系统。

The core of sparse retrieval technology lies in evaluating the relevance between documents and user queries. Specifically, given a document collection $\mathcal{D}$ and a user query $q$ , traditional information retrieval systems identify and retrieve information by calculating the relevance $\mathcal{R}$ between document $d$ and query $q$ . This relevance evaluation typically relies on the similarity measure between document $d$ and query $q$ , as shown below:

稀疏检索技术的核心在于评估文档与用户查询之间的相关性。具体而言,给定文档集合 $\mathcal{D}$ 和用户查询 $q$,传统信息检索系统通过计算文档 $d$ 与查询 $q$ 之间的相关性 $\mathcal{R}$ 来识别并检索信息。这种相关性评估通常依赖于文档 $d$ 与查询 $q$ 之间的相似性度量,如下所示:

$$

\mathcal{R}(q,d)=\sum_{t\in q\cap d}\operatorname{tf-idf}(t,d)\cdot\operatorname{tf-idf}(t,q),

$$

$$

\mathcal{R}(q,d)=\sum_{t\in q\cap d}\operatorname{tf-idf}(t,d)\cdot\operatorname{tf-idf}(t,q),

$$

ACM Trans. Inf. Syst., Vol. 1, No. 1, Article . Publication date: March 2025.

ACM Trans. Inf. Syst.,第1卷,第1期,文章。出版日期:2025年3月。

where 𝑡 represents the terms common to both query $q$ and document $d$ , and tf-idf $(t,d)$ and tf $\operatorname{idf}(t,q)$ represent the TF-IDF weights of term $t$ in document $d$ and query $q$ , respectively. Although sparse retrieval methods like TF-IDF [235] and BM25 [238] excel at fast retrieval, it struggles with complex queries involving synonyms, specialized terms, or context, as term matching and TF-IDF may not fully meet users’ information needs [180].

其中,𝑡表示查询$q$和文档$d$共有的词项,tf-idf$(t,d)$和tf$\operatorname{idf}(t,q)$分别表示词项$t$在文档$d$和查询$q$中的TF-IDF权重。尽管TF-IDF [235]和BM25 [238]等稀疏检索方法擅长快速检索,但对于涉及同义词、专业术语或上下文的复杂查询,由于词项匹配和TF-IDF可能无法完全满足用户的信息需求 [180],这类方法仍存在不足。

Dense Retrieval. The advent of pre-trained language models like BERT [121] has revolutionized information retrieval, leading to the development of dense retrieval methods, like DPR [117], ANCE [324], E5 [298], SimLM [299]. Unlike traditional sparse retrieval, these methods leverage Transformer-based encoders to create dense vector representations for both queries and documents. This approach enhances the capability to grasp the underlying semantics, thereby improving retrieval accuracy.

稠密检索 (Dense Retrieval)。BERT [121] 等预训练语言模型的出现彻底改变了信息检索领域,催生了 DPR [117]、ANCE [324]、E5 [298]、SimLM [299] 等稠密检索方法。与传统稀疏检索不同,这些方法利用基于 Transformer 的编码器为查询和文档生成稠密向量表示。该方法增强了对底层语义的捕捉能力,从而提高了检索准确性。

The core of dense retrieval lies in converting documents and queries into vector representations. Given document $d$ and query $q$ and their vector representations $\mathbf{v}_ {q}$ , each document $d$ is transformed into a dense vector $\mathbf{v}_ {d}$ through a pre-trained language model, similarly, query $q$ is transformed into vector $\mathbf{v}_ {q}$ . Specifically, we can use encoder functions $E_{d}(\cdot)$ and $E_{q}(\cdot)$ to represent the encoding process for documents and queries, respectively:

密集检索的核心在于将文档和查询转化为向量表示。给定文档 $d$ 和查询 $q$ 及其向量表示 $\mathbf{v}_ {q}$,每个文档 $d$ 通过预训练语言模型转换为密集向量 $\mathbf{v}_ {d}$,类似地,查询 $q$ 转换为向量 $\mathbf{v}_ {q}$。具体而言,我们可以使用编码函数 $E_{d}(\cdot)$ 和 $E_{q}(\cdot)$ 分别表示文档和查询的编码过程:

$$

\mathbf{v}_ {d}=E_{d}(d),\quad\mathbf{v}_ {q}=E_{q}(q),

$$

$$

\mathbf{v}_ {d}=E_{d}(d),\quad\mathbf{v}_ {q}=E_{q}(q),

$$

where $E_{d}(\cdot)$ and $E_{q}(\cdot)$ can be the same model or different models optimized for specific tasks.

其中 $E_{d}(\cdot)$ 和 $E_{q}(\cdot)$ 可以是相同模型,也可以是针对特定任务优化的不同模型。

Dense retrieval methods evaluate relevance by calculating the similarity between the query vector and document vector, which can be measured by cosine similarity, expressed as follows:

密集检索方法通过计算查询向量和文档向量之间的相似度来评估相关性,可采用余弦相似度衡量,表达式如下:

$$

\mathcal{R}(q,d)=\cos({\mathbf{v}_ {q}},{\mathbf{v}_ {d}})=\frac{{\mathbf{v}_ {q}}\cdot\mathbf{v}_ {d}}{|\mathbf{v}_ {q}||\mathbf{v}_{d}|},

$$

$$

\mathcal{R}(q,d)=\cos({\mathbf{v}_ {q}},{\mathbf{v}_ {d}})=\frac{{\mathbf{v}_ {q}}\cdot\mathbf{v}_ {d}}{|\mathbf{v}_ {q}||\mathbf{v}_{d}|},

$$

where $\mathbf{v}_ {q}\cdot\mathbf{v}_ {d}$ represents the dot product of query vector $\mathbf{v}_ {q}$ and document vector ${\bf v}_ {d}$ , and $|\mathbf{v}_ {q}|$ and $|\mathbf{v}_{d}|$ respectively represent the magnitudes of the query and document vector. Finally, documents are ranked based on these similarity scores to identify the most relevant ones for the user.

其中 $\mathbf{v}_ {q}\cdot\mathbf{v}_ {d}$ 表示查询向量 $\mathbf{v}_ {q}$ 和文档向量 ${\bf v}_ {d}$ 的点积,$|\mathbf{v}_ {q}|$ 和 $|\mathbf{v}_{d}|$ 分别表示查询向量和文档向量的模长。最后根据这些相似度得分对文档进行排序,从而为用户找出最相关的文档。

2.2 Generative Retrieval

2.2 生成式检索 (Generative Retrieval)

With the significant progress of language models, generative retrieval has emerged as a new direction in the field of information retrieval [195, 281, 328]. Unlike traditional index-based retrieval methods, generative retrieval relies on pre-trained generative language models, such as T5 [231] and BART [138], to directly generate document identifiers (DocIDs) relevant to the query, thereby achieving end-to-end retrieval without relying on large-scale pre-built document indices.

随着语言模型的显著进步,生成式检索 (generative retrieval) 已成为信息检索领域的新方向 [195, 281, 328]。与传统基于索引的检索方法不同,生成式检索依赖预训练的生成式语言模型(如 T5 [231] 和 BART [138]),直接生成与查询相关的文档标识符 (DocIDs),从而实现不依赖大规模预建文档索引的端到端检索。

DocID Construction and Prefix Constraints. To facilitate generative retrieval, each document $d$ in the corpus $\mathcal{D}={d_{1},d_{2},\ldots,d_{N}}$ is assigned a unique document identifier $d^{\prime}$ , forming the set $\mathcal{D}^{\prime}={d_{1}^{\prime},d_{2}^{\prime},\ldots,d_{N}^{\prime}}$ . This mapping is typically established via a bijective function $\phi:{\mathcal{D}}\rightarrow{\mathcal{D}}^{\prime}$ , ensuring that:

文档ID构建与前缀约束。为实现生成式检索,语料库 $\mathcal{D}={d_{1},d_{2},\ldots,d_{N}}$ 中的每个文档 $d$ 都被分配唯一的文档标识符 $d^{\prime}$,构成集合 $\mathcal{D}^{\prime}={d_{1}^{\prime},d_{2}^{\prime},\ldots,d_{N}^{\prime}}$。该映射通常通过双射函数 $\phi:{\mathcal{D}}\rightarrow{\mathcal{D}}^{\prime}$ 建立,并满足以下条件:

$$

\phi(d_{i})=d_{i}^{\prime},\quad\forall d_{i}\in{\mathcal{D}}.

$$

$$

\phi(d_{i})=d_{i}^{\prime},\quad\forall d_{i}\in{\mathcal{D}}.

$$

To enable the language model to generate only valid DocIDs during inference, we construct prefix constraints based on ${\mathcal{D}}^{\prime}$ . This is typically implemented using a trie (prefix tree), where each path from the root to a leaf node corresponds to a valid DocID.

为了使语言模型在推理过程中仅生成有效的DocID,我们基于${\mathcal{D}}^{\prime}$构建前缀约束。这通常通过字典树(前缀树)实现,其中从根节点到叶节点的每条路径对应一个有效DocID。

Constrained Beam Search. Given a query $q$ , the generative retrieval model aims to generate the top $k$ DocIDs that are most relevant to $q$ . The language model $P(\cdot|q;\theta)$ generates DocIDs token by token, guided by the prefix constraints. At each decoding step $i$ , only those tokens that extend the current partial sequence $d_{<i}^{\prime}$ into a valid prefix of some DocIDs in $\mathcal{D}^{\prime}$ are considered. Formally, the set of allowable next tokens is:

约束束搜索 (Constrained Beam Search)。给定查询 $q$,生成式检索模型旨在生成与 $q$ 最相关的 top $k$ 个 DocID。在前缀约束的引导下,语言模型 $P(\cdot|q;\theta)$ 逐 Token 生成 DocID。在每个解码步骤 $i$ 中,仅考虑那些能将当前部分序列 $d_{<i}^{\prime}$ 扩展为 $\mathcal{D}^{\prime}$ 中某些 DocID 有效前缀的 Token。形式化地,允许的下一 Token 集合为:

$$

\mathcal V(d_{<i}^{\prime})={v\mid\exists d^{\prime}\in\mathcal D^{\prime}\mathrm{ such that }d_{<i}^{\prime}v\mathrm{ is a prefix of }d^{\prime}}.

$$

$$

\mathcal V(d_{<i}^{\prime})={v\mid\exists d^{\prime}\in\mathcal D^{\prime}\mathrm{ such that }d_{<i}^{\prime}v\mathrm{ is a prefix of }d^{\prime}}.

$$

ACM Trans. Inf. Syst., Vol. 1, No. 1, Article . Publication date: March 2025.

ACM Trans. Inf. Syst.,第1卷,第1期,文章。出版日期:2025年3月。

By employing constrained beam search, the model efficiently explores the space of valid DocIDs, maintaining a beam of the most probable sequences at each decoding step while adhering to the DocID prefix constraints.

通过采用受限束搜索 (constrained beam search),该模型能高效探索有效文档ID (DocID) 的空间,在遵守文档ID前缀约束的同时,于每个解码步骤保留最可能的序列束。

Document Relevance. The relevance between the query $q$ and a document $d$ is quantified by the probability of generating its corresponding DocID $d^{\prime}$ given $q$ . This is computed as:

文档相关性。查询 $q$ 与文档 $d$ 之间的相关性通过给定 $q$ 时生成其对应 DocID $d^{\prime}$ 的概率来量化,计算公式为:

$$

\mathcal{R}(q,d)=P(d^{\prime}|q;\theta)=\prod_{i=1}^{T}P(d_{i}^{\prime}\mid d_{<i}^{\prime},q;\theta),

$$

$$

\mathcal{R}(q,d)=P(d^{\prime}|q;\theta)=\prod_{i=1}^{T}P(d_{i}^{\prime}\mid d_{<i}^{\prime},q;\theta),

$$

where $T$ is the length of the DocID $d^{\prime}$ in tokens, $d_{i}^{\prime}$ is the token at position $i$ , and $d_{<i}^{\prime}$ denotes the sequence of tokens generated before position $i.$ . The constrained beam search produces a ranked list of top $k$ DocIDs ${d^{\prime(1)},d^{\prime(2)},\ldots,d^{\prime(k)}}$ based on their generation probabilities ${\mathcal{R}(q,d^{(1)}),\mathcal{R}(q,d^{(2)}) , \cdot\cdot\cdot,\mathcal{R}(q,d^{(k)})}$ . The corresponding documents ${d^{(\bar{1})},d^{(2)},\ldots,\bar{d}^{(k)}}$ are then considered the most relevant to the query 𝑞.

其中 $T$ 是文档ID $d^{\prime}$ 的Token长度,$d_{i}^{\prime}$ 表示位置 $i$ 的Token,$d_{<i}^{\prime}$ 表示位置 $i$ 之前生成的Token序列。约束束搜索根据生成概率 ${\mathcal{R}(q,d^{(1)}),\mathcal{R}(q,d^{(2)}), \cdot\cdot\cdot,\mathcal{R}(q,d^{(k)})}$ 生成前 $k$ 个文档ID的排序列表 ${d^{\prime(1)},d^{\prime(2)},\ldots,d^{\prime(k)}}$。对应的文档 ${d^{(\bar{1})},d^{(2)},\ldots,\bar{d}^{(k)}}$ 则被视为与查询 𝑞 最相关的结果。

Model Optimization. Generative retrieval models are typically optimized using cross-entropy loss, which measures the discrepancy between the generated DocID sequence and the ground truth DocID. Given a query $q$ and its corresponding DocID $d^{\prime}$ , the cross-entropy loss is defined as:

模型优化。生成式检索模型通常使用交叉熵损失进行优化,该损失衡量生成的DocID序列与真实DocID之间的差异。给定查询$q$及其对应的DocID$d^{\prime}$,交叉熵损失定义为:

$$

\mathcal{L}=-\sum_{i=1}^{T}\log P(d_{i}^{\prime}\mid d_{<i}^{\prime},q;\theta),

$$

$$

\mathcal{L}=-\sum_{i=1}^{T}\log P(d_{i}^{\prime}\mid d_{<i}^{\prime},q;\theta),

$$

where $T$ is the length of the DocID in tokens, $d_{i}^{\prime}$ is the token at position $i$ , and $d_{<i}^{\prime}$ denotes the sequence of tokens generated before position $i$ . This loss function encourages the model to learn the association between query and labeled DocID sequence.

其中 $T$ 是DocID的Token长度,$d_{i}^{\prime}$ 是位置 $i$ 的Token,$d_{<i}^{\prime}$ 表示位置 $i$ 之前生成的Token序列。该损失函数促使模型学习查询与标注DocID序列之间的关联。

This approach allows the generative retrieval model to produce a relevance-ordered list of documents without relying on traditional indexing structures. The core of this approach lies in leveraging the language model’s capability to generate DocID sequences within prefix constraints. This section discusses the simplest generative retrieval method. In Section 3, we will delve into advanced methods from multiple perspectives, including model architectures, training strategies, and DocID design, to further enhance retrieval performance across various scenarios.

该方法使生成式检索模型无需依赖传统索引结构即可生成按相关性排序的文档列表。其核心在于利用语言模型在前缀约束下生成DocID序列的能力。本节讨论最简单的生成式检索方法,第3节我们将从模型架构、训练策略和DocID设计等多角度深入探讨进阶方法,以提升不同场景下的检索性能。

2.3 Large Language Models

2.3 大语言模型 (Large Language Models)

The evolution of Large Language Models (LLMs) marks a significant leap in natural language processing (NLP), rooted from early statistical and neural network-based language models [374]. These models, through pre-training on vast text corpora, learned deep semantic features of language, greatly enriching the understanding of text. Subsequently, generative language models, most notably the GPT series [16, 228, 229], significantly improved text generation and understanding capabilities with improved model size and number of parameters.

大语言模型 (Large Language Models, LLMs) 的演进标志着自然语言处理 (NLP) 领域的重大飞跃,其根源可追溯至早期的统计和基于神经网络的语言模型 [374]。这些模型通过在海量文本语料库上进行预训练,学习语言的深层语义特征,极大地丰富了文本理解能力。随后,生成式语言模型(尤其是 GPT 系列 [16, 228, 229])通过扩大模型规模和参数量,显著提升了文本生成与理解能力。

LLMs can be mainly divided into two categories: encoder-decoder models and decoder-only models. Encoder-decoder models, like T5 [231] and BART [138], convert input text into vector representations through their encoder, then the decoder generates output text based on these representations. This model perspective treats various NLP tasks as text-to-text conversion problems, solving them through text generation. On the other hand, decoder-only models, like the GPT [228] and GPT-2 [229], rely entirely on the Transformer decoder, generating text step by step through the self-attention mechanism. The introduction of GPT-3 [16], with its 175 billion parameters, marked a significant milestone in this field and led to the creation of models like Instruct GP T [210], Falcon [215], PaLM [34] and Llama series [59, 285, 286]. These models, all using a decoder-only architecture, trained on large-scale datasets, have shown astonishing language processing capabilities [359].

大语言模型(LLM)主要可分为两类:编码器-解码器模型和仅解码器模型。编码器-解码器模型如T5 [231]和BART [138],通过编码器将输入文本转换为向量表示,再由解码器基于这些表示生成输出文本。该模型视角将各类NLP任务视为文本到文本的转换问题,通过文本生成来解决。另一方面,仅解码器模型如GPT [228]和GPT-2 [229]完全依赖Transformer解码器,通过自注意力机制逐步生成文本。拥有1750亿参数的GPT-3 [16]问世成为该领域重要里程碑,并催生了InstructGPT [210]、Falcon [215]、PaLM [34]以及Llama系列 [59, 285, 286]等模型。这些采用仅解码器架构、基于海量数据训练的模型,展现出惊人的语言处理能力 [359]。

For information retrieval tasks, large language models (LLMs) play a crucial role in directly generating the exact information users seek [55, 173, 374]. This capability marks a significant step towards a new era of generative information retrieval. In this era, the retrieval process is not solely about locating existing information but also about creating new content that meets the specific needs of users. This feature is especially advantageous in situations where users might not know how to phrase their queries or when they are in search of complex and highly personalized information, scenarios where traditional matching-based methods fall short.

在信息检索任务中,大语言模型(LLMs) 通过直接生成用户所需的精确信息发挥着关键作用 [55, 173, 374]。这一能力标志着生成式信息检索新时代的重要进展。在此背景下,检索过程不仅关乎定位现有信息,更涉及创造符合用户特定需求的新内容。当用户不知如何准确表述查询需求,或需要获取复杂且高度个性化的信息时,这一特性展现出显著优势,而传统基于匹配的方法在此类场景中往往表现不足。

2.4 Augmented Language Models

2.4 增强型语言模型

Despite the advances of LLMs, they still face significant challenges such as hallucination, particularly in complex tasks or those requiring access to long-tail or real-time information [90, 359]. To address these issues, retrieval augmentation and tool augmentation have emerged as effective strategies. Retrieval augmentation involves integrating external knowledge sources into the language model’s workflow. This integration allows the model to access up-to-date and accurate information during the generation process, thereby grounding its responses in verified data and reducing the likelihood of hallucinations [139, 252, 271]. Tool augmentation, on the other hand, extends the capabilities of LLMs by incorporating specialized tools or APIs that can perform specific functions like mathematical computations, data retrieval, or executing predefined commands [226, 245, 276]. With retrieval and tool augmentations, language models can provide more precise and con textually relevant responses, thereby improving factuality and functionality in practical applications.

尽管大语言模型取得了进展,但它们仍面临重大挑战,例如幻觉问题(hallucination),尤其是在复杂任务或需要获取长尾或实时信息的任务中 [90, 359]。为解决这些问题,检索增强(retrieval augmentation)和工具增强(tool augmentation)已成为有效策略。检索增强涉及将外部知识源集成到语言模型的工作流程中,使模型在生成过程中能够访问最新且准确的信息,从而将其响应建立在已验证数据的基础上,降低幻觉发生的可能性 [139, 252, 271]。另一方面,工具增强通过整合专用工具或 API 来扩展大语言模型的能力,这些工具或 API 可以执行特定功能,如数学计算、数据检索或执行预定义命令 [226, 245, 276]。通过检索增强和工具增强,语言模型能够提供更精确且与上下文相关的响应,从而在实际应用中提高事实性和功能性。

Moreover, due to the aforementioned issue of hallucinations, the responses generated by LLMs are often considered unreliable because users are unaware of the sources behind the generated content, making it difficult to verify its accuracy. To enhance the credibility of responses, some studies have focused on generating responses with citations [143, 204, 256]. This approach involves enabling language models to cite the source documents of their generated content, thereby increasing the trustworthiness of the responses. All these methods are effective strategies for improving both the quality and reliability of language model outputs and are essential technologies for building the next generation of generative information retrieval systems.

此外,由于上述幻觉问题,大语言模型生成的回答常被认为不可靠,因为用户无法知晓生成内容背后的来源,难以验证其准确性。为提高回答的可信度,部分研究聚焦于生成带引用的回答 [143, 204, 256]。该方法通过使语言模型能够引用生成内容的源文档,从而提升回答的可信度。这些方法都是提升语言模型输出质量与可靠性的有效策略,也是构建下一代生成式信息检索系统的关键技术。

3 GENERATIVE DOCUMENT RETRIEVAL: FROM SIMILARITY MATCHING TO GENERATING DOCUMENT IDENTIFIERS

3 生成式文档检索:从相似性匹配到生成文档标识符

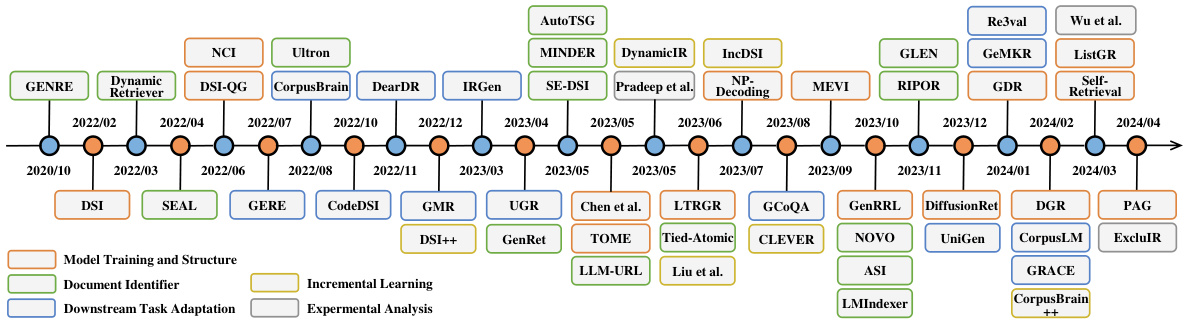

In recent advancements in AIGC, generative retrieval (GR) has emerged as an promising approach in the field of information retrieval, garnering increasing interest from the academic community. Figure 3 showcases a timeline of the GR methods. Initially, GENRE [18] proposed to retrieve entities by generating their unique names through constrained beam search via a pre-built entity prefix tree, achieving advanced entity retrieval performance. Subsequently, Metzler et al. [195] envisioned a model-based information retrieval framework aiming to combine the strengths of traditional document retrieval systems and pre-trained language models to create systems capable of providing expert-quality answers in various domains.

在AIGC领域的最新进展中,生成式检索 (Generative Retrieval, GR) 已成为信息检索领域一种颇具前景的方法,日益受到学术界的关注。图 3: 展示了GR方法的发展时间线。最初,GENRE [18] 提出通过预构建的实体前缀树进行约束束搜索来生成实体唯一名称以实现检索,从而取得了先进的实体检索性能。随后,Metzler等人 [195] 设想了一种基于模型的信息检索框架,旨在结合传统文档检索系统和预训练语言模型的优势,构建能够提供跨领域专家级答案的系统。

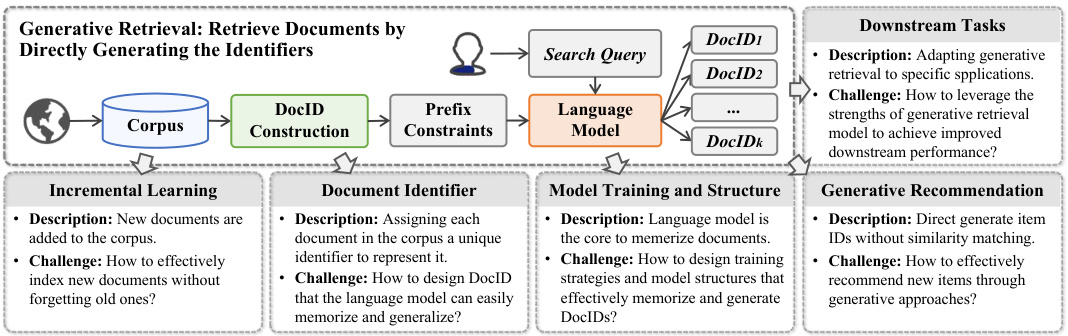

Following their lead, a diverse range of methods including DSI [281], Dynamic Retriever [370], SEAL [13], NCI [307], etc., have been developed, with a continuously growing body of work. These methods explore various aspects such as model training and architectures, document identifiers, incremental learning, task-specific adaptation, and generative recommendations. Figure 4 presents an overview of the GR system and we’ll provide an in-depth discussion of each associated challenge in the following sections.

继这些研究之后,DSI [281]、Dynamic Retriever [370]、SEAL [13]、NCI [307] 等多种方法相继被提出,相关研究成果持续增长。这些方法探索了模型训练与架构、文档标识符、增量学习、任务特定适配以及生成式推荐等多个方面。图 4 展示了 GR (Generative Retrieval) 系统的概览,我们将在后续章节深入讨论每个相关挑战。

3.1 Model Training and Structure

3.1 模型训练与结构

One of the core components of GR is the model training and structure, aiming to enhance the model’s ability to memorize documents in the corpus.

GR的核心组件之一是模型训练和结构,旨在增强模型记忆语料库中文档的能力。

Fig. 3. Timeline of research in generative retrieval: focus on model training and structure, document identifier design, incremental learning and downstream task adaptation.

图 3: 生成式检索研究时间轴:重点关注模型训练与结构、文档标识符设计、增量学习及下游任务适配。

3.1.1 Model Training. To effectively train generative models for indexing documents, the standard approach is to train the mapping from queries to relevant DocIDs, based on standard sequence-tosequence (seq2seq) training methods, as described in Equation (2). This method has been widely used in numerous GR research works, such as DSI [281], NCI [307], SEAL [13], etc. Moreover, a series of works have proposed various model training methods tailored for GR tasks to further enhance GR retrieval performance, such as sampling documents or generating queries based on document content to serve as pseudo queries for data augmentation; or including training objectives for document ranking.

3.1.1 模型训练。为有效训练面向文档索引的生成式模型,标准方法是基于序列到序列(seq2seq)训练方法,按照公式(2)所述训练从查询到相关DocID的映射。该方法已广泛应用于众多生成式检索(GR)研究工作中,如DSI [281]、NCI [307]、SEAL [13]等。此外,一系列研究提出了针对GR任务定制的多样化模型训练方法以进一步提升检索性能,例如通过采样文档或基于文档内容生成查询作为数据增强的伪查询,或引入文档排序的训练目标。

Specifically, DSI [281] proposed two training strategies: one is “indexing”, that is, training the model to associate document tokens with their corresponding DocIDs, where DocIDs are pre-built based on documents in corpus, which will be discussed in detail in Section 3.2; the other is “retrieval”, using labeled query-DocID pairs to fine-tune the model. Notably, DSI was the first to realize a differentiable search index based on the Transformer [290] structure, showing good performance in web search [205] and question answering [126] scenarios. Next, a series of methods propose training methods for data augmentation and improving GR model ranking ability

具体而言,DSI [281] 提出了两种训练策略:一是"索引构建 (indexing)",即训练模型将文档 token 与对应的 DocID 关联起来(DocID 基于语料库文档预先构建,详见第3.2节讨论);二是"检索 (retrieval)",使用标注的查询-DocID 对来微调模型。值得注意的是,DSI 首次实现了基于 Transformer [290] 结构的可微分搜索索引,在网页搜索 [205] 和问答 [126] 场景中展现出良好性能。随后,一系列方法提出了针对数据增强和提升 GR (Generative Retrieval) 模型排序能力的训练方案

Sampling Document Pieces as Pseudo Queries. In the same era, Dynamic Retriever [370], also based on the encoder-decoder model, constructed a model-based IR system by initializing the encoder with a pre-trained BERT [121]. Besides, Dynamic Retriever utilizes passages, sampled terms and $N$-grams to serve as pseudo queries to enhance the model’s memorization of DocIDs. Formally, the training methods can be summarized as follows:

采样文档片段作为伪查询。同一时期,同样基于编码器-解码器模型的Dynamic Retriever [370]通过用预训练BERT [121]初始化编码器,构建了基于模型的IR系统。此外,Dynamic Retriever利用段落、采样词项和$N$-gram作为伪查询来增强模型对DocID的记忆能力。其训练方法可形式化表述为:

$$

\begin{array}{r l}&{\mathrm{Sampled Document:}d_{s_{i}}\longrightarrow\mathrm{DocID},i\in{1,...,k_{d_{s}}},}\ &{\qquad\mathrm{Labeled Query:}q_{i}\longrightarrow\mathrm{DocID},i\in{1,...,k_{q}},}\end{array}

$$

$$

\begin{array}{r l}&{\mathrm{采样文档:}d_{s_{i}}\longrightarrow\mathrm{文档ID},i\in{1,...,k_{d_{s}}},}\ &{\qquad\mathrm{标注查询:}q_{i}\longrightarrow\mathrm{文档ID},i\in{1,...,k_{q}},}\end{array}

$$

where $d_{s_{i}}$ and $q_{i}$ denote each of the $k_{d_{s}}$ sampled document text and each of the $k_{q}$ labeled query for the corresponding DocID, respectively.

其中 $d_{s_{i}}$ 和 $q_{i}$ 分别表示对应 DocID 的 $k_{d_{s}}$ 个采样文档文本和 $k_{q}$ 个标注查询。

Generating Pseudo Queries from Documents. Following DSI, the NCI [307] model was trained using a combination of labeled query-document pairs and augmented pseudo querydocument pairs. Specifically, NCI proposed two strategies: one using the DocT5Query [208] model as a query generator, generating pseudo queries for each document in the corpus through beam search; the other strategy directly uses the document as a query, as stated in Equation (8). Similarly, DSI-QG [375] also proposed using a query generator to enhance training data, establishing a bridge between indexing and retrieval in DSI. This data augmentation method has been proven in subsequent works to be an effective method to enhance the model’s memorization for DocIDs,

从文档生成伪查询。遵循DSI方法,NCI[307]模型通过结合标注的查询-文档对和增强的伪查询-文档对进行训练。具体而言,NCI提出了两种策略:一种使用DocT5Query[208]模型作为查询生成器,通过束搜索为语料库中的每个文档生成伪查询;另一种策略则直接使用文档作为查询,如公式(8)所示。类似地,DSI-QG[375]也提出使用查询生成器来增强训练数据,在DSI中建立了索引与检索之间的桥梁。后续研究证明,这种数据增强方法是提升模型对DocID记忆能力的有效手段。

Fig. 4. A conceptual framework for a generative retrieval system, with a focus on challenges in incremental learning, identifier construction, model training and structure, and integration with downstream tasks and recommendation systems.

图 4: 生成式检索系统的概念框架,重点关注增量学习、标识符构建、模型训练与结构,以及与下游任务和推荐系统集成方面的挑战。

which can be expressed as follows:

可以表示为:

$$

\begin{array}{r}{\operatorname{Pseudo}\operatorname{Query}:q_{s_{i}}\longrightarrow\operatorname{DocID},i\in{1,...,k_{q_{s}}},}\end{array}

$$

$$

\begin{array}{r}{\operatorname{Pseudo}\operatorname{Query}:q_{s_{i}}\longrightarrow\operatorname{DocID},i\in{1,...,k_{q_{s}}},}\end{array}

$$

where $q_{s_{i}}$ represents each of the $k_{q_{s}}$ generated pseudo query for the corresponding DocID.

其中,$q_{s_{i}}$ 表示对应 DocID 生成的 $k_{q_{s}}$ 个伪查询中的每一个。

Improving Ranking Capability. Additionally, a series of methods focus on further optimizing the ranking capability of GR models. Chen et al. [30] proposed a multi-task distillation method to improve retrieval quality without changing the model structure, thereby obtaining better indexing and ranking capabilities. Meanwhile, LTRGR [159] introduced a ranking loss to train the model in ranking paragraphs. Subsequently, [365] proposed GenRRL, which improves ranking quality through reinforcement learning with relevance feedback, aligning token-level DocID generation with document-level relevance estimation. Moreover, [161] introduced DGR, which enhances generative retrieval through knowledge distillation. Specifically, DGR uses a cross-encoder as a teacher model, providing fine-grained passage ranking supervision signals, and then optimizes the model with a distilled RankNet loss. ListGR [280] defined positional conditional probabilities, emphasizing the importance of the generation order of each DocID in the list. In addition, ListGR employs relevance calibration that adjusts the generated list of DocIDs to better align with the labeled ranking list. See Table 1 for a detailed comparison of GR methods.

提升排序能力。此外,一系列方法专注于进一步优化GR模型的排序能力。Chen等人[30]提出了一种多任务蒸馏方法,在不改变模型结构的情况下提升检索质量,从而获得更好的索引和排序能力。同时,LTRGR[159]引入了排序损失来训练模型对段落进行排序。随后,[365]提出了GenRRL,通过基于相关性反馈的强化学习提升排序质量,使Token级别的DocID生成与文档级别的相关性估计对齐。此外,[161]提出了DGR,通过知识蒸馏增强生成式检索。具体而言,DGR使用交叉编码器作为教师模型,提供细粒度的段落排序监督信号,并通过蒸馏的RankNet损失优化模型。ListGR[280]定义了位置条件概率,强调列表中每个DocID生成顺序的重要性。此外,ListGR采用相关性校准技术,调整生成的DocID列表以更好地匹配标注的排序列表。GR方法的详细对比见表1。

3.1.2 Model Structure. Basic generative retrieval models mostly use pre-trained encoder-decoder structured generative models, such as T5 [231] and BART [138], fine-tuned for the DocID generation task. To better adapt to the GR task, researchers have proposed a series of specifically designed model structures [130, 224, 237, 275, 307, 342, 346].

3.1.2 模型结构。基础生成式检索模型主要采用预训练的编码器-解码器结构生成模型(如T5 [231]和BART [138]),通过微调适应DocID生成任务。为更好适配GR任务,研究者提出了一系列专门设计的模型结构[130, 224, 237, 275, 307, 342, 346]。

Model Decoding Methods. For the semantic structured DocID proposed by DSI [281], NCI [307] designed a Prefix-Aware Weight-Adaptive (PAWA) decoder. By adjusting the weights at different positions of DocIDs, this decoder can capture the semantic hierarchy of DocIDs. To allow the GR model to utilize both own parametric knowledge and external information, NP-Decoding [130] proposed using non-parametric contextual i zed word embeddings (as external memory) instead of traditional word embeddings as the input to the decoder. Additionally, PAG [345] proposed a planning-ahead generation approach, which first decodes the set-based DocID to approximate document-level scores, and then continues to decode the sequence-based DocID on this basis.

模型解码方法。针对DSI [281]提出的语义结构化DocID,NCI [307]设计了一种前缀感知权重自适应(PAWA)解码器。该解码器通过调整DocID不同位置的权重,能够捕捉DocID的语义层级结构。为使GR模型既能利用自身参数化知识又能结合外部信息,NP-Decoding [130]提出使用非参数化上下文词嵌入(作为外部记忆)替代传统词嵌入作为解码器输入。此外,PAG [345]提出了一种前瞻式生成方法,先解码基于集合的DocID以近似文档级评分,再在此基础上继续解码基于序列的DocID。

Combining Generative and Dense Retrieval Methods. Combining seq2seq generative models with dual-encoder retrieval models, MEVI [346] utilizes Residual Quantization (RQ) [189] to organize documents into hierarchical clusters, enabling efficient retrieval of candidate clusters and precise document retrieval within those clusters. Similarly, Generative Dense Retrieval (GDR) [342] proposed to first broadly match queries to document clusters, optimizing for interaction depth and memory efficiency, and then perform precise, cluster-specific document retrieval, boosting both recall and s cal ability.

结合生成式与密集检索方法。MEVI [346] 将序列到序列(seq2seq)生成模型与双编码器检索模型相结合,利用残差量化(Residual Quantization,RQ)[189] 将文档组织为分层聚类结构,从而实现候选聚类的高效检索及聚类内文档的精准定位。类似地,生成式密集检索(Generative Dense Retrieval,GDR)[342] 提出先对查询与文档聚类进行粗粒度匹配,优化交互深度与内存效率,再执行聚类专属的精确文档检索,显著提升了召回率与可扩展性。

Utilizing Multiple Models. TOME [237] proposed to decompose the GR task into two stages, first generating text paragraphs related to the query through an additional model, then using the GR model to generate the URL related to the paragraph. Diffusion Ret [224] proposed to first use a diffusion model (Seq DiffuSe q [341]) to generate a pseudo-document from a query, where the generated pseudo-document is similar to real documents in length, format, and content, rich in semantic information; Then, it employs another generative model to perform retrieval based on N-grams, similar to the process used by SEAL[13], leveraging an FM-Index[62] for generating N-grams found in the corpus. Self-Retrieval [275] fully integrated indexing, retrieval, and evaluation into a single large language model. It generates natural language indices and document segments, and performs self-evaluation to score and rank the generated documents.

利用多模型策略。TOME [237] 提出将GR (Generative Retrieval) 任务分解为两个阶段:先通过额外模型生成与查询相关的文本段落,再由GR模型生成与该段落关联的URL。Diffusion Ret [224] 提出先用扩散模型 (Seq DiffuSe q [341]) 根据查询生成伪文档,生成的伪文档在长度、格式和内容上与真实文档相似且语义信息丰富;随后采用另一生成式模型基于N-grams进行检索,类似SEAL[13]使用的流程,并利用FM-Index[62]生成语料库中存在的N-grams。Self-Retrieval [275] 将索引构建、检索和评估完全整合到单个大语言模型中,通过生成自然语言索引和文档片段,并执行自评估对生成文档进行打分排序。

3.2 Design of Document Identifiers

3.2 文档标识符设计

Another essential component of generative retrieval is document representation, also known as document identifiers (DocIDs), which act as the target outputs for the GR model. Accurate document representations are crucial as they enable the model to more effectively memorize document information, leading to enhanced retrieval performance. Table 1 provides a detailed comparison of the states, data types, and order of DocIDs across numerous GR methods. In the following sections, we will explore the design of DocIDs from two categories: numeric-based identifiers and text-based identifiers.

生成式检索的另一关键组成部分是文档表示,也称为文档标识符(DocID),它们作为GR模型的目标输出。准确的文档表示至关重要,因为它们能让模型更有效地记忆文档信息,从而提升检索性能。表1详细比较了多种GR方法中DocID的状态、数据类型和顺序。在接下来的章节中,我们将从两类DocID设计展开探讨:基于数字的标识符和基于文本的标识符。

3.2.1 Numeric-based Identifiers. An intuitive method to represent documents is by using a single number or a series of numbers, referred to as DocIDs. Existing methods have designed both static and learnable DocIDs.

3.2.1 基于数字的标识符。表示文档的一种直观方法是使用单个数字或一系列数字,称为文档ID (DocID)。现有方法设计了静态和可学习的文档ID。

Static DocIDs. Initially, DSI [281] introduced three numeric DocIDs to represent documents: (1) Unstructured Atomic DocID: a unique integer identifier is randomly assigned to each document, containing no structure or semantic information. (2) Naively Structured String DocID: treating random integers as divisible strings, implementing character-level DocID decoding to replace large softmax output layers. (3) Semantically Structured DocID: introducing semantic structure through hierarchical $k$ -means method, allowing semantically similar documents to share prefixes in their identifiers, effectively reducing the search space. Concurrently, Dynamic Retriever [370] also built a model-based IR system based on unstructured atomic DocID. Subsequently, Ultron [371] encoded documents into a latent semantic space using BERT [121], and compressed vectors into a smaller semantic space via Product Quantization (PQ) [73, 102], preserving semantic information. Each document’s PQ code serves as its semantic identifier. MEVI [346] clusters documents using Residual Quantization (RQ) [189] and utilizes dual-tower and seq2seq model embeddings for a balanced performance in large-scale document retrieval.

静态DocID。最初,DSI [281] 引入了三种数字DocID来表示文档:(1) 非结构化原子DocID:为每个文档随机分配一个唯一的整数标识符,不包含任何结构或语义信息。(2) 朴素结构化字符串DocID:将随机整数视为可分割的字符串,实现字符级DocID解码以替代大型softmax输出层。(3) 语义结构化DocID:通过分层 $k$ -means方法引入语义结构,使语义相似的文档在其标识符中共享前缀,有效缩小搜索空间。与此同时,Dynamic Retriever [370] 也基于非结构化原子DocID构建了基于模型的IR系统。随后,Ultron [371] 使用BERT [121] 将文档编码到潜在语义空间,并通过乘积量化(PQ) [73, 102] 将向量压缩到更小的语义空间,保留语义信息。每个文档的PQ代码作为其语义标识符。MEVI [346] 使用残差量化(RQ) [189] 对文档进行聚类,并利用双塔和seq2seq模型嵌入,在大规模文档检索中实现平衡性能。

Learnable DocIDs. Unlike previous static DocIDs, GenRet [265] proposed learnable document representations, transforming documents into DocIDs through an encoder, then reconstructs documents from DocIDs using a decoder, trained to minimize reconstruction error. Furthermore, it used progressive training and diversity clustering for optimization. To ensure that DocID embeddings can reflect document content, Tied-Atomic [206] proposed to link document text with token embeddings and employs contrastive loss for DocID generation. LMIndexer [112] and ASI [330] learned optimal DocIDs through semantic indexing, with LMIndexer using a re parameter iz ation mechanism for unified optimization, facilitating efficient retrieval by aligning semantically similar documents under common DocIDs. ASI extends this by establishing an end-to-end retrieval framework, incorporating semantic loss functions and re parameter iz ation to enable joint training.

可学习的文档标识符 (Learnable DocIDs)。与以往静态文档标识符不同,GenRet [265] 提出可学习的文档表示方法,通过编码器将文档转换为文档标识符,再使用解码器从文档标识符重构文档,并通过最小化重构误差进行训练。此外,该方法采用渐进式训练和多样性聚类进行优化。为确保文档标识符嵌入能反映文档内容,Tied-Atomic [206] 提出将文档文本与 token 嵌入关联,并采用对比损失生成文档标识符。LMIndexer [112] 和 ASI [330] 通过语义索引学习最优文档标识符:LMIndexer 使用重参数化机制进行统一优化,使语义相似的文档在相同文档标识符下对齐以提升检索效率;ASI 进一步扩展该方法,构建端到端检索框架,结合语义损失函数和重参数化实现联合训练。

Table 1. Comparisons of representative generative retrieval methods, focusing on document identifier, training data augmentation, and training objective.

| Method | DocumentIdentifier | Training Data Augmentation | Training Objective | |||||

| State | Data Type | Order | Sample Doc | Doc2Query | Seq2seq | DocID | Ranking | |

| GENRE [18] | Static | Text | Sequence | |||||

| DSI [281] | Static | Numeric | Sequence | |||||

| DynamicRetriever [370] | Static | Numeric | Sequence | |||||

| SEAL [13] | Static | Text | Sequence | |||||

| DSI-QG [375] | Static | Numeric | Sequence | |||||

| NCI [307] | Static | Numeric | Sequence | |||||

| Ultron [371] | Static | Numeric/Text | Sequence | |||||

| CorpusBrain[28] | Static | Text | Sequence | |||||

| GenRet [265] | Learnable | Numeric | Sequence | |||||

| AutoTSG[352] | Static | Text | Set | |||||

| SE-DSI [278] | Static | Text | Sequence | |||||

| Chen et al. [30] | Static | Numeric | Sequence | √ | ||||

| LLM-URL [376] | Static | Text | Sequence | |||||

| MINDER[160] | Static | Text | Sequence | |||||

| LTRGR [159] | Static | Text | Sequence | |||||

| NOVO [311] | Learnable | Text | Set | |||||

| GenRRL [365] | Static | Text | Sequence | √ | ||||

| LMIndexer [112] | Learnable | Numeric | Sequence | |||||

| ASI [330] | Learnable | Numeric | Sequence | |||||

| RIPOR [344] | Learnable | Numeric | Sequence | |||||

| GLEN [135] | Learnable | Text | Sequence | |||||

| DGR [161] | Static | Text | Sequence | |||||

| ListGR [280] | Static | Numeric | Sequence | |||||

表 1: 代表性生成式检索方法对比,重点关注文档标识符、训练数据增强和训练目标。

| 方法 | 文档标识符 | 训练数据增强 | 训练目标 |

|---|---|---|---|

| 状态 | 数据类型 | 顺序 | |

| GENRE [18] | 静态 | 文本 | 序列 |

| DSI [281] | 静态 | 数值 | 序列 |

| DynamicRetriever [370] | 静态 | 数值 | 序列 |

| SEAL [13] | 静态 | 文本 | 序列 |

| DSI-QG [375] | 静态 | 数值 | 序列 |

| NCI [307] | 静态 | 数值 | 序列 |

| Ultron [371] | 静态 | 数值/文本 | 序列 |

| CorpusBrain[28] | 静态 | 文本 | 序列 |

| GenRet [265] | 可学习 | 数值 | 序列 |

| AutoTSG[352] | 静态 | 文本 | 集合 |

| SE-DSI [278] | 静态 | 文本 | 序列 |

| Chen et al. [30] | 静态 | 数值 | 序列 |

| LLM-URL [376] | 静态 | 文本 | 序列 |

| MINDER[160] | 静态 | 文本 | 序列 |

| LTRGR [159] | 静态 | 文本 | 序列 |

| NOVO [311] | 可学习 | 文本 | 集合 |

| GenRRL [365] | 静态 | 文本 | 序列 |

| LMIndexer [112] | 可学习 | 数值 | 序列 |

| ASI [330] | 可学习 | 数值 | 序列 |

| RIPOR [344] | 可学习 | 数值 | 序列 |

| GLEN [135] | 可学习 | 文本 | 序列 |

| DGR [161] | 静态 | 文本 | 序列 |

| ListGR [280] | 静态 | 数值 | 序列 |

Furthermore, RIPOR [344] treats the GR model as a dense encoder to encode document content. It then splits these representations into vectors via RQ [189], creating unique DocID sequences. Furthermore, RIPOR implements a prefix-guided ranking optimization, increasing relevance scores for prefixes of pertinent DocIDs through margin decomposed pairwise loss during decoding.

此外,RIPOR [344] 将GR模型视为密集编码器来编码文档内容,随后通过RQ [189] 将这些表示拆分为向量,生成独特的DocID序列。此外,RIPOR采用前缀引导的排序优化策略,在解码过程中通过边缘分解成对损失提升相关DocID前缀的关联分数。

In summary, numeric-based document representations can utilize the embeddings of dense retrievers, obtaining semantically meaningful DocID sequences through methods such as $k$ -means, PQ [102], and RQ [189]; they can also combine encoder-decoder GR models with bi-encoder DR models to achieve complementary advantages [206, 346].

总之,基于数值的文档表示可以利用密集检索器的嵌入,通过诸如 $k$ -means、PQ [102] 和 RQ [189] 等方法获得具有语义意义的 DocID 序列;也可以将编码器-解码器 GR 模型与双编码器 DR 模型相结合,实现优势互补 [206, 346]。

3.2.2 Text-based Identifiers. Text-based DocIDs have the inherent advantage of effectively leveraging the strong capabilities of pre-trained language models and offering better interpret ability.

3.2.2 基于文本的标识符。基于文本的文档标识符(DocID)具有天然优势:能有效利用预训练语言模型的强大能力,并提供更好的可解释性。

Document Titles. The most straightforward text-based identifier is the document title, which requires each title to uniquely represent a document in the corpus, otherwise, it would not be possible to accurately retrieve a specific document. The Wikipedia corpus used in the KILT [218] benchmark, due to its well-regulated manual annotation, has a unique title corresponding to each document. Thus, GENRE [18], based on the title as DocID and leveraging the generative model BART [138] and pre-built DocID prefix, achieved superior retrieval performance across 11 datasets in KILT. Following GENRE, GERE [27], Corpus Brain [28], Re3val [257], and Corpus Brain ${}_{,++}$ [80] also based their work on title DocIDs for Wikipedia-based tasks. Notably, LLM-URL [376] directly generated URLs using ChatGPT prompts, achieving commendable performance after removing invalid URLs. However, in the web search scenario [205], document titles in the corpus often have significant duplication and many meaningless titles, making it unfeasible to use titles alone as DocIDs. Thus, Ultron [371] effectively addressed this issue by combining URLs and titles as DocIDs, identifying documents through keywords in web page URLs and titles.

文档标题。最直接的基于文本的标识符是文档标题,它要求每个标题能唯一代表语料库中的一篇文档,否则就无法准确检索到特定文档。KILT [218] 基准中使用的维基百科语料库由于其规范的人工标注,每篇文档都有唯一的标题。因此,GENRE [18] 以标题作为 DocID,并利用生成式模型 BART [138] 和预构建的 DocID 前缀,在 KILT 的 11 个数据集上实现了卓越的检索性能。继 GENRE 之后,GERE [27]、Corpus Brain [28]、Re3val [257] 和 Corpus Brain ${}_{,++}$ [80] 也基于维基百科任务的标题 DocID 展开工作。值得注意的是,LLM-URL [376] 直接使用 ChatGPT 提示生成 URL,在去除无效 URL 后取得了令人称赞的性能。然而,在网络搜索场景 [205] 中,语料库中的文档标题往往存在大量重复和无意义的标题,因此仅使用标题作为 DocID 不可行。为此,Ultron [371] 通过将 URL 和标题结合作为 DocID,有效解决了这一问题,通过网页 URL 和标题中的关键词来识别文档。

Sub-strings of Documents. To increase the flexibility of DocIDs, SEAL [13] proposed a substring identifier, representing documents with any N-grams within them. Using FM-Index (a compressed full-text sub-string index) [62], SEAL could generate N-grams present in the corpus to retrieve all documents containing those N-grams, scoring and ranking documents based on the frequency of N-grams in each document and the importance of N-grams. Following SEAL, various GR models [26, 159–161] also utilized sub-string DocIDs and FM-Index during inference. For a more comprehensive representation of documents, MINDER [160] proposed multi-view identifiers, including generated pseudo queries from document content via DocT5Query [208], titles, and sub-strings. This multi-view DocID was also used in LTRGR [159] and DGR [161].

文档子串。为提高文档标识符(DocID)的灵活性,SEAL [13]提出了子串标识符方案,允许用文档内任意N元语法(N-gram)进行表征。通过FM-Index(一种压缩全文子串索引)[62],SEAL能生成语料库中存在的N元语法,检索包含这些N元语法的所有文档,并根据N元语法在文档中的出现频率及其重要性进行打分排序。继SEAL之后,多种生成式检索(GR)模型[26, 159–161]在推理阶段也采用了子串DocID和FM-Index技术。为获得更全面的文档表征,MINDER[160]提出了多视角标识符方案,包含通过DocT5Query[208]生成的文档内容伪查询、标题以及子串。这种多视角DocID同样被应用于LTRGR[159]和DGR[161]模型。

Term Sets. Unlike the sequential DocIDs described earlier, AutoTSG [352] proposed a term set-based document representation, using keywords extracted from titles and content, rather than predefined sequences, allowing for retrieval of the target document as long as the generated term set is included in the extracted keywords. Recently, PAG [345] also constructed DocIDs based on sets of key terms, disregarding the order of terms, which is utilized for approximating document relevance in decoding.

术语集。与之前描述的序列化文档ID不同,AutoTSG [352]提出了一种基于术语集的文档表示方法,该方法使用从标题和内容中提取的关键词,而非预定义的序列,只要生成的术语集包含在提取的关键词中,就能检索到目标文档。最近,PAG [345]也基于关键术语集构建了文档ID,忽略术语的顺序,用于在解码过程中近似文档相关性。

Learnable DocIDs. Text-based identifiers can also be learnable. Similarly based on term-sets, NOVO [311] proposed learnable continuous N-grams constituting term-set DocIDs. Through denoising query modeling, the model learned to generate queries from documents with noise, thereby implicitly learning to filter out document N-grams more relevant to queries. NOVO also improves the document’s semantic representation by updating N-gram embeddings. Later, GLEN[135] uses dynamic lexical DocIDs and follows a two-phase index learning strategy. First, it assigns DocIDs by extracting keywords from documents using self-supervised signals. Then, it refines DocIDs by integrating query-document relevance through two loss functions. During inference, GLEN ranks documents using DocID weights without additional overhead.

可学习的文档标识符。基于文本的标识符同样可以具备可学习性。NOVO [311] 同样基于词项集合,提出了由可学习连续N元词项构成的文档标识符。该模型通过去噪查询建模,学习从带噪声的文档生成查询,从而隐式地筛选出与查询更相关的文档N元词项。NOVO还通过更新N元词项嵌入来优化文档的语义表示。后续研究GLEN [135] 采用动态词汇文档标识符,并采用两阶段索引学习策略:首先利用自监督信号从文档提取关键词来分配文档标识符;然后通过两种损失函数整合查询-文档相关性来优化标识符。在推理阶段,GLEN直接利用文档标识符权重进行排序,无需额外计算开销。

3.3 Incremental Learning on Dynamic Corpora

3.3 动态语料库上的增量学习

Prior studies have focused on generative retrieval from static document corpora. However, in reality, the documents available for retrieval are continuously updated and expanded. To address this challenge, researchers have developed a range of methods to optimize GR models for adapting to dynamic corpora.

先前的研究主要集中于从静态文档库中进行生成式检索。然而现实中,可供检索的文档会持续更新和扩展。为应对这一挑战,研究人员开发了一系列方法来优化生成式检索(Generative Retrieval, GR)模型,使其适应动态语料库。

Optimizer and Document Rehearsal. At first, $\mathrm{DSI++}$ [192] aims to address the incremental learning challenges encountered by DSI [281]. $\mathrm{DSI++}$ modifies the training by optimizing flat loss basins through the Sharpness-Aware Minimization (SAM) optimizer, stabilizing the learning process of the model. It also employs DocT5Query [208] to generate pseudo queries for documents in the existing corpus as training data augmentation, mitigating the forgetting issue of GR models.

优化器与文档复述机制。最初,$\mathrm{DSI++}$[192]旨在解决DSI[281]面临的增量学习挑战。该方法通过Sharpness-Aware Minimization (SAM) 优化器优化平坦损失盆地来调整训练过程,从而稳定模型的学习轨迹。同时采用DocT5Query[208]为现有语料库中的文档生成伪查询作为训练数据增强,缓解生成式检索(GR)模型的遗忘问题。

Constrained Optimization Addressing the scenario of real-time addition of new documents, such as news or scientific literature IR systems, IncDSI [124] views the addition of new documents as a constrained optimization problem to find optimal representations for the new documents. This approach aims to (1) ensure new documents can be correctly retrieved by their relevant queries, and (2) maintain the retrieval performance of existing documents unaffected.

约束优化

针对实时新增文档(如新闻或科学文献信息检索系统)的场景,IncDSI [124] 将新增文档视为一个约束优化问题,旨在为新文档找到最优表示。该方法力求实现两个目标:(1) 确保新文档能被相关查询正确检索,(2) 保持现有文档的检索性能不受影响。

Incremental Product Quantization. CLEVER [25], based on Product Quantization (PQ) [102], proposes Incremental Product Quantization (IPQ) for generating PQ codes as DocIDs for documents. Compared to traditional PQ methods, IPQ designs two adaptive thresholds to update only a subset of centroids instead of all, maintaining the indices of updated centroids constant. This method reduces computational costs and allows the system to adapt flexibly to new documents.

增量乘积量化 (Incremental Product Quantization)。基于乘积量化 (PQ) [102] 的 CLEVER [25] 提出了增量乘积量化 (IPQ) 方法,用于生成文档的 PQ 编码作为文档 ID。与传统 PQ 方法相比,IPQ 设计了两个自适应阈值来仅更新部分中心点而非全部,同时保持已更新中心点的索引不变。该方法降低了计算成本,并使系统能够灵活适应新文档。

Fine-tuning Adatpers for Specific Tasks. Corpus Brain ${}++$ [80] introduces the $\mathrm{KLT++}$ benchmark for continuously updated KILT [218] tasks and designs a dynamic architecture paradigm with a backbone-adapter structure. By fixing a shared backbone model to provide basic retrieval capabilities while introducing task-specific adapters to increment ally learn new documents for each task, it effectively avoids catastrophic forgetting. During training, Corpus Brain $^{++}$ generates pseudo queries for new document sets and continues to pre-train adapters for specific tasks.

微调适配器以执行特定任务。Corpus Brain ${}++$ [80] 提出了持续更新的KILT [218]任务基准$\mathrm{KLT++}$,并设计了一种基于主干-适配器结构的动态架构范式。该方法通过固定共享主干模型提供基础检索能力,同时引入任务专用适配器逐步学习各任务的新文档,有效避免了灾难性遗忘。训练过程中,Corpus Brain$^{++}$会为新文档集生成伪查询,并持续针对特定任务预训练适配器。

3.4 Downstream Task Adaption

3.4 下游任务适配

Generative retrieval methods, apart from addressing retrieval tasks individually, have been tailored to various downstream generative tasks. These include fact verification [284], entity linking [86], open-domain QA [126], dialogue [51], slot filling [137], among others, as well as knowledgeintensive tasks [218], code [179], conversational QA [3], and multi-modal retrieval scenarios [165], demonstrating superior performance and efficiency. These methods are discussed in terms of separate training, joint training, and multi-modal generative retrieval.

生成式检索方法除了单独处理检索任务外,还被定制用于各种下游生成任务。这些任务包括事实验证 [284]、实体链接 [86]、开放域问答 [126]、对话 [51]、槽位填充 [137] 等,以及知识密集型任务 [218]、代码 [179]、会话式问答 [3] 和多模态检索场景 [165],展现出卓越的性能和效率。这些方法分别从独立训练、联合训练和多模态生成式检索的角度进行了讨论。

3.4.1 Separate Training. For fact verification tasks [284], which involve determining the correctness of input claims, GERE [27] proposed using an encoder-decoder-based GR model to replace traditional indexing-based methods. Specifically, GERE first utilizes a claim encoder to encode input claims, and then generates document titles related to the claim through a title decoder to obtain candidate sentences for corresponding documents.

3.4.1 独立训练

针对事实核查任务 [284](即判定输入主张正确性的任务),GERE [27] 提出采用基于编码器-解码器的生成式检索 (Generative Retrieval, GR) 模型替代传统的基于索引的方法。具体而言,GERE 首先通过主张编码器对输入主张进行编码,随后通过标题解码器生成与主张相关的文档标题,从而获取对应文档的候选句子。

Knowledge-Intensive Language Tasks. For Knowledge-Intensive Language Tasks (KILT) [218], Corpus Brain [28] introduced three pre-training tasks to enhance the model’s understanding of query-document relationships at various granular i ties: Internal Sentence Selection, Leading Paragraph Selection, and Hyperlink Identifier Prediction. Similarly, UGR [26] proposed using different granular i ties of N-gram DocIDs to adapt to various downstream tasks, unifying different retrieval tasks into a single generative form. UGR achieves this by letting the GR model learn prompts specific to tasks, generating corresponding document, passage, sentence, or entity identifiers.

知识密集型语言任务。针对知识密集型语言任务 (KILT) [218],Corpus Brain [28] 引入了三种预训练任务来增强模型在不同粒度上对查询-文档关系的理解:内部句子选择、主导段落选择和超链接标识符预测。类似地,UGR [26] 提出使用不同粒度的 N-gram 文档标识符 (DocIDs) 来适应各种下游任务,将不同的检索任务统一为单一的生成形式。UGR 通过让 GR 模型学习特定于任务的提示 (prompts),生成相应的文档、段落、句子或实体标识符来实现这一目标。

Futhermore, DearDR [283] utilizes remote supervision and self-supervised learning techniques, using Wikipedia page titles and hyperlinks as training data. The model samples sentences from Wikipedia documents as input and trains a self-regressive model to decode page titles or hyperlinks, or both, without the need for manually labeled data. Re3val [257] proposes a retrieval framework combining generative reordering and reinforcement learning. It first reorders retrieved page titles using context information obtained from a dense retriever, then optimizes the reordering using the REINFORCE algorithm to maximize rewards generated by constrained decoding.

此外,DearDR [283] 采用远程监督和自监督学习技术,利用维基百科页面标题和超链接作为训练数据。该模型从维基百科文档中采样句子作为输入,并训练一个自回归模型来解码页面标题或超链接(或两者),无需手动标注数据。Re3val [257] 提出了一种结合生成式重排序和强化学习的检索框架。它首先使用密集检索器获取的上下文信息对检索到的页面标题进行重排序,然后通过 REINFORCE 算法优化排序,以最大化由约束解码生成的奖励。

Multi-hop retrieval. In multi-hop retrieval tasks, which require iterative document retrievals to gather adequate evidence for answering a query, GMR [131] proposed to employ language model memory and multi-hop memory to train a generative retrieval model, enabling it to memorize the target corpus and simulate real retrieval scenarios through constructing pseudo multi-hop query data, achieving dynamic stopping and efficient performance in multi-hop retrieval tasks.

多跳检索。在多跳检索任务中,需要通过迭代文档检索来收集足够的证据以回答查询,GMR [131] 提出利用语言模型记忆和多跳记忆训练生成式检索模型,使其能够记忆目标语料库,并通过构建伪多跳查询数据模拟真实检索场景,从而在多跳检索任务中实现动态停止和高效性能。

Code Retrieval. CodeDSI [203] is an end-to-end generative code search method that directly maps queries to pre-stored code samples’ DocIDs instead of generating new code. Similar to DSI [281], it includes indexing and retrieval stages, learning to map code samples and real queries to their respective DocIDs. CodeDSI explores different DocID representation strategies, including direct and clustered representation, as well as numerical and character representations.

代码检索。CodeDSI [203] 是一种端到端的生成式代码搜索方法,它直接将查询映射到预存储代码样本的 DocID,而非生成新代码。与 DSI [281] 类似,它包含索引和检索两个阶段,学习将代码样本和真实查询映射到各自的 DocID。CodeDSI 探索了不同的 DocID 表示策略,包括直接表示和聚类表示,以及数值和字符表示。

Conversational Question Answering. GCoQA [158] is a generative retrieval method for conversational QA systems that directly generates DocIDs for passage retrieval. This method focuses on key information in the dialogue context at each decoding step, achieving more precise and efficient passage retrieval and answer generation, thereby improving retrieval performance and overall system efficiency.

会话问答。GCoQA [158] 是一种用于会话问答系统的生成式检索方法,可直接生成用于段落检索的 DocID。该方法在每一步解码时聚焦对话上下文中的关键信息,实现更精准高效的段落检索与答案生成,从而提升检索性能及系统整体效率。

3.4.2 Joint Training. The methods in the previous section involve separately training generative retrievers and downstream task generators. However, due to the inherent nature of GR models as generative models, a natural advantage lies in their ability to be jointly trained with downstream generators to obtain a unified model for retrieval and generation tasks.

3.4.2 联合训练。上一节的方法涉及分别训练生成式检索器(Generative Retriever)和下游任务生成器。然而,由于GR模型作为生成式模型的固有特性,其天然优势在于能够与下游生成器进行联合训练,从而获得一个适用于检索和生成任务的统一模型。

Multi-decoder Structure. UniGen [155] proposes a unified generation framework to integrate retrieval and question answering tasks, bridging the gap between query input and generation targets using connectors generated by large language models. UniGen employs shared encoders and task-specific decoders for retrieval and question answering, introducing iterative enhancement strategies to continuously improve the performance of both tasks.

多解码器结构。UniGen [155] 提出了一种统一的生成框架,用于整合检索和问答任务,通过大语言模型生成的连接器弥合查询输入与生成目标之间的差距。UniGen 采用共享编码器和任务专用解码器分别处理检索与问答,并引入迭代增强策略持续提升两项任务的性能。

Multi-task Training. Later, CorpusLM [152] introduces a unified language model that integrates GR, closed-book generation, and retrieval-augmented generation to handle various knowledgeintensive tasks. The model adopts a multi-task learning approach and introduces ranking-guided DocID decoding strategies and continuous generation strategies to improve retrieval and generation performance. In addition, CorpusLM designs a series of auxiliary DocID understanding tasks to deepen the model’s understanding of DocID semantics.

多任务训练。随后,CorpusLM [152] 提出了一种统一的大语言模型,整合了生成式检索 (GR)、闭卷生成和检索增强生成,以处理各种知识密集型任务。该模型采用多任务学习方法,并引入了基于排名的DocID解码策略和连续生成策略,以提升检索和生成性能。此外,CorpusLM还设计了一系列辅助DocID理解任务,以加深模型对DocID语义的理解。

3.4.3 Multi-modal Generative Retrieval. Generative retrieval methods can also leverage multimodal data such as text, images, etc., to achieve end-to-end multi-modal retrieval.

3.4.3 多模态生成式检索。生成式检索方法同样可利用文本、图像等多模态数据,实现端到端的多模态检索。

Tokenizing Images to DocID Sequences. At first, IRGen [357] transforms image retrieval problems into generative problems, predicting relevant discrete visual tokens, i.e., image identifiers, through a seq2seq model given a query image. IRGen proposed a semantic image tokenizer, which converts global image features into short sequences capturing high-level semantic information.

将图像转换为DocID序列的Token化方法。首先,IRGen [357] 将图像检索问题转化为生成式问题,通过一个seq2seq模型在给定查询图像的情况下预测相关的离散视觉token(即图像标识符)。IRGen提出了一种语义图像token化器,能够将全局图像特征转换为捕捉高层语义信息的短序列。

Advanced Model Training and Structure. Later, GeMKR [178] combines LLMs’ generation capabilities with visual-text features, designing a generative knowledge retrieval framework. It first guides multi-granularity visual learning using object-aware prefix tuning techniques to align visual features with LLMs’ text feature space, achieving cross-modal interaction. GeMKR then employs a two-step retrieval process: generating knowledge clues closely related to the query and then retrieving corresponding documents based on these clues. GRACE [178] achieves generative cross-modal retrieval method by assigning unique identifier strings to images and training multimodal large language models (MLLMs) [7] to memorize the association between images and their identifiers. The training process includes (1) learning to memorize images and their corresponding identifiers, and (2) learning to generate the target image identifiers from textual queries. GRACE explores various types of image identifiers, including strings, numbers, semantic and atomic identifiers, to adapt to different memory and retrieval requirements.

高级模型训练与结构。随后,GeMKR [178] 将大语言模型的生成能力与视觉-文本特征相结合,设计了一个生成式知识检索框架。它首先利用对象感知前缀调优技术引导多粒度视觉学习,将视觉特征与大语言模型的文本特征空间对齐,实现跨模态交互。GeMKR 随后采用两步检索流程:生成与查询密切相关的知识线索,然后根据这些线索检索对应文档。GRACE [178] 通过为图像分配唯一标识符字符串并训练多模态大语言模型 (MLLMs) [7] 来记忆图像与其标识符的关联,实现了生成式跨模态检索方法。训练过程包括:(1) 学习记忆图像及其对应标识符,(2) 学习从文本查询生成目标图像标识符。GRACE 探索了多种类型的图像标识符,包括字符串、数字、语义和原子标识符,以适应不同的记忆和检索需求。

3.4.4 Generative Recommend er Systems. Recommendation systems, as an integral part of the information retrieval, are currently undergoing a paradigm shift from disc rim i native models to generative models. Generative recommendation systems do not require the computation of ranking scores for each item followed by database indexing, but instead accomplish item recommendations through the direct generation of IDs. In this section, several seminal works, including P5 [74], GPT4Rec [146], TIGER [233], SEATER [254], IDGenRec [273], LC-Rec [360] and ColaRec [309], are summarized to outline the development trends in generative recommendations.

3.4.4 生成式推荐系统

推荐系统作为信息检索的重要组成部分,当前正经历从判别式模型向生成式模型的范式转变。生成式推荐系统无需计算每个物品的排序分数再进行数据库索引,而是通过直接生成ID来完成物品推荐。本节总结了P5 [74]、GPT4Rec [146]、TIGER [233]、SEATER [254]、IDGenRec [273]、LC-Rec [360]和ColaRec [309]等开创性工作,以概述生成式推荐的发展趋势。

P5 [74] transforms various recommendation tasks into different natural language sequences, designing a universal, shared framework for recommendation completion. This method, by setting unique training objectives, prompts, and prediction paradigms for each recommendation domain’s downstream tasks, serves well as a backbone model, accomplishing various recommendation tasks through generated text. In generative retrieval, effective indexing identifiers have been proven to significantly enhance the performance of generative methods. Similarly, TIGER [233] initially learns a residual quantized auto encoder to generate semantically informative indexing identifiers for different items. It then trains a transformer-based encoder-decoder model with this semantically informative indexing identifier sequence to generate item identifiers for recommending the next item based on historical sequences.

P5 [74] 将各类推荐任务转化为不同的自然语言序列,设计了一个通用的共享框架来完成推荐。该方法通过为每个推荐领域的下游任务设置独特的训练目标、提示和预测范式,很好地充当了骨干模型,通过生成文本来完成各种推荐任务。在生成式检索中,有效的索引标识符已被证明能显著提升生成方法的性能。类似地,TIGER [233] 首先学习残差量化自编码器,为不同物品生成具有语义信息的索引标识符,随后利用这些语义丰富的索引标识符序列训练基于Transformer的编码器-解码器模型,从而根据历史序列生成物品标识符来推荐下一个物品。

Focusing solely on semantic information and overlooking the collaborative filtering information under the recommendation context might limit the further development of generative models. Therefore, after generating semantic indexing identifiers similar to TIGER using a residual quantized auto encoder with uniform semantic mapping, LC-Rec [360] also engages in a series of alignment tasks, including sequential item prediction, explicit index-language alignment, and recommendation-oriented implicit alignment. Based on the learned item identifiers, it integrates semantic and collaborative information, enabling large language models to better adapt to sequence recommendation tasks.

仅关注语义信息而忽略推荐场景下的协同过滤信息,可能会限制生成模型的进一步发展。因此,在通过具有统一语义映射的残差量化自编码器生成类似TIGER的语义索引标识符后,LC-Rec [360]还进行了一系列对齐任务,包括序列物品预测、显式索引-语言对齐以及面向推荐的隐式对齐。基于学习到的物品标识符,它整合了语义和协同信息,使大语言模型能更好地适应序列推荐任务。

IDGenRec [273] innovative ly combines generative recommendation systems with large language models by using human language tokens to generate unique, concise, semantically rich and platform-agnostic texual identifiers for recommended items. The framework includes a text ID generator trained on item metadata with a diversified ID generation algorithm, and an alternating training strategy that optimizes both the ID generator and the LLM-based recommendation model for improved performance and accuracy in sequential recommendations. SEATER [254] designs a balanced $\mathbf{k}$ -ary tree-structured indexes, using a constrained $\mathbf{k}$ -means clustering method to recursively cluster vectors encoded from item texts, obtaining equal-length identifiers. Compared to the method proposed by DSI [281], this balanced $\mathbf{k}$ -ary tree index maintains semantic consistency at every level. It then trains a Transformer-based encoder-decoder model and enhances the semantics of each level of indexing through contrastive learning and multi-task learning. ColaRec [309] integrates collaborative filtering signals and content information by deriving generative item identifiers from a pretrained recommendation model and representing users via aggregated item content. Then it uses an item indexing generation loss and contrastive loss to align content-based semantic spaces with collaborative interaction spaces, enhancing the model’s ability to recommend items in an end-to-end framework.

IDGenRec [273] 创新性地将生成式推荐系统与大语言模型相结合,通过使用人类语言token为推荐项生成独特、简洁、语义丰富且与平台无关的文本标识符。该框架包含一个基于物品元数据训练的文本ID生成器(采用多样化ID生成算法),以及一种交替训练策略,可同步优化ID生成器和基于大语言模型的推荐模型,从而提升序列推荐的性能和准确性。SEATER [254] 设计了平衡的 $\mathbf{k}$ 叉树结构索引,采用约束型 $\mathbf{k}$ 均值聚类方法递归聚类从物品文本编码得到的向量,生成等长标识符。与DSI [281] 提出的方法相比,这种平衡 $\mathbf{k}$ 叉树索引在每一层级都保持语义一致性。随后训练基于Transformer的编码器-解码器模型,并通过对比学习和多任务学习增强各层级索引的语义。ColaRec [309] 通过从预训练推荐模型中导出生成式物品标识符,并基于聚合物品内容表示用户,从而整合协同过滤信号与内容信息。接着使用物品索引生成损失和对比损失,将基于内容的语义空间与协同交互空间对齐,增强模型在端到端框架中的物品推荐能力。

4 RELIABLE RESPONSE GENERATION: DIRECT INFORMATION ACCESSING WITHGENERATIVE LANGUAGE MODELS

4 可靠的响应生成:利用生成式语言模型直接获取信息

The rapid advancement of large language models has positioned them as a novel form of IR system, capable of generating reliable responses directly aligned with users’ informational needs. This not only saves the time users would otherwise spend on collecting and integrating information but also provides personalized, user-centric answers tailored to individual users.

大语言模型的快速发展使其成为一种新型的信息检索(IR)系统,能够直接生成与用户信息需求高度匹配的可靠响应。这不仅节省了用户原本需要花费在收集和整合信息上的时间,还能提供针对个体用户量身定制的个性化、以用户为中心的答案。

However, challenges remain in creating a grounded system that delivers faithful answers, such as hallucination, prolonged inference time, and high operational costs. This section will outline strategies for constructing a faithful GenIR system by: (1) Optimizing the GenIR model internally, (2) Enhancing the model with external knowledge, (3) Increasing accountability, and (4) Developing personalized information assistants.

然而,构建一个能提供真实答案的可靠系统仍面临诸多挑战,例如幻觉问题、推理时间过长以及高昂的运营成本。本节将概述构建可信生成式信息检索(Generative IR)系统的策略:(1) 内部优化GenIR模型,(2) 通过外部知识增强模型,(3) 提高问责机制,(4) 开发个性化信息助手。

4.1 Internal Knowledge Memorization

4.1 内部知识记忆

To develop a user-friendly and reliable IR system, the generative model should be equipped with comprehensive internal knowledge. Optimization of the backbone generative model can be categorized into three aspects: structural enhancements, training strategies, and inference techniques. The overview of this section is shown in the green part of Figure 5.

为开发用户友好且可靠的信息检索(IR)系统,生成式模型需具备全面的内部知识。主干生成模型的优化可分为三个层面:结构增强、训练策略和推理技术。本节概述如图5绿色部分所示。

4.1.1 Model Structure. With the advent of generative models, various methods have been introduced to improve model structure and enhance generative reliability. We aim to discuss the crucial technologies contributing to this advancement in this subsection.

4.1.1 模型结构

随着生成式模型(Generative Model)的出现,各种改进模型结构并增强生成可靠性的方法被提出。本节将重点讨论推动这一进步的关键技术。

Fig. 5. An Illustration of strategies for enhancing language models to generate user-centric and reliable responses, including model internal knowledge memorization and external knowledge augmentation.

图 5: 提升语言模型生成以用户为中心且可靠回答的策略示意图,包括模型内部知识记忆和外部知识增强。