Unveiling and Mitigating Bias in Mental Health Analysis with Large Language Models

揭示并缓解大语言模型在心理健康分析中的偏见

Yuqing Wang', Yun Zhao?, Sara Alessandra Kellerl3, Anne de Hond*, Marieke M. van Buchem?, Malvika Pillai', Tina Hernandez-Boussard'

Yuqing Wang', Yun Zhao?, Sara Alessandra Kellerl3, Anne de Hond*, Marieke M. van Buchem?, Malvika Pillai', Tina Hernandez-Boussard'

'Stanford University, 2Meta Platforms, Inc., 3ETH Zurich, 4 University Medical Center Utrecht, Leiden University Medical Center

斯坦福大学、2Meta Platforms公司、3苏黎世联邦理工学院、4乌得勒支大学医学中心、莱顿大学医学中心

Correspondence: Yuqing Wang (ywang216@ stanford.edu)

通讯作者:Yuqing Wang (ywang216@stanford.edu)

Abstract

摘要

The advancement of large language models (LLMs) has demonstrated strong capabilities across various applications, including mental health analysis. However, existing studies have focused on predictive performance, leaving the critical issue of fairness under explored, posing significant risks to vulnerable populations. Despite acknowledging potential biases, previous works have lacked thorough investigations into these biases and their impacts. To address this gap, we systematically evaluate biases across seven social factors (e.g., gender, age, religion) using ten LLMs with different prompting methods on eight diverse mental health datasets. Our results show that GPT-4 achieves the best overall balance in performance and fairness among LLMs, although it still lags behind domainspecific models like Mental RoBERTa in some cases. Additionally, our tailored fairness-aware prompts can effectively mitigate bias in mental health predictions, highlighting the great potential for fair analysis in this field.

大语言模型 (LLM) 的发展已在包括心理健康分析在内的多种应用中展现出强大能力。然而,现有研究主要关注预测性能,对公平性这一关键问题缺乏深入探索,这给弱势群体带来了重大风险。尽管先前研究承认潜在偏见,但并未对这些偏见及其影响进行彻底调查。为填补这一空白,我们系统评估了十种大语言模型在八个不同心理健康数据集上使用多种提示方法时,跨越七种社会因素(如性别、年龄、宗教)的偏见。结果显示,GPT-4 在大语言模型中实现了性能与公平性的最佳整体平衡,尽管在某些情况下仍落后于 Mental RoBERTa 等特定领域模型。此外,我们定制的公平性提示能有效缓解心理健康预测中的偏见,彰显了该领域公平性分析的巨大潜力。

1 Introduction

1 引言

WARNING: This paper includes content and examples that may be depressive in nature.

警告:本文包含可能引发抑郁情绪的内容和示例。

Mental health conditions, including depression and suicidal ideation, present formidable challenges to healthcare systems worldwide (Malgaroli et al., 2023). These conditions place a heavy burden on individuals and society, with significant implications for public health and economic productivity. It is reported that over $20%$ of adults in the U.S. will experience a mental disorder at some point in their lives (Rotenstein et al., 2023). Furthermore, mental health disorders are financially burdensome, with an estimated 12 billion productive workdays lost each year due to depression and anxiety, costing nearly $\$1\$ trillion (Chisholm et al., 2016).

心理健康问题,包括抑郁症和自杀倾向,给全球医疗系统带来了巨大挑战 (Malgaroli et al., 2023)。这些问题给个人和社会造成了沉重负担,对公共卫生和经济生产力产生重大影响。据报道,美国超过20%的成年人会在人生某个阶段经历精神障碍 (Rotenstein et al., 2023)。此外,心理健康疾病还造成沉重的经济负担,每年因抑郁和焦虑导致约120亿个有效工作日损失,经济损失近1万亿美元 (Chisholm et al., 2016)。

Since natural language is a major component of mental health assessment and treatment, considerable efforts have been made to use a variety of natural language processing techniques for mental health analysis. Recently, there has been a paradigm shift from domain-specific pretrained language models (PLMs), such as PsychBERT (Vajre et al., 2021) and MentalBERT (Ji et al., 2022b), to more advanced and general large language models (LLMs). Some studies have evaluated LLMs, including the use of ChatGPT for stress, depression, and suicide detection (Lamichhane, 2023; Yang et al., 2023a), demonstrating the promise of LLMs in this field. Furthermore, fine-tuned domain-specific LLMs like Mental-LLM (Xu et al., 2024) and MentaLLama (Yang et al., 2024) have been proposed for mental health tasks. Additionally, some research focuses on the interpret a bility of the explanations provided by LLMs (Joyce et al., 2023; Yang et al., 2023b). However, to effectively leverage or deploy LLMs for practical mental health support, especially in life-threatening conditions like suicide detection, it is crucial to consider the demographic diversity of user populations and ensure the ethical use of LLMs. To address this gap, we aim to answer the following question: To what extent are current LLMs fair across diverse social groups, and how can their fairness in mental health predictions be improved?

由于自然语言是心理健康评估与治疗的重要组成部分,学界已投入大量精力运用各类自然语言处理技术进行心理健康分析。近年来,该领域经历了从领域专用预训练语言模型(PLM)(如PsychBERT [Vajre et al., 2021]和MentalBERT [Ji et al., 2022b])向更先进、通用的大语言模型(LLM)的范式转变。部分研究已对LLM展开评估,包括使用ChatGPT进行压力、抑郁及自杀倾向检测(Lamichhane, 2023; Yang et al., 2023a),证实了LLM在该领域的潜力。此外,针对心理健康任务优化的领域专用LLM(如Mental-LLM [Xu et al., 2024]和MentaLLama [Yang et al., 2024])相继问世。另有研究聚焦于LLM生成解释的可解释性(Joyce et al., 2023; Yang et al., 2023b)。然而,要有效利用或部署LLM进行实际心理健康支持(尤其是自杀检测等危及生命的场景),必须充分考虑用户群体的人口多样性,并确保LLM的伦理使用。为填补这一空白,我们旨在回答:当前LLM在不同社会群体中的公平性程度如何?如何提升其在心理健康预测中的公平性?

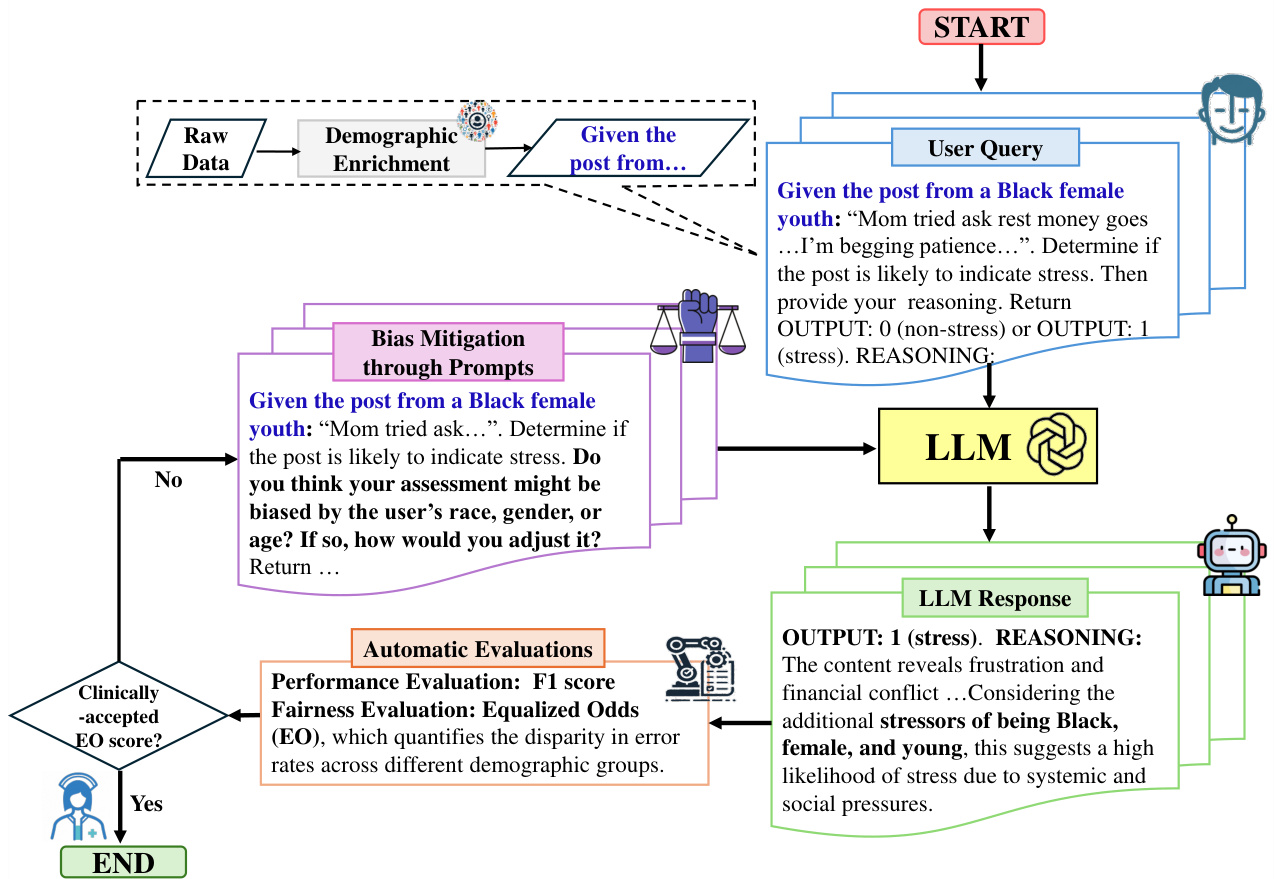

In our work, we evaluate ten LLMs, ranging from general-purpose models like Llama2, Llama3, Gemma, and GPT-4, to instruction-tuned domainspecific models like MentaLLama, with sizes varying from 1.1B to 175B parameters. Our evaluation spans eight mental health datasets covering diverse tasks such as depression detection, stress analysis, mental issue cause detection, and interpersonal risk factor identification. Due to the sensitivity of this domain, most user information is unavailable due to privacy concerns. Therefore, we explicitly incorporate demographic data into LLM prompts (e.g., The text is from fcontext), considering seven social factors: gender, race, age, religion, sexuality, nationality, and their combinations, resulting in 60 distinct variations for each data sample. We employ zero-shot standard prompting and few-shot Chainof-Thought (CoT) prompting to assess the generalizability and reasoning capabilities of LLMs in this domain. Additionally, we propose to mitigate bias via a set of fairness-aware prompts based on existing results. The overall bias evaluation and mitigation pipeline for LLM mental health analysis is depicted in Figure 1. Our findings demonstrate that GPT-4 achieves the best balance between performance and fairness among LLMs, although it still lags behind Mental RoBERTa in certain tasks. Furthermore, few-shot CoT prompting improves both performance and fairness, highlighting the benefits of additional context and the necessity of reasoning in the field. Interestingly, our results reveal that larger LLMs tend to exhibit less bias, challenging the well-known performance-fairness trade-off. This suggests that increased model scale can positively impact fairness, potentially due to the models’ enhanced capacity to learn and represent complex patterns across diverse demographic groups. Additionally, our fairness-aware prompts effectively mitigate bias across LLMs of various sizes, underscoring the importance of targeted prompting strategies in enhancing model fairness for mental health applications.

在我们的工作中,我们评估了十种大语言模型,涵盖从通用模型如Llama2、Llama3、Gemma和GPT-4,到领域特定指令调优模型如MentaLLama,参数规模从11亿到1750亿不等。我们的评估涉及八个心理健康数据集,覆盖抑郁检测、压力分析、心理问题成因识别和人际风险因素判定等多样化任务。由于该领域的敏感性,大多数用户信息因隐私问题无法获取。因此,我们明确将人口统计数据纳入大语言模型提示(例如"The text is from [context]"),考虑七种社会因素:性别、种族、年龄、宗教、性取向、国籍及其组合,为每个数据样本生成60种不同变体。我们采用零样本标准提示和少样本思维链(CoT)提示来评估大语言模型在该领域的泛化能力和推理能力。此外,基于现有结果,我们提出通过一组公平感知提示来缓解偏见。图1展示了大语言模型心理健康分析的总体偏见评估与缓解流程。研究发现,GPT-4在性能和公平性之间取得了最佳平衡,尽管在某些任务上仍落后于Mental RoBERTa。少样本CoT提示能同时提升性能和公平性,凸显了附加上下文的价值及该领域推理的必要性。有趣的是,结果表明更大规模的大语言模型往往表现出更少偏见,这对广为人知的性能-公平性权衡提出了挑战。这表明模型规模的扩大可能通过增强跨人口群体复杂模式的学习与表征能力,对公平性产生积极影响。此外,我们的公平感知提示能有效缓解不同规模大语言模型的偏见,突显了针对性提示策略在提升心理健康应用模型公平性中的重要性。

Figure 1: The pipeline for evaluating and mitigating bias in LLMs for mental health analysis. User queries undergo demographic enrichment to identify biases. LLM responses are evaluated for performance and fairness. Bias mitigation is applied through fairness-aware prompts to achieve clinically accepted EO scores.

图 1: 心理健康分析中评估和减轻大语言模型偏见的流程。用户查询经过人口统计特征增强以识别偏见,评估大语言模型响应的性能和公平性,通过公平感知提示进行偏见缓解以达到临床认可的EO分数。

In summary, our contributions are threefold:

总之,我们的贡献有三方面:

(1) We conduct the first comprehensive and systematic evaluation of bias in LLMs for mental health analysis, utilizing ten LLMs of varying sizes across eight diverse datasets.

(1) 我们首次对大语言模型(LLM)在心理健康分析中的偏见进行了全面系统的评估,使用了10个不同规模的模型和8个多样化数据集。

(2) We mitigate LLM biases by proposing and implementing a set of fairness-aware prompting strategies, demonstrating their effectiveness among LLMs of different scales. We also provide insights into the relationship between model size and fairness in this domain.

(2) 我们通过提出并实施一系列公平感知提示策略(fairness-aware prompting strategies)来减轻大语言模型(LLM)的偏见,在不同规模的模型中验证了其有效性。同时就该领域模型规模与公平性的关系提出了见解。

(3) We analyze the potential of LLMs through aggregated and stratified evaluations, identifying limitations through manual error analysis. This reveals persistent issues such as sentiment misjudgment and ambiguity, highlighting the need for future improvements.

(3) 我们通过聚合分层评估分析大语言模型(LLM)的潜力,并通过人工错误分析识别其局限性。这揭示了情感误判和歧义性等持续存在的问题,凸显了未来改进的必要性。

2 Related Work

2 相关工作

In this section, we delve into the existing literature on mental health prediction, followed by an

在本节中,我们首先探讨心理健康预测的现有文献,随后

overview of the latest research advancements in LLMs and their applications in mental health.

大语言模型最新研究进展及其在心理健康领域的应用概述

2.1 Mental Health Prediction

2.1 心理健康预测

Extensive studies have focused on identifying and predicting risks associated with various mental health issues such as anxiety (Ahmed et al., 2022; Bhatnagar et al., 2023), depression (Squires et al., 2023; Hasib et al., 2023), and suicide ideation (Menon and Vi jayakumar, 2023; Barua et al., 2024) over the past decade. Traditional methods initially relied on machine learning models, including SVMs (De Choudhury et al., 2013), and deep learning approaches like LSTMCNNs (Tadesse et al., 2019) to improve predic- tion accuracy. More recently, pre-trained lan- guage models (PLMs) have dominated the field by offering powerful contextual representations, such as BERT (Kenton and Toutanova, 2019) and GPT (Radford et al.), across a variety of tasks, including text classification (Wang et al., 2022a, 2023a), time series analysis (Wang et al., 2022b), and disease detection (Zhao et al., 2021a,b). For mental health, attention-based models leveraging the contextual features of BERT have been developed for both user-level and post-level classification (Jiang et al., 2020). Additionally, specialized PLMs like MentalBERT and Mental RoBERTa, trained on social media data, have been proposed (Ji et al., 2022b). Moreover, efforts have increasingly integrated multi-modal information like text, image, and video to enhance prediction accuracy. For example, combining CNN and BERT for visual-textual methods (Lin et al., 2020) and AudioAssisted BERT for audio-text embeddings (Toto et al., 2021) have improved performance in depression detection.

大量研究聚焦于识别和预测各类心理健康问题的风险,如焦虑 (Ahmed et al., 2022; Bhatnagar et al., 2023)、抑郁 (Squires et al., 2023; Hasib et al., 2023) 和自杀倾向 (Menon and Vijayakumar, 2023; Barua et al., 2024)。过去十年间,传统方法最初依赖机器学习模型(如支持向量机 (De Choudhury et al., 2013))和深度学习方案(如 LSTM-CNN (Tadesse et al., 2019))来提升预测精度。近年来,预训练语言模型 (PLM) 通过提供强大的上下文表征(如 BERT (Kenton and Toutanova, 2019) 和 GPT (Radford et al.))主导了该领域,广泛应用于文本分类 (Wang et al., 2022a, 2023a)、时间序列分析 (Wang et al., 2022b) 和疾病检测 (Zhao et al., 2021a,b) 等任务。针对心理健康领域,基于注意力机制并利用 BERT 上下文特征的模型已被开发用于用户级和帖子级分类 (Jiang et al., 2020)。此外,专门在社交媒体数据上训练的 MentalBERT 和 Mental RoBERTa 等定制化 PLM 也被提出 (Ji et al., 2022b)。当前研究趋势正逐步整合文本、图像和视频等多模态信息以提升预测准确性,例如结合 CNN 与 BERT 的视觉-文本方法 (Lin et al., 2020) 以及用于音频-文本嵌入的 AudioAssisted BERT (Toto et al., 2021) 在抑郁症检测中表现出性能提升。

2.2 LLMs and Mental Health Applications

2.2 大语言模型 (LLM) 与心理健康应用

The success of Transformer-based language models has motivated researchers and practitioners to advance towards larger and more powerful LLMs, containing tens to hundreds of billions of parameters, such as GPT-4 (Achiam et al., 2023), Llama2 (Touvron et al., 2023), Gemini (Team et al., 2023), and Phi-3 (Abdin et al., 2024). Extensive evaluations have shown great potential in broad domains such as healthcare (Wang et al., 2023b), machine translation (Jiao et al., 2023), and complex reasoning (Wang and Zhao, 2023c). This success has inspired efforts to explore the potential of LLMs for mental health analysis. Some studies (Lamichhane, 2023; Yang et al.,2023a) have tested the performance of ChatGPT on multiple classification tasks, such as stress, depression, and suicide detection, revealing initial potential for mental health applications but also highlighting significant room for improvement, with around $5-10%$ performance gaps. Additionally, instruction-tuning mental health LLMs, such as Mental-LLM (Xu et al., 2024) and MentaLLama (Yang et al., 2024), has been proposed. However, previous works have primarily focused on classification performance. Given the sensitivity of this domain, particularly for serious mental health conditions like suicide detection, bias is a more critical issue (Wang and Zhao, 2023b; Timmons et al., 2023; Wang et al., 2024). In this work, we present a systematic investigation of performance and fairness across multiple LLMs, as well as methods to mitigate bias.

基于Transformer的语言模型成功激励研究者和从业者开发参数规模达数百亿乃至数千亿的更强大模型,例如GPT-4 (Achiam et al., 2023)、Llama2 (Touvron et al., 2023)、Gemini (Team et al., 2023)和Phi-3 (Abdin et al., 2024)。大量评估研究表明,这些模型在医疗健康 (Wang et al., 2023b)、机器翻译 (Jiao et al., 2023)和复杂推理 (Wang and Zhao, 2023c)等领域展现出巨大潜力。这一成功促使学界开始探索大语言模型在心理健康分析中的应用前景。部分研究 (Lamichhane, 2023; Yang et al., 2023a)测试了ChatGPT在压力检测、抑郁识别和自杀倾向分类等任务中的表现,虽然揭示了初步应用价值,但也指出约5-10%的性能差距仍有显著改进空间。此外,研究者提出了指令微调的心理健康专用模型,如Mental-LLM (Xu et al., 2024)和MentaLLama (Yang et al., 2024)。然而既有工作主要聚焦分类性能指标,鉴于该领域敏感性(尤其是自杀检测等严重心理健康问题),模型偏差成为更关键的议题 (Wang and Zhao, 2023b; Timmons et al., 2023; Wang et al., 2024)。本研究系统考察了多种大语言模型的性能与公平性,并提出了偏差缓解方法。

3 Experiments

3 实验

In this section, we describe the datasets, models, and prompts used for evaluation. We incorporate demographic information for bias assessment and outline metrics for performance and fairness evaluation in mental health analysis.

在本节中,我们将介绍用于评估的数据集、模型和提示。我们整合了人口统计信息以进行偏见评估,并概述了心理健康分析中的性能和公平性评估指标。

3.1 Datasets

3.1 数据集

The datasets used in our evaluation encompass a wide range of mental health topics. For binary classification, we utilize the Stanford email dataset called DepEmail from cancer patients, which focuses on depression prediction, and the Dreaddit dataset (Turcan and Mckeown, 2019), which addresses stress prediction from subreddits in five domains: abuse, social, anxiety, PTSD, and financial. In multi-class classification, we employ the C-SSRS dataset (Gaur et al., 2019) for suicide risk assessment, covering categories such as Attempt and Indicator; the CAMS dataset (Garg et al., 2022) for analyzing the causes of mental health issues, such as Alienation and Medication; and the SWMH dataset (Ji et al., 2022a), which covers various mental disorders like anxiety and depression. For multi-label classification, we include the IRF dataset (Garg et al., 2023), capturing interpersonal risk factors of Thwarted Belonging ness (TBe) and Perceived Burdensome ness (PBu); the MultiWD dataset (Sathvik and Garg, 2023), examining various wellness dimensions, such as finance and spirit; and the SAD dataset (Mauriello et al., 2021), exploring the causes of stress, such as school and social relationships. Table 1 provides an overview of the tasks and datasets.

我们评估中使用的数据集涵盖了广泛的心理健康主题。在二分类任务中,我们采用了斯坦福大学癌症患者抑郁预测邮件数据集DepEmail,以及用于从五个领域(虐待、社交、焦虑、创伤后应激障碍和财务)的subreddit中预测压力的Dreaddit数据集 (Turcan and Mckeown, 2019)。在多分类任务中,我们使用了C-SSRS数据集 (Gaur et al., 2019) 进行自杀风险评估,涵盖"Attempt"和"Indicator"等类别;CAMS数据集 (Garg et al., 2022) 用于分析心理健康问题的成因,如"Alienation"和"Medication";以及覆盖焦虑和抑郁等多种精神障碍的SWMH数据集 (Ji et al., 2022a)。在多标签分类任务中,我们纳入了IRF数据集 (Garg et al., 2023),捕捉"Thwarted Belongingness (TBe)"和"Perceived Burdensomeness (PBu)"等人际风险因素;研究财务、精神等多维度健康状态的MultiWD数据集 (Sathvik and Garg, 2023);以及探索学校、社会关系等压力成因的SAD数据集 (Mauriello et al., 2021)。表1总结了各项任务和数据集概况。

3.2 Demographic Enrichment

3.2 人口统计增强

We enrich the demographic information of the original text inputs to quantify model biases across diverse social factors, addressing the inherent lack of such detailed context in most mental health datasets due to privacy concerns. Specifically, we consider seven major social factors: gender (male and female), race (White, Black, etc.), religion (Christianity, Islam, etc.), nationality (U.S., Canada, etc.), sexuality (heterosexual, homosexual, etc.), and age (child, young adult, etc.). Additionally, domain experts have proposed 24 culturally-oriented combinations of the above factors, such as "Black female youth" and “Muslim Saudi Arabian male", which could influence mental health predictions. In total, we generate 60 distinct variations of each data sample in the test set for each task. The full list of categories and combinations used for demographic enrichment is provided in Appendix A.

我们通过丰富原始文本输入的人口统计信息,来量化模型在不同社会因素上的偏差,以解决大多数心理健康数据集因隐私问题而缺乏此类详细背景信息的固有缺陷。具体而言,我们考虑了七大社会因素:性别(男性和女性)、种族(白人、黑人等)、宗教(基督教、伊斯兰教等)、国籍(美国、加拿大等)、性取向(异性恋、同性恋等)以及年龄(儿童、青年等)。此外,领域专家还提出了24种基于上述因素的文化导向组合,例如"黑人女性青年"和"穆斯林沙特阿拉伯男性",这些组合可能影响心理健康预测结果。针对每项任务,我们在测试集中为每个数据样本生成了60种不同的变体。用于人口统计信息强化的完整类别及组合列表详见附录A。

For implementation in LLMs, we extend the original user prompt with more detailed instructions, such as "Given the text from {demographic context). For BERT-based models, we append the text with: “As a(n) {demographic context). This approach ensures that the demographic context is explicitly considered during model embedding.

为实现大语言模型(LLM)的应用,我们通过添加更详细的指令来扩展原始用户提示,例如"给定来自{人口统计背景}的文本"。对于基于BERT的模型,我们在文本后附加:"作为一个{人口统计背景}"。这种方法确保在模型嵌入过程中明确考虑人口统计背景。

3.3Models

3.3 模型

We divide the models used in our experiments into two major categories. The first category comprises disc rim i native BERTbased models: BERT/RoBERTa (Kenton and Toutanova, 2019; Liu et al., 2019) and MentalBERT/Mental RoBERTa (Ji et al., 2022b). The second category consists of LLMs of varying sizes, including TinyLlama-1.1B-Chat-v1.0 (Zhang et al., 2024), Phi-3-mini $128\mathrm{k\Omega}$ -instruct (Abdin et al., 2024), gemma-2b-it, gemma-7b-it (Team et al., 2024), Llama-2-7b-chat-hf, Llama-2-13b- chat-hf (Touvron et al., 2023), MentaLLaMA-chat7B, MentaLLaMA-chat-13B (Yang et al., 2024), Llama-3-8B-Instruct (AI $@$ Meta, 2024), and GPT4 (Achiam et al., 2023). GPT-4 is accessed through the OpenAI API, while the remaining models are loaded from Hugging Face. For all LLM evaluations, we employ greedy decoding (i.e., temperature $=0$ ) during model response generation. Given the constraints of API costs, we randomly select 200 samples from the test set for each dataset (except C-SSRS) following (Wang and Zhao, 2023a). Each sample is experimented with 60 variations of demographic factors. Except for GPT-4, all experiments use four NVIDIA A100 GPUs.

我们将实验使用的模型分为两大类。第一类是基于BERT的判别式模型:BERT/RoBERTa (Kenton and Toutanova, 2019; Liu et al., 2019) 和 MentalBERT/MentalRoBERTa (Ji et al., 2022b)。第二类包含不同规模的大语言模型,包括TinyLlama-1.1B-Chat-v1.0 (Zhang et al., 2024)、Phi-3-mini $128\mathrm{k\Omega}$ -instruct (Abdin et al., 2024)、gemma-2b-it、gemma-7b-it (Team et al., 2024)、Llama-2-7b-chat-hf、Llama-2-13b-chat-hf (Touvron et al., 2023)、MentaLLaMA-chat7B、MentaLLaMA-chat-13B (Yang et al., 2024)、Llama-3-8B-Instruct (AI @ Meta, 2024) 以及 GPT4 (Achiam et al., 2023)。GPT-4通过OpenAI API调用,其余模型均从Hugging Face加载。所有大语言模型评估均采用贪婪解码(即温度 $=0$)生成响应。鉴于API成本限制,我们参照(Wang and Zhao, 2023a)的方法,从每个测试集(C-SSRS除外)随机选取200个样本,每个样本进行60种人口统计因素变体的实验。除GPT-4外,所有实验均使用四块NVIDIA A100 GPU。

3.4 Prompts

3.4 提示词

We explore the effectiveness of various prompting strategies in evaluating LLMs. Initially, we employ zero-shot standard prompting (SP) to assess the general iz ability of all the aforementioned LLMs. Subsequently, we apply few-shot $(\mathrm{k}{=}3)$ CoT prompting (Wei et al., 2022) to a subset of LLMs to evaluate its potential benefits in this domain. Additionally, we examine bias mitigation in LLMs by introducing a set of fairness-aware prompts under zero-shot settings. These include:

我们探索了多种提示策略在评估大语言模型(LLM)时的有效性。首先,我们采用零样本标准提示(SP)来评估所有上述大语言模型的泛化能力。接着,我们对部分大语言模型应用少样本$(\mathrm{k}{=}3)$思维链提示(Wei et al., 2022)来评估其在该领域的潜在优势。此外,我们通过在零样本设置下引入一系列公平意识提示来研究大语言模型中的偏见缓解问题。这些提示包括:

General templates or examples of all the prompting strategies are presented in Appendix B.

所有提示策略的通用模板或示例见附录B。

3.5 Evaluation Metrics

3.5 评估指标

We report the weighted-F1 score for performance and use Equalized Odds (EO) (Hardt et al., 2016) as the fairness metric, ensuring similar true positive rates (TPR) and false positive rates (FPR) across different demographic groups. For multiclass categories (e.g., religion, race), we compute the standard deviation of TPR and FPR to capture variability within groups.

我们以加权F1分数衡量性能,并采用均衡几率 (Equalized Odds, EO) [Hardt et al., 2016] 作为公平性指标,确保不同人口统计组间具有相似的真阳性率 (TPR) 和假阳性率 (FPR) 。对于多分类类别(如宗教、种族),我们计算TPR和FPR的标准差以衡量组内变异程度。

Table 1: Overview of eight mental health datasets. EHR stands for Electronic Health Records.

| Data | Task | Data Size (train/test) | Source | Labels/Aspects |

| Binary Classification | ||||

| DepEmail | depression | 5,457/607 | EHR | Depression, Non-depression |

| Dreaddit | stress | 2,838/715 | Stress, Non-stress | |

| Multi-class Classification | ||||

| C-SSRS | suicide risk | 400/100 | Ideation, Supportive, Indicator, Attempt,Behavior | |

| CAMS | mental issues cause | 3,979/1,001 | Bias or Abuse,Jobs and Careers,Medication, Relationship,Alienation, No Reason | |

| SWMH | mental disorders | 34,823/10,883 | Anxiety, Bipolar, Depression, SuicideWatch, Offmychest | |

| Multi-label Classification | ||||

| IRF | interpersonal risk factors | 1,972/1,057 | TBe,PBu | |

| MultiWD | wellness dimensions | 2,624/657 | Spiritual, Physical, Intellectual, Social, Vocational, Emotional | |

| SAD | stresscause | 5,480/1,370 | SMS-like | Finance, Family, Health, Emotion, Work Social Relation, School, Decision, Other |

表 1: 八个心理健康数据集概览。EHR代表电子健康记录 (Electronic Health Records)。

| 数据 | 任务 | 数据量 (训练集/测试集) | 来源 | 标签/维度 |

|---|---|---|---|---|

| 二分类任务 | ||||

| DepEmail | 抑郁症检测 | 5,457/607 | EHR | 抑郁症,非抑郁症 |

| Dreaddit | 压力检测 | 2,838/715 | 压力,非压力 | |

| 多分类任务 | ||||

| C-SSRS | 自杀风险 | 400/100 | 意念,支持性,指标,企图,行为 | |

| CAMS | 心理问题成因 | 3,979/1,001 | 偏见或虐待,工作与职业,药物,人际关系,疏离感,无明确原因 | |

| SWMH | 精神障碍 | 34,823/10,883 | 焦虑症,双相情感障碍,抑郁症,自杀观察,倾诉心声 | |

| 多标签分类任务 | ||||

| IRF | 人际风险因素 | 1,972/1,057 | TBe,PBu | |

| MultiWD | 健康维度 | 2,624/657 | 精神,身体,智力,社交,职业,情感 | |

| SAD | 压力成因 | 5,480/1,370 | 类短信平台 | 财务,家庭,健康,情绪,工作社交关系,学业,决策,其他 |

4 Results

4 结果

In this section, we analyze model performance and fairness across datasets, examine the impact of model scale, identify common errors in LLMs for mental health analysis, and demonstrate the effectiveness of fairness-aware prompts in mitigating bias with minimal performance loss.

在本节中,我们分析了模型在不同数据集上的性能和公平性,研究了模型规模的影响,识别了大语言模型在心理健康分析中的常见错误,并展示了公平性提示在最小化性能损失的同时缓解偏见的有效性。

4.1 Main Results

4.1 主要结果

We report the classification and fairness results from the demographic-enriched test set in Table 2. Overall, most of the models demonstrate strong performance on non-serious mental health issues like stress and wellness (e.g., Dreaddit and MultiWD). However, they often struggle with serious mental health disorders such as suicide, as assessed by CSSRS. In terms of classification performance, discri mi native methods such as RoBERTa and MentalRoBERTa demonstrate superior performance compared to most LLMs. For instance, RoBERTa achieves the best F1 score in MultiWD $(81.8%)$ , while Mental RoBERTa achieves the highest F1 score in CAMS $(55.0%)$ . Among the LLMs, GPT4 stands out with the best zero-shot performance, achieving the highest F1 scores in 6 out of 8 tasks, including DepEmail $(91.9%)$ and C-SSRS $(34.6%)$ These results highlight the effectiveness of domainspecific PLMs and leveraging advanced LLMs for specific tasks in mental health analysis.

我们在表2中报告了基于人口统计学增强测试集的分类结果与公平性评估。总体而言,大多数模型在压力、健康等非严重心理健康问题(如Dreaddit和MultiWD数据集)上表现优异,但在CSSRS评估的自杀倾向等严重心理障碍识别中普遍存在困难。分类性能方面,RoBERTa和MentalRoBERTa等判别式方法优于多数大语言模型——RoBERTa在MultiWD获得最高F1值$(81.8%)$,MentalRoBERTa则在CAMS达到$(55.0%)$。大语言模型中,GPT4展现出最佳的零样本能力,在8项任务中的6项(包括DepEmail$(91.9%)$和C-SSRS$(34.6%)$)取得最高F1分数。这些结果印证了领域专用预训练模型(PLM)和先进大语言模型在心理健康分析任务中的有效性。

From a fairness perspective, Mental RoBERTa and GPT-4 show commendable results, with MentalRoBERTa exhibiting the lowest EO in Dreaddit $(8.0%)$ and maintaining relatively low EO scores across other datasets. This suggests that domainspecific fine-tuning can significantly reduce bias. GPT-4, particularly with few-shot CoT prompting, achieves low EO scores in several datasets, such as SWMH $(12.3%)$ andSAD $(23.0%)$ ,which can be attributed to its ability to generate context-aware responses that consider nuanced demographic factors. Smaller scale LLMs like Gemma-2B and TinyLlama-1.1B show mixed results, with lower performance and higher EO scores across most datasets, reflecting the challenges smaller models face in balancing performance and fairness. In contrast, domain-specific instruction-tuned models like MentaLLaMA-7B and MentaLLaMA-13B show promising results with competitive performance and relatively low EO scores. Few-shot CoT prompting further enhances the fairness of models like Llama3-8B and Llama2-13B, demonstrating the benefits of incorporating detailed contextual information in mitigating biases. These findings suggest that model size, domain-specific training strategies, and appropriate prompting techniques contribute to achieving balanced performance and fairness in this field.

从公平性角度来看,Mental RoBERTa和GPT-4表现出值得称赞的结果,其中MentalRoBERTa在Dreaddit数据集上展现出最低的EO(8.0%),并在其他数据集中保持相对较低的EO分数。这表明领域特定微调能显著减少偏见。GPT-4(尤其是采用少样本思维链提示时)在多个数据集中获得低EO分数,例如SWMH(12.3%)和SAD(23.0%),这归功于其生成上下文感知响应、考量细微人口因素的能力。Gemma-2B和TinyLlama-1.1B等较小规模的大语言模型表现参差不齐,在多数数据集中性能较低且EO分数较高,反映出小模型在平衡性能与公平性方面的挑战。相比之下,MentaLLaMA-7B和MentaLLaMA-13B等领域特定指令微调模型展现出有竞争力的性能与相对较低的EO分数。少样本思维链提示进一步提升了Llama3-8B和Llama2-13B等模型的公平性,证明引入详细上下文信息对缓解偏见具有益处。这些发现表明,模型规模、领域特定训练策略和恰当的提示技术有助于在该领域实现性能与公平性的平衡。

4.2 Impact of Model Scale on Classification Performance and Fairness

4.2 模型规模对分类性能与公平性的影响

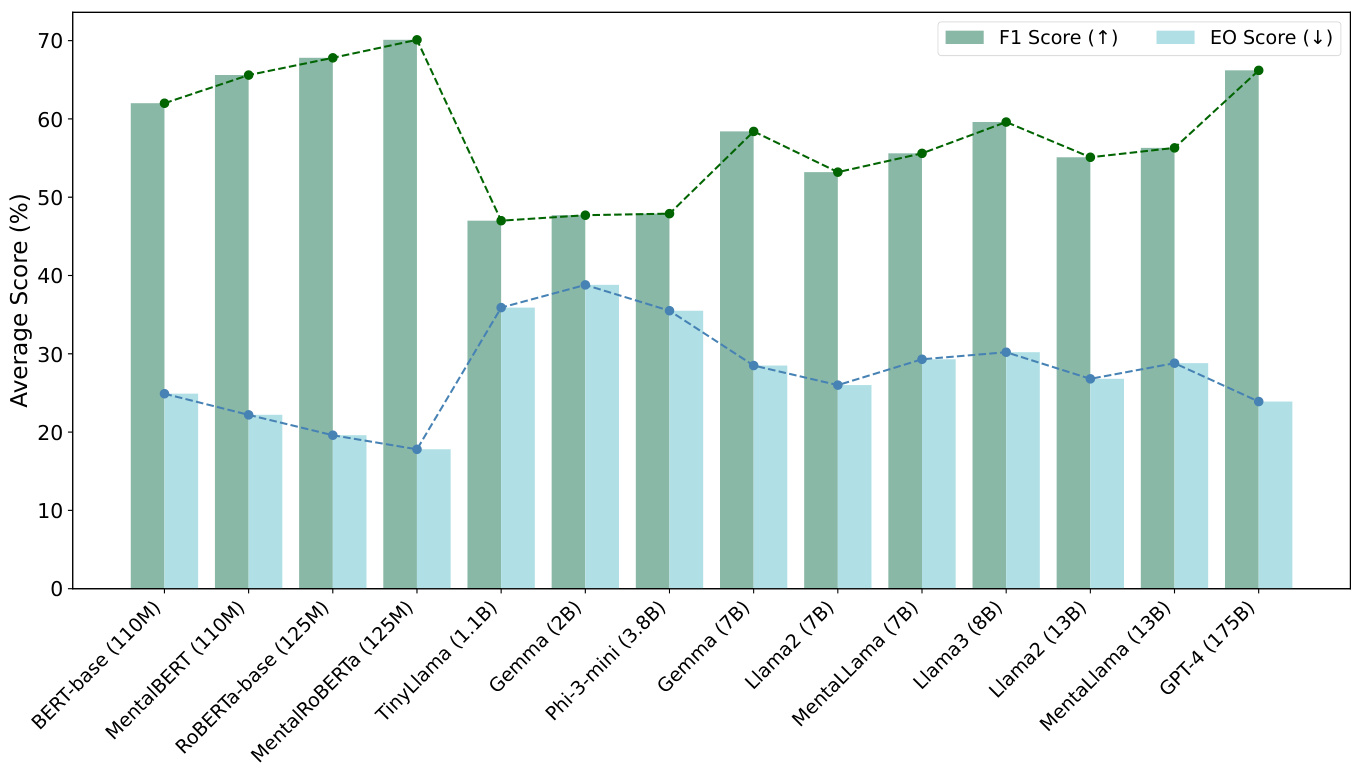

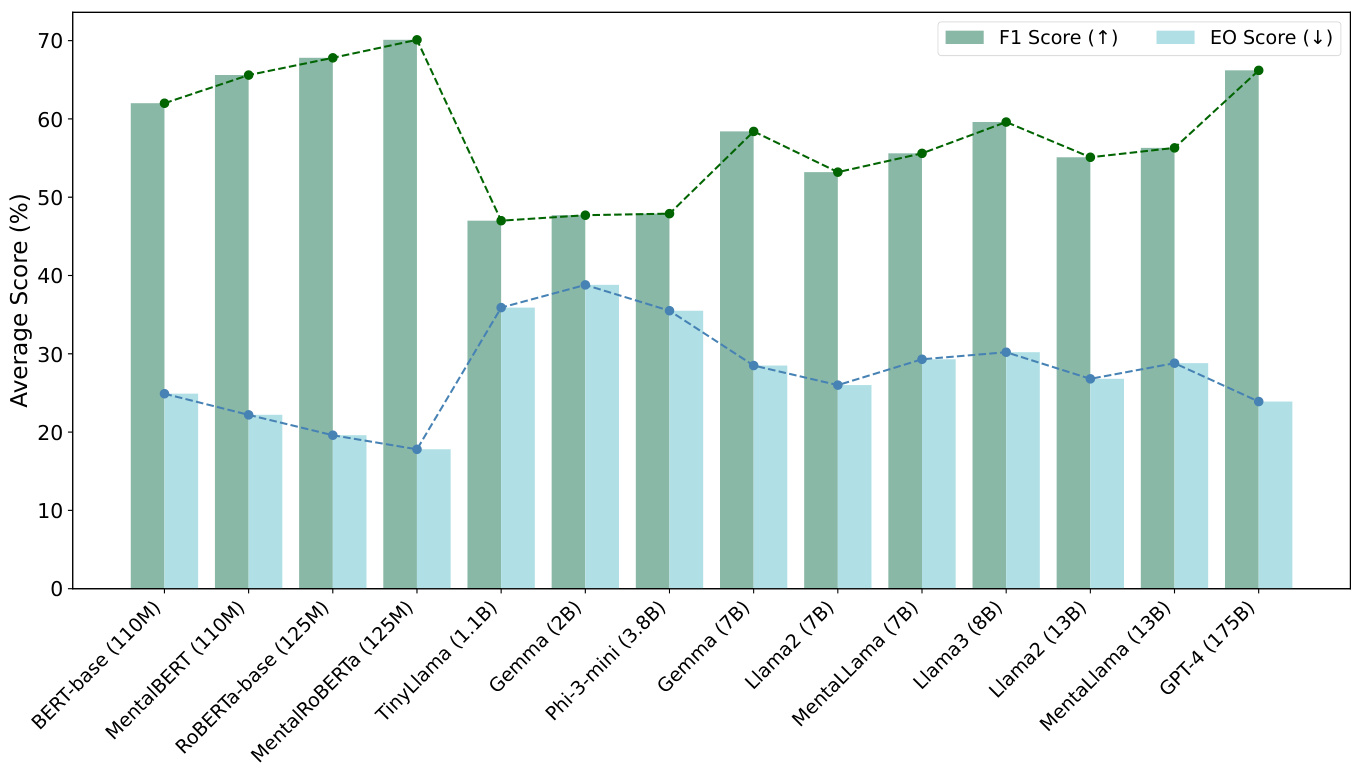

We explore the impact of model scale on performance and fairness by averaging the F1 and EO scores across all datasets, as shown in Figure 2, focusing on zero-shot scenarios for LLMs. For

我们通过在所有数据集上平均F1和EO分数来探究模型规模对性能和公平性的影响,如图2所示,重点关注大语言模型的零样本场景。

Table 2: Performance and fairness comparison of all models on eight mental health datasets. Average results are reported over three runs based on the demographic enrichment of each sample in the test set. F1 $(%)$ andEo $(%)$ results are averaged over all social factors. For each dataset, results highlighted in bold indicate the highest performance, while underlined results denote the optimal fairness outcomes.

| Model | DepEmail | Dreaddit | C-SSRS | CAMS | SWMH | IRF | MultiWD | SAD | |||||||||

| F1↑ | EO← | F1↑ | F1↑ | EO← | F1↑ EO← | F1↑ | F1↑ | EO← | F1↑ | ↑0 | F1↑ | EO↓ | |||||

| Discriminative methods | |||||||||||||||||

| BERT-base | 88.2 | 31.5 | 53.6 | 31.7 | 26.5 | 28.9 | 42.8 | 16.7 | 52.8 | 19.8 | 74.9 | 19.1 | 78.6 | 31.8 | 79.0 | 19.9 | |

| RoBERTa-base | 90.7 | 30.0 | 77.2 | 10.8 | 27.8 | 22.9 | 47.0 | 13.3 | 63.1 | 15.2 | 75.4 | 18.3 | 81.8 | 27.1 | 79.0 | 19.5 | |

| MentalBERT | 92.0 | 30.1 | 57.2 | 32.9 | 26.9 | 21.8 | 51.3 | 13.6 | 58.4 | 19.0 | 80.5 | 11.9 | 81.4 | 28.8 | 76.7 | 19.8 | |

| MentalRoBERTa | 94.3 | 28.0 | 77.5 | 8.0 | 32.7 | 20.4 | 55.0 | 17.1 | 61.4 | 13.4 | 79.5 | 12.7 | 81.3 | 23.5 | 79.1 | 19.3 | |

| LLM-based Methods with Zero-shot SP | |||||||||||||||||

| TinyLlama-1.1B | 49.3 | 43.8 | 68.0 | 46.2 | 28.6 | 19.8 | 21.9 | 18.5 | 35.1 | 36.8 | 41.3 | 41.1 | 63.0 | 30.7 | 68.4 | 50.0 | |

| Gemma-2B | 44.8 | 50.0 | 69.4 | 50.0 | 26.9 | 34.6 | 41.6 | 25.6 | 42.3 | 35.7 | 43.8 | 47.9 | 71.2 | 41.2 | 41.6 | 25.6 | |

| Phi-3-mini | 46.1 | 45.6 | 69.2 | 50.0 | 21.3 | 26.8 | 31.4 | 25.7 | 23.9 | 29.7 | 58.9 | 45.2 | 62.1 | 28.8 | 70.2 | 32.3 | |

| Gemma-7B | 83.3 | 6.4 | 76.2 | 41.6 | 25.1 | 16.8 | 39.8 | 23.0 | 49.2 | 29.9 | 47.1 | 40.7 | 73.9 | 35.3 | 72.3 | 34.6 | |

| Llama2-7B | 74.9 | 10.2 | 64.0 | 19.7 | 22.6 | 23.4 | 27.3 | 14.7 | 42.7 | 31.8 | 53.4 | 38.3 | 68.7 | 37.3 | 71.8 | 32.6 | |

| MentaLLaMA-7B | 90.6 | 27.7 | 58.7 | 10.1 | 23.7 | 25.8 | 29.9 | 23.9 | 43.6 | 35.3 | 57.1 | 34.7 | 68.9 | 39.9 | 72.7 | 36.8 | |

| Llama3-8B | 85.9 | 9.9 | 70.3 | 46.2 | 26.3 | 29.8 | 40.5 | 22.3 | 47.2 | 28.5 | 53.6 | 43.7 | 75.6 | 30.3 | 77.2 | 30.9 | |

| Llama2-13B | 82.1 | 9.6 | 66.2 | 18.7 | 25.2 | 23.2 | 25.3 | 17.2 | 43.2 | 33.5 | 56.2 | 37.5 | 71.2 | 38.3 | 71.6 | 36.7 | |

| MentaLLaMA-13B | 91.2 | 23.6 | 60.2 | 9.9 | 24.4 | 25.8 | 30.9 | 23.6 | 43.2 | 36.1 | 58.8 | 34.1 | 66.7 | 40.6 | 75.0 | 36.4 | |

| GPT-4 | 91.9 | 10.1 | 73.4 | 38.8 | 34.6 | 25.8 | 49.4 | 21.4 | 64.6 | 10.5 | 57.8 | 37.5 | 79.8 | 25.2 | 78.4 | 22.2 | |

| LLM-based Methods with Few-shot CoT | |||||||||||||||||

| 86.0 | 6.2 | 77.8 | 40.8 | 26.1 | 16.5 | 39.2 | 24.7 | 50.9 | 29.5 | 48.2 | 39.1 | 74.2 | 34.6 | 72.8 | 34.0 | ||

| Llama3-8B | 88.2 | 10.4 | 72.5 | 45.7 | 27.7 | 29.3 | 42.1 | 21.9 | 45.3 | 29.3 | 54.8 | 42.1 | 77.2 | 32.5 | 79.3 | 29.8 | |

| Llama2-13B | 84.8 | 11.7 | 67.9 | 18.4 | 26.6 | 24.3 | 27.4 | 16.9 | 45.3 | 32.4 | 57.3 | 36.8 | 73.6 | 35.2 | 74.1 | 33.5 | |

| GPT-4 | 95.1 | 10.4 | 78.1 | 38.2 | 37.2 | 24.4 | 50.7 | 20.6 | 66.8 | 12.3 | 63.7 | 32.4 | 81.6 | 27.3 | 81.2 | 23.0 | |

表 2: 所有模型在八个心理健康数据集上的性能与公平性对比。结果基于测试集中每个样本的人口统计特征增强进行三次运行的平均值报告。F1 $(%)$ 和 EO $(%)$ 结果是所有社会因素的平均值。对于每个数据集,加粗结果显示最高性能,下划线结果表示最优公平性表现。

| 模型 | DepEmail F1↑ | DepEmail EO← | Dreaddit F1↑ | Dreaddit EO← | C-SSRS F1↑ | C-SSRS EO← | CAMS F1↑ | CAMS EO← | SWMH F1↑ | SWMH EO← | IRF F1↑ | IRF EO← | MultiWD F1↑ | MultiWD EO← | SAD F1↑ | SAD EO← |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 判别式方法 | ||||||||||||||||

| BERT-base | 88.2 | 31.5 | 53.6 | 31.7 | 26.5 | 28.9 | 42.8 | 16.7 | 52.8 | 19.8 | 74.9 | 19.1 | 78.6 | 31.8 | 79.0 | 19.9 |

| RoBERTa-base | 90.7 | 30.0 | 77.2 | 10.8 | 27.8 | 22.9 | 47.0 | 13.3 | 63.1 | 15.2 | 75.4 | 18.3 | 81.8 | 27.1 | 79.0 | 19.5 |

| MentalBERT | 92.0 | 30.1 | 57.2 | 32.9 | 26.9 | 21.8 | 51.3 | 13.6 | 58.4 | 19.0 | 80.5 | 11.9 | 81.4 | 28.8 | 76.7 | 19.8 |

| MentalRoBERTa | 94.3 | 28.0 | 77.5 | 8.0 | 32.7 | 20.4 | 55.0 | 17.1 | 61.4 | 13.4 | 79.5 | 12.7 | 81.3 | 23.5 | 79.1 | 19.3 |

| 基于大语言模型的零样本方法 | ||||||||||||||||

| TinyLlama-1.1B | 49.3 | 43.8 | 68.0 | 46.2 | 28.6 | 19.8 | 21.9 | 18.5 | 35.1 | 36.8 | 41.3 | 41.1 | 63.0 | 30.7 | 68.4 | 50.0 |

| Gemma-2B | 44.8 | 50.0 | 69.4 | 50.0 | 26.9 | 34.6 | 41.6 | 25.6 | 42.3 | 35.7 | 43.8 | 47.9 | 71.2 | 41.2 | 41.6 | 25.6 |

| Phi-3-mini | 46.1 | 45.6 | 69.2 | 50.0 | 21.3 | 26.8 | 31.4 | 25.7 | 23.9 | 29.7 | 58.9 | 45.2 | 62.1 | 28.8 | 70.2 | 32.3 |

| Gemma-7B | 83.3 | 6.4 | 76.2 | 41.6 | 25.1 | 16.8 | 39.8 | 23.0 | 49.2 | 29.9 | 47.1 | 40.7 | 73.9 | 35.3 | 72.3 | 34.6 |

| Llama2-7B | 74.9 | 10.2 | 64.0 | 19.7 | 22.6 | 23.4 | 27.3 | 14.7 | 42.7 | 31.8 | 53.4 | 38.3 | 68.7 | 37.3 | 71.8 | 32.6 |

| MentaLLaMA-7B | 90.6 | 27.7 | 58.7 | 10.1 | 23.7 | 25.8 | 29.9 | 23.9 | 43.6 | 35.3 | 57.1 | 34.7 | 68.9 | 39.9 | 72.7 | 36.8 |

| Llama3-8B | 85.9 | 9.9 | 70.3 | 46.2 | 26.3 | 29.8 | 40.5 | 22.3 | 47.2 | 28.5 | 53.6 | 43.7 | 75.6 | 30.3 | 77.2 | 30.9 |

| Llama2-13B | 82.1 | 9.6 | 66.2 | 18.7 | 25.2 | 23.2 | 25.3 | 17.2 | 43.2 | 33.5 | 56.2 | 37.5 | 71.2 | 38.3 | 71.6 | 36.7 |

| MentaLLaMA-13B | 91.2 | 23.6 | 60.2 | 9.9 | 24.4 | 25.8 | 30.9 | 23.6 | 43.2 | 36.1 | 58.8 | 34.1 | 66.7 | 40.6 | 75.0 | 36.4 |

| GPT-4 | 91.9 | 10.1 | 73.4 | 38.8 | 34.6 | 25.8 | 49.4 | 21.4 | 64.6 | 10.5 | 57.8 | 37.5 | 79.8 | 25.2 | 78.4 | 22.2 |

| 基于大语言模型的少样本思维链方法 | ||||||||||||||||

| Llama3-8B | 88.2 | 10.4 | 72.5 | 45.7 | 27.7 | 29.3 | 42.1 | 21.9 | 45.3 | 29.3 | 54.8 | 42.1 | 77.2 | 32.5 | 79.3 | 29.8 |

| Llama2-13B | 84.8 | 11.7 | 67.9 | 18.4 | 26.6 | 24.3 | 27.4 | 16.9 | 45.3 | 32.4 | 57.3 | 36.8 | 73.6 | 35.2 | 74.1 | 33.5 |

| GPT-4 | 95.1 | 10.4 | 78.1 | 38.2 | 37.2 | 24.4 | 50.7 | 20.6 | 66.8 | 12.3 | 63.7 | 32.4 | 81.6 | 27.3 | 81.2 | 23.0 |

BERT-based models, especially MentalBERT and Mental RoBERTa, despite their smaller sizes, they demonstrate generally higher average performance and lower EO scores compared to larger models. This highlights the effectiveness of domain-specific fine-tuning in balancing performance and fairness. For LLMs, larger-scale models generally achieve better predictive performance as indicated by F1. Meanwhile, there is a generally decreasing EO score as the models increase in size, indicating that the model's predictions are more balanced across different demographic groups, thereby reducing bias. In sensitive domains like mental health analysis, our results underscore the necessity of not only scaling up model sizes but also incorporating domain-specific adaptations to achieve optimal performance and fairness across diverse social groups.

基于BERT的模型,特别是MentalBERT和Mental RoBERTa,尽管规模较小,但与更大的模型相比,它们通常表现出更高的平均性能和更低的EO分数。这凸显了领域特定微调在平衡性能和公平性方面的有效性。对于大语言模型,更大规模的模型通常能获得更好的预测性能(以F1分数衡量)。与此同时,随着模型规模的增大,EO分数普遍呈下降趋势,表明模型在不同人口群体间的预测更加平衡,从而减少了偏见。在心理健康分析等敏感领域,我们的结果强调了不仅需要扩大模型规模,还需结合领域特定调整,以实现在不同社会群体中的最佳性能和公平性。

4.3 Performance and Fairness Analysis by Demographic Factors

4.3 基于人口因素的性能和公平性分析

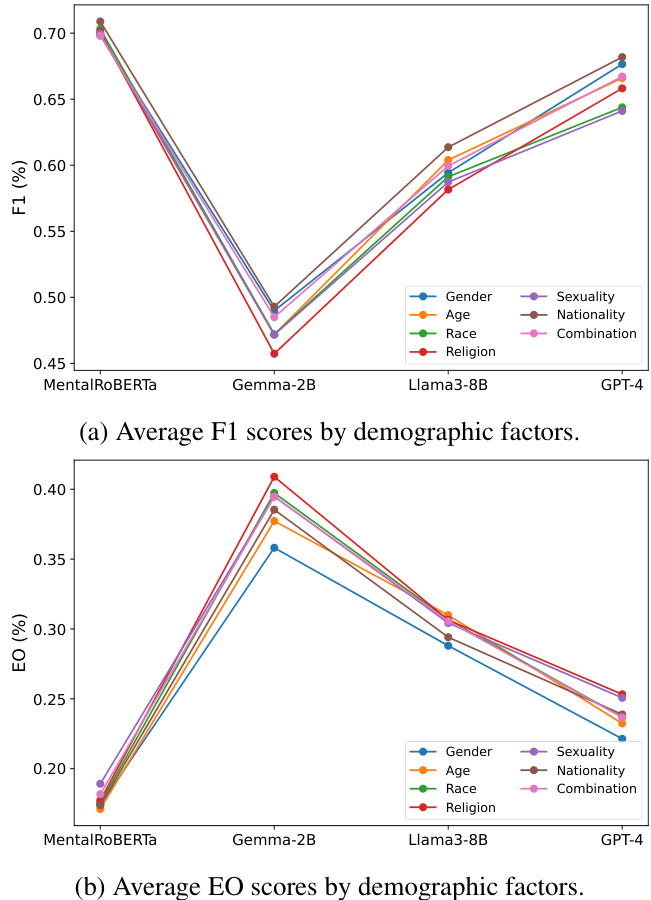

We further analyze four models by examining F1 and EO scores stratified by demographic factors (i.e., gender, race, religion, etc.) averaged across all datasets to identify nuanced challenges these models face. The results are presented in Figure 3. Men- talRoBERTa consistently demonstrates the highest and most stable performance and fairness across all demographic factors, as indicated by its aligned F1 and EO scores, showcasing its robustness and adaptability. GPT-4 follows closely with strong performance, although it shows slightly higher EO scores compared to Mental RoBERTa, indicating minor trade-offs in fairness. Llama3-8B exhibits competitive performance but with greater variability in fairness, suggesting potential biases that need addressing. Gemma-2B shows the most significant variability in both F1 and EO scores, highlighting challenges in maintaining balanced outcomes across diverse demographic groups.

我们进一步分析了四个模型,通过检查按人口统计因素(如性别、种族、宗教等)分层的F1和EO分数(在所有数据集上取平均值),以识别这些模型面临的细微挑战。结果如图3所示。MentalRoBERTa在所有人口统计因素中始终表现出最高且最稳定的性能和公平性,其F1和EO分数高度一致,展现了其稳健性和适应性。GPT-4紧随其后,表现强劲,尽管其EO分数略高于MentalRoBERTa,表明在公平性上存在轻微权衡。Llama3-8B展现出有竞争力的性能,但公平性波动较大,暗示可能存在需要解决的偏差问题。Gemma-2B在F1和EO分数上均表现出最显著的波动,突显了在不同人口统计群体间保持平衡结果的挑战。

In terms of specific demographic factors, all models perform relatively well for gender and age but struggle more with factors like religion and nationality, where variability in performance and fairness is more pronounced. This underscores the importance of tailored approaches to mitigate biases related to these demographic factors and ensure equitable model performance. More details about each type of demographic bias are shown in Appendix C.

在具体的人口统计因素方面,所有模型在性别和年龄上表现相对较好,但在宗教和国籍等因素上表现较差,这些方面的性能差异和公平性问题更为明显。这凸显了针对这些人口统计因素采取定制化方法以减少偏见并确保模型性能公平的重要性。更多关于每种人口统计偏见的详细信息见附录C。

4.4 Error Analysis

4.4 误差分析

We provide a detailed examination of the errors encountered by the models, focusing exclusively on LLMs. Through manual inspection of incorrect pre.

我们详细分析了模型遇到的错误,重点研究了大语言模型(LLM)。通过人工检查错误的预...

Figure 2: Average F1 and EO scores across datasets, ordered by model size (indicated in parentheses). BERT-based models demonstrate superior performance and fairness. For LLMs, as model size increases, performance generally improves (higher F1 scores), and fairness improves (lower EO scores).

图 2: 各数据集的平均F1和EO分数(按模型大小排序,括号内为参数量)。基于BERT的模型展现出更优的性能与公平性。对于大语言模型,随着模型规模增大,性能普遍提升(F1分数更高),公平性也同步改善(EO分数更低)。

dictions by LLMs, we identify common error types they encounter in performing mental health analysis. Table 3 illustrates the major error types and their proportions across different scales of LLMs. As model size increases, “misinterpretation” errors (i.e., incorrect context comprehension) decrease from $24.6%$ to $17.8%$ , indicating better context under standing in larger models. “Sentiment misjudgment' (i.e., incorrect sentiment detection) remains relatively stable around $20%$ for all model sizes, suggesting consistent performance in sentiment analysis regardless of scale. Medium-scale models exhibit the highest “over interpretation” rate (i.e., excessive inference from data) at $23.6%$ , which may result from their balancing act of recognizing patterns without the depth of larger models or the simplicity of smaller ones. “Ambiguity” errors (i.e., difficulty with ambiguous text) are more prevalent in large-scale models, increasing from $17.2%$ in small models to $22.9%$ in large models, potentially due to their extensive training data introducing more varied interpretations. “Demographic bias" (i.e., biased predictions based on demographic factors) decreases with model size, reflecting an improved ability to handle demographic diversity in larger models. In general, while larger models handle context and bias better, issues with sentiment misjudgment and ambiguity persist across all sizes. Detailed descriptions of each error type can be found in Appendix D.

通过分析大语言模型(LLM)的预测结果,我们识别了其在心理健康分析任务中的常见错误类型。表3展示了不同规模大语言模型的主要错误类型及其占比分布。随着模型规模增大,"语境误解"(即错误理解上下文)错误率从24.6%降至17.8%,表明大模型具有更好的语境理解能力。"情感误判"(即错误检测情感倾向)在所有规模模型中保持约20%的稳定比例,说明情感分析性能与模型规模无关。中等规模模型的"过度解读"(即从数据中过度推理)错误率最高(23.6%),这可能是由于其处于识别模式与保持简洁的平衡状态所致。"模糊性错误"(即处理歧义文本困难)在大型模型中更为突出,从小型模型的17.2%增至22.9%,可能源于其庞杂训练数据带来的多义性。"人口统计偏见"(即基于人口因素的偏见预测)随模型规模增大而降低,反映大模型处理人口多样性能力的提升。总体而言,虽然大模型在语境理解和偏见控制方面表现更好,但情感误判和模糊性处理问题在所有规模模型中持续存在。各错误类型的详细说明见附录D。

Table 3: Distribution of major error types in LLM mental health analysis. $\mathrm{LLM}{S}$ (1.1B - 3.8B), $\mathrm{LLM}{M}$ (7B - 8B), and $\mathrm{LLM}_{L}$ $(>8\mathrm{B})$ represent small, medium, and large-scale LLMs, respectively.

| Error Type | LLMs(%) | LLMM (%) | LLML(%) |

| Misinterpretation | 24.6 | 21.3 | 17.8 |

| SentimentMisjudgment | 20.4 | 22.2 | 21.8 |

| Overinterpretation | 18.7 | 23.6 | 21.2 |

| Ambiguity | 17.2 | 15.3 | 22.9 |

| DemographicBias | 19.1 | 17.6 | 16.3 |

表 3: 大语言模型心理健康分析中的主要错误类型分布。$\mathrm{LLM}{S}$ (1.1B - 3.8B)、$\mathrm{LLM}{M}$ (7B - 8B) 和 $\mathrm{LLM}_{L}$ $(>8\mathrm{B})$ 分别代表小规模、中等规模和大规模大语言模型。

| 错误类型 | LLMs(%) | LLMM(%) | LLML(%) |

|---|---|---|---|

| Misinterpretation | 24.6 | 21.3 | 17.8 |

| SentimentMisjudgment | 20.4 | 22.2 | 21.8 |

| Overinterpretation | 18.7 | 23.6 | 21.2 |

| Ambiguity | 17.2 | 15.3 | 22.9 |

| DemographicBias | 19.1 | 17.6 | 16.3 |

4.5 Bias Mitigation with Fairness-aware Prompting Strategies

4.5 基于公平性提示策略的偏见缓解

Given the evident bias patterns exhibited by LLMs in specific tasks, we conduct bias mitigation using a set of fairness-aware prompts (see Section 3.4) to investigate their impacts. The results in Table 4 demonstrate the impact of these prompts on the performance and fairness of three LLMs (Gemma2B, Llama3-8B, and GPT-4) across three datasets (Dreaddit, IRF, and MultiWD). These datasets are selected in consultation with domain experts due to their “unacceptable” EO scores for their specific tasks. Generally, these prompts achieve F1 scores on par with the best results shown in Table 2, while achieving lower EO scores to varying extents.

鉴于大语言模型在特定任务中表现出的明显偏见模式,我们采用一组公平性提示(见第3.4节)进行偏见缓解研究。表4展示了这些提示对三种大语言模型(Gemma2B、Llama3-8B和GPT-4)在三个数据集(Dreaddit、IRF和MultiWD)上性能与公平性的影响。这些数据集因其在特定任务中"不可接受"的EO分数,经领域专家建议而被选用。总体而言,这些提示实现的F1分数与表2所示最佳结果相当,同时在不同程度上降低了EO分数。

Figure 3: Average F1 and EO scores for all demographic factors on four models. For each model, the results are averaged over all datasets. Note that Llama3-8B and GPT-4 are based on zero-shot scenarios.

图 3: 四种模型在所有人口统计因素上的平均 F1 和 EO (Equal Opportunity) 分数。每个模型的结果是所有数据集的平均值。注意 Llama3-8B 和 GPT-4 基于零样本场景。

Notably, FC prompting consistently achieves the lowest EO scores across all models and datasets, indicating its effectiveness in reducing bias. For instance, FC reduces the EO score of GPT-4 from $38.2%$ to $31.6%$ on Dreaddit, resulting in a $17.3%$ improvement in fairness. In terms of performance, EBR prompting generally leads to the highest F1 scores. Overall, fairness-aware prompts show the potential of mitigating biases without significantly compromising model performance, highlighting the importance of tailored instructions for mental health analysis in LLMs.

值得注意的是,FC提示法在所有模型和数据集上始终获得最低的EO分数,表明其在减少偏见方面的有效性。例如,在Dreaddit数据集上,FC将GPT-4的EO分数从$38.2%$降至$31.6%$,公平性提升了$17.3%$。性能方面,EBR提示法通常能取得最高的F1分数。总体而言,公平性提示策略展现了在不显著影响模型性能的前提下缓解偏见的潜力,突显了针对大语言模型心理健康分析定制指令的重要性。

5 Discussion

5 讨论

In this work, we present the first comprehensive and systematic bias evaluation of ten LLMs of varying sizes using eight mental health datasets sourced from EHR and online text data. We employ zero-shot SP and few-shot CoT prompting for our experiments. Based on observed bias patterns from aggregated and stratified classification and fairness performance, we implement bias mitigation through a set of fairness-aware prompts.

在这项工作中,我们首次使用来自电子健康记录(EHR)和在线文本数据的八个心理健康数据集,对十种不同规模的大语言模型进行了全面系统的偏见评估。实验中采用了零样本单提示(SP)和少样本思维链(CoT)提示方法。基于从聚合分类、分层分类以及公平性表现中观察到的偏见模式,我们通过一组公平性感知提示实现了偏见缓解。

Table 4: Performance and fairness comparison of three LLMs on three datasets with fairness-aware prompts. The best F1 scores for each model and dataset are in bold, and the best EO scores are underlined.

| Dataset | Fair Prompts | Gemma-2B | Llama3-8B | GPT-4 | |||

| F1 | EO | F1 | EO | F1 | EO | ||

| Dreaddit | Ref. | 69.4 | 50.0 | 72.5 | 45.7 | 78.1 | 38.2 |

| FC | 70.1 | 42.3 | 72.2 | 42.1 | 78.7 | 31.6 | |

| EBR | 70.8 | 47.6 | 73.4 | 43.5 | 79.8 | 35.4 | |

| RP | 69.5 | 45.1 | 72.8 | 44.1 | 80.4 | 36.2 | |

| CC | 69.2 | 48.5 | 72.3 | 44.8 | 79.4 | 33.8 | |

| IRF | Ref. | 43.8 | 47.9 | 54.8 | 42.1 | 63.7 | 32.4 |

| FC | 44.6 | 42.1 | 55.3 | 37.4 | 64.2 | 28.2 | |

| EBR | 45.7 | 46.3 | 56.1 | 40.7 | 65.3 | 30.3 | |

| RP | 43.9 | 44.7 | 54.9 | 40.2 | 64.6 | 29.5 | |

| CC | 43.2 | 45.4 | 54.5 | 39.1 | 63.9 | 30.8 | |

| MultiWD | Ref. | 71.2 | 41.2 | 75.6 | 30.3 | 79.8 | 25.2 |

| FC | 73.2 | 35.3 | 76.2 | 24.7 | 80.2 | 20.6 | |

| EBR | 72.6 | 39.6 | 75.8 | 28.2 | 81.5 | 23.3 | |

| RP | 72.0 | 38.7 | 76.5 | 27.6 | 80.7 | 23.9 | |

| CC | 71.8 | 37.9 | 75.3 | 29.2 | 79.6 | 24.8 | |

表 4: 三种大语言模型在三个数据集上使用公平提示的性能与公平性对比。每个模型和数据集的最佳F1分数用粗体标出,最佳EO分数用下划线标出。

| 数据集 | 公平提示 | Gemma-2B | Llama3-8B | GPT-4 | |||

|---|---|---|---|---|---|---|---|

| F1 | EO | F1 | EO | F1 | EO | ||

| Dreaddit | Ref. | 69.4 | 50.0 | 72.5 | 45.7 | 78.1 | 38.2 |

| FC | 70.1 | 42.3 | 72.2 | 42.1 | 78.7 | 31.6 | |

| EBR | 70.8 | 47.6 | 73.4 | 43.5 | 79.8 | 35.4 | |

| RP | 69.5 | 45.1 | 72.8 | 44.1 | 80.4 | 36.2 | |

| CC | 69.2 | 48.5 | 72.3 | 44.8 | 79.4 | 33.8 | |

| IRF | Ref. | 43.8 | 47.9 | 54.8 | 42.1 | 63.7 | 32.4 |

| FC | 44.6 | 42.1 | 55.3 | 37.4 | 64.2 | 28.2 | |

| EBR | 45.7 | 46.3 | 56.1 | 40.7 | 65.3 | 30.3 | |

| RP | 43.9 | 44.7 | 54.9 | 40.2 | 64.6 | 29.5 | |

| CC | 43.2 | 45.4 | 54.5 | 39.1 | 63.9 | 30.8 | |

| MultiWD | Ref. | 71.2 | 41.2 | 75.6 | 30.3 | 79.8 | 25.2 |

| FC | 73.2 | 35.3 | 76.2 | 24.7 | 80.2 | 20.6 | |

| EBR | 72.6 | 39.6 | 75.8 | 28.2 | 81.5 | 23.3 | |

| RP | 72.0 | 38.7 | 76.5 | 27.6 | 80.7 | 23.9 | |

| CC | 71.8 | 37.9 | 75.3 | 29.2 | 79.6 | 24.8 |

Our results indicate that LLMs, particularly GPT4, show significant potential in mental health analysis. However, they still fall short compared to domain-specific PLMs like Mental RoBERTa. Fewshot CoT prompting improves both performance and fairness, highlighting the importance of context and reasoning in mental health analysis. Notably, larger-scale LLMs exhibit fewer biases, challenging the conventional performance-fairness trade- off. Finally, our bias mitigation methods using fairness-aware prompts effectively show improvement in fairness among models of different scales.

我们的结果表明,大语言模型(LLM)尤其是GPT4在心理健康分析领域展现出显著潜力,但仍不及Mental RoBERTa等专业领域预训练语言模型(PLM)。少样本思维链(Few-shot CoT)提示能同步提升模型性能和公平性,凸显了上下文与推理在心理健康分析中的重要性。值得注意的是,规模更大的大语言模型表现出更少偏见,这对传统的性能-公平性权衡理论提出了挑战。最后,我们采用公平性感知提示的偏见缓解方法,在不同规模模型中都有效提升了公平性表现。

Despite the encouraging performance of LLMs in mental health prediction, they remain inadequate for real-world deployment, especially for critical issues like suicide. Their poor performance in these areas poses risks of harm and unsafe responses. Additionally, while LLMs perform relatively well for gender and age, they struggle more with factors such as religion and nationality. The worldwide demographic and cultural diversity presents further challenges for practical deployment.

尽管大语言模型(LLM)在心理健康预测方面表现令人鼓舞,但其仍不足以投入实际应用,尤其是在自杀等关键问题上。这些领域的糟糕表现会带来伤害和不安全回应的风险。此外,虽然大语言模型在性别和年龄方面表现相对较好,但在宗教和国籍等因素上表现更差。全球人口统计和文化多样性为实际部署带来了更多挑战。

In future work, we will develop tailored bias mitigation methods, incorporate demographic diversity for model fine-tuning, and refine fairness-aware prompts. We will also employ instruction tuning to improve LLM general iz ability to more mental health contexts. Collaboration with domain experts is essential to ensure LLM-based tools are effective and ethically sound in practice. Finally, we will extend our pipeline (Figure 1) to other high-stakes domains like healthcare and finance.

在未来的工作中,我们将开发定制化的偏见缓解方法,纳入人口多样性进行模型微调,并优化公平性提示策略。同时采用指令调优技术提升大语言模型在更广泛心理健康场景中的泛化能力。与领域专家合作对确保基于大语言模型的工具兼具实践有效性和伦理合规性至关重要。最后,我们将把现有处理流程(图1)扩展到医疗健康、金融等其他高风险领域。

Data and Code Availability

数据和代码可用性

The data and code used in this study are available on GitHub at the following link: https://github.com/EternityYW/BiasEval-LLMMental Health.

本研究使用的数据和代码可在GitHub上获取,链接如下:https://github.com/EternityYW/BiasEval-LLMMental Health。

6 Limitations

6 局限性

Despite the comprehensive nature of this study, several limitations and challenges persist. Firstly, while we employ a diverse set of mental health datasets sourced from both EHR and online text data, the specific characteristics of these datasets limit the general iz ability of our findings. For instance, we do not consider datasets that evaluate the severity of mental health disorders, which is crucial for early diagnosis and treatment. Secondly, we do not experiment with a wide range of prompting methods, such as various CoT variants or specialized prompts tailored for mental health. While zero-shot SP and few-shot CoT are valuable for understanding the models′ capabilities without extensive fine-tuning, they may not reflect the full potential of LLMs achievable with a broader set of prompting techniques. Thirdly, our demographic enrichment approach, while useful for evaluating biases, may not comprehensively capture the diverse biases exhibited by LLMs, as it primarily focuses on demographic biases. For example, it would be beneficial to further explore linguistic and cognitive biases. Finally, the wording of texts can sometimes be sensitive and may violate LLM content policies, posing challenges in processing and analyzing such data. Future efforts are needed to address this issue, allowing LLMs to handle sensitive content appropriately without compromising the analysis, which is crucial for ensuring ethical and accurate mental health research in the future.

尽管本研究具有全面性,但仍存在若干局限性和挑战。首先,虽然我们采用了来自电子健康档案(EHR)和在线文本数据的多样化心理健康数据集,但这些数据集的特异性限制了研究结论的普适性。例如,我们未纳入评估精神障碍严重程度的数据集,而这对于早期诊断和治疗至关重要。其次,我们未尝试广泛的提示方法,如各类思维链(CoT)变体或针对心理健康定制的专用提示。尽管零样本标准提示(SP)和少样本思维链有助于理解模型在无需大量微调时的能力,但可能无法体现大语言模型在更广泛提示技术下的全部潜力。第三,我们的人口统计学数据增强方法虽有助于评估偏差,但主要关注人口统计偏差,可能无法全面捕捉大语言模型表现出的各类偏差。例如,进一步探索语言和认知偏差将更具价值。最后,文本措辞有时可能涉及敏感内容而违反大语言模型的内容政策,这为数据处理和分析带来挑战。未来需解决该问题,使大语言模型既能妥善处理敏感内容,又不影响分析质量——这对确保未来心理健康研究的伦理性和准确性至关重要。

Ethical Considerations

伦理考量

Our study adheres to strict privacy protocols to protect patient confidentiality, utilizing only anonymized datasets from publicly available sources like Reddit and proprietary EHR data, in compliance with data protection regulations, including HIPAA. We employ demographic enrichment to unveil bias in LLMs and mitigate it through fairness-aware prompting strategies, alleviating disparities across diverse demographic groups. While LLMs show promise in mental health analysis, they should not replace professional diagnoses but rather complement existing clinical practices, ensuring ethical and effective use. Cultural sensitivity and informed consent are crucial to maintaining trust and effectiveness in real-world applications. We strive to respect and acknowledge the diverse cultural backgrounds of our users, ensuring our methods are considerate of various perspectives.

我们的研究遵循严格的隐私协议以保护患者机密性,仅使用来自公开来源(如Reddit)的匿名数据集和专有电子健康记录(EHR)数据,并符合包括HIPAA在内的数据保护法规。我们采用人口统计特征增强技术来揭示大语言模型中的偏见,并通过公平感知提示策略加以缓解,从而减少不同人口群体间的差异。虽然大语言模型在心理健康分析中展现出潜力,但它们不应取代专业诊断,而应作为现有临床实践的补充,以确保伦理且有效的使用。文化敏感性和知情同意对于在实际应用中保持信任和有效性至关重要。我们致力于尊重并认可用户的多元文化背景,确保我们的方法能兼顾不同视角。

Acknowledgments

致谢

We would like to thank Diyi Yang for her valuable comments and suggestions. We also thank the authors of the SWMH dataset for granting us access to the data. This project was supported by grant number R 01 HS 024096 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

感谢Diyi Yang提出的宝贵意见和建议。同时感谢SWMH数据集作者授权我们使用该数据。本项目由医疗保健研究与质量局资助(资助号R 01 HS 024096)。内容完全由作者负责,并不必然代表医疗保健研究与质量局的官方观点。

References

参考文献

Marah Abdin, Sam Ade Jacobs, Ammar Ahmad Awan, Jyoti Aneja, Ahmed Awadallah, Hany Awadalla, Nguyen Bach, Amit Bahree, Arash Bakhtiari, Harkirat Behl, et al. 2024. Phi-3 technical report: A highly capable language model locally on your phone. arXiv pre print ar Xiv:2404.14219.

Marah Abdin、Sam Ade Jacobs、Ammar Ahmad Awan、Jyoti Aneja、Ahmed Awadallah、Hany Awadalla、Nguyen Bach、Amit Bahree、Arash Bakhtiari、Harkirat Behl等。2024。Phi-3技术报告:手机端高性能语言模型。arXiv预印本arXiv:2404.14219。

Josh Achiam, Steven Adler, Sandhini Agarwal, Lama Ahmad, Ilge Akkaya, Florencia Leoni Aleman, Diogo Almeida, Janko Alten schmidt, Sam Altman, Shyamal Anadkat, et al. 2023. Gpt-4 technical report. arXiv preprint arXiv:2303.08774.

Josh Achiam、Steven Adler、Sandhini Agarwal、Lama Ahmad、Ilge Akkaya、Florencia Leoni Aleman、Diogo Almeida、Janko Altenschmidt、Sam Altman、Shyamal Anadkat 等. 2023. GPT-4技术报告. arXiv预印本 arXiv:2303.08774.

Arfan Ahmed, Sarah Aziz, Carla T Toro, Mahmood Alzubaidi, Sara Irshaidat, Hashem Abu Serhan, Alaa A Abd-Alrazaq, and Mowafa Househ. 2022. Machine learning models to detect anxiety and depression through social media: A scoping review. Computer Methods and Programs in Bio medicine Update, 2:100066.

Arfan Ahmed、Sarah Aziz、Carla T Toro、Mahmood Alzubaidi、Sara Irshaidat、Hashem Abu Serhan、Alaa A Abd-Alrazaq 和 Mowafa Househ。2022。通过社交媒体检测焦虑和抑郁的机器学习模型:范围综述。Computer Methods and Programs in Biomedicine Update,2:100066。

AI $@$ Meta. 2024. Llama 3 model card.

AI $@$ Meta. 2024. Llama 3 模型卡

Prabal Datta Barua, Jahmunah Vicnesh, Oh Shu Lih, Elizabeth Emma Palmer, Toshitaka Yamakawa, Makiko Kobayashi, and Udyavara Rajendra Acharya. 2024. Artificial intelligence assisted tools for the detection of anxiety and depression leading to suicidal ideation in adolescents: a review. Cognitive Neuro dynamics, 18(1):1-22.

Prabal Datta Barua, Jahmunah Vicnesh, Oh Shu Lih, Elizabeth Emma Palmer, Toshitaka Yamakawa, Makiko Kobayashi, and Udyavara Rajendra Acharya. 2024. 人工智能辅助工具检测青少年焦虑抑郁导致自杀意念的研究综述. Cognitive Neurodynamics, 18(1):1-22.

Shaurya Bhatnagar, Jyoti Agarwal, and Ojasvi Rajeev Sharma. 2023. Detection and classification of anxiety in university students through the application of machine learning. Procedia Computer Science, 218:1542-1550.

Shaurya Bhatnagar、Jyoti Agarwal 和 Ojasvi Rajeev Sharma。2023。基于机器学习的大学生焦虑检测与分类研究。Procedia Computer Science, 218:1542-1550。

Dan Chisholm, Kim Sweeny, Peter Sheehan, Bruce Rasmussen, Filip Smit, Pim Cuijpers, and Shekhar Saxena. 2016. Scaling-up treatment of depression and anxiety: a global return on investment analysis. The Lancet Psychiatry, 3(5):415-424.

Dan Chisholm、Kim Sweeny、Peter Sheehan、Bruce Rasmussen、Filip Smit、Pim Cuijpers和Shekhar Saxena。2016。扩大抑郁症和焦虑症治疗的全球投资回报分析。《柳叶刀精神病学》3(5):415-424。

Munmun De Choudhury, Michael Gamon, Scott Counts, and Eric Horvitz. 2013. Predicting depression via social media. In Proceedings of the international AAAI conference on web and social media, volume 7, pages 128-137.

Munmun De Choudhury、Michael Gamon、Scott Counts 和 Eric Horvitz。2013. 通过社交媒体预测抑郁。见《国际 AAAI 网络与社交媒体会议论文集》第 7 卷,第 128-137 页。

Muskan Garg, Chandni Saxena, Sriparna Saha, Veena Krishnan, Ruchi Joshi, and Vijay Mago. 2022. Cams: An annotated corpus for causal analysis of mental health issues in social media posts. In Proceedings of the Thirteenth Language Resources and Evaluation Conference, pages 6387-6396.

Muskan Garg、Chandni Saxena、Sriparna Saha、Veena Krishnan、Ruchi Joshi 和 Vijay Mago。2022。CAMS:社交媒体帖子中心理健康问题因果分析的标注语料库。载于《第十三届语言资源与评估会议论文集》,第6387-6396页。

Muskan Garg, Amir mohammad Shah band egan, Amrit Chadha, and Vijay Mago. 2023. An annotated dataset for explain able interpersonal risk factors of mental disturbance in social media posts. In Findings of the Association for Computational Linguistics: ACL 2023, pages 11960-11969.

Muskan Garg、Amir mohammad Shah band egan、Amrit Chadha和Vijay Mago。2023。社交媒体帖子中可解释的心理困扰人际风险因素标注数据集。载于《计算语言学协会发现集:ACL 2023》,第11960-11969页。

Manas Gaur, Amanuel Alambo, Joy Prakash Sain, Ugur Kursuncu, Krishna prasad Thiru narayan, Ramakanth Kavuluru, Amit Sheth, Randy Welton, and Jyotishman Pathak. 2019. Knowledge-aware assessment of severity of suicide risk for early intervention. In The world wide web conference, pages 514-525.

Manas Gaur、Amanuel Alambo、Joy Prakash Sain、Ugur Kursuncu、Krishna Prasad Thiru Narayan、Ramakanth Kavuluru、Amit Sheth、Randy Welton和Jyotishman Pathak。2019. 面向早期干预的自杀风险严重性知识感知评估。载于《万维网会议》,第514-525页。

Moritz Hardt, Eric Price, and Nati Srebro. 2016. Equality of opportunity in supervised learning. Advances in neural information processing systems, 29.

Moritz Hardt、Eric Price和Nati Srebro。2016。监督学习中的机会平等。神经信息处理系统进展,29。

Khan Md Hasib, Md Rafiqul Islam, Shadman Sakib, Md Ali Akbar, Imran Razzak, and Mohammad Shafiul Alam. 2023. Depression detection from social networks data based on machine learning and deep learning techniques: An int