LaMI-DETR: Open-Vocabulary Detection with Language Model Instruction

Abstract. Existing methods enhance open-vocabulary object detection by leveraging the robust open-vocabulary recognition capabilities of Vision-Language Models (VLMs) such as CLIP. However, two main challenges emerge: (1) a deficiency in concept representation, where the category names in CLIP’s text space lack textual and visual knowledge; and (2) a tendency to overfit to base categories, with the open-vocabulary knowledge biased towards base categories during the transfer from VLMs to detectors. To address these challenges, we propose the Language Model Instruction (LaMI) strategy, which leverages the relationships between visual concepts and applies them within a simple yet effective DETR-like detector, termed LaMI-DETR. LaMI utilizes GPT to construct visual concepts and employs T5 to investigate visual similarities across categories. These inter-category relationships refine concept representation and avoid overfitting to base categories. Comprehensive experiments validate our approach’s superior performance over existing methods in the same rigorous setting, without reliance on external training resources. LaMI-DETR achieves a rare box AP of 43.4 on OV-LVIS, surpassing the previous best by 7.8 rare box AP.

Keywords: Inter-category Relationships · Language Model · DETR

1 Introduction

Open-vocabulary object detection (OVOD) aims to identify and locate objects from a wide range of categories, including both base and novel categories at inference time, even though the detector is trained only on a limited set of base categories. Existing works $[6,9,13,29,33,35,36,40]$ in open-vocabulary object detection have focused on developing sophisticated modules within detectors. These modules are tailored to adapt the zero-shot and few-shot learning capabilities inherent in Vision-Language Models (VLMs) to the context of object detection.

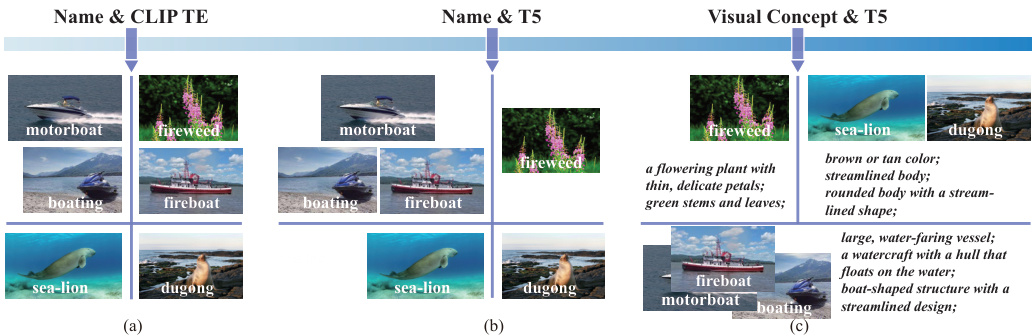

Fig. 1: Illustration of the concept representation challenge. The clustering results are from (a) name embeddings by the CLIP text encoder, (b) name embeddings by T5, and (c) visual description embeddings by T5, respectively. (a) The CLIP text encoder struggles to distinguish between category names that are compositionally similar in letters, such as "fireboat" and "fireweed". (b) T5 fails to cluster categories that are visually comparable but compositionally different in name around the same cluster center, such as "sealion" and "dugong". (c) Marrying T5’s textual semantic knowledge with visual insights achieves reasonable clustering results.

However, most existing methods face two challenges: (1) Concept representation. Most existing methods represent concepts using name embeddings from the CLIP text encoder. This form of concept representation is limited in capturing the textual and visual semantic similarities between categories, which could aid in discriminating visually confusable categories and exploring potential novel objects. (2) Overfitting to base categories. Although VLMs can perform well on novel categories, only base detection data is used to optimize open-vocabulary detectors, so the detectors overfit to base categories. As a result, novel objects are easily regarded as background or as base categories.

First, consider the issue of concept representation. Category names within CLIP’s textual space are deficient in both textual depth and visual information. (1) The VLM’s text encoder lacks textual semantic knowledge compared with a language model. As depicted in Figure 1(a), relying solely on name representations from CLIP concentrates on the similarity of letter composition, neglecting the hierarchical and common-sense understanding behind language. This is disadvantageous for clustering categories, as it fails to consider the conceptual relationships between them. (2) Existing concept representations based on abstract category names or definitions fail to account for visual characteristics. Figure 1(b) demonstrates this problem: sea lions and dugongs, despite their visual similarity, are allocated to separate clusters. Representing a concept only by its category name overlooks the rich visual context that language provides, which can facilitate the discovery of potential novel objects.

Second, consider the issue of overfitting to base categories. To leverage the open-vocabulary capabilities of VLMs, we employ a frozen CLIP image encoder as the backbone and utilize category embeddings from the CLIP text encoder as classification weights. We hold that detector training should serve two main functions: first, to differentiate foreground from background; and second, to maintain the open-vocabulary classification capability of CLIP. However, training solely on base category annotations, without incorporating additional strategies, often results in overfitting: novel objects are commonly misclassified as either background or base categories. This problem has been further elucidated in prior research [29, 32].

We identify the exploration of inter-category relationships as pivotal in tackling the aforementioned challenges. By building an understanding of these relationships, we can develop a concept representation method that integrates both textual and visual semantics. This approach can also identify visually similar categories, guiding the model to focus more on learning generalized foreground features and preventing overfitting to base categories. Consequently, in this paper, we introduce LaMI-DETR (Frozen CLIP-based DETR with Language Model Instruction), a simple but effective DETR-based detector that leverages language model insights to extract inter-category relationships.

To tackle concept representation, we first adopt the Instructor Embedding [31], a T5 language model, to re-evaluate category similarities, as we find that language models exhibit a more refined semantic space than the CLIP text encoder. As shown in Figure 1(b), "fireweed" and "fireboat" are categorized into separate clusters, mirroring human recognition more closely. Next, we use GPT-3.5 [2] to generate visual descriptions for each category. These descriptions detail aspects such as shape, color, and size, effectively converting the categories into visual concepts. Figure 1(c) shows that, with similar visual descriptions, sea lions and dugongs are now grouped into the same cluster. To mitigate the overfitting issue, we cluster visual concepts into groups based on visual description embeddings from T5. This clustering result enables the identification and sampling of negative classes that are visually different from the ground truth categories in each iteration. This relaxes the optimization of classification and focuses the model on deriving more generalized foreground features rather than overfitting to base categories. Consequently, this approach enhances the model’s generalizability by reducing overtraining on base categories while preserving the CLIP image backbone’s ability to categorize.

In summary, we introduce a novel approach, LaMI, to enhance base-to-novel generalization in OVOD. LaMI harnesses large language models to extract inter-category relationships, utilizing this information to sample easy negative categories and avoid overfitting to base categories, while also refining concept representations to enable effective classification between visually similar categories. We propose a simple but effective end-to-end LaMI-DETR framework, enabling the effective transfer of open-vocabulary knowledge from pretrained VLMs to detectors. We demonstrate the superiority of our LaMI-DETR framework through rigorous testing on large-vocabulary OVOD benchmarks, including $+7.8\ \mathrm{AP_{r}}$ on OV-LVIS and $+2.9\ \mathrm{AP_{r}}$ on VG-dedup (fair comparison with OWL [20,22]). Code is available at https://github.com/eternaldolphin/LaMI-DETR.

2 Related Work

Open-vocabulary object detection (OVOD) leverages the image and language alignment knowledge stored in image-level datasets, e.g., Conceptual Captions [28], or large pre-trained VLMs, e.g., CLIP [25], to incorporate open-vocabulary information into object detectors. One group of OVOD methods utilizes large-scale image-text pairs to expand the detection vocabulary [7, 19, 26, 41, 44–46]. However, given VLMs’ proven zero-shot recognition abilities, most open-vocabulary object detectors leverage VLM-derived knowledge to handle open vocabularies. The methods by which object detectors obtain open-vocabulary knowledge from VLMs can be divided into three categories: pseudo labels [26, 40, 45], distillation [6, 9, 33, 35], and parameter transfer [15, 36]. Despite their utility, the performance of these methods is arguably restricted by the teacher VLM, which is shown to be largely unaware of inter-category visual relationships. Our method is orthogonal to all the aforementioned approaches in the sense that it not only explicitly models region-word correspondences, but also leverages visual correspondences across categories to help localize novel categories, which greatly improves performance, especially in DETR-based architectures [3, 11, 42, 43].

Zero-shot object detection (ZSD) addresses the challenge of detecting novel, unseen classes by leveraging language features for generalization. Traditional approaches utilize word embeddings, such as GloVe [23], as classifier weights to project region features into a pre-computed text embedding space [1,5]. This enables ZSD models to recognize unseen objects by their names during inference. However, the primary limitation of ZSD lies in its training on a constrained set of seen classes, failing to adequately align the vision and language feature spaces. Some methods attempt to mitigate this issue by generating feature representations of novel classes using Generative Adversarial Networks [8, 30] or through data augmentation strategies for synthesizing unseen classes [48]. Despite these efforts, ZSD still faces significant performance gaps compared to supervised detection methods, highlighting the difficulty in extending detection capabilities to entirely unseen objects without access to relevant resources.

Large Language Model (LLM). Language data has increasingly played a pivotal role in open-vocabulary research, with recent Large Language Models (LLMs) showcasing vast knowledge applicable across various Natural Language Processing tasks. Works such as [21,24,37] have leveraged language insights from LLMs to generate descriptive labels for visual categories, thus enriching VLMs without necessitating further training or labeling. Nonetheless, there are gaps in current methodologies: first, the potential of discriminative LLMs for enhancing VLMs is frequently overlooked; second, inter-category relationships remain underexplored. We propose a novel, straightforward clustering approach that employs GPT and Instructor Embeddings to investigate visual similarities among concepts, addressing these oversights.

3 Method

In this section, we begin with an introduction to open-vocabulary object detection (OVOD) in Section 3.1. Following this, we describe our proposed architecture of LaMI-DETR, a straightforward and efficient OVOD baseline, detailed in Section 3.2. Finally, we provide a detailed explanation of Language Model Instruction (LaMI) in Section 3.3.

3.1 Preliminaries

Given an image $\mathbf{I}\in\mathbb{R}^{H\times W\times 3}$ as input to an open-vocabulary object detector, two primary outputs are typically generated: (1) Classification, wherein a class label $c_{j}\in\mathcal{C}_{\mathrm{test}}$ is assigned to the $j^{\mathrm{th}}$ predicted object in the image, with $\mathcal{C}_{\mathrm{test}}$ representing the set of categories targeted during inference. (2) Localization, which involves determining the bounding box coordinates $\mathbf{b}_{j}\in\mathbb{R}^{4}$ that identify the location of the $j^{\mathrm{th}}$ predicted object. Following the framework established by OVR-CNN [41], there is a detection dataset, $\mathcal{D}_{\mathrm{det}}$, comprising bounding box coordinates, class labels, and corresponding images, and addressing a category vocabulary, $\mathcal{C}_{\mathrm{det}}$.

In line with the conventions of OVOD, we denote the category spaces of $\mathcal{C}_{\mathrm{test}}$ and $\mathcal{C}_{\mathrm{det}}$ as $\mathcal{C}$ and $\mathcal{C}_{\mathrm{B}}$, respectively. Typically, $\mathcal{C}_{\mathrm{B}}\subset\mathcal{C}$. The categories within $\mathcal{C}_{\mathrm{B}}$ are known as base categories, whereas those appearing only in $\mathcal{C}_{\mathrm{test}}$ are identified as novel categories. The set of novel categories is expressed as $\mathcal{C}_{\mathrm{N}}=\mathcal{C}\setminus\mathcal{C}_{\mathrm{B}}\neq\emptyset$. For each category $c\in\mathcal{C}$, we utilize CLIP to encode its text embedding $t_{c}\in\mathbb{R}^{d}$, and $\mathcal{T}_{\mathrm{CLS}}=\{t_{c}\}_{c=1}^{C}$ ($C$ is the size of the category vocabulary).

3.2 Architecture of LaMI-DETR

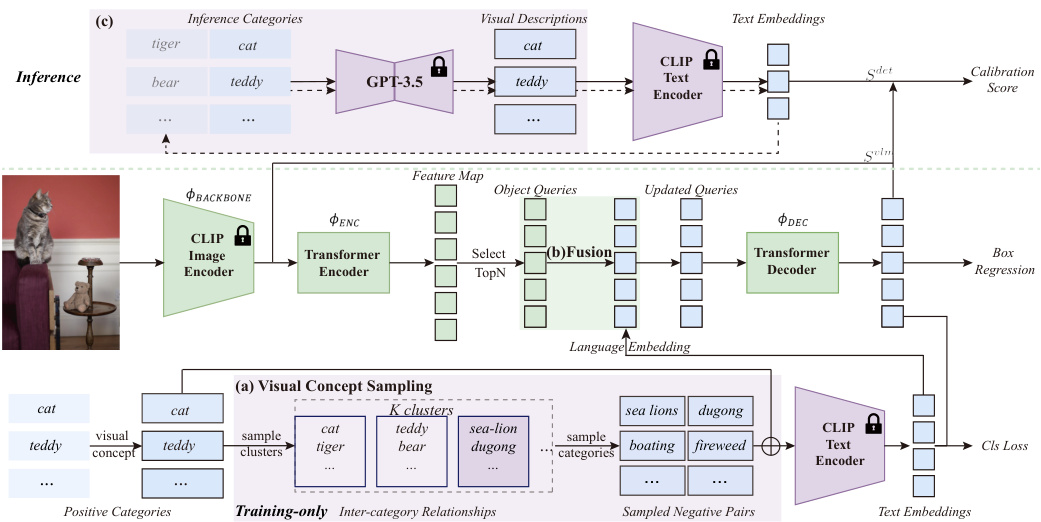

The overall framework of LaMI-DETR is illustrated in Figure 2. Given an input image, we obtain the spatial feature map using the ConvNeXt backbone of the pre-trained CLIP image encoder, $\varPhi_{\mathrm{BACKBONE}}$, which remains frozen during training. The feature map then passes through a series of operations: a transformer encoder $\varPhi_{\mathrm{ENC}}$ refines the feature map; a transformer decoder $\varPhi_{\mathrm{DEC}}$ produces a set of query features $\{f_{j}\}_{j=1}^{N}$; and a bounding box module $\varPhi_{\mathrm{BBOX}}$ processes the query features to infer the positions of objects, denoted as $\{\mathbf{b}_{j}\}_{j=1}^{N}$. We follow the inference pipeline of F-VLM [15] and use the VLM score $S^{vlm}$ to calibrate the detection score $S^{det}$.

Fig. 2: An overview of the LaMI-DETR framework. LaMI-DETR adapts the DETR model by incorporating a frozen CLIP image encoder as the backbone and replacing the final classification layer with CLIP text embeddings. (a) Visual Concept Sampling, applied only during the training phase, leverages pre-extracted inter-category relationships to sample easy negative categories that are visually distinct from ground truth classes. This encourages the detector to derive more generalized foreground features rather than overfitting to base categories. (b) The selected language embeddings are integrated into the object queries for enhanced classification accuracy. (c) During inference, confusing categories are identified to improve the VLM score.
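
The text above states only that the VLM score calibrates the detection score, following F-VLM. As a minimal sketch of what such a calibration can look like, the snippet below combines the two scores with a weighted geometric mean, which is our understanding of the F-VLM scheme. The function name `calibrate_score` and the exponent values `alpha` and `beta` are illustrative assumptions, not values taken from this paper.

```python
def calibrate_score(det_score, vlm_score, is_base, alpha=0.35, beta=0.65):
    """Combine a detector score and a VLM score by a weighted geometric mean.

    Assumption: base categories lean on the detector (small weight alpha on
    the VLM score), novel categories lean on the VLM (larger weight beta).
    Both scores are expected to lie in [0, 1].
    """
    weight = alpha if is_base else beta
    return (det_score ** (1.0 - weight)) * (vlm_score ** weight)


# A novel-category box with a weak detector score but a strong VLM score
# keeps a higher final score than it would under the base-category weighting.
novel_score = calibrate_score(0.1, 0.9, is_base=False)
base_score = calibrate_score(0.1, 0.9, is_base=True)
```

The geometric mean keeps the result in $[0, 1]$ and lets a single exponent control how much the frozen VLM is trusted for each category type.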

Comparison with other Open-Vocabulary DETR. CORA [36] and EdaDet [29] also propose to use a frozen CLIP image encoder in DETR for extracting image features. However, LaMI-DETR significantly differs from these two approaches in the following aspects.

Firstly, regarding the number of backbones used, both LaMI-DETR and CORA employ a single backbone. In contrast, EdaDet utilizes two backbones: a learnable backbone and a frozen CLIP image encoder.

Secondly, both CORA and EdaDet adopt an architecture that decouples classification and regression tasks. While this method addresses the issue of failing to recall novel classes, it necessitates extra post-processing steps, such as NMS, disrupting DETR’s original end-to-end structure.

Furthermore, both CORA and EdaDet require RoI-Align operations during training. In CORA, the DETR only predicts objectness, necessitating RoI-Align on the CLIP feature map during anchor pre-matching to determine the specific categories of proposals. EdaDet minimizes the cross-entropy loss based on each proposal’s classification scores, obtained through a pooling operation. Consequently, CORA and EdaDet require multiple pooling operations during inference. In contrast, LaMI-DETR simplifies this process, needing only a single pooling operation at the inference stage.

3.3 Language Model Instruction

Unlike previous methods that rely only on the vision-language alignment of VLMs, we aim to improve open-vocabulary detectors by enhancing concept representation and investigating inter-category relationships. To achieve this, we first explain the process of constructing visual concepts and delineating their relationships. In the Language Embedding Fusion and Confusing Category sections, we describe methods for more accurately representing concepts during training and inference. The Visual Concept Sampling section addresses how to mitigate overfitting through the use of inter-category relationships. Finally, we detail the distinctions from other research efforts.

Inter-category Relationships Extraction. Based on the problem identified in Figure 1, we employ visual descriptions to establish visual concepts, refining concept representation. Furthermore, we utilize T5, which possesses extensive textual semantic knowledge, to measure similarity relationships among visual concepts, thereby extracting inter-category relationships.

As illustrated in Figure 3, given a category name $c\in\mathcal{C}$, we extract its fine-grained visual feature descriptors $d$ using the method described in [21]. We define $\mathcal{D}$ as the visual description space for categories in $\mathcal{C}$. These visual descriptions $d\in\mathcal{D}$ are then sent to the T5 model to obtain the visual description embeddings $e\in\mathcal{E}$. Consequently, we construct an open set of visual concepts $\mathcal{D}$ and their corresponding embeddings $\mathcal{E}$. To identify visually similar concepts, we propose clustering the visual description embeddings $\mathcal{E}$ into $K$ cluster centroids. Concepts grouped under the same cluster centroid are deemed to possess similar visual characteristics. The extracted inter-category relationships are then applied in the visual concept sampling, as shown in Figure 2(a).

Fig. 3: Illustration of Inter-category Relationships Extraction. Visual descriptions generated by GPT-3.5 are processed by T5 to cluster categories with visual similarities.
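
The clustering step described above can be sketched as follows. This is a minimal illustration, assuming the visual description embeddings are already available as plain vectors; the toy three-dimensional vectors stand in for real T5 outputs, and the use of Lloyd's k-means on unit-normalized vectors is our choice, since the text does not name the clustering algorithm. The helper names `unit` and `kmeans` are hypothetical.

```python
import math
import random


def unit(vec):
    """Scale a vector to unit length so distances reflect cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def kmeans(embeddings, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns one cluster index per embedding."""
    rng = random.Random(seed)
    points = [unit(e) for e in embeddings]
    centroids = rng.sample(points, k)  # initialize from k distinct points
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        for i, p in enumerate(points):
            assign[i] = min(
                range(k),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])),
            )
        # Update step: mean of the members; keep the old centroid if empty.
        for j in range(k):
            members = [points[i] for i in range(len(points)) if assign[i] == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return assign


# Toy stand-ins for description embeddings (assumed values, not T5 outputs).
names = ["sea lion", "dugong", "fireboat", "fireweed"]
toy_embeddings = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.0, 0.9, 0.1],
    [0.1, 0.0, 0.95],
]
clusters = kmeans(toy_embeddings, k=3)
cluster_of = dict(zip(names, clusters))
```

With these toy vectors, "sea lion" and "dugong" end up in the same cluster while "fireboat" and "fireweed" are separated, which mirrors the behavior reported for Figure 1(c).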

Language Embedding Fusion. As shown in Figure 2(b), after the transformer encoder, each pixel of the feature map $\{f_{i}\}_{i=1}^{M}$ is interpreted as an object query, and each query directly predicts a bounding box. The selection of the top $N$ scoring bounding boxes as region proposals can be written as:

$$
\{q_{j}\}_{j=1}^{N}=\operatorname{Top}_{N}\bigl(\{\mathcal{T}_{\mathrm{CLS}}\cdot f_{i}\}_{i=1}^{M}\bigr).
$$

In LaMI-DETR, we fuse each query in $\{q_{j}\}_{j=1}^{N}$ with its closest text embedding, resulting in:

$$
\{q_{j}\}_{j=1}^{N}=\{q_{j}\oplus t_{j}\}_{j=1}^{N},
$$

where $t_{j}$ is the text embedding closest to $q_{j}$ and $\oplus$ denotes element-wise addition.

On the one hand, the visual descriptions are sent to the T5 model to cluster visually similar categories, as previously described. On the other hand, the visual descriptions $d\in\mathcal{D}$ are forwarded to the text encoder of the CLIP model to update the classification weights, denoted as $\mathcal{T}_{\mathrm{CLS}}=\{t_{c}^{\prime}\}_{c=1}^{C}$, where $t_{c}^{\prime}$ represents the text embedding of the description $d$ of category $c$ in the CLIP text encoder space. Consequently, the text embeddings used in the language embedding fusion process are updated accordingly:

$$
\{q_{j}\}_{j=1}^{N}=\{q_{j}\oplus t_{j}^{\prime}\}_{j=1}^{N}.
$$
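
The two fusion equations above can be sketched in a few lines. This is an illustrative reading under stated assumptions: each encoder feature is scored against every text embedding by dot product, a feature's score is its best class score, and "closest" text embedding means the one with the highest dot product. The function names `dot` and `select_and_fuse` and the toy vectors are hypothetical, and real queries would be tensors rather than Python lists.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def select_and_fuse(features, text_embeddings, top_n):
    """Keep the top_n features by best class score, then add the closest
    text embedding to each kept feature (element-wise addition)."""
    scored = []
    for f in features:
        scores = [dot(t, f) for t in text_embeddings]
        best_class = max(range(len(scores)), key=scores.__getitem__)
        scored.append((scores[best_class], best_class, f))
    # Highest-scoring features first; the sort is stable for ties.
    scored.sort(key=lambda item: item[0], reverse=True)
    fused = []
    for _, cls, f in scored[:top_n]:
        t = text_embeddings[cls]
        fused.append([a + b for a, b in zip(f, t)])
    return fused


# Two class embeddings and three encoder features (toy values).
text_embeddings = [[1.0, 0.0], [0.0, 1.0]]
features = [[0.75, 0.25], [0.25, 0.25], [0.0, 0.5]]
fused_queries = select_and_fuse(features, text_embeddings, top_n=2)
```

Swapping `text_embeddings` from name embeddings $t_{c}$ to description embeddings $t_{c}^{\prime}$ is the only change needed to move from the first fusion equation to the updated one.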

Confusing Category. Because similar visual concepts often share common features, nearly identical visual descriptors can be generated for these categories. This similarity makes it difficult to distinguish similar visual concepts during inference.

To distinguish easily confusable categories during inference, we first identify the most similar category $c^{\mathrm{conf}}\in\mathcal{C}$ for each class $c\in\mathcal{C}$ within the CLIP text encoder semantic space, based on $\mathcal{T}_{\mathrm{CLS}}$. We then modify the prompt for generating visual descriptions $d^{\prime}\in\mathcal{D}^{\prime}$ for category $c$ to emphasize the features that differentiate $c$ from $c^{\mathrm{conf}}$. Let $t^{\prime\prime}$ be the text embedding of $d^{\prime}$ in the CLIP text encoder space. As shown in Figure 2(c), we update the inference pipeline as follows:

$$
\begin{aligned}
\mathcal{T}_{\mathrm{CLS}}^{\prime}&=\{t_{c}^{\prime\prime}\}_{c=1}^{C},\\
S_{j}^{vlm}&=\mathcal{T}_{\mathrm{CLS}}^{\prime}\cdot\varPhi_{\mathrm{pooling}}\left(\mathbf{b}_{j}\right).
\end{aligned}
$$
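
Finding the most confusable category for each class amounts to a nearest-neighbor search in the text embedding space. The sketch below assumes cosine similarity as the measure and uses toy two-dimensional vectors in place of CLIP text embeddings. The function `most_confusable` and the prompt template are hypothetical; the paper's actual prompt wording is not given in this section.

```python
import math


def most_confusable(text_embeddings):
    """For each category, return the index of the most similar other
    category by cosine similarity."""
    units = []
    for t in text_embeddings:
        norm = math.sqrt(sum(x * x for x in t)) or 1.0
        units.append([x / norm for x in t])
    result = []
    for i, u in enumerate(units):
        best_j, best_sim = None, -2.0  # cosine similarity is at least -1
        for j, v in enumerate(units):
            if i == j:
                continue  # a category is never its own confusing category
            sim = sum(a * b for a, b in zip(u, v))
            if sim > best_sim:
                best_j, best_sim = j, sim
        result.append(best_j)
    return result


names = ["sea lion", "dugong", "fireboat"]
toy_text_embeddings = [[1.0, 0.1], [0.9, 0.2], [0.0, 1.0]]  # assumed values
conf_index = most_confusable(toy_text_embeddings)

# Hypothetical prompt template that asks for differentiating features.
prompts = [
    f"What visual features distinguish a {names[i]} from a {names[j]}?"
    for i, j in enumerate(conf_index)
]
```

The resulting prompts would be sent to the language model to obtain $d^{\prime}$, whose CLIP text embeddings $t^{\prime\prime}$ form $\mathcal{T}_{\mathrm{CLS}}^{\prime}$.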

Visual Concept Sampling. To address the challenges posed by incomplete annotations in open-vocabulary detection datasets, we employ Federated Loss [47], originally introduced for long-tail datasets [10]. This approach randomly selects a set of categories for which to calculate detection losses in each mini-batch, which reduces the impact of missing annotations in certain classes. Let $p=[p_{1},p_{2},\ldots,p_{C}]$ be the category occurrence frequencies, where $p_{c}$ denotes the occurrence frequency in the training data of the $c^{\mathrm{th}}$ visual concept and $C$ represents the total number of categories. We randomly draw $C_{\mathrm{fed}}$ samples based on the probability distribution $p$. The likelihood of selecting the $c^{\mathrm{th}}$ sample $x_{c}$ is proportional to its corresponding weight $p_{c}$. This method facilitates the transfer of visual similarity knowledge, extracted by the language model, to the detector, thereby reducing overfitting:

$$
P(X=c)=p_{c},\quad\mathrm{for~}c=1,2,\ldots,C.
$$

Incorporating federated loss, the classification weight is reformulated as $\mathcal{T}_{\mathrm{CLS}}=\{t_{c}^{\prime\prime}\}_{c=1}^{C_{\mathrm{fed}}}$, where $\mathcal{C}_{\mathrm{FED}}$ denotes the categories engaged in the loss calculation of each iteration, and $C_{\mathrm{fed}}$ is the size of $\mathcal{C}_{\mathrm{FED}}$.

We utilize a frozen CLIP with strong open-vocabulary capabilities as LaMI-DETR’s backbone. However, due to the limited categories in detection datasets, overfitting to base classes is inevitable after training. To mitigate overtraining on base categories, we sample easy negative categories based on the results of visual concept clustering. In LaMI-DETR, let the clusters containing the ground truth categories in a given iteration be denoted by $\mathcal{K}_{G}$, and let $\mathcal{C}_{g}$ denote all the categories within $\mathcal{K}_{G}$. We exclude $\mathcal{C}_{g}$ from being sampled in the current iteration by setting the frequency of occurrence of the categories within $\mathcal{C}_{g}$ to zero:

$$
p_{c}^{cal}=\begin{cases}0, & \mathrm{if~}c\in\mathcal{C}_{g},\\ p_{c}, & \mathrm{otherwise},\end{cases}
$$

where $p_{c}^{cal}$ indicates the frequency of occurrence of category $c$ after language model calibration, ensuring that visually similar categories are not sampled during this iteration. This process is shown in Figure 2(a).
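
The sampling procedure above can be sketched as follows. This is a minimal illustration under stated assumptions: categories are integer indices, `cluster_of[c]` holds the cluster index of category `c` from the visual concept clustering, and negatives are drawn without replacement, which is our choice since the text only says samples are drawn according to $p$. The function names are hypothetical.

```python
import random


def calibrated_frequencies(freqs, cluster_of, gt_categories):
    """Zero the frequency of every category that shares a cluster with a
    ground truth category; leave the other frequencies unchanged."""
    gt_clusters = {cluster_of[c] for c in gt_categories}
    return [
        0.0 if cluster_of[c] in gt_clusters else p
        for c, p in enumerate(freqs)
    ]


def sample_negatives(freqs, cluster_of, gt_categories, num_samples, seed=None):
    """Draw up to num_samples distinct negative categories with probability
    proportional to the calibrated frequencies."""
    rng = random.Random(seed)
    weights = calibrated_frequencies(freqs, cluster_of, gt_categories)
    candidates = [c for c, w in enumerate(weights) if w > 0]
    chosen = []
    while candidates and len(chosen) < num_samples:
        pick = rng.choices(
            candidates, weights=[weights[c] for c in candidates], k=1
        )[0]
        chosen.append(pick)
        candidates.remove(pick)  # without replacement (assumption)
    return chosen


# Five categories in three clusters; category 0 is the ground truth.
freqs = [0.4, 0.3, 0.2, 0.1, 0.5]
cluster_of = [0, 0, 1, 2, 2]
p_cal = calibrated_frequencies(freqs, cluster_of, gt_categories=[0])
negatives = sample_negatives(freqs, cluster_of, [0], num_samples=2, seed=0)
```

Category 1 shares a cluster with the ground truth category 0, so it can never be drawn as a negative in this iteration. In federated-loss implementations the ground truth categories are typically added back to the sampled set before computing the loss; that step is omitted here.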

Comparison with concept enrichment. The visual concept description differs from the concept enrichment employed in DetCLIP [39]. The visual descriptions used in LaMI place more emphasis on the visual attributes inherent to the object itself. In DetCLIP, the category label is supplemented with definitions, which may include concepts not present in the pictures in order to rigorously characterize a class.

4 Experiments

Section 4.1 introduces the standard datasets and benchmarks commonly used in the field, as detailed in [9]. Section 4.2 outlines the implementation and training details of LaMI-DETR, which leverages knowledge of visual characteristics from language models. We compare our models with existing works in Section 4.3, showcasing state-of-the-art performance. Section 4.3 also includes results on cross-dataset transfer to demonstrate the generalizability of our approach. Finally, Section 4.4 conducts ablation studies to examine the impact of our design decisions.