ALBERT: A LITE BERT FOR SELF-SUPERVISED LEARNING OF LANGUAGE REPRESENTATIONS

ALBERT: 一种轻量级BERT模型用于语言表征的自监督学习

ABSTRACT

摘要

Increasing model size when pre-training natural language representations often results in improved performance on downstream tasks. However, at some point further model increases become harder due to GPU/TPU memory limitations and longer training times. To address these problems, we present two parameter-reduction techniques to lower memory consumption and increase the training speed of BERT (Devlin et al., 2019). Comprehensive empirical evidence shows that our proposed methods lead to models that scale much better compared to the original BERT. We also use a self-supervised loss that focuses on modeling inter-sentence coherence, and show it consistently helps downstream tasks with multi-sentence inputs. As a result, our best model establishes new state-of-the-art results on the GLUE, RACE, and SQuAD benchmarks while having fewer parameters compared to BERT-large. The code and the pretrained models are available at https://github.com/google-research/ALBERT.

在预训练自然语言表示时增加模型规模通常能提升下游任务的表现。但随着模型持续增大,GPU/TPU内存限制和训练时间延长会导致进一步扩展变得困难。为解决这些问题,我们提出两种参数压缩技术来降低内存占用并加速BERT训练(Devlin et al., 2019)。大量实验证明,相比原始BERT,我们提出的方法能使模型获得更优的扩展性。我们还采用了一种专注于建模句子间连贯性的自监督损失函数,实验表明该设计能持续提升多句子输入的下游任务表现。最终,我们的最佳模型在参数量少于BERT-large的情况下,于GLUE、RACE和SQuAD基准测试中创造了新的性能记录。代码与预训练模型已开源:https://github.com/google-research/ALBERT。

1 INTRODUCTION

1 引言

Full network pre-training (Dai & Le, 2015; Radford et al., 2018; Devlin et al., 2019; Howard & Ruder, 2018) has led to a series of breakthroughs in language representation learning. Many nontrivial NLP tasks, including those that have limited training data, have greatly benefited from these pre-trained models. One of the most compelling signs of these breakthroughs is the evolution of machine performance on a reading comprehension task designed for middle and high-school English exams in China, the RACE test (Lai et al., 2017): the paper that originally describes the task and formulates the modeling challenge reports a then state-of-the-art machine accuracy of 44.1%; the latest published result reports a model accuracy of 83.2% (Liu et al., 2019); the work we present here pushes it even higher to 89.4%, a stunning improvement of 45.3 percentage points over the original result that is mainly attributable to our current ability to build high-performance pretrained language representations.

全网络预训练 (Dai & Le, 2015; Radford et al., 2018; Devlin et al., 2019; Howard & Ruder, 2018) 在语言表征学习领域引发了一系列突破。许多重要的自然语言处理任务 (包括训练数据有限的任务) 都从这些预训练模型中受益匪浅。这些突破最显著的标志之一是中国初高中英语考试阅读理解任务 RACE (Lai et al., 2017) 上机器表现的演进: 最初描述该任务并提出建模挑战的论文报告的当时最先进机器准确率为 44.1%; 最新发表的结果显示其模型性能达到 83.2% (Liu et al., 2019); 本文工作将其进一步提升至 89.4%, 这惊人的 45.3 个百分点的提升主要归功于当前构建高性能预训练语言表征的能力。

Evidence from these improvements reveals that a large network is of crucial importance for achieving state-of-the-art performance (Devlin et al., 2019; Radford et al., 2019). It has become common practice to pre-train large models and distill them down to smaller ones (Sun et al., 2019; Turc et al., 2019) for real applications. Given the importance of model size, we ask: Is having better NLP models as easy as having larger models?

这些改进的证据表明,大型网络对于实现最先进性能至关重要 (Devlin et al., 2019; Radford et al., 2019)。预训练大模型并将其蒸馏为更小模型 (Sun et al., 2019; Turc et al., 2019) 已成为实际应用中的常见做法。鉴于模型规模的重要性,我们不禁要问:获得更好的自然语言处理 (NLP) 模型是否只需构建更大的模型?

An obstacle to answering this question is the memory limitations of available hardware. Given that current state-of-the-art models often have hundreds of millions or even billions of parameters, it is easy to hit these limitations as we try to scale our models. Training speed can also be significantly hampered in distributed training, as the communication overhead is directly proportional to the number of parameters in the model.

回答这个问题的一个障碍是现有硬件的内存限制。鉴于当前最先进的模型通常具有数亿甚至数十亿参数,在尝试扩展模型时很容易触及这些限制。分布式训练中的训练速度也会受到显著阻碍,因为通信开销与模型参数数量成正比。

Existing solutions to the aforementioned problems include model parallelization (Shazeer et al., 2018; Shoeybi et al., 2019) and clever memory management (Chen et al., 2016; Gomez et al., 2017).

现有解决方案包括模型并行化 (Shazeer et al., 2018; Shoeybi et al., 2019) 和智能内存管理 (Chen et al., 2016; Gomez et al., 2017)。

These solutions address the memory limitation problem, but not the communication overhead. In this paper, we address all of the aforementioned problems by designing the A Lite BERT (ALBERT) architecture, which has significantly fewer parameters than a traditional BERT architecture.

这些解决方案解决了内存限制问题,但并未降低通信开销。本文通过设计参数量远少于传统BERT架构的轻量级BERT (ALBERT) 架构,解决了上述所有问题。

ALBERT incorporates two parameter reduction techniques that lift the major obstacles in scaling pre-trained models. The first one is a factorized embedding parameterization. By decomposing the large vocabulary embedding matrix into two small matrices, we separate the size of the hidden layers from the size of the vocabulary embedding. This separation makes it easier to grow the hidden size without significantly increasing the parameter size of the vocabulary embeddings. The second technique is cross-layer parameter sharing. This technique prevents the number of parameters from growing with the depth of the network. Both techniques significantly reduce the number of parameters for BERT without seriously hurting performance, thus improving parameter-efficiency. An ALBERT configuration similar to BERT-large has 18x fewer parameters and can be trained about 1.7x faster. The parameter reduction techniques also act as a form of regularization that stabilizes the training and helps with generalization.

ALBERT采用了两项参数削减技术,有效解决了预训练模型规模化过程中的主要障碍。第一项是因子化嵌入参数化 (factorized embedding parameterization) ,通过将大型词表嵌入矩阵分解为两个小矩阵,实现了隐藏层维度与词表嵌入维度的解耦。这种分离使得增加隐藏层维度时,不会显著增加词表嵌入的参数规模。第二项技术是跨层参数共享,该技术能防止参数量随网络深度增长。这两种技术在基本不影响BERT性能的前提下大幅减少了参数量,从而提升了参数效率。与BERT-large配置相似的ALBERT模型参数量减少了18倍,训练速度提升约1.7倍。这些参数削减技术还起到了正则化作用,稳定了训练过程并提升了泛化能力。

To further improve the performance of ALBERT, we also introduce a self-supervised loss for sentence-order prediction (SOP). SOP primarily focuses on inter-sentence coherence and is designed to address the ineffectiveness (Yang et al., 2019; Liu et al., 2019) of the next sentence prediction (NSP) loss proposed in the original BERT.

为进一步提升ALBERT的性能,我们还引入了句子顺序预测(SOP)的自监督损失。SOP主要关注句间连贯性,旨在解决原始BERT中提出的下一句预测(NSP)损失效率低下的问题(Yang et al., 2019; Liu et al., 2019)。

As a result of these design decisions, we are able to scale up to much larger ALBERT configurations that still have fewer parameters than BERT-large but achieve significantly better performance. We establish new state-of-the-art results on the well-known GLUE, SQuAD, and RACE benchmarks for natural language understanding. Specifically, we push the RACE accuracy to 89.4%, the GLUE benchmark to 89.4, and the F1 score of SQuAD 2.0 to 92.2.

由于这些设计决策,我们能够扩展至更大的ALBERT配置,其参数量仍少于BERT-large但性能显著提升。我们在自然语言理解的经典基准测试GLUE、SQuAD和RACE上创造了新的最优成绩。具体而言,我们将RACE准确率提升至89.4%,GLUE基准得分提升至89.4,SQuAD 2.0的F1分数提升至92.2。

2 RELATED WORK

2 相关工作

2.1 SCALING UP REPRESENTATION LEARNING FOR NATURAL LANGUAGE

2.1 自然语言表征学习的规模化扩展

Learning representations of natural language has been shown to be useful for a wide range of NLP tasks and has been widely adopted (Mikolov et al., 2013; Le & Mikolov, 2014; Dai & Le, 2015; Peters et al., 2018; Devlin et al., 2019; Radford et al., 2018; 2019). One of the most significant changes in the last two years is the shift from pre-training word embeddings, whether standard (Mikolov et al., 2013; Pennington et al., 2014) or contextualized (McCann et al., 2017; Peters et al., 2018), to full-network pre-training followed by task-specific fine-tuning (Dai & Le, 2015; Radford et al., 2018; Devlin et al., 2019). In this line of work, it is often shown that larger model size improves performance. For example, Devlin et al. (2019) show that across three selected natural language understanding tasks, using larger hidden size, more hidden layers, and more attention heads always leads to better performance. However, they stop at a hidden size of 1024, presumably because of the model size and computation cost problems.

学习自然语言的表示已被证明对广泛的自然语言处理(NLP)任务非常有用,并得到了广泛采用 (Mikolov et al., 2013; Le & Mikolov, 2014; Dai & Le, 2015; Peters et al., 2018; Devlin et al., 2019; Radford et al., 2018; 2019)。过去两年最显著的变化是从预训练词嵌入(无论是标准的 (Mikolov et al., 2013; Pennington et al., 2014) 还是上下文敏感的 (McCann et al., 2017; Peters et al., 2018))转向全网络预训练后进行任务特定微调 (Dai & Le, 2015; Radford et al., 2018; Devlin et al., 2019)。在这类工作中,通常表明更大的模型规模能提升性能。例如,Devlin et al. (2019) 表明,在三个选定的自然语言理解任务中,使用更大的隐藏层大小、更多的隐藏层和更多的注意力头总能带来更好的性能。然而,他们将隐藏层大小停留在1024,推测是由于模型规模和计算成本问题。

It is difficult to experiment with large models due to computational constraints, especially in terms of GPU/TPU memory limitations. Given that current state-of-the-art models often have hundreds of millions or even billions of parameters, we can easily hit memory limits. To address this issue, Chen et al. (2016) propose a method called gradient checkpointing that makes the memory requirement sublinear in the number of layers at the cost of an extra forward pass. Gomez et al. (2017) propose a way to reconstruct each layer's activations from the next layer so that the intermediate activations do not need to be stored. Both methods reduce the memory consumption at the cost of speed. Raffel et al. (2019) proposed to use model parallelization to train a giant model. In contrast, our parameter-reduction techniques reduce memory consumption and increase training speed.

由于计算资源限制,尤其是GPU/TPU内存限制,对大模型进行实验具有挑战性。鉴于当前最先进的模型通常具有数亿甚至数十亿参数,我们很容易触及内存上限。为解决这一问题,Chen等人 (2016) 提出了一种称为梯度检查点 (gradient checkpointing) 的方法,通过额外一次前向传播的代价将内存需求降至相对层数亚线性的级别。Gomez等人 (2017) 提出通过下一层重构每一层的激活值 (activation),从而无需存储中间激活值。这两种方法都以牺牲速度为代价降低内存消耗。Raffel等人 (2019) 提出使用模型并行化 (model parallelization) 来训练巨型模型。相比之下,我们的参数削减技术既能降低内存消耗,又能提升训练速度。
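The trade-off behind gradient checkpointing (Chen et al., 2016) can be illustrated with a small Python sketch. It uses a hypothetical chain of scalar layers `y = tanh(w * x)` rather than a real network, and the function names are illustrative. Only every `segment`-th activation is kept during the forward pass; during the backward pass each segment is recomputed from its checkpoint, which is the extra forward computation mentioned above. With `segment` near √n for n layers, the number of stored activations grows roughly like √n instead of n.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy network: a chain of scalar layers y = tanh(w * x).


def layer(w: float, x: float) -> float:
    return math.tanh(w * x)


def layer_grad(w: float, x: float) -> float:
    # d/dx tanh(w * x) = w * (1 - tanh(w * x)^2); only the layer's input is needed.
    t = math.tanh(w * x)
    return w * (1.0 - t * t)


def grad_store_all(weights: List[float], x0: float) -> Tuple[float, int]:
    """Baseline backprop: every activation stays in memory until the backward pass."""
    acts = [x0]
    for w in weights:
        acts.append(layer(w, acts[-1]))
    g = 1.0
    for i in reversed(range(len(weights))):
        g *= layer_grad(weights[i], acts[i])
    return g, len(acts)


def grad_checkpointed(weights: List[float], x0: float, segment: int) -> Tuple[float, int]:
    """Keep only every `segment`-th activation and recompute each segment when needed."""
    n = len(weights)
    checkpoints: Dict[int, float] = {0: x0}
    x = x0
    for i, w in enumerate(weights):
        x = layer(w, x)
        if (i + 1) % segment == 0 and i + 1 < n:
            checkpoints[i + 1] = x  # input of layer i + 1
    g = 1.0
    peak = len(checkpoints)
    for start in sorted(checkpoints, reverse=True):
        end = min(start + segment, n)
        # Extra forward pass over this segment, starting from its checkpoint.
        acts = [checkpoints[start]]
        for i in range(start, end - 1):
            acts.append(layer(weights[i], acts[-1]))
        peak = max(peak, len(checkpoints) + len(acts))
        for i in reversed(range(start, end)):
            g *= layer_grad(weights[i], acts[i - start])
    return g, peak


weights = [0.5 + 0.05 * i for i in range(16)]
full_grad, full_stored = grad_store_all(weights, 0.3)
ckpt_grad, ckpt_stored = grad_checkpointed(weights, 0.3, segment=4)  # segment = sqrt(16)
```

On these 16 layers with `segment=4`, both functions return the same gradient, while the peak number of stored activations drops from 17 to 8.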

2.2 CROSS-LAYER PARAMETER SHARING

2.2 跨层参数共享

The idea of sharing parameters across layers has been previously explored with the Transformer architecture (Vaswani et al., 2017), but this prior work has focused on training for standard encoder-decoder tasks rather than the pre-training/fine-tuning setting. Different from our observations, Dehghani et al. (2018) show that networks with cross-layer parameter sharing (Universal Transformer, UT) get better performance on language modeling and subject-verb agreement than the standard transformer. Very recently, Bai et al. (2019) propose a Deep Equilibrium Model (DQE) for transformer networks and show that DQE can reach an equilibrium point for which the input embedding and the output embedding of a certain layer stay the same. Our observations show that our embeddings are oscillating rather than converging. Hao et al. (2019) combine a parameter-sharing transformer with the standard one, which further increases the number of parameters of the standard transformer.

在Transformer架构中共享参数的想法此前已有研究 (Vaswani等人,2017) ,但这些工作主要针对标准编码器-解码器任务的训练,而非预训练/微调场景。与我们的发现不同,Dehghani等人 (2018) 证明跨层参数共享网络 (通用Transformer,UT) 在语言建模和主谓一致任务上表现优于标准Transformer。最近,Bai等人 (2019) 提出Transformer网络的深度均衡模型 (DQE) ,并证明DQE能使特定层的输入嵌入和输出嵌入达到均衡状态。而我们的实验显示嵌入向量呈现振荡而非收敛状态。Hao等人 (2019) 将参数共享Transformer与标准Transformer结合,这反而增加了标准Transformer的参数规模。

2.3 SENTENCE ORDERING OBJECTIVES

2.3 句子排序目标

ALBERT uses a pre-training loss based on predicting the ordering of two consecutive segments of text. Several researchers have experimented with pre-training objectives that similarly relate to discourse coherence. Coherence and cohesion in discourse have been widely studied and many phenomena have been identified that connect neighboring text segments (Hobbs, 1979; Halliday & Hasan, 1976; Grosz et al., 1995). Most objectives found effective in practice are quite simple. Skip-thought (Kiros et al., 2015) and FastSent (Hill et al., 2016) sentence embeddings are learned by using an encoding of a sentence to predict words in neighboring sentences. Other objectives for sentence embedding learning include predicting future sentences rather than only neighbors (Gan et al., 2017) and predicting explicit discourse markers (Jernite et al., 2017; Nie et al., 2019). Our loss is most similar to the sentence ordering objective of Jernite et al. (2017), where sentence embeddings are learned in order to determine the ordering of two consecutive sentences. Unlike most of the above work, however, our loss is defined on textual segments rather than sentences. BERT (Devlin et al., 2019) uses a loss based on predicting whether the second segment in a pair has been swapped with a segment from another document. We compare to this loss in our experiments and find that sentence ordering is a more challenging pre-training task and more useful for certain downstream tasks. Concurrently with our work, Wang et al. (2019) also try to predict the order of two consecutive segments of text, but they combine it with the original next sentence prediction in a three-way classification task rather than empirically comparing the two.

ALBERT采用基于预测连续两段文本顺序的预训练损失函数。多位研究者尝试过与语篇连贯性相关的预训练目标。语篇中的连贯与衔接已被广泛研究,学界已发现许多连接相邻文本段的现象 (Hobbs, 1979; Halliday & Hasan, 1976; Grosz et al., 1995)。实践中有效的目标大多较为简单:Skipthought (Kiros et al., 2015) 和FastSent (Hill et al., 2016) 通过使用句子编码预测相邻句子中的词来学习句子嵌入;其他句子嵌入学习目标包括预测未来句子而非仅限相邻句 (Gan et al., 2017),以及预测显式语篇标记 (Jernite et al., 2017; Nie et al., 2019)。我们的损失函数与Jernite等人 (2017) 的句子排序目标最为相似,其通过确定连续两句的顺序来学习句子嵌入。但与上述多数工作不同,我们的损失函数作用于文本段而非句子。BERT (Devlin et al., 2019) 使用基于预测文本对中第二段是否被替换为其他文档片段的损失函数。实验表明,句子排序是更具挑战性的预训练任务,对某些下游任务更有效。与我们同期的工作中,Wang等人 (2019) 也尝试预测连续文本段的顺序,但他们将其与原始下一句预测结合为三向分类任务,而未对两者进行实证比较。

3 THE ELEMENTS OF ALBERT

3 ALBERT 的构成要素

In this section, we present the design decisions for ALBERT and provide quantified comparisons against corresponding configurations of the original BERT architecture (Devlin et al., 2019).

在本节中,我们将介绍ALBERT的设计决策,并提供与原始BERT架构 (Devlin et al., 2019) 相应配置的量化对比。

3.1 MODEL ARCHITECTURE CHOICES

3.1 模型架构选择

The backbone of the ALBERT architecture is similar to BERT in that it uses a transformer encoder (Vaswani et al., 2017) with GELU nonlinearities (Hendrycks & Gimpel, 2016). We follow the BERT notation conventions and denote the vocabulary embedding size as $E$, the number of encoder layers as $L$, and the hidden size as $H$. Following Devlin et al. (2019), we set the feed-forward/filter size to be $4H$ and the number of attention heads to be $H/64$.

ALBERT架构的主干与BERT类似,都采用了Transformer编码器 (Vaswani et al., 2017) 和GELU非线性激活函数 (Hendrycks & Gimpel, 2016)。我们沿用BERT的符号约定:词汇嵌入维度记为$E$,编码器层数记为$L$,隐藏层维度记为$H$。参照Devlin et al. (2019)的设置,前馈网络/滤波器维度设为$4H$,注意力头数设为$H/64$。
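The notation above can be captured in a small configuration object. This is a sketch, not the released ALBERT code: `EncoderConfig` and its field names are hypothetical, and it derives only the two quantities the text fixes, the feed-forward size $4H$ and the $H/64$ attention heads. The listed shapes are the ones in Table 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncoderConfig:
    num_layers: int          # L
    hidden_size: int         # H
    embedding_size: int      # E (tied to H in BERT)
    shared: bool             # cross-layer parameter sharing
    vocab_size: int = 30000  # V, the vocabulary size given in Sec. 4.1

    @property
    def ffn_size(self) -> int:
        # Feed-forward/filter size fixed at 4H.
        return 4 * self.hidden_size

    @property
    def num_heads(self) -> int:
        # H/64 attention heads, i.e. 64 dimensions per head.
        if self.hidden_size % 64:
            raise ValueError("hidden_size must be a multiple of 64")
        return self.hidden_size // 64


# Shapes from Table 1.
CONFIGS = {
    "BERT-base": EncoderConfig(12, 768, 768, shared=False),
    "BERT-large": EncoderConfig(24, 1024, 1024, shared=False),
    "ALBERT-base": EncoderConfig(12, 768, 128, shared=True),
    "ALBERT-large": EncoderConfig(24, 1024, 128, shared=True),
    "ALBERT-xlarge": EncoderConfig(24, 2048, 128, shared=True),
    "ALBERT-xxlarge": EncoderConfig(12, 4096, 128, shared=True),
}
```

For example, ALBERT-xxlarge gets a 16,384-wide feed-forward layer and 64 attention heads, while BERT-large gets 16 heads.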

There are three main contributions that ALBERT makes over the design choices of BERT.

ALBERT相比BERT的设计选择主要有三点贡献。

Factorized embedding parameterization. In BERT, as well as subsequent modeling improvements such as XLNet (Yang et al., 2019) and RoBERTa (Liu et al., 2019), the WordPiece embedding size $E$ is tied with the hidden layer size $H$, i.e., $E\equiv H$. This decision appears suboptimal for both modeling and practical reasons, as follows.

因子化嵌入参数化。在BERT及后续改进模型如XLNet (Yang等人,2019) 和RoBERTa (Liu等人,2019) 中,WordPiece嵌入维度$E$与隐藏层维度$H$绑定,即$E\equiv H$。从建模和实际应用角度来看,这种设计存在以下次优问题:

From a modeling perspective, WordPiece embeddings are meant to learn context-independent representations, whereas hidden-layer embeddings are meant to learn context-dependent representations. As experiments with context length indicate (Liu et al., 2019), the power of BERT-like representations comes from the use of context to provide the signal for learning such context-dependent representations. As such, untying the WordPiece embedding size $E$ from the hidden layer size $H$ allows us to make a more efficient usage of the total model parameters as informed by modeling needs, which dictate that $H\gg E$ .

从建模角度来看,WordPiece嵌入旨在学习上下文无关的表示,而隐藏层嵌入则用于学习上下文相关的表示。正如上下文长度实验所示 (Liu et al., 2019),类BERT表示的优势源于利用上下文信号来学习这种上下文相关的表示。因此,将WordPiece嵌入尺寸$E$与隐藏层尺寸$H$解耦,能让我们根据建模需求更高效地利用总参数量,而该需求决定了$H\gg E$的关系。

From a practical perspective, natural language processing usually requires the vocabulary size $V$ to be large.¹ If $E\equiv H$, then increasing $H$ increases the size of the embedding matrix, which has size $V\times E$. This can easily result in a model with billions of parameters, most of which are only updated sparsely during training, since each batch produces gradients only for the rows of tokens that actually occur in it.

从实践角度来看,自然语言处理通常需要词汇表大小 $V$ 足够大。若 $E\equiv H$,则增大 $H$ 会导致嵌入矩阵 (embedding matrix) 的尺寸增加,其尺寸为 $V\times E$。这很容易导致模型具有数十亿参数,其中大多数在训练期间仅稀疏更新,因为每个批次只有实际出现的词元所对应的行会获得梯度。

Therefore, for ALBERT we use a factorization of the embedding parameters, decomposing them into two smaller matrices. Instead of projecting the one-hot vectors directly into the hidden space of size $H$, we first project them into a lower-dimensional embedding space of size $E$, and then project the result into the hidden space. By using this decomposition, we reduce the embedding parameters from $O(V\times H)$ to $O(V\times E+E\times H)$. This parameter reduction is significant when $H\gg E$. We choose to use the same $E$ for all word pieces because they are much more evenly distributed across documents than whole words, for which having different embedding sizes for different words is important (Grave et al., 2017; Baevski & Auli, 2018; Dai et al., 2019).

因此,对于ALBERT模型,我们采用了嵌入参数因式分解策略,将其分解为两个较小的矩阵。我们不再将独热向量直接投影到维度为$H$的隐藏空间,而是先将其投影到维度为$E$的低维嵌入空间,再映射至隐藏空间。通过这种分解方式,嵌入参数量从$O(V\times H)$降至$O(V\times E+E\times H)$。当$H\gg E$时,这种参数量削减效果尤为显著。我们为所有词片段(word piece)选择相同的$E$值,因为相较于需要为不同单词设置不同嵌入维度(Grave et al., 2017; Baevski & Auli, 2018; Dai et al., 2019)的整词嵌入,词片段在文档中的分布更为均匀。
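A minimal Python sketch of the factorized embedding, assuming plain nested lists instead of a tensor library and no bias on the projection; `factorized_embed` and `embedding_param_count` are illustrative names. Looking up row `token_id` of the $V\times E$ table is equivalent to multiplying a one-hot vector by it, and the result is then projected by the $E\times H$ matrix. For $V=30{,}000$, $H=4096$ and $E=128$, the count drops from $V\times H=122{,}880{,}000$ to $V\times E+E\times H=4{,}364{,}288$, roughly a 28x reduction.

```python
import random
from typing import List, Optional

Matrix = List[List[float]]


def init_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    # Small Gaussian init; the 0.02 scale is an assumption for illustration.
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


def factorized_embed(token_id: int, word_table: Matrix, projection: Matrix) -> List[float]:
    """Row lookup in the V x E table (same as one-hot @ table), then an E -> H projection."""
    e = word_table[token_id]
    hidden = len(projection[0])
    return [sum(e[k] * projection[k][j] for k in range(len(e))) for j in range(hidden)]


def embedding_param_count(V: int, H: int, E: Optional[int] = None) -> int:
    """V*H when E is tied to H (BERT-style); V*E + E*H when factorized (ALBERT-style)."""
    if E is None or E == H:
        return V * H
    return V * E + E * H


rng = random.Random(0)
V, E, H = 10, 4, 8  # toy sizes
table = init_matrix(V, E, rng)
proj = init_matrix(E, H, rng)
vec = factorized_embed(3, table, proj)
print(len(vec))                                  # 8
print(embedding_param_count(30000, 4096))        # 122880000
print(embedding_param_count(30000, 4096, 128))   # 4364288
```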

Cross-layer parameter sharing. For ALBERT, we propose cross-layer parameter sharing as another way to improve parameter efficiency. There are multiple ways to share parameters, e.g., only sharing feed-forward network (FFN) parameters across layers, or only sharing attention parameters. The default decision for ALBERT is to share all parameters across layers. All our experiments use this default decision unless otherwise specified. We compare this design decision against other strategies in our experiments in Sec. 4.5.

跨层参数共享。对于ALBERT,我们提出跨层参数共享作为另一种提升参数效率的方法。参数共享存在多种方式,例如仅在各层间共享前馈网络(FFN)参数,或仅共享注意力参数。ALBERT的默认方案是在所有层间共享全部参数。除非另有说明,我们所有实验均采用这一默认方案。我们将在4.5节的实验中对比该设计方案与其他策略的效果。
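To see how much each option saves, the sketch below counts encoder weights under four sharing strategies (all-shared, shared-attention, shared-FFN, not-shared). It is an approximation under stated assumptions: it counts only the attention projections and the FFN (weights and biases), ignores LayerNorm, embeddings and output heads, and the function names are hypothetical. For the ALBERT-base shape ($L=12$, $H=768$), all-shared keeps one layer's worth of parameters (about 7.1M) where not-shared needs about 85M.

```python
from typing import Dict, Tuple


def attention_params(H: int) -> int:
    # Q, K, V and output projections: four H x H weight matrices plus biases.
    return 4 * (H * H + H)


def ffn_params(H: int) -> int:
    # Two dense layers H -> 4H -> H, with biases.
    return H * 4 * H + 4 * H + 4 * H * H + H


# strategy name -> (share attention across layers?, share FFN across layers?)
STRATEGIES: Dict[str, Tuple[bool, bool]] = {
    "all-shared": (True, True),
    "shared-attention": (True, False),
    "shared-FFN": (False, True),
    "not-shared": (False, False),
}


def encoder_params(L: int, H: int, strategy: str) -> int:
    """Encoder weights under a cross-layer sharing strategy (LayerNorm ignored)."""
    share_attn, share_ffn = STRATEGIES[strategy]
    attn_copies = 1 if share_attn else L
    ffn_copies = 1 if share_ffn else L
    return attn_copies * attention_params(H) + ffn_copies * ffn_params(H)


for name in STRATEGIES:
    print(f"{name:>16}: {encoder_params(12, 768, name):,}")
```

Because the FFN holds about twice as many weights as the attention block, sharing only the FFN removes more parameters than sharing only attention.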

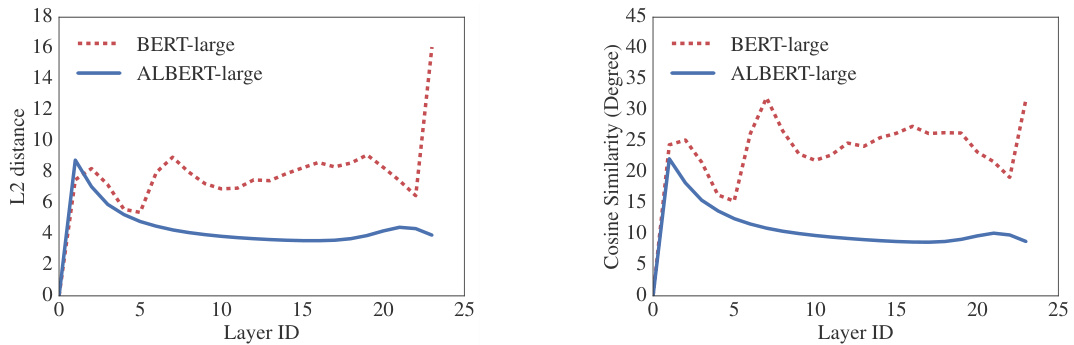

Similar strategies have been explored by Dehghani et al. (2018) (Universal Transformer, UT) and Bai et al. (2019) (Deep Equilibrium Models, DQE) for Transformer networks. Different from our observations, Dehghani et al. (2018) show that UT outperforms a vanilla Transformer. Bai et al. (2019) show that their DQEs reach an equilibrium point for which the input and output embedding of a certain layer stay the same. Our measurements of the L2 distances and cosine similarity show that our embeddings are oscillating rather than converging.

Dehghani等人(2018)(Universal Transformer, UT)和Bai等人(2019)(Deep Equilibrium Models, DQE)针对Transformer网络探索了类似策略。与我们的观察不同,Dehghani等人(2018)表明UT优于原始Transformer。Bai等人(2019)证明他们的DQE能达到某个平衡点,使得特定层的输入和输出嵌入保持不变。而我们对L2距离和余弦相似度的测量显示,我们的嵌入是在振荡而非收敛。

Figure 1: The L2 distances and cosine similarity (in degrees) of the input and output embeddings of each layer for BERT-large and ALBERT-large.

图 1: BERT-large 和 ALBERT-large 各层输入与输出嵌入的 L2 距离及余弦相似度(以角度表示)。

Figure 1 shows the L2 distances and cosine similarity of the input and output embeddings for each layer, using BERT-large and ALBERT-large configurations (see Table 1). We observe that the transitions from layer to layer are much smoother for ALBERT than for BERT. These results show that weight-sharing has an effect on stabilizing network parameters. Although there is a drop for both metrics compared to BERT, they nevertheless do not converge to 0 even after 24 layers. This shows that the solution space for ALBERT parameters is very different from the one found by DQE.

图1展示了使用BERT-large和ALBERT-large配置(见表1)时,各层输入与输出嵌入向量的L2距离和余弦相似度。我们观察到ALBERT的层间变化比BERT更为平滑。这些结果表明权重共享对稳定网络参数具有影响。尽管两项指标相较BERT均有所下降,但即便经过24层处理后仍未收敛至0。这说明ALBERT参数的解空间与DQE发现的解空间存在显著差异。
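The two metrics in Figure 1 can be computed as follows. This sketch assumes each layer's input and output are compared as single vectors; how the measurements are aggregated over tokens and examples is not specified here, and the function names are illustrative. The cosine similarity is expressed as an angle in degrees via $\arccos$.

```python
import math
from typing import List, Sequence, Tuple


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def angle_degrees(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos = max(-1.0, min(1.0, dot / (norm_a * norm_b)))  # clamp rounding error
    return math.degrees(math.acos(cos))


def layer_transitions(states: List[Sequence[float]]) -> List[Tuple[float, float]]:
    """states[i] is the input to layer i and states[i + 1] is its output."""
    return [(l2_distance(x, y), angle_degrees(x, y)) for x, y in zip(states, states[1:])]
```

A network at an equilibrium point would show both values reaching 0 at some depth; the ALBERT curves in Figure 1 do not.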

Inter-sentence coherence loss. In addition to the masked language modeling (MLM) loss (Devlin et al., 2019), BERT uses an additional loss called next-sentence prediction (NSP). NSP is a binary classification loss for predicting whether two segments appear consecutively in the original text, as follows: positive examples are created by taking consecutive segments from the training corpus; negative examples are created by pairing segments from different documents; positive and negative examples are sampled with equal probability. The NSP objective was designed to improve performance on downstream tasks, such as natural language inference, that require reasoning about the relationship between sentence pairs. However, subsequent studies (Yang et al., 2019; Liu et al., 2019) found NSP’s impact unreliable and decided to eliminate it, a decision supported by an improvement in downstream task performance across several tasks.

句间连贯性损失。除了掩码语言建模(MLM)损失(Devlin等人,2019)外,BERT还使用了一种称为下一句预测(NSP)的额外损失。NSP是一个二元分类损失,用于预测两个片段是否在原始文本中连续出现,具体如下:正例通过从训练语料中提取连续片段生成;负例通过配对不同文档的片段生成;正负例以相同概率采样。NSP目标旨在提升需要推理句对关系的下游任务(如自然语言推理)性能。但后续研究(Yang等人,2019;Liu等人,2019)发现NSP的影响不可靠,决定取消该机制,这一决策得到了多项任务下游性能提升的支持。
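A sketch of the NSP example construction described above, using toy documents represented as lists of text segments. `make_nsp_example` is a hypothetical helper, not BERT's data pipeline, and it assumes at least two documents with at least two segments each. A negative's second segment always comes from a different document, which is what allows a model to rely on topic cues, as the next paragraph conjectures.

```python
import random
from typing import List, Tuple


def make_nsp_example(docs: List[List[str]], rng: random.Random) -> Tuple[str, str, bool]:
    """Return (segment_a, segment_b, is_next) with positives and negatives equally likely."""
    doc_idx = rng.randrange(len(docs))
    doc = docs[doc_idx]
    i = rng.randrange(len(doc) - 1)
    first = doc[i]
    if rng.random() < 0.5:
        return first, doc[i + 1], True  # positive: the true next segment
    other = rng.choice([j for j in range(len(docs)) if j != doc_idx])
    return first, rng.choice(docs[other]), False  # negative: a segment from another document
```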

We conjecture that the main reason behind NSP’s ineffectiveness is its lack of difficulty as a task, as compared to MLM. As formulated, NSP conflates topic prediction and coherence prediction in a single task.² However, topic prediction is easier to learn compared to coherence prediction, and also overlaps more with what is learned using the MLM loss.

我们推测NSP(Next Sentence Prediction)无效的主要原因在于,与MLM(Masked Language Modeling)相比,其任务难度不足。按照现有设计,NSP将主题预测和连贯性预测混合在单一任务中。然而,与连贯性预测相比,主题预测更容易学习,并且与使用MLM损失学习的内容重叠更多。

| Model | Variant | Parameters | Layers | Hidden | Embedding | Parameter-sharing |
|---|---|---|---|---|---|---|
| BERT | base | 108M | 12 | 768 | 768 | False |
| | large | 334M | 24 | 1024 | 1024 | False |
| ALBERT | base | 12M | 12 | 768 | 128 | True |
| | large | 18M | 24 | 1024 | 128 | True |
| | xlarge | 60M | 24 | 2048 | 128 | True |
| | xxlarge | 235M | 12 | 4096 | 128 | True |

Table 1: The configurations of the main BERT and ALBERT models analyzed in this paper.

表 1: 本文分析的主要 BERT 和 ALBERT 模型配置。

We maintain that inter-sentence modeling is an important aspect of language understanding, but we propose a loss based primarily on coherence. That is, for ALBERT, we use a sentence-order prediction (SOP) loss, which avoids topic prediction and instead focuses on modeling inter-sentence coherence. The SOP loss uses as positive examples the same technique as BERT (two consecutive segments from the same document), and as negative examples the same two consecutive segments but with their order swapped. This forces the model to learn finer-grained distinctions about discourse-level coherence properties. As we show in Sec. 4.6, it turns out that NSP cannot solve the SOP task at all (i.e., it ends up learning the easier topic-prediction signal, and performs at random-baseline level on the SOP task), while SOP can solve the NSP task to a reasonable degree, presumably based on analyzing misaligned coherence cues. As a result, ALBERT models consistently improve downstream task performance for multi-sentence encoding tasks.

我们坚持认为句子间建模是语言理解的重要方面,但提出了一种主要基于连贯性的损失函数。具体而言,ALBERT采用句子顺序预测(SOP)损失,避免主题预测而专注于句子间连贯性建模。该损失函数以BERT相同的技术构建正例(来自同一文档的两个连续段落),负例则保持相同段落但交换顺序。这种设计迫使模型学习语篇层面连贯性的细粒度区分特征。如第4.6节所示,NSP完全无法解决SOP任务(最终仅学习到更简单的主题预测信号,在SOP任务上表现与随机基线相当),而SOP能较好解决NSP任务,这可能是基于对错位连贯性线索的分析。因此,ALBERT模型在多句子编码任务中持续提升下游任务表现。
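The SOP variant changes only how negatives are built. In this sketch (again a hypothetical helper over toy documents with at least two segments each), both segments always come from the same document and a negative simply swaps their order, so topic cues alone cannot separate the two classes.

```python
import random
from typing import List, Tuple


def make_sop_example(docs: List[List[str]], rng: random.Random) -> Tuple[str, str, bool]:
    """Return (first, second, in_order); both segments always come from one document."""
    doc = rng.choice(docs)
    i = rng.randrange(len(doc) - 1)
    a, b = doc[i], doc[i + 1]
    if rng.random() < 0.5:
        return a, b, True   # positive: original order
    return b, a, False      # negative: the same two segments, swapped
```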

3.2 MODEL SETUP

3.2 模型设置

We present the differences between BERT and ALBERT models with comparable hyperparameter settings in Table 1. Due to the design choices discussed above, ALBERT models have much smaller parameter size compared to corresponding BERT models.

我们在表1中展示了具有可比超参数设置的BERT和ALBERT模型之间的差异。由于上述设计选择,ALBERT模型的参数量比对应的BERT模型小得多。

For example, ALBERT-large has about 18x fewer parameters than BERT-large, 18M versus 334M. An ALBERT-xlarge configuration with $H=2048$ has only 60M parameters, and an ALBERT-xxlarge configuration with $H=4096$ has 233M parameters, i.e., around 70% of BERT-large's parameters. Note that for ALBERT-xxlarge, we mainly report results on a 12-layer network because a 24-layer network (with the same configuration) obtains similar results but is computationally more expensive.

例如,ALBERT-large 的参数数量比 BERT-large 少约 18 倍,分别为 18M 和 334M。配置为 $H=2048$ 的 ALBERT-xlarge 仅有 60M 参数,而 $H=4096$ 的 ALBERT-xxlarge 则有 233M 参数,约为 BERT-large 参数的 70%。需要注意的是,对于 ALBERT-xxlarge,我们主要报告了 12 层网络的结果,因为 24 层网络(相同配置)虽然结果相似,但计算成本更高。
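As a rough check on these figures, the sketch below estimates total parameter counts from $V$, $L$, $H$ and $E$. It assumes a 30,000-token vocabulary (Sec. 4.1), 512 position embeddings, two segment types, biases and two LayerNorms in every layer, and a pooler on the [CLS] position; it omits the MLM and sentence-order output heads, and `approx_param_count` is a hypothetical name. Under these assumptions it gives about 334.6M for BERT-large and 17.7M for ALBERT-large, close to Table 1, but it comes out below the reported xlarge and xxlarge counts, so it should not be read as the authors' own accounting.

```python
def approx_param_count(V: int, L: int, H: int, E: int, shared: bool,
                       max_positions: int = 512, type_vocab: int = 2) -> int:
    """Rough parameter count for a BERT/ALBERT encoder (output heads omitted)."""
    # Token, position and segment embeddings of width E, plus an embedding LayerNorm.
    embeddings = (V + max_positions + type_vocab) * E + 2 * E
    # E -> H projection (weight + bias), only needed when E != H.
    projection = E * H + H if E != H else 0
    attention = 4 * (H * H + H)               # Q, K, V and output projections
    ffn = H * 4 * H + 4 * H + 4 * H * H + H   # H -> 4H -> H
    layer_norms = 2 * 2 * H                   # two LayerNorms, each with scale and bias
    per_layer = attention + ffn + layer_norms
    encoder = per_layer if shared else L * per_layer
    pooler = H * H + H                        # dense layer on the [CLS] position
    return embeddings + projection + encoder + pooler


for name, args in {
    "BERT-large": (30000, 24, 1024, 1024, False),
    "ALBERT-large": (30000, 24, 1024, 128, True),
}.items():
    print(name, round(approx_param_count(*args) / 1e6, 1), "M")
# BERT-large 334.6 M
# ALBERT-large 17.7 M
```

Nearly all of the reduction comes from storing one layer instead of 24; the factorized embedding removes a further 27M or so.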

This improvement in parameter efficiency is the most important advantage of ALBERT’s design choices. Before we can quantify this advantage, we need to introduce our experimental setup in more detail.

参数效率的提升是ALBERT设计决策中最重要的优势。在量化这一优势之前,我们需要更详细地介绍实验设置。

4 EXPERIMENTAL RESULTS

4 实验结果

4.1 EXPERIMENTAL SETUP

4.1 实验设置

To keep the comparison as meaningful as possible, we follow the BERT (Devlin et al., 2019) setup in using the BOOKCORPUS (Zhu et al., 2015) and English Wikipedia (Devlin et al., 2019) for pretraining baseline models. These two corpora consist of around 16GB of uncompressed text. We format our inputs as “[CLS] $x_{1}$ [SEP] $x_{2}$ [SEP]”, where $x_{1}=x_{1,1},x_{1,2},\ldots$ and $x_{2}=x_{2,1},x_{2,2},\ldots$ are two segments.³ We always limit the maximum input length to 512, and randomly generate input sequences shorter than 512 with a probability of 10%. Like BERT, we use a vocabulary size of 30,000, tokenized using SentencePiece (Kudo & Richardson, 2018) as in XLNet (Yang et al., 2019).

为了使对比尽可能有意义,我们遵循BERT (Devlin et al., 2019)的设置,使用BOOKCORPUS (Zhu et al., 2015)和英文维基百科(Devlin et al., 2019)作为预训练基准模型的数据集。这两个语料库包含约16GB未压缩文本。我们将输入格式化为"[CLS] $x_{1}$ [SEP] $x_{2}$ [SEP]",其中$x_{1}=x_{1,1},x_{1,2},\ldots$和$x_{2}=x_{2,1},x_{2,2},\ldots$是两个文本段。我们始终将最大输入长度限制为512,并以10%的概率随机生成短于512的输入序列。与BERT类似,我们使用30,000大小的词汇表,采用XLNet (Yang et al., 2019)中的SentencePiece (Kudo & Richardson, 2018)进行token化处理。
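The input format and the 10% short-sequence rule can be sketched as follows. The exact sampling procedure is not spelled out above; this sketch assumes a scheme modeled on BERT's public data-generation script (reserve three positions for [CLS] and the two [SEP] tokens, and with probability 0.1 draw a random shorter target length), and it truncates by repeatedly trimming the longer segment from the end, which is a simplification. All names are hypothetical.

```python
import random
from typing import List

MAX_SEQ_LEN = 512     # maximum input length from the text
SHORT_SEQ_PROB = 0.1  # probability of a deliberately shorter sequence


def sample_target_length(rng: random.Random,
                         max_len: int = MAX_SEQ_LEN,
                         short_prob: float = SHORT_SEQ_PROB) -> int:
    """Token budget for x1 + x2, leaving room for [CLS] and two [SEP] tokens."""
    budget = max_len - 3
    if rng.random() < short_prob:
        return rng.randint(2, budget)  # occasionally pick a random shorter length
    return budget


def format_pair(x1: List[str], x2: List[str], target_len: int) -> List[str]:
    """Build "[CLS] x1 [SEP] x2 [SEP]", trimming the longer segment until both fit."""
    a, b = list(x1), list(x2)
    while len(a) + len(b) > target_len:
        (a if len(a) >= len(b) else b).pop()  # drop a token from the end of the longer one
    return ["[CLS]"] + a + ["[SEP]"] + b + ["[SEP]"]
```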

We generate masked inputs for the MLM targets using $n$-gram masking (Joshi et al., 2019), with the length of each $n$-gram mask selected randomly. The probability for the length $n$ is given by

$$
p(n)=\frac{1/n}{\sum_{k=1}^{N}1/k}
$$

我们使用$n$-gram掩码(Joshi等人,2019)为MLM目标生成掩码输入,其中每个$n$-gram掩码的长度随机选择。长度$n$的概率由下式给出:

$$
p(n)=\frac{1/n}{\sum_{k=1}^{N}1/k}
$$

We set the maximum $n$-gram length (i.e., $N$) to 3 (i.e., the MLM target can consist of up to a 3-gram of complete words, such as “White House correspondents”).

我们将 $n$-gram 的最大长度(即 $N$)设为3 (即MLM目标最多可由3个完整单词组成,例如"White House correspondents")。
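With $N=3$ the normalizer is $1+\tfrac12+\tfrac13=\tfrac{11}{6}$, so $p(1)=6/11\approx 0.55$, $p(2)=3/11\approx 0.27$ and $p(3)=2/11\approx 0.18$: shorter spans are favored. The sketch below computes these probabilities exactly and samples a span length. It covers only the length distribution, not the choice of which words to mask, and the function names are illustrative.

```python
import random
from fractions import Fraction
from typing import Dict


def ngram_length_probs(max_n: int = 3) -> Dict[int, Fraction]:
    """p(n) = (1/n) / sum_{k=1}^{N} 1/k, computed exactly."""
    harmonic = sum(Fraction(1, k) for k in range(1, max_n + 1))
    return {n: Fraction(1, n) / harmonic for n in range(1, max_n + 1)}


def sample_ngram_length(rng: random.Random, max_n: int = 3) -> int:
    probs = ngram_length_probs(max_n)
    return rng.choices(list(probs), weights=[float(p) for p in probs.values()], k=1)[0]


print(ngram_length_probs(3))  # {1: Fraction(6, 11), 2: Fraction(3, 11), 3: Fraction(2, 11)}
```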

All the model updates use a batch size of 4096 and a LAMB optimizer with learning rate 0.00176 (You et al., 2019). We train all models for 125,000 steps unless otherwise specified. Training was done on Cloud TPU V3. The number of TPUs used for training ranged from 64 to 512, depending on model size.

所有模型更新均采用批量大小(batch size)为4096的配置,并使用学习率为0.00176的LAMB优化器 (You et al., 2019) 。除非另有说明,所有模型均训练125,000步。训练在Cloud TPU V3上完成,使用的TPU数量根据模型规模从64到512不等。
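For readers unfamiliar with LAMB, here is a single-step sketch for one layer's weights, following the algorithm in You et al. (2019): a bias-corrected Adam-style update is rescaled by the layer-wise trust ratio $\|w\|/\|u\|$. Only the learning rate 0.00176 comes from the text; $\beta_1=0.9$, $\beta_2=0.999$, $\epsilon=10^{-6}$ and zero weight decay are assumed defaults, and `lamb_step` is a hypothetical name. Because of the trust ratio, a layer's step size follows its weight norm rather than the raw gradient magnitude: for weights $[1, 2]$ and gradients $[0.1, 0.2]$, the first step moves each weight by about $0.00176\sqrt{2.5}\approx 0.0028$.

```python
import math
from typing import List, Tuple


def lamb_step(w: List[float], g: List[float], m: List[float], v: List[float], t: int,
              lr: float = 0.00176, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-6, weight_decay: float = 0.0
              ) -> Tuple[List[float], List[float], List[float]]:
    """One LAMB update for a single layer; returns (new weights, new m, new v)."""
    # Adam moment estimates with bias correction (t is the 1-based step count).
    m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
    v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
    m_hat = [mi / (1 - beta1 ** t) for mi in m]
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    update = [mh / (math.sqrt(vh) + eps) + weight_decay * wi
              for mh, vh, wi in zip(m_hat, v_hat, w)]
    # Layer-wise trust ratio ||w|| / ||update||, falling back to 1 if either norm is zero.
    w_norm = math.sqrt(sum(x * x for x in w))
    u_norm = math.sqrt(sum(x * x for x in update))
    trust = w_norm / u_norm if w_norm > 0 and u_norm > 0 else 1.0
    new_w = [wi - lr * trust * ui for wi, ui in zip(w, update)]
    return new_w, m, v
```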

The experimental setup described in this section is used for all of our own versions of BERT as well as ALBERT models, unless otherwise specified.

本节描述的实验设置适用于我们所有自研BERT版本及ALBERT模型,除非另有说明。

4.2 EVALUATION BENCHMARKS

4.2 评估基准

4.2.1 INTRINSIC EVALUATION

4.2.1 内在评估

To monitor the training progress, we create a development set based on the development sets from SQuAD and RACE using the same procedure as in Sec. 4.1. We report accuracies for both MLM and sentence classification tasks. Note that we only use this set to check how the model is converging; it has not been used in a way that would affect the performance of any downstream evaluation, such as via model selection.

为了监控训练进度,我们基于SQuAD和RACE的开发集按照第4.1节相同流程创建了一个开发集。我们同时报告了MLM(掩码语言建模)和句子分类任务的准确率。需注意的是,该数据集仅用于观察模型收敛情况,未以任何影响下游评估性能的方式(例如模型选择)被使用。

4.2.2 DOWNSTREAM EVALUATION

4.2.2 下游评估

Following Yang et al. (2019) and Liu et al. (2019), we evaluate our models on three popular benchmarks: The General Language Understanding Evaluation (GLUE) benchmark (Wang et al., 2018), two versions of the Stanford Question Answering Dataset (SQuAD; Rajpurkar et al., 2016; 2018), and the ReAding Comprehension from Examinations (RACE) dataset (Lai et al., 2017). For completeness, we provide descriptions of these benchmarks in Appendix A.3. As in Liu et al. (2019), we perform early stopping on the development sets, on which we report all comparisons except for our final comparisons based on the task leaderboards, for which we also report test set results. For GLUE datasets that have large variances on the dev set, we report the median over 5 runs.

遵循 Yang 等人 (2019) 和 Liu 等人 (2019) 的方法,我们在三个主流基准上评估模型:通用语言理解评估 (GLUE) 基准 (Wang 等人, 2018)、两个版本的斯坦福问答数据集 (SQuAD; Rajpurkar 等人, 2016; 2018),以及来自考试的阅读理解 (RACE) 数据集 (Lai 等人, 2017)。为完整起见,我们在附录 A.3 中提供了这些基准的描述。与 (Liu 等人, 2019) 相同,我们在开发集上采用早停策略,并基于该集合报告所有对比结果(最终基于任务排行榜的对比除外,此时我们同时报告测试集结果)。对于开发集方差较大的 GLUE 数据集,我们报告 5 次运行的中位数。

4.3 OVERALL COMPARISON BETWEEN BERT AND ALBERT

4.3 BERT与ALBERT的整体对比

We are now ready to quantify the impact of the design choices described in Sec. 3, specifically the ones around parameter efficiency. The improvement in parameter efficiency showcases the most important advantage of ALBERT’s design choices, as shown in Table 2: with only around 70% of BERT-large’s parameters, ALBERT-xxlarge achieves significant improvements over BERT-large, as measured by the difference in development set scores for several representative downstream tasks: SQuAD v1.1 (+1.9 F1), SQuAD v2.0 (+3.1 F1), MNLI (+1.4), SST-2 (+2.2), and RACE (+8.4), all in absolute points.

我们现在可以量化第3节所述设计选择的影响,特别是围绕参数效率的那些。如表2所示,参数效率的提升体现了ALBERT设计选择的最大优势:ALBERT-xxlarge仅用BERT-large约70%的参数,就在多个代表性下游任务的开发集分数上取得了显著提升,具体表现为SQuAD v1.1 (F1 +1.9)、SQuAD v2.0 (F1 +3.1)、MNLI (+1.4)、SST-2 (+2.2)和RACE (+8.4)(均为绝对分数差)。

Another interesting observation is the speed of data throughput at training time under the same training configuration (same number of TPUs). Because of less communication and fewer computations, ALBERT models have higher data throughput compared to their corresponding BERT models. If we use BERT-large as the baseline, we observe that ALBERT-large is about 1.7 times faster in iterating through the data while ALBERT-xxlarge is about 3 times slower because of the larger structure.

另一个有趣的观察是在相同训练配置(相同数量的TPU)下训练时的数据吞吐速度。由于通信更少、计算量更小,ALBERT模型相比对应的BERT模型具有更高的数据吞吐量。若以BERT-large为基准,我们观察到ALBERT-large的数据迭代速度约快1.7倍,而ALBERT-xxlarge由于结构更大则约慢3倍。

Next, we perform ablation experiments that quantify the individual contribution of each of the design choices for ALBERT.

接下来,我们进行消融实验以量化ALBERT各项设计选择的独立贡献。

4.4 FACTORIZED EMBEDDING PARAMETERIZATION

4.4 因子化嵌入参数化

Table 3 shows the effect of changing the vocabulary embedding size $E$ using an ALBERT-base configuration setting (see Table 1), using the same set of representative downstream tasks. Under the non-shared condition (BERT-style), larger embedding sizes give better performance, but not by much. Under the all-shared condition (ALBERT-style), an embedding of size 128 appears to be the best. Based on these results, we use an embedding size $E=128$ in all subsequent settings, as a necessary step to do further scaling.

表3展示了在ALBERT-base配置(见表1)下改变词汇嵌入维度$E$的效果,使用相同的代表性下游任务集。在非共享条件下(BERT风格),增大嵌入维度能提升性能,但提升幅度不大。在所有参数共享的条件下(ALBERT风格),128维的嵌入效果最佳。基于这些结果,我们在后续所有设置中采用嵌入维度 $E=128$ ,作为进一步扩展的必要步骤。

Table 2: Dev set results for models pretrained over BOOKCORPUS and Wikipedia for 125k steps. Here and everywhere else, the Avg column is computed by averaging the scores of the downstream tasks to its left (the two numbers of F1 and EM for each SQuAD are first averaged).

表 2: 在 BOOKCORPUS 和 Wikipedia 上预训练 125k 步的模型在开发集上的结果。此处及后续表格中,Avg 列是通过对左侧下游任务得分取平均计算得出 (每个 SQuAD 的 F1 和 EM 两个分数先取平均)。

| Model | Variant | Parameters | SQuAD1.1 (F1/EM) | SQuAD2.0 (F1/EM) | MNLI | SST-2 | RACE | Avg | Speedup |
|---|---|---|---|---|---|---|---|---|---|
| BERT | base | 108M | 90.4/83.2 | 80.4/77.6 | 84.5 | 92.8 | 68.2 | 82.3 | 4.7x |
| BERT | large | 334M | 92.2/85.5 | 85.0/82.2 | 86.6 | 93.0 | 73.9 | 85.2 | 1.0x |
| ALBERT | base | 12M | 89.3/82.3 | 80.0/77.1 | 81.6 | 90.3 | 64.0 | 80.1 | 5.6x |
| ALBERT | large | 18M | 90.6/83.9 | 82.3/79.4 | 83.5 | 91.7 | 68.5 | 82.4 | 1.7x |
| ALBERT | xlarge | 60M | 92.5/86.1 | 86.1/83.1 | 86.4 | 92.4 | 74.8 | 85.5 | 0.6x |
| ALBERT | xxlarge | 235M | 94.1/88.3 | 88.1/85.1 | 88.0 | 95.2 | 82.3 | 88.7 | 0.3x |
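The Avg column described in the Table 2 caption can be reproduced directly from the table rows. This small check (function name illustrative) averages each SQuAD F1/EM pair first and then takes the mean over the five tasks; it recovers the reported 85.2 for BERT-large and 88.7 for ALBERT-xxlarge.

```python
from statistics import mean
from typing import Tuple


def table_avg(squad11: Tuple[float, float], squad20: Tuple[float, float],
              mnli: float, sst2: float, race: float) -> float:
    """Avg as in the Table 2 caption: each SQuAD (F1, EM) pair is averaged first."""
    return mean([mean(squad11), mean(squad20), mnli, sst2, race])


bert_large = table_avg((92.2, 85.5), (85.0, 82.2), 86.6, 93.0, 73.9)
albert_xxlarge = table_avg((94.1, 88.3), (88.1, 85.1), 88.0, 95.2, 82.3)
print(round(bert_large, 1), round(albert_xxlarge, 1))  # 85.2 88.7
```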

Table 3: The effect of vocabulary embedding size on the performance of ALBERT-base.

表 3: 词嵌入维度对 ALBERT-base 模型性能的影响

| Model | E | Parameters | SQuAD1.1 (F1/EM) | SQuAD2.0 (F1/EM) | MNLI | SST-2 | RACE | Avg |
|---|---|---|---|---|---|---|---|---|
| ALBERT base not-shared | 64 | 87M | 89.9/82.9 | 80.1/77.8 | 82.9 | 91.5 | 66.7 | 81.3 |
| | 128 | 89M | 89.9/82.8 | 80.3/77.3 | 83.7 | 91.5 | 67.9 | 81.7 |
| | 256 | 93M | 90.2/83.2 | 80.3/77.4 | 84.1 | 91.9 | 67.3 | 81.8 |
| | 768 | 108M | 90.4/83.2 | 80.4/77.6 | 84.5 | 92.8 | 68.2 | 82.3 |
| ALBERT base all-shared | 64 | 10M | 88.7/81.4 | 77.5/74.8 | 80.8 | 89.4 | 63.5 | 79.0 |
| | 128 | 12M | 89.3/82.3 | 80.0/77.1 | 81.6 | 90.3 | 64.0 | 80.1 |
| | 256 | 16M | 88.8/81.5 | 79.1/76.3 | 81.5 | 90.3 | 63.4 | 79.6 |
| | 768 | 31M | 88.6/81.5 | 79.2/76.6 | 82.0 | 90.6 | 63.3 | 79.8 |

4.5 CROSS-LAYER PARAMETER SHARING

4.5 跨层参数共享

Table 4 presents experiments for various cross-layer parameter-sharing strategies, using an ALBERT-base configuration (Table 1) with two embedding sizes ($E=768$ and $E=128$). We compare the all-shared strategy (ALBERT-style), the not-shared strategy (BERT-style), and intermediate strategies in which only the attention parameters are shared (but not the FFN ones) or only the FFN parameters are shared (but not the attention ones).

表 4 展示了不同跨层参数共享策略的实验结果,采用 ALBERT-base 配置 (表 1) 并设置两种嵌入尺寸 $E=768$ 和 $E=128$。我们对比了全共享策略 (ALBERT 风格)、无共享策略 (BERT 风格) 以及两种中间策略:仅共享注意力参数 (不共享 FFN 参数) 和仅共享 FFN 参数 (不共享注意力参数)。

The all-shared strategy hurts performance under both conditions, but it is less severe for $E=128$ (-1.5 on Avg) compared to $E=768$ (-2.5 on Avg). In addition, most of the performance drop appears to come from sharing the FFN-layer parameters, while sharing the attention parameters results in no drop when $E=128$ (+0.1 on Avg), and a slight drop when $E=768$ (-0.7 on Avg).

全共享策略在两种情况下都会损害性能,但对于$E=128$(平均下降1.5)的影响比$E=768$(平均下降2.5)更轻微。此外,大部分性能下降似乎来自共享FFN层参数,而共享注意力参数在$E=128$时不会导致性能下降(平均+0.1),在$E=768$时仅轻微下降(平均-0.7)。

There are other strategies for sharing parameters across layers. For example, we can divide the $L$ layers into $N$ groups of size $M$, and each group of size $M$ shares parameters. Overall, our experimental results show that the smaller the group size $M$ is, the better the performance we get. However, decreasing the group size $M$ also dramatically increases the overall number of parameters. We choose the all-shared strategy as our default choice.

存在其他跨层共享参数的策略。例如,我们可以将 $L$ 层划分为 $N$ 个大小为 $M$ 的组,每个大小为 $M$ 的组共享参数。总体而言,实验结果表明,组大小 $M$ 越小,性能越好。然而,减小组大小 $M$ 也会显著增加总参数数量。我们选择全共享策略作为默认方案。
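The grouped strategy can be made concrete as a mapping from layers to parameter sets. The text does not say which layers form a group; this sketch assumes consecutive layers, counts only attention and FFN weights (LayerNorm ignored), and uses hypothetical names. With $L=12$ and $H=768$, $M=12$ reproduces the all-shared count and $M=1$ the not-shared count, and each halving of $M$ doubles the encoder parameters.

```python
from typing import List


def attention_plus_ffn_params(H: int) -> int:
    # Q/K/V/output projections (4H^2 + 4H) plus the H -> 4H -> H FFN (8H^2 + 5H).
    return 12 * H * H + 9 * H


def layer_to_group(num_layers: int, group_size: int) -> List[int]:
    """Parameter-set index for each layer when consecutive blocks of M layers share."""
    if group_size <= 0 or num_layers % group_size:
        raise ValueError("group_size must be a positive divisor of num_layers")
    return [i // group_size for i in range(num_layers)]


def grouped_encoder_params(num_layers: int, group_size: int, H: int) -> int:
    num_groups = len(set(layer_to_group(num_layers, group_size)))  # N = L / M
    return num_groups * attention_plus_ffn_params(H)


for M in (12, 6, 3, 1):
    print(M, f"{grouped_encoder_params(12, M, 768):,}")
```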

Table 4: The effect of cross-layer parameter-sharing strategies, ALBERT-base configuration.

表 4: 跨层参数共享策略的效果,ALBERT-base配置。

| Model | Sharing strategy | Parameters | SQuAD1.1 (F1/EM) | SQuAD2.0 (F1/EM) | MNLI | SST-2 | RACE | Avg |
|---|---|---|---|---|---|---|---|---|
| ALBERT base E=768 | all-shared | 31M | 88.6/81.5 | 79.2/76.6 | 82.0 | 90.6 | 63. |