Large Language Models Struggle to Learn Long-Tail Knowledge

Nikhil Kandpal¹, Haikang Deng¹, Adam Roberts², Eric Wallace³, Colin Raffel¹

Abstract

The Internet contains a wealth of knowledge, from the birthdays of historical figures to tutorials on how to code, all of which may be learned by language models. However, while certain pieces of information are ubiquitous on the web, others appear extremely rarely. In this paper, we study the relationship between the knowledge memorized by large language models and the information in pre-training datasets scraped from the web. In particular, we show that a language model’s ability to answer a fact-based question relates to how many documents associated with that question were seen during pre-training. We identify these relevant documents by entity linking pre-training datasets and counting documents that contain the same entities as a given question-answer pair. Our results demonstrate strong correlational and causal relationships between accuracy and relevant document count for numerous question answering datasets (e.g., TriviaQA), pre-training corpora (e.g., ROOTS), and model sizes (e.g., 176B parameters). Moreover, while larger models are better at learning long-tail knowledge, we estimate that today’s models must be scaled by many orders of magnitude to reach competitive QA performance on questions with little support in the pre-training data. Finally, we show that retrieval-augmentation can reduce the dependence on relevant pre-training information, presenting a promising approach for capturing the long tail.

1. Introduction

Large language models (LLMs) trained on text from the Internet capture many facts about the world, ranging from well-known factoids to esoteric domain-specific information. These models implicitly store knowledge in their parameters (Petroni et al., 2019; Roberts et al., 2020), and given the scale of today’s pre-training datasets and LLMs, one would hope that they can learn a huge amount of information from web-sourced text. However, not all of the knowledge on the Internet appears equally often—there is a long-tail of information that appears rarely or only once.

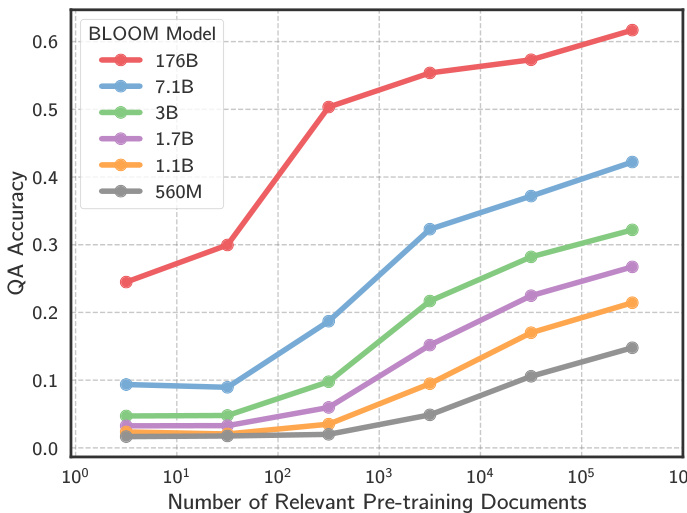

Figure 1. Language models struggle to capture the long-tail of information on the web. Above, we plot accuracy for the BLOOM model family on TriviaQA as a function of how many documents in the model’s pre-training data are relevant to each question.

In this work, we explore the relationship between the knowledge learned by an LLM and the information in its pre-training dataset. Specifically, we study how an LLM’s ability to answer a question relates to how many documents associated with that question were seen during pre-training. We focus on factoid QA datasets (Joshi et al., 2017; Kwiatkowski et al., 2019), which let us ground question-answer pairs in concrete subject-object co-occurrences. As an example, for the QA pair (In what city was the poet Dante born?, Florence), we consider documents in which the entities Dante and Florence co-occur to be highly relevant. To identify these entity co-occurrences, we apply a highly parallelized entity linking pipeline to trillions of tokens from datasets such as C4 (Raffel et al., 2020), The Pile (Gao et al., 2020), ROOTS (Laurençon et al., 2022), OpenWebText (Gokaslan & Cohen, 2019), and Wikipedia.

We observe a strong correlation between an LM’s ability to answer a question and the number of pre-training documents relevant to that question across numerous QA datasets, pre-training datasets, and model sizes (e.g., Figure 1). For example, the accuracy of BLOOM-176B (Scao et al., 2022) jumps from 25% to above 55% when the number of relevant pre-training documents increases from $10^{1}$ to $10^{4}$.

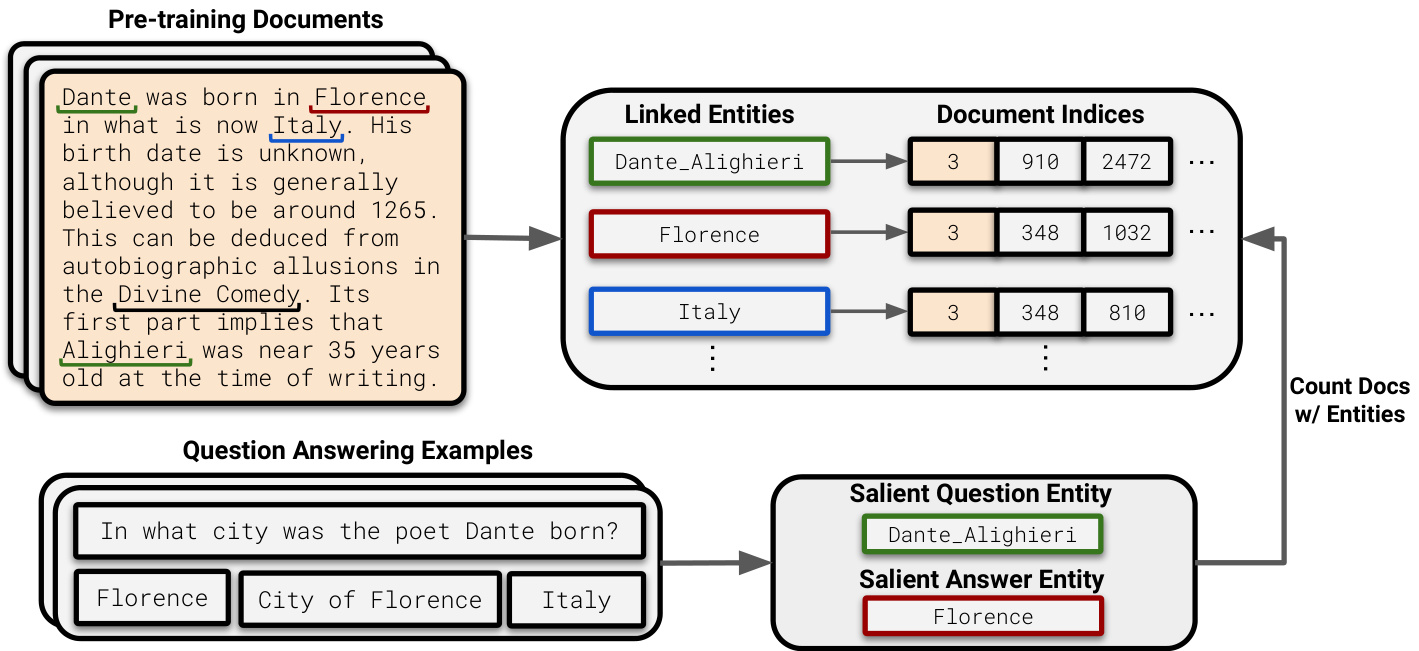

Figure 2. In our document counting pipeline, we first run entity linking on large pre-training datasets (top left) and store the set of the document indices in which each entity appears (top right). We then entity link downstream QA pairs and extract the salient question and answer entities (bottom). Finally, for each question we count the number of documents in which the question and answer entities co-occur.

We also conduct a counterfactual re-training experiment in which we train a 4.8B-parameter LM with and without certain documents. Model accuracy drops significantly on questions whose relevant documents were removed, which validates our entity linking pipeline and shows that the observed correlational trends are likely causal in nature.

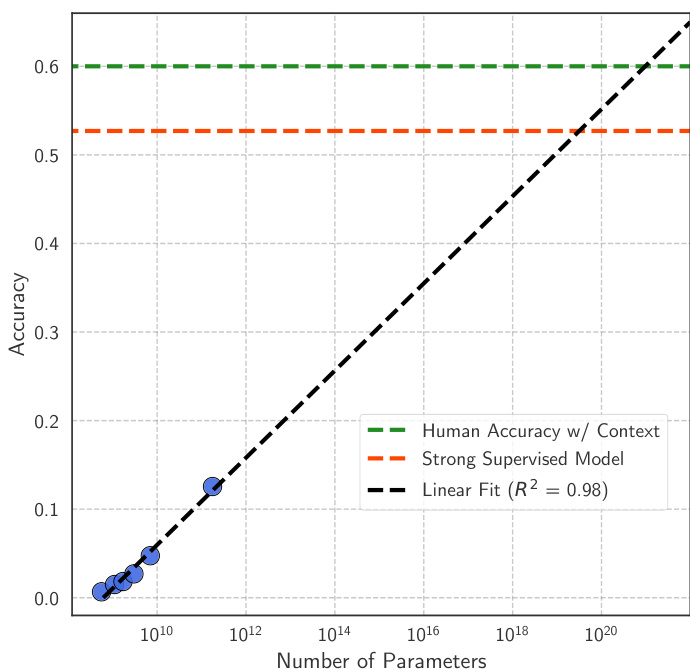

Finally, we analyze ways to better capture knowledge that rarely appears in the pre-training data: model scaling and retrieval-augmentation. For model scaling, we find a strong log-linear relationship between parameter count and QA accuracy. These trends show that while scaling up LMs improves knowledge learning, models would need to be scaled dramatically (e.g., to one quadrillion parameters) to achieve competitive QA accuracy on long-tail questions. Retrieval-augmented systems are more promising—when a retriever succeeds in finding a relevant document, it reduces an LLM’s need to have a large amount of relevant pretraining text. Nevertheless, retrieval systems themselves still exhibit a mild dependence on relevant document count.

Overall, our work is one of the first to study how LLM knowledge is influenced by pre-training data. To enable future research, we release our code as well as the entity data for ROOTS, The Pile, C4, OpenWebText, and Wikipedia at https://github.com/nkandpa2/long_tail_knowledge.

2. Identifying Relevant Pre-training Data

Background and Research Question

Numerous NLP tasks are knowledge-intensive: they require recalling and synthesizing facts from a knowledge source (e.g., Wikipedia or the web). Results on knowledge-intensive tasks have improved dramatically with LLMs, as these models have been shown to leverage the vast amounts of knowledge they learn from their pre-training corpora (Roberts et al., 2020; Petroni et al., 2019; De Cao et al., 2021). However, it remains unclear what kind of knowledge LMs actually capture. For example, do they simply learn “easy” facts that frequently appear in their pre-training data?

We study this question using closed-book QA evaluations (Roberts et al., 2020) of LLMs in the few-shot setting (Brown et al., 2020). Models are prompted with in-context training examples (QA pairs) and a test question, without any relevant background text. The goal of our work is to investigate the relationship between an LM’s ability to answer a question and the number of times information relevant to that question appears in the pre-training data.

Our Approach

The key challenge is to efficiently identify all of the documents that are relevant to a particular QA pair in pre-training datasets that are hundreds of gigabytes in size. To tackle this, we begin by identifying the salient entities that are contained in a question and its set of ground-truth answer aliases. We then identify relevant pre-training documents by searching for instances where the salient question entity and the answer entity co-occur.

For example, consider the question “In what city was the poet Dante born?” with the valid answers Florence, City of Florence, and Italy (see Figure 2). We extract the salient question and answer entities, Dante Alighieri and Florence, and count the documents that contain both entities.

Our approach is motivated by Elsahar et al. (2018), who show that when only the subject and object of a subject-object-relation triple co-occur in text, the resulting triple is often also present. In addition, we conduct human studies showing that our document counting pipeline selects relevant documents a majority of the time (Section 2.3). Moreover, we further validate our pipeline by training an LM without certain relevant documents and showing that this reduces accuracy on the associated questions (Section 3.2). Based on these findings, we refer to documents that contain the salient question and answer entities as relevant documents.

To apply the above method, we must entity link massive pre-training corpora, as well as downstream QA datasets. We accomplish this by building a parallelized pipeline for entity linking (Section 2.1), which we then customize for downstream QA datasets (Section 2.2).

2.1. Entity Linking Pre-training Data

We perform entity linking at scale using a massively distributed run of the DBpedia Spotlight Entity Linker (Mendes et al., 2011), which uses traditional entity linking methods to link entities to DBpedia or Wikidata IDs. We entity link the following pre-training datasets, chosen because they are used to train the LLMs we consider: C4, The Pile, ROOTS, OpenWebText, and Wikipedia.

For each document in these pre-training datasets, we record the linked entities in a data structure that enables quickly counting individual entity occurrences and entity co-occurrences. This pipeline took approximately 3 weeks to entity link 2.1TB of data on a 128-CPU-core machine.
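
Figure 2 describes the stored structure only as the set of document indices in which each entity appears. The sketch below is a minimal in-memory version of that idea, assuming each document has already been linked to a list of entity IDs; the function names and toy entity IDs are hypothetical, and an index over 2.1TB of text would in practice need to be sharded or kept on disk. Co-occurrence counts then fall out as a set intersection.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set

# Hypothetical in-memory index: entity ID -> set of document indices that contain it.
EntityIndex = Dict[str, Set[int]]


def build_entity_index(linked_docs: Iterable[List[str]]) -> EntityIndex:
    """Record, for every linked entity, the indices of the documents it appears in."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for doc_id, entities in enumerate(linked_docs):
        for entity in entities:
            index[entity].add(doc_id)
    return dict(index)


def count_occurrences(index: EntityIndex, entity: str) -> int:
    """Number of documents that mention `entity` at least once."""
    return len(index.get(entity, set()))


def count_cooccurrences(index: EntityIndex, entity_a: str, entity_b: str) -> int:
    """Number of documents that mention both entities (set intersection)."""
    return len(index.get(entity_a, set()) & index.get(entity_b, set()))


# Toy corpus: each document is already reduced to its list of linked entity IDs.
docs = [
    ["Dante_Alighieri", "Florence"],
    ["Florence"],
    ["Dante_Alighieri", "Florence", "Italy"],
    ["Italy"],
]
index = build_entity_index(docs)
print(count_occurrences(index, "Florence"))                       # 3
print(count_cooccurrences(index, "Dante_Alighieri", "Florence"))  # 2
```

Storing sets of document indices, rather than raw counts, is what makes pairwise co-occurrence queries cheap after the expensive linking pass is done once.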

2.2. Finding Entity Pairs in QA Data

We next entity link two standard open-domain QA datasets: Natural Questions (Kwiatkowski et al., 2019) and TriviaQA (Joshi et al., 2017). To expand our sample sizes, we use both the training and validation data, except for a small set of examples used for few-shot learning prompts.

We first run the DBpedia entity linker on each example. Because there can be multiple annotated answers for a single example, we concatenate the question and all valid answers, as this enabled more accurate entity linking. We use the most common entity found in the set of ground-truth answers as the salient answer entity. We then iterate over all entities found in the question and select the entity that co-occurs most with the salient answer entity in the pre-training data. If no entity is found in the question, the answer, or both, we discard the example. If the resulting number of relevant documents is zero, we also discard the example, as this is likely due to an entity linking error.
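
The selection rule above can be sketched as follows. This is an illustration rather than the authors’ code: it assumes the linker output on the concatenated text has already been split into entities from the question and entities from the answer aliases, and it uses a hypothetical entity-to-document-set index of the kind shown in Figure 2.

```python
from collections import Counter
from typing import Dict, List, Optional, Set, Tuple

EntityIndex = Dict[str, Set[int]]  # hypothetical: entity ID -> document indices


def select_salient_pair(
    question_entities: List[str],
    answer_entities: List[str],
    index: EntityIndex,
) -> Optional[Tuple[str, str]]:
    """Pick (question entity, answer entity) for one QA example, or None to discard it.

    `answer_entities` holds every entity linked in any ground-truth alias, with repeats.
    """
    # Discard examples where the linker found nothing in the question or the answers.
    if not question_entities or not answer_entities:
        return None

    # Salient answer entity: the most common entity across the answer aliases.
    answer_entity = Counter(answer_entities).most_common(1)[0][0]
    answer_docs = index.get(answer_entity, set())

    def cooccurrence(entity: str) -> int:
        return len(index.get(entity, set()) & answer_docs)

    # Salient question entity: the one that co-occurs most with the answer entity.
    question_entity = max(question_entities, key=cooccurrence)

    # Zero relevant documents most likely signals an entity linking error.
    if cooccurrence(question_entity) == 0:
        return None
    return question_entity, answer_entity


index = {"Dante_Alighieri": {0, 2}, "Florence": {0, 1, 2}, "Poet": {1}, "Italy": {2, 3}}
print(select_salient_pair(["Poet", "Dante_Alighieri"], ["Florence", "Florence", "Italy"], index))
# ('Dante_Alighieri', 'Florence')
```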

2.3. Human Evaluation of Document Counting Pipeline

Here, we conduct a human evaluation of our document identification pipeline. Note that a document can vary in the extent to which it is “relevant” to a particular QA pair. For instance, consider the QA pair (William Van Allan designed which New York building—the tallest brick building in the world in 1930?, Chrysler Building). The documents that we identify as relevant may (1) contain enough information to correctly answer the question, (2) contain information relevant to the question but not enough to correctly answer it, or (3) contain no relevant information. For example, a document that mentions that the Chrysler building was designed by William Van Allan, but not that it was the tallest brick building in 1930, would fall into the second category.

We randomly sampled 300 QA pairs from TriviaQA and selected one of their relevant documents at random. We then manually labeled each document into one of the three categories: 33% of documents contained enough information to answer the question, and an additional 27% contained some relevant information. Thus, our pipeline has ~60% precision at identifying relevant documents for TriviaQA.
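
The ~60% figure is the share of documents in the first two categories combined. The snippet below makes that arithmetic explicit; the label names are hypothetical, and the counts are back-derived from the rounded percentages rather than taken from the actual annotations.

```python
from collections import Counter
from typing import List, Tuple


def relevance_precision(labels: List[str]) -> Tuple[float, float]:
    """Return (strict, lenient) precision from per-document relevance labels.

    Labels follow the three categories above: "answerable", "partial", "irrelevant".
    Strict counts only answerable documents; lenient also counts partially relevant ones.
    """
    counts = Counter(labels)
    n = len(labels)
    strict = counts["answerable"] / n
    lenient = (counts["answerable"] + counts["partial"]) / n
    return strict, lenient


# Illustrative counts back-derived from the rounded percentages (33% and 27% of 300).
labels = ["answerable"] * 99 + ["partial"] * 81 + ["irrelevant"] * 120
print(relevance_precision(labels))  # (0.33, 0.6)
```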

Our pipeline is imperfect because (1) the entity linker sometimes misidentifies entities and (2) not all documents containing the salient question and answer entities are relevant. However, when applied to large-scale pre-training datasets, this pipeline is efficient and achieves enough precision and recall to observe correlational (Section 3.1) and causal (Section 3.2) relationships with QA performance.

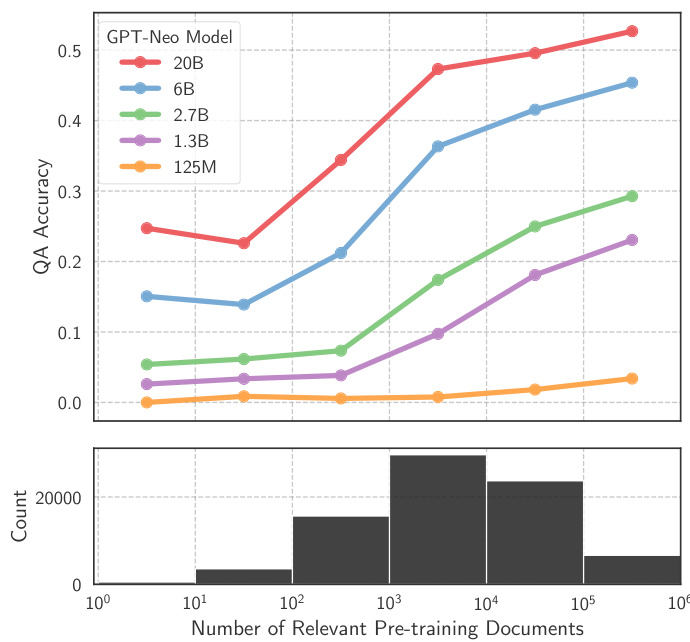

Figure 3. We plot accuracy on TriviaQA versus relevant document count for GPT-Neo. The trends match those seen for BLOOM (Figure 1). We also include a histogram that shows how many QA examples fall into each bucket; TriviaQA often asks about knowledge represented $10^{2}$ to $10^{5}$ times in the pre-training data.

3. LM Accuracy Depends on Relevant Document Count

In this section, we measure the relationship between an LLM’s ability to answer a question and the number of relevant documents in the pre-training corpus. We use popular Transformer decoder-only LMs (Vaswani et al., 2017) that span three orders of magnitude in size:

• GPT-Neo: The GPT-Neo, GPT-NeoX, and GPT-J LMs trained by EleutherAI on The Pile (Gao et al., 2020) that range in size from 125M to 20B parameters (Black et al., 2021; Wang & Komatsuzaki, 2021; Black et al., 2022). We refer to these models collectively as GPT-Neo models.

• BLOOM: Models trained by the BigScience initiative on the ROOTS dataset (Scao et al., 2022). The BLOOM models are multi-lingual; we analyze their English performance only. The models range in size from 560M to 176B parameters.

• GPT-3: Models trained by OpenAI that range in size from ≈350M (Ada) to ≈175B parameters (Davinci). Since the pre-training data for these models is not public, we estimate relevant document counts by scaling up the counts from OpenWebText to simulate a dataset the same size as GPT-3’s pre-training data. We recognize that there is uncertainty around these models’ pre-training data, their exact sizes, and whether they have been fine-tuned. We therefore report these results in the Appendix for readers to interpret with these sources of error in mind.
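
One reading of the GPT-3 estimate is that each OpenWebText count is multiplied by the ratio of the two corpus sizes. The text does not state the exact scaling rule or the token counts used, so the sketch below assumes simple proportional scaling, and the sizes in the usage line are hypothetical.

```python
from typing import Dict


def scale_counts(
    reference_counts: Dict[str, int],
    reference_size_tokens: float,
    target_size_tokens: float,
) -> Dict[str, float]:
    """Extrapolate relevant-document counts to a larger corpus of similar composition.

    Assumes counts grow linearly with corpus size, i.e. the target corpus behaves like
    a size-scaled copy of the reference corpus (here standing in for OpenWebText).
    """
    factor = target_size_tokens / reference_size_tokens
    return {qid: count * factor for qid, count in reference_counts.items()}


# Hypothetical sizes chosen only to make the arithmetic obvious (a 25x larger corpus).
print(scale_counts({"q1": 4, "q2": 0}, reference_size_tokens=1.0, target_size_tokens=25.0))
# {'q1': 100.0, 'q2': 0.0}
```

Proportional scaling preserves the relative ordering of questions, so it mainly shifts which log-spaced bin each question lands in.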

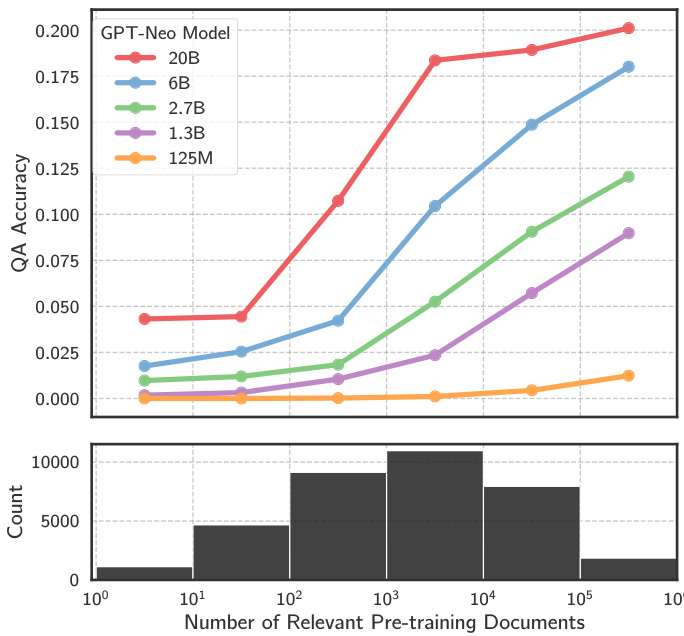

Figure 4. We plot accuracy on Natural Questions versus relevant document count for GPT-Neo. The trends match those in TriviaQA—model accuracy is highly dependent on fact count.

We use these LMs because (with the exception of GPT-3) they are the largest open-source models for which the pre-training data is publicly available. We focus on 4-shot evaluation, although we found that other numbers of in-context training examples produced similar trends. We use simple prompts consisting of templates of the form

Q: [In-Context Question 1]

A: [In-Context Answer 1]

...

Q: [In-Context Question n]

A: [In-Context Answer n]

Q: [Test Question]
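
A minimal sketch of how such a prompt could be assembled. The trailing "A:" line is an assumption (the template above ends at the test question), added so that a model decoding greedily until a newline writes its answer on that line; the example QA pair is hypothetical, and the 4-shot setting would pass four pairs.

```python
from typing import List, Tuple


def build_prompt(examples: List[Tuple[str, str]], test_question: str) -> str:
    """Format in-context QA pairs and a test question in the Q:/A: template."""
    lines = []
    for question, answer in examples:
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
    lines.append(f"Q: {test_question}")
    # Assumption: end with "A:" so the model writes its answer on the same line.
    lines.append("A:")
    return "\n".join(lines)


shots = [("Who wrote Hamlet?", "William Shakespeare")]  # hypothetical; 4-shot uses 4 pairs
print(build_prompt(shots, "In what city was the poet Dante born?"))
```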

We generate answers by greedy decoding until the models generate a newline character, and we evaluate answers using the standard Exact Match (EM) metric against the ground-truth answer set (Rajpurkar et al., 2016).
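
Greedy decoding itself requires a model and is not shown. The sketch below covers the two text-processing steps around it: cutting the generation at the first newline and scoring it with exact match against every ground-truth alias. The normalization (lowercasing, stripping punctuation and the articles a/an/the, collapsing whitespace) follows the SQuAD evaluation script of Rajpurkar et al. (2016); whether this paper applies exactly the same normalization is an assumption.

```python
import re
import string
from typing import Iterable


def first_line(generation: str) -> str:
    """Keep only the text generated before the first newline."""
    return generation.split("\n", 1)[0].strip()


def normalize_answer(text: str) -> str:
    """SQuAD-style normalization: lowercase, drop punctuation and articles, squash spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: Iterable[str]) -> bool:
    """True if the normalized prediction equals any normalized ground-truth alias."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(gold) for gold in gold_answers)


generation = " The City of Florence.\nQ: Who painted the Mona Lisa?"
print(exact_match(first_line(generation), ["Florence", "City of Florence", "Italy"]))  # True
```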

3.1. Correlational Analysis

We first evaluate the BLOOM and GPT-Neo model families on TriviaQA and plot their QA accuracies versus the number of relevant documents in Figures 1 and 3. For improved readability, we average the accuracy for QA pairs using log-spaced bins (e.g., the accuracy for all questions with 1 to 10 relevant documents, 10 to 100 relevant documents, etc.). Below each plot, we also include a histogram that shows how many QA examples fall into each bin. We trim the plots when bins contain fewer than 500 QA examples to avoid reporting accuracies for small sample sizes.
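
The binning and trimming can be sketched as below. The half-open bin convention [10^b, 10^(b+1)) is an assumption, since the text only says “1 to 10, 10 to 100”; the 500-example threshold comes from the text, and the toy data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def binned_accuracy(
    doc_counts: Sequence[int],
    correct: Sequence[bool],
    min_examples: int = 500,
) -> Dict[int, float]:
    """Average accuracy per log10 bin; bin b holds counts in [10**b, 10**(b + 1)).

    Bins with fewer than `min_examples` QA pairs are dropped, mirroring the trimmed plots.
    """
    bins: Dict[int, List[bool]] = defaultdict(list)
    for count, is_correct in zip(doc_counts, correct):
        if count < 1:
            continue  # zero-count examples were already discarded upstream
        exponent = len(str(count)) - 1  # exact floor(log10(count)) for positive ints
        bins[exponent].append(is_correct)
    return {
        b: sum(values) / len(values)
        for b, values in sorted(bins.items())
        if len(values) >= min_examples
    }


# Toy data: 500 questions with 5 docs, 500 with 50 docs, and only 3 with 5000 docs.
counts = [5] * 500 + [50] * 500 + [5000] * 3
correct = [True, False] * 250 + [True] * 500 + [False] * 3
print(binned_accuracy(counts, correct))  # {0: 0.5, 1: 1.0}
```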

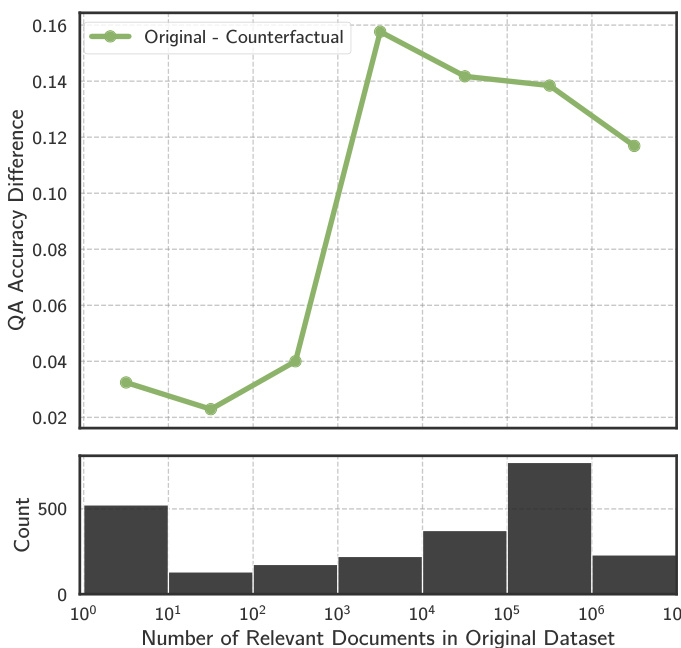

Figure 5. We run a counterfactual experiment in which we re-train an LM without certain documents. We take TriviaQA questions with different document counts and delete all of their relevant pre-training documents. The difference in accuracy between the original model and the re-trained LM (counterfactual) is high when the original number of relevant documents is large.

There is a strong correlation between question answering accuracy and relevant document count for all tested models. Correspondingly, when the number of relevant documents is low, models are quite inaccurate; for example, the accuracy of BLOOM-176B jumps from 25% to above 55% when the number of relevant documents increases from $10^{1}$ to $10^{4}$. Model size is also a major factor in knowledge learning: as the number of model parameters increases, QA performance improves substantially. For example, BLOOM-176B has over $4\times$ higher accuracy than BLOOM-560M on TriviaQA questions with more than $10^{5}$ relevant documents.

We repeat this experiment using the Natural Questions QA dataset and find similar trends for all model families (see Figure 4 for GPT-Neo, and Figures 10 and 11 in the Appendix for BLOOM and GPT-3 results).

Simpler Methods for Identifying Relevant Documents Are Less Effective

In the experiments above, we identify relevant documents by searching for co-occurrences of salient question and answer entities. To evaluate whether this process is necessary, we compare against two baseline document identification methods: counting documents that contain the salient question entity and counting documents that contain the salient answer entity (as done in Petroni et al., 2019).

We show in Figure 13 that all three document identification methods are correlated with QA accuracy. However, when only considering QA examples where the question and answer entities co-occur few (<5) times, the two baseline methods no longer correlate with QA accuracy. This indicates that counting documents with just the answer entity or just the question entity is insufficient for explaining why LMs are able to answer certain questions, which validates our definition of relevant documents as those that contain both the question entity and the answer entity.
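
For concreteness, the three counting methods and the low-co-occurrence subset can be sketched over a hypothetical entity-to-documents index; the names and toy data are illustrative, and the correlation test applied to the subset is not shown because the text does not specify it.

```python
from typing import Dict, List, Set, Tuple

EntityIndex = Dict[str, Set[int]]  # hypothetical: entity ID -> document indices


def relevance_counts(index: EntityIndex, question_entity: str, answer_entity: str) -> Dict[str, int]:
    """Counts for the two baselines and for the co-occurrence definition."""
    q_docs = index.get(question_entity, set())
    a_docs = index.get(answer_entity, set())
    return {
        "question_entity": len(q_docs),         # baseline 1
        "answer_entity": len(a_docs),           # baseline 2 (as in Petroni et al., 2019)
        "co_occurrence": len(q_docs & a_docs),  # relevant documents as defined here
    }


def low_cooccurrence_subset(
    pairs: List[Tuple[str, str]], index: EntityIndex, threshold: int = 5
) -> List[Tuple[str, str]]:
    """Keep (question entity, answer entity) pairs that co-occur fewer than `threshold` times."""
    return [
        (q, a) for q, a in pairs
        if relevance_counts(index, q, a)["co_occurrence"] < threshold
    ]


index = {"Q1": set(range(10)), "A1": set(range(5, 20)), "Q2": {0}, "A2": {0, 1}}
print(relevance_counts(index, "Q1", "A1"))
# {'question_entity': 10, 'answer_entity': 15, 'co_occurrence': 5}
print(low_cooccurrence_subset([("Q1", "A1"), ("Q2", "A2")], index))  # [('Q2', 'A2')]
```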

Humans Show Different Trends Than LMs

An alternative explanation for our results is that questions with lower document counts are simply “harder,” which causes the drop in model performance. We show that this is not the case by measuring human accuracy on Natural Questions. We use a leave-one-annotator-out metric: we take questions that are labeled by 5 different human raters (all of whom can see the necessary background text), hold out one of the raters, and use the other four as the ground-truth answer set. We plot human accuracy versus relevant document count in the top of Figure 7. Human accuracy is actually highest for the questions with few relevant documents, the opposite of the trend for models. We hypothesize that humans do better on questions with few relevant documents because (1) questions about rarer facts are more likely to be simple factoids than questions about common entities, and (2) the Wikipedia documents provided to the annotators are shorter for rarer entities, which makes reading comprehension easier and increases inter-annotator agreement.
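
The leave-one-annotator-out metric might be computed as follows for a single question. Two details are assumptions: every rater takes a turn as the held-out one and the results are averaged, and answers are compared after only lowercasing and whitespace normalization.

```python
from typing import List


def normalize(text: str) -> str:
    """Minimal normalization (an assumption): lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def leave_one_annotator_out_accuracy(annotations: List[str]) -> float:
    """Score each rater against the other raters' answers, averaged over held-out raters."""
    hits = 0
    for i, held_out in enumerate(annotations):
        references = annotations[:i] + annotations[i + 1:]
        if any(normalize(held_out) == normalize(ref) for ref in references):
            hits += 1
    return hits / len(annotations)


# Hypothetical answers from 5 raters for one question.
print(leave_one_annotator_out_accuracy(["Florence", "florence", "Rome", "Florence", "Pisa"]))  # 0.6
```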

3.2. Causal Analysis via Re-training

Our results thus far are correlational in nature: there may be unknown confounds that explain them away; for example, rarer questions may be more difficult for LMs for other reasons. Here we establish a causal relationship by removing certain documents from the training data and re-training the LM.

We first train a baseline 4.8-billion-parameter LM on C4, following the setup from Wang et al. (2022). We then measure the effect of deleting certain documents from the training set. For each log-scaled bin of relevant document count (e.g., $10^{0}$ to $10^{1}$ relevant documents, $10^{1}$ to $10^{2}$, ...), we sample 100 questions from TriviaQA and remove all relevant documents for those questions from C4. In total, this removes about 30% of C4. Finally, we train a “counterfactual” LM on this modified pre-training dataset and compare its performance to the baseline model. We train both the baseline model and the counterfactual model for a single epoch. Note that the counterfactual model was therefore trained for 30% fewer steps, which makes its overall performance slightly worse. To account for this, we only study performance on questions whose relevant documents were removed.
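
The deletion step can be sketched as below, assuming a hypothetical entity-to-documents index and a (question ID, question entity, answer entity) triple per question; the function names, the fixed random seed, and the toy data are illustrative. Re-training the 4.8B model on the filtered corpus is not shown.

```python
import random
from collections import defaultdict
from typing import Dict, List, Set, Tuple

EntityIndex = Dict[str, Set[int]]  # hypothetical: entity ID -> document indices


def select_documents_to_delete(
    questions: List[Tuple[str, str, str]],
    index: EntityIndex,
    per_bin: int = 100,
    seed: int = 0,
) -> Tuple[List[str], Set[int]]:
    """Sample up to `per_bin` questions per log10 count bin; return them and the docs to drop."""
    rng = random.Random(seed)
    bins: Dict[int, List[Tuple[str, Set[int]]]] = defaultdict(list)
    for qid, q_ent, a_ent in questions:
        relevant = index.get(q_ent, set()) & index.get(a_ent, set())
        if relevant:
            bins[len(str(len(relevant))) - 1].append((qid, relevant))

    sampled: List[str] = []
    to_delete: Set[int] = set()
    for exponent in sorted(bins):
        members = bins[exponent]
        for qid, relevant in rng.sample(members, min(per_bin, len(members))):
            sampled.append(qid)
            to_delete |= relevant  # remove every relevant document for this question
    return sampled, to_delete


def filter_corpus(num_docs: int, to_delete: Set[int]) -> List[int]:
    """Indices of documents kept in the counterfactual training set."""
    return [i for i in range(num_docs) if i not in to_delete]


index = {"A": {0, 1}, "B": {1, 2}, "C": {3}, "D": {3, 4}}
questions = [("q1", "A", "B"), ("q2", "C", "D"), ("q3", "A", "C")]
sampled, to_delete = select_documents_to_delete(questions, index, per_bin=5)
print(sorted(sampled), to_delete, filter_corpus(5, to_delete))  # ['q1', 'q2'] {1, 3} [0, 2, 4]
```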

Figure 5 shows the difference in performance between the two LMs on questions whose documents were removed. For questions with few relevant documents in the original C4 dataset, performance is poor for both the baseline and the counterfactual LM, i.e., their performance difference is small. However, for questions with many relevant documents, performance is significantly worse for the counterfactual LM. This suggests a causal link between the number of relevant documents and QA performance.

Figure 6. Scaling trends for fact learning. We plot BLOOM accuracy on rare instances from Natural Questions (<100 relevant docs) as a function of the log of the model size. Extrapolating from the empirical line of best fit, which approximates the trend well at $R^{2}=0.98$, implies that immensely large models would be necessary to get high accuracy.
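
The extrapolation in Figure 6 amounts to fitting accuracy as a linear function of log10(parameters) and solving the fitted line for a target accuracy. A minimal least-squares sketch follows; the data points are synthetic values on an exact line, not BLOOM results, so the printed size only illustrates the arithmetic.

```python
import math
from typing import Sequence, Tuple


def fit_log_linear(param_counts: Sequence[float], accuracies: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of accuracy = slope * log10(params) + intercept."""
    xs = [math.log10(p) for p in param_counts]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accuracies) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracies))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(param_counts: Sequence[float], accuracies: Sequence[float],
              slope: float, intercept: float) -> float:
    """Coefficient of determination of the fitted line."""
    xs = [math.log10(p) for p in param_counts]
    mean_y = sum(accuracies) / len(accuracies)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, accuracies))
    ss_tot = sum((y - mean_y) ** 2 for y in accuracies)
    return 1 - ss_res / ss_tot


def params_for_accuracy(target: float, slope: float, intercept: float) -> float:
    """Invert the fit: model size at which the line reaches `target` accuracy."""
    return 10 ** ((target - intercept) / slope)


# Synthetic points on an exact line (NOT BLOOM results): accuracy = 0.05 * log10(N) - 0.4.
sizes = [1e9, 1e10, 1e11]
accs = [0.05, 0.10, 0.15]
slope, intercept = fit_log_linear(sizes, accs)
print(round(math.log10(params_for_accuracy(0.5, slope, intercept)), 6))  # 18.0
```

Because accuracy grows only linearly in log(parameters), each fixed gain in accuracy costs a constant multiplicative factor in model size, which is why the extrapolated sizes become so large.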

4. Methods to Improve Rare Fact Learning

Thus far, we showed that LLMs have a strong dependence on relevant document count. Here, we investigate methods to mitigate this dependence: increasing data scale, increasing model scale, and adding an auxiliary retrieval module.

4.1. Can We Scale Up Datasets?

Today’s largest LLMs are pre-trained on hundreds of billions of tokens. One naive approach for improving accuracy on questions about less-prevalent knowledge is to collect larger quantities of data. Our results suggest that this would not significantly improve accuracy, as scaling datasets by moderate factors (e.g., 5x) usually results in small accuracy gains. An alternative idea would be to increase the diversity of the pre-training data. However, we also believe this would provide minimal benefit because many data sources are surprisingly correlated. Although each of the pre-training datasets considered was collected independently, the amount of supporting information they provide for different TriviaQA examples is highly consistent, as shown by the rank correlations between their relevant document counts in Table 1.
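
Spearman’s rank correlation, used in Table 1, is the Pearson correlation of the two variables’ ranks, with tied values given their average rank. A dependency-free sketch follows (`scipy.stats.spearmanr` computes the same statistic); the toy counts are hypothetical.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical relevant-document counts for five TriviaQA questions in two corpora.
pile_counts = [3, 40, 40, 1200, 90000]
c4_counts = [7, 55, 30, 2500, 150000]
print(round(spearman(pile_counts, c4_counts), 3))  # 0.975
```

Working on ranks makes the statistic insensitive to the very different absolute sizes of the corpora, which is what a comparison across The Pile, ROOTS, C4, OpenWebText, and Wikipedia needs.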

Table 1. Spearman rank correlations of the relevant document counts for TriviaQA examples in The Pile, ROOTS, C4, OpenWebText, and Wikipedia. Despite having different collection methodologies, these pre-training datasets are highly correlated in terms of how much information they contain related to different QA pairs.

| | ROOTS | Pile | C4 | OWT | Wiki |
|---|---|---|---|---|---|
| ROOTS | - | 0.97 | 0.97 | 0.94 | 0.87 |
| Pile | | - | 0.95 | 0.96 | 0.87 |
| C4 | | | - | 0.96 | 0.90 |
| OWT | | | | - | 0.91 |
| Wiki | | | | | - |
