END-TO-END ADVERSARIAL TEXT-TO-SPEECH

端到端对抗性文本到语音

Jeff Donahue* Sander Dieleman, Mikolaj Binkowski, Erich Elsen, Karen Simonyan* DeepMind {jeff donahue,sedielem,binek,eriche,simonyan}@google.com

Jeff Donahue* Sander Dieleman, Mikolaj Binkowski, Erich Elsen, Karen Simonyan* DeepMind {jeff donahue,sedielem,binek,eriche,simonyan}@google.com

ABSTRACT

摘要

Modern text-to-speech synthesis pipelines typically involve multiple processing stages, each of which is designed or learnt independently from the rest. In this work, we take on the challenging task of learning to synthesise speech from normalised text or phonemes in an end-to-end manner, resulting in models which operate directly on character or phoneme input sequences and produce raw speech audio outputs. Our proposed generator is feed-forward and thus efficient for both training and inference, using a differentiable alignment scheme based on token length prediction. It learns to produce high fidelity audio through a combination of adversarial feedback and prediction losses constraining the generated audio to roughly match the ground truth in terms of its total duration and mel-spec tr ogram. To allow the model to capture temporal variation in the generated audio, we employ soft dynamic time warping in the spec tr ogram-based prediction loss. The resulting model achieves a mean opinion score exceeding 4 on a 5 point scale, which is comparable to the state-of-the-art models relying on multi-stage training and additional supervision.1

现代文本转语音合成流程通常包含多个独立设计或训练的处理阶段。本研究致力于实现从规范化文本或音素端到端学习语音合成的挑战性任务,构建可直接处理字符或音素输入序列并输出原始语音音频的模型。我们提出的生成器采用前馈结构,基于token长度预测的可微分对齐方案,在训练和推理阶段均保持高效。通过结合对抗性反馈与预测损失(约束生成音频在总时长和梅尔频谱图方面与真实音频大致匹配),该模型能学习生成高保真音频。为捕捉生成音频的时间变化特性,我们在基于频谱图的预测损失中采用软动态时间规整技术。最终模型在5分量表上获得超过4分的平均意见得分,其性能可与依赖多阶段训练和额外监督的先进模型相媲美[20]。

1 INTRODUCTION

1 引言

A text-to-speech (TTS) system processes natural language text inputs to produce synthetic human-like speech outputs. Typical TTS pipelines consist of a number of stages trained or designed independently – e.g. text normalisation, aligned linguistic feat uris ation, mel-spec tr ogram synthesis, and raw audio waveform synthesis (Taylor, 2009). Although these pipelines have proven capable of realistic and high-fidelity speech synthesis and enjoy wide real-world use today, these modular approaches come with a number of drawbacks. They often require supervision at each stage, in some cases necessitating expensive “ground truth” annotations to guide the outputs of each stage, and sequential training of the stages. Further, they are unable to reap the full potential rewards of data-driven “end-to-end" learning widely observed in a number of prediction and synthesis task domains across machine learning.

文本转语音 (TTS) 系统通过处理自然语言文本输入来合成类人语音输出。典型的TTS流程由多个独立训练或设计的阶段组成,例如文本归一化、对齐的语言特征提取、梅尔频谱合成和原始音频波形合成 (Taylor, 2009)。尽管这些流程已被证明能够实现逼真且高保真的语音合成,并在当今现实世界中得到广泛应用,但这种模块化方法存在若干缺点。它们通常需要在每个阶段进行监督,某些情况下还需要昂贵的"真实标注"来指导每个阶段的输出,以及各阶段的顺序训练。此外,它们无法充分获益于数据驱动的"端到端"学习,而这种学习方式在机器学习领域的众多预测和合成任务中已被广泛证实其潜力。

In this work, we aim to simplify the TTS pipeline and take on the challenging task of synthesising speech from text or phonemes in an end-to-end manner. We propose EATS – End-to-end Adversarial Text-to-Speech – generative models for TTS trained adversarial ly (Goodfellow et al., 2014) that operate on either pure text or raw (temporally unaligned) phoneme input sequences, and produce raw speech waveforms as output. These models eliminate the typical intermediate bottlenecks present in most state-of-the-art TTS engines by maintaining learnt intermediate feature representations throughout the network.

在本工作中,我们旨在简化TTS流程,并挑战以端到端方式从文本或音素合成语音的艰巨任务。我们提出EATS——端到端对抗文本转语音(End-to-end Adversarial Text-to-Speech)——通过对抗训练(Goodfellow et al., 2014)的TTS生成模型,可直接处理纯文本或原始(时间未对齐的)音素输入序列,并输出原始语音波形。这些模型通过在整个网络中保持学习到的中间特征表示,消除了当前最先进TTS引擎中常见的中间瓶颈。

Our speech synthesis models are composed of two high-level submodules, detailed in Section 2. An aligner processes the raw input sequence and produces relatively low-frequency $(200~\mathrm{Hz})$ aligned features in its own learnt, abstract feature space. The features output by the aligner may be thought of as taking the place of the earlier stages of typical TTS pipelines – e.g., temporally aligned melspec tro grams or linguistic features. These features are then input to the decoder which upsamples the features from the aligner by 1D convolutions to produce $24\mathrm{kHz}$ audio waveforms.

我们的语音合成模型由两个高级子模块组成,详见第2节。对齐器 (aligner) 处理原始输入序列,并在其自学习的抽象特征空间中生成相对低频 $(200~\mathrm{Hz})$ 的对齐特征。对齐器输出的特征可视为替代了典型TTS流程的前期阶段(例如时间对齐的梅尔频谱图或语言学特征)。这些特征随后输入解码器,通过一维卷积将对齐器的特征上采样为 $24\mathrm{kHz}$ 的音频波形。

By carefully designing the aligner and guiding training by a combination of adversarial feedback and domain-specific loss functions, we demonstrate that a TTS system can be learnt nearly end-to-end, resulting in high-fidelity natural-sounding speech approaching the state-of-the-art TTS systems. Our main contributions include:

通过精心设计对齐器并结合对抗性反馈和领域特定损失函数来指导训练,我们证明可以近乎端到端地学习TTS系统,从而生成接近最先进TTS系统的高保真自然语音。我们的主要贡献包括:

2 METHOD

2 方法

Our goal is to learn a neural network (the generator) which maps an input sequence of characters or phonemes to raw audio at $24~\mathrm{kHz}$ . Beyond the vastly different lengths of the input and output signals, this task is also challenging because the input and output are not aligned, i.e. it is not known beforehand which output tokens each input token will correspond to. To address these challenges, we divide the generator into two blocks: (i) the aligner, which maps the unaligned input sequence to a representation which is aligned with the output, but has a lower sample rate of $200\mathrm{Hz}$ ; and (ii) the decoder, which upsamples the aligner’s output to the full audio frequency. The entire generator architecture is differentiable, and is trained end to end. Importantly, it is also a feed-forward convolutional network, which makes it well-suited for applications where fast batched inference is important: our EATS implementation generates speech at a speed of $200\times$ realtime on a single NVIDIA V100 GPU (see Appendix A and Table 3 for details). It is illustrated in Figure 1.

我们的目标是训练一个神经网络(生成器),将输入的字符或音素序列映射为24 kHz的原始音频。除了输入和输出信号长度差异巨大之外,这项任务的挑战性还在于输入输出未对齐,即无法预先知道每个输入token对应哪些输出token。为解决这些问题,我们将生成器分为两个模块:(i) 对齐器(aligner),将未对齐的输入序列映射为与输出对齐的200Hz低采样率表征;(ii) 解码器(decoder),将对齐器的输出上采样至完整音频频率。整个生成器架构采用可微分设计并端到端训练。值得注意的是,它还是前馈卷积网络结构,特别适合需要快速批量推理的应用场景:我们的EATS实现方案在单个NVIDIA V100 GPU上能达到200倍实时语音生成速度(详见附录A和表3)。其架构如图1所示。

The generator is inspired by GAN-TTS (Binkowski et al., 2020), a text-to-speech generative adversarial network operating on aligned linguistic features. We employ the GAN-TTS generator as the decoder in our model, but instead of upsampling pre-computed linguistic features, its input comes from the aligner block. We make it speaker-conditional by feeding in a speaker embedding s alongside the latent vector $\mathbf{z}$ , to enable training on a larger dataset with recordings from multiple speakers. We also adopt the multiple random window disc rim in at or s (RWDs) from GAN-TTS, which have been proven effective for adversarial raw waveform modelling, and we preprocess real audio input by applying a simple $\mu$ -law transform. Hence, the generator is trained to produce audio in the $\mu$ -law domain and we apply the inverse transformation to its outputs when sampling.

生成器受到GAN-TTS (Binkowski等人, 2020)的启发,这是一种基于对齐语言学特征的文本到语音生成对抗网络。我们采用GAN-TTS的生成器作为模型中的解码器,但其输入并非来自预计算的语言学特征上采样,而是来自对齐模块。我们通过同时输入说话人嵌入向量s和潜在向量$\mathbf{z}$使其具备说话人条件性,从而支持使用多说话人录音的大规模数据集进行训练。我们还沿用了GAN-TTS中经过验证对对抗性原始波形建模有效的多随机窗口判别器(RWDs),并对真实音频输入应用简单的$\mu$律变换进行预处理。因此,生成器被训练用于产生$\mu$律域内的音频,在采样时我们对其输出应用逆变换。

The loss function we use to train the generator is as follows:

我们用于训练生成器的损失函数如下:

$$

\mathcal{L}_ {G}=\mathcal{L}_ {G,\mathrm{adv}}+\lambda_{\mathrm{pred}}\cdot\mathcal{L}_ {\mathrm{pred}}^{\prime\prime}+\lambda_{\mathrm{length}}\cdot\mathcal{L}_{\mathrm{length}},

$$

$$

\mathcal{L}_ {G}=\mathcal{L}_ {G,\mathrm{adv}}+\lambda_{\mathrm{pred}}\cdot\mathcal{L}_ {\mathrm{pred}}^{\prime\prime}+\lambda_{\mathrm{length}}\cdot\mathcal{L}_{\mathrm{length}},

$$

where $\mathcal{L}_ {G,\mathrm{adv}}$ is the adversarial loss, linear in the disc rim in at or s’ outputs, paired with the hinge loss (Lim & Ye, 2017; Tran et al., 2017) used as the disc rim in at or s’ objective, as used in GAN TTS (Binkowski et al., 2020). The use of an adversarial (Goodfellow et al., 2014) loss is an advantage of our approach, as this setup allows for efficient feed-forward training and inference, and such losses tend to be mode-seeking in practice, a useful behaviour in a strongly conditioned setting where realism is an important design goal, as in the case of text-to-speech. In the remainder of this section, we describe the aligner network and the auxiliary prediction $(\mathcal{L}_ {\mathrm{{pred}}}^{\prime\prime})$ and length $(\mathcal{L}_{\mathrm{length}})$ losses in detail, and recap the components which were adopted from GAN-TTS.

其中 $\mathcal{L}_ {G,\mathrm{adv}}$ 是对抗损失,与判别器输出呈线性关系,配合铰链损失 (Lim & Ye, 2017; Tran et al., 2017) 作为判别器目标函数,如GAN TTS (Binkowski et al., 2020) 所用。采用对抗损失 (Goodfellow et al., 2014) 是我们方法的优势,这种设置能实现高效的前馈训练与推理,且实践中此类损失往往具有模式寻求特性,在强约束条件下(如以逼真度为重要目标的文本转语音任务)尤为有益。本节后续将详细描述对齐网络、辅助预测损失 $(\mathcal{L}_ {\mathrm{{pred}}}^{\prime\prime})$ 和时长损失 $(\mathcal{L}_{\mathrm{length}})$,并回顾从GAN-TTS继承的组件。

2.1 ALIGNER

2.1 ALIGNER

Given a token sequence $\mathbf{x}=(x_{1},\ldots,x_{N})$ of length $N$ , we first compute token representations $\mathbf{h}=f(\mathbf{x},\mathbf{z},\mathbf{s})$ , where $f$ is a stack of dilated convolutions (van den Oord et al., 2016) interspersed with batch normalisation (Ioffe & Szegedy, 2015) and ReLU activation s. The latents $\mathbf{z}$ and speaker embedding s modulate the scale and shift parameters of the batch normalisation layers (Dumoulin et al., 2017; De Vries et al., 2017). We then predict the length for each input token individually: $l_{n}=g(h_{n},\mathbf{z},\mathbf{s})$ , where $g$ is an MLP. We use a ReLU non linearity at the output to ensure that the predicted lengths are non-negative. We can then find the predicted token end positions as a cumulative sum of the token lengths: $\begin{array}{r}{e_{n}=\sum_{m=1}^{n}l_{m}}\end{array}$ , and the token centre positions as $\begin{array}{r}{c_{n}=e_{n}-\frac{1}{2}l_{n}}\end{array}$ . Based on these predicted positions, w e can interpolate the token representations into an audio-aligned representation at $200\mathrm{Hz}$ , $\mathbf{a}=(a_{1},\dots,a_{S})$ , where $S=\left\lceil e_{N}\right\rceil$ is the total number of output time steps. To compute $a_{t}$ , we obtain interpolation weights for the token representations $h_{n}$ using a softmax over the squared distance between $t$ and $c_{n}$ , scaled by a temperature parameter $\sigma^{2}$ , which we set to 10.0 (i.e. a Gaussian kernel):

给定一个长度为 $N$ 的token序列 $\mathbf{x}=(x_{1},\ldots,x_{N})$,我们首先计算token表示 $\mathbf{h}=f(\mathbf{x},\mathbf{z},\mathbf{s})$,其中 $f$ 是由扩张卷积 (van den Oord et al., 2016) 堆叠而成,中间穿插了批量归一化 (Ioffe & Szegedy, 2015) 和ReLU激活函数。潜在变量 $\mathbf{z}$ 和说话人嵌入 $\mathbf{s}$ 调节批量归一化层的缩放和偏移参数 (Dumoulin et al., 2017; De Vries et al., 2017)。然后我们单独预测每个输入token的长度:$l_{n}=g(h_{n},\mathbf{z},\mathbf{s})$,其中 $g$ 是一个多层感知机 (MLP)。我们在输出端使用ReLU非线性函数以确保预测的长度为非负数。接着,我们可以通过token长度的累加和计算预测的token结束位置:$\begin{array}{r}{e_{n}=\sum_{m=1}^{n}l_{m}}\end{array}$,以及token中心位置为 $\begin{array}{r}{c_{n}=e_{n}-\frac{1}{2}l_{n}}\end{array}$。基于这些预测的位置,我们可以将token表示插值为音频对齐的表示 $\mathbf{a}=(a_{1},\dots,a_{S})$,频率为 $200\mathrm{Hz}$,其中 $S=\left\lceil e_{N}\right\rceil$ 是输出时间步的总数。为了计算 $a_{t}$,我们使用一个关于 $t$ 和 $c_{n}$ 之间平方距离的softmax来获得token表示 $h_{n}$ 的插值权重,并通过温度参数 $\sigma^{2}$ 进行缩放,我们将其设置为10.0(即高斯核)。

$$

w_{t}^{n}=\frac{\exp{\left(-\sigma^{-2}(t-c_{n})^{2}\right)}}{\sum_{m=1}^{N}\exp{\left(-\sigma^{-2}(t-c_{m})^{2}\right)}}.

$$

$$

w_{t}^{n}=\frac{\exp{\left(-\sigma^{-2}(t-c_{n})^{2}\right)}}{\sum_{m=1}^{N}\exp{\left(-\sigma^{-2}(t-c_{m})^{2}\right)}}.

$$

Using these weights, we can then compute $\begin{array}{r}{a_{t}=\sum_{n=1}^{N}w_{t}^{n}h_{n}}\end{array}$ nN=1 wtn hn, which amounts to non-uniform interpolation. By predicting token lengths and ob taining positions using cumulative summation, instead of predicting positions directly, we implicitly enforce monotonic it y of the alignment. Note that tokens which have a non-monotonic effect on prosody, such as punctuation, can still affect the entire utterance thanks to the stack of dilated convolutions $f$ , whose receptive field is large enough to allow for propagation of information across the entire token sequence. The convolutions also ensure generalisation across different sequence lengths. Appendix B includes pseudocode for the aligner.

利用这些权重,我们可以计算 $\begin{array}{r}{a_{t}=\sum_{n=1}^{N}w_{t}^{n}h_{n}}\end{array}$ ,这相当于非均匀插值。通过预测token长度并使用累加求和获取位置(而非直接预测位置),我们隐式地强制对齐的单调性。需注意标点符号等对韵律有非单调性影响的token,仍能借助扩张卷积堆栈 $f$ 的足够大感受野,将信息传播至整个token序列从而影响整段话语。该卷积结构还确保了不同序列长度间的泛化能力。附录B包含对齐器的伪代码实现。

2.2 WINDOWED GENERATOR TRAINING

2.2 窗口化生成器训练

Training examples vary widely in length, from about 1 to 20 seconds. We cannot pad all sequences to a maximal length during training, as this would be wasteful and prohibitively expensive: 20 seconds of audio at $24\mathrm{kHz}$ correspond to 480,000 timesteps, which results in high memory requirements. Instead, we randomly extract a 2 second window from each example, which we will refer to as a training window, by uniformly sampling a random offset $\eta$ . The aligner produces a $200:\mathrm{Hz}$ audio-aligned representation for this window, which is then fed to the decoder (see Figure 1). Note that we only need to compute $a_{t}$ for time steps $t$ that fall within the sampled window, but we do have to compute the predicted token lengths $l_{n}$ for the entire input sequence. During evaluation, we simply produce the audio-aligned representation for the full utterance and run the decoder on it, which is possible because it is fully convolutional.

训练样本的长度差异很大,从约1秒到20秒不等。我们无法在训练时将全部序列填充至最大长度,因为这会浪费资源且成本过高:24kHz采样率下20秒音频对应480,000个时间步,将导致极高的内存需求。为此,我们通过均匀采样随机偏移量η,从每个样本中随机提取2秒的窗口(称为训练窗口)。对齐器为该窗口生成200Hz的音频对齐表征,随后输入解码器(见图1)。需要注意的是,我们只需计算采样窗口内时间步t对应的a_t,但仍需计算整个输入序列的预测token长度l_n。在评估阶段,我们直接为完整话语生成音频对齐表征并运行解码器,这得益于其全卷积架构的特性。

2.3 ADVERSARIAL DISC RIM IN AT OR S

2.3 对抗判别器

Figure 1: A diagram of the generator, including the monotonic interpolation-based aligner. $z$ and $c h$ denote the latent Gaussian vector and the number of output channels, respectively. During training, audio windows have a fixed length of 2 seconds and are generated from the conditioning text using random offsets $\eta$ and predicted phoneme lengths; the shaded areas in the logits grid and waveform are not synthesised. For inference (sampling), we set $\eta=0$ . In the No Phonemes ablation, the phonemizer is skipped and the character sequence is fed directly into the aligner.

图 1: 生成器结构示意图,包含基于单调插值的对齐器。$z$ 和 $c h$ 分别表示潜在高斯向量和输出通道数。训练时,音频窗口固定为2秒长度,通过随机偏移量 $\eta$ 和预测音素时长从条件文本生成;对数概率网格和波形中的阴影区域不参与合成。推理(采样)时设置 $\eta=0$。在无音素消融实验中,跳过音素转换器直接将字符序列输入对齐器。

Random window disc rim in at or s. We use an ensemble of random window disc rim in at or s (RWDs) adopted from GAN-TTS. Each RWD operates on audio fragments of different lengths, randomly sampled from the training window. We use five RWDs with window sizes 240, 480, 960, 1920 and 3600. This enables each RWD to operate at a different resolution. Note that 3600 samples at $24~\mathrm{kHz}$ corresponds to $150~\mathrm{ms}$ of audio, so all RWDs operate on short timescales. All RWDs in our model are unconditional with respect to text: they cannot access the text sequence or the aligner output. (GAN-TTS uses 10 RWDs, including 5 conditioned on linguistic features which we omit.) They are, however, conditioned on the speaker, via projection embedding (Miyato & Koyama, 2018).

随机窗口判别器 (RWD) 集合。我们采用了来自GAN-TTS的随机窗口判别器 (RWD) 集合。每个RWD作用于从训练窗口中随机采样的不同长度音频片段,使用了五种窗口尺寸分别为240、480、960、1920和3600的RWD。这使得每个RWD能在不同分辨率下运作。需要注意的是,在24kHz采样率下,3600个样本对应150ms的音频片段,因此所有RWD都在短时域上工作。本模型中所有RWD都不依赖文本条件:它们无法访问文本序列或对齐器输出 (GAN-TTS使用了10个RWD,其中5个依赖语言特征的条件判别器被我们省略) 。不过,通过投影嵌入 (Miyato & Koyama, 2018) ,这些判别器具备说话人条件感知能力。

Spec tr ogram disc rim in at or. We use an additional disc rim in at or which operates on the full training window in the spec tr ogram domain. We extract log-scaled mel-spec tro grams from the audio signals and use the BigGAN-deep architecture (Brock et al., 2018), essentially treating the spec tro grams as

频谱图判别器。我们使用一个额外的判别器,该判别器在频谱图域中对完整训练窗口进行操作。我们从音频信号中提取对数缩放的梅尔频谱图,并采用BigGAN-deep架构 (Brock et al., 2018),本质上将频谱图视为

2.4 SPEC TR OGRAM PREDICTION LOSS

2.4 SPEC TR OGRAM PREDICTION LOSS

In preliminary experiments, we discovered that adversarial feedback is insufficient to learn alignment. At the start of training, the aligner does not produce an accurate alignment, so the information in the input tokens is incorrectly temporally distributed. This encourages the decoder to ignore the aligner output. The unconditional disc rim in at or s provide no useful learning signal to correct this. If we want to use conditional disc rim in at or s instead, we face a different problem: we do not have aligned ground truth. Conditional disc rim in at or s also need an aligner module, which cannot function correctly at the start of training, effectively turning them into unconditional disc rim in at or s. Although it should be possible in theory to train the disc rim in at or s’ aligner modules adversarial ly, we find that this does not work in practice, and training gets stuck.

在初步实验中,我们发现对抗性反馈不足以学习对齐。训练开始时,对齐器无法生成准确的对齐结果,导致输入token中的信息在时间分布上出现错误。这会促使解码器忽略对齐器输出。此时无条件判别器无法提供有效的学习信号来纠正此问题。若改用条件判别器,则面临另一个问题:缺乏已对齐的真实数据。条件判别器同样需要对齐模块,而该模块在训练初期无法正常工作,实际上使它们退化为无条件判别器。尽管理论上可以通过对抗方式训练判别器的对齐模块,但实际测试表明该方法无效,训练会陷入停滞。

Instead, we propose to guide learning by using an explicit prediction loss in the spec tr ogram domain: we minimise the $L_{1}$ loss between the log-scaled mel-spec tro grams of the generator output, and the corresponding ground truth training window. This helps training to take off, and renders conditional disc rim in at or s unnecessary, simplifying the model. Let $S_{\mathrm{gen}}$ be the spec tr ogram of the generated audio, $S_{\mathrm{gt}}$ the spec tr ogram of the corresponding ground truth, and $S[t,f]$ the log-scaled magnitude at time step $t$ and mel-frequency bin $f$ . Then the prediction loss is:

相反,我们提出通过在频谱图 (spectrogram) 域使用显式预测损失来指导学习:我们最小化生成器输出的对数梅尔频谱与对应真实训练窗口之间的 $L_{1}$ 损失。这有助于训练启动,并使条件判别器变得不必要,从而简化模型。设 $S_{\mathrm{gen}}$ 为生成音频的频谱图,$S_{\mathrm{gt}}$ 为对应真实音频的频谱图,$S[t,f]$ 表示时间步 $t$ 和梅尔频率区间 $f$ 处的对数幅度值,则预测损失为:

$$

\begin{array}{r}{\mathcal{L}_ {\mathrm{pred}}=\frac{1}{F}\sum_{t=1}^{T}\sum_{f=1}^{F}|S_{\mathrm{gen}}[t,f]-S_{\mathrm{gt}}[t,f]|.}\end{array}

$$

$$

\begin{array}{r}{\mathcal{L}_ {\mathrm{pred}}=\frac{1}{F}\sum_{t=1}^{T}\sum_{f=1}^{F}|S_{\mathrm{gen}}[t,f]-S_{\mathrm{gt}}[t,f]|.}\end{array}

$$

$T$ and $F$ are the total number of time steps and mel-frequency bins respectively. Computing the prediction loss in the spec tr ogram domain, rather than the time domain, has the advantage of increased invariance to phase differences between the generated and ground truth signals, which are not perceptual ly salient. Seeing as the spec tr ogram extraction operation has several hyper parameters and its implementation is not standardised, we provide the code we used for this in Appendix D. We applied a small amount of jitter (by up to $\pm60$ samples at $24\mathrm{~kHz}$ ) to the ground truth waveform before computing $S_{\mathrm{gt}}$ , which helped to reduce artifacts in the generated audio.

$T$ 和 $F$ 分别表示总时间步数和梅尔频率区间数。在频谱图 (spectrogram) 域而非时域计算预测损失的优势在于,其对生成信号与真实信号之间的相位差异具有更高的不变性,而这些相位差异在感知上并不显著。鉴于频谱图提取操作涉及多个超参数且实现方式尚未标准化,我们在附录 D 中提供了所使用的代码。在计算 $S_{\mathrm{gt}}$ 前,我们对真实波形施加了小幅抖动(在 $24\mathrm{~kHz}$ 采样率下最多 $\pm60$ 个样本),这有助于减少生成音频中的伪影。

The inability to learn alignment from adversarial feedback alone is worth expanding on: likelihoodbased auto regressive models have no issues learning alignment, because they are able to benefit from teacher forcing (Williams & Zipser, 1989) during training: the model is trained to perform next step prediction on each sequence step, given the preceding ground truth, and it is expected to infer alignment only one step at a time. This is not compatible with feed-forward adversarial models however, so the prediction loss is necessary to bootstrap alignment learning for our model.

仅从对抗性反馈中学习对齐能力的不足值得深入探讨:基于似然的自动回归模型在学习对齐时没有困难,因为它们能在训练过程中受益于教师强制(Williams & Zipser, 1989) ——模型被训练为在给定前序真实值的情况下执行下一步预测,且每次只需推断单步对齐。然而这与前馈对抗模型不兼容,因此预测损失对于引导我们模型的对齐学习是必要的。

Note that although we make use of mel-spec tro grams for training in $\mathcal{L}_{\mathrm{pred}}$ (and to compute the inputs for the spec tr ogram disc rim in at or, Section 2.3), the generator itself does not produce spec tro grams as part of the generation process. Rather, its outputs are raw waveforms, and we convert these waveforms to spec tro grams only for training (back propagating gradients through the waveform to mel-spec tr ogram conversion operation).

请注意,尽管我们在训练 $\mathcal{L}_{\mathrm{pred}}$ 时使用了梅尔频谱图 (以及为频谱图判别器计算输入,见第2.3节),但生成器本身在生成过程中并不产生频谱图。相反,其输出为原始波形,我们仅在训练时将这些波形转换为频谱图 (通过波形到梅尔频谱图的转换操作反向传播梯度)。

2.5 DYNAMIC TIME WARPING

2.5 动态时间规整 (Dynamic Time Warping)

The spec tr ogram prediction loss incorrectly assumes that token lengths are deterministic. We can relax the requirement that the generated and ground truth spec tro grams are exactly aligned, by incorporating dynamic time warping (DTW) (Sakoe, 1971; Sakoe & Chiba, 1978). We calculate the prediction loss by iterative ly finding a minimal-cost alignment path $p$ between the generated and target spec tro grams, $S_{\mathrm{gen}}$ and $S_{\mathrm{gt}}$ . We start at the first time step in both spec tro grams: $p_{\mathrm{gen},1}=p_{\mathrm{gt},1}=1$ At each iteration $k$ , we take one of three possible actions:

频谱图预测损失错误地假设了token长度是确定性的。我们可以通过引入动态时间规整 (DTW) (Sakoe, 1971; Sakoe & Chiba, 1978) 来放宽生成频谱图与真实频谱图必须严格对齐的要求。通过迭代寻找生成频谱图 $S_{\mathrm{gen}}$ 和目标频谱图 $S_{\mathrm{gt}}$ 之间的最小成本对齐路径 $p$ 来计算预测损失。我们从两个频谱图的第一步开始: $p_{\mathrm{gen},1}=p_{\mathrm{gt},1}=1$。在每次迭代 $k$ 时,我们采取以下三种可能的操作之一:

The resulting path is $p=\langle(p_{\mathrm{gen,1}},p_{\mathrm{gt,1}}),\ldots,(p_{\mathrm{gen,}K_{p}},p_{\mathrm{gt,}K_{p}})\rangle$ , where $K_{p}$ is the length. Each action is assigned a cost based on the $L_{1}$ distance between $S_{\mathrm{gen}}[p_{\mathrm{gen},k}]$ and $\hat{S}_ {\mathrm{gt}}[p_{\mathrm{gt},k}]$ , and a warp penalty $w$ which is incurred if we choose not to advance both spec tro grams in lockstep (i.e. we are warping the spec tr ogram by taking action 2 or 3; we use $w=1.0$ ). The warp penalty thus encourages alignment paths that do not deviate too far from the identity alignment. Let $\delta_{k}$ be an indicator which is 1 for iterations where warping occurs, and 0 otherwise. Then the total path cost $c_{p}$ is:

生成的路径为 $p=\langle(p_{\mathrm{gen,1}},p_{\mathrm{gt,1}}),\ldots,(p_{\mathrm{gen,}K_{p}},p_{\mathrm{gt,}K_{p}})\rangle$ ,其中 $K_{p}$ 为路径长度。每个动作的成本基于 $S_{\mathrm{gen}}[p_{\mathrm{gen},k}]$ 与 $\hat{S}_ {\mathrm{gt}}[p_{\mathrm{gt},k}]$ 之间的 $L_{1}$ 距离,以及弯曲惩罚 $w$ (当选择非同步推进两个频谱图时产生,即采取动作2或3时发生频谱图弯曲,我们设 $w=1.0$ )。弯曲惩罚机制促使对齐路径不会过度偏离恒等对齐。设 $\delta_{k}$ 为指示变量,发生弯曲时为1,否则为0。则路径总成本 $c_{p}$ 为:

$$

\begin{array}{r}{c_{p}=\sum_{k=1}^{K_{p}}\Big(w\cdot\delta_{k}+\frac{1}{F}\sum_{f=1}^{F}|S_{\mathrm{gen}}[p_{\mathrm{gen},k},f]-S_{\mathrm{gt}}[p_{\mathrm{gt},k},f]|\Big).}\end{array}

$$

$$

\begin{array}{r}{c_{p}=\sum_{k=1}^{K_{p}}\Big(w\cdot\delta_{k}+\frac{1}{F}\sum_{f=1}^{F}|S_{\mathrm{gen}}[p_{\mathrm{gen},k},f]-S_{\mathrm{gt}}[p_{\mathrm{gt},k},f]|\Big).}\end{array}

$$

depends on the degree of warping $(T\leq K_{p}\leq2T-1)$ . The DTW prediction loss is then

取决于扭曲程度 $(T\leq K_{p}\leq2T-1)$。随后计算DTW预测损失

$$

\mathcal{L}_ {\mathrm{pred}}^{\prime}=\operatorname*{min}_ {p\in\mathcal{P}}c_{p},

$$

$$

\mathcal{L}_ {\mathrm{pred}}^{\prime}=\operatorname*{min}_ {p\in\mathcal{P}}c_{p},

$$

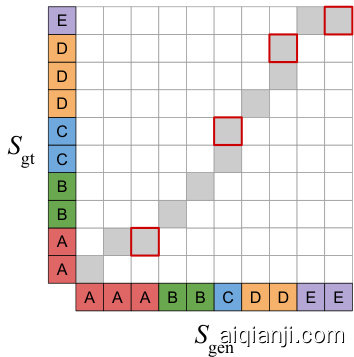

where $\mathcal{P}$ is the set of all valid paths. $p\in\mathcal P$ only when $p_{\mathrm{gen},1}=p_{\mathrm{gt},1}=1$ and $p_{\mathrm{gen},K_{p}}=p_{\mathrm{gt},K_{p}}=T$ , i.e. the first and last timesteps of the spec tro grams are aligned. To find the minimum, we use dynamic programming. Figure 2 shows a diagram of an optimal alignment path between two sequences.

其中 $\mathcal{P}$ 是所有有效路径的集合。当且仅当 $p_{\mathrm{gen},1}=p_{\mathrm{gt},1}=1$ 且 $p_{\mathrm{gen},K_{p}}=p_{\mathrm{gt},K_{p}}=T$ 时,$p\in\mathcal P$ 成立,即频谱图的起始和结束时间步必须对齐。我们采用动态规划算法来寻找最优路径。图 2: 展示了两条序列间的最优对齐路径示意图。

DTW is differentiable, but the minimum across all paths makes optimisation difficult, because the gradient is propagated only through the minimal path. We use a soft version of DTW instead (Cuturi & Blondel, 2017), which replaces the minimum with the soft minimum:

DTW是可微分的,但由于梯度仅通过最小路径传播,对所有路径取最小值使得优化变得困难。我们改用软版本DTW (Cuturi & Blondel, 2017),用软最小值替代最小值:

$$

\begin{array}{r}{\mathcal{L}_ {\mathrm{pred}}^{\prime\prime}=-\tau\cdot\log\sum_{p\in\mathcal{P}}\exp\left(-\frac{c_{p}}{\tau}\right),}\end{array}

$$

$$

\begin{array}{r}{\mathcal{L}_ {\mathrm{pred}}^{\prime\prime}=-\tau\cdot\log\sum_{p\in\mathcal{P}}\exp\left(-\frac{c_{p}}{\tau}\right),}\end{array}

$$

where $\tau=0.01$ is a temperature parameter and the loss scale factor $\lambda_{\mathrm{pred}}=1.0$ . Note that the minimum operation is recovered by letting $\tau\rightarrow0$ . The resulting loss is a weighted aggregated cost across all paths, enabling gradient propagation through all feasible paths. This creates a trade-off: a higher $\tau$ makes optimisation easier, but the resulting loss less accurately reflects the minimal path cost. Pseudocode for the soft DTW procedure is provided in Appendix E.

其中 $\tau=0.01$ 为温度参数,损失比例因子 $\lambda_{\mathrm{pred}}=1.0$。注意当 $\tau\rightarrow0$ 时可恢复为最小运算。最终损失是所有路径的加权聚合成本,支持梯度通过所有可行路径传播。这会形成一种权衡:较高的 $\tau$ 使优化更容易,但会降低损失函数对最小路径成本的反映精度。软DTW算法的伪代码见附录E。

By relaxing alignment in the prediction loss, the generator can produce waveforms that are not exactly aligned, without being heavily penalised for it. This creates a synergy with the adversarial loss: instead of working against each other because of the rigidity of the prediction loss, the losses now cooperate to reward realistic audio generation with stochastic alignment. Note that the prediction loss is computed on a training window, and not on full length utterances, so we still assume that the star and end points of the windows are exactly aligned. While this might be incorrect, it does not seem to be much of a problem in practice.

通过放宽预测损失的对齐要求,生成器可以产生不完全对齐的波形,而不会因此受到严重惩罚。这与对抗损失形成了协同效应:由于预测损失的刚性不再相互对抗,两种损失现在通过随机对齐共同促进逼真音频的生成。需要注意的是,预测损失是在训练窗口上计算的,而不是在整个话语长度上,因此我们仍然假设窗口的起点和终点是完全对齐的。虽然这可能并不完全准确,但在实践中似乎没有造成太大问题。

Figure 2: Dynamic time warping between two sequences finds a minimal-cost alignment path. Positions where warping occurs are marked with a border.

图 2: 两条序列间的动态时间规整 (Dynamic Time Warping) 寻找最小成本对齐路径。发生规整的位置用边框标出。

2.6 ALIGNER LENGTH LOSS

2.6 ALIGNER 长度损失

To ensure that the model produces realistic token length predictions, we add a loss which encourages the predicted utterance length to be close to the ground truth length. This length is found by summing all token length predictions. Let $L$ be the the number of time steps in the training utterance at $200\mathrm{Hz}$ , $l_{n}$ the predicted length of the $n$ th token, and $N$ the number of tokens, then the length loss is:

为确保模型生成真实的token长度预测,我们添加了一项损失函数,促使预测的语句长度接近真实长度。该长度通过累加所有token长度预测值得出。设$L$为训练语句在$200\mathrm{Hz}$下的时间步数,$l_{n}$为第$n$个token的预测长度,$N$为token总数,则长度损失函数为:

$$

\begin{array}{r}{\mathcal{L}_ {\mathrm{length}}=\frac{1}{2}\left(L-\sum_{n=1}^{N}l_{n}\right)^{2}.}\end{array}

$$

$$

\begin{array}{r}{\mathcal{L}_ {\mathrm{length}}=\frac{1}{2}\left(L-\sum_{n=1}^{N}l_{n}\right)^{2}.}\end{array}

$$

We use a scale factor $\lambda_{\mathrm{length}}=0.1$ . Note that we cannot match the predicted lengths $l_{n}$ to the ground truth lengths individually, because the latter are not available.

我们使用比例因子 $\lambda_{\mathrm{length}}=0.1$。需要注意的是,我们无法将预测长度 $l_{n}$ 与真实长度逐一匹配,因为后者不可用。

2.7 TEXT PRE-PROCESSING

2.7 文本预处理

Although our model works well with character input, we find that sample quality improves significantly using phoneme input instead. This is not too surprising, given the heterogeneous way in which spellings map to phonemes, particularly in the English language. Many character sequences also have special pronunciations, such as numbers, dates, units of measurement and website domains, and a very large training dataset would be required for the model to learn to pronounce these correctly. Text normalisation (Zhang et al., 2019) can be applied beforehand to spell out these sequences as they are typically pronounced (e.g., 1976 could become nineteen seventy six), potentially followed by conversion to phonemes. We use an open source tool, phonemizer (Bernard, 2020), which performs partial normalisation and phone mis ation (see Appendix F). Finally, whether we train on text or phoneme input sequences, we pre- and post-pad the sequence with a special silence token (for training and inference), to allow the aligner to account for silence at the beginning and end of each utterance.

虽然我们的模型在处理字符输入时表现良好,但我们发现使用音素输入能显著提升样本质量。考虑到拼写与音素间存在非固定映射关系(尤其是英语),这一结果并不令人意外。许多字符序列还存在特殊发音规则,例如数字、日期、计量单位和网站域名,要让模型学会正确发音需要极其庞大的训练数据集。文本归一化(Zhang et al., 2019)可预先将这些序列展开为常规发音形式(如将1976转换为nineteen seventy six),随后再转换为音素。我们采用开源工具phonemizer(Bernard, 2020)进行部分归一化和音素转换(详见附录F)。最后,无论训练文本还是音素输入序列,我们都会在序列首尾添加特殊静音token(用于训练和推理),使对齐器能够处理语句首尾的静音段。

3 RELATED WORK

3 相关工作

Speech generation saw significant quality improvements once treating it as a generative modelling problem became the norm (Zen et al., 2009; van den Oord et al., 2016). Likelihood-based approaches dominate, but generative adversarial networks (GANs) (Goodfellow et al., 2014) have been making significant inroads recently. A common thread through most of the literature is a separation of the speech generation process into multiple stages: coarse-grained temporally aligned intermediate representations, such as mel-spec tro grams, are used to divide the task into more manageable subproblems. Many works focus exclusively on either spec tr ogram generation or vocoding (generating a waveform from a spec tr ogram). Our work is different in this respect, and we will point out which stages of the generation process are addressed by each model. In Appendix J, Table 6 we compare these methods in terms of the inputs and outputs to each stage of their pipelines.

语音生成在将其视为生成式建模问题成为常态后(Zen et al., 2009; van den Oord et al., 2016),质量得到了显著提升。基于似然的方法占主导地位,但生成对抗网络(GANs)(Goodfellow et al., 2014)最近取得了重大进展。大多数文献的共同点是将语音生成过程分为多个阶段:使用粗粒度时间对齐的中间表示(如梅尔频谱图)将任务分解为更易处理的子问题。许多工作专门关注频谱图生成或声码器(从频谱图生成波形)。我们的工作在这方面有所不同,我们将指出每个模型处理生成过程的哪些阶段。在附录J的表6中,我们比较了这些方法在流程各阶段输入输出方面的差异。

Initially, most likelihood-based models for TTS were auto regressive (van den Oord et al., 2016; Mehri et al., 2017; Arik et al., 2017), which means that there is a sequential dependency between subsequent time steps of the produced output signal. That makes these models impractical for real-time use, although this can be addressed with careful engineering (Kal ch brenner et al., 2018; Valin & Skoglund, 2019). More recently, flow-based models (Papa makarios et al., 2019) have been explored as a feed-forward alternative that enables fast inference (without sequential dependencies). These can either be trained directly using maximum likelihood (Prenger et al., 2019; Kim et al., 2019; Ping et al., 2019b), or through distillation from an auto regressive model (van den Oord et al., 2018; Ping et al., 2019a). All of these models produce waveforms conditioned on an intermediate representation: either spec tro grams or “linguistic features”, which contain temporally-aligned high-level information about the speech signal. Spec tr ogram-conditioned waveform models are often referred to as vocoders.

最初,大多数基于似然的文本转语音(TTS)模型都是自回归的(van den Oord等人,2016;Mehri等人,2017;Arik等人,2017),这意味着生成输出信号的后续时间步之间存在顺序依赖性。这使得这些模型难以实时应用,尽管通过精心设计可以解决这一问题(Kalchbrenner等人,2018;Valin & Skoglund,2019)。最近,基于流的模型(Papamakarios等人,2019)作为一种前馈替代方案被探索,能够实现快速推理(无需顺序依赖)。这些模型可以直接通过最大似然训练(Prenger等人,2019;Kim等人,2019;Ping等人,2019b),或通过自回归模型蒸馏得到(van den Oord等人,2018;Ping等人,2019a)。所有这些模型都基于中间表示生成波形:要么是声谱图,要么是包含语音信号时间对齐高层信息的"语言学特征"。基于声谱图的波形模型通常被称为声码器。

A growing body of work has applied GAN (Goodfellow et al., 2014) variants to speech synthesis (Donahue et al., 2019). An important advantage of adversarial losses for TTS is a focus on realism over diversity; the latter is less important in this setting. This enables a more efficient use of capacity compared to models trained with maximum likelihood. MelGAN (Kumar et al., 2019) and Parallel WaveGAN (Yamamoto et al., 2020) are adversarial vocoders, producing raw waveforms from mel-spec tro grams. Neekhara et al. (2019) predict magnitude spec tro grams from mel-spec tro grams. Most directly related to our work is GAN-TTS (Binkowski et al., 2020), which produces waveforms conditioned on aligned linguistic features, and we build upon that work.

越来越多的研究将GAN (Goodfellow等人,2014) 的变体应用于语音合成 (Donahue等人,2019)。对抗性损失在TTS中的一个重要优势是更注重真实性而非多样性,后者在此场景中相对次要。与基于最大似然训练的模型相比,这使得模型容量得到更高效的利用。MelGAN (Kumar等人,2019) 和Parallel WaveGAN (Yamamoto等人,2020) 是采用对抗训练的声码器,能够从梅尔频谱图生成原始波形。Neekhara等人 (2019) 则实现了从梅尔频谱图预测幅度频谱图。与本研究最直接相关的是GAN-TTS (Binkowski等人,2020),该工作基于对齐的语言特征生成波形,我们的研究正是在此基础上展开。

Another important line of work covers spec tr ogram generation from text. Such models rely on a vocoder to convert the spec tro grams into waveforms (for which one of the previously mentioned models could be used, or a traditional spec tr ogram inversion technique (Griffin & Lim, 1984)). Tacotron 1 & 2 (Wang et al., 2017; Shen et al., 2018), Deep Voice 2 & 3 (Gibiansky et al., 2017; Ping et al., 2018), Transformer TT S (Li et al., 2019), Flowtron (Valle et al., 2020), and VoiceLoop (Taigman et al., 2017) are auto regressive models that generate spec tro grams or vocoder features frame by frame. Guo et al. (2019) suggest using an adversarial loss to reduce exposure bias (Bengio et al., 2015; Ranzato et al., 2016) in such models. MelNet (Vasquez & Lewis, 2019) is auto regressive over both time and frequency. ParaNet (Peng et al., 2019) and FastSpeech (Ren et al., 2019) are nonauto regressive, but they require distillation (Hinton et al., 2015) from an auto regressive model. Recent flow-based approaches Flow-TTS (Miao et al., 2020) and Glow-TTS (Kim et al., 2020) are feedforward without requiring distillation. Most spec tr ogram generation models require training of a custom vocoder model on generated spec tro grams, because their predictions are imperfect and the vocoder needs to be able to compensate for this2. Note that some of these works also propose new vocoder architectures in tandem with spec tr ogram generation models.

另一项重要工作涉及从文本生成频谱图。这类模型依赖声码器将频谱图转换为波形(可使用前文提到的任一模型,或传统频谱图反转技术 (Griffin & Lim, 1984))。Tacotron 1 & 2 (Wang et al., 2017; Shen et al., 2018)、Deep Voice 2 & 3 (Gibiansky et al., 2017; Ping et al., 2018)、Transformer TTS (Li et al., 2019)、Flowtron (Valle et al., 2020) 和 VoiceLoop (Taigman et al., 2017) 是通过逐帧生成频谱图或声码器特征的自回归模型。Guo et al. (2019) 提出使用对抗损失来减少此类模型中的曝光偏差 (Bengio et al., 2015; Ranzato et al., 2016)。MelNet (Vasquez & Lewis, 2019) 在时间和频率维度上均为自回归。ParaNet (Peng et al., 2019) 和 FastSpeech (Ren et al., 2019) 为非自回归模型,但需要从自回归模型进行知识蒸馏 (Hinton et al., 2015)。近期基于流的 Flow-TTS (Miao et al., 2020) 和 Glow-TTS (Kim et al., 2020) 为前馈结构且无需蒸馏。多数频谱图生成模型需在生成频谱图上训练定制声码器,因其预测存在缺陷需声码器补偿2。需注意部分研究在提出频谱图生成模型时同步设计了新声码器架构。

Unlike all of the aforementioned methods, as highlighted in Appendix J, Table 6, our model is a single feed-forward neural network, trained end-to-end in a single stage, which produces waveforms given character or phoneme sequences, and learns to align without additional supervision from auxiliary sources (e.g. temporally aligned linguistic features from an external model) or teacher forcing. This simplifies the training process considerably. Char2wav (Sotelo et al., 2017) is finetuned end-to-end in the same fashion, but requires a pre-training stage with vocoder features used for intermediate supervision.

与附录J表6中强调的所有前述方法不同,我们的模型是一个单一的前馈神经网络,通过单阶段端到端训练,能够在给定字符或音素序列时生成波形,并且无需来自辅助源(例如外部模型的时间对齐语言特征)或教师强制(teacher forcing)的额外监督就能学习对齐。这大大简化了训练过程。Char2wav (Sotelo等人,2017)采用相同方式进行端到端微调,但需要以声码器特征作为中间监督的预训练阶段。

Spec tr ogram prediction losses have been used extensively for feed-forward audio prediction models (Yamamoto et al., 2019; 2020; Yang et al., 2020; Arık et al., 2018; Engel et al., 2020; Wang et al.,

频谱图预测损失已广泛应用于前馈音频预测模型 (Yamamoto et al., 2019; 2020; Yang et al., 2020; Arık et al., 2018; Engel et al., 2020; Wang et al.,

2019; Défossez et al., 2018). We note that the $L_{1}$ loss we use (along with (Défossez et al., 2018)), is comparatively simple, as spec tr ogram losses in the literature tend to have separate terms penalising magnitudes, log-magnitudes and phase components, each with their own scaling factors, and often across multiple resolutions. Dynamic time warping on spec tro grams is a component of many speech recognition systems (Sakoe, 1971; Sakoe & Chiba, 1978), and has also been used for evaluation of TTS systems (Sailor & Patil, 2014; Chevelu et al., 2015). Cuturi & Blondel (2017) proposed the soft version of DTW we use in this work as a differentiable loss function for time series models. Kim et al. (2020) propose Monotonic Alignment Search (MAS), which relates to DTW in that both use dynamic programming to implicitly align sequences for TTS. However, they have different goals: MAS finds the optimal alignment between the text and a latent representation, whereas we use DTW to relax the constraints imposed by our spec tr ogram prediction loss term. Several mechanisms have been proposed to exploit monotonic it y in tasks that require sequence alignment, including attention mechanisms (Graves, 2013; Zhang et al., 2018; Vasquez & Lewis, 2019; He et al., 2019; Raffel et al., 2017; Chiu & Raffel, 2018), loss functions (Graves et al., 2006; Graves, 2012) and search-based approaches (Kim et al., 2020). For TTS, incorporating this constraint has been found to help generalisation to long sequences (Battenberg et al., 2020). We incorporate monotonic it y by using an interpolation mechanism, which is cheap to compute because it is not recurrent (unlike many monotonic attention mechanisms).

2019; Défossez等人, 2018)。我们注意到,所使用的$L_{1}$损失函数(与(Défossez等人, 2018)一致)相对简单,因为文献中的频谱图损失通常包含分别惩罚幅度、对数幅度和相位分量的独立项,每项都有各自的缩放因子,且常在多分辨率下计算。频谱图的动态时间规整(DTW)是许多语音识别系统的组成部分(Sakoe, 1971; Sakoe & Chiba, 1978),也被用于TTS系统评估(Sailor & Patil, 2014; Chevelu等人, 2015)。Cuturi & Blondel (2017)提出了本文采用的软DTW版本,作为时序模型的可微损失函数。Kim等人(2020)提出的单调对齐搜索(MAS)与DTW相关,两者都使用动态规划隐式对齐TTS序列。但目标不同:MAS寻找文本与潜在表示之间的最优对齐,而我们用DTW放松频谱图预测损失项的约束。已有多种机制利用单调性处理序列对齐任务,包括注意力机制(Graves, 2013; Zhang等人, 2018; Vasquez & Lewis, 2019; He等人, 2019; Raffel等人, 2017; Chiu & Raffel, 2018)、损失函数(Graves等人, 2006; Graves, 2012)和基于搜索的方法(Kim等人, 2020)。在TTS中,这种约束有助于提升长序列泛化能力(Battenberg等人, 2020)。我们通过插值机制实现单调性,该机制计算成本低(与多数单调注意力机制不同)。

4 EVALUATION

4 评估

In this section we discuss the setup and results of our empirical evaluation, describing the hyperparameter settings used for training and validating the architectural decisions and loss function components detailed in Section 2. Our primary metric used to evaluate speech quality is the Mean Opinion Score (MOS) given by human raters, computed by taking the mean of 1-5 naturalness ratings given across 1000 held-out conditioning sequences. In Appendix I we also report the Fréchet DeepSpeech Distance (FDSD), proposed by Binkowski et al. (2020) as a speech synthesis quality metric. Appendix A reports training and evaluation hyper parameters we used for all experiments.

本节我们将讨论实证评估的设置与结果,详细说明用于训练的超参数配置以及验证第2章所述的架构决策与损失函数组件。评估语音质量的核心指标是人工评分者给出的平均意见得分(MOS),通过对1000条保留条件序列的1-5分自然度评分取均值计算得出。附录I还报告了由Binkowski等人(2020)提出的Fréchet DeepSpeech距离(FDSD)作为语音合成质量指标。附录A列出了所有实验采用的训练与评估超参数。

4.1 MULTI-SPEAKER DATASET

4.1 多说话人数据集

We train all models on a private dataset that consists of high-quality recordings of human speech performed by professional voice actors, and corresponding text. The voice pool consists of 69 female and male voices of North American English speakers, while the audio clips contain full sentences of lengths varying from less than 1 to 20 seconds at $24\mathrm{kHz}$ frequency. Individual voices are unevenly distributed, accounting for from 15 minutes to over 51 hours of recorded speech, totalling 260.49 hours. At training time, we sample 2 second windows from the individual clips, post-padding those shorter than 2 seconds with silence. For evaluation, we focus on the single most prolific speaker in our dataset, with all our main MOS results reported with the model conditioned on that speaker ID, but also report MOS results for each of the top four speakers using our main multi-speaker model.

我们在一个私有数据集上训练所有模型,该数据集包含专业配音演员录制的高质量人类语音及对应文本。语音库由69位北美英语母语者的男女声组成,音频片段为完整句子,时长从不足1秒到20秒不等,采样频率为$24\mathrm{kHz}$。个体语音数据分布不均,录制时长从15分钟到51小时以上不等,总计260.49小时。训练时,我们从单个片段中采样2秒窗口,对不足2秒的片段用静音进行后补零。评估时,我们聚焦数据集中产量最高的单一说话人,所有主要MOS(平均意见分)结果均基于该说话人ID的条件化模型得出,但同时也使用主多说话人模型报告了前四位说话人各自的MOS结果。

4.2 RESULTS

4.2 结果

In Table 1 we present quantitative results for our EATS model described in Section 2, as well as several ablations of the different model and learning signal components. The architecture and training setup of each ablation is identical to our base EATS model except in terms of the differences described by the columns in Table 1. Each ablation is “subtractive”, representing the full EATS system minus one particular feature. Our main result achieved by the base multi-speaker model is a mean opinion score (MOS) of 4.083. Although it is difficult to compare directly with prior results from the literature due to dataset differences, we nonetheless include MOS results from prior works (Binkowski et al. 2020; van den Oord et al., 2016; 2018), with MOS in the 4.2 to $4.4+$ range. Compared to these prior models, which rely on aligned linguistic features, EATS uses substantially less supervision.

在表1中,我们展示了第2节描述的EATS模型的定量结果,以及不同模型和学习信号组件的多项消融实验。每个消融实验的架构和训练设置与基础EATS模型完全相同,仅存在表1各列所描述的差异。所有消融实验均为"减法式",代表完整EATS系统减去某一特定功能。基础多说话人模型取得的主要成果是4.083的平均意见得分(MOS)。尽管由于数据集差异难以直接与文献中的先前结果比较,我们仍列出了先前工作(Binkowski等人2020;van den Oord等人2016;2018)的MOS结果,其得分在4.2至$4.4+$范围内。与这些依赖对齐语言特征的先前模型相比,EATS使用的监督信息显著减少。

The No RWDs, No MelSpecD, and No Disc rim in at or s ablations all achieved substantially worse MOS results than our proposed model, demonstrating the importance of adversarial feedback. In particular, the No RWDs ablation, with an MOS of 2.526, demonstrates the importance of the raw audio feedback, and removing RWDs significantly degrades the high frequency components. No MelSpecD causes intermittent artifacts and distortion, and removing all disc rim in at or s results in audio that sounds robotic and distorted throughout. The No $\mathcal{L}_ {\mathrm{length}}$ and No $\mathcal{L}_{\mathrm{pred}}$ ablations result in a model that does not train at all. Comparing our model with No DTW (MOS 3.559), the temporal flexibility provided by dynamic time warping significantly improves fidelity: removing it causes warbling and unnatural phoneme lengths. No Phonemes is trained with raw character inputs and attains MOS 3.423, due to occasional mispronunciations and unusual stress patterns. No Mon. Int. uses an aligner with a transformer-based attention mechanism (described in Appendix G) in place of our monotonic interpolation architecture, which turns out to generalise poorly to long utterances (yielding MOS 3.551). Finally, comparing against training with only a Single Speaker (MOS 3.829) shows that our EATS model benefits from a much larger multi-speaker dataset, even though MOS is evaluated only on this same single speaker on which the ablation was solely trained. Samples from each ablation are available at https://deepmind.com/research/publications/ End-to-End-Adversarial-Text-to-Speech.

无RWDs、无MelSpecD和无判别器的消融实验均比我们提出的模型获得了显著更差的MOS评分,这证明了对抗性反馈的重要性。特别是无RWDs消融实验(MOS 2.526)验证了原始音频反馈的关键作用,移除RWDs会显著劣化高频成分。无MelSpecD会导致间歇性伪影和失真,而移除所有判别器则会使音频全程呈现机械感与失真。无$\mathcal{L}_ {\mathrm{length}}$和无$\mathcal{L}_{\mathrm{pred}}$的消融实验会导致模型完全无法训练。将我们的模型与无DTW版本(MOS 3.559)对比表明,动态时间规整提供的时间灵活性显著提升了保真度:移除该功能会导致颤音现象和非自然音素长度。无音素版本使用原始字符输入训练,因偶发发音错误和异常重音模式获得MOS 3.423。无单调插值版本使用基于Transformer注意力机制的对齐器(附录G详述)替代我们的单调插值架构,该方案对长语句泛化能力较差(MOS 3.551)。最后,与单说话人训练(MOS 3.829)的对比表明,我们的EATS模型受益于更大的多说话人数据集,尽管MOS评估仅针对消融实验训练所用的同一说话人。各消融实验样本详见https://deepmind.com/research/publications/End-to-End-Adversarial-Text-to-Speech。

Table 1: Mean Opinion Scores (MOS) for our final EATS model and the ablations described in Section 4, sorted by MOS. The middle columns indicate which components of our final model are enabled or ablated. Data describes the training set as Multi speaker (MS) or Single Speaker (SS). Inputs describes the inputs as raw characters $\mathrm{(Ch)}$ or phonemes $\mathrm{(Ph)}$ produced by Phonemizer. RWD (Random Window Disc rim in at or s), $M S D$ (Mel-spec tr ogram Disc rim in at or), and $\mathcal{L}_ {\mathrm{length}}$ (length prediction loss) indicate the presence $(\checkmark)$ or absence $(\times)$ of each of these training components described in Section 2. $\mathcal{L}_ {\mathrm{pred}}$ indicates which spec tr ogram prediction loss was used: with DTW $(\mathcal{L}_ {\mathrm{pred}}^{\prime\prime}$ , Eq. 6), without DTW $\mathcal{L}_{\mathrm{pred}}$ , Eq. 3), or absent $(\times)$ . Align describes the architecture of the aligner as monotonic interpolation (MI) or attention-based (Attn). We also compare against recent state-of-the-art approaches from the literature which are trained on aligned linguistic features (unlike our models). Our MOS evaluation set matches that of GAN-TTS (Binkowski et al., 2020) (and our “Single Speaker” training subset matches the GAN-TTS training set); the other approaches are not directly comparable due to dataset differences.

| Model | Data Inputs | RWD | MSD | Llength Lpred | Align | MOS | |

| Natural Speech | 4.55 ± 0.075 | ||||||

| GAN-TTS (Binkowskiet al.,2020) | 4.213 ± 0.046 | ||||||

| WaveNet(vandenOordetal.,2016) | 4.41 ± 0.069 | ||||||

| Par:WaveNet(van den Oord et al.,2018) | 4.41 ± 0.078 | ||||||

| Tacotron2 (Shenet al.,2018) | 4.526 ±0.066 | ||||||

| No Llength | MS | Ph | × | MI | [does not train] | ||

| No Lpred | MS | Ph | MI | [does not train] | |||

| NoDiscriminators | MS | Ph | √ × | C" pred | MI | 1.407 ± 0.040 | |

| No RWDs | MS | Ph | MI | 2.526 ±0.060 | |||

| No Phonemes | MS | Ch | pred | MI | 3.423±0.073 | ||

| No MelSpecD | MS | Ph | MI | 3.525 ±0.057 | |||

| No Mon. Int. | MS | Ph | 'pred | Attn | 3.551±0.073 | ||

| NoDTW | MS | Ph | Lpred | MI | 3.559 ± 0.065 | ||

| Single Speaker | SS | Ph | MI | 3.829 ± 0.055 | |||

| EATS (Ours) | MS | Ph | √ | Clpred MI | 4.083 ± 0.049 |

表 1: 最终EATS模型及各消融实验的平均意见得分(MOS),按MOS排序。中间列表示最终模型中启用或消融的组件。"Data"描述训练集为多说话人(MS)或单说话人(SS)。"Inputs"描述输入为原始字符$\mathrm{(Ch)}$或由Phonemizer生成的音素$\mathrm{(Ph)}$。RWD(随机窗口判别器)、$MSD$(梅尔谱判别器)和$\mathcal{L}_ {\mathrm{length}}$(长度预测损失)表示这些训练组件的存在$(\checkmark)$或缺失$(\times)$。$\mathcal{L}_ {\mathrm{pred}}$表示使用的谱图预测损失类型:带DTW的$(\mathcal{L}_ {\mathrm{pred}}^{\prime\prime}$,公式6)、不带DTW的($\mathcal{L}_{\mathrm{pred}}$,公式3)或缺失$(\times)$。"Align"描述对齐器架构为单调插值(MI)或基于注意力机制(Attn)。我们还与文献中使用对齐语言学特征的最新方法进行了对比(与我们的模型不同)。我们的MOS评估集与GAN-TTS(Binkowski等人,2020)一致(且"单说话人"训练子集与GAN-TTS训练集匹配);由于数据集差异,其他方法不具备直接可比性。

| 模型 | 数据输入 | RWD | MSD | $\mathcal{L}_ {\mathrm{length}}$ $\mathcal{L}_ {\mathrm{pred}}$ | 对齐方式 | MOS |

|---|---|---|---|---|---|---|

| 自然语音 | - | - | - | - | - | 4.55 ± 0.075 |

| GAN-TTS (Binkowski等人,2020) | - | - | - | - | - | 4.213 ± 0.046 |

| WaveNet(van den Oord等人,2016) | - | - | - | - | - | 4.41 ± 0.069 |

| Parallel WaveNet(van den Oord等人,2018) | - | - | - | - | - | 4.41 ± 0.078 |

| Tacotron2 (Shen等人,2018) | - | - | - | - | - | 4.526 ±0.066 |

| 无长度损失 | MS | Ph | × | - | MI | [不训练] |

| 无预测损失 | MS | Ph | - | × | MI | [不训练] |

| 无判别器 | MS | Ph | × | × | $\mathcal{L}_{\mathrm{pred}}^{\prime\prime}$ | MI |

| 无RWD | MS | Ph | × | √ | $\mathcal{L}_{\mathrm{pred}}$ | MI |

| 无音素 | MS | Ch | √ | √ | $\mathcal{L}_{\mathrm{pred}}$ | MI |

| 无梅尔谱判别器 | MS | Ph | √ | × | $\mathcal{L}_{\mathrm{pred}}$ | MI |

| 无单调插值 | MS | Ph | √ | √ | $\mathcal{L}_{\mathrm{pred}}^{\prime}$ | Attn |

| 无DTW | MS | Ph | √ | √ | $\mathcal{L}_{\mathrm{pred}}$ | MI |

| 单说话人 | SS | Ph | √ | √ | $\mathcal{L}_{\mathrm{pred}}^{\prime\prime}$ | MI |

| EATS (本文) | MS | Ph | √ | √ | $\mathcal{L}_{\mathrm{pred}}^{\prime\prime}$ | MI |

Table 2: Mean Opinion Scores (MOS) for the top four speakers with the most data in our training set. All evaluations are done using our single multi-speaker EATS model.

| Speaker | #1 | #2 | #3 | #4 |

| Speaking Time (Hours) | 51.68 | 31.21 | 20.68 | 10.32 |

| MOS | 4.083 ± 0.049 | 3.828± 0.051 | 4.149 ± 0.045 | 3.761± 0.052 |

表 2: 训练集中数据量最多的四位说话人的平均意见得分(MOS)。所有评估均使用我们的单一多说话人EATS模型完成。

| Speaker | #1 | #2 | #3 | #4 |

|---|---|---|---|---|

| Speaking Time (Hours) | 51.68 | 31.21 | 20.68 | 10.32 |

| MOS | 4.083 ± 0.049 | 3.828± 0.051 | 4.149 ± 0.045 | 3.761± 0.052 |

We demonstrate that the aligner learns to use the latent vector $\mathbf{z}$ to vary the predicted token lengths in Appendix H. In Table 2 we present additional MOS results from our main multi-speaker EATS model for the four most prolific speakers in our training data3. MOS generally improves with more training data, although the correlation is imperfect (e.g., Speaker #3 achieves the highest MOS with only the third most training data).

我们证明对齐器学会了利用潜在向量$\mathbf{z}$来改变预测的token长度(详见附录H)。在表2中,我们展示了主多说话人EATS模型中训练数据量最大的四位说话人的额外MOS结果。虽然相关性并不完美(例如说话人#3仅用第三多的训练数据就获得了最高MOS),但MOS通常随着训练数据的增加而提升。

5 DISCUSSION

5 讨论

We have presented an adversarial approach to text-to-speech synthesis which can learn from a relatively weak supervisory signal – normalised text or phonemes paired with corresponding speech audio. The speech generated by our proposed model matches the given conditioning texts and general is es to unobserved texts, wi