MULDE: Multiscale Log-Density Estimation via Denoising Score Matching for Video Anomaly Detection

Abstract

We propose a novel approach to video anomaly detection: we treat feature vectors extracted from videos as realizations of a random variable with a fixed distribution and model this distribution with a neural network. This lets us estimate the likelihood of test videos and detect video anomalies by thresholding the likelihood estimates. We train our video anomaly detector using a modification of denoising score matching, a method that injects training data with noise to facilitate modeling its distribution. To eliminate hyperparameter selection, we model the distribution of noisy video features across a range of noise levels and introduce a regularizer that tends to align the models for different levels of noise. At test time, we combine anomaly indications at multiple noise scales with a Gaussian mixture model. Running our video anomaly detector induces minimal delays, as inference requires merely extracting the features and forward-propagating them through a shallow neural network and a Gaussian mixture model. Our experiments on five popular video anomaly detection benchmarks demonstrate state-of-the-art performance, both in the object-centric and in the frame-centric setup.

1. Introduction

The goal of video anomaly detection (VAD) is to detect events that deviate from normal patterns in videos. VAD has numerous potential applications in healthcare, safety, and traffic monitoring. It can be used to detect events such as human falls and workplace or traffic accidents, and holds the promise of dramatically reducing the time needed to respond to the emergencies that can result from them. The main challenge of anomaly detection stems from the fact that, unlike classes of actions in video action recognition, anomalies do not form a coherent group of patterns and typically cannot be anticipated in advance. As a consequence, in many applications anomalous training data is not available, necessitating the so-called one-class classification approach, in which the system is trained exclusively on normal data.

Traditional approaches to one-class video anomaly detection rely on training a deep network on auxiliary self-supervised tasks, like auto-encoding the frame sequence [10–13, 16, 31], predicting future frames [23, 30], inpainting spatio-temporal volumes [10], and solving jigsaw puzzles [2, 42]. The underlying assumption is that given a video sufficiently different from those of the training set, i.e. one containing an anomaly, the network should fail to complete the self-supervised task. However, the connection between data normality or abnormality and the performance of the network remains unclear. Deep networks can generalize beyond their training set, and there is no guarantee that anomalies make them fail to complete their task.

Our motivation is to lay more solid foundations for video anomaly detection. To that end, we treat feature vectors extracted from videos as realizations of a random variable with a fixed distribution, and seek to approximate its probability density function with a neural network. Such an approximation would enable a principled and intuitive approach to detecting anomalies: since anomalous data is characterized by a low likelihood under the statistical model of normal data, it could be detected by thresholding the approximate density function.

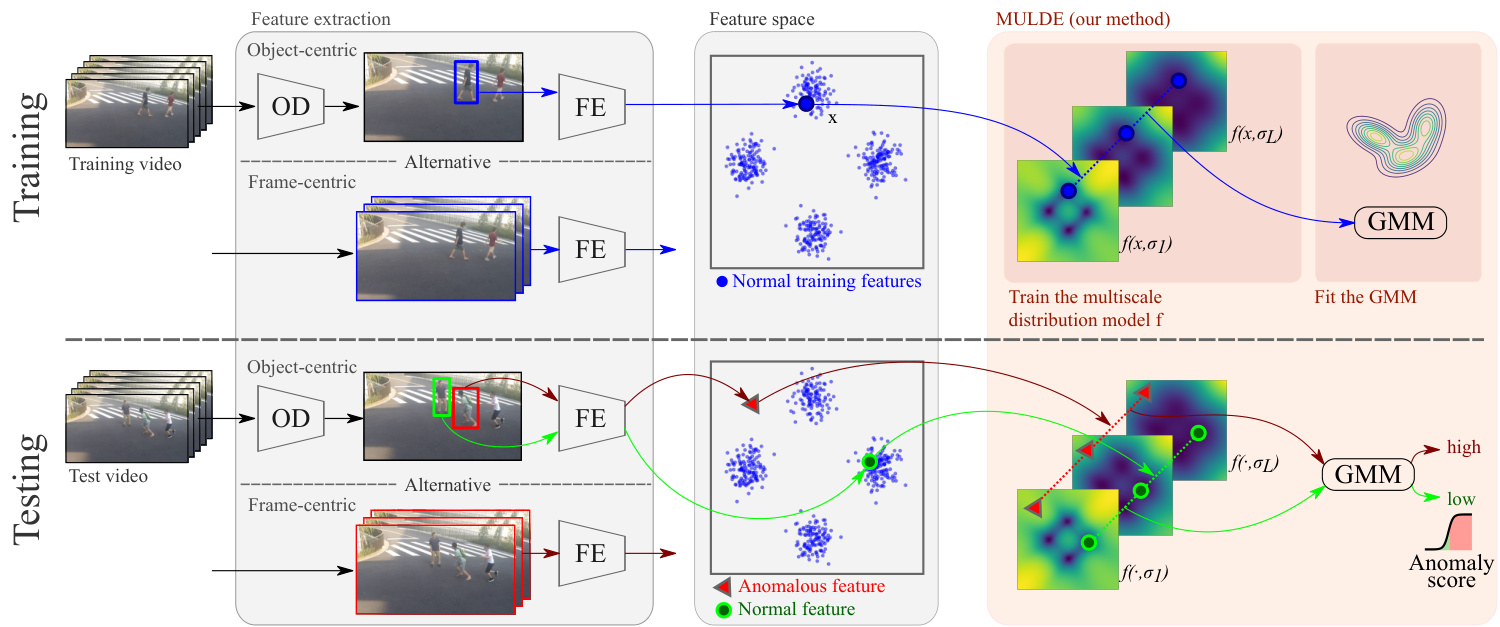

Training a neural network to directly approximate $p(\mathbf{x})$, the probability density function of the training data, is very challenging. However, Vincent [41] showed that injecting the data with zero-centered, iid Gaussian noise makes it easier to model the distribution of the noisy data $q(\tilde{\mathbf{x}})$. For sufficiently low levels of noise, $q$ preserves the shape of $p$, which makes it a suitable basis for our anomaly indicator. Vincent's contribution was denoising score matching, a method to train a neural network to approximate $-\nabla_{\tilde{\mathbf{x}}}\log q(\tilde{\mathbf{x}})$, the negative gradient of the log-density of the noisy data, which became a core algorithm of a recent class of generative models [38]. We modify this method to train a neural anomaly indicator that approximates, up to a constant, the negative log-density $-\log q(\tilde{\mathbf{x}})$, which is well suited to indicating anomalies thanks to its one-to-one relation to $q(\tilde{\mathbf{x}})$. Our approach is illustrated in Figure 1.

Figure 1. MULDE approximates the negative log-density of noisy, normal video features at multiple levels of noise $\sigma$ with a neural network $f(\cdot,\sigma)$ . The log-likelihoods estimated at multiple noise levels are combined into a single anomaly score with a Gaussian mixture model (GMM). MULDE can be trained to detect video anomalies in an object-centric or frame-centric manner. In the object-centric approach, an object detector (OD) is used to detect objects which are then fed to the feature extractor (FE). In the frame-centric approach, the feature extractor is applied to short sequences of entire frames.

In its basic form, introduced above, our method requires choosing the standard deviation $\sigma$ of the noise injected into the data, also called the noise scale. This choice represents a compromise between making $q$ closer to $p$ for small values of $\sigma$ and extending the support of $q$ to cover more possible anomalies at larger noise levels. To avoid this unwelcome compromise, we do not settle on a single $\sigma$ , but approximate the log-density for a range of noise scales with a neural network $f(\cdot,\sigma)$ , and introduce a regularization term that tends to align the approximations at different scales. At test time, we compute anomaly indicators for a range of noise scales and combine them into a single anomaly score with a Gaussian mixture model, fitted to normal data. Our experiments show that MULDE, the regularized MUltiscale Log-DEnsity approximation, is a very effective video anomaly detector.

To summarize, the main contribution of this paper is a novel approach to detecting anomalies from video features with a neural approximation of their log-density function. In technical terms, we propose a modification of multiscale denoising score matching for training anomaly indicators and a new method to regularize this training. Our anomaly detector is simple, mathematically sound, and fast at test time, as inference requires merely extracting the features and forward-propagating them through a neural network and a Gaussian mixture model. Moreover, it is agnostic to the type of feature vector it takes as input. Our experiments on the Ped2 [28], Avenue [25], ShanghaiTech [26], UCFCrime [39], and UBnormal [1] data sets demonstrate state-of-the-art performance in anomaly detection both in the object-centric setup, where features are extracted from bounding boxes of detected objects, and in the frame-centric setup, with features computed for entire frames.

2. Related Work

VAD has been studied in multiple settings: as a one-class classification problem, where no anomalous data is available for training [4–6, 8, 9, 13, 14, 25, 26, 30, 31, 35, 43, 45–48], as an unsupervised learning task, where anomalies are present in the training set, but it is not known which training videos contain them [48], and as a supervised or weakly supervised problem, where training labels indicate anomalous video frames or videos containing anomalies, respectively [1, 39, 48]. We address the first of these settings: we assume the training set is limited to normal videos.

Existing VAD methods can be categorized as frame-centric, when they operate on features computed from entire frames or their sequences [1, 39, 43, 45, 48], and object-centric, when they estimate the abnormality of each bounding box in every frame [2, 9–11, 16, 35, 42], typically using a pre-trained feature extractor. The frame-centric design is better suited for global events, like fires or smoke, while the object-centric one targets anomalies associated with people or objects, like human falls or vehicle accidents. In Sec. 4, we show that our method can establish state-of-the-art performance with features of either type.

The predominant approach to VAD is to train a deep network to auto-encode normal videos and use the reconstruction error as an anomaly indicator [4, 8, 13, 26, 30, 31, 46, 47]. The idea of using the error of a model pre-trained on normal data to detect anomalies was extended from auto-encoding to multiple other tasks, including predicting future frames or the optical flow [8, 22, 23, 30, 47], inpainting spatio-temporal volumes [10], and solving jigsaw puzzles [2, 42]. This overarching approach is predicated on the assumption that the error is higher for anomalous frames than for normal frames. However, there is no certainty that this assumption holds: it is not well understood under what conditions a neural network fails to perform its task, and there is no guarantee that all anomalies make it fail. By contrast, no heuristic assumptions underlie the functioning of MULDE.

The idea of detecting video anomalies by modeling the distribution of normal video features recurs in the literature, but, to date, effective modeling techniques remain elusive. Some methods, like Gaussian mixture models [35], one-class Support Vector Machines [5, 6, 25], or multilinear classifiers [43], may lack the expressive power needed to reflect the complex and high-dimensional distribution of video features. Adversarially trained models [11, 21] offer high expressive power, but cannot guarantee to fully capture the distribution, because parts of the feature space may remain unexplored by the generator-discriminator pair during training. Diffusion models capture the data distribution, but their use in VAD consists in generating samples of normal frames [45] or human poses [9], and comparing observed frames or poses to generated ones. This requires multiple diffusion steps and reduces the anomaly measure to a distance between the observation and the sample. Normalizing flows, recently used for detecting anomalies in human pose features [14], are free from these drawbacks, as they explicitly approximate the likelihood of the training data. However, their performance decreases in the presence of complex correlations between features [19]. In contrast to these methods, MULDE combines all key ingredients of a VAD approach: high expressive power, the capacity to fully capture the distribution, and the capacity to accommodate arbitrary features.

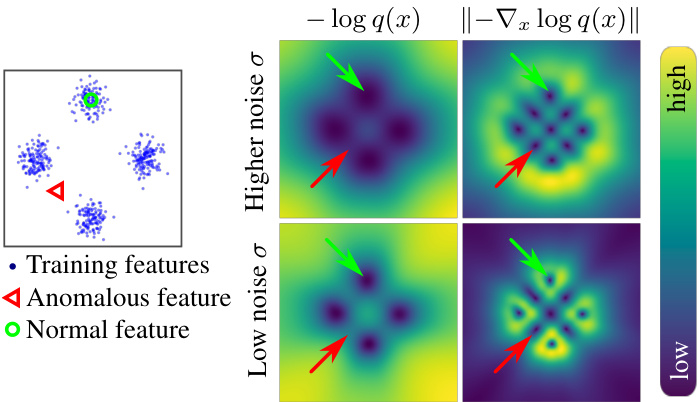

The log-density approximation $f$, employed by MULDE to model the distribution of normal data, is often called the energy, in reference to energy-based models [20], which represent probability distributions in the Boltzmann form $q(\mathbf{x})=\frac{1}{Z}e^{-f(\mathbf{x})}$, but restrict the model to the energy function $f$, since computing the normalization constant $Z$ is typically infeasible. MULDE can therefore be seen as an energy-based model. For training $f$, MULDE relies on a modification of denoising score matching [41], a method to train a neural network to approximate the energy gradient. Denoising score matching models the distribution of the training data injected with iid Gaussian noise, and Song and Ermon [38] extended it to multiple noise levels. Mahmood et al. [29] used the norm of this multi-level energy gradient approximation to detect anomalies in images. However, a vanishing gradient indicates all stationary points of the log-density function, which may appear both at the modes of the distribution, where normal data is concentrated, and in low-density regions, where anomalous data may reside. As shown in Fig. 2, some minima and maxima of the distribution remain indistinguishable even across a range of noise scales. By contrast, MULDE approximates the log-density function which, unlike its gradient, is a good anomaly indicator.

Figure 2. The log-density function is well suited for indicating anomalies, but its gradient is not. (Left:) A sample from a mixture of 4 Gaussians. (Right:) Learned negative log-density approximation (left column) and the norm of its gradient (right column). The negative log-density is a good anomaly indicator, taking low values for normal data and higher values for anomalous data. By contrast, the log-gradient norm is low not only at the modes of the distribution, but also at its minima between the modes, making it impossible to distinguish some anomalies from normal data.

3. Method

We perform anomaly detection in the space of semantic features extracted from videos. This lets us focus on detecting semantic anomalies, for example, unusual actions involving objects observed also under normal conditions, as opposed to anomalies in the space of raw input, like frame sequences that do not resemble real videos. We delegate feature extraction to off-the-shelf models and focus on the effective detection of anomalous events that they encode.

Motivation. Intuitively, anomalous video features should not be observed under 'normal' conditions. More formally, an anomalous feature is characterized by a small likelihood under the assumed statistical model of anomaly-free video features. This suggests detecting anomalies by approximating the probability density function $p$ and declaring test features with sufficiently low probability density to be anomalous. We adopt this approach and model the probability density with a neural network.

Overview. In practice, it is difficult to train a neural network to directly approximate the probability density $p$ of its training data. However, injecting the data with noise makes this approximation feasible, as we will explain below. The distribution of the noisy data takes the form

$$

q(\tilde{\mathbf{x}})=\int\rho(\tilde{\mathbf{x}}|\mathbf{x})\,p(\mathbf{x})\,d\mathbf{x}, \tag{1}

$$

where $\rho(\tilde{\mathbf{x}}|\mathbf{x})$ denotes the conditional distribution of a noisy sample $\tilde{\mathbf{x}}$ given a noise-free sample $\mathbf{x}$, which we take to be an iid Gaussian centered at $\mathbf{x}$. The main idea behind our anomaly detector is that $q$ preserves the shape of $p$ but, in contrast to it, lends itself to a neural approximation. Specifically, we approximate, up to a constant, the negative log-density function $-\log q(\tilde{\mathbf{x}})$, which is an excellent anomaly indicator due to its bijective relation to $q(\tilde{\mathbf{x}})$. Low values of $-\log q(\tilde{\mathbf{x}})$ correspond to high probability density and are characteristic of normal data. High values indicate areas of low probability density where anomalous data may reside. Fig. 2 illustrates this on a synthetic example. The technique we use to approximate the log-density with a neural network is a modification of score matching [41], a method to approximate the log-density gradient.

We introduce score matching in Sec. 3.1 and our training method in Sec. 3.2. In Sec. 3.3 and 3.4, we extend this method to approximating the negative log-density at different scales of injected noise, and introduce a regularization term intended to facilitate combining the multi-scale approximations. We discuss the choice of video features in Sec. 3.5. A diagram of our approach is presented in Fig. 1.

3.1. Background: Denoising score matching

Vincent [41] proposed a method to train a neural network $s$ , parameterized with a vector $\theta$ , to approximate the gradient of the negative log-density function of data perturbed with iid Gaussian noise, in the sense of solving

$$

\min_{\theta}\,\mathbb{E}_{\tilde{\mathbf{x}}\sim q(\tilde{\mathbf{x}})}\left\|s_{\theta}(\tilde{\mathbf{x}})+\nabla_{\tilde{\mathbf{x}}}\log q(\tilde{\mathbf{x}})\right\|_{2}^{2}. \tag{2}

$$

This gradient approximation forms the foundation of a family of generative models [38] which initialize a sample with noise and use the log-gradient approximation to drive the sample close to the mode of the distribution. We will show that it also enables effective detection of video anomalies.

Directly evaluating the objective (2) is impossible because $q(\tilde{\mathbf{x}})$ is not known analytically, but Vincent [41] showed that it is equivalent, up to a constant, to

$$

\min_{\theta}\,\mathbb{E}_{\substack{\mathbf{x}\sim p(\mathbf{x})\\ \tilde{\mathbf{x}}\sim\mathcal{N}(\tilde{\mathbf{x}}|\mathbf{x},\sigma\mathbf{I})}}\left\|s_{\theta}(\tilde{\mathbf{x}})-\frac{\tilde{\mathbf{x}}-\mathbf{x}}{\sigma^{2}}\right\|_{2}^{2}, \tag{3}

$$

which can be evaluated effectively. This gives rise to a stochastic algorithm for training $s$ that iterates three steps: composing a batch of noise-free training data $\mathbf{x}$, perturbing it with Gaussian noise to obtain a batch of noisy data $\tilde{\mathbf{x}}$, and making a gradient step on the expectation in Eq. (3), evaluated for the batch. Since this resembles training $s$ to predict the noise injected into $\mathbf{x}$, it is often called denoising score matching.
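The form of the regression target in Eq. (3) follows from the Gaussian noise model. The short derivation below is a sketch that uses only the definitions above, with $\sigma^{2}$ read as the variance of the injected noise:

$$
\rho(\tilde{\mathbf{x}}|\mathbf{x})\propto\exp\left(-\frac{\|\tilde{\mathbf{x}}-\mathbf{x}\|_{2}^{2}}{2\sigma^{2}}\right)
\quad\Longrightarrow\quad
-\nabla_{\tilde{\mathbf{x}}}\log\rho(\tilde{\mathbf{x}}|\mathbf{x})=\frac{\tilde{\mathbf{x}}-\mathbf{x}}{\sigma^{2}}.
$$

Differentiating $q(\tilde{\mathbf{x}})=\int\rho(\tilde{\mathbf{x}}|\mathbf{x})p(\mathbf{x})d\mathbf{x}$ under the integral sign and dividing by $q(\tilde{\mathbf{x}})$ then gives

$$
-\nabla_{\tilde{\mathbf{x}}}\log q(\tilde{\mathbf{x}})=\mathbb{E}\left[\frac{\tilde{\mathbf{x}}-\mathbf{x}}{\sigma^{2}}\,\middle|\,\tilde{\mathbf{x}}\right].
$$

Since the minimizer of a squared-error regression is the conditional mean of its target, a sufficiently flexible $s_{\theta}$ trained on Eq. (3) recovers the negative log-density gradient of the noisy data.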

3.2. Anomaly detection by denoising score matching

We modify the denoising score matching formulation to train a neural network $f_{\theta}$, where $\theta$ denotes the vector of parameters, to approximate $-\log q(\tilde{\mathbf{x}})$, as opposed to its gradient. To that end, we change the objective (3) so that the regression target is fitted by the gradient of $f_{\theta}$, instead of the network output itself, which yields

$$

\min_{\theta}\,\mathbb{E}_{\substack{\mathbf{x}\sim p(\mathbf{x})\\ \tilde{\mathbf{x}}\sim\mathcal{N}(\tilde{\mathbf{x}}|\mathbf{x},\sigma\mathbf{I})}}\left\|\nabla_{\tilde{\mathbf{x}}}f_{\theta}(\tilde{\mathbf{x}})-\frac{\tilde{\mathbf{x}}-\mathbf{x}}{\sigma^{2}}\right\|_{2}^{2}. \tag{4}

$$

This makes $\nabla_{\tilde{\mathbf{x}}}f_{\theta}(\tilde{\mathbf{x}})$ approximate $-\nabla_{\tilde{\mathbf{x}}}\log q(\tilde{\mathbf{x}})$ and, by the fundamental theorem of calculus applied along paths in feature space, aligns $f_{\theta}$ with $-\log q(\tilde{\mathbf{x}})$ up to a constant. Here, $f_{\theta}:\mathbb{R}^{d}\rightarrow\mathbb{R}$ is a mapping from the space of video features to scalar log-density values, and $\nabla_{\tilde{\mathbf{x}}}f_{\theta}:\mathbb{R}^{d}\rightarrow\mathbb{R}^{d}$, like $s$ in the standard score matching formulation (3), maps $d$-dimensional video features to $d$-dimensional vectors of log-density gradients.
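The "up to a constant" behaviour can be checked on a toy problem. The following is a minimal NumPy sketch, not the paper's implementation: it assumes 1-D Gaussian data and replaces the neural network with a quadratic energy whose gradient is linear, so that objective (4) becomes an ordinary least-squares problem. The values of `mu`, `s`, `sigma` and all helper names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, s, sigma = 2.0, 1.5, 0.5                 # illustrative data mean/std and noise scale
n = 200_000

x = rng.normal(mu, s, size=n)                # noise-free samples x ~ p
x_noisy = x + sigma * rng.normal(size=n)     # noisy samples, noise variance sigma^2
target = (x_noisy - x) / sigma**2            # regression target of objective (4)

# Toy energy f(z) = 0.5 * a * z^2 + b * z + c, so that df/dz = a * z + b.
# Objective (4) only involves the gradient, so it is a least-squares problem
# in (a, b); the offset c is left undetermined and set to 0 here.
design = np.stack([x_noisy, np.ones(n)], axis=1)
(a, b), *_ = np.linalg.lstsq(design, target, rcond=None)

def f(z):
    """Fitted toy energy with the free offset fixed to zero."""
    return 0.5 * a * z**2 + b * z

def neg_log_q(z):
    """Exact negative log-density of the noisy data, N(mu, s^2 + sigma^2)."""
    var = s**2 + sigma**2
    return 0.5 * (z - mu) ** 2 / var + 0.5 * np.log(2 * np.pi * var)

grid = np.linspace(mu - 4.0, mu + 4.0, 9)
offset = f(grid) - neg_log_q(grid)           # roughly the same value at every grid point
print(np.round(offset, 2))
```

In this toy setting the fitted energy differs from the exact negative log-density by a nearly constant offset, and it grows with the distance from the mode, which is what makes it usable as an anomaly indicator.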

Notably, our formulation has an advantage over standard denoising score matching even when the goal is to approximate the gradient of the log-density, as opposed to the log-density itself. By Stokes' theorem, gradients form conservative, that is, curl-free, vector fields. Directly approximating the log-density gradient with a neural network, as done by the standard approach, may result in a vector field that is not conservative, in other words, one that does not represent the gradient of any function. By contrast, the gradient of a neural network trained using our formulation is guaranteed to form a conservative vector field. On the downside, training $f_{\theta}$ with our loss requires the network to be twice differentiable, which precludes the use of ReLU, several other nonlinearities, and max pooling.
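The difference between the two parameterizations can be illustrated numerically. In the sketch below, which is an illustration under assumed toy architectures rather than the networks used in the paper, a conservative field has a symmetric Jacobian, so the asymmetry of a finite-difference Jacobian serves as a check. The one-hidden-layer `tanh` models, their random weights, and the helper names are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d, h = 3, 8
W, b = rng.normal(size=(h, d)), rng.normal(size=h)
v = rng.normal(size=h)          # output weights of the scalar energy network
V = rng.normal(size=(d, h))     # output weights of the direct score network

def grad_energy(x):
    """Analytic gradient of the scalar energy f(x) = v . tanh(W x + b)."""
    t = np.tanh(W @ x + b)
    return W.T @ (v * (1.0 - t**2))

def direct_score(x):
    """Vector-valued network s(x) = V tanh(W x + b), not derived from a scalar."""
    return V @ np.tanh(W @ x + b)

def jacobian(fn, x, eps=1e-5):
    """Central finite-difference Jacobian, J[i, j] = d fn_i / d x_j."""
    cols = []
    for j in range(x.size):
        e = np.zeros_like(x)
        e[j] = eps
        cols.append((fn(x + e) - fn(x - e)) / (2 * eps))
    return np.stack(cols, axis=1)

x0 = rng.normal(size=d)
J_energy = jacobian(grad_energy, x0)
J_direct = jacobian(direct_score, x0)
asym_energy = np.abs(J_energy - J_energy.T).max()   # close to zero: conservative field
asym_direct = np.abs(J_direct - J_direct.T).max()   # generally nonzero
print(asym_energy, asym_direct)
```

The smooth `tanh` nonlinearity is used here because the energy has to be differentiated twice when its gradient is trained, in line with the twice-differentiability requirement noted above.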

3.3. Distribution modeling across noise scales

We recall that training with our loss (4) makes $f_{\theta}$ approximate the negative log-density of the distribution of noisy data $q(\tilde{\mathbf{x}})$, connected to the distribution of noise-free data $p(\mathbf{x})$ through the noise distribution $\rho(\tilde{\mathbf{x}}|\mathbf{x})$, an iid Gaussian centered at $\mathbf{x}$ with standard deviation $\sigma$. The choice of $\sigma$, called the noise scale, represents a compromise between making $q$ closer to $p$ at small noise scales and extending its support to cover more anomalies at larger noise levels. In theory, the optimal noise scale could be selected by cross-validation on a combination of normal and anomalous data, but in practice, anomalous validation data is rarely available. Therefore, instead of settling for a single noise scale, we approximate the log-density for a range of noise scales and combine the estimates at different scales by modeling their joint distribution with a Gaussian mixture.

To implement the multiscale log-density approximation, we take inspiration from Song and Ermon [38], who extended the original score matching formulation, presented in Eq. (3), to multiple noise scales, and apply a similar extension to our objective (4). Instead of approximating $-\log q(\tilde{\mathbf{x}})$ for a fixed $\sigma$, we approximate a family of functions $-\log q_{\sigma}(\tilde{\mathbf{x}})$, parameterized by $\sigma$, with a neural network $f_{\theta}$, conditioned on $\sigma$. We found it beneficial to put more emphasis on smaller values of $\sigma$ when training $f_{\theta}$. Thus, we sample $\sigma$ from the log-uniform distribution on the interval $[\sigma_{\mathrm{low}},\sigma_{\mathrm{high}}]$ and minimize

$$

\min_{\theta}\,\mathbb{E}_{\substack{\mathbf{x}\sim p(\mathbf{x})\\ \tilde{\mathbf{x}}\sim\mathcal{N}(\tilde{\mathbf{x}}|\mathbf{x},\sigma\mathbf{I})\\ \sigma\sim\mathcal{LU}(\sigma_{\mathrm{low}},\sigma_{\mathrm{high}})}}\lambda(\sigma)\left\|\nabla_{\tilde{\mathbf{x}}}f_{\theta}(\tilde{\mathbf{x}},\sigma)-\frac{\tilde{\mathbf{x}}-\mathbf{x}}{\sigma^{2}}\right\|_{2}^{2}, \tag{5}

$$

where $\lambda(\sigma)$ is a factor that balances the influence of the loss terms at different noise levels. We set $\lambda(\sigma)=\sigma^{2}$ .
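The sampling of $\sigma$ and the effect of the weighting can be sketched as follows. This is an illustration with assumed values for the noise range and random stand-in features, not the paper's training code. It relies on the identity $\sigma^{2}\|(\tilde{\mathbf{x}}-\mathbf{x})/\sigma^{2}\|_{2}^{2}=\|\boldsymbol{\epsilon}\|_{2}^{2}$ for $\tilde{\mathbf{x}}=\mathbf{x}+\sigma\boldsymbol{\epsilon}$, which shows that with $\lambda(\sigma)=\sigma^{2}$ the target has the same magnitude at every noise scale.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_log_uniform(low, high, size, rng):
    """Draw sigma so that log(sigma) is uniform on [log(low), log(high)]."""
    return np.exp(rng.uniform(np.log(low), np.log(high), size=size))

def weighted_dsm_terms(grad_f, x, x_noisy, sigma):
    """Per-sample lambda(sigma) * ||grad_f - (x_noisy - x) / sigma^2||^2, lambda = sigma^2."""
    target = (x_noisy - x) / sigma[:, None] ** 2
    return sigma**2 * np.sum((grad_f - target) ** 2, axis=1)

n, d = 100_000, 4
sigma_low, sigma_high = 1e-3, 1.0                  # illustrative range, not from the paper
sigma = sample_log_uniform(sigma_low, sigma_high, n, rng)
x = rng.normal(size=(n, d))                        # stand-in for video features
x_noisy = x + sigma[:, None] * rng.normal(size=(n, d))

# With a zero gradient prediction the weighted term reduces to ||eps||^2,
# whose mean is d regardless of the noise scale.
terms = weighted_dsm_terms(np.zeros((n, d)), x, x_noisy, sigma)
small, large = sigma < 0.03, sigma >= 0.03
print(np.median(sigma), terms[small].mean(), terms[large].mean())
```

Log-uniform sampling places about half of the draws below the geometric mean of the interval, which is one way to read the emphasis on smaller values of $\sigma$ mentioned above.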

Once the network is trained, we fit a Gaussian mixture model to multi-scale log-density approximation vectors $[f_{\theta}(\mathbf{x},\sigma_{i})]_{i=1\dots L}$ for an evenly spaced sequence of noise levels $\{\sigma_{i}\}_{i=1}^{L}$, where $\sigma_{1}=\sigma_{\mathrm{low}}$ and $\sigma_{L}=\sigma_{\mathrm{high}}$. At test time, our neural network takes a vector of video features and produces a multi-scale vector of log-density approximations, which is then input to the Gaussian mixture model, yielding the final anomaly score.
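The test-time pipeline described above can be sketched with scikit-learn. The example is a hedged illustration: `energy` is a hypothetical stand-in for the trained network $f_{\theta}(\mathbf{x},\sigma)$ (here the exact negative log-density, up to a constant, of standard normal data smoothed with noise of scale $\sigma$), and the number of noise levels, the number of mixture components, the covariance type, and the noise range are assumptions, not values from the paper.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)

def energy(x, sigma):
    """Hypothetical stand-in for f_theta(x, sigma): negative log-density, up to a
    constant, of N(0, I) data perturbed with Gaussian noise of scale sigma."""
    return 0.5 * np.sum(x**2, axis=1) / (1.0 + sigma**2)

def multiscale_vector(x, sigmas):
    """Stack the energies at all noise levels into an (n, L) array."""
    return np.stack([energy(x, s) for s in sigmas], axis=1)

sigmas = np.linspace(1e-3, 1.0, 16)                  # evenly spaced, sigma_1 = low, sigma_L = high
train = rng.normal(size=(5000, 8))                   # normal features used for fitting
test_normal = rng.normal(size=(500, 8))
test_anomalous = rng.normal(loc=3.0, size=(500, 8))  # shifted features play the anomalies

# Fit the mixture to multi-scale vectors of normal data (EM under the hood).
gmm = GaussianMixture(n_components=3, covariance_type="full",
                      reg_covar=1e-4, random_state=0)
gmm.fit(multiscale_vector(train, sigmas))

def anomaly_score(x):
    """Negative GMM log-likelihood of the multi-scale vector; higher means more anomalous."""
    return -gmm.score_samples(multiscale_vector(x, sigmas))

print(anomaly_score(test_normal).mean(), anomaly_score(test_anomalous).mean())
```

Thresholding `anomaly_score` then yields the detection decision; the threshold itself is application dependent and is not specified here.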

3.4. Multiscale training regularization

A limitation of our method is that $f_{\theta}(\cdot,\sigma)$ can be trained to approximate $-\log q_{\sigma}(\tilde{\mathbf{x}})$ only up to a constant. That is, $f_{\theta}(\tilde{\mathbf{x}},\sigma)$ effectively approximates $-\log q_{\sigma}(\tilde{\mathbf{x}})+C_{\sigma}$, where $C_{\sigma}$ is a constant that we do not know. In our formulation, there is no guarantee that this constant does not change across the range of $\sigma$. Since the variation of $C_{\sigma}$ may make it more difficult to aggregate the estimates at different scales, we discourage it by using a regularization term $f_{\theta}(\mathbf{x},\sigma)^{2}$ that penalizes the magnitude of the approximate negative log-density at noise-free examples. Our full training objective thus becomes

$$

\min_{\theta}\ \mathop{\mathbb{E}}_{\substack{\mathbf{x}\sim p(\mathbf{x})\\ \tilde{\mathbf{x}}\sim\mathcal{N}(\tilde{\mathbf{x}}|\mathbf{x},\sigma\mathbf{I})\\ \sigma\sim\mathcal{LU}(\sigma_{\mathrm{low}},\sigma_{\mathrm{high}})}}\Big[\lambda(\sigma)\left\|\nabla_{\tilde{\mathbf{x}}}f_{\theta}(\tilde{\mathbf{x}},\sigma)-\frac{\tilde{\mathbf{x}}-\mathbf{x}}{\sigma^{2}}\right\|_{2}^{2}+\beta f_{\theta}(\mathbf{x},\sigma)^{2}\Big], \tag{6}

$$

where $\beta$ is a hyperparameter of our method. Minimizing (6) no longer makes $\nabla_{\mathbf x}f_{\theta}$ an unbiased estimate of the log-gradient of the distribution, but as shown in our ablation studies, it improves our results in video anomaly detection. Algorithm 1 summarizes the training of the log-density approximation $f_{\theta}$. The Gaussian mixture model is fitted with the standard expectation-maximization algorithm.

其中 $\beta$ 是我们方法的一个超参数。最小化 (6) 不再使 $\nabla_{\mathbf x}f_{\theta}$ 成为分布对数梯度的无偏估计,但如我们的消融实验所示,它提升了视频异常检测的效果。算法 1 总结了训练对数密度近似 $f_{\theta}$ 的过程。高斯混合模型采用标准期望最大化算法进行拟合。
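As a brief illustration of why the score-matching term alone leaves $C_{\sigma}$ undetermined, consider shifting the model output by an arbitrary offset $c$ (a symbol introduced here only for this sketch). The gradient with respect to $\tilde{\mathbf{x}}$ is unaffected, whereas the regularizer is not:

$$

\nabla_{\tilde{\mathbf{x}}}\big(f_{\theta}(\tilde{\mathbf{x}},\sigma)+c\big)=\nabla_{\tilde{\mathbf{x}}}f_{\theta}(\tilde{\mathbf{x}},\sigma),\qquad \beta\big(f_{\theta}(\mathbf{x},\sigma)+c\big)^{2}\neq\beta f_{\theta}(\mathbf{x},\sigma)^{2}\ \text{ in general.}

$$

Read this way, the first term of (6) cannot distinguish between offsets, while the second term favors, at every noise level, outputs that stay close to zero on noise-free training examples. This is one plausible reading of how the regularizer tends to align the models across noise levels.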

3.5. Selection of video features

3.5. 视频特征选择

Feature selection is closely tied to the type of target anomalies. For example, human pose features are well suited for detecting falls, and optical-flow-based ones help detect objects moving with unusual speeds or in unusual directions. MULDE is feature-agnostic, and in Sec. 4 we demonstrate its application with pose, velocity, and deep features extracted from bounding boxes of object proposals, as well as with features extracted from entire frames and their sequences. As reported in Sec. 4, feature extraction dominates the running time of our method, which enables selecting the feature extractor to match the desired frame rate.

特征选择与目标异常类型密切相关。例如,人体姿态特征非常适合检测跌倒行为,而基于光流(optical flow)的特征有助于检测以异常速度或方向移动的物体。MULDE具有特征无关性,在第4节我们将展示其在姿态、速度以及从物体提议的边界框中提取的深度特征上的应用,以及在从整个帧及其序列中提取的特征上的应用。如第4节所述,特征提取在我们的方法中占据了大部分运行时间,这使得可以根据所需的帧率选择合适的特征提取器。

Algorithm 1 Training MULDE’s anomaly indicator. The terms and steps that differ from the standard multi-scale denoising score matching [38] are highlighted.

算法 1: 训练 MULDE 的异常指标。与标准多尺度去噪分数匹配 [38] 不同的术语和步骤已高亮标注。

Require:

Neural log-density model $f_{\theta}$, parameterized by $\theta$

Training set $T$ of normal video features

Lower and upper bounds of the noise scale range, $\sigma_{\mathrm{low}}$ and $\sigma_{\mathrm{high}}$

Regularization strength $\beta$

1: $\theta$ ← random initialization

2: while not converged do

3: $X$ ← sample a batch from $T$

4: for $\mathbf{x} \in X$ do // iterate over batch elements

5: $\sigma$ ← sample log-uniformly from $(\sigma_{\mathrm{low}}, \sigma_{\mathrm{high}})$

4. Experimental Evaluation

4. 实验评估

Data sets. We evaluated MULDE on five VAD benchmarks, containing videos captured with static cameras:

数据集

我们在五个视频异常检测(VAD)基准上评估了MULDE,这些数据集均采用静态摄像头拍摄的视频:

- Ped2 [28] includes 16 training and 12 test videos of a campus scene. The training videos show pedestrians and the test videos contain anomalies, like cyclists, skateboarders, and cars in pedestrian areas.

- Avenue [25] consists of 16 training and 21 test videos of a walkway. Anomalies include people running, throwing objects, and walking in the wrong direction.

- ShanghaiTech [26] comprises 330 training and 107 test videos of 13 pedestrian traffic scenes differing by the camera viewpoint and lighting conditions. Anomalous events include robbery, jumping, fighting, and cycling.

- UBnormal [1] is composed of 543 synthetic videos of 29 virtual scenes. It contains human-related anomalies, like fighting, running, and jumping, but it also includes car accidents and environmental anomalies, like fog.

- UCFCrime [39] contains 1900 real-world surveillance videos, totaling 128 hours, and including 13 anomaly types, like fighting, robbery, road accidents, and burglary.

- Ped2 [28] 包含16个训练视频和12个测试视频,场景为校园。训练视频展示行人,测试视频则包含异常行为,如骑行、滑板以及行人区域出现车辆。

- Avenue [25] 由16个训练视频和21个测试视频组成,拍摄于一条人行道。异常行为包括奔跑、投掷物品以及逆向行走。

- Shanghai Tech [26] 包含330个训练视频和107个测试视频,涵盖13个不同摄像头视角与光照条件的行人交通场景。异常事件涉及抢劫、跳跃、斗殴及骑行。

- UBnormal [1] 由543个合成视频构成,覆盖29个虚拟场景。除人类相关异常(如斗殴、奔跑、跳跃)外,还包含车祸及环境异常(如雾气)。

- UCFCrime [39] 包含1900段真实监控视频,总时长128小时,涉及13类异常事件,例如斗殴、抢劫、交通事故和入室盗窃。

All our experiments were run in the ‘one-class classification’ setting, that is, we used no anomalous videos for training. In the experiments on UBnormal and UCFCrime, which contain anomalous training videos, we discarded these videos and restricted training to normal data.

我们的所有实验均在"单类分类"设置下运行,即训练过程中未使用任何异常视频。对于包含异常训练视频的UBnormal和UCFCrime数据集实验,我们弃用这些视频并将训练数据严格限定为正常样本。

Performance metrics. We followed the standard practice and gauged performance on a frame-by-frame basis. For the object-centric approaches, which yield an anomaly score for each object detected in every frame, we took the highest, i.e. most anomalous, score in a frame for the evaluation. We used the area under the receiver operating characteristic curve (AUC-ROC) as the main performance metric.

性能指标

我们遵循标准做法,逐帧评估性能。对于以物体为中心的方法,这些方法会为每帧中检测到的每个物体生成一个异常分数,我们取帧中最高的(即最异常的)分数进行评估。我们使用接收者操作特征曲线下面积 (AUC-ROC) 作为主要性能指标。

Two methods to aggregate the AUC-ROC over multiple videos can be found in the literature: the micro and the macro score [1, 2, 11, 35, 36]. For the macro score, the AUC-ROC is computed separately for each video and then averaged across all videos in the test set. The micro score computes the AUC-ROC jointly for all frames of all test videos. In abstract terms, the macro score reflects performance attained by adjusting the detection threshold for each video independently, whereas the micro score is more conservative and applies the same threshold to all test videos. Since we address a VAD use case without an adaptive threshold, we rely on the micro score in our evaluation, but report the results in terms of both metrics. In the supplementary material, we additionally report the tracking- and region-based metrics by Ramachandra and Jones [33].

文献中记载了两种聚合多视频AUC-ROC的方法:微观分数(micro score)和宏观分数(macro score) [1, 2, 11, 35, 36]。宏观分数会为每个视频单独计算AUC-ROC,然后对测试集中所有视频的结果取平均值。微观分数则联合计算所有测试视频帧的AUC-ROC。抽象地说,宏观分数反映了通过为每个视频独立调整检测阈值所获得的性能,而微观分数更为保守,对所有测试视频应用相同的阈值。由于我们处理的视频异常检测(VAD)用例没有自适应阈值,因此在评估中采用微观分数,但会同时报告两种指标的结果。在补充材料中,我们还额外报告了Rama chandra和Jones [33]提出的基于跟踪和区域的指标。
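The difference between the two aggregation schemes can be made concrete with a small sketch. The code below is illustrative only: the function names and the toy scores are assumptions, and the AUC-ROC is computed by direct pairwise comparison, which is simple but quadratic in the number of frames.

```python
def auc_roc(scores, labels):
    """AUC-ROC as the probability that an anomalous frame outscores a
    normal one, with ties counted as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC-ROC needs both normal and anomalous frames")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def micro_macro_auc(videos):
    """videos: list of (frame_scores, frame_labels), one pair per video."""
    # Macro: one AUC-ROC per video, then the average over videos.
    macro = sum(auc_roc(s, y) for s, y in videos) / len(videos)
    # Micro: a single AUC-ROC over the frames of all videos pooled together.
    all_scores = [v for s, _ in videos for v in s]
    all_labels = [v for _, y in videos for v in y]
    micro = auc_roc(all_scores, all_labels)
    return micro, macro

# Toy data: each video is ranked perfectly on its own, but the second
# video has a higher overall score level than the first.
video_a = ([0.1, 0.2, 0.9], [0, 0, 1])
video_b = ([2.0, 2.1, 3.0], [0, 0, 1])
micro, macro = micro_macro_auc([video_a, video_b])
```

In this toy case the macro score is 1.0 while the micro score is 0.75, because the normal frames of the second video outscore the anomalous frame of the first once a single threshold is shared across videos.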

Baselines. We compared MULDE to the best-performing VAD algorithms, including ones based on the reconstruction error [10, 16, 47], auxiliary tasks [1, 2, 10, 24, 36, 42, 45], adversarial training [1, 8, 11, 48], normalizing flows [14], and one-class classification with a multilinear classifier [43]. We reproduced the performance metrics of these methods as reported in the original papers. We present an even broader comparison, including less recent work, in the supplementary material.

基线方法

我们将MULDE与性能最佳的视频异常检测(VAD)算法进行了比较,包括基于重构误差的方法 [10, 16, 47]、基于辅助任务的方法 [1, 2, 10, 24, 36, 42, 45]、基于对抗训练的方法 [1, 8, 11, 48]、基于标准化流的方法 [14] 以及使用多线性分类器的单类分类方法 [43]。我们按照原始论文报告的性能指标复现了这些方法的结果。补充材料中还提供了更广泛的比较,包括一些较早的研究工作。

Two baselines are related to our method more closely than the others. AccI-VAD [35] approximates the probability density function of normal video features with a Gaussian mixture model, while we perform this approximation with a neural network. MSMA [29] uses the norm of the log-density gradient as an anomaly indicator, while our anomaly indicator is based on the log-density itself. Since MSMA was developed for image anomaly detection, we reimplemented it to work with video features.

有两种基线方法与我们的方法关联更为密切。AccI-VAD [35] 采用高斯混合模型近似正常视频特征的概率密度函数,而我们使用神经网络进行这种近似。MSMA [29] 使用对数密度梯度的范数作为异常指标,而我们的异常指标基于对数密度本身。由于 MSMA 是为图像异常检测开发的,我们重新实现了它以处理视频特征。

Implementation details. In our experiments, our density model $f_{\theta}$, parametrized by $\theta$, has two hidden layers with 4096 units followed by GELU nonlinearities. The final layer has an output dimension of one without any nonlinearity. It is trained using the Adam update rule [18], with exponential decay rates $\beta_{1}=0.5$ and $\beta_{2}=0.9$, and a batch size of 2048. We use the learning rates of 5e-4 and 1e-4 in the object- and frame-centric experiments, respectively.

实现细节

在我们的实验中,密度模型 $f_{\theta}$ (参数化为 $\theta$) 包含两个隐藏层,每层有4096个单元,后接GELU非线性激活。最后一层输出维度为1且不包含任何非线性。模型使用Adam更新规则 [18] 进行训练,指数衰减率 $\beta_{1}=0.5$ 和 $\beta_{2}=0.9$,批量大小为2048。在物体中心实验和帧中心实验中,我们分别使用5e-4和1e-4的学习率。

Table 1. Object-centric results. Frame-level AUC-ROC (%) comparison (best marked bold, second best underlined). *implemented by us.

表 1. 以对象为中心的结果。帧级AUC-ROC (%) 对比 (最优值加粗显示,次优值加下划线)。*由我们实现。

| Method | Ped2 Micro | Ped2 Macro | Avenue Micro | Avenue Macro | ShanghaiTech Micro | ShanghaiTech Macro |
|---|---|---|---|---|---|---|
| CAE-SVM [16] | 94.3 | 97.8 | 87.4 | 90.4 | 78.7 | 84.9 |
| VEC [47] | 97.3 | - | 90.2 | - | 74.8 | - |
| SSMTL [10] | 97.5 | 99.8 | 91.5 | 91.9 | 82.4 | 89.3 |
| HF2 [24] | 99.3 | - | 91.1 | 93.5 | 76.2 | - |
| BA-AED [11] | 98.7 | 99.7 | 92.3 | 90.4 | 82.7 | 89.3 |
| [11]+SSPCAB [36] | - | - | 92.9 | 91.9 | 83.6 | 89.5 |
| Jigsaw-Puzzle [42] | 99.0 | 99.9 | 92.2 | 93.0 | 84.3 | 89.8 |
| [10]+UbNormal [1] | - | - | 93.0 | 93.2 | 83.7 | 90.5 |
| AccI-VAD [35] | 99.1 | 99.9 | 93.3 | 96.2 | 85.9 | 89.6 |
| SSMTL++ [2] | - | - | 93.7 | 92.5 | 83.8 | 90.5 |
| MSMA* [29] | 99.5 | 99.9 | 90.2 | 92.5 | 84.1 | 90.2 |
| STG-NF [14] | - | - | - | - | 85.9 | - |
| MULDE$_{\beta=0}$ (ours) | 99.7 | 99.9 | 93.1 | 96.1 | 86.4 | 91.0 |
| MULDE (ours) | 99.7 | 99.9 | 94.3 | 96.1 | 86.7 | 91.5 |

Both during training and at test time, we standardize the video features component-wise using the statistics of the training set. During training, we sample the noise scale used for each batch element from the log-uniform distribution on the interval $[\sigma_{\mathrm{low}},\sigma_{\mathrm{high}}]=[10^{-3},1.0]$. For evaluation, we use $L=16$ evenly spaced noise levels in $[\sigma_{\mathrm{low}},\sigma_{\mathrm{high}}]$.

在训练和测试期间,我们使用训练集的统计数据对视频特征进行逐分量标准化。训练时,我们从区间 $[\sigma_{\mathrm{low}},\sigma_{\mathrm{high}}]=[1\mathrm{e}{-}3,1.0]$ 的对数均匀分布中为每批元素采样噪声尺度。评估时,我们在 $[\sigma_{\mathrm{low}},\sigma_{\mathrm{high}}]$ 之间使用 $L=16$ 个均匀间隔的噪声级别。
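The preprocessing and noise-level choices described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the authors' code: all names are hypothetical, "evenly spaced" is interpreted as linear spacing with both endpoints included, and a small `eps` is added to the standard deviation to avoid division by zero.

```python
import math
import random

SIGMA_LOW, SIGMA_HIGH = 1e-3, 1.0  # noise range stated in the text

def sample_log_uniform(low, high, rng):
    """Draw sigma such that log(sigma) is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))

def evenly_spaced_levels(low, high, count):
    """Evaluation noise levels, linearly spaced, endpoints included."""
    step = (high - low) / (count - 1)
    return [low + i * step for i in range(count)]

def standardize(features, mean, std, eps=1e-8):
    """Component-wise standardization with training-set statistics."""
    return [(x - m) / (s + eps) for x, m, s in zip(features, mean, std)]

rng = random.Random(0)
# One noise scale per batch element (batch size 2048 as in the text).
train_sigmas = [sample_log_uniform(SIGMA_LOW, SIGMA_HIGH, rng)
                for _ in range(2048)]
# L = 16 noise levels used to build the multi-scale vector at test time.
eval_sigmas = evenly_spaced_levels(SIGMA_LOW, SIGMA_HIGH, 16)
```

Note that log-uniform sampling spends roughly equal probability mass on each decade of the range, so about one third of the training noise scales fall below $10^{-2}$, whereas linearly spaced evaluation levels concentrate on larger values.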

Video feature extraction. MULDE is agnostic to the features used to represent the video content. In our experiments, we reused off-the-shelf video feature extractors.

视频特征提取

MULDE 不限定用于表示视频内容的特征类型。在我们的实验中,我们复用了现成的视频特征提取器。

In the object-centric experiments, we used the feature extraction pipeline proposed by Reiss and Hoshen [35]. It detects objects in each video frame and extracts deep and velocity features for each detected object. Additionally, pose features are extracted for each detected person. The pose vector contains coordinates of 17 keypoints and is obtained with AlphaPose [7]. CLIP image encoder [32] is used to extract deep features, which take the form of 512-dimensional vectors. The velocity features are produced by FlowNet 2.0 [15] and binned into histograms of oriented flows.

在以物体为中心的实验中,我们采用了Reiss和Hoshen [35]提出的特征提取流程。该流程检测每帧视频中的物体,并为每个检测到的物体提取深度特征和速度特征。此外,还会为每个检测到的人体提取姿态特征。姿态向量包含17个关键点的坐标,通过AlphaPose [7]获得。CLIP图像编码器 [32]用于提取深度特征,这些特征以512维向量的形式呈现。速度特征由FlowNet 2.0 [15]生成,并被分箱为定向流直方图。

In the frame-centric experiments, we extracted features using Hiera-L [37], a masked autoencoder pre-trained on images and fine-tuned on Kinetics 400 [17], a large-scale video action recognition data set. Hiera-L takes sequences of 16 frames as input and produces 1152-dimensional feature vectors.

在帧中心实验中,我们使用Hiera-L [37]提取特征,这是一种在图像上预训练并在Kinetics 400 [17](一个大规模视频动作识别数据集)上微调的掩码自编码器。Hiera-L以16帧序列作为输入,并生成1152维特征向量。

Performance in object-centric VAD. We present the results in object-centric VAD in Table 1. MULDE outperforms all the baselines in terms of the more conservative micro score. Interestingly, AccI-VAD which, like MULDE, relies on modeling the probability density of normal data, ranks third on all three data sets. The high accuracy of both methods speaks in favor of the density modeling approach, while the edge MULDE holds over AccI-VAD attests to the superiority of MULDE’s neural density model over the combination of Gaussian mixture models and the $k$-th nearest neighbor technique employed by AccI-VAD. MSMA, which uses an approximation of the log-density gradient as anomaly indicator, is outperformed by our log-density-based method by a fair margin.

以目标为中心的视频异常检测性能

我们在表1中展示了以目标为中心的视频异常检测(VAD)结果。MULDE在更为保守的微观评分指标上超越了所有基线方法。值得注意的是,与MULDE类似、同样基于正常数据概率密度建模的AccI-VAD,在三个数据集中均位列第三。两种方法的高准确率印证了密度建模方法的有效性,而MULDE对AccI-VAD的优势则证明了其神经密度模型优于后者采用的高斯混合模型与第 $k$ 近邻技术组合的方案。使用对数密度梯度近似作为异常指标的MSMA方法,其表现显著逊于我们基于对数密度的方法。

Table 2. Frame-centric results. Frame-level AUC-ROC (%) comparison (best marked bold, second best underlined). *implemented by us.

表 2. 帧中心结果。帧级 AUC-ROC (%) 比较 (最佳加粗,次佳加下划线)。*由我们实现。

| Method | ShanghaiTech Micro | ShanghaiTech Macro | UCF-Crime Micro | UCF-Crime Macro | UBnormal Micro | UBnormal Macro |
|---|---|---|---|---|---|---|
| CT-D2GAN [8] | 77.7 | - | - | - | - | - |
| CAC [44] | 79.3 | - | - | - | - | - |
| Scene-Aware [40] | 74.7 | - | 72.7 | - | - | - |
| GODS [43] | - | - | 70.5 | - | - | - |
| GCL [48] | - | - | 74.2 | - | - | - |
| UBnormal [1] | - | - | - | - | 68.5 | 80.3 |
| FPDM [45] | 78.6 | - | 74.7 | - | 62.7 | - |
| AccI-VAD$_{\mathrm{GMM}}$* [35] | 76.2 | 82.9 | 60.3 | 84.5 | 66.8 | 83.2 |
| AccI-VAD$_{k\mathrm{NN}}$* [35] | 71.9 | 83.1 | 53.0 | 82.7 | 65.2 | 82.5 |
| MSMA* [29] | 76.7 | 84.2 | 64.5 | 83.4 | 70.3 | 85.1 |
| MULDE$_{\beta=0}$ (ours) | 78.4 | 86.0 | 75.9 | 84.8 | 71.3 | 86.0 |
| MULDE (ours) | 81.3 | 85.9 | 78.5 | 84.9 | 72.8 | 85.5 |

Performance in frame-centric VAD. As can be seen in Table 2, in frame-centric VAD, MULDE outperforms other methods on all three data sets. Notably, on the UCFCrime data set, by far the largest publicly available VAD benchmark, we advance the state of the art in terms of the micro score by 3.8 percentage points. We improve the state of the art by more than 2 pp on ShanghaiTech and UBnormal.

帧中心VAD性能表现

如表2所示,在帧中心VAD任务中,MULDE方法在三个数据集上均优于其他方法。值得注意的是,在当前最大的公开VAD基准数据集UCFCrime上,我们将微观分数指标提升了3.8个百分点。在Shanghai Tech和UBnormal数据集上,我们的方法也将当前最优结果提高了超过2个百分点。

Moreover, while recent object-centric methods dominate frame-centric methods on data sets enabling both types of evaluation, MULDE narrows this performance gap, attaining the micro score of 81.3% in frame-centric VAD on ShanghaiTech and 86.7% in object-centric VAD on the same data set. On UBnormal, our frame-centric approach even outperforms the object-centric state-of-the-art method [14] by 1.1 percentage points of the micro score.

此外,尽管近期以物体为中心的方法在支持两种评估类型的数据集上优于以帧为中心的方法,但MULDE缩小了这一性能差距,在上海科技大学数据集上以帧为中心的异常检测(VAD)中取得了81.3%的微平均分数,在同一数据集上以物体为中心的VAD中达到86.7%。在UBnormal数据集上,我们的帧中心方法甚至以1.1个百分点的微平均分数优势超越了当前最先进的物体中心方法[14]。

Ablation studies. To validate the design of MULDE, we ran ablation studies and evaluated the contribution to performance from our regularization term, the Gaussian mixture model, and the architecture of our anomaly indicator.

消融实验

为验证 MULDE 的设计,我们进行了消融实验,评估了正则化项、高斯混合模型以及异常指标架构对性能的贡献。

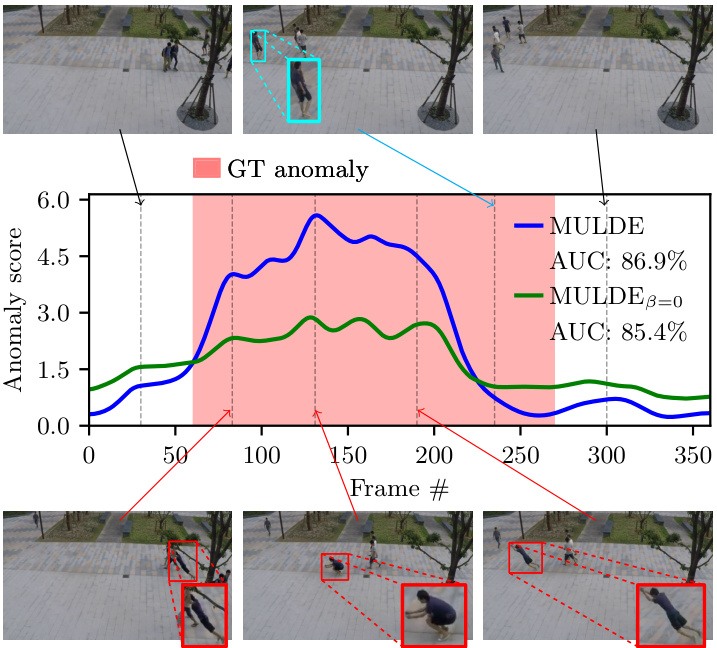

As shown in Table 3, regularization improves the results of frame-centric VAD in terms of the micro score, although different values of the regularization factor $\beta$ are optimal for different data sets. We take $\beta=0.1$ as a compromise among performance on the three data sets. A qualitative example of anomaly detection by MULDE with and without regularization, shown in Fig. 3, demonstrates that a regularized model produces stronger anomaly indications.

如表 3 所示,正则化 (regularization) 在微观分数 (micro score) 方面提升了帧中心视频异常检测 (frame-centric VAD) 的结果,尽管不同的正则化因子 $\beta$ 值对不同数据集效果最佳。我们选择 $\beta=0.1$ 作为在三个数据集性能之间的折衷方案。图 3 展示了 MULDE 在有/无正则化情况下的异常检测定性示例,表明经过正则化的模型能产生更显著的异常指示。

Table 3. Frame-centric performance of MULDE trained with different values of the regularization parameter $\beta$. Frame-level AUC-ROC (%) comparison (best marked bold, second best underlined).

表 3: 采用不同正则化参数 $\beta$ 训练的 MULDE 帧中心性能。帧级 AUC-ROC (%) 对比 (最优值加粗,次优值加下划线)。

| $\beta$ | ShanghaiTech Micro | ShanghaiTech Macro | UCF-Crime Micro | UCF-Crime Macro | UBnormal Micro | UBnormal Macro |
|---|---|---|---|---|---|---|
| 0.0 | 78.4 | 86.0 | 75.9 | 84.8 | 71.3 | 86.0 |
| 0.01 | 80.7 | 84.9 | 76.6 | 84.9 | 72.9 | 85.2 |
| 0.1 | 81.3 | 85.9 | 78.5 | 84.9 | 72.8 | 85.5 |
| 1.0 | 81.4 | 84.5 | 77.2 | 85.5 | 72.5 | 84.7 |

Figure 3. Anomaly detection with MULDE in a test video of the ShanghaiTech data set (video 13 in scene 4). Pedestrians walking in frames 30 and 300 represent normal behavior. A person jumping across the scene is annotated as anomalous. The anomaly indication produced by MULDE is aligned with the ground truth (GT) at its beginning but terminates earlier than the GT annotation. However, careful examination of the video reveals that normal behavior (walking, cyan bounding box in the top row) is re-instantiated